Application of Medical Images for Melanoma Detection Using a Multi-Architecture Convolutional Neural Network From a Deep Learning Approach

Funding: The authors received no specific funding for this work.

ABSTRACT

Melanoma is a type of skin cancer that develops from melanocytes, the skin cells that produce the pigment melanin. If it is not detected and treated promptly, melanoma tends to spread rapidly to other parts of the body, which makes it more dangerous than any other type of skin cancer. Skin cancer has become a significant and growing public health concern globally, with millions of new cases, both non-melanoma and melanoma, reported annually. The disease is characterized by the unchecked proliferation of abnormal skin cells, which can metastasize to other anatomical sites. Conventional diagnostic approaches, particularly biopsy-based methods, are invasive and time-consuming, and they often result in treatment delays and increased patient discomfort. This study applied three deep learning architectures, namely EfficientNetB3, MobileNetV2, and InceptionV3, and assessed their effectiveness in detecting melanoma. Among these architectures, EfficientNetB3 performed best, achieving an accuracy of 90.7% and an area under the curve (AUC) of 0.97. A cascading combination technique was then used to develop a multi-architecture model: the outputs of multiple layers of the individual models were combined and processed in a structured pipeline in which each stage improves upon the previous output. The multi-architecture model achieved an accuracy of 94.86%, making it the best-performing architecture for melanoma detection in this study.

1 Introduction

Melanoma is a type of skin cancer that develops from melanocytes, the skin cells that produce the pigment melanin. Melanoma tends to spread rapidly to other parts of the body if it is not detected and treated promptly, which makes it more dangerous than any other type of skin cancer. Its incidence has been increasing significantly, and skin cancer has become a global public health burden, with an estimated 3 million new cases of non-melanoma skin cancer and 132,000 new cases of melanoma annually [1]. In melanoma, abnormal cells in the skin grow out of control and can spread to other parts of the body [2]. Among the numerous causes of melanoma, the most prevalent is exposure to ultraviolet radiation from the sun and from artificial sources such as tanning beds [3]. The damaging effects of ultraviolet radiation on skin cells have been extensively documented, with research suggesting that DNA damage and oxidative stress play a key role in the development of skin cancer [4]. In addition, environmental factors such as pollution and lifestyle habits such as smoking may also contribute to the development of melanoma [5].

Early detection and diagnosis of melanoma are crucial for effective treatment, better patient outcomes, and a lower risk of complications [6]. Although traditional diagnostic methods such as biopsy remain the gold standard for diagnosing skin cancers, including but not limited to melanoma, there has been growing interest in deep learning approaches [7]. Deep learning models can be trained on large datasets of skin lesion images to learn patterns and features that distinguish between benign and malignant lesions with high accuracy [8]. Recent studies have shown that deep learning techniques can detect skin lesions such as melanoma with high accuracy, in some cases outperforming dermatologists. Despite these potential benefits, challenges remain, such as the need for large, diverse datasets and the potential for bias in algorithmic decision-making [9]. Nevertheless, accurate and reliable deep learning models for skin cancer detection could significantly improve patient outcomes and reduce the burden of skin cancer on public health systems worldwide.

Skin cancer, of which melanoma is the deadliest form, is highly prevalent and affects millions of individuals worldwide. In addition to its physical impact, melanoma can have significant psychological consequences for patients. Studies have shown that patients with skin cancers such as melanoma experience high levels of anxiety and depression and a decreased quality of life [10]. The emotional toll can be especially heavy for patients who require multiple surgeries, radiation therapy, or chemotherapy, all of which can cause pain, discomfort, and lost productivity [5]. The economic burden of melanoma is also substantial, as the costs of treatment and care add up quickly. This can cause significant financial stress for patients and their families and strain healthcare systems.

Deep learning and machine learning techniques have considerable potential to transform the detection and diagnosis of skin cancers such as melanoma. These techniques enable automated, non-invasive systems that can accurately detect and classify skin lesions, potentially leading to earlier diagnosis and better treatment outcomes [11-15]. With advances in technology and the abundance of available data, the potential applications of machine learning and deep learning in healthcare are immense, and their use in detecting skin cancers such as melanoma is an exciting and promising area of research.

The application of machine learning and deep learning techniques to skin cancer detection, including melanoma, has broader implications for healthcare. It could lead to automated detection systems for other cancers and diseases, enabling earlier diagnosis and better treatment outcomes [16, 17]. For example, recent studies have shown that machine learning and deep learning models can accurately detect lung cancer from medical images. Similar advances could be made in the detection of other cancers, such as breast, prostate, and colon cancer.

The conventional methods of diagnosing skin cancer, such as biopsy, are invasive and time-consuming, often leading to delays in treatment and increased discomfort for the patient. Biopsies also require personnel with specialized skills and equipment, making them challenging to carry out in resource-limited settings. Therefore, there is a critical need for a non-invasive and automated method of skin cancer detection that can provide accurate and efficient diagnoses while minimizing patient discomfort and reducing the cost of care.

In this study, three deep learning models, EfficientNetB3, MobileNetV2, and InceptionV3, are used specifically to detect melanoma. The study also seeks to contribute to knowledge by developing a novel framework for melanoma detection that can guide researchers in future studies. The performance of the models is compared, and the best-performing model is recommended for efficient melanoma detection, since early detection is crucial for improving treatment outcomes and reducing mortality rates. MobileNetV2 is a relatively recent deep learning architecture that has been used extensively because of its lightweight network design. It introduces a novel layer module, the inverted residual with a linear bottleneck, which significantly reduces the memory required for processing and lowers the computational cost of the model [18].
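To make the efficiency argument concrete, the sketch below counts multiply-accumulate operations (MACs) for one inverted residual block with a linear bottleneck, following the cost expression given in the MobileNetV2 paper, h · w · d′ · t · (d′ + k² + d″), and compares it with a standard 3 × 3 convolution operating at the same expanded width. The function names and the example dimensions (a 56 × 56 feature map, 24 channels, expansion factor t = 6) are illustrative assumptions, not settings from this study, and the count ignores batch normalization, activations, and the residual addition.

```python
def standard_conv_macs(h, w, k, c_in, c_out):
    """MACs of a k x k standard convolution (stride 1, 'same' padding)."""
    return h * w * k * k * c_in * c_out


def inverted_residual_macs(h, w, k, d_in, d_out, t):
    """MACs of a MobileNetV2-style inverted residual block (stride 1).

    1x1 expansion (d_in -> t * d_in), k x k depthwise convolution, then a
    linear 1x1 projection (t * d_in -> d_out) with no non-linearity.
    """
    expanded = t * d_in
    expand = h * w * d_in * expanded       # pointwise expansion
    depthwise = h * w * expanded * k * k   # one k x k filter per channel
    project = h * w * expanded * d_out     # linear bottleneck projection
    return expand + depthwise + project


# Hypothetical example: 56 x 56 feature map, 24 channels, expansion t = 6.
block = inverted_residual_macs(56, 56, k=3, d_in=24, d_out=24, t=6)
wide_conv = standard_conv_macs(56, 56, k=3, c_in=144, c_out=144)
print(f"inverted residual block:       {block:,} MACs")
print(f"standard 3x3 conv, 144 -> 144: {wide_conv:,} MACs")
print(f"ratio: {wide_conv / block:.1f}x")
```

Only the narrow 24-channel bottleneck tensors are passed between blocks, while the wide 144-channel representation stays inside the block, which is the basis of the memory savings described in the MobileNetV2 paper.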

EfficientNetB3, MobileNetV2, and InceptionV3 were then combined into an enhanced multi-architecture model to boost detection performance, because the use of multi-architecture models for melanoma detection has not been widely explored. Single convolutional neural networks often generalize poorly, and multi-architecture models are used to address this limitation. Multi-architecture methods have therefore become an essential technique for this problem: they combine the outputs of different models using multiple combination rules to achieve better generalization than single classifiers.
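The combination rules are not spelled out here, and the cascading pipeline described in the abstract is not reproduced. As a hedged illustration of what combining model outputs with multiple rules can mean, the sketch applies two common output-level rules, soft voting (averaging probabilities) and hard voting (a majority of thresholded decisions), to hypothetical per-model melanoma probabilities; the numbers and function names are assumptions.

```python
from statistics import mean


def soft_vote(prob_lists):
    """Average each image's melanoma probability across models."""
    return [mean(per_image) for per_image in zip(*prob_lists)]


def hard_vote(prob_lists, threshold=0.5):
    """Majority vote over per-model binary decisions (1 = melanoma)."""
    decisions = [[int(p >= threshold) for p in probs] for probs in prob_lists]
    n_models = len(decisions)
    # Strict majority; with an even number of models a tie resolves to 0.
    return [int(sum(votes) * 2 > n_models) for votes in zip(*decisions)]


# Hypothetical melanoma probabilities for three test images.
efficientnet = [0.90, 0.40, 0.20]
mobilenet = [0.70, 0.60, 0.10]
inception = [0.80, 0.30, 0.60]
models = [efficientnet, mobilenet, inception]

print("soft vote:", [round(p, 3) for p in soft_vote(models)])
print("hard vote:", hard_vote(models))
```

Soft voting keeps each model's confidence, whereas hard voting lets a single confident model be outvoted, as happens for the third image here.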

The use of machine learning and deep learning algorithms in skin cancer detection has the potential to significantly improve the accuracy and speed of diagnosis while reducing costs. These techniques can analyze large amounts of data from clinical images, such as dermoscopic images and clinical photographs from sources such as DermNet, and identify patterns and features associated with skin cancers such as melanoma. Machine learning algorithms can then be trained to recognize these patterns, improving diagnostic accuracy and reducing the need for biopsy [15]. The development of these algorithms for melanoma detection also has broader implications for healthcare: they can be used to build automated systems for detecting other cancers and diseases, improving early detection and treatment outcomes. In particular, they could improve the detection of rare and hard-to-diagnose cancers, which often require specialized skills and equipment for diagnosis [14].

This study focuses on the following aspects:

- A robust multi-architecture model for melanoma detection, motivated by the limitations of conventional diagnosis: visual examinations are prone to error and depend on the availability of experts, which highlights the need for automated solutions.

- Assessment of the models' computational complexity, measured in floating-point operations (FLOPs) and giga floating-point operations (GFLOPs), together with their execution time and memory consumption.

- Evaluation of the models' inference time, that is, the time the models take to produce predictions when implemented on edge devices and tested on real-world datasets. Fast inference can extend the global health impact of such tools, particularly in developing communities where medical personnel and facilities are limited, by supporting accurate and timely diagnoses.
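How inference time was measured is not described here. The sketch shows one common protocol, assuming a Python callable `predict` (a hypothetical name) that wraps the model: run a few warm-up calls, time repeated single-image predictions with `time.perf_counter`, and report the median. The `dummy_predict` function is a placeholder, not a real classifier; on an edge device the same loop would wrap the deployed model's own inference call.

```python
import statistics
import time


def measure_latency(predict, sample, warmup=3, repeats=20):
    """Median wall-clock latency, in seconds, of a single predict(sample) call.

    Warm-up calls are discarded so one-off costs (lazy initialisation,
    cache filling) do not inflate the estimate.
    """
    for _ in range(warmup):
        predict(sample)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(sample)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def dummy_predict(image):
    """Hypothetical stand-in for a trained model's forward pass."""
    total = sum(sum(row) for row in image)
    return total / (len(image) * len(image[0]))


image = [[0.5] * 224 for _ in range(224)]  # toy 224 x 224 single-channel input
latency = measure_latency(dummy_predict, image)
print(f"median latency: {latency * 1000:.3f} ms per image")
```

The median is used instead of the mean so that occasional scheduler or garbage-collection pauses do not dominate the reported figure.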

The major contribution of this study is the application of multiple robust convolutional neural network (CNN) architectures under a deep learning approach. The study also assesses the computational complexity of the models, measured in FLOPs and GFLOPs, together with their execution time and memory consumption, an aspect that many studies, such as [11-13, 19, 20], overlook. Finally, the models' inference time was evaluated, and an inference time of 1.5012 s was achieved.

The novelty of this study lies in using the computational complexity, or cost, of the models, expressed in FLOPs and GFLOPs, to assess their performance alongside execution time and memory consumption. The study further contributes by evaluating the models' inference time, which determines how long the models take to execute when implemented on edge devices and tested on real-world datasets. Its novelty also lies in combining the three deep learning models into a novel multi-architecture model for melanoma detection. Multi-architecture methods combine the outputs of different models using multiple combination rules to achieve better generalization than single classifiers.
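FLOPs here counts floating-point operations, a measure of computational cost, and not FLOP/s, which is a rate. As a minimal sketch, assuming the common convention that one multiply-accumulate (MAC) equals two FLOPs (some profiling tools report MACs directly as "FLOPs", so published values can differ by a factor of two), per-layer costs can be summed and divided by 10^9 to obtain GFLOPs. The layer shapes below are hypothetical and are not those of the models in this study.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_flops(h_in, w_in, c_in, c_out, kernel, stride=1, padding=0):
    """FLOPs of a standard 2-D convolution, counting a MAC as 2 FLOPs (bias ignored)."""
    h_out = conv_output_size(h_in, kernel, stride, padding)
    w_out = conv_output_size(w_in, kernel, stride, padding)
    macs = h_out * w_out * kernel * kernel * c_in * c_out
    return 2 * macs


def dense_flops(n_in, n_out):
    """FLOPs of a fully connected layer (bias ignored)."""
    return 2 * n_in * n_out


# Hypothetical two-layer example: a strided 3x3 stem convolution on a
# 224 x 224 RGB image and a single-output dense head on 1280 features.
layer_flops = [
    conv2d_flops(224, 224, c_in=3, c_out=32, kernel=3, stride=2, padding=1),
    dense_flops(1280, 1),
]
total = sum(layer_flops)
print(f"total: {total:,} FLOPs = {total / 1e9:.4f} GFLOPs")
```

FLOPs are hardware-independent, so they complement rather than replace measured execution time and memory consumption.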

2 Related Works

2.1 Melanoma and Dermatoscopy

Melanoma is a type of skin cancer that starts in the skin and can occur anywhere on the body. It is usually caused by exposure to ultraviolet (UV) radiation from the sun, but it can also be caused by other factors such as genetics, environmental factors, and a weakened immune system [1, 21]. Dermatoscopy is a non-invasive diagnostic technique that uses a specialized magnifying lens and light source to examine the skin. It reveals diagnostic features that are not visible to the naked eye, such as pigment networks, blood vessels, and other structures that can indicate skin cancer [22, 23]. Figure 1 illustrates melanoma cells extending from the surface of the skin into the deeper skin layers.

2.1.1 Advancement of Melanoma Detection

In recent years, there have been significant advancements in the detection and diagnosis of skin cancers such as melanoma, and deep learning models in particular have shown considerable potential. Deep learning is a subset of machine learning that uses artificial neural networks to learn from large datasets. In skin cancer detection, deep learning algorithms can analyze vast amounts of data to identify patterns and structures that indicate skin cancer. In 2018, researchers at Stanford University developed a CNN that was trained on a dataset of more than 129,000 clinical images of skin lesions and classified the lesions as benign or malignant with an accuracy of 91%.

In 2020, another team of researchers from Stanford University developed a deep learning algorithm that diagnosed three types of skin cancer (basal cell carcinoma [BCC], squamous cell carcinoma, and melanoma) with an accuracy of 90%. The algorithm was trained on a dataset of more than 33,000 clinical images. More broadly, skin cancer detection has evolved significantly over the centuries: from the ancient Egyptians to modern-day dermatologists, physicians have used various techniques to diagnose skin cancer. Recent advances in deep learning algorithms have shown great promise in the detection and diagnosis of skin cancer [25]. With further research and development, deep learning algorithms may become a standard tool for dermatologists in the fight against skin cancer.

2.1.2 Symptoms and Effects of Melanoma

The incidence of melanoma varies widely across regions, with higher rates observed in countries with high levels of UV radiation, such as Australia, New Zealand, and Northern Europe (WHO, 2021). In addition, skin cancer rates are increasing globally, with a 2%–7% annual increase in melanoma incidence reported in several countries [26]. Despite the rising incidence of skin cancer, prevention and early detection efforts can significantly reduce the morbidity and mortality associated with the disease.

Melanoma is a condition characterized by the uncontrolled growth of abnormal skin cells that can damage surrounding tissue and invade other organs [1]. The three main types of skin cancer are BCC, squamous cell carcinoma, and melanoma, with melanoma being the most dangerous [1]. The symptoms and effects of skin cancer vary depending on the type and stage of the disease, but common signs include changes in the size, shape, or color of moles or lesions, as well as the development of new growths on the skin [2]. Other symptoms may include itching, bleeding, or crusting of skin lesions, as well as pain, tenderness, or swelling in the affected area [2].

Early detection and treatment of melanoma are critical for improving outcomes and reducing the risk of complications. Regular skin self-exams and clinical skin exams by a healthcare provider can help identify suspicious lesions and promote early intervention [1]. In addition, protective measures such as wearing protective clothing, avoiding excessive sun exposure, and using sunscreen can help reduce the risk of skin cancer [2].

2.2 Deep Learning in Medical Disease Diagnosis

Several studies have developed deep learning algorithms for the detection of skin cancer, including melanoma. To begin with, Esteva et al. [8] developed a deep learning algorithm for classifying skin lesions as benign or malignant using a large dataset of clinical images. The training dataset consisted of over 129,000 clinical images, making it one of the largest datasets used for skin cancer classification. The algorithm was based on a CNN architecture, which can automatically learn relevant features from images. During evaluation, it achieved an accuracy of 91% in classifying skin lesions as benign or malignant, comparable to the accuracy of expert dermatologists. The algorithm also achieved high sensitivity and specificity, indicating that it can correctly identify both malignant and benign skin lesions.
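Accuracy, sensitivity, and specificity all derive from the same confusion-matrix counts. The sketch below computes them for a binary benign/malignant task, assuming 1 encodes malignant and 0 benign; the labels are hypothetical, and the function names are illustrative rather than taken from any cited study.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) with 1 = malignant, 0 = benign."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def binary_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Share of malignant lesions that are flagged.
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Share of benign lesions that are correctly cleared.
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
    }


# Hypothetical labels for ten lesions (four malignant, six benign).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
```

Reporting sensitivity and specificity alongside accuracy matters because a model can reach high accuracy on an imbalanced dataset while still missing many melanomas.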

The study by Tschandl et al. [9] used a large dataset, which allowed the deep neural network to learn complex patterns and features in skin lesions. The authors combined CNNs with other deep learning techniques to create a model that could accurately classify skin lesions. The algorithm's accuracy was on par with that of expert dermatologists, suggesting that deep neural networks can effectively distinguish between benign and malignant skin lesions and could serve as valuable decision-support tools in clinical practice, with significant implications for improving patient outcomes. However, further validation studies and real-world implementation are warranted to assess the algorithm's performance in diverse clinical settings and populations.

Brinker et al. [27] developed a deep learning algorithm using a CNN to predict the risk of melanoma in patients with skin lesions. The algorithm was trained on a dataset of over 100,000 images and achieved an area under the receiver operating characteristic curve (AUC-ROC) of 0.86, which was higher than that of dermatologists (AUC-ROC of 0.78). The authors conducted a prospective, multicenter study to validate the algorithm's performance in clinical practice. In the study, 157 dermatologists assessed 100 skin lesions, and the algorithm provided risk predictions for each lesion. The dermatologists then decided whether to biopsy the lesion based on their clinical judgment, with the algorithm's prediction serving as a supportive tool. The algorithm's performance was compared to that of the dermatologists, and it was found that the algorithm was able to identify more cases of melanoma while reducing the number of unnecessary biopsies. The authors suggested that the algorithm could be used as a tool to assist dermatologists in the diagnosis of melanoma, particularly in cases where there is uncertainty. They also noted that the algorithm could help improve patient outcomes by reducing the number of unnecessary biopsies and increasing the detection of melanoma.
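AUC-ROC values such as 0.86 and 0.78 can be read as the probability that a randomly chosen melanoma receives a higher risk score than a randomly chosen benign lesion. A minimal sketch of that pairwise (Mann-Whitney) formulation, with ties counted as one half, is shown below; the scores are hypothetical, and Brinker et al. may have computed the AUC differently.

```python
def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical melanoma risk scores (1 = melanoma, 0 = benign).
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.4, 0.2]
print(f"AUC = {roc_auc(labels, scores):.3f}")
```

The double loop is quadratic in the number of samples; for large test sets a rank-based computation or a library routine is preferable, but the pairwise form makes the interpretation explicit.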

Similarly, Kose et al. [15] proposed a support vector machine (SVM) algorithm to classify skin lesions as benign or malignant. The authors utilized a dataset of over 2000 dermoscopic images of skin lesions, with a nearly equal number of benign and malignant cases. The images were preprocessed, and features were extracted using color and texture analysis. The SVM model was trained and tested using a 10-fold cross-validation method, achieving an accuracy of 85.35%. Additionally, the authors noted that the SVM model performed well on both melanoma and non-melanoma skin cancer cases. However, the study had limitations, including the relatively small size of the dataset and the use of a single SVM model without any multi-architecture techniques. Further research could potentially improve the accuracy and generalizability of the algorithm.
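Kose et al. report 10-fold cross-validation. The sketch below shows how such folds can be formed from sample indices, assuming a plain shuffled (non-stratified) split; whether the original study stratified by class is not stated, and the function name is hypothetical.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) for k-fold cross-validation.

    Indices are shuffled once; every sample appears in exactly one test fold,
    and fold sizes differ by at most one.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Toy run: 23 samples, 10 folds (the first three folds get one extra sample).
for fold, (train_idx, test_idx) in enumerate(k_fold_indices(23, k=10)):
    print(f"fold {fold}: {len(train_idx)} train / {len(test_idx)} test")
```

The reported accuracy is then the mean over the k held-out folds, so every image is used for testing exactly once.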

In comparison, Wu et al. [28] aimed to develop a more accurate and efficient model for detecting skin cancer by combining CNN and RNN architectures. The CNN was used to extract relevant features from the input images, while the RNN was used to capture temporal dependencies between these features. The authors trained and tested their hybrid model on the HAM10000 dataset, which consists of over 10,000 images of skin lesions with corresponding clinical and histopathological data. To preprocess the input images, the authors resized them to a standard size of 224 × 224 pixels and normalized them to have zero mean and unit variance. The authors used a pre-trained CNN (Inception-V3) to extract features from the images and then fed these features to a two-layer RNN for classification. The authors used a binary cross-entropy loss function to train their model and applied early stopping to prevent overfitting. They also used k-fold cross-validation to evaluate the performance of their model. The results of their study showed that the hybrid CNN-RNN model achieved an accuracy of 94.6%, which outperformed previous studies that used only CNN or traditional machine learning algorithms. The authors also compared their model with dermatologists' performance on a subset of the HAM10000 dataset and found that their model achieved comparable results. The authors suggested that their hybrid model could be used to assist dermatologists in diagnosing skin cancer, particularly in areas where dermatologists are scarce.
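The two training details named for Wu et al., a binary cross-entropy loss and early stopping, can be sketched as follows. This is an illustration under assumptions: the patience value, the clipping constant, and the validation-loss sequence are hypothetical, since the study's settings are not given here.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs; the weights saved at
    `best_epoch` would then be restored.
    """
    best_loss, best_epoch, wait = math.inf, 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


print(binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6]))
print(early_stopping([0.70, 0.55, 0.48, 0.50, 0.49, 0.51, 0.47], patience=3))
```

In the second call training halts at epoch 5, so the later improvement at epoch 6 is never seen; this trade-off between overfitting protection and stopping too early is governed by the patience setting.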

In the study by Wang et al. [29], a deep learning algorithm was developed to classify skin lesions as either benign or malignant using a densely connected convolutional network. The researchers used a dataset of over 5000 images to train and evaluate the algorithm, which achieved an accuracy of 88.5%. The algorithm was compared with the performance of dermatologists who were asked to classify the same images. The results showed that the algorithm's performance was comparable to that of the dermatologists. The study highlights the potential for deep learning algorithms to assist dermatologists in the diagnosis of skin cancer, especially in areas where there is a shortage of dermatologists. The authors also noted that further research is needed to evaluate the algorithm's performance on a larger and more diverse dataset, as well as to investigate the potential impact of the algorithm on patient outcomes.
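The defining feature of a densely connected network (DenseNet) is that each layer receives the concatenation of all preceding feature maps in its block, so the channel count grows linearly: with k0 input channels and growth rate k, the l-th layer receives k0 + (l - 1)·k channels, and a block of L layers outputs k0 + L·k. The toy sketch below, with hypothetical layers and scalars standing in for feature maps, only illustrates this connectivity pattern; it is not Wang et al.'s network.

```python
def dense_block(inputs, layers):
    """Toy densely connected block.

    Each layer receives the concatenation of the block input and every
    earlier layer's output, and its own output is concatenated (not added)
    onto that running list.
    """
    features = list(inputs)
    for layer in layers:
        features = features + layer(features)
    return features


def make_toy_layer(growth_rate):
    """Hypothetical layer emitting `growth_rate` new 'channels'.

    A real DenseNet layer is BN-ReLU-Conv; here each channel is a scalar
    and the layer simply averages everything it receives.
    """
    def layer(features):
        avg = sum(features) / len(features)
        return [avg] * growth_rate
    return layer


k0, growth, n_layers = 16, 12, 4
out = dense_block([1.0] * k0, [make_toy_layer(growth) for _ in range(n_layers)])
print(len(out), "channels; expected k0 + L * k =", k0 + n_layers * growth)
```

Because every layer can read all earlier features directly, DenseNets encourage feature reuse and typically need fewer parameters per layer than plain deep CNNs.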

In related work, Liu et al. [30] proposed a deep learning algorithm based on a residual network to classify skin lesions as benign, malignant, or indeterminate. The dataset consisted of over 2000 images in these three classes. The algorithm achieved an accuracy of 84.6%, comparable to that of dermatologists. The authors noted that the algorithm could assist dermatologists in diagnosing skin lesions, especially when the diagnosis is uncertain. The residual network used in this study is a type of CNN that employs shortcut connections, so that each block learns a residual mapping that is added to its input. This makes very deep networks easier to train, because the shortcuts give gradients a direct path through the network and mitigate the vanishing gradient problem. The authors also used data augmentation techniques, such as random rotation, flipping, and scaling, to increase the robustness of the model and improve its performance on the test dataset.
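A residual block computes y = F(x) + x, so ∂y/∂x = ∂F/∂x + I: the identity term gives gradients a path that does not pass through F. The toy sketch below illustrates this with plain Python lists; the branch F (a square linear map followed by ReLU) and all names are hypothetical simplifications, since real ResNet branches use convolutions with batch normalization and usually apply the ReLU after the addition.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def make_linear_relu(weights):
    """Hypothetical residual branch F: a square linear map followed by ReLU."""
    def f(x):
        return relu([sum(w * xi for w, xi in zip(row, x)) for row in weights])
    return f


def residual_block(x, f):
    """y = F(x) + x, using an identity shortcut (shapes must match)."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("identity shortcut needs F(x) and x to have the same shape")
    return [a + b for a, b in zip(fx, x)]


x = [1.0, -2.0]
zero_branch = make_linear_relu([[0.0, 0.0], [0.0, 0.0]])
identity_branch = make_linear_relu([[1.0, 0.0], [0.0, 1.0]])
print(residual_block(x, zero_branch))      # F(x) = 0, so the block passes x through
print(residual_block(x, identity_branch))
```

The zero-branch case shows why residual networks are easy to optimize: a block whose weights are near zero behaves like the identity, so adding depth does not have to hurt performance.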

On the other hand, Kawahara et al. [31] developed a deep learning algorithm that integrated classification and segmentation tasks for skin lesion diagnosis. The proposed algorithm used a multi-task learning framework that simultaneously classified skin lesions as benign or malignant and segmented the lesion borders. The algorithm was trained on a dataset of over 1400 dermoscopic images, consisting of 584 benign and 860 malignant lesions. The results showed that the algorithm achieved an accuracy of 91.4% in classifying skin lesions and a Dice similarity coefficient of 0.79 in segmenting the lesion borders. The authors highlighted that the segmentation task was important in assisting dermatologists in determining the extent of the lesion, which could be useful in treatment planning. Moreover, the authors suggested that the proposed algorithm could be integrated into a smartphone application to provide patients with a preliminary assessment of skin lesions before visiting a dermatologist.
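The Dice similarity coefficient reported for segmentation measures the overlap between a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|), where 1 means perfect overlap and 0 means none. A minimal sketch on small hypothetical binary masks is shown below; returning 1.0 when both masks are empty is an assumed convention, and Kawahara et al.'s evaluation code may differ.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two same-shaped binary masks."""
    flat_a = [v for row in mask_a for v in row]
    flat_b = [v for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same shape")
    intersection = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    total = sum(1 for a in flat_a if a) + sum(1 for b in flat_b if b)
    return 1.0 if total == 0 else 2.0 * intersection / total


# Hypothetical 3 x 3 lesion masks (1 = lesion pixel).
predicted = [[0, 1, 1],
             [0, 1, 1],
             [0, 0, 0]]
reference = [[0, 1, 1],
             [0, 1, 0],
             [0, 1, 0]]
print(dice_coefficient(predicted, reference))
```

Here three of the four predicted lesion pixels overlap the four reference pixels, giving 2 × 3 / (4 + 4).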

Majeed et al. [32] developed a machine learning algorithm based on a deep CNN to classify skin lesions as benign or malignant. The authors utilized a publicly available dataset of skin lesion images called the International Skin Imaging Collaboration (ISIC) archive. The dataset consisted of over 6500 images with labels indicating whether each lesion was benign or malignant. The authors preprocessed the images to normalize the size and resolution and used data augmentation techniques to increase the size of the dataset. They then trained their deep CNN algorithm on the preprocessed images. The algorithm achieved an accuracy of 88.6% on the test set, which was comparable to the performance of dermatologists. They suggested that the algorithm could be integrated into a telemedicine platform to provide remote consultations and improve the efficiency of skin cancer diagnosis. However, they also acknowledged the need for further validation of the algorithm on larger and more diverse datasets to assess its generalizability and robustness in real-world clinical settings.
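The specific augmentations used by Majeed et al. are not listed here. As a hedged sketch, the code below applies random horizontal and vertical flips and random multiples of 90-degree rotation to a toy image stored as a 2-D list of scalar pixels; arbitrary-angle rotation and scaling, which Liu et al. mention, require interpolation and are omitted. All function names are illustrative.

```python
import random


def hflip(img):
    """Mirror a 2-D grid left-right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror a 2-D grid top-bottom."""
    return [list(row) for row in img[::-1]]


def rot90(img):
    """Rotate a 2-D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def random_augment(img, rng):
    """Random flips plus a random multiple of 90-degree rotation."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return img


rng = random.Random(42)
lesion = [[1, 2, 3],
          [4, 5, 6]]  # toy 2 x 3 "image"
augmented = [random_augment(lesion, rng) for _ in range(5)]
for variant in augmented:
    print(variant)
```

These transforms only rearrange pixels, so the lesion's colour and texture are preserved while its orientation varies, which suits dermoscopic images that have no canonical orientation.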

Saldana et al. [33] developed a deep learning algorithm based on a CNN to classify skin lesions as either benign or malignant. The study included a dataset of over 1000 images. The proposed algorithm achieved an accuracy of 93.2% in classifying skin lesions, which is a promising result for this type of classification task. The availability of an accurate and reliable tool for diagnosing skin lesions can help reduce the burden on dermatologists and can lead to improved patient outcomes. The proposed algorithm is also computationally efficient, which means it can be run on standard computing resources, making it an attractive solution for widespread implementation.

Bi et al. [34] presented a deep learning algorithm for the classification of skin lesions as benign or malignant. The authors utilized a large dataset of over 11,000 images to train their model, which was based on a deep CNN. The algorithm achieved an accuracy of 88.9%, which was higher than the accuracy of dermatologists. Moreover, the area under the receiver operating characteristic curve was 0.943, indicating excellent performance of the algorithm in distinguishing between benign and malignant lesions. The authors noted that the algorithm could potentially support clinical decision-making in dermatology. The algorithm has the potential to assist dermatologists in identifying malignant skin lesions and avoiding unnecessary biopsies. However, the authors also emphasized the importance of further validation and testing of the algorithm in different clinical settings to ensure its reliability and generalizability. Overall, this study highlights the potential of deep learning algorithms to improve the accuracy and efficiency of dermatologic diagnosis.

The study by Codella et al. [35] utilized a large dataset of skin lesion images from multiple sources, including the International Skin Imaging Collaboration (ISIC) and a private dermatology practice. The images were labeled by expert dermatologists and were divided into seven diagnostic categories, including melanoma, nevus, seborrheic keratosis, BCC, squamous cell carcinoma, actinic keratosis, and vascular lesions. The authors trained a deep CNN on this dataset using transfer learning techniques. The authors noted that the algorithm's performance was lower for some diagnostic categories, particularly those with fewer examples in the dataset. For example, the algorithm achieved an accuracy of 61.3% for melanoma, which was lower than its overall accuracy. The authors also noted that the algorithm's performance was influenced by the quality of the input images, such as lighting and image resolution. Despite these limitations, the authors suggested that their algorithm could potentially aid dermatologists in diagnosing skin lesions, particularly in areas with limited access to dermatologists. They also noted that further research was needed to evaluate the algorithm's performance in clinical settings and to assess its impact on patient outcomes.
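The gap between a 61.3% melanoma accuracy and a higher overall accuracy is what class imbalance typically produces: overall accuracy is dominated by the frequent classes. The sketch below makes this visible by computing overall accuracy and per-class accuracy (per-class recall) on a deliberately imbalanced, hypothetical test set; the labels are illustrative and not Codella et al.'s data.

```python
from collections import Counter


def overall_accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_accuracy(y_true, y_pred):
    """Fraction of each true class that is predicted correctly (per-class recall)."""
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / n for label, n in support.items()}


# Hypothetical, deliberately imbalanced test set: 8 nevi, 2 melanomas.
y_true = ["nevus"] * 8 + ["melanoma"] * 2
y_pred = ["nevus"] * 8 + ["melanoma", "nevus"]
print("overall:", overall_accuracy(y_true, y_pred))
print("per class:", per_class_accuracy(y_true, y_pred))
```

Averaging the per-class values (balanced accuracy) gives a figure that is not inflated by the majority class.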

In the study by Gessert et al. [36], the goal was to develop a machine learning algorithm using an SVM to classify skin lesions as melanoma or non-melanoma. The dataset consisted of over 700 carefully curated and preprocessed images of skin lesions, which were divided into training and testing sets for model development and evaluation. The SVM was trained on features extracted from the images, such as color, texture, and shape information, and was fine-tuned and optimized for the best performance. On the independent test set, the model achieved an accuracy of 80%, correctly classifying most of the skin lesions in the study's dataset as melanoma or non-melanoma. The authors acknowledged that additional training data could further improve the algorithm's accuracy and generalizability. They also highlighted its potential clinical utility in assisting dermatologists in the diagnosis of skin lesions, particularly when the diagnosis is challenging or uncertain. Further research and validation studies are needed to assess the algorithm's performance in real-world clinical settings and its potential for integration into clinical practice.
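The SVM kernel, regularization setting, and exact features used by Gessert et al. are not reported here. As a self-contained, hedged sketch, the code trains a linear SVM with the Pegasos stochastic sub-gradient method on hypothetical two-dimensional colour/texture features. In practice a library implementation such as scikit-learn's `SVC` would typically be used, and the hyperparameters shown (`lam`, `epochs`) are assumptions.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=500, seed=0):
    """Linear SVM trained with Pegasos-style stochastic sub-gradient descent.

    X: list of feature vectors; y: labels in {-1, +1}. A constant 1.0 feature
    is appended so the last weight acts as a bias (it is regularised too,
    a common simplification).
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # shrink (L2 regularisation)
            if margin < 1.0:  # hinge loss active: move towards the example
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical two-dimensional colour/texture features (not real data).
X = [(0.20, 0.10), (0.30, 0.20), (0.25, 0.15),   # non-melanoma
     (0.80, 0.90), (0.90, 0.70), (0.85, 0.80)]   # melanoma
y = [-1, -1, -1, 1, 1, 1]
w = train_linear_svm(X, y)
print("weights:", [round(v, 2) for v in w])
print("predictions:", [predict(w, x) for x in X])
```

The hinge loss only penalizes examples inside the margin, so the final boundary is determined by the lesions closest to it (the support vectors).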

Han et al. [37] proposed a deep learning algorithm based on CNNs that was developed to classify skin lesions as benign or malignant. The algorithm was trained on a large dataset of over 10,000 images of skin lesions. The dataset consisted of images of different skin types and various ethnicities, making it more representative of the general population. The algorithm achieved an accuracy of 94.2% and an area under the receiver operating characteristic curve of 0.96, outperforming dermatologists in diagnostic accuracy. The authors noted that the algorithm's performance was particularly robust in detecting malignant skin lesions, suggesting its potential as a diagnostic tool for skin cancer. The algorithm could provide valuable assistance to dermatologists in improving the accuracy and efficiency of skin cancer diagnosis, especially in resource-limited areas. Moreover, the algorithm's high accuracy in classifying benign skin lesions could potentially reduce unnecessary biopsies and associated healthcare costs. However, further studies are needed to evaluate the algorithm's clinical utility in diverse populations and settings.

The study conducted by Li et al. [38] aimed to develop a deep-learning algorithm for the classification of skin lesions into three categories: benign, malignant, or non-neoplastic. The researchers utilized a dataset of over 10,000 dermoscopic images of skin lesions, which were preprocessed and used for training and testing the algorithm. The deep-learning algorithm, likely based on CNNs, was trained using a supervised approach to learn the patterns and features indicative of different skin lesion categories. After training, the algorithm achieved an accuracy of 83.6% in classifying skin lesions, demonstrating promising results.

However, the authors noted that the algorithm's performance varied by diagnostic category, indicating that some types of skin lesions are harder to differentiate than others. They suggested further research to assess its clinical utility, including validation on larger and more diverse datasets, comparison with dermatologists' performance, and evaluation in real-world clinical settings.

In their study, Haenssle et al. compared the performance of a deep learning algorithm with that of dermatologists in detecting melanoma. The algorithm was trained on a dataset of over 100,000 images and achieved an area under the receiver operating characteristic curve (AUC) of 0.94, comparable to that of the dermatologists. It also had a higher sensitivity than the dermatologists (90.0% vs. 86.4%) but a slightly lower specificity (71.3% vs. 75.7%). The authors suggested that the algorithm could be used as a tool to assist dermatologists in diagnosing melanoma.

In the study by Yang et al. [39], the authors aimed to develop a deep learning model for the detection of BCC in histopathology images, which are microscopic images of skin tissue samples. The proposed model utilized a combination of CNNs and RNNs to analyze the images and make predictions. The model was trained on a dataset of 2063 histopathology images, which were carefully annotated with BCC or non-BCC labels. During evaluation, the model achieved an impressive accuracy of 94.0% in detecting BCC in histopathology images, outperforming dermatopathologists in the study. This suggests that the deep learning model has the potential to serve as a valuable decision-support tool for dermatopathologists in the diagnosis of BCC.

The model's high accuracy suggests that it could help dermatopathologists make more accurate and efficient diagnoses. Combining CNNs and RNNs allows the model to learn complex patterns and features from histopathology images that may not be easily discernible to human dermatopathologists. The fact that the model outperformed dermatopathologists in this study highlights the potential of deep learning to improve the accuracy and efficiency of skin cancer diagnosis, specifically for BCC detection in histopathology images.

The study by Fujisawa et al. [40] evaluated the performance of a deep learning algorithm in detecting skin cancer from smartphone photographs. The authors trained their algorithm on over 3000 smartphone photographs of skin lesions and tested it on a separate dataset of 660 images. The algorithm achieved an area under the receiver operating characteristic curve (AUC) of 0.83, comparable to the performance of dermatologists in detecting skin cancer. These findings suggest that the algorithm could serve as a screening tool for skin cancer in resource-limited settings, particularly in remote or underserved areas where access to dermatologists or specialized imaging equipment is limited, supporting early diagnosis and timely intervention.

The study by Guo et al. [41] developed a deep learning model for detecting skin cancer in dermoscopy images. Dermoscopy is a non-invasive imaging technique that visualizes skin lesions at magnification, aiding the early detection of skin cancer. The proposed model combines CNNs, which extract spatial features from images, with RNNs, which model sequential dependencies. It was trained on a dataset of over 5000 dermoscopy images, including both benign and malignant cases, and achieved an accuracy of 91.7%, surpassing the performance of dermatologists in this study. This suggests that deep learning algorithms could serve as decision-support tools for dermatologists in the diagnosis of skin cancer. The results highlight the promising capabilities of deep learning in dermatology and its potential to improve the accuracy and efficiency of skin cancer diagnosis [42].

In conclusion, the reviewed works suggest that deep learning and machine learning models have the potential to improve the accuracy and efficiency of skin cancer detection, including melanoma detection. These models can be trained on large datasets of skin lesion images to learn patterns and features that distinguish benign from malignant lesions. However, more research is needed to validate their performance on different datasets and to assess their generalizability in clinical practice.

2.3 Modality of Vision Transformers

In recent times, the joint classification of multimodal data, such as hyperspectral images (HSI) and light detection and ranging (LiDAR), has gained significant attention for enhancing the accuracy of remote sensing image classification. Despite this progress, existing fusion methods for HSI and LiDAR present several challenges. Integrating the distinct features of HSI and LiDAR remains complex, resulting in an incomplete utilization of available information for category representation. Furthermore, when extracting spatial features from HSI, the spectral and spatial information are often treated separately, making it difficult to fully leverage the abundant spectral data in hyperspectral imagery.

To address this issue, [43] proposed a multimodal data fusion framework designed specifically for HSI and LiDAR fusion classification, known as the “modality fusion vision transformer,” with a stackable modality fusion block as its core component. Similarly, [44] presented a spectral modality-aware interactive fusion network (SMIF-NET) for effective spectral information extraction and smooth feature integration. The authors first proposed the spectral modality-aware transformer (SMAT), which uses a dual-attention mechanism to analyze spectral self-similarity and cross-spectral relationships, and then implemented the interactive spatial-spectral feature fusion (IS2F2) module to integrate the extracted high-level spectral and spatial features.

The study conducted by [45] proposed a state-of-the-art deep learning network for multi-modal data fusion, incorporating the Cross-Modal Self-Attentive Feature Fusion Transformer (SAFFT). The proposed framework utilizes a multi-head self-attention mechanism to integrate diverse attention outputs from multiple heads, thereby improving the extraction and combination of advanced features across different modalities. Performance evaluation on the Houston 2013 dataset confirms the effectiveness of this approach, achieving an overall accuracy (OA) of 94.3757% in the classification of 15 semantic categories.

The study conducted by [46] introduced the Hierarchical Spectral–Spatial Transformer (HSST) network, a model designed for both drone-based and general remote sensing applications that fuses hyperspectral (HSI) and multispectral (MSI) imagery. Unlike traditional multi-head self-attention transformers, the HSST network incorporates cross-attention mechanisms to capture spectral and spatial characteristics across multiple modalities and scales. Its hierarchical structure allows multi-scale feature extraction, while a progressive fusion strategy gradually enhances spatial details through upsampling. Experiments on three widely used hyperspectral datasets show that the HSST network outperforms existing approaches, highlighting its effectiveness in applications such as drone-based remote sensing, where precise HSI-MSI data integration is essential.

The application of deep learning for the detection of brain tumors and melanoma has become crucial in assisting radiologists and dermatologists with their diagnostic decisions. In recent years, deep learning architectures based on vision transformers (ViT) have generated significant interest in the field of computer vision. This growing attention is largely due to the remarkable achievements of transformer models in natural language processing, which have inspired their application in medical imaging and bioinformatics.

A study conducted by [47] explored the effectiveness of combining multiple ViT models to diagnose brain tumors using T1-weighted (T1w) contrast-enhanced MRI. The study used the ViT-B/16, ViT-B/32, ViT-L/16, and ViT-L/32 models, pre-trained and fine-tuned on ImageNet for classification. Among the individual models, ViT-L/32 achieved the highest accuracy, 98.2%, at a resolution of 384 × 384. When all four models were combined into a multi-architecture model, the accuracy improved to 98.70% at the same resolution, surpassing the single models at both tested resolutions as well as the multi-architecture model at a resolution of 224 × 224. The study concludes that multi-architecture models could enhance computer-assisted disease diagnosis and detection, reducing the workload in bioinformatics and medical imaging.

3 Methodology

This section provides an overview of the approach taken in this study and outlines the phases involved in developing the architecture of the skin cancer detection system. It presents the study design, covering the research strategy, sampling strategy, data collection methods, data analysis techniques, operational methods, and the reliability of the results achieved. This detailed description of the research design and methodology can also serve as a foundation for future studies. The skin cancer detection model is developed using CNNs in Python, with OpenCV for image processing.

3.1 System Architecture and Flowchart for the Study

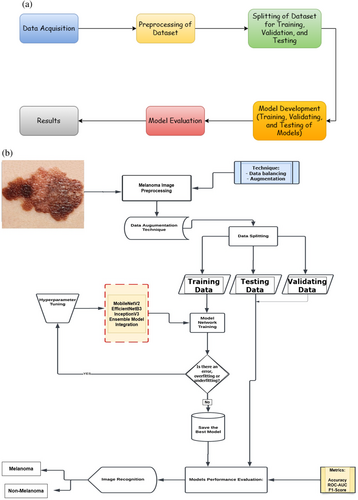

The melanoma detection system consists of several modules that handle its various functions: image capture, melanoma detection, prediction, and data management. At the first level, pre-processing and a variety of augmentation techniques were used to address class imbalance in the dataset and to increase its diversity. At the second level, features are automatically extracted and a pre-trained model is used to distinguish malignant melanoma from benign skin lesions. Figure 2a,b show the pipeline and flowchart of the proposed method, respectively.

3.2 Dataset Collection and Preprocessing

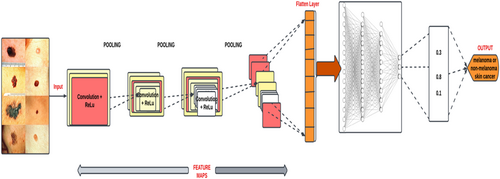

This study used a large medical image dataset from publicly available platforms such as ISIC and Kaggle. The dataset contains a variety of skin images, including both benign and malignant (cancerous) skin lesions. It was preprocessed to remove irrelevant features or artifacts that could reduce the accuracy of the deep learning models. This approach yielded a comprehensive and representative image dataset, which strengthens the validity and generalizability of the study's findings. Figure 3 shows the architecture of the deep learning model used for melanoma detection.

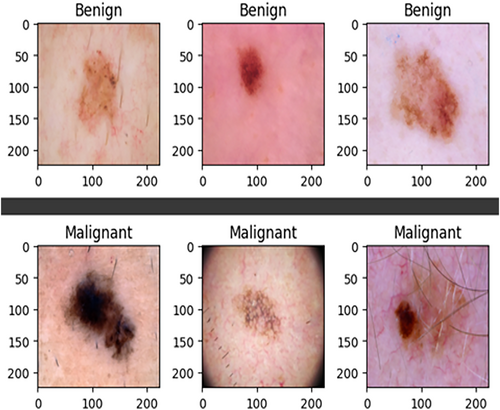

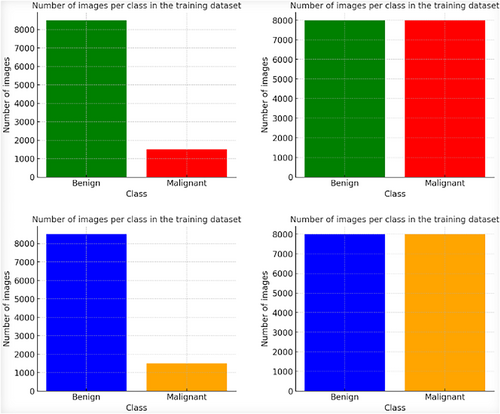

The ISIC-2020 archive contains a large collection of dermatoscopic images of skin lesions for research: 33,126 images from over 2000 patients, of which 584 are malignant and 32,542 benign. To address this class imbalance, 913 melanoma images were added from ISIC-2019, and various data augmentation techniques (rescaling, width shift, rotation, shear, horizontal flip, and channel shift) were applied, resulting in 8480 malignant images after augmentation. Because the dataset originally contained far more benign images, the benign images used were selected arbitrarily to balance the two classes. Figure 4 shows the datasets before and after augmentation, sample images from the datasets, and a sample of the resized images.
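The balancing arithmetic above can be made explicit. The minimal Python sketch below assumes that (i) the 8480 malignant images in Table 2 include the 1497 original melanoma images (584 from ISIC-2020 plus 913 from ISIC-2019), so about 6983 synthetic images are needed, and (ii) the arbitrary benign selection is a uniform random sample without replacement. The helper names and the seed are hypothetical.

```python
import numpy as np

# Counts reported in the text (ISIC-2020 plus extra ISIC-2019 melanoma images).
ISIC2020_MALIGNANT = 584
ISIC2020_BENIGN = 32_542
ISIC2019_MELANOMA_ADDED = 913
TARGET_PER_CLASS = 8_480  # per-class total in Table 2 (5936 + 1272 + 1272)


def augmentation_plan(n_original, n_target):
    """Return how many synthetic images are needed and how many per original image."""
    n_synthetic = n_target - n_original
    return n_synthetic, n_synthetic / n_original


def undersample(indices, n_keep, seed=0):
    """Hypothetical benign selection: keep n_keep indices uniformly at random, without replacement."""
    rng = np.random.default_rng(seed)
    return rng.choice(indices, size=n_keep, replace=False)


n_malignant = ISIC2020_MALIGNANT + ISIC2019_MELANOMA_ADDED  # real melanoma images before augmentation
n_synthetic, per_image = augmentation_plan(n_malignant, TARGET_PER_CLASS)
benign_kept = undersample(np.arange(ISIC2020_BENIGN), TARGET_PER_CLASS)
print(n_malignant, n_synthetic, round(per_image, 2), len(benign_kept))
```

Under these assumptions, each original melanoma image contributes on average between four and five augmented variants.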

To make melanoma detection more consistent and the extracted features more effective, a preprocessing step is applied to all input images in the ISIC-2020 dataset. This step is particularly important for CNN approaches. CNNs benefit from large-scale datasets but also risk overfitting, in which the model becomes overly specialized to the training data. To mitigate this risk, a substantial and diverse image dataset was essential, as it helps the CNN model generalize to varied real-world scenarios. Additionally, because deep learning models are trained iteratively, a rich and extensive dataset allows the model to learn a broad range of patterns and features, leading to more consistent and robust detection accuracy.

3.3 Image Resizing

The original ISIC dataset contains large, high-resolution images with dimensions of 6000 × 4000 pixels. To make the dataset more suitable for training deep learning models, the images are resized to 224 × 224 pixels.
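With OpenCV, which the study uses for image processing, this resize is typically a single call such as `cv2.resize(img, (224, 224), interpolation=cv2.INTER_AREA)`; OpenCV orders the target size as (width, height), and `INTER_AREA` is the interpolation usually recommended for shrinking. The interpolation method used in the study is not stated. To show what the operation does without requiring OpenCV, the sketch below implements a simple nearest-neighbour resize in NumPy; the function name is hypothetical. Note that resizing a 6000 × 4000 image directly to 224 × 224 changes its aspect ratio from 3:2 to 1:1.

```python
import numpy as np


def resize_nearest(image, size=(224, 224)):
    """Nearest-neighbour resize of an H x W (x C) array to size=(height, width).

    A dependency-free stand-in for cv2.resize; unlike OpenCV's dsize,
    this helper takes (height, width).
    """
    out_h, out_w = size
    in_h, in_w = image.shape[:2]
    # Map each output row/column to the source pixel whose cell contains it.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    # Advanced indexing on the first two axes keeps any channel axis intact.
    return image[rows[:, None], cols[None, :]]


photo = np.zeros((400, 600, 3), dtype=np.uint8)  # small stand-in for a 4000 x 6000 RGB image
print(resize_nearest(photo).shape)
```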

3.4 Dataset Augmentation Techniques

In this study, a variety of data augmentation techniques were applied using the Keras ImageDataGenerator. Pixel values were rescaled to the range 0–1 for computational efficiency. Random rotations of up to 25° helped the model handle different object orientations, and horizontal flipping created mirrored images for improved generalization. Horizontal and vertical shifts of up to 20% of the image dimensions introduced positional variation, a shear range of 0.2 simulated non-uniform deformations, and random zooming with a range of 0.2 introduced scale variation. The “nearest” fill mode filled pixels exposed by these transformations with the nearest existing pixel values, preserving the image structure. These techniques diversified the training data, reducing overfitting and improving the model's adaptability to real-world scenarios (Asare et al. 2023). The techniques are summarized in Table 1, and Figure 5 shows the dataset before and after image augmentation.

| Data augmentation technique | Corresponding value |
|---|---|
| Rotation range | 25° |
| Horizontal flipping | True |
| Width shift range | 0.20 |
| Height shift range | 0.20 |
| Shear range | 0.20 |
| Zoom range | 0.20 |
| Fill mode | Nearest |
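The settings in Table 1, together with the 0–1 rescaling, map directly onto keyword arguments of Keras' `ImageDataGenerator`. The sketch below is a hedged reconstruction, not the authors' code: the directory layout (one sub-folder per class, as `flow_from_directory` expects), the batch size, and the helper name `build_train_generator` are assumptions. Keras interprets `shear_range` as a shear angle in degrees, and `ImageDataGenerator` is deprecated for new code in recent TensorFlow releases in favour of preprocessing layers.

```python
# Augmentation settings from Table 1, expressed as ImageDataGenerator keyword arguments.
AUGMENTATION_KWARGS = {
    "rescale": 1.0 / 255,        # scale pixel values to [0, 1]
    "rotation_range": 25,        # random rotations of up to 25 degrees
    "horizontal_flip": True,     # random mirror images
    "width_shift_range": 0.20,   # shifts of up to 20% of the image width
    "height_shift_range": 0.20,  # shifts of up to 20% of the image height
    "shear_range": 0.20,         # Keras treats this as a shear angle in degrees
    "zoom_range": 0.20,          # zoom factor sampled from [0.8, 1.2]
    "fill_mode": "nearest",      # fill exposed pixels with the nearest edge pixel
}


def build_train_generator(train_dir, batch_size=32):
    """Hypothetical helper: stream augmented 224 x 224 batches from train_dir/<class>/*.jpg."""
    # Imported lazily so the settings above can be inspected without TensorFlow installed.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    datagen = ImageDataGenerator(**AUGMENTATION_KWARGS)
    return datagen.flow_from_directory(
        train_dir,
        target_size=(224, 224),
        batch_size=batch_size,
        class_mode="categorical",  # one-hot labels for categorical cross-entropy
    )
```

Augmentation would normally be applied only to the training subset; the validation and test generators would use just the rescaling.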

3.5 Architecture of the Proposed Models Utilized for the Study

Three distinct CNN architectures, EfficientNetB3, MobileNetV2, and InceptionV3, were used in the experimental setup, allowing their performance to be compared.

3.5.1 EfficientNetB3

EfficientNetB3 is a member of the EfficientNet family, introduced by Tan and Le in their 2019 study “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks.” The family is built on compound scaling: rather than enlarging a single dimension, network depth, width, and input resolution are scaled together by a single compound coefficient, improving accuracy while keeping computation efficient.
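The compound-scaling rule from Tan and Le can be illustrated numerically. In their paper, depth, width, and resolution are scaled as α^φ, β^φ, and γ^φ with α = 1.2, β = 1.1, and γ = 1.15, chosen so that α · β² · γ² ≈ 2; because FLOPs grow linearly with depth and quadratically with width and resolution, each unit increase in φ roughly doubles the computation. The sketch below only evaluates this rule. The released B1 to B7 models use rounded coefficients (the Keras `EfficientNetB3` uses a width multiplier of 1.2, a depth multiplier of 1.4, and a default input size of 300 × 300, whereas this study feeds 224 × 224 images).

```python
# Compound-scaling constants found by grid search in Tan & Le (2019).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # bases for depth, width, and resolution


def compound_scaling(phi):
    """Return (depth, width, resolution, FLOPs) multipliers for compound coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # Conv-net FLOPs scale linearly with depth and quadratically with width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{f:.2f}")
```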

3.5.2 MobileNetV2

MobileNetV2, presented by Sandler et al. [48] in 2018, was designed for efficiency in mobile and edge computing environments. Its key innovations are inverted residuals and linear bottlenecks, which substantially reduce computational requirements while maintaining competitive accuracy [48].

MobileNetV2 was developed to meet the growing demand for efficient deep learning models that can run on resource-constrained devices such as smartphones and edge devices. Each inverted residual block expands a thin input with a 1 × 1 convolution, filters it with a lightweight 3 × 3 depthwise convolution, and projects it back to a thin representation, with the shortcut connection placed between the thin bottleneck layers. The final projection is linear (it has no non-linear activation), because applying a non-linearity in a low-dimensional space can destroy information. Together, these design choices reduce computational demands without compromising the model's ability to maintain high accuracy.
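The cost of this design can be checked with the multiply-add count given by Sandler et al. for a stride-1 inverted residual block on an h × w feature map with d′ input channels, d″ output channels, expansion factor t, and a k × k depthwise kernel: h · w · d′ · t · (d′ + k² + d″). The sketch below evaluates this expression term by term and, as an illustrative comparison chosen here rather than taken from the paper, sets it against an ordinary 3 × 3 convolution operating at the same expanded width. The feature-map size and channel counts are arbitrary example values.

```python
def inverted_residual_madds(h, w, d_in, d_out, t=6, k=3):
    """Multiply-adds of a stride-1 MobileNetV2 inverted residual block on an h x w map."""
    expanded = t * d_in
    expand = h * w * d_in * expanded        # 1x1 expansion conv (followed by ReLU6)
    depthwise = h * w * expanded * k * k    # k x k depthwise conv (followed by ReLU6)
    project = h * w * expanded * d_out      # 1x1 linear bottleneck (no activation)
    return expand + depthwise + project


def standard_conv_madds(h, w, d_in, d_out, k=3):
    """Multiply-adds of an ordinary k x k convolution."""
    return h * w * d_in * d_out * k * k


# Example: 28x28 map, 32-channel bottleneck expanded 6x to 192 channels.
block = inverted_residual_madds(28, 28, 32, 32)
wide_conv = standard_conv_madds(28, 28, 192, 192)
print(f"block: {block:,}  full 3x3 conv at 192 channels: {wide_conv:,}  ratio: {block / wide_conv:.3f}")
```

The block reaches a 192-channel intermediate representation for a small fraction of the cost of a dense 3 × 3 convolution at that width, which is the efficiency argument made above.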

The work of Sandler et al. [48] influenced subsequent deep learning models optimized for edge and mobile applications, showing that efficiency can be improved without a large sacrifice in performance.

3.5.3 InceptionV3

InceptionV3, developed at Google, was introduced by Szegedy et al. [49]. It is known for its inception modules, which apply parallel convolutions with different kernel sizes to capture spatial structure at several scales, yielding rich feature representations for image classification [49].

InceptionV3's architecture is built around the inception module. The original inception module runs 1 × 1, 3 × 3, and 5 × 5 convolutions and a max-pooling layer in parallel, so that the network captures both fine-grained and high-level features at multiple spatial scales simultaneously; the outputs of the parallel branches are concatenated into a rich and diverse feature representation. InceptionV3 refines this design by factorizing larger convolutions into cheaper ones: each 5 × 5 convolution is replaced by two stacked 3 × 3 convolutions, and n × n convolutions are decomposed into asymmetric 1 × n and n × 1 pairs.
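The savings from this factorization follow from simple arithmetic. Assuming the stacked layers keep the same number of channels, the cost per output position and per channel pair is proportional to the kernel area, so two 3 × 3 convolutions (18) replace one 5 × 5 convolution (25), and a 1 × 7 plus 7 × 1 pair (14) replaces a 7 × 7 convolution (49). The sketch below computes these ratios; the function name is hypothetical.

```python
def kernel_cost(kernel_shapes):
    """Multiply-adds per output position and per (input, output) channel pair
    for a stack of convolutions that all keep the same channel count."""
    return sum(kh * kw for kh, kw in kernel_shapes)


five_by_five = kernel_cost([(5, 5)])
two_three_by_three = kernel_cost([(3, 3), (3, 3)])
seven_by_seven = kernel_cost([(7, 7)])
asymmetric_seven = kernel_cost([(1, 7), (7, 1)])

print(f"5x5 -> two 3x3: {1 - two_three_by_three / five_by_five:.0%} fewer multiply-adds")
print(f"7x7 -> 1x7 + 7x1: {1 - asymmetric_seven / seven_by_seven:.0%} fewer multiply-adds")
```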

3.6 Training, Validation and Testing of the Models

The dataset was partitioned into three subsets: training, validation, and testing. The training set is used to train the deep learning models, allowing them to learn and refine their parameters, while the validation and test sets are used to assess the models' performance. Table 2 summarizes the split of the ISIC-2020 dataset.

| Class labels | Train dataset | Validation dataset | Test dataset |
|---|---|---|---|
| Melanoma | 5936 | 1272 | 1272 |
| Benign | 5936 | 1272 | 1272 |
| Total | 11,872 | 2544 | 2544 |

This partitioning yields 11,872 images for training, 2544 for validation, and 2544 for testing (Table 2). The split is balanced across classes: the training set comprises 70% of the samples, and the validation and test sets comprise 15% each. Table 3 lists the hyperparameter values used to train each model. This systematic partitioning ensures that the deep learning models are both effectively trained and rigorously evaluated, in line with established practice in machine learning.
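A class-stratified 70/15/15 split like the one in Table 2 can be sketched in a few lines of NumPy. The study does not state how its split was drawn; the sketch below, whose function name and seed are hypothetical, shuffles each class independently so that both classes keep the same proportions in every subset. Applied to 8480 images per class, it reproduces the 5936/1272/1272 per-class counts.

```python
import numpy as np


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split sample indices into train/val/test subsets, class by class."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    splits = {"train": [], "val": [], "test": []}
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle this class only
        n_train = round(fractions[0] * len(idx))
        n_val = round(fractions[1] * len(idx))
        splits["train"].append(idx[:n_train])
        splits["val"].append(idx[n_train:n_train + n_val])
        splits["test"].append(idx[n_train + n_val:])  # remainder goes to the test set
    return {name: np.concatenate(parts) for name, parts in splits.items()}


labels = np.array([0] * 8480 + [1] * 8480)  # 0 = benign, 1 = melanoma
parts = stratified_split(labels)
print({name: len(idx) for name, idx in parts.items()})
```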

| Hyperparameter | EfficientNetB3 | MobileNetV2 | InceptionV3 | Multi-architecture model |
|---|---|---|---|---|
| Image size | 224 × 224 | 224 × 224 | 224 × 224 | 224 × 224 |
| Learning rate | 0.00001 | 0.001 | 0.001 | 0.0001 |
| Epochs | 24 | 42 | 20 | 50 |
| Activation function | ReLU | ReLU | ReLU | ReLU |
| Optimizer | Adam | Adam | Adam | Adam |
| Dropout rate | 0.20 | 0.25 | 0.25 | 0.20 |
| Max_iter | 500 | 100 | 150 | 250 |
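For readers who want to reproduce a comparable setup, the sketch below wires the Table 3 values for the three individual models into Keras transfer-learning classifiers. It is a hedged sketch, not the authors' code: the ImageNet weights, global average pooling, a single dropout layer before a two-unit softmax head, and monitoring `val_loss` for early stopping are all assumptions, and `build_classifier` and `early_stopping` are hypothetical names. Keras' EfficientNet models include their own input rescaling and expect raw pixel values in [0, 255], while the Keras MobileNetV2 and InceptionV3 models expect inputs scaled to [-1, 1] by their `preprocess_input` functions, so the 0–1 rescaling used for augmentation would need to be reconciled with each backbone.

```python
# Per-model settings copied from Table 3 (image size is 224 x 224 for all models).
HYPERPARAMS = {
    "EfficientNetB3": {"learning_rate": 1e-5, "epochs": 24, "dropout": 0.20},
    "MobileNetV2": {"learning_rate": 1e-3, "epochs": 42, "dropout": 0.25},
    "InceptionV3": {"learning_rate": 1e-3, "epochs": 20, "dropout": 0.25},
}


def build_classifier(name, num_classes=2, image_size=224):
    """Hypothetical transfer-learning classifier: ImageNet backbone + dropout + softmax head."""
    import tensorflow as tf  # imported lazily; only needed when a model is actually built

    backbones = {
        "EfficientNetB3": tf.keras.applications.EfficientNetB3,
        "MobileNetV2": tf.keras.applications.MobileNetV2,
        "InceptionV3": tf.keras.applications.InceptionV3,
    }
    hp = HYPERPARAMS[name]
    base = backbones[name](
        include_top=False,
        weights="imagenet",
        input_shape=(image_size, image_size, 3),
        pooling="avg",  # global average pooling -> one feature vector per image
    )
    x = tf.keras.layers.Dropout(hp["dropout"])(base.output)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(base.input, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=hp["learning_rate"]),
        loss="categorical_crossentropy",
        metrics=["accuracy", tf.keras.metrics.AUC(name="auc")],
    )
    return model


def early_stopping():
    """Early stopping with a patience of 5; the monitored quantity is an assumption."""
    import tensorflow as tf

    return tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=5, restore_best_weights=True)
```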

3.6.1 Performance Evaluation of the Models
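The threshold-based metrics reported in Section 4 (accuracy, sensitivity, specificity, precision, and F1-score) can all be derived from a binary confusion matrix with melanoma as the positive class. The sketch below shows these standard definitions; the class and function names and the counts in the usage line are hypothetical. AUC, by contrast, is computed from the predicted probabilities over all decision thresholds (for example with scikit-learn's `roc_auc_score`) and cannot be recovered from a single confusion matrix.

```python
from dataclasses import dataclass


@dataclass
class BinaryConfusion:
    tp: int  # melanoma predicted as melanoma
    fn: int  # melanoma predicted as benign
    fp: int  # benign predicted as melanoma
    tn: int  # benign predicted as benign


def metrics(cm: BinaryConfusion) -> dict:
    total = cm.tp + cm.fn + cm.fp + cm.tn
    sensitivity = cm.tp / (cm.tp + cm.fn)  # recall of the melanoma class
    specificity = cm.tn / (cm.tn + cm.fp)  # recall of the benign class
    precision = cm.tp / (cm.tp + cm.fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    return {
        "accuracy": (cm.tp + cm.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


print(metrics(BinaryConfusion(tp=90, fn=10, fp=5, tn=95)))
```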

4 Results and Discussion

This section presents the evaluation results of the three chosen CNN architectures: EfficientNetB3, MobileNetV2, and InceptionV3. The architecture with the best performance across Accuracy, F1-score, Sensitivity, Specificity, Precision, and AUC was integrated into a web application, whose results are also described here.

4.1 Results

Table 4 details the performance of each of the three CNN architectures on the ISIC-2020 dataset using the following training parameters: the Adam optimizer, categorical cross-entropy loss, 100 epochs, the default learning rate, and early stopping with a patience of 5.

| CNN architecture | Accuracy | ROC-AUC | F1-score | Inference time | GFLOPs | FLOPs |
|---|---|---|---|---|---|---|
| EfficientNetB3 | 90.70% | 97.00% | 91.00% | 0.146 s | 5.281 | 6,163,141,286 |
| MobileNetV2 | 90.00% | 96.00% | 89.00% | 0.272 s | 4.19 | 4,813,171,932 |
| InceptionV3 | 88.64% | 96.00% | 89.00% | 0.258 s | 8.64 | 9,893,972,863 |
| Multi-architecture model | 94.86% | 97.00% | 95.00% | 0.2686 s | 40.57 | 41,809,734,613 |

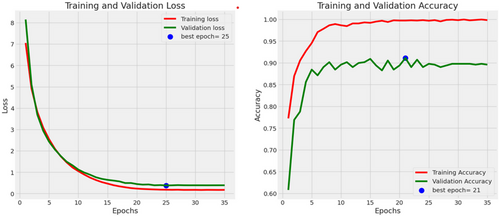

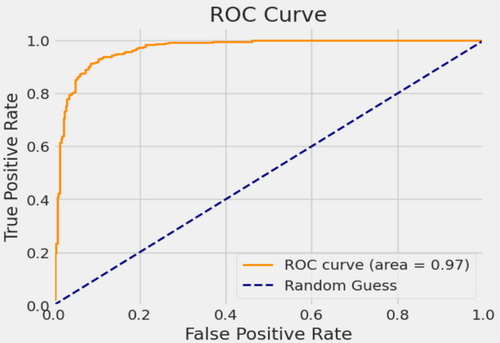

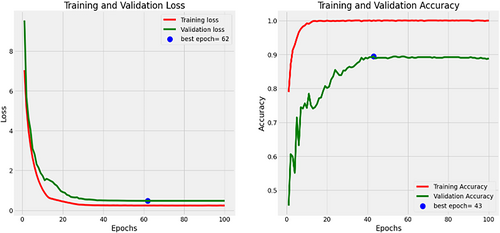

In the evaluation of the EfficientNetB3 architecture, the model achieved an accuracy of 90.7%, a precision of 90%, a recall of 89%, an F1-score of 91%, and an AUC of 97%. Figure 6 shows the training and validation accuracy and loss for each epoch. Training and validation losses decline from epoch 3 onward and stabilize after 20 epochs, while both training and validation accuracy increase markedly after epoch 2. Training accuracy stabilized after 20 epochs, and validation accuracy plateaued after 24 epochs. Figure 7 shows EfficientNetB3's classification performance on the validation and testing datasets, with an area under the curve (AUC) of 97%. In this figure, the ROC curve is shown in dark orange, and the navy-blue line is the reference for random guessing.

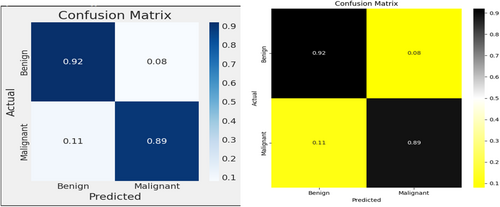

The confusion matrix was used to assess the model's recall, accuracy, and precision. It visualizes the classification results, with correct classifications shown in a darker shade and misclassified samples in a lighter shade. As shown in Figure 8, EfficientNetB3 correctly identified 92% of malignant lesion images and 89% of benign lesion images. The overall accuracy of EfficientNetB3 was therefore 90.7%, corresponding to an error rate of 9.3%, which indicates good generalization to unseen test data.
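Because the test set is balanced (1272 images per class, Table 2), overall accuracy is simply the mean of the two per-class accuracies. The sketch below applies this to the rounded per-class figures reported in this section for EfficientNetB3, MobileNetV2, and InceptionV3; it gives 90.5%, 90.0%, and 88.5%, which agree with the reported 90.7%, 90%, and 88.6% up to the rounding of the per-class percentages. The helper name is hypothetical.

```python
TEST_PER_CLASS = 1272  # balanced test set from Table 2


def overall_accuracy(recall_malignant, recall_benign,
                     n_malignant=TEST_PER_CLASS, n_benign=TEST_PER_CLASS):
    """Overall accuracy as the count-weighted mean of the per-class accuracies (recalls)."""
    correct = recall_malignant * n_malignant + recall_benign * n_benign
    return correct / (n_malignant + n_benign)


# Per-class accuracies read from the confusion matrices (rounded to whole percent).
for name, mal, ben in [("EfficientNetB3", 0.92, 0.89),
                       ("MobileNetV2", 0.86, 0.94),
                       ("InceptionV3", 0.86, 0.91)]:
    print(f"{name}: {overall_accuracy(mal, ben):.1%}")
```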

In the evaluation of the MobileNetV2 architecture, the model achieved an accuracy of 90%, a precision of 90%, a recall of 86%, an F1-score of 89%, and an AUC of 96%. Figure 9 shows the training and validation accuracy and loss for each epoch. Training and validation losses decline from the outset and stabilize after 40 epochs, while both training and validation accuracy increase markedly after epoch 2. Training accuracy stabilized after 20 epochs, and validation accuracy plateaued after 42 epochs.

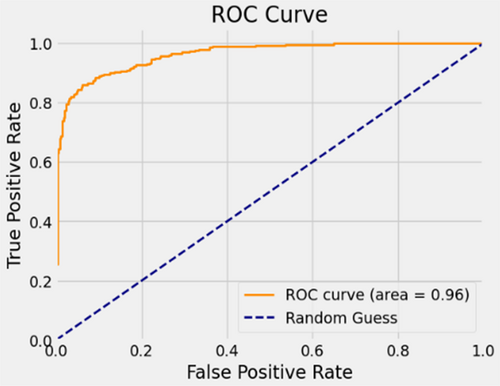

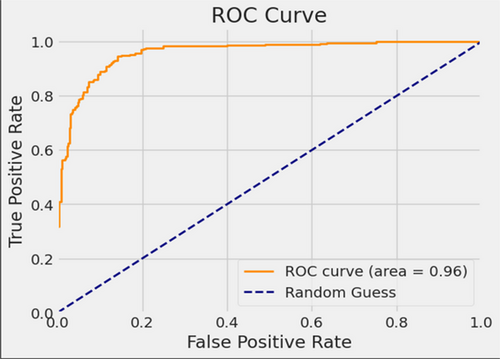

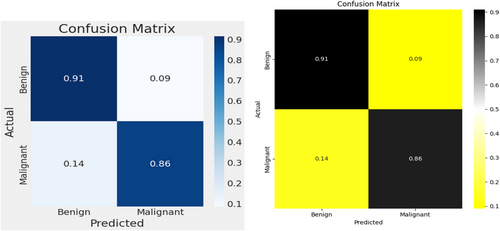

Figure 10 shows MobileNetV2's classification performance on the validation and testing datasets, with an AUC of 96%. In this figure, the ROC curve is shown in dark orange, and the navy-blue line is the reference for random guessing.

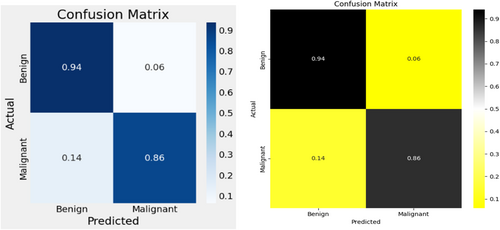

MobileNetV2 correctly identified 86% of malignant lesion images and 94% of benign lesion images, as shown in Figure 11. Its overall accuracy was therefore 90%, corresponding to an error rate of 10%.

In the evaluation of the InceptionV3 architecture, the model achieved an accuracy of 88.6%, a precision of 88.64%, a recall of 86%, an F1-score of 89%, and an AUC of 96%. Figure 12 shows the training and validation accuracy and loss for each epoch. Training loss declines after the first epoch, and validation loss decreases from epoch 3 onward, both stabilizing after 20 epochs. Training and validation accuracy both increase markedly after epoch 5 and plateau after 20 epochs.

Figure 13 shows InceptionV3's classification performance on the validation and testing datasets, with an AUC of 96%. In this figure, the ROC curve is shown in dark orange, and the navy-blue line is the reference for random guessing.

InceptionV3 correctly identified 86% of malignant lesion images and 91% of benign lesion images, as shown in Figure 14. Its overall accuracy was therefore 88.6%, corresponding to an error rate of 11.4%.

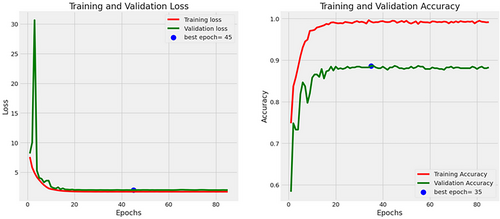

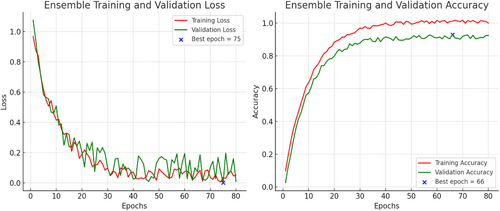

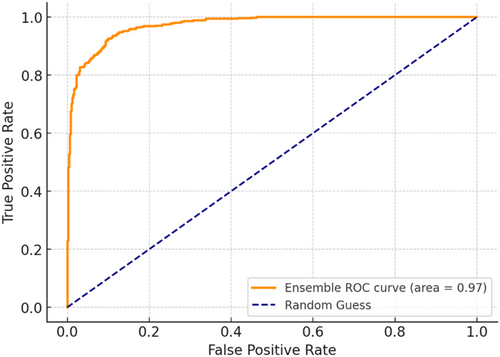

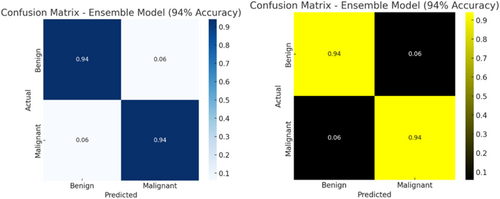

The multi-architecture model achieves an accuracy of 94.86%, higher than that of InceptionV3, MobileNetV2, and EfficientNetB3, with an AUC-ROC of 97% and an F1-score of 95%. Figure 15 shows the loss and accuracy curves of the multi-architecture model, Figure 16 its ROC curve, and Figure 17 the confusion matrix used to evaluate it.

As shown in Figure 15, training loss declines after the first epoch and validation loss decreases from epoch 3 onward, stabilizing after 50 epochs. Training and validation accuracy increase markedly after epoch 10; training accuracy stabilizes after 30 epochs, and validation accuracy plateaus after 50 epochs. The multi-architecture model was built using a cascading combination technique: the outputs of multiple layers of the individual models were combined and processed in a structured pipeline, with each stage refining the previous stage's output. This cascading combination improves the overall performance of the multi-architecture model and is intended to keep its computational cost manageable while balancing accuracy.
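The text does not specify exactly how the cascading combination wires the three backbones together. One plausible reading, assumed here and not necessarily the authors' pipeline, is feature-level fusion: each backbone's pooled feature vector is concatenated into a single representation and passed to a shared classification head (in Keras, a `tf.keras.layers.Concatenate` over the pooled outputs). The NumPy sketch below shows only the shapes and the softmax head; the feature sizes are those of the Keras backbones with global average pooling, the weights are random, and `fuse_and_classify` is a hypothetical name.

```python
import numpy as np

# Pooled feature sizes of the Keras backbones (include_top=False, global average pooling).
FEATURE_DIMS = {"EfficientNetB3": 1536, "MobileNetV2": 1280, "InceptionV3": 2048}


def fuse_and_classify(features, weights, bias):
    """Concatenate per-backbone feature vectors and apply a softmax classification head.

    features: dict name -> (batch, dim) array for every backbone in FEATURE_DIMS.
    weights:  (sum of dims, num_classes) array; bias: (num_classes,) array.
    """
    fused = np.concatenate([features[name] for name in FEATURE_DIMS], axis=1)
    logits = fused @ weights + bias
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
batch, num_classes = 4, 2
feats = {name: rng.standard_normal((batch, dim)) for name, dim in FEATURE_DIMS.items()}
total_dim = sum(FEATURE_DIMS.values())
W = rng.standard_normal((total_dim, num_classes)) * 0.01
b = np.zeros(num_classes)
probs = fuse_and_classify(feats, W, b)
print(total_dim, probs.shape)
```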

4.2 Discussion

EfficientNetB3 performed well across the evaluation metrics. Its accuracy of 90.7% reflects its ability to make correct predictions, and its precision, recall, and F1-score of 90%, 89%, and 91%, respectively, indicate a balanced ability to detect both benign and malignant lesions. Its AUC of 97% indicates a high level of class separability, making it a robust choice for skin cancer detection. One potential weakness is that it may require substantial computational resources, which could affect real-time processing. Nevertheless, given its superior performance, it was selected as the best-performing individual architecture for deployment in the web application.

MobileNetV2 performed well but slightly behind EfficientNetB3. It achieved an accuracy of 90% and a precision of 90%. Its recall was 86% and its F1-score 89%, indicating slightly lower sensitivity than EfficientNetB3. Its AUC of 96% demonstrates good separability between classes. One strength of MobileNetV2 is its computational efficiency, which makes it suitable for resource-constrained environments; however, its accuracy, recall, and AUC were marginally lower than those of EfficientNetB3.

InceptionV3, while a powerful architecture, performed slightly below the other two models, with an accuracy of 88.64%, a precision of 88.64%, a recall of 86%, and an F1-score of 89%. Its AUC of 96% was competitive but slightly lower than that of EfficientNetB3. InceptionV3's strength lies in its adaptability to different tasks and datasets. However, its lower accuracy, precision, and F1-score compared with EfficientNetB3 led us to choose EfficientNetB3 for our web application.

To place our models in the context of the literature, we compared their performance with previously published methods for classifying melanoma and benign skin lesions. Our models achieved higher Accuracy and AUC than those reported in these recent studies.

EfficientNetB3 has an inference time of 0.146 s, 5.281 GFLOPs, and 6,163,141,286 FLOPs; MobileNetV2 has an inference time of 0.272 s, 4.19 GFLOPs, and 4,813,171,932 FLOPs; InceptionV3 has an inference time of 0.258 s, 8.64 GFLOPs, and 9,893,972,863 FLOPs; and the multi-architecture model has an inference time of 0.2686 s, 40.57 GFLOPs, and 41,809,734,613 FLOPs. Inference time indicates how long each model takes to process an image, which determines whether it can run practically on edge devices with real-life data. Fast inference can extend the global health impact of such tools, particularly in developing communities where medical personnel and facilities are limited, by supporting accurate and timely diagnoses. FLOPs (floating-point operations) count the arithmetic operations a model performs per inference and thus measure its computational demand on the processor, while GFLOPs express such operation counts in billions and were used to compare the computational load on the GPU used to train the models.
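The text does not state how inference time was measured. A common protocol, assumed here, is to discard a few warm-up calls (which often include one-off setup cost such as graph tracing) and report the median wall-clock time of repeated single-image predictions. The sketch below implements that protocol with the standard library; `measure_latency` is a hypothetical helper. For a Keras model, `predict` could be `lambda x: model(x, training=False)`. Timings measured on a workstation will differ from those on an edge device, so the target hardware should be measured directly.

```python
import statistics
import time


def measure_latency(predict, sample, warmup=3, runs=20):
    """Median wall-clock seconds per call of predict(sample), after warm-up calls."""
    for _ in range(warmup):
        predict(sample)  # excluded from timing: first calls often include setup cost
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Usage with a stand-in "model" that just sorts a list.
print(f"{measure_latency(sorted, list(range(10_000, 0, -1))):.6f} s")
```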

Specifically, Jaisakthi et al. [21] achieved an ROC-AUC of 96% when evaluating their EfficientNet-B6-based system with a range optimizer on the ISIC-2019 dataset for classifying skin lesions as melanoma or non-melanoma. Qasim Gilani et al. [50] achieved an accuracy of 89.57% and an F1-score of 90.07% using a spiking VGG-13 model to classify 3670 melanoma and 3323 non-melanoma images from the ISIC-2019 dataset. As summarized in Table 5, our proposed models outperform these prior studies, demonstrating their effectiveness in skin cancer classification.

| Author(s) | Model/technique | Dataset | Results (%) |
|---|---|---|---|
| Jaisakthi et al. [21] | DCNN with EfficientNet-B6 | ISIC-2019, ISIC-2020 | ROC-AUC: 96.00 |
| Qasim Gilani et al. [50] | Spiking VGG-13 | ISIC-2019 | Accuracy: 89.57; F1-score: 90.07 |
| Gouda et al. [51] | InceptionV3 | ISIC-2018 | Accuracy: 85.70; ROC-AUC: 86.00 |
| Alwakid et al. [52] | CNN, modified ResNet-50 | HAM10000 | Accuracy: 86.00; F1-score: 86.00 |
| Bechelli & Delhommelle [53] | VGG16 | Kaggle database | Accuracy: 88.00; F1-score: 88.00 |

Gouda et al. [51] applied the InceptionV3 model to the ISIC-2018 dataset and obtained an accuracy of 85.7% and a ROC-AUC of 86% in classifying malignant and benign cases. Alwakid et al. [52] introduced a CNN and a modified ResNet-50 model for skin cancer detection, achieving an accuracy and an F1-score of 86% on the HAM10000 dataset. Lastly, Bechelli and Delhommelle [53] studied skin tumor classification on a Kaggle database, achieving an accuracy and an F1-score of 88%. The ROC curves with their AUC, the loss and accuracy curves, and the confusion matrices evaluate the detection performance of our models: the confusion matrix counts correct and incorrect predictions for each class (true and false positives and negatives), while the ROC curve plots the true-positive rate against the false-positive rate across decision thresholds.
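The metrics named above all derive from the same binary predictions. The following is a minimal pure-Python sketch, assuming labels coded as 1 = melanoma and 0 = benign and a per-image melanoma score for the ROC-AUC; the function names are hypothetical and not taken from the study's code. The AUC is computed through its rank interpretation: the probability that a randomly chosen positive scores higher than a randomly chosen negative.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """TP, FP, TN, FN for binary labels (1 = melanoma, 0 = benign)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),
    }


def summary_metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity, precision, F1 (assumes no zero denominators)."""
    total = c["tp"] + c["fp"] + c["tn"] + c["fn"]
    sensitivity = c["tp"] / (c["tp"] + c["fn"])  # true-positive rate (recall)
    specificity = c["tn"] / (c["tn"] + c["fp"])  # true-negative rate
    precision = c["tp"] / (c["tp"] + c["fp"])
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(random positive outscores random negative); ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

This pairwise form is O(positives × negatives), which is fine for illustration; library implementations sort the scores instead and give the same value.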

During training, we observed that without augmentation the models performed poorly: they tended to memorize the training patterns rather than generalize to unseen data. A non-augmented dataset has limited diversity, which can cause overfitting or underfitting on real-world data because the models fail to recognize variations such as lighting and noise. Introducing image augmentation improved generalization and helped mitigate overfitting by exposing the models to a more diverse dataset. This generally leads to higher validation and test accuracy, as the models learn robust features that make them more resilient to both seen and unseen data.
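The kind of variation described above can be illustrated with a small augmentation function. This is a hypothetical pure-Python sketch, not the augmentation pipeline used in this study (whose transformations and parameters are not listed here): it represents a grayscale image as a list of rows with values in [0, 1] and applies a random horizontal flip, a global brightness change to mimic lighting variation, and additive Gaussian noise. In practice, libraries such as Keras provide equivalent preprocessing layers (for example `RandomFlip` and `RandomContrast`).

```python
import random
from typing import List

Image = List[List[float]]  # hypothetical grayscale image, pixel values in [0, 1]


def augment(image: Image, rng: random.Random,
            max_brightness_shift: float = 0.2,
            noise_std: float = 0.02) -> Image:
    """Return a randomly augmented copy; the input image is not modified."""
    # Random horizontal flip with probability 0.5
    if rng.random() < 0.5:
        rows = [row[::-1] for row in image]
    else:
        rows = [row[:] for row in image]
    # One brightness factor per image to mimic a change in lighting
    factor = 1.0 + rng.uniform(-max_brightness_shift, max_brightness_shift)
    out: Image = []
    for row in rows:
        new_row = []
        for v in row:
            v = v * factor + rng.gauss(0.0, noise_std)  # additive Gaussian noise
            new_row.append(min(1.0, max(0.0, v)))        # clip to the valid range
        out.append(new_row)
    return out
```

Because a fresh random transformation is drawn every time an image is fed to the model, each training epoch sees slightly different versions of the same lesions, which is what increases the effective diversity of the dataset.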

As a result, the models trained on the augmented dataset achieved higher accuracy: although their loss curves converged more slowly, they generalized better during validation and testing. The models' performance was also assessed at a 95% confidence level with a 5% margin of error, against a standard performance threshold of 80% set for the models. Overall, the results indicate that image augmentation markedly improved the models' accuracy.
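The relationship between a 95% confidence level, a margin of error, and a performance threshold can be worked through for a proportion such as accuracy. The sketch below is an assumption-laden illustration: it uses the normal-approximation (Wald) interval, hypothetical helper names, and hypothetical counts, because the interval method and test-set size behind the reported figures are not stated here. For small samples or accuracies near 0 or 1, a Wilson interval is usually preferred.

```python
import math
from statistics import NormalDist
from typing import Tuple


def accuracy_ci(correct: int, n: int,
                confidence: float = 0.95) -> Tuple[float, float, Tuple[float, float]]:
    """Wald interval for accuracy: returns (p, margin, (low, high))."""
    p = correct / n
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    margin = z * math.sqrt(p * (1 - p) / n)
    return p, margin, (max(0.0, p - margin), min(1.0, p + margin))


def meets_threshold(correct: int, n: int, threshold: float = 0.80,
                    confidence: float = 0.95) -> bool:
    """True only if the entire interval lies above the threshold."""
    _, _, (low, _) = accuracy_ci(correct, n, confidence)
    return low > threshold


def sample_size_for_margin(margin: float, p: float = 0.5,
                           confidence: float = 0.95) -> int:
    """Test-set size needed for a given margin (p = 0.5 is the worst case)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)
```

With these formulas, 90 correct out of 100 hypothetical test images gives a margin of about 0.059 and an interval of roughly (0.841, 0.959), which clears an 80% threshold, while 82 out of 100 does not. Guaranteeing a 5% margin at 95% confidence in the worst case requires `sample_size_for_margin(0.05)` = 385 test images.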

4.3 Conclusion

This study exploited the capabilities of CNNs, specifically EfficientNetB3, MobileNetV2, and InceptionV3, for the detection of melanoma (a skin lesion disease). The best-performing model, EfficientNetB3, achieved an accuracy of 90.7%, with a sensitivity of 89% and a specificity of 91%, when evaluated on the ISIC-2020 test dataset. These results underscore the efficacy of the model as a reliable tool for aiding the early detection of skin cancer.

The findings from this study strongly support the potential of a non-invasive approach to melanoma detection. This approach is particularly suitable for regions with a high incidence of melanoma, offering a cost-effective and accessible means of early detection. Although the selected architecture, EfficientNetB3, achieved commendable accuracy, future research should aim for near-perfect classification. This could be pursued through careful fine-tuning of the model, hyperparameter optimization, and advanced training techniques, such as multi-architecture models trained on larger and more diverse datasets.

Future work will consider developing an offline-capable version of the web application, which would be a significant step forward in accessibility: it would enable users to perform melanoma self-assessments offline, ensuring that the tool remains available even in areas with limited internet connectivity.

Author Contributions

Justice Williams Asare: conceptualization, methodology, data curation, investigation, formal analysis, visualization, writing – original draft, writing – review and editing. Emmanuel Akwah Kyei: conceptualization, methodology, data curation, investigation, validation, writing – original draft, visualization, writing – review and editing. Seth Alornyo: investigation, formal analysis, validation, writing – review and editing, methodology, project administration. Emmanuel Freeman: validation, formal analysis, resources, writing – review and editing, project administration. Martin Mabeifam Ujakpa: methodology, validation, resources, investigation, writing – review and editing, formal analysis. William Leslie Brown-Acquaye: writing – review and editing, formal analysis, supervision, investigation, validation. Alfred Coleman: writing – review and editing, supervision, validation, formal analysis, visualization. Forgor Lempogo: investigation, validation, formal analysis, writing – review and editing, visualization.

Acknowledgments

The authors express sincere gratitude to the Faculty of Computing and Information Systems of Ghana Communication Technology University for assisting us with the various resources required for this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.