Design and Research of Intelligent Chatbot for Campus Information Consultation Assistant

ABSTRACT

Artificial intelligence is advancing rapidly, and chatbots have become an important part of daily life. This paper explores the design principles, implementation methods, and real-world applications of chatbots. Combining natural language processing, machine learning, and related technologies, it designs and implements a chatbot consultation assistant that understands users' natural language input and gives reasonable and engaging answers. The assistant can reduce teachers' workload. Teachers often need to publish learning materials and interact with students through instant messaging software such as WeChat and QQ, and a chatbot can handle tasks of this kind automatically, for example by publishing learning materials and answering students' questions. It can also automatically collect and process teaching data, such as students' learning progress and grades, to help teachers better understand students' learning situations and optimize teaching strategies. The assistant can also improve the student experience. Used as the human-computer interaction interface, a chatbot makes the learning environment livelier and learning more interesting. Students get instant feedback and guidance by interacting with the chatbot consultation assistant, which improves learning outcomes and enthusiasm.

1 Introduction

The study of chatbots dates back to the 1950s, when Alan Turing proposed the Turing test to answer the question “Can machines think?”, which subsequently sparked a wave of research on artificial intelligence [1]. Chatbots can be applied to multiple human-computer interaction scenarios, such as question-answering systems, negotiations, e-commerce, and coaching [2]. Recently, with the growth of mobile terminals, they have also been used as virtual assistants on mobile devices, such as Apple's Siri, Microsoft's Cortana, Messenger, and Google Assistant, allowing users to obtain information and services from the terminal more easily [3]. The design goals of current mainstream chatbots are mainly concentrated in four aspects: (1) Small talk, that is, answering greetings, emotions, and entertainment information; (2) Command execution, helping users complete specific tasks, including hotel and restaurant reservations, flight ticket inquiries, travel guides, and web searches; (3) Question and answer, meeting users' needs for knowledge and information acquisition; (4) Recommendation, recommending personalized content by analyzing user interests and conversation history [4].

The chatbot consultation assistant is an intelligent tool that can talk and communicate with humans in natural language. It provides users with an easy and pleasant communication experience by simulating human conversation. With the continuous advancement of artificial intelligence technology, chatbots have shown broad application prospects in entertainment, customer service, education, and other fields [5]. Therefore, the design and implementation of the chatbot consultation assistant have important theoretical value and practical significance [6].

By assisting teaching, the chatbot assistant can play an essential part in educational and management system platforms [7]. Students can use it for support and tutoring. It can interact with students and answer course-related questions such as fees, courses covered, and completion dates. Thus, it can improve information transparency and student satisfaction.

Through natural language processing and machine learning capabilities, chatbots are able to provide personalized real-time support to help students better understand and master knowledge [8].

Chatbots mainly rely on NLP and machine learning, and are trained on text, speech, image, and video data. These technologies enable chatbots to understand the meaning of human language, perform semantic analysis and information extraction based on the context, and provide answers or suggestions that meet users' needs [9].

As artificial intelligence advances rapidly, chatbot technology is also constantly innovating. For example, models such as BERT and GPT-4 provide chatbots with powerful semantic understanding and generation capabilities [10]. At the same time, the application of technologies such as predictive maintenance and natural language control has further improved the intelligence level of chatbots [11]. Chatbots already have many applications in the fields below.

1.1 Customer Service

Chatbots are widely used in customer service. They can handle a large number of repeated customer inquiries and provide instant answers. Through chatbots, people can get uninterrupted 24-h support, which improves the customer experience [12].

1.2 Health and Medical

In health and medical care, chatbots can provide users with health consultation, disease diagnosis, and treatment advice. By talking to patients, chatbots can learn about their condition promptly and provide timely guidance and support [13].

1.3 Entertainment and Socialization

Chatbots can generate various types of entertainment content. They can engage in interesting conversations with users and provide entertainment content such as jokes and stories [14]. In addition, chatbots can simulate human conversations, engage in emotional communication with users, and meet their social needs [15].

1.4 Finance and Insurance

Chatbots can assist customers in querying account information, obtaining financial advice and insurance quotes, etc., and can answer common questions about the financial industry [16].

1.5 Education and Training

Chatbots also have great potential in the field of education and training, and can be used in language learning, programming education, etc. They can provide personalized learning support based on students' needs and improve learning outcomes through interactive dialog [17].

Despite the continuous advancement of technology, chatbots still face some challenges, such as how to accurately identify the meaning of human language and how to protect user privacy and security. In addition, chatbots in different fields need a lot of training data and algorithm models to perform well, which also places high demands on technology and data [18].

The University of Cambridge in the UK has launched an educational product based on chatbots, aiming to help students learn more effectively and improve their learning efficiency [19]. Harvard University's computer science course CS50 introduced an AI chatbot called “CS50 Duck” to help students check and improve their code [20]. According to survey data, most students think the tool is very useful. Stanford University in the US has launched a campus assistant app, “Beacon”, which allows students to make personalized course inquiries by typing or voice [21].

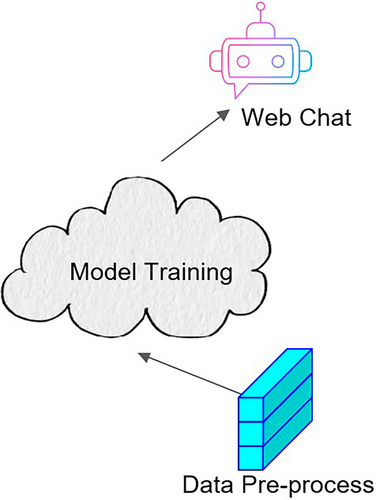

This system is written in Python and trains on the dataset with the sequence-to-sequence (seqtoseq) deep learning model. In terms of system design, it follows the idea of flexible configuration and modular code. It is divided into three modules: data preprocessing, model training, and visualization display. The logical relationship between the modules is roughly as follows: (1) The data preprocessing module combines data cleaning, integration, transformation, and feature engineering. (2) The training model is a seqtoseq model based on TensorFlow, and all calculations are performed through this network model. (3) The visualization display is a simple human-computer interaction program written in a web framework, which calls the application interface for human-computer dialog at runtime.

2 Overall Structure

2.1 Introduction to Structure

The design principles of chatbots mainly include three parts: natural language processing, data set construction, and dialog generation.

2.1.1 Natural Language Processing

Natural language processing is one of the core technologies of chatbots. It covers multiple aspects of language analysis and understanding. By analyzing the language input, the chatbot can understand the user's intentions and needs, providing a basis for subsequent dialog generation [22].

2.1.2 Dataset Construction

The data set is an important basis for chatbots to generate dialogs [23]. It contains rich knowledge information and dialog templates. When building a knowledge base, it is necessary to consider the source, organization, and update mechanism of knowledge [24]. At the same time, it is also necessary to reasonably classify and annotate knowledge so that the chatbot can quickly retrieve and match relevant knowledge during the dialog process [25].

Sometimes the amount of information in the dataset far exceeds what users can process, and the model is likewise overloaded with information beyond its effective processing capacity [26, 27]. When valuable information is hard to filter out, information is used less efficiently. Processing it takes more time and effort, increases the burden on users, and prevents them from fully and accurately understanding the relevant information, which affects the quality of decision-making. It is therefore particularly important to filter the dataset to reduce the interference of invalid information [28].

Constructing a personalized intelligent chat assistant model requires studying users, their category interests, and their feature word preferences [29]. We have established a campus information technology professional dataset by collecting user interest data such as registration, browsed information pages, information query keywords, forum posts, and replies. To update the weights of feature words, we use a forgetting function that is updated over time. It updates the topics of interest to the user and thus the user model. Furthermore, the question-answering model of the personalized intelligent chatbot assistant is modified to construct an upgradable dataset of user concerns.
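The forgetting function is not specified further in the text. As a minimal sketch, assuming an exponential decay with a hypothetical half-life parameter (`half_life_days` and the function names are illustrative, not taken from the system), the weight update could look like this:

```python
import math

def decay_weight(weight, days_since_last_seen, half_life_days=30.0):
    """Exponentially decay one feature-word weight (assumed form of the forgetting function)."""
    return weight * math.exp(-math.log(2) * days_since_last_seen / half_life_days)

def update_user_model(weights, last_seen_day, today, half_life_days=30.0):
    """Decay every feature-word weight, then renormalize so the weights sum to one."""
    decayed = {
        word: decay_weight(w, today - last_seen_day[word], half_life_days)
        for word, w in weights.items()
    }
    total = sum(decayed.values())
    if total == 0:
        return decayed
    return {word: w / total for word, w in decayed.items()}

# A word seen today keeps its weight; one last seen 30 days ago is halved before renormalizing.
model = update_user_model(
    {"exam": 0.5, "library": 0.5},
    {"exam": 100, "library": 70},
    today=100,
)
```

With this choice, topics the user has not touched recently fade gradually instead of being dropped abruptly.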

2.1.3 Dialog Generation

Dialog generation is one of the core tasks of chatbots [30]. It retrieves relevant knowledge and templates from the knowledge base based on the user's input and the current context of the interaction, and generates reasonable answers [31]. In the process of dialog generation, it is necessary to consider the coherence, rationality, and fun of the answer [25]. At the same time, it is also necessary to continuously adjust and optimize the dialog generation strategy based on user feedback and the progress of the dialog [31].

The overall framework diagram is as follows in Figure 1.

The system saves the trained model in a fixed directory and derives the model name from the configured model parameters. The corpus dataset is trained with configurable parameters, including the number of samples per batch, the number of training epochs, the sample size, and the dropout retention ratio.

The implementation methods of the chatbot mainly include rule-based methods, template-based methods, and machine learning methods [32].
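A configuration of this kind can be sketched as a small dataclass. The field names, the directory, the file-name pattern, and the `batch_size` and `keep_prob` defaults below are assumptions for illustration; only the learning rate, epoch count, and hidden size echo values reported later in the paper.

```python
from dataclasses import dataclass
from pathlib import Path

@dataclass(frozen=True)
class TrainConfig:
    model_dir: str = "model"      # hypothetical fixed output directory
    batch_size: int = 64          # hypothetical number of samples per batch
    epochs: int = 10              # number of training epochs
    keep_prob: float = 0.8        # hypothetical dropout retention ratio
    learning_rate: float = 0.001
    hidden_size: int = 256

    def model_path(self) -> Path:
        """Derive the model file name from the configured parameters."""
        name = f"seqtoseq_bs{self.batch_size}_ep{self.epochs}_kp{self.keep_prob}.ckpt"
        return Path(self.model_dir) / name

config = TrainConfig()
checkpoint = config.model_path()
```

Encoding the parameters in the file name keeps models trained with different settings from overwriting each other.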

2.1.4 Rule-Based Methods

The rule-based method achieves dialog generation by predefining a series of rules. These rules determine what kind of answer the robot should give, based on the information that the user provides in the dialog [25]. This method is simple and easy to put into practice, but it lacks flexibility and scalability.
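As a minimal sketch of the rule-based idea, the rules below are regular expressions paired with fixed answers. The patterns and answers are invented for illustration and are not the system's actual rules.

```python
import re

# Each rule pairs a pattern with a fixed answer; the first matching rule wins.
RULES = [
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello! How can I help you?"),
    (re.compile(r"\blibrary\b.*\b(open|hours)\b", re.IGNORECASE),
     "Please check the library page for opening hours."),
]
FALLBACK = "Sorry, I do not understand the question."

def rule_based_reply(text):
    """Return the answer of the first rule whose pattern matches the input."""
    for pattern, answer in RULES:
        if pattern.search(text):
            return answer
    return FALLBACK
```

The fallback answer shows the limitation mentioned above: any input outside the predefined rules gets the same generic reply.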

2.1.5 Template-Based Methods

The template-based method achieves dialog generation by building a series of dialog templates. These templates contain fixed sentences and replaceable variables [31]. During the dialog, the chatbot selects a suitable template based on the user's question and the current context of the interaction, and fills in the corresponding variables to generate an answer. This method has a certain degree of flexibility and scalability, but designing and building the templates requires a lot of time and effort [33].
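The template idea can be sketched with Python's standard `string.Template`. The intent names, template sentences, and slot names below are hypothetical examples built around the course questions mentioned in the introduction.

```python
from string import Template

# Fixed sentences with replaceable variables, keyed by a hypothetical intent name.
TEMPLATES = {
    "course_fee": Template("The fee for $course is $fee."),
    "completion_date": Template("$course is scheduled to finish on $date."),
}

def fill_template(intent, slots):
    """Select the template for the intent and fill in its variables."""
    template = TEMPLATES.get(intent)
    if template is None:
        return "Sorry, I have no template for that question."
    # safe_substitute leaves unknown placeholders in place instead of raising.
    return template.safe_substitute(slots)

answer = fill_template("course_fee", {"course": "Python Basics", "fee": "200 yuan"})
```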

2.1.6 Method Based on Machine Learning

The method based on machine learning achieves dialog generation by training models. This method first requires preprocessing and labeling a large amount of conversation data, and then uses machine learning algorithms to train a model that can generate reasonable answers [34]. During the conversation, the chatbot takes the user's input and the current context of the conversation as input and generates answers through the model. This method is highly flexible and scalable but requires a lot of data and computing resources to train and optimize the model [35].

2.1.7 Data Analysis Methods

Data analysis methods include mathematical analysis, logical analysis and reasoning, statistical models, and other methods. They also cover establishing statistical models, verifying and computing them, reasoning with them, recording intermediate results, and evaluating and comparing models [36].

2.2 SeqtoSeq Training Model

2.2.1 Introduction to SeqtoSeq Model

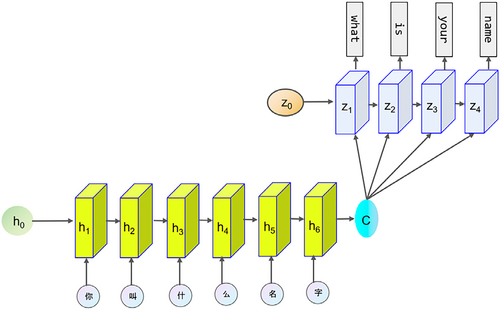

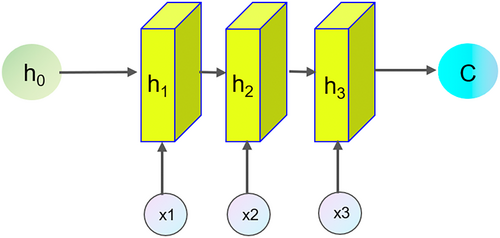

When the output length of a sequence varies, we usually choose the seqtoseq model. This situation is typical of machine translation, where differences in expression and grammar between languages mean that the translation often differs in length from the source [17]. As shown in Figure 2, the length of the Chinese input is 6, and the length of the English output is 4.

In the network structure, a Chinese sequence is the input, and its corresponding English translation is the output. In the prediction stage of the example above, “what” is output first and is then used as the next input, after which “is”, “your”, and “name” are output one by one. In this way, a sequence of any length can be produced. Machine translation, human-computer dialog, and chatbots all apply seqtoseq to a greater or lesser extent. As a simple example, in machine translation the input “Hello” in the source language yields “hello” in the target language. In human-computer dialog, we ask the machine “who are you”, and the machine answers “I am so-and-so”.
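The step-by-step prediction described above, where each output token becomes the next input, can be sketched as a greedy decoding loop. The lookup table below stands in for a trained decoder and is purely a toy assumption; a real decoder also conditions on the encoder state and the full output history.

```python
def greedy_decode(next_token, start="go", end="eos", max_len=10):
    """Feed each predicted token back in as the next input until the end symbol appears."""
    output = []
    previous = start
    for _ in range(max_len):
        token = next_token(previous)
        if token == end:
            break
        output.append(token)
        previous = token
    return output

# Toy stand-in for the decoder: maps the previous token to the next one.
TOY_TRANSITIONS = {"go": "what", "what": "is", "is": "your", "your": "name", "name": "eos"}

decoded = greedy_decode(TOY_TRANSITIONS.get)
```

The `max_len` guard bounds the loop in case the end symbol is never produced.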

2.2.2 SeqtoSeq Structure

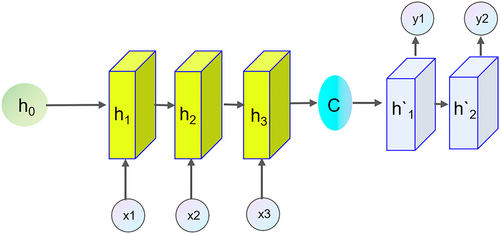

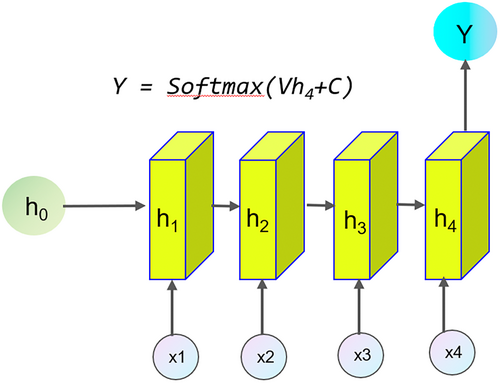

The seqtoseq structure is a progressive structure that is particularly suitable for situations where the current and future content are uncertain [18], such as conversations between people. As shown in Figure 3, we usually only care about the state at the final input step.

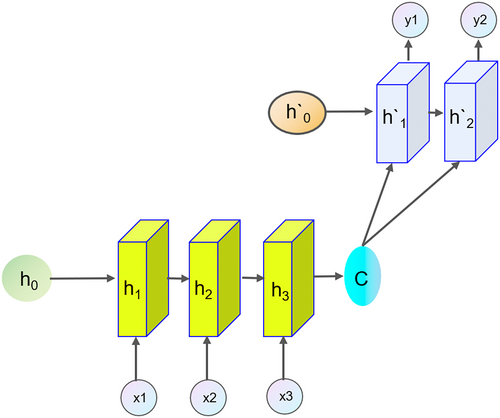

The decoding process usually involves decoding the semantic vector to generate a sequence [20]. The vector C serves as a bridge in the process, linking the encoder and the decoder like links in a chain, as shown in Figure 4.

Models are typically trained using paired input and output sequences [37]. Throughout the entire calculation process, the input and output at each moment are interrelated, and vector C always exists, as shown in Figure 5 below.

3 Data

Chatbots can automatically collect, process, and access relevant teaching data in the teaching database. They can apply artificial intelligence technology to analyze the data and present it in a variety of forms. This helps teachers better understand students' learning situations and optimize teaching design and strategy [38].

3.1 Corpus Collection

In linguistics, the word corpus refers to a large collection of text [39]. It is usually organized with a defined format and markup. Basic corpus resources are stored on computers. They hold content that has actually appeared in real use of the language [24]. They can be classified according to different standards, and the raw language materials need to be processed before they can be used in practical work and production.

The content and quality of the corpus determine the upper limit that the model can reach. Cleaning the corpus is also very important. It directly affects the correctness of the model and even its convergence, and a poorly cleaned corpus leads to incorrect answers and grammatical errors. Therefore, the selection and processing of the corpus are very important.

The corpus of the chatbot consultation assistant for campus informatization comes from a broad range of sources, including the following:

(1) Historical experience: This includes various documents, records, and frequently asked questions accumulated in the history of the school, which are the knowledge foundation of the question answering chatbot system.

(2) Online Q&A System: The school portal has an online Q&A platform that includes questions from teachers and students as well as official answers. These data can be directly used to train an intelligent chatbot assistant system.

(3) Telephone consultation: Through telephone consultation records, many questions that teachers and students are concerned about can be collected, and this data can help the intelligent question-answering chatbot system better understand user needs. The questions and answers generated during these service processes are valuable linguistic resources.

(4) WeChat repair: The WeChat repair platform provides convenient repair services for teachers and students and also accumulates a large amount of service data, which can be used to expand the corpus and improve the intelligent Q&A.

(5) Communication and exchange with other departments: Through communication with various departments of the school, various business processes, regulations, and other information can be collected, which are important resources for building an intelligent question-answering chatbot assistant system.

At present, a corpus of 2000 entries for the campus information intelligent chatbot has been collected, covering knowledge bases in multiple fields such as campus informatization, finance, research institutes, and libraries. By collecting data from these sources, a comprehensive, rich, and practically applicable corpus for the intelligent question-answering chatbot assistant can be constructed. The corpus thereby improves the accuracy and practicality of the chatbot system.

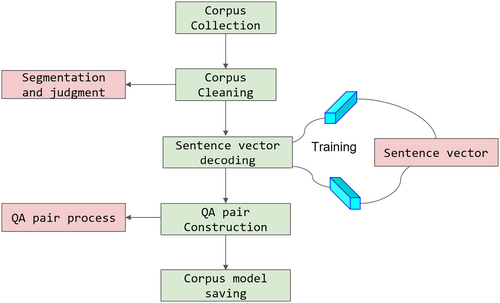

3.2 Corpus Preprocessing

After we construct the corpus, we begin to process the original corpus to meet the requirements of model training and improve training effectiveness. The main process of corpus preprocessing is shown in Figure 6.

3.2.1 Corpus Cleaning

Data cleaning, as the name implies, means keeping the parts of the corpus that we are interested in and deleting the content that we regard as noise. This includes extracting information such as titles, abstracts, and body text from the original text, and removing tags, HTML, JS, and other code and comments from crawled webpage content. Common data cleaning methods include manual deduplication, alignment, deletion, and annotation, as well as rule extraction, regular expression matching, extraction based on parts of speech and named entities, and batch processing with scripts or code. Since most of the cleaning has already been done on the corpus itself, we only need to perform word segmentation, remove stop words, and so on.

3.2.2 Word Segmentation

The Chinese corpus data is a collection of short or long texts, such as sentences, article summaries, paragraphs, or entire articles. Generally, the characters and words within sentences and paragraphs are continuous and carry meaning. When performing text mining analysis, we want the minimum unit of text processing to be words or phrases, so word segmentation is needed to split the entire text.
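The text does not say which segmenter the system uses. As an illustration only, the sketch below uses forward maximum matching, a simple dictionary-based technique, with a tiny made-up dictionary; a production system would more likely rely on a dedicated segmentation library.

```python
def forward_max_match(text, dictionary, max_word_len=4):
    """Segment text by repeatedly taking the longest dictionary word at the current position."""
    words = []
    i = 0
    while i < len(text):
        # Try the longest candidate first; fall back to a single character.
        for size in range(min(max_word_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in dictionary:
                words.append(candidate)
                i += size
                break
    return words

# Hypothetical mini dictionary: "library", "open", "time".
toy_dictionary = {"图书馆", "开放", "时间"}
segments = forward_max_match("图书馆开放时间", toy_dictionary)
```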

3.2.3 Sentence Normalization

This step further cleans and filters the data and normalizes the sentences. It mainly deals with the spaces left after word segmentation, irregular symbols, long runs of symbols, and special characters. The main processing methods include regular expressions, segmentation, and traversal checks. The system returns the filtered string and saves it as a sequence file using pickle. After preprocessing, each corpus entry must be checked for quality before use, for example whether it contains Chinese characters and numbers. In addition, the length of each QA pair is checked against the settings min_q_len, max_q_len, min_a_len, and max_a_len to exclude conversations that are too long or too short.
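The normalization and filtering steps can be sketched as follows. The regular expressions and the concrete length limits are assumptions chosen for illustration; only the parameter names min_q_len, max_q_len, min_a_len, and max_a_len come from the text.

```python
import pickle
import re

# Hypothetical limits, counted in tokens.
MIN_Q_LEN, MAX_Q_LEN = 1, 20
MIN_A_LEN, MAX_A_LEN = 1, 20

def normalize(sentence):
    """Drop irregular symbols, collapse repeated punctuation, and squeeze whitespace."""
    sentence = re.sub(r"[^\w\s?!,.]", "", sentence)
    sentence = re.sub(r"([?!,.])\1+", r"\1", sentence)
    return re.sub(r"\s+", " ", sentence).strip()

def contains_chinese_or_digit(sentence):
    """Check whether the sentence has at least one CJK character or digit."""
    return re.search(r"[\u4e00-\u9fff0-9]", sentence) is not None

def is_good_pair(question_tokens, answer_tokens):
    """Keep only QA pairs whose lengths fall inside the configured limits."""
    return (MIN_Q_LEN <= len(question_tokens) <= MAX_Q_LEN
            and MIN_A_LEN <= len(answer_tokens) <= MAX_A_LEN)

def save_pairs(pairs, path):
    """Persist the filtered pairs with pickle."""
    with open(path, "wb") as f:
        pickle.dump(pairs, f)
```

Note that the first pattern also removes full-width punctuation; whether that is desirable depends on the corpus.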

3.3 Word to Index

3.3.1 Vocabulary and Index Generation

After completing the corpus preprocessing, we need to consider how to represent the segmented characters and words in a form that computers can calculate with. To do so, we need to convert each segmented Chinese string into a number or, more precisely, a mathematical vector. We therefore digitize each word, and here we choose to create an index. Based on the vocabulary, we create an index library so that paragraphs containing specific keywords can be found quickly from the keywords in the user's questions.

The entire vocabulary is represented by values from 0 to n. Each value represents a word, and the number of words is defined here by VOCAB_SIZE. The maximum and minimum lengths of questions and of answers are also defined. In addition, we need to define the UNK, GO, EOS, and PAD symbols. UNK represents unknown words: for example, a word outside the VOCAB_SIZE range is considered an unknown word. GO represents the symbol at the beginning of the decoder input. EOS represents the symbol at the end of the answer. PAD is used for padding. Because all QA pairs that are put into the same seqtoseq model must have the same input and output lengths, shorter questions or answers need to be padded with PAD.

Words occur with different frequencies across the corpus, so we count the occurrences of each word and take the top n words by frequency as the vocabulary, where n is the corresponding VOCAB_SIZE. We also need a mapping from each word to its index and a mapping from each index back to its word. After obtaining word2index and index2word, we save these index files persistently into w2i.pkl files so that they can be used directly.
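A minimal sketch of the vocabulary step, assuming the special symbols occupy the first four indices and are written in angle brackets to avoid clashing with real words (both are illustrative choices, not confirmed by the text):

```python
import pickle
from collections import Counter

SPECIAL_TOKENS = ["<pad>", "<unk>", "<go>", "<eos>"]  # assumed spelling and order

def build_vocab(tokenized_sentences, vocab_size):
    """Keep the vocab_size most frequent words and build both index mappings."""
    counts = Counter(tok for sentence in tokenized_sentences for tok in sentence)
    frequent = [word for word, _ in counts.most_common(vocab_size)]
    index2word = SPECIAL_TOKENS + frequent
    word2index = {word: i for i, word in enumerate(index2word)}
    return word2index, index2word

def encode(tokens, word2index):
    """Map tokens to indices; words outside the vocabulary become <unk>."""
    unk = word2index["<unk>"]
    return [word2index.get(tok, unk) for tok in tokens]

def save_index(word2index, index2word, path="w2i.pkl"):
    """Persist both mappings so later runs can load them directly."""
    with open(path, "wb") as f:
        pickle.dump({"word2index": word2index, "index2word": index2word}, f)

corpus = [["what", "is", "your", "name"], ["my", "name", "is", "lucy"]]
word2index, index2word = build_vocab(corpus, vocab_size=2)
```

Here only "is" and "name" occur twice, so they form the vocabulary and every other word maps to the unknown symbol.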

3.3.2 Indexing Process

The basis of information retrieval technology is to record the location information of basic elements in an index table after preprocessing the original document. The index library is usually established based on the word list. Information indexing creates feature records of documents, usually in the form of an inverted list. The preprocessing module of the system preprocesses the document and establishes the information index. When the user asks a question, the system extracts keywords, finds the paragraphs that match each keyword in the index table, and returns their intersection. The index structure is resident in memory, so retrieval is fast. Once a paragraph is located, further semantic analysis and processing can be performed. After the information index is established, the retrieval process sorts the relevant paragraphs by weight and outputs them in sequence. The paragraph weight is calculated as follows: First, a weight is set for each keyword (including its extensions) output by the part-of-speech question processing module. Second, weights are assigned to each keyword according to a DF-like definition. Finally, the retrieved paragraphs are sorted by weight and sent to the answer extraction module in sequence.
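The retrieval flow above can be sketched with an in-memory inverted index. The scoring rule used here (keyword weight times occurrence count, summed per paragraph) is an assumption for illustration, since the exact weighting formula is not given.

```python
from collections import defaultdict

def build_inverted_index(paragraphs):
    """Map each word to the paragraphs that contain it, with occurrence counts."""
    index = defaultdict(dict)
    for pid, tokens in enumerate(paragraphs):
        for tok in tokens:
            index[tok][pid] = index[tok].get(pid, 0) + 1
    return index

def retrieve(index, keyword_weights):
    """Return ids of paragraphs containing every keyword, highest score first."""
    postings = [set(index.get(k, {})) for k in keyword_weights]
    if not postings:
        return []
    hits = set.intersection(*postings)
    scored = [
        (sum(w * index[k][pid] for k, w in keyword_weights.items()), pid)
        for pid in hits
    ]
    return [pid for _, pid in sorted(scored, key=lambda item: (-item[0], item[1]))]

paragraphs = [
    ["library", "opening", "hours"],
    ["library", "card", "library", "hours"],
    ["dorm", "repair"],
]
index = build_inverted_index(paragraphs)
ranked = retrieve(index, {"library": 2.0, "hours": 1.0})
```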

3.4 Generate Batch Training Data

The model randomly draws batch_size samples from the training corpus and converts the words in their questions and answers into indexes. It pads questions and answers of different lengths, converts each dialog's indexes into an array, and returns it.
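A minimal sketch of batch generation, assuming index 0 is PAD and index 1 is UNK and returning plain Python lists rather than arrays (the function and constant names are illustrative):

```python
import random

PAD, UNK = 0, 1  # assumed indices of the padding and unknown symbols

def pad_to(indices, length):
    """Right-pad a list of indices with PAD up to the given length."""
    return indices + [PAD] * (length - len(indices))

def next_batch(pairs, word2index, batch_size, rng=random):
    """Sample QA pairs at random, convert words to indices, and pad to equal length."""
    batch = rng.sample(pairs, batch_size)
    questions = [[word2index.get(t, UNK) for t in q] for q, _ in batch]
    answers = [[word2index.get(t, UNK) for t in a] for _, a in batch]
    q_len = max(len(q) for q in questions)
    a_len = max(len(a) for a in answers)
    return ([pad_to(q, q_len) for q in questions],
            [pad_to(a, a_len) for a in answers])

toy_pairs = [
    (["what", "is"], ["fine"]),
    (["hello"], ["hi", "there"]),
    (["library", "open", "hours"], ["nine"]),
]
toy_index = {"what": 2, "is": 3, "hello": 4, "hi": 5, "library": 6}
batch_q, batch_a = next_batch(toy_pairs, toy_index, 2, random.Random(0))
```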

3.5 Special Tags

Some special tags need to be handled: padding characters on the decoder side, sentence start identifiers, sentence end identifiers, infrequent vocabulary, and the inputs and outputs on the encoder-decoder side.

For the encoder, questions differ in length, so sentences that are too short need to be padded with PAD. For example, if the question is “what is your name” and the length is set to 6, it is padded to “what is your name pad pad”. The decoder input should start with GO and end with EOS, and is padded if it is not long enough. For example, “my name is lucy” is processed into “go my name is lucy eos”. The third thing to be processed is the target. The target is the same as the decoder input but offset by one position: go is removed from the example above and a pad is appended, giving “my name is lucy eos pad”.
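The three sequences can be built as follows. This is a sketch that assumes a fixed length of 6, an answer short enough to fit together with go and eos, and a target formed by shifting the decoder input one position to the left.

```python
def make_example(question, answer, max_len=6):
    """Build encoder input, decoder input, and target for one QA pair.

    Assumes len(question) <= max_len and len(answer) <= max_len - 2.
    """
    def pad(seq):
        return seq + ["pad"] * (max_len - len(seq))

    encoder_input = pad(question)
    decoder_input = pad(["go"] + answer + ["eos"])
    # The target is the decoder input shifted left by one, with a pad appended.
    target = decoder_input[1:] + ["pad"]
    return encoder_input, decoder_input, target

enc, dec, tgt = make_example("what is your name".split(), "my name is lucy".split())
```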

4 Data Update and Model Training

4.1 Data Update

The information obtained from user interest data of the campus information intelligent chatbot includes information pages browsed by users, information query keywords, campus online platform Q&A posts, and reply posts.

To represent user interest in Q&A, Yang et al. proposed an explicit-implicit combination model, which combines interest granularity representation with the vector space model representation [40]. It performs well when the content that users browse does not change frequently. User concerns may change, however, so this study draws on the ideas of that model to construct a campus information intelligent chatbot that can update the categories of user concerns. The information on the campus website is displayed in categories, so the questions that users are interested in are represented by the categories of that information. The vector that records each category the user is interested in, together with the degree of interest in that category, is called the category interest vector, defined as CI = [(C1, W1)…(Cn, Wn)], where n is the number of categories that the user is interested in, Cj is the jth category, Wj is the corresponding weight, and the Wj sum to one.
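A category interest vector of this form can be sketched by normalizing per-category counts. Using raw interaction counts as the interest signal is an assumption made only for this example.

```python
def category_interest_vector(category_counts):
    """Turn per-category interaction counts into (category, weight) pairs that sum to one."""
    total = sum(category_counts.values())
    if total == 0:
        return []
    ordered = sorted(category_counts.items(), key=lambda item: -item[1])
    return [(category, count / total) for category, count in ordered]

# Hypothetical counts of pages browsed per category.
ci = category_interest_vector({"library": 6, "finance": 3, "dorm": 1})
```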

The system extracts useful features from user behavior data through feature extraction of user concerns, which may include user preferences, activity time, behavior patterns, and so on.

In the field of information processing, text representation typically relies on an efficient and widely adopted method: the vector space model. The core idea is to map textual data to a vector in a multidimensional space for numerical processing and analysis.

The method extracts feature words related to user concerns and calculates their weights. There are various methods for calculating weights, including the Boolean function, frequency function, root mean square function, logarithmic function, entropy function, and TF * IDF function. TF * IDF is favored by researchers and used in many application fields because the algorithm is simple and its precision and recall are high. Salton proposed the TF * IDF heuristic weight calculation formula in 1973. Many scholars have analyzed the shortcomings of TF * IDF and proposed various improved algorithms. Shi Congying et al. tested the algorithms of “TF * IDF considering inter class and intra class differences”, “TF * IWF * IWF”, “TF * IWF * IWF introducing variance”, and “TF * IDF frequency”. The F1 test value of “TF * IWF * IWF introducing variance” was very high in both open and closed tests, reflecting the role of variance in suppressing interference. We use the weight algorithm of “TF * IWF * IWF with introduced variance” proposed by Chen Keli et al. for calculation.

Here, Pij = Tij/Lj, where Lj is the total number of occurrences of all feature words contained in category MCj and Tij is the number of occurrences of the feature word ti in category MCj. Pid = Tid/Ld, where Ld is the total number of occurrences of all feature words contained in document d and Tid is the number of occurrences of the feature word ti in document d. Pi = ∑Tic/m, where m is the number of categories. a is a positive integer, and its value here is 3. N(ti) is the number of times the feature word ti appears in all documents, and N is the total number of times all feature words appear in all documents.

Here, ∑Tic represents the count of the feature word ti in the main text, ∑Tit represents the count of the feature word ti in the title, and ∑Tis represents the count of the feature word ti in the query.
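For orientation, the sketch below computes plain TF * IDF, the baseline that the improved algorithms start from. It is not the “TF * IWF * IWF with introduced variance” variant used in this work, and the function name and toy documents are illustrative.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Compute plain TF * IDF weights for every word of every tokenized document."""
    n_docs = len(documents)
    # Document frequency: in how many documents each word appears.
    df = Counter(tok for doc in documents for tok in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            tok: (count / len(doc)) * math.log(n_docs / df[tok])
            for tok, count in counts.items()
        })
    return weights

docs = [["library", "hours", "library"], ["dorm", "hours"]]
doc_weights = tf_idf(docs)
```

A word that appears in every document, such as "hours" here, gets weight zero, which is how the scheme suppresses uninformative terms.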

4.2 Train a SeqtoSeq Model

The classifier uses the softmax function $S_g = e^{V_g} / \sum_{c=1}^{C} e^{V_c}$, where $V_g$ is the output of the previous module for category g, g is the category index, and C is the total number of categories. The result is the exponential of the current element divided by the sum of the exponentials of all elements. Softmax converts continuous values into relative probabilities, which are easier to interpret, as shown in Figure 7.

For the encoder-decoder model, there is an input sequence $x_1, \dots, x_T$ and an output sequence $y_1, \dots, y_{T'}$. The lengths of the input and output sequences can differ. We need the probability of the output sequence given the input sequence, which is the conditional probability $p(y_1, \dots, y_{T'} \mid x_1, \dots, x_T) = \prod_{t=1}^{T'} p(y_t \mid v, y_1, \dots, y_{t-1})$. That is, the probability of $y_1, \dots, y_{T'}$ given $x_1, \dots, x_T$ equals the product of the terms $p(y_t \mid v, y_1, \dots, y_{t-1})$. Here v is the hidden state vector corresponding to $x_1, \dots, x_T$, which effectively represents the input sequence.

The hidden state of the decoder depends on the state at the previous moment, the output at the previous moment, and the state vector v. This differs from a plain RNN, whose state depends on the input at the current moment; the decoder instead feeds its output from the previous moment into the RNN.

Training maximizes this conditional probability over the training pairs, where θ denotes the model parameters to be determined.
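Softmax itself is standard and can be sketched in a few lines. Subtracting the maximum before exponentiating is a common numerical-stability step added here; it does not change the result.

```python
import math

def softmax(values):
    """Convert raw scores into probabilities that sum to one."""
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

probabilities = softmax([2.0, 1.0, 0.1])
```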
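The factorized probability and the training objective can be illustrated numerically. In this sketch the per-step probabilities are made-up numbers standing in for the decoder's outputs, and the loss is the usual negative log-likelihood, which is an assumption about the exact loss used.

```python
import math

def sequence_log_prob(step_probs):
    """Log-probability of an output sequence: the sum of the per-step log-probabilities."""
    return sum(math.log(p) for p in step_probs)

def negative_log_likelihood(batch_step_probs):
    """Average negative log-likelihood over a batch; minimizing it maximizes the probability."""
    return -sum(sequence_log_prob(p) for p in batch_step_probs) / len(batch_step_probs)

# Hypothetical probabilities the decoder assigns to the correct token at each step.
log_prob = sequence_log_prob([0.5, 0.5, 0.8])
loss = negative_log_likelihood([[0.5, 0.5, 0.8], [0.9, 0.9]])
```

Working with sums of logarithms avoids the numerical underflow that multiplying many small probabilities would cause.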

5 Training Results

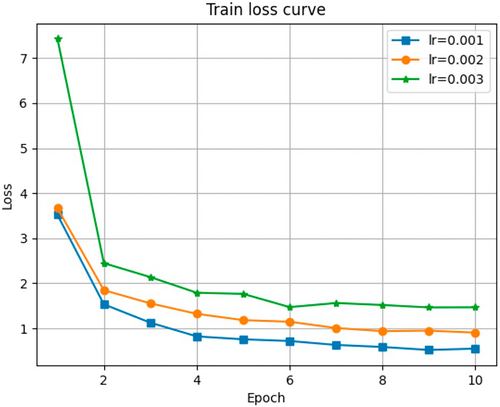

Training is based on the dataset, which must be professional, accurate, and representative. Reasonable hyperparameters, such as the learning rate, batch size, layer size, and number of training epochs, can improve the effectiveness and convergence speed of training. For better training, we used a pre-organized professional dataset, with the learning rate initially set to 0.001. We trained for 10 epochs with hidden size = 256 and layer size = 8. The curve of the model training loss is shown in Figure 8.

During the training process, control experiments were conducted to optimize hyperparameters, and the epoch value was set to 10 based on the comparison results. The loss changes throughout the entire training process are shown in Tables 1–3 below.

Table 1. Training loss per epoch (E1–E10) at different learning rates (L-R).

| Training loss | L-R = 0.001 | L-R = 0.002 | L-R = 0.003 |
|---|---|---|---|
| E1 | 3.522 | 3.669 | 7.431 |
| E2 | 1.534 | 1.848 | 2.448 |
| E3 | 1.127 | 1.556 | 2.139 |
| E4 | 0.822 | 1.323 | 1.791 |
| E5 | 0.757 | 1.183 | 1.766 |
| E6 | 0.720 | 1.147 | 1.472 |
| E7 | 0.632 | 1.008 | 1.563 |
| E8 | 0.587 | 0.938 | 1.519 |
| E9 | 0.521 | 0.950 | 1.465 |
| E10 | 0.551 | 0.905 | 1.468 |

Table 2. Training loss per epoch (E1–E10) at different encoder/decoder layer sizes (Ls), with Lr = 0.001.

| Training loss | Ls = 4 | Ls = 8 | Ls = 16 |
|---|---|---|---|
| E1 | 3.522 | 4.056 | 5.812 |
| E2 | 1.534 | 1.647 | 2.401 |
| E3 | 1.127 | 1.266 | 1.681 |
| E4 | 0.822 | 1.069 | 1.312 |
| E5 | 0.757 | 0.903 | 1.122 |
| E6 | 0.720 | 0.750 | 1.013 |
| E7 | 0.632 | 0.711 | 0.911 |
| E8 | 0.587 | 0.623 | 0.815 |
| E9 | 0.521 | 0.563 | 0.729 |
| E10 | 0.551 | 0.540 | 0.663 |

Table 3. Training loss per epoch for different hidden sizes (Hs), with Lr = 0.001.

| Hidden size | Ep1 | Ep2 | Ep3 | Ep4 | Ep5 | Ep6 | Ep7 | Ep8 | Ep9 | Ep10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Hs = 128 | 4.012 | 1.597 | 1.096 | 0.860 | 0.725 | 0.588 | 0.534 | 0.485 | 0.472 | 0.425 |
| Hs = 256 | 3.522 | 1.534 | 1.127 | 0.822 | 0.757 | 0.720 | 0.632 | 0.587 | 0.521 | 0.551 |

6 Visualization

6.1 Chatbot Structure

The chatbot assistant provides users with a friendly machine chat page. The page communicates with the machine chat API service through asynchronous HTTP calls. The user submits a conversation request to the machine conversation web service, and the system returns the result to the user for display once processing is complete.

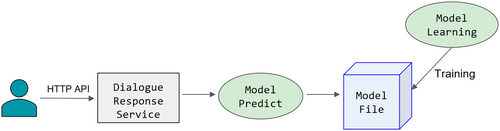

The chatbot assistant system trains a sequence-to-sequence (seq2seq) model with the TensorFlow framework and obtains the model file for the corresponding language. Sentence-level chat can then be performed through Python commands, which are invoked by the machine chat API service. The logical functional architecture is shown in Figure 9.

6.2 Web Analysis and Design

A good user experience requires a good web interface that hides the underlying implementation so that users can simply use the product.

The web interface is built from several standard web technologies, whose main functions are as follows. HTML (HyperText Markup Language) determines the structure and content of the web page, that is, what is displayed. HTML can be regarded as static code. Hypertext means that a page can contain various elements such as images, text, sound, animation, and links. CSS (Cascading Style Sheets) defines the presentation style of the web page, that is, how the content is displayed. CSS is a style sheet language that separates style information from web page content. We use CSS to define the style of each element, mainly to beautify the HTML pages. JavaScript is a dynamic scripting language that controls the behavior (effects) of the web page and how the content responds to events. JavaScript code makes the interaction between the user and the system possible. Through it, web pages can be made more varied and more innovative in theme and presentation. Further functionality can be added by embedding JS library files.

For the web page, HTML defines the hierarchy of the page, CSS describes its appearance, and JavaScript controls its behavior. A classic analogy is that HTML is like a person's body, CSS is the person's clothes, and JavaScript is the person's thoughts and behaviors.

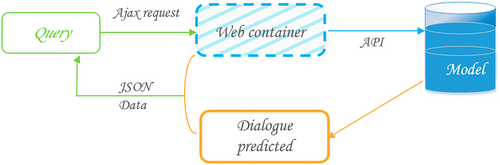

6.3 Workflow

The chatbot consultation assistant is a conversation interface: the user inputs a sentence, and the assistant outputs a response. The system is designed as an API for external calls that receives the question entered by the user. The API calls the model with the received information to predict the response and returns it to the server.

API calls are served by a web server. We use Tornado, a Python web framework that is well suited to developing small web applications. It comprises a web framework, HTTP client and server implementations, and a variety of library utilities. We use Tornado to bind the API object, set the listening port, and listen for user service requests.
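Binding an API object to a listening port with Tornado can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual code: the route `/api/chat`, the form field `message`, the port 8888, and the stand-in `echo_model` are all hypothetical. Tornado is imported inside the functions so that the pure helper `build_reply` can be used without it.

```python
import asyncio


def build_reply(message, predict):
    """Build the JSON-serializable payload for one user message."""
    return {"reply": predict(message.strip())}


def echo_model(text):
    # Hypothetical stand-in for the trained seq2seq model.
    return "You said: " + text


def make_app(predict):
    """Create a Tornado application that binds the chat API to a URL."""
    import tornado.web  # third-party dependency, imported lazily

    class ChatHandler(tornado.web.RequestHandler):
        def post(self):
            # Read the (assumed) form field "message" from the request body.
            message = self.get_body_argument("message", default="")
            # RequestHandler.write serializes a dict as a JSON response.
            self.write(build_reply(message, predict))

    return tornado.web.Application([(r"/api/chat", ChatHandler)])


async def serve(port=8888):
    """Listen on the given port until the process is stopped."""
    app = make_app(echo_model)
    app.listen(port)
    await asyncio.Event().wait()
```

Calling `asyncio.run(serve())` starts the server; replacing `echo_model` with a function that calls the trained model would connect the endpoint to real predictions.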

The HTML of the chatbot assistant defines the structure and content of the page: the page title, the dialog box, the text input box, the send control, the message display area, and the background. It also references the JS files that respond to browser events, read data, and update the page.

The CSS files beautify the HTML pages, using selectors to pick elements and set layout properties such as length, width, and height. The JS files respond to page events, update the page dynamically, and use Ajax for asynchronous communication. Ajax allows partial refresh, which means that data can be updated without reloading the entire page. This lets the web application respond to user actions more quickly and avoids sending unchanged information over the network.

The system starts the web container through code and listens on the port, and the chatbot web page calls the system interface through Ajax to obtain the robot's response data. The overall process is as follows. The browser loads index.html, applies the referenced CSS file for rendering, and executes the referenced JS. After the page has loaded, chatbot.js starts to execute. At this point, the user can start a conversation: enter a question and click send, which triggers an event. The code responds to the event, takes the input as a parameter, and sends it to the server through Ajax. The server calls the bound API, and the API calls the model with the passed parameters, generates a predicted response, and returns it to the server. The server returns a JSON response to the Ajax engine. The chatbot.js file inserts the received data into the DOM tree in the Ajax callback and then calls the show function to update the web page. After a round of conversation is completed, the user can continue to enter information. The workflow diagram is shown in Figure 10.

The chatbot assistant system provides an interface for external calls and calls the seq2seq model with the received information to return the prediction result. If the input consists only of spaces, the system asks the user to enter a chat message. The server also handles cross-domain (cross-origin) requests and exceptions: if an exception occurs during the API call, an internal server error is returned to the user. The Message function performs the data exchange and the web page update. It first checks whether the input is empty. If it is empty, the function returns and does nothing. If it is not empty, the function locks the send box to prevent repeated submission, displays the input in the dialog box, and clears the input box. It then issues an asynchronous Ajax request to exchange data with the server: the data are sent to the server, and the server calls the bound API to return a response. After the request succeeds, the show function is called to update the DOM with the received data and display it in the dialog box. If the user enters only a space, the system prompts the user to go back and enter a chat message. Simple and direct questions generally achieve the desired effect.
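The server-side handling described above (blank input, cross-origin access, and exception handling) can be sketched independently of the web framework. This is an illustrative sketch only: the function name `handle_chat_request`, the permissive `Access-Control-Allow-Origin: *` policy, the prompt text, and the payload keys are assumptions rather than details of the original system.

```python
# Assumed cross-origin policy: allow requests from any origin.
CORS_HEADERS = {"Access-Control-Allow-Origin": "*"}


def handle_chat_request(message, predict):
    """Return (status_code, headers, payload) for one chat request.

    `predict` is any callable that maps the user's text to a reply,
    for example a wrapper around the trained model.
    """
    # Blank input: ask the user to enter a chat message instead of calling the model.
    if not message or not message.strip():
        return 200, CORS_HEADERS, {"reply": "Please enter a chat message."}
    try:
        reply = predict(message.strip())
    except Exception:
        # Any failure during the API call is reported as an internal server error.
        return 500, CORS_HEADERS, {"error": "Internal server error"}
    return 200, CORS_HEADERS, {"reply": reply}
```

A web handler would copy the returned status code and headers onto the HTTP response and send the payload as JSON. Keeping this logic in a plain function makes it easy to test without starting a server.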

7 Conclusions

The chatbot consultation assistant has shown broad application prospects in many fields. In the field of entertainment, chatbots can provide users with interesting interactive experiences as virtual companions and game characters. In the field of customer service, chatbots can automatically handle users' questions and improve service efficiency and quality. In the field of education, chatbots can provide students with personalized learning support as intelligent consultation assistants and learning partners. As artificial intelligence continues to evolve and its applications become more expansive, the potential for chatbots to be utilized in various ways is likely to broaden significantly.

Chatbots will become more personalized, more intelligent, and more integrated into a wider ecosystem. In terms of personalization, chatbots will provide tailored services through personalized recommendations and accurate analyses of user needs. In terms of intelligence, chatbots will improve the accuracy of conversations through technologies such as reinforcement learning and machine vision. In terms of ecology, chatbots will integrate with more fields to build a more comprehensive intelligent ecosystem. At the same time, as application scenarios continue to expand, the market demand for chatbots will continue to increase.

The chatbot consultation assistant has a very positive impact on students' enthusiasm for learning, which suggests that the use of chatbots can improve students' learning results. The system's interaction mode needs to be improved in the future by adding a mode that combines text with voice and images. Such multi-modal interaction is expected to be significantly more effective than pure text interaction, because combining multiple interactive methods can promote student learning more effectively. From an educator's perspective, the main advantages of chatbots are time-saving assistance and the ability to improve teaching methods, so teachers can teach more efficiently by using chatbots. Feedback from college teachers and students on the use of chatbot assistants is generally positive.

This paper designs and implements a chatbot consultation assistant system with an intelligent chat function. Through the comprehensive application of natural language processing, knowledge base construction, and dialog generation, the system can understand the natural language input by users and give reasonable and interesting answers.

This paper discusses the design principles and implementation methods of chatbots, as well as their applications and prospects in the real world. The technical architecture and functional modules of chatbots will be further explored and improved to meet the needs of more users. Chatbots play multiple roles in the informatization of colleges and universities, such as assisting teaching, reducing teachers' workload, improving the learning experience, supporting special teaching applications, and automatically collecting and processing teaching data. With the deepening of informatization in universities, the application of chatbots will become increasingly widespread and profound.

Author Contributions

Yao Song: conceptualization, methodology, validation, investigation, visualization, writing – review and editing, writing – original draft. Chunli Lv: methodology, software, data curation, supervision, resources. Kun Zhu: methodology, data curation, supervision, project administration, writing – review and editing. Xiaobin Qiu: software, data curation, validation, visualization, project administration, writing – review and editing.

Consent

The authors agreed that the paper could be published.

Conflicts of Interest

The authors declare no conflicts of interest.

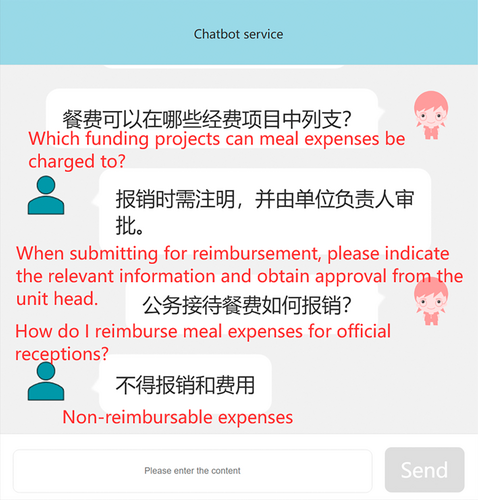

Appendix A: The Chatbot Service Display

Open Research

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.