Formal concept analysis with negative attributes for forgery detection

Funding information: European Cooperation in Science and Technology, CA17124; Junta de Andalucía, UMA2018-FEDERJA-001; Ministerio de Ciencia e Innovación, PGC2018-095869-B-I00

Abstract

Europe's system of open frontiers, commonly known as “Schengen,” lets people from different countries travel and cross the inner frontiers without problems. Documents from these countries, and not only European ones, can be found at road checkpoints, and there is no international database to help Police forces detect whether they are false or not. People who need a driver license to access specific jobs, or a new identity because of legal problems, often contact forgers who provide false documents with different levels of authenticity. Governments and Police forces should improve their methodologies by ensuring that staff are increasingly better able to detect false or falsified documents through their examination, and follow patterns to detect and locate these forgers. In this work, we propose a method, based on formal concept analysis using negative attributes, which allows Police forces to analyze false documents and provides a guide to support the detection of forgers.

1 INTRODUCTION

Every day citizens are required to show their documents, such as an ID card, passport, or driving licence, at Police checkpoints. These documents are registered in national databases if they belong to a national citizen, but the situation differs if the person who shows the document has a different nationality.

The problem of false documents affects the security of all citizens. A person who has a false document is a potential risk. We do not know the reason why this person created or bought the document, but it is seldom for a legal purpose. This situation ranges from wanted criminals to young tourists who get a falsified ID to buy alcoholic drinks (this is an old problem that increases every summer in tourist areas and causes deaths which could have been avoided1-3), and also includes dangerous non-qualified drivers.

Some European Police forces have access to EUCARIS, which stands for EUropean CAR and driving licence Information System. It is a unique system, developed by and for governmental authorities, that provides opportunities for countries to share their vehicle and driving licence registration information and/or other transport-related data, helping to fight against vehicle theft and registration fraud.

EUCARIS is not a database but an exchange mechanism that connects the Vehicle and Driving Licence Registration Authorities in Europe. Hence, it is not a solution for checking all documents: ID cards are not included in this information system, and some countries do not share their national data through it, or do not provide the complete data needed to identify these citizens properly. Moreover, not all Police forces have adequate access to this information system.

Among the different Police forces around Europe with which we collaborate, there is consensus that an international database would be the best option but, due to political reasons and incompatible databases, this solution will not be feasible in the near future.

There are emergent groups of Police forces that are specializing in training with documents, preparing their eyes and their fingers to see and feel how different documents are manufactured. “Lost and found” offices are one of the references to find diverse real and fake documents for this training.

When a false document is detected, we need information about its origin. In general, the owner of the document provides incomplete information (if any) and avoids revealing who is responsible for the forgery: a price, a city, a name, and so on, but only partial information. These information holes, which represent unknown data, have to be filled in with information from other possible victims of the same forger, or handled by a reasoning system capable of dealing with such imperfect information and determining whether we have a false document (for example, a driver without a valid license) or a false identity (such as a wanted person who falsified her/his data). The use of cloned data is very frequent, taken from a legitimate person who may not know that her/his lost document is being used for this purpose. If the original document is legal, it is difficult to recognize that the photograph has been changed in the false document or that some data have been chemically deleted.

Previous approaches exist in the literature that study how to detect false identities using related information between the false and the real identity,4 or with forensic metrics,5, 6 but Police forces need enough information to detect a false document with a simple analysis. Maybe it is not possible to detect all of them if the forgery has a high quality, but at least some of them.

In this article, we propose to apply the mathematical framework of formal concept analysis (FCA, an applied lattice theory, in the words of its creator7) to recognize signatures of forgers in documents, and relate them in order to obtain details about their activities. The particular approach to be used is that of FCA with negative attributes, which manages information that is usually discarded because researchers do not know how to handle this negative information properly. The obtained knowledge defines the lines that Police follow in their criminal investigations.

2 PREVIOUS APPLICATIONS OF FCA IN POLICE RESEARCH

- Domestic violence is one of the problems that are difficult to solve due to lack of information about potential victims that do not report these situations. In References 9 and 10, the authors use emergent self organizing maps with FCA to analyze different reports to locate potential victims. Text mining from police reports is used and shows that there exist problems with labeling, confusing situations, missing values, and so on.

- Radicalization and terrorism were investigated by the National Police Service Agency of the Netherlands,11 which developed a model to classify potential jihadists. The goal of this model is to detect potential jihadists and prevent them from entering the dangerous phase. They use temporal concept analysis to visualize how a possible jihadist radicalizes over time.

- Human trafficking and forced prostitution research12 tries to discover these situations in police reports using text mining, in order to filter out persons of interest for further investigation, and uses the temporal variant of FCA to create a visual profile of these persons, their evolution over time and their social environment. For these purposes, finding different specified indicators in reports (lover boys, large amounts of money, expensive cars, etc.) allows researchers to obtain a lattice where suspects and victims can be related.

- Pedosexual chat conversations analysis13 to prevent child abuse and violence.

- Areas of greater intensity, called “hot spots,” indicate where large amounts of reports were collected. With the help of geolocalization tools, this research14, 15 supports the distribution of resources, such as police officers, patrolling cars, and surveillance cameras, as well as the definition of strategies for crime combat and prevention.

- Criminal networks analysis,8 looking for specified data in Police reports, such as vehicle plate number or personal identification number, to locate and relate different suspects for criminal activities. Negative attributes were first used there.

- Pattern detection of criminal activities analyzing data from traffic cameras for Italian National Police, doing a comparison with previous patterns detected in Spain.16 Since FCA is not developed to work with big data, some studies in partial databases detect some patterns in real data that fit with the proposed theoretical patterns.

3 FCA WITH NEGATIVE ATTRIBUTES

The basic notions of FCA17 and attribute implications are briefly presented in this section. See Reference 18 for a more detailed explanation.

A formal context is a triple $\mathbb{K} = \langle G, M, I\rangle$ where G and M are finite non-empty sets and I ⊆ G × M is a binary relation. The elements in G are called objects, the elements in M are called attributes and ⟨g, m⟩ ∈ I means that the object g has the attribute m.

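Two derivation operators are defined for X ⊆ G and Y ⊆ M: $X^{\uparrow} = \{m \in M \mid \langle g, m\rangle \in I \text{ for all } g \in X\}$ and $Y^{\downarrow} = \{g \in G \mid \langle g, m\rangle \in I \text{ for all } m \in Y\}$. That is, X↑ is the subset of all attributes shared by all the objects in X and Y↓ is the subset of all objects that have the attributes in Y. The pair (↑, ↓) constitutes a Galois connection between $2^G$ and $2^M$ and, therefore, both compositions are closure operators.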
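As an illustration, the two derivation operators can be sketched in a few lines of Python. The object and attribute names and the incidence relation below are an assumption made for the example: they reproduce the small context that the paper gives later as Table 1, and the function names `up` and `down` are hypothetical.

```python
# Toy context (assumption: the 4x4 context given later in the paper as Table 1).
G = ["g1", "g2", "g3", "g4"]
M = ["m1", "m2", "m3", "m4"]
I = {
    ("g1", "m1"), ("g1", "m4"),
    ("g2", "m1"), ("g2", "m2"),
    ("g3", "m1"), ("g3", "m3"), ("g3", "m4"),
    ("g4", "m2"), ("g4", "m4"),
}


def up(X):
    """X↑: attributes shared by every object in X."""
    return {m for m in M if all((g, m) in I for g in X)}


def down(Y):
    """Y↓: objects that have every attribute in Y."""
    return {g for g in G if all((g, m) in I for m in Y)}


X = {"g1", "g3"}
print(sorted(up(X)))          # attributes common to g1 and g3
print(sorted(down(up(X))))    # closure of X under the composition of both operators
```

Composing the two functions in either order gives the closure operators mentioned above; applying a closure twice returns the same set as applying it once.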

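A formal concept is a pair ⟨X, Y⟩ with X ⊆ G and Y ⊆ M such that X↑ = Y and Y↓ = X. The set of all formal concepts, ordered by inclusion of their first components, is a lattice. This lattice is called the concept lattice of $\mathbb{K}$ and is denoted by $\mathfrak{B}(\mathbb{K})$.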

Another important notion for our purposes is that of (attribute) implications, that is, A → B where A, B ⊆ M. An implication A → B is said to hold in a context $\mathbb{K}$ (or that $\mathbb{K}$ is a model for A → B) if A↓ ⊆ B↓, that is, if any object that has all the attributes in A also has all the attributes in B.
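The condition A↓ ⊆ B↓ translates directly into a subset test. The following minimal sketch assumes a context stored as a dictionary from objects to their attribute sets (the data mirror the paper's Table 1); the names `ROWS`, `down`, and `holds` are hypothetical.

```python
# Each object is mapped to the set of attributes it has (assumption: Table 1 data).
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}


def down(attrs):
    """Objects that have every attribute in attrs."""
    return {g for g, has in ROWS.items() if set(attrs) <= has}


def holds(premise, conclusion):
    """A -> B holds in the context iff down(A) is a subset of down(B)."""
    return down(premise) <= down(conclusion)


print(holds({"m3"}, {"m1", "m4"}))  # True: g3 is the only object with m3
print(holds({"m1"}, {"m4"}))        # False: g2 has m1 but not m4
```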

Concept lattices can be characterized in terms of attribute implications and, hence, they can be analysed by using logical tools such as automated reasoning systems. In this way, the knowledge contained in the formal context $\mathbb{K}$ is interpreted as (and represented by) a set of implications $\Sigma$ which entail all those that hold in $\mathbb{K}$. Formally, an implication A → B can be derived from a set of implications $\Sigma$ (denoted $\Sigma \vdash A \to B$) if any model for the implications in $\Sigma$ is also a model for A → B.

Simplification Logic (see Reference 19 for more details) provides an automated reasoning method to decide whether $\Sigma \vdash A \to B$, which will be our main tool to be used in the practical application.

We still need a slightly more general framework to deal with the kind of imperfect information described in the introduction. In Reference 20, we tackled this issue, focusing on the problem of mining implications with positive and negative attributes from formal contexts. As a conclusion of that work, we emphasized the need for a full development of the algebraic framework that was initiated in Reference 21.

We begin with the introduction of an extended notation that allows us to consider the negation of attributes. From now on, the set of attributes is denoted by M, and its elements by the letter m, possibly with subscripts. That is, the lowercase character m is reserved for what we call positive attributes. We use $\overline{m}$ to denote the negation of the attribute m and $\overline{M}$ to denote the set $\{\overline{m} \mid m \in M\}$, whose elements will be called negative attributes.

The derivation operators defined in FCA (↑, ↓) are extended in Reference 20 introducing a new theoretical framework that homogenizes the use of positive and negative attributes.

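Definition 1. Let $\mathbb{K} = \langle G, M, I\rangle$ be a formal context. The mixed derivation operators $\Uparrow\colon 2^G \to 2^{M \cup \overline{M}}$ and $\Downarrow\colon 2^{M \cup \overline{M}} \to 2^G$ are defined as follows: for A ⊆ G and $B \subseteq M \cup \overline{M}$,

$A^{\Uparrow} = \{m \in M \mid \langle g, m\rangle \in I \text{ for all } g \in A\} \cup \{\overline{m} \in \overline{M} \mid \langle g, m\rangle \notin I \text{ for all } g \in A\}$

$B^{\Downarrow} = \{g \in G \mid \langle g, m\rangle \in I \text{ for all } m \in B\} \cap \{g \in G \mid \langle g, m\rangle \notin I \text{ for all } \overline{m} \in B\}$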
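A minimal sketch of the mixed derivation operators follows. It assumes the Table 1 data and a purely illustrative encoding in which the negative attribute of `m` is written as the string `"~m"`; the names `mixed_up` and `mixed_down` are hypothetical.

```python
# Objects mapped to the positive attributes they have (assumption: Table 1 data).
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}
M = ["m1", "m2", "m3", "m4"]


def mixed_up(objs):
    """Positive attributes shared by all objs plus negations of attributes none of them has."""
    positive = {m for m in M if all(m in ROWS[g] for g in objs)}
    negative = {"~" + m for m in M if all(m not in ROWS[g] for g in objs)}
    return positive | negative


def mixed_down(attrs):
    """Objects that have every positive attribute and lack every negated one in attrs."""
    result = set()
    for g, has in ROWS.items():
        if all((a[1:] not in has) if a.startswith("~") else (a in has) for a in attrs):
            result.add(g)
    return result


print(sorted(mixed_up({"g1", "g3"})))   # ['m1', 'm4', '~m2']
print(sorted(mixed_down({"~m2"})))      # ['g1', 'g3']
```

Note that `mixed_up` of the empty set of objects returns all eight mixed attributes, and no object can satisfy both `m` and `~m`, so the bottom m-concept always has an empty extent.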

The classical derivation operators and the mixed ones render different subsets of attributes and objects. The pair of derivation operators (⇑, ⇓) introduced in Definition 1 is a Galois Connection. As a direct consequence, we have that, similarly to the classical case, the closed sets constitute a lattice that we call mixed concept lattice and it is defined as follows.

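Definition 2. (Mixed concept lattice) Let $\mathbb{K} = \langle G, M, I\rangle$ be a formal context. A mixed formal concept (briefly, m-concept) in $\mathbb{K}$ is a pair of subsets ⟨A, B⟩ with A ⊆ G and $B \subseteq M \cup \overline{M}$ such that A⇑ = B and B⇓ = A. The lattice of all m-concepts with the order relation $\langle A_1, B_1\rangle \le \langle A_2, B_2\rangle$ if and only if $A_1 \subseteq A_2$ (or, equivalently, $B_2 \subseteq B_1$) is called the mixed concept lattice of $\mathbb{K}$.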

Definition 3. A context $\mathbb{K}$ is called mixed-clarified (briefly, m-clarified) if the following conditions hold:

- 1. g⇑ = h⇑ implies g = h for each g, h ∈ G.

- 2. a⇓ = b⇓ implies a = b for each $a, b \in M \cup \overline{M}$.

It is easy to prove that, for every formal context, there exists an m-clarified one whose mixed concept lattice is isomorphic to the original one. The m-clarified lattice is obtained by removing both dual columns and repeated rows/columns.

Given a formal context, a set of mixed implications can be computed using the algorithm proposed in Reference 21, which complements the knowledge provided by classical algorithms. Mixed-InClose (see Figure 1) is the fastest algorithm22 for obtaining the mixed concept lattice. The basic notions and notation used in the description of the algorithms to obtain mixed concept lattices are given below.

Notation 1. Let $\mathbb{K} = \langle G, M, I\rangle$ be a formal context and < be a strict order relation in M (i.e., an antireflexive, antisymmetric, and transitive relation). For each ,

is a mapping such that for each m ∈ M.

denotes the subset ,

denotes the subset .

A more detailed explanation about the theory related to the use of negative attributes in FCA can be seen in References 22 and 23.

Example 1. Given the formal context in Table 1, we extract the set of mixed formal concepts by using Mixed-InClose.

| | m1 | m2 | m3 | m4 |
|---|---|---|---|---|
| g1 | 1 | 0 | 0 | 1 |
| g2 | 1 | 1 | 0 | 0 |
| g3 | 1 | 0 | 1 | 1 |
| g4 | 0 | 1 | 0 | 1 |

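For simplicity, we write the mixed concepts using just the set of attributes: $\varnothing$, $\{m_1\}$, $\{m_4\}$, $\{\overline{m}_3\}$, $\{m_1, \overline{m}_3\}$, $\{m_2, \overline{m}_3\}$, $\{\overline{m}_3, m_4\}$, $\{m_1, \overline{m}_2, m_4\}$, $\{m_1, \overline{m}_2, \overline{m}_3, m_4\}$, $\{m_1, m_2, \overline{m}_3, \overline{m}_4\}$, $\{m_1, \overline{m}_2, m_3, m_4\}$, $\{\overline{m}_1, m_2, \overline{m}_3, m_4\}$, and $M \cup \overline{M}$.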

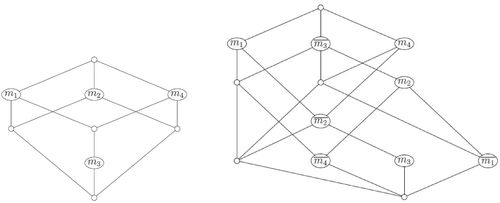

This set could be shown in a concept lattice for checking visually how the concepts are related. Figure 2 shows the (standard) concept lattice and the mixed concept lattice associated with the given context.
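For a context as small as Table 1, the mixed concepts can be checked by brute force. The sketch below is not Mixed-InClose: it simply closes every subset of objects, which is exponential in the number of objects and only suitable for toy examples. The `"~m"` encoding of negative attributes and all function names are assumptions made for the illustration.

```python
from itertools import combinations

# Table 1: each object with the positive attributes it has.
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}
M = ["m1", "m2", "m3", "m4"]


def mixed_up(objs):
    """Mixed intent: shared positive attributes plus negations of attributes absent everywhere."""
    positive = {m for m in M if all(m in ROWS[g] for g in objs)}
    negative = {"~" + m for m in M if all(m not in ROWS[g] for g in objs)}
    return positive | negative


def mixed_down(attrs):
    """Mixed extent: objects satisfying every positive and every negated attribute."""
    return {
        g for g, has in ROWS.items()
        if all((a[1:] not in has) if a.startswith("~") else (a in has) for a in attrs)
    }


def mixed_concepts():
    """Brute force: close every subset of objects and collect the distinct m-concepts."""
    found = set()
    objects = list(ROWS)
    for k in range(len(objects) + 1):
        for subset in combinations(objects, k):
            intent = mixed_up(subset)
            extent = mixed_down(intent)
            found.add((frozenset(extent), frozenset(intent)))
    return found


concepts = mixed_concepts()
for extent, intent in sorted(concepts, key=lambda c: (len(c[1]), sorted(c[1]))):
    print(sorted(extent), sorted(intent))
print(len(concepts))  # 13 m-concepts for Table 1
```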

4 METHODOLOGY

Sometimes forgers are design artists who copy all the details with powerful computers, so that it is increasingly difficult to distinguish a forged document from a valid one. In this case, it is still possible to validate the written information (control digits, specific format of information, etc). As a result, the details (or signs) in a document that could be checked can be classified into two classes: the first one is related to the graphical details, that is, how the document is drawn, and the second one is related to the validity of the written data.

The different mistakes found in a forged document can be considered as the signature of its author; hence, if these mistakes are classified, one could detect the identity of the forgers and/or advise countries that a certain security measure in their documents is no longer valid because it has been already imitated by forgers. When we refer to the signature of a forger in a document (object), we will refer to the set of values of its attributes, either positive or negative. It is worth considering that the signature can evolve over time and some mistakes could be fixed by the forger. A reasonable number of detected mistakes are needed because, when the case is presented in Court, the judge should be given enough details to accept that a signature is linked to the forger.

4.1 Initial analysis

Different types of documents have been collected and analysed using just visual examination. Our goal is that every Police officer should be able to detect a false document without external tools, such as ultraviolet lights, so we only consider signs that are visible at first glance.

Due to legal restrictions, Police forces cannot keep personal documents from citizens, so the time allowed for checking the validity of these physical documents is very limited, and this leads to the need for training with images of both sides of the documents.

Some false documents were analyzed after this training phase, marking the differences that were found on a specimen of each document under study. All these differences were agreed with experts in false documents in order to design an official file for future analysis of potentially false documents. The specific security measures were determined by Forensic Police5, 6 when false documents were detected with simple methods during an investigation.

4.2 Construction of the formal context and associated measures

The comparison between the specimen and the false document is stored in a database (our formal context). The different attributes are marked as true or false according to whether the forger succeeded in reproducing the detail of the security measure or not, leading to a binary sequence of Boolean values representing the signature of the document, which could then be compared with other documents.

The formal context is built by associating a row (the previous sequence of Boolean values) with each false document detected. For instance, given the document in Figure 3, we check six attributes (shown in the figure): the signature of the document is the row immediately below the document, and states that the item fails to satisfy attributes d and f.
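The construction of a row can be sketched as follows. The attribute labels a to f follow the Figure 3 example described above; the document identifier `doc_fig3` and the function name `signature` are hypothetical and only serve the illustration.

```python
# Security measures checked on the document (labels as in the Figure 3 example).
ATTRIBUTES = ["a", "b", "c", "d", "e", "f"]


def signature(failed):
    """Boolean row: 1 if the forger reproduced the detail correctly, 0 if the check failed."""
    return [0 if attr in failed else 1 for attr in ATTRIBUTES]


# The formal context is a table with one row per detected false document.
context = {}
context["doc_fig3"] = signature({"d", "f"})  # hypothetical identifier for the Figure 3 item

print(context["doc_fig3"])  # [1, 1, 1, 0, 1, 0]
```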

Associated with this dataset, we have two measures: the accuracy of an attribute and the correctness of an object.

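Definition 4. (Accuracy of an attribute) Let $\mathbb{K} = \langle G, M, I\rangle$ be a formal context. The accuracy of an attribute m ∈ M is the proportion of objects that do not have it: $\mathrm{Acu}(m) = \dfrac{|\{g \in G \mid \langle g, m\rangle \notin I\}|}{|G|}$.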

A high level of accuracy means that the attribute is useful as a security measure for detecting forgeries. If we detect a security measure that is correctly copied in all cases, it has 0 accuracy, meaning that this attribute is useless because it is known by forgers. Such a security measure is not a reference of quality and needs improvement, and the issuing country should be informed about this fact.

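Definition 5. (Correctness of an object) The correctness of an object g ∈ G is the accuracy accumulated by the attributes that g has, relative to the total accuracy of all attributes: $\mathrm{Corr}(g) = \dfrac{\sum_{m \in g^{\uparrow}} \mathrm{Acu}(m)}{\sum_{m \in M} \mathrm{Acu}(m)}$.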

It measures the attributes that meet the security requirements of the specimen (breached security).

Correctness is highly related to accuracy. A low level of correctness means that the document has poor quality with respect to the security measures.

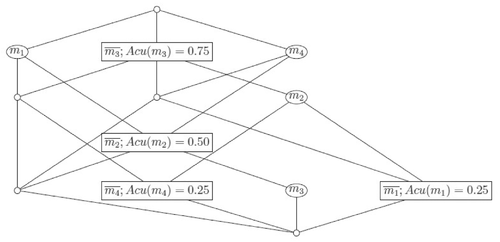

Example 2. Continuing with Table 1, we have that Acu(m1) = Acu(m4) = 0.25, Acu(m2) = 0.5, and Acu(m3) = 0.75; this means that m3 is the best security measure of the sample and m1, m4 are the worst ones. These accuracies influence the correctness measures: the values Corr(g1) = 0.29, Corr(g2) = Corr(g4) = 0.43, and Corr(g3) = 0.71 mean that forgery g3 is quite good. Note that g2 and g4 have the same correctness, but different signatures.
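The values in Example 2 can be reproduced with a short computation. The formulas used below are an assumption inferred from the reported numbers: accuracy is taken as the fraction of documents that fail an attribute, and correctness as the accumulated accuracy of the attributes a document satisfies divided by the total accuracy of all attributes. The function names are hypothetical.

```python
# Table 1: each forged document with the security measures it copied correctly.
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}
M = ["m1", "m2", "m3", "m4"]


def accuracy(m):
    """Assumed definition: fraction of documents in which attribute m was NOT reproduced."""
    return sum(1 for has in ROWS.values() if m not in has) / len(ROWS)


def correctness(g):
    """Assumed definition: accuracy of the attributes g satisfies over the total accuracy.

    Raises ZeroDivisionError if every attribute has accuracy 0.
    """
    total = sum(accuracy(m) for m in M)
    return sum(accuracy(m) for m in ROWS[g]) / total


for m in M:
    print(m, round(accuracy(m), 2))
for g in ROWS:
    print(g, round(correctness(g), 2))
```

Under these assumptions the output agrees with Example 2: accuracies 0.25, 0.5, 0.75, 0.25 and correctness values 0.29, 0.43, 0.71, 0.43.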

4.3 FCA-based analysis of information

Using FCA on the obtained datasets, a mixed concept lattice can be created to explore the relations among the objects (documents) and the attributes (security measures). This knowledge allows the Police to simplify their exams, focusing just on the potentially most relevant security measures of the document.

In a standard concept lattice, attributes with 0 values never appear since the construction emphasizes solely those attributes which are satisfied. In this application, we also need to pay attention to the 0s in the formal context because they represent attributes that some forgers cannot copy. The more objects with a 0 value in an attribute, the higher the position of the attribute in the mixed concept lattice. This is why we just focus on negative attributes.

In the corresponding mixed concept lattice (see Figure 4), we can search top-down for the negative attributes that are included in the new object. We can observe that they are ordered by accuracy, so we can classify the objects in an easy way. In Figure 4, we can check that Acu(m3) = 0.75, so we select $\overline{m}_3$. The only object that is not covered has the attributes $\{m_1, \overline{m}_2, m_3, m_4\}$, so we can choose the negative attribute $\overline{m}_2$, with Acu(m2) = 0.50, to complete the attributes needed to cover all the objects. This reduction means that by checking just $\{\overline{m}_2, \overline{m}_3\}$ we can detect all the false documents.
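The selection described above can be approximated by a greedy procedure that works directly on the table rather than on the lattice. This is a simplified stand-in under stated assumptions, not the lattice traversal itself: attributes are ranked by the number of documents that fail them (that is, by accuracy) and an attribute is kept only if it exposes a document not yet covered. The name `select_checks` is hypothetical.

```python
# Table 1: each forged document with the security measures it copied correctly.
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}
M = ["m1", "m2", "m3", "m4"]


def select_checks(rows, attributes):
    """Greedily pick attributes, most accurate first, until every document fails one of them.

    Returns the chosen attributes and the documents that no attribute exposes
    (non-empty only if some document copies every attribute correctly).
    """
    failures = {m: {g for g, has in rows.items() if m not in has} for m in attributes}
    ranked = sorted(attributes, key=lambda m: len(failures[m]), reverse=True)
    uncovered = set(rows)
    chosen = []
    for m in ranked:
        if not uncovered:
            break
        if failures[m] & uncovered:
            chosen.append(m)
            uncovered -= failures[m]
    return chosen, uncovered


chosen, uncovered = select_checks(ROWS, M)
print(chosen)      # ['m3', 'm2']: check m3 first, then m2
print(uncovered)   # set(): every document in the sample is detected
```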

- 1. Check the attributes in a document which is known to be false, in order to relate it to a forger.

If the new object has the same attribute values as other existing objects, we need to check the metadata associated with this object (for instance, details associated with the forged element: where it was found, textual description, etc). The information collected from interrogations can be used to relate signatures to information provided by the owners of forgeries. Different pieces of partial information might provide complete data related to forgers. More objects with the same signature mean more details about the forger.

Otherwise, we have a new signature that needs to be added to the dataset. We need to explore the concept lattice in order to classify it and relate it with an existing signature if it is possible.

Checking the concept lattice, we can detect similar signatures and where the new object could be classified, focusing on certain attributes. The number of explored concepts is restricted by the number of attributes. Proceeding in this way, checking time is reduced if we compare with checking all the objects of the dataset. In large datasets, this checking time is considerably reduced.

The restricted exploration can be seen in Example 1. If we have the values , traversing top-down the lattice in Figure 2, we begin in the mixed concept with attribute m1. From this mixed concept, we can only explore or , so we choose the first one. From this mixed concept, we can only get to or . This means that is a new signature.

- 2. Check whether we are dealing with a false document.

In this second case, the different attributes (possible false details in the document) are explored following a top-down method according to their accuracy. This methodology allows the Police to evaluate documents quickly because the search is focused on relevant points. More details on this will be shown in the following section, where we deal with a practical use case.
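The restricted top-down exploration used in the first case can be sketched as a greedy walk over m-concept intents, starting from the top of the mixed concept lattice of Table 1 and only moving to intents contained in the signature under study. The new signature used here is hypothetical, as are the `"~m"` encoding of negative attributes and the function names; the walk follows the order in which the attributes are given, so it is one possible path rather than an exhaustive search.

```python
# Table 1: known forged documents with the attributes they copied correctly.
ROWS = {
    "g1": {"m1", "m4"},
    "g2": {"m1", "m2"},
    "g3": {"m1", "m3", "m4"},
    "g4": {"m2", "m4"},
}
M = ["m1", "m2", "m3", "m4"]


def mixed_up(objs):
    positive = {m for m in M if all(m in ROWS[g] for g in objs)}
    negative = {"~" + m for m in M if all(m not in ROWS[g] for g in objs)}
    return positive | negative


def mixed_down(attrs):
    return {
        g for g, has in ROWS.items()
        if all((a[1:] not in has) if a.startswith("~") else (a in has) for a in attrs)
    }


def classify(signature):
    """Walk down from the top m-concept while the intent stays inside the signature.

    Returns (known, intent, extent): whether the signature already exists,
    the last intent reached, and the known documents that share it.
    """
    target = set(signature)
    intent = mixed_up(set(ROWS))          # intent of the top m-concept
    moved = True
    while moved:
        moved = False
        for attr in signature:            # keep the caller's attribute order
            if attr in intent:
                continue
            candidate = mixed_up(mixed_down(intent | {attr}))
            if candidate <= target:       # still compatible with the signature
                intent, moved = candidate, True
                break
    extent = mixed_down(intent)
    return intent == target and bool(extent), intent, extent


# Hypothetical new signature, not taken from the paper.
print(classify(["m1", "m2", "~m3", "m4"]))
```

With this hypothetical signature the walk goes through the intent containing only `m1`, then `m1` with `~m3`, and stops there: the only ways further down lead to the full signatures of g1 and g2, neither of which matches, so the signature is new and g1 and g2 are its closest known relatives along this path.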

5 CASE-STUDIES BASED ON REAL SAMPLES

We will show two cases of use of false documents detected by Police forces in European countries. The proposed security attributes related to the documents are those used as a reference by some Police forces. There are two different investigations, one about Italian driving licenses and another about Romanian identity cards, in which we use the approach stated in the previous section to consider the most important details on the different signatures.

As usual in this type of research, specific attributes representing a security measure will not be explicitly described, and we will refer to them in an abstract way: variables Ai will be used to encode attributes related to data (control digits, issued dates), Bj to denote attributes related to graphical information (shields, stamps), and Ck to refer to attributes about the location of information in the document (alignment).

5.1 Italian driving licenses

We have a sample composed of 36 false documents, divided into two models according to the issuing year. These models share some attributes but differ in others, so each has been studied separately because the signatures will be different.

In the first group, corresponding to driving licences issued before 2013, we have 10 initial attributes {A1–A2, B1–B8}, and failures were detected in seven of them, two of which (labeled with A) correspond to the filling of data (attributes B2, B6, and B7 are discarded because their accuracy is 0). There exist 13 different signatures for the 24 documents, with a level of correctness between 0.05 and 0.75 and an average of 0.17.

Taking into account the accuracy, attribute A1 (which is a data attribute) is the most useful. It has a high accuracy value of 0.96. Among the graphical attributes, the highest accuracy was 0.88 for B4. These two attributes, A1 and B4, allow us to detect all the false documents in the sample. At the opposite end, attribute B8 has an accuracy of 0.08. That means that most forgers know it well and can reproduce it with precision.

In the second group, corresponding to driving licenses issued after 2013, we have 11 initial attributes {A1–A2, B1–B9}, and failures were detected in all of them. The attributes related to data information remain the same.

There exist eight different signatures for the twelve documents, with a correctness between 0 and 0.79 and an average of 0.24. The signature that is composed of failures in all the attributes of a document could not be associated with a forger and was discarded.

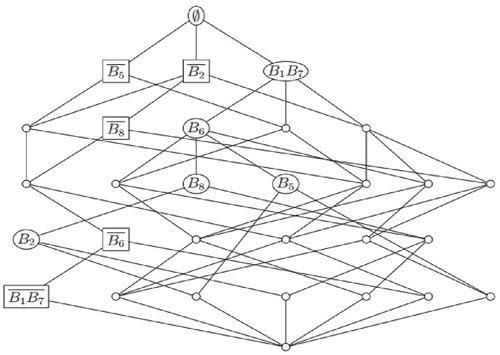

Considering the accuracy of the attributes, A1 is again the most useful, with a high value of 0.92. Among the graphical attributes, the highest accuracy was 0.83 for B1 and B9. These graphical attributes are related to each other, since the negation of one of them implies the negation of the other. This is not the only coincidence, and attributes B2, B6, B7, and B8 have the same accuracy in the same documents. For visualization purposes, we can reduce the number of attributes by introducing B10 = {B1, B9} and B11 = {B2, B6, B7, B8}. Attributes A1 and B10 allow us to detect all the false documents in the sample.

So far, all the information about accuracy and correctness could have been extracted directly from the dataset, hence the actual advantages of using FCA are not shown properly. The benefits can be seen when large datasets are used, because the exploration of the mixed concept lattice will reduce the time of research when new objects need to be checked.

- An attribute (negated or not) located in the upper levels of the concept lattice is common to more signatures than an attribute located in a lower level. As a result, if the negation of an attribute is in the top level, this attribute will be enough to detect a forgery because it has a value of 1 for accuracy; moreover, if a negated attribute is close to the top of the lattice, then it is a good attribute for detecting forgeries; however, a positive (not negated) attribute in the upper part of the lattice is not useful for verification.

FIGURE 5 Concept lattice for Italian driving licences issued after year 2013

As stated previously, A1 and B10 are sufficient to demonstrate that a document is false, and this situation is easy to check in the concept lattice because the negations of these attributes are in the second level.

- If both versions of an attribute, negated or not, are in similar levels of the lattice, we could consider both to be relevant to verify signatures. Attributes located in the same level of the concept lattice could have different accuracies. If we order them by top-down level and accuracy, from high to low values, we can determine which attributes are enough to detect a forgery.

In our example, if we choose B4 instead of A1 or B10, we could detect all the false documents from the sample, but it is not the desirable option because A1 and B10 have higher accuracies.

Checking a new sample of false documents with the existing mixed concept lattice, approximately 80% of them were detected using only attribute A1 and the remaining ones using B10 (all of which had correctly copied attribute A1). Approximately 60% of them were related to existing signatures in the sample, and the rest generated new ones. The overall checking time is considerably reduced because just two attributes had to be checked to detect forgeries.

A number of improvements can be applied in this situation for practical purposes; for instance, it is recommended that the selection of the attributes mix different categories because forgers usually fail in one of them; this was the reason for choosing A1 and B10, because A1 is related to data and B10 is related to graphical details. Furthermore, the top-down search of negative attributes in the mixed concept lattice has to be complemented with certain positive attributes, exactly those which are implied by the negative ones already collected. These details lead to further optimization of exploration time or detection of new forgeries not related to the sample.

5.2 Romanian identity cards

These documents have 21 different attributes used in the field of document authentication in order to provide a complete and detailed signature, separated into three sections: document data {A1–A3}, graphical details {B1–B8}, and alignments {C1A–C1D, C2A–C2E, C3}. There are three attributes for document data because there are two fields in the document for personal identification instead of the single field in the Italian driving license. The attribute with the highest accuracy is A2, with 0.83, so the highest accuracy is once again that of a data-related attribute. The highest accuracy among the six graphical attributes is that of B5, with 0.58, and the highest accuracy among the 10 alignment-related attributes is that of C2D, with 0.67. In the sample, the graphical attributes are reduced to six elements because the accuracy of B3 and B4 is 0; the existence of this kind of attribute shows how the forging technique evolves as details become known to counterfeiters.

In comparison with the previous example of the Italian driving license, this document has more attributes because of the alignment features, which do not exist in the Italian document (see Figure 6). The graphical attributes have high accuracy due to the simplicity of the document, so expert designers need to add details in the alignment of some words that appear in the document with the shield located in the background.

In all the studied cases, no group of attributes can separately detect all the false documents in the sample; the combination of two attributes from different groups achieves better results, but still does not cover all the documents. The best combination is composed of three attributes, one from each group, which coincide with the attributes with the best accuracy: A2, B5, and C2D. The proposed methodology has been applied by checking a new sample of false documents against the existing mixed concept lattice: all of them were detected using just attribute A2 (but attribute B5 also works). The new signatures did not exist in the previous sample, so they cannot be associated with existing forgeries.

The information obtained from these samples has been put into practice, showing the efficiency of the methodology in real cases. Exploration time is greatly reduced because Police officers do not need to explore all attributes and can focus on the most important and decisive ones.

6 CONCLUSIONS

We have proposed the use of FCA with negative attributes in order to recognize signatures of forgers in documents and find relations between them to obtain details of their activities. This research constitutes a preliminary step to a more detailed forensic analysis toward providing a useful work tool to Police Forces.

The existing approach in the fight against forgery is based on a preliminary analysis in which some patterns of the signatures are found to help in the recognition of false documents. The most representative patterns for each document are extracted and, then, Police forces focus their inspections on these patterns, simplifying their work. More detailed analysis and Police research could lead to the arrest of forgers identified by their signature (which contains the failures in their forgery), combining the information provided by individual owners of these documents. Our approach is based on the automatic generation of the mixed formal concepts from the existing dataset; it helps by classifying the different attributes in terms of relevance with respect to the security measures, saving analysis time; moreover, the whole process is transparent for the Police officers at work.

It is worth recalling that the more attributes considered in a signature, the better the identification. Sometimes the number of attributes studied can be increased by specific forensic tools not within the usual resources of a Police officer on duty; but it is also possible to use implicational systems, another tool in FCA, so that we can improve the knowledge extracted from datasets; for instance, the search for negative attributes in the mixed concept lattice could be complemented with certain positive attributes, exactly those which are implied by the negative ones already collected. These details lead to further optimization of exploration time or detection of new forgeries not related to the sample, which are left as future work.


ACKNOWLEDGMENTS

J.M. Rodriguez-Jimenez thanks the ADOFOR group for its support in this research. This work has been partially supported by the Spanish Ministry of Science, Innovation, and Universities (MCIU), the State Agency of Research (AEI), the Junta de Andalucía (JA), the Universidad de Málaga (UMA), and the European Social Fund (FEDER) through the research projects with reference PGC2018-095869-B-I00 (MCIU/AEI/FEDER, UE) and UMA2018-FEDERJA-001 (JA/UMA/FEDER, UE), and is developed within the COST Action CA17124 DigForASP (Digital Forensics: evidence Analysis via intelligent Systems and Practices).

CONFLICT OF INTEREST

The authors declare no potential conflict of interests.

Biographies

Manuel Ojeda-Aciego is a Full Professor of Applied Mathematics at the University of Malaga, Spain. He has coauthored more than 150 papers in scientific journals and proceedings of international conferences. His current research interests include formal concept analysis, fuzzy measures in terms of functional degrees, and algebraic structures for computer science. He serves on the Editorial Boards of Fuzzy Sets and Systems and the Intl J on Uncertainty and Fuzziness in Knowledge-based Systems.

José M. Rodríguez-Jiménez received the degree in Mathematics in 2000, M.Sc. degree in Software Engineering and Artificial Intelligence in 2011 and Ph.D. degree in Mathematics in 2017, all from the University of Málaga. Currently he collaborates with Applied Mathematics Dept. of the University of Málaga. He has (co)authored around 20 papers in scientific journals and proceedings of international conferences. Nowadays his research is related to Mathematics applied to Police activities.