Is artificial intelligence getting too much credit in medical genetics?

Abstract

Artificial intelligence has lately proven useful in the field of medical genetics. It is already being used to interpret genome sequences and diagnose patients based on facial recognition. More recently, large language models (LLMs) such as ChatGPT have been tested for their capacity to provide medical genetics information. It was found that ChatGPT performed similarly to human respondents on factual and critical thinking questions, albeit with reduced accuracy on the latter. In particular, ChatGPT's performance on questions related to calculating recurrence risk was dismal, despite only having to deal with a single disease. To see whether challenging ChatGPT with more difficult problems might reveal its flaws and their bases, it was asked to solve recurrence risk problems dealing with two diseases instead of one. Interestingly, it managed to correctly understand the mode of inheritance of recessive diseases, yet it incorrectly calculated the probability of having a healthy child. Other LLMs were also tested and showed similar noise. This highlights a major limitation for clinical use. While this shortcoming may be solved in the near future, LLMs may not yet be ready to be used as an effective clinical tool in communicating medical genetics information.

1 COMMENTARY

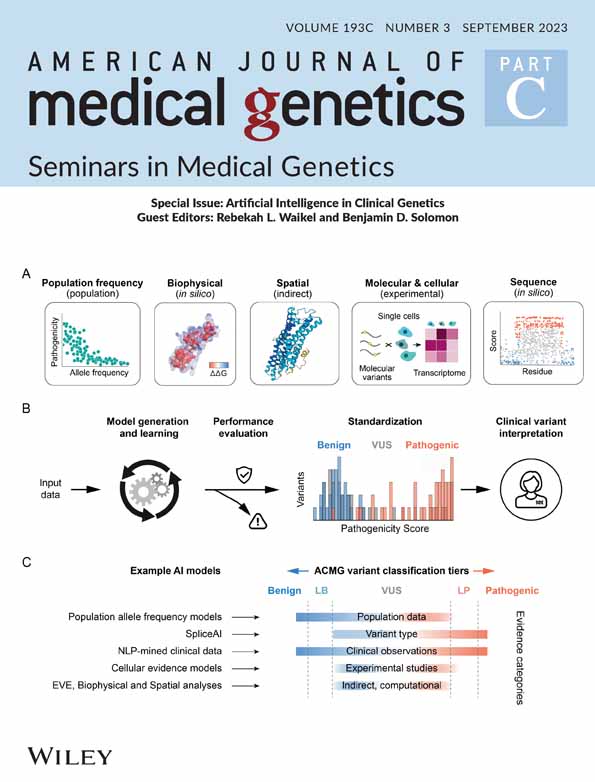

Over the past few years, artificial intelligence (AI) has been rapidly improving and has reached a stage where its performance rivals or even exceeds that of humans in certain fields. Medical genetics is one such field that has experienced a rapid adoption of AI. AI is already being used as an effective tool for interpreting genome sequences, making variant classification much faster and more optimized (Meng et al., 2023). Using AI to prioritize variants can even accelerate the discovery of novel disease gene candidates (Sundaram et al., 2018). Another exciting application of AI in medical genetics is its ability to rapidly diagnose patients with genetic syndromes using facial recognition with an accuracy that outperforms clinical geneticists (Gurovich et al., 2019; Porras et al., 2021). This development has the potential to accelerate diagnosis at the point of care and reduce healthcare disparity, and it will likely become an integral component of the diagnostic process (Solomon et al., 2023).

More recently, there has been a growing interest in the use of AI-powered chatbots to facilitate communication of medical information to patients. The medical genetics profession in particular stands to benefit the most given the well-known shortage in the workforce, even in high-income countries (Dragojlovic et al., 2020). A recent paper by Duong and Solomon investigated the performance of the popular large language model (LLM) ChatGPT (one type of AI) and compared it to that of human respondents in answering medical genetics questions (Duong & Solomon, 2023). Both groups were asked to solve questions that were either primarily memorization focused or required critical thinking. Interestingly, while the overall performance of ChatGPT was similar to that of humans, with an accuracy of 68.2%, there was a significant difference in how it performed on memorization and critical thinking questions, with accuracies of 80.3% and 26.3%, respectively. Deeper analysis of the data reveals that within critical thinking questions, ChatGPT had a very low accuracy when addressing recurrence risk calculation. Of the six recurrence risk questions (see Q329, Q347, Q353, Q363, Q370, and Q391), it only managed to answer one correctly (see Q353) (Duong & Solomon, 2023). This is even more concerning when one considers that those questions only dealt with the recurrence risk of one Mendelian disease at a time.

Recurrence risk is a core component of medical genetics practice. Therefore, it is crucial that an AI-powered chatbot designed to serve as a medical genetics tool is able to give consistent and accurate estimates of recurrence risk if it is to be considered clinically useful. Given ChatGPT's struggle with calculating the recurrence risk of a single Mendelian disease, I set out to investigate whether challenging it (GPT-3.5) with more than one disease might more clearly reveal the flaws in the logic it uses in its answers. This should not be viewed as a purely hypothetical scenario since recurrence risk involving more than one autosomal recessive disease is not uncommon in consanguineous populations (AlAbdi et al., 2021). When prompted three times with the same simple question: “Two parents come for counseling. Both are carriers of two autosomal recessive diseases. What is the probability that their child will be unaffected?” it consistently gave incorrect answers but with a different logic each time (File S1). In Answer 1, it did not incorporate the second disease in its calculation. In Answer 2, it correctly stated that the probability of passing down a normal allele from each parent to the child is 0.5 but then conflated the two parents with the two diseases in its calculation. Answer 3 followed a similar logic to Answer 2, except that it applied the probabilities for one allele from one parent to the calculation for two diseases. Please note that the correct answer is 0.5625: the child has a 3/4 chance of being unaffected by each disease, and 3/4 × 3/4 = 9/16 when the two diseases are inherited independently [see Alkuraya (2023) for explanation of this and more complex scenarios].
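The arithmetic behind the correct answer can be sketched in a few lines of Python. This is a minimal illustration, not the method used by any published tool. It assumes that both diseases are fully penetrant, that their loci segregate independently, and that "unaffected" means affected by neither disease. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def p_unaffected(n_diseases: int) -> Fraction:
    """Closed form: a child of two carriers has a 3/4 chance of being
    unaffected by each disease, and independent diseases multiply."""
    return Fraction(3, 4) ** n_diseases


def p_unaffected_by_enumeration(n_diseases: int) -> Fraction:
    """Cross-check by listing every equally likely allele combination.

    For each disease the child receives one allele from each parent:
    'A' (normal) or 'a' (pathogenic), each with probability 1/2.
    The child is affected by a disease only with the pair ('a', 'a').
    """
    per_disease = list(product("Aa", repeat=2))  # (maternal, paternal)
    outcomes = list(product(per_disease, repeat=n_diseases))
    unaffected = sum(
        all(pair != ("a", "a") for pair in outcome) for outcome in outcomes
    )
    return Fraction(unaffected, len(outcomes))


if __name__ == "__main__":
    print(p_unaffected(2), float(p_unaffected(2)))  # 9/16 0.5625
    print(p_unaffected_by_enumeration(2))           # 9/16
```

Both routes give 9/16 for two diseases. The enumeration is slower, but it makes explicit that the parents contribute alleles and the diseases contribute independent factors, which are the two things the incorrect answers mixed up.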

Interested in seeing whether other LLMs might answer the same question differently, I tested Bard and Claude with the same approach as with ChatGPT. Bard's answers (File S2) were far more consistent than ChatGPT's, giving almost the exact same response each of the three times it was prompted. However, the accuracy of these responses was inferior. It used a very rudimentary logic to approach the question and, in turn, stated incorrectly that the probability is 0.25 each time. On the other hand, the answers given by Claude (File S3) were far more nuanced. In each of its responses, it used a step-by-step bullet-point method to approach the problem by laying out all the given information before performing any calculations. This allowed for much more logical and complex answers compared to Bard and ChatGPT. Its first two responses were fairly similar, with each calculating an incorrect probability of 0.0625. On its third attempt, however, it managed to correctly answer the question and give a logical explanation of how it arrived at the answer. While this success is certainly impressive, it is worth noting that it only occurred after Claude answered incorrectly in the previous two attempts. Furthermore, this achievement becomes even less impressive when considering that the correct answer was only reached on the ninth attempt across three different LLMs.

After seeing how ChatGPT was able to give a very compelling explanation while still presenting an incorrect answer, I was curious as to the risk this may pose not only to patients but also to medical geneticists. This could be very harmful in a clinical setting where a medical professional may believe an LLM's convincing, but ultimately incorrect, logic. To formally test this, I asked ChatGPT a question a medical geneticist may have prior to performing a genetic test: “A homozygous VUS in CFTR was identified in a child with cystic fibrosis born to first cousin parents. How many unaffected siblings need to be tested for this VUS to achieve a less than 1/8 probability of segregating by chance alone?” This 1/8 cutoff is important for using segregation analysis to support the pathogenicity of variants (Jarvik & Browning, 2016). The response given by ChatGPT (File S4) shows that it was able to arrive at the correct inequality of (3/4)^n < 1/8. Interestingly, however, instead of correctly calculating n to be approximately 7.23, it calculated it to be approximately 10.43, even though it was able to correctly rearrange the inequality. This mistake happened due to an incorrect simplification between the second and third steps, where it simplified log(1/8) to −3 instead of −3log(2). This is a stark example of how LLMs can deceive even professionals by providing a very compelling explanation, even a correct equation, yet concluding with an incorrect answer.
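The numbers in this example can be checked with a short Python sketch. It assumes that each unaffected sibling has a 3/4 chance of not being homozygous for the variant, as in the inequality above. It also assumes that the erroneous value arose from substituting −3 for log(1/8) while using natural logarithms elsewhere; that is an inference that reproduces the reported 10.43, not something taken from File S4. The function names are hypothetical.

```python
import math
from fractions import Fraction

P_NOT_HOMOZYGOUS = Fraction(3, 4)  # per unaffected sibling (assumed)
THRESHOLD = Fraction(1, 8)         # chance-segregation cutoff


def exact_n(p=P_NOT_HOMOZYGOUS, threshold=THRESHOLD) -> float:
    """Real-valued n at which p**n equals the threshold."""
    return math.log(float(threshold)) / math.log(float(p))


def min_siblings(p=P_NOT_HOMOZYGOUS, threshold=THRESHOLD) -> int:
    """Smallest whole number of siblings with p**n strictly below the
    threshold, found with exact fractions to avoid rounding issues."""
    n = 0
    while p ** n >= threshold:
        n += 1
    return n


def erroneous_n(p=P_NOT_HOMOZYGOUS) -> float:
    """Reproduces the slip: log(1/8) replaced by -3 (true only in base 2)
    while the denominator still uses the natural logarithm."""
    return -3 / math.log(float(p))


if __name__ == "__main__":
    print(round(exact_n(), 2))      # 7.23
    print(min_siblings())           # 8
    print(round(erroneous_n(), 2))  # 10.43
```

Because n must be a whole number of siblings, the practical answer is eight. The value −3 is the logarithm of 1/8 only in base 2, so the error amounts to mixing logarithm bases between the numerator and the denominator.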

We have thus far seen two types of noise with LLMs: one related to the inaccuracy of their responses and the other related to the faulty logic behind them. This raises the question of how different LLMs can be evaluated in order to be compared objectively. This question was recently addressed by Singhal et al., who introduced a new method of benchmarking LLMs (Singhal et al., 2023). By judging the quality of a model's response along multiple axes (e.g., missing content, evidence of correct/incorrect reasoning), LLMs can be fine-tuned to improve their capabilities in answering medical questions and enhance their recall, reading comprehension, and reasoning skills (Singhal et al., 2023).

In their current state, AI-based tools can be very useful in fields that mainly involve factual questions. However, as was shown in the answers and their analysis above, LLMs still have a long way to go before serving as a trusted tool in communicating medical genetics information to patients. This also serves as an important reminder that not all tools must include AI in order to be helpful. For example, ConsCal can accurately calculate recurrence risk of any number of diseases purely using a simple mathematical equation (Alkuraya, 2023). In fact, it seems surprising that a powerful AI such as ChatGPT should struggle to solve what is essentially a probability question. Admittedly though, as with much of the recently published literature regarding AI, this commentary will probably soon become obsolete given the unprecedented speed at which this technology is developing. However, just as the Internet, with its massive potential, did not make medical doctors irrelevant, there is still a place for future geneticists from my generation to make an impact, even in an era dominated by AI.

CONFLICT OF INTEREST STATEMENT

The author declares no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available in the supplementary material of this article.