Applications of artificial intelligence in clinical laboratory genomics

Abstract

The transition from analog to digital technologies in clinical laboratory genomics is ushering in an era of “big data” in ways that will exceed human capacity to rapidly and reproducibly analyze those data using conventional approaches. Accurately evaluating complex molecular data to facilitate timely diagnosis and management of genomic disorders will require supportive artificial intelligence methods. These are already being introduced into clinical laboratory genomics to identify variants in DNA sequencing data, predict the effects of DNA variants on protein structure and function to inform clinical interpretation of pathogenicity, link phenotype ontologies to genetic variants identified through exome or genome sequencing to help clinicians reach diagnostic answers faster, correlate genomic data with tumor staging and treatment approaches, utilize natural language processing to identify critical published medical literature during analysis of genomic data, and use interactive chatbots to identify individuals who qualify for genetic testing or to provide pre-test and post-test education. With careful and ethical development and validation of artificial intelligence for clinical laboratory genomics, these advances are expected to significantly enhance the abilities of geneticists to translate complex data into clearly synthesized information for clinicians to use in managing the care of their patients at scale.

1 NECESSITY OF ARTIFICIAL INTELLIGENCE IN GENOMICS

Constrained over the past 50 years to the realms of microscopes, gel electrophoresis, and radiographs, clinical laboratory genomics is now dominated by digital data due to the rapid adoption of next-generation sequencing (NGS). Exome and genome sequencing—even multigene panels and chromosomal microarrays—have already been performed for tens of millions of individuals around the world, and vast amounts of digitized data from millions of these individuals are available to those interested in mining the data for discoveries or for insights that can improve standards of practice in clinical laboratory genomics. These genomic data are essential in precision medicine, where a variety of complex molecular data are combined with clinical information to diagnose and treat individuals with disease (Rajpurkar et al., 2022; Yu et al., 2018). However, even the amount of extractable data in a single genome—with millions of sequence variants, many copy number variants, somatic mosaicism, and variable expression of transcript isoforms—exceeds the capacity of human beings alone to identify answers to specific questions about health and traits. One solution is to incorporate advanced artificial intelligence (AI) into genomic analysis (Diao et al., 2018).

Following the completion of the Human Genome Project, genomes from multiple human populations and diverse primate and non-primate species have been sequenced and deposited in public databases (e.g., gnomAD), enabling important discoveries through DNA sequence comparisons and assessments of allele frequencies in different populations (Karczewski et al., 2020; Margulies & Birney, 2008). Alongside such advances in understanding DNA sequence variation (genomics) over the past two decades, considerable progress has been made in characterizing protein sequence variation and function (proteomics) and RNA transcript isoforms and their expression patterns (transcriptomics) (GTEx Consortium, 2015; Jumper et al., 2021). Furthermore, the ClinVar database at the National Institutes of Health (www.ncbi.nlm.nih.gov/clinvar/) has amassed more than two million DNA variants observed in individuals undergoing genetic testing for a broad variety of hereditary diseases, providing a rich dataset that has been used to improve and standardize clinical variant classification (Harrison et al., 2016). Collectively, these data are an enormous resource that can inform many aspects of biology and precision medicine, from discovery of gene-disease associations to insight into how variants in a given gene affect molecular mechanisms of disease. These data are also useful in virtually every step of the clinical genetic testing workflow, including effectively capturing relevant target DNA from a patient sample, aligning NGS reads, training and evaluating complex machine learning (ML) tools that can analyze genomic variation, and providing evidence to support categorization of individual DNA variants as disease-causing or benign.

2 ARTIFICIAL INTELLIGENCE METHODS IN LABORATORY GENOMICS

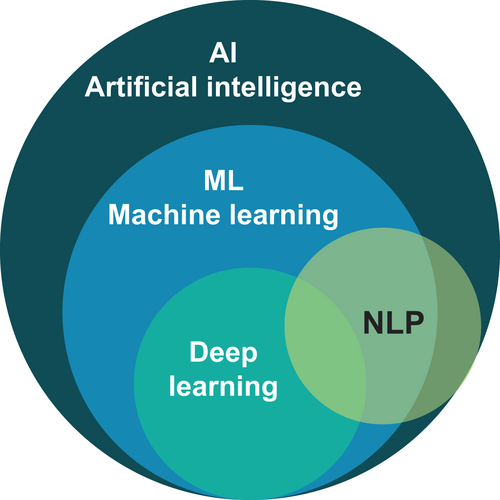

Paralleling the dramatic evolution in scale and speed of DNA sequencing methods, AI has rapidly advanced since the term was coined and the field born at the Dartmouth Summer Research Project on Artificial Intelligence in 1956 (Artificial Intelligence (AI) Coined at Dartmouth, n.d.). Broadly, AI encompasses the theory and development of intelligent systems—systems that act rationally in response to their inputs (Russell & Norvig, 2021). Decades of nearly exponential growth in computing power (Moore's law) and the commensurate increase in data availability have enabled both theoretical and applied achievements in a variety of AI disciplines, including ML, natural language processing (NLP), and computer vision. As described in the next sections, ML and NLP have particularly powerful applications in clinical laboratory genomics.

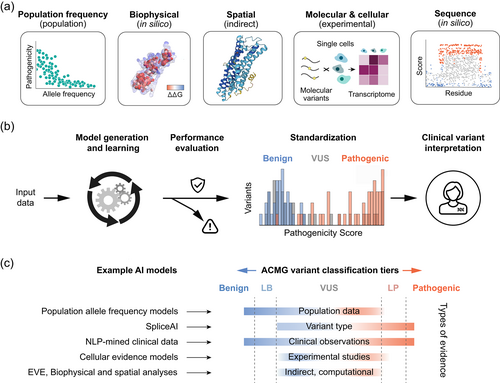

ML is an AI discipline that involves applying statistical models and algorithms to analyze and draw inferences from data (Figure 1). Within clinical laboratory genomics, ML techniques can be applied to tasks as diverse as predicting the effect of an altered amino acid residue in a protein or identifying phenotypically similar groups of patients. In simple terms, an ML-based computer algorithm is trained using a dataset with well-characterized examples, such as known pathogenic or benign DNA variants, to recognize specific patterns in those examples. A trained ML system can then be used to predict patterns from new data (e.g., quantitatively determining if a novel DNA variant appears to be more like a pathogenic variant or more like a benign one). Broadly, ML techniques cover a spectrum that spans from supervised methods—in which a true value or label, such as a variant's pathogenicity, is known for each example used for training—to unsupervised methods, in which no such labels are used. The ML toolkit comprises a wide range of statistical and algorithmic approaches, each with its own tradeoffs with respect to model complexity, interpretability, computational and data requirements, and ease of use. Classical techniques (e.g., logistic regression, linear regression, K-nearest neighbors, random forests, programmed decision trees), neural networks (i.e., deep learning), and Bayesian methods (e.g., Gaussian processes, multilevel models) all have suitable applications in clinical laboratory genomics.
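The supervised workflow described above (train on labeled examples, then score a new variant) can be sketched with a K-nearest neighbors classifier, one of the classical techniques named in this section. This is a minimal illustration only: the two features, their values, and the function name `predict` are invented for the example and do not represent any published model.

```python
import math

# Toy labeled training set. Each example is ((conservation, rarity), label),
# with both hypothetical features scaled to the range 0-1.
TRAINING = [
    ((0.95, 0.99), "pathogenic"),
    ((0.90, 0.98), "pathogenic"),
    ((0.85, 1.00), "pathogenic"),
    ((0.20, 0.30), "benign"),
    ((0.30, 0.50), "benign"),
    ((0.10, 0.10), "benign"),
]

def predict(features, k=3):
    """Return the majority label among the k nearest training examples."""
    neighbors = sorted(TRAINING, key=lambda ex: math.dist(ex[0], features))[:k]
    labels = [label for _, label in neighbors]
    return max(set(labels), key=labels.count)

# A novel variant that resembles the pathogenic examples.
print(predict((0.92, 0.97)))  # pathogenic
# A novel variant that resembles the benign examples.
print(predict((0.15, 0.20)))  # benign
```

In practice, the choice of features and the quality of the labeled training set matter far more than the choice of classifier.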

NLP is the branch of AI that involves the application of computers to understanding, interpreting, and generating human language, often with deep learning (Figure 1). NLP methods have been used to develop a variety of tools ranging from machine translation (e.g., Google Translate) to voice assistants (e.g., Amazon Alexa) to large language models and chatbots (e.g., GPT-4, ChatGPT) (Box 1). Large language models represent some of the largest and most complex ML models ever developed, with hundreds of billions of trainable parameters and trillions of examples used for training. These types of models have important uses in clinical genomics, such as in text mining and simple chatbots, and are expected to expand rapidly in scope and utility.

Box 1. Question posed: “How can AI improve genetic testing?” ChatGPT response: “Artificial intelligence (AI) has the potential to improve genetic testing in several ways. Here are a few examples: Developing more accurate algorithms for interpreting genetic data: AI can be used to develop sophisticated algorithms that can accurately interpret genetic data. These algorithms can help identify genetic variants associated with diseases, assess an individual's risk of developing a disease, and provide personalized recommendations for treatment or preventive measures. Identifying novel genetic variants: AI can be used to identify previously unknown genetic variants that are associated with diseases. This can be achieved by analyzing large datasets of genetic information using machine learning techniques. Streamlining genetic testing workflows: AI can be used to automate many of the steps involved in genetic testing, from sample preparation to data analysis. This can help reduce the turnaround time for test results and increase the efficiency of the testing process. Enhancing genetic counseling: AI can be used to analyze a patient's genetic information and provide personalized counseling based on their individual risk factors. This can help patients make informed decisions about their health and well-being. Facilitating the integration of genetic data into electronic health records (EHRs): AI can be used to help integrate genetic data into EHRs, making it easier for healthcare providers to access and utilize this information in patient care. Overall, AI has the potential to revolutionize genetic testing and improve our ability to diagnose and treat diseases. However, it is important to ensure that AI-based solutions are developed and implemented in a responsible and ethical manner, with appropriate safeguards in place to protect patient privacy and ensure the accuracy and reliability of test results.”

Compared to the use of traditional methods, the application of AI technologies represents a critical opportunity for dramatically improving the scalability, accuracy, and utility of clinical genomics. Diverse AI approaches are already being applied in this realm (Ledgister Hanchard et al., 2022)—to support the identification of rare diseases through analysis of facial gestalt in the clinic (Gurovich et al., 2019; Hsieh et al., 2022; Myers et al., 2020; Porras et al., 2021), to non-invasively select embryos for preimplantation genetic testing or direct uterine transfer (Barnes et al., 2023; Dimitriadis et al., 2022), and to provide information to individuals with genetic health concerns (Schmidlen et al., 2022; Smith et al., 2023).

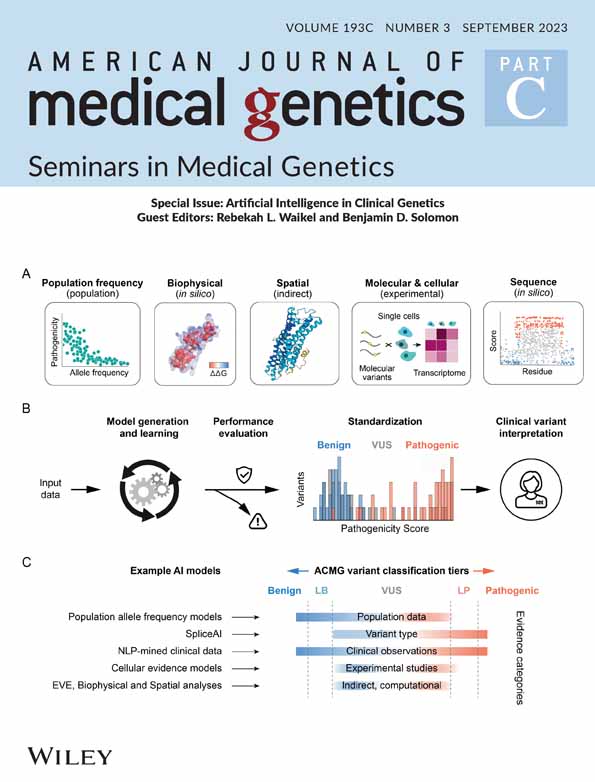

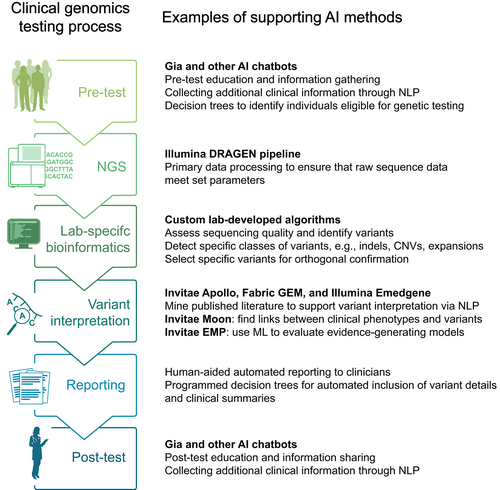

Several publications have described the use of AI in genomics in general, and mostly within the context of basic research (Diao et al., 2018; Ledgister Hanchard et al., 2022; Libbrecht & Noble, 2015), but an article specifically on the applications of AI in clinical laboratory genomics has become both timely and necessary. Here we discuss new applications of AI within clinical laboratory genomics (Figure 2) and provide real-world data to illustrate their use and value. To preserve clarity in describing the various applications, we discuss use cases for AI rather than the technical details of each AI method.

3 SEQUENCING AND BIOINFORMATICS

The types and scale of sequencing data have dramatically changed over the past decade, owing to rapid improvements in NGS chemistry and throughput. The massive outputs from NGS demand large computing power to process the data into readable formats for downstream analyses. As an example, the predominant DNA sequencing equipment vendor, Illumina, uses the DRAGEN™ Pipeline to accelerate data processing, relying in part on AI (DRAGEN Sets New Standard for Data Accuracy in PrecisionFDA Benchmark Data, n.d.). Once primary NGS data have been assessed for quality and completeness, variants are called from the aligned sequence reads, a step for which GATK HaplotypeCaller has been the benchmark tool (DePristo et al., 2011). The critical step of accurately identifying variants is being further improved through newer algorithms, some based on AI methods (Alharbi & Rashid, 2022; Olson et al., 2023; Poplin et al., 2018). For instance, a deep learning method (DeepVariant-AF) developed recently by Google Health considers population allele frequencies from the 1000 Genomes Project and appears to call variants more accurately than prior methods (Chen, Kolesnikov, et al., 2023).

We and others have also developed methods for detecting copy number variants from NGS, by applying logistic regression on data from known copy number samples to generate a mean model that provides a baseline for calling actual copy number events in clinically tested samples (Hill & Unckless, 2019; Lincoln et al., 2021; Özden et al., 2022; Truty et al., 2019; Välipakka et al., 2020). Similarly, we use an AI approach to identify which variants from primary sequencing analysis need confirmation through orthogonal methods (Lincoln et al., 2019; unpublished data). In sum, AI is being used both to accelerate data processing and to improve the accuracy of variant calling, including for variant types that have historically been difficult to detect from NGS data (e.g., copy number variants).
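The idea of building a baseline from samples of known copy number and then calling events against it can be illustrated with a deliberately simplified sketch. Note that this example substitutes a per-target z-score on normalized read depth for the logistic regression approach described above; the function names, the depth values, and the cutoff of 3.0 are assumptions made for illustration.

```python
from statistics import mean, stdev

def build_baseline(reference_depths):
    """Per-target (mean, standard deviation) of normalized read depth across
    reference samples assumed to carry two copies of every target."""
    targets = zip(*reference_depths)  # transpose: one tuple of depths per target
    return [(mean(t), stdev(t)) for t in targets]

def call_copy_number(sample_depths, baseline, z_cutoff=3.0):
    """Label each target 'loss', 'gain', or 'normal' from its z-score."""
    calls = []
    for depth, (mu, sigma) in zip(sample_depths, baseline):
        z = (depth - mu) / sigma
        if z <= -z_cutoff:
            calls.append("loss")
        elif z >= z_cutoff:
            calls.append("gain")
        else:
            calls.append("normal")
    return calls

# Four hypothetical reference samples, three targets each (depth normalized to ~1.0).
reference = [
    [1.00, 0.98, 1.02],
    [1.03, 1.01, 0.97],
    [0.97, 1.02, 1.00],
    [1.01, 0.99, 1.01],
]
baseline = build_baseline(reference)

# A test sample with roughly half depth at target 1 and 1.5x depth at target 3.
print(call_copy_number([0.52, 1.00, 1.49], baseline))  # ['loss', 'normal', 'gain']
```

A production method would also need to handle targets with zero variance, batch effects, and GC bias, none of which are addressed here.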

4 CLINICAL CLASSIFICATION OF SEQUENCE VARIANTS

The significance of DNA variants observed in individuals undergoing clinical genetic testing for hereditary disease is determined through a variant classification process based on guidelines prescribed by the American College of Medical Genetics and Genomics (ACMG) (Richards et al., 2015). This process uses a standardized approach for collating diverse types of evidence to classify variants into one of five tiers: pathogenic, likely pathogenic, variant of uncertain significance (VUS), likely benign, or benign. Types of evidence include the nature of the DNA sequence variant and its intragenic location, evolutionary conservation at the variant position, prevalence of the variant in a general population cohort, predicted effect of the variant on the downstream transcribed mRNA or translated protein, presence of protein functional domains at or near the variant position, clinical phenotype of the individual undergoing genetic testing, published reports of that variant or other variants at the same position or nearby positions, and family history. How these types of evidence are interpreted and weighed relative to each other determines the final clinical classification of the observed variant.

The types of evidence used for variant classification fall into two highly correlated but conceptually distinct classes: evidence demonstrating a deleterious effect of a DNA variant on gene function and evidence demonstrating a detrimental effect of the variant on the health and well-being of an individual carrying that variant. Examples of a variant's deleterious effect on protein function include its impact on protein structure and stability or on enzymatic, receptor, or channel activity as demonstrated through in silico, in vivo, or cellular models. In contrast, examples of a variant's detrimental health effects include clinical observations, such as segregation of a variant with disease in families or presence of the variant in affected patients in different families, as well as population effects over time such as degree of evolutionary conservation of a residue in orthologous proteins among different species or allele frequencies of the variant in various human populations.

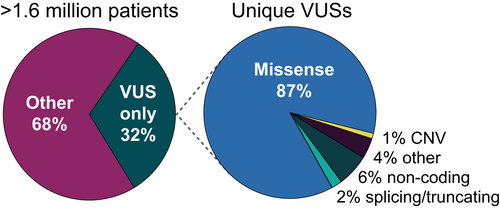

The greatest source of uncertainty in clinical genetic testing for hereditary disease today stems from our limited ability to accurately predict the functional consequences of protein sequence changes that result from missense DNA variants. This class of variants makes up the largest proportion of those clinically classified as VUSs. We conducted a study of the full spectrum of variants observed in clinical genetic testing and found that 87% of VUSs were missense variants (submitted manuscript), underscoring the urgent need to improve functional prediction methods for this class of variants encountered in clinical laboratory genomics. A smaller proportion of VUSs comprises variants that might affect splicing, protein synthesis, or gene expression (Figure 3).

4.1 Predicting the effects of DNA variants on protein structure and function

Clinical laboratories typically use a suite of in silico models designed to predict the consequences of DNA sequence variants, and these predictions represent evidence that contributes to classifying variants. Our group uses a collection of these evidence-generating models, all of which are trained using ML and validated in ways that ensure high positive predictive values. Predictions from these models are incorporated into variant classification only when a high performance (accuracy) threshold is met. These models together comprise Invitae's Evidence Modeling Platform (EMP) (Figure 4).

In silico algorithms have long been available for predicting the effects of missense changes, including some that leverage ML (e.g., PolyPhen-2, MutationTaster, CADD) (Garcia et al., 2022). However, when these in silico algorithms are applied broadly (i.e., to predict the effects of variants in all coding genes in the genome), their accuracy tends to be inconsistent. Factors contributing to this inconsistency include the relative paucity of definitively classified variants in some genes, inadequate consideration of gene-specific molecular mechanisms of disease, and challenges in ruling out circularity or double counting of redundant data during AI training and evaluation (Ghosh et al., 2017; Grimm et al., 2015). As a result, the ACMG guidelines award little weight to outputs from each of the algorithms when used by itself, and instead recommend the use of multiple algorithms to obtain consensus scores (Richards et al., 2015; Variant Effect Predictors, n.d.).

Recent improvements in algorithms designed to predict the effects of missense variants, especially those developed as ensemble predictors (e.g., REVEL), have overall led to higher accuracy (Pejaver et al., 2022). However, their accuracy is still diminished in certain types of genes because they extrapolate from a limited training dataset to score variants in diverse genes that often do not resemble the training set. To address this limitation, as part of Invitae's EMP (Figure 4) we have developed new ML-based algorithms using carefully curated training sets of pathogenic or benign variants from select genes, and these algorithms show high positive predictive values when applied to evaluating new variants in those same genes (manuscript in preparation). This gene-by-gene training and implementation approach achieves both high performance and systematic incorporation into the overall process of clinical variant classification.
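The gene-by-gene gating described above, in which a model's predictions are used only in genes where it has demonstrated a high positive predictive value (PPV), might be sketched as follows. The record layout, the placeholder gene names, and the 0.95 threshold are assumptions for illustration; the source does not state the threshold actually used.

```python
from collections import defaultdict

def ppv_by_gene(records):
    """records: iterable of (gene, predicted_pathogenic, truly_pathogenic).
    Returns PPV per gene, for genes with at least one positive prediction."""
    counts = defaultdict(lambda: [0, 0])  # gene -> [true positives, predicted positives]
    for gene, predicted, truth in records:
        if predicted:
            counts[gene][1] += 1
            if truth:
                counts[gene][0] += 1
    return {gene: tp / pp for gene, (tp, pp) in counts.items()}

def genes_passing(records, min_ppv=0.95):
    """Genes in which the model may contribute evidence (hypothetical cutoff)."""
    return sorted(g for g, ppv in ppv_by_gene(records).items() if ppv >= min_ppv)

# Hypothetical validation results on variants with known classifications.
records = (
    [("GENE_A", True, True)] * 20      # 20 correct pathogenic predictions
    + [("GENE_B", True, True)] * 7     # 7 correct pathogenic predictions
    + [("GENE_B", True, False)] * 3    # 3 false positives
    + [("GENE_B", False, False)] * 5   # negative predictions do not affect PPV
)

print(ppv_by_gene(records))    # {'GENE_A': 1.0, 'GENE_B': 0.7}
print(genes_passing(records))  # ['GENE_A']
```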

A quantum leap occurred recently when AlphaFold, a deep learning-based algorithm developed by DeepMind, accurately predicted the structures of ~100,000 proteins (Jumper et al., 2021). This and similar advances raise an important question: How can such technologies be incorporated into clinical variant classification to accurately indicate the effects of DNA variants on downstream protein structure and function and, ultimately, on health? Some have explored whether evidence from the analysis of protein structures can be used to help classify variants (Caswell et al., 2022). In a more elaborate approach, to support clinical classification of missense variants, our group has developed methods that use AlphaFold output to predict the effects of amino acid substitutions on the molecular stability of resulting proteins (manuscript in preparation). These methods have been incorporated into Invitae's EMP, where the molecular instability scores are assessed for accuracy and, if they meet a strict quality threshold, are converted into gene-specific calibrated evidence that is used for classifying missense variants. This process for validating and integrating AI-based evidence into variant classification is described in more detail in Figure 4.

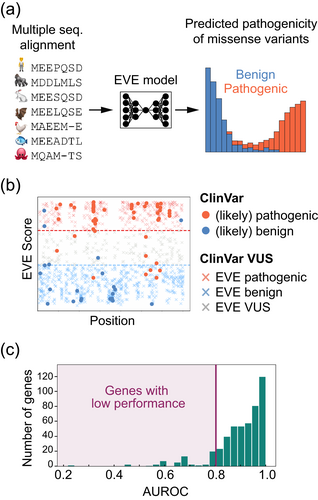

Another important piece of evidence that clinical laboratories use to interpret the significance of a DNA variant within a protein-coding region is the degree of evolutionary conservation at the affected DNA sequence and its corresponding amino acid position. A high degree of conservation at a specific amino acid position across many species, or even complete conservation between vertebrates and invertebrates, suggests the necessity of that residue for normal protein structure or function. Simple sequence alignments that help determine the degree of conservation among genomic sequences of different species have been used to predict the consequences (if any) of novel DNA variants. However, it was not until recently that sophisticated in silico tools could demonstrate high accuracy in those predictions. Among these tools, ML-based EVE (evolutionary model of variant effect) in particular has demonstrated strong performance in predicting the pathogenicity of a DNA variant (Figure 5a), as measured by concordance with clinical classifications in the ClinVar database (Figure 5b) and with experimental data (Frazer et al., 2021). Despite its improved proteome-wide performance, EVE still demonstrates variable accuracy in predicting the effects of amino acid changes in certain proteins. To address this limitation, we use Invitae's EMP to systematically evaluate the output from EVE, gene-by-gene, and use it for variant interpretation only when it meets the required quality threshold (Figure 5c).

Newly described in a publication in Science, Primate-AI3D is a deep learning model that leverages natural variation in primates to make inferences about the impact of DNA variants in humans (Gao et al., 2023). Built on the premise that protein-altering variants commonly found in any non-human primate have been tolerated by natural selection—and are thus likely benign in humans—Primate-AI3D uses deep learning to map genetic variants onto 3D protein structures partially derived from AlphaFold (Jumper et al., 2021) to make predictions about their pathogenicity. Prior to building Primate-AI3D, the authors showed that among missense variants that had clinical classifications in the ClinVar database that were concordant between two or more laboratory submitters and were also common in at least one non-human primate, ~99% were classified as benign, demonstrating the validity of their model's premise. Notably, sequence data collected from just 809 individual non-human primates (233 species, some critically endangered) contained ~20% more exome variants than all human data currently in gnomAD, illustrating the value of this biodiversity. The authors then used this wealth of primate data and Primate-AI3D to predict the pathogenicity of all possible human missense variants (>70 million variants). In validation tests, Primate-AI3D performed strongly at separating pathogenic and benign ClinVar-classified variants. Thus, while EVE leverages evolutionary conservation broadly across diverse animals, Primate-AI3D may be more powerful in part because it focuses on the taxa most closely related to us—though both methods illustrate the promise of applying AI-based methods to sequence data from diverse species.

Another valuable source of evidence, entirely distinct from in silico models, comes from experimental assessments of the impact of variants on cellular function in vitro. Over the past decade, high-throughput cellular assays, collectively termed multiplex assays of variant effect (MAVEs), have been developed to systematically characterize, on a very large scale, the impact of DNA variants on a wide array of molecular functions, including protein–protein interactions (Araya et al., 2012), enzymatic activity (Romero et al., 2015), regulatory control (Kwasnieski et al., 2012), and protein stability (Hasle et al., 2019). Unlike previous approaches for determining variant effects, MAVEs enable the characterization of many DNA variants within a single experiment. As a result, MAVEs present a useful opportunity to incorporate new, highly informative functional data into variant classification during clinical genetic testing for hereditary disease (Esposito et al., 2019). Notwithstanding the importance and richness of this resource, it remains imperative that MAVE outputs are carefully evaluated gene-by-gene and experiment-by-experiment to ensure that the data are suitable for variant classification. To that end, again using Invitae's EMP, we examined 49 MAVE datasets from 22 publications and discovered that 42 were relatively poor at discriminating between benign and pathogenic DNA variants (i.e., the AUROC was <0.80). In contrast, MAVE data related to BRCA1, TP53, and several other genes were extremely useful for variant classification, because those data faithfully represented the effects of DNA variants on gene function in ways that correlated strongly with pathogenicity in individuals diagnosed with cancer (Findlay et al., 2018; Giacomelli et al., 2018). These results underscore the need to consider deleterious effects of variants on protein structure and function as contributing to, and not unilaterally determining, whether a variant is clinically pathogenic.
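The AUROC criterion mentioned above can be made concrete with a small sketch. It computes AUROC as the probability that a randomly chosen pathogenic variant receives a higher score than a randomly chosen benign one, and then applies the 0.80 cutoff from the text. The scores are invented, and the assumption that higher scores indicate greater functional damage is ours; real assays may be scaled in the opposite direction.

```python
def auroc(pathogenic_scores, benign_scores):
    """Fraction of (pathogenic, benign) pairs in which the pathogenic variant
    scores higher, counting ties as half. Equals the area under the ROC curve."""
    wins = 0.0
    for p in pathogenic_scores:
        for b in benign_scores:
            if p > b:
                wins += 1.0
            elif p == b:
                wins += 0.5
    return wins / (len(pathogenic_scores) * len(benign_scores))

def is_discriminating(pathogenic_scores, benign_scores, min_auroc=0.80):
    """Apply the AUROC cutoff quoted in the text to one assay dataset."""
    return auroc(pathogenic_scores, benign_scores) >= min_auroc

# Hypothetical assay scores for variants with known clinical classifications.
pathogenic = [0.9, 0.8, 0.7, 0.4]
benign = [0.1, 0.2, 0.3, 0.5]

print(auroc(pathogenic, benign))              # 0.9375
print(is_discriminating(pathogenic, benign))  # True
```

An AUROC of 0.5 corresponds to no discrimination between the two classes, which is why a dataset can be large and carefully generated yet still unsuitable as classification evidence.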

4.2 Predicting the effects of variants on gene splicing

In addition to methods that enhance our ability to interpret the clinical significance of amino acid changes in protein sequences, other newly developed methods have become useful for predicting the effect of DNA variants on RNA splicing. Although variants that may alter RNA splicing account for a small proportion of VUSs (Figure 3), these variants are nevertheless responsible for a broad variety of hereditary diseases and must be carefully evaluated during clinical genetic testing (Kamps-Hughes et al., 2023; Karam et al., 2019). RNA sequencing is increasingly used alongside DNA sequencing to identify splice alterations directly; however, transcripts for some genes are poorly expressed in available biological specimens and are therefore not amenable to this parallel testing approach. It is therefore critical that laboratory geneticists have access to robust algorithms that can predict the effects of DNA variants on splicing.

Algorithms to identify splice sites were developed years ago, and some were co-opted to predict how specific novel DNA variants might impact splicing. Examples of these algorithms include MaxEntScan, NNSplice, and GENSCAN. Similar to algorithms designed to predict the effects of missense variants, most splice-predicting algorithms have shown moderate positive predictive values because they were trained on limited datasets but then used to predict the potential splicing effects of variants in all genes (Jian et al., 2014; Ohno et al., 2018). However, deep learning-based algorithms with superior performance, such as SpliceAI, are now available (Jaganathan et al., 2019). More recently, the Pangolin deep learning model has been developed, leveraging splicing data from closely related species to improve upon results from SpliceAI (Zeng & Li, 2022). We and others are working on using Pangolin to annotate exome and genome sequences in the next version of the gnomAD database (Karczewski et al., 2020).

4.3 Impact of ML-based evidence modeling on providing definitive clinical results

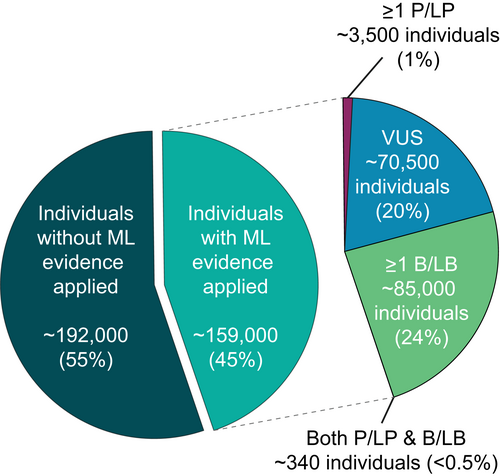

A genetic testing result of a VUS is not clinically actionable. Although in silico methods for predicting the effects of DNA sequence variants have improved, their ability to help laboratory geneticists reduce the number of VUSs reported and resolve uncertainty for patients has not been directly assessed. Therefore, to empirically examine the magnitude of the impact of Invitae's EMP system (Figure 4) on providing definitive answers to individuals referred for clinical genetic testing, we evaluated data from 350,695 patients who underwent diagnostic multi-gene panel testing over a six-month period for a variety of hereditary diseases. At least one evidence-generating model was available for 3087 genes, and ~159,000 patients (45%) received results that included EMP evidence for at least one reported variant (Figure 6). The application of these models contributed to the classification of at least one benign or likely benign (B/LB) variant in ~85,000 individuals (24%), one pathogenic or likely pathogenic (P/LP) variant in ~3500 individuals (1%), and both P/LP and B/LB variants in ~340 individuals (~0.1%) (Figure 6). Among individuals who had at least one variant with EMP evidence applied toward its interpretation, more than 50% received definitive (B/LB or P/LP) classifications.
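The percentages in this paragraph can be checked with simple arithmetic. The sketch below uses the rounded counts reported in the text and assumes that the three outcome groups (B/LB only, P/LP only, and both) do not overlap, which the text implies but does not state explicitly.

```python
total_patients = 350_695
with_emp_evidence = 159_000  # approximate count reported in the text
benign_only = 85_000         # at least one B/LB variant
pathogenic_only = 3_500      # one P/LP variant
both = 340                   # both P/LP and B/LB variants

# Assumption: the three groups are mutually exclusive, so they can be summed.
definitive = benign_only + pathogenic_only + both

print(f"{with_emp_evidence / total_patients:.0%}")  # 45%
print(f"{benign_only / total_patients:.0%}")        # 24%
print(f"{pathogenic_only / total_patients:.0%}")    # 1%
print(f"{definitive / with_emp_evidence:.0%}")      # 56%
```

Under that assumption, roughly 56% of the individuals with EMP evidence fall into a definitive group, consistent with the "more than 50%" stated above.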

5 DECIPHERING CHROMOSOMAL STRUCTURAL VARIANTS

Although most DNA changes associated with genetic disease are sequence variants, large deletions and duplications within chromosomes are also frequently pathogenic, particularly in prenatal and pediatric disorders. These structural variants can be detected using G-banded karyotyping, the mainstay of constitutional cytogenetic testing for nearly three decades, or high-resolution chromosomal microarrays, which became the standard about a decade ago (Miller et al., 2010). Because AI methods have been developed for visual pattern recognition in X-rays, computed tomography scans, and stained tissue slices, one might predict that these methods could also be applied to the analysis of chromosomal karyotypes for constitutional rearrangements or to the analysis of tumor tissue for chromosomal rearrangements. To date, AI appears to be used rarely, if at all, for routine analysis of chromosomal karyotypes for constitutional rearrangements (Tseng et al., 2023), although various efforts have been made to decipher chromosomal rearrangements in cancer specimens, such as from karyotyped hematologic malignancies (Bokhari et al., 2022; Cox et al., 2022; Vajen et al., 2022; Walter et al., 2021). As genomic analysis increasingly shifts toward molecular approaches, even for chromosomal disorders, AI methods are being developed to identify chromosomal deletions, duplications, and other types of rearrangements from NGS data directly (Lin et al., 2022; Popic et al., 2023).

6 MINING PUBLISHED LITERATURE OR ELECTRONIC HEALTH RECORDS

NLP has become ubiquitous in modern society, including in genomic medicine (Simmons et al., 2016). Important uses, such as mining published literature or electronic health records for clinical phenotype information, are expected to improve genetics research, innovation, and clinical diagnostics (Birgmeier et al., 2020; Luo et al., 2021; Son et al., 2018). One powerful application is within the context of rapid whole genome sequencing in the neonatal intensive care unit for children with suspected genetic disease. AI methods, including NLP-based text mining, have facilitated rapid identification of the molecular causes of disease in newborns (Clark et al., 2019; Owen et al., 2022; Peterson et al., 2023), significantly reducing the time to diagnosis and the associated costs. Because of the advantages of such AI-based approaches, they could conceivably be applied to screening of newborns to identify those at risk for severe disease (Kingsmore et al., 2022). Similarly, AI-based approaches for virtual screening for cardiovascular conditions, including familial hypercholesterolemia, have been explored by mining electronic health records for phenotypic information suggestive of elevated risk of disease (Pina et al., 2020; Safarova et al., 2016; Sheth et al., 2021). Finally, clinical laboratories use NLP to rapidly identify relevant evidence (e.g., data on genotypes or clinical phenotypes) from the published literature that helps to classify new variants observed in patients or resolve variants of uncertain significance. A proliferation of such examples of applied NLP in genomic medicine should be expected in the coming years.
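As a very rough illustration of the simplest layer of literature mining, the sketch below uses regular expressions to pull variant mentions written in HGVS-style notation out of free text. It is far less capable than the NLP systems cited above and recognizes only two narrow patterns (single-nucleotide coding substitutions and three-letter missense changes). The pattern, the function name, and the example sentence with its placeholder gene are assumptions for illustration.

```python
import re

# Simplified patterns; real HGVS nomenclature covers many more variant types.
HGVS_PATTERN = re.compile(
    r"\bc\.\d+[ACGT]>[ACGT]\b"                 # coding substitution such as c.100A>G
    r"|\bp\.[A-Z][a-z]{2}\d+[A-Z][a-z]{2}\b"   # missense change such as p.Lys34Glu
)

def find_variant_mentions(text):
    """Return all variant mentions matching the simplified patterns, in order."""
    return HGVS_PATTERN.findall(text)

# Hypothetical sentence of the kind found in a published case report.
sentence = "We identified GENE_X c.100A>G (p.Lys34Glu) in two unrelated probands."
print(find_variant_mentions(sentence))  # ['c.100A>G', 'p.Lys34Glu']
```

Systems used in practice must also normalize legacy nomenclature, resolve gene and transcript context, and link each mention to the phenotype information reported alongside it.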

7 CORRELATING GENOTYPES AND PHENOTYPES FOR CLINICAL DIAGNOSES

Diagnostic rates in hereditary disease, particularly involving neurodevelopmental disability, have improved substantially in recent years thanks to AI-based approaches. Clinical whole exome sequencing (WES) or whole genome sequencing requires robust tools to sift through the thousands of variants typically found in an individual and identify the one or few variants responsible for that individual's clinical condition. Roughly a dozen years ago, clinical interpretation of WES relied primarily on manual analysis due to a paucity of powerful software tools that could pull together diverse evidence types including genotype-disease annotations, relevant published medical literature, genome sequence annotation resources, and predictions from in silico modeling algorithms. Various sophisticated proprietary software platforms for analyzing WES have since been developed, many of which incorporate AI (e.g., Invitae's Moon™, Fabric GEM™, Illumina's Emedgene™, FindZebra) (De La Vega et al., 2021; Dragusin et al., 2013; Meng et al., 2023; O'Brien et al., 2022; Wright et al., 2023). These platforms have become faster and more accurate by incorporating improved genotype–phenotype annotations from the Human Phenotype Ontology, the Monarch Initiative, DisGeNET, and other research efforts (Köhler et al., 2021; Pilehvar et al., 2022; Piñero et al., 2020; Robinson et al., 2008, 2014; Shefchek et al., 2020); by refining the heuristics used to analyze WES (by mimicking the analysis processes used by experienced clinical laboratory geneticists); and by deploying NLP to mine published literature for phenotype and DNA variant information that could be relevant to identifying the molecular cause of an individual's clinical condition. In addition, global sharing of clinical WES data among clinical labs, clinicians, and researchers has accelerated the discovery of disease genes, further fueling improvements in diagnostic rates of WES (Boycott et al., 2022; Kirkpatrick et al., 2015; Osmond et al., 2022).
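
The phenotype-driven prioritization described in this section can be illustrated with a toy example. The sketch below is not the method used by any of the platforms named above. It simply ranks candidate genes by the Jaccard overlap between a patient's phenotype terms and the terms annotated to each gene. The gene names, the annotations, and the use of plain-text labels in place of ontology identifiers are all hypothetical choices made for illustration.

```python
# Toy phenotype-driven gene ranking (illustrative only).
# All gene names and annotations below are hypothetical.

PATIENT_TERMS = ["seizure", "global developmental delay", "microcephaly"]

GENE_TERMS = {
    "GENE_A": ["seizure", "global developmental delay", "microcephaly", "hypotonia"],
    "GENE_B": ["seizure", "ataxia"],
    "GENE_C": ["atrial septal defect"],
}


def rank_candidates(patient_terms, gene_to_terms):
    """Rank genes by Jaccard overlap between patient and gene phenotype terms."""
    patient = set(patient_terms)
    scores = []
    for gene, terms in gene_to_terms.items():
        gene_set = set(terms)
        union = patient | gene_set
        # Jaccard index: shared terms divided by all distinct terms.
        score = len(patient & gene_set) / len(union) if union else 0.0
        scores.append((gene, score))
    # Highest score first; ties are broken alphabetically by gene name.
    return sorted(scores, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    for gene, score in rank_candidates(PATIENT_TERMS, GENE_TERMS):
        print(f"{gene}\t{score:.2f}")
```

Production tools typically go well beyond set overlap, for example by using the ontology hierarchy so that closely related terms contribute partial credit, and by combining the phenotype score with variant-level evidence.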

8 PRE-TEST AND POST-TEST APPLICATIONS

As genomic information becomes more voluminous and complex (with NGS panels, exome or genome sequencing, family variant testing, follow-up testing to resolve VUSs, and the need to confirm results in certain circumstances, such as non-invasive prenatal screening), it is imperative that patients and clinicians have access to pre-test and post-test education and other information resources. A relatively new type of resource that has demonstrated significant benefits in this regard is the interactive AI-based chatbot (Nazareth, Hayward, et al., 2021; Siglen et al., 2022). A chatbot can be built either with simple rules to respond to expected questions or, on a more sophisticated level, on a generative large language model that composes free-text responses and whose performance can be improved over time.

The arrival of ChatGPT has understandably created widespread excitement and, at the same time, trepidation about what it may eventually mean for human participation in different work environments. In the healthcare context, once more carefully designed trials and validations are completed, interactive tools like ChatGPT are expected to become ubiquitous and powerful aids for identifying individuals who should consider genetic testing due to their elevated risk profiles (Heald et al., 2021; Nazareth, Nussbaum, et al., 2021); for exchanging information during the informed consent process (Schmidlen et al., 2019); for sharing information between patients and their relatives, healthcare providers, and others (Chavez-Yenter et al., 2021); and for providing education before and after genetic testing (Chavez-Yenter et al., 2021). For instance, a recent publication (Kurian et al., 2023) describes a disappointingly low rate of germline genetic testing (only 6.8%) among individuals with a cancer diagnosis, despite existing guidelines that recommend testing for such individuals. The authors recommend AI-supported chatbots as one approach to increasing rates of genetic testing in these patients. As chatbots become more sophisticated and natural (human-like), users may be unable to distinguish between chatbots and humans. Some have suggested that patients may even prefer chatbots because of their inherent ability to better modulate responses to a wide range of user needs and personalities, and because of the high quality of their interactions with users (Ayers et al., 2023). While additional research is still needed to understand in detail how physicians may benefit from the use of AI chatbots within their clinical genetics workflows (e.g., Smith et al., 2023), the breadth of applications for AI chatbots in healthcare will likely continue to grow. As we described recently (Snir et al., 2021), the successful integration of genomics into all areas of medicine will in part require the use of chatbots and other software tools, many of which will be developed using AI.

9 CONSIDERATIONS FOR USE OF AI IN CLINICAL LABORATORY GENOMICS

The deployment of AI models for clinical laboratory genomics presents unique opportunities, whether those models are designed for classification and reporting of sequence variants, chromosomal changes, or, perhaps in the future, other molecular changes (e.g., transcriptional, epigenetic, or proteome changes). There are several pitfalls, however, that need to be recognized. First, incorrect assumptions or inappropriate training sets used during the development or validation of AI models could lead to spurious results (Grimm et al., 2015). For example, if models that contribute evidence toward classifying variants rely too heavily on determining whether a variant is deleterious at the molecular level without having complementary evidence of pathogenicity (e.g., case reports, population frequency, evolutionary conservation), and the resulting classifications are submitted to ClinVar, future researchers may be at risk of using AI-corrupted training data to develop new AI models. This circularity may be mitigated by carefully recognizing which classifications in ClinVar are AI-supported and by using only high-quality inter-lab consensus submissions derived from multiple types of evidence.

A second challenge to the adoption of AI models is determining how much weight should be assigned to evidence generated from different models, and how that evidence should be considered in combination with other types of evidence during variant classification. Although some methods are being proposed to calibrate in silico models and determine the value of their outputs (Pejaver et al., 2022), integrating the full spectrum of evidence generated from increasingly diverse AI-based models into variant classification will require a systematic approach. One solution could be to develop a holistic and quantitative variant classification framework, for example by using probabilistic Bayesian inference that allows systematic assignment of weight to each type of evidence and combinations of evidence to output an overall probability of pathogenicity.
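
To make the idea of a quantitative framework concrete, the following is a minimal sketch of how Bayesian inference can combine evidence of different strengths into a single probability of pathogenicity. It is an illustration under stated assumptions, not the framework proposed in this article. The prior probability, the odds assigned to each evidence strength, and the classification thresholds are illustrative values chosen for the example, and independence between evidence items is assumed.

```python
from math import prod

# Illustrative assumptions (not taken from this article):
# prior probability of pathogenicity and odds per evidence strength.
PRIOR = 0.10
ODDS = {
    "supporting": 2.08,
    "moderate": 4.33,
    "strong": 18.7,
    "very_strong": 350.0,
}


def posterior_probability(pathogenic, benign=(), prior=PRIOR):
    """Combine evidence items into a posterior probability of pathogenicity.

    Pathogenic evidence multiplies the combined odds; benign evidence
    divides it. Evidence items are assumed to be independent.
    """
    combined = prod(ODDS[s] for s in pathogenic) / prod(ODDS[s] for s in benign)
    return combined * prior / ((combined - 1.0) * prior + 1.0)


def classify(probability):
    """Map a posterior probability to a class using illustrative thresholds."""
    if probability > 0.99:
        return "pathogenic"
    if probability >= 0.90:
        return "likely pathogenic"
    if probability < 0.001:
        return "benign"
    if probability < 0.10:
        return "likely benign"
    return "uncertain significance"


if __name__ == "__main__":
    p = posterior_probability(["strong", "moderate", "moderate"])
    print(f"{p:.3f} -> {classify(p)}")
```

In such a scheme, a calibrated AI model would contribute evidence at whatever strength its validation supports, so its output is weighed on the same scale as case reports, population frequency, and functional data rather than being treated as decisive on its own.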

A third challenge facing AI approaches to variant classification is that the use of genomic datasets with underrepresentation of individuals of non-Northern European ancestry could perpetuate inadequacies in the delivery of definitive variant classification for such groups. For example, it is well documented that individuals with some genetic ancestries receive more VUSs than others because of a paucity of population frequency data in public databases such as gnomAD (Appelbaum et al., 2022; Florentine et al., 2022). Because some genetic ancestry groups are better represented in public databases, they are also better represented in the data used for training and validating AI models for variant interpretation. With the development of any AI model for clinical laboratory genomics, it is necessary to exercise caution and monitor for potential reinforcement of genetic ancestry biases in AI-generated evidence. The deployment of AI models that are informed by biological principles and are agnostic to genetic ancestry should help to correct such biases and lead to more equity in variant classification.
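
One simple way to monitor for the disparity described above is to track the rate of VUS results by genetic ancestry group and flag groups whose rate is disproportionately high. The sketch below is a hypothetical illustration of that bookkeeping. The group labels, the record format, and the ratio threshold of 1.5 are assumptions made for the example and do not come from this article.

```python
from collections import Counter


def vus_rate_by_group(records):
    """Return the fraction of VUS classifications per ancestry group.

    `records` is an iterable of (group, classification) pairs.
    """
    totals, vus = Counter(), Counter()
    for group, classification in records:
        totals[group] += 1
        if classification == "VUS":
            vus[group] += 1
    return {group: vus[group] / totals[group] for group in totals}


def flag_disparity(rates, max_ratio=1.5):
    """List groups whose VUS rate exceeds the lowest group rate by max_ratio.

    The threshold is a hypothetical choice for illustration.
    """
    baseline = min(rates.values())
    if baseline == 0:
        return sorted(group for group, rate in rates.items() if rate > 0)
    return sorted(g for g, rate in rates.items() if rate / baseline > max_ratio)


if __name__ == "__main__":
    # Hypothetical records: (ancestry group, variant classification)
    records = (
        [("group_1", "VUS")] * 2 + [("group_1", "pathogenic")] * 8
        + [("group_2", "VUS")] * 5 + [("group_2", "pathogenic")] * 5
    )
    rates = vus_rate_by_group(records)
    print(rates, flag_disparity(rates))
```

Comparing such rates before and after a new AI model is introduced would show whether the model narrows or widens the gap between groups.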

10 CONCLUSIONS AND FUTURE DIRECTIONS

The number of known monogenic diseases has increased rapidly over the past decade and is expected to climb further. Importantly, the number of genetic diseases with available therapies and precision clinical management is also increasing (Bick et al., 2021). AI methods will continue to improve and be used to rapidly diagnose hereditary diseases, identify those who can benefit from available therapies, recognize those at risk of genetic disease, and fuel further discovery of novel disease genes.

AI-based resources will also become richer as genomics databases grow in both volume and quality. Some of this data accumulation will occur through basic research aimed at further characterizing the structure of the human genome (Liao et al., 2023), and through insights into less understood elements such as promoters, enhancers, alternative transcripts, functional non-coding regions, methylated sites, and heterochromatin regions. Tens of millions of new exome and genome sequences are expected to soon be added to public databases through international research efforts (Manolio et al., 2020). Importantly, these studies are aggregating genomic data from diverse populations around the world (Wang et al., 2022), thereby improving the representation of genetic ancestry groups and the accuracy of studies on genomic contributions to complex diseases and other clinical questions. Complementing this, phenomics research with electronic health records can reveal important gene–disease associations that may be translated into useful screening and diagnostic approaches to reduce disease burden (Hebbring, 2019; Linder et al., 2021; Movaghar et al., 2021; Robinson et al., 2018; Yang et al., 2022). In addition, large-scale population data from genome-wide association studies are increasingly being leveraged to identify polygenic risk for disease, and AI-based methods are being used to conduct these types of studies and develop risk scores (Nicholls et al., 2020; Steinfeldt et al., 2022). Together, often with the support of AI methods, these complementary advances in genomics and phenomics are expected to catalyze faster drug discovery for hereditary diseases (Alves et al., 2022; Boniolo et al., 2021; Visibelli et al., 2023) and, importantly, extend to groups historically underserved by clinical genomics.

Although this article has largely focused on AI applications in relation to hereditary disease, AI approaches for clinical laboratory genomics in relation to precision oncology likely hold equal promise for improving human health (Stenzinger et al., 2022). This topic is too vast in scope to be covered here and deserves its own article. In brief, however, AI may be used to investigate the complex landscape of somatic DNA variants detectable in tumors (e.g., through NGS-based cancer genome profiling). Applying powerful AI methods in this manner promises to uncover relationships between cancer risk or diagnosis and multi-omic data derived from DNA sequencing, RNA sequencing, epigenetic signatures of methylation, histology, radiology, and clinical observations (Espín-Pérez et al., 2022; Silvestri et al., 2023; Sun et al., 2023). It also promises to help in monitoring molecular residual disease at different points in an individual's cancer treatment journey (Chen, Zhang, et al., 2023) and in identifying novel biomarkers detectable by liquid biopsy. All of these applications would represent important advancements that are urgently needed in medical oncology.

AI methods are becoming urgently necessary as the use of genomic medicine expands rapidly across the world. However, it is worth emphasizing that AI at present still has a limited scope of use in clinical laboratory genomics due to continuously evolving genomic databases, a steady pace of discoveries of gene–disease relationships, and limited deployment for providing education and information (Luca et al., 2023). Expert clinicians and laboratory geneticists will therefore need to carefully oversee the emerging use of AI in genomic medicine and help other professionals incorporate genomic information appropriately for clinical management of their patients (Solomon, 2022). It is also important to note the opportunity cost of delaying the use of AI: delay would hinder diagnosis and treatment for millions of individuals who need conclusive genetic testing results, even though the data that could provide answers are available and waiting to be analyzed. Because the number of AI models and the areas of their potential use are expected to grow rapidly, even exponentially, it is important to standardize how they are used in healthcare. Key guiding principles for the scientific and clinical community need to be developed as genomic medicine evolves to become universal across healthcare specialties (Badal et al., 2023; Rajpurkar et al., 2022), and as the need for individuals to interact with their genomic information for different purposes throughout their lifespans becomes a reality.

AUTHOR CONTRIBUTIONS

All authors have reviewed, discussed, and agreed to their individual contributions to this manuscript. All authors contributed to conceptualizing, writing, editing, or reviewing the manuscript.

ACKNOWLEDGEMENTS

We thank Dr. Elaine Chen of Invitae for analyzing data for figures and Kerry Aradhya of Invitae for scientific editing.

CONFLICT OF INTEREST STATEMENT

All authors are employees and stockholders of Invitae.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.