The science of evidence synthesis in hematopoietic stem-cell transplantation: Meta-analysis and quality assessment

Abstract

A meta-analysis is a statistical analysis that combines the results of multiple scientific studies. After its introduction in the 1970s, meta-analysis significantly influenced decision making in many scientific disciplines, helping to establish evidence-based medicine and to resolve seemingly contradictory research outcomes. Since the first meta-analysis on autologous hematopoietic stem-cell transplantation (HSCT) was published in 1989, the implementation of the method into research and clinical guidance in HSCT has faced challenges specific to the field. Here, we take the opportunity provided by the recent fortieth anniversary of meta-analysis to reflect on the accomplishments, limitations, and developments in the field of research synthesis in HSCT by summarizing the main methodological features of meta-analysis and its extensions, and by exemplifying the power and evolution of evidence synthesis in HSCT.

Most results of scientific studies in hematopoietic stem-cell transplantation (HSCT) are summarized in narrative reviews culminating in expert consensus.1 However, the difficulty of identifying and summarizing evidence in a transparent and objective manner through narrative reviews and expert statements has become increasingly apparent in HSCT.1, 2

During the past few decades, scientifically rigorous systematic reviews and meta-analyses, carried out following formal protocols to ensure reproducibility and reduce bias, have become more prevalent in a range of fields.3, 4 Systematic reviews aim to provide a robust overview of the efficacy of an intervention, or of a problem or field of research and can be combined with quantitative meta-analyses to assess the magnitude of the outcome across relevant primary studies. While narrative reviews remain useful for exploring the development of ideas, they cannot accurately summarize results across studies.5, 6 Furthermore, narrative reviews and expert statements might underestimate existing heterogeneity of the evidence,5 which can be explored using meta-analysis.5-8

Since the first meta-analysis was conducted in the transplant setting almost 30 years ago, meta-analysis has become more widely accepted as a research synthesis tool in HSCT. However, most evidence synthesis in HSCT will be of low or moderate quality, given the relative scarcity of multiple randomized controlled trials (RCTs) on any specific intervention and of head-to-head comparisons. To account for such limitations, meta-analyses have in recent years been extended in several fields to comparisons across networks and to the use of patient-level data.9-12

Here, we highlight some of the main principles and characteristics of high-quality meta-analytic methodology. We also discuss the limitations, utility, and achievements of meta-analysis in HSCT and, as a case study, its role in outcome-specific clinical guidance.

LITERATURE AND QUESTION

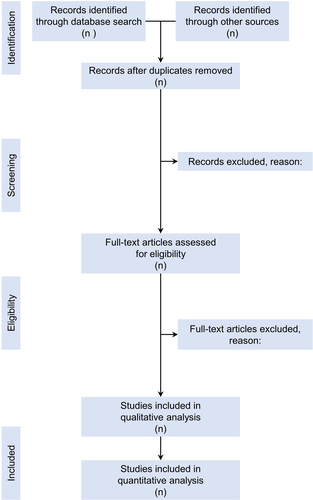

Systematic reviews aim to be transparent, reproducible, and updatable, and to address well-defined questions. The systematic review process includes the use of formal methodological guidelines for the literature search, study screening (including critical appraisal of eligible studies according to pre-defined criteria), and data extraction, along with detailed, transparent documentation of each step (Figure 1). Software, protocols, and reporting guidelines for systematic reviews and meta-analyses are well established in many fields; for example, PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) includes a checklist of items and a template flowchart for the presentation of a systematic review,13 which can be extended when network meta-analyses are applied.14

When the goal is to assess evidence for specific interventions, the focus of meta-analyses is primarily on accurately estimating an overall mean effect and may include identifying factors that modify that effect. This approach is exemplified by the PICO (population, intervention, comparator, outcome) framework for formulating questions, in which specification of these elements is central to the purpose of the synthesis. Question formulation using PICO has been adopted in a wide range of fields, including medicine and the social sciences.2, 15 Although moderating factors might be important for understanding how the overall effect is influenced by study or population characteristics, meta-analyses whose primary goal is to estimate the effects of a specific intervention accurately tend to emphasize the consequences of that intervention for a specific population. This type of meta-analysis must clearly and specifically delineate the population in question. Consequently, the results may apply only to that population, a restriction that is particularly relevant for syntheses in HSCT.

BIAS

If the systematic review identifies sufficient and appropriate quantitative data in the studies being summarized, a quality assessment can be conducted. The so-called “risk of bias” can be assessed using several tools. The Cochrane Collaboration's risk of bias tool is one of the most widespread, whereas others are designed specifically to assess the quality of non-randomized trials.16-18 Assessment using the Cochrane Collaboration's tool depends strongly on information about the design, conduct, analysis, and reporting of the trial, which determines whether each criterion is met and results in a summary description: low risk, high risk, or unclear risk of bias. Each outcome is assessed for six sources of bias (selection bias, performance bias, detection bias, attrition bias, reporting bias, and other bias), resulting in an overall judgment of risk of bias for each outcome.16

SYNTHESIS

Then, a meta-analysis can be conducted, extracting one or more outcomes in the form of effect sizes from each study. Effect sizes are designed to put the outcomes of interest of the different studies being combined on the same scale, using a suite of metrics that includes (among others) odds and risk ratios for dichotomous outcomes, hazard ratios for time-to-event outcomes, and standardized mean differences for continuous end points. Another useful effect size is the area under the curve (AUC), ie the probability that, if two patients are sampled from the population, one given treatment A and the other treatment B, the patient given A will survive longer than the one given B. If the patient surviving longer is labeled a success and the other a failure, a number needed to treat can be calculated. The smaller the magnitude of the number needed to treat, the greater the superiority of one treatment over the other.
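As a minimal sketch of how such effect sizes are obtained from study-level data, the functions below compute a log risk ratio and a log odds ratio with their standard errors from a 2x2 table, using the usual large-sample formulas. The function names and the example counts are hypothetical. The conversion from AUC to a number needed to treat assumes the formulation NNT = 1 / (2 × AUC − 1), which is one common way of making the "success vs failure" labeling above concrete; the text itself does not specify a formula.

```python
import math


def log_risk_ratio(events_trt, n_trt, events_ctl, n_ctl):
    """Log risk ratio and its standard error from a 2x2 table.

    Assumes no zero cells (no continuity correction is applied).
    """
    rr = (events_trt / n_trt) / (events_ctl / n_ctl)
    se = math.sqrt(1 / events_trt - 1 / n_trt + 1 / events_ctl - 1 / n_ctl)
    return math.log(rr), se


def log_odds_ratio(events_trt, n_trt, events_ctl, n_ctl):
    """Log odds ratio and its standard error from a 2x2 table (no zero cells)."""
    a, b = events_trt, n_trt - events_trt
    c, d = events_ctl, n_ctl - events_ctl
    return math.log((a * d) / (b * c)), math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)


def nnt_from_auc(auc):
    """Number needed to treat implied by an AUC.

    Assumption: NNT = 1 / (2 * AUC - 1), where 2 * AUC - 1 is the
    difference between the probabilities of "success" and "failure".
    """
    success_rate_difference = 2 * auc - 1
    if success_rate_difference == 0:
        raise ValueError("AUC of 0.5 means no difference; NNT is undefined")
    return 1 / success_rate_difference


# Hypothetical study: 10/100 events with treatment, 20/100 with control.
log_rr, se_rr = log_risk_ratio(10, 100, 20, 100)
log_or, se_or = log_odds_ratio(10, 100, 20, 100)
```

Working on the log scale is a design choice: ratios are approximately normally distributed after log transformation, which is what the pooling models rely on.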

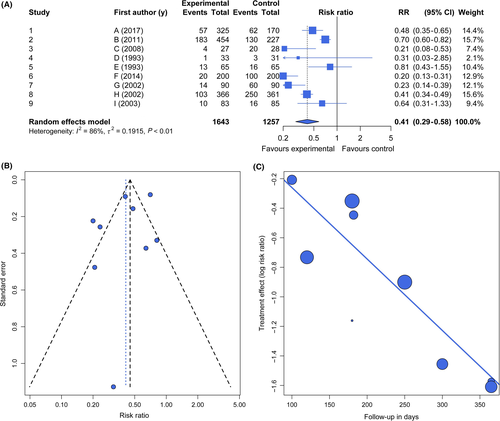

The effect sizes are then entered into a statistical model with the goal of assessing overall effects (Figure 2A). These models are based on an assumption of either a common effect (“fixed effect”) or random effects.8 The common-effect (or fixed-effect) model assumes that variation in effect sizes among studies is due to within-study (sampling) variance and that all studies share a common “true” effect. Inferences from common-effect models therefore apply only to the group of studies analyzed. The random-effects model assumes that, in addition to sampling variance, the true effects from different studies also differ from one another, representing a random sample of a population of outcomes. Thus, random-effects models include an extra variance component to account for between-study variance (heterogeneity) in addition to within-study variance, and their results apply more generally. In either case, the meta-analysis yields an estimate of the central tendency (the pooled effect) and its confidence limits.19, 15
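The difference between the two models can be illustrated with a short sketch of inverse-variance pooling. It assumes that effect sizes are on an additive scale (for example log risk ratios) and, for the random-effects model, uses the DerSimonian-Laird moment estimator of the between-study variance; the text does not prescribe a particular estimator, so this choice, like the function names and the two-study example, is an assumption made for illustration.

```python
import math


def pool_common(effects, ses):
    """Common-effect (fixed-effect) estimate: weights are 1 / SE^2."""
    weights = [1 / se ** 2 for se in ses]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1 / sum(weights))


def tau2_dersimonian_laird(effects, ses):
    """Moment estimate of the between-study variance, truncated at zero."""
    weights = [1 / se ** 2 for se in ses]
    estimate, _ = pool_common(effects, ses)
    q = sum(w * (y - estimate) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


def pool_random(effects, ses):
    """Random-effects estimate: weights are 1 / (SE^2 + tau^2)."""
    tau2 = tau2_dersimonian_laird(effects, ses)
    weights = [1 / (se ** 2 + tau2) for se in ses]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1 / sum(weights)), tau2


def ci95(estimate, se):
    """Normal-approximation 95% confidence limits."""
    return estimate - 1.96 * se, estimate + 1.96 * se


# Two hypothetical, strongly discordant studies on the log scale.
effects, ses = [0.0, 2.0], [0.5, 0.5]
common_est, common_se = pool_common(effects, ses)
random_est, random_se, tau2 = pool_random(effects, ses)
```

With discordant studies such as these, both models give the same point estimate, but the random-effects standard error is larger because the between-study variance is added to every study's sampling variance.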

In meta-analysis, three principal sources of heterogeneity can be distinguished: clinical baseline heterogeneity between patients from different studies; statistical heterogeneity, quantified on the outcome measurement scale; and heterogeneity from other sources, eg design-related heterogeneity.19 Here, we deal only with statistical heterogeneity and present the three measures most commonly used in meta-analysis. Q, which follows a chi-squared distribution with K − 1 degrees of freedom under the null hypothesis of no heterogeneity, is the weighted sum of squared differences between the study estimates and the fixed-effect estimate. It tends to increase with the number of studies (K) in the meta-analysis. In contrast to Q, the statistic I² was introduced by Higgins and Thompson20 as a measure that is independent of K and is one of the best-known measures. I² is interpreted as the percentage of variability in the treatment estimates that is attributable to heterogeneity between studies rather than to sampling error. It ranges from 0% (no heterogeneity) to 100% (total heterogeneity); values of 40% (moderate) and 70% (high) are used as thresholds when grading the evidence from the final model (see below). Last, τ² describes the underlying between-study variability. Its square root (τ) is measured in the same units as the outcome, and its estimate does not systematically increase with either the number of studies or the sample size.

Furthermore, we are often interested in comparing subgroups of studies in a meta-analysis to investigate reasons for existing heterogeneity. For example, if studies differ in the eligibility criteria for patients (according to disease, conditioning regimen, CMV serostatus, etc.), we might ask whether the treatment is more effective in some studies than in others.21 In this case, the factor that defines the subgroups is said to be an effect moderator. To address this question, we need to test for a treatment-subgroup interaction, ie whether the treatment effect is modified, or moderated, by subgroup membership.
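The heterogeneity measures and the subgroup comparison can be sketched as follows. Q is computed as the weighted sum of squared deviations from the fixed-effect estimate, and I² as (Q − (K − 1)) / Q, truncated at zero. The subgroup test shown is a between-subgroup Q statistic with common-effect pooling within each subgroup, which is one simple way of testing a treatment-subgroup interaction and is an assumption here, as are the function names and the example data.

```python
import math


def pooled_and_q(effects, ses):
    """Fixed-effect estimate, Cochran's Q, and the total weight."""
    weights = [1 / se ** 2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    return pooled, q, sum(weights)


def i_squared(q, k):
    """I^2 in percent for K studies; 0 when Q does not exceed K - 1."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - (k - 1)) / q) * 100


def q_between(subgroups):
    """Between-subgroup Q and its degrees of freedom.

    `subgroups` maps a subgroup label to (effects, ses). Each subgroup is
    pooled with a common-effect model; the subgroup estimates are then
    compared as if they were single studies. Under the null hypothesis of no
    moderation, the statistic is referred to a chi-squared distribution
    with (number of subgroups - 1) degrees of freedom.
    """
    estimates, group_ses = [], []
    for effects, ses in subgroups.values():
        pooled, _, total_weight = pooled_and_q(effects, ses)
        estimates.append(pooled)
        group_ses.append(math.sqrt(1 / total_weight))
    _, q_b, _ = pooled_and_q(estimates, group_ses)
    return q_b, len(subgroups) - 1


# Hypothetical example: two subgroups defined by conditioning regimen.
subgroups = {
    "regimen_1": ([0.0, 0.0], [0.5, 0.5]),
    "regimen_2": ([2.0, 2.0], [0.5, 0.5]),
}
q_b, df = q_between(subgroups)
```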

To identify the magnitude and sources of variation in effect size among studies, earlier studies relied on simple heterogeneity tests, whereas more recent work often uses meta-regression.22 Because a meta-regression model contains both fixed-effect and random-effects terms, it is also called a mixed-effects model. Most heterogeneity tests and meta-regressions weight studies by the precision of their effect estimates: larger studies with higher precision are weighted more heavily than smaller and/or more variable studies. Meta-regression is of crucial importance in HSCT because many studies differ in population, pre-transplant treatment, and follow-up, differences that can (in part) be adjusted for with this tool. Furthermore, quantifying heterogeneity is particularly important in HSCT, a clinical environment in which decisions are mainly formed by expert consensus.1
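A minimal sketch of a meta-regression with a single study-level moderator is given below. It is a weighted least-squares fit in which each study is weighted by the inverse of its sampling variance plus the between-study variance τ². The value of τ² is taken as an input and is assumed to have been estimated separately; the function name, the moderator (median follow-up in years), and the data are hypothetical.

```python
import math


def meta_regression(effects, ses, moderator, tau2=0.0):
    """Weighted least squares of effect size on one moderator.

    Weights are 1 / (SE^2 + tau2). With tau2 = 0 this is a fixed-effect
    meta-regression; with tau2 > 0 it is a mixed-effects model in which
    tau2 is treated as known.
    """
    weights = [1 / (se ** 2 + tau2) for se in ses]
    sw = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, moderator)) / sw
    y_bar = sum(w * y for w, y in zip(weights, effects)) / sw
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, moderator))
    sxy = sum(
        w * (x - x_bar) * (y - y_bar)
        for w, x, y in zip(weights, moderator, effects)
    )
    slope = sxy / sxx
    return {
        "intercept": y_bar - slope * x_bar,
        "slope": slope,
        "se_intercept": math.sqrt(1 / sw + x_bar ** 2 / sxx),
        "se_slope": math.sqrt(1 / sxx),
    }


# Hypothetical log hazard ratios and median follow-up (years) per study.
follow_up = [1.0, 2.0, 3.0, 4.0]
log_hr = [-0.15, -0.20, -0.25, -0.30]
ses = [0.20, 0.30, 0.25, 0.40]
fit = meta_regression(log_hr, ses, follow_up, tau2=0.01)
```

The slope estimates how the effect size changes per unit of the moderator; dividing it by its standard error gives an approximate z statistic for the moderator effect.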

In addition, tools have been developed for evaluating publication bias and power and for conducting sensitivity analyses (Figure 2B-C).23, 24 A funnel plot can be used to depict potential publication bias or small-study effects. It shows the estimated treatment effects on a suitable scale (usually on the x-axis) against a measure of their precision, usually the standard error, on an inverted y-axis, so that the smallest standard errors appear at the top.25 In the absence of small-study effects, treatment effects of large and small studies would scatter around a common average treatment effect. If no excessive between-study heterogeneity exists, smaller studies (with larger standard errors) would scatter more than larger studies. That is, the funnel plot would take the form of a triangle symmetric with respect to the average treatment effect, with broad variability for small, imprecise studies (at the bottom of the plot) and small dispersion for large, precise studies (at the top). Figure 2B shows such an example with nine fictional studies generated from a random-effects model with a risk ratio of 0.41.
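Funnel-plot asymmetry can also be quantified rather than judged by eye. The sketch below uses an Egger-type regression, a technique that the text does not name and that is shown here only as one common option: the standardized effect (effect divided by its standard error) is regressed on precision (one over the standard error), and an intercept far from zero suggests small-study effects. The function name and the example data are hypothetical, and no significance test is included.

```python
def egger_regression(effects, ses):
    """Ordinary least squares of (effect / SE) on (1 / SE).

    Returns (intercept, slope). The intercept measures funnel-plot
    asymmetry; the slope estimates the underlying effect.
    """
    z = [y / se for y, se in zip(effects, ses)]
    precision = [1 / se for se in ses]
    n = len(z)
    p_bar = sum(precision) / n
    z_bar = sum(z) / n
    sxx = sum((p - p_bar) ** 2 for p in precision)
    sxy = sum((p - p_bar) * (zi - z_bar) for p, zi in zip(precision, z))
    slope = sxy / sxx
    return z_bar - slope * p_bar, slope


# Hypothetical log risk ratios in which smaller studies (larger SE)
# report systematically weaker effects: effect = -0.9 + 1.5 * SE.
ses = [0.1, 0.2, 0.3, 0.4]
asymmetric = [-0.9 + 1.5 * se for se in ses]
intercept, slope = egger_regression(asymmetric, ses)
```

In this constructed example the intercept recovers the built-in asymmetry (1.5) and the slope recovers the underlying effect (−0.9); with real data both would be estimated with error, and such tests have low power when few studies are available.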

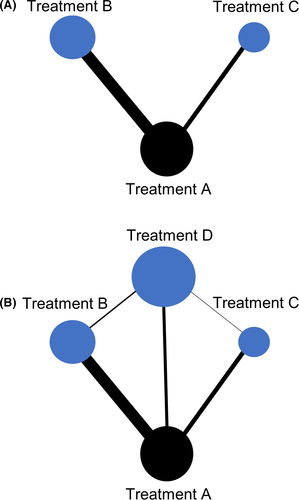

Conventional meta-analyses compare an intervention (which is assumed to be homogeneous across the studies included in the analysis) with a control, but disciplines such as HSCT often lack multiple studies investigating one intervention against the same control. Therefore, meta-analyses have been extended to evaluations across networks, the so-called network meta-analysis (NMA). The NMA is based on the idea that studies investigating treatment A vs control C and other studies evaluating treatment B vs control C provide indirect evidence for the comparison of A and B from the treatment differences A-C and B-C (Figure 3A-B). To limit the number of tests for both heterogeneity and inconsistency, a global test has been proposed whose statistic is the sum of a statistic for heterogeneity and a statistic for inconsistency, the latter representing the variability in treatment effect between direct and indirect comparisons at the meta-analytic level.
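The indirect comparison underlying an NMA can be sketched for the simplest network of three treatments. Assuming effects on an additive scale (for example log hazard ratios) and independent sets of studies, the indirect A vs B estimate is the difference between the A-C and B-C estimates, and its variance is the sum of their variances. Where direct A vs B evidence also exists, the difference between the direct and indirect estimates gives a simple measure of inconsistency. The function names and numbers are hypothetical, and this pairwise calculation is only a building block, not the global test mentioned above.

```python
import math


def indirect_comparison(d_ac, se_ac, d_bc, se_bc):
    """Indirect estimate of A vs B via the common comparator C."""
    return d_ac - d_bc, math.sqrt(se_ac ** 2 + se_bc ** 2)


def inconsistency_z(d_direct, se_direct, d_indirect, se_indirect):
    """z statistic for the difference between direct and indirect evidence."""
    difference = d_direct - d_indirect
    return difference / math.sqrt(se_direct ** 2 + se_indirect ** 2)


# Hypothetical pooled log hazard ratios against the common control C.
d_ab, se_ab = indirect_comparison(d_ac=-0.5, se_ac=0.3, d_bc=-0.2, se_bc=0.4)
z = inconsistency_z(d_direct=-0.1, se_direct=0.5, d_indirect=d_ab, se_indirect=se_ab)
```

Because the variances add, an indirect estimate is always less precise than either of the comparisons it is built from, which is one reason why conclusions from sparse networks need cautious interpretation.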

Finally, the use of patient-level data for meta-analysis instead of aggregate data (as described above) may facilitate standardization of analyses across studies and direct derivation of the information desired, independent of significance or how it was reported. Individual participant data may also have a longer follow-up time, more participants, and more outcomes than were considered in the original study publication.9 On the other hand, this approach may be resource intensive, because substantial time and costs are required to contact study authors, obtain their individual participant data, input and “clean” the provided individual participant data, resolve any data issues through dialog with the data providers, and generate a consistent data format across studies.9, 26 Recent approaches in clinical oncology have even analyzed individual patient data using network meta-analysis, which bears the risk that simplistic conclusions are drawn from such complex statistical constructions.27, 28

QUALITY OF EVIDENCE

Once a meta-analysis has been published, clinicians need to interpret its utility and quality. Two main frameworks are currently used in the design of most clinical practice guidelines: the Oxford University Centre for Evidence-Based Medicine (OCEBM) and the GRADE working group (Grading of Recommendations Assessment, Development and Evaluation). In the OCEBM framework, levels of evidence are arranged in a hierarchical manner. While this system is simple and easy to use, early hierarchies that placed randomized trials categorically above observational studies were criticized for being simplistic; for example, in HSCT, observational or even retrospective studies provide the only evidence regarding some diseases or treatments.1, 29 Schemes such as GRADE avoid this common objection by allowing evidence from observational studies with large effect sizes to be upgraded and evidence from RCTs to be downgraded for quality.30 The GRADE methodology characterizes the quality of a body of evidence based on study limitations (design, risk of bias), imprecision (confidence intervals too wide to favor any intervention), inconsistency (heterogeneity), indirectness (different population investigated), and publication bias (small-study effects, funnel plot). Furthermore, this approach is outcome-specific and can even be extended to network meta-analyses,31 which may make it advantageous for syntheses in HSCT.
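The bookkeeping behind a GRADE rating can be sketched as follows. GRADE judgments are qualitative and made per outcome by reviewers, so this is not an official algorithm; it only illustrates the logic described above under simple assumptions: evidence from randomized trials starts at "high" and evidence from observational studies at "low", each of the five domains can lower the rating by one or two levels, and upgrades (for example for a large effect) raise it. The function name and the numeric encoding are hypothetical.

```python
LEVELS = ["very low", "low", "moderate", "high"]
DOMAINS = {
    "study limitations",
    "imprecision",
    "inconsistency",
    "indirectness",
    "publication bias",
}


def grade_rating(randomized, downgrades, upgrades=0):
    """Return a quality level for one outcome.

    `downgrades` maps a GRADE domain to the number of levels deducted
    (0, 1, or 2); `upgrades` is the number of levels added.
    """
    unknown = set(downgrades) - DOMAINS
    if unknown:
        raise ValueError(f"unknown domain(s): {sorted(unknown)}")
    start = 3 if randomized else 1  # index into LEVELS
    index = start - sum(downgrades.values()) + upgrades
    return LEVELS[max(0, min(len(LEVELS) - 1, index))]


# Hypothetical outcome from randomized trials with serious inconsistency
# and serious imprecision.
rating = grade_rating(True, {"inconsistency": 1, "imprecision": 1})
```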

CASE STUDY IN HSCT

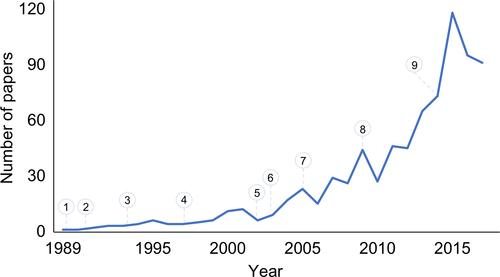

Meta-analysis was first adopted by HSCT clinicians some 30 years ago in an autologous transplant setting (Figure 4) and has had a significant impact on clinical decision making over time.32-35 Analyses in the autologous setting have some advantages: because most studies evaluate the same disease (ie, multiple myeloma [MM]), the potential for heterogeneity and indirectness is reduced. They therefore provide insights into the rationale, evolution, and challenges of meta-analyses in HSCT.

Several analyses evaluated the comparative efficacy of single, tandem autologous or autologous/reduced-intensity allogeneic HSCT or chemotherapy in newly diagnosed MM.36-39 Tandem in comparison with single autologous transplant showed similar overall survival while response rates were significantly higher after tandem transplant.37 Regarding tandem vs allogeneic transplant, higher rates of transplant-related mortality and response were found after allogeneic HSCT without improvement in survival.38, 39 Another analysis on high-dose therapy with single autologous HSCT vs chemotherapy indicated improvement in progression-free survival after HSCT while overall survival did not differ.36 Significant heterogeneity was present in all analyses. Quality of evidence regarding overall and progression-free survival of each analysis is low.

These results, together with the introduction of novel agents over the years, led to a recent conventional meta-analysis and network meta-analysis on autologous HSCT in MM,40 which found that high-dose single autologous HSCT was associated with superior progression-free survival compared with standard-dose therapy. Both tandem and single transplant with bortezomib, lenalidomide, and dexamethasone were superior to single transplant alone and standard-dose therapy, while no improvement in overall survival could be identified. Meta-regression indicated that longer follow-up was associated with a benefit in outcome, although this finding may be affected by effective treatment after relapse. Although the quality of evidence regarding overall survival is low, GRADE assessment yields moderate quality for progression-free survival, and the risk of bias in terms of the methodology of the network meta-analysis is also low. While these findings highlight the complex landscape of HSCT in MM and give the general suggestion that autologous HSCT remains the preferred therapy in transplant-eligible patients, they also provide researchers and clinicians with open questions and possible outcomes and factors of interest that need to be investigated in future HSCT studies to improve overall survival. This evolution of aggregating evidence in HSCT characterizes not only MM but also other areas such as graft-vs-host disease prophylaxis.41, 35, 42-44

With respect to these cases, most syntheses used binary outcome data (alive or dead), whereas not only whether an event occurred but also when it happened is of central interest to more recent investigators, HSCT clinicians, and patients.40, 44 This type of data is called time-to-event data, or survival data if the event of interest is death. Time to an event is a continuous quantity. However, in contrast to other continuous outcomes, time to an event typically cannot be observed for all participants because the maximum follow-up time in a study is limited. Patients in whom the event of interest did not occur during the follow-up period are called censored observations. Another important aspect of time-to-event data is competing events, eg time to either relapse or non-relapse mortality.45 Specific statistical methods for survival data have been developed and should be used in the analysis.46 A meta-analysis with survival time outcomes is typically based on the hazard ratio as the measure of treatment effect. As the hazard ratio and corresponding standard error are not always reported in publications, several methods exist to derive these quantities, eg from published survival curves.47, 48
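One of the simplest such derivations applies when a publication reports a hazard ratio with its confidence interval but no standard error. As a minimal sketch, assuming the interval was computed symmetrically on the log scale with a normal approximation, the standard error of the log hazard ratio is the width of the log-scale interval divided by twice the normal quantile (1.96 for a 95% interval). The function name and numbers are hypothetical; reconstruction from published survival curves is considerably more involved and is not shown.

```python
import math


def log_hr_from_ci(hr, lower, upper, z=1.96):
    """Log hazard ratio and its standard error from a reported CI.

    Assumes a two-sided interval of the form exp(log HR +/- z * SE).
    """
    if not 0 < lower < upper:
        raise ValueError("confidence limits must satisfy 0 < lower < upper")
    log_hr = math.log(hr)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return log_hr, se


def ci_is_log_symmetric(hr, lower, upper, tolerance=0.05):
    """Check that the reported HR sits near the midpoint of the log-scale CI.

    A large discrepancy suggests rounding, a typo, or an interval that was
    not computed on the log scale.
    """
    midpoint = (math.log(lower) + math.log(upper)) / 2
    return abs(math.log(hr) - midpoint) <= tolerance


# Hypothetical report: HR 0.50 (95% CI 0.25 to 1.00).
log_hr, se = log_hr_from_ci(0.50, 0.25, 1.00)
```

The resulting pair (log hazard ratio, standard error) is exactly what inverse-variance pooling requires as input.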

LIMITATIONS AND DEVELOPMENTS

Despite its current utility and future potential, meta-analysis has various limitations as a tool for research synthesis and for informing decisions in HSCT. Meta-analyses and systematic reviews can highlight areas in which evidence is deficient, but they cannot overcome these deficiencies—they are statistical and scientific techniques, not magical ones. However, although the existence of knowledge gaps limits the generality of conclusions that can be drawn from the existing literature, the ability of systematic reviews and meta-analyses to identify these gaps is a strength of these approaches because it directs future primary studies to the areas for which evidence is most needed.

Other challenges for meta-analyses and systematic reviews include publication bias and research bias, the latter describing the over- or under-representation of populations, which results in a biased view of the totality of evidence. Scientists may strongly suspect the presence of these issues, but although their magnitude can sometimes be estimated in a meta-analysis, they cannot be truly corrected in research syntheses.22, 49, 50 Similarly, a synthesis may be constrained by either selective or incomplete data reporting in primary publications. While many such limitations can be accounted for, or at least identified, using frameworks such as GRADE, these methods are often not applied rigorously, or are applied arbitrarily, in syntheses of HSCT or hematologic malignancy studies. This leads to less-well-justified methodologies that are sometimes inaccurately referred to as meta-analyses.16, 15, 51

Therefore, open-science practices emphasize full and unbiased access to code and scientific data, which is of long-standing importance and central to future progress in meta-analysis.52 Registration of planned studies can reduce selective reporting of outcomes and offer transparency of the study process (https://www.crd.york.ac.uk/PROSPERO/); publication of “registered reports”, in which the methods and proposed analyses for a study are peer-reviewed and published before the research is conducted, can reduce publication bias.53 By minimizing selective and poor reporting and advocating full access to the data and code associated with each analysis, open-science standards, including guidelines such as those in the EQUATOR Network (https://www.equator-network.org), can minimize bias.54 Small sample sizes, poor reporting, and differing follow-up are particularly acute problems in HSCT studies, especially when evidence is synthesized over a long time. A possible methodological solution is to use one of the various methods that have been developed for imputing or otherwise modeling missing data, for weighting, and for meta-regression.22, 55, 56

Last, evidence in HSCT will still mostly be built on findings from retrospective or single-center experience, given the general difficulty of conducting randomized trials in HSCT: cellular therapeutics have a narrow therapeutic window, and significant toxicities often accompany their efficacy.57 Nevertheless, randomized trials remain the gold standard for evidence-based clinical decision-making in HSCT. Thus, an increasing use of well-conducted meta-analyses that evaluate well-formulated clinical questions and include observational data on large populations from the European Blood and Marrow Transplantation Registry as well as the Center for International Blood and Marrow Transplant Research is strongly encouraged.58 Another means of overcoming the relative lack of randomized trials is the prospective collection of data regarding a specific intervention (matched-cohort analyses) or condition (case-control). If randomized trials on a specific well-defined question exist, syntheses may harness this evidence in an integrated manner. First, data from previous phase 2 trials on a specific intervention that was subsequently investigated in a phase 3 trial may be integrated in the final model. The inclusion of phase 2 findings evaluating different dosages in several intervention arms without further increasing the heterogeneity can be achieved by a cooperative approach using clinical and methodological expertise.21 Second, conventional syntheses are by nature retrospective, because the trials included are usually identified once they have been completed and the results reported. Investigators may instead register and conduct meta-analyses prospectively, identifying and evaluating trials and determining their eligibility for the meta-analysis before the results of any of those trials become known.15 Prospective meta-analyses can therefore help to overcome some of the recognized problems of retrospective meta-analyses by enabling hypotheses to be specified a priori, selection criteria to be applied prospectively, and intended analyses, including subgroup analyses, to be stated before the results of individual trials are known. This avoids potentially unreliable data-dependent emphasis on particular subgroups.59, 60

Collectively, meta-analysis can be a key tool for facilitating rapid progress in HSCT, especially in the wake of new cellular therapies and pre- and post-transplant treatments, by quantifying actual effects and identifying what is not yet known. Evidence synthesis in HSCT should be applied transparently and with methodological rigor, combining analyses across networks and controlling for moderators to capture clinical and analytic evidence in the most realistic manner. This will require collaboration from start to finish between statisticians, primary researchers, and clinicians.

SOFTWARE

The most suitable program for a meta-analysis will depend on the user's needs and preferences. Many programs are shareware or freeware, whereas others are commercially available.

Commercially available programs are: Comprehensive Meta-Analysis (https://www.meta-analysis.com/), STATA (https://www.stata.com/support/faqs/statistics/meta-analysis/), and SAS (http://www2.sas.com/proceedings/sugi27/p250-27.pdf); while RevMan (http://community.cochrane.org/help/tools-and-software/revman-5), WinBUGS (http://www.mrc-bsu.cam.ac.uk/software/bugs/), and R (https://www.r-project.org/; packages meta, metafor, netmeta) are freely available options.

CONFLICT OF INTEREST

All authors declare no conflict of interest related to this article.

ETHICS STATEMENT

The authors confirm that the ethical policies of the journal, as noted on the journal's author guidelines page, have been adhered to. No ethical approval was required as this is a review article with no original research data.

CLINICAL IMPLICATIONS

This article highlights the key methods, limitations and developments of evidence synthesis in general and in HSCT. Although most clinical decision making in HSCT is based on narrative evidence, this review supports the application of meta-analysis and network meta-analysis adhering to high-quality methodology to facilitate evidence-based practice in a rapidly evolving field.