A Practical Bearing Failure Detection Method Using a New Efficient Deep Network With the Knowledge Self-Adaptive Evolution

Abstract

Intelligent fault diagnosis technology based on the deep neural network has shown significant advancements in recent years. However, it is difficult and expensive to deploy a fault diagnosis neural network with a huge number of parameters to an embedded computing platform with limited hardware resources. To address this issue, a practical bearing failure detection method using a new efficient deep network with the knowledge self-adaptive evolution, named autonomous compression method based on network pruning and knowledge distillation (AMC-NPKD), is proposed in this paper. In the proposed method, the reinforcement learning technique based on the deep deterministic policy gradient (DDPG) is employed to iteratively prune the network’s structure. The knowledge distillation (K-D) process is employed to fine-tune the pruned network after each pruning iteration. The results based on two datasets demonstrate that the proposed method effectively optimizes the structure of fault diagnosis networks. The proposed AMC-NPKD method is meaningful for promoting the engineering development of the intelligent fault diagnosis technology.

1. Introduction

Condition monitoring and fault diagnosis have become an increasingly active research field in recent years [1]. Condition monitoring and fault diagnosis technology can determine when equipment needs maintenance and improvement [2], so that timely maintenance can be planned according to the monitoring results. This is crucial for improving the reliability and safety of the equipment [3]. Bearing fault diagnosis, in particular, has emerged as a prominent area of interest for researchers and has a rich developmental history [4]. Existing methods for bearing fault diagnosis can be broadly classified into three categories: classical signal processing algorithms, machine learning-based algorithms, and intelligent fault diagnosis algorithms that leverage deep learning [5].

Classical signal processing-based algorithms are among the earliest and most widely adopted methods for bearing fault diagnosis, and they rest on well-established mathematical principles [6]. Commonly used methods in this category include the fast Fourier transform (FFT) [7], the short-time Fourier transform (STFT) [8], spectral kurtosis analysis [9], the wavelet transform [10], (ensemble) empirical mode decomposition [11], the Lyapunov method [12], and the Hilbert–Huang transform (HHT) [13], among others. The primary technical approach is to analyze the monitoring signal in the time, frequency, or time-frequency domain, extract feature vectors that are sensitive to different fault types, and then classify the monitoring signals according to specific criteria.

With the advancements in machine learning technology, the machine learning-based algorithms for bearing fault diagnosis have shown significant progress [14]. The general approach of the machine learning-based algorithms involves extracting fault feature vectors through signal processing techniques and using machine learning methods to autonomously classify them in the feature space. Commonly used algorithms include expert systems [15, 16], the K-nearest neighbor (KNN) algorithm [17, 18], the decision tree [19, 20], the support vector machine [21, 22], the hidden Markov model [23, 24], and others. The process of feature extraction still relies on manual application of classical signal processing methods. Subsequently, a classifier based on machine learning techniques is constructed to achieve automatic signal classification.

In recent years, intelligent fault diagnosis algorithms based on deep learning have advanced significantly. A notable advantage of these algorithms is that they complete the fault diagnosis process “end-to-end,” eliminating the need for manual feature extraction and screening [25]. Typical intelligent fault diagnosis methods based on deep learning include artificial neural networks (ANN) [26, 27], the auto-encoder network [28], the one-dimensional convolutional neural network [29], the adaptive deep convolutional neural network (ADCNN) [30], the WDCNN [31], the multitask convolutional neural network (MCNN) [32], the deep belief network (DBN) [33], the long short-term memory (LSTM) recurrent neural network [34], the residual network (ResNet) [35], and the graph convolutional network (GCN) [36]. These methods can take the raw monitoring data directly as network input, and the network automatically performs both feature extraction and classification, without manually designed features or classification criteria.

Despite the significant advantages and broad application prospects of intelligent fault diagnosis methods, fault diagnosis networks face challenges such as large numbers of parameters, low computational efficiency, and high hardware requirements [37]. These issues greatly limit the practical application of intelligent fault diagnosis methods in industrial scenarios. Research on neural network model compression has made significant progress in the field of image processing. Typical network compression methods include network pruning (NP), parameter quantization, and K-D [38].

Zhang et al. proposed the NP method to compress the network, which removes redundant structures by pruning the network along various dimensions, such as channels [39], convolutional kernels [40], neurons [41], or kernel parameters. Ma et al. adopted parameter quantization to reduce the numerical resolution of the network parameters, converting 32-bit floating-point parameters to 8-bit or 4-bit low-precision representations [42, 43]; in certain tasks, this conversion does not compromise the final network performance. Prakosa et al. used K-D methods, in which a large-scale teacher network guides the training of a smaller student network [44, 45]: by reconstructing the loss function of the student network, knowledge from the teacher network is transferred to improve the performance of the smaller network. In the field of bearing fault diagnosis, only a few studies have applied network compression. Si et al. [46] adopted an NP method based on the Taylor expansion criterion to prune the VGG-16 network and used it to classify bearing fault data. Zhang et al. [47] and Shen et al. [48] employed K-D to guide the online training of a small fault diagnosis network, obtaining a lightweight student network for bearing fault diagnosis.
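As a concrete illustration of parameter quantization, the sketch below maps float32 weights to 8-bit integers with a single symmetric scale per tensor, which cuts storage by a factor of four. The symmetric per-tensor scheme and the helper names `quantize_symmetric` and `dequantize` are illustrative assumptions, not the specific methods of [42, 43].

```python
import numpy as np

def quantize_symmetric(w: np.ndarray, n_bits: int = 8):
    """Map float weights to signed integers in [-q_max, q_max] using one shared scale."""
    q_max = 2 ** (n_bits - 1) - 1             # 127 for 8 bits, 7 for 4 bits
    scale = float(np.abs(w).max()) / q_max    # largest magnitude maps to q_max
    if scale == 0.0:
        scale = 1.0                           # all-zero tensor: any scale is exact
    # int8 storage is used for any n_bits <= 8; 4-bit values would need packing to save more.
    q = np.clip(np.round(w / scale), -q_max, q_max).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float32 weights from the integer codes."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(16, 1, 64)).astype(np.float32)   # e.g. 16 kernels of length 64
q, scale = quantize_symmetric(w)
w_hat = dequantize(q, scale)
print(w.nbytes, "->", q.nbytes, "bytes")        # 4096 -> 1024 bytes
print("max abs error:", np.abs(w - w_hat).max())
```

The rounding error per weight is at most half the scale. Mixed-precision schemes choose a bit width per layer rather than one global value.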

However, current network model compression methods require manual intervention, which is time-consuming and laborious. To address this issue, the AMC-NPKD method is proposed in this paper. The proposed algorithm models the NP process as a Markov decision process and optimizes it with the DDPG algorithm. Following the AutoML method [49], an iterative NP algorithm is devised to progressively prune the original network. Furthermore, a fine-tuning step based on K-D is introduced to retrain the network after each pruning iteration, so that the performance of the pruned network structure can be fully exploited. The main contributions of this paper are as follows:

1. A novel and efficient bearing failure detection framework is introduced, leveraging a deep network with self-adaptive knowledge evolution. The framework tackles the challenge of high computational demands by employing convolutional kernel pruning and neuron pruning to compress both the convolutional layers (CLs) and the fully connected layers (FLs). The subsequent K-D process fine-tunes the pruned network, keeping the loss in performance small while substantially reducing model size and computational complexity. Experimental results show that the proposed method compresses the WDCNN network by more than 10×, significantly reducing its hardware resource requirements.

2. An iterative pruning strategy is integrated into the AMC-NPKD framework to further improve compression efficiency. It addresses the limitation of single-pass pruning by repeatedly pruning the network with the optimal strategy for its current state and then fine-tuning the pruned network. This iterative approach improves the compression ratio while helping the pruned network retain high classification accuracy, balancing model compression against performance retention and making the method well suited to resource-constrained environments.

3. K-D is applied after each pruning iteration to fine-tune the parameters of the pruned network, so that the compressed network achieves performance close to that of the original model. By recovering most of the accuracy lost through pruning, K-D enables higher compression ratios with little sacrifice in diagnostic performance.

The proposed method offers a practical solution to the challenge of deploying large-scale neural networks for bearing failure detection in resource-constrained environments. As the experimental results show, it substantially reduces model size and computational requirements while keeping the loss in diagnostic accuracy small (0.19 and 1.49 percentage points on the two datasets).

The remaining sections of the paper are organized as follows: Section 2 introduces the basic theory of reinforcement learning, NP, and K-D. Section 3 presents the proposed neural network compression method for fault diagnosis in bearings. Section 4 presents the experimental results, the comparative experiment, and the ablation studies of the proposed method. Finally, Section 5 concludes the paper.

2. Theory

In this section, the details of the key steps contained in the proposed method are presented. Initially, the basic principles of the DDPG algorithm are elucidated, encompassing the agent model and the optimization algorithm for network parameters. Subsequently, the process of NP is delineated, and the calculation method for network parameters and FLOPs is derived. Following this, the fundamental method and the process of K-D are demonstrated, and the loss function of the pruned network is redesigned to fine-tune the parameters. Upon the completion of pruning and fine-tuning the compressed network, the specifics of parameter quantization are expounded. Ultimately, the pertinent knowledge of FPGA in the context of neural network-accelerated computing is introduced.

2.1. DDPG

To achieve the autonomous pruning process of the network, we have designed an agent model consisting of an actor network and a critic network. The actor network is responsible for determining the pruning ratio for each layer of the network, while the critic network evaluates the pruning ratios provided by the actor network. In order to optimize the parameters of both the actor and critic networks, we have employed the DDPG algorithm. The following section provides a detailed explanation of the steps and specifics involved in designing the agent model.

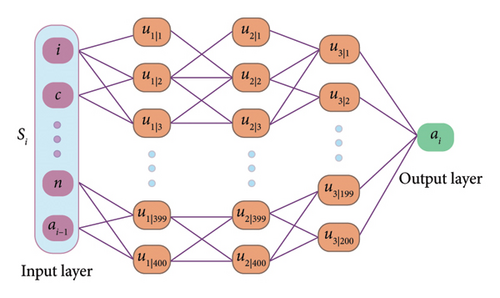

The objective of the actor network is to determine the optimal pruning ratio ai under the given state Si. The goal is to maximize the reward of the pruning process with ai and Si. The actor network takes the real-time state of the pruning network as input vectors and outputs the pruning ratio of each layer to guide the NP process. The actor network is structured as a multilayer fully connected network, as shown in Figure 1.

As shown in Figure 1, the input layer contains nine neurons, and the current state Si is used as the input vector of the actor network. The output of the actor network is the pruning ratio ai for the corresponding layer of the fault diagnosis network. The actor network in this paper contains three hidden layers with 400, 400, and 200 neurons, respectively.
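A minimal PyTorch sketch of an actor with this layout (9-dimensional state input, hidden layers of 400, 400, and 200 neurons, one pruning-ratio output) is given below. The class name `Actor`, the ReLU activations, the sigmoid that bounds the output, and the rescaling by a maximum ratio of 0.99 (the value listed in Table 4) are assumptions; the activation functions are not specified in the text.

```python
import torch
import torch.nn as nn

class Actor(nn.Module):
    """Maps the 9-dimensional layer state S_i to a pruning ratio a_i."""

    def __init__(self, state_dim: int = 9, max_ratio: float = 0.99):
        super().__init__()
        self.max_ratio = max_ratio
        self.net = nn.Sequential(
            nn.Linear(state_dim, 400), nn.ReLU(),
            nn.Linear(400, 400), nn.ReLU(),
            nn.Linear(400, 200), nn.ReLU(),
            nn.Linear(200, 1),
        )

    def forward(self, state: torch.Tensor) -> torch.Tensor:
        # Sigmoid squashes to (0, 1); scaling caps the ratio at max_ratio.
        return self.max_ratio * torch.sigmoid(self.net(state))

actor = Actor()
states = torch.rand(5, 9)      # a batch of 5 hypothetical layer states
ratios = actor(states)         # one pruning ratio per state
print(ratios.shape)            # torch.Size([5, 1])
```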

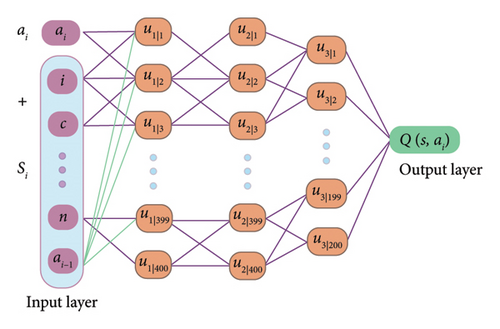

The critic network predicts the value of the action ai. Unlike the actor network, the critic network takes both the state and the pruning ratio as its input vector, and it outputs the expected value of the action ai. Like the actor network, the critic network is a multilayer fully connected network; its structure is illustrated in Figure 2.
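A matching PyTorch sketch of the critic follows. It assumes ReLU hidden layers with the same 400, 400, and 200 neurons given above for the actor, and that the 9-dimensional state and the scalar pruning ratio are simply concatenated at the input; the class name `Critic` and these choices are illustrative assumptions.

```python
import torch
import torch.nn as nn

class Critic(nn.Module):
    """Estimates Q(S_i, a_i): the expected value of pruning ratio a_i in state S_i."""

    def __init__(self, state_dim: int = 9, action_dim: int = 1):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(state_dim + action_dim, 400), nn.ReLU(),
            nn.Linear(400, 400), nn.ReLU(),
            nn.Linear(400, 200), nn.ReLU(),
            nn.Linear(200, 1),          # unbounded scalar value estimate
        )

    def forward(self, state: torch.Tensor, action: torch.Tensor) -> torch.Tensor:
        # The state and the pruning ratio are concatenated into one input vector.
        return self.net(torch.cat([state, action], dim=-1))

critic = Critic()
q = critic(torch.rand(5, 9), torch.rand(5, 1))
print(q.shape)   # torch.Size([5, 1])
```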

The proposed compression method prunes the 1-DCNN used for fault diagnosis with reinforcement learning. The environment for NP comprises the fault diagnosis network, the dataset, and the reward function.

The DDPG algorithm is employed to optimize the pruning process. The training procedure, built around the NP process, is shown in Algorithm 1.

| Algorithm 1: Pruning the kernels in each layer of the 1-DCNN using the DDPG algorithm |
|---|
| Randomly initialize the actor network $\mu(S \mid \theta^{\mu})$ and the critic network $Q(S, a \mid \theta^{Q})$ with weights $\theta^{\mu}$ and $\theta^{Q}$ |
| Initialize the target networks $\mu'$ and $Q'$ with weights $\theta^{\mu'} \leftarrow \theta^{\mu}$ and $\theta^{Q'} \leftarrow \theta^{Q}$ |
| Initialize the replay buffer $M$ |
| For episode = 1, ..., $N$ do |
| Initialize a random process $\Pi$ for action exploration |
| Receive the initial observation state $S_1$ |
| For $t = 1, \ldots, T$ do |
| Select the action $a_t = \mu(S_t \mid \theta^{\mu}) + \Pi_t$ according to the current policy and the exploration noise |
| Execute the action $a_t$, observe the reward $r_t$ and the new state $S_{t+1}$ |
| Store the transition $(S_t, a_t, r_t, S_{t+1})$ in the buffer $M$ |
| Sample a random mini-batch of $B$ transitions $(S_i, a_i, r_i, S_{i+1})$ from the buffer $M$ |
| Set $y_i = r_i + \gamma Q'\left(S_{i+1}, \mu'(S_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\right)$ |
| Update the critic by minimizing $L_{\text{critic}} = \frac{1}{B} \sum_i \left(y_i - Q(S_i, a_i \mid \theta^{Q})\right)^2$ |
| Update the actor by minimizing $L_{\text{actor}} = -\frac{1}{B} \sum_i Q\left(S_i, \mu(S_i \mid \theta^{\mu}) \mid \theta^{Q}\right)$ |
| Update the target networks with soft-update rate $\tau$: $\theta^{Q'} \leftarrow \tau \theta^{Q} + (1 - \tau) \theta^{Q'}$, $\theta^{\mu'} \leftarrow \tau \theta^{\mu} + (1 - \tau) \theta^{\mu'}$ |
| End for |
| End for |
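The inner loop of Algorithm 1 can be sketched in PyTorch as a single update step: compute the target y_i with the target networks, take one critic step on the mean-squared error, one actor step on the negated critic value, and then soft-update both target networks. This is a minimal illustration under stated assumptions: the small two-layer networks, γ = 0.99, τ = 0.01, the learning rates, and the random transitions used to fill the buffer are placeholders chosen for the example, not values from the paper; in AMC-NPKD the transitions would come from the pruning environment.

```python
import copy
import random
from collections import deque

import torch
import torch.nn as nn
import torch.nn.functional as F

STATE_DIM, GAMMA, TAU = 9, 0.99, 0.01   # illustrative values, not from the paper

def mlp(n_in: int, n_out: int) -> nn.Sequential:
    return nn.Sequential(nn.Linear(n_in, 64), nn.ReLU(), nn.Linear(64, n_out))

actor = nn.Sequential(mlp(STATE_DIM, 1), nn.Sigmoid())   # mu(S | theta_mu), ratio in (0, 1)
critic = mlp(STATE_DIM + 1, 1)                           # Q(S, a | theta_Q)
actor_target, critic_target = copy.deepcopy(actor), copy.deepcopy(critic)
actor_opt = torch.optim.Adam(actor.parameters(), lr=1e-4)
critic_opt = torch.optim.Adam(critic.parameters(), lr=1e-3)
replay = deque(maxlen=10_000)                            # replay buffer M

def soft_update(target: nn.Module, source: nn.Module, tau: float) -> None:
    # theta' <- tau * theta + (1 - tau) * theta'
    with torch.no_grad():
        for t, s in zip(target.parameters(), source.parameters()):
            t.mul_(1.0 - tau).add_(tau * s)

def ddpg_update(batch_size: int = 32) -> tuple[float, float]:
    batch = random.sample(list(replay), batch_size)
    s, a, r, s_next = (torch.stack(x) for x in zip(*batch))
    # Target y_i = r_i + gamma * Q'(S_{i+1}, mu'(S_{i+1}))
    with torch.no_grad():
        a_next = actor_target(s_next)
        y = r + GAMMA * critic_target(torch.cat([s_next, a_next], dim=-1))
    critic_loss = F.mse_loss(critic(torch.cat([s, a], dim=-1)), y)
    critic_opt.zero_grad()
    critic_loss.backward()
    critic_opt.step()
    # Actor loss -Q(S_i, mu(S_i)); only the actor's optimizer steps here.
    actor_loss = -critic(torch.cat([s, actor(s)], dim=-1)).mean()
    actor_opt.zero_grad()
    actor_loss.backward()
    actor_opt.step()
    soft_update(critic_target, critic, TAU)
    soft_update(actor_target, actor, TAU)
    return critic_loss.item(), actor_loss.item()

# Fill the buffer with random transitions (S_t, a_t, r_t, S_{t+1}) as a smoke test.
for _ in range(100):
    replay.append((torch.rand(STATE_DIM), torch.rand(1), torch.rand(1), torch.rand(STATE_DIM)))
print(ddpg_update())
```

Any gradients that the actor step leaves on the critic's parameters are cleared by `critic_opt.zero_grad()` before the next critic update, so the two optimizers do not interfere.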

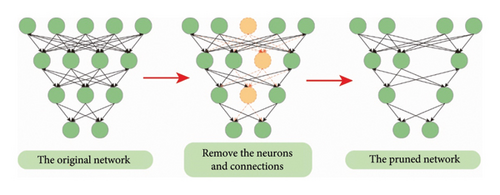

2.2. NP

The NP methods can be broadly categorized into two main types: structured pruning and unstructured pruning [50]. In the unstructured pruning method, less important neurons or parameters in the network are removed. Consequently, the connections between the pruned neurons and other neurons are disregarded during computation. However, accelerating the pruned network after the unstructured pruning process is challenging for existing typical hardware architectures. The deployment of the pruned network with fine-grained pruning methods necessitates specialized hardware platforms [51]. This requirement poses a hindrance to the widespread application of such pruning algorithms at a large scale.
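The practical difference between the two pruning types shows up in tensor shapes. In this NumPy sketch (random weights, 50% pruning, variable names chosen for illustration), unstructured pruning zeroes individual weights but keeps the original shape, so a speedup needs sparse-aware hardware, whereas structured pruning removes whole kernels and leaves a smaller dense tensor that ordinary hardware can run directly. Ranking kernels by their L1 norm anticipates the criterion described in this section.

```python
import numpy as np

rng = np.random.default_rng(0)
w = rng.normal(size=(8, 4, 3))           # conv weights: (kernels, in_channels, length)

# Unstructured pruning: zero out the 50% smallest-magnitude individual weights.
threshold = np.quantile(np.abs(w), 0.5)
w_unstructured = np.where(np.abs(w) >= threshold, w, 0.0)

# Structured pruning: drop whole kernels (slices along axis 0) with the smallest L1 norm.
l1 = np.abs(w).sum(axis=(1, 2))
keep = np.sort(np.argsort(l1)[len(l1) // 2:])   # indices of the 4 largest-L1 kernels
w_structured = w[keep]

print(w_unstructured.shape, (w_unstructured == 0).mean())   # same shape, about half zeros
print(w_structured.shape)                                   # (4, 4, 3): smaller and dense
```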

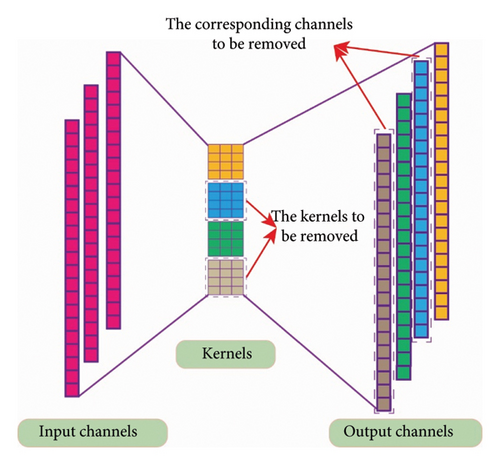

Structured pruning typically removes entire filters or layers; the subsequent feature maps change accordingly, but the network remains a regular dense model. As a result, the pruned network can still be accelerated on GPUs or other general-purpose hardware without relying on specialized platforms, which gives this type of pruning good versatility. Therefore, for the 1-DCNN, a compression method based on pruning convolutional kernels in the CLs and neurons in the FLs is adopted, and this article focuses on these two forms of pruning.

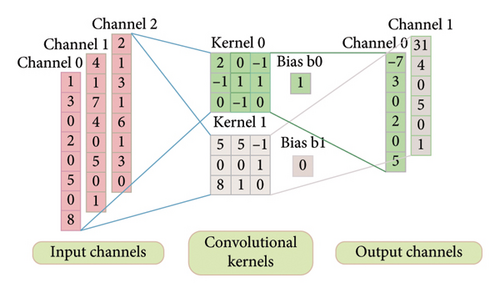

The importance of each convolutional kernel is measured by its L1 norm, i.e., the sum of the absolute values of its weights: the larger the L1 norm, the more important the kernel. During pruning, the kernels with the smallest importance values are removed. Because each kernel produces one output channel, removing kernels reduces the number of output channels of the layer by the same amount, and the input channels of the following layer must be reduced to match. The schematic diagram of the convolutional kernel pruning process is shown in Figure 3.
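A minimal PyTorch sketch of L1-norm kernel pruning for two consecutive `nn.Conv1d` layers is shown below. The helper name `prune_conv_pair`, the rounding rule for the number of kept kernels, and the restriction to plain convolutions (groups = 1, no batch normalization) are assumptions; a batch-normalization layer between the two convolutions would need the same index selection.

```python
import torch
import torch.nn as nn

def prune_conv_pair(conv: nn.Conv1d, next_conv: nn.Conv1d, ratio: float):
    """Remove the `ratio` fraction of kernels in `conv` with the smallest L1 norm,
    and drop the matching input channels of `next_conv`."""
    n_keep = max(1, int(round(conv.out_channels * (1.0 - ratio))))
    l1 = conv.weight.detach().abs().sum(dim=(1, 2))           # one L1 norm per kernel
    keep = torch.argsort(l1, descending=True)[:n_keep].sort().values

    new_conv = nn.Conv1d(conv.in_channels, n_keep, conv.kernel_size,
                         stride=conv.stride, padding=conv.padding,
                         bias=conv.bias is not None)
    new_next = nn.Conv1d(n_keep, next_conv.out_channels, next_conv.kernel_size,
                         stride=next_conv.stride, padding=next_conv.padding,
                         bias=next_conv.bias is not None)
    with torch.no_grad():
        new_conv.weight.copy_(conv.weight[keep])               # surviving kernels
        if conv.bias is not None:
            new_conv.bias.copy_(conv.bias[keep])
        new_next.weight.copy_(next_conv.weight[:, keep])       # input channels must match
        if next_conv.bias is not None:
            new_next.bias.copy_(next_conv.bias)
    return new_conv, new_next

conv2 = nn.Conv1d(16, 32, 3, padding=1)
conv3 = nn.Conv1d(32, 64, 3, padding=1)
conv2_p, conv3_p = prune_conv_pair(conv2, conv3, ratio=0.3)    # 32 -> 22 kernels
x = torch.randn(4, 16, 128)
y = conv3_p(conv2_p(x))
print(conv2_p.out_channels, y.shape)   # 22 torch.Size([4, 64, 128])
```

Keeping the surviving kernels in their original order (the `.sort()` call) is not required for correctness but makes the pruned layer easier to compare with the original one.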

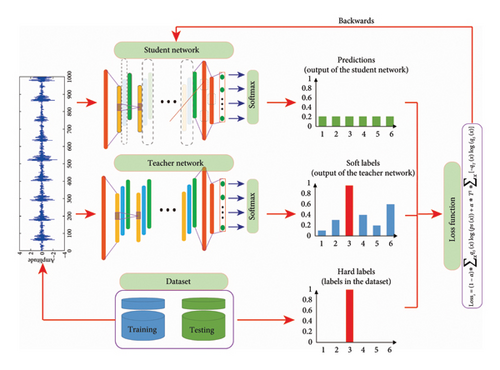

2.3. K-D

After pruning the convolutional kernels or neurons in the network, the performance of the network will be somewhat affected. This is due to the alterations in the structure and parameters of the network resulting from the pruning process. To enhance the performance of the pruned network, the K-D process is introduced to fine-tune the parameters of the pruned network in each iteration. The fundamental process of K-D is illustrated in Figure 5.
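The exact form of the K-D loss is not given in this section. A widely used form (Hinton-style distillation) combines cross-entropy on the hard labels with a temperature-softened KL divergence between the teacher's soft labels and the student's outputs. The sketch below implements that form; the function name `distillation_loss`, the temperature of 4.0, and the weight alpha = 0.5 are assumptions, while the batch of 40 and the 8 classes follow Table 4 and the CWRU setup.

```python
import torch
import torch.nn.functional as F

def distillation_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.5):
    """alpha * CE(student, hard labels) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    hard = F.cross_entropy(student_logits, labels)           # hard labels as class indices
    soft = F.kl_div(
        F.log_softmax(student_logits / temperature, dim=1),  # student log-probabilities
        F.softmax(teacher_logits / temperature, dim=1),      # teacher soft labels
        reduction="batchmean",
    ) * temperature ** 2        # keeps soft-target gradients on a scale comparable to CE
    return alpha * hard + (1.0 - alpha) * soft

torch.manual_seed(0)
teacher_logits = torch.randn(40, 8)                    # frozen teacher outputs (placeholder)
student_logits = torch.randn(40, 8, requires_grad=True)
labels = torch.randint(0, 8, (40,))
loss = distillation_loss(student_logits, teacher_logits, labels)
loss.backward()                                        # only the student receives gradients
print(loss.item())
```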

2.4. The Calculation Method of the FLOPs and the Amount of the Parameters

When deploying the network on an embedded hardware platform, the primary considerations are the storage requirements and the number of FLOPs of the network. During inference on the hardware platform, fewer network parameters require less memory, and fewer FLOPs lead to faster inference and better real-time performance. These two metrics are therefore commonly used to evaluate network compression algorithms, and this section briefly introduces how the number of parameters and FLOPs of the network are calculated. The basic structure of a one-dimensional convolutional layer is illustrated in Figure 6.
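The sketch below applies the standard per-layer formulas to the network shapes listed in Table 5. It counts one multiply-accumulate (MAC) as one FLOP, includes biases in the parameter count, and ignores pooling and activations; these conventions are assumptions, and because the paper's own convention (Figure 6) is not reproduced in the text, the totals need not match Table 3 or Table 5 exactly. Judging from Table 5, the sixth entry of each shape always equals 8 times the number of CL5 kernels (for example, 47 × 8 = 376), so the sketch treats it as the flattened feature size. The function names are hypothetical.

```python
def conv1d_cost(c_in: int, c_out: int, k: int, l_out: int) -> tuple[int, int]:
    """Parameters and MACs of a 1-D convolution with bias."""
    params = c_out * c_in * k + c_out           # weights + one bias per kernel
    macs = l_out * c_out * c_in * k             # one MAC per weight per output position
    return params, macs

def linear_cost(n_in: int, n_out: int) -> tuple[int, int]:
    """Parameters and MACs of a fully connected layer with bias."""
    return n_in * n_out + n_out, n_in * n_out

def network_cost(shape, kernel_sizes=(64, 3, 3, 3, 3),
                 conv_out_lens=(256, 128, 64, 32, 16)) -> tuple[int, int]:
    """shape = [c1, c2, c3, c4, c5, flatten_dim, fc_neurons, n_classes], as in Table 5."""
    *convs, flat, fc, n_classes = shape
    total_params = total_macs = 0
    c_in = 1                                     # single-channel vibration input
    for c_out, k, l_out in zip(convs, kernel_sizes, conv_out_lens):
        p, m = conv1d_cost(c_in, c_out, k, l_out)
        total_params, total_macs = total_params + p, total_macs + m
        c_in = c_out
    for n_in, n_out in ((flat, fc), (fc, n_classes)):
        p, m = linear_cost(n_in, n_out)
        total_params, total_macs = total_params + p, total_macs + m
    return total_params, total_macs

orig = network_cost([16, 32, 64, 64, 64, 512, 200, 8])
pruned = network_cost([1, 5, 4, 5, 10, 80, 10, 8])
print(orig, pruned)
print("FLOPs reduction: %.2f%%" % (100 * (1 - pruned[1] / orig[1])))
```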

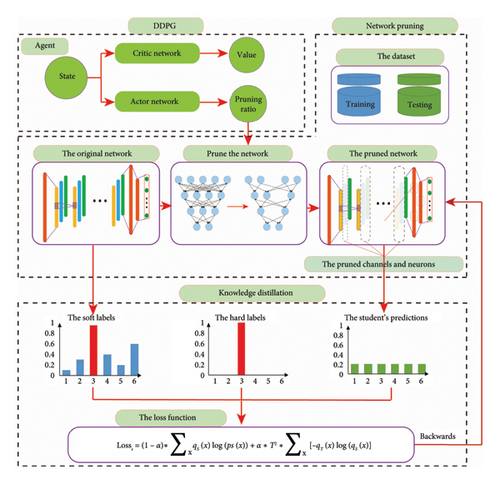

3. Methodology

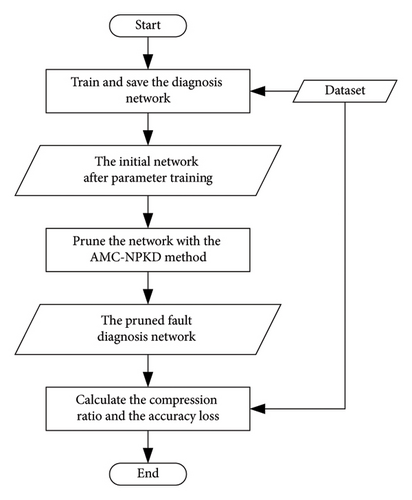

To address the poor real-time performance of deep neural networks, NP and K-D are employed to compress the pretrained network. To minimize manual effort during compression, the DDPG algorithm is used to automate the pruning operation. The network, the dataset, and the pruning process together form the environment. The information of each layer (number of kernels, kernel size, and padding) is treated as the state, and the pruning ratio of each layer is treated as the action. An iterative pruning strategy is adopted to enhance the overall compression rate of the network: after each round of pruning, the K-D process fine-tunes the pruned network, which then serves as the starting point of the subsequent pruning round. The flowchart of the proposed method is depicted in Figure 7.

The proposed method can be summarized as follows:

Firstly, the agent is built, consisting of an actor network and a critic network. The actor network takes the state information of the network in the pruning environment as input and outputs the pruning ratio for each layer. The critic network evaluates the value of the pruning ratio, taking the state information and the pruning ratio as inputs. Both the actor and critic networks are implemented as multilayer fully connected networks.

Secondly, the network is pruned according to the given pruning ratios. The focus is on the convolutional neural networks commonly used for bearing fault diagnosis. Structured pruning is applied to the CLs in the dimension of convolutional kernels and to the FLs in the dimension of neurons.

Thirdly, the pruned network is fine-tuned using the K-D method. This step aims to further optimize the parameters of the pruned network and compensate for the loss of accuracy caused by the pruning process. The original network serves as the teacher network, with its outputs used as soft labels. The pruned network in each iteration is treated as the student network. The one-hot labels in the dataset are considered hard labels. The loss function of the student network is reconstructed based on the hard labels, soft labels, and outputs of the student network. Back-propagation is then performed to optimize the student network.

Finally, the pruning and fine-tuning process is driven by the DDPG algorithm. The entire process is treated as a Markov decision process, and an environment is built that encompasses network pruning, fine-tuning, accuracy testing, and network analysis. A reward function that accounts for the accuracy and parameter scale of the pruned network is established to guide the NP process, and with this reward and DDPG, the optimal pruning strategy for each iteration is determined. The pruned network is fine-tuned with K-D and becomes the original network for the next iteration. The pruning and fine-tuning process is repeated until the network achieves a balance between parameter scale and performance.
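The outer loop described in these four steps can be summarized in plain Python. In this sketch, `policy` stands in for the trained DDPG actor and `fine_tune` for pruning the weights plus K-D fine-tuning; both, the toy policy that halves every layer wider than four units, and the early stop once the shape stops changing are hypothetical (Table 5 reports ten iterations, with the shape unchanged from iteration 4 on).

```python
from typing import Callable, List

Shape = List[int]

def iterative_compression(shape: Shape,
                          policy: Callable[[Shape], List[float]],
                          fine_tune: Callable[[Shape], None],
                          max_iters: int = 10) -> List[Shape]:
    """Prune -> fine-tune with K-D -> repeat; returns the shape after each iteration."""
    history = [shape]
    for _ in range(max_iters):
        ratios = policy(shape)                                  # one pruning ratio per layer
        new_shape = [max(1, int(c * (1.0 - r))) for c, r in zip(shape, ratios)]
        if new_shape == shape:                                  # nothing pruned: stop early
            break
        fine_tune(new_shape)                                    # student distilled from teacher
        shape = new_shape                                       # becomes the next "original"
        history.append(shape)
    return history

def toy_policy(shape: Shape) -> List[float]:
    # Hypothetical stand-in for the DDPG actor: halve every layer wider than 4 units.
    return [0.5 if c > 4 else 0.0 for c in shape]

def no_op_fine_tune(shape: Shape) -> None:
    # Hypothetical placeholder for pruning the weights and running K-D.
    pass

# Prunable widths only (CL1-CL5 kernels and FL2 neurons); the output layer is never pruned.
for it, s in enumerate(iterative_compression([16, 32, 64, 64, 64, 200], toy_policy, no_op_fine_tune)):
    print(it, s)
```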

4. Experiment Results and Discussion

To validate the effectiveness of the proposed AMC-NPKD method, experiments are conducted on two test benches with distinct structures. The detailed experimental flowchart is shown in Figure 8. The experiments are implemented with the PyTorch deep learning framework, using JetBrains PyCharm Community Edition 2019 as the software environment. The hardware consists of an Intel Core i3-8100 CPU, an NVIDIA RTX 3060 GPU, 32 GB of memory, and a 2 TB hard drive.

4.1. Results on the CWRU Dataset

4.1.1. The Dataset Description

In this experiment, the dataset from the Case Western Reserve University (CWRU) Bearing Data Center [52] is used for testing. The test rig used to generate the bearing fault dataset is depicted in Figure 9. The dataset comprises monitoring data from four bearing states: healthy, inner race fault, ball fault, and outer race fault. The data were collected on a test rig driven by a 2-hp Reliance Electric motor, and vibration data were recorded under motor loads of 0 to 3 hp.

For the experiment, monitoring data with different health states and running speeds were used; the data files are listed in Table 2. There are 8 classes of monitoring data for network training and testing, labeled 0 to 7, and each sample has a length of 2048. For each class, 4000 samples are used for training and 1000 samples for testing in the K-D process, giving a total of 40,000 samples (8 × 5000) for training and testing during compression.

| Running speed (rpm) | Health | Inner race fault | Ball fault | Outer race fault |
|---|---|---|---|---|
| 1797 | 97.mat | 105.mat | 118.mat | 130.mat |
| 1730 | 100.mat | 108.mat | 121.mat | 133.mat |
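Table 2 lists only the source files, and the text does not say how the 5000 samples per class were cut from them. Because 5000 non-overlapping 2048-point windows would require more than ten million points per class, overlapping windows are assumed in this NumPy sketch. The random placeholder record, the 80/20 split of the record before windowing (so that training and test windows never overlap), and the `segment` helper are all hypothetical.

```python
import numpy as np

def segment(signal: np.ndarray, length: int = 2048, n_samples: int = 1000) -> np.ndarray:
    """Cut n_samples evenly spaced (overlapping) windows of `length` points."""
    if signal.size < length:
        raise ValueError("record is shorter than one sample")
    starts = np.linspace(0, signal.size - length, n_samples).astype(int)
    return np.stack([signal[s:s + length] for s in starts])

record = np.random.default_rng(0).normal(size=120_000)   # placeholder for one class's record
split = int(0.8 * record.size)          # split first so no window straddles train and test
train_set = segment(record[:split], n_samples=4000)
test_set = segment(record[split:], n_samples=1000)
print(train_set.shape, test_set.shape)  # (4000, 2048) (1000, 2048)
```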

4.1.2. The Structure of the Network to be Pruned

In the field of bearing fault diagnosis, vibration signals are commonly used for condition monitoring. To simplify the diagnosis process, a 1-DCNN is adopted to process the raw time-domain vibration signals directly. Therefore, this experiment prunes the 1-DCNN (WDCNN) proposed in our previous work [31] to verify the effectiveness of the proposed AMC-NPKD method. The structure and details of the original network are shown in Table 3.

| Layers | Kernel size/stride | Kernel number | Output size | Padding |
|---|---|---|---|---|
| CL1 | 64 × 1/8 × 1 | 16 | 256 × 16 | Yes |
| PL1 | 2 × 1/2 × 1 | 16 | 128 × 16 | No |
| CL2 | 3 × 1/1 × 1 | 32 | 128 × 32 | Yes |
| PL2 | 2 × 1/2 × 1 | 32 | 64 × 32 | No |
| CL3 | 3 × 1/1 × 1 | 64 | 64 × 64 | Yes |
| PL3 | 2 × 1/2 × 1 | 64 | 32 × 64 | No |
| CL4 | 3 × 1/1 × 1 | 64 | 32 × 64 | Yes |
| PL4 | 2 × 1/2 × 1 | 64 | 16 × 64 | No |
| CL5 | 3 × 1/1 × 1 | 64 | 16 × 64 | Yes |
| PL5 | 2 × 1/2 × 1 | 64 | 8 × 64 | No |
| FL1 | / | / | 512 × 1 | / |
| FL2 | / | / | 200 × 1 | / |
| Output | / | / | 8 | / |

As Table 3 shows, the WDCNN consists of 5 CLs and 2 FLs. The convolutional kernels in the first layer have a length of 64, and the two FLs contain 512 and 200 neurons, respectively. According to the calculation of parameters and FLOPs, this WDCNN has 273.7 K parameters and 1648.6 K FLOPs.
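A PyTorch sketch of a network following the layer list in Table 3 is given below. Activation and normalization layers are not listed in the table, so ReLU is assumed and normalization is omitted; the CL1 padding of 28 is chosen so that a 2048-point input yields the listed 256-point output. Since the FL1 entries in Table 5 always equal 8 times the number of CL5 kernels, FL1 is treated here as the flattened feature vector. The class name `WDCNNSketch` is hypothetical, and this is not the authors' implementation.

```python
import torch
import torch.nn as nn

class WDCNNSketch(nn.Module):
    """1-D CNN following the layer list in Table 3 (activations and padding assumed)."""

    def __init__(self, widths=(16, 32, 64, 64, 64), fc=200, n_classes=8):
        super().__init__()
        layers, c_in = [], 1
        for i, c_out in enumerate(widths):
            # CL1: 64-point kernels, stride 8; padding 28 gives the listed 256-point output.
            k, stride, pad = (64, 8, 28) if i == 0 else (3, 1, 1)
            layers += [nn.Conv1d(c_in, c_out, k, stride=stride, padding=pad),
                       nn.ReLU(),
                       nn.MaxPool1d(kernel_size=2, stride=2)]      # PL1-PL5 halve the length
            c_in = c_out
        self.features = nn.Sequential(*layers)
        self.classifier = nn.Sequential(
            nn.Flatten(),                                   # 8 positions x widths[-1] channels
            nn.Linear(8 * widths[-1], fc), nn.ReLU(),
            nn.Linear(fc, n_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))

x = torch.randn(40, 1, 2048)                       # batch of 40 raw 2048-point signals
model = WDCNNSketch()
print(model.features(x).shape)                     # torch.Size([40, 64, 8])
print(model(x).shape)                              # torch.Size([40, 8])

pruned = WDCNNSketch(widths=(1, 5, 4, 5, 10), fc=10)   # iteration-4 shape from Table 5
print(pruned(x).shape)                             # torch.Size([40, 8])
```

Passing the widths of any row of Table 5 rebuilds the corresponding pruned architecture; copying the surviving weights into it is a separate step.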

4.1.3. The Results on the CWRU Dataset

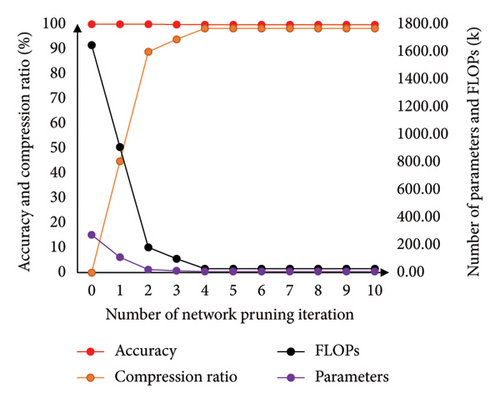

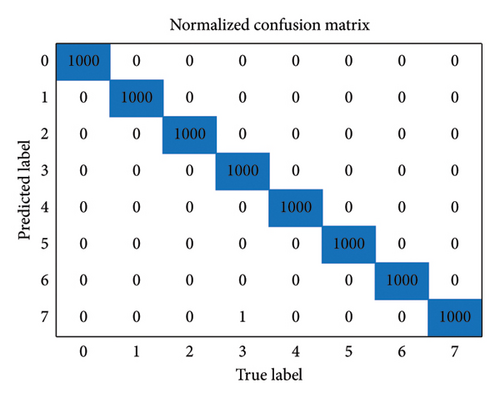

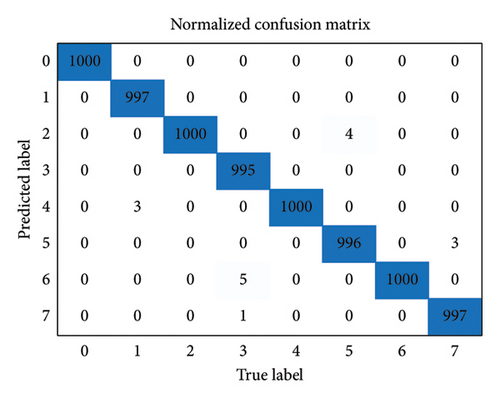

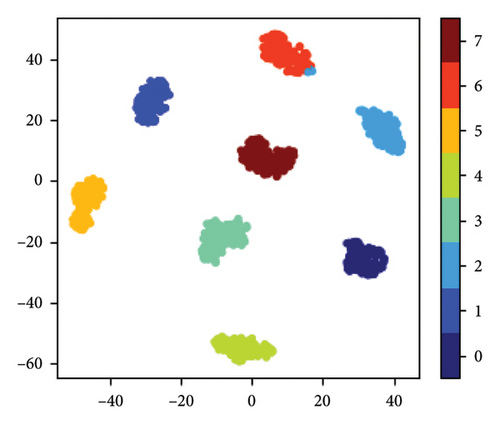

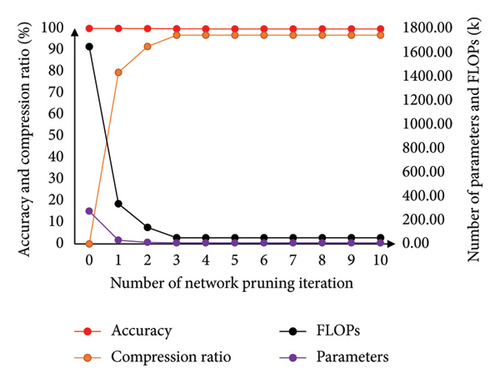

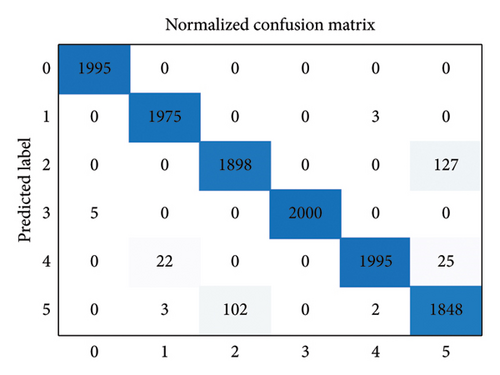

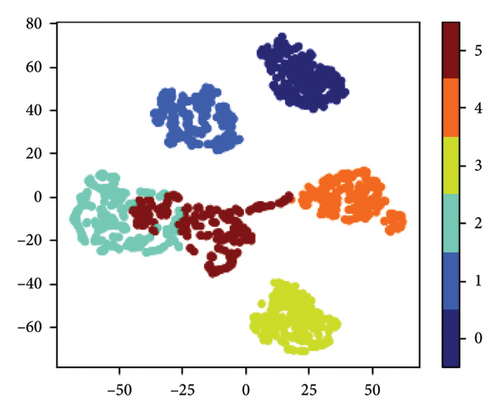

In each iteration of the DDPG training process, the specifications and experimental parameters in the experiment are set as in Table 4. The pruning results for each iteration throughout the entire pruning process are presented in Table 5 and Figure 10. The confusion matrices of the original network and the pruned network are displayed in Figure 11. The results of feature visualization for the two networks are shown in Figure 12.

| Name | Training times for DDPG | Maximum pruning ratio for each layer | Training epochs of K-D | Learning rate | Batch size |
|---|---|---|---|---|---|
| Value | 1000 | 0.99 | 1000 | 1.0e-4 | 40 |

| Iteration number | Shape of the pruned network (CL1–CL5, FL1, FL2, output) | Parameters (K) | FLOPs (K) | Accuracy (%) | FLOPs compression ratio (%) |
|---|---|---|---|---|---|
| 0 | [16, 32, 64, 64, 64, 512, 200, 8] | 273.7 | 1648.6 | 100 | 0 |
| 1 | [12, 22, 51, 46, 47, 376, 88, 8] | 110.8 | 909.6 | 100 | 44.83 |
| 2 | [5, 9, 22, 14, 12, 96, 40, 8] | 22.1 | 183.3 | 99.96 | 88.88 |
| 3 | [3, 6, 17, 10, 11, 88, 17, 8] | 13.0 | 100.5 | 99.87 | 93.90 |
| 4 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 5 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 6 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 7 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 8 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 9 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |
| 10 | [1, 5, 4, 5, 10, 80, 10, 8] | 7.1 | 28.2 | 99.81 | 98.29 |

From Table 5 and Figure 10, the accuracy of the original network is 100%, while the pruned network achieves 99.81%, indicating minimal loss of accuracy after pruning. The FLOPs of the original and pruned networks are 1648.6 K and 28.2 K, respectively, a compression ratio of 98.29%. The original network has 273.7 K parameters, while the pruned network has 7.1 K, a compression ratio of 97.41%. These results demonstrate that the proposed AMC-NPKD method can substantially compress the neural network while maintaining high accuracy.
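The two compression ratios quoted above are relative reductions of each metric; written out for the final pruned network:

$$
\text{CR}_{\text{FLOPs}} = 1 - \frac{\text{FLOPs}_{\text{pruned}}}{\text{FLOPs}_{\text{original}}} = 1 - \frac{28.2\ \text{K}}{1648.6\ \text{K}} \approx 98.29\%
$$

$$
\text{CR}_{\text{params}} = 1 - \frac{\text{Params}_{\text{pruned}}}{\text{Params}_{\text{original}}} = 1 - \frac{7.1\ \text{K}}{273.7\ \text{K}} \approx 97.41\%
$$

Applying the FLOPs formula to every row reproduces the compression-ratio column of Table 5 (for example, 1 − 909.6/1648.6 ≈ 44.83% for iteration 1), which indicates that this column reports the FLOPs reduction.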

Table 5 also shows that the pruning ratio varies across iterations as well as across layers, because the actor network provides the pruning ratio based on the real-time state. With its parameters optimized by the DDPG algorithm, the actor network outputs the best pruning ratio for the current state. From iteration 4 onward, the shape of the network no longer changes, indicating that the iterative pruning process has converged.

Figures 11 and 12 indicate that the classification performance and robustness of the pruned network are not significantly affected. The confusion matrices show that the classification performance for each sample type remains nearly the same after pruning, and the feature visualization shows that the features extracted for different classes remain well separated, so the robustness of the network is also maintained. Despite the substantial compression achieved by the AMC-NPKD method, the performance of the pruned network remains largely unaffected, which further validates the effectiveness of the proposed method.

4.2. Results on HIT-SM Datasets

4.2.1. The Dataset Description

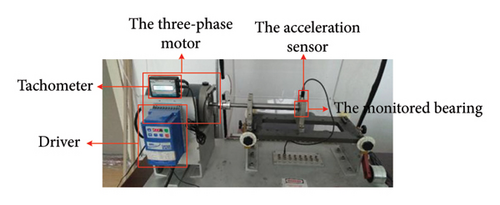

To further validate the effectiveness of the proposed AMC-NPKD method, an experiment is conducted using the HIT-SM bearing datasets [53]. The composition of the equipment used in the experiment is depicted in Figure 13. The bearing under test is a deep groove ball bearing with a model number of 6205. Three states of the bearings are tested: the healthy state, the inner ring failure state (as shown in Figure 14(a)), and the outer ring failure state (as shown in Figure 14(b)). The fault area is created using electrical discharge machining (EDM). The dataset is collected at speeds of 900 and 1200 RPM, with a sampling frequency of 51.2 kHz.

To evaluate the classification performance of the networks, six types of monitoring data are utilized. The dataset consists of three states of bearings under different speeds. The specifics of the dataset are presented in Table 6. For each type of sample, there are a total of 10,000 samples. Among these, 8000 samples are used for training, while the remaining 2000 samples are used for testing.

| Running speed (rpm) | Health | Inner race fault | Outer race fault |
|---|---|---|---|
| 900 | 10,000 | 10,000 | 10,000 |
| 1200 | 10,000 | 10,000 | 10,000 |

4.2.2. The Results on the HIT-SM Bearing Dataset

In this experiment, the WDCNN is again used as the original network to be pruned. Its structure is the same as in Table 3, except that the output layer contains 6 neurons, because the dataset in this experiment contains only 6 classes. The hyperparameters are the same as in the previous experiment.

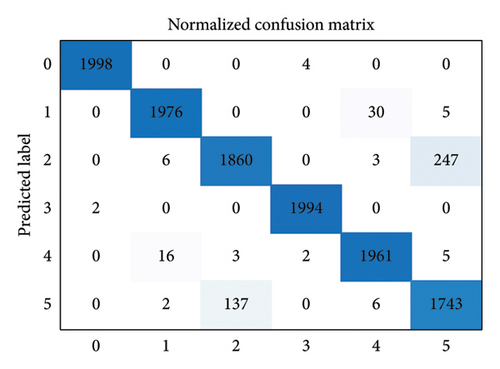

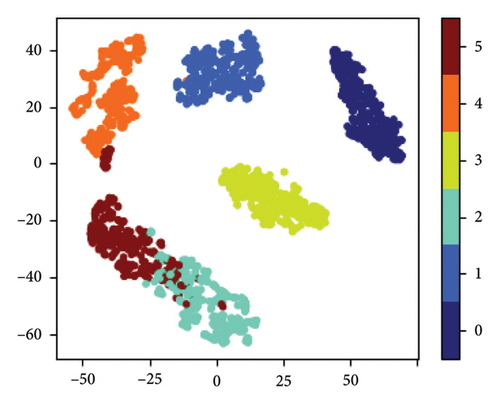

The pruning results for each iteration throughout the entire pruning process are presented in Table 7 and Figure 15. The confusion matrices of the original and pruned networks are displayed in Figure 16, and the feature visualization results for the two networks are shown in Figure 17.

| Iteration number | Shape of the pruned network (CL1–CL5, FL1, FL2, output) | Parameters (K) | FLOPs (K) | Accuracy (%) | FLOPs compression ratio (%) |
|---|---|---|---|---|---|
| 0 | [16, 32, 64, 64, 64, 512, 200, 6] | 273.3 | 1648.2 | 97.59 | 0 |
| 1 | [9, 14, 26, 18, 16, 128, 42, 6] | 30.9 | 335.6 | 97.48 | 79.64 |
| 2 | [5, 8, 14, 10, 7, 56, 21, 6] | 13.4 | 138.1 | 96.17 | 91.62 |
| 3 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 4 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 5 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 6 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 7 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 8 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 9 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |
| 10 | [2, 5, 4, 14, 6, 48, 15, 6] | 8.3 | 51.4 | 96.10 | 96.88 |

From Table 7 and Figure 15, the accuracy of the original network is 97.59%, while the pruned network achieves 96.10%, a loss of only 1.49 percentage points. The FLOPs of the original and pruned networks are 1648.2 K and 51.4 K, respectively, a compression ratio of 96.88%. The original network has 273.3 K parameters, while the pruned network has 8.3 K, a compression ratio of 96.96%. The accuracy of the pruned network thus remains close to that of the original network, and the proposed AMC-NPKD method effectively compresses the neural network on the home-made dataset. These results further demonstrate the effectiveness and generalization ability of the proposed AMC-NPKD method.

Figures 16 and 17 indicate that the classification performance and robustness of the pruned network are not significantly affected on the home-made dataset, as the features extracted for different classes remain well separated. Despite the substantial compression achieved by the AMC-NPKD method, the performance of the pruned network remains largely unaffected, which further validates the effectiveness of the proposed method.

4.3. Comparative Study

To further validate the superiority of the proposed AMC-NPKD method, we compare it with two manually designed lightweight networks and three compression methods commonly used for bearing fault diagnosis. The five methods are mixed precision quantization (MPQ) [54], Taylor expansion-based pruning (TEP) [55], neural architecture search (NAS) [56], K-D [48], and AutoML [49]. For the last three methods, the original network used for fault diagnosis is WDCNN. The results of the compared methods and the proposed AMC-NPKD method are presented in Table 8.

| Method | Accuracy (%) | Parameters (K) | FLOPs (K) | Compression rate (%) |
|---|---|---|---|---|
| MPQ | 95.83 | 273.3 (3∼4 bit) | 172.5 | 91.52 |
| TEP | 95.90 | 20.7 | 167.3 | 89.85 |
| NAS | 92.89 | 22.6 | 322.0 | 79.16 |
| K-D | 93.15 | 29.4 | 322.2 | 79.14 |
| AutoML | 93.07 | 44.9 | 781.8 | 49.40 |
| AMC-NPKD | 96.10 | 8.3 | 51.4 | 96.88 |

From Table 8, the compression rates of the compared methods (MPQ, TEP, NAS, K-D, and AutoML) are 91.52%, 89.85%, 79.16%, 79.14%, and 49.40%, respectively, and their accuracies are 95.83%, 95.90%, 92.89%, 93.15%, and 93.07%, respectively. The proposed AMC-NPKD method yields the compressed network with the fewest parameters and the lowest FLOPs while also achieving the highest accuracy (96.10%) of all the methods compared. This demonstrates the superiority of the proposed AMC-NPKD method.

The MPQ method compresses the storage space of a neural network by reducing the numerical precision of its parameters. The choice of quantization precision must be determined for the specific task and network state. However, quantization does not remove any parameters: the quantized network keeps all 273.3 K parameters of the original (Table 8), so it fundamentally cannot eliminate parameter redundancy.
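To illustrate why quantization leaves parameter redundancy in place, the Python sketch below applies symmetric uniform quantization to a weight tensor. It is a generic per-tensor scheme assumed for illustration, not the specific mixed-precision algorithm of [54], and the weight shape is hypothetical: the number of weights is unchanged, and only the bit width used to store each weight shrinks.

```python
import numpy as np


def quantize_symmetric(weights: np.ndarray, bits: int) -> tuple[np.ndarray, float]:
    """Quantize weights to signed integers with `bits` bits using one per-tensor scale."""
    qmax = 2 ** (bits - 1) - 1                      # e.g. 7 for 4-bit
    scale = float(np.max(np.abs(weights))) / qmax
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def storage_bits(num_weights: int, bits: int) -> int:
    """Total storage needed for num_weights values at the given bit width."""
    return num_weights * bits


rng = np.random.default_rng(0)
w = rng.normal(size=(16, 1, 64)).astype(np.float32)  # hypothetical conv weights

q, scale = quantize_symmetric(w, bits=4)
w_hat = q.astype(np.float32) * scale                   # dequantized weights

print(q.size == w.size)                                    # True: no weight is removed
print(storage_bits(w.size, 4) / storage_bits(w.size, 32))  # 0.125 of the float32 storage
```

Every weight survives quantization with a coarser value, so any structurally redundant kernel is still stored and still computed; pruning, by contrast, removes it.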

The TEP method primarily evaluates the importance of convolutional kernels within a single compression pass. In contrast, this paper proposes a cyclic pruning-optimization strategy that prunes the network iteratively, making it easier to achieve a higher compression ratio. The iterative approach allows the network structure to be refined progressively, which is a significant advantage over the single-step evaluation of the Taylor expansion-based method.

NAS is a technique for searching network architectures. It requires the search dimensions to be defined manually, it does not transfer knowledge from a large model to a small one as knowledge distillation does, and its search process demands substantial computational resources. The proposed AMC-NPKD method, which adopts the DDPG strategy and the K-D method, does not have these limitations: it adaptively searches for an optimal solution without predefined search dimensions and transfers knowledge effectively during compression.

The K-D method optimizes the training of a lightweight network. It requires experts with task-specific experience to design a lightweight neural network in advance based on the task characteristics and their own experience; K-D is then used to train this lightweight network. However, such a manually designed network is unlikely to reach the optimal network structure. In contrast, the proposed AMC-NPKD method adopts a reinforcement learning strategy that accounts for the characteristics of the specific dataset or task, enabling the pruning agent to converge automatically to the optimal state. This ensures that the compressed lightweight network reaches the optimal state within the dimensions of the evaluation function, which considers both the network's computational burden (FLOPs) and its task performance (accuracy).
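The evaluation function mentioned above balances task performance against computational burden. Its exact form in AMC-NPKD is defined earlier in the paper and is not reproduced here; the Python sketch below is only a hypothetical weighted sum that borrows the names acc_loss, rate_FLOPs, rate_parameters, and α, β, γ from the Nomenclature, with weight values chosen purely for illustration. It scores the two pruned networks of Table 10 to show how such a reward can rank candidates that differ only slightly.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    acc_loss: float      # accuracy drop after pruning, as a fraction (0.0149 = 1.49 points)
    rate_flops: float    # FLOPs compression ratio, as a fraction
    rate_params: float   # parameter compression ratio, as a fraction


def reward(c: Candidate, alpha: float = 1.0, beta: float = 1.0, gamma: float = 0.5) -> float:
    """Hypothetical reward: penalize accuracy loss, reward compression.

    This weighted sum is an illustrative assumption, not the paper's formula.
    """
    return -alpha * c.acc_loss + beta * c.rate_flops + gamma * c.rate_params


# Values taken from Table 10 (original WDCNN: 97.59% accuracy, 273.3 K parameters).
comparative = Candidate(acc_loss=(97.59 - 96.06) / 100, rate_flops=0.9674,
                        rate_params=1 - 8.8 / 273.3)
amc_npkd = Candidate(acc_loss=(97.59 - 96.10) / 100, rate_flops=0.9688,
                     rate_params=1 - 8.3 / 273.3)

print(f"comparative: {reward(comparative):.4f}")
print(f"AMC-NPKD:    {reward(amc_npkd):.4f}")
```

Because the accuracy term and the compression terms sit on different scales, the weights decide how much accuracy the agent is willing to trade for compression; in practice they would have to be tuned for each task.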

Compared with the AutoML method, the proposed AMC-NPKD method compresses and optimizes the network cyclically: it removes redundant neurons through automatic pruning and then retrains the pruned network with the K-D process to recover the performance lost through structural pruning. Through this iterative pruning strategy and the K-D process, the compressed neural network can converge to an optimal structural state.

4.4. Ablation Study

The effectiveness of the proposed method has been demonstrated in the aforementioned cases. It stems from the combination of several improvements, including the iterative pruning process and the fine-tuning step based on K-D. The effect of each improvement is analyzed separately in this section.

4.4.1. The Influence of the Iterative Pruning Process

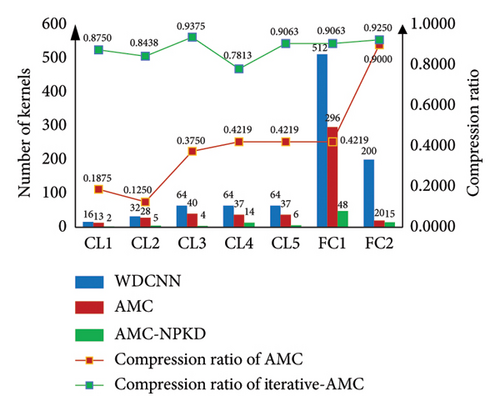

He et al. proposed the AutoML method for automatic network compression [49]. In this study, we enhance the AutoML method by introducing an iterative pruning process. This iterative pruning step allows for a higher pruning ratio to be achieved for the network. To evaluate the effectiveness of the iterative pruning step, comparative experiments are conducted in this section. The accuracy and compression ratios of the AutoML method and the proposed AMC-NPKD method are presented in Table 9. The compression ratios and details of the pruned networks using both methods are illustrated in Figure 18.

| Method | Accuracy (%) | Parameters (K) | FLOPs (K) | Compression rate (%) |
|---|---|---|---|---|
| WDCNN (original network) | 97.59 | 169.2 | 1648.2 | — |
| AutoML | 93.07 | 50.8 | 787.7 | 52.21 |
| AMC-NPKD | 96.10 | 8.3 | 51.4 | 96.88 |

From Table 9 and Figure 18, the accuracy of the pruned network obtained with the AutoML method is 93.07%, a loss of 4.52 percentage points compared with the original network, whereas the accuracy loss of the pruned network obtained with the proposed AMC-NPKD method is only 1.49 percentage points. In terms of compression, the FLOPs compression ratios are 52.21% for AutoML and 96.88% for AMC-NPKD. Regarding the scale of the pruned networks, the network obtained with AMC-NPKD has only 0.163 times the parameters and 0.065 times the FLOPs of the network obtained with AutoML. This ablation study shows that, by incorporating the iterative pruning strategy, the proposed AMC-NPKD method achieves a higher pruning ratio.

The proposed AMC-NPKD method repeatedly prunes and retrains the compressed neural network through the iterative pruning strategy. After each round of NP, the parameters of the pruned network are re-optimized to maximize its performance potential before the next round. Finally, a lightweight neural network with a more streamlined structure is obtained, and therefore a higher compression ratio can be achieved.
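The iterative prune-then-retrain cycle can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the AMC-NPKD implementation: kernel importance is taken as the L1 norm of the kernel weights, a fixed relative threshold stands in for the DDPG agent's pruning-ratio decision, and `fine_tune` is a placeholder for the K-D fine-tuning step. The toy network shows how pruning in one round can expose further redundancy in the next, until the structure stops changing (compare iterations 3 to 10 in Table 7).

```python
import numpy as np


def kernel_importance(weight: np.ndarray) -> np.ndarray:
    """L1 norm of each output kernel; weight shape is (out_ch, in_ch, length)."""
    return np.abs(weight).sum(axis=(1, 2))


def prune_round(layers: list[np.ndarray], threshold: float) -> list[np.ndarray]:
    """One pruning pass over a chain of 1-D conv layers.

    Decisions for every hidden layer are made from the current weights first;
    a kernel is removed if its importance is below `threshold` times the
    largest importance in its layer. The final (output) layer is never pruned.
    """
    keeps = []
    for w in layers[:-1]:
        imp = kernel_importance(w)
        keeps.append(np.flatnonzero(imp >= threshold * imp.max()))
    pruned = [w.copy() for w in layers]
    for i, keep in enumerate(keeps):
        pruned[i] = pruned[i][keep]                  # drop whole kernels
        pruned[i + 1] = pruned[i + 1][:, keep, :]    # drop matching input channels
    return pruned


def iterative_prune(layers, threshold=0.5, fine_tune=lambda ls: ls, max_iters=10):
    """Alternate pruning and fine-tuning until the layer widths stop changing."""
    history = [[w.shape[0] for w in layers]]
    for _ in range(max_iters):
        layers = fine_tune(prune_round(layers, threshold))  # K-D fine-tuning would go here
        history.append([w.shape[0] for w in layers])
        if history[-1] == history[-2]:               # structure has converged
            break
    return layers, history


# Toy three-layer network: kernel 2 of layer 1 relies mostly on kernel 3 of layer 0.
w0 = np.ones((4, 1, 8))
w0[3] *= 0.01                      # a weak kernel, pruned in round 1
w1 = np.ones((3, 4, 4))
w1[2] = 0.25
w1[2, 3, :] = 3.0                  # strong only on the channel pruned in round 1
w2 = np.ones((2, 3, 4))            # output layer (2 classes)

pruned, history = iterative_prune([w0, w1, w2])
print(history)   # [[4, 3, 2], [3, 3, 2], [3, 2, 2], [3, 2, 2]]
```

A single pass removes only the obviously weak kernel; the second pass removes a kernel that became weak once its main input disappeared, which is the effect the iterative strategy exploits.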

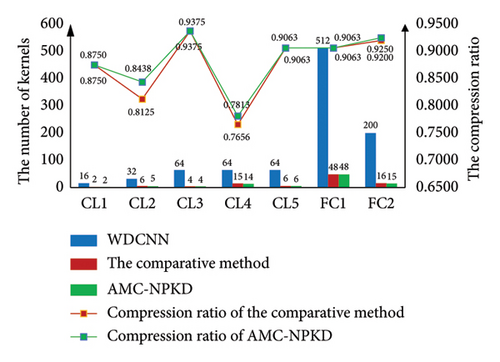

4.4.2. The Influence of the Fine-Tuning Step With the K-D Method

In the proposed AMC-NPKD method, a fine-tuning step based on the K-D process is implemented after each pruning iteration. When the pretrained network is pruned, the performance of the pruned network may be affected due to the reduction in parameters and structures. Additionally, the parameter distribution of the pruned network may not be optimally suited for the current network structure. To further enhance the performance of the pruned network, the K-D process is employed to fine-tune the pruned network after each iteration. The fine-tuned pruned network then serves as the original network for the subsequent pruning iteration. To evaluate the impact of the K-D fine-tuning process, an ablation experiment is conducted. The comparative method used is an iterative AutoML method, where a fine-tuning step is performed after each pruning iteration using the normal network training process without the K-D process. The results of the original WDCNN, the comparative method, and the proposed AMC-NPKD method are presented in Table 10 and Figure 19.

| Method | Accuracy (%) | Parameters (K) | FLOPs (K) | Compression rate (%) |
|---|---|---|---|---|
| WDCNN (original network) | 97.59 | 273.3 | 1648.2 | — |
| The comparative method | 96.06 | 8.8 | 53.8 | 96.74 |
| AMC-NPKD | 96.10 | 8.3 | 51.4 | 96.88 |

From Table 10 and Figure 19, the proposed AMC-NPKD method achieves a slightly higher compression ratio and classification accuracy than the comparative method. The final compression rates of the pruned networks are 96.74% for the comparative method and 96.88% for AMC-NPKD, and the corresponding accuracies are 96.06% and 96.10%.

The proposed method, which incorporates the K-D process in the fine-tuning steps, outperforms the iterative AutoML method without the K-D process. This is because the K-D process can enhance the performance of the pruned network. Compared to the normal network training process, the K-D process further explores the potential performance of the small pruned network. As a result, the proposed AMC-NPKD method achieves a higher network compression ratio.
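The K-D fine-tuning step can be illustrated with the widely used formulation of Hinton et al., which mixes a hard-label cross-entropy with a temperature-softened KL divergence between teacher and student outputs. The Python/NumPy sketch below is an assumption for illustration: the weighting `lam`, the default temperature `T = 4.0`, and the function names are not taken from the paper, whose own Loss_S is defined earlier and may weight the terms differently. In the Nomenclature's notation, `q_s` and `q_t_soft` play the roles of q_s(x) and q_T(x), and the integer labels encode the hard-label distribution p_s(x).

```python
import numpy as np


def softmax(z: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Row-wise softmax of logits softened by temperature T."""
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)        # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels, T=4.0, lam=0.7):
    """Hinton-style K-D loss averaged over a batch; labels are integer class indices."""
    n = student_logits.shape[0]
    # Hard-label term: cross-entropy between the labels and the student output q_s(x).
    q_s = softmax(student_logits)
    hard = -np.log(q_s[np.arange(n), labels] + 1e-12).mean()
    # Soft-label term: KL divergence from the softened teacher to the softened student.
    q_t_soft = softmax(teacher_logits, T)
    q_s_soft = softmax(student_logits, T)
    kl = (q_t_soft * (np.log(q_t_soft + 1e-12) - np.log(q_s_soft + 1e-12))).sum(axis=-1).mean()
    # T**2 keeps the soft-term gradient magnitude comparable across temperatures.
    return (1.0 - lam) * hard + lam * (T ** 2) * kl


logits_t = np.array([[2.0, 0.5, -1.0], [0.1, 1.5, 0.3]])  # hypothetical teacher logits
labels = np.array([0, 1])
print(distillation_loss(logits_t, logits_t, labels, lam=1.0))   # 0.0: student matches teacher
print(distillation_loss(-logits_t, logits_t, labels, lam=1.0))  # positive: student disagrees
```

The softened teacher distribution carries information about which wrong classes are "close", which ordinary hard-label training of the small pruned network does not see.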

5. Conclusion

To address the large parameter scale and heavy consumption of hardware computing resources of current fault diagnosis neural network models, this paper proposes the AMC-NPKD method for deep compression and optimization of fault diagnosis neural networks. Building on the AutoML method, AMC-NPKD introduces an iterative pruning strategy and the K-D process to realize cyclic pruning and parameter optimization of the compressed model, and finally obtains a higher network compression ratio. Experimental results show that the proposed AMC-NPKD method reduces the computational cost (FLOPs) of the WDCNN network by more than 96% and its parameters by more than 95%. Comparative experiments show that AMC-NPKD also has significant advantages over current typical lightweight network design and compression methods. AMC-NPKD is a general network compression algorithm: it is suitable not only for bearing fault diagnosis but is also expected to perform well in speech recognition, image recognition, and other fields, which will be investigated in follow-up work.

The proposed AMC-NPKD method is a structured pruning method, in which entire convolutional kernels or neurons are pruned. In future studies, we plan to explore network pruning at a finer granularity, such as individual channels of a convolutional kernel.
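The difference in granularity can be made concrete by counting parameters. The Python sketch below assumes a standard 1-D convolutional layer with one bias per kernel (the paper's own Parameters_CL formula is defined earlier and may differ in detail) and uses hypothetical layer sizes. Removing one whole kernel saves its weights and bias plus the matching input channel of every kernel in the next layer, whereas removing one channel of a single kernel saves only l weights: finer control, but it leaves kernels with different channel counts, which a dense layer cannot represent directly.

```python
def conv1d_params(in_ch: int, out_ch: int, length: int, bias: bool = True) -> int:
    """Parameters of a 1-D conv layer with out_ch kernels of shape (in_ch, length)."""
    return out_ch * in_ch * length + (out_ch if bias else 0)


# Hypothetical pair of consecutive layers: 8 -> 16 -> 32 channels, kernel length l = 3.
layer_a = conv1d_params(8, 16, 3)
layer_b = conv1d_params(16, 32, 3)

# Kernel-level (structured) pruning: remove one whole kernel from layer_a,
# which also removes the matching input channel from every kernel of layer_b.
kernel_saving = (layer_a - conv1d_params(8, 15, 3)) + (layer_b - conv1d_params(15, 32, 3))

# Channel-level pruning of a single kernel: only its l = 3 weights for one input channel go.
channel_saving = 3

print(kernel_saving, channel_saving)   # 121 3
```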

Nomenclature

- acc_loss: The loss of the network's accuracy after each pruning action
- ADCNN: Adaptive deep convolutional neural network
- a_{i−1}: The pruning ratio of the previous layer
- AMC-NPKD: Autonomous compression method based on network pruning and knowledge distillation
- ANN: Artificial neural network
- c: The index of the current layer
- DBN: Deep belief network
- DDPG: Deep deterministic policy gradient
- FFT: Fast Fourier transform
- FLOPs: Floating-point operations
- FLOPs(i): The number of floating-point operations of the ith layer
- FLOPs_CL: The FLOPs of the one-dimensional convolutional layer
- FLOPs_FC: The FLOPs of the fully connected layer
- g: The stride
- GCN: Graph convolutional network
- HHT: Hilbert–Huang transform
- i: The index of the current layer
- importance_i: The importance of the ith convolutional kernel
- importance_j: The importance of the jth neuron
- K-D: Knowledge distillation
- KNN: K-nearest neighbor
- l: The length of the data in each channel of the convolutional kernel
- Loss_Actor: The loss of the actor network
- Loss_Critic: The loss of the critic network
- Loss_S: The loss of the student network
- LSTM: Long short-term memory
- MCNN: Multitask convolutional neural network
- n: The index of the current layer
- NP: Network pruning
- p: The amount of padding
- Parameters_CL: The number of parameters in the one-dimensional convolutional layer
- Parameters_FC: The number of parameters in the fully connected layer
- p_s(x): The distribution of the hard label
- q_s(x): The output of the student network
- q_T(x): The output of the teacher network
- q_w(S_i, a_i): The expected value of the action a_i under the state S_i
- θ^μ: The parameters of the actor network
- θ^Q: The parameters of the critic network
- rate_FLOPs: The compression ratio of the FLOPs
- rate_parameters: The compression ratio of the parameters
- rd: The number of floating-point operations of the ith layer
- r_i: Reward
- ResNet: Residual network
- S_i: State of the network
- STFT: Short-time Fourier transform
- T: The distillation temperature (hyperparameter)
- WDCNN: Deep convolutional neural network with wide first-layer kernels
- w_{c,k}: The value of the kth element in the cth channel of the convolutional kernel
- α: The adjustment parameter
- β: The adjustment parameter
- γ: The adjustment parameter
- μ_θ(S_i): The output of the actor network under the parameter matrix θ
- τ: The learning rate

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research was supported by the Aviation Key Laboratory of Science and Technology on Aero Electromechanical System Integration, Nanjing Engineering Institute of Aircraft Systems, AVIC.


Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.