LBN-YOLO: A Lightweight Road Damage Detection Model Based on Multiscale Contextual Feature Extraction and Fusion

Abstract

Detecting and classifying road damage is crucial for road maintenance. Existing road damage detection methods extract fine-grained contextual features insufficiently and rely on complex models that are difficult to deploy. To address these limitations, this paper proposes LBN-YOLO, a road damage detection model with a lightweight backbone and neck. First, the backbone and neck of the original model are made lightweight, and the C2f dilation-wise residual (C2f-DWR) module is integrated into the backbone to extract multiscale contextual information. Second, a simplified bidirectional feature pyramid network is employed in the neck to optimize feature fusion, reducing the number of parameters and the model complexity. Finally, a dynamic head with self-attention is introduced to enhance the perception capability of the detection head, thus improving the precision of detecting occluded small objects. The detection ability of the proposed model is evaluated on a custom road damage dataset. The experimental results demonstrate that LBN-YOLO outperforms the YOLOv8n model, with increases of 4.3 percentage points in mAP@0.5 and 5.2 percentage points in precision, and also outperforms the other detection models tested. In addition, the model is evaluated on two public datasets, where it improves detection performance over the original model, indicating strong generalization capability. Code and dataset are available at https://github.com/gzNiuadc/Road-crack-dataset.

1. Introduction

Roads are an important part of the transportation system. As service time and traffic loads increase, various kinds of damage gradually develop, with cracks being the most common [1]. Serious road damage threatens the safety of pedestrians and vehicles, so it is important to monitor road damage and its severity in a timely manner. Achieving automated road damage detection that is accurate, efficient, and low cost remains a major challenge.

Over the past two decades, conventional image processing techniques such as edge detection [2], morphological operations [3], and threshold segmentation have been widely employed for road damage detection. However, these methods are sensitive to uneven illumination and noise, which lowers the precision of their detection results. To overcome these limitations, researchers have applied deep learning approaches to road damage detection. Compared with traditional image processing methods, deep learning methods are faster and more robust [4].

1.1. Related Works

Object detection, a classical deep learning task, can both locate and classify road damage. Object detection models are divided into one-stage and two-stage models [5]. One-stage models include SSD [6], RetinaNet [7], and the YOLO series [8, 9]; typical two-stage models are Fast R-CNN [10] and Faster R-CNN [11]. A one-stage detector predicts detection boxes directly, without a separate step for generating candidate boxes, which makes it faster than a two-stage detector. The YOLO series is a representative one-stage approach that balances detection speed and precision.

To further improve the detection precision of YOLO models, Liu et al. [12] optimized YOLOv3 by adding a four-scale detection layer and replacing the intersection over union (IoU) loss with the EIoU loss, effectively enhancing the detection of small and hidden pavement cracks. Xing et al. [13] incorporated an additional Swin-Transformer prediction head (SPH) into YOLOv5 to enhance feature extraction in complex environments; the improved model increased road crack detection accuracy by 15.4% and could detect cracks as small as 1.2 mm. Xiong et al. [14] proposed YOLOv8-GAM-wise-IoU, which significantly improves the accuracy and efficiency of bridge crack detection by introducing a global attention module and the wise-IoU loss function. Dong et al. [15] integrated visual attention networks (VANs), a large convolutional attention (LCA) module, and a large separable kernel attention (LSKA) module into YOLOv8 to enhance the extraction of damage shapes and localized features on concrete surfaces, achieving a 15% improvement in mAP@0.5.

Lightweight models are easier to deploy across various applications. Yu et al. [16] used YOLOv4-FPM, based on YOLOv4, for real-time bridge crack detection, applying a pruning algorithm to simplify the network structure and increasing detection speed 20-fold. Diao et al. [17] replaced the backbone of YOLOv5 with the lightweight MobileNetV3 and substituted the squeeze-and-excitation (SE) channel attention in MobileNetV3 with the shuffle attention (SA) mechanism. This adaptation improved information exchange across the channel and spatial dimensions while reducing the parameter count, providing an important reference for lightweight road damage detection algorithms. Ning et al. [18] proposed YOLOv7-RDD, which integrates a lightweight aggregation network, an enhanced spatial feature pyramid structure, and a similarity-based attention mechanism (SimAM), achieving 145 FPS on the CQURDD dataset. Zhao et al. [19] introduced a lightweight real-time road damage detection model that uses MobileNetV3 as the backbone together with the efficient channel attention (ECA) module, reducing computational complexity by 46.2% while maintaining real-time performance.

Although numerous advances have improved either the speed or the accuracy of road damage detection models, research addressing both simultaneously remains limited. Xing et al. [20] enhanced YOLOv5 by introducing an efficient decoupled head and replacing traditional convolution with the GCC3 module for global context modeling. On the RDD2022 dataset, accuracy increased by 1.5%, but the parameters and computational cost doubled compared with the original model. Wang and Chen [21] proposed a transformer-based detector incorporating receptive field attention blocks and a feature assignment mechanism to enhance crack detection accuracy; however, the resulting 11% and 15% increases in GFLOPs and parameters, respectively, reduced detection speed. Wan et al. [22] improved the BR-DETR model for bridge damage detection by introducing deformable convolution (deformable conv2d) and copy–paste augmentation, boosting accuracy, but the high complexity of the transformer limits real-time detection. Meng et al. [23] developed a lightweight pavement crack detection method based on YOLOv8 and knowledge distillation with multiple teacher–assistants (KDMTA), achieving a 79.6% improvement in image processing speed; however, detection accuracy and mean average precision (mAP) decreased by 2.14% and 4.64%, respectively. He et al. [24] replaced standard convolution in YOLOv7 with ghost convolution and applied depthwise separable convolution to reduce parameters. By combining channel width and depth optimization with knowledge distillation, their model achieves a lightweight design at the cost of a slight degradation in detection accuracy.

1.2. Motivations

In summary, road damage detection requires balancing detection precision and real-time capability. However, many current detection models are complex and have low detection efficiency [20–23]. Moreover, the intricate morphology of different road damages, complex background interference, and fluctuating lighting conditions make accurate road damage recognition challenging. Therefore, this study aims to develop a robust road damage detection model that accurately identifies and categorizes various road damages while minimizing training cost and detection time.

1.3. Contributions

- 1.

A dataset of road damages in real scenes has been constructed, consisting of seven types of road damages in various lighting conditions and diverse backgrounds. This dataset enhances the available data for road damage detection.

- 2.

The proposed LBN-YOLO model is characterized by its lightweight design. It integrates dilation-wise residual (DWR) into the C2f module of the feature extraction network, forming the C2f-DWR module. This augmentation enhances the backbone network’s ability to extract multiscale contextual and global information.

- 3.

The neck section of the detection model is enhanced by eliminating nodes with minimal impact on feature fusion to reduce feature redundancy and proposes a simplified bidirectional feature pyramid network (SBiFPN) based on the feature fusion concept of the bidirectional feature pyramid network (BiFPN). In addition, the cross-level connection is introduced to fuse the feature information of multiple scales to improve the detection capability of objects at various scales.

- 4.

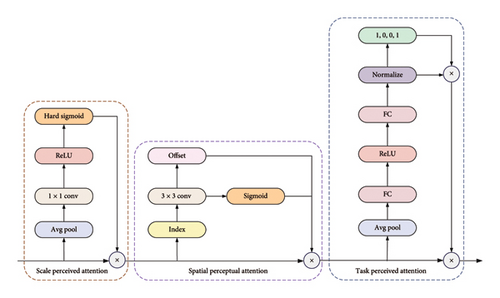

Dynamic head (Dyhead) is employed as the detection head, utilizing scale awareness, spatial awareness, and task awareness among different extraintegral layers, spatial positions, and within the output channel, respectively. This significantly enhances the classification and localization precision of small objects affected by shadows and occlusions.

The rest of the paper is organized as follows. Section 2 presents the details of the road damage detection model proposed in this study. Section 3 elaborates on the dataset used, experimental configurations, and outcomes. Sections 4 and 5 summarize the paper’s findings and outline potential directions for future research.

2. Algorithms for Road Damage Detection

This section introduces the improved design of the LBN-YOLO model, including the C2f-DWR module in the backbone network, the SBiFPN feature fusion network, and the Dyhead detection head incorporating multiple self-attention mechanisms, aiming to enhance the accuracy and efficiency of road damage detection.

2.1. LBN-YOLO Algorithm

YOLOv8 is an enhanced successor of YOLOv5 and has recently demonstrated excellent performance in road damage detection [25, 26]. YOLOv8 is available in five pretrained versions of different sizes, namely YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x [19]. Among them, YOLOv8n has the fewest parameters, saving computational resources while maintaining high detection precision, which is consistent with our research goal. Therefore, this paper chooses YOLOv8n as the baseline model. Compared with YOLOv5, YOLOv8 introduces three significant improvements. First, it replaces the C3 module in the backbone with the C2f module, which carries rich gradient flow information and reduces computational complexity. Second, it eliminates the 1 × 1 convolution preceding the upsampling layer in the neck. Third, the head uses a decoupled structure that separates the classification task from the regression task, and the anchor-based design is replaced with an anchor-free one [27, 28].
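To make the anchor-free idea concrete, the sketch below decodes boxes the way YOLOv8-style heads are commonly described: every grid-cell centre of a feature map acts as an anchor point and predicts its distances to the left, top, right, and bottom box edges, in units of the feature-map stride. This is an illustration under that assumption, not the paper's code; the function names are hypothetical, and the distribution focal loss that YOLOv8 uses to produce the distances is omitted.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Dist = Tuple[float, float, float, float]  # (left, top, right, bottom), stride units
Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in input-image pixels


def make_anchor_points(height: int, width: int) -> List[Point]:
    """Grid-cell centres of an H x W feature map, in feature-map units."""
    return [(x + 0.5, y + 0.5) for y in range(height) for x in range(width)]


def decode_ltrb(point: Point, ltrb: Dist, stride: int) -> Box:
    """Turn predicted edge distances from an anchor point into a pixel box.

    No prior anchor-box shape is involved: the box is defined only by the
    point and the four distances, which is what "anchor-free" refers to.
    """
    cx, cy = point
    left, top, right, bottom = ltrb
    return ((cx - left) * stride, (cy - top) * stride,
            (cx + right) * stride, (cy + bottom) * stride)


if __name__ == "__main__":
    points = make_anchor_points(80, 80)  # 640 x 640 input at stride 8
    print(len(points))
    print(decode_ltrb(points[0], (0.5, 0.5, 1.5, 0.5), stride=8))
```

A 640 × 640 input at stride 8 gives an 80 × 80 grid, that is, 6400 anchor points; the first point (0.5, 0.5) with distances (0.5, 0.5, 1.5, 0.5) decodes to the pixel box (0, 0, 16, 8).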

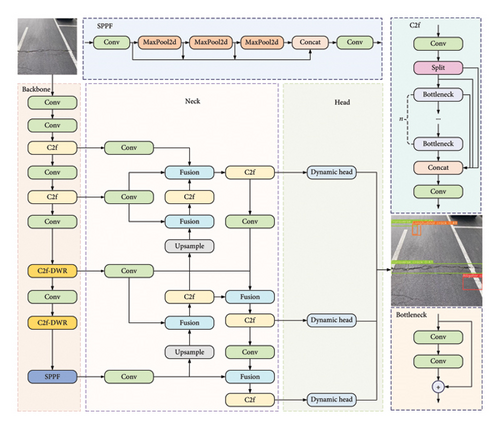

The road damage images we collected have complex backgrounds containing interference such as water, leaves, and shadows, which makes the YOLOv8n model prone to false and missed detections. To address these challenges, this study proposes an improved model named LBN-YOLO, whose architecture is illustrated in Figure 1. In the backbone, two C2f-DWR modules replace the C2f modules at the end, enhancing the extraction of contextual information and multiscale object features. In the neck, SBiFPN is introduced for bidirectional fusion and interaction of multiscale feature information while also achieving a lightweight design. To further improve precision in detecting occluded small objects, the dynamic detection head Dyhead, which uses a self-attention mechanism, is employed.

2.2. C2f-DWR Module

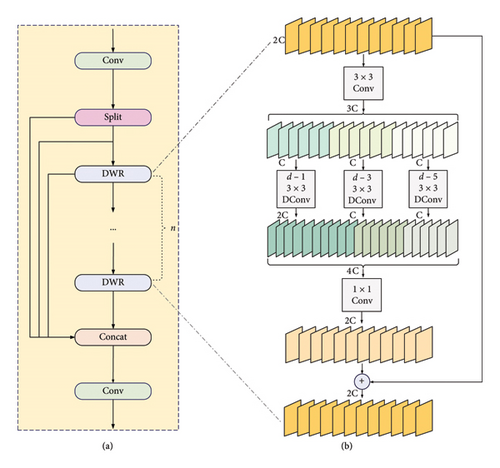

The C2f module in YOLOv8 acquires rich gradient flow information through residual connections and feature concatenation; its structure is illustrated in Figure 1. The objects in our road damage images vary widely in scale. However, the standard convolutions in the C2f module limit the receptive field of the network and cannot extract multiscale contextual information, which can lead to false detections of damaged objects. To resolve this limitation, this study proposes the C2f-DWR module, based on C2f, to efficiently capture multiscale contextual information. The C2f-DWR module contains multiple DWR modules, as illustrated in Figure 2(b).

The DWR module has a residual structure featuring three dilated convolutional modules with dilation rates of 1, 3, and 5 [29], along with two standard convolutional modules with kernel sizes of 1 and 3, as shown in Figure 2(b). It extracts multiscale contextual features in three steps. First, initial features are extracted with a 3 × 3 convolution. Second, the resulting feature maps are fed into three dilated convolution layers with different dilation rates to extract features rich in contextual semantic information, and the three output feature maps are concatenated along the channel dimension. Finally, the concatenated feature map is fused by the 1 × 1 convolution and combined with the original input through the residual connection, producing a feature map that contains both the original feature information and multiscale contextual information.
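The three steps above can be traced with a deliberately simplified, single-channel sketch in plain Python. It assumes stride-1 3 × 3 convolutions with zero padding equal to the dilation rate, hand-picked hypothetical kernels (the same branch kernel for all three dilation rates), and a 1 × 1 fusion that reduces to a weighted sum of the branch outputs; batch normalization, activations, and multi-channel kernels of the real module are omitted.

```python
from typing import List, Sequence

Map = List[List[float]]     # one channel, H x W
Kernel = List[List[float]]  # 3 x 3


def conv3x3(x: Map, k: Kernel, dilation: int = 1) -> Map:
    """Stride-1 3x3 convolution (cross-correlation) with zero padding = dilation.

    Padding equal to the dilation rate keeps the output the same size as the input.
    """
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(3):
                for b in range(3):
                    r, c = i + (a - 1) * dilation, j + (b - 1) * dilation
                    if 0 <= r < h and 0 <= c < w:  # zero padding outside the map
                        acc += k[a][b] * x[r][c]
            out[i][j] = acc
    return out


def dwr_block(x: Map, k_init: Kernel, k_branch: Kernel,
              fuse_weights: Sequence[float] = (1 / 3, 1 / 3, 1 / 3),
              dilations: Sequence[int] = (1, 3, 5)) -> Map:
    """Simplified single-channel DWR: 3x3 conv, dilated branches, 1x1 fusion, residual."""
    h, w = len(x), len(x[0])
    y = conv3x3(x, k_init)                                   # step 1: initial features
    branches = [conv3x3(y, k_branch, d) for d in dilations]  # step 2: multirate context
    # Step 3: concatenating the branches and applying a 1x1 conv reduces, for a
    # single output channel, to a weighted sum over the branch outputs.
    fused = [[sum(wt * br[i][j] for wt, br in zip(fuse_weights, branches))
              for j in range(w)] for i in range(h)]
    return [[x[i][j] + fused[i][j] for j in range(w)] for i in range(h)]  # residual
```

With all-zero branch kernels the block returns its input unchanged, which shows the role of the residual path; with identity kernels every branch reproduces the input and the output becomes twice the input, while the spatial size stays the same for every dilation rate.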

The road damages we captured vary considerably in scale and shape. DWR expands the receptive field without reducing the spatial size of the feature map, thereby enhancing multiscale feature extraction. Consequently, integrating the C2f-DWR module into the backbone enables the extraction of more comprehensive feature information, allowing local fine-grained features captured with small receptive fields to be fused and to interact with global coarse-grained features captured with large receptive fields.
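The receptive-field argument can be checked with simple arithmetic. The sketch below assumes stride-1 3 × 3 kernels, as in the DWR description, and uses the standard formulas for the span of a dilated kernel and for convolution output size; it counts only the DWR layers, not the backbone layers before them.

```python
from typing import Iterable, Tuple


def effective_kernel(k: int, d: int) -> int:
    """Span covered by a k x k kernel with dilation d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)


def output_size(n: int, k: int, d: int, stride: int = 1, padding: int = 0) -> int:
    """Standard convolution output size along one spatial axis."""
    return (n + 2 * padding - d * (k - 1) - 1) // stride + 1


def stacked_receptive_field(layers: Iterable[Tuple[int, int]]) -> int:
    """Receptive field of stride-1 (kernel, dilation) layers applied in sequence."""
    return 1 + sum(effective_kernel(k, d) - 1 for k, d in layers)


if __name__ == "__main__":
    for d in (1, 3, 5):
        print(d, effective_kernel(3, d),
              stacked_receptive_field([(3, 1), (3, d)]),
              output_size(80, 3, d, padding=d))
```

With dilation rates 1, 3, and 5, a single 3 × 3 kernel spans 3, 7, and 11 pixels; preceded by the initial 3 × 3 convolution, the three branches see 5 × 5, 9 × 9, and 13 × 13 regions, while padding equal to the dilation rate keeps an 80 × 80 map at 80 × 80 (without padding, the d = 5 branch would shrink it to 70 × 70).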

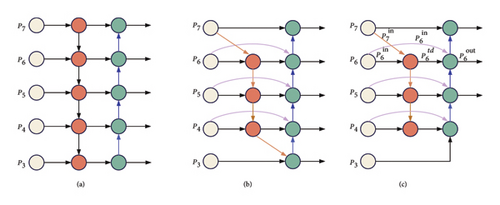

2.3. Improved Feature Fusion Network SBiFPN

The feature fusion network of YOLOv8 combines a feature pyramid network (FPN) with a path aggregation network (PAN), as shown in Figure 3(a). PANet [30] achieves bidirectional feature fusion through upsampling and downsampling, which may cause feature information loss or redundancy. BiFPN [31] extends PAN with more elaborate bidirectional cross-scale fusion while eliminating nodes that have only a single input and therefore contribute little to feature fusion. In Figure 3(b), the first node on the right side of P7 is an example of such a node; because its contribution to the fusion network is minimal, removing it has negligible impact on the overall network. Nevertheless, BiFPN itself remains relatively complex.

Since feature fusion primarily occurs at the P4 and P6 levels, and large-scale objects are relatively rare, the P3 level output node shown in Figure 3(c) is removed to enhance small object detection performance, simplify the network, and further reduce computational overhead. Road cracks are typically small, irregularly shaped, and defined by subtle features, relying heavily on detailed information, such as edges and textures, embedded in low-level features. In small object detection, low-level features contain abundant positional information critical for accurate localization and boundary delineation. Directly connecting low-level P3 features to the high-level output allows detailed information from the lower layers to be efficiently propagated to the final output, preserving fine-grained features critical for effective detection.

The optimized feature fusion network fuses multiscale positional and semantic information with different weights and removes redundant feature information. As a result, it improves the detection precision for small objects and mitigates the information loss and redundancy of the traditional feature pyramid structure.
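Weighted fusion of this kind can be sketched with the fast normalized fusion rule of the original BiFPN [31], $O = \sum_i w_i I_i / (\epsilon + \sum_j w_j)$, where a ReLU keeps every $w_i \ge 0$. Using this rule for SBiFPN is an assumption, since the text does not state the exact weighting. Feature maps are flattened to equal-length lists of floats, the resizing that brings inputs to a common scale is omitted, and the weights in the example are hypothetical.

```python
from typing import List, Sequence


def fast_normalized_fusion(inputs: Sequence[List[float]],
                           raw_weights: Sequence[float],
                           eps: float = 1e-4) -> List[float]:
    """Fuse same-shape feature maps with learnable, non-negative, normalized weights."""
    if len(inputs) != len(raw_weights):
        raise ValueError("one weight per input is required")
    w = [max(0.0, rw) for rw in raw_weights]  # ReLU keeps each weight >= 0
    norm = eps + sum(w)                       # eps avoids division by zero
    size = len(inputs[0])
    return [sum(wi * x[k] for wi, x in zip(w, inputs)) / norm for k in range(size)]


if __name__ == "__main__":
    # e.g. a same-level input fused with a resized input from an adjacent level
    print(fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
```

Because the weights are normalized, each fused value is approximately a convex combination of the inputs, and an input whose learned weight drops to zero or below is effectively ignored.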

2.4. Dyhead

Images captured in real scenes contain small objects affected by uneven illumination and shadow occlusion, which are difficult to detect. To improve detection precision, the Dyhead [32] detection head, based on self-attention mechanisms, is introduced. Dyhead combines a scale-aware attention module, a spatial-aware attention module, and a task-aware attention module; together, these three modules enhance the feature representation ability of the detection head and improve the detection precision for small objects.
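For reference, the original Dyhead formulation [32] applies the three attentions in sequence to a feature tensor $\mathcal{F} \in \mathbb{R}^{L \times S \times C}$, where $L$ is the number of feature levels, $S = H \times W$ the number of spatial positions, and $C$ the number of channels. The scale-aware attention $\pi_L$ uses a linear function $f$ (implemented as a 1 × 1 convolution) and the hard sigmoid $\sigma$; the spatial-aware attention $\pi_S$ is built on deformable convolution and the task-aware attention $\pi_C$ on a dynamic-ReLU-style channel gate, which are not expanded here. The text does not state whether LBN-YOLO modifies any of these terms.

$$
W(\mathcal{F}) = \pi_C\Big(\pi_S\big(\pi_L(\mathcal{F})\cdot\mathcal{F}\big)\cdot\mathcal{F}\Big)\cdot\mathcal{F},
\qquad
\pi_L(\mathcal{F})\cdot\mathcal{F} = \sigma\!\left(f\!\left(\frac{1}{SC}\sum_{S,C}\mathcal{F}\right)\right)\cdot\mathcal{F},
\qquad
\sigma(x) = \max\!\left(0, \min\!\left(1, \frac{x+1}{2}\right)\right)
$$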

The structure of Dyhead is shown in Figure 4. A single Dyhead block consists of the three attention modules applied in series, and multiple Dyhead blocks can be stacked.
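The first stage of such a block, the scale-aware attention, is simple enough to sketch in plain Python: each feature level is averaged over its spatial positions and channels, passed through a linear function and a hard sigmoid, and the resulting scalar gate rescales that level. This is a minimal sketch of the Dyhead formulation, not the paper's code; the scalar `weight` and `bias` are hypothetical stand-ins for the learned 1 × 1 convolution, all levels are assumed to be already resized to a common spatial size, and the spatial- and task-aware stages are omitted.

```python
from typing import List

# features[l][s][c]: L levels x S spatial positions x C channels, with all levels
# already resized to a common spatial size.
Tensor3 = List[List[List[float]]]


def hard_sigmoid(x: float) -> float:
    """sigma(x) = max(0, min(1, (x + 1) / 2))."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def scale_aware_attention(features: Tensor3, weight: float, bias: float) -> Tensor3:
    """pi_L: rescale each level by a gate computed from that level's global mean.

    `weight` and `bias` are hypothetical stand-ins for the learned function f.
    """
    out = []
    for level in features:
        n = len(level) * len(level[0])                        # S * C
        mean = sum(sum(position) for position in level) / n
        gate = hard_sigmoid(weight * mean + bias)
        out.append([[gate * v for v in position] for position in level])
    return out
```

A level whose gated mean is high passes through unchanged (gate 1), a strongly negative one is suppressed (gate 0), and a level with zero mean is halved; in a trained head, this lets the detector emphasize the feature levels that suit each object scale.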

3. Experiment and Analysis

This section provides an overview of the experimental setup, evaluation methods, and analysis for model training, including data preprocessing, evaluation metrics, ablation experiments for individual modules, performance comparison with other algorithms, and validation results on different datasets.

3.1. Dataset and Preprocessing

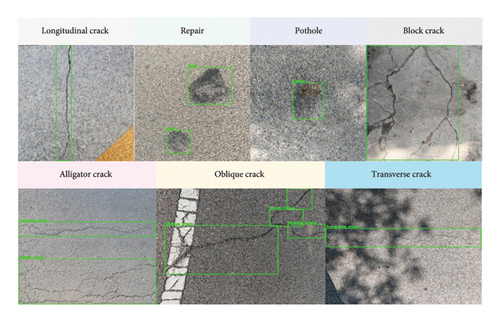

Few publicly available road damage datasets contain complex environmental disturbances, so we captured 1300 road damage images of 3000 × 4000 pixels using a smartphone, covering various road surfaces, including asphalt concrete, cement concrete, and masonry pavement. We then filtered the acquired data to eliminate excessively blurred, poorly lit, and overexposed images, ensuring high image quality. The filtered dataset contains seven types of road damage: transverse crack (TC), longitudinal crack (LC), oblique crack (OC), alligator crack (AC), block crack (BC), pothole (Po), and repair (Re); examples are shown in Figure 5. Because repairs are relatively uncommon in real road scenes and few such images were collected, high-quality repair-type images were selected from the public RDD2020 dataset [34] to augment the self-constructed dataset. The merged dataset is divided into training, validation, and test sets in a 7:2:1 ratio.
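A 7:2:1 split that keeps each damage type's proportion consistent across the three subsets (as stated for Table 1) can be sketched as a per-class split. This is an illustration under a simplifying assumption: each image is assigned a single hypothetical "primary" class, whereas real images may contain several damage types, and the text does not describe how such images were handled.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_721(primary_class: Dict[str, str], seed: int = 0
              ) -> Tuple[List[str], List[str], List[str]]:
    """Split image IDs 7:2:1 within each class so class proportions are preserved.

    `primary_class` maps an image ID to one class label (a simplifying assumption).
    """
    by_class: Dict[str, List[str]] = defaultdict(list)
    for image_id, cls in primary_class.items():
        by_class[cls].append(image_id)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train: List[str] = []
    val: List[str] = []
    test: List[str] = []
    for cls in sorted(by_class):
        ids = sorted(by_class[cls])
        rng.shuffle(ids)
        n_train = round(0.7 * len(ids))
        n_val = round(0.2 * len(ids))
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        test += ids[n_train + n_val:]  # the remainder (about 10%) goes to test
    return train, val, test
```

For 100 images of one class and 50 of another, this yields 70 + 35 training, 20 + 10 validation, and 10 + 5 test images, with no image appearing in more than one subset.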

The richness of a dataset is closely related to detection effectiveness; training on data that covers a variety of complex environments yields better results and stronger robustness [35] and helps prevent overfitting. Therefore, this paper employs both offline and online data augmentation. Online augmentation uses Mosaic, while offline augmentation includes common methods such as random flipping and color perturbation. Augmentation enriches the positional and color information of the damage images, and the augmented dataset contains about 3000 road damage images in total. The sample sizes for each road damage type are shown in Table 1. The proportion of each damage type in the training, validation, and test sets is consistent with the overall dataset distribution.

| Type of road damage | Total number | Training set | Validation set | Test set |
|---|---|---|---|---|
| Transverse crack | 1221 | 917 | 225 | 79 |
| Longitudinal crack | 1608 | 1108 | 335 | 165 |
| Oblique crack | 2059 | 1538 | 329 | 192 |
| Block crack | 377 | 243 | 80 | 54 |
| Alligator crack | 447 | 333 | 77 | 37 |
| Pothole | 341 | 251 | 62 | 28 |
| Repair | 602 | 438 | 126 | 38 |
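Offline flipping, one of the augmentations listed above, must transform the box labels together with the pixels. The sketch below assumes YOLO-format labels (class index plus box centre, width, and height, all normalized to [0, 1]) and a grayscale image stored as rows, for brevity; the flip probability is a hypothetical setting. A horizontal flip maps each box centre x_c to 1 - x_c (a vertical flip would map y_c to 1 - y_c), while color perturbation changes only pixel values and leaves the labels untouched.

```python
import random
from typing import List, Optional, Tuple

Image = List[List[int]]                         # grayscale rows, for brevity
Label = Tuple[int, float, float, float, float]  # (class_id, x_c, y_c, w, h), normalized


def hflip(image: Image, labels: List[Label]) -> Tuple[Image, List[Label]]:
    """Mirror an image left-right and move each box centre to 1 - x_c."""
    flipped = [row[::-1] for row in image]
    new_labels = [(c, 1.0 - xc, yc, w, h) for c, xc, yc, w, h in labels]
    return flipped, new_labels


def random_hflip(image: Image, labels: List[Label], p: float = 0.5,
                 rng: Optional[random.Random] = None) -> Tuple[Image, List[Label]]:
    """Apply hflip with probability p (the probability is a hypothetical setting)."""
    rng = rng or random.Random()
    return hflip(image, labels) if rng.random() < p else (image, labels)
```

Box width and height are unchanged by a flip, so only the centre coordinate needs updating.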

Training on 3000 × 4000 pixel images is time consuming and computationally demanding, while directly cropping the images may discard critical damage information. Therefore, an input size that balances detection performance and training efficiency must be chosen. To this end, a comparative experiment was conducted with input sizes of 320 × 320, 640 × 640, and 1280 × 1280 while keeping the other hyperparameters constant; the results are shown in Table 2. An input size of 640 × 640 provides the best balance between detection accuracy and computational efficiency. A smaller input size (320 × 320) reduces detection accuracy, whereas a larger size (1280 × 1280) does not improve on 640 × 640 in Table 2 (64.5% versus 74.0% mAP@0.5) and requires so much memory that, because of GPU limitations, the batch size had to be reduced from 32 to 8, which prolonged training time (9.2 h versus 3.4 h). Furthermore, previous research [36] indicates that increasing image resolution does not substantially enhance detection accuracy. Therefore, images are resized to 640 × 640 for the YOLOv8 model.

| Image size | mAP@0.5 (%) | mAP@0.5:0.95 (%) | GPU memory usage (GB) | Training time (h) |
|---|---|---|---|---|
| 320 × 320 | 63.4 | 44.9 | 2.5 | 1.4 |
| 640 × 640 | 74.0 | 51.9 | 9.2 | 3.4 |
| 1280 × 1280 | 64.5 | 38.4 | 9.2 | 9.2 |
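The text states only that images are resized to 640 × 640; whether the aspect ratio is preserved is not specified. The sketch below assumes letterboxing, a resize that preserves the aspect ratio followed by symmetric padding, which is commonly used in YOLO pipelines; the function names are hypothetical.

```python
from typing import Tuple


def letterbox_params(width: int, height: int, target: int = 640
                     ) -> Tuple[float, Tuple[int, int], Tuple[int, int]]:
    """Scale factor, resized (w, h), and (left, top) padding for a square letterbox."""
    scale = min(target / width, target / height)  # fit the longer side
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h
    return scale, (new_w, new_h), (pad_w // 2, pad_h // 2)


def to_letterbox(x: float, y: float, scale: float,
                 pad: Tuple[int, int]) -> Tuple[float, float]:
    """Map a pixel coordinate in the original image into the letterboxed image."""
    return x * scale + pad[0], y * scale + pad[1]


if __name__ == "__main__":
    print(letterbox_params(4000, 3000))  # landscape smartphone image
    print(letterbox_params(3000, 4000))  # portrait smartphone image
```

For a 4000 × 3000 landscape image the scale is 0.16, giving a 640 × 480 image with 80 pixels of padding above and below; the image centre (2000, 1500) maps to (320, 320). A portrait image is padded left and right instead.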

To ensure the accuracy and reliability of the annotations, the collected road damage image dataset is manually annotated by experts using LabelImg. Detailed annotation guidelines are established based on international standards, with a strict verification procedure. Randomly selected annotated samples are validated to enhance dataset consistency and credibility.
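LabelImg can save annotations either as Pascal VOC XML (pixel corner coordinates) or directly in YOLO text format; the text does not say which was used. If corner-coordinate boxes are exported, they must be converted to YOLO's normalized (x_c, y_c, w, h) format, and a bounds check such as the one below is one simple automated step that could complement the manual verification described above. The class index in the example is hypothetical.

```python
from typing import Tuple


def voc_to_yolo(box: Tuple[float, float, float, float], img_w: int, img_h: int
                ) -> Tuple[float, float, float, float]:
    """(x_min, y_min, x_max, y_max) in pixels -> normalized (x_c, y_c, w, h)."""
    x_min, y_min, x_max, y_max = box
    # Reject empty or out-of-image boxes before they reach the training set.
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"invalid box {box} for a {img_w}x{img_h} image")
    return ((x_min + x_max) / 2 / img_w, (y_min + y_max) / 2 / img_h,
            (x_max - x_min) / img_w, (y_max - y_min) / img_h)


def yolo_line(class_id: int, box: Tuple[float, float, float, float],
              img_w: int, img_h: int) -> str:
    """One line of a YOLO label file: class index and normalized box."""
    xc, yc, w, h = voc_to_yolo(box, img_w, img_h)
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    print(yolo_line(3, (1000, 750, 3000, 2250), 4000, 3000))
```

A box covering the central half of a 4000 × 3000 image in each dimension becomes the line `3 0.500000 0.500000 0.500000 0.500000`.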

3.2. Experimental Parameter Setting and Model Training

Model training and testing were conducted on an Intel i5-12400F CPU and an NVIDIA GeForce RTX 4060 Ti GPU. The experimental framework is PyTorch 1.13.1 with CUDA 11.3, and the algorithms are implemented in Python 3.9.18.

The hyperparameter settings used during training are shown in Table 3. Given the available computational resources, the batch size is set to 32 and the model is trained for 400 epochs with stochastic gradient descent (SGD). An early stopping strategy is used to prevent overfitting.

| Hyperparameters | Value |
|---|---|
| Batch size | 32 |
| Epoch | 400 |
| Initial learning rate | 0.01 |
| Warmup | 3 |
| Optimizer | SGD |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Training mechanism | EarlyStopping |
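The training mechanism in Table 3 can be illustrated with a simplified plain-Python stand-in; it is not the framework's implementation. The patience value is not reported in the text and is chosen arbitrarily here, "fitness" stands for a validation metric such as mAP, and the warmup is assumed to be a linear learning-rate ramp over the first 3 epochs up to the initial learning rate of 0.01 (interpreting the Warmup entry as epochs). Frameworks typically also decay the learning rate after warmup, which is omitted.

```python
class EarlyStopping:
    """Stop when the validation fitness has not improved for `patience` epochs."""

    def __init__(self, patience: int = 50):  # patience value is hypothetical
        self.patience = patience
        self.best_fitness = float("-inf")
        self.best_epoch = 0

    def step(self, epoch: int, fitness: float) -> bool:
        """Record this epoch's fitness; return True if training should stop."""
        if fitness > self.best_fitness:
            self.best_fitness = fitness
            self.best_epoch = epoch
        return epoch - self.best_epoch >= self.patience


def warmup_lr(epoch_float: float, lr0: float = 0.01, warmup_epochs: float = 3.0) -> float:
    """Linear warmup from 0 to lr0 over the first warmup_epochs (a simplification)."""
    if epoch_float < warmup_epochs:
        return lr0 * epoch_float / warmup_epochs
    return lr0
```

With a patience of 3, a run whose validation fitness peaks at epoch 1 stops at epoch 4, well before the 400-epoch budget.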

3.3. Evaluation Indicators

This paper uses common object detection metrics to evaluate the road damage detection model: precision (P), recall (R), F1-score (F1), mAP@0.5, mAP@0.5:0.95, number of parameters (Para), and frames per second (FPS). A prediction is counted as a true positive (TP) when its IoU with a ground-truth box of the same class exceeds a predefined threshold; otherwise, it is a false positive (FP). A ground-truth object that no prediction matches is a false negative (FN).
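The IoU criterion described above can be sketched as follows, together with a greedy matching step that turns predictions into TP and FP flags for one class in one image. Visiting predictions in descending confidence and letting each ground-truth box be matched at most once is the usual convention in detection evaluation, assumed here rather than taken from the paper; the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_predictions(preds: List[Tuple[Box, float]], gts: List[Box],
                      thr: float = 0.5) -> Tuple[List[bool], int]:
    """Greedy matching for one class in one image.

    Returns a TP flag per prediction (sorted by descending confidence) and the
    number of ground truths left unmatched (false negatives).
    """
    used = [False] * len(gts)
    flags = []
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if not used[j]:
                v = iou(box, gt)
                if v > best_iou:  # IoU must exceed the threshold
                    best_j, best_iou = j, v
        if best_j >= 0:
            used[best_j] = True
        flags.append(best_j >= 0)
    return flags, used.count(False)
```

A second, duplicate box on an already matched crack becomes a false positive, which is how the redundant detection boxes discussed in Section 3.7 lower precision.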

FPS is computed as $\mathrm{FPS} = N_{image} / T_{total}$, where $T_{total}$ is the sum of the inference, preprocessing, and nonmaximum suppression (NMS) times, and $N_{image}$ is the total number of images.
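Using the standard definitions P = TP / (TP + FP), R = TP / (TP + FN), F1 = 2PR / (P + R), AP as the area under one class's precision-recall curve, mAP as the mean AP over the seven damage classes (mAP@0.5:0.95 additionally averages over IoU thresholds 0.50, 0.55, ..., 0.95), and FPS = N_image / T_total, a minimal plain-Python sketch of these metrics is given below. The all-point interpolation used for AP is one common choice; training frameworks may instead sample the curve at a fixed set of recall points, so values can differ slightly.

```python
from typing import Sequence, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """P = TP/(TP+FP), R = TP/(TP+FN), F1 = 2PR/(P+R); 0 when undefined."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(tp_flags: Sequence[bool], n_gt: int) -> float:
    """All-point interpolated AP from TP flags sorted by descending confidence."""
    if n_gt == 0:
        return 0.0
    recalls, precisions = [0.0], [1.0]
    tp = fp = 0
    for is_tp in tp_flags:
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((recalls[i] - recalls[i - 1]) * precisions[i]
               for i in range(1, len(recalls)))


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP: the mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


def fps(n_images: int, t_total_s: float) -> float:
    """FPS = N_image / T_total, with T_total = preprocess + inference + NMS time."""
    return n_images / t_total_s
```

For example, three ranked predictions flagged TP, FP, TP against two ground-truth boxes give AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.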

3.4. Model Validation and Results

The model was trained on the self-built dataset, and the detection results for the seven road damage types are shown in Table 4, where "Total" denotes the overall results across all categories. Table 4 shows that LBN-YOLO increases the overall mAP@0.5 by 4.3 percentage points, from 69.7% to 74.0%.

| Model | Class | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|---|
| YOLOv8n | Total | 69.9 | 63.7 | 66.7 | 69.7 | 43.7 |
| YOLOv8n | Alligator crack | 84.4 | 76.7 | 80.4 | 83.9 | 49.0 |
| YOLOv8n | Block crack | 31.4 | 100.0 | 47.8 | 99.5 | 84.5 |
| YOLOv8n | Longitudinal crack | 68.2 | 53.2 | 59.8 | 59.1 | 31.6 |
| YOLOv8n | Oblique crack | 67.1 | 47.9 | 55.9 | 53.1 | 31.9 |
| YOLOv8n | Pothole | 91.7 | 41.7 | 57.3 | 48.2 | 15.7 |
| YOLOv8n | Repair | 71.5 | 81.1 | 76.0 | 77.1 | 59.3 |
| YOLOv8n | Transverse crack | 75.3 | 59.1 | 66.2 | 68.0 | 34.2 |
| LBN-YOLO | Total | 75.1 | 65.9 | 70.6 | 74.0 | 51.9 |
| LBN-YOLO | Alligator crack | 89.8 | 73.3 | 80.7 | 87.4 | 55.2 |
| LBN-YOLO | Block crack | 35.9 | 100.0 | 52.8 | 99.5 | 94.5 |
| LBN-YOLO | Longitudinal crack | 72.0 | 51.6 | 60.1 | 60.0 | 37.3 |
| LBN-YOLO | Oblique crack | 69.8 | 45.6 | 55.2 | 55.5 | 32.7 |
| LBN-YOLO | Pothole | 92.9 | 41.7 | 57.6 | 62.5 | 32.1 |
| LBN-YOLO | Repair | 82.0 | 86.2 | 84.1 | 83.8 | 71.9 |
| LBN-YOLO | Transverse crack | 83.4 | 59.1 | 69.2 | 69.5 | 39.9 |

With the original YOLOv8n model, the detection results for potholes are notably low, with an mAP@0.5 of only 48.2%, owing to the scarcity of pothole images and the varying sizes and shapes of potholes. LBN-YOLO incorporates the C2f-DWR module and SBiFPN, which extract and fuse the irregular features of potholes more effectively across multiple scales. With LBN-YOLO, the mAP@0.5 for potholes increases by 14.3 percentage points and the mAP@0.5:0.95 by 16.4 percentage points, the largest improvements among all damage categories. This indicates that the proposed model performs well on the more challenging damage categories, although the recall for potholes remains unchanged at 41.7%.

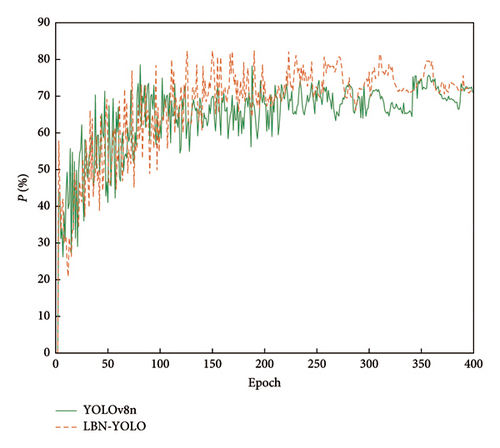

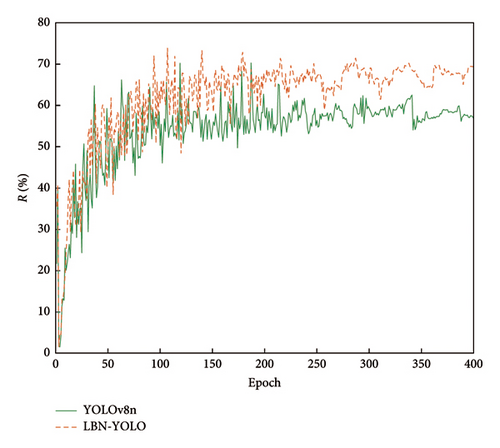

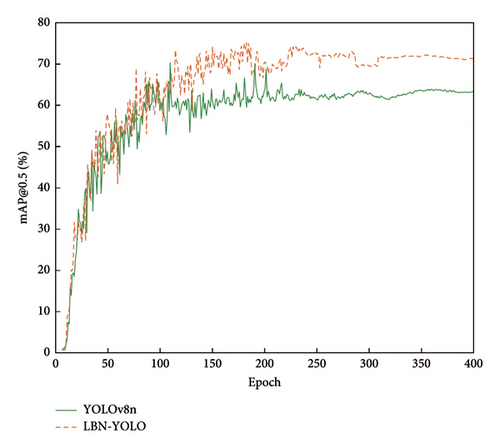

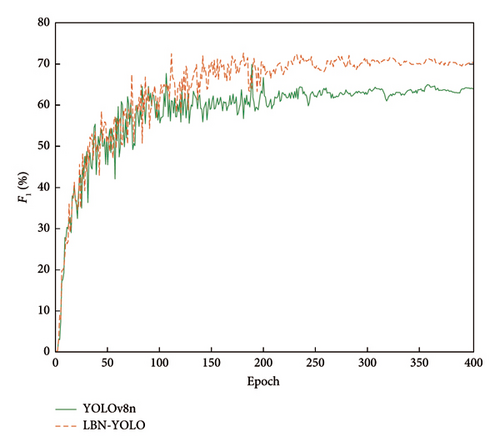

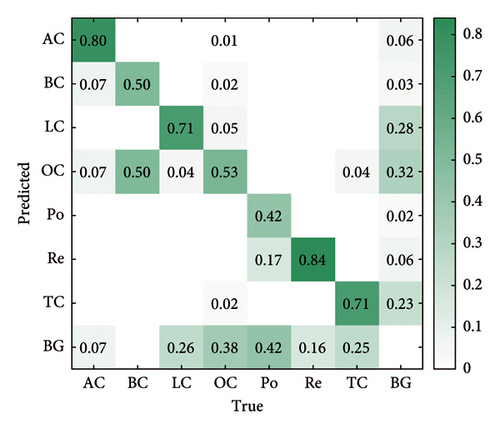

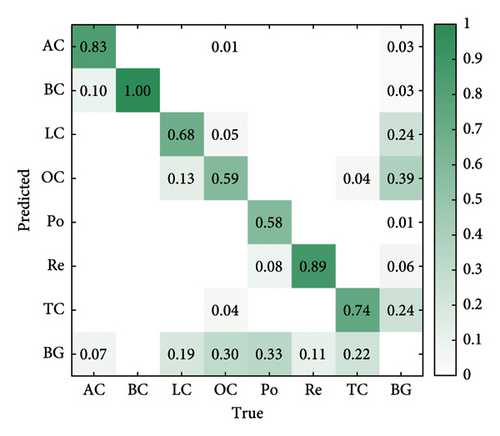

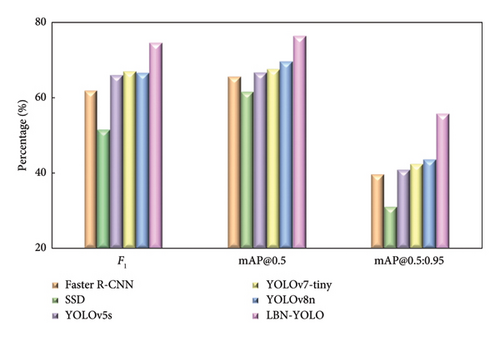

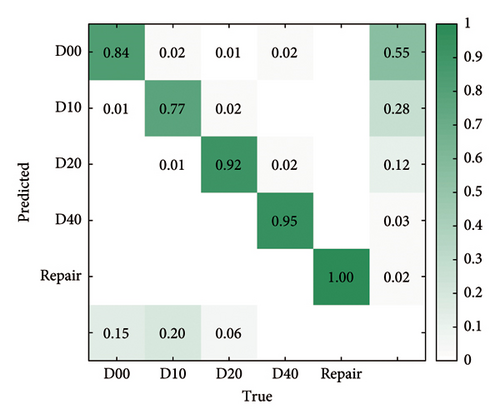

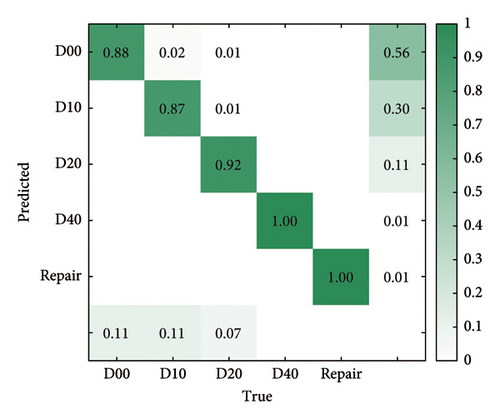

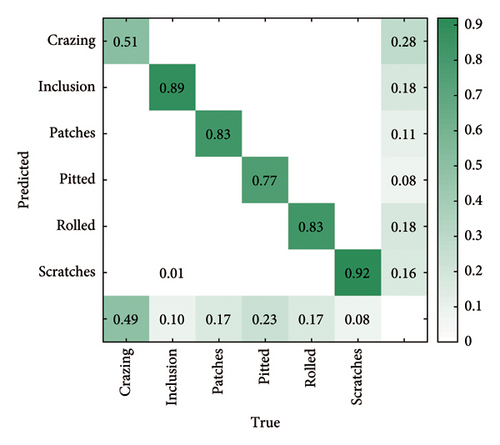

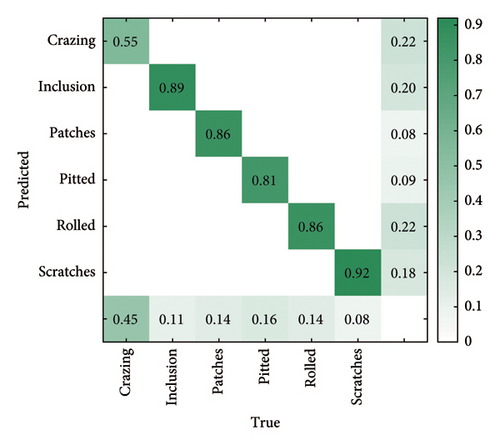

Compared with the original YOLOv8n model, LBN-YOLO improves P, R, and F1, as visualized in Figures 6 and 7. Figures 6(a) and 6(d) show that, over 400 training epochs, the improved LBN-YOLO model outperforms the original model. Figure 7 compares the confusion matrices of the two models, where the horizontal axis represents the actual damage categories and the vertical axis represents the predicted categories. The classification precision in Figure 7(b) is higher than that in Figure 7(a), indicating that LBN-YOLO detects road damage more accurately than YOLOv8n.
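The axis convention described above (actual classes on the horizontal axis, predicted classes on the vertical axis) corresponds to a matrix whose rows are predicted classes and whose columns are actual classes. The sketch below builds such a matrix from already matched (actual, predicted) class pairs, with an extra "background" index for missed objects and false detections, as detection confusion matrices commonly include; how the pairs are matched (see the IoU criterion in Section 3.3) and whether Figure 7 is normalized are not specified here, so both are left out.

```python
from typing import List, Sequence


def confusion_matrix(actual: Sequence[int], predicted: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Rows = predicted class, columns = actual class; index n_classes = background."""
    size = n_classes + 1
    m = [[0] * size for _ in range(size)]
    for a, p in zip(actual, predicted):
        m[p][a] += 1
    return m


def per_class_precision(m: List[List[int]], cls: int) -> float:
    """Correct predictions of cls divided by all predictions of cls (its row)."""
    row_total = sum(m[cls])
    return m[cls][cls] / row_total if row_total else 0.0


def per_class_recall(m: List[List[int]], cls: int) -> float:
    """Correct predictions of cls divided by all actual cls objects (its column)."""
    col_total = sum(row[cls] for row in m)
    return m[cls][cls] / col_total if col_total else 0.0
```

With this orientation, a diagonal that is more dominant within each row corresponds to higher per-class precision, while a more dominant diagonal within each column corresponds to higher recall.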

3.5. Ablation Experiment

To verify the effectiveness of several modules proposed in this paper, ablation experiments are designed. Accordingly, the proposed C2f-DWR, SBiFPN, and Dyhead are added into the model for the ablation test. The experiments are conducted on the self-constructed dataset presented in Section 3.1, and the results are shown in Table 5.

| YOLOv8n | C2f-DWR | SBiFPN | Dyhead | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Para (M) |
|---|---|---|---|---|---|---|---|
| √ | | | | 67.7 | 69.7 | 43.7 | 3.0 |
| √ | √ | | | 69.4 | 71.7 | 46.1 | 2.9 |
| √ | | √ | | 65.7 | 69.0 | 43.1 | 2.0 |
| √ | | | √ | 70.0 | 71.2 | 48.9 | 3.4 |
| √ | √ | √ | | 69.7 | 72.8 | 51.1 | 1.9 |
| √ | √ | | √ | 69.2 | 72.1 | 51.0 | 3.4 |
| √ | | √ | √ | 68.9 | 71.9 | 49.5 | 2.5 |
| √ | √ | √ | √ | 70.6 | 74.0 | 51.9 | 2.4 |

The first row of Table 5 gives the baseline results of the original YOLOv8n model. Adding the C2f-DWR module alone increases mAP@0.5 by 2.0 percentage points. Adding SBiFPN alone makes the model lighter, reducing the parameter count by about 34% (from 3.0 M to 2.0 M) while keeping precision comparable to YOLOv8n. Combining two modules yields better results than using either module individually. Notably, the combination of C2f-DWR and SBiFPN increases mAP@0.5 from 69.7% to 72.8%, an improvement of 3.1 percentage points, while also reducing the parameter count to 1.9 M. Incorporating all three modules gives the best performance: compared with the original model, F1 increases by 2.9, mAP@0.5 by 4.3, and mAP@0.5:0.95 by 8.2 percentage points, with a 20% reduction in parameter count. These results demonstrate the advantages of LBN-YOLO in detection precision and parameter count (detection speed is compared in Section 3.6), with each module contributing positively.

To further verify the effectiveness of SBiFPN and its impact on road damage detection, this study compares the original BiFPN with SBiFPN, as shown in Table 6. Adding the original BiFPN to YOLOv8n improves all performance metrics, owing to BiFPN's strong multiscale feature fusion capability. Adding SBiFPN to YOLOv8n reduces the number of fusion nodes and simplifies the feature fusion network while retaining efficient feature fusion, cutting parameters by approximately 34% at the cost of only a slight decline in the evaluation metrics. When combined with the C2f-DWR and Dyhead modules, SBiFPN outperforms BiFPN in mAP, with mAP@0.5 higher by 1.5 percentage points and mAP@0.5:0.95 higher by 4.7 percentage points. Thus, SBiFPN reduces model complexity and parameter count while achieving better detection performance.

| Models | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Para (M) |
|---|---|---|---|---|
| YOLOv8n | 66.7 | 69.7 | 43.7 | 3.0 |
| YOLOv8n + BiFPN | 67.4 | 70.1 | 44.3 | 3.1 |
| YOLOv8n + SBiFPN | 65.7 | 69.0 | 43.1 | 2.0 |
| YOLOv8n + C2f-DWR + Dyhead + BiFPN | 70.2 | 72.5 | 47.2 | 3.4 |
| LBN-YOLO (ours) | 70.6 | 74.0 | 51.9 | 2.4 |

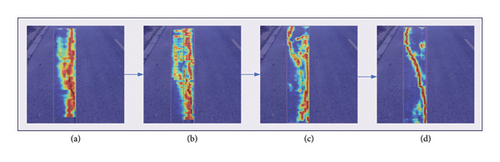

To visualize the image regions the detection model focuses on when making predictions, this study uses heatmap visualization, generating heatmaps from selected layers of the model. Figure 8 highlights the image regions the model relies on when identifying cracks. As the improvement modules are added one by one, the model's attention becomes progressively more concentrated on the crack regions. In particular, in Figure 8(d), with all improvement modules added, the model focuses its attention almost exclusively on the cracks.

3.6. Comparing Different Object-Detection Algorithms

Previous road damage detection studies have used models such as Faster R-CNN [11], SSD-MobileNet [6], YOLOv5 [37], and YOLOv7 [38]. To evaluate the detection performance of LBN-YOLO, comparative experiments were conducted between these models and LBN-YOLO. The results are shown in Table 7 and Figure 9; all inference speeds were measured on the same RTX 4060 Ti GPU.

| Models | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Para (M) | FPS (frames/s) |
|---|---|---|---|---|---|
| Faster R-CNN | 62.0 | 65.7 | 39.7 | 28.3 | 16.2 |
| SSD | 51.6 | 61.7 | 31.1 | 4.3 | 83.8 |
| YOLOv5s | 66.1 | 66.8 | 41.1 | 7.0 | 85.1 |
| YOLOv7-tiny | 67.1 | 67.7 | 42.5 | 6.0 | 61.7 |
| YOLOv8n | 66.7 | 69.7 | 43.7 | 3.0 | 74.5 |
| LBN-YOLO (ours) | 70.6 | 74.0 | 51.9 | 2.4 | 87.7 |

LBN-YOLO outperforms YOLOv5s, YOLOv7-tiny, YOLOv8n, SSD, and Faster R-CNN in detecting pavement damage. Its mAP@0.5 reaches 74.0%, which is 6.3 percentage points higher than that of YOLOv7-tiny, the strongest of the previously used road damage detectors (Faster R-CNN, SSD, YOLOv5s, and YOLOv7-tiny), and 4.3 percentage points higher than that of YOLOv8n. As a two-stage detector, Faster R-CNN runs more slowly (16.2 FPS) and has far more parameters (28.3 M). In contrast, adopting SBiFPN as the feature fusion network gives LBN-YOLO 20% fewer parameters than YOLOv8n, yielding a lightweight design. Its inference speed of 87.7 FPS is also the highest among the compared models. These results show that LBN-YOLO offers the best trade-off between detection speed and precision among the compared models.
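The comparisons above mix two kinds of percentages: mAP gains are absolute differences (percentage points), while the parameter saving is a relative reduction. The short Python check below recomputes both from Table 7; the dictionary values are copied from the table and the helper name is illustrative.

```python
# mAP@0.5 (%) and parameter count (M) for each baseline, copied from Table 7
BASELINES = {
    "Faster R-CNN": (65.7, 28.3),
    "SSD": (61.7, 4.3),
    "YOLOv5s": (66.8, 7.0),
    "YOLOv7-tiny": (67.7, 6.0),
    "YOLOv8n": (69.7, 3.0),
}
LBN_YOLO = (74.0, 2.4)  # LBN-YOLO row of Table 7

def compare(baselines, ours):
    """Return {model: (mAP@0.5 gain in percentage points, % fewer parameters)}."""
    our_map, our_params = ours
    return {
        name: (round(our_map - m, 1), round(100 * (p - our_params) / p, 1))
        for name, (m, p) in baselines.items()
    }

gains = compare(BASELINES, LBN_YOLO)
for name, (gain_pp, fewer_params) in gains.items():
    print(f"{name}: +{gain_pp} pp mAP@0.5, {fewer_params}% fewer parameters")
```

The YOLOv8n row gives +4.3 points of mAP@0.5 and a 20% parameter reduction, matching the figures quoted in the text.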

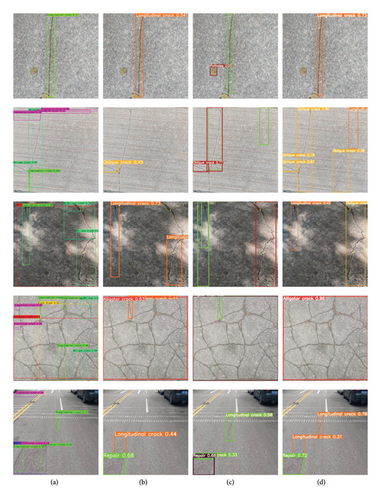

3.7. Visualization of Test Results

After training, the detection results are visualized to verify the model’s detection performance and to compare the strengths and weaknesses of the models more intuitively. Figure 10 compares the visualized results.

In the first set of images in Figure 10, YOLOv7-tiny misidentifies leaves as pothole damage, whereas LBN-YOLO makes no such error. Because road damage typically exhibits spatial continuity, a model that cannot adequately extract global information is prone to false or duplicate detections. In the second set, Faster R-CNN, YOLOv5s, and YOLOv7-tiny fail to detect the fine cracks, whereas LBN-YOLO detects all of them, indicating a stronger ability to extract multiscale contextual information. In the third set, YOLOv5s misses detections because of shadow interference, and Faster R-CNN and YOLOv7-tiny produce multiple boxes for the same damage. LBN-YOLO detects the occluded cracks better, which is attributed to Dyhead’s self-attention mechanism. In the fourth set, the images contain alligator cracks, a rare type of road damage that is difficult to detect. Faster R-CNN fails to fully detect the cracks, while YOLOv5s and YOLOv7-tiny cover the complete cracked area but produce redundant boxes, indicating insufficient extraction of global features. In contrast, LBN-YOLO produces accurate boxes with high confidence. In the fifth set, Faster R-CNN misidentifies road markings as transverse cracks, and YOLOv5s and YOLOv7-tiny miss more damage instances. LBN-YOLO detects all of the road damage in the images.

These results show that LBN-YOLO performs well in road damage detection: it detects damage across various scales and under complex conditions while maintaining high detection precision. With few parameters and a high detection speed, it is well suited to practical road damage detection applications.

3.8. Performance on the China_MotorBike and NEU-DET Datasets

To verify the generalizability of LBN-YOLO, the China_MotorBike subset of the public road damage dataset RDD2022 [39] and the steel surface defect dataset NEU-DET [40] are used for validation, with the same training parameters as in Section 3.2.

The China_MotorBike dataset is a road damage dataset containing five label types. The detection results are presented in Table 8: LBN-YOLO surpasses the baseline YOLOv8n on all five classes and achieves higher overall recall (88.2% vs. 84.5%), that is, a lower miss rate. Figure 11 shows the confusion matrices, illustrating the improved detection performance for each class.

| Class | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|
| YOLOv8n | | | | | |
| Total | 87.6 | 84.5 | 86.0 | 91.3 | 62.8 |
| D00 | 83.2 | 74.5 | 78.6 | 84.9 | 52.7 |
| D10 | 84.7 | 62.2 | 71.7 | 80.1 | 46.7 |
| D20 | 85.8 | 88.1 | 86.9 | 93.5 | 59.9 |
| D40 | 89.9 | 97.5 | 93.5 | 98.6 | 60.8 |
| Repair | 94.5 | 100 | 97.2 | 99.5 | 93.9 |
| LBN-YOLO | | | | | |
| Total | 90.9 | 88.2 | 89.5 | 92.9 | 66.5 |
| D00 | 86.8 | 80.0 | 83.3 | 87.1 | 60.1 |
| D10 | 87.0 | 71.1 | 78.3 | 83.9 | 49.3 |
| D20 | 88.9 | 89.8 | 89.3 | 94.1 | 66.3 |
| D40 | 92.8 | 100 | 96.3 | 99.5 | 63.1 |
| Repair | 95.5 | 100 | 97.7 | 99.7 | 94.2 |
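The F1 column in Table 8 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The small Python check below recomputes a few rows from the reported P and R values; the row selection and helper name are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given in percent."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (P, R, reported F1) for selected rows of Table 8
ROWS = {
    "YOLOv8n Total": (87.6, 84.5, 86.0),
    "YOLOv8n D00": (83.2, 74.5, 78.6),
    "LBN-YOLO Total": (90.9, 88.2, 89.5),
    "LBN-YOLO D20": (88.9, 89.8, 89.3),
}

recomputed = {name: round(f1_score(p, r), 1) for name, (p, r, _) in ROWS.items()}
for name, value in recomputed.items():
    print(f"{name}: recomputed F1 = {value}, reported = {ROWS[name][2]}")
```

Because F1 is a harmonic mean, it is pulled toward the weaker of P and R, which is why the low-recall D10 class has the lowest F1 for both models.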

The NEU-DET dataset, designed for steel surface defect detection, contains 1800 images with six label types. As shown in Table 9, LBN-YOLO outperforms YOLOv8n in mAP@0.5 and mAP@0.5:0.95 for all six defect types. However, its precision and F1 score for Patches and Pitted_surface decrease slightly, likely because these defects differ markedly in shape from road damage. Nevertheless, LBN-YOLO achieves slightly higher recall, and thus a lower miss rate, for these categories. Figure 12 compares the confusion matrices of the improved and original models.

| Class | P (%) | R (%) | F1 (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|
| YOLOv8n | | | | | |
| Total | 75.5 | 67.4 | 71.2 | 74.9 | 39.8 |
| Crazing | 51.5 | 26.2 | 34.7 | 32.7 | 11.6 |
| Inclusion | 80.6 | 81.0 | 80.8 | 84.8 | 44.6 |
| Patches | 82.7 | 78.1 | 80.3 | 85.2 | 52.7 |
| Pitted_surface | 81.0 | 74.4 | 77.6 | 81.2 | 45.6 |
| Rolled_in_scale | 77.4 | 63.5 | 69.8 | 77.1 | 36.2 |
| Scratches | 79.7 | 81.4 | 80.5 | 88.5 | 48.4 |
| LBN-YOLO | | | | | |
| Total | 73.2 | 73.9 | 73.5 | 77.7 | 44.6 |
| Crazing | 51.6 | 35.5 | 42.1 | 40.6 | 13.8 |
| Inclusion | 81.3 | 83.4 | 82.4 | 84.9 | 45.5 |
| Patches | 80.8 | 79.1 | 79.9 | 87.7 | 58.8 |
| Pitted_surface | 77.1 | 74.6 | 75.8 | 83.4 | 52.6 |
| Rolled_in_scale | 72.8 | 80.8 | 76.6 | 81.2 | 41.9 |
| Scratches | 77.4 | 89.8 | 83.1 | 88.7 | 55.2 |
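Per-class changes on NEU-DET are easier to read as differences. The Python sketch below computes LBN-YOLO minus YOLOv8n for every class and metric from Table 9 (values copied from the table; the helper name is illustrative). It shows that mAP@0.5, mAP@0.5:0.95, and recall rise for all six classes, while F1 falls only for Patches and Pitted_surface.

```python
METRICS = ("P", "R", "F1", "mAP@0.5", "mAP@0.5:0.95")

# Per-class rows copied from Table 9, in METRICS order
YOLOV8N = {
    "Crazing": (51.5, 26.2, 34.7, 32.7, 11.6),
    "Inclusion": (80.6, 81.0, 80.8, 84.8, 44.6),
    "Patches": (82.7, 78.1, 80.3, 85.2, 52.7),
    "Pitted_surface": (81.0, 74.4, 77.6, 81.2, 45.6),
    "Rolled_in_scale": (77.4, 63.5, 69.8, 77.1, 36.2),
    "Scratches": (79.7, 81.4, 80.5, 88.5, 48.4),
}
LBN_YOLO = {
    "Crazing": (51.6, 35.5, 42.1, 40.6, 13.8),
    "Inclusion": (81.3, 83.4, 82.4, 84.9, 45.5),
    "Patches": (80.8, 79.1, 79.9, 87.7, 58.8),
    "Pitted_surface": (77.1, 74.6, 75.8, 83.4, 52.6),
    "Rolled_in_scale": (72.8, 80.8, 76.6, 81.2, 41.9),
    "Scratches": (77.4, 89.8, 83.1, 88.7, 55.2),
}

def per_class_deltas(base, new):
    """Return {class: {metric: new - base}} rounded to 0.1 percentage points."""
    return {
        cls: {m: round(n - b, 1) for m, b, n in zip(METRICS, base[cls], new[cls])}
        for cls in base
    }

deltas = per_class_deltas(YOLOV8N, LBN_YOLO)
declined_f1 = [cls for cls, d in deltas.items() if d["F1"] < 0]
for cls, d in deltas.items():
    print(cls, d)
```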

These results indicate that LBN-YOLO improves detection performance on both public datasets while using 20% fewer parameters than YOLOv8n, suggesting that the proposed model generalizes well beyond the custom road damage dataset.

4. Discussion

Existing deep learning-based road damage detection models generally have limited detection precision and struggle to detect multiscale and small road damage. This paper proposes LBN-YOLO to overcome these difficulties, and the experimental results above show that it can accurately locate and classify various types of road damage.

The experimental results demonstrate that integrating DWR modules into the last two C2f modules of the backbone network significantly enhances the model’s ability to extract global contextual information, yielding a more comprehensive feature representation. Using SBiFPN as the feature fusion network reduces redundant features and the number of model parameters, and its cross-level horizontal connections effectively fuse multiscale features with the original features. Adopting Dyhead as the detection head, which combines scale-aware, spatial-aware, and task-aware attention, enhances the model’s ability to detect occluded small objects. Overall, LBN-YOLO balances precision and speed in road damage detection, reducing model parameters and computational load without compromising precision, which facilitates its deployment in practical applications.
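DWR widens context through dilated convolutions, which enlarge the receptive field without adding weights or downsampling. The Python sketch below computes receptive fields for stride-1 layers, assuming a DWR-style layout of one 3 × 3 convolution followed by parallel 3 × 3 branches with dilation rates 1, 3, and 5. These rates are an assumption (the exact rates used in C2f-DWR are not stated in this section), and the helper names are hypothetical.

```python
def effective_kernel(k, d):
    """Span (in pixels) covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)

def receptive_field(layers):
    """Receptive field of stride-1 convolutions applied in sequence.

    layers: list of (kernel_size, dilation) pairs
    """
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf

# Assumed DWR-style layout: a plain 3x3 conv followed by parallel
# 3x3 branches with dilation rates 1, 3 and 5 (rates are illustrative).
branch_rf = {d: receptive_field([(3, 1), (3, d)]) for d in (1, 3, 5)}
print(branch_rf)
```

Each branch still uses nine weights per kernel, yet under these assumptions the three branches see 5, 9, and 13 pixels, so concatenating them mixes local detail with wider context at no extra parameter cost per branch.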

Although this study has achieved high precision in road damage detection, the dataset remains limited, particularly in images captured under complex conditions such as foggy weather. Future work will focus on collecting more diverse road damage images under complex conditions and on integrating road damage segmentation models such as U-Net, FCN, and PSPNet, with the aim of further improving detection precision by extracting shape features and refining the road damage detection system.

5. Conclusions

This paper proposes LBN-YOLO, a road damage detection model designed to improve detection precision and speed. The main contributions are as follows. (1) Because the original dataset is small and imbalanced, which makes the model prone to overfitting, offline and online data augmentation are applied. (2) To reduce the loss of feature information caused by downsampling, DWR modules are introduced into the last two C2f modules of the backbone network. (3) To improve feature fusion efficiency and reduce redundancy, SBiFPN is used as the feature fusion network to achieve cross-level weighted fusion. (4) Dyhead is used as the detection head to enhance scale, spatial, and task awareness, improving the classification and localization of occluded small objects.

This study applies LBN-YOLO to detect seven types of road damage. Experimental results show that it achieves an mAP@0.5 of 74.0% at 87.7 FPS, a 4.3-percentage-point improvement over YOLOv8n. Compared with other advanced object detection models, namely Faster R-CNN, SSD, YOLOv5s, and YOLOv7-tiny, it improves mAP@0.5 by 8.3, 12.3, 7.2, and 6.3 percentage points, respectively. The model was also validated on the public China_MotorBike road damage dataset and the NEU-DET steel surface defect dataset, where it outperformed the original model, indicating good generalizability. Ablation experiments and visualizations further confirm the effectiveness of the modules used. In future work, the model may be deployed on edge devices such as UAVs to achieve real-time automatic road damage detection.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported in part by Weihai Sunshine Engineering Technology Co., Ltd. (Project No. 1010025055); and in part by the Science and Technology Development Plan Project of Weihai Municipality under Grant 2022DXGJ13.

Acknowledgments

This research received no external funding.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.