A Review of Deepfake and Its Detection: From Generative Adversarial Networks to Diffusion Models

Abstract

Deepfake technology, leveraging advanced artificial intelligence (AI) algorithms, has emerged as a powerful tool for generating hyper-realistic synthetic human faces, presenting both innovative opportunities and significant challenges. Meanwhile, Deepfake detectors form another branch of models that strive to recognize AI-generated fake faces and protect people from Deepfake-driven misinformation. This ongoing cat-and-mouse game between generation and detection has driven a dynamic evolution of the Deepfake landscape. This survey comprehensively studies recent advances in Deepfake generation and detection techniques, focusing particularly on generative adversarial networks (GANs) and diffusion models (DMs). We categorize both GAN-based and DM-based Deepfake generators by whether they synthesize new content or manipulate existing content, and we correspondingly examine the strategies employed to identify synthetic and manipulated Deepfakes. Finally, we summarize our findings by discussing the unique capabilities and limitations of GANs and DMs in the context of Deepfakes. We also identify promising directions for future research, including hybrid approaches that leverage the strengths of both GANs and DMs, novel detection strategies built on advanced AI techniques, and the ethical considerations surrounding Deepfake development. This survey serves as a resource for researchers, practitioners, and policymakers seeking to understand the state of the art in Deepfake technology, its implications, and potential avenues for future research and development.

1. Introduction

The development of deep learning (DL) and artificial intelligence (AI) has dramatically accelerated the synthesis of media content such as images and videos. Within AI, one area of focus is the generation of synthetic and manipulated human faces, commonly referred to as Deepfake techniques. Deepfake images and videos first gained public attention through amusing applications in which individuals could swap celebrities' faces or alter politicians' speeches via lip-syncing, and they have since become increasingly sophisticated. Compared with manual manipulation or creation in graphics applications, well-trained Deepfake generation models excel in both output quality and generation speed. Variational autoencoders (VAEs), generative adversarial networks (GANs), and diffusion models (DMs) are among the Deepfake generation models capable of synthesizing nonexistent faces by learning the data distribution of real-world human faces, which significantly lowers the barrier to artistic creation. At the same time, these models can be used to manipulate existing media, producing Deepfake content with alarming realism and ease. After pioneering works such as GANs and DMs were introduced, subsequent efforts focused on refining their structure and improving attributes such as training stability, convergence speed, and generation diversity. These improved generative models were then applied to various tasks, including new content synthesis and media manipulation.

Several metrics have been proposed to evaluate the generation and manipulation performance of Deepfake models. The Inception score (IS) measures the quality of generated images based on the classification confidence of a pretrained Inception model. It computes the Kullback–Leibler (KL) divergence between the predicted class distribution of each generated sample and the marginal class distribution over all samples, and exponentiates the average. A higher IS indicates both sharpness (confident predictions for individual images) and diversity (predictions spread across many classes).
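Written out, IS = exp(E_x[KL(p(y|x) || p(y))]). The following minimal NumPy sketch computes it from a matrix of class probabilities, assuming those probabilities have already been produced by a pretrained Inception classifier (the classifier is not shown). Common practice additionally averages the score over several splits of the samples; this sketch uses a single split.

```python
import numpy as np


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """Inception score from an (N, K) array of class probabilities p(y|x).

    Each row is assumed to be the softmax output of a pretrained Inception
    classifier for one generated image; the classifier is not included here.
    """
    probs = np.asarray(probs, dtype=np.float64)
    marginal = probs.mean(axis=0, keepdims=True)  # p(y): average prediction over all samples
    # Per-sample KL(p(y|x) || p(y)); eps avoids log(0) for zero probabilities.
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))


# Confident predictions spread evenly over 10 classes: IS is close to 10.
print(inception_score(np.eye(10)))
# Identical, uncertain predictions for every sample: IS is 1.
print(inception_score(np.full((10, 10), 0.1)))
```

The two toy inputs bracket the metric: sharp and diverse predictions reach the maximum value K (the number of classes), while uniform predictions give the minimum value 1.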
The Fréchet Inception distance (FID) measures the similarity between the feature distributions of real and generated images by comparing their means and covariances in the feature space of the Inception network. Lower FID values indicate higher quality and closer similarity between generated and real data. The peak signal-to-noise ratio (PSNR) measures image quality from the pixel-wise mean squared error between a generated image and its ground truth; higher PSNR values indicate better fidelity to the reference. The structural similarity index (SSIM) evaluates the similarity between generated and real images in terms of luminance, contrast, and structure; values closer to 1 indicate higher similarity. Unlike IS and FID, PSNR and SSIM require a paired reference image, so they are mainly used for reconstruction and manipulation tasks. Together, these metrics assess Deepfake generators in terms of generation quality (FID, SSIM, PSNR, and IS) and diversity (IS and FID).
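The sketch below, assuming NumPy only, shows how FID, PSNR, and a simplified SSIM can be computed. The `fid` function accepts any (N, D) feature matrices; in practice these are activations of a pretrained Inception network, which are not computed here. The `ssim_global` function is a simplified single-window variant over the whole image with the customary constants K1 = 0.01 and K2 = 0.03, whereas standard SSIM averages the same expression over local (typically Gaussian-weighted) windows.

```python
import numpy as np


def _sqrt_psd(mat):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(mat)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T


def fid(feats_real, feats_fake):
    """Frechet distance between Gaussians fitted to two (N, D) feature sets."""
    mu1, mu2 = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    s1 = np.cov(feats_real, rowvar=False)
    s2 = np.cov(feats_fake, rowvar=False)
    # Tr((S1 S2)^(1/2)) equals the trace of the square root of the symmetric PSD
    # matrix S1^(1/2) S2 S1^(1/2), because the two matrices share eigenvalues.
    r1 = _sqrt_psd(s1)
    eig = np.linalg.eigvalsh(r1 @ s2 @ r1)
    tr_covmean = np.sum(np.sqrt(np.clip(eig, 0.0, None)))
    return float(np.sum((mu1 - mu2) ** 2) + np.trace(s1) + np.trace(s2) - 2.0 * tr_covmean)


def psnr(reference, generated, max_val=255.0):
    """PSNR in dB from the pixel-wise mean squared error."""
    err = np.asarray(reference, np.float64) - np.asarray(generated, np.float64)
    mse = np.mean(err ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))


def ssim_global(x, y, max_val=255.0):
    """Single-window SSIM over whole images (simplified; standard SSIM uses local windows)."""
    x, y = np.asarray(x, np.float64), np.asarray(y, np.float64)
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    luminance = (2 * mx * my + c1) / (mx ** 2 + my ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (x.var() + y.var() + c2)
    return float(luminance * contrast_structure)


rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 8))
print(fid(feats, feats), fid(feats, feats + 1.0))  # about 0 and about 8 (squared mean shift)
img = rng.integers(0, 256, size=(32, 32)).astype(np.float64)
print(psnr(img, img + 1.0), ssim_global(img, img))
```

Shifting every feature by 1 leaves the covariances unchanged, so the FID equals the squared distance between the means, which is the feature dimension here.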

While Deepfakes showcase AI's creative and manipulative potential, they have also raised concerns about misuse in fraud, disinformation, and privacy violations. The rapid progression from early, rudimentary Deepfakes to today's seamless fabrications underscores the need for robust forensic detection methods. Apart from proactive approaches such as digital watermarking, most Deepfake detectors frame detection as a binary classification task. These detectors are trained on labeled fake and real images to learn distinctive artifacts such as biometric cues, texture distortions, blending edges, temporal inconsistencies, and multimodal mismatches. Evaluating Deepfake detectors is crucial to ensure their effectiveness, and the two most commonly used metrics are accuracy (ACC) and the area under the ROC curve (AUC). ACC measures the proportion of correct predictions (true positives and true negatives) among all predictions at a fixed decision threshold. AUC evaluates how well a model distinguishes between the classes (real vs. Deepfake): the receiver operating characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR) as the decision threshold varies, and AUC is the area under this curve. Because AUC summarizes performance across all classification thresholds, it is more robust than ACC on imbalanced data. However, generative methods continually evolve to evade the latest forensic techniques, leading to a cat-and-mouse game between Deepfake generation and detection. Both fields advance through innovations in neural architectures, objective functions, and data modeling, producing a dynamic landscape of technological advancement and countermeasure development.
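A minimal NumPy sketch of both detection metrics follows, assuming the detector outputs a fake-probability score per sample and that Deepfakes are the positive class (label 1). AUC is computed through its rank interpretation (the probability that a random fake receives a higher score than a random real sample), which equals the area under the ROC curve. The usage example illustrates the imbalance point made above.

```python
import numpy as np


def accuracy(labels, scores, threshold=0.5):
    """Fraction of correct predictions when scores >= threshold are called fake (label 1)."""
    preds = (np.asarray(scores, np.float64) >= threshold).astype(int)
    return float(np.mean(preds == np.asarray(labels)))


def roc_auc(labels, scores):
    """Area under the ROC curve via its rank interpretation.

    AUC equals the probability that a randomly chosen fake sample (label 1) gets a
    higher score than a randomly chosen real sample (label 0); ties count as 1/2.
    This O(P * N) pairwise form is for clarity, not efficiency.
    """
    labels = np.asarray(labels)
    scores = np.asarray(scores, np.float64)
    pos, neg = scores[labels == 1], scores[labels == 0]
    diff = pos[:, None] - neg[None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


# Imbalanced test set: 95 real images and 5 Deepfakes.
labels = np.array([0] * 95 + [1] * 5)
lazy_scores = np.zeros(100)  # a detector that calls everything real
print(accuracy(labels, lazy_scores), roc_auc(labels, lazy_scores))  # 0.95 0.5
```

The useless detector reaches 95% accuracy simply because real images dominate, while its AUC of 0.5 correctly reports chance-level discrimination.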

The main contributions of this survey are as follows:

- A comprehensive examination of Deepfake generation and detection in both the GAN and DM eras, which, to our knowledge, is the first analysis covering both GAN-based and DM-based Deepfakes.
- A categorization of Deepfake generation and detection by task (i.e., manipulation and synthesis), providing a more intuitive understanding of Deepfakes and their detection.
- A comparative analysis of GANs and DMs in various aspects, along with proposed research directions and protective advice based on their development.

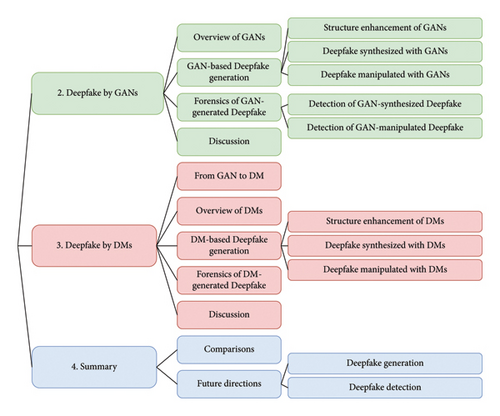

The organization of the subsequent sections is outlined in Figure 1. GANs and DMs are introduced in Sections 2 and 3, respectively. In each section, we first introduce the generation pipeline to illustrate the source of generative capacity (2.1 and 3.2). We then introduce representative generation and detection works in two subsections (2.2 and 2.3 for GANs and 3.3 and 3.4 for DMs). Finally, in Section 4, we compare GANs and DMs with regard to generation and detection and propose potential future research directions.

2. Deepfake by GANs

2.1. Overview of GANs

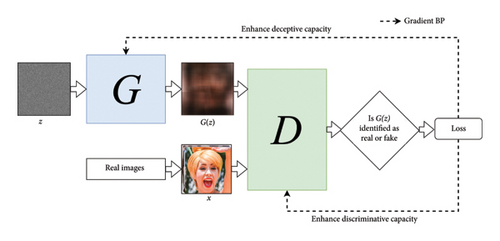
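In the original GAN [15], a generator G maps a noise vector z drawn from a prior p_z to a sample, and a discriminator D outputs the probability that its input comes from the real data distribution p_data rather than from G. The two networks are trained with the following minimax value function:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$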

The generator tries to minimize this function while the discriminator tries to maximize it, and the adversarial training objective enables the generator to progressively improve its outputs based on the feedback from the discriminator. The introduction of GANs has paved the way for numerous applications, ranging from image synthesis to data augmentation.
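The adversarial objective of the original GAN, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], can be estimated on a batch from the discriminator's output probabilities. The minimal NumPy sketch below does this and also shows the "non-saturating" generator loss suggested in the original GAN paper; the discriminator and generator networks themselves are assumed and not shown.

```python
import numpy as np


def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) from discriminator probabilities in (0, 1)."""
    d_real, d_fake = np.asarray(d_real, np.float64), np.asarray(d_fake, np.float64)
    return float(np.mean(np.log(d_real + eps)) + np.mean(np.log(1.0 - d_fake + eps)))


def discriminator_loss(d_real, d_fake):
    # D maximizes V, i.e., minimizes -V (binary cross-entropy with real = 1, fake = 0).
    return -gan_value(d_real, d_fake)


def generator_loss_nonsaturating(d_fake, eps=1e-12):
    # Minimizing log(1 - D(G(z))) gives weak gradients early in training, when D
    # confidently rejects fakes; the original GAN paper suggests maximizing
    # log D(G(z)) instead, i.e., minimizing -log D(G(z)).
    return float(-np.mean(np.log(np.asarray(d_fake, np.float64) + eps)))


# When D cannot tell real from fake, D(x) = D(G(z)) = 0.5 and V = -log 4.
half = np.full(16, 0.5)
print(gan_value(half, half), -np.log(4.0))
```

The value -log 4 is the one attained at the theoretical equilibrium where the generator matches the data distribution and the optimal discriminator outputs 0.5 everywhere.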

2.2. GAN-Based Deepfake Generation

Since GANs were proposed, a large number of GAN-based generation methods have been introduced. Some works improve GAN performance by enhancing attributes such as training stability, training speed, and generation diversity, while more works adapt GANs to various generation tasks, including media synthesis and manipulation. Accordingly, in this subsection we first examine GANs with structural enhancements that optimize their performance. Second, we investigate the synthesis of entirely new, nonexistent content with GANs. Lastly, we delve into the generation of fake content by manipulating existing media, covering techniques such as object replacement, style transfer, and detail reenactment within images. The works in each category are listed in the corresponding tables (Tables 1, 2, and 3) in terms of the proposed model (Structure), the generation condition (Condition, i.e., user-provided guidance to the generator, such as descriptive text or class labels), the specific task (Task), and the modality (Modal).

| Ref | Structure | Condition | Task | Modal |
|---|---|---|---|---|
| [15] | GAN | — | Generation | Image |
| [16] | DRAGAN | — | Generation | Image |
| [17] | WGAN | — | Generation | Image |
| [18] | WGAN-GP | —/class | Generation | Image |
| [19] | CVAE-GAN | class | Generation | Image |
| [20] | PGGAN | — | Generation | Image |
| [21] | LSGAN | —/class | Generation | Image |
| [22] | BigGAN | — | Generation | Image |
| [23] | SNGAN | —/class | Generation | Image |
| [24] | AC-GAN | class | Generation | Image |
| [25] | AdaGAN | — | Generation | Image |
| [26] | UGAN | — | Generation | Image |
| [27] | U-Net GAN | —/class | Generation | Image |
| [28] | FastGAN | — | Generation | Image |

| Ref | Structure | Condition | Task | Modal |
|---|---|---|---|---|
| [29] | DCGAN | — | Generation | Image |
| [30] | HistoGAN | — | Generation | Image |
| [19] | CVAE-GAN | — | Generation | Face |
| [31] | StyleGAN | — | Generation | Image |
| [32] | StyleGAN2 | — | Generation | Image |
| [33] | StyleGAN3 | — | Generation | Image |
| [34] | StyleGAN-V | — | Generation | Video |
| [35] | StyleGAN-T | Text | Generation | Image |
| [36] | DAE-GAN | Text | Generation | Image |
| [37] | 3D-GAN | — | Generation | 3D object |
| [38] | VQGAN | —/class | Generation | Video |
| [39] | SSA-GAN | Text | Generation | Image |
| [40] | GT-GAN | — | Generation | Time series |

| Ref | Structure | Condition | Task | Modal |
|---|---|---|---|---|
| [41] | StarGAN | Image | I2I | Image |
| [42] | StarGANv2 | Image | I2I | Image |
| [43] | FSGAN | — | Faceswap/editing | Image |
| [44] | FSGANv2 | Image | Faceswap/editing | Image |
| [45] | AttGAN | Image | Editing | Image |
| [46] | CAGAN | Text | Editing | Image |
| [47] | CycleGAN | Image | I2I | Image |
| [48] | SPMPGAN | Image | Editing | Image |
| [49] | Anycost GAN | —/image | Editing | Image |
| [50] | T2CI-GAN | Text | Generation | Image |
| [51] | StyleCLIP | Text | Editing | Image |

2.2.1. Structure Enhancement of GANs

Deep Regret Analytic GAN [16] (DRAGAN) proposes viewing GAN training as regret minimization rather than as divergence minimization between the real and fake distributions. Through a theoretical analysis of GAN convergence under this view, the authors connect common training instabilities such as mode collapse to undesirable local equilibria, which they characterize by sharp discriminator gradients around some real data points. To address this, DRAGAN applies a gradient penalty scheme around the real data that avoids these degenerate local equilibria and enables faster, more stable training with fewer mode collapses.

Wasserstein GAN [17] (WGAN) introduces a key change in how the discriminator is trained. Instead of classifying inputs as real or fake, the discriminator becomes a critic that outputs real-valued scores. The critic is used to estimate the Wasserstein distance between the real and generated distributions, a distance in probability space that provides smoother and more meaningful gradients to the generator. Although WGAN improves training stability, it enforces the Lipschitz constraint on the critic through weight clipping, which can still lead to poor performance and convergence failures. WGAN with gradient penalty [18] (WGAN-GP) instead penalizes the norm of the critic's gradient with respect to its input, which not only further stabilizes WGAN training but also supports stable training across various GAN architectures with minimal hyperparameter tuning.
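DRAGAN and WGAN-GP both add a gradient penalty to the discriminator (critic) loss; they differ mainly in where the penalty is evaluated. WGAN-GP penalizes lam * (||grad D(x_hat)|| - 1)^2 at random interpolates x_hat between real and generated samples (lam = 10 in the WGAN-GP paper), while DRAGAN penalizes lam * (||grad D(x + delta)|| - k)^2 at real samples x perturbed by noise delta. The NumPy sketch below is illustrative only: it approximates input gradients with central finite differences instead of the automatic differentiation used in practice, and the defaults `k = 1` and `noise_scale = 0.5` are assumed, hypothetical choices.

```python
import numpy as np


def input_grad(critic, x, h=1e-5):
    """Central-difference gradient of a scalar critic w.r.t. each row of x (N, D)."""
    grads = np.zeros_like(x)
    for j in range(x.shape[1]):
        step = np.zeros(x.shape[1])
        step[j] = h
        grads[:, j] = (critic(x + step) - critic(x - step)) / (2.0 * h)
    return grads


def wgan_gp_penalty(critic, real, fake, lam=10.0, rng=None):
    """WGAN-GP: penalize deviations of the gradient norm from 1 on real-fake interpolates."""
    rng = np.random.default_rng(0) if rng is None else rng
    t = rng.uniform(size=(real.shape[0], 1))
    x_hat = t * real + (1.0 - t) * fake
    norms = np.linalg.norm(input_grad(critic, x_hat), axis=1)
    return float(lam * np.mean((norms - 1.0) ** 2))


def dragan_penalty(critic, real, lam=10.0, k=1.0, noise_scale=0.5, rng=None):
    """DRAGAN-style penalty on noisy copies of real samples (noise_scale is hypothetical)."""
    rng = np.random.default_rng(0) if rng is None else rng
    x_pert = real + noise_scale * rng.normal(size=real.shape)
    norms = np.linalg.norm(input_grad(critic, x_pert), axis=1)
    return float(lam * np.mean((norms - k) ** 2))


def steep(x):
    """Toy linear critic D(x) = w . x with ||w|| = 2, so its gradient norm is 2 everywhere."""
    return x @ np.array([2.0, 0.0, 0.0])


rng = np.random.default_rng(0)
real, fake = rng.normal(size=(64, 3)), rng.normal(size=(64, 3)) + 2.0
print(wgan_gp_penalty(steep, real, fake), dragan_penalty(steep, real))  # both about 10
```

For a linear critic the gradient is the same everywhere, so both penalties reduce to lam * (||w|| - 1)^2; real critics are nonlinear, which is why the sampling location matters.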

CVAE-GAN [52] merges a VAE with a GAN to synthesize images in specific, fine-grained categories. It represents images as a combination of label and latent attributes within a probabilistic model, allowing for the generation of category-specific images by altering the category label and varying values in a latent attribute vector.

Progressive growing GAN [20] (PGGAN) was proposed to synthesize high-resolution human faces. The key idea of PGGAN is to grow the generator and the discriminator progressively. PGGAN starts with a model that can only generate low-resolution images and then adds new layers that model increasingly fine details as training progresses, so the generated images become progressively richer in detail. By adding details incrementally, PGGAN can generate high-resolution images. Compared with low-resolution images, high-resolution images are more convincing to viewers and better evade visual detection.
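In PGGAN, each newly added layer is faded in smoothly rather than switched on abruptly: during the transition, the output is a blend of the upsampled output of the previous lower-resolution stage and the output of the new higher-resolution layer, weighted by an alpha that increases linearly from 0 to 1. The NumPy sketch below shows only this blending step; the layers producing the two images are assumed and replaced by random arrays.

```python
import numpy as np


def upsample_nearest(img, factor=2):
    """Nearest-neighbour upsampling of an (H, W, C) image."""
    return img.repeat(factor, axis=0).repeat(factor, axis=1)


def fade_in(prev_rgb, new_rgb, alpha):
    """Blend the upsampled low-resolution output with the new high-resolution output.

    alpha = 0 reproduces the old network; alpha = 1 uses only the new layer.
    """
    return (1.0 - alpha) * upsample_nearest(prev_rgb) + alpha * new_rgb


rng = np.random.default_rng(0)
low = rng.uniform(-1, 1, size=(4, 4, 3))   # stand-in for the existing 4x4 stage output
high = rng.uniform(-1, 1, size=(8, 8, 3))  # stand-in for the newly added 8x8 layer output
for alpha in np.linspace(0.0, 1.0, 5):     # alpha grows as training progresses
    blended = fade_in(low, high, alpha)
print(blended.shape)  # (8, 8, 3)
```

Fading in gradually keeps the already trained lower-resolution layers from being disrupted by the randomly initialized new layer.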

Least squares GAN [21] (LSGAN) addresses the vanishing gradient problem during training. Regular GANs model the discriminator as a classifier with a sigmoid cross-entropy loss, which provides almost no gradient for fake samples that already lie on the real side of the decision boundary even though they are still far from the real data. LSGAN instead adopts a least squares loss for the discriminator, applied in an a-b coding scheme where a and b are the labels for fake and real data, respectively. LSGAN improves both training stability and the quality of generated images.
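In LSGAN, the discriminator minimizes 0.5 * E[(D(x) - b)^2] + 0.5 * E[(D(G(z)) - a)^2], and the generator minimizes 0.5 * E[(D(G(z)) - c)^2], where c is the value the generator wants the discriminator to assign to fake data. A minimal NumPy sketch of these losses follows, assuming the coding a = 0, b = 1, c = 1 as defaults (one of the settings discussed in the LSGAN paper); the networks are not shown.

```python
import numpy as np


def lsgan_d_loss(d_real, d_fake, a=0.0, b=1.0):
    """Discriminator loss: push outputs on real data toward b and on fake data toward a."""
    d_real, d_fake = np.asarray(d_real, np.float64), np.asarray(d_fake, np.float64)
    return float(0.5 * np.mean((d_real - b) ** 2) + 0.5 * np.mean((d_fake - a) ** 2))


def lsgan_g_loss(d_fake, c=1.0):
    """Generator loss: push the discriminator's output on fake data toward c.

    The squared error keeps penalizing outputs in proportion to their distance
    from c, so it does not saturate the way the sigmoid cross-entropy can.
    """
    return float(0.5 * np.mean((np.asarray(d_fake, np.float64) - c) ** 2))


# A perfect discriminator has zero loss, while the generator's loss is maximal.
print(lsgan_d_loss([1.0, 1.0], [0.0, 0.0]), lsgan_g_loss([0.0, 0.0]))  # 0.0 0.5
```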

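BigGAN [22] adds orthogonal regularization and the truncation trick to large-scale GAN training. The truncation trick samples latent vectors from a truncated normal distribution at inference time, which allows a trade-off between sample fidelity and variety, and orthogonal regularization makes the generator amenable to this truncation. Together, they allow BigGAN to achieve both high-fidelity images and a wide variety of generated samples.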
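Both BigGAN techniques are compact to express. The truncation trick resamples latent entries whose magnitude exceeds a threshold (lower thresholds favor fidelity over variety), and BigGAN's orthogonal regularization penalizes beta * ||W^T W * (1 - I)||_F^2 (elementwise product), which pushes filters toward orthogonality without constraining their norms. The NumPy sketch below assumes a 2D weight matrix and beta = 1e-4; the threshold values are illustrative.

```python
import numpy as np


def truncated_normal(shape, threshold=0.5, rng=None):
    """Sample z ~ N(0, I) and resample entries whose magnitude exceeds threshold."""
    rng = np.random.default_rng(0) if rng is None else rng
    z = rng.standard_normal(shape)
    outside = np.abs(z) > threshold
    while outside.any():
        z[outside] = rng.standard_normal(int(outside.sum()))
        outside = np.abs(z) > threshold
    return z


def orthogonal_reg(weight, beta=1e-4):
    """beta * ||W^T W * (1 - I)||_F^2: penalize only off-diagonal entries of the Gram matrix."""
    gram = weight.T @ weight
    off_diag = gram * (1.0 - np.eye(gram.shape[0]))
    return float(beta * np.sum(off_diag ** 2))


z = truncated_normal((4, 128), threshold=0.5)
print(np.abs(z).max() <= 0.5)  # True
print(orthogonal_reg(np.eye(6)), orthogonal_reg(np.ones((6, 6))) > 0)
```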

Spectral normalization GAN [23] (SNGAN) stabilizes GAN training by normalizing the discriminator weights at every iteration: each weight matrix is divided by its spectral norm (its largest singular value), which is estimated cheaply with power iteration. Spectral normalization constrains the Lipschitz constant of the discriminator and thus limits its capacity, which prevents unstable oscillatory dynamics between the generator and discriminator during adversarial learning.
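The power iteration used to estimate the spectral norm can be sketched in NumPy as follows. In SNGAN, a single iteration is run per training step and the vector u is carried over between steps; this sketch instead runs a fixed number of iterations from a random start, an illustrative simplification.

```python
import numpy as np


def spectral_norm(weight, n_iters=50, rng=None):
    """Estimate the largest singular value of a 2D weight matrix by power iteration."""
    rng = np.random.default_rng(0) if rng is None else rng
    u = rng.standard_normal(weight.shape[0])
    for _ in range(n_iters):
        v = weight.T @ u
        v /= np.linalg.norm(v)
        u = weight @ v
        u /= np.linalg.norm(u)
    return float(u @ weight @ v)  # sigma is approximately u^T W v


def spectrally_normalize(weight, **kwargs):
    """W_SN = W / sigma(W), so the layer's spectral norm (Lipschitz constant) is about 1."""
    return weight / spectral_norm(weight, **kwargs)


rng = np.random.default_rng(1)
w = rng.normal(size=(16, 8))
print(spectral_norm(w), np.linalg.norm(w, 2))  # the two values should agree closely
```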

Auxiliary classifier GAN [24] (AC-GAN) introduces improved training techniques for GANs to synthesize 128 × 128 images with global coherence. In AC-GAN, the generator is conditioned on a class label, and the discriminator has an auxiliary classifier that predicts the class label in addition to whether its input is real or fake. The authors show that this label-conditioned structure generates samples with clear object details and that the higher-resolution samples are more discriminable than lower-resolution ones.

Adaptive GAN [25] (AdaGAN) aimed at addressing the problem of missing modes in GANs, which refers to the inability of a model to produce examples in certain regions of the data space. AdaGAN is an iterative procedure that enhances the training of GANs by adding a new component to a mixture model at each step. This is achieved by running a GAN algorithm on a reweighted sample, drawing inspiration from boosting algorithms.

Unrolled GAN [26] (UGAN) proposed a novel method to stabilize the training of GANs by redefining the generator’s objective in relation to an unrolled optimization of the discriminator. UGAN addresses a critical challenge in GAN training: balancing between using the optimal discriminator in the generator’s objective, which is theoretically ideal but practically infeasible, and relying on the current value of the discriminator, which often leads to instability and suboptimal solutions.

U-Net GAN [27] proposes a U-Net-based discriminator structure for GANs to improve global and local image coherence, which provides detailed per-pixel feedback in addition to global image assessment. This guides the generator in synthesizing shapes/textures that are indistinguishable from real images. A per-pixel consistency regularization is also introduced using CutMix augmentation on the U-Net input. This focuses the discriminator on semantic/structural changes rather than perceptual differences. The proposed U-Net discriminator and consistency regularization improve GAN performance, enabling the generation of images with shapes, textures, and details highly faithful to the real data distribution.
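The per-pixel consistency regularization can be sketched as follows. A CutMix mask M pastes a rectangle of a real image into a generated one, and the decoder (per-pixel) output of the discriminator on the mixed image is encouraged to match the same mix of its outputs on the two source images, ||D_dec(mix(x, G(z), M)) - mix(D_dec(x), D_dec(G(z)), M)||^2. Here `d_dec` is a hypothetical stand-in for the U-Net decoder branch; any callable mapping an (H, W) image to an (H, W) map works in this NumPy sketch.

```python
import numpy as np


def cutmix_mask(h, w, rng):
    """Binary (H, W) mask with a random rectangle of ones; never all ones or all zeros."""
    mask = np.zeros((h, w))
    ch, cw = rng.integers(1, h), rng.integers(1, w)
    top, left = rng.integers(0, h - ch + 1), rng.integers(0, w - cw + 1)
    mask[top:top + ch, left:left + cw] = 1.0
    return mask


def mix(a, b, mask):
    """Take pixels of a where mask == 1 and pixels of b elsewhere."""
    return mask * a + (1.0 - mask) * b


def consistency_loss(d_dec, real, fake, mask):
    """Mean squared difference between D_dec of the mix and the mix of D_dec outputs."""
    target = mix(d_dec(real), d_dec(fake), mask)
    return float(np.mean((d_dec(mix(real, fake, mask)) - target) ** 2))


def global_mean_map(x):
    """Toy map whose every pixel depends on the whole image (hypothetical)."""
    return np.full_like(x, x.mean())


rng = np.random.default_rng(0)
real, fake = rng.normal(size=(16, 16)), rng.normal(size=(16, 16))
m = cutmix_mask(16, 16, rng)
print(consistency_loss(np.tanh, real, fake, m))  # 0.0: a purely per-pixel map is consistent
print(consistency_loss(global_mean_map, real, fake, m) > 0)
```

The regularizer is zero for a purely per-pixel map and positive for one that mixes global context, which is why it pushes the discriminator's per-pixel decisions to track where real and fake content actually lie.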

FastGAN [28] studies few-shot high-resolution image synthesis with GANs using minimal computation and a small number of training samples. FastGAN is a lightweight GAN architecture that produces high-quality images at a resolution of 1024 × 1024, with training requiring only a few hours on a single GPU.

2.2.2. Deepfake Synthesized With GANs

Deep convolutional GAN [29] (DCGAN) was proposed to synthesize new images, including faces, with a class of convolutional neural networks (CNNs). DCGAN stabilizes training through a set of architectural guidelines, such as replacing pooling layers with strided convolutions (fractionally strided convolutions in the generator), adopting batch normalization (BN), and using ReLU activations in the generator and LeakyReLU activations in the discriminator. Although it can only generate low-resolution human faces, DCGAN is considered an early and classic work in fake face generation.
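As a worked example of DCGAN-style upsampling, the sketch below traces the spatial size of a generator that projects the noise vector to a 4 × 4 feature map and then applies four fractionally strided (transposed) convolutions. It assumes kernel size 4, stride 2, and padding 1, a configuration common in DCGAN re-implementations rather than necessarily the original one; with it, each layer exactly doubles the resolution, from 4 × 4 to 64 × 64.

```python
def conv_transpose_out(size: int, kernel: int = 4, stride: int = 2, padding: int = 1) -> int:
    """Output size of a transposed convolution (no output padding, no dilation)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_resolutions(start: int = 4, n_layers: int = 4) -> list:
    """Spatial sizes after each transposed convolution, starting from the projected noise map."""
    sizes = [start]
    for _ in range(n_layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


print(generator_resolutions())  # [4, 8, 16, 32, 64]
```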

HistoGAN [30] was proposed to deal with the color inconsistency of GAN-generated images. Inspired by style mixing in StyleGAN [31, 32], HistoGAN first obtains histogram features by converting images into the log-chroma space. It then modifies the last two blocks of StyleGAN so that they are driven by the color histogram feature, which is projected into a lower-dimensional representation. Using the output of this network and a histogram-aware color-matching loss, HistoGAN generates images whose colors better match the color histogram of the target images.

CVAE-GAN [19] combines a VAE with a GAN to synthesize images in fine-grained categories, such as faces of a specific person or objects in a category. CVAE-GAN models an image as a composition of labels and latent attributes in a probabilistic model. By varying the fine-grained category label fed into the resulting generative model, CVAE-GAN generates images in a specific category with randomly drawn values on a latent attribute vector.

The StyleGAN series comprises a progression of Deepfake generation models with increasingly strong generative capabilities. StyleGAN [31] builds on PGGAN. Instead of feeding the latent code directly into the generator's input layer, StyleGAN starts synthesis from a learned constant and passes the latent code through a mapping network, which transforms it into an intermediate style code. The style code is injected into every generator layer through adaptive instance normalization (AdaIN), so that style can be controlled at each layer. During training, style mixing feeds style codes derived from two different latent codes to different layers, which encourages each layer to control a distinct level of style. StyleGAN also adds noise at each layer of the generator to improve generation diversity. Through style mixing and noise injection, StyleGAN synthesizes high-resolution images of high quality and diversity. StyleGAN2 [32] addresses the water-droplet artifacts of StyleGAN. The authors show that these artifacts arise from the AdaIN operation, which normalizes each feature map separately and thereby destroys information carried by the magnitudes of feature maps relative to each other. StyleGAN2 replaces this normalization with weight demodulation, which removes the droplets while retaining full controllability. Noticing that the details of generated images are unnaturally fixed to pixel coordinates rather than to object surfaces because of aliasing in the generator, StyleGAN3 [33] traces the root cause to careless signal processing. By interpreting all signals as continuous, StyleGAN3 derives small architectural changes that prevent unwanted information from leaking into the hierarchical synthesis process. StyleGAN-V [34] targets video generation by treating videos as continuous signals. It is a continuous-time video generator that represents motion continuously through positional embeddings, and it adopts a holistic discriminator that aggregates temporal information efficiently by concatenating frame features. Trained on high-resolution data, StyleGAN-V generates high-resolution long videos at high frame rates.

StyleGAN-T [35] is designed to meet the specific demands of large-scale text-to-image synthesis, including large capacity, stable training on diverse datasets, strong alignment with text inputs, and a controllable trade-off between variation and text alignment. Whereas other text-to-image generators, such as diffusion and autoregressive models, require iterative evaluation to produce a single image, StyleGAN-T generates an image in a single forward pass, which makes it much faster.
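The difference between StyleGAN's AdaIN and StyleGAN2's weight demodulation can be sketched in NumPy. AdaIN normalizes each feature map of the activations and then applies a per-channel style scale and bias, whereas demodulation scales the convolution weights by the style and then rescales each output channel's weights to unit L2 norm, so the activations themselves are never explicitly normalized. The shapes (C, H, W) for features and (C_out, C_in, k, k) for weights are assumptions of this sketch, and the convolution itself is omitted.

```python
import numpy as np


def adain(x, style_scale, style_bias, eps=1e-8):
    """AdaIN on (C, H, W) features: normalize each map, then apply per-channel style."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    return style_scale[:, None, None] * (x - mean) / (std + eps) + style_bias[:, None, None]


def modulate_demodulate(weight, style, eps=1e-8):
    """Modulate (C_out, C_in, k, k) conv weights by a per-input-channel style, then demodulate."""
    w = weight * style[None, :, None, None]                              # modulation
    norm = np.sqrt(np.sum(w ** 2, axis=(1, 2, 3), keepdims=True) + eps)  # per output channel
    return w / norm                                                      # demodulation


rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 8, 8))
out = adain(feats, style_scale=np.full(4, 2.0), style_bias=np.arange(4.0))
print(out.mean(axis=(1, 2)).round(6), out.std(axis=(1, 2)).round(6))  # about [0 1 2 3] and [2 2 2 2]

w_demod = modulate_demodulate(rng.normal(size=(3, 4, 3, 3)), style=rng.uniform(0.5, 2.0, size=4))
print(np.sqrt((w_demod ** 2).sum(axis=(1, 2, 3))))  # each output channel has norm of about 1
```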

Dynamic aspect-aware GAN [36] (DAE-GAN) is a text-to-image synthesizer that represents text at multiple granularities, namely the sentence, word, and aspect levels. DAE-GAN mimics human iterative drawing by first enhancing the image globally and then adjusting local details based on the text. Specifically, DAE-GAN generates an initial image from the sentence embedding and then refines it with a novel aspect-aware dynamic re-drawer (ADR), which alternates between global refinement with word vectors (attended global refinement) and local detail refinement with aspect vectors (aspect-aware local refinement).

3D-GAN [37] generates 3D objects from a probabilistic latent space by combining volumetric convolutional networks with GANs. Its adversarial criterion enables the generator to implicitly capture the structure of 3D objects, resulting in high-quality outputs. 3D-GAN can generate 3D objects without reference images or CAD models, and it supports exploring the manifold of 3D objects.

VQGAN [38] targets long video generation, combining a 3D-VQGAN with transformers to produce videos comprising thousands of frames. Trained on various datasets, it generates high-quality long videos of impressive diversity and coherence. By conditioning on text and audio in addition to temporal information, VQGAN can also generate meaningful long videos that are contextually rich and aligned with the given inputs.
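The core operation shared by VQGAN-style models is vector quantization: the encoder's continuous latent vectors are replaced by their nearest entries in a learned codebook, and the transformer then models the resulting sequence of discrete codebook indices. The NumPy sketch below shows only this nearest-neighbour lookup, with a randomly initialized codebook standing in for a learned one.

```python
import numpy as np


def vector_quantize(z, codebook):
    """Map each row of z (N, D) to its nearest codebook entry (K, D) under L2 distance.

    Returns the discrete indices (the tokens a transformer would model) and the
    quantized vectors passed on to the decoder.
    """
    # Squared distances ||z||^2 - 2 z.e + ||e||^2 for all pairs, shape (N, K).
    d2 = (np.sum(z ** 2, axis=1, keepdims=True)
          - 2.0 * z @ codebook.T
          + np.sum(codebook ** 2, axis=1)[None, :])
    idx = np.argmin(d2, axis=1)
    return idx, codebook[idx]


rng = np.random.default_rng(0)
codebook = rng.normal(size=(16, 4))                                   # K = 16 codes of size 4
latents = codebook[[3, 7, 7, 0]] + 0.001 * rng.normal(size=(4, 4))  # slightly perturbed codes
tokens, quantized = vector_quantize(latents, codebook)
print(tokens)
```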

Semantic-spatial aware GAN [39] (SSA-GAN) is designed to generate photorealistic images that are not only semantically consistent with the overall text description but also align with specific textual details. In detail, SSA-GAN enhances the fusion of text and image features and introduces a semantic mask learned in a weakly supervised manner to guide spatial transformation in the image.

GT-GAN [40] generates time series data and handles both regular and irregular time series without requiring modifications to the model. It integrates a range of related techniques, from neural ordinary/controlled differential equations to continuous time-flow processes, into a cohesive framework, and this integration is a critical factor in its success.

2.2.3. Deepfake Manipulated With GANs

StarGAN [41] performs image-to-image translation across multiple domains with a single model. Its unified architecture allows simultaneous training on multiple datasets with different domains within one network, which yields higher-quality translated images than existing models and the flexibility to translate any input image into any desired target domain. StarGAN can be used for facial attribute transfer and expression synthesis. StarGAN v2 [42] presents a single, scalable framework for diverse, high-quality image-to-image translation across multiple domains, unlike existing approaches that offer limited diversity or require separate models per domain. In experiments on CelebA-HQ and a new animal faces dataset, AFHQ, StarGAN v2 demonstrates superior visual quality, diversity, and scalability compared with baselines. AFHQ provides a valuable benchmark for assessing image translation models under large inter- and intradomain variability. Overall, StarGAN v2 addresses both diversity and scalability for multidomain image mapping within a single unified generator.
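StarGAN conditions its single generator on the target domain by spatially replicating the target-domain label and concatenating it with the input image as extra channels. The NumPy sketch below shows only this input construction for a channel-last (H, W, C) image; the example label vector and its attribute meaning are hypothetical.

```python
import numpy as np


def concat_domain_label(image, label):
    """Tile a target-domain label vector over (H, W) and append it as extra channels."""
    h, w, _ = image.shape
    label = np.asarray(label, dtype=image.dtype)
    label_maps = np.broadcast_to(label[None, None, :], (h, w, label.shape[0]))
    return np.concatenate([image, label_maps], axis=-1)


image = np.zeros((128, 128, 3), dtype=np.float32)
# Hypothetical 5-dimensional target label, e.g., [black hair, blond hair, brown hair, male, young].
target = [0, 1, 0, 0, 1]
gen_input = concat_domain_label(image, target)
print(gen_input.shape)  # (128, 128, 8)
```

Changing only the label vector while keeping the same image and generator is what lets one model translate into any of the target domains.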

FSGAN [43] enables face swapping and reenactment between pairs of faces without requiring model training on those faces specifically. Key technical contributions include an RNN-based approach to adjust for varying poses and expressions, continuous view interpolation for video, completion of occluded regions, and a blending network for seamless skin and lighting preservation. A novel Poisson blending loss integrates Poisson optimization and perceptual loss. FSGANv2 [44] is unique in that it is subject agnostic, enabling face swapping on any pair of faces without specific training on those faces. It features an iterative DL-based approach for face reenactment, capable of handling significant pose and expression variations in both single images and video sequences. For videos, it offers continuous interpolation of face views using reenactment, Delaunay triangulation, and barycentric coordinates. A face completion network manages occluded face regions, and a face blending network ensures seamless blending while preserving skin color and lighting conditions, utilizing a novel Poisson blending loss.
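The continuous view interpolation mentioned above can be illustrated with barycentric coordinates. In a simplified version, source views are placed by their head pose (for example, yaw and pitch), and a target pose inside a triangle of three views is rendered by blending those views with barycentric weights. This NumPy sketch assumes the enclosing triangle has already been found (for example, with a Delaunay triangulation routine from a computational geometry library) and blends images directly; it is an illustration of the interpolation idea, not FSGAN's implementation.

```python
import numpy as np


def barycentric_weights(p, a, b, c):
    """Barycentric coordinates of 2D point p (e.g., a target yaw/pitch) in triangle (a, b, c)."""
    a, b, c, p = [np.asarray(v, np.float64) for v in (a, b, c, p)]
    t = np.column_stack([a - c, b - c])  # solve p - c = l1 (a - c) + l2 (b - c)
    l1, l2 = np.linalg.solve(t, p - c)
    return np.array([l1, l2, 1.0 - l1 - l2])


def interpolate_views(p, tri_poses, tri_faces):
    """Blend three face images whose poses form the triangle enclosing pose p."""
    w = barycentric_weights(p, *tri_poses)
    return np.tensordot(w, np.stack(tri_faces), axes=1)


poses = [(0.0, 0.0), (30.0, 0.0), (0.0, 20.0)]  # (yaw, pitch) of three source views, degrees
faces = [np.full((4, 4, 3), v) for v in (0.0, 3.0, 6.0)]
centroid = np.mean(poses, axis=0)
print(barycentric_weights(centroid, *poses))             # about [1/3, 1/3, 1/3]
print(interpolate_views(centroid, poses, faces)[0, 0])  # about [3, 3, 3]
```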

AttGAN [45] was proposed for more accurate facial attribute manipulation. It builds on the observation that the attribute-independent latent representation imposed by some attribute manipulation methods restricts the capacity of the latent representation, so the generated faces are oversmoothed and distorted. To address this, AttGAN combines reconstruction learning and adversarial learning with an attribute classification constraint that ensures the desired attributes are actually changed. Reconstruction learning preserves attribute-excluding details during editing, because the attribute editing and reconstruction tasks share the entire encoder–decoder network. Adversarial learning gives the whole network the capacity for visually realistic editing. As a result, AttGAN achieves high-quality facial attribute manipulation with more natural-looking faces.

CAGAN [46] tackles automatic global image editing from linguistic requests, which can significantly reduce the manual labor required of novices. An editing description network (EDNet) is proposed to predict editing embeddings for the image generator cycles. To cope with data imbalance, several augmentation strategies are combined with an Image-Request Attention module to enable spatially adaptive edits. A new semantic evaluation metric is also introduced, which the authors argue is more suitable than pixel losses. Extensive experiments on two benchmarks demonstrate state-of-the-art performance, which the authors attribute to explicitly handling the limited language-image examples and to selective region-based editing guided by linguistic cues.

CycleGAN [47] is an image-to-image translation GAN that has been used for face swapping and expression reenactment. CycleGAN learns two mappings between a source domain X and a target domain Y, G: X → Y and F: Y → X. Its key assumption is cycle consistency: translating an input to the other domain and then back should recover the input, that is, F(G(x)) ≈ x and G(F(y)) ≈ y, which also explains its name. Based on this idea, a cycle-consistency loss is combined with adversarial losses to optimize the whole network.
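A minimal NumPy sketch of the cycle-consistency loss follows. The mappings `g` and `f_inverse` are toy affine functions standing in for the two generators (hypothetical, for illustration only); in CycleGAN, this L1 cycle loss is added to the adversarial losses of both mappings, weighted by lambda = 10 in the original paper.

```python
import numpy as np


def cycle_consistency_loss(x_batch, y_batch, g, f):
    """L1 cycle loss ||F(G(x)) - x||_1 + ||G(F(y)) - y||_1, averaged over elements.

    g maps domain X to Y and f maps Y back to X; in CycleGAN both are generators.
    """
    forward = np.mean(np.abs(f(g(x_batch)) - x_batch))
    backward = np.mean(np.abs(g(f(y_batch)) - y_batch))
    return float(forward + backward)


def g(v):
    return 2.0 * v + 1.0  # toy X -> Y mapping


def f_inverse(v):
    return (v - 1.0) / 2.0  # exact inverse of g: the cycle loss is about 0


def f_wrong(v):
    return v / 2.0  # not an inverse of g: the cycle loss is positive


rng = np.random.default_rng(0)
x, y = rng.normal(size=(8, 5)), rng.normal(size=(8, 5))
print(cycle_consistency_loss(x, y, g, f_inverse), cycle_consistency_loss(x, y, g, f_wrong))
```

Because the loss only requires the two mappings to invert each other, it can be trained on unpaired images from the two domains.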

SPMPGAN [48] achieves semantic image editing by generating desired content in specified regions based on local semantic maps. SPMPGAN introduces a style-preserved modulation with two steps: fusing contextual style and layout to generate modulation parameters and then employing those to modulate feature maps. This injects a semantic layout while maintaining context style. A progressive structure further enables coarse-to-fine-edited content generation. By preserving style, more consistent results are obtained with less noticeable boundaries between edited regions and known pixels. Overall, explicit style modeling significantly improves semantic editing quality and coherence.

Anycost GAN [49] enables interactive image editing with GANs by allowing fast, perceptually similar previews at reduced computational cost. Inspired by quick preview features in creative software, Anycost GAN supports generation at variable resolutions and channel widths, and hence at adjustable speeds. Subset generators serve as proxies for the full generator and are trained with sampling-based multi-resolution training, adaptive-channel training, and a generator-conditioned discriminator, which improves visual quality over separately trained models. Novel projection techniques further encourage consistent outputs across subsets. Anycost GAN reduces computational cost by up to 10x and provides 6–12x faster previews on CPUs and edge devices without perceivable quality loss, which is crucial for interactivity.

T2CI-GAN [50] addresses the challenge of generating visual data from textual descriptions, a task that combines natural language processing (NLP) and computer vision techniques. While current methods use GANs to create uncompressed images from text, T2CI-GAN generates images directly in a compressed format for better storage and computational efficiency. T2CI-GAN builds on DCGAN and proposes two models: one trained with JPEG-compressed DCT images to generate compressed images from text, and another trained with RGB images to produce JPEG-compressed DCT representations from text.
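To make the compressed representation concrete: JPEG splits each image channel into 8 × 8 blocks and applies a two-dimensional type-II discrete cosine transform (DCT) to each block before quantization and entropy coding. The NumPy sketch below computes the orthonormal 8 × 8 DCT of a block and its inverse; quantization, level shifting, and entropy coding are omitted, and how T2CI-GAN feeds such coefficients to DCGAN is not shown.

```python
import numpy as np


def dct_matrix(n=8):
    """Orthonormal DCT-II basis; row k holds the k-th cosine basis function."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    basis[0, :] = np.sqrt(1.0 / n)
    return basis


def block_dct(block):
    """2D DCT of a square block: transform rows and columns."""
    d = dct_matrix(block.shape[0])
    return d @ block @ d.T


def block_idct(coeffs):
    """Inverse 2D DCT; the basis is orthonormal, so the inverse is the transpose."""
    d = dct_matrix(coeffs.shape[0])
    return d.T @ coeffs @ d


flat = np.full((8, 8), 1.0)
print(block_dct(flat).round(6)[0, 0])  # 8.0: a constant block has only a DC coefficient
```

Smooth image regions concentrate their energy in a few low-frequency coefficients, which is what makes the DCT representation compact.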

StyleCLIP [51] manipulates images using the StyleGAN framework, inspired by its ability to generate highly realistic images. The focus is on utilizing the latent spaces of StyleGAN for image manipulation, which traditionally requires extensive human effort or a large annotated image dataset for each manipulation. StyleCLIP introduces the innovative use of contrastive language-image pretraining (CLIP) models to develop a text-based interface for StyleGAN image manipulation, eliminating the need for manual labor. The approach includes an optimization scheme that modifies an input latent vector based on a text prompt using a CLIP-based loss. A latent mapper is also developed to infer text-guided latent manipulation steps for input images, allowing quicker and more stable text-based manipulation. The paper also presents a method to map text prompts to input-agnostic directions in StyleGAN’s style space, facilitating interactive text-driven image manipulation.
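For concreteness, the latent optimization in StyleCLIP takes roughly the following form, where w_s is the latent code of the source image, t is the text prompt, D_CLIP is the cosine distance between the CLIP embeddings of the generated image G(w) and of t, L_ID is an identity-preservation loss, and λ_L2 and λ_ID are weighting hyperparameters:

$$
w^{*} = \arg\min_{w \in \mathcal{W}^{+}} \; D_{\mathrm{CLIP}}\big(G(w), t\big) + \lambda_{L2} \,\lVert w - w_s \rVert_2 + \lambda_{ID}\, \mathcal{L}_{ID}(w)
$$

The first term pulls the image toward the text description, while the other two keep the result close to the input image in latent space and in identity.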

2.3. Forensics of GAN-Generated Deepfake

Detecting Deepfakes involves two primary categories: synthesized Deepfakes and manipulated Deepfakes. Synthesized Deepfake detection identifies media generated entirely by AI algorithms, whereas manipulated Deepfake detection recognizes authentic media that has been altered using AI-based tools. The investigated works on synthetic and manipulated Deepfake detection are listed in Tables 4 and 5, where we show the adopted or proposed classifier (Classifier), the adopted datasets (Datasets), and the modality (Modal).

| Ref | Classifier | Datasets | Modal |
|---|---|---|---|
| [53] | Xception | FF++ | Image |
| [54] | DeepFD | Self-built (StarGAN, DCGAN, WGAN, WGAN-GP, LSGAN, PGGAN) | Image |
| [55] | CNNs | FF++, self-built (ProGAN, Glow) | Image |
| [56] | Capsule-Forensics | FF++, self-built (ProGAN, Glow) | Image/video |
| [57] | CNNs | FF++, DFDC | Video |
| [58] | Various | ForgeryNet | Image/video |
| [59] | MT-SC | Self-built (CycleGAN, ProGAN, Glow, StarGAN) | Image |
| [60] | SVM | FF++ | Image |
| [61] | F3-Net | FF++ | Image |
| [62] | CNNs, LSTM | FF++, Celeb-DF, DFDC preview | Video |
| [63] | MesoInception, ResNet, Xception | FF++, self-built (ProGAN, StyleGAN, StyleGAN2) | Image |
| [64] | FakeLocator | FF++, self-built (STGAN, AttGAN, StarGAN, IcGAN, StyleGAN, PGGAN, StyleGAN2) | Image |
| [65] | PCL + I2G | FF++, DFD | Image |
| [66] | Xception | FF++, DFD, DFDC, Celeb-DF, DF1.0 | Image |
| [67] | FD2 Net | FF++, DFD, DFDC | Image |
| [68] | Transformer | FF++, DFD, DFDC | Video |
| [69] | Transformer | FF++, FaceShifter, DeeperForensics, DFDC | Video |
| [70] | CNNs | FF++, DFDC, Celeb-DF | Image |
| [71] | Transformer | FF++, Celeb-DF, self-built: SR-DF (FSGAN, FaceShifter, first-order motion, IcFace) | Image |
| [72] | ICT | MS-Celeb-1M, FF++, DFD, CelebDFv1, CelebDFv2, DeeperForensics | Image |
| [73] | HCIL | FF++, Celeb-DF, DFDC preview, WildDeepfake | Video |
| [74] | FST-matching | FF++ | Image |
| [75] | CNNs | FF++, Celeb-DF | Image |
| [76] | UIA-ViT | FF++, Celeb-DF, DFD, DFDC | Image |
| [77] | HFI-Net | FF++, Celeb-DF, DFDC, TIMIT | Image |
| [78] | CNNs | FF++, DFDC, Celeb-DF | Image |
| [79] | EfficientNet, transformers | FF++, DFDC | Video |
| [80] | CNNs | Self-built (ProGAN, SN-DCGAN, CramerGAN, MMDGAN) | Video |
| [81] | LRNet | FF++, Celeb-DF | Video |
| [82] | Transformer | FF++, FaceShifter, DeeperForensics, Celeb-DF, DFDC | Video |
| [63] | CNNs | Self-built (PGGAN, StyleGAN, StyleGAN2) | Image |
| [83] | HAMMER | DGM4 | Image |
| [84] | MRE-Net | FF++, Celeb-DF, DFDC, WildDeepfake | Video |
| [85] | CNNs | DF_TIMIT, FF++, DFD, DFDC, Celeb-DF | Image |
| [86] | TI2 Net | FF++, Celeb-DF, DFD, DFDC | Video |
| [87] | IIL | FF++, DFDC, Celeb-DF | Image |
| [88] | ISTVT | FF++, FaceShifter, DeeperForensics, Celeb-DF, DFDC | Image |
| [89] | AVoiD-DF | DefakeAVMiT, FakeAVCeleb, DFDC | Video |

Table 5. Investigated works on manipulated Deepfake detection.

| Ref | Classifier | Datasets | Modal |
|---|---|---|---|
| [54] | DeepFD | Self-built (StarGAN, DCGAN, WGAN, WGAN-GP, LSGAN, PGGAN) | Image |
| [90] | RNN/VAE | Self-built (FaceApp) | Video |
| [91] | Face X-ray | FF++, DFD, DFDC, Celeb-DF | Image |
| [55] | CNNs | FF++, self-built (ProGAN, Glow) | Image |
| [60] | SVM | FF++ | Image |
| [92] | ResNet-50 | Self-built (ProGAN, StyleGAN, BigGAN, CycleGAN, StarGAN, GauGAN, etc.) | Image |
| [93] | XceptionNet, VGG16 | FF++, self-built (FaceApp, StarGAN, PGGAN, StyleGAN) | Image |
| [63] | MesoInception4, ResNet, Xception | FF++, self-built (ProGAN, StyleGAN, StyleGAN2) | Image |
| [64] | FakeLocator | FF++, self-built (STGAN, AttGAN, StarGAN, IcGAN, StyleGAN, PGGAN, StyleGAN2) | Image |
| [71] | Transformer | FF++, Celeb-DF, self-built: SR-DF (FSGAN, FaceShifter, first-order motion, IcFace) | Image |

2.3.1. Detection of GAN-Synthesized Deepfake

2.3.1.1. Detectors Based on Spatial Artifacts

Spatial artifacts are intuitive and are adopted widely to detect GAN-generated Deepfake.

Xception was first adopted for Deepfake detection in [53] and evaluated on FaceForensics++, the benchmark dataset proposed in the same work. A thorough analysis shows that although manipulations can fool human observers, data-driven detectors that leverage domain knowledge achieve high accuracy even under strong compression.

DeepFD [54] adopts a contrastive loss to learn features that are typical of images synthesized by different GANs.
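As an illustration of the kind of objective DeepFD builds on, the sketch below implements the classic margin-based pairwise contrastive loss (Hadsell et al. style). It is a stand-in under stated assumptions rather than DeepFD's exact formulation: the function name `contrastive_loss`, the Euclidean distance, and the default `margin` are our own choices.

```python
import math


def contrastive_loss(f1, f2, same_label, margin=1.0):
    """Margin-based pairwise contrastive loss (Hadsell et al. style).

    f1, f2: feature vectors of two images (sequences of floats).
    same_label: True if both images belong to the same class (e.g. both real).
    Assumption: this generic form stands in for DeepFD's exact loss.
    """
    d = math.dist(f1, f2)  # Euclidean distance between the two embeddings
    if same_label:
        # Pull same-class pairs together: the penalty grows with distance.
        return 0.5 * d ** 2
    # Push different-class pairs apart until they are at least `margin` away.
    return 0.5 * max(0.0, margin - d) ** 2
```

Pairs from the same class are pulled together, while pairs from different classes are penalized only while they are closer than `margin`, so the embedding clusters images by source.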

Nguyen et al. [56] tackle open-world detection through incremental learning. Experiments on a dataset of various GAN-generated images demonstrate that the proposed approach can correctly discriminate when new GAN structures are presented, enabling continuous evolution with emerging data types.

Bonettini et al. [57] ensemble CNN models to detect video face forgeries produced by modern techniques. An EfficientNetB4 base network is augmented with attention layers and trained in a siamese fashion to diversify the ensemble. Combining these complementary models yields promising detection results on two datasets containing over 119,000 videos. The study demonstrates that integrating multiple specialized networks through attention and metric learning improves generalization to evolving forgery methods compared with individual models.

ForgeryNet [58] is introduced as an extensive benchmark dataset to advance digital forgery analysis, addressing limitations of diversity and task coverage in prior face forgery corpora. It contains 2.9M images and 221K videos with unified annotations for four key tasks: image classification (binary, 3-class, 15-class), spatial localization, video classification with random frame manipulation, and temporal localization. By providing massive scale, variety, and multitask annotations, ForgeryNet aims to promote research and innovation in facial forgery detection, spatial localization, temporal localization, and related directions.

Pairwise self-consistency learning (PCL) [65] detects Deepfake by exploiting the inconsistent source features that manipulated images preserve. PCL trains models to extract these indicative cues, and an inconsistency image generator (I2G) synthesizes training data containing such source mismatches. By exposing remnants of the original inputs' traces, the learned representations reliably detect Deepfake generated even by advanced methods, and strong cross-dataset performance demonstrates real-world viability.
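The pairwise consistency idea behind PCL can be sketched as follows, assuming each spatial patch already has a feature vector and that a patch-level mask marks which patches come from the inserted (source) face, as I2G-style blending would provide. The cosine-to-[0, 1] rescaling, the binary cross-entropy, and all function names are illustrative assumptions, not the paper's exact implementation.

```python
import numpy as np


def consistency_map(features):
    """Pairwise consistency between patch features.

    features: (N, C) array with one embedding per spatial patch.
    Returns an (N, N) matrix of cosine similarities rescaled to [0, 1].
    """
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    cos = normed @ normed.T
    return (cos + 1.0) / 2.0  # assumption: simple rescaling of cosine to [0, 1]


def consistency_target(source_mask):
    """Ground-truth consistency from a patch-level source mask.

    source_mask: (N,) array, 1 where a patch comes from the inserted face, else 0.
    A pair is consistent (1) when both patches share the same source.
    """
    m = np.asarray(source_mask, dtype=float)
    return 1.0 - np.abs(m[:, None] - m[None, :])


def pcl_loss(features, source_mask, eps=1e-7):
    """Binary cross-entropy between predicted and target consistency."""
    p = np.clip(consistency_map(features), eps, 1.0 - eps)
    t = consistency_target(source_mask)
    return float(-np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)))
```

For a real image the target matrix is all ones; for a blended image, pairs that straddle the blending boundary receive a target of zero, which is what pushes the features to encode source identity.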

FD2Net [67] processes biological information in a more elaborate way: it models a face image as the product of the underlying 3D geometry and the lighting environment. The lighting environment can be further decomposed into direct light, common texture, and identity texture. Noting that direct light and identity texture contain critical clues, the authors merge these two components into what they call facial detail images. The proposed Forgery-Detection-with-Facial-Detail network (FD2Net) takes both facial images and facial detail images as input: the two types of images pass through a two-stream network, whose outputs are fused and passed to a classifier.

A vision transformer (ViT) uses a self-attention mechanism to process all input tokens simultaneously, which allows better parallelization and captures long-range dependencies more effectively. Khan and Dai [68] tackle Deepfake video detection with a novel incremental-learning video ViT. 3D face reconstruction provides aligned UV textures and poses, and dual image and texture inputs supply complementary cues. Incremental fine-tuning further improves performance with limited additional data. Comprehensive experiments on public datasets demonstrate state-of-the-art Deepfake video detection: sequential feature learning from image pairs combined with incremental optimization enables robust identification of forged footage spread online.

Nirkin et al. [70] detect identity manipulations in faces by exposing discrepancies between the face region and its surrounding context. Although methods such as Deepfakes adjust the swapped face to match its context, inconsistencies that reveal the manipulation remain. A two-network approach is proposed: one network focuses on the segmented face, and the other recognizes the face from its context. Discrepancies between their recognition outputs indicate manipulation and complement conventional real-versus-fake classifiers. Crucially, the approach generalizes to unseen manipulation methods because it exploits the mask-context mismatch common to them.

FST-matching (fake, source, target images matching) [74] provides insights into how Deepfake detection models learn artifact cues when trained on binary labels. Three hypotheses based on image matching are proposed and verified: (1) Models rely on visual concepts unrelated to the source or target as artifact-relevant. (2) FST-matching between fake, source, and target images enables implicit artifact learning. (3) Concepts learned from uncompressed data fail on compression. An FST-matching detection model is proposed that matches triplets to make compression-robust concepts explicit.

Liang et al. [75] address overfitting in CNN Deepfake detection caused by reliance on dataset content biases over manipulation artifacts. A disentanglement framework is designed to remove content interference. Content consistency and global representation contrastive constraints further enhance independence. The proposed framework significantly improves generalization by guiding focus toward artifacts rather than contextual cues specific to a dataset. Visualizations and results demonstrate state-of-the-art detection through explicit content disentanglement and constraint-driven artifact emphasis.

Yu et al. [78] improve Deepfake video detection generalization through commonality learning across manipulation methods. Specific forgery feature extractors (SFFExtractors) are first trained separately on each method using losses to ensure detection ability. A common forgery feature extractor (CFFExtractor) is then trained under SFFExtractor supervision to discover shared artifacts. The proposed strategy provides an effective way to learn broadly indicative forged video traits that transcend specific manipulation types and improve out-of-distribution detection.

Chai et al. [63] analyze spatial patterns revealing fakes using a patch-based classifier to identify more easily detectable regions. A technique is proposed to exaggerate these detectable properties. Experiments show even an adversarially trained generator leaves localized flaws that can be exploited. The analysis and visualization of spatial detection cues provide insights into artifacts persistent across datasets, structures, and training variations. Localizing and amplifying subtle clues improves understanding of what makes current forged multimedia detectable even as human perception fails.

HAMMER [83] was proposed for the new task of detecting and grounding multimodal media manipulation (DGM4), which targets subtle forgeries across images and text. Beyond binary classification, DGM4 requires grounding the specific manipulated regions in both modalities through deeper reasoning, and a large dataset with diverse manipulation types and annotations is constructed to enable its investigation. HAMMER captures fine-grained cross-modal interactions via manipulation-aware contrastive learning between encoders and modality-aware aggregation, and dedicated heads integrate unimodal and multimodal reasoning for detection and spatial grounding. DGM4 thus defines a new problem and benchmark for tackling realistic multimodal misinformation, moving beyond binary, single-modality classification to spatial localization and reasoning.

Li et al. [85] propose forensic symmetry to detect Deepfake by comparing natural features between symmetrical facial patches. A multistream model extracts symmetry features from frontal faces and similarity features from profile faces. Together, these features represent natural facial traits, so discrepancies between them in feature space indicate the likelihood of manipulation. Pairs of face patches are mapped to an angular hyperspace to quantify their natural feature differences and the probability of tampering, and a heuristic algorithm aggregates frame-level predictions into a video-level judgment. By explicitly encoding and contrasting innate facial symmetries, the technique reliably detects the subtle asymmetric perturbations indicative of manipulation, and its robustness to compression and generalization across datasets highlight its applicability to real-world Deepfake detection.

Dong et al. [87] analyze generalization issues in Deepfake detection models that stem from implicit identity representations. Binary classifiers unexpectedly learn dataset-specific identity traits rather than generalizable manipulation cues, and this implicit identity leakage severely harms out-of-distribution detection. To mitigate it, an ID-unaware model is proposed that reduces the influence of identity by obscuring hair and background. By identifying and addressing identity overfitting, this work provides critical insights into improving the reliability of Deepfake detection under distribution shift, moving toward adaptable and widely applicable models.

2.3.1.2. Detectors Based on Frequency Artifacts

Detectors also look for subtler artifacts in the frequency domain to recognize Deepfake.

Frank et al. [80] proposed the first work introducing frequency information into Deepfake detection. They observed that although some GAN-generated images are visually indistinguishable from real ones, obvious artifacts can be easily identified in the frequency domain. Specifically, images are first transformed from the spatial domain into the frequency domain by the discrete cosine transform (DCT). With frequency features, even a simple classifier achieves a classification accuracy of over 80%, and a CNN-based classifier easily exceeds 99% accuracy on both the LSUN [94] and CelebA [95] datasets. However, such a simple use of frequency features performs poorly on more challenging datasets.
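A minimal NumPy sketch of the DCT step: it builds an orthonormal DCT-II basis, applies it separably to a grayscale image, and log-scales the coefficient magnitudes into a feature vector that a simple classifier could consume. The log scaling and the function names are our assumptions; a library routine such as `scipy.fft.dctn(img, norm='ortho')` computes the same transform.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of shape (n, n)."""
    k = np.arange(n)[:, None]  # frequency index
    i = np.arange(n)[None, :]  # spatial index
    mat = np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    mat[0, :] /= np.sqrt(2.0)  # scale the DC row so the basis is orthonormal
    return mat * np.sqrt(2.0 / n)


def dct2(img):
    """Separable 2D DCT-II of a grayscale image of shape (H, W)."""
    h, w = img.shape
    return dct_matrix(h) @ img @ dct_matrix(w).T


def log_dct_features(img, eps=1e-8):
    """Log-scaled magnitude of the DCT coefficients, flattened for a classifier."""
    return np.log(np.abs(dct2(img)) + eps).ravel()
```

The log compresses the large dynamic range between the DC term and the high-frequency coefficients, which is where upsampling artifacts tend to show up.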

F3-Net [61] captures both global and local frequency features of images. Global frequency features are captured by a frequency-aware decomposition (FAD) module, which applies a set of learnable filters, together with simple fixed filters, to decompose an image into component images of different frequency bands. The decomposed components are stacked and fed into a CNN to generate deeper features. Local frequency statistics (LFS), the second frequency-aware module of F3-Net, captures local frequency information. LFS first collects frequency responses in small patches of the image with a sliding window discrete cosine transform (SWDCT) and then computes the mean frequency responses over a series of learnable frequency bands. The features learned by FAD and LFS are finally fused by a cross-attention module for prediction.
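The LFS idea can be approximated with a sliding-window DCT followed by per-band statistics. This sketch replaces F3-Net's learnable band filters with fixed bands ordered by u + v (low to high frequency); the window size, stride, band count, and function names are hypothetical choices.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of shape (n, n)."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    mat = np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    mat[0, :] /= np.sqrt(2.0)
    return mat * np.sqrt(2.0 / n)


def local_frequency_statistics(img, win=8, stride=8, n_bands=4, eps=1e-8):
    """LFS-style features: sliding-window DCT, then log mean magnitude per band.

    Uses fixed bands ordered by u + v (low to high frequency) instead of
    F3-Net's learnable band filters.
    Returns an array of shape (H', W', n_bands).
    """
    h, w = img.shape
    basis = dct_matrix(win)
    u = np.arange(win)
    radius = u[:, None] + u[None, :]  # 0 (DC) .. 2 * (win - 1) (highest frequency)
    edges = np.linspace(0, 2 * (win - 1) + 1, n_bands + 1)
    bands = [(radius >= lo) & (radius < hi) for lo, hi in zip(edges[:-1], edges[1:])]
    rows = []
    for y in range(0, h - win + 1, stride):
        row = []
        for x in range(0, w - win + 1, stride):
            # DCT of one local window, then one statistic per frequency band.
            coeffs = np.abs(basis @ img[y:y + win, x:x + win] @ basis.T)
            row.append([np.log10(coeffs[b].mean() + eps) for b in bands])
        rows.append(row)
    return np.array(rows)
```

The output keeps a spatial layout (one vector per window), so it can be fed to a CNN just like an image with `n_bands` channels.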

Luo et al. [66] rely on high-frequency features to make their detector more generalizable. They propose a two-stream framework consisting of an RGB stream, which takes raw images, and a high-frequency stream, which takes residual images produced by SRM filters. The raw-image features and residual features then pass through three flows: an entry flow, a middle flow, and an exit flow. The entry flow extracts multiscale high-frequency features by combining downsampling with multiscale SRM filters. Its output is passed to the middle flow, where dual cross-modality attention models the correlations between the two modalities at different scales. The exit flow contains the fusion module and the final classifier. The method achieves over 99% AUC when trained and tested on images manipulated by the same techniques.
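To show what an SRM residual looks like, the sketch below filters a grayscale image with the 5 × 5 "KV" high-pass kernel from the spatial rich model family. Luo et al. combine several SRM filters at multiple scales inside a network; using a single fixed kernel with a plain NumPy loop is a simplification for illustration, and the function name is ours.

```python
import numpy as np

# 5x5 "KV" high-pass kernel from the spatial rich model (SRM) family.
SRM_KV = np.array([
    [-1, 2, -2, 2, -1],
    [2, -6, 8, -6, 2],
    [-2, 8, -12, 8, -2],
    [2, -6, 8, -6, 2],
    [-1, 2, -2, 2, -1],
], dtype=float) / 12.0


def srm_residual(img, kernel=SRM_KV):
    """Valid 2D filtering of a grayscale image with an SRM kernel.

    The kernel is symmetric, so correlation and convolution coincide.
    Smooth content (constants, linear ramps) is suppressed, while
    noise-like high-frequency residuals remain.
    """
    kh, kw = kernel.shape
    h, w = img.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x] = np.sum(img[y:y + kh, x:x + kw] * kernel)
    return out
```

Because every row and column of the kernel sums to zero, image content that varies smoothly is cancelled, leaving the residual noise in which generation traces are easier to see.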

HFI-Net [77] tackles face forgery detection through hierarchical frequency modeling to improve generalization. A dual CNN-transformer network captures multiscale cues. A novel frequency-based feature refinement (FFR) module applies frequency attention to emphasize artifacts while suppressing pristine semantics. FFR enables cosharing global–local interactions to fuse complementary branches using frequency guidance. Stacking at multiple levels exploits hierarchical frequency artifacts. By thoroughly exposing frequency-domain discrepancies at multiple semantic levels, the hierarchical framework significantly advances cross-dataset and robust Deepfake detection.

2.3.1.3. Detectors Based on Temporal Artifacts

Temporal artifacts are also explored to analyze sequential information within image patches and video frames.

Videos naturally contain dynamic information that can be used to verify their authenticity. Masi et al. [62] adopt a recurrent neural network (RNN) to better capture temporal features. They propose a two-branch framework that detects Deepfake videos using color-domain information and frequency-domain clues. The frequency branch is based on the Laplacian of Gaussian (LoG). Specifically, the input is processed by two sets of filters: the first set is fixed and nonlearnable and works as a 2D Gaussian kernel, while the second set, a dimensionality-reduction filter, maps the dimensionality back to the input expected by the next layer. The two branches are then fused by a fusion layer, whose outputs pass through the backbone network and a bidirectional LSTM module for temporal feature analysis.
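For reference, a fixed Laplacian-of-Gaussian kernel of the kind such a frequency branch builds on can be constructed as below. The kernel size, σ, the zero-mean normalization, and the function name are illustrative assumptions rather than the settings of Masi et al.

```python
import numpy as np


def log_kernel(size=5, sigma=1.0):
    """Zero-mean Laplacian-of-Gaussian kernel of shape (size, size)."""
    r = size // 2
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    s = (x ** 2 + y ** 2) / (2.0 * sigma ** 2)
    # Analytic LoG: negative at the centre, positive ring around it.
    k = -1.0 / (np.pi * sigma ** 4) * (1.0 - s) * np.exp(-s)
    # Remove the mean so flat image regions produce zero response.
    return k - k.mean()
```

Convolving a face crop with this kernel suppresses flat regions and emphasizes edges and fine texture, i.e. the band where blending and resampling artifacts tend to appear.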

LRNet [81] aims at detecting Deepfake videos via temporal modeling of precise geometric features rather than vulnerable appearance cues. A calibration module enhances the precision of extracted geometry. A two-stream RNN structure sufficiently exploits temporal patterns. Compared to prior methods, LRNet has lower complexity, easier training, and increased robustness to compression and noise.

Zheng et al. [69] leverage temporal coherence for video face forgery detection through a two-stage framework. First, a fully temporal convolution network with 1 × 1 spatial kernels extracts short-term patterns while improving generalization. Second, a temporal transformer captures long-term dependencies. Trained from scratch without pretraining, the approach achieves state-of-the-art performance and transfers effectively to new forgery types, highlighting the value of robust sequence modeling. By thoroughly exploiting motion cues that are absent in still images, the work advances temporal learning for identifying fake video faces.
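The distinctive element of the first stage, a convolution whose spatial extent is 1 × 1 so that it mixes information only along time and channels, can be sketched in NumPy as follows. The shapes, the function name, and the valid (unpadded) temporal boundary are assumptions for illustration.

```python
import numpy as np


def temporal_conv_1x1(video, weights):
    """Temporal convolution whose spatial kernel is 1x1.

    video:   (T, H, W, C_in) array of per-frame feature maps.
    weights: (K, C_in, C_out) kernel spanning K consecutive frames.
    Returns (T - K + 1, H, W, C_out). Each output pixel mixes only its own
    location across time and channels, never its spatial neighbours.
    """
    t_len, k_len = video.shape[0], weights.shape[0]
    outputs = [
        # Sum over the K frames (k) and input channels (c) at every (h, w).
        np.einsum("khwc,kcd->hwd", video[t:t + k_len], weights)
        for t in range(t_len - k_len + 1)
    ]
    return np.stack(outputs)
```

Restricting the spatial kernel to 1 × 1 forces the layer to model how each location changes over time instead of memorizing spatial appearance, which is the stated motivation for the improved generalization.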

Gu et al. [73] detect Deepfake videos through hierarchical contrastive learning of temporal inconsistencies in facial movements. Unlike binary supervision methods, a two-level contrastive paradigm is proposed for local and global inconsistency modeling. Multisnippet inputs enable contrasting real and fake videos from both intra- and intersnippet perspectives. Region-adaptive and cross-snippet fusion modules further enhance discrepancy learning. The hierarchical framework advances Deepfake video detection through comprehensive modeling of subtle spatiotemporal discrepancies in facial dynamics.

TI2Net [86] detects Deepfake videos by exposing temporal identity inconsistencies, i.e., discrepancies in face identity across frames of the same person. Encoding identity vectors and modeling their evolution as a temporal embedding enables reference-agnostic fake detection without dataset-specific cues, and the resulting identity inconsistency representation is used for authenticity classification. A triplet loss improves the discriminability of the embedding. By focusing on semantic identity integrity rather than superficial artifacts, TI2Net advances reliable Deepfake video detection through principled modeling of temporal discrepancies.
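Two ingredients of this design can be sketched independently of TI2Net's actual encoder: the frame-to-frame evolution of identity vectors and a standard triplet loss. The identity vectors are assumed to come from some face recognition model, and the function names and `margin` value are hypothetical.

```python
import numpy as np


def identity_trajectory(id_vectors):
    """Frame-to-frame changes of L2-normalised identity vectors.

    id_vectors: (T, D) array, one face-identity embedding per frame.
    Returns (T - 1, D) differences; a genuine video of one person should
    show small, smooth changes, whereas swapped faces may jump.
    """
    v = id_vectors / np.linalg.norm(id_vectors, axis=1, keepdims=True)
    return np.diff(v, axis=0)


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet loss on embeddings: max(0, d(a, p) - d(a, n) + margin)."""
    d_pos = np.linalg.norm(anchor - positive)
    d_neg = np.linalg.norm(anchor - negative)
    return float(max(0.0, d_pos - d_neg + margin))
```

In a detector of this kind, the trajectory would be encoded into a temporal embedding, and the triplet loss would pull embeddings of same-label videos together while pushing real and fake ones apart.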

Dynamic prototype network (DPNet) [82] uses dynamic prototypes to expose temporal inconsistencies. Most methods process videos frame by frame and lack temporal reasoning, even though artifacts across frames are essential to human judgment. DPNet represents videos through evolving prototypes that capture inconsistencies and serve as visual explanations. Extensive experiments show competitive performance even on unseen datasets, along with intuitive dynamic prototype explanations. In addition, temporal logic specifications are used to verify the model's compliance with desired behaviors, establishing trust.

Multirate excitation network (MRE-Net) [84] leverages dynamic spatial–temporal inconsistencies for Deepfake video detection. MRE-Net uses bipartite group sampling at diverse rates to cover varying facial motion. Early stages excite momentary inconsistencies via spatial and short-term cues. Later stages capture long-term intergroup dynamics. This holistic modeling of transient, localized flaws and long-range coherence surpasses methods relying on isolated spatial or temporal cues.

Coccomini et al. [79] address the detection of face Deepfake in videos, motivated by advances in VAEs and GANs that enable highly realistic forged footage. Combining EfficientNet feature extraction with ViTs yields performance comparable to the state of the art without requiring ensembles or distillation, and a simple voting scheme handles videos with multiple faces. The best model achieves an AUC of 0.951 on DFDC, close to the state of the art for detecting sophisticated forgeries. Unlike more complex prior methods, this combination of transformers and streamlined inference provides efficient and accurate Deepfake detection suitable for real-world deployment. By showing that strong results are possible without weighting or model combinations, this work demonstrates the power of transformers and presents a straightforward framework for exposing falsified video faces.
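The multi-face voting step can be sketched as follows. The rule used here (average each tracked face's frame scores and flag the video if any face exceeds the threshold) is our assumption about a reasonable scheme, not necessarily the exact rule of Coccomini et al.; the threshold and the (face_id, probability) input format are hypothetical.

```python
from collections import defaultdict


def video_is_fake(face_scores, threshold=0.5):
    """Video-level decision from per-frame, per-face fake probabilities.

    face_scores: iterable of (face_id, probability) pairs, where face_id groups
    detections of the same person across frames.
    Assumed rule: average the scores of each face; flag the video as fake if
    any single face's average exceeds the threshold.
    """
    per_face = defaultdict(list)
    for face_id, prob in face_scores:
        per_face[face_id].append(prob)
    return any(sum(p) / len(p) > threshold for p in per_face.values())
```

Averaging per face rather than over all detections prevents a single manipulated person from being diluted by genuine faces that share the same video.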

While transformers can process video to explore temporal patterns among frames, they can also process an image as a sequence of patch embeddings. Transformer-based detectors first split an image into patches and project each patch into a latent space as a patch embedding. Self-attention is then used to explore inconsistencies between patches. Because this process considers both the content of each patch and the correlations between patches, it can better exploit structural information for Deepfake detection.

Identity consistency transformer (ICT) [72] detects face forgery by exposing identity mismatches between the inner and outer facial regions. It incorporates a consistency loss to determine whether the identities of the face parts are congruent. Experiments demonstrate superior generalization across datasets and robustness to real-world image degradations, including on Deepfake videos. Leveraging such high-level semantics outperforms relying on low-level artifacts, which are prone to obfuscation. ICT can also readily integrate external identity information, which is particularly useful against celebrity Deepfake. By homing in on high-level facial identity integrity, ICT advances robust and semantically grounded fake face detection.

UIA-ViT [76] enables inconsistency-aware Deepfake detection without pixel-level supervision. It uses a vision transformer to implicitly capture consistency relations between patches via self-attention. Two key components are introduced: unsupervised patch consistency learning (UPCL), which uses pseudolabels to learn consistency representations, and progressive consistency weighted assemble (PCWA), which enhances classification. Without location annotations, UPCL and PCWA guide the transformer to focus on discrepancies indicative of manipulation. By capitalizing on self-attention and consistency-driven learning, the approach improves the generalization of Deepfake detection without expensive pixel-level labels.
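The patch-embedding and self-attention steps shared by these transformer-based detectors can be sketched in NumPy as below. This is a generic single-head attention layer under assumed shapes, not the architecture of ICT or UIA-ViT; the weight matrices are placeholders for learned parameters, and the function names are ours.

```python
import numpy as np


def patchify(img, patch):
    """Split an (H, W, C) image into flattened non-overlapping patches.

    Returns (N, patch * patch * C) with N = (H // patch) * (W // patch).
    """
    h, w, c = img.shape
    gh, gw = h // patch, w // patch
    x = img[:gh * patch, :gw * patch].reshape(gh, patch, gw, patch, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(gh * gw, patch * patch * c)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over patch tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)  # softmax: each row sums to 1
    return attn @ v, attn
```

A patch embedding is obtained by multiplying the flattened patches by a learned projection matrix, e.g. `tokens = patchify(img, 4) @ w_embed`. Each row of `attn` then shows how strongly one patch attends to every other patch, which is the kind of inter-patch relation that consistency-based detectors exploit.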
Interpretable spatial–temporal video transformer (ISTVT) [88] improves the exploitation of joint spatial–temporal cues for robust Deepfake detection. A decomposed spatial–temporal self-attention captures localized artifacts and temporal dynamics. Visualization via relevance propagation explains model reasoning on both dimensions. Strong detection performance is demonstrated on FaceForensics++ and other datasets, with superior cross-dataset generalization. The transformer structure enhanced by explicit spatial–temporal modeling outperforms recurrent and 3D convolutional approaches. Interpretability insights are provided into spatiotemporal patterns indicative of manipulation learned by the model. By advancing joint spatial–temporal reasoning while remaining interpretable, ISTVT moves toward truly understanding and exposing the underlying footprint of forged multimedia.

The mismatched temporal patterns between audio and video can also help detect AI-generated videos. AVoiD-DF [89] tackles Deepfake detection by modeling audio-visual inconsistencies in multimodal media. AVoiD-DF encodes spatiotemporal cues and then fuses modalities through joint decoding and cross-modal classification. The framework exploits intermodal and intramodal discrepancies indicative of manipulation. A new benchmark dataset, DefakeAVMiT, contains videos with either visual or audio tampering via diverse methods, enabling multimodal research.

2.3.2. Detection of GAN-Manipulated Deepfake

Neural-ODE [90], a neural ordinary differential equation model, is trained to predict heart rates from original videos. When tested on commercial and database Deepfake, the model successfully predicts their fake heart rates. By showing that physiological signals in synthesized videos are detectable, this Neural-ODE-based heart rate prediction offers a novel approach to automatically discerning real from forged footage.

Face X-ray [91] reveals blending boundaries in forged faces without relying on the specifics of any manipulation technique. Observing that most methods share a common blending step, Face X-ray exposes composite images by revealing the blending boundary in forged images and its absence in real ones. Because it requires only the blending logic at training time, Face X-ray generalizes to unseen state-of-the-art techniques, unlike existing detectors that rely on method-specific artifacts.
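Face X-ray formalizes this with a soft blending mask M: a forged image is modeled as I_M = M ⊙ I_F + (1 - M) ⊙ I_B, and its X-ray is B = 4 · M ⊙ (1 - M), which is zero for real images (no blending) and peaks on the blending boundary. The sketch below implements these two formulas; how masks are generated (e.g., from facial landmarks followed by blurring) is omitted, and the function names are ours.

```python
import numpy as np


def blend(foreground, background, mask):
    """Blend two images with a soft mask M: M * I_F + (1 - M) * I_B."""
    # Broadcast an (H, W) mask over the channel axis of (H, W, C) images.
    m = mask[..., None] if foreground.ndim == 3 else mask
    return m * foreground + (1.0 - m) * background


def face_xray(mask):
    """Face X-ray B = 4 * M * (1 - M): zero where M is 0 or 1, peaking at M = 0.5."""
    return 4.0 * mask * (1.0 - mask)
```

Since the target depends only on the mask, training pairs can be synthesized by blending two real faces, so no samples from any specific manipulation method are needed.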

Matern et al. [55] investigate the visual artifacts of video face editing to expose manipulated footage. Many algorithms exhibit classical computer vision issues stemming from their tracking and editing pipelines. Analyzing current facial editing approaches, such as Deepfakes and Face2Face, reveals their characteristic flaws. Simple visual features can effectively expose such manipulations, using explainable signals that are accessible even to non-experts. These methods adapt easily to new techniques with little data and achieve high AUC despite their simplicity.

Durall et al. [60] made a theoretical analysis suggesting that the upsampling (up-convolution) operations in generative models leave frequency artifacts in generated images, which may give rise to GAN fingerprints. They further noticed that these frequency artifacts appear especially in the high-frequency spectrum, consistent with the observation in [96] that high-frequency components carry more GAN fingerprint information. Thus, a GAN fingerprint can be regarded as a consequence of the frequency distortion caused by upsampling operations in the generative model. Linking fingerprints to frequency distortions in this way has inspired many works to look for Deepfake clues in the frequency domain.
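One common way to inspect such high-frequency distortions, which we adopt here for illustration, is to reduce the 2D Fourier spectrum to a 1D profile by averaging the log power over rings of equal radial frequency (azimuthal averaging). The function name and the log-power scaling are illustrative choices.

```python
import numpy as np


def azimuthal_spectrum(img, eps=1e-8):
    """1D spectrum: log power averaged over rings of equal radial frequency.

    img: (H, W) grayscale image. Returns one value per integer radius,
    from the DC component (index 0) to the highest spatial frequency.
    """
    spectrum = np.fft.fftshift(np.fft.fft2(img))  # move DC to the centre
    log_power = np.log(np.abs(spectrum) ** 2 + eps)
    h, w = img.shape
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2).astype(int)
    # Average all pixels that share the same integer radius.
    sums = np.bincount(radius.ravel(), weights=log_power.ravel())
    counts = np.bincount(radius.ravel())
    return sums / counts
```

Upsampling-induced distortions typically appear as deviations in the tail of this profile (the highest radii), where a simple classifier can separate real from generated images.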

Wang et al. [92] explore a “universal” detector that distinguishes real images from images generated by various CNNs, regardless of architecture or training dataset. With careful preprocessing, data augmentation, and a classifier trained only on ProGAN images, surprisingly strong generalization across architectures and datasets is achieved, even to methods like StyleGAN2. The findings suggest that modern CNN-generated images share systematic flaws that prevent fully realistic synthesis, as evidenced by the success of this simple universal fake detector.

Dang et al. [93] tackle detecting manipulated faces and localizing edited regions, which is crucial as synthesis methods produce highly realistic forgeries. Simply using multitask learning for classification and segmentation prediction provides limited performance. Instead, an attention mechanism is proposed to process and improve classification-focused embeddings by highlighting informative areas. This simultaneously benefits binary real versus fake classification and visualizes manipulated areas for localization. A large-scale fake face dataset enables thorough analysis, showing attention-enhanced models outperform baselines in detecting facial forgeries and revealing the edits through interpretable attention maps. Overall, selective feature improvement and visualization via attention mechanisms provide superior hybrid detection and localization of modern forged face imagery.
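The attention-based refinement can be sketched as an element-wise re-weighting of a feature map by a sigmoid-normalized spatial attention map. This is a simplified assumption of how such a mechanism is commonly wired, not the exact architecture of Dang et al.; the shapes and the function name are hypothetical.

```python
import numpy as np


def apply_attention(features, attention_logits):
    """Re-weight a feature map with a spatial attention map.

    features: (H, W, C) feature map from the backbone.
    attention_logits: (H, W) unnormalised attention map.
    Returns the re-weighted features and the [0, 1] attention map, which can
    be upsampled to visualise the manipulated region.
    """
    att = 1.0 / (1.0 + np.exp(-attention_logits))  # sigmoid
    return features * att[..., None], att
```

The same map serves both goals described above: it emphasizes informative regions for the real-versus-fake classifier and doubles as a localization output.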

Chai et al. [63] propose recognizing Deepfake by analyzing image patches rather than making a single global classification of the image. A shallow network with local receptive fields first predicts whether each patch is real or fake, and the final decision on image authenticity is made by aggregating the classification results of all patches. In this way, a single classifier achieves both detection and localization.
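The patch-then-aggregate design can be sketched as below, assuming a patch classifier has already produced a grid of fake probabilities. Simple averaging is our assumed aggregation rule, and the function name is hypothetical.

```python
def aggregate_patches(patch_probs):
    """Combine a 2D grid of patch-level fake probabilities.

    patch_probs: list of rows, each a list of floats in [0, 1].
    Returns (image_score, heatmap): the mean probability over all patches
    for classification (assumed rule), and the grid itself as a coarse
    localization map.
    """
    flat = [p for row in patch_probs for p in row]
    return sum(flat) / len(flat), patch_probs
```

The heatmap comes for free because the classifier never pools its evidence spatially before the final averaging step.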

FakeLocator [64] tackles detecting and locating GAN-based fake faces by tracing upsampling artifacts within generation pipelines. Observing such imperfections provides valuable clues. A technique called FakeLocator is proposed to produce high-accuracy localization maps at full resolution. An attention mechanism guides training for universality across facial attributes. Data augmentation and sample clustering further improve generalization over various Deepfake methods. By capitalizing on tell-tale upsampling artifacts, FakeLocator advances multimedia forensics by exposing manipulation boundaries in addition to classification.

M2TR [71] detects Deepfakes through multiscale transformers capturing subtle manipulations. Most methods map images to binary predictions, missing consistency patterns indicative of forgery. Multi-modal Multi-scale TRansformer (M2TR) applies transformers across patch scales, exposing local inconsistencies. Frequency information further improves detection and compression robustness via cross-modality fusion. A new high-quality Deepfake dataset, SR-DF, addresses the limitations of diversity and artifacts in existing benchmarks.

2.3.3. Detection Performance Comparisons

We also compare the detection performance of several state-of-the-art methods in Table 6, reporting each detector's performance on the test subset of its training dataset (in-set) and its generalization to an unseen dataset (cross-set). Most Deepfake detectors perform well in-set, achieving over 90% classification accuracy or AUC, and some even exceed 99% accuracy (F3-Net and FakeLocator). However, generalization remains a significant challenge: performance drops considerably on the cross-set, most starkly for LRNet, whose AUC falls from 99.90% in-set to 57.4% cross-set. Generalization is heavily influenced by differences in data distribution between the training and testing datasets, and detectors generally perform better on datasets whose distribution resembles that of the training set. For instance, UIA-ViT and Face X-ray generalize well when tested on datasets with context, quality, and identities similar to the training set, both achieving a cross-set AUC above 90%. These generalization challenges are the primary difficulty in deploying Deepfake detectors in real-world applications, where data (contexts, qualities, and identities) can vary greatly.

Table 6. Detection performance of state-of-the-art detectors, reported as accuracy/AUC (%).

| Classifier | Artifact type | In-set (ACC/AUC) | Cross-set (ACC/AUC) |
|---|---|---|---|
| Xception | Spatial | 99.26/— | —/— |
| UIA-ViT | Spatial | —/99.33 | —/94.68 |
| Capsule-Forensics | Spatial | 93.11/— | —/— |
| F3-Net | Frequency | 99.99/99.9 | —/— |
| Face X-ray | Frequency | —/99.53 | —/95.40 |
| LRNet | Temporal | —/99.90 | —/57.4 |
| FakeLocator | Temporal | 100/— | 93.04/— |

2.4. Discussion of GAN-Based Deepfake

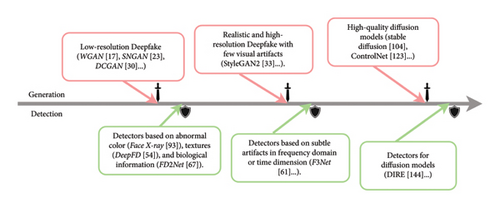

When new Deepfake techniques appear and pose threats, new detectors with corresponding detection capabilities are proposed to protect people from Deepfake misinformation. Advances in generation, in turn, enable Deepfake to evade existing detectors with more realistic outputs. The race between generation and detection is therefore naturally a cat-and-mouse game. We summarize the adversarial development of Deepfake generation and detection in Figure 3.

When Deepfake generators could only produce low-quality outputs [16, 17, 52], detectors relied on intuitive features such as abnormal color [70, 91], textures [53, 54, 56, 65], and biological information [67, 72, 86, 90].

Enhanced GANs then improved generation quality in terms of realism and resolution [31–34, 36], and the improved image quality could evade these naive detectors. Detectors therefore turned to subtler artifacts, such as frequency [61, 66, 77, 80] and dynamic artifacts [73, 84, 88].

Both generation and detection are becoming increasingly powerful and advancing rapidly through mutual competition.

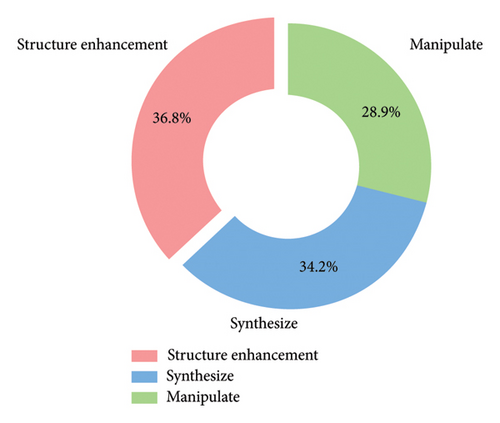

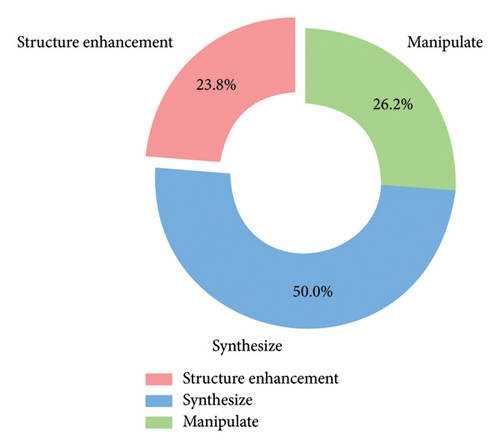

Therefore, when more powerful detectors have been developed to recognize GAN-generated Deepfake, the research on generation has naturally focused on improving GANs to produce more realistic Deepfake content that can evade detection. Despite numerous improvements and variations to enhance generation quality, the researchers encountered several inherent limitations when refining GAN models. Our analysis in Figure 4 illustrates the distribution of research efforts targeting structure enhancement, media manipulation, and synthesizing. We note that 36.8% of the surveyed GANs focus on overcoming known limitations of GANs, such as training instability, mode collapse, and limited diversity, highlighting the fragile nature of the GAN structure.

- The adversarial design makes GAN training unstable. Because the generator and discriminator compete, training requires a delicate balance between them and imposes strict requirements on the convergence path during gradient optimization. Training may oscillate or diverge when an update that improves one network degrades the other.

- The adversarial design can lead to mode collapse when training fails to reach a Nash equilibrium, the point at which neither the generator nor the discriminator can improve by changing unilaterally. Under mode collapse, the generator maps many latent codes to a few outputs that fool the current discriminator, and the GAN fails to converge to the optimal solution.

- The adversarial design leads to insufficient diversity in generated samples because the generator focuses on fooling the discriminator rather than capturing the full diversity of the target distribution. The adversarial design of GANs also limits other properties, such as interpretability and scalability.
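The oscillation and divergence described in the first item can be reproduced even in a two-parameter toy problem. The sketch below is an illustration we chose, not a GAN from the surveyed works: it runs simultaneous gradient descent-ascent on the bilinear game min_x max_y f(x, y) = x·y, whose unique equilibrium is (0, 0). Each update multiplies the distance to the equilibrium by sqrt(1 + lr²), so with any positive step size the iterates spiral outward instead of converging.

```python
import numpy as np


def simultaneous_gda(x0: float, y0: float, lr: float = 0.1, steps: int = 100) -> np.ndarray:
    """Simultaneous gradient descent-ascent on the toy game min_x max_y f(x, y) = x * y."""
    x, y = x0, y0
    trajectory = [(x, y)]
    for _ in range(steps):
        grad_x, grad_y = y, x  # df/dx = y, df/dy = x
        # "Generator" x descends and "discriminator" y ascends, both from the same point.
        x, y = x - lr * grad_x, y + lr * grad_y
        trajectory.append((x, y))
    return np.array(trajectory)


traj = simultaneous_gda(1.0, 1.0)
radii = np.linalg.norm(traj, axis=1)  # distance from the equilibrium (0, 0)
print(radii[0], radii[-1])  # the distance grows at every step
```

Real GAN dynamics are far more complex, but the same mechanism (each player's improvement moving the other away from balance) underlies the instability discussed above.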

Therefore, researchers are eager to find new generative models that are easier to train and produce more realistic outputs. Such models are also expected to offer better interpretability, giving researchers more control over the training process, and better scalability, which could significantly extend generators to cross-modal generation. We summarize the adversarial development of Deepfake generation and detection (from GAN to DM) in Figure 3.

3. Deepfake by DMs

3.1. From GAN to DM

Researchers are exploring alternatives to GANs because of the inherent limitations of GANs in training stability and generative performance. In recent years, DMs have emerged as a promising alternative, gaining significant traction in various generation tasks, including Deepfake generation. To illustrate the inherent connection between GANs and DMs, and why DMs have become more popular for Deepfake generation, we take a closer look at the image generation task.

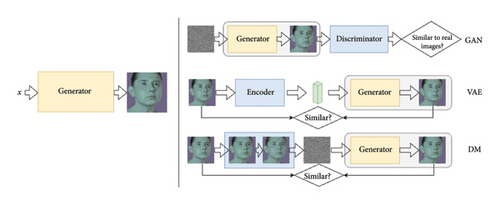

Figure 5 shows the prototype of image generation and the generation pipelines of three generators (i.e., GAN, VAE, and DM). Essentially, a Deepfake generator is expected to produce an image from a given latent variable x (i.e., a latent code). Different generators adopt different strategies to learn from real data and acquire generative capacity. In a GAN, the discriminator compares generated images with real samples, and its feedback updates the generator to improve generation performance. However, this adversarial design brings the limitations discussed above. Instead of an adversarial setting, a VAE learns from real data through reconstruction, which offers another way to train a Deepfake generator. However, a VAE first compresses the original image into a much lower-dimensional latent code with an encoder and then generates images from that code. The low-dimensional latent code greatly limits the VAE's representational capacity and the diversity of its generated images.

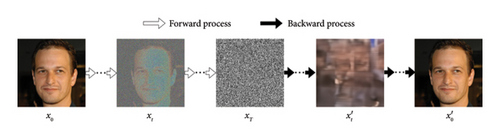

By replacing the VAE encoder with a fixed process that progressively adds noise, the latent code (the noise-perturbed image) keeps the original image size, which enhances the representational capacity of the generator. By removing the adversarial design of GANs and using the progressive noising steps as a VAE-style encoder for reconstruction, DMs achieve more stable training and better generative performance.
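To make the contrast with the VAE concrete, the following sketch draws a DM "latent" with the closed-form forward process q(x_t | x_0) = N(sqrt(ᾱ_t)·x_0, (1 − ᾱ_t)·I) used by DDPM [97], where ᾱ_t is the cumulative product of (1 − β_s). It assumes DDPM's linear β schedule from 1e-4 to 0.02 over T = 1000 steps and uses a random array as a stand-in for an image; the point is only that x_t has exactly the shape of x_0, unlike a compressed VAE code.

```python
import numpy as np


def linear_alpha_bar(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator):
    """Draw x_t ~ q(x_t | x_0) in one shot instead of adding noise step by step."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise


rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # stand-in for a normalized RGB image
alpha_bar = linear_alpha_bar()
x_t, eps = q_sample(image, t=999, alpha_bar=alpha_bar, rng=rng)
print(x_t.shape)  # (3, 64, 64): the "latent" is as large as the image
```

At the last step ᾱ_t is close to zero, so x_t is almost pure Gaussian noise of the same size as the input.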

3.2. Overview of DMs

The reverse (denoising) process is trained to generate Deepfake content from noise images and is the source of the generative capacity of DMs.

DMs can capture multimodal data density functions by training networks to denoise optimally. The iterative sampling process is tractable and can be guided, allowing DMs to trade off diversity and quality without changing the network. By modeling reverse dynamical systems, DMs have rapidly advanced across domains, from images to video, audio, and 3D shapes, achieving state-of-the-art results with stability and flexibility advantages over GANs and VAEs.
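A minimal sketch of the reverse process as ancestral sampling, following the DDPM update x_{t−1} = (x_t − β_t/sqrt(1 − ᾱ_t)·ε_θ(x_t, t))/sqrt(α_t) + σ_t·z with σ_t² = β_t. The trained network ε_θ is replaced by a hypothetical `toy_eps_model`: the exact noise predictor for a dataset that contains a single constant image `c`, which lets the sketch run without any learned weights.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)  # DDPM's linear schedule (assumption for this sketch)
alphas = 1.0 - betas
alpha_bar = np.cumprod(alphas)


def ddpm_sample(eps_model, shape, rng):
    """Ancestral sampling from x_T ~ N(0, I) down to x_0 with sigma_t^2 = beta_t."""
    x = rng.standard_normal(shape)
    for t in reversed(range(T)):  # 0-indexed: t = T - 1 is the noisiest step
        eps = eps_model(x, t)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else np.zeros(shape)  # no noise on the last step
        x = mean + np.sqrt(betas[t]) * noise
    return x


c = np.full((3, 8, 8), 0.5)


def toy_eps_model(x_t, t):
    """Hypothetical stand-in for a trained network: the exact noise predictor when the
    training set holds only the image c (inverts x_t = sqrt(abar) c + sqrt(1 - abar) eps)."""
    return (x_t - np.sqrt(alpha_bar[t]) * c) / np.sqrt(1.0 - alpha_bar[t])


sample = ddpm_sample(toy_eps_model, c.shape, np.random.default_rng(0))
print(np.abs(sample - c).max())  # should be close to zero: the sampler recovers c
```

With a real network, the same loop turns Gaussian noise into samples from the learned data distribution, one denoising step at a time.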

3.3. DM-Based Deepfake Generation

As with GANs, work on DMs first focused on enhancing the properties of DM structures. DM-based generators were then widely studied in Deepfake generation tasks, including synthetic and manipulated Deepfakes. We therefore categorize DM works into structure enhancement, synthesis, and manipulation and list them in Tables 7, 8, and 9, respectively.

| Ref | Structure | Condition | Task | Modality |
|---|---|---|---|---|
| [97] | DDPM | — | Generation | Image |
| [98] | DDIM | — | Generation | Image |
| [99] | DDPM-SDE | —/class | Generation | Image |
| [100] | Improved DDPM | — | Generation | Image |
| [101] | DDPM | — | I2I/editing | Image |
| [102] | ADM | —/class | Generation | Image |
| [103] | ADM | —/class | Generation | Image |
| [104] | VQ-DDM | — | SR | Image |
| [105] | CycleDiffusion | Text | I2I | Image |
| [106] | DPM-Solver++ | — | Generation | Image |

| Ref | Structure | Condition | Task | Modality |
|---|---|---|---|---|
| [107] | ADM | Text | Generation, inpainting | Image |
| [108] | DALL-E | Text | Generation | Image |
| [109] | VQ-DDM | — | Generation | Image |
| [110] | LVDM | —/Text | Generation | Video |
| [111] | Imagen | Text | Generation | Image |
| [112] | GLIDE | Text | Generation | Image |
| [113] | Continual diffusion | Text | Generation | Image |
| [114] | LFDM | Image | Generation | Video |
| [115] | DreamBooth | Text | Generation | Image |
| [116] | DDPM, VQ-DDM | Text | Generation | Image |
| [117] | VQ-DDM | Text | Generation | Image |
| [118] | DCFace | Identity/style | Generation | Image |
| [119] | VQ-DDM | Text | Generation | Image |
| [120] | Imagen Video | Text | Generation | Video |
| [121] | Diffused Heads | Audio | Generation | Video |
| [122] | DDPM | —/Image | Generation | Video |
| [123] | ControlNet | Various | Generation | Image |
| [124] | Uni-ControlNet | Various | Generation | Image |
| [125] | GaussianDreamer | Text | Generation | 3D assets |
| [126] | VideoCrafter1 | Image/text | Generation | Video |
| [127] | VideoCrafter2 | Image/text | Generation | Video |

| Ref | Structure | Condition | Task | Modality |
|---|---|---|---|---|
| [128] | SDEdit | — | Generation, editing | Image |
| [129] | DiffFace | — | Generation | Face |
| [130] | RePaint | — | Inpainting | Image |
| [131] | DiffEdit | class | Editing | Image |
| [132] | UniTune | Text | Editing | Image |
| [133] | DDIM | Text | Editing | Image |
| [134] | DiffusionCLIP | Text | Editing | Image |
| [135] | Blended diffusion | Text | Editing | Image |
| [135] | Palette | Image | I2I | Image |
| [136] | Imagic | Text | Editing | Image |
| [137] | DragDiffusion | Motion | Editing | Image |

3.3.1. Structure Enhancement of DMs

The trailblazing work on DMs is the denoising diffusion probabilistic model (DDPM) [97]. DDPMs are a class of latent variable models inspired by nonequilibrium thermodynamics. Building on a connection between DMs and denoising score matching with Langevin dynamics, DDPM is trained with a weighted variational bound. The model also admits a progressive lossy decompression scheme that can be interpreted as a generalization of autoregressive decoding. By formalizing these connections to thermodynamic diffusion and denoising objectives, this work brings DMs to match or surpass GAN performance on image datasets. Its theoretical grounding and interpretable decoding are valuable properties alongside its sample quality.
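In practice, the weighted bound reduces to the simplified objective L_simple = E_{t, x_0, ε} ||ε − ε_θ(sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, t)||², which regresses the injected noise. The NumPy sketch below computes a Monte Carlo estimate of this loss; the function name and the two toy predictors used to exercise it (one that always predicts zero, one that is exact for a single-image dataset) are hypothetical stand-ins for a U-Net.

```python
import numpy as np


def ddpm_simple_loss(eps_model, x0_batch, alpha_bar, rng):
    """Monte Carlo estimate of L_simple = E ||eps - eps_theta(x_t, t)||^2."""
    n = x0_batch.shape[0]
    t = rng.integers(0, len(alpha_bar), size=n)  # one uniform timestep per example
    eps = rng.standard_normal(x0_batch.shape)    # noise the network must predict
    ab = alpha_bar[t].reshape((n,) + (1,) * (x0_batch.ndim - 1))  # broadcast over pixels
    x_t = np.sqrt(ab) * x0_batch + np.sqrt(1.0 - ab) * eps
    return np.mean((eps - eps_model(x_t, t)) ** 2)


rng = np.random.default_rng(0)
alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))
c = np.full((3, 8, 8), 0.5)
batch = np.broadcast_to(c, (256, 3, 8, 8))  # a batch of copies of one training image


def zero_model(x_t, t):
    return np.zeros_like(x_t)


def oracle_model(x_t, t):
    """Exact noise predictor for the one-image dataset {c}."""
    ab = alpha_bar[t].reshape(-1, 1, 1, 1)
    return (x_t - np.sqrt(ab) * c) / np.sqrt(1.0 - ab)


loss_zero = ddpm_simple_loss(zero_model, batch, alpha_bar, rng)      # about 1 (variance of eps)
loss_oracle = ddpm_simple_loss(oracle_model, batch, alpha_bar, rng)  # essentially 0
```

Training a real DDPM amounts to minimizing this quantity with gradient descent over the network parameters.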

DDIM [98] accelerates DDPM sampling. While DDPMs generate high-quality images without adversarial training, sampling is slow because it requires simulating a Markov chain for many steps. DDIMs instead use non-Markovian diffusion processes that correspond to faster, deterministic generative processes, while keeping the same training procedure as DDPMs. Experiments show that DDIMs produce high-quality samples 10× to 50× faster than DDPMs in terms of wall-clock time. DDIMs also enable computation–quality trade-offs, semantically meaningful interpolation in latent space, and reconstruction of observations with very low error.
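DDIM's deterministic sampler (η = 0) first estimates x_0 from the predicted noise and then jumps to an earlier timestep without injecting fresh noise, which is what allows it to skip most of the 1000 training steps. The sketch below assumes DDPM's linear schedule, 50 evenly spaced timesteps, and a hypothetical toy noise predictor; it illustrates the update rule rather than reproducing the reference implementation.

```python
import numpy as np

T = 1000
alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, T))


def ddim_sample(eps_model, x_T, num_steps=50):
    """Deterministic DDIM sampling (eta = 0) on an evenly spaced subsequence of timesteps."""
    timesteps = np.linspace(T - 1, 0, num_steps).round().astype(int)
    x = x_T
    for i, t in enumerate(timesteps):
        ab_t = alpha_bar[t]
        # alpha_bar = 1 after the last step, i.e., the clean image.
        ab_prev = alpha_bar[timesteps[i + 1]] if i + 1 < num_steps else 1.0
        eps = eps_model(x, t)
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)      # predicted clean image
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps  # no fresh noise added
    return x


c = np.full((3, 8, 8), 0.5)


def toy_eps_model(x_t, t):
    """Hypothetical exact noise predictor for a dataset containing only the image c."""
    return (x_t - np.sqrt(alpha_bar[t]) * c) / np.sqrt(1.0 - alpha_bar[t])


x_T = np.random.default_rng(0).standard_normal(c.shape)
out_a = ddim_sample(toy_eps_model, x_T)
out_b = ddim_sample(toy_eps_model, x_T)  # same x_T gives the same output: the map is deterministic
```

Because the mapping from x_T to x_0 is deterministic, x_T acts as a latent code, which is what enables the interpolation and reconstruction properties mentioned above.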

Song et al. [99] present a framework that unifies score-based generative modeling and diffusion probabilistic models under continuous stochastic processes. A forward stochastic differential equation (SDE) injects noise to perturb data into a known prior, while a reverse-time SDE transforms the prior back into the data distribution by gradually removing noise. The key insight is that the reverse-time SDE depends only on the score (the gradient of the log density) of the perturbed data, which neural networks can estimate accurately for generative modeling. The framework encapsulates prior methods and introduces new sampling procedures and capabilities, including a predictor–corrector sampler that corrects errors in the discretized reverse-time SDE and an equivalent neural ODE that enables sampling and likelihood evaluation. The unified perspective also enables high-fidelity 1024 × 1024 image generation, a first for score-based models. By bridging score modeling and diffusion through continuous processes, the work significantly improves both sampling techniques and sample quality.
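For reference, the three equations at the core of this framework can be written as follows, using notation we introduce here (it is not defined elsewhere in this survey): f is the drift, g the diffusion coefficient, w a standard Wiener process, w̄ a reverse-time Wiener process, and p_t the marginal density of the perturbed data at time t. The first line is the forward SDE, the second the reverse-time SDE (integrated backward in time), and the third the deterministic probability flow ODE that shares the same marginals. In practice, the score ∇_x log p_t(x) is replaced by a learned network s_θ(x, t).

$$
\mathrm{d}\mathbf{x} = \mathbf{f}(\mathbf{x}, t)\,\mathrm{d}t + g(t)\,\mathrm{d}\mathbf{w}
$$

$$
\mathrm{d}\mathbf{x} = \left[\mathbf{f}(\mathbf{x}, t) - g(t)^{2}\,\nabla_{\mathbf{x}} \log p_t(\mathbf{x})\right]\mathrm{d}t + g(t)\,\mathrm{d}\bar{\mathbf{w}}
$$

$$
\mathrm{d}\mathbf{x} = \left[\mathbf{f}(\mathbf{x}, t) - \tfrac{1}{2}\, g(t)^{2}\,\nabla_{\mathbf{x}} \log p_t(\mathbf{x})\right]\mathrm{d}t
$$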

Improved DDPM [100] shows that simple modifications to the standard DDPM framework allow for both high-fidelity generation and competitive density estimation. Learning the variances of the reverse diffusion process allows sampling with an order of magnitude fewer forward passes (about 10×) with negligible quality loss, which is important for practical deployment. Precision–recall metrics show that DDPMs cover the target distribution better than GANs. Crucially, DDPM performance scales smoothly with model capacity and compute. By enabling DMs to match or exceed GANs in sample quality, likelihood evaluation, and efficiency, this work greatly expands their viability as generative models for images. The demonstrated control over quality–compute trade-offs and the scalability further attest to their value in real-world use.
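One of the modifications introduced in Improved DDPM [100] is a cosine noise schedule, which destroys information more gradually than the linear schedule. The sketch below follows the form ᾱ_t = f(t)/f(0) with f(t) = cos²(((t/T + s)/(1 + s))·π/2), the offset s = 0.008, and the clipping of β_t at 0.999 described in that work; the function names are our own.

```python
import numpy as np


def cosine_alpha_bar(T: int, s: float = 0.008) -> np.ndarray:
    """alpha_bar_t = f(t) / f(0) with f(t) = cos^2(((t / T + s) / (1 + s)) * pi / 2), t = 0..T."""
    steps = np.arange(T + 1)
    f = np.cos(((steps / T + s) / (1 + s)) * np.pi / 2) ** 2
    return f / f[0]  # normalized so that alpha_bar_0 = 1


def betas_from_alpha_bar(alpha_bar: np.ndarray, max_beta: float = 0.999) -> np.ndarray:
    """beta_t = 1 - alpha_bar_t / alpha_bar_{t-1}, clipped to avoid singular values near t = T."""
    return np.clip(1.0 - alpha_bar[1:] / alpha_bar[:-1], 0.0, max_beta)


abar_cos = cosine_alpha_bar(1000)
betas = betas_from_alpha_bar(abar_cos)

# For comparison: the linear schedule has already lost most of the signal by mid-trajectory.
abar_lin = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))
print(abar_cos[500], abar_lin[499])  # the cosine value is much larger at the midpoint
```

Learning the reverse-process variances, the other key change, requires a trained network and is not shown here.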

Iterative latent variable refinement (ILVR) [101] addresses controllable image generation by guiding the stochastic generative process of DDPMs. ILVR leverages the generative process of a pretrained DDPM to synthesize high-quality images that match a reference image. Through iterative refinement, a single unconditional DDPM can sample diverse images specified by references without retraining. Applications in multiscale image generation, multidomain image translation, paint-to-image synthesis, and scribble-based editing demonstrate controllable generation with a single model. This added control addresses a key limitation of DDPMs while retaining their high image fidelity. By steering random diffusion trajectories toward desired targets, ILVR significantly advances both the flexibility and practicality of DMs for graphics tasks that demand guided synthesis.
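ILVR's core step takes an unconditional proposal x'_{t−1} from the DDPM and replaces its low-frequency content with that of the reference image y noised to the same level: x_{t−1} = φ_N(y_{t−1}) + x'_{t−1} − φ_N(x'_{t−1}), where φ_N is a linear low-pass filter that downsamples and upsamples by a factor N. In this sketch, φ_N is assumed to be average pooling followed by nearest-neighbor upsampling, and random arrays stand in for the DDPM proposal and the noised reference.

```python
import numpy as np


def low_pass(img: np.ndarray, N: int) -> np.ndarray:
    """phi_N (assumed form): N x N average pooling, then nearest-neighbor upsampling back."""
    c, h, w = img.shape
    pooled = img.reshape(c, h // N, N, w // N, N).mean(axis=(2, 4))
    return pooled.repeat(N, axis=1).repeat(N, axis=2)


def ilvr_refine(x_prop: np.ndarray, y_noisy: np.ndarray, N: int) -> np.ndarray:
    """Keep the high frequencies of the proposal and take the low frequencies from the reference."""
    return low_pass(y_noisy, N) + x_prop - low_pass(x_prop, N)


rng = np.random.default_rng(0)
x_prop = rng.standard_normal((3, 16, 16))   # stand-in for the DDPM proposal x'_{t-1}
y_noisy = rng.standard_normal((3, 16, 16))  # stand-in for the noised reference y_{t-1}
x_refined = ilvr_refine(x_prop, y_noisy, N=4)
```

Larger N constrains only coarser structure, so the factor controls how closely samples follow the reference.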

ADM [102] improves DMs for unconditional and conditional image generation through architecture enhancements and classifier guidance, a method that trades off diversity for fidelity using gradients from a classifier. ADM achieves lower FID scores than the best GANs of the time on benchmarks such as ImageNet, indicating higher quality and diversity in generated images. The paper also discusses the societal impacts and limitations of DMs, highlighting their potential for wide-ranging applications while acknowledging their slow sampling speed and the absence of an explicit latent representation.
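Classifier guidance can be expressed as a modified noise prediction, ε̂ = ε_θ(x_t) − s·sqrt(1 − ᾱ_t)·∇_{x_t} log p_φ(y | x_t), where s is the guidance scale. The sketch assumes a hypothetical linear logistic classifier so that the gradient has a closed form; a real implementation backpropagates through a noise-aware classifier trained on noisy images x_t. It shows that guidance pushes the implied clean-image estimate toward the target class.

```python
import numpy as np


def classifier_guided_eps(eps, grad_log_prob, alpha_bar_t, scale):
    """eps_hat = eps_theta(x_t) - s * sqrt(1 - alpha_bar_t) * grad_x log p(y | x_t)."""
    return eps - scale * np.sqrt(1.0 - alpha_bar_t) * grad_log_prob


def toy_grad_log_prob(x, w):
    """Gradient of log sigmoid(w . x) w.r.t. x for a hypothetical linear classifier."""
    z = float(np.dot(w, x))
    return (1.0 - 1.0 / (1.0 + np.exp(-z))) * w


rng = np.random.default_rng(0)
x_t = rng.standard_normal(16)
w = rng.standard_normal(16)    # hypothetical classifier weights for the target class
eps = rng.standard_normal(16)  # hypothetical output of eps_theta(x_t)
alpha_bar_t = 0.5

guided = classifier_guided_eps(eps, toy_grad_log_prob(x_t, w), alpha_bar_t, scale=3.0)
x0_plain = (x_t - np.sqrt(1 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)
x0_guided = (x_t - np.sqrt(1 - alpha_bar_t) * guided) / np.sqrt(alpha_bar_t)
# x0_guided scores higher under the classifier than x0_plain.
```

Raising the scale s increases fidelity to the class at the expense of diversity, which is the trade-off described above.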

Ho and Salimans [103] introduce classifier-free guidance for conditional DMs to improve sample quality without requiring a separate classifier. Classifier guidance uses classifier gradients to trade off diversity and fidelity, but it requires training an additional classifier. Instead, a conditional and an unconditional DM are jointly trained (in practice, a single network whose condition is randomly dropped). Combining the conditional and unconditional score estimates yields a fidelity–diversity trade-off similar to that of classifier guidance, while avoiding the cost and complexity of a second model. By showing that the generative model alone contains all the signals needed for controllable generation after training, this work makes guidance simpler and more efficient for denoising diffusion.
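Classifier-free guidance combines the two predictions of the same network as ε̃ = (1 + w)·ε_θ(x_t, c) − w·ε_θ(x_t), with guidance strength w. During training, the condition is replaced by a null token with some probability so that one network learns both predictions. The following minimal sketch covers both pieces; the null label value and the probabilities in the usage lines are illustrative assumptions.

```python
import numpy as np


def cfg_eps(eps_cond: np.ndarray, eps_uncond: np.ndarray, w: float) -> np.ndarray:
    """Guided noise estimate (1 + w) * eps(x, c) - w * eps(x); w = 0 is plain conditional sampling."""
    return (1.0 + w) * eps_cond - w * eps_uncond


def drop_condition(labels: np.ndarray, p_uncond: float, null_label: int, rng: np.random.Generator):
    """Training-time condition dropout: replace each label by null_label with probability p_uncond."""
    mask = rng.random(labels.shape) < p_uncond
    return np.where(mask, null_label, labels)


rng = np.random.default_rng(0)
eps_c, eps_u = rng.standard_normal(8), rng.standard_normal(8)  # stand-ins for the two predictions
guided = cfg_eps(eps_c, eps_u, w=2.0)  # extrapolates away from the unconditional estimate
train_labels = drop_condition(np.arange(10), p_uncond=0.1, null_label=-1, rng=rng)
```

The guided estimate can be read as eps_uncond + (1 + w)·(eps_cond − eps_uncond), i.e., an extrapolation along the direction the condition adds.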

Stable diffusion (SD) [104] formulates diffusion generative modeling in the latent space of pretrained autoencoders. While pixel-based models achieve promising synthesis quality, their optimization is expensive and their sampling is sequential and slow. By balancing complexity reduction and detail preservation in the latent space, SD reaches a near-optimal trade-off, enabling high-fidelity generation with greatly reduced computation. The latent formulation also allows high-resolution synthesis in a convolutional manner. With cross-attention layers, SD can be conditioned flexibly on inputs such as text and bounding boxes. Experiments on unconditional generation, class-conditional generation, inpainting, text-to-image synthesis, and super-resolution achieve state-of-the-art or highly competitive results while significantly lowering cost compared with pixel-based models. By shifting the modeling domain from pixel space to latent space, SD enables the practical deployment of DMs through substantial computational savings and flexible conditional generation.
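SD's conditioning mechanism follows Attention(Q, K, V) = softmax(Q·K^T/sqrt(d))·V, where Q is projected from the flattened latent feature map and K, V are projected from the conditioning tokens (e.g., text embeddings). The single-head NumPy sketch below uses random toy weights and sizes (all assumptions, far smaller than in SD) and omits the multi-head split and output projection of the real layer.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(latent_tokens, context_tokens, W_q, W_k, W_v):
    """Queries come from the image latent; keys and values come from the condition."""
    Q = latent_tokens @ W_q   # (n_latent, d)
    K = context_tokens @ W_k  # (n_context, d)
    V = context_tokens @ W_v  # (n_context, d)
    attn = softmax(Q @ K.T / np.sqrt(Q.shape[-1]), axis=-1)  # (n_latent, n_context)
    return attn @ V, attn


rng = np.random.default_rng(0)
latent = rng.standard_normal((16 * 16, 32))  # flattened 16 x 16 feature map with 32 channels (toy)
context = rng.standard_normal((8, 48))       # 8 conditioning tokens of width 48 (toy)
d = 16
W_q = rng.standard_normal((32, d))
W_k = rng.standard_normal((48, d))
W_v = rng.standard_normal((48, d))
out, attn = cross_attention(latent, context, W_q, W_k, W_v)
```

Each latent position thus receives a weighted mixture of the condition tokens, which is how a text prompt can influence every spatial location of the generated image.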

CycleDiffusion [105] defines a Gaussian latent space for DMs, together with a reconstructable encoder that maps images into this space. Whereas GANs and VAEs map a simple Gaussian latent code to images, DMs generate images through a sequence of denoising steps; CycleDiffusion explicitly models a Gaussian latent code for DMs, which brings several benefits. One key finding is that a common latent space emerges from DMs trained independently on related domains. This enables applications such as unpaired image-to-image translation and text-to-image editing with a single encode–decode cycle. Additionally, the latent formulation enables unified guidance of DMs and GANs via energy-based control signals. Experiments on CLIP-guided conditional image generation demonstrate that DMs capture distribution modes and outliers better than GANs. By exposing the continuous latent structure underlying the sequential sampling process, this Gaussian latent formulation lets DMs be used intuitively as latent variable models.
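The idea behind this latent space can be sketched with a stochastic sampler x_{t−1} = μ(x_t, t) + σ_t·z_t. To encode an image, sample a forward trajectory x_1, ..., x_T from q(x_{1:T} | x_0) and solve each step for the noise z_t that reproduces it. The latent (x_T, z_T, ..., z_1) decodes back to x_0 exactly under the same model, and decoding it with a second model gives unpaired translation. The mean functions below are hypothetical stand-ins for two DMs trained on related domains, and the short schedule is an assumption.

```python
import numpy as np

T = 50
betas = np.linspace(1e-3, 0.2, T)  # short toy schedule (assumption)
alphas = 1.0 - betas
sigmas = np.sqrt(betas)            # sigma_t > 0 at every step, including the last


def encode(x0, mean_fn, rng):
    """Map x0 to the Gaussian latent (x_T, [z_T, ..., z_1]) of a stochastic sampler."""
    xs = [x0]
    for t in range(T):  # sample x_1..x_T from the forward process q(x_{1:T} | x_0)
        xs.append(np.sqrt(alphas[t]) * xs[-1] + np.sqrt(betas[t]) * rng.standard_normal(x0.shape))
    noises = []
    for t in reversed(range(T)):  # step t maps xs[t + 1] to xs[t]; solve for its noise
        noises.append((xs[t] - mean_fn(xs[t + 1], t)) / sigmas[t])
    return xs[-1], noises


def decode(x_T, noises, mean_fn):
    """Run the sampler with the stored noises instead of fresh ones."""
    x = x_T
    for z, t in zip(noises, reversed(range(T))):
        x = mean_fn(x, t) + sigmas[t] * z
    return x


def source_mean(x, t):  # hypothetical denoiser of a "source-domain" model
    return x / np.sqrt(alphas[t])


def target_mean(x, t):  # hypothetical denoiser of a "target-domain" model
    return (x + 0.1 * betas[t]) / np.sqrt(alphas[t])


rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))
x_T, noises = encode(x0, source_mean, rng)
recon = decode(x_T, noises, source_mean)       # exact reconstruction with the same model
translated = decode(x_T, noises, target_mean)  # same latent, different model
```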

DPM-Solver++ [106] proposes a training-free fast sampler that applies to both continuous-time and discrete-time DMs. By solving the diffusion ODE with a data-prediction model and more accurate high-order numerical methods, DPM-Solver++ achieves high-quality sampling, including guided sampling, in significantly fewer steps than previous methods.
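The following sketches the multistep second-order update (often called DPM-Solver++(2M)) in its data-prediction form. It is parameterized by λ = log(α/σ) of a variance-preserving schedule with α² + σ² = 1. The first step is the first-order update, which coincides with DDIM. The model signature `data_model(x, lam)` and the λ grid are assumptions for illustration. The check uses a hypothetical perfect denoiser for a one-point dataset, for which the update is exact.

```python
import numpy as np


def vp_alpha_sigma(lam):
    """Variance-preserving schedule from lambda = log(alpha / sigma): alpha^2 + sigma^2 = 1."""
    alpha = np.sqrt(1.0 / (1.0 + np.exp(-2.0 * lam)))
    sigma = np.sqrt(1.0 / (1.0 + np.exp(2.0 * lam)))
    return alpha, sigma


def dpm_solver_pp_2m(data_model, x_T, lambdas):
    """Multistep second-order solver with a data-prediction model x_theta(x, lambda).
    `lambdas` increases from the noisiest level to the cleanest one."""
    x = x_T
    prev_x0, prev_h = None, None
    for i in range(1, len(lambdas)):
        a_prev, s_prev = vp_alpha_sigma(lambdas[i - 1])
        a_cur, s_cur = vp_alpha_sigma(lambdas[i])
        h = lambdas[i] - lambdas[i - 1]
        x0 = data_model(x, lambdas[i - 1])
        if prev_x0 is None:  # first step: first-order update (equivalent to DDIM)
            D = x0
        else:  # later steps: extrapolate using the previous data prediction
            r = prev_h / h
            D = (1.0 + 1.0 / (2.0 * r)) * x0 - (1.0 / (2.0 * r)) * prev_x0
        x = (s_cur / s_prev) * x - a_cur * np.expm1(-h) * D  # expm1(-h) = e^{-h} - 1
        prev_x0, prev_h = x0, h
    return x


x_star = np.array([0.3, -0.7, 1.2])


def data_model(x, lam):
    """Hypothetical perfect denoiser for a dataset containing only x_star."""
    return x_star


lambdas = np.linspace(-5.0, 5.0, 20)  # 19 solver steps (assumed grid)
x_T = np.random.default_rng(0).standard_normal(3)
x_out = dpm_solver_pp_2m(data_model, x_T, lambdas)
```

Reusing the previous step's prediction gives second-order accuracy at the cost of one network evaluation per step, which is why such solvers need far fewer steps than ancestral sampling.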