Collaborative Integration of Vehicle and Roadside Infrastructure Sensor for Temporal Dependency-Aware Task Offloading in the Internet of Vehicles

Abstract

With advances in in-vehicle computing and Multi-access Edge Computing (MEC), the Internet of Vehicles (IoV) is increasingly capable of supporting Vehicle-oriented Edge Intelligence (VEI) applications, such as autonomous driving and Intelligent Transportation Systems (ITSs). However, IoV systems that rely solely on vehicular sensors are limited in forecasting events beyond the current roadway, which are critical for regional transportation management. Moreover, the inherent temporal dependency in VEI application data poses risks of interruptions, impeding the seamless tracking of incremental information. To address these challenges, this paper introduces a joint task offloading and resource allocation strategy within an MEC environment that collaboratively integrates vehicles and Roadside Infrastructure Sensors (RISs). The strategy accounts for the Doppler shift caused by vehicle mobility and for the Tolerance for Interruptions of Incremental Information (T3I) in VEI applications. We establish a decision-making framework that balances delay, energy consumption, and the T3I metric by formulating task offloading as a stochastic network optimization problem. Using Lyapunov optimization, we decompose this problem into three subproblems: optimizing local computational capacity, optimizing MEC computational capacity, and making comprehensive offloading decisions. To achieve efficient offloading, we develop algorithms that separately optimize offloading scheduling, channel allocation, and transmission power control. Notably, we incorporate a Potential Minimum Point (PMP) algorithm that boosts parallel processing and reduces the computational scale through matrix decomposition. Evaluations of our algorithm show that it excels in both complexity and accuracy, with accuracy improvements ranging from 74.3% to 114.0% in asymmetric resource environments. Simulation and experimental studies on offloading performance validate the effectiveness of our framework, which balances network performance, reduces latency, and improves system stability.

1. Introduction

The advent and commercial deployment of Fifth-generation (5G) and Beyond 5G (B5G) communication technologies have catalysed an unprecedented expansion in network access and interconnectivity [1], effectively steering the Internet of Things (IoT) [2] toward a more comprehensive Internet of Everything (IoE) [3]. The 5G and B5G support Enhanced Mobile Broadband (eMBB), providing a 100 Mbps end-user data rate and peak data rates of 10–20 Gbps. They also enable Massive Machine-type Communications (mMTCs) and Ultra-reliable Low-latency Communications (URLLCs), achieving end-to-end latency as low as 1 ms and a reliability of 99.99% [4]. Cisco predicts that by 2030, the number of connected devices will reach 500 billion [5]. As a pivotal component of the IoT ecosystem, the Internet of Vehicles (IoV) [6] benefits immensely from this enhanced interconnectivity trend, evolving to enhance the utilization of information through Vehicle-to-Everything (V2X) communications [7]. This evolution trend considerably broadens the information perception capabilities of the IoV, enabling vehicles to access a wide array of data from diverse sources. These sources include not only multimodal data from the vehicle itself, such as wireless sensors, precise positioning, Light Detection and Ranging (LiDAR), and cameras, but also data from other IoE systems. We refer to the sensors that extend the perception capabilities of vehicles from other IoE systems as Roadside Infrastructure Sensors (RISs). Furthermore, the multimodal data captured by vehicles can also be seamlessly integrated into IoE systems such as urban Intelligent Transportation Systems (ITSs) [8, 9]. With the ongoing advancement of Artificial Intelligence (AI) technology, the potential of diverse informational data is being thoroughly explored and leveraged, propelling the IoV beyond mere basic data transmission toward delivering sophisticated intelligent services [10, 11].

However, advancing toward fully interconnected V2X and intelligent services, which rely on the integration of diverse information sources, requires substantial reliance on Multi-access Edge Computing (MEC) [12]. On the one hand, the intelligence achievable solely through vehicle capabilities is limited. Despite rapid developments in processing power and battery capacity in new-energy vehicles [13, 14], their in-vehicle computing capabilities remain inadequate to support complex AI applications such as Large Language Models (LLMs) [15]. On the other hand, the centralized cloud faces challenges related to high latency and bandwidth limitations, particularly in real-time V2X scenarios [16]. For instance, predicting traffic accidents or congestion requires rapid data processing, which centralized cloud systems struggle to provide due to inherent delays. In contrast, MEC improves network and computational responsiveness by deploying services at the edge of the Radio Access Network (RAN), providing vehicles with the low-latency, high-bandwidth services essential for robust applications. Moreover, MEC serves as a one-stop platform with extensive computational capabilities, capable of integrating and processing a diverse range of data. These data include perceptual and status information, passenger health data across multiple vehicles, and helpful auxiliary data from other IoE systems, thereby providing globally optimal support for the IoV. The crucial role of MEC as a platform for direct communication among heterogeneous systems has accelerated the transition toward a Vehicle-oriented Edge Intelligence (VEI) paradigm [17].

This VEI paradigm refers to a pattern where data from multiple information sources are integrated at the network edge, enabling vehicles, RIS devices, and MEC to collaborate in joint processing to support intelligent services for vehicles. Consequently, the paradigm should possess at least the following characteristics. First, functional decoupling is essential, allowing flexible collaboration between devices and MEC to make autonomous decisions and dynamically adjust processing strategies based on environmental conditions. Second, joint resource allocation is vital, with MEC required to efficiently optimize computational and communication resources to support intelligence services under limited resources. Third, a mechanism is necessary to manage the sequencing of data between vehicles, RIS devices, and MEC. This mechanism should consider temporal constraints in data generation, processing, and transmission, which is crucial for ensuring data coherence and applicability. Neglecting these VEI-specific characteristics could compromise strategic effectiveness and introduce substantial risks in practical implementations. These characteristics offer several advantages. Functional decoupling within IoV services can be categorized into vehicle-local and MEC-based components. Urgent real-time information is processed directly within the vehicle, while data demanding intensive computation and collaboration is offloaded to MEC for joint processing with other vehicles or RIS devices. This approach reduces delays, enhances data processing capabilities, and delivers comprehensive insights across various IoE systems. In addition, local processing of sensitive information strengthens security and reduces vulnerability to network threats. For simplicity, this paper does not explore the complexities introduced by security and privacy issues. 
In this paper, we refer to the applications within the VEI paradigm as VEI applications, including autonomous driving, Augmented Reality (AR)-assisted driving, ITS, and digital services for transportation and logistics [18–21].

Despite the considerable potential of VEI applications, numerous pressing issues within this paradigm have not received sufficient attention. Deng et al. [22] investigated an edge collaborative task offloading and splitting strategy for MEC in 5G IoV networks. Their work focuses on managing the frequent handovers caused by high mobility by splitting tasks across multiple MEC servers to optimize latency and energy consumption. Sun et al. [23] tackled task offloading in MEC-enabled IoV networks, focusing on the challenges posed by the interdependence of in-vehicle tasks. Their work introduces priority-based task scheduling to minimize processing delays by optimizing the sequence of task offloading. Dang et al. [24] addressed computation offloading in IoV with a focus on achieving low latency, low energy consumption, and low cost despite limited resources on MEC servers. Their work proposes a three-layer task offloading overhead model that facilitates edge-cloud collaboration among multiple vehicle users and MEC servers. These studies mainly focus on the interaction between vehicles and MEC, with the goal of minimizing latency during task offloading. They aim to optimize task offloading between vehicles and MEC servers in different scenarios, addressing factors such as multiple MEC nodes or intervehicle relationships. Some also consider vehicle mobility to enhance offloading efficiency. However, these studies tend to concentrate on the vehicle itself and fail to fully utilize the MEC to integrate diverse data sources. Moreover, they do not explore the paradigms necessary for VEI applications, limiting the intelligent potential of vehicle-edge interactions.

Therefore, there is a significant lack of exploration in research regarding service design specifically tailored to VEI applications. Current studies fail to fully leverage the diverse information sources integrated within the MEC platform and often overlook the unique data dependencies and constraints that VEI applications entail. In particular, existing vehicle sensing capabilities are generally confined to the vehicle itself, resulting in insufficient predictive ability for events beyond the current roadway. As a result, collaboration with RIS devices remains underdeveloped, hindering the integration of more comprehensive data sources. In addition, the temporal dependency-aware task data within VEI applications increases the risk of task interruptions during execution, which can compromise the overall system performance. Current studies also neglect effective management of data sequencing dependencies in task offloading for VEI applications. There is a clear gap in the design of mechanisms that ensure data coherence and applicability, which are essential for maintaining the timeliness of task execution. This lack of focus on VEI-specific challenges limits the potential for intelligent and dynamic vehicle-edge interactions, preventing the development of optimized services for dynamic, real-time VEI applications.

The main contributions of this paper are summarized as follows.

(1) Introducing the T3I metric: we conduct a thorough analysis of VEI applications, highlighting their reliance on historical data for tasks such as semantic feature tracking and 3D reconstruction. To address this reliance, we propose the Tolerance for Interruptions of Incremental Information (T3I) metric, a critical constraint applicable to both vehicles and RIS devices. This metric ensures temporal continuity of data during the task offloading process. By maintaining data sequence integrity, T3I improves adaptability in real-world scenarios, ensuring seamless operation across both vehicles and RIS devices.

(2) RIS collaboration strategy: we design a task offloading and resource allocation strategy specifically tailored for VEI applications, integrating the collaboration of vehicles and RIS devices. This strategy dynamically adjusts task offloading and resource allocation, optimizing the utilization of wireless and computational resources. In addition, our strategy addresses channel design issues arising from the different multipath propagation scenarios of vehicles and RIS devices, with a particular focus on Doppler shifts caused by vehicle mobility. By addressing the lack of multisource data collaboration, our approach improves overall system efficiency and performance.

(3) Decoupled decision-making framework: by applying Lyapunov optimization, we decompose the challenges of VEI system stability and energy efficiency optimization into more manageable subproblems. In addition, we introduce the Potential Minimum Point (PMP) algorithm, which incorporates the T3I metric into the matrix decomposition process. This decomposition strategy not only controls the interruption risk but also leverages this control to accelerate computation, improving both the efficiency and the response speed of our solution.

(4) Effective performance validation: through extensive simulations and experimental validations, we demonstrate the superiority of the proposed algorithm in managing environments that support VEI applications. The results show improvements in network performance, significant reductions in latency, and enhanced system stability. These findings confirm the effectiveness of our framework and indicate its robustness and scalability in VEI scenarios, supporting its potential for adoption in real systems.

The remainder of this article is organized as follows: Section 2 analyses the related works. The system model in the physical layer is described in Section 3. The problem formulation of this queue model is established in Section 4. Simulation results and performance evaluations are discussed in Section 5. The article concludes with Section 6.

2. Related Works

MEC-enabled IoV provides service environments and computational capabilities through RAN close to vehicles, offering several advantages, including reduced latency, improved data collaboration, and enhanced support for diverse services. In addition, the ability of MEC to offload heavy tasks from vehicles to edge servers optimizes resource utilization and energy consumption. However, this technology also faces significant challenges. MEC-enabled IoV can be applied to a variety of scenarios, each with unique challenges related to network topologies, resource availability, and task offloading requirements, which further complicates the design and implementation of efficient solutions. The complexity of these factors makes it challenging to address all aspects comprehensively, leading existing studies to simplify task offloading models for manageability.

Current research on task offloading and resource allocation in MEC-enabled IoV primarily focuses on optimizing power consumption, minimizing task offloading delay, and addressing the challenges posed by vehicle mobility. These studies emphasize different optimization objectives depending on the specific needs of each scenario. The varying priorities, such as reducing energy usage, minimizing latency, or adapting to vehicle mobility, underscore the complexity of optimizing MEC-enabled IoV systems, as each scenario often requires a careful balancing of competing requirements. In terms of power consumption optimization, research has increasingly shifted to green computing, focusing on energy-efficient solutions and sustainable system designs. Tan et al. [25] evaluated energy-efficient computation offloading strategies in MEC-enabled IoV networks, deriving closed-form expressions for energy efficiency. Their work introduces a multiagent Deep Reinforcement Learning (DRL) algorithm designed to maximize energy efficiency by considering task size and transmission timeout thresholds. Hou et al. [26] addressed the problem of energy wastage by edge servers in IoV during off-peak hours by proposing dynamic sleep decision-making algorithms based on DRL. Their work introduces a centralized DRL-based dynamic sleep algorithm that uses deep deterministic policy gradient for optimal policy learning, and a federated DRL-based dynamic sleep algorithm that incorporates federated learning to enhance training and generalization. In addition, Hou et al. [27] proposed a Delay-aware Iterative Self-organized Clustering (DISC) algorithm based on machine learning clustering to address the energy consumption challenges in large-scale MEC deployment. By optimizing dynamic sleep mechanisms and service association, DISC improves resource utilization, reduces energy consumption, and enhances energy efficiency and environmental sustainability.

Regarding task offloading delay, research has primarily focused on strategies to reduce latency while simultaneously improving service continuity for applications in MEC-enabled IoV systems. Ren et al. [28] addressed the challenge of providing low-latency computing services for latency-sensitive applications in IoV by integrating mobile and fixed edge computing nodes. To optimize the use of these heterogeneous resources, they proposed an IoV model aided by software-defined networking and edge computing. They also introduced mechanisms for partial computation offloading and reliable task allocation to ensure service continuity amid potential outages, utilizing a fault-tolerant particle swarm optimization algorithm designed to maximize reliability while adhering to latency constraints. Nguyen et al. [29] addressed the challenges of data redundancy and computational load in MEC for IoV applications. In the first part of their two-pronged approach, they modelled data redundancy as a set-covering problem and used submodular optimization to reduce unnecessary data transfers to MEC servers while maintaining application quality. Wang et al. [30] tackled computation offloading in IoV, where the computing limitations of vehicles necessitate offloading intensive tasks to MEC servers. Their work presents a joint optimization scheme that uses decentralized multiagent deep reinforcement learning to dynamically adjust computation offloading decisions and resource allocation among competing and cooperating vehicles.

Concerning the vehicle mobility, research has focused on optimizing task offloading and resource allocation by factoring in various mobility patterns of vehicles and their cooperation impact on MEC-enabled IoV performance. Zhang et al. [31] developed a task offloading scheme for MEC-enabled IoV that prioritizes low latency and operational efficiency while considering task types and the selection of appropriate offloading nodes. By integrating mobile and fixed nodes, the scheme strategically divides tasks between same-region and cross-region offloading modes based on delay constraints and the travel times of vehicles, effectively minimizing total execution time. Chen et al. [32] developed a distributed multihop task offloading decision model for IoV using MEC to enhance task computing and offloading efficiency. The model includes a vehicle selection mechanism to identify potential offloading candidates within specific communication ranges and an algorithm to determine optimal offloading solutions, utilizing both greedy and discrete bat algorithms. Meneghello et al. [33] explored the optimization of virtual machine migration in vehicles that function as data hubs, utilizing a novel approach that combines vehicular mobility estimates with an online algorithm to manage Virtual Machine (VM) migration across 5G network cells. Utilizing a Lyapunov-based method solved in closed form, the study introduces a low-complexity, distributed algorithm that significantly reduces energy consumption.

Some existing studies have also highlighted the limitations of the sensing range in MEC-enabled IoV systems when relying solely on vehicle sensors. These studies point out that the vehicle’s sensing capabilities are often confined to its immediate surroundings, which may not be sufficient for predicting events or hazards beyond its direct vicinity. Zhao et al. [34] proposed an Integrated Sensing, Computation, and Communication (ISCC) system for IoV, combining MEC and Integrated Sensing and Communication (ISAC). This system uses a collaborative sensing data fusion architecture, where vehicles and Roadside Units (RSUs) cooperate to extend the sensing range. To optimize resource allocation, the paper introduces a DRL approach, which adapts wireless and edge computing resources to maximize task completion rates while ensuring service delays are minimized. Yang et al. [35] aimed to optimize execution latency, processing accuracy, and energy consumption in a MEC-based cooperative vehicle infrastructure system, where the RSU equipped with a MEC server and sensors collaborates with vehicles. It proposes a two-stage DRL-based offloading and resource allocation strategy to determine task offloading and transmit power, considering the limited computing resources on vehicles and varying communication conditions. Shi et al. [36] proposed a task offloading strategy for environmental perception in autonomous driving, where RSUs proactively collect environmental data, which is then processed through edge computing. The task offloading problem is formulated as an integer programming model, with the objective of minimizing task delay, and is solved using a reinforcement learning-based approach. It is worth noting that the extension of vehicle sensing capabilities mentioned in the aforementioned studies often relies on RSUs, which does not fully leverage the potential advantages of the MEC platform. 
Specifically, sensing data from RSUs must first be transmitted to the vehicle and then the integrated sensing data from both the vehicle and the RSUs is offloaded to the MEC, introducing additional communication overhead. This two-step process not only increases latency but also limits data handling efficiency. In contrast, RIS devices in this paper collaborate directly with the MEC platform, enabling more seamless and efficient integration of sensing data and offloading processes.

The aforementioned research extensively explores task offloading and resource allocation in MEC-enabled IoV. However, these studies rarely examine the issues from the perspective of VEI application characteristics. While some studies have acknowledged these unique characteristics and proposed solutions, there is still a significant gap in research specifically focused on them. Dai et al. [37] presented an MEC-based architecture for adaptive-bitrate multimedia streaming in IoV. To cope with dynamic environments and resource heterogeneity, they formulated a joint resource optimization problem that enhances service quality and playback smoothness, using multiarmed bandit and Deep Q-Network (DQN) algorithms for chunk placement and an adaptive-quality-based algorithm for efficient chunk transmission. Wang et al. [38] explored Collaborative Edge Computing (CEC) in the social IoV system to reduce urban traffic congestion by utilizing city-wide MEC servers and intelligent traffic light control. Their work introduces a CEC-based traffic management system that employs multiagent-based DRL for dynamic traffic light adjustments, creating green waves at congested intersections, with results showing significant reductions in the average waiting times of vehicles. Chen et al. [39] proposed a Road Hazard Detection (RHD) solution based on Cooperative Vehicle-Infrastructure Systems (CVISs), where Onboard Computing Devices (OCDs) analyse road conditions. By utilizing a metalearning paradigm for feature generalization and a knowledge distillation framework to simplify the model, the lightweight RHD model achieves fast inference times, contributing to safer intelligent transportation systems. Yang et al. [40] proposed a two-layer distributed online task scheduling framework for Vehicular Edge Computing (VEC) to efficiently handle computational tasks in ITSs with varying Quality of Service (QoS) requirements. The framework includes computation offloading and transmission scheduling policies, along with a distributed task dispatching policy at the edge, optimizing task acceptance and minimizing transmission delays caused by vehicle mobility. Although research on VEI application scenarios is growing, academic papers that thoroughly investigate the characteristics of VEI applications remain relatively scarce, highlighting significant research opportunities in this field.

In conclusion, while numerous studies on MEC-enabled IoV have successfully addressed issues related to resource scarcity, such as computational resource allocation and power optimization for data transmission, they are increasingly insufficient to meet the evolving demands of future VEI applications. Specifically, VEI applications require the integration of diverse IoE data sources to extend vehicle sensing capabilities. However, current RSU-based solutions necessitate a two-step process, where data are first transmitted from RSUs to vehicles and then offloaded to MEC servers. This introduces unnecessary delays and inefficiencies, limiting the optimization potential of VEI systems. In addition, many existing studies fail to fully explore the unique characteristics of VEI applications, such as their temporal dependencies and the need for collaborative solution designs. This gap highlights significant research opportunities to develop more adaptive and efficient solutions that leverage MEC-supported IoV to better meet the increasing complexity and dynamic needs of these systems.

3. System Model

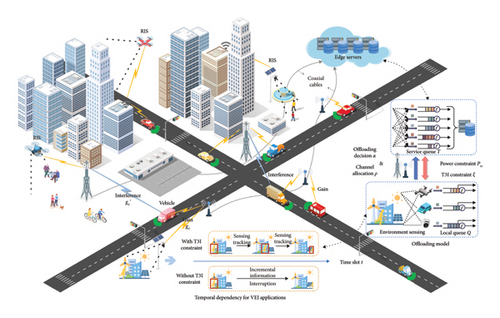

As illustrated in Figure 1, we consider a MEC-enabled IoV scenario that supports VEI applications, in which RIS plays a crucial role. These RIS devices include surveillance cameras, AR-supporting devices such as smartphones and smart glasses, and drones. Surveillance cameras not only monitor road conditions but also extend their coverage beyond the roads for a comprehensive environmental perception. Smartphones and smart glasses provide real-time location information, navigation data, and enhanced visual details. Meanwhile, drones are utilized in specific areas that are blind spots for traditional surveillance to offer detailed perception and gather data. These RIS devices collaboratively enhance the vehicle’s perceptual capabilities, enabling a more comprehensive understanding of the surrounding environment and thereby improving driving safety and efficiency.

To seamlessly integrate these vehicles and RIS devices, we implement an end-to-edge MEC architecture. In this architecture, distributed edge servers within the region are deployed by the cloud edge to facilitate dynamic computation offloading. When vehicles and RIS devices produce Sensing Information (SI) encompassing large data volumes, the MEC platform creates and implements scheduling policies. Subsequently, the vehicles and RIS devices that are authorized for offloading transmit their SI data to the Service Queue (SQ) of the MEC platform through Base Stations (BSs) or other access points. For consistency, we refer to all such access points uniformly as Wireless Access Points (WAPs) in the following text.

For clarity, we describe this scenario mathematically. Time is divided into discrete slots t ∈ {1, 2, …, T}, each of duration τ. The vehicles present in the region at time t form a time-varying set, and vehicles arrive in the region at rate Γv. Each vehicle i in this set travels at its own velocity at time t. We assume that the number of RIS devices remains constant over a significant period, so the RIS devices in the region form a fixed set. For convenience, vehicles and RIS devices are jointly referred to as Sensing Devices (SDs), indexed by i. Because the backhaul links of the WAPs use high-speed coaxial cables, the backhaul transmission delay is negligible. Consequently, we consolidate all edge servers in the region into a single edge server cluster with multiple SQs, indexed by j.

For every SD i and every SQ j, the indicator function αij(t) is defined such that αij(t) = 1 indicates an established offloading from SD i to SQ j during slot t, and αij(t) = 0 otherwise. The offloading strategy ensures that, during each time slot, each SD offloads its task to at most one SQ and each SQ handles tasks from at most one SD. The first condition, αij(t)(αij(t) − 1) = 0, restricts each αij(t) to the binary values 0 and 1. The second condition, Σj αij(t) ≤ 1, ensures that each SD offloads to at most one SQ in the same slot, while the third, Σi αij(t) ≤ 1, guarantees that each SQ handles at most one SD per time slot. Together, these constraints keep task offloading conflict-free and distribute tasks across the available SQs.
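As an illustration only, the offloading constraints described above can be checked for a candidate decision matrix with a few lines of Python. This is a minimal sketch: the function name `is_valid_offloading` and the list-of-lists layout (rows for SDs, columns for SQs) are assumptions made for this example and are not part of the paper's notation.

```python
from typing import List


def is_valid_offloading(alpha: List[List[int]]) -> bool:
    """Check a candidate offloading matrix for one time slot.

    alpha[i][j] == 1 means SD i offloads to SQ j (hypothetical layout:
    rows are SDs, columns are SQs).
    """
    # Every entry must be binary, i.e. alpha_ij * (alpha_ij - 1) == 0.
    binary = all(a * (a - 1) == 0 for row in alpha for a in row)
    # Each SD (row) offloads to at most one SQ.
    one_sq_per_sd = all(sum(row) <= 1 for row in alpha)
    # Each SQ (column) serves at most one SD.
    one_sd_per_sq = all(sum(col) <= 1 for col in zip(*alpha))
    return binary and one_sq_per_sd and one_sd_per_sq


# Three SDs, two SQs: SD 0 uses SQ 0, SD 1 uses SQ 1, SD 2 stays local.
feasible = [[1, 0], [0, 1], [0, 0]]
# Two SDs compete for SQ 0, which violates the per-SQ constraint.
conflicting = [[1, 0], [1, 0]]
print(is_valid_offloading(feasible), is_valid_offloading(conflicting))
```

A checker of this kind is useful mainly as a sanity test on the output of a scheduling algorithm; it says nothing about optimality.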

3.1. Metric of T3I

This constraint ensures that the incremental information maintains sequential stability and aids in decision-making and processing in VEI applications.

3.2. Channel Model of Offloading

When an SD is authorized to offload tasks to the SQ on the MEC platform, the offloading process for VEI applications involves three main stages: (1) uplink transmission of SI data from the SD to the WAPs; (2) processing of SI data at the SQ; and (3) downlink transmission of the processed results from the SQ back to the SD. Given that the volume of processed task results is typically small, as demonstrated in studies such as [43–45], we assume that the MEC platform can accurately deliver the task processing results to the SDs. Therefore, we focus only on the uplink channel during the offloading process.

For every SD i and every channel k, the indicator function ρik(t) is defined such that ρik(t) = 1 if SD i selects channel k during slot t, and ρik(t) = 0 otherwise. The first condition, ρik(t)(ρik(t) − 1) = 0, restricts each ρik(t) to the binary values 0 and 1. The second condition, Σi ρik(t) ≤ 1, ensures that each channel k is assigned to at most one SD during a given time slot. The third condition, Σk ρik(t) ≤ 1, ensures that each SD selects at most one channel during each time slot. Together, these constraints prevent conflicts during channel allocation.

Because vehicles on roads face fewer obstructions, their wireless multipath propagation typically comprises a dominant Line-of-Sight (LOS) component along with several Non-Line-of-Sight (NLOS) components. In contrast, RIS devices, which are often obstructed by buildings, primarily experience multipath propagation as a superposition of multiple NLOS paths. To depict these distinct channel characteristics more accurately, we apply Nakagami-m fading [50] with different parameters to simulate the multipath propagation conditions for vehicles and RIS devices during each time slot t. Specifically, separate initial channel conditions are defined for each vehicle and for each RIS device.
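To make the role of the fading parameter concrete, the following sketch draws Nakagami-m power gains using only the Python standard library. It relies on the standard fact that if the envelope is Nakagami-m distributed with spread Ω, the power gain is Gamma distributed with shape m and scale Ω/m. The values m = 3 for vehicles and m = 1 for RIS devices, the unit spread, and the function name are illustrative assumptions, not the parameters used in this paper; m = 1 reduces to Rayleigh fading (no dominant path), while larger m corresponds to a stronger dominant component.

```python
import random
from statistics import fmean, pvariance


def nakagami_power_gain(m: float, omega: float, rng: random.Random) -> float:
    """Draw one power gain |h|^2 for a Nakagami-m channel.

    The power gain follows Gamma(shape=m, scale=omega / m), so its mean is
    omega and its variance is omega**2 / m.
    """
    return rng.gammavariate(m, omega / m)


rng = random.Random(0)   # fixed seed for reproducibility
N_SAMPLES = 20000        # illustrative sample size

# Assumed parameters: LOS-dominant vehicles (m = 3), NLOS-only RIS devices (m = 1).
vehicle_gains = [nakagami_power_gain(3.0, 1.0, rng) for _ in range(N_SAMPLES)]
ris_gains = [nakagami_power_gain(1.0, 1.0, rng) for _ in range(N_SAMPLES)]

print("vehicle mean/var:", fmean(vehicle_gains), pvariance(vehicle_gains))
print("RIS mean/var:    ", fmean(ris_gains), pvariance(ris_gains))
```

Both sample sets have roughly the same average gain, but the RIS samples fluctuate more strongly, which is the qualitative difference the two parameter choices are meant to capture.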

3.3. Queue Model of SDs

3.4. Queue Model of SQs

3.5. Power Consumption

4. Problem Formulation

Optimizing the performance of VEI applications is crucial in the MEC-enabled IoV scenario. However, when an excessive number of SDs connect to the MEC platform, MEC platform resources are consumed unreasonably. In addition, when the offloading of an SD is interrupted for an extended duration, its information becomes outdated and the SI data from that SD must be recomputed. Continuous disconnection between an SD and the MEC platform elevates the T3I, causing the SD to lose track of incremental information and introducing distortions. These issues call for a balanced approach that maintains the continuity and freshness of SI data in the MEC-enabled IoV system.
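The effect of continuous disconnection on T3I can be illustrated with a simple counter. The sketch below is an assumption made for illustration: it treats the T3I of an SD as the number of consecutive slots without offloading, reset to zero whenever the SD is served, and compares it against a tolerance `xi_max`. The precise definition of the metric is the one given in Section 3.1; the function names and the update rule here are hypothetical.

```python
from typing import Iterable


def update_t3i(xi: int, offloaded: bool) -> int:
    """Assumed update rule: reset on a served slot, otherwise count one more
    interrupted slot."""
    return 0 if offloaded else xi + 1


def violates_t3i(history: Iterable[bool], xi_max: int) -> bool:
    """Return True if the interruption counter ever exceeds xi_max.

    history[t] is True when the SD is allowed to offload in slot t.
    """
    xi = 0
    for offloaded in history:
        xi = update_t3i(xi, offloaded)
        if xi > xi_max:
            return True
    return False


# Two consecutive interruptions are tolerated when xi_max = 2 ...
print(violates_t3i([True, False, False, True], xi_max=2))
# ... but three in a row exceed the tolerance.
print(violates_t3i([False, False, False], xi_max=2))
```

Under this reading, a scheduler must serve an SD before its counter exceeds the tolerance, which is what couples the T3I constraint to the offloading decision.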

To address these issues, we focus on balancing three pivotal performance metrics: delay, power, and T3I. Specifically, according to Little’s Law [52], we interpret queue length as an indicator of delay. Raising computing capability generally reduces delay but increases power consumption, which necessitates a careful balance between delay and power. In short, our solution minimizes power consumption while keeping queue lengths stable, subject to the T3I constraint.

4.1. Queue Stability

4.2. Power Consumption Minimization

4.3. Lyapunov Optimization

To solve the problem P0, we employ the theory of Lyapunov optimization [54] to devise an online algorithm. This algorithm operates at each time slot and balances power against delay under the T3I constraint. Notably, Lyapunov optimization decomposes the complex stochastic network optimization into three decoupled subproblems with reduced complexity. Each subproblem can be solved adaptively at its own location, which simplifies the overall process and improves efficiency.
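The per-slot trade-off that Lyapunov optimization produces can be illustrated with a generic drift-plus-penalty rule for a single queue. This is a minimal sketch and not the paper's subproblems: the function names, the normalized units (kappa = 1 and one bit served per cycle), and the discrete frequency grid are assumptions made for illustration, and `weight` plays the role of the power-delay weight factor ϑ.

```python
import numpy as np

def choose_frequency(backlog, weight, freqs, kappa=1.0, bits_per_cycle=1.0):
    """Per-slot drift-plus-penalty choice.

    Minimises weight * power(f) - backlog * service(f) over a frequency grid,
    with power(f) = kappa * f**3 and service(f) = bits_per_cycle * f.
    """
    freqs = np.asarray(freqs, dtype=float)
    objective = weight * kappa * freqs**3 - backlog * bits_per_cycle * freqs
    return float(freqs[np.argmin(objective)])

def simulate(weight, arrivals, freqs, kappa=1.0, bits_per_cycle=1.0):
    """Run the rule over a sequence of arrivals; return (mean power, mean backlog)."""
    backlog, powers, backlogs = 0.0, [], []
    for arrival in arrivals:
        f = choose_frequency(backlog, weight, freqs, kappa, bits_per_cycle)
        served = min(backlog, bits_per_cycle * f)  # cannot serve more than is queued
        backlog = backlog - served + arrival
        powers.append(kappa * f**3)
        backlogs.append(backlog)
    return float(np.mean(powers)), float(np.mean(backlogs))
```

With a backlog of 10 on the grid {0, 1, 2, 3}, a weight of 1 selects frequency 2, whereas a weight of 5 selects frequency 1: a larger weight spends less power at the price of a slower drain of the queue.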

The subproblem SP3, characterized as a Mixed Integer Nonlinear Programming (MINLP) problem, is further complicated by the constraint ξi(t) ≤ ξmax. To address this intricate issue, we opt for a decoupling strategy that separates the offloading schedule and power control decisions. The resolution process is divided into two distinct phases. In the first phase, we employ an algorithm named PMP to determine the optimal offloading schedule strategy, denoted as α∗(t) and ρ∗(t). The second phase involves leveraging convex optimization techniques to derive the optimal transmission power, denoted by .

The coefficient matrix Ψα(t) dominates the offloading scheduling α(t), so the solution strives to select small coefficient values under the constraint in Formula (27). To solve this subproblem, we develop a PMP algorithm. Initially, we perform a trial solution on α(t). We then apply the T3I constraint to decompose the coefficient matrix into offloading submatrices Γ and Λ. This decomposition allows the submatrix elements that are not constrained by T3I to be processed separately. For the submatrix Λ, an entry Λij > 0 indicates that the corresponding offloading strategy must be interrupted in order to minimize the optimization objective; the corresponding entry is therefore set to +∞. The algorithm seeks potential minimum values within each row and column of the two submatrices, iteratively evaluating all potential minimums to determine the optimal solution. This solution must adhere to the constraint that only one offloading strategy is allowed per row and column of the matrix α(t). Next, we verify the maximum channel resource constraint specified in Formula (27) using the solved α∗(t). If the constraint is exceeded, we set ψα for any channel exceeding this maximum to the highest value found in the matrix Ψα(t). The algorithm iterates until all constraints are satisfied. The procedure is detailed in Algorithm 1.

Algorithm 1: PMP algorithm.

Input: Coefficient matrix Ψα, T3I ξ, WAP position Φ

Output: Optimal offloading decision α∗

Initialization: , ,

- 1: While do
  - 2: Find set , then decompose the matrix Ψα into and ;
  - 3: For each element Λij in the matrix Λ, construct a new matrix such that if Λij > 0, and if Λij ≤ 0, then ;
  - 4: While Γ ≠ ∅ do
    - 5: Set and , denote the dimensions of matrix as ;
    - 6: For each row i in {1, ⋯, mΓ}: for all j;
    - 7: For each column j in {1, ⋯, nΓ}: for all i;
    - 8: Record all zero elements after normalization as ;
    - 9: Perform a similar subtraction of for columns first and then for rows to get ;
    - 11: ;
    - 12: Sort in ascending order and find indices that minimize ensuring different rows and columns, record the index as ;
    - 13: Delete the rows and columns in Γ where indices of are located, update submatrix as ;
    - 14: ;
  - 15: End While
  - 16: Perform steps 4–15 for matrix Λ to obtain ;
  - 17: Form α∗ by setting for all and otherwise;
  - 18: For in Φ do
    - 19: Verify the channel allocation constraints by ;
    - 20: If then
      - 21: Denote the coefficient set under the coverage of BS as , then sort in ascending order by ;
      - 22: For k in do
        - 23: ;
        - 24: ;
      - 25: End For
    - 26: End If
  - 27: End For
  - 28: ;
- 29: End While
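The inner loop of Algorithm 1 (steps 4 to 15) can be approximated in code. The following is a simplified sketch of one reading of that loop, not the authors' implementation: it omits the T3I decomposition into Γ and Λ and the channel-capacity check, it assumes that only negative coefficients are worth selecting, and the function names are hypothetical.

```python
import numpy as np

def potential_minimum_points(cost):
    """Collect entries that become zero after min-subtraction in both orders.

    One pass subtracts row minima and then column minima; the other pass
    subtracts column minima and then row minima.
    """
    candidates = set()
    for first_axis in (1, 0):  # axis=1 gives row minima, axis=0 column minima
        reduced = cost - cost.min(axis=first_axis, keepdims=True)
        reduced = reduced - reduced.min(axis=1 - first_axis, keepdims=True)
        rows, cols = np.nonzero(np.isclose(reduced, 0.0))
        candidates.update(zip(rows.tolist(), cols.tolist()))
    return candidates

def pmp_like_assignment(cost):
    """Select (row, col) pairs with at most one pick per row and per column."""
    cost = np.asarray(cost, dtype=float)
    rows = list(range(cost.shape[0]))
    cols = list(range(cost.shape[1]))
    chosen = []
    while rows and cols:
        sub = cost[np.ix_(rows, cols)]
        # Visit candidates from the most negative coefficient upwards.
        ordered = sorted(potential_minimum_points(sub), key=lambda rc: sub[rc])
        used_rows, used_cols, picked = set(), set(), []
        for r, c in ordered:
            if r not in used_rows and c not in used_cols and sub[r, c] < 0:
                picked.append((rows[r], cols[c]))
                used_rows.add(r)
                used_cols.add(c)
        if not picked:
            break  # no remaining entry can lower the objective
        chosen.extend(picked)
        # Delete the rows and columns that were just assigned, then repeat.
        rows = [r for i, r in enumerate(rows) if i not in used_rows]
        cols = [c for j, c in enumerate(cols) if j not in used_cols]
    return chosen
```

On the asymmetric 2 × 3 matrix `[[-3, 1, -7], [-6, -2, 4]]`, this sketch returns `[(0, 2), (1, 0)]`, which pairs each row with its most negative entry without a column conflict.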

After obtaining the offloading scheduling solution α∗(t), the MEC platform can efficiently allocate channel resources to minimize regional interference. We employ a channel allocation algorithm that improves communication efficiency by selecting appropriate channels for RIS devices and vehicles. Initially, the algorithm constructs an adjacency matrix that records the distances between BSs, identifies the nearest neighbor of each BS, and generates a list of candidate channels for each BS. Subsequently, for each RIS device and vehicle, the algorithm considers the channels used by the connected BS and by that BS's nearest neighbor, selects a potentially non-interfering channel, and updates the channel allocation decision accordingly. This process is designed to reduce interference and increase channel capacity during offloading, thereby enhancing the overall performance of the MEC system. The procedure is detailed in Algorithm 2.

Algorithm 2: Channel allocation algorithm.

Input: Offloading decision α∗, WAP position Φ

Output: Channel allocation ρ∗

Initialization: ρ = 0

- 1: Compute the distance adjacency matrix Π according to BS set Φ;
- 2: Find the index of the nearest adjacent BS for each in Φ, denoted by ;
- 3: Set channel candidates for each BS as
- 4: For i ⟵ 1 to do
  - 5: If then
    - 6: Calculate potential noninterference channels set as
    - 7: If then
      - 8: Randomly select a channel ;
      - 9: ρik(t) ⟵ 1;
    - 10: Else
      - 11: Randomly select a channel
      - 12: ρik(t) ⟵ 1;
    - 13: End If
  - 14: End If
- 15: End For
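The channel allocation steps can be sketched as follows. This is an illustrative reading rather than the authors' implementation: the data layout (`device_bs[i]` holds the index of the BS serving device i, or `None` if the device is not offloading), the fallback to any channel that is free at the serving BS, and all function names are assumptions.

```python
import numpy as np

def nearest_bs(positions):
    """Index of the nearest other BS for each BS, from pairwise distances."""
    pos = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)  # a BS is not its own neighbour
    return dist.argmin(axis=1)

def allocate_channels(device_bs, positions, num_channels, rng):
    """Give each offloading device a channel, avoiding the nearest BS's channels."""
    nearest = nearest_bs(positions)
    in_use = [set() for _ in positions]  # channels already taken at each BS
    allocation = {}
    for device, bs in enumerate(device_bs):
        if bs is None:
            continue  # this device is not scheduled for offloading
        busy = in_use[bs] | in_use[nearest[bs]]
        free = [k for k in range(num_channels) if k not in busy]
        if not free:
            # Assumed fallback: accept possible neighbour interference.
            free = [k for k in range(num_channels) if k not in in_use[bs]]
        if not free:
            continue  # the serving BS has no channel left
        channel = int(rng.choice(free))
        allocation[device] = channel
        in_use[bs].add(channel)
    return allocation
```

The random choice among free channels mirrors the "randomly select a channel" steps of Algorithm 2; passing a seeded `numpy.random.Generator` keeps runs reproducible.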

In summary, the MEC-enabled IoV scenario prioritizes balancing three critical metrics for VEI applications: delay, power consumption, and T3I metric. Using Lyapunov optimization, the challenge of minimizing power consumption is divided into three subproblems focused on local computing frequencies at SDs, computation frequencies at MEC’s SQs, and offloading decisions. In addition, efficient algorithms are employed to address offloading scheduling, channel allocation, and transmission power control, optimizing resource use and reducing regional interference. This comprehensive decision strategy not only tackles the main challenges faced by VEI applications but also boosts overall system performance.

5. Results and Discussion

In this section, we begin with a comprehensive evaluation of the proposed PMP algorithm tailored for our system model, focusing on error tolerance and computational complexity. We then proceed with simulations to assess the performance of task offloading in VEI applications within an MEC-enabled IoV architecture, examining factors such as delay, power consumption, and the impact of sensing data volume on the VEI application and the T3I metric. In addition, we explore the offloading performance across various scenarios, assessing the influence of different numbers of RIS devices and vehicles, as well as diverse service capabilities. Finally, we analyse the benefits of our proposed task offloading strategy, which collaboratively integrates vehicles and RIS devices and addresses the temporal dependencies inherent in VEI applications.

5.1. Simulation Settings

In our simulations, we model a 1000 m × 1000 m square grid, bisected by a cross-shaped road at the center that divides the area into four equal parts. RIS devices are uniformly dispersed across this grid and remain stationary to collaboratively provide enhanced perception capabilities for VEI applications. Vehicles randomly enter the four intersections with a total arrival rate of Γv, and their movement speed is uniformly distributed, denoted as . In addition, we assume that vehicles can automatically overtake each other to avoid collisions, which reduces simulation complexity. The SI data size for RIS devices and vehicles is normally distributed, denoted as . BSs are deployed across the grid following a PPP model with an intensity of λ = 30. Each BS is equipped with K = 20 channel resources, with each channel providing a bandwidth of W = 10 MHz. The simulation runs up to Tmax, which corresponds to 10⁵ ms. Default parameter values are listed in Table 1.

| Parameter | Value |
|---|---|
| N0 | −174 dBm/Hz |
| W | 20 MHz |
| g0 | −40 dB |
| d0 | 1 m |
| θ | 4 |
| κl,i, κs,j | 10⁻²⁷ Watt·s³/cycle³ |
| fc | 5.9 GHz |
| Vehicle speed | U(10, 30) m/s |
| Ai(t) | |
| τ | 1 ms |
| | 0.1 ms |
| L | 737.5 |
| ϑ | 10⁷ |
| mv | 1.5 |
| mr | 1 |
| χv, χr | 1 |
| | 10⁹ cycle/s |
| | 5 × 10⁹ cycle/s |
| Tmax | 10⁵ ms |
| ξmax | 10 ms |
| K | 20 |
| λ | 30 |
| c | 3 × 10⁸ m/s |
| ωl,i | 1 |
| ωs,j | 0.1 |
| | 500 mW |
| | 10 mW |
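The PPP-based BS deployment described in the simulation settings can be reproduced with a few lines of code. The sketch below draws a homogeneous Poisson point process on the square grid; interpreting the intensity λ = 30 as BSs per km² is an assumption (the unit is not stated), and the function name is hypothetical.

```python
import numpy as np

def sample_ppp(intensity_per_km2, side_m, rng):
    """Draw BS positions from a homogeneous PPP on a side_m x side_m square.

    The number of points is Poisson with mean intensity * area, and the
    points are placed independently and uniformly over the square.
    """
    area_km2 = (side_m / 1000.0) ** 2
    count = rng.poisson(intensity_per_km2 * area_km2)
    return rng.uniform(0.0, side_m, size=(count, 2))

rng = np.random.default_rng(1)
bs_positions = sample_ppp(30, 1000.0, rng)  # one row per BS: (x, y) in metres
```

Under this assumption, the 1000 m × 1000 m grid contains 30 BSs on average, with the actual count varying from run to run.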

5.2. The Performance of Algorithm Comparison

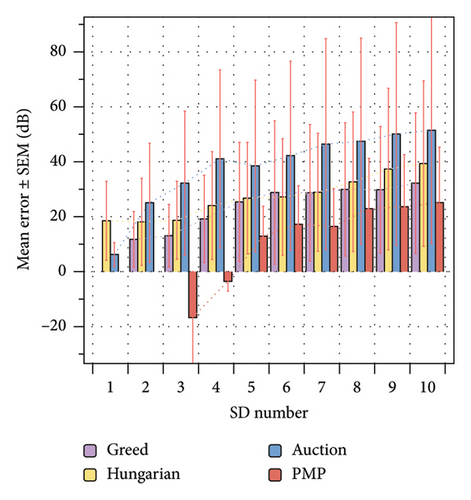

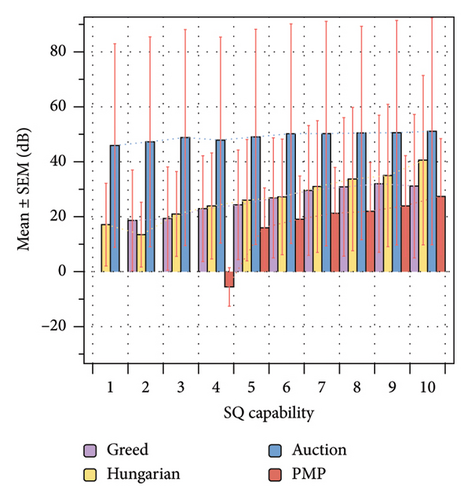

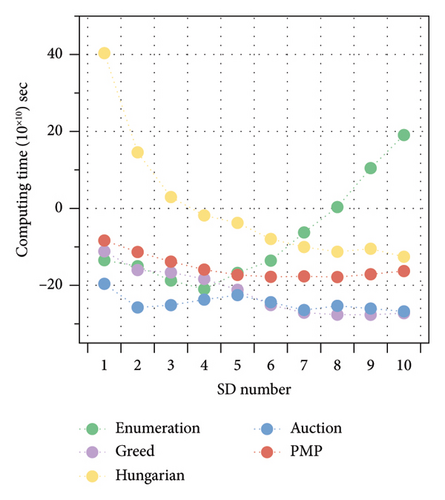

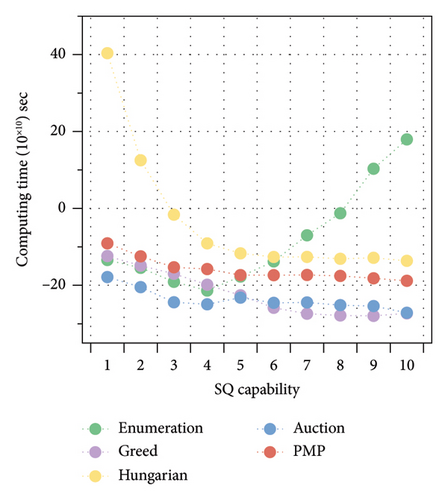

We first evaluate the performance of the proposed algorithms over more than 100 runs to account for randomness, using a hardware environment equipped with an AMD-7950x processor. Figure 2 compares the PMP algorithm with other common allocation algorithms. This comparison strictly considers the constraints on α(t) specified in Formula (1), without considering power and channel resource constraints. For the purpose of comparability, all comparison algorithms in our simulation, except for the proposed PMP algorithm, disregard the constraints specified in condition (3). Because of the computational complexity inherent in the enumeration method, our comparisons are confined to low dimensions, with the number of SDs ranging from 1 to 10 and SQ capabilities also ranging from 1 to 10.
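An enumeration baseline of the kind used as the reference in this comparison can be sketched as an exhaustive search. The version below is illustrative only: it assumes the constraint on α(t) allows at most one selection per row and per column, it skips non-negative coefficients because they cannot lower the objective, and the function name is hypothetical.

```python
def enumerate_optimum(cost):
    """Exhaustively search assignments with at most one pick per row and column.

    Returns (best_total, best_picks), where best_picks is a list of (row, col).
    The running time grows factorially, so this only suits small matrices.
    """
    n_rows = len(cost)
    n_cols = len(cost[0]) if n_rows else 0
    best_total, best_picks = 0.0, []

    def recurse(row, used_cols, total, picks):
        nonlocal best_total, best_picks
        if row == n_rows:
            if total < best_total:
                best_total, best_picks = total, list(picks)
            return
        recurse(row + 1, used_cols, total, picks)  # leave this row unassigned
        for col in range(n_cols):
            value = cost[row][col]
            if col in used_cols or value >= 0:
                continue  # a non-negative entry never improves the sum
            used_cols.add(col)
            picks.append((row, col))
            recurse(row + 1, used_cols, total + value, picks)
            picks.pop()
            used_cols.remove(col)

    recurse(0, set(), 0.0, [])
    return best_total, best_picks
```

For the matrix `[[-3, 1, -7], [-6, -2, 4]]`, the search returns a total of −13 with the picks `[(0, 2), (1, 0)]`. The error of a heuristic can then be measured against this optimum on the same matrix.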

Figures 2(a) and 2(c) illustrate how precision and complexity vary with an increasing number of SDs under the condition of SQ capability . Figures 2(b) and 2(d) illustrate how precision and complexity vary with increasing SQ capability under the condition of SD number . Figures 2(a) and 2(b) show that our proposed PMP algorithm outperforms the Hungarian and greedy algorithms, particularly in scenarios with an asymmetric scale between SDs and SQs. The computational error of the proposed PMP compared with the enumeration method is nearly negligible in these cases. By contrast, the greedy method exhibits acceptable error rates at low computational scales but suffers from poor stability. The traditional Hungarian algorithm performs notably worse, primarily because it is designed for assignment problems with only positive values. In Figure 2(a), the average calculation error of our proposed PMP algorithm is 49.6%, 54.8%, and 67.8% lower than those of the greedy, Hungarian, and auction algorithms, respectively. In the asymmetric case, where , the average calculation error of our proposed PMP algorithm is 114.0%, 111.4%, and 108.5% lower than that of the greedy, Hungarian, and auction algorithms, respectively. Similarly, in Figure 2(b), the proposed PMP algorithm achieves a reduction in average calculation error of 32.4%, 34.2%, and 63.9% compared with the greedy, Hungarian, and auction algorithms, respectively. In the asymmetric case, where , the average calculation error of our proposed PMP algorithm is 75.5%, 74.3%, and 89.1% lower than that of the greedy, Hungarian, and auction algorithms, respectively.

In Figures 2(c) and 2(d), the greedy and auction methods both perform well in terms of computation time, with computational complexities of and , respectively. The time difference between the greedy, auction, and PMP algorithms falls within an acceptable range. The complexity of the PMP with surpasses that of the Hungarian algorithm, which has a complexity of and is prone to invalid iterations when dealing with asymmetric dimensions because zero elements must be filled in to create a symmetric matrix. The enumeration method, with an algorithmic complexity of , shows fluctuating execution times because elements greater than zero are omitted, which reduces its computing time at low dimensions. In Figure 2(c), the computation time of our PMP algorithm is, on average, 29.6% and 37.5% longer than that of the greedy and auction algorithms, respectively, while the Hungarian and enumeration algorithms show average increases of 99.9% and 51.1% compared with the PMP algorithm, respectively. In Figure 2(d), the computation time of our PMP algorithm is 28.6% and 32.9% longer than that of the greedy and auction algorithms, respectively, whereas the Hungarian and enumeration algorithms result in increases of 78.4% and 49.2% in computation time compared with the PMP algorithm, respectively. In the asymmetric case, where .

5.3. The Performance of Collaborative Offloading

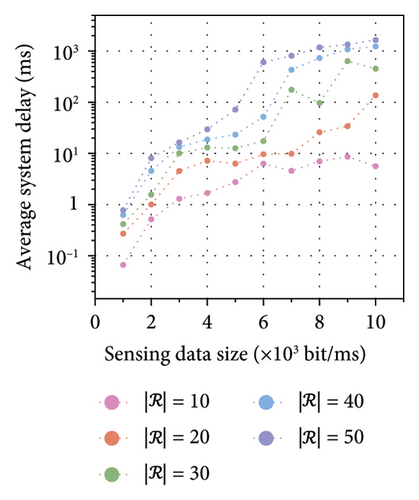

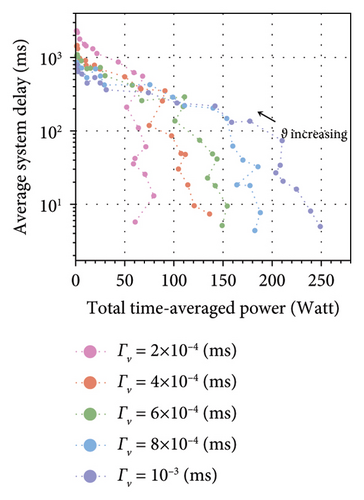

Figure 3 presents the performance analysis of temporal dependency-aware task offloading in VEI applications in scenarios influenced by RIS devices. Panels (a), (b), and (c) of Figure 3 illustrate how performance varies with different numbers of RIS devices, ranging from to , under conditions of a vehicle arrival rate Γv = 4 × 10⁻⁴/ms and SQ capability . Specifically, in Figure 3(a), the curves represent scenarios where the power delay weight factor ϑ varies between 10⁸ and 10¹². A notable trend is that increasing ϑ reduces the power spent on offloading in VEI applications but increases delay.

Figure 3(a) elucidates that an increase in the number of RIS devices correlates with a rise in the system’s total time-averaged power consumption, which is necessary to maintain the corresponding average system delay. In addition, the surge in the number of RIS devices leads to a higher minimum delay threshold of the system. In other words, within a system with fixed MEC SQ capacity, as the number of RIS devices increases, the lowest achievable system delay also increases. Consequently, it becomes impossible to further reduce latency simply by increasing power.

Figure 3(b) depicts the trend in average system delay as the size of the sensing data from RIS devices changes, under varying numbers of RIS devices. It is evident that an increase in sensing data size results in higher average system delay. Furthermore, as the number of RIS devices grows, the average system delay also increases. Consequently, for VEI applications with smaller data sizes, increasing the number of RIS devices to support vehicle perception can improve system performance without significantly impacting system delay. However, for VEI applications with larger data sizes, the deployment of RIS devices must be carefully balanced according to actual system conditions.

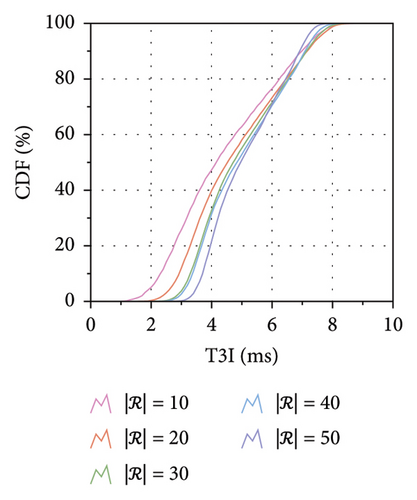

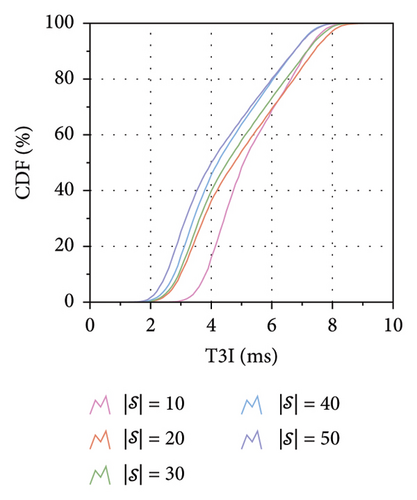

Figure 3(c) displays the Cumulative Distribution Function (CDF) of T3I metric as influenced by varying numbers of RIS devices. The results demonstrate that our proposed PMP algorithm effectively maintains a lower level of T3I for task offloading in VEI applications, thereby minimizing the gap caused by inaccurate incremental information. Furthermore, it is evident that an increased number of RIS devices results in a more concentrated distribution of T3I, indicating enhanced performance consistency, and reducing the interruptions of incremental information as well as the risk of recalculation.

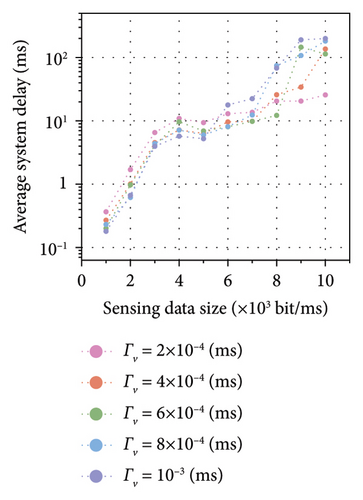

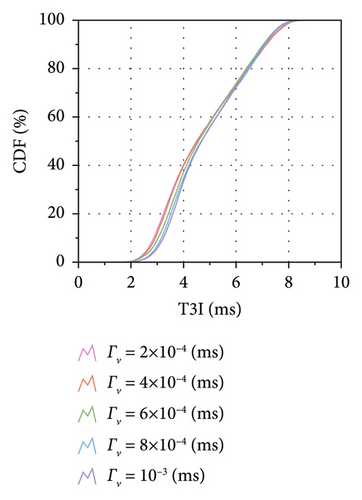

Figure 4 showcases the performance analysis of temporal dependency-aware task offloading in VEI applications, emphasizing scenarios influenced by vehicles. Panels (a), (b), and (c) of Figure 4 demonstrate how performance varies with different vehicle arrival rates, ranging from Γv = 2 × 10⁻⁴/ms to Γv = 10⁻³/ms, under conditions with RIS device number and SQ capability . As before, the curves in Figure 4(a) correspond to values of the power delay weight factor ϑ between 10⁸ and 10¹².

Figure 4(a) demonstrates that higher vehicle arrival rates are associated with increased total time-averaged power consumption. Notably, at higher values of the factor ϑ, a lower vehicle arrival rate at the same power level leads to increased delay. Conversely, at lower values of ϑ, a higher vehicle arrival rate at the same system delay level results in greater power consumption. This phenomenon is attributed to the fact that, at higher ϑ, vehicles can more efficiently utilize MEC computing resources under denser traffic conditions.

Figure 4(b) illustrates how average system latency varies as the size of vehicle sensing data changes under different vehicle arrival rates. It is evident that an increase in sensing data size leads to higher average system latency. Furthermore, at larger sensing data sizes, higher vehicle arrival rates exacerbate system latency. In contrast, at smaller vehicle sensing data sizes, vehicle arrival rates have a minimal impact on system latency. In fact, at these lower data sizes, higher vehicle arrival rates can slightly reduce system latency due to more effective utilization of MEC system resources, facilitated by higher traffic density.

Figure 4(c) displays the CDF of the T3I metric as influenced by varying numbers of vehicles. The results confirm that our proposed PMP algorithm effectively maintains a lower level of T3I for task offloading in VEI applications. In addition, the data reveal that a higher concentration of vehicles compared with RIS devices in the area leads to a more uniform distribution of T3I metric.

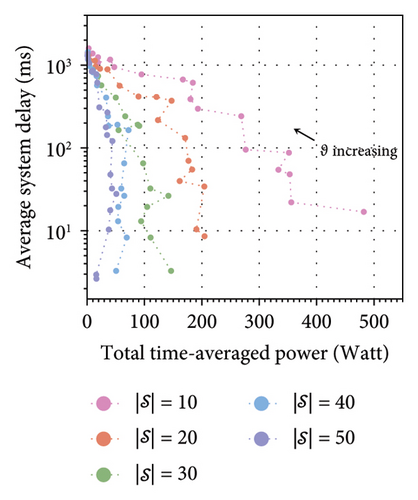

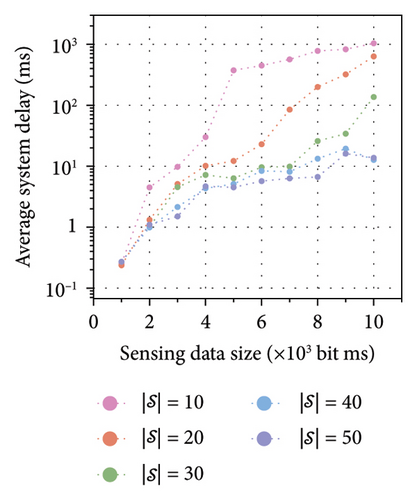

Figure 5 showcases the performance analysis of temporal dependency-aware task offloading in VEI applications, emphasizing scenarios influenced by SQ capability . Panels (a), (b), and (c) of Figure 5 show how performance varies with different SQ capabilities, ranging from to , under conditions with RIS device number and vehicle arrival rate Γv = 4 × 10⁻⁴/ms. As before, the curves in Figure 5(a) correspond to values of the power delay weight factor ϑ between 10⁸ and 10¹².

Figure 5(a) demonstrates that an increase in SQ capacity is associated with a decrease in total time-averaged system power consumption. Specifically, the curve at highlights a scenario where, relative to the number of SDs, a suboptimal SQ capacity necessitates significant power expenditure to meet required system latency. Moreover, considering the cubic relationship between computing power and frequency, appropriately increasing SQ capacity can effectively reduce power consumption while satisfying the latency demands of VEI applications. However, it is important to note that beyond a certain point, increasing SQ capacity yields diminishing returns on power savings and can lead to escalated infrastructure construction costs.
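The effect of the cubic power-frequency relationship can be made concrete with a short calculation. The sketch below assumes the dynamic power model P = κf³ with κ = 10⁻²⁷ Watt·s³/cycle³ from Table 1, reads SQ capacity as the number of parallel service queues, and assumes an idealized even split of the workload across them; the function name is hypothetical.

```python
KAPPA = 1e-27  # effective capacitance coefficient from Table 1 (Watt s^3/cycle^3)

def total_power(total_frequency_hz, num_servers, kappa=KAPPA):
    """Total power when a fixed aggregate cycle rate is split evenly.

    Each server runs at total_frequency_hz / num_servers and draws
    kappa * f**3, so the total scales as 1 / num_servers**2.
    """
    per_server = total_frequency_hz / num_servers
    return num_servers * kappa * per_server ** 3

one_server = total_power(5e9, 1)   # a single SQ running at 5 GHz
two_servers = total_power(5e9, 2)  # two SQs running at 2.5 GHz each
```

Under these assumptions, one server delivering 5 × 10⁹ cycle/s draws about 125 W, while two servers sharing the same aggregate rate draw about 31.25 W in total, a fourfold reduction. Each further doubling saves a smaller absolute amount, which is consistent with the diminishing returns noted above.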

Figure 5(b) shows that an increase in SQ capacity correlates with a decrease in average system delay. Simultaneously, as the volume of sensing data increases, the corresponding delay also rises. Notably, the curves for and exhibit a certain degree of overlap, suggesting that beyond a certain point, additional increases in SQ capacity do not significantly reduce delay. This overlap indicates that when SQ capacity substantially exceeds the number of SDs, transmission delay becomes the predominant factor influencing overall average latency.

Figure 5(c) displays the CDF of the T3I metric as influenced by varying capabilities of SQs. The results confirm that our proposed PMP algorithm effectively maintains a lower level of T3I for task offloading in VEI applications. In addition, the data reveal that increased SQ capacity correlates with lower T3I metrics, demonstrating improved efficiency in handling temporal dependencies as the system’s computational resources expand.

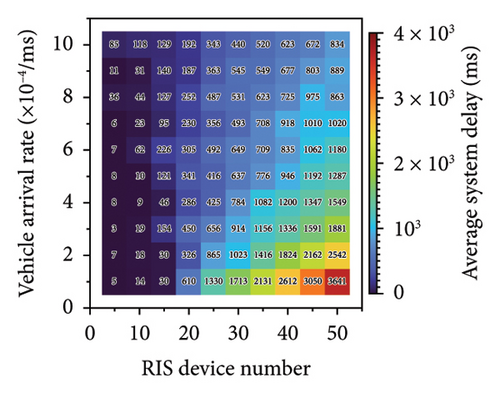

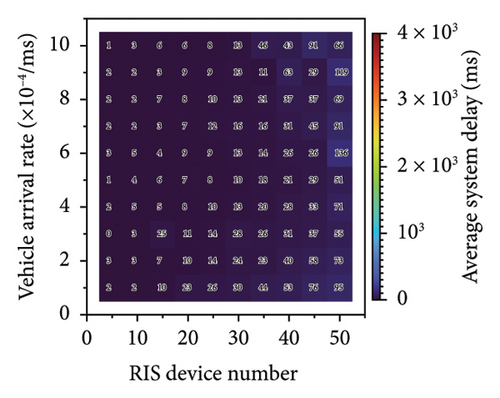

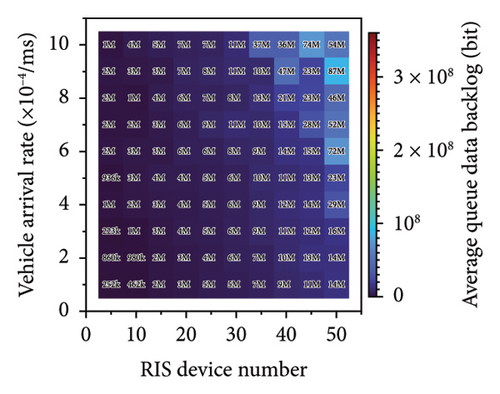

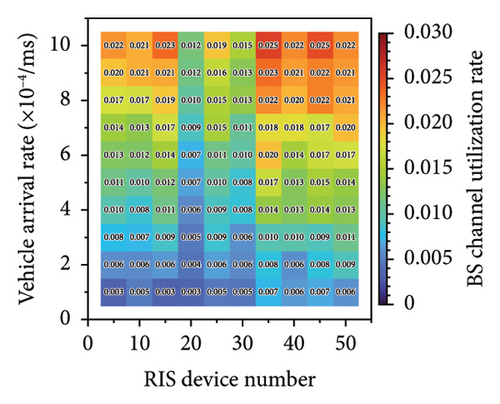

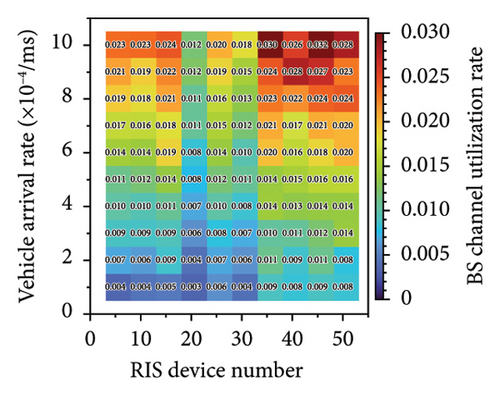

Figure 6 presents a performance heatmap in the VEI application, illustrating the impact of varying the number of RIS devices and vehicle arrival rates on system latency, MEC platform data backlog, and BS channel utilization, with the SQ number fixed at . The number of RIS devices ranges from to , recorded at intervals of 5. The vehicle arrival rate spans from Γv = 1 × 10⁻⁴/ms to Γv = 1 × 10⁻³/ms, recorded at intervals of 10⁻⁴/ms. The heatmap provides a comprehensive visualization of how these parameters influence system performance under the specified conditions.

Figure 6(a) presents a heatmap of average system delay, illustrating that under insufficient SQ service (), both increasing the number of RIS devices and higher vehicle arrival rates lead to rising delays. However, the heatmap shows that RIS devices have a more substantial impact on delay. This could be attributed to vehicles being able to more effectively utilize MEC channel resources in denser traffic conditions. On the other hand, RIS devices, which are subject to poorer channel conditions due to potential obstructions and fixed positions, experience difficulties in offloading data to MEC, leading to local queue buildup and increased delays.

Figure 6(b) presents a heatmap illustrating the average queue data backlog, defined as the time average of queue sizes after accounting for the data that have been served. In the context of an insufficient SQ service (), the heatmap reveals that both an increase in the number of RIS devices and a higher vehicle arrival rate lead to a corresponding rise in the average queue data accumulation. Notably, the increase in RIS device numbers is the main factor driving the significant data pileup. This can be attributed to the limitations of RIS devices, such as poorer channel conditions and sustained data offloading demands, which hinder efficient data offloading and result in more pronounced queue buildup compared to vehicles.

Figure 6(c) presents a heatmap of BS channel utilization under conditions of insufficient SQ service (). The heatmap indicates that although both the number of RIS devices and vehicle arrival rates increase, the BS channel utilization remains relatively low. This is attributed to the limitation imposed by the MEC service capability. As the MEC struggles to process the incoming data from both RIS devices and vehicles efficiently, it creates a bottleneck, preventing full utilization of the BS channels (Figure 7).

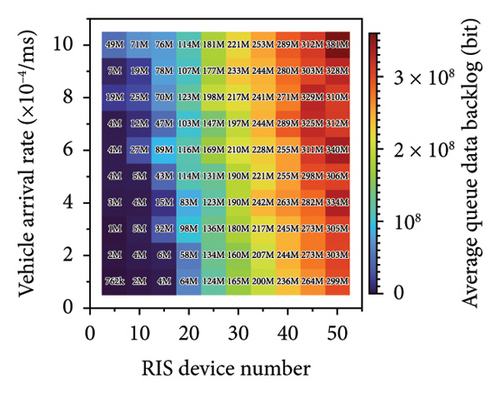

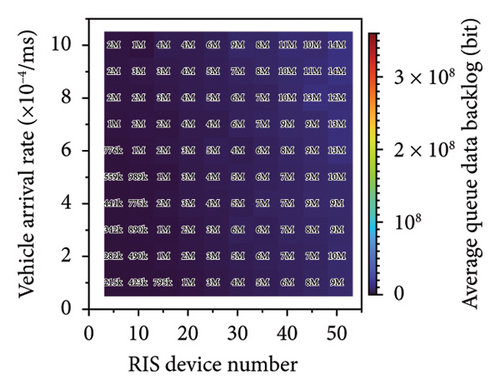

Figure 7 presents performance heatmaps for a VEI application, illustrating the impact of varying RIS device numbers and vehicle arrival rates on system delay, MEC platform data backlog, and BS channel utilization, with the SQ number fixed at . As in Figure 6, the number of RIS devices ranges from to , recorded at intervals of 5. The vehicle arrival rate spans from Γv = 1 × 10⁻⁴/ms to Γv = 1 × 10⁻³/ms, recorded at intervals of 10⁻⁴/ms.

Figures 7(a) and 7(b) present heatmaps for the average system delay and the average queue data backlog, respectively. From the heatmaps, it is evident that as the MEC service capacity increases, both the system delay and the data backlog issues are significantly alleviated. Specifically, the heatmaps show a noticeable reduction in both the average system delay and the queue accumulation as the MEC platform is able to handle more data and provide faster processing, which results in a more efficient overall system performance. This improvement highlights the critical role of MEC service capacity in mitigating the bottlenecks associated with system delay and data backlog, thus enhancing the responsiveness and stability of the system.

Figure 7(c) presents a heatmap of BS channel utilization rate, suggesting that as the number of RIS devices and vehicle arrival rates increase, there is a noticeable improvement in the efficiency of BS channel utilization. It is apparent that the channel utilization for vehicles is considerably higher than that for RIS devices. This suggests that vehicles are able to achieve more effective resource utilization under denser conditions, highlighting the potentially higher resource efficiency of vehicles in high-density scenarios within the designed system.

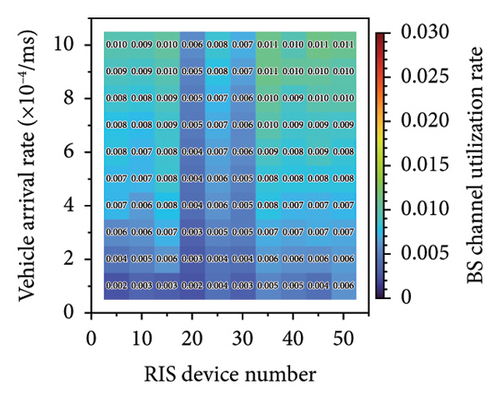

Figure 8 shows performance heatmaps for a VEI application, demonstrating how varying the number of RIS devices and vehicle arrival rates affects system delay, MEC platform data backlog, and BS channel utilization, with the SQ number fixed at . As before, the number of RIS devices is varied from to , with measurements taken at intervals of 5 devices. The vehicle arrival rate ranges from Γv = 1 × 10⁻⁴/ms to Γv = 1 × 10⁻³/ms, recorded at intervals of 10⁻⁴/ms.

Figures 8(a) and 8(b) present heatmaps for the average system delay and average queue data backlog, respectively, with . In these scenarios, it becomes apparent that the bottleneck for both system delay and data backlog is no longer the service capacity of the MEC platform. Instead, the limiting factor is the system data transmission and offloading capabilities. This shift in the bottleneck suggests that optimizing transmission strategies and enhancing communication efficiency are key areas that need further attention to improve overall system performance.

Figure 8(c) displays the heatmap of BS channel utilization, showing that as the number of RIS devices and vehicle arrival rates increase, there is a significant improvement in BS channel utilization efficiency. Similarly, the channel utilization for vehicles is notably higher than that for RIS devices. This further suggests that vehicles are better able to utilize communication resources under denser conditions.

In summary, our proposed system model suggests that vehicles traveling on roads achieve more effective resource utilization when they are more concentrated. Compared with RIS devices, the density of vehicles has a lesser impact on the system. Adding RIS devices enhances VEI application performance but significantly affects overall system latency; thus, RIS devices should be deployed judiciously to support vehicle perception. In addition, increasing the SQ capacity of MEC can reduce system latency under conditions of lower power consumption, but it also raises the costs associated with system infrastructure construction.

6. Conclusions

This study investigates an MEC-enabled IoV framework tailored for VEI applications, focusing on a system model that integrates vehicles and RIS devices to potentially improve system efficiency and performance. We assessed the impact of SQ capacity, the number of RIS devices, and vehicle arrival rates on key performance metrics such as latency, power consumption, and the T3I metric. The proposed PMP algorithm has shown promise in maintaining low levels of T3I, enabling effective task offloading in VEI applications and reducing errors due to inaccurate incremental information.

Our findings suggest that higher vehicle density can lead to more efficient resource utilization without significantly degrading overall system performance, illustrating the potential of vehicular networks to manage resource allocation effectively in dense traffic scenarios. However, the introduction of RIS devices, while beneficial, tends to increase system latency. Therefore, a careful deployment of RIS devices is recommended to find an optimal balance between enhancing vehicle perception and maintaining acceptable latency levels. In addition, our results highlight the crucial role of SQ capacity within the MEC framework, where increasing SQ capacity under conditions of low power consumption can effectively decrease system latency, improving computational resource allocation and task execution.

In conclusion, while the proposed VEI within an MEC environment shows potential, there remains much to explore and refine. Our study contributes preliminary insights into system performance under various configurations and operational conditions, suggesting avenues for future research to further explore and potentially maximize the benefits of edge computing in meeting the demands of modern vehicular networks. As research progresses, we hope to further understand and optimize the interactions between vehicles, RIS devices, and MEC infrastructure, with the aim of informing development for ITS in urban environments. This work modestly adds to the ongoing dialogue in the field, and we look forward to contributing more as our investigations continue.

Conflicts of Interest

The authors declare no conflicts of interest. Specifically, Alibaba Cloud Computing Co., Ltd. acted solely as a project coordinator under the National Key Research and Development Program of China (2022YFB2503202), with no participation in the design, execution, analysis, or authorship of this study.

Author Contributions

Kaiyue Luo: conceptualization, methodology, data curation, writing the original draft, and visualization. Yumei Wang: supervision, project administration, and reviewing and editing. Yu Liu: resources and reviewing and editing. Konglin Zhu: supervision, funding acquisition, and reviewing and editing. The manuscript was approved by all authors.

Funding

This work was supported by the National Key Research and Development Program of China (2022YFB2503202) and Fundamental Research Funds for the Central Universities (2024ZCJH07).

Open Research

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon reasonable request.