Exposing the Forgery Clues of DeepFakes via Exploring the Inconsistent Expression Cues

Abstract

The pervasiveness of DeepFakes poses a profound threat to individual privacy and social stability. Believing synthetic videos of celebrities and passing off impersonated forgery videos as authentic are just a few of the consequences of DeepFakes. We observe that current detectors, which blindly deploy deep learning techniques, are ineffective at capturing subtle forgery clues when generative models produce remarkably realistic faces. Synthesis operations inevitably modify the eyes and mouth regions to match the target face with the identity or expression of the source face. Inspired by this fact, we conjecture that the continuity of facial movement patterns that represent expressions in genuine faces will be disrupted or completely broken in synthetic faces, making it a potentially powerful indicator for DeepFake detection. To test this conjecture, we use a dual-branch network to capture the inconsistent patterns of facial movements in the eyes and mouth regions separately. Extensive experiments on the popular FaceForensics++, Celeb-DF-v1, Celeb-DF-v2, and DFDC-Preview datasets demonstrate that our method is effective and outperforms state-of-the-art baselines. Moreover, our method shows greater robustness against adversarial attacks, with an attack success rate (ASR) of 54.8% under the I-FGSM attack and 43.1% under the PGD attack on the DeepFakes subset of FaceForensics++.

1. Introduction

In recent years, remarkable advances in computer vision, notably generative adversarial networks (GANs) [1, 2], have made it possible to generate realistic images and videos that are exceedingly difficult for human eyes to distinguish from genuine ones. In particular, DeepFakes can manipulate faces in diverse ways, such as identity swap, facial expression manipulation, or attribute manipulation, producing photorealistic results that accurately mimic celebrities and posing a distinct threat to the authenticity of information in the digital landscape [3].

Alongside the proliferation of DeepFakes, the wide availability of generative models has brought significant benefits to film production and entertainment. However, this accessibility has also led to a surge of misinformation, threatening both individuals and societal stability. To combat the issues posed by DeepFakes, researchers are actively proposing countermeasures that determine the authenticity of images and videos. These passive efforts primarily focus on capturing subtle differences between real and synthetic faces, e.g., investigating spatial clues at the pixel level [4], examining artifacts in the invisible frequency domain [5], and identifying differences in biological signals [6]. Unfortunately, as generative models advance rapidly, these forgery clues are gradually being eliminated, rendering most detectors ineffective.

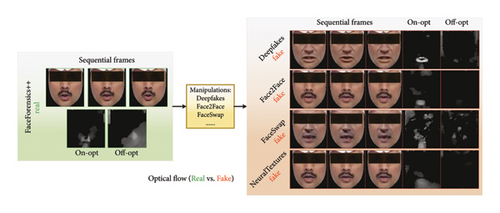

When synthetic faces are generated with various manipulations, DeepFake techniques can disrupt the smooth and continuous muscular movements of the face, as depicted in Figure 1. These irregularities serve as strong indicators for distinguishing natural from manipulated faces. In this work, we propose exploring high-level expression inconsistencies to expose the subtle differences between genuine and fabricated faces, offering valuable insights for DeepFake detection. We expect such semantically high-level inconsistent expressions to provide enhanced robustness against various perturbations and adversarial attacks.

Several researchers have explored expression-based DeepFake detectors, but these prior studies focus on the entire face and ignore the distinctive movement patterns of different facial regions. In addition, they tend to overlook temporal consistency across frames because they primarily consider isolated images. To effectively explore inconsistent expressions in synthetic faces, a methodology that encompasses both spatial continuity and temporal coherence is imperative. Such an approach enables a more comprehensive understanding of the intricate dynamics of facial expressions and paves the way for improved DeepFake detection.

Our aim is to develop a DeepFake detector that focuses on the inconsistent motion patterns of the eyes and mouth regions by exploring expression clues. Traditional methods for extracting expression features often employ optical flow [8], which is commonly used in current expression recognition approaches [9]. However, such techniques may overlook the crucial texture information in faces, which is highly relevant to DeepFake detection. Furthermore, existing expression-based detectors ignore the different patterns of facial movement in different regions. To overcome these limitations, we integrate spatial continuity and temporal coherence to accentuate the representations of expression motion patterns, and we explore the inconsistent patterns of facial movements to enhance DeepFake detection. More broadly, current DeepFake detectors face two fundamental challenges:

1. Poor generalization to unseen manipulations. Many existing DeepFake detectors primarily aim to improve performance on datasets with known synthesis techniques. However, when these models are transferred to datasets with unseen manipulations, their performance often declines significantly. Because each dataset is generated with specific algorithms, previously trained detectors become overly tailored to the limited training data, which limits their generalization. Most previous detectors focus on different regions of interest depending on the training data, so a well-trained model lacks generalization across datasets. Some detectors have focused explicitly on improving generalization, e.g., by searching for common artifacts [4] or concentrating on blending boundaries [13]. However, such common artifacts are being eliminated by advanced generative models, and blending boundaries are vulnerable to low-level image transformations.

2. Not robust to various perturbations. In real-world scenarios, DeepFakes are subject to various degradations. These perturbations fall into two main types: common image transformations (e.g., noise, compression, and blur) [4] and imperceptible adversarial attacks (e.g., PGD and I-FGSM) [14]. Against simple image transformations, most current detectors are fragile because they rely heavily on specific image textures, pixel distributions, or structural information to distinguish authentic from manipulated faces, and these clues are easily disrupted or eliminated by common transformations. As for adversarial attacks, existing detectors are typically built on deep neural networks (DNNs), which attackers can exploit by adding carefully crafted perturbations to a fake video that mislead the detector into classifying it as real.
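To make the adversarial setting concrete, the sketch below runs an untargeted I-FGSM attack against a toy logistic-regression detector and computes an attack success rate. It is illustrative only: the linear detector stands in for a DNN-based detector, the label convention (1 = fake) and all parameter values are hypothetical, inputs are assumed to lie in [0, 1], and ASR is taken here as the fraction of correctly detected fakes that the attack flips to "real", which is one common definition rather than one confirmed by this section.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def i_fgsm(x, y, w, b, eps, alpha, steps):
    """Untargeted I-FGSM against a toy detector p(fake | x) = sigmoid(w.x + b).

    x: (n, d) inputs in [0, 1]; y: (n,) labels with 1 = fake (hypothetical convention).
    Each step adds alpha * sign(dLoss/dx) for the BCE loss of the true label, then
    projects back into the L-infinity ball of radius eps and the valid range [0, 1].
    """
    x = x.astype(np.float64)
    x_adv = x.copy()
    for _ in range(steps):
        p = sigmoid(x_adv @ w + b)
        grad = (p - y)[:, None] * w[None, :]          # dBCE/dx for a logistic model
        x_adv = x_adv + alpha * np.sign(grad)
        x_adv = np.clip(x_adv, x - eps, x + eps)      # stay within the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)              # stay a valid image
    return x_adv

def attack_success_rate(x, y, w, b, x_adv):
    """Share of correctly detected fakes that are classified as real after the attack."""
    detected = (y == 1) & (sigmoid(x @ w + b) >= 0.5)
    flipped = detected & (sigmoid(x_adv @ w + b) < 0.5)
    return float(flipped.sum() / max(detected.sum(), 1))
```

A lower ASR means a more robust detector; PGD differs from I-FGSM mainly by starting from a random point inside the eps-ball, which this sketch does not implement.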

To tackle these two fundamental challenges, we first construct movement spatial–temporal representations (MSTRs) of sequential frames that emphasize the spatial continuity and temporal coherence of movement patterns. We then use a dual-branch architecture to learn the distinct movement patterns of the eyes and mouth regions separately, exploring high-level expression clues that are not susceptible to various perturbations and adversarial attacks.

Our main contributions are as follows:

1. We observe that existing DeepFakes disrupt the continuity of facial muscle movements. To capture these forgery cues, we propose a DeepFake detection method that extracts spatiotemporal representations of facial movements and uses a dual-branch network to explore inconsistent expression cues in the eyes and mouth regions, enabling effective detection of DeepFake videos.

2. To enhance robustness against various degradations and adversarial attacks, we design spatiotemporal representations of facial movements. Image degradations and adversarial attacks typically destroy texture details within frames, whereas motion patterns extracted across frames, as high-level semantic features, remain largely unaffected and strengthen the robustness of our method.

3. To improve generalization to unknown DeepFakes, the dual-branch network independently learns expression cues from the eyes and mouth regions. By extracting motion patterns from different facial areas, the method reduces its reliance on specific datasets or forgery techniques, enhancing its generalization capability.

4. Experimental results on FaceForensics++, Celeb-DF-v1, Celeb-DF-v2, and DFDC-Preview demonstrate that our method outperforms state-of-the-art approaches. Furthermore, experiments under various degradations and two typical adversarial attacks yield an average AUC of 92.6% and an ASR of 48.0%, indicating that our model is more robust than other advanced detectors.

The rest of this manuscript is structured as follows. Section 2 reviews related work on DeepFake generation, DeepFake detection, and expression-based DeepFake detection. Section 3 describes the materials and methods of this study. Section 4 presents experimental details and comprehensive results. Section 5 discusses the limitations of current generative models in replicating human facial expressions. Finally, Section 6 concludes the paper and outlines limitations and future research directions.

2. Related Works

2.1. DeepFake Generation

GANs [1, 2] have driven significant advances in image generation and manipulation. DeepFakes exploit the power of GANs to create realistic multimedia content, posing potential risks to personal privacy and societal stability. Generally, there are four primary types of DeepFakes: entire face synthesis, attribute manipulation, identity swap, and face reenactment.

Entire face synthesis generates faces that do not exist in the real world, e.g., PGGAN [16] and StyleGAN [17]. Attribute manipulation modifies simple facial attributes (e.g., hair color and smile) and retouches complex attributes (e.g., gender and age), e.g., StarGAN [18] and STGAN [19]. Identity swap replaces the face in the target image with the face from the source image; CycleGAN [20] and FaceSwap [21] are two classical works. Similar to identity swap, face reenactment transfers the facial expression between the source and target images, with Face2Face [22] as a popular method.

Nevertheless, existing DeepFake techniques do not explicitly preserve the natural expression movements of the eyes and mouth: because they optimize frame-level quality, they fail to capture the continuity of expression movements or to model the temporal information across sequential frames of a video. Furthermore, current generative models struggle to learn sufficiently continuous and natural expression patterns from limited data, which motivates us to use inconsistent expressions as indicators for distinguishing real from synthesized faces.

2.2. DeepFake Detection

In recent years, numerous studies have demonstrated that even minor differences between genuine and forged faces can be captured to determine authenticity. Researchers have therefore devised various countermeasures against DeepFakes, which fall into two categories: frame-level and video-level approaches.

Frame-level approaches focus on the authenticity of single frames. Afchar et al. propose MesoNet, which analyzes faces generated by the DeepFake and Face2Face methods at a mesoscopic level [23]. Xception is a conventional CNN trained on ImageNet and adapted to DeepFake detection by replacing its fully connected layer with one that has two outputs [7]. Li et al. observe that most face forgery methods rely on a blending step and propose a general detection method that does not depend on knowledge of the artifacts of any particular facial manipulation technique [24]. Zhao et al. propose a framework with an attention module that combines textural and semantic features to detect forged images [25]. Wang, Sun, and Tang feed the original images and their corresponding quality-degraded images into Siamese networks [26]. Cao et al. use a reconstruction network to learn the common features of real images and use the reconstruction differences to classify real and fake images [27]. Shiohara and Yamasaki reproduce common forgery clues by blending pseudo-source and pseudo-target faces generated from the same pristine face [13]. Ba et al. fuse broader semantic forgery features to improve the generalizability of DeepFake detection [28].

Video-level approaches determine the authenticity of an entire video and incorporate temporal information into forgery detection. Güera and Delp use a convolutional neural network (CNN) to extract frame-level features and train a recurrent neural network (RNN) for DeepFake classification [29]. Haliassos et al. pretrain a spatial–temporal network on natural lipreading to acquire high-level semantic representations, which are then used for general DeepFake detection [15]. Gu et al. present a network that learns the inconsistencies between local and global features to capture more general forgery clues [30].

In practice, most current methods are impractical in real-world scenarios because their performance drops significantly on unseen manipulations. This is primarily because they focus on distinct regions tied to the characteristics of their training data, and they are vulnerable to perturbations because they rely on low-level image features that common image transformations easily destroy. Some existing works pursue generalization by searching for artifacts common to multiple synthesis methods [13], but these artifacts are easily eliminated by advanced generative models, and blending boundaries are susceptible to low-level image transformations. To address these challenges, we propose a movement representation that captures high-level semantic expression features.

2.3. Expression-Based DeepFake Detection

With the rapid development of expression recognition techniques [9], some researchers have used expression clues for DeepFake detection, classifying real and fake faces with facial emotions learned from expression recognition models. In addition, some studies combine multimodal features; for example, some works capture the inconsistencies between the visual and audio modalities to detect DeepFakes [31, 32].

Although current expression-based DeepFake detection methods have shown some progress, they still suffer from fundamental shortcomings. These approaches typically use the entire face during training, overlooking the fact that different regions of the face exhibit distinct movement patterns. Furthermore, they often fail to capture the crucial temporal coherence across frames because they primarily focus on isolated images. Moreover, existing methods predominantly rely on in-dataset evaluation, neglecting the vital challenges of generalization and robustness that current detectors face. To address these limitations, our work explores the diverse expression patterns exhibited by different facial regions. We recognize that fake faces may still display expressions, but their movement patterns are disrupted by forgery techniques, leading to discernible deviations from genuine faces.

Previous studies have noted that most forgery videos alter the eyes region [33] or the mouth region [15] to align the target face with the identity or expression of the source face. In this paper, our objective is to expose inconsistency clues by exploring facial expressions. Although generative models can synthesize photorealistic faces, the natural movement patterns of facial expressions are driven by the coordinated actions of the associated muscles, which makes them difficult to replicate. This is primarily because current generative models produce videos by focusing on frame-level quality and do not explicitly capture the temporal relationships and the continuity of subtle movements across multiple frames. When generative models rely on interpolation to synthesize continuous motion, they often introduce jitter and discontinuities into fake videos. This makes it difficult to create convincing, coherent expressions that flow naturally.

3. Materials and Methods

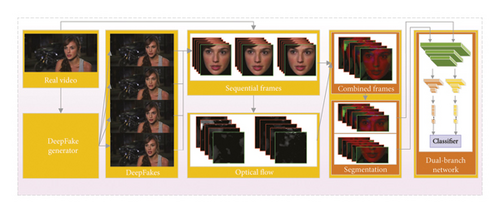

We present a novel approach aimed at effectively exposing DeepFakes by exploring the inconsistent facial expression patterns. In Section 3.1, we delve into the insights behind this work, followed by an overview of our methodology in Section 3.2. We then proceed to elaborate on the techniques employed to design spatial–temporal representations of facial movements in Section 3.3, as well as the process of learning expression inconsistency in Section 3.4. In addition, we outline the loss functions utilized in Section 3.5. The pipeline of our method is illustrated in Figure 2(a).

3.1. Insight

In practical scenarios, existing DeepFake detectors struggle with generalization and robustness. To tackle these key issues, our goal is to identify forgery clues that are independent of specific manipulations and resistant to common perturbations. Our work is inspired by the observation that current DeepFake techniques struggle to replicate continuous facial expressions because they rely on single-frame generation and have difficulty capturing subtle, continuous changes in facial expressions. To capture these inconsistent expression clues, we investigate the role of optical flow in characterizing facial expressions; optical flow is widely used for recognizing emotion categories and plays a crucial role in analyzing expression content. In addition, each action unit (AU) label describes either eye or mouth movements, but not both, and expression categories are determined by combinations of AU labels. Consequently, the movement patterns of the eyes and mouth differ [34].

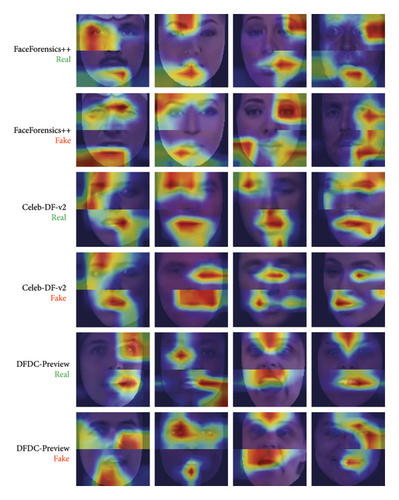

Forgery operations affect the continuous and natural movements present in authentic faces, because current synthesis methods reproduce facial expressions frame by frame and cannot accurately capture complex muscular movements or model temporal information. Therefore, inconsistent expression cues can be leveraged to reveal subtle differences between real and forged videos. As depicted in Figure 1, manipulation operations easily disrupt and diminish the continuity of optical flow, which represents the speed and direction of facial movements. Building on this observation, we propose a novel approach that explores expression clues for DeepFake detection. Figure 2(a) illustrates the pipeline of our method, which constructs MSTRs to highlight the spatial continuity and temporal coherence of sequential frames and uses a dual-branch network to learn the different expression patterns of the eyes and mouth regions separately.

3.2. Overview

Given an input video, the goal is to determine whether it is real or fake by exploring expression features. To this end, we first construct the MSTR (Section 3.3) for the input video, which highlights spatial continuity and temporal coherence and outputs a combined image x that fuses the optical flow with the interframe of three sequential frames. Here, T is the number of sequential frames used (three), the spatial dimensions correspond to the facial regions we focus on, and C is the number of channels. At this stage, optical flow is computed on single-channel (grayscale) frames. Intuitively, x represents the temporal variation of regional movements in the face (i.e., the eyes and mouth regions in our work), which highlights the expression patterns.

We design a dual-branch neural network that takes the MSTR feature map as input and predicts whether the input face is real. Different regions of a face have different motion patterns, e.g., eyebrows raise or droop, eyelids raise or tighten, and lips stretch or tighten, and each of these motions is tied to particular muscle movements. Taking the whole face as input may therefore corrupt the distinct motion patterns of different facial regions. To address this problem, we select the most significant regions, the eyes and the mouth, as the input feature maps (see Figure 2(a)).
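The region selection can be sketched as a landmark-driven crop. This is a minimal sketch, assuming 68-point landmarks in the common iBUG/OpenFace layout (eyebrows 17 to 26, eyes 36 to 47, mouth 48 to 67, zero-based), a hypothetical margin, and nearest-neighbour resizing to the 112 × 224 input size listed in Table 1. Grouping the eyebrows with the eyes is an assumption, and `crop_region`, `EYE_IDX`, and `MOUTH_IDX` are hypothetical names; the authors' exact cropping rule is not given here.

```python
import numpy as np

# Hypothetical landmark groups, assuming the 68-point iBUG/OpenFace layout.
EYE_IDX = list(range(17, 27)) + list(range(36, 48))   # eyebrows + eyes
MOUTH_IDX = list(range(48, 68))                        # outer + inner lips

def crop_region(mstr, landmarks, idx, out_hw=(112, 224), margin=0.15):
    """Crop the box around landmarks[idx] from a C x H x W map and resize it to out_hw.

    landmarks: (68, 2) array of (x, y) pixel coordinates. Nearest-neighbour
    resizing keeps the sketch dependency-free; bilinear would be more usual.
    """
    _, h, w = mstr.shape
    pts = landmarks[idx]
    x0, y0 = pts.min(axis=0)
    x1, y1 = pts.max(axis=0)
    mx, my = margin * (x1 - x0), margin * (y1 - y0)    # enlarge the box slightly
    x0, x1 = int(max(0, np.floor(x0 - mx))), int(min(w, np.ceil(x1 + mx)))
    y0, y1 = int(max(0, np.floor(y0 - my))), int(min(h, np.ceil(y1 + my)))
    patch = mstr[:, y0:y1, x0:x1]
    oh, ow = out_hw
    rows = np.arange(oh) * patch.shape[1] // oh        # source row for each output row
    cols = np.arange(ow) * patch.shape[2] // ow        # source column for each output column
    return patch[:, rows[:, None], cols[None, :]]      # shape (C, oh, ow)
```

Cropping the same boxes from every channel keeps the flow channels and the interframe spatially aligned within each region.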

Our objective is to capture the diverse motion patterns exhibited by the eyes and mouth areas. To achieve this, we present a dual-branch network that effectively learns the motion of the eyes and mouth separately through the design of the base layer and branch layer.

3.3. MSTR

Expression features for DeepFake detection could be obtained from existing expression representations designed for expression recognition. However, such representations may ignore the texture information in faces, which is highly relevant to DeepFake detection. To address this problem, we use optical flow to capture continuous expression patterns across frames and combine it with the interframe to form a new input. As illustrated in Figure 3, we compute optical flow over three consecutive frames of both real and synthetic faces. We observe that real facial expressions involve natural movements caused by the contraction of the relevant muscles. For instance, in the first row and second column, the cheek region of the real face shows uniform and consistent movements, whereas the movements in synthetic faces appear discontinuous and irregular. In addition, the fourth column shows that manipulation operations diminish the movements in the eyes region. These observations suggest that expression motion patterns can serve as strong indicators of authenticity.
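The MSTR construction can be sketched as follows. The paper does not specify the optical flow algorithm or how each two-component flow field is reduced to one channel, so this sketch assumes dense Lucas–Kanade flow (a swapped-in textbook estimator; a library routine such as OpenCV's `cv2.calcOpticalFlowFarneback` would be a typical practical choice), uses the flow magnitude for each flow channel, and assumes the channel order [flow(t−1 → t), frame t, flow(t → t+1)]. `box_sum`, `lucas_kanade_flow`, and `build_mstr` are hypothetical names.

```python
import numpy as np

def box_sum(a, r):
    """Sum of `a` over a (2r+1) x (2r+1) window around each pixel (edge padding)."""
    h, w = a.shape
    p = np.pad(a, r, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out

def lucas_kanade_flow(f0, f1, r=2, eps=1e-6):
    """Dense Lucas-Kanade flow (u, v) from grayscale frame f0 to frame f1."""
    f0 = f0.astype(np.float64)
    f1 = f1.astype(np.float64)
    iy, ix = np.gradient(0.5 * (f0 + f1))      # spatial gradients (axis 0 = y, axis 1 = x)
    it = f1 - f0                               # temporal gradient
    sxx, syy, sxy = box_sum(ix * ix, r), box_sum(iy * iy, r), box_sum(ix * iy, r)
    sxt, syt = box_sum(ix * it, r), box_sum(iy * it, r)
    det = sxx * syy - sxy ** 2
    ok = np.abs(det) > eps                     # skip textureless windows
    safe = np.where(ok, det, 1.0)
    # Closed-form solution of [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt]
    u = np.where(ok, (-syy * sxt + sxy * syt) / safe, 0.0)
    v = np.where(ok, (sxy * sxt - sxx * syt) / safe, 0.0)
    return u, v

def build_mstr(prev, mid, nxt):
    """Stack [|flow(prev->mid)|, mid frame, |flow(mid->nxt)|] into a 3 x H x W map."""
    mag_a = np.hypot(*lucas_kanade_flow(prev, mid))
    mag_b = np.hypot(*lucas_kanade_flow(mid, nxt))
    return np.stack([mag_a, mid.astype(np.float64), mag_b], axis=0)
```

On a smooth pattern shifted one pixel to the right, the recovered horizontal flow in the interior is close to 1 and the vertical flow close to 0, which is a quick sanity check before feeding real face crops through the same function.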

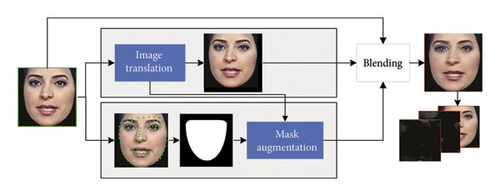

Figure 2(a) shows examples of real and forged faces and their MSTR images. The inconsistencies are easily exposed in our MSTR images, which provide a powerful indicator for distinguishing authenticity. In the cross-dataset evaluation stage, to help the model learn common forgery cues, we apply a landmark augmentation method [13] to the fake interframe, as shown in Figure 4.

3.4. Expression Inconsistency Learning

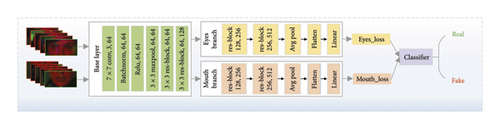

In this section, we describe the dual-branch network, the foundation of our DeepFake detector, which leverages both the MSTR and the distinct movement patterns of the eyes and mouth regions. Following [36, 37], the network structure is shown in Figure 2(b). We restructure the base layer and branch layer to capture distinct features from the eyes and mouth separately. Details of the backbone architecture are given in Table 1.

| Usage | Layers | Operator | Input size | Output size |
|---|---|---|---|---|
| Base layer | Layer 1 | Conv2d 7 × 7 | 3 × 112 × 224 | 64 × 56 × 112 |
| | | MaxPool2d 3 × 3 | 64 × 56 × 112 | 64 × 28 × 56 |
| | Layer 2 | Res-block 3 × 3 | 64 × 28 × 56 | 64 × 28 × 56 |
| | | Res-block 3 × 3 | 64 × 28 × 56 | 64 × 28 × 56 |
| | Layer 3 | Res-block 3 × 3 | 64 × 28 × 56 | 128 × 14 × 28 |
| | | Res-block 3 × 3 | 128 × 14 × 28 | 128 × 14 × 28 |
| Branch layer | Layer 4 | Res-block 3 × 3 | 128 × 14 × 28 | 256 × 7 × 14 |
| | | Res-block 3 × 3 | 256 × 7 × 14 | 256 × 7 × 14 |
| | Layer 5 | Res-block 3 × 3 | 256 × 7 × 14 | 512 × 4 × 7 |
| | | Res-block 3 × 3 | 512 × 4 × 7 | 512 × 4 × 7 |
| | Layer 6 | AdaptiveAvgPool | 512 × 4 × 7 | 512 × 1 × 1 |
| | | Flatten | 512 × 1 × 1 | 512 |

- Note: This table lists the kernel size of each operator and the size (channels × height × width) of each layer's input and output feature maps.
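The feature-map sizes in Table 1 can be checked with the standard output-size formula ⌊(H + 2p − k)/s⌋ + 1. The strides and paddings below are assumptions (they follow the usual ResNet stem and stage layout [36]) rather than values stated in the table; only the first Res-block of each stage changes the spatial size, so each stage is traced as one step. With these assumptions, the traced sizes match the rows of Table 1.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, output channels, kernel, stride, padding); strides/paddings are assumed.
LAYERS = [
    ("Layer 1: Conv2d 7x7", 64, 7, 2, 3),
    ("Layer 1: MaxPool2d 3x3", 64, 3, 2, 1),
    ("Layer 2: Res-blocks", 64, 3, 1, 1),
    ("Layer 3: Res-blocks", 128, 3, 2, 1),
    ("Layer 4: Res-blocks", 256, 3, 2, 1),
    ("Layer 5: Res-blocks", 512, 3, 2, 1),
]

def trace_shapes(h=112, w=224):
    """Return (layer name, (C, H, W)) after each size-changing step."""
    shapes = []
    for name, channels, k, s, p in LAYERS:
        h, w = conv_out(h, k, s, p), conv_out(w, k, s, p)
        shapes.append((name, (channels, h, w)))
    return shapes

for name, shape in trace_shapes():
    print(f"{name:<24} -> {shape}")
```

Layer 6 then averages the 512 × 4 × 7 map to 512 × 1 × 1 and flattens it to a 512-dimensional vector.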

3.4.1. The Base Layer

1. A convolutional layer with a 7 × 7 kernel extracts low-level features (e.g., edges and textures), transforming the input eyes or mouth image x into a 64 × 56 × 112 feature map. After the BatchNorm2d and ReLU layers, we obtain

$$F_1 = \mathrm{ReLU}\left(\mathrm{BN}\left(W_{conv} * x + b_{conv}\right)\right), \tag{8}$$

where $W_{conv}$ is the weight of the convolutional kernel, $*$ denotes convolution, and $b_{conv}$ is the bias term of the convolutional operation.

2. The MaxPool2d layer reduces the size of the feature map while preserving the most significant features, yielding a 64 × 28 × 56 feature map:

$$F_2 = \mathrm{MaxPool}(F_1). \tag{9}$$

3. A series of Res-blocks with 3 × 3 kernels further captures subtle features and inconsistencies in forged images, resulting in a 128 × 14 × 28 feature map. Each Res-block contains a Conv2d layer, a BatchNorm2d layer, and a ReLU layer. In the key Res-block, an SA layer is added, which introduces an attention mechanism that enables the model to enhance important features. For the i-th Res-block with input $F^{(i)}$, this process can be represented as

$$F^{(i+1)} = \mathrm{ReLU}\left(F^{(i)} + \mathrm{SA}\left(\mathrm{BN}\left(\mathrm{Conv}_{3\times 3}\left(F^{(i)}\right)\right)\right)\right), \tag{10}$$

where the SA layer is present only in the key Res-block.

Extensive experiments show that four Res-blocks are the optimal choice for our base layer.

3.4.2. The Branch Layer

The branch layers operate on the feature maps extracted by the base layer. We devise a dual-branch model to independently capture the higher-level expression features of the eyes and mouth regions, using another four Res-blocks to extract more abstract and semantically rich features. Thus, our model uses the same base layer to extract low-level and mid-level features, while the branch layer focuses on higher-level features and retains the feature information from all channels, leading to more effective detection.
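The base/branch split can be illustrated with a toy model in which dense layers stand in for the convolutional Res-blocks. This is a sketch under stated assumptions: the base weights are shared by the eyes and mouth inputs (the text states that the same base layer is used for both), each region gets its own branch weights, a single classifier is shared, and `DualBranchToy` and all dimensions are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class DualBranchToy:
    """Toy stand-in for the base/branch split; dense layers replace Res-blocks."""

    def __init__(self, d_in=32, d_base=16, d_branch=8, seed=0):
        rng = np.random.default_rng(seed)
        self.W_base = rng.standard_normal((d_in, d_base)) * 0.1        # shared base
        self.W_eyes = rng.standard_normal((d_base, d_branch)) * 0.1    # eyes branch only
        self.W_mouth = rng.standard_normal((d_base, d_branch)) * 0.1   # mouth branch only
        self.w_cls = rng.standard_normal(d_branch) * 0.1               # shared classifier

    def forward(self, x_eyes, x_mouth):
        m_e = relu(x_eyes @ self.W_base)       # low/mid-level features, shared weights
        m_m = relu(x_mouth @ self.W_base)
        h_e = relu(m_e @ self.W_eyes)          # region-specific high-level features
        h_m = relu(m_m @ self.W_mouth)
        return sigmoid(h_e @ self.w_cls), sigmoid(h_m @ self.w_cls)
```

Because the branches have separate weights, updating the eyes branch leaves the mouth scores unchanged, while any change to the shared base affects both regions.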

In summary, we introduce a dual-branch network architecture consisting of a base layer and a branch layer. This network extracts comprehensive high-level expression features from both the eyes and mouth regions, endowing our model with robustness against various perturbations. The training and testing process is shown in Algorithm 1.

Algorithm 1: Training and testing process.

Input: raw video x, preprocessing method p(·), base layer module B(·; θb), branch layer module E(·; θe), and classification layer C(·; θc)

Output: the authenticity probability of each input

1. Preprocess the video: xe, xm = p(x)
2. while the number of training iterations has not been reached do
3. me ⟵ B(xe; θb)
4. mm ⟵ B(xm; θb)
5. Compute the eyes and mouth predictions feyes and fmouth with E(·; θe) and C(·; θc)
6. Optimize by (14) and update the weights
7. Stop when training achieves stability
8. end while
9. for each detection step do
10. xe, xm = p(x)
11. me ⟵ B(xe; θb)
12. mm ⟵ B(xm; θb)
13. Compute feyes and fmouth with E(·; θe) and C(·; θc)
14. pred = λfeyes + (1 − λ)fmouth
15. end for
16. return pred
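The testing steps of Algorithm 1 amount to two passes through the shared base followed by a λ-weighted fusion of the branch scores. In this hypothetical sketch, the preprocessing, base, branch, and classifier modules are passed in as plain callables, separate branch callables are assumed for the eyes and mouth, and the default λ = 0.5 is an assumption rather than a value from the paper.

```python
from typing import Any, Callable, Tuple

def detect(
    x: Any,
    preprocess: Callable[[Any], Tuple[Any, Any]],
    base: Callable[[Any], Any],
    branch_eyes: Callable[[Any], Any],
    branch_mouth: Callable[[Any], Any],
    classify: Callable[[Any], float],
    lam: float = 0.5,
) -> float:
    """Hypothetical test-time pass mirroring the detection steps of Algorithm 1."""
    x_e, x_m = preprocess(x)                    # eyes / mouth MSTR crops
    m_e, m_m = base(x_e), base(x_m)             # shared base layer
    f_eyes = classify(branch_eyes(m_e))         # per-region scores
    f_mouth = classify(branch_mouth(m_m))
    return lam * f_eyes + (1 - lam) * f_mouth   # pred = λ f_eyes + (1 − λ) f_mouth
```

Setting λ = 1 or λ = 0 reduces the detector to a single-region model, which is one simple way to compare the contribution of each branch.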

3.5. Classification Loss Functions

The dual-branch learning strategy in our method captures and learns the distinct expression patterns of the eyes and mouth regions. The learning procedure involves three steps: (1) extracting shared base features, (2) learning region-specific branch features, and (3) performing binary classification. To optimize the model, we design three loss functions: the classification loss for the eyes, Leyes_cls; the classification loss for the mouth, Lmouth_cls; and the total loss, Ltotal. These loss functions are built on the standard cross-entropy loss and are minimized to update the network parameters.
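This section names the three losses but does not give their formulas. The sketch below assumes binary cross-entropy for each branch (label 1 = fake, a hypothetical convention) and, as a plain assumption, takes Ltotal as the sum of the two branch losses; the paper's actual combination in (14) may weight them differently. `bce` and `dual_branch_losses` are hypothetical names.

```python
import math

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one prediction p = P(fake) and label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)               # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def dual_branch_losses(f_eyes, f_mouth, labels):
    """Mean per-branch BCE and an assumed total loss L_total = L_eyes + L_mouth."""
    n = len(labels)
    l_eyes = sum(bce(p, y) for p, y in zip(f_eyes, labels)) / n
    l_mouth = sum(bce(p, y) for p, y in zip(f_mouth, labels)) / n
    return l_eyes, l_mouth, l_eyes + l_mouth
```

Supervising each branch separately forces both the eyes and mouth features to be discriminative on their own, rather than letting one region dominate the fused score.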

4. Results and Analysis

To assess the effectiveness of our proposed approach, we describe the experimental settings in Section 4.1 and perform in-dataset experiments, in which the training and testing sets come from the same data distribution, as detailed in Section 4.2. To evaluate generalization, we conduct cross-dataset evaluations with a known training set and an unseen testing set, as discussed in Section 4.3. We investigate the robustness of our model to diverse perturbations in Section 4.4 and to adversarial attacks in Section 4.5. Finally, we conduct ablation experiments in Section 4.6 to assess the contribution of each component of our model.

4.1. Datasets

1. The FaceForensics++ dataset [7] comprises 1000 genuine videos and 4000 fake videos created by face swapping techniques (DeepFakes and FaceSwap) and face reenactment methods (Face2Face and NeuralTextures). Each video is available in three versions: RAW, slightly compressed (C23), and heavily compressed (C40).

2. The Celeb-DF-v1 and Celeb-DF-v2 datasets [38] consist of 540 authentic celebrity videos collected from YouTube and 5639 high-quality forged videos generated with advanced DeepFake techniques.

3. The DFDC-Preview dataset [39], released as a preview of the DeepFake Detection Challenge (DFDC), comprises 5000 videos generated by two facial manipulation techniques and is diverse in attributes such as gender, age, and skin tone.

In our experiments, each video dataset is split into training, validation, and testing sets in an 8:1:1 ratio. We then use OpenFace to extract facial images from consecutive frames. For each group of three consecutive facial images, two optical flow images are computed (one for each adjacent pair) and combined with the middle facial image to form an MSTR. For generalization evaluations, we apply data augmentation to the middle facial image. These steps standardize the input data across datasets and improve model performance.
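A minimal sketch of the 8:1:1 split, assuming the split is made at the video level (so frames from one video never appear in more than one subset) with a fixed shuffle seed; `split_videos` and the seed are hypothetical.

```python
import random

def split_videos(video_ids, ratios=(8, 1, 1), seed=0):
    """Shuffle video IDs and split them into train/val/test sets at the video level."""
    ids = list(video_ids)
    random.Random(seed).shuffle(ids)            # deterministic shuffle for reproducibility
    total = sum(ratios)
    n_train = len(ids) * ratios[0] // total
    n_val = len(ids) * ratios[1] // total
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]
```

Splitting by video rather than by extracted frame matters here because consecutive frames of one video are nearly identical; a frame-level split would leak test content into training and inflate in-dataset scores.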

4.2. Baselines

In this section, we compare our proposed model with several state-of-the-art DeepFake detection methods. Because most DeepFake detection methods have not released their code and weights, we directly cite the results reported in the original papers for a fair comparison. We compare with the latest works in both in-dataset and cross-dataset evaluations. For the subsequent robustness evaluations, we select the four methods that performed best in the cross-dataset evaluations, rerun their released code, and compare them with our method. These works show good generalization and robustness across multiple datasets, making them suitable baselines.

4.2.1. In-Dataset Evaluation

We train and test the methods on the FaceForensics++ (C23), Celeb-DF-v2, and DFDC-Preview datasets, respectively. The comparison methods are as follows: (1) ResNet50 [36]: a residual network that eases the training of deep networks for image classification; (2) MesoNet [23]: detects forged faces through analysis at a mesoscopic level; (3) CNN + LSTM [29]: uses a convolutional LSTM to generate a temporal sequence descriptor for detecting fake videos; (4) Xception [7]: the baseline released with the FaceForensics++ dataset; (5) SPSL [40]: a two-branch network that combines original information with multiband frequencies; (6) Face X-ray [24]: detects forged images by locating the blending boundary between the source and target images; (7) F3-Net [41]: uses two different frequency-aware clues to capture forgery patterns in depth; (8) 3DCNN [42]: a novel network that extracts forgery features at different scales; (9) DeepFakeucl [43]: uses contrastive learning to design an unsupervised detection method; (10) LipForensics [15]: extracts high-level semantic features with a pretrained lipreading model; (11) Liu et al. [44]: a lightweight 3DCNN for detecting DeepFakes; (12) FInfer [45]: a novel framework for detecting high-visual-quality synthetic videos; (13) SBIs [13]: generates forged training images by blending source and target faces derived from the same real face image; and (14) ResNet34 [28]: combines semantic-rich features to improve the accuracy (Acc) and generalizability of DeepFake detection in real-life scenarios.

4.2.2. Cross-Dataset Evaluation

Cross-dataset evaluation is more challenging than in-dataset evaluation: we train the models on the FaceForensics++ (C23) dataset and test them on the Celeb-DF-v1, Celeb-DF-v2, and DFDC-Preview datasets, respectively. The frame-level comparison methods are as follows: (1) DSP-FWA [33], which captures the artifacts introduced by affine face warping; (2) Multiattention [25], which uses multiple attention heads to build a DeepFake detector; (3) LiSiam [26], which exploits localization invariance under various image degradations to detect DeepFakes; (4) RECCE [27], which learns common representations of real facial images to improve generalizability; (5) MesoNet [23]; (6) Xception [7]; (7) Face X-ray [24]; (8) SBIs [13]; and (9) ResNet34 [28]. The last five were described in Section 4.2.1. The video-level comparison methods are as follows: (1) Xception [7], a frame-level method used here to compute video-level metrics; (2) HCIL [30], which adopts two-level contrastive learning to capture more general clues for detecting forged videos; (3) RATF [46], which includes a module that generates temporal filters for different facial regions; (4) F3-Net [41]; and (5) LipForensics [15]. The last two were described in Section 4.2.1.

Table 2 compares state-of-the-art DeepFake detection methods with our proposed method. Unlike these approaches, our method jointly leverages spatiotemporal features to explore high-level semantic expression cues in the eyes and mouth regions of the face. In addition, our method generalizes to unseen DeepFake techniques and is robust against various image degradations and adversarial attacks.

| Methods | Time | Image input | Video input | Feature type | Feature extraction method | G | R |
|---|---|---|---|---|---|---|---|
| ResNet50 [36] | 2016 | ✓ | ✕ | S | DNN-based | ✕ | ✕ |
| MesoNet [23] | 2018 | ✕ | ✓ | S | DNN-based | ✕ | ✕ |
| CNN + RNN [29] | 2018 | ✕ | ✓ | S | DNN-based | ✕ | ✕ |
| Xception [7] | 2019 | ✕ | ✓ | S | DNN-based | ✕ | ✕ |
| Face X-ray [24] | 2020 | ✕ | ✓ | S | Blending boundary | ✓ | ✕ |
| F3-Net [41] | 2020 | ✕ | ✓ | F | Frequency-aware clues | ✕ | ✓ |
| SPSL [40] | 2020 | ✕ | ✓ | S + F | DNN-based | ✓ | ✕ |
| 3DCNN [42] | 2021 | ✕ | ✓ | S + T | DNN-based | ✓ | ✕ |
| DeepFakeucl [43] | 2021 | ✓ | ✕ | S | Image augmentation | ✓ | ✓ |
| DSP-FWA [33] | 2021 | ✕ | ✓ | S + T | Eyes movements | ✓ | ✕ |
| LipForensics [15] | 2021 | ✕ | ✓ | S + T | Mouth motion | ✓ | ✓ |
| Liu et al. [44] | 2021 | ✕ | ✓ | S + T | DNN-based | ✕ | ✕ |
| Multiattention [25] | 2021 | ✓ | ✕ | S | DNN-based | ✓ | ✕ |
| FInfer [45] | 2022 | ✕ | ✓ | S + T | Frame inference-based | ✓ | ✕ |
| HCIL [30] | 2022 | ✕ | ✓ | S + T | Temporal inconsistency | ✓ | ✕ |
| LiSiam [26] | 2022 | ✓ | ✓ | S | Localization invariance | ✓ | ✓ |
| RATF [46] | 2022 | ✕ | ✓ | S + T | Region-aware temporal inconsistency | ✓ | ✕ |
| RECCE [27] | 2022 | ✓ | ✕ | S | Reconstruction-classification learning | ✓ | ✓ |
| SBIs [13] | 2022 | ✓ | ✕ | S | Self-blended augmentations | ✓ | ✓ |
| ResNet34 [28] | 2024 | ✓ | ✕ | S | Global and local features | ✓ | ✕ |
| Ours |  | ✕ | ✓ | S + T | Expression cues of eyes and mouth | ✓ | ✓ |

- Note: The table lists each detector's publication year (Time), input object (image or video), type of extracted features (spatial (S), frequency (F), or temporal (T)), and feature extraction method. G and R denote capabilities: G indicates whether the detector generalizes to unseen DeepFake techniques, and R indicates whether it is robust against various degradations and attacks.

4.3. Metrics

To evaluate the effectiveness of our DeepFake detection method, we use accuracy (Acc) and the area under the receiver operating characteristic curve (AUC) to measure its ability to distinguish real from synthetic faces. In addition, we report the attack success rate (ASR) to quantify the misclassification of faces under adversarial attacks.
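As a reference for these definitions, the following sketch computes Acc, a rank-based AUC, and ASR. The ASR formula is an assumption inferred from Table 7: taking ASR as the relative drop from clean accuracy to accuracy under attack, 100 × (Acc_clean − Acc_adv) / Acc_clean, reproduces every ASR value reported there. Labels follow the hypothetical convention 0 = real, 1 = fake.

```python
from typing import Sequence


def accuracy(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Fraction of samples whose predicted label (0 = real, 1 = fake) matches the ground truth."""
    return sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random fake sample scores higher than a random real one.

    Ties count as one half (Mann-Whitney U formulation); O(n_fake * n_real), fine for a sketch.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def attack_success_rate(clean_acc: float, adv_acc: float) -> float:
    """ASR in percent: relative accuracy drop caused by the attack (assumed definition)."""
    return 100.0 * (clean_acc - adv_acc) / clean_acc


# Xception under I-FGSM (Table 7): clean Acc 97.32 %, attacked Acc 0.62 %.
print(round(attack_success_rate(97.32, 0.62), 2))
```

With the Table 7 values for Xception, the last line prints 99.36, matching the reported ASR.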

4.4. Implementation Details

In our experiments, we use the SGD optimizer with a learning rate of 10⁻³ and a momentum of 0.9, and train for 100 epochs with a batch size of 128. All experiments are performed on a server with Xeon CPUs (40 cores, 2.20 GHz), 64 GB of RAM, and two NVIDIA Tesla V100 GPUs with 32 GB of memory each.
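The training configuration corresponds to standard momentum SGD. The sketch below shows the update applied at each step, assuming the convention of PyTorch's `torch.optim.SGD` with `momentum=0.9` and no dampening, weight decay, or Nesterov momentum (the paper does not state these options). The quadratic objective is purely illustrative and stands in for the detector's loss.

```python
from typing import List, Tuple


def sgd_momentum_step(
    params: List[float], grads: List[float], velocity: List[float],
    lr: float = 1e-3, momentum: float = 0.9,
) -> Tuple[List[float], List[float]]:
    """One SGD step with classical (heavy-ball) momentum, no dampening or weight decay:
    v <- momentum * v + g, then p <- p - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - lr * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity


# Usage: minimize the toy objective f(p) = p^2 (gradient 2p), starting from p = 1.
params, velocity = [1.0], [0.0]
for _ in range(100):
    grads = [2.0 * p for p in params]
    params, velocity = sgd_momentum_step(params, grads, velocity)
print(params[0])  # decays toward 0 without oscillating for these settings
```

With lr = 10⁻³ and momentum 0.9, the effective step size in the steady state is roughly lr / (1 − momentum) = 10⁻², which is why momentum matters at such a small learning rate.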

4.5. In-Dataset Evaluation

To comprehensively evaluate the effectiveness of our proposed method, we focus on in-dataset evaluation first. To ensure meaningful comparisons with existing studies, we employ widely recognized datasets, namely, FaceForensics++, Celeb-DF-v2, and DFDC-Preview. Our method is trained and tested within the same dataset to maintain consistent data distribution. Throughout the evaluation process, we conduct thorough comparisons between our proposed method and state-of-the-art approaches in the field.

The results on the FaceForensics++, Celeb-DF-v2, and DFDC-Preview datasets are presented in Table 3. Compared with the average of the listed methods, our method improves the AUC by 8.9%, 5.2%, and 6.0% on these datasets, respectively. Specifically, on the FaceForensics++ (C23) dataset, our method surpasses the state-of-the-art ResNet34 approach by 1.6% AUC. On the DFDC-Preview dataset, it exceeds the method of Liu et al. (and Xception, which achieves the same AUC) by 1.3%. On the Celeb-DF-v2 dataset, although our method ranks below the most advanced ResNet34 method, it still achieves the second-highest AUC of 99.3%.

| Methods (FaceForensics++ C23) | Time | AUC↑ | Methods (Celeb-DF-v2) | Time | AUC↑ | Methods (DFDC-Preview) | Time | AUC↑ |
|---|---|---|---|---|---|---|---|---|
| SPSL [40] | 2020 | 94.3 | Xception [7] | 2019 | 98.5 | ResNet50 [36] | 2016 | 87.7 |
| Face X-ray [24] | 2020 | 87.4 | DeepFakeucl [43] | 2021 | 90.5 | MesoNet [23] | 2018 | 90.6 |
| F3-Net [41] | 2020 | 98.1 | 3DCNN [42] | 2021 | 88.8 | CNN + RNN [29] | 2018 | 91.5 |
| 3DCNN [42] | 2021 | 72.2 | SBIs [13] | 2022 | 93.7 | Xception [7] | 2019 | *94.0* |
| FInfer [45] | 2022 | 95.7 | FInfer [45] | 2022 | 93.3 | LipForensics [15] | 2021 | 78.1 |
| ResNet34 [28] | 2024 | *98.3* | ResNet34 [28] | 2024 | **99.9** | Liu et al. [44] | 2021 | *94.0* |
| Ours |  | **99.9** | Ours |  | *99.3* | Ours |  | **95.3** |

- Note: The best and second-best AUC scores (%) are highlighted in bold and italics, respectively. The up arrow (↑) indicates that a higher AUC is better.
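The average improvements quoted above correspond to the gap between our AUC and the mean AUC of the six methods listed for each dataset in Table 3. This short sketch recomputes them from the table values.

```python
# AUC (%) transcribed from Table 3: (listed baselines, ours) per dataset.
TABLE3 = {
    "FaceForensics++ (C23)": ([94.3, 87.4, 98.1, 72.2, 95.7, 98.3], 99.9),
    "Celeb-DF-v2": ([98.5, 90.5, 88.8, 93.7, 93.3, 99.9], 99.3),
    "DFDC-Preview": ([87.7, 90.6, 91.5, 94.0, 78.1, 94.0], 95.3),
}


def average_gain(baselines, ours):
    """Our AUC minus the mean AUC of the listed baselines, in percentage points."""
    return ours - sum(baselines) / len(baselines)


for name, (baselines, ours) in TABLE3.items():
    print(f"{name}: +{average_gain(baselines, ours):.1f}")
```

The loop prints gains of 8.9, 5.2, and 6.0 points, matching the text.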

The results on the DFDC-Preview dataset are notably lower than those on the FaceForensics++ (C23) and Celeb-DF-v2 datasets. We attribute this gap to the low-quality videos in DFDC-Preview, which make face extraction more difficult.

To better understand and visualize the spatial regions on which our model relies, we select real faces and their corresponding fake faces and plot their Grad-CAM heatmaps in Figure 5. As expected, our model focuses predominantly on the same regions (eyes and mouth) in both real and fake faces.
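For reference, Grad-CAM weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score with respect to that map, sums the weighted maps, and keeps only positive values. The pure-Python sketch below implements this step on precomputed activations and gradients. Which layer was used for Figure 5 is not stated, and obtaining the activations and gradients from the actual network (for example, via framework hooks) is outside the sketch.

```python
from typing import List

FeatureMap = List[List[float]]


def grad_cam(activations: List[FeatureMap], gradients: List[FeatureMap]) -> FeatureMap:
    """Grad-CAM heatmap for one layer.

    activations[k][i][j] is the k-th feature map; gradients[k][i][j] is the gradient of the
    target class score with respect to that activation (same shape).
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average pooling of each feature map's gradients.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    # Weighted sum over channels, then ReLU to keep only evidence for the target class.
    cam = [
        [max(0.0, sum(w_k * a_k[i][j] for w_k, a_k in zip(weights, activations)))
         for j in range(width)]
        for i in range(height)
    ]
    peak = max(max(row) for row in cam)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged.
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```

In practice, the resulting low-resolution map is upsampled to the input size and overlaid on the face crop, as in Figure 5.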

4.6. Cross-Dataset Evaluation

Given the rapid advancement of DeepFake techniques, it is crucial to develop a detector that can accurately identify forged content generated by novel manipulation methods, even when trained on known datasets. To enable our model to learn common synthetic clues, we apply the data augmentation method of [13] to the interframe. Specifically, we replace the preprocessed interframe described in Section 3.3 with an augmented frame, as illustrated in Figure 4. We first train our model on the FaceForensics++ dataset and then evaluate it on the Celeb-DF-v1, Celeb-DF-v2, and DFDC-Preview datasets. The experimental results are presented in Table 4.
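The augmentation of [13] (self-blended images, SBIs) creates pseudo-fake samples by blending a transformed copy of a real face back onto the same face through a soft mask, I_SB = M ⊙ I_s + (1 − M) ⊙ I_t. In the original SBIs work, the source copy is produced with color and frequency transformations plus small geometric changes, and the mask is derived from facial landmarks and then deformed and blurred. The pure-Python sketch below only illustrates the blending step on a grayscale frame; the single brightness shift standing in for the source transformations and the hand-written mask are hypothetical simplifications, not the parameters used in this work.

```python
from typing import List

Image = List[List[float]]  # grayscale frame, values in [0, 1]


def pseudo_source(image: Image, brightness_shift: float = 0.1) -> Image:
    """Hypothetical stand-in for SBIs' source-side transforms: a clipped brightness shift."""
    return [[min(1.0, max(0.0, v + brightness_shift)) for v in row] for row in image]


def blend(source: Image, target: Image, mask: Image) -> Image:
    """Per-pixel alpha blend: mask * source + (1 - mask) * target, with mask values in [0, 1]."""
    return [
        [m * s + (1.0 - m) * t for s, t, m in zip(row_s, row_t, row_m)]
        for row_s, row_t, row_m in zip(source, target, mask)
    ]


def self_blend(image: Image, mask: Image, brightness_shift: float = 0.1) -> Image:
    """Blend a transformed copy of a real face back onto itself to obtain a pseudo-fake frame."""
    return blend(pseudo_source(image, brightness_shift), image, mask)


frame = [[0.2, 0.4], [0.6, 0.8]]
soft_mask = [[1.0, 0.0], [0.5, 0.0]]  # 1 inside the blended region, fractional at its soft edge
print(self_blend(frame, soft_mask))
```

Because both source and target come from the same real image, the only artifacts the detector can learn from are those introduced by the blend itself, which is the property that motivates using such samples for generalization.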

| Methods | Year | Input object | Training dataset | Celeb-DF-v1 AUC↑ | Celeb-DF-v2 AUC↑ | DFDC-Preview AUC↑ |
|---|---|---|---|---|---|---|
| MesoNet [23] | 2018 | Video | FF++ | 42.2 | 53.6 | 59.4 |
| Xception [7] | 2019 | Video | FF++ | 75.0 | 77.8 | 69.8 |
| Face X-ray [24] | 2020 | Video | FF++ | 80.6 | 74.2 | 80.9 |
| F3-Net [41] | 2020 | Video | FF++ | — | 75.7 | — |
| DSP-FWA [33] | 2021 | Video | FF++ | 78.5 | 81.4 | 59.5 |
| LipForensics [15] | 2021 | Video | FF++ | 83.3 | 82.4 | 73.5 |
| HCIL [30] | 2022 | Video | FF++ | — | 79.0 | 69.2 |
| RATF [46] | 2022 | Video | FF++ | — | 76.5 | 69.1 |
| LiSiam [26] | 2022 | Image/Video | FF++ | 81.1 | 78.2 | — |
| Multiattention [25] | 2021 | Image | FF++ | — | 67.4 | — |
| RECCE [27] | 2022 | Image | FF++ | — | 68.7 | — |
| SBIs [13] | 2022 | Image | FF++ | *87.9* | **93.2** | **86.2** |
| ResNet34 [28] | 2024 | Image | FF++ | 81.8 | 86.4 | *85.1* |
| Ours |  | Video | FF++ | **88.4** | *87.2* | 84.1 |

- Note: The best and second-best AUC scores (%) are highlighted in bold and italics, respectively. Our method outperforms state-of-the-art frame-level detectors on the Celeb-DF-v1 dataset and, on average, outperforms advanced video-level methods across the three datasets. The up arrow (↑) indicates that a higher AUC is better.

4.6.1. Comparison With Frame-Level Detectors

Our approach substantially improves over advanced frame-level methods on the Celeb-DF-v1 dataset, with an average AUC gain of 13.1%. On the Celeb-DF-v2 and DFDC-Preview datasets, our method trails the strongest frame-level methods (SBIs on both datasets and ResNet34 on DFDC-Preview), which we attribute to a difference in focus. Frame-level models concentrate on the fine-grained information within individual images and ignore interframe correlations, which may help them generalize across diverse images. Video-level models such as ours instead exploit temporal sequence information, which proves advantageous in in-dataset evaluations (Table 3) and can be beneficial in scenarios involving interframe manipulations or motion artifacts.
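The 13.1% figure is consistent with averaging over the seven frame-level detectors that report a Celeb-DF-v1 score in Table 4, skipping the missing entries. Assuming that reading, the following sketch reproduces it.

```python
from typing import Iterable, Optional

# Celeb-DF-v1 AUC (%) of the frame-level baselines in Table 4; None marks a missing entry.
CELEB_DF_V1_FRAME_LEVEL = {
    "DSP-FWA": 78.5, "Multiattention": None, "LiSiam": 81.1, "RECCE": None,
    "MesoNet": 42.2, "Xception": 75.0, "Face X-ray": 80.6, "SBIs": 87.9, "ResNet34": 81.8,
}


def gain_over_reported(ours: float, baselines: Iterable[Optional[float]]) -> float:
    """Our AUC minus the mean AUC of the baselines that actually report a value."""
    reported = [v for v in baselines if v is not None]
    return ours - sum(reported) / len(reported)


print(f"{gain_over_reported(88.4, CELEB_DF_V1_FRAME_LEVEL.values()):.1f}")  # 88.4 - 527.1 / 7
```

Counting the missing entries as zero instead would inflate the gain considerably, so the choice of averaging set matters when comparing against tables with incomplete columns.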

4.6.2. Comparison With Video-Level Detectors

When compared to state-of-the-art video-level methods, our model outperforms the advanced LipForensics, HCIL, and RATF approaches across the three datasets. Specifically, our method achieves an average improvement in AUC scores of 5.1%, 9.7%, and 14.2% on the Celeb-DF-v1, Celeb-DF-v2, and DFDC-Preview datasets, respectively.

4.7. Robustness to Various Perturbations

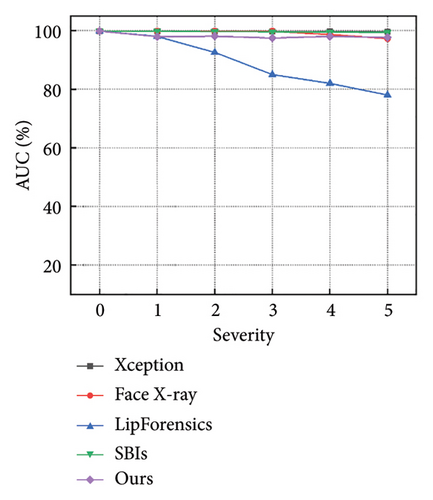

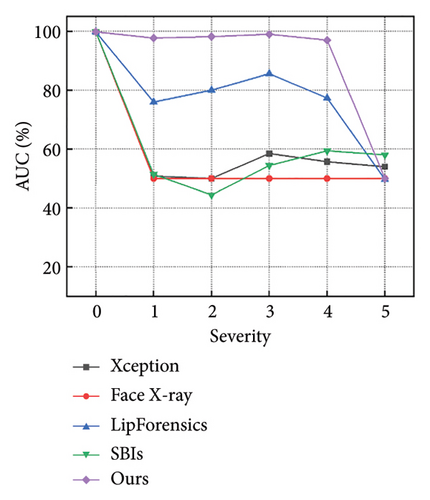

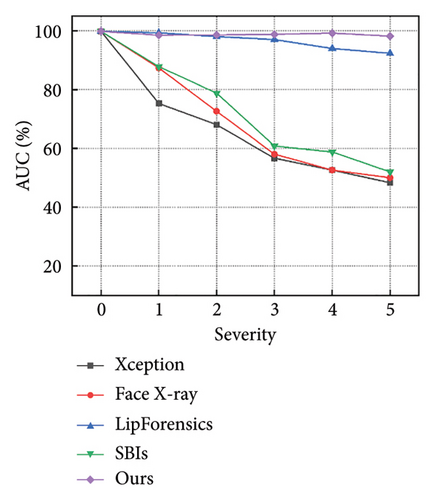

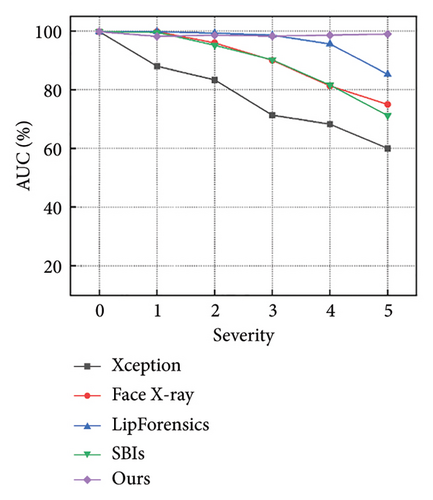

DeepFake detectors should be robust against the image transformations and adversarial attacks that images may undergo in the real world. To assess the detectors' robustness to unseen degradations, we consider seven simple perturbations at five severity levels, as defined in the DeeperForensics-1.0 benchmark [47]: color saturation change (CS), color contrast change (CC), block-wise distortion (Block), Gaussian noise (Noise), Gaussian blur (Blur), JPEG compression (JPEG), and video compression (Comp). Our model is trained on the FaceForensics++ (RAW) dataset, which contains both real and fake videos, and is then tested on FaceForensics++ videos subjected to these perturbations. Examples of the perturbed images are shown in Figure 6.

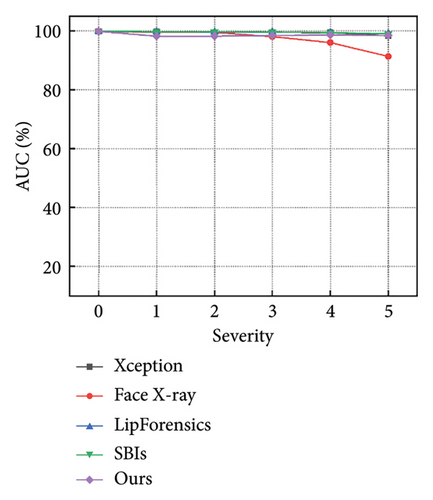

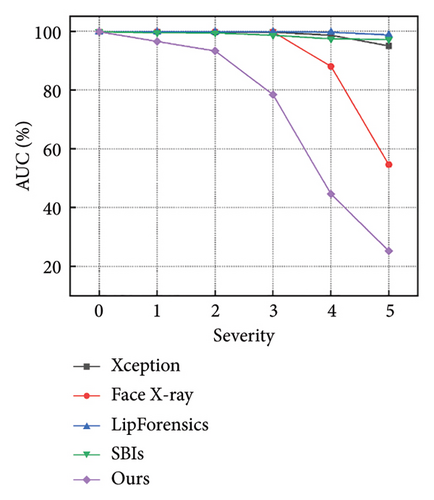

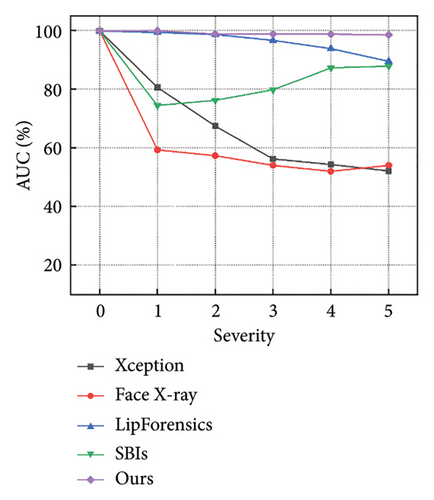

For a fair comparison, we ran the robustness experiments with the comparison methods that publicly released code and weights and achieved the best performance in Table 4: Xception [7], Face X-ray [24], LipForensics [15], and SBIs [13].

Figures 7 and 8 show how each model performs as the severity of each perturbation increases, and Table 5 summarizes the AUC scores averaged over the five severity levels. The results indicate that our method is more resilient to degradations than the state-of-the-art approaches. Under corruptions that remove high-frequency detail, namely Blur, JPEG, and Comp, our model outperforms the advanced LipForensics [15] and SBIs [13] methods by an average of 3.0% and 20.0% AUC, respectively. We attribute this to the fact that these degradations mainly reduce the quality of individual frames without altering the trajectory of facial muscle movements, so our model is largely unaffected by them. Although the other methods generalize well, they are sensitive to particular quality degradations; for example, Face X-ray [24] is vulnerable to video compression, and LipForensics [15] is more affected by block-wise perturbations than our method.

| Methods (AUC, %)↑ | Origin | CS | CC | Block | Noise | Blur | JPEG | Comp | Avg |
|---|---|---|---|---|---|---|---|---|---|
| Xception [7] | *99.8* | 99.3 | *98.6* | **99.7** | 53.8 | 60.2 | 74.2 | 62.1 | 78.3 |
| Face X-ray [24] | *99.8* | 97.6 | 88.5 | 99.1 | 49.8 | 63.8 | 88.6 | 55.2 | 77.5 |
| LipForensics [15] | **99.9** | **99.9** | **99.6** | 87.4 | *73.8* | *96.1* | *95.6* | *95.6* | *92.5* |
| SBIs [13] | **99.9** | *99.5* | 98.4 | *99.6* | 53.5 | 67.6 | 87.6 | 81.1 | 83.9 |
| Ours | **99.9** | 98.4 | 67.6 | 97.8 | **88.4** | **98.7** | **98.6** | **99.0** | **92.6** |

- Note: AUC scores (%) of the detectors averaged across five severity levels for each of the seven perturbations; "Origin" denotes the unperturbed test data. The best and second-best AUC scores (%) are highlighted in bold and italics, respectively. "Avg" is the average over all severity levels of the seven perturbations (excluding Origin).
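The 3.0% and 20.0% margins quoted in the text are differences of the mean AUC over the Blur, JPEG, and Comp columns of Table 5. This sketch reproduces them.

```python
# Table 5 AUC (%) under the three high-frequency corruptions, in the order Blur, JPEG, Comp.
HIGH_FREQ_AUC = {
    "LipForensics": [96.1, 95.6, 95.6],
    "SBIs": [67.6, 87.6, 81.1],
    "Ours": [98.7, 98.6, 99.0],
}


def mean(values):
    return sum(values) / len(values)


for baseline in ("LipForensics", "SBIs"):
    margin = mean(HIGH_FREQ_AUC["Ours"]) - mean(HIGH_FREQ_AUC[baseline])
    print(f"Ours vs {baseline}: +{margin:.1f} AUC points")
```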

In addition, our model's performance drops under the CC perturbation, which we attribute to its reliance on optical flow features, which are driven by brightness changes. Nonetheless, we consider this weakness a limited real-world threat, because color-based manipulations are usually too conspicuous to be used in practical attacks. Importantly, our model is resilient to Noise: it focuses on movement patterns in the eyes and mouth regions and thus captures high-level semantic features, whereas Noise mainly affects pixel-level, low-level features. As a result, noise does not significantly interfere with the captured microexpression movements.
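For context, classical optical-flow estimation rests on the brightness-constancy assumption: a point keeps its intensity as it moves between frames. A first-order Taylor expansion turns this into the optical-flow constraint below, where I_x, I_y, and I_t are the spatial and temporal intensity derivatives and (u, v) is the flow. Which flow estimator this work uses is not stated in this section, so the link to the CC results is a hedged interpretation: because (u, v) is recovered from intensity derivatives, a contrast perturbation that rescales intensities (and, after clipping and quantization, can flatten weak gradients) changes the inputs to this constraint and can therefore distort the estimated motion.

```latex
\begin{aligned}
I(x + \Delta x,\, y + \Delta y,\, t + \Delta t) &\approx I(x, y, t) + I_x\,\Delta x + I_y\,\Delta y + I_t\,\Delta t, \\
I(x + \Delta x,\, y + \Delta y,\, t + \Delta t) &= I(x, y, t)
\;\;\Longrightarrow\;\; I_x\,u + I_y\,v + I_t = 0,
\qquad u = \tfrac{\Delta x}{\Delta t},\; v = \tfrac{\Delta y}{\Delta t}.
\end{aligned}
```

This is a single equation in two unknowns per pixel, so practical estimators add further assumptions such as local or global smoothness of the flow field.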

We further evaluate robustness to video compression by training each detector on the original dataset and testing it on the Celeb-DF-v1 dataset at three compression levels. On Celeb-DF-v1, we compare our method with the two strongest baselines from Table 5, LipForensics [15] and SBIs [13]. The results, summarized in Table 6, show that our model is less susceptible to video compression. Specifically, it outperforms the two methods by an average of 4.5%, 5.3%, and 6.4% AUC at the RAW, C23, and C40 compression levels, respectively. In addition, its performance decline from RAW to C23 and to C40 is smaller than that of the two methods, by 0.8% and 2.0% on average, respectively.

| Methods | Celeb-DF-v1 RAW AUC (%)↑ | Celeb-DF-v1 C23 AUC (%)↑ | Celeb-DF-v1 C40 AUC (%)↑ | Decline (RAW→C23 / RAW→C40)↓ |
|---|---|---|---|---|
| LipForensics [15] | 70.3 | 64.2 | 61.9 | *6.1/8.4* |
| SBIs [13] | *77.0* | *70.1* | *66.9* | 6.9/10.1 |
| Ours | **78.1** | **72.4** | **70.8** | **5.7/7.3** |

- Note: The best and second-best results are highlighted in bold and italics, respectively. Our proposed model outperforms the other methods. The up arrow (↑) indicates that a higher AUC is better; the down arrow (↓) indicates that a smaller decline is better.
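The averages quoted in the text follow directly from Table 6. The sketch below recomputes them; the exact values are 4.45, 5.25, and 6.40 points for the per-level margins and 0.80 and 1.95 points for the decline gaps, which the text rounds to 4.5, 5.3, 6.4, 0.8, and 2.0.

```python
# Celeb-DF-v1 AUC (%) at the RAW, C23, and C40 compression levels (Table 6).
AUC = {
    "LipForensics": (70.3, 64.2, 61.9),
    "SBIs": (77.0, 70.1, 66.9),
    "Ours": (78.1, 72.4, 70.8),
}
BASELINES = ("LipForensics", "SBIs")


def mean(values):
    return sum(values) / len(values)


def declines(scores):
    """AUC drop from RAW to C23 and from RAW to C40."""
    raw, c23, c40 = scores
    return raw - c23, raw - c40


# Margin of our AUC over the baseline mean at each compression level.
level_margins = [AUC["Ours"][i] - mean([AUC[m][i] for m in BASELINES]) for i in range(3)]
# How much smaller our decline is than the baselines' mean decline (C23, C40).
decline_gaps = [
    mean([declines(AUC[m])[k] for m in BASELINES]) - declines(AUC["Ours"])[k] for k in range(2)
]
print([f"{v:.2f}" for v in level_margins], [f"{v:.2f}" for v in decline_gaps])
```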

4.8. Robustness to Adversarial Attacks

On social media and online platforms, DeepFakes are widely used to spread misinformation. To mitigate the security threats that DeepFakes pose to politics, economics, and national stability, effective DeepFake detectors have been developed to identify forged content. However, adversaries often use adversarial attacks to inject subtle perturbations into DeepFake images or videos, enabling them to bypass DNN-based detectors without noticeable loss of visual quality. If DeepFake detectors lack robustness against adversarial attacks, DeepFake content can easily evade detection and further spread misinformation. To simulate real-world scenarios in which adversaries deploy such attacks, we use two widely adopted adversarial attack methods, I-FGSM [11] and PGD [12], to generate adversarial samples.

We conduct these experiments on the DeepFakes subset of the FaceForensics++ benchmark with the two attacks. We set the maximum perturbation to ε = 8/255 and the number of attack iterations to 40; for our method, the imperceptible perturbations are added to its combined inputs. We compare our method with advanced DeepFake detectors in a white-box setting, selecting the frame-level detectors and best-performing methods in Table 5: Xception [7], Face X-ray [24], and SBIs [13].
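The paper specifies ε = 8/255 and 40 iterations but not the step size or the loss. The following pure-Python sketch assumes a step size α = 1/255, a binary cross-entropy loss, and a hypothetical logistic "detector" in place of the real network, to show the update rule the two attacks share: repeated signed-gradient ascent steps, each followed by projection onto the L∞ ball of radius ε around the clean input and onto the valid pixel range. In this sketch, PGD differs from I-FGSM only by starting from a random point inside the ε-ball.

```python
import math
import random
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Hypothetical toy detector: probability of the positive class, sigmoid(w.x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss_grad(x: Sequence[float], w: Sequence[float], b: float, y: int) -> List[float]:
    """Gradient of the binary cross-entropy loss w.r.t. the input x for the toy model."""
    p = predict(x, w, b)
    return [(p - y) * wi for wi in w]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def linf_attack(x0, w, b, y, eps=8 / 255, alpha=1 / 255, steps=40, random_start=False, seed=0):
    """Untargeted L-infinity attack: I-FGSM when random_start=False, PGD when True."""
    rng = random.Random(seed)
    x = [xi + rng.uniform(-eps, eps) for xi in x0] if random_start else list(x0)
    x = [min(max(xi, 0.0), 1.0) for xi in x]
    for _ in range(steps):
        g = loss_grad(x, w, b, y)
        # Ascend the loss of the true label with a signed-gradient step of size alpha.
        x = [xi + alpha * sign(gi) for xi, gi in zip(x, g)]
        # Project back into the eps-ball around x0, then into the valid pixel range [0, 1].
        x = [min(max(xi, x0i - eps), x0i + eps) for xi, x0i in zip(x, x0)]
        x = [min(max(xi, 0.0), 1.0) for xi in x]
    return x


x_clean, w_toy, b_toy = [0.5, 0.5, 0.5, 0.5], [1.0, -2.0, 0.5, 3.0], 0.0
x_adv = linf_attack(x_clean, w_toy, b_toy, y=1)
print(predict(x_clean, w_toy, b_toy), predict(x_adv, w_toy, b_toy))  # confidence drops
```

Against a real detector, the gradient would come from backpropagation through the network rather than the closed form used here.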

Table 7 reports the results. In the white-box setting, our method is more robust to imperceptible attacks than the state-of-the-art detectors. Under the I-FGSM and PGD attacks, its ASR is 46.64 and 56.47 percentage points lower, respectively, than the average ASR of the compared detectors. We attribute this to the fact that adversarial attacks typically alter low-level features, and the applied perturbations often fail to disrupt the continuity across consecutive frames. In contrast, our detector focuses on the motion patterns of the eyes and mouth regions, which are high-level semantic features that remain continuous across consecutive frames, enabling it to resist adversarial noise effectively.

| Methods | Origin Acc (%)↑ | I-FGSM Attack Acc (%)↑ | I-FGSM ASR (%)↓ | PGD Attack Acc (%)↑ | PGD ASR (%)↓ |
|---|---|---|---|---|---|
| Xception [7] | 97.32 | 0.62 | 99.36 | *0.92* | *99.05* |
| Face X-ray [24] | *99.10* | *0.78* | *99.21* | 0.16 | 99.84 |
| SBIs [13] | **99.99** | 0.24 | 99.76 | 0.19 | 99.81 |
| Ours | 97.82 | **46.17** | **52.80** | **55.66** | **43.10** |

- Note: The best and second-best results are highlighted in bold and italics, respectively. All detectors are trained and tested on the DeepFakes subset of FaceForensics++. Under adversarial attacks, our method achieves a lower ASR (%) than the other advanced detectors. The up arrow (↑) indicates that higher accuracy is better; the down arrow (↓) indicates that a lower ASR is better.

4.9. Ablation Study

To investigate the effectiveness of exploring inconsistent facial expression movements, we perform ablation experiments on our proposed method. Current DeepFake detectors typically train on the entire face. To capture the distinct movement patterns of the eyes and mouth regions, we instead partition the face into two parts and feed them into a dual-branch network. To assess the impact of this segmentation, we train the network with three alternative inputs: the whole face, only the eyes region, and only the mouth region.
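This section does not spell out how the face is partitioned. One common way to realize such a split, assumed here purely for illustration, is to crop bounding boxes around facial landmarks in the widely used 68-point layout (indices 36 to 47 for the eyes, 48 to 67 for the mouth). The helper names, the margin parameter, and the landmark layout are hypothetical; the sketch only shows how two region crops could be produced for the two branches.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]        # (x, y) in pixels
Box = Tuple[int, int, int, int]    # (x0, y0, x1, y1), half-open

# Index ranges in the common 68-point landmark layout (an assumption, not the paper's scheme).
EYES = range(36, 48)   # both eyes
MOUTH = range(48, 68)  # outer and inner lips


def region_box(landmarks: Sequence[Point], indices: range, margin: float,
               width: int, height: int) -> Box:
    """Bounding box of the selected landmarks, enlarged by `margin` times its size and clipped."""
    xs = [landmarks[i][0] for i in indices]
    ys = [landmarks[i][1] for i in indices]
    pad_x = margin * (max(xs) - min(xs))
    pad_y = margin * (max(ys) - min(ys))
    x0 = max(0, int(min(xs) - pad_x))
    y0 = max(0, int(min(ys) - pad_y))
    x1 = min(width, int(max(xs) + pad_x) + 1)
    y1 = min(height, int(max(ys) + pad_y) + 1)
    return x0, y0, x1, y1


def crop(image: List[List[float]], box: Box) -> List[List[float]]:
    """Crop a row-major image (list of rows) to the half-open box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]
```

Usage: given a frame and its landmarks, `crop(frame, region_box(landmarks, EYES, 0.2, w, h))` and the same call with `MOUTH` would yield the two inputs, which are typically resized to a fixed size before entering each branch.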

Evaluations of facial segmentation. The results are shown in Table 8. Training on the whole face still performs well, but worse than training on the segmented regions. For instance, the AUC drops from 99.3% (our method) to 90.1% on the Celeb-DF-v2 dataset and from 95.3% to 89.7% on the DFDC-Preview dataset. This indicates that the eyes and mouth have different movement patterns and that processing the two regions in separate branches improves the effectiveness of our detector.

| Methods | Celeb-DF-v2 Acc (%)↑ | Celeb-DF-v2 AUC (%)↑ | DFDC-Preview Acc (%)↑ | DFDC-Preview AUC (%)↑ |
|---|---|---|---|---|
| w/o Segmentation | 90.8 | 90.1 | 89.7 | 89.7 |
| w/o Eyes region | 74.1 | 77.9 | 64.2 | 63.3 |
| w/o Mouth region | 80.8 | 80.2 | 85.8 | 83.5 |
| Ours | **96.6** | **99.3** | **94.7** | **95.3** |

- Note: The best results are highlighted in bold. Accuracy and AUC scores of all models, each trained and tested on Celeb-DF-v2 and DFDC-Preview. The up arrow (↑) indicates that higher Acc and AUC are better.

Evaluations of single facial region. We train the detector on a single region only; the results are also shown in Table 8. Performance decreases significantly. When training only on the eyes region (w/o Mouth region), the AUC decreases by 19.1% on the Celeb-DF-v2 dataset and by 11.8% on the DFDC-Preview dataset; when training only on the mouth region (w/o Eyes region), it drops by 21.4% on Celeb-DF-v2 and by 32.0% on DFDC-Preview. This indicates that the expression cues obtained from a single region are limited. Furthermore, the results show that the eyes region contains more synthetic cues than the mouth region.

4.10. Computational Costs

Table 9 presents the computational costs of our proposed dual-branch network. The network has 24.99 M parameters and requires 1.89 GFLOPs (floating point operations) per forward pass on a 3 × 224 × 224 input, and inference is run on an NVIDIA Tesla V100 GPU. With an average inference time of 15 ms on this GPU, the model is well-suited for the real-time detection of DeepFake videos.

| Stage | Item | Value | Item | Value |
|---|---|---|---|---|
| Training | Input size | 3 × 224 × 224 | FLOPs | 1.89 GFLOPs |
| Training | Total parameters | 24.99 M | Trainable parameters | 24.99 M |
| Inference | Average inference time | 15 ms | Average inference performance | 66.67 fps |

- Note: FLOPs refer to floating point operations.

By comparison, the average inference time (one forward pass) is 25 ms for PhaseForensics [48] and 22.2 ms for LipForensics [15]. Our model requires 15 ms on average, corresponding to a throughput of 66.67 frames per second (fps), which exceeds the frame rate of standard 30 fps video streams and makes it well-suited for real-time inference.
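The relationship between latency and throughput above is fps = 1000 / (latency in ms), so a 30 fps stream leaves a budget of about 33.3 ms per frame. The sketch below shows one generic way to measure the average forward-pass latency and check it against that budget. `forward` is a hypothetical zero-argument callable standing in for one model inference; for GPU frameworks that execute asynchronously, it should block until the result is ready (for example, by calling `torch.cuda.synchronize()` in PyTorch), otherwise the measured time will be too low.

```python
import time
from typing import Callable


def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second achievable at a given per-frame latency."""
    return 1000.0 / latency_ms


def mean_latency_ms(forward: Callable[[], object], runs: int = 100, warmup: int = 10) -> float:
    """Average wall-clock time of `forward` in milliseconds, after a few warm-up calls."""
    for _ in range(warmup):
        forward()
    start = time.perf_counter()
    for _ in range(runs):
        forward()
    return (time.perf_counter() - start) * 1000.0 / runs


def is_real_time(latency_ms: float, stream_fps: float = 30.0) -> bool:
    """True if one inference fits within the frame interval of the stream."""
    return latency_ms <= 1000.0 / stream_fps


print(round(fps_from_latency_ms(15.0), 2), is_real_time(15.0))
```

For the reported 15 ms, the last line prints 66.67 and True.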

5. Discussion

Existing DeepFake generation methods typically focus on the visual quality of individual frames, optimizing details in local regions such as the eyes and mouth. However, they lack temporal modeling across consecutive frames and neglect the global coordination of facial muscle movements, which results in inconsistent facial expressions. Moreover, current DeepFake detectors have limited generalizability and robustness. Our method builds on the observation that DeepFakes may disrupt smooth and continuous facial muscle movements: we design a dual-branch network to explore expression cues from the eyes and mouth regions. These high-level semantic features improve the model's generalization to unseen DeepFake data and provide strong robustness against various image degradations and adversarial attacks. The evaluation and comparison experiments validate the significant contribution of inconsistent expression cues to DeepFake detection, and the ablation studies consistently confirm that the effectiveness of our method lies in combining the forgery clues from the eyes and mouth regions.

More importantly, our method generalizes well across datasets, and with further optimization it could scale to larger datasets or to other types of forgeries, such as videos with multiple forged regions, as well as to DeepFake detection across various devices and platforms. Its robustness against various levels of postprocessing and adversarial attacks makes it adaptable to complex real-world scenarios, such as low-resolution videos or other quality degradations. Finally, our method focuses on detecting forged facial videos and can be integrated into multimedia verification systems to protect the privacy and security of individuals' information, particularly in applications such as facial recognition and emotion analysis.

6. Conclusions

In this paper, we introduce a novel DeepFake detector that relies on inconsistent facial expression cues. Through extensive experiments, we have shown that synthetic faces generated by GANs fail to maintain spatial and temporal consistency in facial expressions. Building on this analysis, we have developed a robust and generalizable DeepFake detector that exploits the irregular patterns of facial movements. We extract these patterns using MSTR maps of the eyes and mouth regions and process them with a dual-branch framework that further improves the effectiveness of our approach. The experiments validate the effectiveness of our method across different generators and input-video qualities. We believe that a significant contribution of this study is the observation of inconsistent expression cues between natural and synthetic faces, particularly in the eyes and mouth regions, together with our investigation and explanation of expression motion patterns in forged faces. We hope that our method will contribute to combating advanced DeepFake techniques.

We acknowledge that while the proposed method demonstrates outstanding performance in detecting DeepFake videos in the current experiments, it may face certain challenges when dealing with highly sophisticated forgery techniques, such as talking-face videos generated by diffusion models. In future research, we plan to explore more diverse feature extraction methods and incorporate cross-modal information, such as audio and text, to enhance robustness and detection performance against advanced DeepFakes.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the National Natural Science Foundation of China under Grant no. 62372334 and the National Key Research and Development Program of China under Grant no. 2023YFB3106900.


Open Research

Data Availability Statement

The datasets are publicly available.