An Overview of Robot Embodied Intelligence Based on Multimodal Models: Tasks, Models, and System Schemes

Abstract

The exploration of embodied intelligence is widely regarded in the field of artificial intelligence (AI) as a path toward artificial general intelligence (AGI). Classical AI models, which learn from labeled data, struggle to adapt to dynamic, unstructured environments because of their offline learning paradigm. In contrast, embodied intelligence emphasizes interactive learning: agents acquire richer training information through interaction with the environment, which enables autonomous learning and action. Early embodied tasks centered primarily on navigation. With the surge in popularity of large language models (LLMs), the focus shifted to integrating LLMs and multimodal large models (MLMs) with robots, enabling them to tackle more intricate tasks through reasoning and planning that draw on the prior knowledge encoded in LLMs/MLMs. This work reviews early embodied tasks and the corresponding research, categorizes the embodied intelligence schemes currently deployed in robotics in the context of LLMs/MLMs, summarizes the perception–planning–action (PPA) paradigm, evaluates the performance of MLMs across different schemes, and offers insights into future research directions in this domain.

1. Introduction

Embodied intelligence is currently one of the greatest challenges in artificial intelligence (AI). The concept dates back to 1950. In recent years, as algorithms, theories, and hardware for AI have advanced, both academia and industry have shifted their focus to embodied intelligence, seeking a path to artificial general intelligence (AGI) through its exploration [1–4]. Embodied cognition theory posits that thinking and cognition largely depend on, and originate from, the body: the structure of the body and of the nervous system, together with the activity of the sensory and motor systems, determines how we understand the world and shapes our style of thinking. Embodied cognition emphasizes the importance of physical entities; inspired by this, the ultimate objective of AI is to build human-like intelligent robots capable of autonomous planning, decision-making, and execution. Such agents must possess physical bodies to interact with the real world in multiple ways, perceive and comprehend their surroundings as humans do, and handle complex tasks proficiently through autonomous learning, capabilities that lie far beyond those of current robots [5–7]. Achieving this goal requires understanding the origins of human intelligence. Although certain contributing factors have been identified, the overall mechanism by which intelligence arises remains elusive. Consequently, scholars have proposed various hypotheses, including a prominent theory that intelligence stems from interaction with the environment, which underpins the concept of embodied intelligence [8–11]. Unlike AI models, which typically lack a physical body, humans have a distinct ability to learn through interaction, which is postulated to stem from our capacity to perceive our bodies in space. 
This includes sensing the position of our limbs during movement, discerning other objects and media, and consciously manipulating our body parts to engage with real-world objects [12, 13]. Embodied intelligence theory underscores the unity of body and mind. Classical AI models lack a physical body and therefore rely on offline learning from human-annotated datasets rather than on interaction with the environment, which limits them when they are applied to robots. Robots equipped with such models have only a rigid set of operating rules and can learn only within a narrow action space. Consequently, they cannot learn autonomously in dynamic, unstructured environments, which makes interacting with such environments particularly challenging. Furthermore, without the corresponding labels, they cannot acquire the related concepts [14–16]. From this perspective, embodied intelligence can be understood as the capacity to achieve intelligent behavior through the interplay between a physical body and its surrounding environment. In contrast to the traditional third-person learning paradigm of AI, the objective of embodied intelligence is to perceive the environment from an egocentric perspective and to learn continually, from information gathered through interaction, to address the challenges posed by dynamic, unstructured settings. An embodied intelligent system is generally regarded as encompassing four pivotal components: (1) a physical entity endowed with environmental perception, motion, and operational execution capabilities; (2) an intelligent core responsible for essential functions such as perception, understanding, decision-making, and control. This includes interpreting semantic information in the environment, understanding specific tasks, and making decisions based on environmental changes and target states to direct the physical entity in completing tasks. 
It also entails learning decision-making and control paradigms from complex data and evolving continuously to accommodate more sophisticated tasks and environments; (3) extensive training data. Over the past few decades, AI technology has evolved constantly, and research institutions in many countries have persistently developed AGI-oriented robots, endeavoring to extend existing AI models into embodied contexts. In 2016, the Go match between Google DeepMind's AlphaGo and the top professional player Lee Sedol marked a milestone in AI, demonstrating the promise of deep reinforcement learning (DRL) in interactive settings. Reinforcement learning (RL) focuses on learning a policy that maximizes the long-term cumulative reward (with penalties counted as negative rewards) that an agent receives from its interaction with the environment. Its core principle is to interact with the environment in real time, learn from a sequence of successes and failures, and maximize the cumulative reward, ultimately arriving at an optimal policy. This method is commonly applied to tasks with limited state and action spaces. Since 2013, researchers have studied RL intensively, and it has had a substantial influence across numerous domains. Notably, OpenAI developed Dactyl, a system that trains a humanoid robot hand to manipulate physical objects with dexterity. Meanwhile, DeepMimic, an example-guided DRL method, enables simulated humanoid characters to master intricate motor skills by imitating reference motions. Combining robots with RL enables them to tackle diverse tasks within a specified spatial range with robust adaptability and autonomy. RL has thus laid the cornerstone for environment interaction in embodied intelligence research. 
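To make the RL loop described above concrete, the following is a minimal sketch of tabular Q-learning, one standard RL algorithm, on a hypothetical five-state corridor in which the agent earns a reward of 1 only upon reaching the rightmost state. The environment, reward, and hyperparameters are assumptions chosen for illustration; none of them come from AlphaGo, Dactyl, or DeepMimic.

```python
import random

N_STATES = 5          # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Hypothetical environment: reward 1.0 only when the goal state is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:            # explore
                action = rng.choice(ACTIONS)
            else:                                 # exploit, breaking ties randomly
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # TD target: immediate reward plus discounted value of the best next action.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

def greedy_policy(q):
    """Best action in each non-terminal state under the learned Q-values."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
```

After training, `greedy_policy(q_learning())` should map every non-terminal state to `1` (move right), because the discounted cumulative reward is highest along the shortest route to the goal. The epsilon-greedy rule is what lets the agent learn "from a sequence of successes and failures" rather than only repeating its current best guess.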
As natural language processing (NLP) capabilities have advanced and downstream tasks such as visual question answering and image captioning have been introduced, research has increasingly explored embodied tasks and attempted to extend multimodal models into embodied environments. The release of ChatGPT in 2022 brought large language models (LLMs) such as GPT into the spotlight. It highlighted the emergent capabilities of LLMs and their profound impact on earlier multimodal models, making multimodal large models (MLMs) a focus of research. Various research institutions have incorporated LLMs/MLMs into embodied robot research, positioning robots as the executors of LLM/MLM directives while the LLMs/MLMs serve as the controllers and decision-makers that direct the robots' interaction with their environment to accomplish tasks.

This work reviews previous research on embodied intelligence. The rest of this paper is organized as follows: Section 2 summarizes various embodied tasks (embodied exploration, visual language navigation (VLN), and embodied question answering) in terms of their model frameworks, algorithms, and research tools; Section 3 introduces the current research progress and achievements of embodied intelligence guided by LLMs/MLMs; Section 4 evaluates the performance of MLMs in these embodied schemes; finally, Section 5 analyzes the current development status of embodied intelligence, summarizes the application limitations of each embodied solution, and discusses future challenges.

2. Review of the Development of Embodied Tasks

The embodied performance of a robot system manifests primarily in its interaction with the environment or scene, and different embodied tasks place distinct requirements on how the agent interacts with the scene. In this section, we briefly review early embodied tasks, describing their meanings, backgrounds, methods, and models. Early embodied tasks can be broadly categorized into embodied navigation, embodied exploration, and embodied question answering. These tasks are interconnected, with dependencies among them that we elaborate on later.

2.1. Embodied Navigation

Since the introduction of the first mobile robot in the 1960s, navigation has attracted significant attention as a pivotal robot capability. As a core technology, it lays the groundwork for robots to accomplish diverse complex tasks and is widely applied in production and daily life. Mobile robot navigation encompasses a multilayered architecture of perception, decision-making, and control. Robot navigation can be categorized in three ways: by system framework, into modular framework–based and end-to-end framework–based navigation; by the presence or absence of environmental priors, into map-based and mapless navigation; and by input signal, into visual navigation and VLN.

2.1.1. Modular Framework–Based Navigation and End-to-End Framework–Based Navigation

The classic approach to robot navigation uses a modular framework that decomposes the navigation task into subtasks such as localization, mapping, path planning, and motion control. Each subtask has its own solution, and the overall framework accommodates diverse algorithms, from classical control to machine learning [17]. Localization algorithms let robots determine where they and their targets are; in open environments, navigation can simply use the direction of the target point as the forward direction. Simultaneous localization and mapping (SLAM) and related algorithms construct map models that provide robots with environmental priors. Path planning aims to find a feasible, obstacle-free path from the initial position to the target position in configuration space. Localization, mapping, and planning improve overall navigation efficiency by providing endpoint guidance, environmental priors, and global guidance, respectively [18]. Although modular methods are mature, they rely heavily on manual design and are susceptible to the accumulation of sensor noise, which reduces the robustness of the associated algorithms and hinders deployment in unfamiliar, complex environments. In contrast, end-to-end navigation methods acquire navigation knowledge directly from data through end-to-end training. Their advantage lies in eliminating manual design, and in some cases even explicit intermediate steps such as mapping and planning. For instance, Mousavian et al. [19] used semantic segmentation and detection masks, obtained with state-of-the-art computer vision algorithms, as observations for a deep network that learns the navigation policy. Such data-driven methods learn a direct mapping from raw observations to actions for the task. Similarly, the cognitive mapping and planning (CMP) approach of Gupta et al. [20] learns navigation plans from data, although it still builds a map. Its mapper module processes the robot's first-person images and integrates observations into a latent spatial memory on which a planner operates, so the model can be used online in novel environments without a preconstructed map. In end-to-end navigation methods, the navigation problem thus reduces to learning a policy that, at every time step, takes the current inputs (current image, egomotion, and target specification) and outputs actions that move the robot quickly to the target.
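The path-planning stage of a modular pipeline can be illustrated with a toy example: a binary occupancy grid stands in for the output of the mapping module, a known start cell stands in for localization, and A* search plays the role of the planner. The grid, the 4-connected motion model, and the Manhattan heuristic are assumptions chosen for brevity; a real system would build the map with SLAM and hand the resulting path to a motion controller.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected binary occupancy grid (0 = free, 1 = obstacle).

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:                 # reconstruct the path by walking parents back
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue                     # stale heap entry; a cheaper route was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None
```

For example, on the grid `[[0, 0, 0, 0], [1, 1, 1, 0], [0, 0, 0, 0]]`, `astar(grid, (0, 0), (2, 0))` must detour around the wall through column 3, giving a nine-cell path. An end-to-end method would instead replace this explicit map-and-search step with a learned mapping from observations to actions.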

2.1.2. Map-Based Navigation and Mapless Navigation

Common maps used in navigation include grid maps, geometric maps, topological maps, and semantic maps. Grid maps represent the environment as an array of cells on a plane, typically using binary values to mark each cell as traversable or occupied, which provides a foundation for subsequent path planning. They are usually built with LiDAR sensors [21]. Grid maps have an intuitive format and are easy to create and maintain. However, their navigation accuracy depends directly on cell size: a fine resolution consumes substantial memory and slows search, whereas a coarse resolution can yield an imprecise or distorted representation of the environment. Geometric maps reconstruct the environment by representing obstacles through geometric features such as points, lines, and planes. Localization then involves comparing the environmental data perceived by cameras with the reconstructed geometric model and pinpointing the robot's location through feature estimation techniques [22]. Topological maps describe the environment through key nodes and the edges connecting them, without requiring precise physical coordinates or dimensions; they focus on nodes and their topological relationships [23, 24]. Nodes represent locations in the environment, while edges denote traversability between connected nodes. Topological maps provide internode distance and orientation information that guides robot movement between nodes. They require little memory, allow short search times, and offer excellent real-time performance in navigation and localization systems, but they struggle to represent environments with complex structure. As a more advanced representation, semantic maps describe both environmental features and semantic information. They offer extensive information on open spaces, observation points, unexplored areas, observed objects, and agent actions, forming highly valuable and compact representations for agent navigation [25–27]. Furthermore, with the progress of multimodal technology, new map types capture environmental priors better. Huang et al. [28] proposed audio-visual language maps (AVLMaps), a unified 3D spatial map representation that stores cross-modal information from audio, visual, and linguistic cues, improving the accuracy of navigation target indexing. When robots navigate unknown environments, they must accomplish three tasks: safe exploration/navigation, mapping, and localization. Performing mapping and localization online and autonomously while exploring an unknown environment constitutes SLAM, in which a mobile robot with no prior knowledge of its location incrementally builds a map of the environment from continuous sensor observations, for example from cameras, while simultaneously estimating its own position within that map [29].
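A topological map, as described above, is essentially a graph whose nodes are places and whose edges mark traversability, annotated with internode distances. The sketch below stores one as an adjacency dictionary and plans a node sequence with Dijkstra's algorithm; the room names and edge distances are hypothetical, and metric detail inside each edge is deliberately omitted, which is what keeps the representation compact.

```python
import heapq

# Hypothetical topological map: node -> {neighbor: traversal distance in meters}
TOPO_MAP = {
    "hall": {"kitchen": 4.0, "living_room": 3.0},
    "kitchen": {"hall": 4.0, "pantry": 2.0},
    "living_room": {"hall": 3.0, "bedroom": 5.0, "pantry": 6.0},
    "pantry": {"kitchen": 2.0, "living_room": 6.0},
    "bedroom": {"living_room": 5.0},
}

def plan_route(graph, start, goal):
    """Dijkstra's shortest path over node-to-node edges; returns (distance, nodes)."""
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        dist, node, route = heapq.heappop(frontier)
        if node == goal:
            return dist, route
        if node in visited:
            continue                     # already expanded with a shorter distance
        visited.add(node)
        for neighbor, edge in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(frontier, (dist + edge, neighbor, route + [neighbor]))
    return float("inf"), []              # goal not connected to start
```

With this map, `plan_route(TOPO_MAP, "hall", "pantry")` prefers the route through the kitchen (6 m) over the one through the living room (9 m). Because the search visits only a handful of nodes rather than every grid cell, it illustrates why topological maps allow short search times.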

Mapless navigation does not require an environmental map: the robot acts on real-time observations captured by cameras, with no need to specify the absolute coordinates of obstacles. DRL is the predominant method for mapless navigation. However, DRL requires large amounts of data, and its models are sensitive to environmental disturbances and generalize poorly to new targets, which hinders real-world application. Improving generalization to new targets and reducing training costs are therefore crucial for DRL-based mapless navigation [30, 31], and research in this area has been active. Zhu et al. [32] proposed a target-driven visual navigation approach that seeks the shortest action sequence to move an agent from its current position to a target specified by an RGB image. It learns a stochastic policy function with two inputs, the current state and the target, that produces a probability distribution over the action space, so the model does not need to be retrained when new targets are added. Kahn et al. [33] introduced a generalized computation graph to learn collision avoidance strategies for mobile robots, formulating the problem as RL and rewarding the robot for collision-free navigation.
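The central idea of the target-driven approach of Zhu et al. [32] is that the policy takes both the current observation and the target as inputs, so a new target changes only an input rather than requiring a new model. The following toy forward pass illustrates that interface under several assumptions: feature vectors are taken as precomputed, a single hypothetical linear layer with random weights stands in for the deep network, and the four-action set is illustrative.

```python
import math
import random

ACTIONS = ["forward", "backward", "turn_left", "turn_right"]  # assumed action set

def softmax(logits):
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

class TargetConditionedPolicy:
    """Hypothetical linear policy pi(a | state, target) over a fixed action set."""

    def __init__(self, feat_dim, seed=0):
        rng = random.Random(seed)
        in_dim = 2 * feat_dim             # state features concatenated with target features
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in ACTIONS]

    def action_distribution(self, state_feat, target_feat):
        x = list(state_feat) + list(target_feat)   # both inputs share one network
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]
        return dict(zip(ACTIONS, softmax(logits)))

    def sample(self, state_feat, target_feat, rng):
        """Draw one action from the stochastic policy."""
        dist = self.action_distribution(state_feat, target_feat)
        return rng.choices(list(dist), weights=list(dist.values()))[0]
```

Calling `action_distribution` with the same state but two different target vectors yields two different distributions from the same weights, which is the property that lets such a policy handle new targets without retraining. Training these weights with DRL is outside the scope of this sketch.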

2.1.3. Visual Navigation and VLN

Within the classic navigation framework, robot navigation based on laser, infrared, and ultrasonic sensors has advanced rapidly and is widely used. However, high costs and limited adaptability to adverse weather, such as rain and snow, have hindered its practical deployment. In contrast, visual sensors are cost-effective and retain semantic information about the environment, giving vision-based navigation better development prospects. Vision-based indoor navigation captures information about the surroundings with cameras, distinguishes obstacles from free space, and plans feasible paths, enabling autonomous mobile robot navigation.

A common approach to map-based visual navigation extracts and processes features of the operating environment before navigation. A global map is then built, stored in the robot's database, and retrieved for matching and localization during navigation. This involves comparing image features or landmarks captured by cameras with maps in the database, calculating matching probabilities, determining the robot's pose, and planning an appropriate path with the planning module [34]. Users favor navigation systems with simple structures, robust adaptability to complex environments, and autonomous capabilities, and modular navigation methods increasingly fail to meet these demands for intelligent autonomous navigation. Target-driven visual navigation has emerged as a superior solution [35, 36]. In terms of navigation targets, inputs to the navigation system can be coordinate points, object labels, images, or natural language [37–39]. When targets are given as coordinate points, the agent typically relies on a metric map to travel from a specific point to the target location. With label-specified targets, visual navigation involves observing the surroundings to find instances of the specified object category. For image-specified targets, the agent uses visual observations to move toward the object depicted in the image. Natural language targets require the agent to comprehend the description, extract target information, and match it with semantic scene information, which constitutes the more advanced task of VLN.
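The matching step of map-based visual navigation can be sketched as follows. Each database keyframe is assumed to store a global image descriptor together with the pose at which it was captured; the current camera descriptor is compared against all of them, and a softmax turns the similarity scores into matching probabilities. Cosine similarity, the softmax temperature, and the `(x, y, heading_deg)` pose format are assumptions; practical systems typically use learned or handcrafted local features followed by geometric verification.

```python
import math

def cosine(u, v):
    """Cosine similarity between two descriptor vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def localize(query_desc, database, temperature=0.1):
    """Return (best_pose, match_probabilities) for a query image descriptor.

    database: list of (descriptor, pose) pairs, with pose = (x, y, heading_deg).
    """
    sims = [cosine(query_desc, desc) for desc, _ in database]
    # A softmax over similarities turns raw scores into matching probabilities.
    m = max(sims)
    exps = [math.exp((s - m) / temperature) for s in sims]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(database)), key=lambda i: probs[i])
    return database[best][1], probs
```

The returned pose would then seed the planning module. A lower temperature makes the matching distribution sharper, which is one simple way to express confidence in the best match.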

| Task | Method | Input | Output | Characteristics | Metric | Dataset/simulator |
|---|---|---|---|---|---|---|
| Embodied navigation | [19] | RGB image, depth image, previous action, success indicator | Action | | SR | |
| | [20] | Image | Action | | ADG, SR | — |
| | [32] | RGB image | Action | | ATL, SR | AI2-THOR [42] |
| | [43] | | Action | | NE, ADG, SR | R2R [44] |
| | [45] | | Path | | SR | BnB [46] |
| | [47] | | Action | | PS, PE | — |
| | [48] | RGB-D image | Action | | SR, SPL, ADG | |
| | [51] | | Action | | TL, NE, SR, SPL, CLS, nDTW, SDTW | |
| | [54] | | Action | | TL, NE, OSR, SR, SPL | R2R |
| | [55] | | Action | | TL, NE, SR, SPL | |
| Embodied exploration | [56] | Image | Action | Curiosity mechanism | AG | |
| | [58] | Image | Action | Novelty mechanism | AG | Atari games |
| | [59] | | Action | Coverage mechanism | Coverage, LSR, SPL | House3D [60] |
| | [61] | Image sequence | Action | Reconstruction mechanism | RE | |
| Embodied question answering | [64] | | | Modular | QA, NA | EQA-v1 [64] |
| | [65] | | Answer | Modular | QA | IQUAD V1 [65] |
| | [66] | | Answer | Modular | QA, NA, SL | MT-EQA [66] |
| | [67] | | Answer | Modular | QA | IQUAD V1 |

VLN aims to develop embodied agents that can communicate with humans in natural language, perceive their environment, and navigate in real 3D settings. Following natural language instructions, the agent moves through a 3D environment from an arbitrary starting point, approaching the target step by step and capturing visual images at each step. This requires continuous environmental perception and the prediction of actions, such as moving and turning, conditioned on the language instructions. Compared with pure visual navigation, VLN requires the agent to understand and coordinate multimodal information effectively while interacting more richly with the environment.

Different VLN tasks place different requirements on agent–environment interaction. Room-to-Room [68] is a prevalent task in which an agent receives a natural language instruction, observes an initial RGB image determined by its starting pose, and performs a series of actions (each producing a new pose and image observation) until it executes a stop action. The REVERIE task [53] differs: based on a natural language instruction, the agent must navigate to a remote object in a photorealistic 3D environment and identify it (highlighted with a red bounding box) among multiple candidates. Speaker-Follower [43] is a VLN model for Room-to-Room tasks designed to compensate for the scarcity of instruction data. It comprises a speaker model that generates textual route instructions and a follower model that navigates according to instructions. During training, the speaker generates instructions for additional paths, supplying the follower with augmented training data.
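Because a Room-to-Room episode ends when the agent issues the stop action, evaluation compares the stop position with the goal; several metrics in the table above (NE, SR, SPL) are computed this way. The sketch below computes navigation error (NE), success rate (SR), and success weighted by path length (SPL) from logged episodes. The 3 m success radius follows the common R2R convention; the `Episode` record format is hypothetical, and measuring error as straight-line distance is a simplification, since R2R uses geodesic distance on the navigation graph.

```python
import math
from dataclasses import dataclass

@dataclass
class Episode:
    trajectory: list          # agent positions (x, y) from the start to the stop action
    goal: tuple               # goal position (x, y)
    shortest_path_len: float  # length of the shortest start-to-goal path

def path_length(points):
    """Total length of the polyline the agent actually traveled."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def evaluate(episodes, success_radius=3.0):
    ne_total = sr_total = spl_total = 0.0
    for ep in episodes:
        # Straight-line error stands in for geodesic distance (simplification).
        error = math.dist(ep.trajectory[-1], ep.goal)
        success = 1.0 if error <= success_radius else 0.0
        taken = path_length(ep.trajectory)
        ne_total += error
        sr_total += success
        # SPL credits a success only in proportion to how efficient the path was.
        spl_total += success * ep.shortest_path_len / max(taken, ep.shortest_path_len)
    n = len(episodes)
    return {"NE": ne_total / n, "SR": sr_total / n, "SPL": spl_total / n}
```

An agent that reaches the goal after a 7 m detour on a 5 m shortest path still counts fully toward SR but contributes only 5/7 to SPL, which is why SPL is reported alongside SR.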

VLN involves multiple modalities, namely vision, language, and action, and training is challenging because collecting training data is costly and time-consuming. Current research focuses on adapting models trained in virtual environments to unseen environments, improving generalization, and enabling real-world application. Pretrained models offer a promising solution, as they learn transferable multimodal representations that achieve top-tier performance on various vision and language tasks [45, 69, 70]. With the rise of pretrained LLMs and MLMs, their application to VLN has shown substantial advantages. LM-Nav [47] is a robot navigation system built on pretrained large language and multimodal models that executes natural language instructions through self-supervised navigation, without annotated navigation data. Given unstructured text instructions, LM-Nav uses a pretrained language model to extract a sequence of textual landmarks, uses a visual language model to ground these landmarks in a topological map, and applies a novel search algorithm to find the plan with the highest probability, which a visual navigation model then executes. L3MVN [48] uses LLMs to supply commonsense knowledge for object search, builds environment maps with frontier-based exploration, and selects long-term goals for efficient exploration and search.
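The grounding-and-search step of LM-Nav can be approximated, for illustration only, as a Viterbi-style dynamic program: assign each extracted landmark, in order, to a topological-map node so as to maximize the sum of log match probabilities minus a penalty on travel distance. The sketch assumes the vision-language model has already produced `match_prob[i][v]` (all strictly positive), that shortest-path distances between nodes are precomputed, and that a hypothetical weight `lam` trades match quality against distance; the actual LM-Nav objective and search are more involved.

```python
import math

def plan_landmark_route(match_prob, dist, start, lam=0.1):
    """Viterbi-style search over (landmark, node) assignments.

    match_prob[i][v]: assumed VLM probability (> 0) that node v shows landmark i.
    dist[u][v]: precomputed shortest-path distance between nodes u and v.
    Maximizes sum(log p) - lam * total travel, visiting landmarks in order.
    Returns one node index per landmark.
    """
    n_land, n_nodes = len(match_prob), len(match_prob[0])
    score = [[-math.inf] * n_nodes for _ in range(n_land)]
    back = [[None] * n_nodes for _ in range(n_land)]
    for v in range(n_nodes):                 # first landmark: travel from the start node
        score[0][v] = math.log(match_prob[0][v]) - lam * dist[start][v]
    for i in range(1, n_land):
        for v in range(n_nodes):
            # Best previous node u for reaching landmark i at node v.
            u_best = max(range(n_nodes), key=lambda u: score[i - 1][u] - lam * dist[u][v])
            score[i][v] = (score[i - 1][u_best] - lam * dist[u_best][v]
                           + math.log(match_prob[i][v]))
            back[i][v] = u_best
    v = max(range(n_nodes), key=lambda x: score[-1][x])   # best final node
    route = [v]
    for i in range(n_land - 1, 0, -1):                     # backtrack
        v = back[i][v]
        route.append(v)
    return route[::-1]
```

Working in log space turns the product of match probabilities into a sum, so the dynamic program runs in time proportional to the number of landmarks times the square of the number of nodes. The resulting node sequence would then be handed to a low-level navigation model for execution.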

In addition, VLN is a sophisticated inference task whose outcome depends on an accumulation of steps. Auxiliary tasks help agents understand their environment and current state more deeply without additional labeling. Such understanding can be obtained by interpreting past behavior, predicting information relevant to future decisions [71, 72], estimating the progress of the ongoing task, and aligning visual observations with instructions [51, 73, 74]. AuxRN [54] uses four auxiliary tasks (Trajectory Retelling, Progress Estimation, Cross-modal Matching, and Angle Prediction) to generate precise navigation routes. For HOP [55], trajectory order is also pivotal: the model is pretrained on five tasks, namely masked language modeling, Action Prediction with History, Trajectory Structure Matching, Trajectory Order Modeling, and Group Order Modeling, which refine its trajectory generation capabilities. Furthermore, VLN tasks can be extended into multiround dialog tasks that are better suited to practical applications. The visual dialog navigation task [75] aims to develop an intelligent agent that communicates through continuous natural language dialog and navigates according to human responses. This requires the model to have memory and cross-modal reasoning abilities. The cross-modal memory network (CMN) uses separate language and visual memory modules to recall and comprehend the dialog and visual inputs associated with past navigation behavior, and analyzes this decision history to make the current navigation decision.
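Auxiliary-task methods such as AuxRN train the navigation policy jointly with the auxiliary objectives. A generic form of such a multitask objective, assuming a simple weighted sum with hand-chosen weights $\lambda_k$ (the exact weighting used in [54] and [55] may differ), is:

```latex
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{nav}} + \sum_{k=1}^{K} \lambda_k \, \mathcal{L}_{\text{aux}}^{(k)}
```

Here $\mathcal{L}_{\text{nav}}$ is the main action-prediction loss and, for AuxRN, $K = 4$, with one auxiliary term each for trajectory retelling, progress estimation, cross-modal matching, and angle prediction. Because auxiliary labels such as the fraction of the path already completed can be computed from the trajectory itself, these terms add supervision without additional annotation.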

2.2. Embodied Exploration

In embodied exploration tasks, agents learn to navigate unfamiliar environments and gather information needed for future tasks in those environments [76]. When an agent finds itself in uncharted territory, actively exploring and acquiring basic knowledge about its surroundings are crucial for performing new tasks efficiently. For example, if a home robot is expected to execute a command such as “bring some fruits from the kitchen to the owner,” it must first explore the home to learn what each room is used for, which path to take, and where the items are located.

Navigation is central to embodied visual exploration. Unlike task-oriented navigation, embodied visual exploration has no definitive goal; instead, it aims to collect as much environmental information as possible and build map models that accurately depict the semantic and geometric information of the environment for downstream tasks. Historically, SLAM has been used for map construction, and agents rely on it for localization and path planning; however, it is a well-established but passive exploration method that requires the environment to be traversed in advance [77]. In addition, SLAM relies heavily on sensors and often suffers from noise interference. Learning-based techniques now dominate embodied visual exploration. Compared with traditional SLAM, learning-based approaches build maps from visual information that can be less susceptible to noise, and they explore the environment actively. They learn in new environments with methods that do not require manual labels, such as RL, which reduces manual effort and improves efficiency. However, learning-based exploration methods often face the challenge of sparse rewards. In RL, rewards are often granted only when the agent reaches specific states, and in complex new environments such states cannot be predetermined, so rewards are sparse or absent [78]. Exploration strategies are therefore a major research focus in embodied visual exploration. Four exploration mechanisms from the literature, namely curiosity, novelty, coverage, and reconstruction, provide reward systems that help agents explore their environments. Agents guided by curiosity are attracted to unexpected states and earn rewards when actual states diverge from predicted ones; the larger the discrepancy, the greater the reward, so intrinsic rewards take precedence over external ones [56, 79, 80]. In the novelty mechanism, the goal is to discover states not yet encountered, and agents are rewarded in inverse relation to how often the current state has been visited [58, 81, 82]. Coverage mechanisms encourage agents to observe their environments comprehensively, rewarding them according to the increase in observed objects of interest [59]. Finally, in the reconstruction mechanism, agents attempt to reconstruct the scene from their current view and sensor information; the closer the reconstructed views are to the true ones, the higher the reward [61, 83].
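The four reward mechanisms can each be written as a small function. The sketch below is illustrative only: the squared forward-model prediction error, the 1/sqrt(count) novelty bonus, the set of observed items, and the negative mean-squared reconstruction error are common choices assumed here, not the exact formulations of [56], [58], [59], or [61].

```python
import math
from collections import Counter

def curiosity_reward(predicted_next, actual_next):
    """Curiosity: squared error between a forward model's prediction and reality."""
    return sum((p - a) ** 2 for p, a in zip(predicted_next, actual_next))

class NoveltyReward:
    """Novelty: a bonus that shrinks as a (discretized) state is visited more often."""

    def __init__(self):
        self.counts = Counter()

    def __call__(self, state):
        self.counts[state] += 1
        return 1.0 / math.sqrt(self.counts[state])

class CoverageReward:
    """Coverage: reward equals the number of newly observed items (cells or objects)."""

    def __init__(self):
        self.seen = set()

    def __call__(self, visible):
        new = set(visible) - self.seen
        self.seen |= new
        return float(len(new))

def reconstruction_reward(reconstructed_view, true_view):
    """Reconstruction: higher (closer to zero) when predicted views match the real ones."""
    mse = sum((r - t) ** 2 for r, t in zip(reconstructed_view, true_view)) / len(true_view)
    return -mse
```

In each case the reward is generated by the agent's own experience rather than by an external task signal, which is how these mechanisms address the sparse-reward problem described above.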

2.3. Embodied Question Answering

Malinowski et al. [84] pioneered the visual question answering (VQA) task. Given an image and a natural language question, the model must answer by interpreting both the language and the visual information. They subsequently proposed the Neural Image Question Answering model [85], which treats VQA as a generation problem: image features and question features are extracted, concatenated as input, and fed into an LSTM network that outputs the answer. It is a classic VQA model based on multimodal fusion. The key to such methods is how to fuse visual and textual features, a problem to which many researchers have devoted attention. Common fusion strategies include affine transformation [86], vector outer product [87], and matrix decomposition [88]. Research has shown that traditional VQA methods incorporate features irrelevant to the answer during fusion. To improve answer accuracy, researchers have therefore added attention mechanisms to VQA models to emphasize the relevant visual and textual information [89–91]. Although attention mechanisms have improved accuracy, the answering process remains an opaque, noninterpretable black box, and scholars have begun to question whether VQA models exploit data bias rather than performing genuine reasoning. How to build an effective, interpretable, general model has long been a prominent research topic. Inspired by the way humans break questions into several steps when answering them, the reasoning process of VQA can be serialized. The neural module network (NMN) [92] model constructs multiple submodules needed to answer questions, such as “Find,” “Transform,” “Combine,” “Describe,” and “Measure.”
A combination of submodules is then generated automatically according to the structure of the question and trained jointly. In 2016, Stanford and Facebook jointly released the CLEVR dataset [93] and proposed a new modular visual reasoning method for it [94]. Because CLEVR scenes are visually simple, research on it can focus on the reasoning itself, and work on this synthetic dataset has grown rapidly. Yi et al. [95] proposed the neural-symbolic visual question answering (NS-VQA) system, in which a scene parser de-renders an image into a structured scene representation. NS-VQA is a typical model composed of a scene parser (de-renderer), a question parser (program generator), and a program executor, and it laid the foundation for subsequent visual reasoning models. The central idea of this family of VQA methods is task decomposition, which applies not only to question answering but also to robot navigation, and which laid the foundation for subsequent embodied question answering.
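The NS-VQA idea of executing a symbolic program on a structured scene can be made concrete with a toy executor. The scene attributes, the operation names (`filter`, `count`, `query`), and the hand-written programs below are hypothetical; in NS-VQA the scene representation comes from a learned de-renderer and the program from a learned question parser.

```python
# Structured scene, as a de-renderer might output it (hypothetical attributes).
SCENE = [
    {"shape": "cube", "color": "red", "size": "large"},
    {"shape": "sphere", "color": "red", "size": "small"},
    {"shape": "cylinder", "color": "blue", "size": "small"},
]

def execute(program, scene):
    """Run a list of (operation, argument) steps left to right on the scene."""
    result = scene
    for op, arg in program:
        if op == "filter":            # keep objects whose attribute matches
            key, value = arg
            result = [obj for obj in result if obj[key] == value]
        elif op == "count":           # number of objects in the current set
            result = len(result)
        elif op == "query":           # attribute of the single remaining object
            if len(result) != 1:
                raise ValueError("query expects exactly one object")
            result = result[0][arg]
        else:
            raise ValueError(f"unknown operation: {op}")
    return result

# "How many red objects are there?"
count_red = [("filter", ("color", "red")), ("count", None)]
# "What shape is the small blue object?"
shape_small_blue = [("filter", ("size", "small")), ("filter", ("color", "blue")), ("query", "shape")]
```

Here `execute(count_red, SCENE)` returns 2 and `execute(shape_small_blue, SCENE)` returns `"cylinder"`. Because every intermediate result is an explicit set of objects, the reasoning trace is inspectable, which addresses the interpretability concern raised above.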

The long-term goal of embodied question answering is to create an agent that can perceive its environment, communicate in natural language, and take actions to assist humans. Specifically, the agent is placed in an environment that it experiences from a first-person point of view; it can perform a series of atomic actions and must explore the environment to gather the visual information needed to answer questions. Answering questions in embodied form requires a wide range of AI capabilities, including visual recognition, language understanding, object-oriented navigation, task planning, and commonsense reasoning. Consequently, embodied question answering is regarded as one of the most difficult and complex challenges in current embodied AI research.

Early methods used a hierarchical model comprising four modules: vision, language, navigation, and answering. The navigation module uses an adaptive computation time (ACT) navigator that combines encoded visual and linguistic information to choose the next action and predict its duration, and the answering module answers the question on the basis of images collected over multiple frames [64]. Reference [96] proposes a hierarchical embodied question answering method based on neural modular control, which divides the problem into multiple subtasks that specialized modules complete through navigation and observation. Reference [65] proposed the interactive question answering (IQA) task, which demands a higher level of interaction between the agent and its environment than EQA. In EQA, the agent mainly collects information by navigating and observing the environment, whereas in IQA the agent must act on the environment and change its state, which requires more complex planning and reasoning. There are also multitarget and multiagent embodied question answering tasks. In multitarget tasks, a question involves multiple targets distributed at different locations in the environment rather than a single target, which makes reasoning and navigation more complex [66]. In multiagent embodied question answering, multiple agents cooperate to answer the question, so that no single agent is overburdened with complex multitarget tasks. Distributing tasks among the agents so that work is not duplicated is therefore vital. Reference [67] introduces a scalable optimization-based planner that assigns different viewpoints to each agent, and each agent executes tasks at its own viewpoints.
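The goal of avoiding duplicated work across agents can be illustrated with a much simpler heuristic than the optimization-based planner of [67]. The sketch below uses a greedy assignment that gives each viewpoint to exactly one agent, choosing the agent whose accumulated route would be shortest after visiting it. The 2D positions, the Euclidean travel cost, and the greedy rule are all assumptions for illustration. This is not the method of [67].

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def assign_viewpoints(agents: Dict[str, Point], viewpoints: List[Point]) -> Dict[str, List[Point]]:
    """Greedily give each viewpoint to exactly one agent (hypothetical heuristic).

    A viewpoint goes to the agent whose accumulated route length would be smallest
    after travelling to it, which avoids duplicate visits and roughly balances load.
    Ties are broken by agent name.
    """
    routes: Dict[str, List[Point]] = {name: [] for name in agents}
    position = dict(agents)                   # current end of each agent's route
    length = {name: 0.0 for name in agents}   # accumulated route length per agent
    for vp in viewpoints:
        best = min(sorted(agents), key=lambda name: length[name] + math.dist(position[name], vp))
        length[best] += math.dist(position[best], vp)
        position[best] = vp
        routes[best].append(vp)
    return routes


routes = assign_viewpoints({"a": (0, 0), "b": (10, 0)}, [(1, 0), (9, 0), (2, 0)])
print(routes)  # {'a': [(1, 0), (2, 0)], 'b': [(9, 0)]}
```

Because every viewpoint is appended to exactly one route, no location is explored twice. A real planner would also account for occlusion, viewpoint informativeness, and joint optimization over all assignments.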

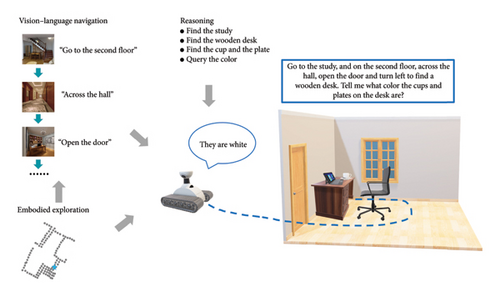

As mentioned earlier, these embodied tasks are not independent of one another. As shown in Figure 1, embodied exploration can serve as preparatory work for downstream tasks by providing prior knowledge of the environment. Both embodied navigation and exploration can support the comprehensive task of embodied question answering.

Table 1 summarizes typical work on the three embodied tasks to help readers understand the types of methods, frameworks, architectures, input data (sensors), benchmarks, and other related information. Table 2 provides a key to the abbreviations and special symbols used there. Training agents to perform embodied tasks also requires a suitable environment (an embodied environment). Training real robots in the real world, however, is expensive and time-consuming, and it is difficult to provide environmental diversity. Therefore, most works first train in a virtual environment and then deploy to the real environment. Table 3 summarizes some commonly used simulation environments. In these environments, users can directly control the state of objects through APIs and interact with objects through an agent or VR devices.

| Abbreviation | Full name |
|---|---|
| SR | Success rate |
| OSR | Oracle success rate |
| TL | Trajectory length |
| NE | Navigation error |
| SPL | Success rate weighted by path length |
| CLS | Coverage weighted by length score |
| nDTW | Normalized dynamic time warping |
| SDTW | Success weighted by normalized dynamic time warping (nDTW) |
| ADG | Average distance to goal |
| ATL | Average trajectory length |
| AG | Average score |
| LSR | Localization success rate |
| RE | Reconstruction error |
| QA | Question answering accuracy |
| NA | Navigation accuracy |
| SL | Spawn location |
| PS | Planning success |
| PE | Planning efficiency |
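Several of the navigation metrics abbreviated above have standard closed-form definitions. The sketch below follows the commonly used formulations: SPL = (1/N) Σ S_i · l_i / max(p_i, l_i), where S_i is binary success, l_i is the shortest-path length, and p_i is the length of the path the agent actually took. nDTW = exp(−DTW(R, Q) / (|R| · d_th)), where R is the reference path, Q is the agent's path, and d_th is a success threshold distance. SDTW = S · nDTW. Trajectories are assumed to be lists of 2D points with Euclidean distance. Individual benchmarks may differ in details such as the distance function.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def spl(successes: Sequence[bool], shortest: Sequence[float], taken: Sequence[float]) -> float:
    """SPL = (1/N) * sum_i S_i * l_i / max(p_i, l_i)."""
    total = sum(l / max(p, l) for s, l, p in zip(successes, shortest, taken) if s)
    return total / len(successes)


def dtw(ref: List[Point], query: List[Point]) -> float:
    """Dynamic time warping distance with Euclidean point-to-point cost."""
    n, m = len(ref), len(query)
    d = [[math.inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(ref[i - 1], query[j - 1])
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]


def ndtw(ref: List[Point], query: List[Point], d_th: float) -> float:
    """nDTW = exp(-DTW(R, Q) / (|R| * d_th)); equals 1.0 for a perfectly matching path."""
    return math.exp(-dtw(ref, query) / (len(ref) * d_th))


def sdtw(success: bool, ref: List[Point], query: List[Point], d_th: float) -> float:
    """SDTW = S * nDTW: path fidelity only counts for successful episodes."""
    return ndtw(ref, query, d_th) if success else 0.0


ref = [(0, 0), (1, 0), (2, 0)]
print(spl([True, True, False], [10, 10, 10], [10, 20, 5]))  # 0.5
print(ndtw(ref, ref, d_th=3.0))                             # 1.0
print(sdtw(False, ref, ref, d_th=3.0))                      # 0.0
```

SPL penalizes detours but ignores which route was taken, whereas nDTW compares the whole trajectory against the reference path. This is why instruction-following benchmarks often report both.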

| Name | Published time | Scene type | Interactive mode | Basic task types |
|---|---|---|---|---|
| DeepMind Lab [97] | 2016 | 3D | API, agent | Composite activities |
| VirtualHome [98] | 2018 | 3D indoor | API, agent | Household activities |
| ALFRED [99] | 2019 | 3D indoor | Agent | Household activities |
| BabyAI [100] | 2019 | 2D | Agent | Instruction following |
| VRKitchen [101] | 2019 | 3D indoor | Agent, VR | Cooking activities |
| Habitat [102] | 2019 | 3D indoor | Agent | Composite activities |
| CHALET [103] | 2019 | 3D indoor | Agent | Household activities |
| SAPIEN [104] | 2020 | 3D indoor | API, agent | Robotic interaction |
| ActioNet [105] | 2020 | 3D indoor | Agent | Household activities |
| ThreeDWorld [106] | 2021 | 3D indoor, 3D outdoor | API, agent, VR | Composite activities |
| iGibson [107] | 2021 | 3D indoor | API, agent, VR | Household activities |
| RFUniverse [108] | 2022 | 3D indoor | Agent, VR | Household activities |
| AI2-THOR [42] | 2022 | 3D indoor | API, VR | Household activities |
| RCareWorld [109] | 2022 | 3D indoor | Agent, VR | Caregiving activities |
| MineDojo [110] | 2022 | 3D indoor, 3D outdoor | Agent | Composite activities |

3. LLM and MLM

Current embodied intelligence is an integrated form of intelligence. It benefits from the improvement and fusion of single-modal models, especially the combination of vision and language models. This combination allows a model to handle multimodal inputs, process visual information according to text prompts, and operate in complicated environments, greatly strengthening its embodied abilities. The initial fusion of vision and language models was aimed mainly at specific tasks, such as classic visual question answering and image captioning. General-purpose models such as LLMs and MLMs give physical entities strong generalization abilities. They enable autonomous robot systems to shift from program-execution orientation to task-goal orientation, which lets them adapt to more intricate embodied activities and marks solid steps toward universal robots [47, 48]. In this section, we review current work on LLMs and MLMs.

3.1. LLM

One of the challenges of visual language tasks lies in comprehending natural language and overcoming the poor generalization caused by linguistic diversity. The Transformer [111] is currently the dominant NLP architecture. Unlike earlier RNN and LSTM models, it relies on attention mechanisms instead of recurrence and convolution to capture semantic dependencies in sentences, and it achieves remarkable performance across NLP tasks. Its computational efficiency and scalability have allowed model parameters to be expanded to over 100B. As the architecture behind early LLMs, the Transformer has had a significant impact on later models. Most current LLMs, for instance BERT [112] and GPT-3 [113], are essentially Transformer variants. BERT is a pretrained language representation model based on the Transformer. It is trained on a large corpus with two unsupervised tasks, masked language modeling and next sentence prediction, and can be fine-tuned for downstream tasks. GPT, in contrast, is an autoregressive model. GPT-1 was built on a generative, decoder-only Transformer architecture and trained with a hybrid of unsupervised pretraining and supervised fine-tuning. Subsequent research on scaling revealed that model performance improves significantly as the parameter count grows. When GPT-3 reached 175B parameters, it exhibited striking emergent abilities [114]. Besides GPT, other language models with large-scale parameters include PaLM [115] and LLaMA [116]. Although these models differ in training process, parameter size, and architectural details (activation functions, embedding layers, etc.), most are still based on the Transformer. We summarize them in Table 4.
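The attention mechanism that replaces recurrence can be written as softmax(QKᵀ/√d_k)V. Below is a minimal NumPy sketch of single-head scaled dot-product self-attention with the causal mask used by decoder-only models such as GPT. The projection matrices are random placeholders rather than trained weights. Multi-head projection, positional encoding, layer normalization, and feed-forward layers are omitted.

```python
from typing import Tuple

import numpy as np


def causal_self_attention(
    x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray
) -> Tuple[np.ndarray, np.ndarray]:
    """Single-head softmax(Q K^T / sqrt(d_k)) V with a causal (decoder-only) mask.

    x: (seq_len, d_model) token embeddings; w_q, w_k, w_v: (d_model, d_k) projections.
    Returns the attended values and the attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])                   # (seq_len, seq_len)
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)                # no attention to later tokens
    scores = scores - scores.max(axis=-1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                                   # 4 tokens, d_model = 8
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, attn = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)   # (4, 8)
print(attn[0])     # the first token can only attend to itself
```

Every token attends to all earlier tokens in one matrix product rather than through a sequential recurrence. This parallelism underlies the computational efficiency and scalability mentioned above.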

| Type | Model | Parameter size | Affiliation |
|---|---|---|---|
| LLM | GPT-3 [113] | 175B | OpenAI |
| | PaLM [115] | 540B | |
| | LLaMA [116] | 13B | Meta |
| | LaMDA [117] | 137B | |
| | GLM [118] | 130B | Tsinghua |
| | BLOOM [119] | 176B | BigScience |
| LVM | ViT [120] | 632M | |
| | CLIP [121] | 695M | OpenAI |
| MLM | KOSMOS-1 [122] | 1.6B | Microsoft |
| | Flamingo [123] | 10.2B | DeepMind |
| | BLIP-2 [124] | 188M | Salesforce Research |
| | ML-MFSL [125] | ≈1.5B | UvA |
| | MiniGPT-4 [126] | 13B | KAUST |
| | Video-LLaMA [127] | ≈13B | Alibaba |
| | LLaMA-Adapter [128] | 1.2M | Shanghai AI Lab |
| | FROMAGe [129] | 5.5M | CMU |

3.2. MLM

LLMs exhibit in-context learning ability. Through prompt learning and reinforcement learning from human feedback (RLHF), they learn human preferences, which enables them to cope with various natural language tasks. They also show some ability in code generation and mathematical problem solving. Although LLMs exhibit surprising zero-shot/few-shot inference performance on most NLP tasks, they are restricted to discrete text. Meanwhile, large-scale visual models have developed rapidly in visual perception. ViT [120] is a large visual model (LVM) inspired by the Transformer. It likewise dispenses with convolution and computes attention between image regions. It divides the image into small patches and feeds the sequence of their linear embeddings into the Transformer, achieving excellent results in image classification and extending to many other tasks. CLIP [121] is an LVM trained with natural-language supervision. During training, image–text pairs are used instead of image labels so that the model learns the correlation between image features and text features. CLIP uses contrastive learning to build general feature representations: it increases the similarity between images and their corresponding text and decreases the similarity between images and unrelated text. CLIP's natural-language supervision demonstrates how cross-modal learning improves generalization, and its ability to relate images to text makes it especially suitable for downstream visual language tasks. Because visual and textual information are often complementary, combining LLMs and LVMs can further enhance the capability of vision–language models on various challenging multimodal tasks.
Combining LLMs with LVMs hinges on two key points: (1) the model's zero-shot/few-shot capability and (2) its cross-modal alignment capability. Most current MLMs build on pretrained unimodal models, such as pure vision models and pure language models, which give them strong zero-shot/few-shot capabilities for generalizing to new tasks. However, unimodal vision and language models are trained within their own modalities, so aligning cross-modal information is the most critical problem. At present there are two main approaches. The first is direct combination, as in KOSMOS-1 [122], where a Transformer directly merges textual and visual features. The second adds a cross-modal alignment network, as in Flamingo [123], which deploys Perceiver Resampler and gated XATTN-dense modules to promote cross-modal interaction. The Perceiver Resampler produces a fixed number of visual outputs, and cross-modal alignment is performed through the cross-attention layers in the gated XATTN-dense modules. BLIP-2 [124] bridges the modality gap with a lightweight Querying Transformer (Q-Former) that connects the image encoder to the LLM. BLIP-2 is trained in two stages: the Q-Former first learns to extract visual representations, and then learns vision-to-language generation of the corresponding text. The Q-Former consists of two modules that share self-attention layers, and it likewise uses cross-attention layers to achieve interaction between vision and text. ML-MFSL [125] defines a multimodal few-shot meta-learning method to bridge the gap between vision and language. It consists of three components: a vision encoder, a meta mapper, and a language model. The meta mapper, which consists of multiple attention modules, maps the visual encoding into the latent space of the language model.
During training, a set of visual prefixes is prepended to the visual features extracted by the vision encoder. The result is fed into the meta mapper, whose output is concatenated with the text features and then passed to the LLM. Overall, the key to cross-modal alignment lies in finding an appropriate mechanism that conveys visual concepts to language models by accumulating shared knowledge across multimodal tasks. From the perspective of AGI, MLMs are more consistent with the way humans comprehend the world and can handle a greater variety of tasks, so they promote embodied intelligence more strongly. We summarize some widely used MLMs in Table 4.
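The contrastive objective described for CLIP can be written as a symmetric cross-entropy over the image–text similarity matrix of a batch. Matched pairs lie on the diagonal, and every other entry acts as a negative. The following NumPy sketch computes that loss from already-computed embeddings. The encoders are omitted, and the fixed temperature of 0.07 is an illustrative assumption (CLIP learns its temperature during training).

```python
import numpy as np


def log_softmax(z: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along one axis."""
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(img_emb: np.ndarray, txt_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of N aligned (image, text) pairs."""
    # L2-normalize so the dot product is a cosine similarity.
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    logits = img @ txt.T / temperature                          # (N, N); diagonal = matched pairs
    diag = np.arange(len(logits))
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # each image must pick its text
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()  # each text must pick its image
    return float((loss_i2t + loss_t2i) / 2)


rng = np.random.default_rng(0)
txt = rng.normal(size=(4, 16))
img_aligned = txt + 0.05 * rng.normal(size=(4, 16))  # images close to their own captions
img_shuffled = img_aligned[[1, 2, 3, 0]]              # pairs deliberately mismatched
print(clip_contrastive_loss(img_aligned, txt) < clip_contrastive_loss(img_shuffled, txt))  # True
```

Minimizing this loss pulls each image toward its own caption and pushes it away from the other captions in the batch, which is the "increase matched similarity, decrease unrelated similarity" behavior described above.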

3.3. Chain of Thought (CoT)

Prompt engineering and fine-tuning have elicited emergent capabilities in LLMs, but these are still insufficient for outstanding performance on challenging tasks. CoT prompting, introduced by researchers at Google in 2022, enhances the reasoning ability of language models and has been shown to be effective on complex reasoning tasks. It improves performance across a range of mathematical, commonsense, and symbolic reasoning tasks [130]. The main idea of CoT is to prompt LLMs to generate not only the final result but also the reasoning process that leads to it, much like human thinking. CoT construction follows two modes: (1) infilling-based and (2) prediction-based. Infilling-based construction infers the steps between the surrounding context (previous and subsequent steps) to fill logical gaps. Prediction-based construction extends the reasoning chain from given conditions (instructions and previous reasoning history) [131]. Both modes share the same goal of ensuring that the generated steps are accurate and consistent. Moreover, several studies have extended single-modal CoT to multimodal CoT (M-CoT), using visual data to enhance reasoning [132].
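CoT is commonly elicited through few-shot prompting. Each exemplar shows the intermediate reasoning before the answer, and the model is expected to continue that pattern for a new question. A minimal prompt builder is sketched below. The exemplar is a simple arithmetic problem of the kind often used to illustrate CoT, and the `Q:`/`A:` layout is an assumed convention, not a requirement of any particular model API.

```python
from typing import List, Sequence, Tuple

# (question, reasoning steps, answer); the exemplar content is illustrative.
Exemplar = Tuple[str, List[str], str]

EXEMPLARS: Tuple[Exemplar, ...] = (
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        ["Roger started with 5 balls.", "2 cans of 3 tennis balls each is 6 tennis balls.", "5 + 6 = 11."],
        "11",
    ),
)


def build_cot_prompt(question: str, exemplars: Sequence[Exemplar] = EXEMPLARS) -> str:
    """Few-shot CoT prompt: every exemplar shows its reasoning before the answer."""
    blocks = [f"Q: {q}\nA: {' '.join(steps)} The answer is {answer}." for q, steps, answer in exemplars]
    # The new question ends with an open "A:" so the model continues with its own reasoning.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


print(build_cot_prompt("A robot carries 3 boxes per trip. How many boxes does it carry in 4 trips?"))
```

Without the reasoning steps, the same exemplar would only show "The answer is 11." The intermediate sentences are what prompt the model to produce its own chain of thought before answering.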

4. LLM/MLM-Based Robot Embodied Intelligence System Schemes

Our ultimate vision for general AI is humanoid robots capable of executing multiple tasks with both dexterity and efficiency. Previously, embodied tasks usually involved navigation, exploration, and question answering, but rarely comprehensive activities with intricate action sequences. LLMs have demonstrated potential for comprehensive task execution, with extensive world knowledge and strong instruction-guided learning abilities [133]. For instance, reference [134] shows that ChatGPT can tackle diverse robot-related tasks in a zero-shot manner, adapting to multiple robot form factors and enabling closed-loop reasoning through dialog. SayCan [135] integrates robots' low-level skills with LLMs. The robot acts as the “hands and eyes” of the LLM, while the LLM supplies high-level semantic knowledge about the task. SayCan defines a set of actions described in natural language, uses prompts and an LLM to propose plausible planning steps, and scores the admissible actions with a learned value function. PROGPROMPT [136], another robot task planning framework, incorporates programming-language structures, predefined operation functions, and environment objects into the prompt. It formulates robot plans as Python programs, so the LLM directly produces executable plans as code. PROGPROMPT represents a planning problem as the tuple ⟨O, P, A, T, I, G, t⟩, where t is the task description, O is the set of all objects in the environment, P is the set of object properties, A is the set of executable actions, T is the transition model used to update the environment state, and I and G are the initial and goal states, respectively. A state s assigns values to the properties P of the objects O in the current environment. Given t, s is repeatedly updated from the previous state by executing actions from A under T until it has moved from I to G.
Both PROGPROMPT and ChatGPT rely on predefined function libraries and accomplish tasks through function calls. In these schemes, LLMs serve primarily as the control hubs of embodied robots, generating task plans. LLMs can also provide prior knowledge that helps robots complete specific tasks, as in the Housekeep task [137] and TidyBot [138]. There, an LLM plans household cleaning by moving each object on the floor to its “appropriate position,” and the summarization capabilities of LLMs are used to derive the general preferences of specific individuals and provide personalized robot services. Visual information is crucial for most real-world tasks, yet LLMs primarily process text, so they must be integrated with visual information in embodied tasks.
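SayCan's selection rule can be summarized as follows. For each skill described in natural language, multiply the LLM's probability that the skill is a useful next step (“Say”) by a learned value function's estimate that the skill can succeed in the current state (“Can”), then execute the highest-scoring skill. The sketch below hard-codes both scores with hypothetical numbers in place of a real LLM and a real value function.

```python
from typing import Dict


def saycan_select(llm_prob: Dict[str, float], affordance: Dict[str, float]) -> str:
    """Pick the skill maximizing p_LLM(skill | instruction, history) * value(skill | state)."""
    scores = {skill: llm_prob[skill] * affordance.get(skill, 0.0) for skill in llm_prob}
    return max(scores, key=scores.get)


# Hypothetical scores for "Bring me the rice chips from the drawer".
llm_prob = {"go to the drawers": 0.2, "pick up the rice chips": 0.6, "open the top drawer": 0.2}
# The robot is not yet at the drawer, so picking up the chips is currently infeasible.
affordance = {"go to the drawers": 0.9, "pick up the rice chips": 0.05, "open the top drawer": 0.1}

print(saycan_select(llm_prob, affordance))  # go to the drawers
```

On its own, the LLM would choose the infeasible "pick up the rice chips". The value-function term grounds the choice in the robot's current state. After the skill executes, the chosen step is appended to the prompt history and the selection repeats.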

In the current embodied intelligence paradigm, LLMs can leverage visual information in two ways. In the first, the LLM invokes diverse visual foundation models (VFMs) and uses their expertise in visual processing for environmental comprehension and interaction, as in Visual ChatGPT [139], MM-REACT [140], and HuggingGPT [141]. This approach requires explicit visual operation prompts. If the prompt describes the task too abstractly, it is difficult to determine which VFMs to call, and invoking multiple models can accumulate errors. In the second, a visual model is merged directly with an LLM to form an MLM for embodied tasks. PaLM-E [142] is the first large-scale embodied multimodal model. It integrates continuous multimodal sensor inputs into an LLM, making real-world agent decision-making more reliable. The PaLM-E system comprises two main components, a pretrained language model (PaLM) and a pretrained visual model (ViT/OSRT), and it accepts multimodal inputs comprising text and images. PaLM-E encodes continuous sensor inputs into vector sequences with the same dimensionality as the language token embeddings, thereby integrating them into the language model. The model can then output answers to classical image–language tasks or produce plan sequences that drive the robot's low-level behavior, addressing diverse embodied reasoning challenges.
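The idea of mapping continuous observations into the language embedding space can be sketched as follows. An image encoder's output is linearly projected to the LLM's embedding width and spliced into the token-embedding sequence wherever a placeholder appears in the prompt. The dimensions, the `<img>` placeholder, the random projection, and the toy vocabulary are all hypothetical. In PaLM-E the encoders and projection are learned, and the resulting sequence is consumed by the language model.

```python
from typing import List

import numpy as np

rng = np.random.default_rng(0)
D_MODEL = 16   # hypothetical LLM embedding width
D_VISION = 32  # hypothetical vision-encoder feature width
VOCAB = {"what": 0, "is": 1, "in": 2, "<img>": 3, "?": 4}  # toy vocabulary

token_table = rng.normal(size=(len(VOCAB), D_MODEL))    # stand-in for the LLM's embedding table
projector = rng.normal(size=(D_VISION, D_MODEL)) * 0.1  # stand-in for the learned projection


def build_multimodal_sequence(tokens: List[str], image_features: np.ndarray) -> np.ndarray:
    """Replace each <img> placeholder with projected visual embeddings.

    image_features: (num_visual_tokens, D_VISION), e.g. patch features from a ViT.
    Returns a (seq_len, D_MODEL) array that a decoder-only LLM could consume.
    """
    parts = []
    for tok in tokens:
        if tok == "<img>":
            parts.append(image_features @ projector)        # (num_visual_tokens, D_MODEL)
        else:
            parts.append(token_table[VOCAB[tok]][None, :])  # (1, D_MODEL)
    return np.concatenate(parts, axis=0)


image_features = rng.normal(size=(5, D_VISION))
seq = build_multimodal_sequence(["what", "is", "in", "<img>", "?"], image_features)
print(seq.shape)  # (9, 16): 4 text tokens + 5 visual tokens
```

Because the visual vectors occupy ordinary positions in the sequence, the language model can attend to them exactly as it attends to words. This is what allows one model to answer questions about images and to emit plans conditioned on them.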

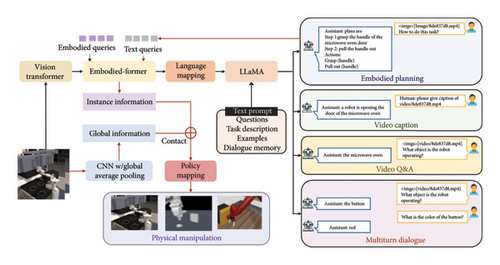

Similar to this work, EmbodiedGPT [143] is another end-to-end MLM. As depicted in Figure 2, EmbodiedGPT comprises four integrated modules:

(1) a ViT-G/14 visual model that encodes observed visual features;
(2) a LLaMA language model that performs question answering, description, and planning tasks;
(3) an embodied-former that bridges the visual and language domains, either passing visual features through a language mapping layer to match the language modality or extracting high-level task-related features from which the downstream network generates low-level control according to the plan; and
(4) a policy network that generates low-level actions from the task-related features extracted by the embodied-former, enabling the agent to interact effectively with the environment.

EmbodiedGPT uses CoT to produce embodied plans and is trained on egocentric data. Its task plans are highly executable and reach the granularity of object parts. Inner Monologue [144] underscores the importance of feedback between agents and the environment in embodied tasks, which enables real-time updates to task plans. In Inner Monologue, feedback mainly consists of Success Detection, Passive Scene Description, and Active Scene Description. Success Detection reports whether a task has been completed. Passive Scene Description provides structured semantic scene information at each planning step. Active Scene Description provides unstructured semantic information only when queried by the LLM planner. Details of these models are given in Table 5, and Table 6 provides a key to the abbreviations and special symbols used there.
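The closed-loop idea behind Inner Monologue can be illustrated by how feedback is appended to the planner's running text context. In the hypothetical sketch below, a fixed plan with retries stands in for the LLM planner. A stub robot fails its first grasp, a passive scene description is inserted before each action, and a success-detection line follows it. The text format and retry policy are assumptions, not the prompts used in [144].

```python
from typing import Callable, List


def inner_monologue(
    instruction: str,
    plan: List[str],
    describe_scene: Callable[[], str],
    execute_step: Callable[[str], bool],
    max_retries: int = 2,
) -> List[str]:
    """Execute a plan while appending scene and success feedback to a text context.

    In Inner Monologue an LLM planner reads this context to choose its next step;
    here a fixed plan plus retries stands in for that planner (an assumption).
    """
    context = [f"Human: {instruction}"]
    for step in plan:
        for _ in range(max_retries + 1):
            context.append(f"Scene: {describe_scene()}")          # passive scene description
            context.append(f"Robot action: {step}")
            ok = execute_step(step)
            context.append(f"Success: {'yes' if ok else 'no'}")  # success detection
            if ok:
                break
        else:  # every attempt failed: stop and leave the failure visible in the context
            context.append("Robot: unable to complete this step")
            break
    return context


attempts = {"pick up the sponge": 0}


def execute_step(step: str) -> bool:
    """Stub robot: the first grasp of the sponge slips; every other step succeeds."""
    if step == "pick up the sponge":
        attempts[step] += 1
        return attempts[step] > 1
    return True


log = inner_monologue(
    "put the sponge in the bowl",
    ["pick up the sponge", "place the sponge in the bowl"],
    lambda: "sponge, bowl",
    execute_step,
)
print("\n".join(log))
```

The "Success: no" line is what allows the planner to notice that the grasp slipped and to try again, rather than continuing open-loop to the placing step.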

| Scheme | Visual model | LLM | Task | Benchmark | Metrics |
|---|---|---|---|---|---|
| SayCan [135] | — | PaLM | TP, MP | Mock kitchen, CRWE | PSR, ESR |
| PROGPROMPT [136] | ViLD | GPT-3 | TP, TPE | VirtualHome∗ [98], CRWE | SR, GCR, Exec |
| TidyBot [138] | ViLD, CLIP | GPT-3 | PP | CRWE | OPA |
| PaLM-E [142] | ViT, OSRT | PaLM | TP, VQA, VC | TAMP, Language-Table, CRWE | SR |
| EmbodiedGPT [143] | ViT-G/14 | LLaMA | TP, VQA, VC | Meta-World∗ [145], VirtualHome∗, Franka Kitchen∗ [146], CRWE | SR |
| Inner Monologue [144] | MDETR | InstructGPT | TP, TPE | Ravens∗ [147], CRWE | SR |

- ∗ denotes a publicly available benchmark.

| Abbreviation | Full name |
|---|---|
| TP | Task planning |
| MP | Motion planning |
| TPE | Task plan execution |
| VQA | Visual question answering |
| VC | Visual captioning |
| PP | Pick and place |
| OPA | Object placement accuracy |
| CRWE | Custom real-world environment |
| PSR | Plan success rate |
| ESR | Execution success rate |
| SR | Success rate |
| GCR | Goal conditions recall |
| Exec | Executability |
| ∗ | Publicly available benchmark |

MLM-based embodied robot systems share several common features:

(1) Multimodal input. Agents must perceive and understand the environment during real-world tasks, and text alone cannot yield feasible task plans in reality. Combining multimodal information such as images, videos, and feedback states makes planning more reliable.
(2) Multilevel planning. Deploying a complex task on a robot produces a long control sequence that is difficult to generate accurately with an LLM alone. To execute complex tasks in an environment over an extended period, the agent therefore first generates an abstract high-level task plan and then sequentially produces fine-grained low-level plans composed of low-level skills. During execution, the state is fed back to the LLM so that the high-level plan can be adjusted in real time, which forms a control loop.
(3) Pretrained models. Both the visual and language components of MLMs use pretrained large models, which adapt to various tasks through transfer learning and enable few-shot/zero-shot learning [148]. Notably, keeping the pretrained model frozen, that is, leaving its weights unchanged during fine-tuning, preserves the MLM's performance across multiple tasks and keeps the underlying model's versatility intact [149].
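The multilevel planning loop in feature (2) can be sketched as follows. An abstract high-level plan is expanded step by step into primitive skills, the outcome of each skill is fed back, and a failure returns control to the high-level planner so it can adjust the plan. The skill library, the rule-based `high_level_plan` stand-in for an LLM/MLM planner, and the door scenario are all hypothetical.

```python
from typing import Dict, List, Optional, Set

# Hypothetical library mapping abstract plan steps to primitive low-level skills.
SKILLS: Dict[str, List[str]] = {
    "open door": ["approach_door", "grasp_handle", "pull"],
    "go around": ["turn_right", "move_forward", "turn_left"],
    "go to kitchen": ["move_forward", "turn_left", "move_forward"],
}


def high_level_plan(goal: str, blocked: Set[str]) -> List[str]:
    """Rule-based stand-in for the LLM/MLM planner (goal is unused by this stub)."""
    if "open door" in blocked:
        return ["go around", "go to kitchen"]
    return ["open door", "go to kitchen"]


def run_hierarchical(goal: str, failing_skills: Set[str], max_replans: int = 2) -> List[str]:
    """Expand high-level steps into skills, feeding failures back for replanning."""
    executed: List[str] = []
    blocked: Set[str] = set()
    for _ in range(max_replans + 1):
        failed_step: Optional[str] = None
        for step in high_level_plan(goal, blocked):
            for skill in SKILLS[step]:          # low-level expansion of one plan step
                if skill in failing_skills:     # feedback: this skill failed in the current state
                    failed_step = step
                    break
                executed.append(skill)
            if failed_step is not None:
                break
        if failed_step is None:
            return executed                     # the whole high-level plan succeeded
        blocked.add(failed_step)                # report upward; the planner adjusts the plan
    raise RuntimeError("goal not reached within the replanning budget")


print(run_hierarchical("go to the kitchen", failing_skills={"pull"}))
```

When the door handle cannot be pulled, the failure is reported at the level of the abstract step "open door", and the planner replaces it with a detour. The LLM only ever reasons about a few abstract steps, while the long primitive sequence comes from the skill library.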

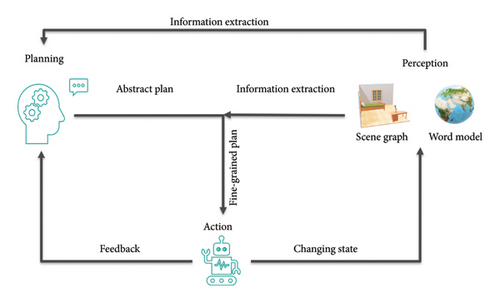

The above analysis suggests that a comprehensive embodied intelligent system typically encompasses multimodal perception, task planning, and instruction following. We summarize these as the perception–planning–action (PPA) paradigm shown in Figure 3. The perception part acquires information about the agent's environment, which is crucial for task planning and execution. It gathers as much information as possible, and what it gathers depends on the task. In grasping tasks, understanding the detailed physical characteristics of objects is essential, which favors building a world model [150]. In exploration tasks, the focus shifts to relationships among scene entities, for which a scene graph is advantageous [151]. The planning part uses an MLM/LLM to generate an executable task plan in natural language from the overall task and environmental information. This plan often lacks fine-grained execution details, so the action part further parses each plan entry into a sequence of actions that the agent can execute to interact with the environment. The primary goal of the action stage is instruction compliance. It invokes the robot's low-level skills, ranging from simple operations such as moving forward and backward, steering, and obstacle avoidance to the advanced operations mentioned earlier, such as environmental exploration and VLN. In short, low-level skills significantly affect the robot's overall physical performance. Much research focuses on expanding low-level skills, including robot action conversion and video learning. Robot action conversion uses a visual language model to convert natural language instructions into robot action sequences based on prompts and scenes, with RT-1/2 being typical examples. RT-1 [152], built on the Transformer architecture, takes images and task descriptions as input and directly outputs tokenized actions, and PaLM-E uses RT-1 as its low-level policy.
Similarly, VIMA [153] encodes input sequences of text and visual prompt tokens with a pretrained language model and autoregressively decodes robot control actions at each environment interaction step. Unlike RT-1, it operates on objects that a detector has first segmented from the image and that are then embedded. LATTE [154] provides more detailed and flexible robot control. It enables robots to complete actions in various ways and modifies motion trajectories according to human intentions and current dynamic spatial constraints while ensuring safety. Video learning acquires behaviors by watching unlabeled online videos and extends them to complex tasks through composition [155]; its main challenge is the lack of labels for model training. VPT [156], for example, trains an inverse dynamics model on a small amount of labeled data and uses it to generate pseudo-labels for the unlabeled video dataset. It then performs large-scale behavioral cloning and fine-tunes the model to downstream tasks with behavioral cloning or RL to acquire various skills. Moreover, the second and third stages of PPA (planning and action) involve substantial embodied reasoning combined with environmental information, and adding a chain of thought to these stages is currently the mainstream choice.
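RT-1 outputs actions as discrete tokens. Each continuous action dimension is discretized into 256 uniform bins so that a Transformer can predict actions the way it predicts words. The sketch below shows uniform binning and its inverse. The action bounds and the three-dimensional action are assumptions for illustration; RT-1's actual action space has more dimensions, covering both arm and base motion.

```python
import numpy as np

NUM_BINS = 256


def tokenize(action: np.ndarray, low: np.ndarray, high: np.ndarray) -> np.ndarray:
    """Map each continuous action dimension to an integer bin in [0, NUM_BINS - 1]."""
    clipped = np.clip(action, low, high)
    scaled = (clipped - low) / (high - low)                   # each dimension now in [0, 1]
    return np.minimum((scaled * NUM_BINS).astype(int), NUM_BINS - 1)


def detokenize(tokens: np.ndarray, low: np.ndarray, high: np.ndarray) -> np.ndarray:
    """Map bin indices back to the continuous value at each bin's center."""
    return low + (tokens + 0.5) / NUM_BINS * (high - low)


low = np.array([-1.0, -1.0, 0.0])   # hypothetical bounds: dx, dy, gripper opening
high = np.array([1.0, 1.0, 1.0])
action = np.array([0.25, -1.0, 1.0])
tokens = tokenize(action, low, high)
print(tokens.tolist())              # [160, 0, 255]
print(detokenize(tokens, low, high))
```

The reconstruction error is at most half a bin width per dimension, a precision cost that is accepted in exchange for casting control as next-token classification.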

5. Conclusion and Future Work

Although AGI remains unrealized, research in the field has progressed into the era of embodied intelligence. In this paper, we have summarized the tasks, model construction, and PPA paradigm associated with embodied intelligence systems. An embodied model aims to accurately perceive the environment, identify relevant objects, analyze their spatial relationships, formulate detailed task plans, and simulate human perception of and interaction with the surroundings. Current embodied intelligence schemes for robots build on earlier advances in perceptual intelligence, integrating and extending those achievements in embodied agents. With an LLM or MLM as the backbone, these systems call upon packaged “skills.” Consequently, the overall embodied performance of current systems improves mainly by combining low-level skills, but it is also limited by them. The LLM or MLM primarily serves as a “remote control,” and the low-level skills are the true drivers. Embodied schemes that integrate diverse VFMs, such as Visual ChatGPT, MM-REACT, and HuggingGPT, have a modular structure that requires every part to execute efficiently, and some of their outputs are unsatisfactory because of VFM failures and prompt instability. Embodied schemes based on MLMs have a more concise structure but face challenges in data efficiency, reasoning ability, and other areas. We discuss the current status and future development of PPA-based embodied intelligent systems along the following directions.

5.1. Task Complexity

A direct indicator of an embodied intelligent system's ability is the complexity of the tasks it can perform. Based on their outputs, we divide current embodied tasks into two types. The first type, embodied action, requires the agent to execute a series of actions that ends with a completion signal. The second, embodied question answering, likewise requires a series of actions but also further analysis, ultimately returning an answer. Embodied schemes often build embodied environments from virtual environments and agents. A typical task is “Bring me the rice chips from the drawer,” in which the mobile agent needs to (1) go to the drawers, (2) open the top drawer, and (3) retrieve the rice chip bag and place it on the counter. Such tasks are relatively straightforward, focusing on navigation and retrieval without extensive environmental interaction or analysis. In SayCan, for example, PaLM-SayCan performed worst on the most challenging long-horizon tasks, and most failures resulted from early termination by the LLM. Such systems therefore have limited tolerance for unexpected situations, such as hidden items or environmental settings that contradict the task description. Task complexity should accordingly be increased during training by incorporating such unexpected factors, so that embodied systems learn to overcome obstacles and execute tasks smoothly. Completing long-horizon tasks requires reasoning over long action sequences in the required order, an abstract understanding of the instruction, and knowledge of both the environment and the robot's capabilities. Understanding complex environments is paramount, yet current schemes hinge on LLM/MLM-based task decomposition that harnesses common sense for basic task planning but fails to grasp specific scenarios.
An ideal embodied intelligent system must function seamlessly across diverse, unforeseen environments. This highlights the need to interpret and execute natural language instructions in ways that strengthen knowledge transfer and generalization in intricate settings.

5.2. Model Reasoning Ability

LLMs contain substantial real-world knowledge acquired from large amounts of data but lack robust reasoning ability. CoT can enhance an LLM's reasoning by adding step-by-step reasoning text, improving the accuracy of generated answers. However, such prompts are not easy to obtain, which motivates zero-shot strategies. Model reasoning methods can be probability-based or symbol-based. LLM reasoning is probability-based: decisions are made from correlations inherent in the data. Compared with symbol-based methods, this approach generalizes well. However, it does not give the model a genuine understanding of the causal relationships among knowledge, behavior, and environment, so its outputs can be biased by the data. Consequently, these models struggle to operate interpretably, robustly, and reliably in real-world settings. Reasoning types include commonsense, relational, logical, and counterfactual reasoning, each requiring distinct solutions. Since neither probability-based nor symbol-based methods cover all reasoning types, hybrid approaches are a promising paradigm to explore.

5.3. Migration From Simulation to Reality

Compared with the extensive language and vision–language datasets available, robot data remain sparse, and acquiring such data is time-consuming and resource-intensive. To build a versatile embodied model that spans various scenarios and tasks in robotics, it is imperative to construct large-scale datasets and to supplement real-world data with data from high-fidelity simulated environments. Nonetheless, the paramount issue with relying on simulated data is the simulation-to-reality gap. Simulation-to-reality adaptation transfers competencies or behaviors acquired in a simulated environment (cyberspace) to real-world scenarios (the physical world). This process involves validating and refining algorithms, models, and control strategies developed in simulation so that they perform robustly and dependably in physical environments. Even a shift between two physical environments degrades performance. Reference [135] reports that in a mock kitchen, PaLM-SayCan attained a planning success rate of 84% and an execution success rate of 74%, whereas in a real kitchen, planning performance dropped by 3 percentage points (to 81%) and execution performance by 14 percentage points (to 60%). The question then arises: how can models trained in simulated environments generalize to the real world without a significant drop in execution performance? Building world models that closely mirror real-world environments and obtaining high-quality data are pivotal to improving generalization.

5.4. Unified Evaluation Criteria

Although numerous benchmarks exist for assessing low-level control strategies, they often differ substantially in the skills they evaluate. Moreover, the objects and scenes included in these benchmarks are frequently constrained by simulator limitations. A thorough evaluation of an embodied model therefore requires realistic simulators and benchmarks that cover a wide range of skills. For high-level task planners, many benchmarks assess planning capability through question-answering tasks. A more effective approach, however, is to evaluate the high-level planner and the low-level control strategy together on long-horizon tasks and measure end-to-end success rates, rather than relying solely on isolated evaluations of the planner. This holistic approach gives a more comprehensive assessment of the capabilities of embodied intelligence systems.
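The difference between isolated and joint evaluation can be illustrated with a toy Python sketch. Everything here is hypothetical: the `Episode` records, the reference plans, and the `execute_step` controller stand in for a real benchmark and robot. Planner accuracy counts only whether the plan is correct, whereas end-to-end success additionally requires every step to be executed by the low-level controller.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Episode:
    task: str
    plan: list[str]            # steps produced by the high-level planner
    reference_plan: list[str]  # hypothetical ground-truth plan used for scoring


def evaluate(episodes: list[Episode], execute_step: Callable[[str], bool]) -> dict[str, float]:
    """Return isolated planner accuracy alongside joint end-to-end success."""
    plan_ok = 0
    full_ok = 0
    for ep in episodes:
        if ep.plan == ep.reference_plan:
            plan_ok += 1
            # all() stops at the first failed step, like an aborted real rollout.
            if all(execute_step(step) for step in ep.plan):
                full_ok += 1
    n = len(episodes)
    return {"planner_accuracy": plan_ok / n, "end_to_end_success": full_ok / n}


if __name__ == "__main__":
    fragile_skills = {"open(drawer)"}  # hypothetical: the controller always fails here

    def execute_step(step: str) -> bool:
        return step not in fragile_skills

    episodes = [
        Episode("fetch cup", ["pick(cup)", "place(cup,table)"], ["pick(cup)", "place(cup,table)"]),
        Episode("store cup", ["open(drawer)", "pick(cup)", "place(cup,drawer)"],
                ["open(drawer)", "pick(cup)", "place(cup,drawer)"]),
        Episode("pour water", ["pour(cup)"], ["pick(cup)", "pour(cup)"]),
    ]
    print(evaluate(episodes, execute_step))
```

In this toy run, the planner is correct on two of three tasks, but only one task succeeds end to end because the controller fails on `open(drawer)`; an isolated planner benchmark would not reveal that failure.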

5.5. Underlying Skill Expansion

In the era of multimodal LLMs, current embodied intelligence systems adopt the PPA paradigm, integrating an MLM with chain-of-thought reasoning for task planning. In essence, this is an integrate-and-invoke methodology: the MLM plans, and existing low-level skills are invoked to carry out the plan. The primary limitation of such systems therefore lies in the scope and capability of the underlying skills. Future work that broadens the skill repertoire and improves its robustness would alleviate this constraint.
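A minimal Python sketch of the action stage of a PPA loop shows why the skill library bounds what the system can do. It assumes the MLM planner (not modeled here) emits a plan as `(skill_name, argument)` pairs; the skills `pick` and `place`, the dictionary world state, and `run_plan` are hypothetical stand-ins for real controllers. However capable the planner is, any step naming a skill outside `SKILLS`, or a skill that fails, halts execution.

```python
from collections.abc import Callable


# Hypothetical low-level skills: each mutates a toy world state and reports success.
def pick(state: dict, obj: str) -> bool:
    if state["holding"] is None and obj in state["visible"]:
        state["holding"] = obj
        return True
    return False


def place(state: dict, obj: str) -> bool:
    if state["holding"] == obj:
        state["holding"] = None
        state["placed"].append(obj)
        return True
    return False


# The skill library: the only actions the planner's output can actually trigger.
SKILLS: dict[str, Callable[[dict, str], bool]] = {"pick": pick, "place": place}


def run_plan(plan: list[tuple[str, str]], state: dict) -> tuple[int, str]:
    """Invoke skills in plan order; return (steps completed, status message)."""
    for i, (name, arg) in enumerate(plan):
        skill = SKILLS.get(name)
        if skill is None:
            return i, f"unknown skill: {name}"
        if not skill(state, arg):
            return i, f"skill failed: {name}({arg})"
    return len(plan), "ok"


def make_state() -> dict:
    """Fresh toy world state: empty hand, a visible cup, nothing placed yet."""
    return {"holding": None, "visible": {"cup"}, "placed": []}


if __name__ == "__main__":
    print(run_plan([("pick", "cup"), ("place", "cup")], make_state()))
    print(run_plan([("pick", "cup"), ("wipe", "table")], make_state()))
```

The second plan stops at `wipe` even though it may be a perfectly reasonable plan, which is the constraint described above: expanding and hardening the skill library directly expands what the planner's output can achieve.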

Although PPA is the cornerstone paradigm of current embodied intelligence, it is not the definitive framework for general AI; it does, however, mark a promising beginning. Until the mysteries of the brain are unraveled, exploring embodied intelligence from the ground up offers valuable insights for cognitive science.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the Heilongjiang Province Key Research and Development Program (Grant GA21A302).

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.