Intelligent Sensing and Identification of Spectrum Anomalies With Alpha-Stable Noise

Abstract

As the electromagnetic environment grows more complex, interference and malfunctions of authorized equipment increasingly cause anomalies in spectrum usage. Sensing and identifying such anomalies with intelligent spectrum technology is therefore important for the efficient use of the electromagnetic space. In this paper, a method for intelligent sensing and identification of spectrum anomalies under alpha-stable noise is proposed. First, we use a delayed feedback network (DFN) to suppress alpha-stable noise. Then, we use a long short-term memory (LSTM) autoencoder based on an attention mechanism to sense anomalies. Finally, we use a deep forest model to identify the abnormal spectrum. Simulation results demonstrate that the proposed method effectively suppresses alpha-stable noise and outperforms existing methods in abnormal spectrum sensing and identification.

1. Introduction

In recent years, with the rapid development and globalization of new technologies such as cloud computing [1–6], the Internet of Things (IoT) [7–12], smart cities [13–15], and the Internet of Vehicles (IoV) [16], together with the wide application of radio communication technology [17], spectrum resources have become increasingly scarce [18–22]. To improve spectrum utilization, other users may access a band while it is idle. However, unregulated use of spectrum resources causes various problems, such as abnormal spectrum. Meanwhile, radio interference may threaten regular communication services and cause anomalies in spectrum signals. Many factors can lead to spectrum anomalies. For example, when an authorized radio signal is sent from a wireless transmitter, out-of-band radiation is generated. For nearby receivers, this out-of-band interference can cause in-band blocking and errors in the received information [23]. Other sources include co-channel interference, adjacent channel interference, intermodulation interference, and blocking interference [24]. When the technical indicators of communication equipment do not meet regulatory requirements, the equipment may malfunction. These factors generate spectrum anomalies, including equipment failure and stray radiation exceeding the standard [25]. Such abnormal spectrum signals interfere with the monitoring of spectrum resources and disrupt the management of radio spectrum resources. Therefore, spectrum resources must be managed comprehensively and effectively through abnormal spectrum sensing and identification. In this way, legitimate frequency bands can be protected from illegal use by malicious users, interference from external signals can be avoided, and the needs of most users can be met.
Moreover, in complex electromagnetic environments, both man-made and natural factors produce non-Gaussian noise. In this paper, such noise is modeled as alpha-stable noise, which is impulsive and exhibits occasional sharp spikes.
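For simulation, symmetric alpha-stable (SαS) noise is commonly generated with the Chambers-Mallows-Stuck (CMS) method. The sketch below is a minimal NumPy version, assuming zero skewness and zero location; the function name `sas_noise` and its parameters are illustrative and are not taken from this paper. A smaller characteristic exponent α produces heavier tails and therefore stronger impulses, while α = 2 reduces to a Gaussian with variance 2γ².

```python
import numpy as np


def sas_noise(alpha, gamma, size, rng=None):
    """Symmetric alpha-stable samples via Chambers-Mallows-Stuck (skewness 0, location 0).

    alpha: characteristic exponent in (0, 2]; gamma: scale parameter (> 0).
    The characteristic function is exp(-(gamma * |t|) ** alpha).
    """
    if not 0.0 < alpha <= 2.0:
        raise ValueError("alpha must lie in (0, 2]")
    rng = np.random.default_rng() if rng is None else rng
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)  # uniform random angle
    w = rng.exponential(1.0, size)                 # unit-mean exponential variable
    if alpha == 1.0:
        x = np.tan(v)                              # Cauchy special case
    else:
        x = (np.sin(alpha * v) / np.cos(v) ** (1.0 / alpha)
             * (np.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))
    return gamma * x


rng = np.random.default_rng(0)
impulsive = sas_noise(1.2, 1.0, 200_000, rng)      # heavy-tailed, spiky noise
gaussian_like = sas_noise(2.0, 1.0, 200_000, rng)  # alpha = 2: Gaussian, variance 2
```

With α = 1.2, the largest samples are typically orders of magnitude above the bulk of the distribution, which is the spike behaviour described above.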

Regarding abnormal spectrum sensing, the authors in [26] proposed an unsupervised spectrum anomaly sensing approach with explainable features based on an adversarial autoencoder (AAE). It does not require manual labeling of large amounts of training data, and the power spectral density (PSD) of the signal was extracted as an explainable feature. At a false alarm probability of 1%, the model achieved an average anomaly sensing accuracy of over 80%. Reference [27] proposed a radio anomaly sensing algorithm based on an improved generative adversarial network (GAN). First, the short-time Fourier transform (STFT) was applied to obtain a spectrogram of the received signal. Then, the spectrogram was reconstructed by integrating an encoder network into the original GAN, and anomalies were sensed from the reconstruction error and the discriminator loss. Reference [28] proposed a GAN-based spectrum sensing method for various radio interference scenarios in wireless communication systems, which can sense abnormal spectrum in the presence of impulsive noise.

In the study of abnormal spectrum identification, the authors in [29] proposed an interference classification and identification algorithm based on the signal feature space and a support vector machine (SVM). It used signal model expressions and signal space theory to extract features of interference signals such as bandwidth, spatial power spectrum flatness, and spatial spectrum peak-to-average ratio, and then classified the interference signal samples with the SVM. Reference [30] proposed a classification algorithm for interference signals in global navigation satellite systems, combining an interference fingerprint spectrum with a convolutional neural network (CNN). The interference fingerprint spectrum captures the frequency and power distribution of interference signals. At a low interference power of −95 dBm, the identification accuracy for nine interference types exceeded 90%. Reference [31] proposed a wireless interference classification algorithm based on time-frequency (TF) component analysis with a CNN, in which convolution is performed only at positions of the TF image of the interfered signal where TF components or other important parts exist, thus reducing redundant computation. Compared with a traditional CNN, the method reduced computational complexity by about 75% while slightly improving identification accuracy. Reference [32] proposed a learning framework based on a long short-term memory (LSTM) denoising autoencoder that automatically extracts features from noisy radio signals and uses the learned features to classify the modulation type. Experiments on real-world radio data showed that the framework can classify received radio signals reliably and efficiently.

The main contributions of this paper are as follows:

- To suppress the disturbance of alpha-stable noise on intelligent abnormal spectrum sensing and identification, a delayed feedback network (DFN) is used;
- The characteristics of the considered abnormal spectrum types are analyzed, and their features are extracted;
- An LSTM autoencoder based on an attention mechanism is used to sense abnormal spectrum;
- A deep forest model is used to identify abnormal spectrum.

2. System Model

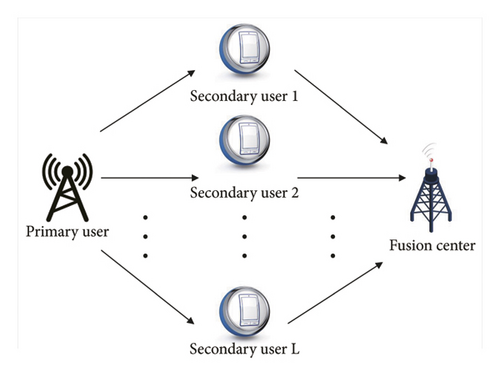

2.1. Spectrum Sensing Model

In this paper, the wireless fading channel $h_d(n)$ is modeled as a Rayleigh fading channel; that is, the real and imaginary parts of $h_d(n)$ are independent and follow the same zero-mean Gaussian distribution. Hence, $h_d(n)$ follows a zero-mean circularly symmetric complex Gaussian distribution, and its magnitude $|h_d(n)|$ is Rayleigh distributed.
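The channel model above can be simulated by drawing independent Gaussian real and imaginary parts. The following is a minimal sketch; the total channel power `sigma2` is an illustrative parameter (its value is not given in this section), and each part is given variance `sigma2 / 2` so that E|$h_d(n)$|² = `sigma2`.

```python
import numpy as np


def rayleigh_channel(size, sigma2=1.0, rng=None):
    """Zero-mean circularly symmetric complex Gaussian taps with E|h|^2 = sigma2."""
    rng = np.random.default_rng() if rng is None else rng
    std = np.sqrt(sigma2 / 2.0)  # real and imaginary parts each have variance sigma2 / 2
    return std * (rng.standard_normal(size) + 1j * rng.standard_normal(size))


h = rayleigh_channel(100_000, sigma2=2.0, rng=np.random.default_rng(1))
```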

2.2. Noise Model

3. Abnormal Spectrum Intelligent Sensing and Identification

3.1. Alpha-Stable Noise Suppression

DFN is a type of neural network that enhances the reservoir layer of the traditional reservoir computing model. Its delay loop with feedback provides short-term dynamic memory, allowing the network to reproduce transient neural responses. Owing to this property, it can suppress non-Gaussian noise; therefore, we use DFN to suppress alpha-stable noise in this paper.
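The exact DFN equations are not given in this section, so the sketch below assumes the common delay-based reservoir design: one tanh node is time-multiplexed into `n_nodes` virtual nodes through a fixed random input mask, the delay loop feeds each virtual node its neighbour's previous state (a ring approximation of the delay line), and only a linear ridge-regression readout is trained. Training that readout to map the noisy input to the clean signal is one way to use such a network for denoising. All names and values (`n_nodes`, `eta`, `beta`, `ridge`) are hypothetical, and NumPy's Cauchy generator stands in for alpha-stable noise with α = 1.

```python
import numpy as np


def dfn_states(u, n_nodes=50, eta=0.5, beta=0.8, seed=0):
    """Discrete-time sketch of a single-node delayed feedback reservoir."""
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_nodes)  # fixed random input mask
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for k, uk in enumerate(u):
        # tanh keeps every state in [-1, 1] even when an impulsive spike arrives;
        # np.roll feeds each virtual node its neighbour's state from the previous round
        x = np.tanh(eta * mask * uk + beta * np.roll(x, 1))
        states[k] = x
    return states


def fit_readout(states, target, ridge=1e-3):
    """Ridge-regression readout, the only trained part of the reservoir."""
    a = np.hstack([states, np.ones((len(states), 1))])
    return np.linalg.solve(a.T @ a + ridge * np.eye(a.shape[1]), a.T @ target)


def apply_readout(states, w):
    return np.hstack([states, np.ones((len(states), 1))]) @ w


rng = np.random.default_rng(1)
t = np.arange(6000)
clean = np.sin(2 * np.pi * 0.01 * t)
noisy = clean + 0.1 * rng.standard_cauchy(t.size)  # Cauchy = alpha-stable with alpha = 1
s = dfn_states(noisy)
w = fit_readout(s[:4000], clean[:4000])            # train on the first 4000 samples
denoised = apply_readout(s[4000:], w)              # apply to unseen samples
```

The saturating nonlinearity bounds the effect of each impulse, and the fading memory of the feedback loop lets the readout use recent context to estimate the clean sample.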

3.2. Abnormal Spectrum Intelligent Sensing

3.2.1. Analysis and Modeling of Abnormal Spectrum States

In the electromagnetic environment, after spectrum sensing detects an authorized primary user (PU) signal mixed with non-Gaussian noise, the identified PU signal must be monitored promptly to determine whether it is working normally. This process is called abnormal spectrum sensing. In this paper, we study four types of abnormal spectrum: signal interrupt transmission, frequency shift emission, power exceeding standard, and equipment failure. We first extract the characteristics of these abnormal spectrum states and then construct an LSTM autoencoder based on an attention mechanism to perform intelligent abnormal spectrum sensing.

Equipment failure refers to functional damage caused by wear or corrosion of the equipment under external forces during operation. Such damage may cause start-up problems and may be accompanied by abnormal vibration, making it difficult for the equipment to meet the specified technical requirements.

3.2.2. Intelligent Characterization of Abnormal Spectrum

In this paper, the intelligent representations of the abnormal spectrum include time occupancy, frequency shift disturbance sequence, relative wavelet time entropy, and Mahalanobis distance.
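Equations (10), (11), (17), and (19) are not reproduced here, so the sketch below uses textbook forms of two of the listed representations as an assumption: time occupancy as the fraction of frames whose mean power exceeds a threshold, and the Mahalanobis distance of a feature vector from a reference set of normal samples. The frame length, threshold, and reference data are hypothetical.

```python
import numpy as np


def time_occupancy(x, frame_len, threshold):
    """Fraction of frames whose mean power exceeds `threshold` (energy-detector style)."""
    x = np.asarray(x)
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    power = np.mean(np.abs(frames) ** 2, axis=1)
    return float(np.mean(power > threshold))


def mahalanobis(v, reference):
    """Mahalanobis distance of feature vector v from the rows of `reference` (normal samples)."""
    reference = np.asarray(reference, dtype=float)
    diff = np.asarray(v, dtype=float) - reference.mean(axis=0)
    cov = np.cov(reference, rowvar=False)  # sample covariance with ddof = 1
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))


signal = np.r_[np.ones(50), np.zeros(50)]  # transmitter active for half of the time
occ = time_occupancy(signal, frame_len=10, threshold=0.5)
ref = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]])
d = mahalanobis([2.0, 0.0], ref)           # covariance is (2/3) I, so d = sqrt(6)
```

Here `occ` is 0.5, and `d` equals √6 because the reference covariance is (2/3)·I.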

3.2.3. LSTM Autoencoder Based on the Attention Mechanism

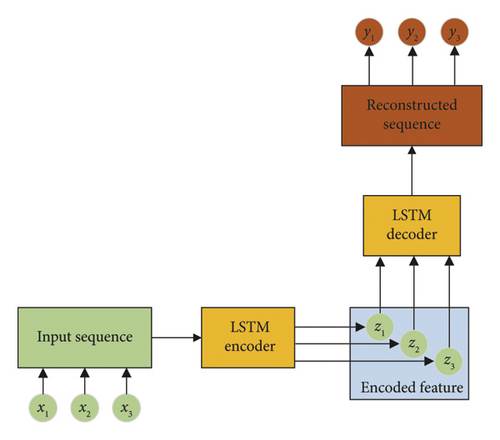

To handle time series data, an autoencoder is combined with an LSTM network to reconstruct the original input sequence. Figure 2 shows the model diagram of the LSTM autoencoder. Its main structure consists of an encoder and a decoder, both built on the LSTM network. Only normal electromagnetic samples with high confidence are used for training, so that the model learns only the deep semantic features of normal samples. During training, the autoencoder reduces and compresses the original samples, which discards irrelevant information, filters noise from the input data, and retains the main information in each sample. After training, a new sample is fed into the LSTM autoencoder network and then into the fully connected layer for feature reconstruction, and the resulting reconstruction is compared with the original input.
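A minimal PyTorch sketch of the encoder, attention, decoder, and fully connected structure described above follows. The use of `nn.MultiheadAttention` as self-attention over the encoder outputs, the hidden size, and `n_features=4` (one channel per representation in Section 3.2.2) are assumptions, since the paper's exact attention formulation is not given in this section. The Adam optimizer, learning rate 0.0001, batch size 256, and MSE loss follow the settings reported in Section 4; the random tensors are placeholders for real feature sequences.

```python
import torch
from torch import nn


class AttentionLSTMAutoencoder(nn.Module):
    """LSTM encoder -> self-attention over encoder outputs -> LSTM decoder -> FC layer."""

    def __init__(self, n_features, hidden=32, heads=1):
        super().__init__()
        self.encoder = nn.LSTM(n_features, hidden, batch_first=True)
        self.attention = nn.MultiheadAttention(hidden, heads, batch_first=True)
        self.decoder = nn.LSTM(hidden, hidden, batch_first=True)
        self.fc = nn.Linear(hidden, n_features)  # fully connected reconstruction layer

    def forward(self, v):               # v: (batch, time, n_features)
        h, _ = self.encoder(v)          # hidden sequence H
        z, _ = self.attention(h, h, h)  # attention-weighted hidden variable z_i per time step
        d, _ = self.decoder(z)
        return self.fc(d)               # reconstruction with the same shape as v


def train_step(model, optimizer, batch):
    """One Adam/MSE update on a batch of normal samples only."""
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(batch), batch)
    loss.backward()
    optimizer.step()
    return loss.item()


def reconstruction_error(model, v):
    """Per-sample mean squared reconstruction error e."""
    model.eval()
    with torch.no_grad():
        return ((model(v) - v) ** 2).mean(dim=(1, 2))


torch.manual_seed(0)
model = AttentionLSTMAutoencoder(n_features=4)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
normal_batch = torch.randn(256, 64, 4)  # placeholder for normal feature sequences V
loss = train_step(model, optimizer, normal_batch)
errors = reconstruction_error(model, torch.randn(8, 64, 4))
```

In practice, `train_step` would be repeated until convergence, and `errors` for test samples would feed the threshold decision of Algorithm 1.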

3.2.4. Construction of Sensing Statistics

Algorithm 1: The abnormal spectrum intelligent sensing using LSTM autoencoder based on attention mechanism.

Require: x(k): the received signal.

Ensure: result: the abnormal spectrum judgment result.

1. Suppress alpha-stable noise using the DFN, and use equations (10), (11), (17), and (19) to complete the feature extraction;
2. Input the feature sequence V of the normal samples into the autoencoder network to start training. Map the input sequence V to the hidden space H through the feature mapping $g_\theta: x \to z$ to learn the hidden features of the input data;
3. Use the attention mechanism to adjust for input sequences of different lengths and encode different hidden variables $z_i$, and use the decoder mapping to convert the hidden layer to the decoding layer;
4. Check whether the network has converged. If it has, save the network model M and proceed to Step 5; otherwise, return to Step 2;
5. Denote the test sample sequence input to the network model M as P1. After P1 passes through the autoencoder network, the reconstructed sequence P2 is obtained, and the reconstruction error e between P1 and P2 is calculated by equation (28);
6. Use the dynamic threshold division method to obtain the sensing threshold. If e exceeds the threshold, an abnormal spectrum is present in the currently monitored spectrum; otherwise, it is absent.
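The dynamic threshold division method is not specified in this section. As an assumption, the sketch below uses a common rolling rule: the threshold at each step is the mean plus `k` standard deviations of the previous `window` reconstruction errors, so the threshold adapts as the error level drifts. The names `window` and `k` and their values are hypothetical.

```python
import numpy as np


def sense_anomalies(errors, window=50, k=3.0):
    """Flag e[t] as abnormal when it exceeds mean + k * std of the previous `window` errors.

    Returns boolean flags and the per-step thresholds (NaN until the window is full).
    """
    errors = np.asarray(errors, dtype=float)
    flags = np.zeros(errors.shape, dtype=bool)
    thresholds = np.full(errors.shape, np.nan)
    for t in range(window, len(errors)):
        past = errors[t - window:t]
        thresholds[t] = past.mean() + k * past.std()
        flags[t] = errors[t] > thresholds[t]
    return flags, thresholds


e = 0.1 + 0.01 * np.sin(np.arange(200))  # reconstruction errors of normal spectrum
e[120] = 5.0                              # one sample the autoencoder cannot reconstruct
flags, thr = sense_anomalies(e)
```

Only index 120 is flagged: the small oscillation of normal errors stays below the adaptive threshold, while the poorly reconstructed sample exceeds it.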

3.3. Abnormal Spectrum Intelligent Identification

3.3.1. Intelligent Characterization of Multifractal Spectrum Features

After an abnormal spectrum is sensed from the collected signal samples, an identification algorithm is needed to distinguish the abnormal spectrum samples so that appropriate measures can be taken for each type according to its characteristics. This paper focuses on the four abnormal spectrum types whose features were extracted above. We extract multifractal spectrum features from them and use these features as inputs to the deep forest model. Finally, the multigranularity scanning structure and the cascade forest structure of the deep forest enable the identification of the abnormal spectrum types.
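Equation (36) is not reproduced in this section. As an assumption, the sketch below estimates a multifractal spectrum with multifractal detrended fluctuation analysis (MF-DFA), a widely used estimator, followed by the Legendre transform τ(q) = q·h(q) − 1, α = dτ/dq, f(α) = qα − τ(q). The scales, the q range, and the four summary features (α_min, α_max, width Δα, and α at the peak of f(α)) are illustrative choices, not the paper's.

```python
import numpy as np


def mfdfa_hurst(x, scales, qs, order=1):
    """Generalized Hurst exponents h(q) by multifractal detrended fluctuation analysis."""
    x = np.asarray(x, dtype=float)
    profile = np.cumsum(x - x.mean())
    log_fq = np.empty((len(qs), len(scales)))
    for j, s in enumerate(scales):
        n_seg = len(profile) // s
        segments = profile[: n_seg * s].reshape(n_seg, s)
        t = np.arange(s)
        f2 = np.empty(n_seg)
        for v, seg in enumerate(segments):
            trend = np.polyval(np.polyfit(t, seg, order), t)  # local polynomial trend
            f2[v] = np.mean((seg - trend) ** 2)                # detrended variance
        for i, q in enumerate(qs):
            if q == 0:
                log_fq[i, j] = 0.5 * np.mean(np.log(f2))
            else:
                log_fq[i, j] = np.log(np.mean(f2 ** (q / 2.0))) / q
    # h(q) is the slope of log F_q(s) against log s
    return np.array([np.polyfit(np.log(scales), row, 1)[0] for row in log_fq])


def multifractal_features(x, scales=(16, 32, 64, 128, 256), qs=np.linspace(-3, 3, 13)):
    """Singularity spectrum f(alpha) via the Legendre transform, summarized as a feature vector."""
    qs = np.asarray(qs, dtype=float)
    h = mfdfa_hurst(x, scales, qs)
    tau = qs * h - 1.0            # mass exponent tau(q)
    alpha = np.gradient(tau, qs)  # singularity strength alpha = d tau / dq
    f_alpha = qs * alpha - tau    # multifractal spectrum f(alpha)
    alpha0 = alpha[np.argmax(f_alpha)]
    return np.array([alpha.min(), alpha.max(), alpha.max() - alpha.min(), alpha0]), h


rng = np.random.default_rng(0)
features, h = multifractal_features(rng.standard_normal(4096))
```

For white noise, h(q) stays close to 0.5 and the spectrum width Δα is small; a feature sequence of an abnormal spectrum would be passed in place of the noise.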

3.3.2. Deep Forest Model

Next, we use the deep forest model to identify abnormal spectrum, as explained below. The random forest, which consists of multiple decision trees, is the primary component of the cascade deep forest. Starting from the root node, a decision tree compares the features of an input sample with its internal nodes layer by layer, from top to bottom, until the sample reaches a leaf node; each leaf node may contain samples of multiple categories. Suppose that the random forest consists of C decision trees and that the input sample set is $E = \{[(a_{i1}, a_{i2}, \ldots, a_{id}), b_i]\}$, $i = 1, \ldots, L$, where L is the number of samples, $a_{id}$ is the d-th feature of the i-th sample, d is the total number of features per sample, $b_i \in \{1, \ldots, M\}$ is the label of the i-th sample, and M is the total number of categories.

The first part of the deep forest structure is the multigranularity scanning structure. In deep neural networks (DNNs), the features of input samples are typically enhanced through convolution. The multigranularity scanning structure borrows the sliding-window operation of convolution to transform the features of the initial input samples. This expansion in breadth helps represent the relationships between different categories of samples, enabling a more accurate characterization of each sample. Suppose that the input feature dimension is 1 × n, the sliding window size is 1 × m, and the sliding step is 1. Each window position then yields a 1 × m feature vector, so scanning produces (n − m + 1) feature vectors.

The multigranularity scanning process can be expressed as follows. Assume that the input one-dimensional sample sequence is $X = (x_1, x_2, \ldots, x_n)$, the sliding window is $W = (w_1, w_2, \ldots, w_m)$ with m < n, and the sliding step is k with k < m. The scanned sequence set is then $N = (N_1, N_2, \ldots, N_Q)$ with subsequences $N_q = (x_{(q-1)k+1}, x_{(q-1)k+2}, \ldots, x_{(q-1)k+m})$, $q = 1, 2, \ldots, Q$, where $Q = \lfloor (n-m)/k \rfloor + 1$. For k = 1, as used in this paper, $N_q = (x_q, x_{q+1}, \ldots, x_{q+m-1})$, and an n-dimensional sequence is expanded into (n − m + 1) new m-dimensional sequence segments, which achieves data enhancement. The new segments are concatenated to form the input feature vector Z of the subsequent cascade forest.
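The scanning step can be sketched in a few lines of NumPy. The helper name `multigrain_scan` is hypothetical; it returns the Q = ⌊(n − m)/k⌋ + 1 window segments as rows, and flattening them gives the concatenated vector Z described above.

```python
import numpy as np


def multigrain_scan(x, m, k=1):
    """Slide a length-m window with step k over a 1-D sequence.

    Returns an array of shape (floor((n - m) / k) + 1, m); row q-1 holds
    (x[(q-1)k+1], ..., x[(q-1)k+m]) in the 1-based notation of the text.
    """
    x = np.asarray(x)
    if not 1 <= k < m < len(x):
        raise ValueError("need 1 <= k < m < n")
    count = (len(x) - m) // k + 1
    return np.stack([x[q * k: q * k + m] for q in range(count)])


segments = multigrain_scan(np.arange(6), m=2, k=1)  # window 2, step 1 as in Section 4
z = segments.reshape(-1)                             # segments concatenated into one vector Z
```

With n = 6, m = 2, and k = 1, this yields n − m + 1 = 5 segments and a 10-dimensional Z.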

After the features are enhanced by the multigranularity scanning structure, the next stage of the deep forest uses a cascade structure to learn features layer by layer. The input of each cascade level consists of two parts: the enhanced feature vector obtained from multigranularity scanning and the feature vector output by the previous level. To encourage diversity, each level contains two types of forests: random forests and completely random forests. They differ in how each node is split: a random forest randomly selects a subset of candidate features (typically √d of them) and splits on the best-performing one, whereas a completely random forest splits on a single feature chosen at random from all input features. Levels are added one at a time, and training terminates when adding more levels no longer improves identification performance significantly. Because the depth is determined adaptively, the robustness of identification depends much less on manual parameter tuning.

In the cascade forest module, denote the first level by ψ1 and its input by x. The input vector of the second level is then [x, ψ1(x)], where ψ1(x) is the output vector of the first level and [a, b] denotes the concatenation of two feature vectors. Denote the label set of the enhanced feature vector set Z by Y, and draw a certain proportion of samples from Z as the training set S = ((z1, y1), (z2, y2), …, (zn, yn)), all following the data distribution D. During training, the cascade forest learns the features of the input samples.

To describe the deep forest learning process, several of its modules are defined as follows. The random forest module θ = (θ1, θ2, …, θd) is a submodule of the entire deep forest, where θd denotes the learned mapping of the random forest module in the d-th level of the cascade forest; its process was described above. The multilevel cascade forest module U = (u1, u2, …, ud) is formed by several random forests and completely random forests, where ud denotes the d-th level of the cascade forest. The output feature vector of the cascade forest is ψ = (ψ1, ψ2, …, ψd), where ψd is the output feature vector of the d-th level, and the data distribution at each level is D = (D1, D2, …, Dd).

Algorithm 2: The abnormal spectrum intelligent identification based on the deep forest model.

Require: x(k): the received signal.

Ensure: type: the abnormal spectrum identification type.

1. Suppress alpha-stable noise using the DFN, and use equations (10), (11), (17), and (19) to calculate the feature sequences of the abnormal spectrum types. Then, use equation (36) to calculate the multifractal spectrum characteristics of these feature sequences to obtain the input feature vector;
2. Using the sliding-window scan of the multigranularity scanning structure, expand the original n-dimensional input sequence into (n − m + 1) m-dimensional sequence segments, and concatenate them to obtain the input feature vector of the cascade forest;
3. Input the feature vector into the random forests and the completely random forests of the first cascade level. In a random forest, for the i-th input sample, the k-th decision tree outputs M decision results $p_{i,k}(b_i = c)$, $c = 1, 2, \ldots, M$. Average the outputs of all trees in the forest to obtain the class vector $p_c$;
4. At each cascade level, concatenate the class vectors generated by all forests with the original inputs after multigranularity scanning to create enhanced features, which serve as the input of the next level. At the final level, average the class vectors produced by all forests, and take the category with the maximum mean value as the final identification type;
5. During training, stop adding cascade levels once the identification accuracy of the entire cascade forest no longer increases; the identification model is then complete.
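Steps 3 to 5 can be sketched with scikit-learn. This is an illustrative sketch, not the authors' implementation: each level holds two `RandomForestClassifier`s and two `ExtraTreesClassifier(max_features=1)` models, the latter approximating completely random forests; training-set class vectors are produced out-of-fold with `cross_val_predict` to limit overfitting; and a level is kept only if validation accuracy improves by more than `tol`. The class name `CascadeForest`, the validation split, and the synthetic data are hypothetical.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_predict


def make_level(n_trees, seed):
    """Two random forests plus two (approximate) completely random forests."""
    return [RandomForestClassifier(n_estimators=n_trees, random_state=seed),
            RandomForestClassifier(n_estimators=n_trees, random_state=seed + 1),
            ExtraTreesClassifier(n_estimators=n_trees, max_features=1, random_state=seed + 2),
            ExtraTreesClassifier(n_estimators=n_trees, max_features=1, random_state=seed + 3)]


class CascadeForest:
    def __init__(self, n_trees=101, max_levels=10, cv=3, tol=1e-3, seed=0):
        self.n_trees, self.max_levels, self.cv, self.tol, self.seed = n_trees, max_levels, cv, tol, seed

    def fit(self, z, y, z_val, y_val):
        self.classes_ = np.unique(y)
        self.levels, best = [], -np.inf
        feats, feats_val = z, z_val
        for depth in range(self.max_levels):
            level = make_level(self.n_trees, self.seed + 4 * depth)
            # Out-of-fold class vectors for the training data limit overfitting.
            train_vecs = [cross_val_predict(f, feats, y, cv=self.cv, method="predict_proba")
                          for f in level]
            for f in level:
                f.fit(feats, y)
            val_vecs = [f.predict_proba(feats_val) for f in level]
            acc = np.mean(self.classes_[np.argmax(np.mean(val_vecs, axis=0), axis=1)] == y_val)
            if acc <= best + self.tol:  # no significant gain: stop adding levels
                break
            self.levels.append(level)
            best = acc
            # Next level input: scanned features concatenated with this level's class vectors.
            feats = np.hstack([z] + train_vecs)
            feats_val = np.hstack([z_val] + val_vecs)
        return self

    def predict(self, z):
        feats = z
        for level in self.levels:
            vecs = [f.predict_proba(feats) for f in level]
            feats = np.hstack([z] + vecs)
        # Average the last level's class vectors and pick the class with the largest mean.
        return self.classes_[np.argmax(np.mean(vecs, axis=0), axis=1)]


rng = np.random.default_rng(0)
X = rng.normal(size=(400, 6))                             # placeholder for scanned features Z
y = (X[:, 0] > 0).astype(int) + (X[:, 1] > 0).astype(int)  # three synthetic classes
model = CascadeForest(n_trees=20, max_levels=3).fit(X[:200], y[:200], X[200:300], y[200:300])
pred = model.predict(X[300:])
```

Section 4 uses 101 trees per forest; the smaller `n_trees=20` here only keeps the example fast.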

4. Experimental Results and Analysis

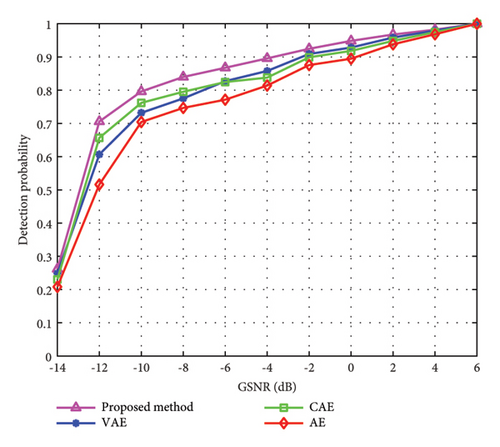

To verify the effectiveness of the proposed method, we built a medium-wave AM broadcast communication system in MATLAB/Simulink to generate broadcast signals, with a center frequency of 1000 kHz and a bandwidth of 12 kHz. In the abnormal spectrum sensing experiment, 30,000 normal spectrum signal samples are collected as the training set. Each sample contains 1024 points, which are normalized. During training, the initial learning rate is 0.0001, the batch size is 256, the Adam optimizer is used, and the mean squared error (MSE) is used as the loss function. For comparison, the variational autoencoder (VAE), convolutional autoencoder (CAE), and traditional autoencoder (AE) are used as baselines. In the abnormal spectrum identification experiment, for each abnormal spectrum type, 300 training samples and 200 test samples are collected at each generalized signal-to-noise ratio (GSNR). Each cascade level contains four forests (two random forests and two completely random forests), each forest contains 101 decision trees, and the sliding window size and step size of the multigranularity scanning are set to 2 and 1, respectively.

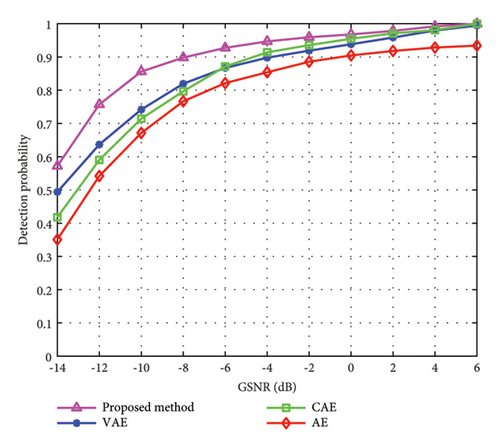

In Figure 3, with the signal-to-interference ratio (SIR) fixed at 22 dB, we compare the sensing performance of different methods for the power exceeding standard anomaly under different GSNR values. Figure 3 shows that the sensing probability of all methods increases with GSNR. At a GSNR of 2 dB, the sensing probability of the proposed method reaches 97.7%. Therefore, the proposed method outperforms the compared methods in sensing the power exceeding standard anomaly.

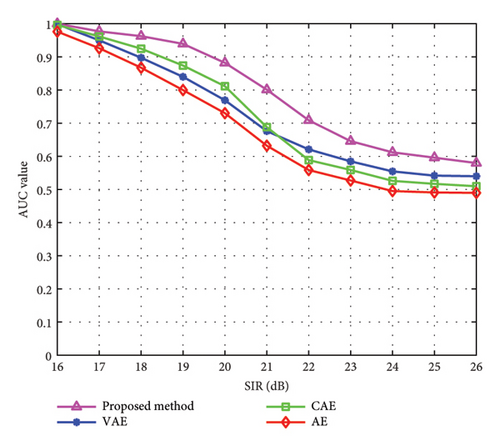

In Figure 4, we compare the sensing performance of different methods for the power exceeding standard anomaly under different SIR values. Figure 4 shows that the proposed method can achieve a sensing accuracy of 87.1%. When the SIR is between 16 dB and 20 dB, the CAE method senses anomalies more effectively than the VAE method; as the SIR increases further, however, CAE falls below VAE. Comparing the four curves, the proposed method achieves the best sensing performance over the entire SIR range considered.

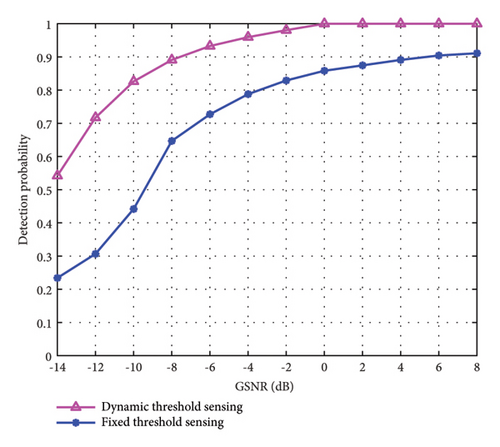

In Figure 5, we examine how the threshold partitioning method affects the sensing of the equipment failure anomaly under different GSNR values by comparing the dynamic threshold partitioning method with a fixed threshold partitioning method. Figure 5 shows that the sensing accuracy of both methods increases with GSNR. However, a fixed threshold cannot adapt to the reconstruction characteristics of different samples, so its overall sensing performance is significantly lower than that of the dynamic threshold. When the GSNR is greater than −8 dB, the sensing accuracy of the dynamic threshold partitioning method exceeds 90%. Therefore, the dynamic threshold partitioning method senses anomalies more accurately than the fixed one, which supports the effectiveness of the proposed method.

In Figure 6, we compare the sensing performance of different methods for the frequency shift emission anomaly. Figure 6 shows that the sensing accuracy of all methods improves as GSNR increases, and the proposed method achieves slightly higher accuracy than the other methods. At a GSNR of −8 dB, the sensing accuracy of the proposed method exceeds 90%. Thus, the proposed method senses this anomaly effectively and outperforms the compared methods.

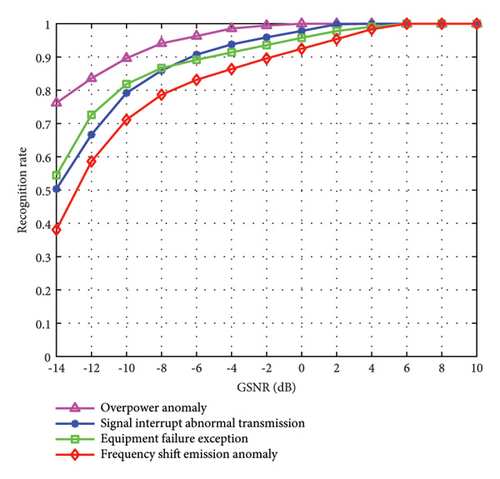

In Figure 7, we show the identification performance of the proposed method for different abnormal spectrum types under different GSNR values. Figure 7 shows that the identification accuracy of all abnormal spectrum types rises as GSNR increases. When the GSNR reaches −2 dB, the identification accuracy of every type exceeds 90%, and at 4 dB it is close to 98% for all types. Power exceeding standard is the easiest type to identify. Between signal interrupt transmission and equipment failure, the former has lower identification accuracy at low GSNR. However, as the GSNR increases, the useful signal in the channel becomes easier to sense, and the identification accuracy for this anomaly gradually improves. These results indicate that the proposed method is effective for all the abnormal spectrum types considered in this paper.

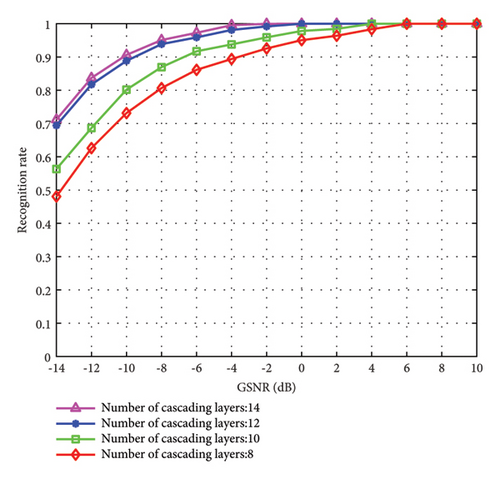

In Figure 8, we show the identification performance of the proposed method with different numbers of cascade levels under different GSNR values. Figure 8 shows that the overall identification accuracy tends to increase as the number of cascade levels grows, because a deeper cascade provides more enhanced features and therefore learns the samples better. However, with 12 and 14 levels the overall identification performance is very similar. This suggests that adding cascade levels significantly improves identification accuracy only up to a certain depth, beyond which accuracy no longer increases.

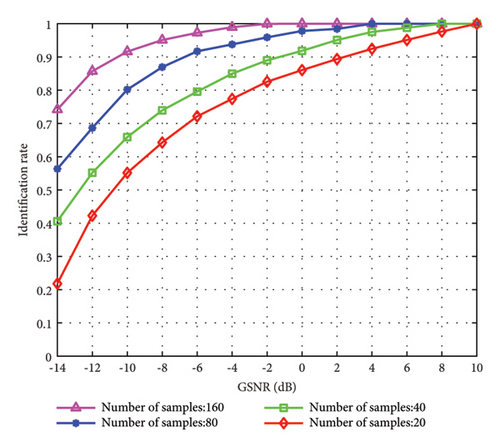

In Figure 9, to verify the impact of the number of training samples on identification performance, the number of training samples is set to 20, 40, 80, and 160. Figure 9 shows that the identification rate of the proposed method rises as both the number of training samples and the GSNR increase. With 20 training samples, the identification rate remains below 90% even at a GSNR of 2 dB, whereas with 160 samples it exceeds 95% at a GSNR of −6 dB. Therefore, the identification performance of the proposed method can be significantly improved by using more training samples.

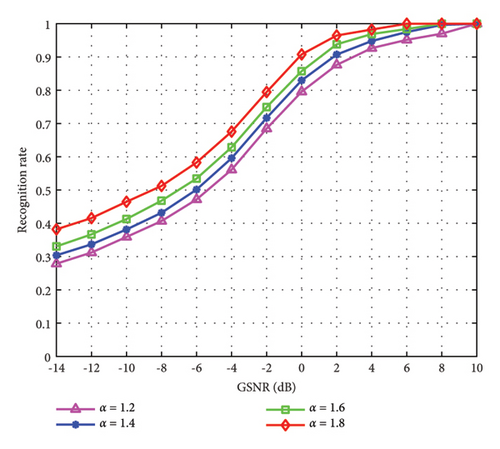

In Figure 10, we show the identification performance of the proposed method under different characteristic exponents α and GSNR values. Figure 10 shows that the identification performance improves as α increases. The results for α = 1.2 and α = 1.4 are very close, and the highest identification accuracy is obtained with α = 1.8. In addition, at α = 1.8 and a GSNR of 0 dB, the identification accuracy of the proposed method exceeds 90%. Since a larger α corresponds to less impulsive noise, these results show that the proposed method performs better as the noise becomes less impulsive.

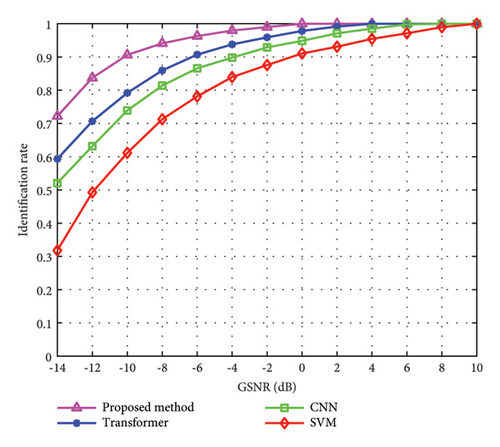

In Figure 11, we compare the abnormal spectrum identification performance of the proposed method with that of existing methods under different GSNR values. Figure 11 shows that the identification rate of the proposed method is significantly higher than that of the existing methods, and the identification rates of all methods rise as GSNR increases. At a GSNR of −10 dB, the identification rate of the proposed method reaches 90%, while those of the other methods are below 80%, indicating that the proposed method identifies abnormal spectrum well.

5. Conclusion

This paper proposes an intelligent abnormal spectrum sensing and identification method under alpha-stable noise. First, alpha-stable noise is suppressed with the DFN. Then, an LSTM autoencoder based on an attention mechanism is proposed to sense abnormal spectrum. In the sensing process, the reconstruction error between the input sample and its reconstruction is used as the sensing statistic, and the sensing threshold is determined by a dynamic threshold division method. Next, a deep forest model is proposed to identify abnormal spectrum. Multifractal spectrum features are extracted from the abnormal spectrum representation sequences, and the original input features are enhanced by the multigranularity scanning structure. The class vectors generated by all forests are averaged, and the class with the largest mean value is selected as the final identification type. The experimental results show that the proposed method outperforms existing methods in both abnormal spectrum sensing and identification: its sensing performance is more stable than that of existing methods, and its identification is strongly robust to parameter settings.

Integrated satellite-terrestrial networks offer wide coverage and good communication capability. However, such networks contain many nodes that are far apart and irregularly distributed, and large volumes of spectrum sensing data can congest transmission links and cause unpredictable errors. In addition, links in integrated satellite-terrestrial networks change rapidly, so spectrum sensing results may not be timely. These factors pose challenges to the spectrum sensing discussed in this paper. In future work, we will study the challenges that integrated satellite-terrestrial networks pose to spectrum sensing and address the shortcomings of RNN variants such as the GRU and bidirectional LSTM to better accomplish the tasks addressed in this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the National Natural Science Foundation of China under Grants U2441250, 62231027, and 62301380, in part by the Natural Science Basic Research Program of Shaanxi under Grant 2024JC-JCQN-63, in part by the China Postdoctoral Science Foundation under Grant 2022M722504, in part by the Postdoctoral Science Foundation of Shaanxi Province under Grant 2023BSHEDZZ169, in part by the Key Research and Development Program of Shaanxi under Grant 2023-YBGY-249, and in part by the Guangxi Key Research and Development Program under Grant 2022AB46002.

Open Research

Data Availability Statement

No data were used to support the findings of this study.