Recent Advances in Automatic Modulation Classification Technology: Methods, Results, and Prospects

Abstract

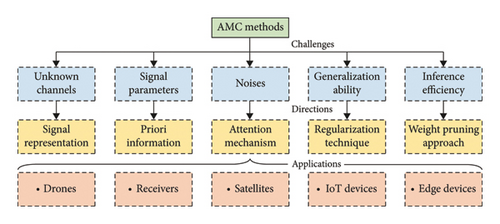

As an essential technology for spectrum sensing and dynamic spectrum access, automatic modulation classification (AMC) is a critical step in intelligent wireless communication systems, aiming to automatically recognize the modulation schemes of received signals. In practice, AMC is challenging because of the influence of the communication environment and signal parameters, such as unknown channels, noise, symbol rate, signal length, and sampling frequency. In this survey, we investigate a series of typical AMC methods, covering key technologies, performance comparisons, advantages, challenges, and key future development directions. According to the methodology and processing flow, AMC methods are divided into three categories: likelihood-based (Lb) methods, feature-based (Fb) methods, and deep learning (DL) methods. The technical details of each type of method are introduced and discussed, such as likelihood distributions, artificial features, classifiers, and network structures. Then, extensive experimental results of state-of-the-art AMC methods on public or simulated datasets are compared and analyzed. Despite the achievements that have been made, the individual methods still have limitations in generalization capability, inference efficiency, model complexity, and robustness. Finally, we summarize the severe challenges faced by AMC and key future research directions.

1. Introduction

With the development of the sixth-generation (6G) wireless communication system, more diversified and complex application scenarios are gradually being considered [1, 2], in which the drone [3] or vehicle communication system [4] can provide real-time and reliable network access for highly mobile users. Drones or vehicles can provide the hotspot coverage for mobile users, make up for the blind areas covered by ground communication base stations, and improve the overall coverage of the network. By deploying communication nodes in the air and realizing the enhancement and supplementation of the ground communication network [5–7], the wireless communication systems can effectively improve the network capacity, reduce the communication congestion, and ensure the smooth operation of the communication service under special scenarios, such as dense urban areas and natural disaster sites. In addition, the high-speed wireless communication system can be combined with ground communication system [8], satellite communication system [9], and other communication modes [10, 11] to realize a highly integrated network architecture, which is able to flexibly meet the requirements of various application scenarios and provide users with efficient and reliable communication services. For example, the combination of self-driving vehicles and intelligent traffic management systems helps to realize real-time and efficient information transmission and exchange, improving the safety, efficiency, and sustainability of road traffic. In the fields of virtual reality (VR) [12], augmented reality (AR) [13], and Internet of Things (IoTs) [14], wireless communication systems can provide flexible and efficient network access to meet the demanding requirements for communication performance in new applications.

In a wireless communication system, the modulation scheme of the transmitted signal may need to be adjusted in real time according to the channel condition, which depends on the signal transmission distance, terrain, buildings, and other factors. As one of the key technologies of wireless communication systems, the AMC technique [15] aims to monitor and recognize the signal modulation scheme in real time, so as to help the system adaptively adjust the reception parameters and improve the signal reception quality. This is crucial to ensure stable communication between users and ground control centers in complex environments [16]. On the other hand, wireless communication systems face various non-cooperative interferences in complex environments, e.g., cities and mountains. By supporting the suppression and filtering of non-cooperative interference signals, the AMC technique is of great significance for improving the anti-interference capability of wireless communication systems in strong-interference environments. By integrating AMC technology, the wireless communication system can monitor the channel state and modulation scheme, which helps to complete dynamic spectrum allocation (DSA) and resource management, further improve the spectrum utilization, and optimize the communication quality. Specifically, when the channel quality is poor, a configuration with lower-order modulation and a lower coding rate (i.e., more redundancy) can be used to reduce the bit error rate (BER). When the channel quality is better, higher-order modulation and a higher coding rate can be used to improve spectrum utilization and communication rate. Besides, the AMC technique can simplify the design of wireless communication systems by eliminating the need to design separate receivers for different modulation schemes [17], which helps to reduce system cost and design complexity and to improve the practicability and feasibility of wireless communication systems.

In practical wireless communication environments, signals can be easily affected by adverse factors such as multipath propagation [18], interference [19], and noise [20], resulting in complex signal waveforms and posing formidable challenges to AMC. In a multipath propagation environment, channel estimation becomes more complex and difficult [21]. The received signal copies may have different amplitudes, phases, and time delays due to multipath propagation, resulting in signal fading and distortion at the receiving end [22]. The time delay causes the signal to spread out in the time domain, leading to misaligned symbol boundaries between the received and transmitted signals. At low signal-to-noise ratios (SNRs), the signal waveform may be severely disturbed by noise and interference. Circuit elements in wireless communication systems (e.g., oscillators, mixers, and amplifiers) generate phase noise during operation, including thermal noise, flicker noise, and random noise. The Doppler effect [23] caused by the high-speed movement of drones or cars causes the frequency of the received signal to differ from that of the transmitted signal, thus causing a frequency offset. Antennas may be affected by environmental factors such as wind and ice loads, and changes in their orientation and shape can also affect the propagation characteristics of signals [24]. Therefore, the quality of the dataset used for signal modeling determines the actual performance of AMC algorithms. On the other hand, the design and application of increasingly complex modulation schemes, such as SQPSK, π/4-QPSK, and CPFSK, further increase the difficulty of recognition, since the differences between higher order intraclass modulation schemes are usually difficult to capture. Some modulation schemes even involve multilevel or mixed modulation [25]. In wireless communication systems, the AMC technique requires high adaptability and the ability to track and recognize changes in the modulation schemes of signals in real time, as the modulations of communication signals may change dynamically over time [26], which places requirements on the complexity and inference speed of AMC methods.

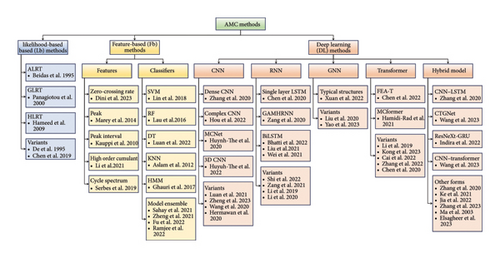

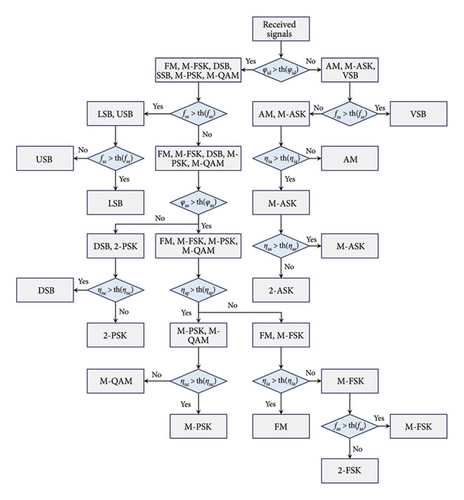

At present, AMC methods are mainly divided into the following three categories: likelihood-based (Lb) methods, feature-based (Fb) methods, and deep learning (DL) methods. The foundational work is shown in Figure 1. The Lb methods achieve the automatic classification of modulation schemes through Bayesian estimation, including the average likelihood ratio test (ALRT) [27], generalized likelihood ratio test (GLRT) [28], hybrid likelihood ratio test (HLRT) [29], and some other variants [30, 31]. Although Lb methods can adapt to various types of modulated signals, their analytical solutions are usually difficult to compute, and they require accurate estimation of prior probabilities and likelihood functions. The Fb methods first extract features from the received signals and then design a machine learning model to fit the features and complete the AMC. Common features include zero-crossing rate [32], peak [33], peak interval [34], high-order cumulants [35], cyclic spectrum [36], etc. These features are usually easy to implement and have low computational complexity, but feature extraction is highly susceptible to noise and interference. Machine learning models, such as support vector machine (SVM) [37], random forest (RF) [38], and decision tree (DT) [39], can learn effective feature representations from large amounts of signals, but they place requirements on the quality and quantity of the training dataset. DL methods utilize overparameterized deep neural networks (e.g., convolutional neural networks (CNNs) [40–44], recurrent neural networks (RNNs) [45–49], graph neural networks (GNNs) [50–52], transformers [53–55], and hybrid neural networks [56–59]) to perform end-to-end feature extraction and classification of signals without feature design and engineering. These networks exhibit strong nonlinear fitting capabilities and can achieve excellent AMC accuracy in complex wireless communication environments. However, DL models usually have a high structural complexity and thus require large computational resources and storage, which limits their practical applications.

Based on the stacking of nonlinear connections with large-scale parameters, DL models have demonstrated excellent feature learning capabilities and achieved remarkable success in many research fields, including computer vision [60], intelligent manufacturing [61], and signal analysis [62]. In recent years, DL has been gradually developed for AMC and has shown great potential. It is an important research direction in the future. Unlike traditional methods that rely on manually designed features and domain expertise, DL models are able to automatically learn feature representations of signals and thus can be more easily generalized on a variety of application scenarios without the requirements for extensive feature engineering for specific situations. DL methods utilize large amounts of data and complex network structures to achieve the strong generalization capabilities and adapt to multiple modulation schemes and channel conditions. Moreover, DL models can adjust the model structure and parameters to achieve better performance according to actual needs. Although the internal structures of DL models are more complex, they can explain the learned features through visualization techniques, such as feature maps [63], attention weights [64], and heatmaps [65]. This makes DL methods interpretable, helps analyze the working principle of the model, and further improves AMC performance. It can be said that the development of DL has brought new opportunities and breakthroughs to the AMC technique.

In this paper, we provide a comprehensive survey of recent advancements in AMC techniques from the perspective of traditional methods and deep learning methods, emphasizing the important step between signal modeling and AMC. We first introduce the mainstream AMC methods and discuss both their advantages and disadvantages. Then, we compare and analyze their AMC performance in terms of classification accuracy and inference speed. Finally, we summarize the critical challenges faced by AMC and future development trends. Although a number of reviews have been published, there is currently a lack of joint reviews of methods and results in the AMC field. In this survey, we focus on inspiring and innovative work from the following four aspects: signal representations, model structures, performance evaluation, and robustness to channel conditions.

The rest of the survey paper is organized as follows. In Section 2, we formulate the problem of AMC. Section 3 and Section 4 introduce the Lb and Fb methods, respectively. In Section 5, we describe the popular deep learning methods for AMC. The experimental results and analysis are reported in Section 6. Current challenges and future trends are discussed in Section 7. Finally, the conclusions are summarized in Section 8.

2. Problem Formulation

2.1. Communication Model

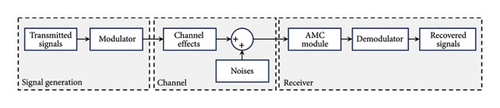

The typical wireless communication system with AMC is shown in Figure 2. First, the encoded information is modulated onto the wireless carrier signals, which are then transmitted through an antenna to other users or ground stations. During channel transmission, the signal may be affected by multipath fading [66], unknown noise [67], frequency offset [68], phase offset [69], timing error [70], and other interferences. In the receiver, the modulated signals are demodulated from the wireless carrier signals, and then the original information can be recovered to execute subsequent commands, such as real-time control of devices and video/image transmission. During the communication process, AMC can avoid manual judgment of modulation schemes and reduce labor cost, especially in large-scale telecommunication networks.
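The impairments listed above can be illustrated with a minimal NumPy sketch that passes a complex baseband signal through a simplified channel. The function name `apply_channel`, its parameters, and the tap values are hypothetical choices for illustration; timing error is not modeled, and the sketch does not correspond to any specific channel model in the cited works.

```python
import numpy as np

def apply_channel(s, snr_db, taps=(1.0,), freq_offset=0.0, phase_offset=0.0, rng=None):
    """Apply multipath, frequency/phase offset, and AWGN to a baseband signal (illustrative)."""
    rng = np.random.default_rng() if rng is None else rng
    s = np.asarray(s, dtype=complex)
    # Multipath: convolve with the tap vector and keep the original length.
    r = np.convolve(s, np.asarray(taps, dtype=complex))[:len(s)]
    # Carrier frequency offset (cycles per sample) and constant phase offset (radians).
    n = np.arange(len(s))
    r = r * np.exp(1j * (2 * np.pi * freq_offset * n + phase_offset))
    # Complex AWGN scaled to the requested SNR relative to the faded signal power.
    noise_power = np.mean(np.abs(r) ** 2) / 10 ** (snr_db / 10)
    noise = np.sqrt(noise_power / 2) * (
        rng.standard_normal(len(s)) + 1j * rng.standard_normal(len(s))
    )
    return r + noise

rng = np.random.default_rng(0)
# Unit-power QPSK symbols, one sample per symbol (an assumption of this sketch).
s = (rng.choice([-1, 1], 20000) + 1j * rng.choice([-1, 1], 20000)) / np.sqrt(2)
r = apply_channel(s, snr_db=10, taps=(1.0, 0.3), freq_offset=1e-3, phase_offset=0.2, rng=rng)
```

Varying `snr_db`, `taps`, and `freq_offset` in such a simulation is one simple way to probe how sensitive a given AMC method is to each impairment.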

The existing communication models only consider single-channel scenarios, such as Rayleigh fading channels and Rician channels, while ignoring the influence of multipath channels. However, multiple channel scenarios and interference factors may exist simultaneously in practical communication environments [73], so the assumption of a single scenario may limit the generalization capability of AMC methods. In addition, signal transmissions can be subject to various hardware limitations, such as nonlinear effects [74] and filter characteristics [75]. At present, the simplified channels used in most studies can hardly reflect the complexity of actual communication environments, which affects the performance evaluation of various methods [76, 77]. To overcome these limitations, future communication models need to comprehensively consider the various factors in practical communication environments so that AMC methods can be explored and evaluated more accurately.

Besides, the multiple-input multiple-output (MIMO) system makes it challenging to accurately model the wireless channels. The diversity of transmission signals increases the number of candidate modulation schemes and increases the difficulty of AMC. Multiple users transmitting data simultaneously can cause complex interference, resulting in the received signal containing information from multiple users. Similarly, the orthogonal frequency division multiplexing (OFDM) technique divides the frequency band into multiple subcarriers, each of which can independently modulate and transmit different data, thereby achieving higher bandwidth utilization. However, the occurrence of multichannel interference in the frequency band gradually increases the difficulty of signal reception, making automatic modulation recognition more complex. In OFDM systems, both time-domain and frequency-domain jitter may occur. Time-domain jitter can cause subcarrier insertion and deletion, while frequency-domain jitter can cause the frequency of subcarriers to deviate from the center frequency, resulting in instantaneous frequency offset and phase rotation of received signals, increasing the difficulty of AMC.

2.2. Signal Representation

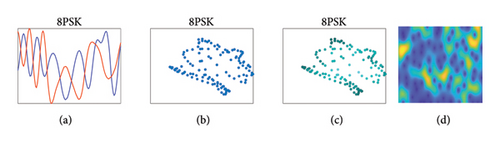

In AMC tasks, the representation of signals is directly related to the method design and AMC performance. The most popular signal representations include temporal signals, spectrum, and constellation. In most work, a single signal representation is used as input for driving the model. Even if some signal representations are attempted to be fused, they remain in a single time or frequency domain [78], resulting in fragmented time-frequency analysis and a lack of effective means to fuse information from multiple domains. Some typical signal representation examples are shown in Figure 3.

2.2.1. Temporal Representation

In fact, rI and rQ components can be regarded as real and imaginary parts of the received signal. In many works [66–68], I/Q signals are directly fed into the model in the form of matrix to learn features and drive the model to complete AMC.

To fully utilize the information hidden in the received signals, I/Q components and A/P information are combined as the signal representation for model training in some works [45, 79].

The quality of temporal signal representations determines subsequent feature learning and establishment of mapping relationship between received signals and modulation schemes. Appropriate signal representation can provide sufficient information to improve the accuracy and robustness of AMC. The fusion of multiple signal representations is an important direction to enhance model learning capability. The contemporary signal representation fusion methods include serial fusion [80] and parallel fusion [81]. The serial fusion refers to the sequential input of multiple representation vectors into the classifier, while the parallel fusion is the combination of multiple representation vectors into a new high-dimensional matrix, which is then fed into the classifier.
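As a minimal sketch of the temporal representations discussed in this subsection, the following NumPy snippet builds an I/Q matrix, an amplitude/phase (A/P) matrix, and a stacked matrix that combines both. The function names and the 2 × N and 4 × N layouts are assumptions made for illustration, not the layouts used in the cited works.

```python
import numpy as np

def iq_matrix(r):
    """Stack a complex baseband signal into a 2 x N real matrix (I row, Q row)."""
    r = np.asarray(r, dtype=complex)
    return np.stack([r.real, r.imag])

def ap_matrix(r):
    """Stack instantaneous amplitude and phase (radians) into a 2 x N matrix."""
    r = np.asarray(r, dtype=complex)
    return np.stack([np.abs(r), np.angle(r)])

def stack_iq_ap(r):
    """Combine the I/Q and A/P rows into one 4 x N matrix for a single classifier input."""
    return np.concatenate([iq_matrix(r), ap_matrix(r)], axis=0)

r = np.array([1 + 0j, 0 + 1j, -1 + 0j, 0 - 1j])  # four noise-free QPSK symbols
fused = stack_iq_ap(r)
print(fused.shape)
```

For these four symbols, the amplitude row is constant while the phase row carries all the information, which illustrates why the two representations can be complementary for a classifier.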

2.2.2. Spectrum

It is necessary to pay attention to the trade-off between the temporal and frequency resolution in specific application scenarios. On the other hand, the size of the spectrum directly determines the amount of information it contains, which indirectly affects the computational complexity and inference speed of AMC methods. In addition, a larger spectrum size may lead to model overfitting, especially when the amount of training data is limited. A smaller spectrum size helps reduce the risk of overfitting but may lead to information loss and degradation of AMC accuracy. Some studies have proposed alternative frequency-domain analysis methods, such as the wavelet transform (WT) with local analysis and multiscale analysis capabilities [83]. The WT can adapt to the frequency and time characteristics of various signals, thereby improving the robustness to varying SNRs. For certain wavelet bases, such as the Daubechies and Haar wavelets, dedicated fast methods [84, 85] can significantly reduce the computational complexity and improve analysis efficiency.
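The resolution trade-off can be made concrete with a short-time Fourier transform computed for two window lengths. The sketch below is a plain NumPy implementation written for illustration; the sampling rate, tone frequency, Hann window, and 50% overlap are assumed values, not settings taken from the surveyed works.

```python
import numpy as np

def stft_magnitude(x, win_len, hop):
    """Magnitude STFT with a Hann window; rows are frequency bins, columns are frames."""
    window = np.hanning(win_len)
    starts = range(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    return np.abs(np.fft.fft(frames, axis=1)).T

fs = 1000.0                          # assumed sampling rate in Hz
t = np.arange(1024) / fs
x = np.exp(2j * np.pi * 100.0 * t)   # complex tone at 100 Hz

spec_short = stft_magnitude(x, win_len=32, hop=16)    # fine in time, coarse in frequency
spec_long = stft_magnitude(x, win_len=256, hop=128)   # coarse in time, fine in frequency

for name, spec, win_len in (("short", spec_short, 32), ("long", spec_long, 256)):
    print(name, spec.shape,
          "frequency resolution %.2f Hz" % (fs / win_len),
          "window duration %.0f ms" % (1e3 * win_len / fs))
```

The short window yields many frames with few frequency bins, whereas the long window yields few frames with many bins, so the window length also fixes the size of the spectrum image passed to the classifier.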

Compared to temporal signals, spectrums are more amenable to data augmentation that helps improve the generalization of AMC models, such as spectrum interference [86] and sample generation based on generative adversarial networks (GANs) [87]. A certain level of interference to frequency-domain information can help expand the sample space and improve decision boundaries, but the interference degree needs to be set empirically based on the complexity of the task. GANs mine sample features to generate new samples and help models learn robust modulation knowledge, but their stability and reliability are still being explored. Although GANs have been successfully applied to natural and facial images [88], there is a lack of theoretical basis for whether the modulation properties of spectral images can be truly learned.

2.2.3. Constellation

In the above equations, the signal r is J times decimated with offset equal to all the integer symbol timings, and rj(k) denotes the kth symbol of the decimated signal of offset j.

Actually, some other constellation variants have also been studied to improve AMC performance, including the Cauchy-score constellation [90], constellation density [91], and slotted constellation [92]. However, most of the current studies on constellation representations focus on how to cover the modulation information comprehensively, neglecting the design and training process of corresponding classifiers. On the other hand, classifiers like AlexNet [93] and ResNet [81] transferred from image processing tasks are challenging to adapt to the constellation diagrams, as the distribution or structure of symbols rather than pixel values is more noteworthy. In addition, constellation diagrams usually contain a large number of blank areas due to their sparsity, which has uncertain adverse effects on classifiers. How to reduce the dimensions of constellation diagrams and refine modulation knowledge is also an important future research direction.
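The sparsity of constellation diagrams can be illustrated by rasterizing received symbols into a density image and counting the empty cells. The following NumPy sketch uses a hypothetical `constellation_image` helper; the grid size, plotting extent, and noise level are assumed values chosen only for illustration.

```python
import numpy as np

def constellation_image(symbols, bins=32, extent=1.5):
    """Count symbols per cell of a bins x bins grid covering [-extent, extent]^2."""
    edges = np.linspace(-extent, extent, bins + 1)
    image, _, _ = np.histogram2d(symbols.real, symbols.imag, bins=[edges, edges])
    return image

rng = np.random.default_rng(0)
# Unit-power QPSK symbols with mild complex Gaussian noise (illustrative values).
qpsk = (rng.choice([-1, 1], 2000) + 1j * rng.choice([-1, 1], 2000)) / np.sqrt(2)
noise = 0.05 * (rng.standard_normal(2000) + 1j * rng.standard_normal(2000))
image = constellation_image(qpsk + noise)
empty_fraction = np.mean(image == 0)
print("fraction of empty cells: %.2f" % empty_fraction)
```

In this example most cells of the image are empty, which is the kind of blank area that image classifiers transferred from natural-image tasks are not designed for.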

2.3. Problem Description

The likelihood function is represented by the probability density function (PDF) of the communication model and observation data, representing the probability of received data under a given modulation scheme. Different communication models require the use of corresponding likelihood functions. The design of likelihood functions directly determines the AMC accuracy and the complexity of the system. To reduce the computational complexity of Lb methods in practical AMC applications, statistical features (e.g., mean, variance, and cumulants) have been proposed as substitutes for likelihood functions [31].

In the Fb and DL-based AMC methods, designing appropriate objective functions to measure model performance is a key issue. The optimization of the objective function is prone to falling into local minima, especially when the number of training samples is insufficient. The optimal trade-off between overfitting and underfitting also needs to be considered to support the generalization of the classifier across different application scenarios and communication environments. Although a large number of stochastic gradient descent (SGD) optimization algorithms [94, 95] and their variants [78, 96] have been developed, the theoretical basis for ensuring a lower bound on the modulation information is inadequate.

3. Lb Methods

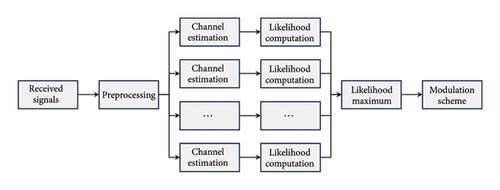

In Lb methods, the likelihood function is computed and updated for the selected communication model to meet the complexity requirements or to be usable in the noncollaborative environment. Afterward, a threshold is used to determine the fit between the likelihood ratio test results of signals and the modulation candidates. The overall process of Lb methods is shown in Figure 4. In this section, we focus on introducing and analyzing typical Lb methods, including ALRT [27], GLRT [28], HLRT [29], and some variants [30, 31].
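To make the likelihood-based decision concrete, the sketch below classifies a block of symbols by evaluating, for each candidate modulation, the log-likelihood averaged over equiprobable constellation points and selecting the largest one. It assumes an idealized setting (AWGN only, known noise variance, unit-power constellations, perfect synchronization, one sample per symbol), and the candidate set and function names are hypothetical; it is not the formulation of any specific method in [27–31].

```python
import numpy as np

CONSTELLATIONS = {
    "BPSK": np.array([-1, 1], dtype=complex),
    "QPSK": np.array([1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]) / np.sqrt(2),
    "8PSK": np.exp(2j * np.pi * np.arange(8) / 8),
}

def log_likelihood(r, points, noise_var):
    """Log-likelihood of samples r, averaging over equiprobable constellation points."""
    expo = -np.abs(r[:, None] - points[None, :]) ** 2 / noise_var
    # Log of the mean of the Gaussian kernels, computed stably (log-sum-exp trick).
    m = expo.max(axis=1, keepdims=True)
    per_sample = m[:, 0] + np.log(np.mean(np.exp(expo - m), axis=1))
    return np.sum(per_sample - np.log(np.pi * noise_var))

def classify(r, noise_var):
    """Return the candidate with the largest log-likelihood and all scores."""
    scores = {name: log_likelihood(r, pts, noise_var) for name, pts in CONSTELLATIONS.items()}
    return max(scores, key=scores.get), scores

rng = np.random.default_rng(0)
noise_var = 0.1  # 10 dB SNR for unit-power symbols
tx = rng.choice(CONSTELLATIONS["QPSK"], 500)
rx = tx + np.sqrt(noise_var / 2) * (rng.standard_normal(500) + 1j * rng.standard_normal(500))
decision, scores = classify(rx, noise_var)
print(decision)
```

Replacing the average over constellation points with a maximum gives a decision rule in the spirit of GLRT, and comparing pairwise differences of these scores with a threshold corresponds to the likelihood ratio test described above.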

3.1. ALRT

3.2. GLRT

It can be seen that the GLRT-based classifier can handle high SNR, but is sensitive to low SNR. Once there are too many candidate modulation schemes, especially high-order modulation schemes like 64QAM, the AMC accuracy loss of GLRT is obvious. Moreover, its real-time performance is still difficult to meet the requirements in large-scale MIMO systems.

3.3. HLRT

Compared to ALRT and GLRT, HLRT is adept at distinguishing different types of modulation (e.g., AM and FM) and is robust to noise. However, HLRT requires the selection of appropriate statistics and mixing strategies, which is challenging in practical applications. The AMC accuracy of the hybrid likelihood ratio degrades if the strong correlation is exhibited between the statistics. For complex signals, HLRT faces the same problem as ALRT and GLRT, i.e., it requires significant computational costs and long processing time.

3.4. Variants and Analysis

For specific cases, some variants of the likelihood function are used to improve AMC performance, such as randomized likelihood ratio test (RLRT) [30] by randomizing the training samples and features to generate statistics, and decision-directed likelihood ratio test (DLRT) [31] incorporating search and LRT.

The Lb AMC methods rely on the estimation of modulation parameters and usually require manual setting of multiple hyperparameters, such as search range and iteration number, which exhibit a significant impact on AMC accuracy. In practical applications, selecting appropriate hyperparameters according to communication scenarios is a challenging problem. RLRT has strong robustness by simulating noise distribution, providing an important research direction. By modeling the noise distribution and introducing prior knowledge to guide the design of likelihood functions, the generalization capability of the algorithm can be improved. Therefore, how to further utilize unstructured expert knowledge is crucial. Search guidance in DLRT can reduce the space for candidate modulation schemes and improve the accuracy in classifying M-PSK. In fact, the most popular aspect in the Lb methods is to design appropriate likelihood functions to capture complex features and potential relationships hidden in the signals, such as nonlinear likelihood functions that are more suitable for nonlinear modulation recognition.

4. Fb Methods

In the Fb methods, the signals are first preprocessed through denoising, filtering, and downsampling, and then, the corresponding features are extracted. The key features are selected and used to train machine learning models, i.e., to complete feature learning and modulation classification by minimizing the objective function. The processing flow is shown in Figure 5.

4.1. Feature Extraction

4.1.1. Spectrum-Based Features

- (1) The maximum spectral power density ηsp of the normalized and centered instantaneous amplitude of the received signal, where DFT (·) represents a discrete Fourier transform. The variable rAK is the normalized and centered instantaneous amplitude of the received signal r, and μA is the mean of the instantaneous amplitude of the signal. The amplitude normalization is introduced to compensate for the attenuation caused by unknown channel effects.

- (2) The standard deviation ηnc of the normalized and centered instantaneous amplitude, where Kc represents the number of symbols that satisfy the constraint of rK(k) > rt, and rt is a threshold that filters out the symbols with low amplitudes.

- (3) The standard deviation ηas of the absolute value of the segmented instantaneous amplitude of the normalized and centered signal.

- (4) The kurtosis ηia of the normalized and centered instantaneous amplitude.

- (5) The standard deviation fas of the absolute value of the normalized and centered instantaneous frequency, where fAK is the normalized and centered instantaneous frequency.

- (6) The symmetry measurement fss of the spectrum around the carrier frequency, where fak + 1 denotes the number of symbols corresponding to the carrier frequency fc, and fs is the sampling rate.

- (7) The kurtosis fia of the normalized and centered instantaneous frequency.

- (8) The standard deviation φid of the instantaneous direct phase of the nonlinear component.

- (9) The standard deviation φas of the absolute value of the instantaneous phase of the nonlinear component.
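As a hedged illustration of how such features are computed, the sketch below evaluates three of the amplitude features (maximum spectral power density, standard deviation, and kurtosis of the normalized and centered instantaneous amplitude). It assumes the definitions commonly used in the AMC literature, namely amplitude normalized by its mean and then reduced by one, the squared DFT magnitude divided by the number of samples, and kurtosis as the fourth moment over the squared second moment; these forms, the function name, and the threshold argument are assumptions and may differ in detail from the equations of the original paper.

```python
import numpy as np

def amplitude_features(r, threshold=0.0):
    """Three amplitude features of a complex baseband signal r (hypothetical helper)."""
    amp = np.abs(np.asarray(r, dtype=complex))
    norm = amp / amp.mean()        # amplitude normalized by its mean
    centered = norm - 1.0          # normalized and centered amplitude
    # Maximum spectral power density of the normalized-centered amplitude.
    eta_sp = np.max(np.abs(np.fft.fft(centered)) ** 2) / len(centered)
    # Standard deviation over the samples whose normalized amplitude exceeds the threshold.
    strong = centered[norm > threshold]
    eta_nc = np.sqrt(np.mean(strong ** 2) - np.mean(strong) ** 2)
    # Kurtosis of the normalized-centered amplitude.
    eta_ia = np.mean(centered ** 4) / np.mean(centered ** 2) ** 2
    return eta_sp, eta_nc, eta_ia

n = np.arange(64)
# AM-like test signal: sinusoidal envelope on a complex carrier (illustrative values).
am = (1 + 0.5 * np.cos(2 * np.pi * 8 * n / 64)) * np.exp(2j * np.pi * 0.1 * n)
eta_sp, eta_nc, eta_ia = amplitude_features(am)
print(eta_sp, eta_nc, eta_ia)
```

For a constant-envelope signal such as noise-free PSK, the centered amplitude is identically zero, so the first two features vanish and the kurtosis is undefined; this is one reason amplitude features mainly help separate amplitude-modulated from constant-envelope schemes.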

The above features are applicable to single-carrier modulation methods, such as BPSK, QPSK, 8PSK, AM, and FM. These modulation schemes mainly use phase information for information transmission, so the amplitude and frequency characteristics are relatively fixed. In OFDM, different subcarrier frequencies and phases are usually used for modulation. Therefore, the characteristics of amplitude, frequency, and phase are all of great significance. It should be noted that different communication scenarios and modulation methods may differ in these respects. For specific communication scenarios and modulation methods, more or other types of features may be involved, or more complex feature engineering methods may be required. Therefore, it is very important to select and extract features targeted at specific tasks and scenarios.

The manually designed spectral features have good interpretability: they clearly reflect the key characteristics of the signal and help researchers understand the relationship between features and modulation schemes, and thus improve the model performance. Artificial features can be easily and flexibly implemented and deployed in toolkits without the need for complex optimization. On the other hand, artificial features are difficult to adapt to changes across different modulated signals, resulting in poor generalization performance. The process of manual design and feature engineering is easily influenced by subjective factors. It is worth noting that artificial features cannot automatically learn implicit relationships in the data, which limits the performance improvement of the model. Besides, as the signal dimension increases, artificial features may suffer from the curse of dimensionality.

4.1.2. Cumulants

The cumulant [35] is a statistic that describes the characteristics of a data distribution and can be used to measure its skewness and kurtosis. By studying the high-order statistical characteristics of data, cumulants can be used to classify different categories of data. First, cumulant analysis is performed on the original data to obtain a series of features that capture the characteristics of the data distribution. Then, the original data are projected onto a low-dimensional space by selecting important high-order cumulants, thereby reducing computational complexity and storage requirements.

The high-order cumulants still have some limitations in practical applications, e.g., the computational complexity of cumulant features is high, especially when dealing with high-dimensional data. In addition, the cumulants are sensitive to noise and instability, so some antinoise and enhancement techniques need to be adopted. The choice of appropriate orders of cumulants is also a concern. Correlation algorithms based on information entropy [97], mutual information [98], or other specific metrics can be introduced to pick the most discriminative high-order cumulant features.
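A minimal sketch of cumulant features is given below. It estimates the fourth-order cumulants C40 and C42 from sample moments using the standard moment-to-cumulant relations for zero-mean complex signals, and normalizes them by the squared signal power. The function names and the choice of these two cumulants are illustrative assumptions; other orders are used in practice as well.

```python
import numpy as np

def moment(x, p, q):
    """Mixed moment M_pq = E[x^(p-q) * conj(x)^q], estimated by a sample mean."""
    return np.mean(x ** (p - q) * np.conj(x) ** q)

def cumulants(x):
    """Normalized fourth-order cumulants C40 and C42 of a complex signal."""
    x = np.asarray(x, dtype=complex)
    x = x - x.mean()                  # the relations below assume a zero-mean signal
    m20, m21 = moment(x, 2, 0), moment(x, 2, 1)
    m40, m42 = moment(x, 4, 0), moment(x, 4, 2)
    c40 = m40 - 3 * m20 ** 2
    c42 = m42 - np.abs(m20) ** 2 - 2 * m21 ** 2
    # Normalize by the squared power so the features do not depend on signal scale.
    return {"C40": c40 / m21 ** 2, "C42": (c42 / m21 ** 2).real}

bpsk = np.tile([1, -1], 50)            # noise-free BPSK symbols
qpsk = np.tile([1, 1j, -1, -1j], 25)   # noise-free QPSK symbols
print(cumulants(bpsk))
print(cumulants(qpsk))
```

The two modulations yield clearly different values of C40 and C42 in the noise-free case, which is what makes such cumulants usable as discriminative features; with noise and finite samples, the estimates fluctuate around these values.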

4.1.3. Cyclostationarity

The cyclostationarity features describe the modulation characteristics of received signals by analyzing their statistical characteristics on a periodic basis, which can effectively capture the periodicity, spectral characteristics, and phase information. At present, the commonly used cyclostationarity features include the periodogram [36], cyclic autocorrelation function (CAF) [99], cyclic cross-correlation function (CCCF) [100], cyclic spectral correlation coefficient (CSCF) [101], and cyclic spectral correlation graph (CSCG) [102]. The periodogram describes the frequency distribution of signals for AMC by calculating their power spectral density (PSD) in the frequency domain. The CAF and CCCF calculate the autocorrelation and cross-correlation of signals under different time lags to capture the phase information. The CSCF further calculates the correlation between CAF and CCCF to characterize the modulation relationship for AMC. The CSCG is an extension of CSCF in the frequency domain, describing the periodic similarity of signals in frequency.

In terms of computational complexity, fast time-frequency transformation methods such as the fast Fourier transform (FFT) can effectively improve the computational efficiency. WT and other types of methods can be used to reduce the sensitivity of cyclostationarity features to noise. In addition, cyclostationarity features typically have high dimensions, leading to the curse of dimensionality and overfitting. Traditional dimensionality reduction methods such as principal component analysis (PCA) [103] and linear discriminant analysis (LDA) [104] can reduce feature dimensions, but they can degrade the AMC accuracy. Overall, it is necessary to select appropriate cyclostationarity features according to specific communication scenarios and even combine them with other features such as high-order cumulants to improve AMC accuracy.
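As an illustration of a cyclostationarity feature, the sketch below estimates the cyclic autocorrelation function at a given cyclic frequency and non-negative lag by a direct time average. The estimator form, the function name, and the test signal are assumptions chosen for clarity; practical implementations typically evaluate many cyclic frequencies at once with the FFT.

```python
import numpy as np

def cyclic_autocorrelation(x, alpha, lag=0):
    """Estimate mean(x[n+lag] * conj(x[n]) * exp(-j*2*pi*alpha*n)) for lag >= 0."""
    x = np.asarray(x, dtype=complex)
    n = np.arange(len(x) - lag)
    return np.mean(x[lag:] * np.conj(x[:len(x) - lag]) * np.exp(-2j * np.pi * alpha * n))

# A real tone at normalized frequency 0.1 has a cyclic feature at alpha = 0.2,
# because its square contains a component at twice the tone frequency.
n = np.arange(1000)
x = np.cos(2 * np.pi * 0.1 * n)
at_cycle = abs(cyclic_autocorrelation(x, alpha=0.2))
off_cycle = abs(cyclic_autocorrelation(x, alpha=0.13))
print(at_cycle, off_cycle)
```

The estimate is large only at the cyclic frequency tied to the signal's periodicity, which is the property that cyclostationarity-based AMC exploits, for example through cyclic frequencies related to the symbol rate and carrier frequency.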

4.1.4. Feature Fusion

Considering the limitations of a single feature, the feature fusion strategy can integrate feature information from different sources, levels, and representations to form a more comprehensive and discriminative feature representation. Various typical feature fusion strategies have been widely studied, including serial fusion [105], parallel fusion [106], cascade fusion [107], decision fusion [108], weighted fusion [109], and deep learning–based nonlinear fusion [110], but few have been applied to AMC.

Serial fusion connects different feature vectors one by one to construct a higher dimensional feature, which is easy to implement but increases computational complexity. This type of direct fusion rarely brings significant performance improvement and may even have adverse effects when there are too many feature modes. It is also difficult for the model to relate context across the entire concatenated vector, which reduces the efficiency of serial fusion, especially when features are complex.

Parallel fusion stacks various types of feature vectors in a multichannel manner to form a feature matrix. Different features achieve knowledge aggregation and joint reasoning through information exchange between channels. Compared to serial fusion, parallel fusion has higher efficiency in feature fusion. For example, Wu et al. [106] convert the modulated signal into two image representations, the cyclic spectrum and the constellation diagram, and fuse them into a dual-channel image input.
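The difference between the two layouts can be sketched in a few lines. The helper names `serial_fusion` and `parallel_fusion` and the feature values are hypothetical and chosen only for illustration.

```python
def serial_fusion(features):
    """Concatenate feature vectors end to end into one long vector."""
    fused = []
    for vec in features:
        fused.extend(vec)
    return fused

def parallel_fusion(features):
    """Stack equal-length feature vectors as channels (rows of a matrix)."""
    length = len(features[0])
    if any(len(vec) != length for vec in features):
        raise ValueError("parallel fusion needs equal-length feature vectors")
    return [list(vec) for vec in features]

cumulant_feats = [0.68, 0.68, 2.08]   # hypothetical values
spectral_feats = [0.12, 0.40, 0.05]   # hypothetical values

serial = serial_fusion([cumulant_feats, spectral_feats])      # one vector of length 6
parallel = parallel_fusion([cumulant_feats, spectral_feats])  # 2 channels x 3 values
```

Serial fusion accepts vectors of any length but produces a single long input, whereas the channel layout assumes the features share a common shape, as the two image representations in [106] do.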

Decision fusion inputs various feature vectors into independent classifiers and then fuses the individual decisions through voting, averaging, or other ensemble methods. It can fully utilize the advantages of multiple feature extractors and classifiers, avoid the failure of a single feature or classifier, and improve the robustness of the entire AMC system. However, decision fusion has the highest computational cost among these strategies, as it requires a dedicated classifier to be designed for each feature.
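The voting and averaging rules mentioned above can be sketched as follows. The classifier outputs are made-up numbers, and the function names are assumptions introduced for this example.

```python
from collections import Counter

def majority_vote(labels):
    """Hard decision fusion: the label predicted by most classifiers wins."""
    return Counter(labels).most_common(1)[0][0]

def average_probabilities(prob_vectors, classes):
    """Soft decision fusion: average class probabilities, then take the argmax."""
    n = len(prob_vectors)
    mean = [sum(p[k] for p in prob_vectors) / n for k in range(len(classes))]
    best = max(range(len(classes)), key=lambda k: mean[k])
    return classes[best], mean

classes = ["BPSK", "QPSK", "16QAM"]

# Three hypothetical classifiers, each driven by a different feature.
hard = majority_vote(["QPSK", "BPSK", "QPSK"])
soft, mean = average_probabilities(
    [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.1, 0.8, 0.1]], classes
)
```

Both rules return QPSK here even though the first classifier disagrees, which illustrates how the ensemble tolerates the failure of a single feature or classifier.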

In practical communication systems, signal transmission can be affected by many interference factors such as channel noise and multipath propagation, resulting in a decrease in signal quality. Therefore, the weighted fusion mechanism can be introduced to assign different weights to different features in the process of feature fusion. It can adaptively adjust feature weights based on the importance of features, reducing the sensitivity of a single feature to signal quality fluctuations. However, both feature weights and classifier parameters need to be optimized simultaneously, which may make the optimization process more complex.
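A minimal sketch of weighted fusion is given below, assuming each feature vector comes with a scalar reliability score that is turned into a weight by a softmax. The scores are fixed by hand here. In a trained system they would be parameters optimized jointly with the classifier, which is the source of the extra optimization complexity noted above.

```python
import math

def softmax(scores):
    """Turn arbitrary reliability scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_fusion(features, scores):
    """Scale each feature vector by its weight, then concatenate the results."""
    weights = softmax(scores)
    fused = []
    for w, vec in zip(weights, features):
        fused.extend(w * v for v in vec)
    return fused, weights

features = [[1.0, 2.0], [4.0, 4.0]]                      # two hypothetical feature vectors
fused, weights = weighted_fusion(features, scores=[0.0, 0.0])
```

With equal scores both features receive a weight of 0.5. Raising one score shifts weight toward the feature that is assumed to be more reliable under the current signal quality.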

Researchers have started to study end-to-end deep learning models that automatically learn the nonlinear relationship between different features and realize the automatic weighted fusion and analysis of features. Owing to the flexibility of the deep learning model structure, feature fusion is highly scalable and can be adapted to application scenarios with different levels of complexity. Deep learning–based feature fusion alleviates the relative fragmentation of time-frequency-domain analysis in traditional methods and has achieved significant performance improvement in many modulation classification tasks.

4.2. Machine Learning Models

4.2.1. DT

The DT [39], composed of a series of decision nodes, is a well-suited classifier for processing spectral features, as shown in Figure 6. In the tree structure, the input node is used to import all types of features. After the input node, a series of conditions or threshold-based judgments on specific individual features are used to identify the modulation scheme of the signal.
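The threshold-based judgments can be illustrated with a small hand-written tree over two normalized fourth-order cumulant magnitudes. This is a sketch under stated assumptions: the choice of features, the thresholds, and the function names are illustrative and are not the tree used in [39]. The inputs are ideal noise-free constellations, whereas a practical system would estimate the cumulants from noisy samples and tune the thresholds accordingly.

```python
import cmath
import math

def cumulant_features(symbols):
    """Return (|C40|, |C42|) of a zero-mean complex sequence after unit-power scaling."""
    n = len(symbols)
    power = sum(abs(s) ** 2 for s in symbols) / n
    x = [s / math.sqrt(power) for s in symbols]
    m20 = sum(v * v for v in x) / n
    m40 = sum(v ** 4 for v in x) / n
    m42 = sum(abs(v) ** 4 for v in x) / n
    c40 = m40 - 3 * m20 ** 2
    c42 = m42 - abs(m20) ** 2 - 2.0  # unit power, so 2 * (E|x|^2)^2 = 2
    return abs(c40), abs(c42)

def tree_classify(symbols):
    """Hand-written decision tree; thresholds are illustrative assumptions."""
    c40, c42 = cumulant_features(symbols)
    if c42 > 1.5:                           # node 1: ideal BPSK has |C42| = 2
        return "BPSK"
    if c42 < 0.84:                          # node 2: ideal 16QAM has |C42| = 0.68
        return "16QAM"
    return "QPSK" if c40 > 0.5 else "8PSK"  # node 3: |C40| is 1 for QPSK, 0 for 8PSK

# Ideal constellations used as toy inputs.
bpsk = [1, -1]
qpsk = [cmath.exp(1j * (math.pi / 4 + k * math.pi / 2)) for k in range(4)]
psk8 = [cmath.exp(2j * math.pi * k / 8) for k in range(8)]
qam16 = [complex(i, q) for i in (-3, -1, 1, 3) for q in (-3, -1, 1, 3)]
```

Each branch tests a single feature against a threshold, so the path from the root to a leaf can be read directly as the reason for a decision.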

The structure of the DT is easy to understand and implement, and therefore, it can clearly display the signal features represented by each branch and leaf node. The high interpretability is helpful to analyze the working principle of the model and classification results. The DT-based methods can automatically select important features during the individual judgment process, which is beneficial for reducing the workload of feature designing and engineering. In addition, DTs possess strong robustness to outliers and noise, but are sensitive to signal parameters and typically require dynamic threshold adjustments.

In practical applications, the construction of DTs involves recursively partitioning features, resulting in high computational complexity, especially when dealing with large datasets, where model efficiency is difficult to meet requirements. The output of DTs may be affected by the order of data input, leading to model instability. In fact, improving the splitting criteria and pruning strategy of DT-based methods is an effective way to improve the generalization ability and computational efficiency of the model [111]. Besides, the most important thing is the efficient feature extraction, such as combining the powerful feature extraction ability of deep learning models and the interpretability of DT [112].

4.2.2. RF

On the basis of DTs, RFs [38] have been developed for AMC. By integrating multiple trees, RF-based methods mitigate the overfitting problem. Given a preset number and maximum depth of trees, RFs train each tree using a different feature subset to reduce the prediction variance. In practical applications, the training and testing of RFs support parallelization, which can fully utilize modern hardware resources to accelerate the computational process.

Although the AMC accuracy of RFs is usually better than a single DT, their visualization and interpretability are poor. RFs may be affected by noise and redundant information during feature selection, leading to the prediction bias. Due to the voting mechanism of RFs, errors in individual DTs sometimes have a significantly detrimental impact on the final classification results. Therefore, the adaptability of RFs in feature selection is a research focus [113]. Moreover, how to make RFs more robust in dealing with changes in data distribution is also a key research direction [114].

4.2.3. SVM

By mapping the original data to a higher dimensional feature space through a mapping function, SVM [37] makes the nonlinear classification problem in the original space linearly separable in the feature space. Using optimization algorithms such as the Lagrange multiplier method and sequential minimal optimization (SMO), SVM searches for a hyperplane in the feature space that separates samples of different categories while maximizing the margin, i.e., the distance from the hyperplane to the nearest samples. Finally, a new test sample is mapped to the feature space, and the sign of its signed distance from the hyperplane determines its category.
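The final classification step can be sketched with the standard kernel form of the decision function, in which the mapping to the feature space is applied implicitly through a kernel. The support vectors, multipliers, bias, and the RBF kernel width below are hypothetical values standing in for the result of training.

```python
import math

def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel; implicitly maps inputs to a higher dimensional space."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)

def decision_value(x, support_vectors, labels, alphas, bias):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b; its sign gives the class."""
    return sum(
        a * y * rbf_kernel(sv, x)
        for sv, y, a in zip(support_vectors, labels, alphas)
    ) + bias

def predict(x, support_vectors, labels, alphas, bias):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(x, support_vectors, labels, alphas, bias) >= 0 else -1

# Hypothetical trained model with two support vectors.
svs, ys, alphas, b = [[1.0, 1.0], [-1.0, -1.0]], [1, -1], [1.0, 1.0], 0.0

near_positive = predict([0.8, 0.9], svs, ys, alphas, b)
near_negative = predict([-1.0, -0.5], svs, ys, alphas, b)
```

Only the support vectors enter the sum, so the inference cost scales with their number rather than with the size of the training set.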

In the practical application of SVM for the AMC task, its classification performance highly depends on the selection of kernel functions, so identifying different modulation methods usually requires extensive experiments. When the sample distribution between categories is uneven, SVM may lean toward the majority class, resulting in a decrease in classification performance for the minority class. In addition, SVM is a binary classifier, requiring specialized methods (such as the “one vs all”) to handle the multiclassification problem, which increases both the computational complexity and difficulty in parameter adjustment. Once there are too many categories of candidate modulation schemes, the inference efficiency of the model can be significantly affected.

4.2.4. Model Ensemble

Many types of traditional machine learning classifiers have been developed, such as k-nearest neighbor (KNN) [115] and hidden Markov model (HMM) [116]. To address the limitations of each classifier, the model ensemble strategy can be utilized to leverage the strengths of each model.

Model ensemble [117] is able to combine the prediction results of various models to improve the overall discriminative performance of the AMC system. Especially in some complex communication conditions, the model ensemble can make the discrimination system more stable. Due to the diversity of ensembled models and the possibility of converging to different local optima, the robustness of the AMC system can be improved and the risk of overfitting to specific types of modulated signals can be reduced. In addition, the model ensemble can better cope with outliers, as different models have varying degrees of sensitivity to various parameters.

However, compared with a single model, model ensemble requires higher computational resources and time to complete training and reasoning, especially on large-scale datasets. The selection of features has also become more complex, as different models require corresponding features to drive them. During the model ensemble process, multiple parameters need to be adjusted simultaneously, such as the importance of each model, which makes the joint optimization problem difficult. Therefore, it is necessary to choose appropriate base models and ensemble strategies (such as bagging [118], boosting [119], and stacking [79]) to improve the AMC accuracy.

5. End-to-End Deep Learning Methods

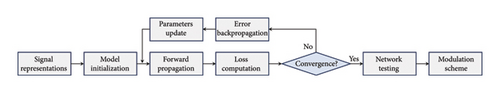

Benefiting from the layer-by-layer stacking of dense nonlinear transformations, heavily overparameterized deep learning models have achieved superior feature learning and classification performance in AMC tasks, especially the ability to generalize across various communication scenarios. A wide variety of deep learning model structures have been developed to deal with different signal representations and extract robust features, differing in aspects such as coding methods, connectivity methods, and activation functions. The entire processing flow of deep learning methods is shown in Figure 7. First, the deep learning model is initialized, and then, the model is trained until its objective function converges. Finally, the converged model is used for testing to evaluate its AMC performance.
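The three stages of this flow (initialize, train until the objective converges, test) can be sketched with a deliberately tiny stand-in model. A one-dimensional logistic classifier trained by gradient descent replaces the deep network here, and the data, learning rate, and stopping tolerance are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grads(w, b, xs, ys):
    """Mean cross-entropy loss of a 1-D logistic model and its gradients."""
    n = len(xs)
    loss = gw = gb = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        loss -= (y * math.log(p) + (1 - y) * math.log(1 - p)) / n
        gw += (p - y) * x / n
        gb += (p - y) / n
    return loss, gw, gb

train_x, train_y = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0], [0, 0, 0, 1, 1, 1]
test_x, test_y = [-1.2, 1.3], [0, 1]

w, b = 0.0, 0.0                      # 1) initialize the model
prev_loss = float("inf")
for step in range(500):              # 2) train until the loss stops improving
    loss, gw, gb = loss_and_grads(w, b, train_x, train_y)
    if prev_loss - loss < 1e-6:
        break
    w, b = w - 0.5 * gw, b - 0.5 * gb
    prev_loss = loss

# 3) evaluate the converged model on held-out samples
predictions = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x in test_x]
accuracy = sum(p == y for p, y in zip(predictions, test_y)) / len(test_y)
```

A deep AMC model follows the same loop, with the scalar parameters replaced by network weights and the gradients obtained by backpropagation.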

5.1. CNNs

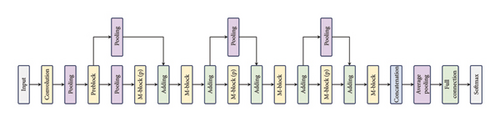

CNNs, as the representative models of deep learning, use convolutional layers to extract features from inputs and use pooling layers to reduce the feature dimensionality. Finally, modulation schemes are classified through fully connected layers with softmax. The mechanisms of weight sharing, local connection, and pooling in CNNs make them highly efficient in extracting spatial features. CNNs can extract and fuse features from both the original time-domain signal and spectrum, forming higher level representations, thereby improving the AMC accuracy. Some typical CNN models specifically designed for AMC are shown in Figure 8.
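The convolution, pooling, and softmax stages described above can be traced on a toy input. The following sketch is a plain-Python forward pass with hand-picked weights. The 2 × 4 input, the single filter, and the two-class output layer are assumptions for illustration and do not correspond to any model in Figure 8.

```python
import math

def conv1d(x, kernels, biases):
    """Valid 1-D cross-correlation. x: [C_in][N], kernels: [C_out][C_in][K]."""
    n, k = len(x[0]), len(kernels[0][0])
    out = []
    for kern, bias in zip(kernels, biases):
        row = []
        for t in range(n - k + 1):
            acc = bias
            for c, channel in enumerate(x):
                acc += sum(kern[c][j] * channel[t + j] for j in range(k))
            row.append(acc)
        out.append(row)
    return out

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool1d(fmap, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [[max(row[i:i + size]) for i in range(0, len(row) - size + 1, size)]
            for row in fmap]

def dense(vec, weights, biases):
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, biases)]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

iq = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0]]   # toy 2 x 4 I/Q frame
kernels = [[[1.0, 1.0], [0.0, 0.0]]]                # one filter, kernel size 2
features = max_pool1d(relu(conv1d(iq, kernels, [0.0])), 2)
flat = [v for row in features for v in row]
probs = softmax(dense(flat, [[0.1], [-0.1]], [0.0, 0.0]))  # two hypothetical classes
```

The same filter weights are reused at every time position, which is the weight sharing that keeps the parameter count independent of the signal length.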

In [40], MCNet analyzes multiscale spatiotemporal correlations to improve the AMC accuracy under poor conditions at a low computational cost. The first convolutional layer is used to extract generic features and reduce the spatial dimension. Then, two layers containing asymmetric convolutional kernels organized in parallel are deployed to reduce the number of weights. Finally, the stacked convolutional features are passed through a fully connected layer with softmax, which outputs a probability distribution over the modulation schemes. It is worth noting that skip connections are adopted for block-wise association to prevent gradient vanishing and accordingly improve the AMC accuracy. In [41], the convolutional layer, dropout layer, and Gaussian noise layer are applied for regularization and to reduce overfitting. The noise layer improves the model’s robustness to noise by simulating the noise distribution. In [42], additional dense layers and Gaussian noise layers are added to the traditional CNN structure. Hou et al. [44] designed a complex CNN, which is composed of residual units, max-pooling, flatten, and fully connected layers with softmax. Both frequency and phase characteristics of radio signals can be utilized to recognize the modulation schemes in each frequency band. Zhang et al. [110] proposed adding a feature fusion layer to the CNN to achieve end-to-end full-stage fusion of features. Using cuboidal convolution kernels, the three-dimensional CNN [120] can capture underlying features such as intra- and interantenna correlations at multiscale signal representations.

To reduce the training costs of CNNs and improve inference efficiency, some lightweight structures have been designed. In [90], the bottleneck and shuffle unit are used to avoid the potential overfitting risk and lower the computational complexity. The model requires only 19.35M floating-point operations (FLOPs), which is one-tenth of that of a three-layer ResNet. Some special convolutional operations have also been developed, such as point-wise convolution (PWC) and depth-wise separable convolution (DWSC) [61]. The PWC adopts convolution kernels of size 1 × 1, which preserves the spatial size of the output feature maps. Because PWC operates on a single position at a time, it has high computational efficiency and is suitable for large-scale convolution operations. Since the 1 × 1 kernel combines all channels at each position, PWC can highlight the feature differences between channels and better express information between different channels. The DWSC splits the traditional convolution operation into a depth-wise convolution (DWC), in which each convolution kernel is responsible for one channel, followed by a PWC. DWSC is able to greatly reduce the number of parameters, thereby reducing the risk of overfitting. DWSC can also explore more convolutional combinations to better capture features in signals and improve the model’s expressive power.
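The parameter saving of a depth-wise separable convolution follows from simple counting. The sketch below compares the weight counts of a standard convolution and its separable counterpart. The layer sizes (3 × 3 kernel, 64 input channels, 128 output channels) are arbitrary illustrative choices and are not taken from [61] or [90].

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out

def dwsc_params(k, c_in, c_out):
    """Depth-wise (one k x k kernel per input channel) plus 1 x 1 point-wise weights."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

standard = standard_conv_params(3, 64, 128)   # 73728 weights
separable = dwsc_params(3, 64, 128)           # 576 + 8192 = 8768 weights
ratio = standard / separable                  # roughly 8.4 times fewer weights
```

The reduction factor approaches the kernel area (9 for a 3 × 3 kernel) as the number of output channels grows.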

CNNs can extract a variety of features through multilayer stacking of convolution kernels, while also possessing excellent contextual correlation capabilities. However, it is hard for CNNs to perform precise time-frequency transform operations (e.g., FFT), resulting in insufficient frequency-domain analysis [121]. In some studies, CNNs driven by multimodal data containing I/Q sequences and spectra are plagued by parameter uncertainty issues, such as Fourier series [122] and window function size [123]. In addition, most of the CNN structures are transferred from visual tasks [93], and little consideration has been given to the modulation characteristics of wireless communication signals when designing specialized modules to extract robust features and improve the AMC performance.

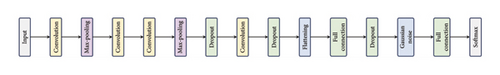

5.2. RNNs

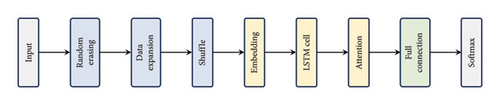

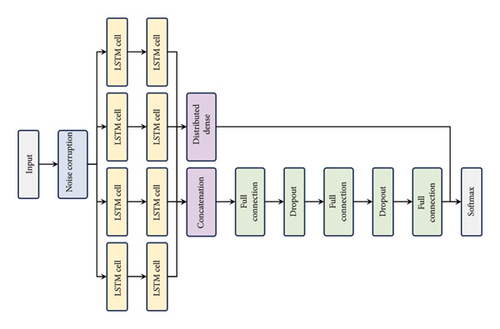

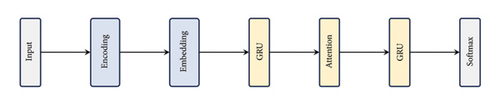

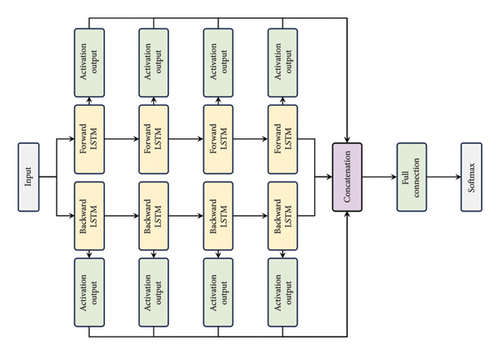

In the AMC problem, the input layer of an RNN usually accepts time-domain slices of one-dimensional signals as the input, the hidden layer uses RNN units (such as LSTM or GRU) to learn temporal information in sequence data, and the output layer provides predictions of the modulation scheme. The cell state in the LSTM unit is used to store the historical information of each sequence, which plays a crucial role in feature learning. The gating mechanism composed of the input gate, forget gate, and output gate controls the flow of information in the cell state. Some typical RNN models for AMC are shown in Figure 9.
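The role of the three gates can be made concrete with a single LSTM time step. The sketch below uses the standard LSTM update with scalar states to keep it short. The parameter layout `p` and the all-zero weights in the example are assumptions chosen so that the result can be checked by hand.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. p maps gate name -> (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, bias = p[name]
        return w_x * x + w_h * h_prev + bias

    i = sigmoid(pre("input"))        # how much new content to write
    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate content
    c = f * c_prev + i * g           # updated cell state (long-term memory)
    h = o * math.tanh(c)             # updated hidden state (output)
    return h, c

zero = {name: (0.0, 0.0, 0.0) for name in ("input", "forget", "output", "candidate")}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, p=zero)
```

With all weights at zero every gate equals 0.5, so half of the previous cell state is retained (`c` becomes 1.0) and nothing new is written. Trained weights let the gates open or close depending on the input sample.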

In [45], a single-layer LSTM based on the attention mechanism is proposed for processing long-sequence signal data. The attention mechanism enables the LSTM model to capture temporal features of long-sequence data and to converge faster than a traditional LSTM. Moreover, signal embedding is introduced in the model to cover the modulation information more comprehensively and accurately. In [47], a novel one-shot neural network pruning method based on the weight magnitude and gradient momentum is proposed to produce sparse RNN structures. Experimental results demonstrate that it is crucial to retain nonrecurrent connections while pruning RNNs. Zang et al. [49] proposed a modified hierarchical RNN with grouped auxiliary memory (GAMHRNN), in which the hierarchical structure is stacked from the group-assisted memory to each layer with fast connections. Li et al. [124] pointed out that RNNs can be used to learn the intrinsic regularity of time series, and that the attention mechanism helps the RNN to focus on the correct pulses and ignore the noise. Bhatti et al. [125] designed a bidirectional LSTM (BiLSTM) structure containing two parallel LSTM layers for forward propagation and backward propagation, respectively. In the BiLSTM, the minibatch processing operation allows for anticausal behavior and effectively increases the amount of data accessible to the network at every time step, providing the model with a richer context. Liu et al. [126] have verified that BiLSTM layers can extract the contextual information of signals well and address the long-term dependence problem. By adding the self-attention block [127], the BiLSTM is able to obtain both the historical and future knowledge from feature sequences of each domain and thus automatically mine crucial mappings to improve AMC accuracy.

The survey indicates that current research focuses not only on the structure of LSTM but, to an even greater extent, on signal characterization and feature analysis methods. Compared to CNNs, although LSTM has powerful temporal analysis capabilities, it also brings high computational complexity and difficult convergence. Due to the inclusion of multiple gating mechanisms and cell states in LSTMs, the optimization of objective functions becomes more complex, especially for communication scenarios containing a large number of candidate modulation schemes. Distributed computing and model compression techniques are effective ways to reduce the computational resources and memory requirements of LSTM in AMC tasks. In addition, one of the challenges faced by LSTM is the difficulty in leveraging a priori knowledge to improve AMC performance, whereas CNNs are able to simultaneously process temporal signals and spectrograms by means of 1D and 2D convolution. How to simultaneously incorporate the knowledge from multiple domains into the learning process of LSTM is a key research direction.

5.3. GNNs

In recent years, the mapping of time series into graphs has been investigated through techniques such as visibility graphs (VGs), which can capture relevant aspects of local and global dynamics simultaneously. This has motivated the development of specialized GNNs that mine knowledge from time series and extract latent graph features. A graph is composed of nodes and edges, where nodes represent objects and edges represent relationships between objects. During the learning process, the features of nodes and edges are both extracted, and the feature vector of the central node is updated by aggregating the information of neighboring nodes. Furthermore, the transfer function is designed to calculate the information transfer between nodes in order to better capture the structural information of the graph.
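A short sketch of both ideas is given below, assuming the natural visibility criterion for building the graph and a plain neighborhood mean as the aggregation rule. The function names and the three-sample series are illustrative. Practical GNNs use learned transformations rather than a fixed mean.

```python
def visibility_graph(series):
    """Natural visibility graph: samples a < b are linked if every sample
    between them lies strictly below the straight line joining them."""
    edges = set()
    for a in range(len(series)):
        for b in range(a + 1, len(series)):
            visible = all(
                series[c] < series[b] + (series[a] - series[b]) * (b - c) / (b - a)
                for c in range(a + 1, b)
            )
            if visible:
                edges.add((a, b))
    return edges

def aggregate_mean(node_values, edges):
    """One message-passing step: each node averages itself and its neighbours."""
    neighbours = {i: [i] for i in range(len(node_values))}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    return [sum(node_values[j] for j in nbrs) / len(nbrs)
            for nbrs in neighbours.values()]

edges_valley = visibility_graph([3.0, 1.0, 2.0])  # outer samples see over the dip
edges_peak = visibility_graph([1.0, 3.0, 2.0])    # the middle peak blocks the view
updated = aggregate_mean([1.0, 3.0, 2.0], edges_peak)
```

The pairwise visibility test over all sample pairs is the simplest construction. Its cost grows quickly with the sequence length, which is one reason graph-based AMC tends to be computationally heavier than sequence models.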

In [50], the I and Q signal components are mapped into two separate graphs, and then, a typical GNN model is designed to process the graphs. After concatenating feature vectors extracted from the two graphs, the classification results can be obtained through a fully connected layer. In [51], each node in the GNN sends messages to its adjacent nodes and merges the messages received from them. Then, an activation function is applied to enhance the ability of the GNN to fit the data distribution. Moreover, the softmax function is applied to each row of the adjacency matrices to normalize the input data. To take the relationships between multidomain features and deep features into account, Yao et al. [52] built a graph of multidomain features and deep features and classified the modulation types using the output of the GCN by way of a fully connected layer.

Under the same scale of parameters, the computational complexity of GNNs is higher than that of CNN and LSTM, especially when dealing with large-scale graphs. This is because GNNs need to extract and update features for each node and edge, and the computational effort grows quadratically with the size of the graph. GNNs also face the oversmoothing problem, which means that the node representations gradually become indistinguishable as the number of network layers increases. Oversmoothing leads to a decrease in the performance of GNNs when processing graphs with complex topological structures. The main advantage of GNNs lies in capturing the local structures and features of the graph, and as a result they lack the perception of global structural information. In practice, many graph structures are dynamic, i.e., the topology of the graph and the edges between nodes are constantly changing. However, most GNNs are designed for static graphs and are difficult to adapt to changes in dynamic graphs. Since GNNs are highly dependent on the structure and features of the graph, the generalization performance of GNNs may drop dramatically when the graph data change. How to design suitable GNNs that can cope with dynamic graphs is an open problem.

5.4. Transformers

The transformer model consists of an input embedding layer, positional encoding layer, self-attention, multihead attention, point-wise feedforward layer, normalization layer, and output layer. The input embedding layer converts the input modulation signal into a word vector representation. The positional encoding adds the position information to each word vector, so that the model can understand the order relationship between words. The self-attention blocks calculate the association degree between each word and other words in a sequence to associate contextual information. By parallel stacking of self-attention layers, the multihead attention is used to capture different levels of contextual information and improves the model’s expressive ability. The feedforward layer added after each attention layer is adopted to integrate attention information. The normalization helps to stabilize the training process of transformer. The transformer model can more effectively capture long-term dependencies while avoiding the order dependency issue that exists in RNNs. Compared to the fixed convolutional kernels used in CNNs, the self-attention mechanism in the transformer can adaptively learn the weights from different positions, thereby obtaining more accurate feature representations. Moreover, the multihead attention mechanism helps to simultaneously learn contextual information at different levels, facilitating more efficient fusion of different features.
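The association between every pair of tokens can be sketched with scaled dot-product self-attention. For brevity this sketch sets the queries, keys, and values equal to the token embeddings and omits the learned projection matrices, multiple heads, and positional encoding, so it is an illustration of the mechanism and not a full transformer layer.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens
    (learned projection matrices are omitted for brevity)."""
    d = len(tokens[0])
    weights = []
    for q in tokens:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[j] for w, v in zip(row, tokens)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]   # three toy 2-D token embeddings
outputs, weights = self_attention(tokens)
```

The first and third tokens are identical, so they attend to each other more strongly than to the second token and receive identical outputs. Without positional encoding the mechanism cannot tell them apart, which is why position information has to be added to the embeddings.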

Cai et al. [128] proposed a transformer network consisting of a linear projection layer, an encoder, and a multilayer perceptron (MLP) head. The I/Q signals are embedded into linear sequences in the linear projection layer. Then, an additional learnable classification token with position embedding is added before the sequence is fed into the encoder. The output of the encoder then serves as the input of the MLP head, which consists of fully connected layers and dropout layers. The MCformer structure [53] leverages convolution layers along with self-attention-based encoders to efficiently exploit temporal correlation between the embeddings produced by the convolution layer. A frame-wise embedding-aided transformer (FEA-T) [129] is designed to extract the global correlation feature of the signal to obtain higher classification accuracy as well as lower time cost. To enhance the global modeling ability of the transformer, the frame-wise embedding module is used to aggregate more samples into a token in the embedding stage to generate a more efficient token sequence. A signal spatial transformer structure based on the attention mechanism [130] is developed to eliminate communication interference factors, such as time offset, symbol rate, and clock recovery, by a priori learning of signal structure. In [131], the self-supervised contrastive pretraining of the transformer is performed with unlabeled signals, and time warping–based data augmentation is introduced to improve generalization ability. Then, the pretrained transformer model is fine-tuned with labeled signals, in which hierarchical learning rates are employed to ensure convergence.

Despite the introduction of positional encoding, the self-attention mechanism in the transformer model does not explicitly consider the positional information, which may be crucial for learning modulation knowledge. The transformer also needs to adapt to complex parameters such as signal bandwidth and SNRs in the AMC task. Current studies have demonstrated the disadvantage of the transformer in terms of computational complexity, especially when dealing with long-sequence data, which limits the application of transformer models on edge devices with limited computational resources.

5.5. Hybrid Models

To break through the limitations of a single deep learning model and fully utilize the advantages of various structures, hybrid model structures [56–59, 132–136] have been designed for AMC. Actually, deep learning can flexibly select and combine different types of layers to improve AMC accuracy. For example, the number and configuration of layers can be adjusted according to the complexity of the actual AMC tasks. Compared to the single deep learning model, hybrid models can extract features from multiple perspectives to better cope with various disturbances and noises.

Zhang et al. [56] proposed to solve the AMC task using a dual-stream structure that combines the advantages of CNN and LSTM and efficiently explores the feature interaction and the spatial–temporal property of raw I/Q signals. Ke et al. [57] presented a deep learning framework based on an LSTM denoising autoencoder (AE) to automatically extract stable and robust features from noisy radio signals and infer modulation schemes using the learned features. Wang et al. [132] proposed a hybrid CNN–transformer–GNN (CTGNet) for AMC to uncover complex representations in radio signals. In [134], a hybrid feature extraction CNN combined with the channel attention mechanism is introduced for AMC. In [135], the ResNeXt model is utilized to extract the distinctive semantic features of the signal, and the GRU is employed to extract the time-series features.

Although the hybrid models based on specific rules perform better than the single model, they usually need to carefully adjust the configuration of each network layer and parameter. The complex network structure implies high computational complexity, and the convergence of the model’s objective function is difficult to guarantee. In addition, the internal working mechanism of hybrid models is usually more complex than a single deep learning model, resulting in poor interpretability, which affects the application in practical communication scenarios. At present, a series of methods such as feature visualization [58], module decomposition [59], knowledge distillation [136], and attribution analysis [137] have been gradually developed to dissect the working mechanism of deep learning models similar to “black boxes.”

5.6. Optimization and Generalization Methods

In the AMC task, the optimization aims to find the most suitable parameters so that the model can accurately classify the modulation schemes. The selection of optimization algorithms and hyperparameter settings are key aspects of training deep learning models. SGD based on error backpropagation is one of the most popular algorithms. A series of SGD variants and related training schemes, such as momentum optimization [78] and federated learning [96], have also been developed for global optimization problems with highly nonconvex objective functions. The momentum optimization method accelerates the gradient descent process by accumulating historical gradient information, which helps to jump out of local minima. It has the advantage of increasing the optimization speed, but may lead to oscillating results. Federated learning is more demanding in terms of information transfer between agents.
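Both the acceleration and the oscillation attributed to momentum can be observed on a toy problem. The sketch below applies the classical heavy-ball update to the one-dimensional objective f(w) = w². The learning rate, momentum coefficient, and step count are arbitrary illustrative values.

```python
def momentum_sgd(grad, w0, lr=0.1, momentum=0.9, steps=200):
    """Heavy-ball update: v <- momentum * v - lr * grad(w); w <- w + v."""
    w, v = w0, 0.0
    history = [w]
    for _ in range(steps):
        v = momentum * v - lr * grad(w)  # accumulate historical gradients
        w = w + v
        history.append(w)
    return w, history

# Toy objective f(w) = w^2 with gradient 2w; its minimum is at w = 0.
w_final, history = momentum_sgd(lambda w: 2.0 * w, w0=5.0)
overshoots = any(value < 0 for value in history)
```

The iterate converges close to the minimum, but the accumulated velocity carries it past zero on the way (`overshoots` is true), which is the oscillating behavior mentioned above.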

Generalization refers to the ability of the model to apply knowledge learned from a training set to unseen test samples. The ability to generalize well is critical to the success of the model in real-world applications. In the AMC task, generalization is reflected in the model’s ability to accurately identify the modulation scheme of a signal across various communication conditions, such as frequency offset, phase offset, and noise. Currently, constraints on model structural complexity, such as L2 regularization and dropout, are commonly used to curb model overfitting [138]. In addition, as one of the representative regularization algorithms, data augmentation aims to improve the generalization ability of the model by increasing the number and diversity of training samples [139, 140]. Based on the characteristics of modulated signals, three types of data augmentation methods are considered in [141], i.e., rotation, flip, and Gaussian noise interference, which are applied in both training and testing stages of deep learning–based classifiers. GANs [142] have also been used to learn the features and distribution of radio signals to help expand the input space and improve generalization ability. For example, Tang et al. [143] proposed a programmatic data augmentation method by using the auxiliary classifier generative adversarial networks (ACGANs).
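The three augmentation types named above can be sketched on complex-valued I/Q samples. The exact definitions used in [141] are not reproduced here. The rotation angle, the choice of flipping about the I axis, and the noise level are assumptions made for illustration.

```python
import cmath
import math
import random

def rotate(iq, theta):
    """Rotate every I/Q sample by theta radians in the complex plane."""
    phasor = cmath.exp(1j * theta)
    return [s * phasor for s in iq]

def flip(iq):
    """Mirror the constellation about the I axis (negate Q)."""
    return [s.conjugate() for s in iq]

def add_gaussian_noise(iq, sigma, rng):
    """Add independent zero-mean Gaussian noise to the I and Q components."""
    return [s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for s in iq]

frame = [complex(1, 0), complex(0, 1), complex(-1, 0)]   # toy I/Q frame
rotated = rotate(frame, math.pi / 2)
flipped = flip(frame)
noisy = add_gaussian_noise(frame, sigma=0.1, rng=random.Random(0))
```

Rotation and flipping preserve the sample magnitudes, so they suit constellations that are symmetric under the chosen transform, while the noise variant changes the effective SNR of the training samples.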

6. AMC Performance Evaluation

6.1. Experimental Datasets

At present, two popular public datasets, i.e., RadioML 2018.01A [144] and RadioML 2016.10A [145], are mainly used for evaluating AMC performance of various methods. The RadioML 2018.01A [144] contains a total of 2,000,000 I/Q signals covering 24 candidate modulation schemes {OOK, 4ASK, 8ASK, BPSK, QPSK, 8PSK, 16PSK, 32PSK, 16APSK, 32APSK, 64APSK, 128APSK, 16QAM, 32QAM, 64QAM, 128QAM, 256QAM, AM-SSB-WC, AM-SSB-SC, AM-DSB-WC, AM-DSB-SC, FM, GMSK, OQPSK} with a signal size of 2 × 1024. RadioML 2016.10A [145] contains 220,000 signals with 11 candidate modulation schemes of size 2 × 128, including BPSK, QPSK, 8PSK, 16QAM, 64QAM, GFSK, CPFSK, 4PAM, AM-DSB, AM-SSB, and WBFM. All the modulated signals are set to a 1-MHz bandwidth with SNRs ranging from −20 dB to +18 dB in steps of 2 dB and are uniformly distributed across categories.
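As a quick consistency check on the RadioML 2016.10A figures quoted above, the following sketch derives the number of SNR levels and the number of signals per modulation scheme and SNR level, assuming the uniform distribution stated in the text. The variable names are illustrative.

```python
snr_levels = list(range(-20, 19, 2))   # -20, -18, ..., 18 dB
n_snr = len(snr_levels)                # 20 SNR levels

n_signals, n_classes = 220_000, 11     # RadioML 2016.10A figures quoted above
per_class_and_snr = n_signals // (n_classes * n_snr)

frame_shape = (2, 128)                 # I and Q rows, 128 samples each
values_per_frame = frame_shape[0] * frame_shape[1]
```

Under these assumptions each modulation scheme has 1,000 signals at every SNR level, and each signal holds 256 real values.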

There are also some publicly available datasets with more complex communication conditions that deserve further research, such as RDL 2021.12 [39], RadioML 2016.10B [41], and CSPB.ML.2023G1 [146]. Specifically, CSPB.ML.2023G1a is a challenge dataset with cochannel and frequency offset, and it contains 11 candidate modulation schemes {BPSK, QPSK, 8PSK, 4QAM, 16QAM, 64QAM, SQPSK, MSK, GMSK}. In studies tailored to specific wireless communication considerations, some other candidate modulation schemes have been simulated to verify the AMC effectiveness of the method. For example, {4FSK, 8FSK, 16FSK} transmitted through the Rayleigh channel is considered in [82]. The classification of high-order intraclass modulation schemes with fine granularity like 128QAM and 256QAM is studied in [54].

6.2. Performance Evaluation

In Table 1, we summarize the state-of-the-art AMC performance of various methods on different datasets. It can be seen that most of the work is based on public datasets and considers only AWGN interference. Part of the work studied the classification of commonly used modulation schemes under specific communication conditions. In general, most methods are able to achieve an AMC accuracy above 85% when SNR > 10 dB. When SNR is below −16 dB, most methods exhibit results similar to random classification. In wireless communication systems, the position of users may change at any time, leading to fluctuations in SNR and greatly increasing the difficulty of AMC. Few works consider the effects caused by the actual communication channel, such as Doppler shift [42]. Therefore, the influence of complex noise or demanding wireless communication conditions on AMC performance needs to be further investigated, such as mixed noise [39, 99] and composite channels [67].
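Accuracy figures of the kind reported in Table 1 are typically computed separately for each SNR level and compared against the chance level. A minimal sketch is given below. The record format and the predictions are hypothetical and serve only to show the bookkeeping.

```python
from collections import defaultdict

def accuracy_by_snr(records):
    """records: iterable of (snr_db, true_label, predicted_label)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for snr, truth, pred in records:
        totals[snr] += 1
        hits[snr] += int(truth == pred)
    return {snr: hits[snr] / totals[snr] for snr in sorted(totals)}

def chance_level(n_classes):
    """Expected accuracy of uniform random guessing on balanced classes."""
    return 1.0 / n_classes

records = [  # hypothetical predictions, for illustration only
    (-20, "BPSK", "QPSK"), (-20, "QPSK", "QPSK"),
    (10, "BPSK", "BPSK"), (10, "QPSK", "QPSK"),
]
per_snr = accuracy_by_snr(records)
baseline_11 = chance_level(11)   # about 9.1% for an 11-class dataset
```

For a balanced 11-class dataset such as RadioML 2016.10A, an accuracy near 9% at very low SNR therefore indicates behavior close to random classification.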

| Reference | Method | Channel | Candidate modulation | SNR (dB) | Accuracy (%) | Complexity (ms) |
|---|---|---|---|---|---|---|
| Chen et al. 2019 [30] | Maximum-likelihood | Non-AWGN | BPSK, QPSK, 8PSK, and 16QAM | −3:2:25 | > 95 at 11 dB | — |
| Li et al. 2021 [35] | 4th cumulant + LB | AWGN | BPSK, QPSK, 8PSK, and 16QAM | −10:2:20 | > 95 at 10 dB | — |
| Yan et al. 2020 [99] | Cyclic spectrum | Non-AWGN | BPSK, QPSK, OQPSK, 2FSK, 4FSK, and MSK | −10:1:20 | | — |
| Luan et al. 2022 [39] | DT | Non-AWGN | RDL 2021.12 | −20:2:20 | > 85 at 10 dB | — |
| Lin et al. 2018 [37] | SVM | AWGN | 4QAM, 16QAM, and 64QAM | 5:1:30 | 100 at all SNR | — |
| Huynh et al. 2020 [40] | MCNet | AWGN | RadioML 2018.01A | −20:2:18 | > 93 at 10 dB | 0.131 |
| Hermawan et al. 2020 [41] | IC-AMCNet | AWGN | RML 2016.10B | −20:2:18 | 91.7 at 10 dB | 0.29 |
| Zhang et al. 2020 [42] | CNN | Doppler shift: 5 Hz, 50 Hz, 100 Hz | MD 2020 | −6:4:30 | | 0.402 |
| Zheng et al. 2021 [118] | CNN | AWGN | RadioML 2016.10A | −20:2:18 | 58.8 | 0.3 |
| Wang et al. 2020 [43] | DRCN | AWGN | BPSK, QPSK, 8PSK, and 16QAM | −10:2:10 | 100 at > 0 dB | — |
| Zheng et al. 2022 [82] | | Rayleigh + AWGN | OOK, 4ASK, 8ASK, 16ASK, BFSK, 4FSK, 8FSK, 16FSK, BPSK, 4PSK, 8PSK, 16PSK, 8QAM, 16QAM, 32QAM, and 64QAM | −20:2:18 | | |
| Hou et al. 2022 [44] | Complex ResNet | AWGN | ASK, QPSK, 2FSK, OFDM, SSB, DSB, AM, and FM | −6:2:10 | 98.6 at 0 dB | 0.328 |
| Zheng et al. 2023 [109] | | AWGN | RadioML 2016.10A | −20:2:18 | | |
| Chen et al. 2020 [45] | LSTM | AWGN | RadioML 2016.10A | −20:2:18 | 94.1 at 18 dB | 1.08 |
| Shi et al. 2022 [46] | LSTM-AE | AWGN | RadioML 2016.10A | −20:2:18 | 94.5 at 18 dB | 0.004 |
| Zang et al. 2020 [49] | 2-layer LSTM | AWGN | RadioML 2016.10A | 0:2:18 | 90.5 | — |
| Xuan et al. 2022 [50] | | AWGN | RadioML 2016.10A | −20:2:18 | | — |
| Liu et al. 2020 [51] | GCN | — | 2ASK, 4ASK, 2FSK, 4FSK, BPSK, QPSK, 16QAM, and 64QAM | −14:2:10 | < 80 at 10 dB | 0.78 |
| Cai et al. 2022 [128] | Transformer | AWGN | | | | — |
| Zhang et al. 2022 [54] | Spatial transformer | AWGN | BPSK, QPSK, 8PSK, 16QAM, 32QAM, 64QAM, 128QAM, and 256QAM | 0:1:23 | 96.6 | 5.729 |
| Zheng et al. 2023 [7] | | AWGN | | −20:2:18 | | |
| Tang et al. 2018 [143] | GCN + CNN | — | BPSK, 4ASK, QPSK, OQPSK, 8PSK, 16QAM, 32QAM, and 64QAM | −6:2:14 | 95 at −2 dB | — |
| Li et al. 2023 [136] | ResNeXt-GRU | AWGN | RadioML 2018.01A | −20:2:30 | > 90 at > 10 dB | 0.21 |
| Zhou et al. 2022 [123] | Few-shot CNN | AWGN | RadioML 2016.10A | −20:2:18 | > 80 at > 0 dB | — |
| Khan et al. 2021 [138] | 3D CNN | AWGN | BPSK, QPSK, 16QAM, and 64QAM | — | 96.97 | — |
| Wang et al. 2021 [67] | Multiple CNN | Non-AWGN | FSK, PSK, and QAM | −5:1:5 | | — |

In addition to modulation classification accuracy, the inference efficiency of a model determines whether it can be applied in real-time wireless communication. In terms of model complexity and inference speed, most AMC methods meet the requirements of real-time inference, as they are deployed and tested on workstations with ample computing power. Most models complete inference within 1 ms, as the signal size is usually within 2 × 2048. Methods based on spectral maps have lower inference efficiency [82], because the number of pixels in an image is much larger than the number of samples in a temporal signal. Only a few studies, such as [7, 147], have tested the computational efficiency of AMC methods on edge devices with limited power and memory, such as the NVIDIA Jetson Nano.
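Per-signal latencies such as the millisecond values in Table 1 are typically obtained by averaging repeated forward passes after a short warm-up. The sketch below illustrates one way to do this with the standard library; `dummy_infer` is a hypothetical stand-in for a trained classifier, and the repeat and warm-up counts are arbitrary assumptions rather than settings from any cited study.

```python
import time


def mean_latency_ms(infer, sample, repeats=100, warmup=10):
    """Average wall-clock time of infer(sample) in milliseconds."""
    for _ in range(warmup):      # warm-up runs are excluded from timing
        infer(sample)
    start = time.perf_counter()
    for _ in range(repeats):
        infer(sample)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / repeats


def dummy_infer(iq):
    """Hypothetical classifier: compares I-channel and Q-channel power."""
    i_power = sum(x * x for x in iq[0])
    q_power = sum(x * x for x in iq[1])
    return 0 if i_power >= q_power else 1


# One signal of size 2 x 2048, the upper bound mentioned in the text.
sample = [[0.0] * 2048, [0.0] * 2048]
latency = mean_latency_ms(dummy_infer, sample)
print(f"{latency:.3f} ms per signal")
```

The measured value depends strongly on the hardware, which is why workstation results do not carry over directly to edge devices.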

6.3. Robustness Analysis

In practical wireless communication applications, the rapid mobility of communication terminals places high demands on the robustness of AMC methods. A model must be robust to variations in signal characteristics, channel types, and communication scenarios; otherwise, its performance drops sharply in deployment. Li et al. [35] considered the impact of the power allocation ratios of the far and near users on AMC accuracy under different SNRs. Luo et al. [148] verified the effectiveness of their AMC method under different channel models. Li et al. [149] and Thameur et al. [150] observed the effect of signal length (from 128 to 4096) on the application of a sparse filtering CNN and a fully connected neural network to the AMC task, respectively. Lin et al. [151] discussed the role of denoising through moving-average and Gaussian filters in enhancing the signal expressiveness.
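To make the denoising step concrete, the sketch below applies a centered moving-average filter to each channel of an I/Q signal. It is only an illustration of the general technique: the window length, the shrinking window at the edges, and the function names are assumptions and are not taken from [151].

```python
def moving_average(samples, window=5):
    """Smooth one real-valued channel with a centered moving average.

    Near the edges, the window shrinks to the samples that exist.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for n in range(len(samples)):
        lo = max(0, n - half)
        hi = min(len(samples), n + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed


def denoise_iq(iq, window=5):
    """Apply the same filter independently to the I and Q channels."""
    return [moving_average(channel, window) for channel in iq]


# Toy 2 x 5 signal (hypothetical values).
noisy = [[1.0, 3.0, 1.0, 3.0, 1.0], [0.0, 0.0, 6.0, 0.0, 0.0]]
print(denoise_iq(noisy, window=3))
```

A longer window suppresses more noise but also smears symbol transitions, so the window length is itself a parameter whose effect on AMC accuracy would need to be checked.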

The setting of hyperparameters during training, such as learning rate, batch size, weight decay, and momentum, is another aspect that needs to be considered. Currently, most studies set hyperparameters empirically. Exhaustive search methods such as grid search are difficult to apply to large-scale models, especially deep learning models, because of the expensive training cost. In addition, the impact of hyperparameter choices in the training and testing stages on AMC lacks qualitative analysis, and only some work [109, 118] has observed the impact on AMC accuracy through experiments.
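The cost of grid search grows multiplicatively with the number of hyperparameters, which a short enumeration makes visible. In the sketch below, the candidate values and the assumed two hours per training run are purely hypothetical and serve only to show the scaling.

```python
from itertools import product

# Hypothetical candidate values for the four hyperparameters named above.
grid = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "batch_size": [64, 128, 256],
    "weight_decay": [0.0, 1e-4, 1e-3],
    "momentum": [0.8, 0.9, 0.99],
}

# Every combination is one full training run.
configs = [dict(zip(grid, values)) for values in product(*grid.values())]

hours_per_run = 2.0  # assumed cost of training one model
total_hours = len(configs) * hours_per_run

print(len(configs), total_hours)  # 81 162.0
```

Even this small grid of three values per hyperparameter requires 81 training runs, and adding one more hyperparameter with three values would triple that number.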

7. Challenges and Future Directions

Although AMC has made considerable progress, it still faces many theoretical and practical challenges. Some key research directions are summarized in Figure 10.

The signal modality or representation directly determines the upper bound of a model’s AMC accuracy, because the expert knowledge implicit in it is the key to establishing the input–output mapping. Many signal representations are currently being studied to learn the expert knowledge behind each modulation scheme, and the main research direction is the efficient fusion of representations [152, 153]. Establishing the correlation between expert knowledge and the model learning process, and building a knowledge base by means of a knowledge graph [154], can help to further solve AMC problems.

Current research focuses on blind AMC, which assumes that the parameters of received signals, such as SNR, symbol rate, and channel gain, are unknown, making the task more challenging. If prior information such as SNR estimation, carrier frequency compensation, and clock synchronization can be introduced to guide model learning, the model can extract more discriminative and robust features. Frequency-domain analysis–based methods and wavelet ridge–based techniques can be utilized for carrier frequency estimation [155]. However, further exploration is needed on how to introduce prior information into the learning process of the model. Adding prior information as a penalty term to the objective function [156] for joint optimization is a feasible approach, but its mechanism of influence is still unclear.
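One way to read the penalty-term idea is as a classification loss plus a weighted term that ties an auxiliary model output to externally supplied prior information. The sketch below is a hypothetical illustration of that structure, not the formulation used in [156]: it assumes the model also outputs an SNR estimate, that a prior SNR value is available from a separate estimator, and that the penalty weight is a free hyperparameter.

```python
import math


def cross_entropy(probabilities, true_index):
    """Negative log-likelihood of the true class."""
    return -math.log(probabilities[true_index])


def penalized_loss(probabilities, true_index,
                   snr_estimate_db, snr_prior_db, weight=0.01):
    """Classification loss plus a squared-error penalty on the SNR estimate.

    snr_estimate_db: hypothetical auxiliary output of the model.
    snr_prior_db:    hypothetical prior from an external SNR estimator.
    weight:          assumed trade-off between the two terms.
    """
    penalty = (snr_estimate_db - snr_prior_db) ** 2
    return cross_entropy(probabilities, true_index) + weight * penalty


# Toy example: three classes, true class 0, SNR estimate off by 2 dB.
loss = penalized_loss([0.7, 0.2, 0.1], 0, snr_estimate_db=8.0, snr_prior_db=10.0)
print(round(loss, 4))
```

When the model's SNR estimate matches the prior, the penalty vanishes and the objective reduces to the ordinary cross-entropy.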

In addition, the generalization ability of AMC models across various communication scenarios directly determines their practical usability, so regularization methods such as data augmentation [137, 157] are key research directions. However, current data augmentation algorithms focus on expanding the number of samples and lack theoretical guidance. The impact of regularization techniques on the optimization of the model’s objective function lacks an intuitive explanation, so hyperparameters are mainly set empirically. When training deep learning models, techniques such as regularization and pruning can be used to reduce the risk of overfitting and improve generalization. Reasonable model initialization and learning rate adjustment can also make the model more adaptable to new data. Finally, transfer learning, achieved through fine-tuning, pretraining, and other methods, transfers knowledge from one domain to another and can improve the generalization performance of the model in the target domain.
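As an illustration of sample-expanding augmentation, the sketch below rotates an I/Q signal by a constant phase, which changes the samples while leaving the modulation type unchanged. Rotation is used here as a commonly seen example and is not claimed to be the method of [137] or [157]; the choice of four angles is an assumption.

```python
import math


def rotate_iq(i_samples, q_samples, angle_rad):
    """Rotate every (I, Q) point by angle_rad in the complex plane."""
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    i_rot = [cos_a * i - sin_a * q for i, q in zip(i_samples, q_samples)]
    q_rot = [sin_a * i + cos_a * q for i, q in zip(i_samples, q_samples)]
    return i_rot, q_rot


def augment_with_rotations(i_samples, q_samples):
    """Return four copies rotated by 0, 90, 180, and 270 degrees."""
    angles = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
    return [rotate_iq(i_samples, q_samples, a) for a in angles]


# Toy signal (hypothetical): two samples.
copies = augment_with_rotations([1.0, 0.0], [0.0, 1.0])
print(len(copies))  # 4
```

Each original signal yields four training samples with the same label, which is exactly the kind of sample-count expansion the paragraph describes.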

Another factor that affects the practical usability of AMC models is model complexity, which affects the deployment and inference of the model on edge devices. In addition to the lightweight model structures being developed, structural search algorithms [158] and redundant weight pruning strategies [147, 159] have also been studied. More studies are attempting to run machine learning models for the AMC task on field-programmable gate arrays (FPGAs) and embedded devices, especially in wireless communication with variable communication environments.
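A simple form of redundant weight pruning is to zero out the weights with the smallest magnitudes. The sketch below shows this on a flat list of weights; magnitude-based pruning is used as a generic example and is not claimed to be the strategy of [147] or [159], and the sparsity level is an assumption.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda k: abs(weights[k]))
    pruned = list(weights)
    for k in order[:num_to_prune]:
        pruned[k] = 0.0
    return pruned


# Toy layer (hypothetical values), pruned to 50% sparsity.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(prune_by_magnitude(weights, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

In practice, the zeroed weights only reduce latency and memory if the target hardware or runtime can exploit the resulting sparsity.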

In the future, AMC will gradually be applied to more complex communication scenarios, such as high-speed mobile communication. AMC can also be applied to the security monitoring and protection of mobile communication networks. By recognizing the modulation schemes used in mobile communication, malicious intrusions, interference signals, and other security threats can be detected and prevented, improving the security and stability of the network. In the IoT, a large number of smart devices require stable wireless communication, and AMC can be used to manage and monitor these devices. By identifying the modulation scheme of the signal sent by a device, it is possible to perform device recognition, tracking, and management, improving the intelligence level of the IoT.

8. Conclusions

A comprehensive survey of AMC based on Lb, Fb, and deep learning methods has been presented in this study, including key technologies, performance comparisons, advantages, and key future development directions. The Lb AMC methods rely strictly on the estimation of modulation parameters and usually require manual setting of multiple hyperparameters, such as search range and iteration number, which have a significant impact on AMC accuracy. The Fb methods require designing features and classifiers for specific types of modulation schemes, together with feature engineering, to improve AMC accuracy. End-to-end deep learning models have shown significant advantages in AMC tasks, achieving superior AMC performance without the need for artificial feature design. Numerous studies have examined key factors, including appropriate signal representations, model structures, and optimization methods. Current research also considers various communication conditions, including frequency offset, phase offset, and timing error, as well as signal parameters such as signal length and symbol rate. In terms of AMC performance, most methods have been verified on public or simulated datasets and achieved an accuracy of over 80% when SNR > 10 dB.

Although considerable progress has been made, more attention will be paid in the future to achieving fine-grained recognition of higher order intraclass modulation schemes on edge devices. Deep learning has shown great potential for AMC, so future work will focus on more suitable deep learning models and the corresponding optimization and generalization algorithms. Considering the adaptability and robustness of deep learning, AMC under various communication conditions, such as varying channel parameters, will also be further studied. In addition, lightweight deep learning is a key research direction, as lightweight models are easy to deploy at the edge.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Conceptualization: X.T. and Q.Z.; methodology: Q.Z., S.S., and A.E.; validation: Q.Z. and X.T.; data curation: X.T.; writing–original draft preparation: Q.Z.; writing–review and editing: L.Y. and X.T.; supervision: Q.Z.

Funding

This research was supported by Shandong Provincial Natural Science Foundation, Grant no. ZR2023QF125 and Program for Young Innovative Research Team in Higher Education of Shandong Province, Grant no. 2024KJH005.

Open Research

Data Availability Statement

No new data were created or analyzed in this study.