Continual Learning Inspired by Brain Functionality: A Comprehensive Survey

Abstract

Neural network–based models have achieved tremendous success in various fields. However, standard AI-based systems suffer from catastrophic forgetting when learning multiple tasks sequentially in dynamic environments. Continual learning has emerged as a promising approach to address catastrophic forgetting. It enables AI systems to learn, transfer, augment, fine-tune, and reuse knowledge for future tasks. The techniques used to achieve continual learning are inspired by the learning processes of the human brain. In this study, we present a comprehensive review of research and recent developments in continual learning, highlighting key contributions and challenges. We discuss essential functions of the biological brain that are pivotal for achieving continual learning and map these functions to recent machine-learning methods to aid understanding. Additionally, we offer a critical review of five categories of recent continual learning methods inspired by the biological brain. We also provide empirical results, analysis, challenges, and future directions. We hope that this study will benefit both general readers and the research community by offering a complete picture of the latest developments in this field.

1. Introduction

In recent years, neural network–based models have made impressive progress in various application areas. Current AI-based systems perform well with isolated, well-organized, and stationary data. However, real-world scenarios are dynamic and involve multiple tasks [1]. For example, systems such as autonomous cars, drones, and robots may encounter dynamic and versatile situations. For AI-based systems to perform effectively in real-world scenarios, they must be able to learn continuously, much like the human brain [2]. To achieve this capability, AI systems need to acquire new knowledge and enhance or augment previously learned knowledge without forgetting [3]. Currently, most learning systems are unable to learn continuously and may underperform when exposed to dynamic or incremental data environments. The primary challenge facing current AI-based systems is catastrophic forgetting of past knowledge when performing new tasks [4].

Neuroscientists have discovered various aspects of how the human brain functions. The human brain is capable of continuous learning across a lifespan, processing a constant stream of information that becomes progressively available over time [5]. Learned knowledge is retained, augmented, fine-tuned, and applied to perform new tasks [6]. The brain retains specific memories of episodic-like events and generalizes learned experiences to solve future tasks [7]. The well-known complementary learning system (CLS) model describes brain learning as the extraction of the statistical structure of perceived events, while retaining specific memories of episodic-like events to generalize to novel situations [8]. The hippocampal system is responsible for short-term adaptation, facilitating the rapid learning of new knowledge, which is then transferred and integrated into the neocortical system for long-term storage [9]. As the brain accumulates knowledge over time, it becomes increasingly capable of handling complex tasks [10]. Although humans may occasionally forget old information, a complete loss of prior knowledge is rarely observed [11, 12].

Continual learning (CL) is an emerging field that aims to train machines in a way similar to how the human brain learns [13], such that knowledge learned from past tasks is retained, accumulated, fine-tuned, and subsequently used to solve future tasks [14]. CL is considered an inherent capability of the biological brain [15]. Key characteristics of CL include forward and backward knowledge transfer, overcoming catastrophic forgetting, adaptability, scalability, robustness, resource efficiency, task identification, and learning task similarity [16]. Another important goal of CL is the ability to use learned knowledge to enhance performance on other tasks [17].

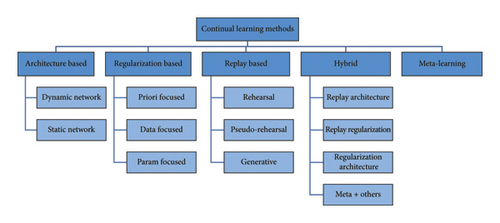

In recent years, various studies have adopted biologically inspired CL mechanisms in AI-based systems [18, 19]. These methods can be divided into five categories: (1) regularization-based, (2) architecture-based, (3) replay-based, (4) meta-learning-based, and (5) hybrid methods. Regularization-based approaches adjust model parameters for each new task, optimizing them toward a new local minimum while a regularization term in the loss constrains changes that would harm previously learned tasks [20]. Architecture-based approaches expand a network by adding (or, in some cases, pruning) a subnetwork, branch, or node to learn a new task [21]. Replay-based approaches store learned knowledge either as selected input samples from old tasks or as encoded representations of old data, which are replayed while learning new tasks [22]. Meta-learning is learning to learn: meta-learning-based approaches tune network hyperparameters to adapt learning to new tasks and to mitigate biases introduced by manual settings [23]. Hybrid methods combine the above-mentioned approaches [24]. In recent years, hybrid methods have aimed to offer state-of-the-art solutions, particularly in class incremental learning (CIL) scenarios [25].

A few review studies on CL have been published. Mai et al. [26] and Masana et al. [27] presented empirical studies comparing several methods for the image classification problem. Aljundi et al. [12] offered an overview of state-of-the-art CL methods, a framework to assess the stability–plasticity trade-off, and an empirical study of eleven CL methods on three benchmark datasets, examining the impact of different parameters. Febrinanto et al. [28] presented a survey of recent progress in graph lifelong learning, classifying existing methods, potential applications, and research challenges. Kudithipudi et al. [?] identified key capabilities of CL, biological mechanisms that address catastrophic forgetting, and biologically inspired models implemented in artificial systems to achieve CL. Mundt et al. [29] described open set learning and its associated challenges, particularly regarding unknown examples beyond the observed set. The authors also proposed a unified perspective integrating CL, active learning, and open set recognition within deep neural networks, along with an empirical study on three datasets.

The main contributions of this study are as follows:

- A detailed overview of the brain’s learning mechanisms and key capabilities that play a crucial role in learning.
- A mapping of brain capabilities to current CL-based ML mechanisms.
- A comprehensive and critical review of the latest CL methodologies.
- A presentation of results achieved by recent CL-based methods in various application areas.
- A discussion of benchmark datasets, metrics, and experimental configurations.
- A conclusion that addresses key challenges and future directions.

Overall, this study covers the characteristics of CL, the brain functions that inspire CL-based methods, the mapping between these brain functions and CL-based methods, the CL-based methods themselves, benchmark datasets, metrics, and experimental configurations, as well as results and empirical analysis, challenges, and future directions. These topics are detailed in the following sections.

2. Characteristics of CL

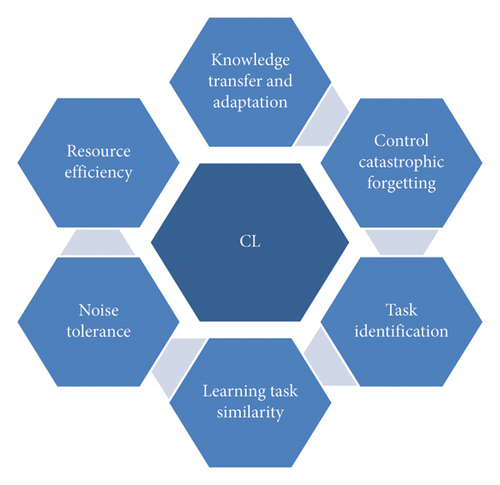

In this section, we discuss the key characteristics of CL. These characteristics are desirable in any CL-enabled system designed for real-world dynamic environments, as shown in Figure 1.

2.1. Knowledge Transfer and Adaptation

The real-world dynamic environment consists of multiple problems or tasks with varying situations and conditions. The system should be capable of learning knowledge, transferring it, and reusing it to perform new tasks. In addition, the system should be able to adapt to new environments.

2.2. Control Catastrophic Forgetting

Currently, most AI systems suffer from catastrophic forgetting when trained on tasks sequentially. A CL-based system should be capable of learning new knowledge while retaining previously learned information. The system must be stable enough not to forget this information, yet plastic enough to learn new tasks. This tension is known as the stability–plasticity dilemma.

2.3. Task Identification

In a real-world scenario, the system should be capable of performing multiple tasks, which requires it to identify when a new task is encountered. In addition, the system should be able to handle online learning from continuously streaming data [30].

2.4. Learning Task Similarity

The system should be able to learn the similarities among different tasks. This ability will help enhance knowledge transfer, both for forward transfer (FWT) and backward transfer (BWT). In addition, the capability to divide complex tasks into simpler tasks can also facilitate the reuse of knowledge among subtasks [31].
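To make forward and backward transfer concrete, the sketch below computes them from an accuracy matrix R, where R[i, j] is the test accuracy on task j after sequentially training on tasks 0 to i. It assumes the widely used definitions from Lopez-Paz and Ranzato's Gradient Episodic Memory work; the function name `transfer_metrics`, the baseline vector b (accuracy of a randomly initialized model on each task), and the toy numbers are illustrative assumptions, not data from this survey.

```python
import numpy as np


def transfer_metrics(R: np.ndarray, b: np.ndarray) -> dict:
    """Average accuracy, backward transfer, and forward transfer (hypothetical helper).

    R[i, j]: accuracy on task j after sequentially training on tasks 0..i.
    b[j]:    accuracy on task j of a randomly initialized model (baseline).
    """
    R = np.asarray(R, dtype=float)
    b = np.asarray(b, dtype=float)
    T = R.shape[0]
    if R.shape != (T, T) or b.shape != (T,) or T < 2:
        raise ValueError("R must be T x T and b of length T, with T >= 2")

    # Average accuracy over all tasks after training on the final task.
    acc = R[-1].mean()
    # BWT: change in accuracy on earlier tasks caused by learning later ones
    # (negative values indicate forgetting).
    bwt = np.mean([R[-1, j] - R[j, j] for j in range(T - 1)])
    # FWT: accuracy on a task before training on it, relative to the baseline.
    fwt = np.mean([R[j - 1, j] - b[j] for j in range(1, T)])
    return {"ACC": float(acc), "BWT": float(bwt), "FWT": float(fwt)}


# Toy 3-task example (made-up numbers for illustration only).
R = np.array([
    [0.90, 0.15, 0.10],
    [0.70, 0.88, 0.20],
    [0.60, 0.80, 0.92],
])
b = np.array([0.10, 0.10, 0.10])
print(transfer_metrics(R, b))
```

In this toy matrix, accuracy on the first task drops from 0.90 to 0.60 after later tasks are learned, so BWT is negative (forgetting), while FWT is positive because earlier tasks lift accuracy on unseen tasks above the random baseline.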

2.5. Noise Tolerance

Generally, AI systems are trained on controlled, well-organized, and clean datasets. In contrast, real-world environments may produce noisy, disorganized, and uncontrolled data. The system should be capable of handling such noise, dynamic environments, and varying conditions. Various studies propose robust models; however, these models have not been studied in relation to CL.

2.6. Resource Efficiency

To mimic the CL functions of the brain, the system should operate within constrained resources; allocating new resources for every new task is undesirable. Adaptability and robustness are also key characteristics for any system to achieve CL, because in real-world scenarios the system may encounter noise, out-of-distribution inputs, and unseen, dynamic environments. As shown in Table 2, CL is achieved in ML systems by implementing methods such as neuromodulation [32], multisensory integration [33], and episodic replay [34].

| Network type | Study | Implementation method | Application |
|---|---|---|---|
| Dynamic network | [119] | CNN | Image resolution |
| | [120] | Model with multiple blocks to learn and store actions | Human action recognition |
| | [121] | ResNet; plastic blocks for retaining old knowledge and learning new knowledge | Classification |
| | [122] | Task-specific network block for each new scene; NAS for network unit search | Matching driving scenes |
| | [123] | Multistage multitasking network; RL + LSTM | Text classification |
| | [124] | Bayesian neural networks; task modeling and representation; feature space through Gaussian posterior distributions | Classification |
| | [57] | Shared backbone network; prediction head training | Image quality assessment |
| | [58] | Decoder networks; mixed label uncertainty strategy for robustness | Classification |
| | [125] | Fixed and trainable dynamic branches; knowledge distillation of spatial and channel dimensions | Semantic segmentation |
| | [126] | Main-branch evolution and sub-branch parameter modification | Classification |
| | [127] | Stacked generalization principle; deep neural networks | Multiple |
| | [128] | Memory networks | Classification |
| | [96] | Reinforced continual learning; Bayesian optimized continual learning | Multiple |
| | [129] | CNN; growing network for new classes | Classification |
| | [130] | Global and local parameters | Classification |
| | [131] | Cross-domain; backbone with extra parameters | Cross-domain classification |
| | [118] | Linear filtering; network pruning | Classification |
| | [132] | SOINN; network modification; noisy data mapping | Unsupervised learning |
| | [133] | Linear combinations; network pruning; progressive learning | Classification, speech recognition |
| | [134] | Manifold alignment for CL; learning without premonition | Intrusion detection |
| Static network | [135] | Out-of-distribution (OOD) detection and task masking | Classification |
| | [136] | Class-specific convolution and modulation parameters | Label-to-image translation |
| | [137] | Incremental sparse convolution layers with uncertainty loss variable | 3D segmentation |

Note: The first column shows the network type used to achieve continual learning; the third column shows the implementation methodology.

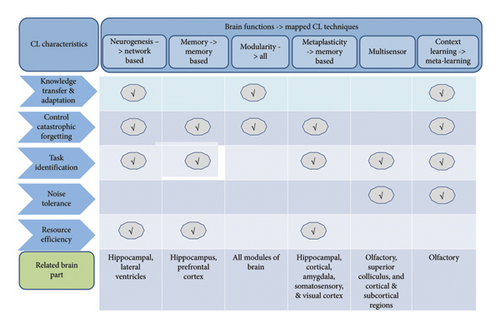

3. Brain Functions That Support and Inspire CL

The neurological processes of the human brain involve various phenomena, such as neural signaling, neuronal connections, and the actions of neurotransmitters, which together form the basis of brain activity and function [35]. Humans have evolved several mechanisms that support CL, and some studies suggest that CL-based methods may handle learning in dynamic environments [36]. While not all ML methods for CL are explicitly designed to mimic these biological mechanisms, many exhibit functional parallels that offer insights into robust learning systems. Below, we analyze brain functions that either directly inspire CL techniques (e.g., hippocampal replay) or share convergent principles (e.g., sparsity for efficiency) with engineered solutions. Figure 2 and Table 2 map these relationships, distinguishing between explicit bioinspired methods and implicit analogies. The matrix depicts the connections between key goals of CL and biological mechanisms in the brain. We describe the brain functions that support the goals of CL, the relationship between different CL characteristics and brain functions, and recent CL-based ML implementations. In the matrix, a circle with a tick mark indicates that the brain function contributes to the CL characteristic, and the line below it shows the part of the brain responsible for that characteristic.

| Continual learning characteristic | Neurogenesis | Memory | Modularity | Metaplasticity | Multisensors | Context learning |
|---|---|---|---|---|---|---|
| Knowledge transfer and reuse | [21, 57–59] | – | [32, 60–62] | – | – | [33, 63–65] |
| Control catastrophic forgetting | [66–68] | [69–71] | [61, 72–74] | [16, 50, 75, 76] | – | [32, 77] |
| Task management | [59, 78–80] | [69, 81–83] | [57, 61, 84] | [16, 50, 85] | [86, 87] | [33, 64, 78] |
| Adaptability | – | [22, 34, 81, 88] | [32, 89–91] | [16, 74] | [33, 92] | [33, 64, 89] |
| Resource management | [66, 93, 94] | [8, 69, 71, 95] | [21, 42, 61, 96] | – | – | – |

Note: Each cell lists the studies that cover both the biological brain mechanism (column) and the continual learning characteristic (row); a dash indicates that no study is listed.

3.1. Neurogenesis

Neurogenesis is the process of generating new neurons in the central nervous system [37]. It occurs in the dentate gyrus of the hippocampal formation and in the subventricular zone of the lateral ventricles (LVs) [38, 39]. This process is accelerated when the brain undergoes a variety of experiences. Changes in the size and configuration of the brain have also been observed, although structural connections remain largely the same throughout life [15]. Studies of the mouse brain have shown how newly generated neuroblasts migrate from the subventricular zone of the LV through the rostral migratory stream (RMS) into the olfactory bulb (OB), where they give rise to mature interneuron populations [40]. Although this process remains active throughout life, it is more active in the early years; some neuroimaging studies suggest that the infant brain is more plastic and continues to grow and develop. Biological neurons use constructive algorithms in the form of plasticity, involving the growth and pruning of synapses during neurogenesis. Several studies suggest that synaptic plasticity and neurogenesis are important mechanisms for alleviating catastrophic forgetting in the biological brain [41].

In the context of ML, neurogenesis explicitly inspires various architecture-based methods, in which new neurons, blocks, or branches are added to the existing network to learn new tasks [42]. The implicit analogy is that both brains and ML systems face trade-offs between stability (retaining old knowledge) and plasticity (integrating new information), leading to similar solutions such as dynamic capacity expansion.
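A minimal sketch of neurogenesis-style capacity expansion, assuming a two-layer NumPy network: new hidden units are appended to an existing layer with small random incoming weights and zero outgoing weights, so the network's outputs on old inputs are unchanged at the moment of growth. The helper name `add_hidden_units` and the zero-initialization choice are illustrative assumptions, not a specific method from the cited works.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def forward(x, W1, b1, W2, b2):
    # Two-layer MLP: x -> hidden (ReLU) -> output.
    return relu(x @ W1 + b1) @ W2 + b2


def add_hidden_units(W1, b1, W2, n_new, rng):
    """Append n_new hidden units (hypothetical helper).

    Incoming weights of new units are small random values so they can learn
    new features; outgoing weights are zero so existing outputs are preserved.
    """
    d_in = W1.shape[0]
    d_out = W2.shape[1]
    W1_new = np.hstack([W1, 0.01 * rng.standard_normal((d_in, n_new))])
    b1_new = np.concatenate([b1, np.zeros(n_new)])
    W2_new = np.vstack([W2, np.zeros((n_new, d_out))])
    return W1_new, b1_new, W2_new


rng = np.random.default_rng(0)
W1, b1 = rng.standard_normal((4, 8)), np.zeros(8)
W2, b2 = rng.standard_normal((8, 3)), np.zeros(3)
x = rng.standard_normal((5, 4))

before = forward(x, W1, b1, W2, b2)
W1g, b1g, W2g = add_hidden_units(W1, b1, W2, n_new=4, rng=rng)
after = forward(x, W1g, b1g, W2g, b2)
print(W1g.shape, W2g.shape)          # (4, 12) (12, 3)
print(np.allclose(before, after))    # True: old function preserved
```

In a full continual-learning setup, the original weights would typically also be frozen or regularized while the new units are trained on the new task; that step is omitted here.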

Biological neurogenesis is far more dynamic and adaptable than current algorithms can replicate. In the brain, it is tightly regulated by factors such as environmental enrichment, stress, and signaling molecules such as serotonin and brain-derived neurotrophic factor (BDNF) [43].

3.2. Memory System

In the biological brain, replay refers to the reactivation during sleep of activity patterns that previously occurred during wakefulness. This reactivation occurs in the hippocampus alone or jointly in the hippocampus and neocortex [44]. Studies have also observed multiple memory systems in the brain, such as hippocampal–cortical memory [45]. The hippocampus supports fast learning, whereas slow learning occurs in the cortex, which helps explain how the brain forms declarative memories. However, studies suggest that other memory models may exist in addition to these. The CLS theory proposes two memory systems in the brain [46]: the hippocampus for fast learning and the neocortex for slow and detailed learning [44].

According to the revised CLS theory, the hippocampal model continuously trains the neocortical model by replaying nonoverlapping representations of previously learned knowledge [44, 47]. Neuromodulators such as acetylcholine help switch between encoding and retrieval modes, and oscillatory coupling, such as theta–gamma phase coordination, plays a key role during memory replay. Additionally, behavioral context, signaled for example by stress hormones, enhances the recall of important or emotionally significant memories. Several studies, such as [8], have proposed methods to control memory size while learning multiple tasks.

In artificial neural networks, this explicit inspiration is implemented in the form of dual-network architectures (e.g., [48]) and generative replay [49], which replicate hippocampal–neocortical interactions, as well as replay of stored samples [44]. As an implicit analogy, replay-based methods adopt a convergent principle: they address forgetting by “rehearsing” past data, mirroring the brain’s need to counteract interference with limited resources [50]. Taking inspiration from the time-varying plasticity of biological synapses, various studies have also tried to replicate metaplasticity by combining memory-, architecture-, and regularization-based methods [24, 49, 51].
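Sample replay, the simplest of the replay mechanisms listed above, can be sketched as a fixed-size memory filled by reservoir sampling, so that every example seen in the stream has an equal chance of being stored regardless of when it arrived. The class name `ReservoirBuffer` and the mixing of a replayed mini-batch into each new batch are illustrative assumptions rather than the procedure of any particular cited study.

```python
import random


class ReservoirBuffer:
    """Fixed-capacity episodic memory filled by reservoir sampling (hypothetical class)."""

    def __init__(self, capacity: int, seed: int = 0):
        self.capacity = capacity
        self.items = []
        self.n_seen = 0
        self.rng = random.Random(seed)

    def add(self, item) -> None:
        self.n_seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Keep the new item with probability capacity / n_seen,
            # overwriting a uniformly chosen stored item.
            j = self.rng.randrange(self.n_seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k: int):
        # Draw up to k stored items without replacement for rehearsal.
        return self.rng.sample(self.items, min(k, len(self.items)))


buffer = ReservoirBuffer(capacity=100)
for task_id in range(3):
    for i in range(1000):
        example = (task_id, i)              # stand-in for an (x, y) pair
        replayed = buffer.sample(8)         # old examples to rehearse
        batch = [example] + replayed        # a real learner would train on this batch
        buffer.add(example)

print(len(buffer.items), buffer.n_seen)     # 100 3000
```

Because the memory size is fixed, this mirrors the resource constraint discussed above: old tasks are represented by a shrinking but unbiased subset of their data as more tasks arrive.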

In the following two paragraphs, we discuss two important topics related to the memory system: active forgetting and memory reconsolidation. Active forgetting is a controlled process in the brain. During sleep, the brain weakens less important synaptic connections to free capacity for new learning, a process called synaptic downscaling [52]. In addition, less important memories are identified for elimination through dopamine signaling [53]. Moreover, newly added neurons can replace old memory cells to incorporate new learning [54].

Memory reconsolidation is the process by which a previously stored memory, once recalled, becomes temporarily unstable or malleable. This process depends on synaptic plasticity: retrieval temporarily weakens the memory, allowing it to be updated with new learning [55, 56].

3.3. Neuromodulation

Studies suggest that the human brain is modular. Neuromodulation has been observed to play an important role in learning and adaptation across different environments and contexts, in remembering, augmenting, and refining knowledge [61], and in robustness to noise and adjustment under uncertainty [97]. Sparsity is closely associated with neuromodulation, and sparse coding is widespread in the brain [98]. For example, the hippocampal dentate gyrus uses very sparse representations for pattern separation and knowledge retention [99]. Sparsity also offers robustness, memory capacity, storage efficiency, and computational efficiency [100]. In this context, the explicit inspiration is gating mechanisms in ML (e.g., [62]), which mimic neuromodulatory attention.
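Sparse coding of the kind attributed to the dentate gyrus is often approximated in ML with a k-winners-take-all (k-WTA) activation, which keeps only the k largest activations per example and zeroes the rest. The sketch below is a generic illustration under that assumption; the function name `k_winners` is hypothetical and not taken from the cited studies.

```python
import numpy as np


def k_winners(h: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest activations in each row of h; zero the others (hypothetical helper)."""
    if not 1 <= k <= h.shape[1]:
        raise ValueError("k must be between 1 and the number of units")
    # Indices of the top-k units per row (order within the top-k is irrelevant).
    top = np.argpartition(h, -k, axis=1)[:, -k:]
    mask = np.zeros_like(h, dtype=bool)
    np.put_along_axis(mask, top, True, axis=1)
    return np.where(mask, h, 0.0)


h = np.array([[0.2, 1.5, -0.3, 0.9, 0.1],
              [2.0, 0.0, 0.4, 0.3, 1.1]])
print(k_winners(h, k=2))
```

With k = 2, only two of five units stay active per example, so different inputs tend to recruit different small subsets of units, which reduces overlap (and hence interference) between stored patterns.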

As shown in Table 2, brain functions such as neuromodulation, neurogenesis, and context-dependent learning are closely related to knowledge transfer. Neuromodulation has inspired many studies, especially architecture-based models [62].

3.4. Metaplasticity

Metaplasticity refers to the dependence of synaptic updates on the synapse’s current state, its history, and ongoing neural activity; it also determines the direction, duration, and degree of future synaptic plasticity [16, 101]. Studies suggest that metaplasticity plays various roles in learning in the biological brain, such as controlling the threshold and activation of plasticity, synchronization, and consolidation of learned knowledge [102–104]. Synaptic plasticity is the mechanism by which the brain stores information, and the efficacy of individual synapses can be updated through neural activity [105]. Among subcortical neuromodulatory sources, acetylcholine originates in the substantia innominata and the medial septum, dopamine in the ventral tegmental area and the substantia nigra pars compacta, noradrenaline in the locus coeruleus, and serotonin in the dorsal and medial raphe nuclei [106]. Learning new knowledge can interfere with previous learning and cause forgetting, and limited resources may make this forgetting rapid; the same can occur with biological synaptic weights. The metaplasticity rules that govern learning inspire ANN designs that counter forgetting and address the stability–plasticity dilemma with the available resources. As an explicit inspiration, regularization-based methods penalize changes to important weights, akin to synaptic tagging [107].

In biological metaplasticity, synaptic changes are shaped by dynamic factors like neuromodulator activity patterns such as dopamine bursts aligning with theta brain waves, and behavioral states such as sleep, which can reset plasticity through specific processes [108]. In contrast, artificial methods like elastic weight consolidation (EWC) simplify this complexity by stabilizing model weights using basic importance measures [109]. Unlike these methods, biological synapses adapt flexibly, incorporating multiple influences like sensory inputs, energy constraints, and genetic regulation to fine-tune plasticity.
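For reference, EWC adds a quadratic penalty to the loss of a new task B: L(θ) = L_B(θ) + (λ/2) Σ_i F_i (θ_i − θ*_A,i)², where θ*_A are the parameters learned on an earlier task A and F_i is a diagonal Fisher information estimate of each parameter’s importance. The NumPy sketch below evaluates this penalty and its gradient for a toy logistic-regression model. The model, the use of the empirical Fisher (squared per-example gradients with true labels), and all function names are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def per_example_grads(w, X, y):
    # Gradient of the logistic loss for each example: (p - y) * x.
    p = sigmoid(X @ w)
    return (p - y)[:, None] * X


def diagonal_fisher(w, X, y):
    # Empirical Fisher: mean squared per-example gradient (an assumption;
    # the original EWC samples labels from the model's predictive distribution).
    return np.mean(per_example_grads(w, X, y) ** 2, axis=0)


def ewc_penalty(w, w_old, fisher, lam):
    # Returns (lam / 2) * sum_i F_i (w_i - w_old_i)^2 and its gradient.
    diff = w - w_old
    return 0.5 * lam * np.sum(fisher * diff ** 2), lam * fisher * diff


rng = np.random.default_rng(0)
X_a = rng.standard_normal((200, 3))
X_a[:, 2] = 0.0                              # feature 2 is unused by task A
y_a = (X_a[:, 0] > 0).astype(float)          # task A depends on feature 0
w_old = np.array([1.0, 0.0, 0.0])            # weights after learning task A
F = diagonal_fisher(w_old, X_a, y_a)

lam = 10.0
cost_w0, _ = ewc_penalty(w_old + np.array([1.0, 0.0, 0.0]), w_old, F, lam)
cost_w2, _ = ewc_penalty(w_old + np.array([0.0, 0.0, 1.0]), w_old, F, lam)
print(F)
print(cost_w0, cost_w2)   # moving w2 is free because F[2] == 0
```

During training on task B, the returned gradient would simply be added to the gradient of the task-B loss. This illustrates the simplification noted above: a single scalar importance per weight stands in for the many interacting factors that shape biological metaplasticity.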

3.5. Multisensor Input Learning

Biological organisms take input from multiple sensory modalities, such as vision, touch, and hearing. These input signals may be noisy, distributed, nonlinear, and out of sync [110]. The superior colliculus integrates the signals from multiple senses into a final input that enables coordinated motor functions across different organisms [110]. Initiating movements through a common motor map offers efficiency in handling these signals and responses [111]. Knowledge of how different organisms handle, filter, and process multisensory input provides a lead for task-agnostic methods, a desirable property for achieving CL capability in AI systems [111].

The OB is the cortical area that receives sensory signals from the nose and from other parts of the brain. Neurons that connect directly to brain regions involved in memory, context, and emotion are mainly driven by internal states, behavioral expectations, and the context of learned odors [112]. Low-level sensory tissues and subcortical responses handle the interface with the environment, while the high-level cortical brain selects, tunes, and executes only the necessary plans. This arrangement offers better resource utilization and better learning [113]. These inputs facilitate fast learning of tasks, associating rewards with stimuli, coordinating appropriate motor actions, and modulating responses [114]. As an implicit analogy, task-agnostic ML methods [86] resemble biological multisensory processing but often lack the modularity seen in cortical hierarchies.

3.6. Context-Dependent Learning

Studies suggest that context plays a pivotal role in how the biological brain understands and processes information and forms inferences and strategies, by providing contextual information [64]. Context information plays an important role in forming responses in the olfactory system. Similarly, neurons related to emotion, memory, and context also take contextual information, including internal state and behavior, into account in decision-making. This additional contextual information offers a complete picture and flexibility in learning, decisions, and actions. Context modulation and gating are also involved in selective attention [115]; for example, gain modulation helps encode target trajectories in insect vision. Studies also suggest that some inductive biases are present from birth and play a role in learning from the environment [116]. In the brain, context-dependent learning relies on neuromodulatory states, such as norepinephrine-controlled arousal that dynamically adjusts the importance of sensory inputs; cross-regional oscillatory coupling, in which theta–gamma interactions link related information; and developmental metaplasticity, through which genetic and molecular changes fine-tune the brain’s sensitivity to context [113]. In comparison, ML methods such as task-specific gating can handle context changes in a fixed way but lack the brain’s ability to continuously adapt and scale context sensitivity. Context-dependent learning is achieved in ML through meta-learning and task-specific gating [117]. Some studies also use network pruning to control the size of growing dynamic networks [118].
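Task-specific gating of the kind described above can be sketched with fixed random binary masks, one per context, applied to hidden units so that different tasks use partially non-overlapping subsets of the network. This resembles the context-dependent gating proposed by Masse et al. (2018); whether [117] uses this exact form is not claimed here, and the class name `ContextGate`, the kept fraction, and the layer sizes are illustrative assumptions.

```python
import numpy as np


class ContextGate:
    """Fixed random binary gates over hidden units, one mask per context (hypothetical class)."""

    def __init__(self, n_hidden: int, keep_fraction: float = 0.2, seed: int = 0):
        self.n_hidden = n_hidden
        self.n_keep = max(1, int(round(keep_fraction * n_hidden)))
        self.rng = np.random.default_rng(seed)
        self.masks = {}

    def mask(self, context) -> np.ndarray:
        # Draw a mask the first time a context is seen, then reuse it.
        if context not in self.masks:
            m = np.zeros(self.n_hidden)
            m[self.rng.choice(self.n_hidden, self.n_keep, replace=False)] = 1.0
            self.masks[context] = m
        return self.masks[context]

    def __call__(self, h: np.ndarray, context) -> np.ndarray:
        # Silence hidden units that are not assigned to this context.
        return h * self.mask(context)


gate = ContextGate(n_hidden=100, keep_fraction=0.2)
h = np.ones((2, 100))
a, b = gate(h, "task_a"), gate(h, "task_b")
print(a.sum(axis=1), b.sum(axis=1))          # 20 active units per example
print(int((gate.mask("task_a") * gate.mask("task_b")).sum()))  # units shared by both contexts
```

Because masked units output zero, their incoming and outgoing weights receive no gradient for that context, so training one task mostly leaves the units of other tasks untouched. The masks are fixed once drawn, which is the rigidity contrasted with the brain’s adaptive context sensitivity above; Masse et al. combined such gating with synaptic stabilization.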

4. CL Methods

CL-based methods can be categorized into five types: regularization-based, architecture-based, replay-based, meta-learning-based, and hybrid methods, as shown in Figure 3. These methods are considered biologically inspired because they are rooted in brain functions.

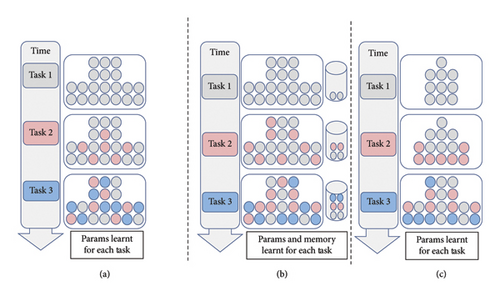

4.1. Architecture-Based Methods

In this approach, the architectural properties and parameters of the model are updated in response to a new task to accommodate the new information, as shown in Figure 4(c). These models are inspired by modularity, a property of biological learning systems whereby brain subsystems are functionally specialized. Although the working principles of neurogenesis and structural plasticity are still topics of active research, the available findings suggest that modular neural networks with plasticity offer an effective mechanism for knowledge retention and reuse, even in dynamic environments. Architecture-based methods can be further divided into dynamic networks and static networks. In both cases, the existing model is preserved and reused while new learning capacity is added. These approaches are envisaged as a knowledge retention and reuse mechanism, which cannot be achieved by keeping the model’s capacity fixed, especially when learning multiple dynamic tasks. Table 1 lists recent CL-based methods that adopt architecture-based approaches, along with the network type and neural network implementation methodology.

4.1.1. Dynamic Network

The dynamic network may grow for every new task by adding new branches [42], submodels or blocks [91], or new layers or nodes [73]. Some studies suggest that nodes, layers, or parameters may also be pruned in this learning method [138, 139]. Because the newly added parameters, blocks, and branches do not interfere with each other, performance on past tasks is not disturbed by learning new tasks [96]. Various schemes have been proposed for dynamically growing networks. For example, [57] presented a shared backbone model with a prediction head for each new task, training all heads simultaneously; overall performance is calculated as a weighted sum of the results of all heads. Ref. [21] presented a network that grows according to new feature spaces and devised a procedure to compact the enlarged network through a self-activator mechanism. Ref. [58] added a new decoder to the main network for every new class with mixed label uncertainty, and the results are computed from the average probability of perturbed samples. Ref. [59] devised a network comprising two branches, one dedicated to the learned knowledge of old tasks and the other to learning new tasks, with a feature fusion block synchronizing the two. Such methods typically rely on a task-specific oracle and generally follow a multihead configuration [80]. In dynamic networks, hyperparameters are usually assigned manually; however, a few studies have used neural architecture search (NAS) to determine the number of cells and blocks of the network [122]. NAS has also been used to select parameters already learned on old tasks for reuse [140].
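The multihead configuration mentioned above can be sketched as a shared backbone plus one output head per task, with the task identity (the oracle) selecting the head at test time. To stay short, the sketch uses a fixed random-feature backbone and closed-form ridge-regression heads; these simplifications and the names `MultiHeadModel`, `add_task`, and `predict` are illustrative assumptions rather than the design of [57] or [59].

```python
import numpy as np


class MultiHeadModel:
    """Shared (frozen) feature extractor with one linear head per task (hypothetical class)."""

    def __init__(self, d_in: int, d_feat: int = 64, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Shared backbone: a fixed random projection followed by tanh.
        self.W = rng.standard_normal((d_in, d_feat)) / np.sqrt(d_in)
        self.heads = {}

    def features(self, X: np.ndarray) -> np.ndarray:
        return np.tanh(X @ self.W)

    def add_task(self, task_id, X: np.ndarray, Y: np.ndarray, ridge: float = 1e-3):
        # Grow the model with a new ridge-regression head; existing heads are
        # never modified, so predictions for earlier tasks cannot change.
        H = self.features(X)
        A = H.T @ H + ridge * np.eye(H.shape[1])
        self.heads[task_id] = np.linalg.solve(A, H.T @ Y)

    def predict(self, X: np.ndarray, task_id) -> np.ndarray:
        # The task identity (oracle) selects which head to use.
        return self.features(X) @ self.heads[task_id]


rng = np.random.default_rng(1)
X1, X2 = rng.standard_normal((100, 5)), rng.standard_normal((100, 5))
Y1, Y2 = X1[:, :1], -X2[:, 1:2]              # two different toy regression tasks

model = MultiHeadModel(d_in=5)
model.add_task("t1", X1, Y1)
before = model.predict(X1, "t1")
model.add_task("t2", X2, Y2)
after = model.predict(X1, "t1")
print(np.array_equal(before, after))         # True: task 1 is unaffected
print(sorted(model.heads))                   # ['t1', 't2']
```

The sketch also exposes the limitation discussed in Section 4.1.3: `predict` cannot be called without a task identity, which is exactly the task-control mechanism these methods need at test time.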

Among dynamic networks, a few studies have used self-organizing incremental neural networks (SOINNs). Such networks map the probability distribution of the input data to the network structure, growing that structure through competitive learning [132]. However, to prepare the network for new tasks, filters or layers are deleted according to user-defined fixed patterns, which results in abrupt changes to the network structure [132]. In addition, forming a suitable network structure is a major challenge [141]. Recent studies try to address these issues by optimizing the network-updating process for unsupervised learning [132, 141]. SOINN has also been extended to supervised learning by using virtual nodes that carry label information [142].
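The core growth rule of SOINN-like networks can be illustrated with a much simplified competitive-learning loop: each input is assigned to its nearest node, a new node is inserted when the input lies farther than a similarity threshold, and otherwise the winning node moves toward the input. This omits SOINN’s adaptive thresholds, edges, node deletion, and two-layer structure, so it is an illustrative assumption rather than the algorithm of [132], [141], or [142]; the function name `grow_nodes` is hypothetical.

```python
import numpy as np


def grow_nodes(X: np.ndarray, threshold: float, lr: float = 0.1) -> np.ndarray:
    """Simplified competitive learning that adds nodes for novel inputs (hypothetical helper)."""
    nodes = [X[0].copy()]                      # start with one node
    for x in X[1:]:
        dists = [np.linalg.norm(x - n) for n in nodes]
        winner = int(np.argmin(dists))
        if dists[winner] > threshold:
            nodes.append(x.copy())             # novel input: grow the network
        else:
            nodes[winner] += lr * (x - nodes[winner])   # adapt the winning node
    return np.array(nodes)


rng = np.random.default_rng(0)
# Two well-separated clusters arriving one after another (two "tasks").
task1 = rng.normal(loc=0.0, scale=0.1, size=(200, 2))
task2 = rng.normal(loc=5.0, scale=0.1, size=(200, 2))
nodes = grow_nodes(np.vstack([task1, task2]), threshold=1.0)
print(len(nodes))
```

The fixed `threshold` plays the role of the user-defined setting criticized above: too small and the network grows excessively, too large and distinct tasks collapse onto the same nodes.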

In dynamic network-based methods, the network may keep growing as new parameters, blocks, or branches are added. In recent years, several methods have been adopted to control and compact the growing network. In a growing network, a large number of old neurons dominate the network, which limits its learning capacity [140]. The first technique, network pruning or quantization, controls the network size while preserving what has been learned [118]. The second technique is weight rectification, which adapts the model to new tasks while limiting the network size [143]; it has proved effective even in zero-shot scenarios and for data without task identification. The third technique prunes and compacts the model to learn multiple tasks based on variational Bayesian approximation [144], using sparsity-inducing priors to compact the hidden units; it can also be implemented through sparse storage and constructive algorithms. The fourth technique uses a power law to grow sparse networks through preferential attachment, a topology-driven method, and node self-activation [145]. As an additional benefit, these methods also alleviate negative FWT and overfitting by limiting parameter transfer [133].
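Of the compaction techniques listed above, pruning is the simplest to illustrate. The sketch below applies global magnitude pruning: it zeroes the smallest-magnitude fraction of all weights and returns binary masks that could be reused to keep pruned weights at zero during later training. Magnitude as the importance criterion and the name `magnitude_prune` are assumptions for illustration; [118] and the other cited methods may use different criteria.

```python
import numpy as np


def magnitude_prune(weights: dict, sparsity: float):
    """Zero the smallest-|w| fraction of all weights (global magnitude pruning).

    Hypothetical helper. Returns pruned copies of the weights and boolean
    masks (True = kept).
    """
    all_mags = np.concatenate([np.abs(w).ravel() for w in weights.values()])
    k = int(sparsity * all_mags.size)          # number of weights to remove
    if k == 0:
        masks = {n: np.ones_like(w, dtype=bool) for n, w in weights.items()}
    else:
        # The k-th smallest magnitude; everything at or below it is removed.
        threshold = np.partition(all_mags, k - 1)[k - 1]
        masks = {n: np.abs(w) > threshold for n, w in weights.items()}
    pruned = {n: w * masks[n] for n, w in weights.items()}
    return pruned, masks


rng = np.random.default_rng(0)
weights = {"layer1": rng.standard_normal((64, 32)),
           "layer2": rng.standard_normal((32, 10))}
pruned, masks = magnitude_prune(weights, sparsity=0.8)
kept = sum(int(m.sum()) for m in masks.values())
total = sum(m.size for m in masks.values())
print(f"kept {kept} of {total} weights")
```

Pruning globally rather than per layer lets layers with many unimportant weights shrink more; the freed weights could then be reassigned to new tasks instead of adding fresh parameters.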

4.1.2. Static Network

In static network–based methods, a fixed block is generally allocated for each task, with the option to mask out the blocks dedicated to old tasks while learning new tasks [138]. To achieve this, some studies devised gating mechanisms. For example, [146] used gating to govern the selection of filters for training new tasks, and [147] used gating that calculates the dependence between previously stored knowledge and new knowledge.
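Masking out parameters dedicated to old tasks in a fixed-size network can be sketched with an ownership array: each weight is assigned to at most one task, gradient updates are blocked for weights owned by earlier tasks, and at inference a task uses only the weights owned by itself or earlier tasks. This follows the general idea of PackNet-style parameter isolation; the class and method names and the "claim the largest free weights" rule are illustrative assumptions, not the gating schemes of [146] or [147].

```python
import numpy as np


class OwnedWeights:
    """Fixed-size weight matrix whose entries are claimed task by task (hypothetical class)."""

    FREE = -1

    def __init__(self, shape, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = 0.1 * rng.standard_normal(shape)
        self.owner = np.full(shape, self.FREE)   # -1 = not yet owned

    def masked_update(self, grad: np.ndarray, lr: float = 0.1) -> None:
        # Only free weights may change; weights owned by old tasks stay frozen.
        self.W -= lr * grad * (self.owner == self.FREE)

    def claim(self, task_id: int, fraction: float) -> None:
        # After training a task, it permanently claims the largest free weights.
        free_idx = np.flatnonzero(self.owner == self.FREE)
        n = int(fraction * free_idx.size)
        order = np.argsort(-np.abs(self.W.ravel()[free_idx]))
        np.put(self.owner, free_idx[order[:n]], task_id)

    def weights_for(self, task_id: int) -> np.ndarray:
        # Task t sees weights owned by tasks 0..t; everything else is masked.
        visible = (self.owner != self.FREE) & (self.owner <= task_id)
        return self.W * visible


layer = OwnedWeights((8, 8))
rng = np.random.default_rng(1)
for _ in range(10):                                   # "train" task 0
    layer.masked_update(rng.standard_normal((8, 8)))  # stand-in gradients
layer.claim(0, fraction=0.5)
task0_view = layer.weights_for(0).copy()

for _ in range(10):                                   # "train" task 1
    layer.masked_update(rng.standard_normal((8, 8)))
layer.claim(1, fraction=0.5)

print(np.array_equal(task0_view, layer.weights_for(0)))   # True
print(np.bincount(layer.owner.ravel() + 1))                # [16 32 16]: free, task 0, task 1
```

Each task halves the remaining free capacity in this sketch, which makes the balance problem between old and new tasks noted below visible: later tasks receive progressively fewer trainable weights.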

The main challenge in static networks is balancing learning between old and new tasks [140]. Various schemes have been proposed to retain knowledge by modifying the parameters of static networks. For example, [148] combined spectral attention semantics and coefficient locations with pruning to store knowledge. Ref. [135] used out-of-distribution detection and task masking when learning parameters to alleviate catastrophic forgetting. Ref. [136] presented a semantic-aware convolution filter and normalization technique to train the fixed parameters of the network, whereas [137] proposed learning terms for the fixed parameters at both the network and layer levels: a residual propagation method for sparse convolution at the layer level and an uncertainty variable at the network level.

4.1.3. Limitations of Architecture-Based Methods

Although architecture-based methods can retain learned knowledge, they may reach the limits of their learning capacity and size. Therefore, these methods require an appropriate mechanism to manage the increasing number of parameters [149] and a mechanism to decide which parameters should be used at test time [124]. In addition, most architecture-based methods need a task-controlling mechanism at test time [149]. It has also been observed that as the number of tasks increases, the performance on old tasks gradually decreases [150]. Similarly, dissimilarity between the data of old and new tasks may affect performance, as the model may suffer from gradient conflict [59]. Moreover, for large datasets, learning the distributions needed to form the tree structure may take a large amount of time [151].

4.2. Regularization-Based Methods

Several studies suggest that synaptic plasticity governs the synchronization between previously learned and new knowledge [13]. Regularization-based methods are inspired by this mechanism of the brain [13]. They differ from architecture-based and replay-based methods in that they generally do not require growing memory or networks; instead, they add a regularization term to the loss during the training of new tasks [12], as shown in Figure 4(a).
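As a notational sketch (the symbols below are our own, not taken from the cited studies), the objective for a new task $t$ can be written as

$$\mathcal{L}_t(\theta) = \mathcal{L}_{\text{new}}(\theta; \mathcal{D}_t) + \lambda\, \Omega(\theta, \theta^{*}_{t-1}),$$

where $\mathcal{D}_t$ is the data of the new task, $\theta^{*}_{t-1}$ are the parameters learned up to the previous task, $\Omega$ is the regularization term that discourages changes harmful to old tasks, and $\lambda \ge 0$ trades stability against plasticity. Setting $\lambda = 0$ reduces the objective to plain fine-tuning on the new task.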

Table 3 lists recent CL methods that adopt regularization-based learning. It also highlights the type of additional gradient term and the neural network implementation methodology.

| Regularization | Study | Implementation method | Application |
|---|---|---|---|
| Prior focused | [152] | Incremental hashing loss function. CNN | Image retrieval |
| | [153] | RL. Policy relaxation for new tasks. Weighting for instances of episodic replay | RL |
| | [154] | Regularization in the functional space | Classification |
| | [155] | Random theory and Bayes' rule. Randomized neural networks. A single pass over the data | Classification |
| | [20] | Class-correlation loss function. Low training computation | Classification |
| Data focused | [156] | Regularization of the feature space. For generative models | Classification |
| | [157] | Intertask synaptic mapping (ISYANA) to consider the shared feature space between tasks. Parameter importance matrix | Classification |
| | [158] | Regularizing term modified based on the difference in probability distributions at the target layer, using the Cramer–Wold distance | Classification |
| | [80] | Remember and adjust model behavior. Two loss functions | Classification |
| | [159] | Gradient descent for online learning. Dynamic gradient descent settings | NLP |
| | [160] | Feature map weighting. Restricts modification of critical features | Classification |
| Param focused | [161] | Adaptive uncertainty-based regularization. Three uncertainty factors used to regularize weights. A task-specific residual adaptation block for the network | NLP |
| | [162] | Federated learning with class-aware gradient loss and a semantically related loss. Privacy protected by gradient-based communication | Classification |
| | [163] | Parameter significance–based weight updating | Image deraining |
| | [164] | For embedding networks. Computed semantic drift of features | Classification |
| | [165] | Quadratic gradient based on Hessian approximation. Regularizes BN parameters for continual learning | Classification |

- Note: The first column shows the regularization type. Implementation methodology is shown in the third column.

4.2.1. Prior Focused

Prior-focused methods regularize the gradient updates for new tasks with an emphasis on preventing catastrophic forgetting of previously learned knowledge. In these methods, the model trained on old tasks serves, through the regularization factor, as a prior for learning the new task. The general strategy is to regularize the weights of the model so that they remain relevant to old tasks [156].
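Elastic weight consolidation (EWC) is a well-known prior-focused method and is used here only as a representative example; it is not necessarily the approach of the studies cited in this subsection. EWC approximates the old-task posterior with a Gaussian centered at the old parameters, whose diagonal precision is estimated from squared log-likelihood gradients (the empirical Fisher information). The sketch below assumes the per-sample gradients are already available; the function names are illustrative.

```python
import numpy as np

def diagonal_fisher(per_sample_grads):
    """Empirical diagonal Fisher from per-sample log-likelihood gradients.

    per_sample_grads: (n_samples, n_params) array computed on old-task data.
    """
    return np.mean(per_sample_grads ** 2, axis=0)

def ewc_penalty(theta, theta_old, fisher, lam):
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i) ** 2"""
    return 0.5 * lam * float(np.sum(fisher * (theta - theta_old) ** 2))

def ewc_penalty_grad(theta, theta_old, fisher, lam):
    """Gradient of the penalty, added to the new-task gradient during training."""
    return lam * fisher * (theta - theta_old)
```

Parameters with large Fisher values are pulled back toward their old-task values more strongly, while unimportant parameters remain free to adapt to the new task.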

Some studies weight the parameters of the model according to their distributions and update the task-specific parameters according to these weights and a gating mechanism [13]. However, updating parameters through controlled weighted penalties may fail to optimize the loss function when the number of tasks is large [96]. Various schemes have been proposed for updating the weights of the network. For example, [166] proposed training the network in the null space of past problems; however, a strict null-space projection may impair learning on the current problem. Ref. [167] utilized mode connectivity in the loss term to train the network for multiple tasks, using null-space projection for past problems and SGD for current problems; however, like rehearsal-based methods, it needs to store past samples. Ref. [168] proposed decomposing the gradient into a shared gradient and task-specific gradients. The task-specific gradients are tuned to new tasks while remaining consistent with the shared gradient. The same study [168] further proposed separate gradients for each layer to avoid dissimilarity between layers and the dominance of some layers' gradients over others. It was further observed that convergence of the shared gradient helps retain the knowledge of old tasks, whereas convergence of the task-specific gradients may disturb the learning of other tasks [168]. Related methods, such as dropout and modified training regimes, also modify the loss function through a weight selection mechanism [13]. These methods are considered suitable for multitask learning problems [13].
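The null-space idea mentioned for [166] and [167] can be illustrated with a generic sketch (not the cited authors' code): for a linear layer, if weight updates are restricted to the null space of the inputs the layer saw on past tasks, the layer's outputs on those inputs do not change. The sketch assumes the past inputs are stacked in a matrix and uses an SVD to find the null space; the tolerance and function names are illustrative assumptions.

```python
import numpy as np

def null_space_projector(features, tol=1e-10):
    """Projector onto the null space of past-task inputs.

    features: (n_samples, d) inputs seen by this layer on past tasks.
    """
    _, s, vt = np.linalg.svd(features, full_matrices=True)
    rank = int(np.sum(s > tol * s.max()))
    null_basis = vt[rank:].T             # (d, d - rank), orthogonal to every past input
    return null_basis @ null_basis.T     # (d, d)

def project_gradient(grad, projector):
    """Restrict a weight gradient of shape (out, d) to the null space.

    Because projector @ x == 0 for every past input x, an update
    W -= lr * project_gradient(G, P) leaves W @ x unchanged for those x.
    """
    return grad @ projector
```

Practical variants typically relax this to an approximate null space so that enough directions remain for learning the current problem, which reflects the trade-off noted above.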

4.2.2. Data Focused

To address the coherence of embeddings between old and new tasks [169], a few studies use knowledge distillation to retain what has been learned [96]. Knowledge distillation is a compression method that transfers knowledge from one model to another [170]. This is done by keeping a copy of the shared weights and layers of the model trained on previous problems and adding a supplementary loss function for distillation. Within the data-focused paradigm, various schemes have been proposed for updating the network weights. For example, [171] presented weight aligning to regularize the biased weights of the FC layer. The FC layer is targeted based on the assumption that its weights are highly sensitive and biased in CIL, particularly for class-imbalanced data. Generally, CL methods use standard gradient descent with fixed steps per iteration. Such settings are important for cross-task learning; however, keeping these steps predefined may undermine learning and generalization across multiple tasks. Recent studies therefore make the gradient descent settings dynamic at run time, an approach called continual gradient descent [159].
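A minimal NumPy sketch of two ingredients mentioned above, assuming a classifier that outputs logits: a temperature-scaled distillation loss in the style of Hinton et al.'s knowledge distillation, where the frozen old model's softened outputs serve as soft targets, and a weight-aligning step that rescales the FC rows of new classes so that their mean norm matches that of old classes. The exact formulation in [171] may differ; the function names, the temperature T = 2, and the row layout (old classes first) are assumptions.

```python
import numpy as np

def softmax(z, T=1.0):
    z = np.asarray(z, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(new_logits, old_logits, T=2.0, eps=1e-12):
    """KL(p_old || p_new) on temperature-softened outputs, scaled by T**2."""
    p_old = softmax(old_logits, T)
    p_new = softmax(new_logits, T)
    kl = np.sum(p_old * (np.log(p_old + eps) - np.log(p_new + eps)), axis=-1)
    return float(np.mean(kl) * T ** 2)

def weight_align(fc_weight, n_old):
    """Rescale new-class rows of an FC weight matrix to the mean norm of old-class rows."""
    norms = np.linalg.norm(fc_weight, axis=1)
    gamma = norms[:n_old].mean() / norms[n_old:].mean()
    aligned = np.array(fc_weight, dtype=float, copy=True)
    aligned[n_old:] *= gamma
    return aligned
```

The distillation term is added to the usual new-task loss; weight aligning, by contrast, is a post-hoc correction applied to the trained FC layer.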

4.2.3. Param Focused

Generally, three approaches are adopted for incorporating regularization terms into the network. The first introduces the regularization term in the parameter space; most studies fall into this category. The second introduces the regularization term in the functional space [154], regulating the model's predictions through task-specific functions so that they stay aligned with the old tasks. The third applies regularization terms in the shared feature space [156].
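The difference between parameter-space and functional-space regularization can be seen with a linear model (a simplification assumed for illustration). A parameter-space penalty charges for any weight change, whereas a functional-space penalty charges only for changes in predictions on reference inputs from old tasks. A feature-space penalty would be analogous to the functional one but applied to intermediate features. The function names are hypothetical.

```python
import numpy as np

def parameter_space_penalty(theta, theta_old):
    """Penalizes any movement of the weights."""
    return float(np.sum((theta - theta_old) ** 2))

def function_space_penalty(predict, theta, theta_old, X_ref):
    """Penalizes only changes in the model's outputs on old-task reference inputs."""
    return float(np.mean((predict(X_ref, theta) - predict(X_ref, theta_old)) ** 2))

def linear_predict(X, theta):
    return X @ theta

X_ref = np.array([[1.0, 0.0], [2.0, 0.0]])  # reference inputs vary only along feature 0
theta_old = np.array([1.0, 1.0])
theta = np.array([1.0, 3.0])                 # only the weight of feature 1 changed
```

Here moving the second weight from 1 to 3 costs 4.0 in parameter space but nothing in function space, because the predictions on the reference inputs are unchanged; the functional penalty therefore leaves more freedom for learning new tasks.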

4.2.4. Limitations of Regularization-Based Methods

Although regularization-based methods offer little or no forgetting, they have several limitations. First, they are less able to learn new tasks because the learned weights are inclined toward old tasks [128]. In such scenarios, the model may suffer from a gradient conflict problem: the gradients of multiple task objectives are not aligned, so adopting the average gradient can prevent better results [59]. Second, such methods are likely to fail if the model is presented with many tasks or with a very diverse task sequence, because they depend on correct estimation of the loss function. Third, such methods do not consider intertask correlation to exploit shared features; in such cases, even the parameter importance matrix (if used) may explode [157]. To address this, several recent studies formulate the loss function in terms of the cross-correlation among different tasks and classes [20]. Fourth, in few-shot learning scenarios, regularization-based methods may fail because the scarcity of input samples for the new task calls for a high learning rate [129]. Fifth, these methods may not perform well for lifelong knowledge augmentation. Sixth, a few empirical studies suggest that regularization-based methods offer comparatively low accuracy compared with other methods and are a poor proxy for preserving the network output [194].
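Gradient conflict can be illustrated numerically. The sketch below uses two hand-picked task gradients (hypothetical values) and a first-order Taylor estimate of each task's loss change when a gradient-descent step is taken along their average. A negative cosine similarity signals conflict, and in this example the averaged step increases the first task's loss while decreasing the second's.

```python
import numpy as np

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def first_order_loss_change(task_grad, step_dir, lr):
    """Taylor estimate of L(theta - lr * step_dir) - L(theta) for one task."""
    return float(-lr * task_grad @ step_dir)

g1 = np.array([1.0, 0.0])   # hypothetical gradient of task 1
g2 = np.array([-3.0, 1.0])  # hypothetical gradient of task 2
avg = (g1 + g2) / 2         # averaged update direction

conflict = cosine(g1, g2) < 0                   # negative cosine: objectives disagree
delta1 = first_order_loss_change(g1, avg, 0.1)  # positive: task 1 gets worse
delta2 = first_order_loss_change(g2, avg, 0.1)  # negative: task 2 improves
```

Because task 2's gradient is larger and points against task 1's, the average is dominated by task 2, which is exactly the situation in which averaging is disadvantageous.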

4.3. Replay-Based Methods

Memory is the core subsystem of the human brain that retains learning over a lifetime. This retention offers the baseline for knowledge reuse and augmentation, and it inspires memory-based mechanisms for knowledge retention and reuse in ML methods to achieve CL [195–198], as shown in Figure 4(b).

Table 4 lists recent CL methods that adopt replay-based learning. It also highlights the replay type and the neural network implementation methodology.

| Replay type | Study | Implementation method | Application |
|---|---|---|---|
| Rehearsal | [172] | Memory augmented. Sparse knowledge replay. GRU | Trajectory prediction |
| | [173] | Cortex and hippocampus mapping, semantic building for memory management | Anomaly detection |
| | [174] | Instance/class-level correlations in knowledge replay | Classification |
| | [175] | Dual memory for samples and statistics of past classes | Classification |
| | [176] | Contrastive learning for feature extraction. Self-distillation to preserve knowledge | Classification |
| | [177] | Reservoir and class balance methods. 3D geometry data saved for future tasks | 3D localization |
| | [178] | Continual learning for noisy data. Self-replay to tackle forgetting. Self-centered filter to clean data | Classification |
| | [179] | Causal inference to identify and remove causal effects. Incremental momentum exclusion method | Classification |
| | [180] | Intertask attention strategy to enhance knowledge. Dual-classifier structure | Classification |
| Pseudo-rehearsal | [181] | Dynamically growing dual memory–based network with memory replay | 3D object recognition |
| | [182] | Task-related knowledge dictionary, complementary-aware latent space based on nonlinear relations | Sentiment analysis |
| | [183] | Two-level parameterized input samples | Classification |
| | [184] | Knowledge extraction through prototype matching. Feature sparsification to optimize memory. Contrastive learning | Segmentation |
| | [185] | Distinguished feature embedding for objects. Few-shot object detection and instance segmentation | Segmentation and detection |
| | [186] | Cross-domain knowledge transfer. Two graph structures to preserve learned knowledge | Person identification |
| | [187] | Multilevel pooling for knowledge and feature relation extraction and storage. Entropy-based pseudolabeling | Segmentation |
| | [188] | Word embedding for semantic knowledge. Mechanism for visual-semantic information arrangement | Classification |
| | [189] | Dreaming memory to protect privacy. Knowledge distillation, contrastive learning | Person identification |
| Generative | [190] | Generative memory. Memory-based data distribution, temporary and long-term | Classification |
| | [191] | Dynamic generative memory. Knowledge retention through a parameter-level attention mechanism | Classification |
| | [192] | Clustering of features. Synthesized and saved features combined for new tasks | Classification |
| | [193] | Semisupervised learning classifier. Conditional data generation, pseudolabel estimation for enhanced learning | Classification |

- Note: The first column shows the replay type. Implementation methodology is shown in the third column.

4.3.1. Rehearsal

Rehearsal (replay-based) techniques require storing a subset of the inputs from previously learned tasks [196]. These stored samples are replayed to recall what was learned when solving future problems []. A similar approach is episodic memory, which uses previously stored samples not only for training but also for making inferences [].

Owing to data diversity and storage limitations, selecting an input subset effectively and efficiently for replay is a challenging problem [199]. Various schemes have been proposed to select, preserve, and retrieve samples effectively and efficiently. For example, [200] proposed saving supplementary low-fidelity samples; Ref. [201] selected samples based on importance and class affiliation; Ref. [202] utilized a weighted k-nearest neighbor rule to select and store memory cells; Ref. [199] combined multiple functions, such as within-class variance and the loss function, to select appropriate samples; Ref. [203] proposed a replay strategy for settings without task boundaries; Ref. [204] proposed an efficient memory update procedure and applied knowledge distillation to cover CIL; Ref. [205] used the linear mode connectivity of the network to store knowledge; Ref. [22] mimicked declarative memory with an instance memory that saves samples and a task memory that saves semantic information; and Ref. [88] proposed preserving inter-instance spatial relations by using knowledge-invariant and spread-out properties.
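Reservoir sampling, listed in Table 4 for [177], is a common way to fill a fixed-size replay memory from a stream: after n items have been seen, each one is retained with probability capacity/n. The sketch below is a minimal version of that technique with hypothetical class and function names; replayed samples are simply appended to the incoming batch.

```python
import random

class ReservoirBuffer:
    """Fixed-capacity replay memory filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)           # memory not yet full: always keep
        else:
            j = self.rng.randrange(self.seen)  # uniform in [0, seen)
            if j < self.capacity:              # keep with probability capacity / seen
                self.items[j] = item

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))

def mixed_batch(new_batch, buffer, k):
    """Incoming samples followed by up to k replayed samples."""
    return list(new_batch) + buffer.sample(k)
```

Reservoir sampling needs no task boundaries, which makes it a natural fit for task-free replay; class-balanced variants instead cap how many slots each class may occupy.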

4.3.2. Pseudo-Rehearsal

Pseudo-rehearsal was introduced to solve the scaling issues of sample selection in rehearsal-based methods [206]. Random inputs drawn from distributions intended to approximate the samples of previous tasks are fed to the model [207]. This technique has proven effective for small networks. However, for large models and huge datasets, such random inputs may not represent the complete input feature space.
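A minimal sketch of pseudo-rehearsal as described above, assuming the old model is available as a callable and that uniform random probes are a reasonable stand-in for old-task inputs (both are assumptions, and the function names are hypothetical). The probes are labeled with the frozen old model's responses and mixed into the new-task training set, so that fitting them encourages the updated model to reproduce the old input-output mapping.

```python
import numpy as np

def make_pseudo_items(old_model, n_items, input_dim, rng, low=-1.0, high=1.0):
    # random probe inputs stand in for the unavailable old-task data
    x = rng.uniform(low, high, size=(n_items, input_dim))
    # the frozen old model's responses become the training targets
    return x, old_model(x)

def build_training_set(x_new, y_new, old_model, n_items, rng):
    x_ps, y_ps = make_pseudo_items(old_model, n_items, x_new.shape[1], rng)
    return np.concatenate([x_new, x_ps]), np.concatenate([y_new, y_ps])
```

The choice of probe distribution is the weak point noted above: in high-dimensional input spaces, uniform probes cover only a small part of the region the old tasks actually occupied.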

Various schemes have been proposed to convert the input space into distributions and vice versa. For example, [17] utilized a code fragment–based knowledge extraction and transfer scheme in LCS. Ref. [208] utilized a Bayesian statistical representation of the latent knowledge space for clustering. Ref. [181] presented a dynamically growing dual-memory network with memory replay of neural activations. Ref. [209] saved the mean and standard deviation of the extracted features and then regenerated features from a Gaussian distribution for replay. Ref. [210] saved pixel affiliation information using self-attention maps obtained from the last layer of a multihead transformer encoder and utilized class-specific region pooling. Ref. [83] randomly selected episodes to generalize the features and introduced a mechanism to generate self-promoted prototypes. Ref. [211] proposed preparing and reserving an embedding space to represent the input classes.
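The Gaussian feature replay attributed to [209] can be sketched as follows, assuming a diagonal Gaussian per class (each feature dimension modeled independently); the class name and its methods are hypothetical. Only a mean vector and a standard-deviation vector are stored per class, and replay features are drawn from them when later tasks are trained.

```python
import numpy as np

class GaussianFeatureMemory:
    """Stores per-class feature statistics instead of raw samples."""

    def __init__(self):
        self.stats = {}  # class label -> (mean vector, std vector)

    def store(self, label, features):
        # features: (n_samples, d) features extracted for one class
        self.stats[label] = (features.mean(axis=0), features.std(axis=0))

    def replay(self, n_per_class, rng):
        xs, ys = [], []
        for label, (mu, sigma) in self.stats.items():
            # diagonal Gaussian: each dimension sampled independently
            xs.append(rng.normal(mu, sigma, size=(n_per_class, mu.shape[0])))
            ys.append(np.full(n_per_class, label))
        return np.concatenate(xs), np.concatenate(ys)
```

Storing 2d numbers per class is far cheaper than storing samples, at the cost of ignoring correlations between feature dimensions.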

4.3.3. Generative

In generative methods, instead of storing input samples to feed to the model when learning future tasks, input data are generated using statistical distributions and generative ML models. These distributions are derived from the input samples of previous tasks [190]. Various schemes have been proposed for generating input samples from different distributions. For example, [212] combined pseudo-rehearsal and generative memory methods for knowledge extraction and transfer; Ref. [202] saved the input samples as statistical distributions and then generated replay samples from them; Ref. [213] proposed generative negative replay; and Ref. [82] utilized an adaptive feature generation mechanism that exploits the diverse knowledge in noisy unlabeled data. However, such approaches have high computational complexity because of the long training time of generative models, particularly for large datasets [213].

4.3.4. Limitations of Replay-Based Methods

First, in rehearsal-based methods, the data are presented sequentially, and the solution tends to remain in the low-loss region of each task. This causes overfitting, which hampers the generalization capability of the model [173]. Second, as the number of tasks increases, forgetting becomes more prominent because of memory and computational constraints and the inevitable overfitting of the model to a particular feature space [96]. Third, in generative learning, the model may take a vast amount of time to learn appropriate distributions of large datasets [151]. Fourth, such methods generally suffer from inadequate generalization ability and are more fragile to noise [214].

Regarding pseudo-rehearsal, first, the approach may suffer from scaling and overfitting problems for complex tasks [192]. Second, it requires an effective and efficient mechanism for encoding, retrieving, and reusing learned knowledge for future tasks [169]. Third, if the encoding scheme is not updated as new data arrive, the model may not learn the new tasks well [215]. Fourth, the embedding space is updated for each new task to accommodate the new labels; however, the model may become confused when learning these new labels [216].

4.4. Meta-Learning–Based Methods

The above-mentioned methods may suffer from inductive biases introduced by the manual setting of parameters. Such biases may cause suboptimal performance owing to differences between the objective and the expected output [117]. Meta-learning offers better solutions in complex and diverse environments where complicated parameter settings are required.

Meta-learning is learning to learn. It is inspired by the ability of the human brain to find new solutions after little learning and experience. Through this learning-to-learn mechanism, the technique tries to learn dynamic settings, mirroring the working of the brain. Meta-learning operates at two levels, i.e., an outer loop and an inner loop. The outer loop controls the inner loops in dynamic scenarios through meta-updates of meta-objectives, and the inner loop handles task-specific fine-tuning on each individual task [217]. Generally, meta-learning methods are implemented through support sets and query sets: the support set is used for fast learning, and the query set is used to learn the adaptation [218].
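The two-loop structure can be illustrated with first-order MAML (FOMAML), a standard simplification of MAML, on toy linear regression tasks. This is a generic sketch, not the method of [217] or [218]: the inner loop adapts a copy of the weights on each task's support set, and the outer loop updates the shared initialization using the query-set gradient evaluated at the adapted weights. The learning rates and function names are assumptions.

```python
import numpy as np

def mse_grad(w, X, y):
    """Gradient of the mean squared error of the linear model X @ w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def fomaml_step(w, tasks, inner_lr=0.1, outer_lr=0.05, inner_steps=1):
    """One outer-loop (meta) update over a batch of tasks.

    Each task is ((X_support, y_support), (X_query, y_query)).
    """
    meta_grad = np.zeros_like(w)
    for (Xs, ys), (Xq, yq) in tasks:
        w_task = w.copy()
        for _ in range(inner_steps):
            # inner loop: fast, task-specific adaptation on the support set
            w_task -= inner_lr * mse_grad(w_task, Xs, ys)
        # first-order approximation: query gradient at the adapted weights
        meta_grad += mse_grad(w_task, Xq, yq)
    # outer loop: meta-update of the shared initialization
    return w - outer_lr * meta_grad / len(tasks)
```

Full MAML would also differentiate through the inner update; the first-order version drops that second-order term, which is cheaper and often works comparably on simple problems.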

Meta-learning methods can be categorized as model-based, optimization-based, and parameter-based methods [219]. Reference [23] presented a meta-learning methodology that uses neural networks to tackle deterministic problems in a CL setting. The method used a coreset as memory and exploited the degree of relationship between global and individual tasks to tackle forgetting. Ref. [219] implemented meta-learning by combining preconditioning matrices to update the gradient, sharing the learned knowledge across multiple tasks to tackle forgetting. Ref. [220] devised an approach in which an attention mechanism empowers the meta-learning algorithm to highlight and acquire nontrivial important features, enabling it to retain knowledge learned from past tasks and reuse it for future tasks. Ref. [117] proposed a two-level regularization based on meta-learning to regulate the network for knowledge retention and utilization across multiple tasks, with an optimization methodology devised for each task. Ref. [221] exploited meta-learning to achieve CL with a vision transformer network, i.e., attention to self-attention: a new mask is trained for each new task and added to the main vision transformer model to achieve learning across multiple tasks. Ref. [222] utilized domain randomization and meta-learning to achieve CL. A meta-learning mechanism regularizes the loss function associated with learning multiple domains, and meta-domains (auxiliary domains) generated by randomized image manipulations are also utilized. Inspired by the slow and fast memory concepts of the human brain, Ref. [218] devised a mechanism of fast and slow weight updates, in which weight updating, feature extraction, and semantic learning are each defined in terms of fast and slow learning.

4.4.1. Limitations of Meta-Learning–Based Methods

Though meta-learning–based solutions are promising, they have a few limitations [223]. First, they are computationally expensive. Second, they require a controlled, well-defined selection of tasks, which may not be available in real-world scenarios. Third, meta-learning–based methods have been observed to overfit to tasks in multitask problems, which limits their generalization capability when deployed in dynamic real-world scenarios with multiple tasks [220]. Fourth, in incremental learning, the model needs to balance stability and plasticity, a capability that most current meta-learning–based methods lack. Fifth, when task-specific feedback mechanisms are used to update the parameters, meta-learning may not perform well on complex datasets [224].

4.5. Hybrid Methods

A few studies suggest that the above-mentioned approaches may fail even on controlled, well-arranged datasets when labels or task IDs are unavailable at test time [135]. In view of these limitations, some studies have proposed hybrid solutions that combine the above-mentioned methods [24, 49, 51]. Recent studies show that hybrid methods offer state-of-the-art performance in avoiding catastrophic forgetting, particularly in CIL scenarios [25]. Such methods may be categorized into the following types.

4.5.1. Replay + Architecture

In these studies, catastrophic forgetting is tackled by combining replay- and architecture-based approaches: a memory stores samples or embeddings, whereas the network is modified to learn new knowledge and maintain consistency across tasks. However, because the semantic information of the weights is incomplete, it is difficult to store knowledge continuously in this way. Hence, a few studies suggest separating the memory module from the task module for better performance [225]. Reference [146] utilized episodic or generative memories to replay saved samples together with a static network in which a gating mechanism governs the selection of filters for training each new task. Ref. [226] proposed a framework consisting of a network, a memory matrix that stores features, and a recall network with a conditional generative adversarial structure that retrieves old concept memories. Based on probability distribution alignment, Ref. [227] managed the replay buffer with corrected offset probabilities; in addition, parameter consistency across multiple tasks is maintained by updating the loss function for a subset of weights. Ref. [228] introduced prompts, which are small sets of learnable parameters. A prompt pool is used for CL across multiple tasks, and the learned knowledge is kept in memory in the form of this prompt pool.
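Prompt-pool methods typically pair each prompt with a learnable key and select the prompts whose keys best match a query feature of the input. The sketch below shows this selection step using cosine similarity, in the style of Learning to Prompt (L2P); whether [228] uses exactly this matching rule is an assumption, and the shapes and function names are hypothetical. The selected prompt tokens are prepended to the input token embeddings.

```python
import numpy as np

def select_prompts(query, keys, prompts, top_k=2):
    """Pick the top_k prompts whose keys are most similar to the query.

    query: (d,) feature of the input; keys: (pool_size, d);
    prompts: (pool_size, prompt_len, d).
    """
    q = query / np.linalg.norm(query)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    scores = k @ q                       # cosine similarity to every key
    idx = np.argsort(-scores)[:top_k]    # best-matching prompts first
    selected = prompts[idx].reshape(-1, prompts.shape[-1])
    return idx, selected

def prepend_prompts(selected_prompts, token_embeddings):
    # (n_prompt_tokens, d) + (n_tokens, d) -> (n_prompt_tokens + n_tokens, d)
    return np.concatenate([selected_prompts, token_embeddings], axis=0)
```

Because only the small prompts and keys are trained while the backbone stays frozen, the pool itself acts as the memory of learned knowledge.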

4.5.2. Replay + Regularization

Instead of simply selecting previous samples from those retained, a few studies [229–231] devised regularization-based strategies for sample selection. Ref. [230] selected the stored samples that are most interfered with by updates from the newly arrived samples. Ref. [12] proposed prototyping the actual data for efficient management of learned knowledge and memory. Because models suffer from low accuracy and weak knowledge transfer across different classifiers in CIL, Ref. [232] presented a method based on a variational autoencoder network for classification and sample generation, together with a contrastive loss function for better knowledge learning and sharing. Ref. [233] proposed a hashing-based network with a gradient-aware memory system. Hashing is achieved in two steps: first, the network learns to hash the semantics, and second, the learned semantics are converted into particular codes.
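The interference-based selection of [230] can be sketched, under simplifying assumptions, as a look-ahead: take a virtual gradient step on the incoming batch and retrieve the stored samples whose loss would increase the most. This follows the general idea of maximally interfered retrieval; the linear model, squared loss, and function names are assumptions made for illustration.

```python
import numpy as np

def per_sample_loss(w, X, y):
    return (X @ w - y) ** 2

def mse_grad(w, X, y):
    return 2.0 * X.T @ (X @ w - y) / len(y)

def select_interfered(w, X_new, y_new, X_mem, y_mem, lr=0.1, k=1):
    """Indices of the k memory samples whose loss rises most after a virtual step."""
    # look-ahead step on the incoming batch only (not applied to the real model)
    w_virtual = w - lr * mse_grad(w, X_new, y_new)
    increase = per_sample_loss(w_virtual, X_mem, y_mem) - per_sample_loss(w, X_mem, y_mem)
    return np.argsort(-increase)[:k]
```

The selected samples are then replayed alongside the new batch in the actual update, so replay capacity is spent on the memories most at risk rather than on random ones.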

To address overfitting and overlap in the embedding/label space, Ref. [216] presented a geometric structure that retains the selected samples together with their geometric relations in the embedding space, using a pretrained model trained with episodic samples. In addition, a contrastive loss function is introduced during training to enhance CL. Ref. [234] utilized a fully spiking network trained by biologically plausible local rules, with a regularization term based on synaptic noise and Langevin dynamics introduced for knowledge extraction, transfer, and utilization.

In recent studies, various researchers have used coresets in conjunction with memory-based methods for selecting and embedding samples [235]. Coresets are generally used for clustering and for unsupervised and supervised learning [229]. Reference [236] used them for sample selection by maximizing sample gradient diversity, combining mini-batch gradient similarity and cross-batch diversity [237]. Reference [240] devised a bilevel optimization mechanism, and [229] proposed a weighted coreset mechanism to select samples from saved knowledge. However, these approaches define mechanisms that are very specific to particular methodologies and cannot be generalized to a broad range of CL methods. In addition, they do not cover other memory replay methods such as pseudo-rehearsal. Moreover, they are computationally expensive.
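As a generic illustration of coreset selection (not the gradient-diversity criterion of [236] or the bilevel method of [240]), greedy k-center selection repeatedly picks the point farthest from those already selected, so that a small subset covers the data. The points could be sample embeddings or per-sample gradients; that choice, and the function name, are assumptions.

```python
import numpy as np

def k_center_greedy(points, k, first=0):
    """Greedily select k indices so that every point is close to some selected one."""
    selected = [first]
    # distance from every point to its nearest selected point
    dist = np.linalg.norm(points - points[first], axis=1)
    while len(selected) < k:
        nxt = int(np.argmax(dist))  # farthest point from the current selection
        selected.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return selected
```

Each iteration costs one pass over the data, so selecting k samples from n candidates takes O(nk) distance computations, which illustrates why such selection adds noticeable overhead on large memories.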

4.5.3. Architecture + Regularization

To accommodate CL in temporal problems, Ref. [241] utilized attentive recurrent neural networks and two new loss functions for knowledge retention and utilization across multiple problems. Various CL methods suffer from knowledge interference across tasks. To address this issue, Ref. [242] proposed reserving sparse neurons for learning current and past tasks, while the majority of parameters are reserved for learning future tasks. To achieve this, variational Bayesian sparsity priors are utilized in the activation function. In addition, loss-mitigation-based input sampling and replay mechanisms are defined. The proposed method is capable of learning new tasks without explicit task boundaries. To protect data privacy, Ref. [243] proposed replaying sketches of the data instead of the actual data, using a gradient-based consensus mechanism to map the actual data to sketches. However, the applicability of this study is limited, and it comes at a heavy computational cost.

4.5.4. Meta-Learning + Others

CL models may struggle to adapt continuously to unlabeled data in a dynamic environment. To address this limitation, Ref. [243] presented a meta-optimization and data replay scheme to update the network parameters. Ref. [217] proposed a methodology based on dynamic prototype-guided replay of samples for CL in NLP, in which an online meta-learning method uses a prototyping mechanism to select and represent effective samples.

4.5.5. Miscellaneous

The thalamus serves as a filter and relay station that manages sensory input to the cortex. It enhances relevant signals and suppresses background noise. Correspondingly, a thalamus module in a network acts as a learned noise filter that reduces overfitting [244]. In neural networks, controlling the flow of information between layers mimics the thalamic filter, and amplifying key information while suppressing irrelevant information mimics the brain's ability to focus on important information while ignoring distractions. Additionally, top-down feedback is similar to cortical–thalamic loops [245, 246].
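The filtering and top-down feedback described above can be mimicked by a multiplicative gate driven by a context signal. The following is a hypothetical sketch, not the module of [244]: the gate is computed from the context, values above 1 amplify a feature, and values below 1 suppress it. The parameterization 2 * sigmoid(...) is an assumption chosen so that a zero gate input passes features through unchanged.

```python
import numpy as np

def thalamic_gate(h, context, W, b):
    """Gate features h using a top-down context signal (hypothetical sketch).

    h: (n_features,), context: (n_context,), W: (n_context, n_features), b: (n_features,).
    g = 2 * sigmoid(context @ W + b) lies in (0, 2): g > 1 amplifies a
    feature, g < 1 suppresses it, and g = 1 passes it through unchanged.
    """
    g = 2.0 / (1.0 + np.exp(-(context @ W + b)))
    return h * g
```

In a trained network, W and b would be learned so that the context signal (for example, feedback from a higher layer) decides which features to emphasize.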

5. Benchmark Datasets

In this section, we review existing benchmark datasets specially designed for CL. It is pertinent to mention that most of the studies discussed above use standard benchmark datasets that are not specially designed for CL problems. When such datasets are used for CL, they are divided and arranged according to the requirements of the CL setting. However, a few authors have described the conditions that a dataset designed for CL should satisfy. For example, Ref. [247] states that a realistic CL benchmark dataset should offer several views. Ref. [248] advocates the repetition of classes in the dataset. Ref. [249] also emphasized the importance of repeating classes and instances, as these conditions resemble real-world scenarios. A few studies, such as [12], emphasized the need for context to accompany the dataset so that it resembles the real world. The literature shows that most benchmark datasets are designed for classification tasks.

5.1. CORe50

Reference [247] proposed CORe50 as a benchmark dataset for object classification that offers different learning scenarios. It comprises 164,866 images of size 128 × 128 depicting fifty objects arranged in ten classes. It covers two types of environments, indoor and outdoor: eight scenarios are indoor and three are outdoor, and these scenarios mimic different managed contexts. The videos and images vary in lighting, occlusion, pose, and background, and they are captured in such a way that the items appear as if presented to a robot. Overall, the dataset offers context-based new-class as well as new-instance scenarios.

5.2. iCubWorld28

This dataset comprises images of 28 items belonging to seven classes, recorded in four sessions on four different days (days 1–4) under different environments and conditions. It consists of more than 12,000 images.

5.3. Toys200

It comprises 200 unique toy-like objects, which are synthetically generated shapes of children's toys. Each object has multiple views in the dataset.

5.4. CLEAR

Reference [250] presented a dataset of objects, such as cameras and computers, whose shape changed as they evolved over the period 2004–07. The dataset is constructed from the YFCC100M dataset. Its main contribution is that it portrays the evolution of real-world objects over time.

5.5. OpenLORIS

This dataset offers a collection of images taken with an RGB-D camera in different environments. It is a candidate dataset for training and testing CL models in domain incremental scenarios. The environmental conditions include different occlusions, object sizes, noise, and difficulty levels. It is a real-world dataset consisting of 186 instances, 63 classes, and 2,138,050 images [251].

5.6. CLAD-C

It is a benchmark dataset for continual object detection [252], constructed from SODA10M. It comprises an online, continuous data stream spanning three days and nights, in which objects appear every 10 s. The dataset offers data from different domains, with domain shift exhibited through changes in frequency, time, and weather. It also offers multiple views of scenes and objects. To portray real-world scenarios faithfully, the backgrounds of subsequent objects overlap.

5.7. CLAD-D

It is also based on the SODA10M dataset [252] and offers a benchmark for autonomous driving in domain incremental learning scenarios. Different domains are portrayed through different highways, day and night times, weather conditions, and locations inside and outside the city.

5.8. vCLIMB

vCLIMB is a video dataset constructed to evaluate forgetting in class incremental CL scenarios. It offers a sequence of disjoint tasks, with the classes distributed evenly across the tasks [131, 159].
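To make the class-to-task assignment concrete, the following minimal Python sketch partitions a list of class labels into disjoint tasks of (near-)equal size, in the spirit of the vCLIMB description above. The function name `split_classes_evenly` is hypothetical, and taking the classes in the given order (rather than shuffling them first, as a benchmark might) is our assumption, not the benchmark's protocol.

```python
def split_classes_evenly(class_ids, num_tasks):
    """Partition class_ids into num_tasks disjoint tasks of (near-)equal size."""
    if num_tasks <= 0:
        raise ValueError("num_tasks must be positive")
    base, extra = divmod(len(class_ids), num_tasks)
    tasks, start = [], 0
    for t in range(num_tasks):
        # Spread any remainder over the first `extra` tasks, one class each.
        size = base + (1 if t < extra else 0)
        tasks.append(list(class_ids[start:start + size]))
        start += size
    return tasks


tasks = split_classes_evenly(list(range(10)), 5)
print(tasks)  # [[0, 1], [2, 3], [4, 5], [6, 7], [8, 9]]
```

When the number of classes is not divisible by the number of tasks, the first tasks receive one extra class each, so task sizes differ by at most one.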

5.9. Experimental Configurations Used for CL Methods

Various experimental settings for CL are discussed in the literature. These settings mimic the real-world situations and natural conditions the system has to cope with, and each has its own advantages and challenges [255]. Notably, there are no agreed standards or guidelines for formulating experimental configurations or dividing tasks/problems. In the following subsections, we discuss a few widely used experimental configurations reported in the CL literature.

5.9.1. New Instances Scenario

In this setting, new instances of the same classes become progressively available over time, and all classes to be learned are known at the start of the experiment [257]. Let the original task T have the full dataset D, consisting of n records. T is decomposed into four subtasks T1, T2, T3, and T4 with datasets D1, D2, D3, and D4, respectively, where D1 ⊂ D2 ⊂ D3 ⊂ D4. Dataset Di consists of ni records, where n1, …, n4 are randomly selected such that n1 < n2 < n3 < n4 and n4 = n. Similarly, the tasks cover the class sets C1, C2, C3, and C4, respectively, such that C1 ⊆ C2 ⊆ C3 ⊆ C4. For any task, the knowledge learned from all classes and instances of previous tasks is reused.
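The nested construction D1 ⊂ D2 ⊂ D3 ⊂ D4 = D can be sketched in Python as follows. This is an illustration under our own assumptions, not the procedure of [257]: the helper `nested_instance_splits` is hypothetical, the sizes n1 < n2 < n3 are drawn uniformly at random, and class balance within each Di is ignored. Taking prefixes of one random permutation guarantees that each subset contains the previous one.

```python
import random


def nested_instance_splits(records, num_tasks=4, seed=0):
    """Return D1, ..., D_T where each Di is a strict subset of D(i+1) and D_T = D.

    Sizes n1 < n2 < ... < n_T = n are chosen at random (illustrative assumption).
    """
    n = len(records)
    if n < num_tasks:
        raise ValueError("need at least one new record per task")
    rng = random.Random(seed)
    # Draw T-1 distinct cut points in [1, n-1]; the final size is always n.
    sizes = sorted(rng.sample(range(1, n), num_tasks - 1)) + [n]
    order = list(records)
    rng.shuffle(order)  # one fixed random order, so every prefix contains the previous one
    return [order[:k] for k in sizes]


splits = nested_instance_splits(list(range(100)))
print([len(s) for s in splits])  # four strictly increasing sizes ending in 100
```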

5.9.2. New Instances and New Classes Scenarios

In this scenario, new instances of both already known classes and new classes become progressively available in subsequent training sessions or at test time [257]. This scenario is considered more difficult than both the CIL and the new instances scenarios. Let the original task T have the full dataset D, consisting of n records. T is decomposed into four subtasks T1, T2, T3, and T4 with datasets D1, D2, D3, and D4, respectively, where D1 ⊂ D2 ⊂ D3 ⊂ D4. Dataset Di consists of ni records, where n1, …, n4 are randomly selected such that n1 < n2 < n3 < n4 and n4 = n. The level-4 task T4 therefore uses the whole dataset, i.e., D4 = D.

5.9.3. CIL

In this setting, samples from new classes are gradually presented to the model, and the model may face new classes even at test time. In some scenarios, the model has no information about the task id at test time [155]. The model must resolve the confusion between old and new classes caused by class overlap, gradient bias, and class imbalance [80]. It must also handle challenges such as predicting unknown classes without prior knowledge, and learning and enhancing knowledge from only a few instances of each class [151, 188]. To solve a problem in this scenario, the system must identify both the task and the classes belonging to each task.

Let a problem P consist of four tasks T1, T2, T3, and T4 covering the class sets C1, C2, C3, and C4, respectively, such that C1 ⊂ C2 ⊂ C3 ⊂ C4. For any task, the knowledge learned from all classes and instances of previous tasks is reused [258].
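A minimal Python sketch of the growing class sets in this formulation is shown below. The helper `cumulative_class_sets` is hypothetical; it assumes that each task introduces at least one class not seen before, so that each Ci is a strict subset of the next, and that a class is never introduced twice.

```python
def cumulative_class_sets(new_classes_per_task):
    """Given the classes newly introduced by each task, return C1, C2, ... (classes seen so far)."""
    seen, cumulative = set(), []
    for new in new_classes_per_task:
        new = set(new)
        if not new:
            raise ValueError("each task must introduce at least one new class")
        overlap = seen & new
        if overlap:
            raise ValueError(f"classes {sorted(overlap)} were already introduced")
        seen |= new
        # After task t, a CIL model must discriminate among all of these classes.
        cumulative.append(frozenset(seen))
    return cumulative


C = cumulative_class_sets([[0, 1], [2, 3], [4, 5], [6, 7]])
print([sorted(c) for c in C])
```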

5.9.4. Domain Incremental Learning

In the domain incremental scenario, the structure of the problem and the number of classes and instances generally remain the same throughout the training and testing phases, whereas the input data distribution, environment, and context may change [155, 255]. In addition, the system may not know to which task a sample belongs. Some studies use three further configurations for domain incremental learning [18]:

Partial Seen Data Configurations: All images in the dataset D are divided into four levels L1, L2, L3, and L4 with datasets D1, D2, D3, and D4, respectively. D1, D2, and D3 contain 20%, 40%, and 60% of the images from each class, respectively, and D4 = D, such that D1 ⊂ D2 ⊂ D3 ⊂ D4.
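The following minimal Python sketch builds the four partial-seen levels from per-sample class labels. The helper `partial_seen_levels`, the per-class shuffling, and the use of Python's `round` for fractional counts are assumptions made for illustration; [18] may sample differently. Because each class's share at level k is a prefix of the same shuffled list, every level contains the previous one.

```python
import random
from collections import defaultdict


def partial_seen_levels(labels, fractions=(0.2, 0.4, 0.6, 1.0), seed=0):
    """Return sample-index lists for levels L1..L4.

    Level k holds the first fractions[k] of every class (stratified, nested).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    levels = [[] for _ in fractions]
    for idxs in by_class.values():
        rng.shuffle(idxs)  # fixed per-class order, so each level extends the previous one
        for k, frac in enumerate(fractions):
            levels[k].extend(idxs[:round(frac * len(idxs))])
    return levels


labels = [i % 5 for i in range(100)]  # toy data: 5 classes with 20 samples each
print([len(level) for level in partial_seen_levels(labels)])  # [20, 40, 60, 100]
```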

Unseen − Equal Dataset Size Configurations: The classification task T is divided into three subtasks T1, T2, and T3. The dataset D is divided into three equal parts D1, D2, and D3, each containing 33.3% of the images in the dataset, such that Di ∩ Dj = ∅ for all i ≠ j and |D1| = |D2| = |D3|.

Unseen − Increasing Dataset Size Configurations: The classification task T is divided into four subtasks T1, T2, T3, and T4 with datasets D1, D2, D3, and D4, respectively, such that Di ∩ Dj = ∅ for all i ≠ j. Each of D1, D2, and D3 contains 20% of the images from each class, and D4 contains the remaining 40%.
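Both unseen configurations can be illustrated with one hypothetical helper, `disjoint_splits`, which splits every class into pairwise-disjoint parts with the given fractions. This is a sketch under our own assumptions (per-class shuffling, rounding at cumulative cut points, and the last part absorbing any rounding remainder), not the exact protocol of [18].

```python
import random
from collections import defaultdict


def disjoint_splits(labels, fractions, seed=0):
    """Split every class into pairwise-disjoint parts with the given fractions (summing to 1)."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    parts = [[] for _ in fractions]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # Cut points at cumulative fractions; the last part takes whatever remains.
        cuts, acc = [], 0.0
        for frac in fractions[:-1]:
            acc += frac
            cuts.append(round(acc * len(idxs)))
        bounds = [0] + cuts + [len(idxs)]
        for k in range(len(fractions)):
            parts[k].extend(idxs[bounds[k]:bounds[k + 1]])
    return parts


UNSEEN_EQUAL = (1 / 3, 1 / 3, 1 / 3)          # T1..T3, about 33.3% each
UNSEEN_INCREASING = (0.2, 0.2, 0.2, 0.4)      # T1..T4

labels = [i % 5 for i in range(100)]  # toy data: 5 classes with 20 samples each
print([len(p) for p in disjoint_splits(labels, UNSEEN_INCREASING)])  # [20, 20, 20, 40]
```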

5.9.5. Task Incremental Learning (TIL)

In this scenario, the training and testing data may consist of a sequence of disjoint tasks, and the model generally has access to data from only one task at a time. Depending on the setting, the system may or may not have access to the task id [27, 155]. The system is expected to learn a sequence of tasks incrementally without forgetting the previously learned ones. In TIL, the system knows the sequence and arrangement of tasks during both the training and the testing phase [255]; that is, it is always clear to the algorithm, also at test time, which task must be performed. A special case in this context is the single-incremental-task (SIT) scenario [262], in which a single task is incremental in nature. A few studies consider the new instances and new classes scenario to be a special case of the SIT scenario. The SIT scenario is generally considered more challenging than the task incremental scenario [262].
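The practical difference between task-aware (TIL) and task-agnostic (CIL) inference can be sketched as follows: with a task id, only that task's classes compete for the prediction; without it, all classes seen so far do. Restricting predictions to the task's classes is one common way to evaluate TIL and is an assumption here, as are the hypothetical function `predict` and its dictionary-of-classes input.

```python
from typing import Dict, List, Optional, Sequence


def predict(logits: Sequence[float], task_classes: Dict[int, List[int]],
            task_id: Optional[int] = None) -> int:
    """Return the predicted class index.

    With a task id (TIL), only the classes of that task compete;
    without one (CIL), every class seen so far competes.
    """
    if task_id is None:
        candidates = [c for classes in task_classes.values() for c in classes]
    else:
        candidates = task_classes[task_id]
    return max(candidates, key=lambda c: logits[c])


logits = [0.1, 2.0, 0.5, 0.3]
tasks = {0: [0, 1], 1: [2, 3]}
print(predict(logits, tasks))             # 1: task-agnostic, all classes compete
print(predict(logits, tasks, task_id=1))  # 2: task-aware, only classes 2 and 3 compete
```

The same sample can thus be classified correctly in TIL but wrongly in CIL, which is one reason CIL is usually the harder setting.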

6. Benchmark Metrics Used in CL Methods

This section describes the benchmark metrics used to measure the performance of CL methods. A review of the literature shows that CL methods are typically evaluated with metrics specific to the problem area; for example, accuracy is used for classification problems. There is no universally accepted, standardized metric for measuring the performance of models in CL scenarios, although a few metrics have been designed specifically to assess CL methods.

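6.1. Average Accuracy

Average accuracy is commonly computed as the mean test accuracy over all tasks learned so far, evaluated after the model has finished training on the most recent task.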

6.2. FWT

FWT measures how much learning the known tasks speeds up the learning of future tasks [263], i.e., whether the CL system uses knowledge from past tasks to learn a new task. Computing it requires evaluation blocks both before and after each new task is learned for the first time.
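FWT, together with the average accuracy and BWT of Sections 6.1 and 6.3, is commonly computed from an accuracy matrix R, where R[i][j] is the test accuracy on task j after training on task i. The Python sketch below follows the widely used definitions introduced with Gradient Episodic Memory (Lopez-Paz and Ranzato, 2017); the surveyed works, including [263], may use variants. The baseline b[j] (accuracy of the untrained model on task j) and the function name `cl_metrics` are assumptions. The entry R[j-1][j] is the "before" evaluation block that FWT requires.

```python
from typing import Sequence, Tuple


def cl_metrics(R: Sequence[Sequence[float]], b: Sequence[float]) -> Tuple[float, float, float]:
    """Return (average accuracy, BWT, FWT).

    R[i][j]: test accuracy on task j after training on tasks 0..i.
    b[j]: accuracy of the untrained (randomly initialized) model on task j.
    """
    T = len(R)
    if T < 2:
        raise ValueError("BWT and FWT need at least two tasks")
    # Average accuracy of the final model over all T tasks.
    acc = sum(R[T - 1][j] for j in range(T)) / T
    # BWT: final accuracy on each earlier task minus its accuracy right after learning it.
    bwt = sum(R[T - 1][j] - R[j][j] for j in range(T - 1)) / (T - 1)
    # FWT: accuracy on task j just before training on it, relative to the baseline.
    fwt = sum(R[j - 1][j] - b[j] for j in range(1, T)) / (T - 1)
    return acc, bwt, fwt


R = [[0.9, 0.2, 0.1],
     [0.8, 0.9, 0.3],
     [0.7, 0.8, 0.9]]
print(cl_metrics(R, b=[0.1, 0.1, 0.1]))  # approximately (0.8, -0.15, 0.15)
```

A negative BWT signals forgetting, while a positive FWT signals that earlier tasks helped before the new task was trained on.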

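6.3. Average BWT

Average backward transfer (BWT) is commonly computed as the average change in accuracy on each earlier task between the time it was first learned and the end of training; negative values indicate forgetting.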

6.4. Relative Performance (RP)

RP indicates whether the performance of the model on a single task is maintained or improved. It offers insight into whether a system shows an improvement over previous attempts to achieve CL or is simply experiencing CL.

6.5. Cumulative Gain

Cumulative gain records the performance after the completion of each task over the entire problem-solving scenario [264].

6.6. Performance Maintenance (PM)

PM offers a complete picture of how well the model maintains its performance on each task. It is a relative measure: it compares the model's performance when it first learns a task with its performance on subsequent encounters with the same task [131, 265]. It does not measure the absolute performance level but rather the change in performance over the complete lifetime of the system [265].

PM > 0 means that performance on the task improves over the lifetime of the system; PM = 0 means neither forgetting nor further learning; and PM < 0 indicates catastrophic forgetting.
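A simplified, per-task version of PM can be sketched as the mean difference between later evaluations of a task and the performance measured when the task was first learned. This is an assumption for illustration; the formulation in [265] may aggregate evaluations differently. The hypothetical helpers `performance_maintenance` and `interpret` also encode the sign convention stated above, with a small tolerance for floating-point noise.

```python
from typing import Sequence


def performance_maintenance(first_perf: float, later_perfs: Sequence[float]) -> float:
    """Mean change in performance on one task between its first learning and later evaluations."""
    if not later_perfs:
        raise ValueError("PM needs at least one later evaluation of the task")
    return sum(p - first_perf for p in later_perfs) / len(later_perfs)


def interpret(pm: float, tol: float = 1e-9) -> str:
    """Map a PM value to the sign convention: > 0 improving, = 0 unchanged, < 0 forgetting."""
    if pm > tol:
        return "improving"
    if pm < -tol:
        return "forgetting"
    return "no forgetting, no further learning"


pm = performance_maintenance(0.8, [0.7, 0.6])
print(round(pm, 2), interpret(pm))  # -0.15 forgetting
```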

7. Results and Analysis

This section presents the results of various methods on different datasets.

7.1. Image Classification

Tables 5, 6, 7, 8, and 9 show the results of different CL methods for the image classification problem.

Table 5 shows the results on the CIFAR-10 dataset for the CIL and TIL scenarios. Overall, accuracy in the TIL scenario remains higher than in the CIL scenario, because CIL is considered more difficult than TIL. In the CIL scenario, memory replay-based methods achieve higher accuracy than network- and gradient-based methods, whereas architecture-based methods remain at the lower end. In the TIL scenario, static architecture-based methods achieve the highest accuracy, whereas memory replay methods achieve the lowest.

For the CIFAR-100 dataset (Table 6), memory-based methods remain at the higher end in the TIL scenario, whereas a dynamic network method achieves the highest accuracy in the CIL scenario.

Table 7 shows the results on the ImageNet dataset. In the CIL scenario, the gradient-based method achieves the highest accuracy. In the TIL scenario, only dynamic network methods are reported, of which Mazumder et al. achieve the highest accuracy. Table 10 reports the t-statistics and p-values of a statistical t-test on the ImageNet results; these indicate that Du et al. performed better than the other methods.

Table 8 shows the results on the TinyImageNet dataset for the CIL and TIL scenarios. The memory- and gradient-based methods perform at an almost identical, relatively high level. Table 11 reports the t-statistics and p-values of a statistical t-test on the TinyImageNet results; these indicate that Cheraghian et al. performed better than the other methods.

Table 9 shows the results on the MNIST dataset for the CIL and TIL scenarios. Abati et al. outperform the other methods in both scenarios.

Table 5: Results on the CIFAR-10 dataset.

| (a) Class incremental learning | | | (b) Task incremental learning | | |
|---|---|---|---|---|---|
| Method | Technique | Accuracy (%) | Method | Technique | Accuracy (%) |
| Kim et al. 2022 [135] | SN | 88 | Abati et al. 2020 [146] | M + N | 96 |
| Abati et al. 2020 [146] | M + N | 70 | Ji et al. 2021 [201] | MR | 95 |
| Yang et al. 2023 [144] | DN | 68.8 | Kim et al. 2022 [135] | SN | 96 |
| Rosenfeld et al. 2020 [118] | DN | 70.9 | Wang et al. 2022 [253] | MR | 93 |
| Fayek et al. 2020 [133] | DN | 79 | Cha et al. 2021 [176] | MR | 96 |
| Yang et al. 2023 [144] | DN | 56 | | | |
| Wang et al. 2022 [253] | MR | 85.6 | | | |
| Cha et al. 2021 [176] | MR | 95.9 | | | |
| Du et al. 2023 [58] | DN | 87.9 | | | |
| Hong et al. 2022 [254] | MG | 65.4 | | | |
| Ji et al. 2021 [201] | MR | 85.4 | | | |
| Cha et al. 2021 [176] | MR | 84.2 | | | |

- Note: (a) Class incremental scenario and (b) task incremental scenario. M + N = hybrid (memory + network) and MR = replay.

- Abbreviations: DN = dynamic network, MG = memory generative, and SN = static network.

Table 6: Results on the CIFAR-100 dataset.

| (a) Class incremental learning | | | (b) Task incremental learning | | |
|---|---|---|---|---|---|
| Method | Technique | Accuracy (%) | Method | Technique | Accuracy (%) |
| Tao et al. 2020 [129] | DN | 57.5 | Kim et al. 2022 [135] | SN | 96 |
| Liu et al. 2021 [121] | DN | 67.6 | Mazumder et al. 2021 [143] | DN | 69.58 |
| Verma et al. 2021 [130] | DN | 90 | Liu et al. 2022 [137] | GD | 62 |
| Boschini et al. 2023 [204] | MR | 59 | Ji et al. 2021 [201] | MR | 89 |
| Zhuang et al. 2022 [199] | MR | 64 | Wang et al. 2022 [253] | MR | 89 |
| Kim et al. 2022 [135] | SN | 65 | | | |
| Zhu et al. 2022 [83] | SN | 56.8 | | | |
| Fayek et al. 2020 [133] | DN | 78.5 | | | |
| Xu et al. 2022 [170] | DN/RCL | 58.8 | | | |
| Xu et al. 2022 [170] | DN/BOCL | 61.7 | | | |
| Du et al. 2023 [58] | DN | 70.7 | | | |
| Du et al. 2023 [58] | GD | 65 | | | |
| Ji et al. 2021 [201] | MR | 52.3 | | | |
| Wang et al. 2022 [253] | MR | 56.6 | | | |
| Zhuang et al. 2022 [199] | MR | 64 | | | |

- Note: (a) Class incremental scenario and (b) task incremental scenario. M + N = hybrid (memory + network), MR = replay, and GD = gradient data focus.

- Abbreviations: BOCL = biobjective CL, DN = dynamic network, MG = memory generative, RCL = reliable CL, and SN = static network.

Table 7: Results on the ImageNet dataset.

| (a) Class incremental learning | | | (b) Task incremental learning | | |
|---|---|---|---|---|---|
| Method | Technique | Accuracy (%) | Method | Technique | Accuracy (%) |
| Abati et al. 2020 [146] | M + N | 35 | Mazumder et al. 2021 [143] | DN | 98 |
| Liu et al. 2021 [121] | DN | 64 | Du et al. 2023 [58] | DN | 93 |
| Wu et al. 2022 [256] | DN | 69 | | | |
| Zhu et al. 2022 [83] | DN | 68 | | | |
| Liu et al. 2022 [137] | GD | 74 | | | |
| Du et al. 2023 [58] | GP | 85 | | | |

- Note: (a) Class incremental scenario and (b) task incremental scenario. M + N = hybrid (memory + network), MR = replay, and GD = gradient data focus.

- Abbreviations: DN = dynamic network, MG = memory generative, and SN = static network.

Table 8: Results on the TinyImageNet dataset.

| (a) Class incremental learning | | | (b) Task incremental learning | | |
|---|---|---|---|---|---|
| Method | Technique | Accuracy (%) | Method | Technique | Accuracy (%) |
| Verma et al. 2021 [130] | DN | 66.8 | Ji et al. 2021 [201] | MR | 70 |
| Zhu et al. 2022 [83] | DN | 50 | Wang et al. 2022 [253] | MR | 72.8 |
| Cheraghian et al. 2021 [188] | MP | 68 | Cha et al. 2021 [176] | MR | 53.1 |
| Yang et al. 2023 [144] | DN | 63.5 | | | |
| Du et al. 2023 [58] | GP | 55 | | | |
| Ji et al. 2021 [201] | MR | 37.6 | | | |
| Wang et al. 2022 [253] | MR | 41.5 | | | |
| Zhuang et al. 2022 [199] | MR | 56.7 | | | |
| Boschini et al. 2023 [204] | MR | 31.7 | | | |
| Cha et al. 2021 [176] | MR | 20.1 | | | |