MultiResFF-Net: Multilevel Residual Block-Based Lightweight Feature Fused Network With Attention for Gastrointestinal Disease Diagnosis

Abstract

Accurate detection of gastrointestinal (GI) diseases is crucial due to their high prevalence. Screening is often inefficient with existing methods, and the complexity of medical images challenges single-model approaches. Leveraging diverse model features can improve accuracy and simplify detection. In this study, we introduce a novel deep learning model tailored for the diagnosis of GI diseases through the analysis of endoscopy images. This innovative model, named MultiResFF-Net, employs a multilevel residual block-based feature fusion network. The key strategy involves the integration of features from truncated DenseNet121 and MobileNet architectures. This fusion not only optimizes the model’s diagnostic performance but also strategically minimizes complexity and computational demands, making MultiResFF-Net a valuable tool for efficient and accurate disease diagnosis in GI endoscopy images. A pivotal component enhancing the model’s performance is the introduction of the Modified MultiRes-Block (MMRes-Block) and the Convolutional Block Attention Module (CBAM). The MMRes-Block, a customized residual learning component, optimally handles fused features at the endpoint of both models, fostering richer feature sets without escalating parameters. Simultaneously, the CBAM ensures dynamic recalibration of feature maps, emphasizing relevant channels and spatial locations. This dual incorporation significantly reduces overfitting, augments precision, and refines the feature extraction process. Extensive evaluations on three diverse datasets—endoscopic images, GastroVision data, and histopathological images—demonstrate exceptional accuracy of 99.37%, 97.47%, and 99.80%, respectively. Notably, MultiResFF-Net achieves superior efficiency, requiring only 2.22 MFLOPS and 0.47 million parameters, outperforming state-of-the-art models in both accuracy and cost-effectiveness. These results establish MultiResFF-Net as a robust and practical diagnostic tool for GI disease detection.

1. Introduction

Gastrointestinal (GI) diseases constitute a significant global health burden. The rising incidence of GI-related fatalities is primarily attributed to various infectious diseases affecting the digestive system [1]. Gastric cancer (GC) poses a significant health risk, and early detection is essential for improving patient outcomes. The diagnosis of GC is closely tied to the stage of the disease, and early diagnosis and treatment can significantly enhance patient recovery [2]. Several GI abnormalities, such as bleeding, tissue hyperplasia, and polyp formation, are thought to be associated with colorectal cancer (CRC) [3]. The symptoms of these diseases include abdominal pain or discomfort [4], changes in bowel habits [5], bleeding [6], and unexplained weight loss, nausea, and vomiting [7]. The growing number of GI patients can be attributed to a variety of factors, such as demanding work schedules, poor dietary practices, a lack of physical activity, stress, and unhygienic living conditions [8]. Traditional GI disease diagnosis methods include physical examination, medical history assessment, stool testing, endoscopy, and medical imaging. Colonoscopy is typically employed to diagnose GI problems [9]. Endoscopy reveals the digestive system’s lining, while X-rays, CT scans, and MRIs indicate internal GI features. Modern AI-based diagnostic methods may improve accuracy, efficiency, and noninvasiveness. The motivation for GI diagnosis lies in the critical importance of early and accurate detection of diseases affecting the GI tract. Timely diagnosis of conditions such as colorectal cancer, esophagitis, and other GI disorders is crucial for effective treatment and improved patient outcomes. Advances in medical imaging and deep learning techniques offer the potential to enhance the accuracy and efficiency of GI disease diagnosis, driving the exploration of innovative approaches for more reliable and rapid assessments.

Machine learning (ML) has proven effective in analyzing images of the GI tract, facilitating disease diagnosis, treatment planning, and prognosis assessment. ML algorithms can effectively extract and interpret patterns from complex GI images, enabling them to identify subtle abnormalities that may be overlooked by human experts [10, 11]. Deep learning (DL) methods use convolutional neural networks (CNNs) to automatically extract image features [12]. Deep CNNs (DCNNs) have demonstrated substantial advancements in the analysis of medical imaging and across a broad range of computer vision (CV) applications. Transfer learning has revolutionized the application of ML in GI disease analysis by leveraging pretrained models to accelerate the training process and improve performance [13]. By transferring knowledge from large-scale image datasets to GI-specific tasks, transfer learning algorithms can effectively tackle the challenge of limited labeled GI data [14]. Feature fusion (FF) can improve ML algorithms for GI disease detection by integrating data from multiple image modalities or feature extraction methods [15]. Fusion techniques increase the accuracy of GI image interpretation and diagnosis by incorporating complementary details [16]. FF integrates information from multiple sources, such as endoscopy images, patient clinical data, and genetic information, to capture a more comprehensive representation of the disease state, leading to improved diagnostic performance. AI and feature fusion have advanced GI disease detection. However, these approaches remain challenging to implement in clinical practice for several reasons. ML models must be generalizable and interpretable to be robust across varied patient populations and healthcare environments. Data heterogeneity and bias must be addressed to prevent inaccurate diagnostic findings and improve healthcare access. Integrating patient history, demographics, and test results into ML models can improve diagnostic accuracy and personalize therapy recommendations. Real-time performance and continuous evaluation of ML models are also required for widespread clinical adoption. Overcoming such challenges will enable the development of more accurate, reliable, and widely adopted ML-based GI disease detection systems, improving patient outcomes and healthcare efficiency.

In recent years, the field of endoscopy image diagnosis has witnessed significant advancements through the integration of DL techniques. Despite the remarkable capabilities of DCNNs in handling complex classifications, their effectiveness heavily relies on access to substantial amounts of data, even with the inclusion of numerous extraction layers. This dependency poses challenges, as DL models trained on insufficient data may exhibit reduced accuracy compared to traditional ML models that leverage handcrafted features. Moreover, many existing solutions demand high computing resources, making them less practical for real-world applications. This work addresses these challenges by introducing the MultiResFF-Net, a novel and cost-efficient model that aims to simplify the complexities associated with endoscopy image diagnosis. The MultiResFF-Net incorporates feature fusion techniques, model truncation, the innovative Modified MultiRes-Block (MMRes-Block), and the Convolutional Block Attention Module (CBAM) to enhance its overall performance. What sets the MultiResFF-Net apart is its independence from external feature extractors and intricate learning algorithms. Unlike many existing studies, the proposed model operates as a standalone pipeline. The core architecture of the MultiResFF-Net is derived from truncated DenseNet121 and MobileNet, further enriched with specifically designed layers to facilitate accurate performance with limited data and a lightweight structure. The aforementioned proposed layers encompass integrated Auxiliary Layers designed for enhancing layer performance, MMRes-Block facilitating residual learning, and CBAM to enable attentive feature recalibration, emphasizing crucial channels and spatial locations.

1. The proposed MultiResFF-Net exhibits enhanced regularization, reduced parameter costs achieved through layer truncation or compression, and layer-wise fusion. These characteristics aim to generate a robust feature set in a cost-effective manner. The rationale behind these strategies is to extract distinctive features from different state-of-the-art DCNNs, ensuring diversity in the feature spectrum without the necessity of an extensive dataset. This approach provides effective control during the learning process to mitigate overfitting, maintains simplicity and cost-efficiency for improved reproducibility, and facilitates ease of deployment.

2. To improve MultiResFF-Net’s performance while keeping computational demands low, we streamlined the DenseNet121 and MobileNet architectures and integrated them into a unified feature extraction pipeline. This fusion enabled the propagation of strong features from both models, leading to improved performance. The MMRes-Block, a tailored residual learning component in our MultiResFF-Net, contributed to mitigating overfitting by introducing additional layers at the endpoint of fused models, generating a richer set of features. On the other hand, the CBAM dynamically recalibrated feature maps, emphasizing relevant channels and spatial locations, thereby enhancing the model’s capacity to focus on informative regions within the input data. The incorporation of MMRes-Block and CBAM components proved highly beneficial, surpassing the performance of models without these enhancements.

3. To evaluate the effectiveness of MultiResFF-Net, we conducted a thorough evaluation on three publicly available datasets. Remarkably, MultiResFF-Net demonstrated competitive performance with only 2.22 MFLOPS and 0.47 million parameters, resulting in decreased test time and enhanced cost-effectiveness compared to numerous alternative methods.

2. Related Work

In [17], a noninvasive DL technique is used to predict lymph node (LN) metastasis in GC patients before surgery. The research culminates in a nomogram that integrates clinical predictions and the DL approach for the preoperative assessment of LN status. The DL method’s prediction performance was evaluated using computed tomography (CT) data in a test cohort, yielding an area under the curve (AUC) of 0.9803. Moreover, the nomogram demonstrates strong discriminative power, with an AUC of 0.9914 in the test cohort and 0.9978 in the training cohort. The authors of [18] used the HyperKvasir dataset to propose an ensemble architecture and CNN fusion methods for the classification of GI tract disorders. By combining seven distinct CNN models with efficient merging techniques, the study achieved a 0.910 F1-score and a 0.902 Matthews correlation coefficient (MCC), a performance level similar to the previous state-of-the-art model. The study in [19] investigates the feasibility of applying Swarm Learning (SL) to the development of computational pathology-based biomarkers for stomach cancer. The research demonstrates that SL-based molecular biomarkers exhibit a high level of accuracy in predicting microsatellite instability (MSI) and Epstein-Barr virus (EBV) status in GC. This underscores their potential significance in advancing GC diagnostics. Moreover, collaborative training using SL holds promise for further enhancing the performance and generalizability of these biomarkers.
The research in [20] introduces an artificial intelligence methodology grounded in DL that classifies intestinal histological images based on the likelihood of postoperative recurrence in Crohn’s disease (CD) patients. This advancement holds promise for integrating mesenteric adipose tissue histology into predicting postoperative disease recurrence and enriching our comprehension of its underlying mechanisms. Rather than relying solely on conventional histopathological diagnoses, this study harnesses clinical data, specifically the presence or absence of recurrence, to identify distinctive histopathological patterns. By fusing this clinical information with histopathological assessments, artificial intelligence emerges as a valuable tool for the pathological analysis of inflammatory disorders, including inflammatory bowel disease, thereby offering valuable insights for therapeutic strategies. Notably, the model predicts recurrence with an area under the curve (AUC) of 0.995. In [21], a sequential ensemble approach is used to predict the probability of GC occurrence and associated mortality. The authors’ methodology focuses on mitigating issues like overfitting and error amplification through meticulous parameter optimization of the ML techniques employed within the ensemble framework. The authors employed a stacking technique, enabling them to select more effective models for the ensemble. Multiple regression techniques were used to enhance the model’s overall performance, together with a new approach to weighting the ML models used in the ensemble. The approach yielded a statistically significant prediction accuracy of 97.9%, supporting the efficacy of the proposed ensemble methodology.
The authors of [22] proposed a technique in which deep CNNs fine-tuned through transfer learning (TL) serve as feature extractors for detecting GI disorders from wireless capsule endoscopy (WCE) images. This approach achieves an accuracy of 94.8%, surpassing existing computational methods on benchmark datasets. To enhance performance further, ensemble learning is employed to create an integrated classifier tailored for identifying GI disorders. This study showcases the potential of DL-based CV models in efficient GI disease screening. The evidence suggests that CNN-based CV classification methods offer a swift, precise, and efficient means of screening for GI diseases, ultimately contributing to reducing mortality rates associated with GI cancers. The study [13] aimed to develop an automated AI-driven method for diagnosing GI diseases to enhance diagnostic accuracy. This approach relied on CNNs. Several CNN models, including InceptionV3, ResNet50, and VGG16, were trained using the KVASIR benchmark image dataset, which consists of images captured within the GI tract. Among the CNN models, the one using pretrained ResNet50 weights achieved the highest average accuracy in diagnosing GI disorders, with around 99.80% on the training set and 99.50% and 99.16% on the validation and test sets, respectively. The study presented in [23] proposes a vision transformer-based approach with an accuracy of 95.63% for identifying GI disorders using curated colon images from WCE. The vision transformer strategy demonstrates superior performance compared to DenseNet201, a pretrained CNN model, across multiple quantitative performance evaluation metrics. This paper delves into the application of DL techniques in medical diagnosis, showcasing the effectiveness of DL for stomach abnormality classification and neural networks for the precise classification of stomach cancer.
In [24], the authors introduce a multitask network called TransMT-Net, which achieves a classification accuracy of 96.94%. What sets TransMT-Net apart is its ability to tackle segmentation and classification simultaneously when dealing with GI tract endoscopic images. This is particularly valuable because it addresses two challenges faced by CNN models: training on sparsely labeled datasets and distinguishing between similar and ambiguous lesion types. TransMT-Net utilizes the advantages of transformers in learning global characteristics and combines them with the strengths of CNNs in capturing local features. This approach leads to more precise predictions regarding the types of lesions and their respective areas within endoscopic images, ultimately enhancing the model’s overall performance. The study [25] introduces a vision Transformer model designed for classifying images of the digestive tract. This model utilizes hybrid shifting windows to capture short- and long-range dependencies within the images. The model surpasses existing state-of-the-art techniques, achieving a classification accuracy of 95.42% on the Kvasir-v2 dataset and 86.81% on the HyperKvasir dataset. This heightened diagnostic accuracy is attributed to the model’s capability to extract features from both broadly distributed targets and narrow focal regions present in GI endoscopic images. Notably, this study represents a significant advancement in endoscopic image categorization, marking the pioneering use of a vision Transformer model.

3. Materials and Methods

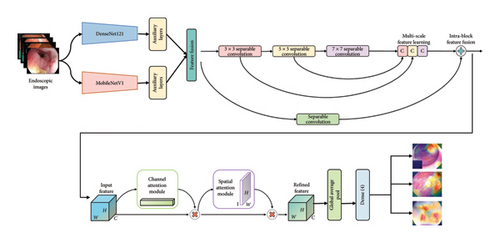

In this paper, we present the MultiResFF-Net as an innovative approach for diagnosing GI diseases. Our method offers a streamlined strategy that capitalizes on robust fused features, striking a balance between computational efficiency and high accuracy. The overall framework of our proposed method is depicted in Figure 1. Our approach leverages transfer learning, utilizing well-established models like DenseNet121 and MobileNet. We optimize these models by truncating blocks and using Integrated Auxiliary Layers to align their outputs to similar dimensions, creating a diverse set of features for the efficient and accurate MultiResFF-Net model. Additionally, we introduce a novel component called MMRes-Block, gaining a significant advantage in terms of efficiency over the conventional MultiRes-Block. To enhance the model further, we incorporate the CBAM block for enhancing the model’s ability to focus on relevant features.

The integration of specific blocks in our proposed MultiResFF-Net model serves distinct purposes, contributing to its overall effectiveness in diagnosing GI diseases. Here are the reasons behind the incorporation of these blocks.

3.1. Transfer Learning With DenseNet121 and MobileNet

Utilizing transfer learning with well-established models like DenseNet121 and MobileNet allows us to benefit from pretrained knowledge on a diverse dataset. This approach helps in capturing generalized features relevant to a wide range of image patterns, laying a strong foundation for our diagnostic model.

3.2. Model Compression by Truncating Blocks and Integrated Auxiliary Layers

Truncating blocks in the transferred models enables us to reduce the overall computational cost of our model while preserving its accuracy. Integrated Auxiliary Layers play a crucial role in reshaping the output dimensions, ensuring compatibility and facilitating the generation of a broader spectrum of distinct features.

3.3. MMRes-Block

The incorporation of the MMRes-Block in our MultiResFF-Net, featuring separable convolutions in lieu of traditional convolutions, significantly enhances computational efficiency without compromising accuracy. This modification streamlines the feature extraction process, ensuring a more lightweight and resource-friendly model for diagnosing GI diseases.

3.4. CBAM

The incorporation of the CBAM is pivotal for enhancing the model’s ability to focus on relevant features. CBAM dynamically recalibrates channel-wise features by considering both spatial and channel attention, thereby improving the model’s discriminative power and diagnostic accuracy.

In summary, each block in the MultiResFF-Net model has been carefully chosen and tailored to address specific challenges associated with computational efficiency, feature extraction, and attention mechanisms, collectively contributing to the model’s efficacy in diagnosing GI diseases.

In the subsequent subsections, we discuss the dataset, the models, and their individual components in detail.

3.5. Dataset Details and Preprocessing

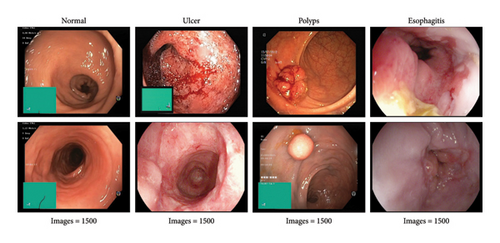

The availability of well-curated endoscopic image datasets has been facilitated by the strong support from large DL communities and related fields. This study utilized a publicly available dataset prepared by [26], consisting of 6000 images categorized into four classes: esophagitis, ulcer, polyps, and normal, with each class containing 1500 images of varying pixel resolutions. Sample images from the dataset are illustrated in Figure 2. To optimize results, preprocessing was applied, resizing each image to 224 × 224 × 3 pixels. For training robust models, a critical step involved the implementation of data shuffling, ensuring that the network encounters diverse patterns during each epoch. This shuffling process enhances the model’s ability to generalize effectively by preventing it from learning patterns based on the sequence of images. Following the shuffling process, the dataset was strategically split into 3200 images for training, 2000 for validation, and 800 for testing, further contributing to the robustness and generalizability of our diagnostic model.
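The shuffle-then-split step described above can be sketched in a few lines. This is a minimal illustration only: the function name `shuffle_and_split`, the fixed seed, and the integer stand-ins for image files are assumptions, and the source does not state whether the split was stratified by class.

```python
import random

def shuffle_and_split(items, n_train, n_val, n_test, seed=0):
    """Shuffle a copy of `items` and cut it into three disjoint subsets."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must sum to the dataset size")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Hypothetical stand-in for the 6000 images (4 classes x 1500 images each).
image_ids = list(range(6000))
train_ids, val_ids, test_ids = shuffle_and_split(image_ids, 3200, 2000, 800)
print(len(train_ids), len(val_ids), len(test_ids))  # 3200 2000 800
```

Because the three slices are taken from one shuffled list, no image can appear in more than one subset.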

3.6. Proposed MultiResFF-Net Construction

- MobileNetV1 [27] is known for its efficiency, utilizing depth-wise separable convolutions to minimize parameters, making it ideal for environments with limited resources. This design choice aims to enhance the overall efficiency of the MultiResFF-Net without compromising diagnostic accuracy.
- DenseNet121 [28] employs densely connected blocks, fostering extensive feature reuse and information flow throughout the network. This architecture enhances the model’s performance by leveraging rich feature representations, contributing to the accuracy of the MultiResFF-Net for GI disease diagnosis.

Recent studies highlight the impressive diagnostic capabilities of individual models. However, relying solely on existing methods may restrict their efficacy in addressing intricate medical imaging tasks. To overcome this limitation, our approach proposes the fusion of these models, aiming to generate a more diverse set of features beyond the capacity of a single model. This augmentation of the feature pool is especially valuable when dealing with limited datasets. Supporting evidence from various studies indicates that incorporating feature fusion in DCNN leads to notable enhancements in medical imaging tasks [29]. Nevertheless, it is crucial to acknowledge that while feature fusion contributes to improved performance, the fusion of indirectly related models with a relatively small dataset can potentially induce overfitting [30]. To address this challenge, our work introduces the concept of the MMRes-Block for residual learning and incorporates the CBAM attention mechanism. This strategic integration serves as a promising solution to mitigate the risk of overfitting and optimize performance, particularly in the context of complex medical imaging tasks.

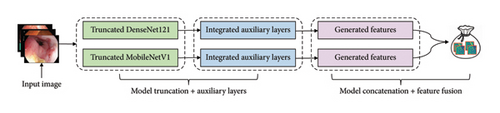

3.6.1. Truncation Method

Before the fusion process to create the MultiResFF-Net, both the DenseNet121 and MobileNet models underwent modifications involving head removal, layer truncation, and freezing different layers for training. The truncation specifically targeted the removal of blocks within the models to compress their size and reduce parameter complexity. Despite these modifications, the fundamental architecture and distinctive features of the models were preserved. This reduction in model complexity is crucial to mitigate the risk of overfitting, particularly when dealing with a limited dataset. Studies have demonstrated that truncated models can perform effectively even with smaller datasets, minimizing overfitting concerns [31]. However, to implement this approach successfully, our proposed method identified a judicious cut-point based on the number of features to retain. This decision aimed to balance preserving essential information while maintaining a concise end-to-end network structure, and ensuring compatibility for subsequent transfer learning.

Table 1 outlines the initial parameters of the selected models, the parameters post-truncation, and the preserved features. For instance, the DenseNet121 model, initially comprising about 7.17 million parameters, was reduced to 218 K after truncation. Similarly, the MobileNet model saw a significant parameter reduction, decreasing from 3.36 million to 137 K. Given the constraints of the limited dataset, adopting the entire model structure would add unnecessary complexity and resource burden. Therefore, our proposed truncation streamlined its structure, removing most layers to reduce parameters and further shorten the end-to-end feature flow.

| Model | Original parameters (M) | Truncated parameters (K) | Features |
|---|---|---|---|
| DenseNet121 | 7.17 | 218 | 256 |
| MobileNet | 3.36 | 137 | 192 |
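To make the scale of the reduction in Table 1 concrete, the relative saving can be computed directly from the reported counts. The helper name `reduction_percent` is illustrative, and the inputs are the rounded values from the table, so the percentages are approximate.

```python
def reduction_percent(original_params, truncated_params):
    """Percentage of parameters removed by truncation."""
    return 100.0 * (1.0 - truncated_params / original_params)

# Rounded parameter counts as reported in Table 1.
table1 = {
    "DenseNet121": (7.17e6, 218e3),
    "MobileNet": (3.36e6, 137e3),
}
for name, (full, truncated) in table1.items():
    print(f"{name}: {reduction_percent(full, truncated):.2f}% fewer parameters")
# DenseNet121: 96.96% fewer parameters
# MobileNet: 95.92% fewer parameters
```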

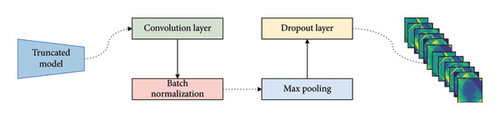

3.6.2. Integrated Auxiliary Layers

As a result of the truncation method, the models became lighter and less complex, at the cost of a significant portion of their feature generation capabilities. The truncation also introduced an obstacle to layer-wise fusion, given the disparate output shapes of the compressed models. To address this issue and enhance the fusion phase, our study employs integrated auxiliary layers. These layers work with the feature maps of the compressed, simplified networks. The design of these auxiliary layers is both simple and effective, as illustrated in Figure 3. This configuration comprises four sequential layers: a convolution layer, batch normalization, max pooling, and a dropout layer.

3.6.2.1. Convolution Layer

This layer applies convolutional operations to the input feature maps, enabling the extraction of higher-level features. It enhances the model’s capacity to capture intricate patterns within the compressed networks.

3.6.2.2. Batch Normalization

Batch normalization normalizes the output of the convolutional layer, promoting stable training by mitigating internal covariate shift. This contributes to faster convergence during the training process.

3.6.2.3. Max Pooling

Max pooling decreases the spatial dimensions of feature maps, preserving key information while lowering the computational burden. This layer aids in preserving relevant features while reducing complexity.

3.6.2.4. Dropout Layer

The dropout layer introduces a regularization mechanism, randomly dropping a fraction of the units during training. This prevents overfitting by promoting the development of a more robust model, particularly beneficial when working with compressed and truncated networks.

Incorporating these auxiliary layers mitigates the challenge posed by uneven output shapes and enhances the efficiency of the fusion phase in our study.

Table 2 outlines the specifications of the integrated auxiliary layers designed for the truncated models. The truncation process resulted in incompatible output dimensions at the established cut-points, which prevents direct fusion. To address this, the proposed auxiliary layers reshape the outputs at the cut-points so that they become identical. The convolutional layer in these auxiliary layers uses 128 filters, a number chosen with cost-effectiveness in mind. By defining specific kernel sizes, strides, pool sizes, and other settings for each model, a uniform output shape of 28 × 28 × 128 was achieved. This standardization ensures compatibility for layer-wise fusion. Furthermore, the auxiliary layers incorporate a dropout layer with a rate of 0.2, which adds regularization for the incoming fused features without affecting the reshaping of each model’s output.

| Model | Shape | Convolution layer | Batch norm | Max pooling | Dropout |
|---|---|---|---|---|---|
| MobileNet | 28 × 28 × 128 | Filters = 128, kernel = 1, padding = 'valid', activation = 'relu', kernel_initializer = 'lecun_normal' | Epsilon = 1e-05, momentum = 0.1, trainable = true, scale = true | Pool size = 1, stride = 1 | 0.2 |
| DenseNet121 | 56 × 56 × 192 | Filters = 128, kernel = 1, padding = 'valid', activation = 'relu', kernel_initializer = 'lecun_normal' | Epsilon = 1e-05, momentum = 0.1, trainable = true, scale = true | Pool size = 2, stride = 2 | 0.2 |
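The shape alignment in Table 2 can be checked with simple arithmetic. The sketch below assumes that the "Shape" column gives the feature-map shape entering the auxiliary layers at each cut-point, and that the convolution and pooling both use 'valid' padding. The function names are illustrative and are not taken from the authors' code.

```python
def window_out(size, window, stride=1):
    """Spatial size after a 'valid' convolution or pooling window."""
    return (size - window) // stride + 1

def auxiliary_output_shape(input_shape, filters, kernel, pool, pool_stride):
    """Output shape of conv -> batch norm -> max pool -> dropout."""
    h, w, _ = input_shape
    h, w = window_out(h, kernel), window_out(w, kernel)  # 1 x 1 convolution
    h, w = window_out(h, pool, pool_stride), window_out(w, pool, pool_stride)  # max pooling
    return (h, w, filters)  # batch norm and dropout leave the shape unchanged

mobilenet_out = auxiliary_output_shape((28, 28, 128), filters=128, kernel=1, pool=1, pool_stride=1)
densenet_out = auxiliary_output_shape((56, 56, 192), filters=128, kernel=1, pool=2, pool_stride=2)
print(mobilenet_out, densenet_out)  # (28, 28, 128) (28, 28, 128)
```

Under these assumptions, only the DenseNet121 branch needs spatial downsampling (pool size 2, stride 2), while the 1 × 1 convolution brings both branches to 128 channels.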

3.6.3. Model Concatenation and Feature Fusion

The truncation of DenseNet121 and MobileNet reduced the network size and therefore the parameter count. However, this reduction limited each model’s ability to recognize the four classes of endoscopy images on its own. While adding more layers could potentially address this issue, it risks undermining the purpose of the truncation method and may distort the feature extraction process. To optimize classification quality, we employed model concatenation and feature fusion through an Add layer, illustrated in Figure 4. This approach expands the range of features across the entire network, striking a balance between model complexity and effective feature extraction. It integrates diverse features from the two models and compensates for the reduced capacity of the individual networks. The advantages include enhanced model expressiveness, improved capability to capture intricate patterns, and more robust classification performance, ensuring effective recognition of the four classes of endoscopy images despite the constraints imposed by the truncated models.
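Read as an element-wise sum, the Add-layer fusion can be written compactly. The symbols below are introduced here for illustration and do not appear in the source: $F_M$ and $F_D$ denote the cut-point feature maps of the truncated MobileNet and DenseNet121, and $A_M$, $A_D$ the corresponding auxiliary layers.

$$
F_{\text{fused}} = A_M(F_M) + A_D(F_D), \qquad A_M(F_M),\; A_D(F_D) \in \mathbb{R}^{28 \times 28 \times 128}
$$

An element-wise sum is only defined when both operands share the same shape, which is why the auxiliary layers must first bring both branches to 28 × 28 × 128.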

3.6.4. MMRes Skip Block

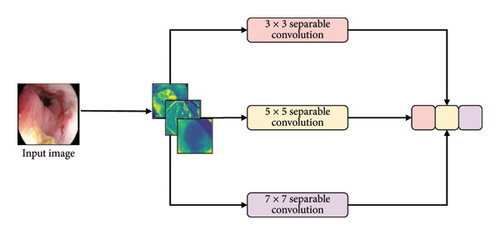

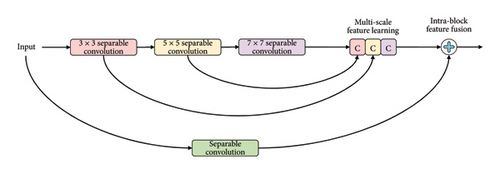

Recognizing the importance of residual learning, this study emphasizes its integration. However, considering the shallow structure of truncated models and the necessity to maintain cost-efficiency, an additional layer and a residual learning component were introduced. Drawing inspiration from the literature’s two-dimensional MultiRes-Block [32], we propose a tailor-fitted residual component termed MMRes-Block to address anticipated challenges. Unlike conventional approaches, the MMRes-Block initiates at the endpoint of both fused models, providing added depth specifically tailored to the diagnostic task. To prevent an escalation of parameters while enhancing feature richness, traditional convolutions within the MultiRes-block were replaced with separable convolution layers. The adoption of separable convolution layers contributes to computational efficiency and reduced parameter complexity. Additionally, a residual connection was introduced for its efficacy in medical image information extraction. The inclusion of 1 × 1 convolutional layers allows the model to capture supplementary spatial information. This configuration, referred to as the MMRes-Block, is depicted in Figure 5, where the number of filters in successive layers gradually increases, complemented by a residual connection and a 1 × 1 filter for dimension preservation.

3.6.4.1. MMRes-Block

The MMRes-Block takes as input the number of filters (U) and the preceding layer (inp). The concept of MMRes-Block involves creating parallel convolutional paths with different kernel sizes (3 × 3, 5 × 5, 7 × 7) to capture features at various scales. The shortcut connection preserves the original input for residual learning. Convolutional layers with different kernel sizes are applied to the input, and their outputs are concatenated along the channel axis (axis = 3). Batch normalization is applied to the concatenated output. The shortcut connection is added to the concatenated output, promoting residual learning. ReLU activation is applied to the final output for nonlinearity.
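The data flow just described can be summarized in one expression. This is a sketch under assumptions rather than the authors' exact definition: $S_k$ denotes a separable convolution with a $k \times k$ kernel, $X$ is the block input (`inp`), and $P$ is assumed to be the 1 × 1 convolution that matches the shortcut's channel count to the concatenated output.

$$
Y = \mathrm{ReLU}\Big(\mathrm{BN}\big(\mathrm{Concat}\left[S_3(X),\, S_5(X),\, S_7(X)\right]\big) + P(X)\Big)
$$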

3.6.4.2. Advantages

- Computational Efficiency: The use of separable convolution layers reduces the number of parameters, making the MMRes-Block computationally efficient.

- Feature Fusion: The MultiRes-Block structure allows the model to capture features at multiple scales, enhancing its ability to extract relevant information from the input.

- Residual Learning: The residual connection aids in mitigating vanishing gradient issues and facilitates the training of deeper networks.

- Spatial Information Preservation: The incorporation of 1 × 1 convolutional layers allows the model to capture additional spatial information.

In summary, the MMRes-Block is designed to introduce depth and richness to the network while maintaining cost-efficiency. It leverages separable convolutions, feature fusion through parallel paths, and residual learning to enhance the overall performance of the model, particularly in extracting information from medical images.
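
To make the parameter saving from separable convolutions concrete, the following minimal sketch counts the weights of a standard convolution and of a depthwise separable convolution (a depthwise k × k filter per input channel followed by a 1 × 1 pointwise convolution). The channel sizes `C_IN` and `C_OUT` are hypothetical values chosen only for illustration; they are not taken from the MultiResFF-Net architecture.

```python
def conv2d_params(k, c_in, c_out, bias=True):
    """Number of weights in a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv2d_params(k, c_in, c_out, bias=True):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise mix."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise + (c_out if bias else 0)


# Hypothetical channel sizes, used only for illustration.
C_IN, C_OUT = 64, 32

for k in (3, 5, 7):
    std = conv2d_params(k, C_IN, C_OUT)
    sep = separable_conv2d_params(k, C_IN, C_OUT)
    print(f"{k}x{k}: standard={std}, separable={sep}, ratio={std / sep:.1f}x")
```

With these illustrative sizes, the 3 × 3 case needs 18,464 weights as a standard convolution and 2,656 as a separable one, and the gap widens for the 5 × 5 and 7 × 7 kernels.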

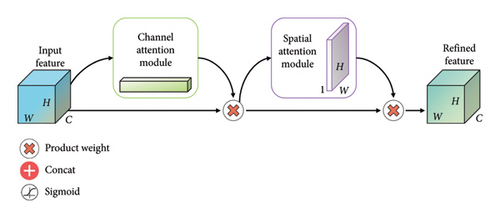

3.6.5. CBAM

The incorporation of the CBAM [33] follows the MMRes-Block in our model, strategically placed to further refine the feature maps. After the MMRes-Block introduces depth and richness to the network, CBAM comes into play to dynamically recalibrate the feature maps. This sequential integration is purposeful as CBAM enhances the model’s ability to focus on informative regions, especially after the MMRes-Block’s feature fusion and residual learning. By emphasizing relevant channels and spatial locations, CBAM optimizes the captured features, aligning with the nuanced patterns often present in medical images. This sequential use ensures a synergistic effect, contributing to the accuracy and precision required for effective GI disease diagnosis.

3.6.5.1. Working of CBAM

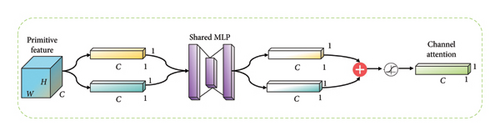

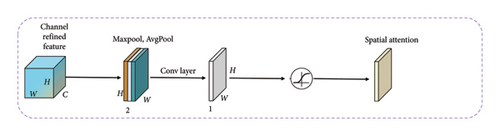

The CBAM module consists of two attention blocks: the Channel Attention Module (CAM) and the Spatial Attention Module (SAM).

3.6.5.2. CAM

The CAM is designed to capture inter-channel dependencies by separately applying global average pooling and max pooling to the input feature maps. The resulting pooled features are then processed through fully connected layers with activation functions to produce channel-wise attention coefficients. The attention coefficients are applied to the original feature maps, highlighting channels that contribute more to the task.
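
The channel attention step can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: it assumes a shared two-layer MLP with a ReLU hidden layer and a sigmoid output, as in the original CBAM formulation [33], and the weights `w1` and `w2`, the reduction to 4 hidden units, and the 7 × 7 × 16 feature map are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """Reweight the channels of feat (H, W, C) using pooled descriptors.

    w1 has shape (C, C_hidden) and w2 has shape (C_hidden, C); both are
    shared between the average-pooled and max-pooled branches (assumption).
    """
    avg = feat.mean(axis=(0, 1))  # global average pooling -> (C,)
    mx = feat.max(axis=(0, 1))    # global max pooling -> (C,)

    def mlp(v):
        return np.maximum(v @ w1, 0.0) @ w2  # dense -> ReLU -> dense

    weights = sigmoid(mlp(avg) + mlp(mx))  # channel coefficients in (0, 1)
    return feat * weights, weights


# Hypothetical feature map and randomly initialised weights.
rng = np.random.default_rng(0)
feat = rng.standard_normal((7, 7, 16))
w1 = rng.standard_normal((16, 4)) * 0.1
w2 = rng.standard_normal((4, 16)) * 0.1
out, weights = channel_attention(feat, w1, w2)
print(out.shape, weights.shape)
```

Each channel of the input is scaled by a single coefficient, so channels with larger coefficients contribute more to the layers that follow.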

3.6.5.3. SAM

The SAM focuses on capturing inter-spatial dependencies by applying 1 × 1 convolutions to generate attention maps for both the maximum and average pooled features. These attention maps are element-wise summed to create a spatial attention map. The spatial attention map is then applied to the original feature maps, emphasizing spatial regions that are more relevant for the given task.
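
The spatial attention step as described above can be sketched in NumPy. Several details are assumptions made for illustration: the pooling is taken along the channel axis, a 1 × 1 convolution on a single-channel map is modelled as a scalar weight (`w_avg`, `w_max`) plus a bias, and a sigmoid squashes the summed map. Note that the original CBAM formulation [33] instead concatenates the two pooled maps and applies a single 7 × 7 convolution.

```python
import numpy as np


def spatial_attention(feat, w_avg=1.0, w_max=1.0, bias=0.0):
    """Reweight the spatial positions of feat (H, W, C).

    w_avg, w_max and bias stand in for the 1 x 1 convolutions applied to the
    pooled maps (hypothetical values; a 1 x 1 convolution on a one-channel
    map is just a scalar multiplication).
    """
    avg_map = feat.mean(axis=-1, keepdims=True)  # (H, W, 1)
    max_map = feat.max(axis=-1, keepdims=True)   # (H, W, 1)
    logits = w_avg * avg_map + w_max * max_map + bias  # element-wise sum
    attn = 1.0 / (1.0 + np.exp(-logits))  # sigmoid (assumption)
    return feat * attn, attn


rng = np.random.default_rng(0)
feat = rng.standard_normal((7, 7, 16))
out, attn = spatial_attention(feat)
print(out.shape, attn.shape)
```

The attention map has one value per spatial position and is broadcast across all channels when it is applied to the feature map.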

3.6.5.4. Integration

The outputs of the CAM and SAM are multiplied element-wise, providing a refined feature map that incorporates both channel and spatial attention.

This integrated attention map is then added to the original input, ensuring that the model benefits from both channel and spatial attention during the subsequent layers of the network.

The CBAM module, by dynamically adjusting feature map weights, directs the model to focus on significant regions, improving its ability to capture intricate details in medical images. This attention mechanism contributes to the overall accuracy and robustness of the proposed MultiResFF-Net for GI disease diagnosis. Figure 6 presents the complete relationship among CBAM, CAM, and SAM, showing how the two attention mechanisms work together to refine feature maps and sharpen the model’s focus on informative regions.

3.7. Hyperparameters Settings

The proposed MultiResFF-Net model is trained as a whole, unlike ensemble approaches, which train each component separately. During training, the model uses a loss function and a set of preconfigured hyperparameters. For the presented results, a batch size of 64, a learning rate of 1e-3, and 20 epochs were employed, together with model checkpointing and early stopping. The choice of hyperparameters was based on prior work and empirical evaluation in deep learning and medical image analysis. Specifically, the batch size and learning rate align with common practice in previous studies [34, 35] on GI disease detection and similar medical imaging tasks. A batch size of 64 is a standard choice for deep learning models, balancing memory usage and training speed. A learning rate of 1e-3 is a moderate value that helps the model converge stably. Training the model for 20 epochs allows adequate time for it to learn the data patterns. Model checkpointing saves the model’s weights at regular intervals, enabling the best-performing model to be restored. Early stopping terminates training when the model’s performance on a validation dataset no longer improves, thereby preventing overfitting. The Adam optimizer was selected for its effectiveness in optimizing deep learning models, particularly those with complex architectures like MultiResFF-Net. Categorical cross-entropy loss is a suitable choice for multi-class classification tasks, as it measures how well the predicted class probabilities match the true labels.
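
The interplay of checkpointing and early stopping can be illustrated with a framework-independent sketch. The monitored quantity (validation loss), the `patience` value, and the loss history below are assumptions for illustration; the paper does not state these settings.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    best_epoch is the epoch whose weights a checkpoint would keep;
    stop_epoch is the epoch at which training halts.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: remember this epoch (checkpoint) and reset the counter.
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical validation-loss history (one value per epoch).
history = [0.90, 0.55, 0.40, 0.42, 0.41, 0.43, 0.39]
best, stopped = early_stopping_epoch(history, patience=3)
print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

In this hypothetical run, training stops after three epochs without improvement and the weights from the best epoch are the ones restored, even though a later epoch would have improved further.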

4. Results and Discussion

4.1. Performance Metrics and Experimental Setup

4.2. Overall Performance of the Proposed Model

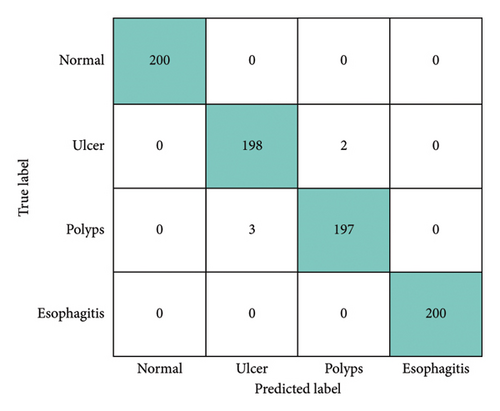

In this section, the performance of the MultiResFF-Net was assessed using the previously provided equations to evaluate its classification effectiveness for each endoscopic image class. To allow a straightforward comparison with other state-of-the-art models, a three-way split into training, validation, and test datasets was employed. A confusion matrix was used to visualize the classification of individual test samples; Figure 7 plots the predictions made by the proposed MultiResFF-Net. The polyps class exhibited the highest misclassification count, with 3 misclassified samples, indicating that this class is the most challenging for the model. Even so, with 795 correct and only 5 incorrect classifications, the MultiResFF-Net achieved an overall accuracy of 99.37% across all four classes. The evaluation metrics computed from the confusion matrix are presented in Table 3. Precision, recall, and F1-score are consistent with the accuracy, indicating robust performance across classes, aided by the use of a balanced dataset. The class-wise breakdown in Table 4 gives a more detailed view of the diagnostic capabilities of the MultiResFF-Net. The “Normal” and “Esophagitis” classes achieve perfect scores in all metrics, while the “Ulcer” and “Polyps” classes score above 98% on every metric.
The slightly lower precision in these two classes reflects a small number of misclassifications, while the high recall values show that the model captures the great majority of relevant instances. Overall, the results underscore the model’s robust diagnostic performance across diverse GI conditions. The MultiResFF-Net achieves these results through a combination of model architecture and training techniques. The integration of the MMRes-Block enhances feature extraction efficiency, while the CBAM dynamically recalibrates feature maps, emphasizing relevant information. Using a curated and balanced dataset supports the model’s robustness across classes. The sequential use of feature fusion and attention mechanisms allows the model to capture nuanced patterns in medical images, leading to high accuracy, precision, recall, and F1-score values.

| Metrics | Scores (%) |
|---|---|
| Accuracy | 99.37 |
| Precision | 99.38 |
| Recall | 99.38 |
| F1-score | 99.37 |

| Class | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| Normal | 100 | 100 | 100 | 100 |
| Ulcer | 99.38 | 98.51 | 99.00 | 98.75 |
| Polyps | 99.38 | 98.99 | 98.50 | 98.75 |
| Esophagitis | 100 | 100 | 100 | 100 |
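
The class-wise scores can be reproduced from a confusion matrix with a few lines of Python. The matrix below is not copied from Figure 7; it is a reconstruction that assumes 200 test images per class (800 in total) and is chosen to be consistent with the reported counts of 795 correct and 5 incorrect classifications. The class order is likewise an assumption.

```python
CLASSES = ["Normal", "Ulcer", "Polyps", "Esophagitis"]

# Rows are true classes, columns are predicted classes.
# Reconstructed from the reported scores (assumption), not the published matrix.
CM = [
    [200, 0, 0, 0],
    [0, 198, 2, 0],
    [0, 3, 197, 0],
    [0, 0, 0, 200],
]


def per_class_metrics(cm, i):
    """One-vs-rest accuracy, precision, recall and F1 for class index i."""
    total = sum(sum(row) for row in cm)
    tp = cm[i][i]
    fn = sum(cm[i]) - tp
    fp = sum(row[i] for row in cm) - tp
    tn = total - tp - fn - fp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


overall_accuracy = sum(CM[i][i] for i in range(len(CM))) / sum(map(sum, CM))
metrics = {name: per_class_metrics(CM, i) for i, name in enumerate(CLASSES)}

print(f"overall accuracy: {overall_accuracy:.5f}")
for name, m in metrics.items():
    print(name, {key: round(100 * value, 2) for key, value in m.items()})
```

Under these assumptions the overall accuracy is 795/800 = 0.99375, which the text reports as 99.37% and the class-wise table rounds to 99.38%.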

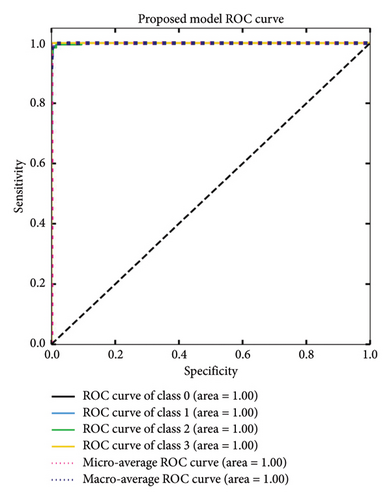

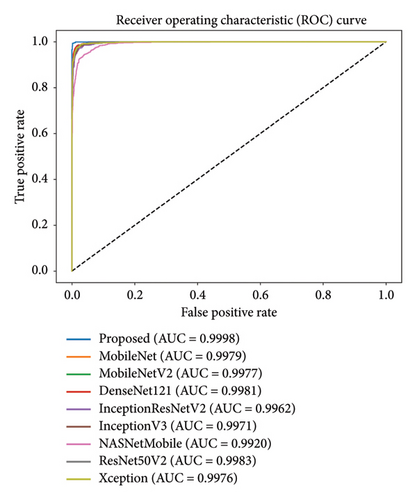

The MultiResFF-Net was also evaluated using sensitivity and specificity tests and the ROC curve shown in Figure 8. The model achieved a sensitivity and specificity of 1.00 for all four classes of endoscopy images, meaning that it correctly identified both diseased and nondiseased cases with a high degree of accuracy. The ROC curve likewise shows a high AUC for all four classes, which suggests that the model is effective at distinguishing between the different classes of endoscopy images. Overall, these results show that the MultiResFF-Net is a promising diagnostic tool for endoscopy images, combining high accuracy, sensitivity, and specificity.

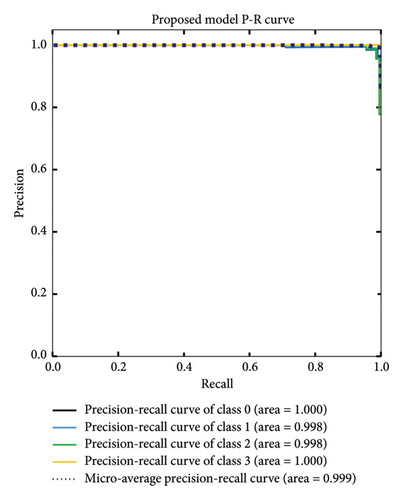

Similar to the ROC curve, the PR curve uses an AUC to evaluate model performance. A lower AUC suggests that the model is more likely to make false predictions. In Figure 9, the AUC for polyp and ulcer diagnosis was 0.998, while the AUC for normal and esophagitis diagnosis was 1.000. The micro-average AUC was 0.999. These results indicate that the model is very good at distinguishing between polyps, ulcers, normal tissue, and esophagitis.

4.3. Grad-CAM and Model Interpretability Analysis

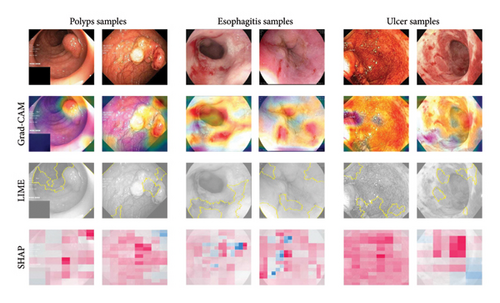

Traditional evaluation metrics provide numerical insights into model performance, but they do not explain how the model arrives at its predictions. To enhance interpretability, we employ Grad-CAM [36], Shapley Additive Explanations (SHAP) [37], and Local Interpretable Model-Agnostic Explanations (LIME) [38] to better understand the decision-making process of MultiResFF-Net.

4.3.1. Grad-CAM Analysis

Grad-CAM examines the gradients of a specific target class in the last convolutional layer of MultiResFF-Net, generating heatmaps that localize feature importance within the input images. Figure 10 presents Grad-CAM visualizations for randomly selected samples, highlighting the regions that contribute most to the model’s diagnostic decisions. These heatmaps demonstrate that the model effectively identifies salient features, such as lesion boundaries and abnormal tissue structures, which are critical for accurate GI disease diagnosis. By emphasizing these regions, MultiResFF-Net enhances transparency and provides clinically interpretable results, helping clinicians understand why specific predictions were made.
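
The core Grad-CAM computation can be sketched in NumPy once the activations of the last convolutional layer and the gradients of the target class score with respect to them are available. Obtaining those gradients requires a deep learning framework and is not shown here; the arrays below are random placeholders, and the 7 × 7 × 8 shape is hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a normalised Grad-CAM heatmap.

    activations, gradients: arrays of shape (H, W, K) for the last
    convolutional layer and the gradients of the class score w.r.t. it.
    """
    # Channel importance: gradients averaged over the spatial dimensions.
    weights = gradients.mean(axis=(0, 1))  # (K,)
    # Weighted sum of feature maps, followed by ReLU.
    cam = np.maximum((activations * weights).sum(axis=-1), 0.0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam  # scale to [0, 1]


# Placeholder inputs standing in for real activations and gradients.
rng = np.random.default_rng(0)
acts = rng.random((7, 7, 8))
grads = rng.standard_normal((7, 7, 8))
heatmap = grad_cam(acts, grads)
print(heatmap.shape)
```

In practice the heatmap is upsampled to the input resolution and overlaid on the endoscopic image.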

4.3.2. SHAP and LIME Explanations

- SHAP: SHAP values quantify the contribution of each feature to the final prediction, offering a global understanding of how the model weighs different characteristics of an image. This helps identify which patterns or pixel intensities are most influential in classification.

- LIME: LIME perturbs input images and observes changes in predictions, generating localized explanations that highlight the most critical regions influencing a single decision. This approach helps validate that the model makes clinically meaningful associations rather than relying on spurious correlations.

Figure 10 illustrates the interpretability analysis, presenting Grad-CAM heatmaps, SHAP feature importance visualizations, and LIME-based explanations for selected samples. Combining these techniques reveals both spatial activation regions (Grad-CAM) and individual feature contributions (SHAP and LIME), which improves the transparency and trustworthiness of MultiResFF-Net’s diagnostic predictions. Additionally, the MMRes-Block and CBAM contribute to the model’s ability to dynamically refine feature representations, allowing it to focus on clinically relevant patterns for more accurate and reliable diagnosis. By integrating multiple interpretability techniques, this study provides a deeper understanding of MultiResFF-Net’s decision-making process, making it more suitable for clinical deployment.

4.4. Ablation Study

To gain deeper insights into the development of the MultiResFF-Net, this study conducts an ablation study. The purpose is to compare different variants of the proposed model with and without specific components. The ablation study encompasses various versions of the MultiResFF-Net model, allowing the assessment of the impact of components such as MMRes-Block, CBAM block, and different activation functions within the MMRes-Block.

4.4.1. Hyperparameter Selection

The hyperparameters of the MultiResFF-Net model were selected by testing different values for batch size, learning rate, number of epochs, and optimizer, as shown in Table 5. A batch size of 64 achieved the highest accuracy of 99.37%, offering the best balance between memory efficiency and model stability. For the learning rate, 1e-3 provided the best result, ensuring effective convergence. A training duration of 20 epochs yielded the best performance, allowing sufficient time for learning without overfitting. Among the optimizers, Adam achieved the highest accuracy, likely due to its adaptive learning rate. The final hyperparameters (batch size 64, learning rate 1e-3, 20 epochs, and the Adam optimizer) resulted in the best performance for the model.

| Hyperparameter | Value | Accuracy (%) |
|---|---|---|
| Batch size | 8 | 99.00 |
| Batch size | 16 | 99.25 |
| Batch size | 32 | 99.12 |
| Batch size | 64 | **99.37** |
| Learning rate | 1e-3 | **99.37** |
| Learning rate | 1e-4 | 98.75 |
| Learning rate | 1e-5 | 98.50 |
| Epochs | 10 | 98.25 |
| Epochs | 20 | **99.37** |
| Epochs | 30 | 98.75 |
| Optimizer | Adam | **99.37** |
| Optimizer | SGD | 98.75 |
| Optimizer | RMSProp | 98.47 |

- Note: Bold values represent the highest values.

4.4.2. Effectiveness of MMRes-Block

This section evaluates the impact of incorporating the MMRes-Block into the MultiResFF-Net. Table 6 compares three configurations: the base model, the base model with the original MultiResBlock, and the base model with the MMRes-Block. The base model achieves an accuracy of 97.12%, which serves as the benchmark for evaluating the subsequent modifications. Adding the MultiResBlock increases accuracy to 98.12%, suggesting that it contributes to enhanced feature extraction and model performance. The largest improvement is observed with the MMRes-Block configuration, which reaches an accuracy of 98.75%. This underscores the effectiveness of the MMRes-Block, particularly its replacement of traditional convolutions with separable convolutions. Separable convolutions decompose the standard convolution operation, leading to improved computational efficiency and fewer model parameters. The modifications in the MMRes-Block therefore strike a balance between computational efficiency and accuracy, allowing the model to capture relevant features while maintaining a streamlined architecture. In conclusion, the ablation study demonstrates that the MMRes-Block, with its separable convolutions, plays a pivotal role in improving the overall performance of the MultiResFF-Net.

| Condition | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| Base model | 97.12 | 97.37 | 97.12 | 97.11 |
| Base model + MultiResBlock | 98.12 | 98.16 | 98.13 | 98.12 |
| Base model + MMRes-Block | 98.75 | 98.76 | 98.75 | 98.75 |

4.4.3. Effectiveness of CBAM Block

This section examines the impact of integrating the CBAM into the MultiResFF-Net; Table 7 compares the configurations with and without it. The model without CBAM achieves an accuracy of 98.75%, which serves as the baseline for assessing the improvement introduced by the CBAM block. Including the CBAM block raises accuracy to 99.37%. CBAM dynamically recalibrates feature maps, emphasizing relevant channels and spatial locations, and the improved performance suggests that this attention mechanism is particularly beneficial for medical image analysis. The consistent improvement across all four metrics underscores the effectiveness of CBAM in refining the model’s attention and decision-making processes.

| Condition | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| MultiResFF-Net without CBAM | 98.75 | 98.76 | 98.75 | 98.75 |
| MultiResFF-Net with CBAM | 99.37 | 99.38 | 99.38 | 99.37 |

4.4.4. Effectiveness of Activation Functions in MMRes-Block

This part of the ablation study investigates the impact of different activation functions within the MMRes-Block; Table 8 compares the resulting MultiResFF-Net configurations. The model with ELU activation achieves an accuracy of 98.00%. SELU improves on this, reaching 98.37%, which may reflect its self-normalizing properties. SWISH performs worst, with an accuracy of 96.75%. ReLU achieves the highest accuracy of 99.37%, outperforming all other activation functions. Despite its simplicity, ReLU promotes sparse activations and mitigates vanishing gradient issues, which likely contributes to its superior performance. The choice of activation function therefore has a noticeable impact on the MMRes-Block’s ability to capture and propagate features. In conclusion, while SELU provides self-normalization, ReLU is the most effective choice for the MMRes-Block in MultiResFF-Net.

| Activation function | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| ELU | 98.00 | 98.03 | 98.00 | 97.99 |
| SELU | 98.37 | 98.38 | 98.37 | 98.37 |
| SWISH | 96.75 | 96.82 | 96.75 | 96.74 |
| ReLU | 99.37 | 99.38 | 99.38 | 99.37 |

4.5. Discussion

The efficiency of MultiResFF-Net is demonstrated by its strong performance with fewer parameters and reduced depth, as evidenced by the evaluation outcomes. To highlight its contributions, this study conducted a comprehensive comparison with state-of-the-art pretrained models used for GI disease diagnosis. All competing models were trained under identical conditions, using the same dataset, hyperparameters, and computational environment to ensure a fair evaluation. Additionally, to maintain consistency across models, each was equipped with a standardized classification head consisting of a GlobalAveragePooling2D layer, a Dense layer with 128 neurons, Batch Normalization, a Swish activation function, and a final Dense layer for classification. Table 9 presents the comparison: the MultiResFF-Net outperformed all models, attaining 99.37% accuracy on the test dataset. The highest-performing traditional DCNN, DenseNet121, achieved an accuracy of 97.12%, so the MultiResFF-Net improves on it by 2.25 percentage points. This suggests that fusing truncated DenseNet121 and MobileNet features with the proposed method leads to enhanced performance, and that the MultiResFF-Net can surpass conventionally trained DCNNs.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| DenseNet121 | 97.12 | 97.12 | 97.13 | 97.12 |
| InceptionResNetV2 | 95.25 | 95.27 | 95.25 | 95.24 |
| InceptionV3 | 95.38 | 95.41 | 95.38 | 95.35 |
| MobileNet | 96.50 | 96.51 | 96.50 | 96.46 |
| MobileNetV2 | 95.50 | 95.56 | 95.50 | 95.50 |
| ResNet50V2 | 96.50 | 96.50 | 96.50 | 96.48 |
| Xception | 96.37 | 96.41 | 96.37 | 96.33 |
| NasNetMobile | 92.50 | 92.57 | 92.50 | 92.35 |
| Proposed MultiResFF-Net | 99.37 | 99.38 | 99.38 | 99.37 |

The ROC curve is presented to compare the performance of the proposed MultiResFF-Net with other models in Figure 11. The ROC curve is a valuable tool for evaluating the model’s ability to discriminate between classes, particularly in medical diagnostic tasks. In this context, it helps assess the trade-off between sensitivity and specificity. The consideration of the ROC curve is essential because it provides a comprehensive visualization of the model’s performance across different thresholds. A higher AUC score indicates superior discriminative ability, and in this case, the MultiResFF-Net exhibits the highest AUC score of 0.9988. This exceptional AUC score signifies that the MultiResFF-Net outperforms other models in distinguishing between positive and negative cases, further highlighting its efficacy in GI disease diagnosis.
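
The AUC of a ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, which gives a simple way to compute it without plotting the curve. The sketch below handles the binary case; applying it per class in a one-vs-rest fashion is an assumption about how the multi-class scores would be obtained, and the labels and scores are made up for illustration.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: iterable of 0/1 ground-truth values.
    scores: predicted scores for the positive class. Ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up example: one positive sample is scored below one negative sample.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.4f}")
```

An AUC of 1.0 means every positive is ranked above every negative, while 0.5 corresponds to random ranking.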

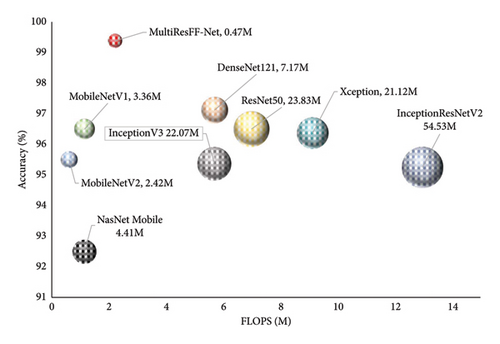

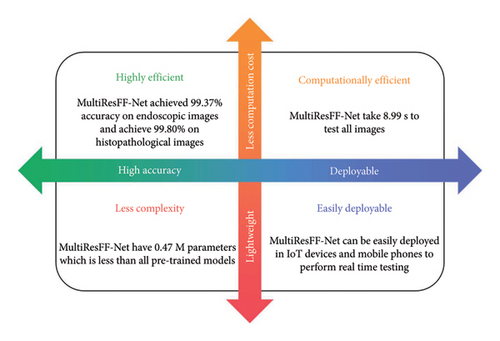

Figure 12 illustrates the trade-offs between accuracy, parameters, and FLOPS for the MultiResFF-Net and the baseline models. Although the proposed model achieved the highest accuracy at 99.37%, it did not achieve the lowest FLOPS. Instead, it excelled in cost-efficiency by achieving the best performance-to-resource ratio, requiring only 2.22 MFLOPS. NasNetMobile exhibited the lowest cost at 1.15 MFLOPS but reached an accuracy of only 92.50%; for a modest increase in MFLOPS, the MultiResFF-Net improves on it by 6.87 percentage points. The MultiResFF-Net also outperformed resource-intensive models such as InceptionResNetV2 and Xception on the test dataset while remaining cost-efficient relative to these larger counterparts, and its parameter count of 0.47 million further emphasizes its efficiency. Inference speed is another crucial metric for assessing the practicality of the trained models. Each model, including the MultiResFF-Net, diagnosed the 800 test samples to measure its inference speed. While results may vary across machines, these outcomes provide insight into the relative speed of each model. Figure 13 shows that the MultiResFF-Net compares favorably with traditional networks, requiring only 8.99 s to process the entire test dataset.
This accelerated inference speed can be attributed to several factors, including the model’s optimized architecture, efficient use of fused features, and the incorporation of lightweight components such as MMRes-Block and CBAM. These design choices contribute to the overall efficiency of the MultiResFF-Net, making it well-suited for practical applications where faster inference times are essential.
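
The reported totals translate into per-image figures with simple arithmetic. The sketch below uses only numbers stated in the text (800 test samples, 8.99 s, 2.22 MFLOPS, and 1.15 MFLOPS for NasNetMobile); it assumes the 8.99 s covers inference alone, which the text does not state explicitly.

```python
N_SAMPLES = 800        # test images, as reported
TOTAL_SECONDS = 8.99   # total inference time, as reported

# Average time per image in milliseconds and images processed per second.
latency_ms = 1000 * TOTAL_SECONDS / N_SAMPLES
throughput = N_SAMPLES / TOTAL_SECONDS

# Relative compute cost of MultiResFF-Net versus NasNetMobile (MFLOPS).
flops_ratio = 2.22 / 1.15

print(f"latency: {latency_ms:.2f} ms per image")
print(f"throughput: {throughput:.1f} images per second")
print(f"FLOPS ratio vs NasNetMobile: {flops_ratio:.2f}x")
```

This works out to roughly 11 ms per image, or about 89 images per second, on the authors' hardware.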

Figure 14 highlights the computational efficiency and performance of MultiResFF-Net in GI disease detection. The model’s lightweight design makes it highly efficient in terms of computational resources, which opens the door to deployment on various devices, including IoT platforms. As a result, MultiResFF-Net holds the potential to perform real-time detection, addressing critical needs in the field of GI disease diagnosis and monitoring. This combination of efficiency and effectiveness positions the model as a promising solution for enhancing healthcare in resource-limited and remote settings, with the following benefits:

- Early Detection: MultiResFF-Net’s high accuracy and efficient real-time detection capabilities enable the early identification of GI diseases. Detecting diseases in their initial stages allows for prompt medical intervention, reducing the risk of complications and improving patient outcomes.

- Resource Efficiency: The lightweight and low-computation nature of MultiResFF-Net makes it suitable for deployment in resource-limited clinical settings, such as rural healthcare facilities and mobile clinics. It minimizes the need for high-end hardware and expensive computational resources, making GI disease screening more accessible to a broader population.

- Improved Decision Support: MultiResFF-Net’s interpretability, aided by techniques like Grad-CAM, provides clinicians with a clearer understanding of how the model arrives at its diagnostic decisions. This transparency can enhance the confidence of healthcare professionals in the model’s recommendations, leading to better-informed clinical decisions and patient care.

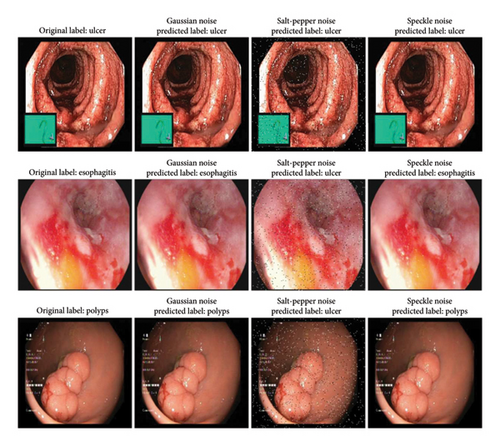

4.6. Model Evaluation and Performance Under Noise Distortions

To assess the robustness of our model, we applied three common noise types—Gaussian noise, salt-and-pepper noise, and speckle noise—to endoscopic images. These noise types simulate real-world distortions encountered in medical imaging due to sensor limitations, transmission errors, and environmental interference. Gaussian noise mimics random variations in intensity caused by sensor inaccuracies, salt-and-pepper noise represents extreme pixel disruptions from transmission errors, and speckle noise replicates granular interference often observed in ultrasound and endoscopic imaging. Evaluating the model under these conditions provides insights into its reliability in practical scenarios. Our model demonstrated strong performance in detecting ulcers, maintaining accuracy even under noise distortions (Figure 15). The distinct ulcer features, such as irregular edges and inflammation, enabled reliable classification across all noise types. However, salt-and-pepper noise posed challenges for esophagitis and polyp detection, leading to misclassification as ulcers. In contrast, the model correctly identified esophagitis and polyps under Gaussian and speckle noise. The misclassification under salt-and-pepper noise stemmed from its disruptive nature, which obscured fine-grained details and edge structures critical for differentiation. These findings highlight the varying impact of noise on medical image classification. While the model exhibits robustness against Gaussian and speckle noise, further improvements in noise-handling techniques and feature extraction are required to mitigate the effects of salt-and-pepper noise for enhanced diagnostic reliability.
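
The three noise types can be generated with NumPy as in the following sketch. The noise strengths (`sigma`, `amount`) and the uniform gray test image are hypothetical; the paper does not report the parameters used in its robustness experiments. Images are assumed to be floating-point arrays scaled to [0, 1].

```python
import numpy as np


def add_gaussian(img, sigma=0.05, rng=None):
    """Additive zero-mean Gaussian noise."""
    rng = np.random.default_rng() if rng is None else rng
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def add_salt_pepper(img, amount=0.05, rng=None):
    """Set a fraction `amount` of pixels to pure black or pure white."""
    rng = np.random.default_rng() if rng is None else rng
    out = img.copy()
    mask = rng.random(img.shape[:2])    # one draw per pixel location
    out[mask < amount / 2] = 0.0        # pepper
    out[mask > 1 - amount / 2] = 1.0    # salt
    return out


def add_speckle(img, sigma=0.1, rng=None):
    """Multiplicative noise: each pixel is perturbed in proportion to its value."""
    rng = np.random.default_rng() if rng is None else rng
    return np.clip(img + img * rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


# Hypothetical uniform gray RGB image standing in for an endoscopic frame.
rng = np.random.default_rng(0)
img = np.full((32, 32, 3), 0.5)
noisy = {
    "gaussian": add_gaussian(img, rng=rng),
    "salt_pepper": add_salt_pepper(img, rng=rng),
    "speckle": add_speckle(img, rng=rng),
}
for name, arr in noisy.items():
    print(name, arr.shape, float(arr.min()), float(arr.max()))
```

Salt-and-pepper noise replaces pixel values outright instead of perturbing them, which is consistent with the observation above that it obscures fine-grained details and edge structures more than the other two noise types.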

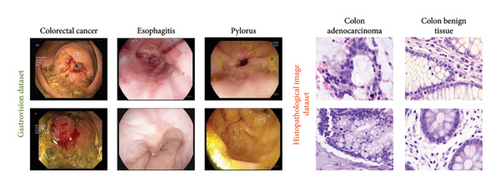

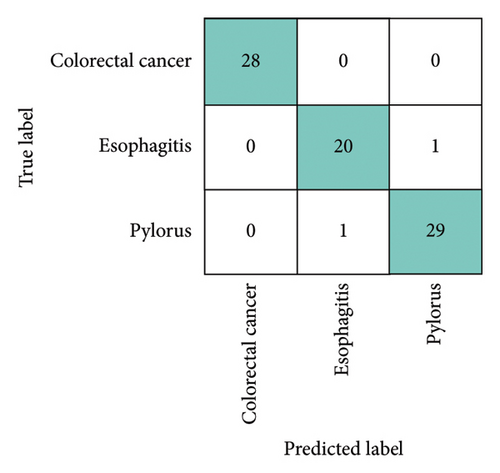

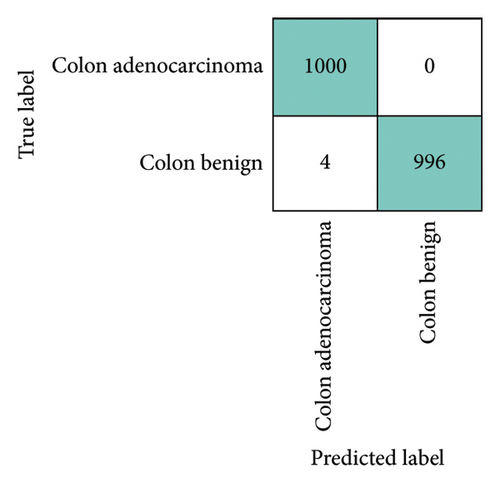

4.7. Testing on Additional Datasets

To assess the robustness and generalization capability of the framework, we trained and evaluated it on two publicly available datasets, testing its performance across different data sources and its ability to adapt to varied conditions. The first dataset, GastroVision [39], includes 396 images spread across three categories. The second dataset [40] consists of 5000 histopathological images divided into two categories. Figure 16 presents sample images from each class in both datasets. The experiments were conducted using the same experimental setup. Figure 17 depicts the confusion matrices for both datasets. Figure 17(a) shows that the proposed MultiResFF-Net correctly detects all colorectal cancer images, with only one misclassification each in the esophagitis and pylorus classes. Figure 17(b) shows that the model detects all histopathological images of Colon adenocarcinoma, albeit with four misclassifications in the Colon benign tissue class. Table 10 provides an overview of the overall performance on both datasets, showing that the proposed MultiResFF-Net handles diverse data while maintaining high accuracy across classes.

| Dataset | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| GastroVision | 97.47 | 97.30 | 97.30 | 97.30 |
| Histopathological | 99.80 | 100 | 99.60 | 99.80 |

The results in Table 10 demonstrate the strong generalization capabilities of the proposed MultiResFF-Net on additional datasets. The model achieved a notable accuracy of 97.47% on the GastroVision dataset, demonstrating its effectiveness in diagnosing three distinct GI diseases. Similarly, on the Histopathological dataset, the MultiResFF-Net exhibited exceptional accuracy of 99.80%, achieving perfect precision and maintaining high recall and F1-score for detecting Colon adenocarcinoma and Colon benign tissue images. These results underscore the adaptability and reliability of the proposed model in handling different types of medical image datasets. The proposed MultiResFF-Net’s consistent and high performance on both the primary and additional datasets highlights its potential as a reliable diagnostic tool for GI diseases. The model’s ability to maintain accuracy across diverse datasets underscores its generalizability, which is crucial for practical applications in real-world medical scenarios.

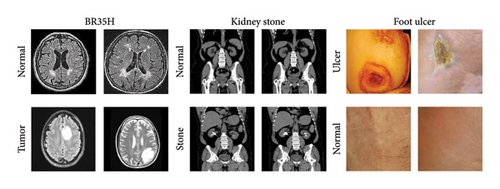

4.8. Assessing Generalization Beyond GI Diseases

DL models in medical imaging must demonstrate strong generalization capabilities to be considered reliable for real-world clinical applications. While our proposed MultiResFF-Net has shown exceptional performance on GI disease datasets, it is crucial to evaluate its adaptability to other medical imaging domains. To address this, we conducted additional experiments on three diverse datasets—brain tumor (BR35H) [41], kidney stone [42], and foot ulcer [43]—to assess the model’s robustness beyond GI diseases. Figure 18 presents representative samples from these datasets. This evaluation ensures that MultiResFF-Net is not limited to a specific modality and can effectively classify different pathological conditions, reinforcing its potential for broader medical applications. Table 11 presents the results of our model on these additional datasets. MultiResFF-Net achieved high accuracy across all three datasets, with 98.83% on BR35H (brain tumor), 98.27% on kidney stone, and 94.81% on foot ulcer detection. The high precision, recall, and F1-score further confirm its effectiveness. The slightly lower performance on the foot ulcer dataset can be attributed to higher variability in ulcer appearances and imaging conditions. However, the overall results demonstrate the model’s ability to extract meaningful features from different medical imaging modalities, supporting its scalability and real-world applicability beyond GI disease diagnosis.

| Dataset | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| BR35H | 98.83 | 99.00 | 98.67 | 98.84 |
| Kidney stone | 98.27 | 98.18 | 98.18 | 98.18 |
| Foot ulcer | 94.81 | 92.73 | 97.17 | 94.88 |
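
The F1-scores in Table 11 can be sanity-checked against the reported precision and recall, assuming F1 is the harmonic mean of the two. The sketch below is illustrative only: the function name `f1_from_pr` is hypothetical, and small differences in the second decimal are to be expected if the reported scores were averaged per class rather than computed from the aggregate precision and recall.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values copied from Table 11: (precision, recall, reported F1-score).
table_11 = {
    "BR35H": (99.00, 98.67, 98.84),
    "Kidney stone": (98.18, 98.18, 98.18),
    "Foot ulcer": (92.73, 97.17, 94.88),
}

for name, (p, r, reported) in table_11.items():
    computed = f1_from_pr(p, r)
    # Print both values side by side so any rounding gap is visible.
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Under this assumption, the recomputed values agree with the reported ones to within a few hundredths of a percentage point.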

4.9. Comparison With State-of-the-Art Approaches

To place the proposed method in the context of the existing literature, Table 12 compares its accuracy with that of recently proposed methods. MultiResFF-Net achieves the highest accuracy among the compared methods on both the endoscopic and histopathological image datasets, highlighting its versatility in diagnosing different types of GI diseases. No prior results are listed for the GastroVision dataset, so the 97.47% reported there has no direct comparison in the table.

| Method | Endoscopic images | GastroVision | Histopathological images |
|---|---|---|---|
| VGG16 [44] | - | - | 93.00% |
| XGBoost [45] | - | - | 99.00% |
| DL approach [46] | - | - | 96.33% |
| MPADL-LC3 [47] | - | - | 99.27% |
| Multiscale feature fusion [48] | - | - | 96.00% |
| NN with information bottleneck [49] | - | - | 97.52% |
| MfureCNN [26] | 97.75% | - | - |
| Ensemble [50] | 98.30% | - | - |
| Deep ensemble [51] | 96.30% | - | - |
| Stack ensemble [22] | 94.90% | - | - |
| Proposed MultiResFF-Net | 99.37% | 97.47% | 99.80% |

- The MMRes-Block played a pivotal role in enhancing feature extraction efficiency. By replacing conventional convolutions with separable convolution layers, the MMRes-Block streamlined the process, ensuring a lightweight yet accurate MultiResFF-Net.

- Leveraging the concept of fusing MobileNet and DenseNet121, the MultiResFF-Net harnessed the strengths of both models. Feature fusion, supported by studies in medical imaging, led to a broader set of distinct features, enhancing the model’s ability to handle complex tasks.

- The integration of CBAM significantly improved the model’s focus on informative regions within the input data. This attention mechanism dynamically recalibrated feature maps, emphasizing relevant channels and spatial locations crucial for accurate diagnosis in medical images.

- Before fusion, both MobileNet and DenseNet121 underwent model truncation, removing different blocks to reduce complexity and avoid overfitting. This efficient truncation process preserved essential features while maintaining a shorter end-to-end network.

- The introduction of auxiliary layers facilitated the fusion of compressed networks, addressing the challenge of unequal output shapes. These layers reshaped cut-point outputs into identical shapes, allowing for seamless layer-wise fusion.
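
To illustrate why replacing conventional convolutions with separable ones keeps the MMRes-Block lightweight, the following sketch compares the parameter counts of the two layer types. It is a minimal illustration, not the authors’ implementation: the function names are hypothetical, the layer sizes (3 × 3 kernel, 128 input and output channels) are made up for the example, and the bias convention (one bias per output channel, applied at the pointwise step) is an assumption.

```python
def conv2d_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Parameter count of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv2d_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Parameter count of a depthwise separable convolution.

    One k x k depthwise filter per input channel, followed by a
    1 x 1 pointwise convolution that mixes the channels.
    """
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise + (c_out if bias else 0)


# Hypothetical layer sizes chosen for illustration only.
k, c_in, c_out = 3, 128, 128
standard = conv2d_params(k, c_in, c_out)
separable = separable_conv2d_params(k, c_in, c_out)
print(f"standard: {standard}, separable: {separable}, "
      f"ratio: {standard / separable:.1f}x")
```

For these illustrative sizes, the separable layer needs roughly an eighth of the parameters of the standard layer, and the saving grows with the kernel size and the number of output channels.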

These combined elements resulted in a model that not only achieved exceptional accuracy on diverse datasets but also showcased efficiency in terms of parameter usage, computational resources, and inference speed. The proposed MultiResFF-Net stands out as a robust and practical solution for GI disease diagnosis in medical imaging.
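
The channel recalibration performed by CBAM can be sketched in a few lines. The example below covers only the channel-attention half of CBAM, following the standard formulation in which average-pooled and max-pooled channel descriptors pass through a shared two-layer MLP and a sigmoid; the spatial-attention half, which applies a convolution to channel-pooled maps, is omitted. The toy feature map, the MLP weights, and all function names are assumptions made for illustration and do not come from the trained model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v, w1, w2):
    """Two-layer MLP with a ReLU hidden layer, shared by both descriptors."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(fmap, w1, w2):
    """Return per-channel weights and the rescaled feature map.

    fmap is a list of C channels, each an H x W list of lists.
    """
    flat = [[x for row in ch for x in row] for ch in fmap]
    avg_desc = [sum(ch) / len(ch) for ch in flat]  # global average pooling
    max_desc = [max(ch) for ch in flat]            # global max pooling
    avg_out = shared_mlp(avg_desc, w1, w2)
    max_out = shared_mlp(max_desc, w1, w2)
    # Sum the two MLP outputs, then squash to (0, 1) per channel.
    weights = [sigmoid(a + m) for a, m in zip(avg_out, max_out)]
    scaled = [[[x * wgt for x in row] for row in ch]
              for ch, wgt in zip(fmap, weights)]
    return weights, scaled


# Toy input: 2 channels of size 2 x 2, with made-up MLP weights.
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.5], [0.5, 0.0]],
]
w1 = [[0.5, -0.5]]    # hidden x C (one hidden unit)
w2 = [[1.0], [-1.0]]  # C x hidden
weights, scaled = channel_attention(fmap, w1, w2)
print(weights)
```

With these made-up weights, the first channel receives a weight close to 1 and the second a weight close to 0, so the rescaled feature map emphasizes the first channel.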

5. Conclusion and Future Work

This paper introduces the MultiResFF-Net, a feature fusion network designed for the diagnosis of GI diseases through endoscopy images. The proposed model showcases a unique approach by strategically fusing features from truncated DenseNet121 and MobileNet architectures, resulting in a more efficient and cost-effective diagnostic tool. Through rigorous evaluation on three diverse datasets, MultiResFF-Net consistently achieved outstanding accuracy rates of 99.37%, 97.47%, and 99.80%. Importantly, the model operates with a minimal computational cost, boasting 2.22 MFLOPS and 0.47 million parameters. Comparative analyses affirm the superiority of MultiResFF-Net over existing methodologies. The success of MultiResFF-Net lies in its ability to leverage feature fusion, model truncation, and innovative components like MMRes-Block and CBAM. The proposed model not only outperforms conventional deep learning architectures but also provides a more straightforward and cost-efficient alternative. This work contributes a novel perspective to the field of GI disease diagnosis, emphasizing the importance of model simplicity, robust feature extraction, and efficient use of computational resources. MultiResFF-Net stands as a promising advancement in the realm of medical image analysis, paving the way for practical and accessible solutions in healthcare.

Future work will focus on expanding the capabilities of MultiResFF-Net to handle additional classes and a broader range of GI conditions. We will investigate transfer learning strategies to leverage pretrained models, facilitating faster adaptation to new datasets and improving performance across varying endoscopy devices and image qualities. Additionally, domain adaptation techniques will be explored to improve the model’s robustness across different imaging modalities and clinical settings. Another important direction is the real-time implementation and deployment of the model in clinical environments, which will enhance its practical use in healthcare scenarios. Furthermore, we aim to incorporate advanced data augmentation techniques, such as geometric transformations, contrast adjustments, and synthetic data generation, to further improve the model’s robustness and performance on diverse datasets. These advancements are intended to keep MultiResFF-Net a versatile and effective tool for medical image analysis in real-world applications.

Ethics Statement

The authors have nothing to report.

Consent

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Sohaib Asif: data curation, conceptualization, methodology, software, validation, writing – original draft. Yajun Ying: conceptualization, software, validation. Tingting Qian, Jun Yao and Jinjie Qu: software, validation, visualization. Vicky Yang Wang: supervision, validation, writing – review and editing. Rongbiao Ying: software, validation, visualization. Dong Xu: formal analysis, supervision, validation, writing – review and editing.

Sohaib Asif and Yajun Ying are co-first authors.

Funding

We would like to acknowledge the support from the following funding sources: Wenling Science Bureau Grant #2021S00043, Zhejiang Medical Association Clinical Research Funding #2021ZYC-A234.

Acknowledgments


Declaration of Generative AI and AI-Assisted Technologies in the Writing Process: During the preparation of this work, the authors used ChatGPT 4.0 for grammar checking. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the publication.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding authors upon reasonable request.