Wildfire Smoke Detection System: Model Architecture, Training Mechanism, and Dataset

Abstract

Vanilla Transformers focus on semantic relevance between mid- to high-level features and are not good at extracting smoke features, as they overlook subtle changes in low-level features like color, transparency, and texture, which are essential for smoke recognition. To address this, we propose the cross contrast patch embedding (CCPE) module based on the Swin Transformer. This module leverages multiscale spatial contrast information in both vertical and horizontal directions to enhance the network’s discrimination of underlying details. By combining cross contrast with the transformer, we exploit the advantages of the transformer in the global receptive field and context modeling while compensating for its inability to capture very low-level details, resulting in a more powerful backbone network tailored for smoke recognition tasks. In addition, we introduce the separable negative sampling mechanism (SNSM) to address supervision signal confusion during training and release the SKLFS-WildFire test dataset, the largest real-world wildfire test set to date, for systematic evaluation. Extensive testing and evaluation on the benchmark dataset FIgLib and the SKLFS-WildFire test dataset show significant performance improvements of the proposed method over baseline detection models.

1. Introduction

Visual wildfire detection refers to the utilization of imaging devices to capture image or video signals, followed by the application of intelligent algorithms to detect potential wildfire cues. Two critical indicators for determining the presence of wildfires are smoke and flames. Smoke often manifests earlier in the wildfire occurrence process than flames and has the advantage of being less susceptible to vegetation obstruction, making it increasingly intriguing for researchers [1]. In terms of imaging methods, wildfire detection typically involves using visible light cameras or infrared cameras to capture images or videos. Visible light-based wildfire detection is favored for its cost-effectiveness and broad coverage, gaining popularity among researchers [2, 3]. However, because of the complex background interferences in open scenes, visible light-based wildfire detection demands higher requirements for intelligent algorithms, necessitating further research efforts to advance this technology.

Over the past decade, researchers have developed a large number of deep learning–based wildfire recognition, detection, and even segmentation models. For example, References [4, 5] proposed fire image classification networks based on deep convolutional neural networks, while References [3, 6] added classical detector heads on top of CNN backbones to identify or locate smoke or flame. In recent years, transformer-based models have demonstrated comparable or superior performance to CNNs on many tasks. Therefore, some researchers have tried to apply transformers to the field of fire detection. For example, Khudayberdiev et al. [7] proposed to use Swin Transformer [8] as the backbone network to classify fire images. In Reference [9], a variety of classical backbone networks including ResNet [10], MobileNet [11], Swin Transformer [8], and ConvNeXt [12] are used for wildfire detection with self-designed detection heads, and the experiments show that transformer models have no obvious advantage over CNNs. We have observed similar phenomena in our experiments. Why do transformer-based models work well in other tasks but show no such advantage in wildfire detection?

Through the analysis of a large amount of real fire data, we found that smoke, one of the most typical early cues of fire, has special properties that are different from entity objects. An important basis for judging the presence of fire smoke is the spatial distribution of transparency, color, and texture. These features are generally extracted at the bottom of the deep neural networks. The transformer network establishes the correlation between different areas through the attention mechanism and has unique advantages in modeling long-distance dependencies and contextual correlation, but it has poor ability to capture low-level details. Based on this observation, this article proposes the cross contrast patch embedding (CCPE) module to promote the Swin Transformer’s ability to distinguish the underlying smoke texture. Specifically, we sequentially cascade a vertical multispatial frequency contrast structure and a horizontal multispatial frequency contrast structure within the patch embedding and use the cascaded spatial contrast results to enhance the original embedding results and input them into the subsequent network. We found that this simple design can bring extremely significant performance improvements with an almost negligible increase in computational effort.

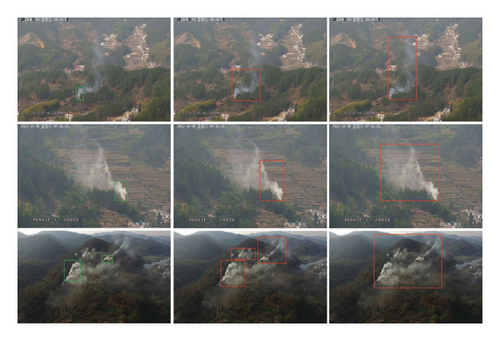

The main difference between wildfire smoke detection and general object detection tasks is the ambiguity of smoke object boundaries. On the one hand, wildfire smoke detection in open scenes often encounters false alarms and requires the addition of a large number of negative error-prone image samples, that is, the images do not contain wildfire but there are objects with high appearance similarity to smoke. Since the number of negative image samples far exceeds the number of wildfire images, error-prone objects only account for a small proportion of the image area. The natural idea is to use online hard example mining (OHEM) [13] to focus on confusing areas when sampling negative proposals, thereby improving the accuracy of the detection model. On the other hand, smoke objects show different transparency at different spatial locations because of different concentrations. It is difficult to clearly define the density boundary between the foreground and background of smoke during manual annotation. This leads to ambiguity in the labeling range of the smoke bounding boxes. In the classic object detection framework, the label assignment of proposals needs to be determined based on the ground-truth boxes. As shown in Figure 1, the green box is the manually labeled smoke foreground, and the red boxes represent the controversial proposals, which are practically exhaustive and cannot be annotated one by one. During the model training phase, it is inappropriate to assign background labels to proposals represented by the red boxes. When the OHEM strategy encounters ambiguous smoke objects, most of the negative proposals acquired during training will be ambiguous. To solve this problem, this paper proposes a separable negative sampling mechanism (SNSM). 
Specifically, the positive and negative images in the batch are separated during training, and a small number of negative proposals are collected from the positive images with wildfire smoke, and OHEM is used to collect the confusing areas in the negative images without wildfire smoke. Separable negative instance sampling can increase recall and improve model performance in high recall intervals.

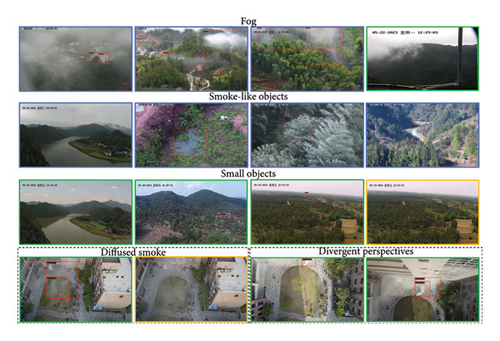

Open-scene wildfire detection lacks a large-scale test dataset for comparison and validation. For instance, the widely employed public dataset, the fire detection dataset [14], comprises 149 videos, of which 74 videos feature fire smoke. The recently released FIgLib dataset [15] encompasses 24.8K images from 315 videos (including omitted images), while the test set only comprises 4880 images sourced from 45 videos. Moreover, some existing studies [6, 16] only report results on undisclosed datasets. The insufficient scale of the public datasets leads to large fluctuations in test results, which makes the credibility of the experimental results questionable. This article discloses a large-scale test set, named SKLFS-WildFire Test, which contains 3309 short video clips, including 340 real wildfire videos; the rest are negative examples with no fire incidents. We obtained a total of 50,735 images by sampling frames at intervals, of which 3588 were images with smoke. False positives are the most critical issue affecting the user experience in wildfire detection, so our test set contains a large number of negative sample images with many interference objects that resemble smoke in appearance. It provides a benchmark for testing the performance of models in unpredictable environments. A preprint has previously been published [17].

The main contributions of this paper are summarized as follows:

1. We propose a new transformer-based wildfire smoke detector. The issue of the insufficient ability of the transformer backbone to capture smoke details is addressed through the CCPE module. In addition, the challenge of difficult label assignment caused by the unclear boundaries of smoke objects is mitigated by the SNSM.
2. SKLFS-WildFire Test, the largest real wildfire test dataset to date, is released, surpassing even most of the published wildfire training sets.
3. Our algorithm underwent extensive evaluation on 1200 ground-mounted cameras, affirming its efficacy and reliability over prolonged periods.

2. Related Work

2.1. Wildfire Detection Methods

Traditional wildfire detection methods can be broadly divided into two categories: sensor-based approaches, which rely on physical and chemical sensing of combustion byproducts, and vision-based approaches, which analyze image or video data. Sensor-based wildfire detection systems, encompassing ionization [22], photoelectric [23], and gas sensors [24], have been widely implemented in residential and industrial environments because of their rapid response capabilities in detecting both flaming and smoldering combustion events. However, this technology exhibits prohibitive deployment and operational costs when applied to vast and unstructured wildland areas, thereby restricting its scalability for large-scale fire monitoring applications. Vision-based methodologies demonstrate significant potential for wildland fire monitoring because of their broad coverage capabilities and intuitive visualization advantages. Predeep learning era approaches employed handcrafted feature engineering leveraging color, texture, and motion characteristics to discriminate smoke signatures from complex backgrounds [25–27]. However, these methodologies faced critical limitations in addressing smoke’s intraclass heterogeneity (e.g., morphological variations and chromatic dispersion) and environmental confounders (e.g., reflective water surfaces and atmospheric haze), substantially restricting their operational reliability in heterogeneous wildland environments. In recent years, deep learning techniques have revolutionized wildfire detection by automating feature extraction and improving detection robustness, though practical challenges remain concerning computational efficiency, environmental variability, and false-alarm reduction.

There have been a large number of public reports of deep learning–based wildfire detection methods, and remarkable progress has been made. However, the existing methods of wildfire detection are still not satisfactory in practical applications. The characteristics of wildfire detection tasks, the challenges they face, and the obstacles to their practical application have not yet been discussed in sufficient depth. Most of the studies focus on the application of mainstream backbones, adjustments of parameters, and performance-efficiency trade-offs. For example, References [28–30] use CNNs to extract deep features instead of handcrafted features for image smoke detection, and Reference [28] emphasizes the use of batch normalization (BN) in the network. Some researchers [31–33] use classical backbone networks or detectors such as MobileNetV2 [34], YOLOV3 [35], SSD [36], and Faster R-CNN [37] for smoke/flame recognition or detection. For model efficiency, References [21, 38, 39] have all designed new CNN backbone networks for fire recognition, and they emphasize that the newly designed networks are highly efficient. Although the efficiency of the model is of great significance, the performance of wildfire smoke detection models has not yet reached a satisfactory level, that is, they produce too many false positives or have low recall. Xue et al. [3] try to improve the model's small object detection capabilities by improving YOLOV5 [40]. However, in the general object detection field, there are numerous discussions and solutions regarding small object detection. While the detection of small smoke targets in the early stages of wildfires is challenging, it is not the primary issue in wildfire detection. Dewangan et al. [15] proposed wildfire smoke detection methods utilizing spatiotemporal modeling, and Bhamra et al. [41] proposed to incorporate multimodal data such as weather and satellite data, achieving remarkable performance. 
However, the baseline approach relying on static images for smoke detection still faces unresolved issues. Recently, researchers have also tried to use transformers to improve the model expression ability of fire recognition tasks. Khudayberdiev et al. [7] use Swin Transformer as the backbone network to classify fire images without making any improvements. Hong et al. [9] use various CNNs and transformer networks as backbones for fire recognition tasks, including Swin Transformer, DeiT [42], ResNet, MobileNet, EfficientNet [43], ConvNeXt [12], etc., to compare the effects of different backbones. Experimental results show that the transformer backbones have no significant advantage over the CNN backbones. This is consistent with our observations.

2.2. Wildfire Datasets

Publicly available wildfire datasets are few and generally small in size. Table 1 summarizes the commonly used public datasets for wildfire recognition or detection. The fire detection dataset [14] published by Pasquale et al. is one of the most commonly used fire test sets, containing 31 video clips, 14 of which contain flames. It is worth mentioning that, videos with only smoke but no flame are considered as negative samples in this dataset. They also published the smoke detection dataset, which contains 149 video clips, and 74 videos contain smoke. However, to the best of our knowledge, no published papers have been found to report experimental results on the smoke detection dataset. Chino et al. [18] made a dataset publicly available, called Bowfire, which contains 226 images, of which 119 contain flames and 107 are negative samples without flames, and are equipped with pixel-level semantic segmentation labels. Ali et al. [19] collected the forest-fire dataset, which contains a total of 1900 images in the training set and the test set, of which 950 are images with fire. In Reference [20], a dataset named FireFlame is released, which contains three categories: Fire (namely flame in this paper), smoke, and neutral, with 1000 images each, for a total of 3000 images. Arpit et al. [21] collected a publicly available dataset, named FireNet, which contains a total of 108 video clips and 160 images that are prone to error detection. Dewangan et al. [15] introduced FIgLib, which to our knowledge is the largest wildfire recognition dataset, comprising 315 videos, of which 270 are usable. Building upon FIgLib, Bhamra et al. [41] incorporated multimodal information and removed some samples with missing information, resulting in a final set of 255 videos. However, both studies suffered from relatively small test set sizes, impacting the stability of their test results. Figure 2 shows some samples of several commonly used datasets. 
We observe two problems with the existing datasets. First, many samples are taken from the development and outbreak stages of a fire, when smoke and flame are already very intense; automatic detection and alarm at that point is of little value and is not in line with the original intention of early warning and disaster loss reduction. Second, the data styles of laboratory ignition scenes and realistic wildfires are quite different. In the SKLFS-WildFire test dataset proposed in this paper, we collect real early wildfire data, where the fire is in the initial stage. This ensures that the dataset is more consistent with real-world scenarios of wildfire detection, allowing for a more direct evaluation of the performance of wildfire detection models in practical applications.

| Datasets | Data format | Flame/smoke | Positive number | Total number | Annotation form |
|---|---|---|---|---|---|
| Fire detection dataset [14] | Video | Flame | 14 videos | 31 videos | Video level |
| Smoke detection dataset [14] | Video | Smoke | 74 videos | 149 videos | Video level |
| Bowfire [18] | Image | Flame | 119 images | 226 images | Pixel level |
| Forest fire dataset [19] | Image | No distinction | 950 images | 1900 images | Image level |
| FireFlame [20] | Image | Flame & smoke | 2000 images | 3000 images | Image level |
| FireNet dataset [21] | Image & video | No distinction | 46 videos | 62 videos & 160 negative images | Image level |
| FIgLib dataset [15] | Video | Smoke | 315 videos | 315 videos | Image level |
| SKLFS-WildFire test dataset (OURS) | Image | Flame & smoke | 3588 images | 50,735 images | Box level |

3. Method

Wildfire smoke detection is an extension of general object detection. General-purpose object detectors can be generally divided into two categories: single stage and multistage. Multistage object detectors are generally slightly better than single-stage object detectors because of the feature alignment, which fine-tunes the proposals from the first stage using the aligned features. However, Figure 1 shows that the location and range of smoke are very ambiguous, and the location fine-tuning in the second stage has little significance, and even degrades the detector performance by introducing excessive location cost. Therefore, based on the classic single-stage detector YOLOX [44], this paper improves the model structure and training strategy according to the particularity of smoke detection. The reason why this paper is not based on more recent studies such as the newest YOLOV8 [45] is that these methods incorporate a large number of tricks based on general object detection tasks, which are unverified in the field of wildfire detection. Furthermore, the CCPE and SNSM proposed in this paper can be easily applied to the new detector since the conclusion of this paper is general and can be extended.

3.1. System Framework

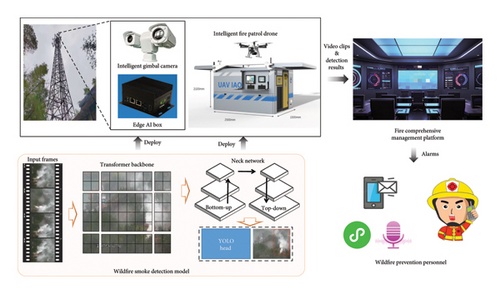

The wildfire smoke detection system is an integral component of forest fire monitoring systems. As illustrated in Figure 3, the smoke detector constitutes the core of this system. This paper proposes a novel transformer-based smoke detection model from three perspectives: model architecture, training mechanisms, and dataset composition. The proposed model is deployed on intelligent gimbal cameras or edge AI boxes to perform real-time video analysis, transmitting suspicious video segments and detection results back to the fire comprehensive management platform. Maintenance personnel on duty manually verify whether the detected incidents constitute actual fires. Upon confirmation of high-risk levels, the platform sends alarm signals via text messages, WeChat Mini Programs, voice calls, and other means to notify wildfire prevention personnel for on-site investigation. The system employs a human-machine coupling approach, balancing high recall rates while tolerating a certain number of false alarms. However, an increase in false alarms significantly burdens the workload of the personnel.

3.2. Model Pipeline

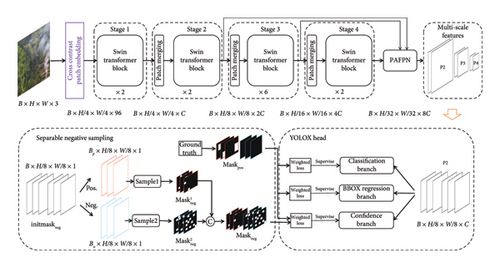

The overall network structure is shown in Figure 4. In this paper, the Swin Transformer backbone network is used to extract multiscale features; the PAFPN [46] is used for integrating multiscale features by adding a bottom-up fusion pathway after the top-down fusion pathway of FPN [47]. Finally, the YOLOX detection head is used to identify and locate smoke instances.

The input of the backbone network is a batch of B RGB images of width W and height H. After the backbone network and neck, features at three scales are obtained, with channel dimensions defined in terms of C, a hyperparameter that refers to the number of output channels of the Swin Transformer’s first stage. Finally, the multiscale features are fed into the YOLOX head to predict confidence, class, and bounding box. The transformer has strong modeling ability for context correlation and long-distance dependence, but its ability to capture the detailed texture information of images is weak. To address this problem, this paper redesigns the patch embedding of Swin Transformer for smoke detection. To resolve the label assignment ambiguity caused by the difficulty of determining the smoke boundary, an SNSM is added to the loss function, and the obtained positive and negative sample masks are used to weight the costs of classification, regression, and confidence.

3.3. CCPE

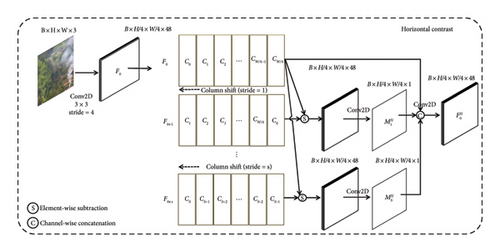

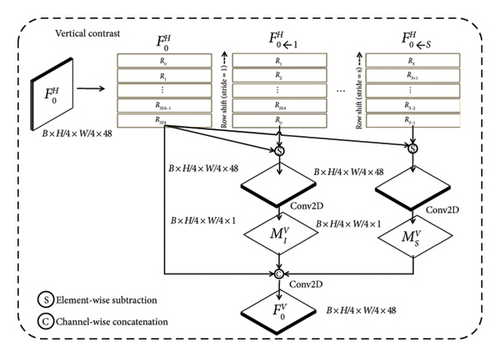

Smoke has a very unique nature, that is, an important clue to the presence of smoke lies in the contrast of color and transparency in space. Capturing spatial contrast can highlight smoke foreground in the background and distinguish fire smoke from homogeneous blurriness, such as poor air visibility, motion blur, zoom blur, and so on. However, the transformer architecture is weak in capturing low-level visual cues. To solve this problem, we propose a novel CCPE module, which is composed of a horizontal contrast component and a vertical contrast component in series.

3.3.1. Horizontal Contrast

Finally, the original feature F and the contrast masks of all scales are concatenated on the channel, and a 3 × 3 2D convolution is applied to adjust the feature maps FH to 48 channels.

3.3.2. Vertical Contrast

Finally, F and FV are concatenated in the channel dimension to obtain the final patch embedding result. Compared with the vanilla patch embedding, the proposed CCPE can quickly capture the multiscale contrast of smoke images. Moreover, the computational cost of spatial shift operation is very small; only a small amount of convolution operators increases the computational cost. Therefore, CCPE makes up for the natural defects of transformers in smoke detection and obtains significant improvement in effect at a controllable computational cost.
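The shift-and-compare idea behind the contrast masks can be illustrated with a minimal sketch. It assumes that the contrast at a given stride is the absolute difference between a feature map and a copy shifted by that stride, with edge values replicated at the border; the exact contrast formula, the 3 × 3 convolutions, and the series connection of the two components are not reproduced here. The function names `shifted_copy` and `contrast_maps` are hypothetical.

```python
def shifted_copy(grid, stride, axis):
    """Shift a 2D map by `stride` cells along one axis.

    axis=0 shifts vertically, axis=1 shifts horizontally.
    Border cells replicate the nearest edge value (an assumption).
    """
    height, width = len(grid), len(grid[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            src_y = max(0, y - stride) if axis == 0 else y
            src_x = max(0, x - stride) if axis == 1 else x
            row.append(grid[src_y][src_x])
        out.append(row)
    return out


def contrast_maps(grid, strides, axis):
    """Return one contrast map per stride (assumed: absolute difference)."""
    maps = []
    for stride in strides:
        shifted = shifted_copy(grid, stride, axis)
        maps.append([
            [abs(a - b) for a, b in zip(row, shifted_row)]
            for row, shifted_row in zip(grid, shifted)
        ])
    return maps


# A toy single-channel map with one vertical edge between columns 1 and 2.
feature = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
horizontal = contrast_maps(feature, strides=[1, 2], axis=1)
vertical = contrast_maps(feature, strides=[1, 2], axis=0)

for stride, contrast in zip([1, 2], horizontal):
    print("horizontal stride", stride, contrast[0])
```

In this toy case the horizontal maps respond to the vertical edge while the vertical maps stay at zero, which is the kind of directional, multiscale cue the module is meant to expose. In the full module the per-stride maps would be concatenated with the original feature along the channel dimension before the convolution.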

3.4. Separable Negative Sampling

In this paper, the images with smoke objects are called positive image samples, and the images without smoke are called negative image samples. In addition, image regions that contain smoke according to the detector's assignment rules (e.g., an IoU threshold) are called positive instance samples, while regions without smoke are called negative instance samples.

Smoke detection applications in open scenes often suffer from missed detection and false detection. It has been shown in Figure 1 that the smoke boundary is difficult to define. One of the reasons for missed smoke detection is that in the positive image samples, a large number of image regions may have smoke but are assigned negative labels during the training process, while only the manually labeled regions are assigned positive labels. The areas with smoke that get negative labels often contribute to more loss, which increases the difficulty of training, which then leads to a high false negative rate of the detector. Meanwhile, the cause of false smoke detection lies in the complex background in open scenes. There are a large number of image regions with high appearance similarity to smoke. In engineering, false detections can be suppressed by adding error-prone images. The error-prone region only accounts for a small part of the image, so the loss share of the error-prone region can be strengthened by OHEM [13]. OHEM involves identifying the top-K negative samples with the highest scores during training, which typically represent hard-negative examples. If the traditional OHEM is used to highlight the difficult regions on all the images, the label ambiguity problem in the positive image samples will be more prominent. Therefore, we propose the SNSM to alleviate this problem.

As shown in Figure 4, we take the feature map at one scale as an example. The YOLOX head has three branches for confidence prediction, classification, and regression, respectively. The positive mask Maskpos is determined using the manually annotated ground truth as well as the positive sample assignment rules. YOLOX sets the 3 × 3 center region as positive, and this paper follows this design. The classification loss and the regression loss are weighted using Maskpos. In the vanilla YOLOX head, all spatial locations contribute to the confidence loss. However, because of the addition of a large number of negative error-prone images, we use all positive locations, namely, Maskpos, and a sampled subset of the negative locations to weight the confidence loss by location.

Considering the importance of sampling negative locations for loss computation, we propose the SNSM. We denote the initial mask containing all negative locations as initMaskneg = 1 − Maskpos. We divide initMaskneg into two groups according to the presence or absence of smoke in the image, that is, the positive image group and the negative image group, containing Bp and Bn images, respectively. In the positive image group, negative locations are randomly sampled according to the number of positive locations: the number of sampled negative locations is α1 times the number of positive samples. In the negative image group, OHEM is used to collect negative samples: all negative samples are ranked in descending order of their scores, and the top-K negative samples are selected for training, where K is set to α2 times the number of positive samples. We set α1 ≪ α2 (α1 = 10 and α2 = 190 in our experiments), so that the negative samples learned by the model come mostly from negative images, and more attention is paid to the image regions prone to misdetection because of OHEM.
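The sampling procedure described above can be sketched as follows. This is a minimal illustration, not the training code: it assumes that an image counts as positive when its positive mask contains at least one location, that sampling is pooled over the whole batch, and that scores are the predicted confidences at each location. The function name `separable_negative_sampling` and the flat per-image lists are hypothetical simplifications.

```python
import random


def separable_negative_sampling(scores, pos_masks, alpha1, alpha2, seed=0):
    """Build negative-location masks for one batch.

    scores[i][j]    : predicted confidence at location j of image i
    pos_masks[i][j] : 1 if location j of image i is a positive location
    Positive images : random sampling of alpha1 * n_pos negative locations
    Negative images : OHEM, top-K scores with K = alpha2 * n_pos
    """
    rng = random.Random(seed)
    n_pos = sum(sum(mask) for mask in pos_masks)
    neg_masks = [[0] * len(mask) for mask in pos_masks]

    # Assumption: an image is "positive" if it has any positive location.
    pos_images = [i for i, mask in enumerate(pos_masks) if any(mask)]
    neg_images = [i for i, mask in enumerate(pos_masks) if not any(mask)]

    # Positive images: random negatives, so ambiguous smoke regions are
    # not systematically picked as hard negatives.
    candidates = [(i, j) for i in pos_images
                  for j, is_pos in enumerate(pos_masks[i]) if not is_pos]
    k_random = min(len(candidates), alpha1 * n_pos)
    for i, j in rng.sample(candidates, k_random):
        neg_masks[i][j] = 1

    # Negative images: keep the highest-scoring (most confusing) locations.
    candidates = [(i, j) for i in neg_images for j in range(len(pos_masks[i]))]
    candidates.sort(key=lambda ij: scores[ij[0]][ij[1]], reverse=True)
    k_hard = min(len(candidates), alpha2 * n_pos)
    for i, j in candidates[:k_hard]:
        neg_masks[i][j] = 1

    return neg_masks


# Toy batch: image 0 contains smoke (one positive location), image 1 does not.
scores = [[0.2, 0.9, 0.6, 0.1, 0.3, 0.4],
          [0.1, 0.9, 0.3, 0.8, 0.2, 0.7]]
pos_masks = [[0, 1, 0, 0, 0, 0],
             [0, 0, 0, 0, 0, 0]]
neg_masks = separable_negative_sampling(scores, pos_masks, alpha1=2, alpha2=3)
print(neg_masks)
```

The toy ratios are chosen only to keep the example small. A batch without any positive location yields no sampled negatives in this sketch, so a practical implementation would need a fallback for that case.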

4. Experiments

4.1. Datasets

FIgLib [15] introduced by Dewangan et al. in 2022 stands as the largest forest fire dataset to date. It encompasses 315 wildfire videos, of which 270 are usable. The dataset is divided into three subsets: training, validation, and testing, comprising 144, 64, and 62 videos, respectively. Notably, FIgLib’s distinguishing feature lies in its reliance on temporal information for smoke identification. Even human intelligence struggles to discern smoke solely from single-frame images within FIgLib.

SKLFS-WildFire has the following characteristics:

1. High diversity. Most of the data of SKLFS-WildFire comes from videos, but we do not take too many images from any single video. About 10 frames are extracted from each video on average to ensure the diversity of data.
2. Strict train-test split design. We split the test dataset according to the administrative region where the cameras are deployed, and all the test videos are in completely different geographical locations from the training dataset.
3. Difficult negative samples. We added a large amount of nonsmoke data to both the training and test sets. These nonsmoke data come from the false detections of classical detectors such as Faster R-CNN [37], YOLOV3 [35], and YOLOV5 [40].
4. Realistic early fire scenes. All SKLFS-WildFire data come from data fed back from deployed applications and mainly cover the initial stage of fire, rather than laboratory ignition scenes or the middle and late stages of wildfire.

To protect user privacy, the training set of SKLFS-WildFire is not available for the time being. We will release the SKLFS-WildFire test set to facilitate academic research.

4.2. Metrics for SKLFS-WildFire

4.3. Implementation Details

All models are implemented using the MMDetection [48] framework based on the PyTorch [49] backend. We used the NVIDIA A100 GPUs to train all models while using a single NVIDIA GeForce RTX 3080Ti to compare inference efficiency. The input of the wildfire smoke detection models is a batch of images, and we set the batch size B to 64. In the training phase, we follow the data augmentation strategy of YOLOX and use mosaic, random affine, random HSV, and random flip to enhance the diversity of data and improve the generalization ability of the models. Finally, through resize and padding operations, the size W and H of the input are both 640. The set of horizontal strides SH and the set of vertical strides SV in CCPE are both set as {1, 2, 4, 8, 16, 32, 64, 128} to capture the contrast information of different scales with eight kinds of strides. In SNSM, the negative sampling ratio α1 of positive image samples is set to 10, and the negative sampling ratio α2 of negative image samples is set to 190. The proposed model is optimized with the SGD optimizer with an initial learning rate of 0.01, momentum set to 0.9, and weight decay set to 0.0005, and a total of 40 epochs are trained. The mixed precision training method of float32 and float16 was used in the training, and the loss scale was set to 512.
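For reference, the hyperparameters reported above can be collected in a configuration fragment written in the style of MMDetection 2.x config files. This is a sketch under assumptions: the MMDetection version is not stated in the text, and the `ccpe`, `snsm`, `total_batch_size`, and `input_size` entries are hypothetical names used only to group the reported values, not options of the framework.

```python
# Optimizer and schedule, using the values reported in the text.
optimizer = dict(type="SGD", lr=0.01, momentum=0.9, weight_decay=0.0005)
runner = dict(type="EpochBasedRunner", max_epochs=40)

# Mixed precision (float32/float16) training with a fixed loss scale.
fp16 = dict(loss_scale=512.0)

# Hypothetical grouping of the remaining reported settings.
total_batch_size = 64          # batch size B
input_size = (640, 640)        # W and H after resize and padding

strides = [2 ** k for k in range(8)]   # 1, 2, 4, ..., 128
ccpe = dict(horizontal_strides=strides, vertical_strides=strides)

# alpha1: negatives per positive sampled from positive images (random);
# alpha2: negatives per positive sampled from negative images (OHEM).
snsm = dict(alpha1=10, alpha2=190)
```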

4.4. Comparison on FIgLib

We did not find bounding box-level labels for FIgLib, so we reannotated the boxes in the training subset. Since smoke from FIgLib is visually more challenging to discern, we increased the input size of the images from 640 to 1024. Because of FIgLib’s emphasis on temporal features for smoke detection, we not only retrained the proposed image model on its training set but also concatenated the current frame It with the past frame It−2 in the image channels to obtain a temporal model, where t and t − 2 represent the current time and the time 2 minutes ago, respectively (the frame interval in FIgLib is 1 minute). By implementing the simplest spatiotemporal modeling framework (channel-level concatenation), we provide empirical evidence that the exceptional performance of our proposed model on FIgLib primarily derives from the efficacy of its image-centric architectural design, rather than relying on sophisticated spatiotemporal processing mechanisms. We adopt the metrics in SmokeyNet [15] and compare the results in P (precision), R (recall), F1 score, Acc (accuracy), and TTD (time to detection, minutes) against those reported in Reference [15]. Accuracy and F1 score are the primary composite metrics of importance, with other metrics serving as supplementary references. Table 2 presents the comparison of the effects. In the context of single-frame input models, the proposed model has achieved comparable results, although not the best. This is attributed to the smoke in the FIgLib dataset, which is prone to being confused with other objects in single-frame images, thereby hindering more expressive models from achieving superior performance. A testament to this observation is the comparison between the results of ResNet34 in row 5 and ResNet50 in row 6. Conversely, in the realm of multiframe input models, even with our model’s simple utilization of concatenation to model temporal sequences, we have still attained state-of-the-art (SOTA) results. 
This underscores the efficacy of the proposed approach.

| Models | Backbone | Params (M) | Acc (%) | F1 (%) | Precision (%) | Recall (%) | TTD (min) |
|---|---|---|---|---|---|---|---|
| Single-frame models | | | | | | | |
| OURS | ContrastSwin | 35.9 | 81.48 | 81.12 | 83.74 | 78.66 | 2.73 |
| SmokeyNet [15] | ResNet34 + ViT | 40.3 | 82.53 | 81.30 | 88.58 | 75.19 | 2.95 |
| SmokeyNet [15] | ResNet34 | 22.3 | 79.40 | 78.90 | 81.62 | 76.58 | 2.81 |
| SmokeyNet [15] | ResNet50 | 26.1 | 68.51 | 74.30 | 63.35 | 89.89 | 1.01 |
| FasterRCNN [15] | ResNet50 | 41.3 | 71.56 | 66.92 | 81.34 | 56.88 | 5.01 |
| MaskRCNN [15] | ResNet50 | 43.9 | 73.24 | 69.94 | 81.08 | 61.51 | 4.18 |
| Multiframe models | | | | | | | |
| OURS | 2 frames ContrastSwin | 35.9 | 84.67 | 84.05 | 88.74 | 79.83 | 2.26 |
| SmokeyNet [15] | 3 frames ResNet34 + LSTM + ViT | 56.9 | 83.62 | 82.83 | 90.85 | 76.11 | 2.94 |
| SmokeyNet [15] | 2 frames ResNet34 + LSTM + ViT | 56.9 | 83.49 | 82.59 | 89.84 | 76.45 | 3.12 |
| SmokeyNet [15] | 2 frames MobileNet + LSTM + ViT | 36.6 | 81.79 | 80.71 | 88.34 | 74.31 | 3.92 |
| SmokeyNet [15] | 2 frames TinyDeiT + LSTM + ViT | 22.9 | 79.74 | 79.01 | 84.25 | 74.44 | 3.61 |

- Note: The proposed model demonstrates strong performance in single-frame input models and achieves the best results in multiframe input models on the FIgLib dataset. Bold values represent the best values among all methods of their category under each metric (column).
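
As a quick consistency check on the composite metric, the F1 score is the harmonic mean of precision and recall. The short sketch below (the helper name `f1_score` is ours) recomputes F1 for the two OURS rows from their precision and recall columns; the results agree with the tabulated 81.12 and 84.05 after rounding.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


single = f1_score(83.74, 78.66)  # OURS, single-frame row
multi = f1_score(88.74, 79.83)   # OURS, 2-frame row
print(round(single, 2), round(multi, 2))
```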

4.5. Ablation Studies

The CCPE module is proposed to compensate for the limited ability of the transformer backbone to capture spatial variations in smoke texture. The separable negative sampling strategy is proposed to alleviate the ambiguity in assigning negative samples for smoke and to improve recall, that is, model performance in the high-recall regime. In this section, we verify the effectiveness of both methods through ablation experiments on the SKLFS-WildFire test dataset.

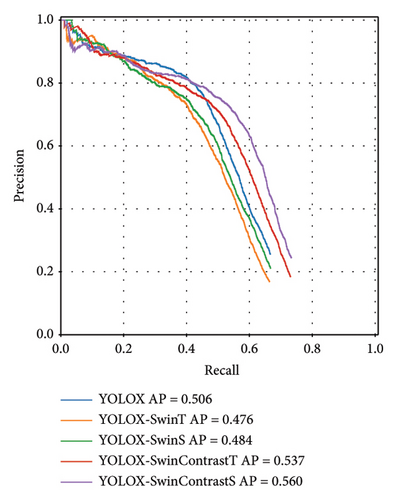

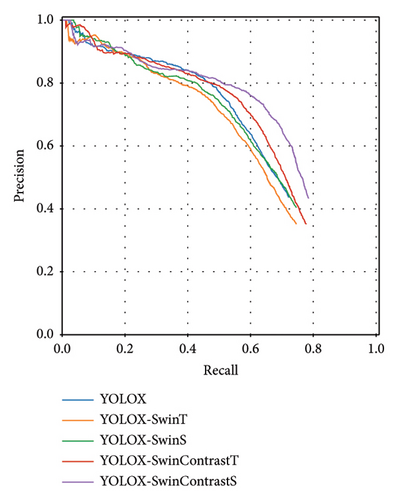

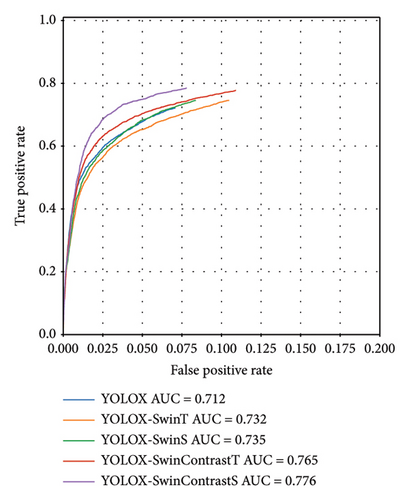

4.5.1. Effectiveness of CCPE

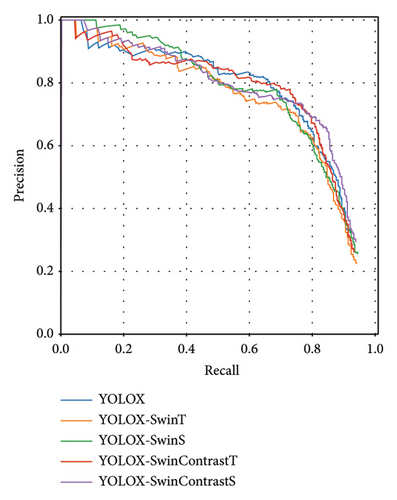

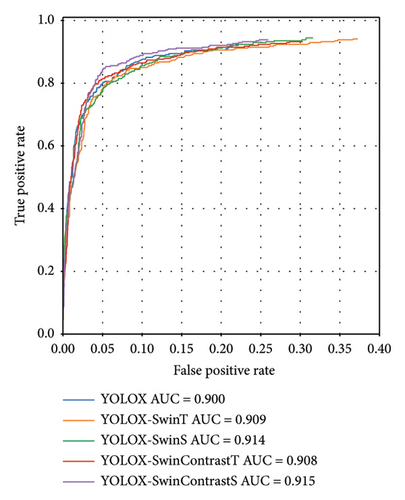

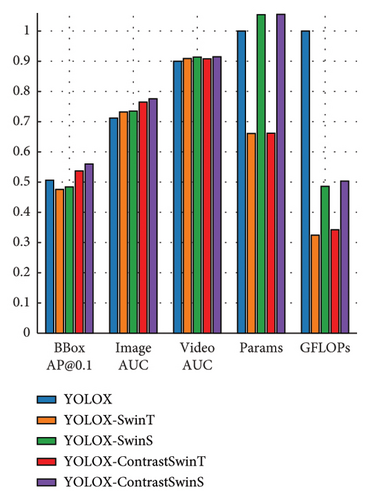

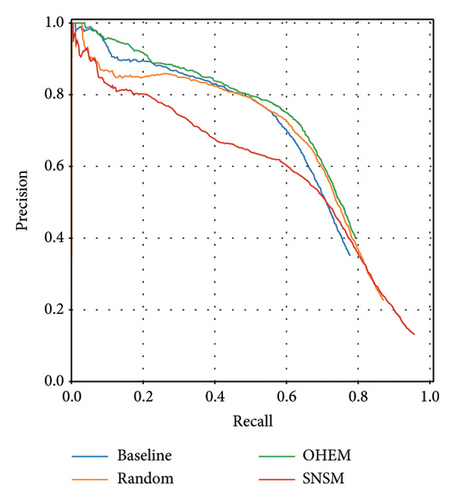

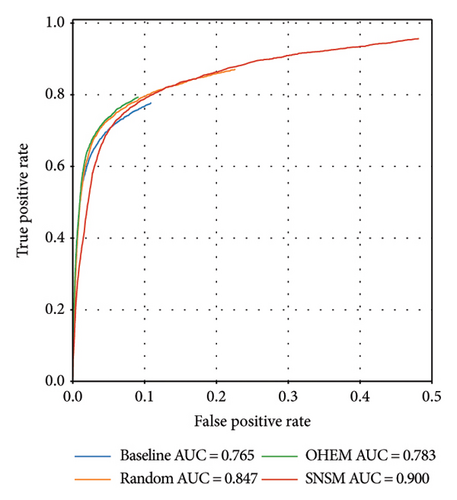

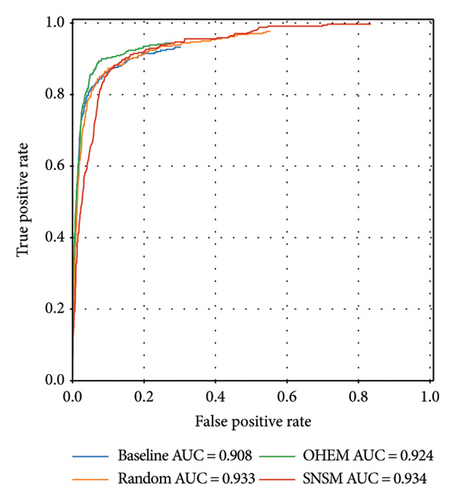

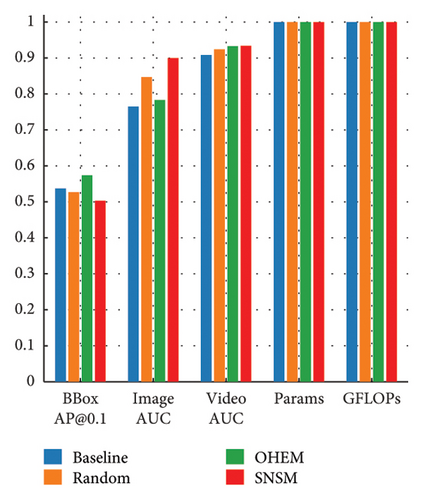

As shown in Table 3, replacing the CNN-based backbone CSPDarknet [50] in YOLOX with the Swin Transformer Tiny (denoted as Swin-T) lowers the bounding box-level AP from 0.506 to 0.476, while the image-level and video-level AUC improve (from 0.712 to 0.732 and from 0.900 to 0.909, respectively). This supports our observation that the transformer architecture has a stronger ability to model contextual relevance, while CNNs are better at capturing the detailed texture information needed for accurate location prediction. In the last two rows of the table, the patch embedding of Swin is replaced by the proposed CCPE. The results show that CCPE improves on both the original YOLOX model and its Swin-backbone counterparts at the bounding box and image levels, while the video-level AUC stays on par with the Swin backbones (0.908 vs. 0.909 for tiny and 0.915 vs. 0.914 for small). Figure 6 presents the PR curves at the bounding box level and the PR and ROC curves at the image and video levels. These curves lead to the same conclusions as the numerical metrics, indicating that the proposed CCPE effectively compensates for the transformer's limited ability to model the low-level details of smoke and improves the overall performance of the smoke detector at a small cost in parameters and computation.

| Models | Backbone | BBox [email protected] | Image AUC | Video AUC | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOX | CSPDarknet large | 0.506 | 0.712 | 0.900 | 54.209 | 155.657 |
| YOLOX-SwinT | Swin tiny | 0.476 | 0.732 | 0.909 | 35.840 | 50.524 |
| YOLOX-SwinS | Swin small | 0.484 | 0.735 | 0.914 | 57.158 | 75.642 |
| YOLOX-ContrastSwinT | CCPE + Swin tiny | 0.537 | 0.765 | 0.908 | 35.893 | 53.250 |
| YOLOX-ContrastSwinS | CCPE + Swin small | 0.560 | 0.776 | 0.915 | 57.211 | 78.368 |

- Note: CNN-based YOLOX significantly outperforms Swin-based YOLOX on BBox-level metric. After using CCPE to improve Swin, model performance significantly exceeds the baseline on all metrics. Bold values represent the best values under each metric (column).

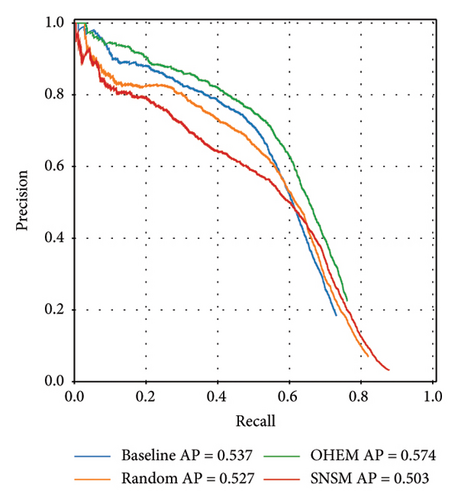

4.5.2. Effectiveness of Separable Negative Sampling

Separable negative sampling is designed for the uncertain boundaries of smoke instances. As shown in Table 4, the baseline model YOLOX-ContrastSwin does not employ any negative sampling strategy; that is, all locations participate in training the confidence branch. In the “Random” setting, we randomly sample negative locations numbering 200 times the positive locations. In the “OHEM” setting, we select the same number of negative locations (200 times the positive locations) with OHEM, taking them in descending order of score. Finally, in the “SNSM” setting, SNSM selects the negative locations that participate in training.
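
The baseline, “Random,” and “OHEM” settings can be sketched as follows. This is a simplified illustration under stated assumptions, not the training code: the function name `sample_negatives`, the flat list of per-location scores, and the fixed random seed are hypothetical, and SNSM itself is not reproduced here.

```python
import random


def sample_negatives(neg_scores, num_pos, ratio=200, strategy="random", seed=0):
    """Return indices of negative locations kept for the confidence loss.

    neg_scores: predicted confidence of each negative location.
    num_pos:    number of positive locations.
    ratio:      negatives kept per positive (200 in the experiments above).
    """
    indices = list(range(len(neg_scores)))
    if strategy == "none":      # baseline: every location is trained
        return indices
    budget = min(len(neg_scores), ratio * num_pos)
    if strategy == "random":    # uniform sampling without replacement
        return sorted(random.Random(seed).sample(indices, budget))
    if strategy == "ohem":      # hardest negatives: highest scores first
        ranked = sorted(indices, key=lambda i: neg_scores[i], reverse=True)
        return sorted(ranked[:budget])
    raise ValueError(f"unknown strategy: {strategy}")


# Toy example with a small ratio so the effect is visible.
scores = [0.9, 0.1, 0.4, 0.8, 0.05, 0.3]
print(sample_negatives(scores, num_pos=1, ratio=2, strategy="ohem"))
print(len(sample_negatives(scores, num_pos=1, ratio=2, strategy="random")))
```

In the toy example, OHEM keeps the two highest-scoring negatives (indices 0 and 3), whereas random sampling keeps any two locations regardless of score.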

| Models | Sampling | BBox [email protected] | Image AUC | Video AUC | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOX-ContrastSwin | None (baseline) | 0.537 | 0.765 | 0.908 | 35.893 | 53.250 |
| YOLOX-ContrastSwin | Random | 0.527 | 0.847 | 0.933 | 35.893 | 53.250 |
| YOLOX-ContrastSwin | OHEM | 0.574 | 0.783 | 0.924 | 35.893 | 53.250 |
| YOLOX-ContrastSwin | SNSM (OURS) | 0.503 | 0.900 | 0.934 | 35.893 | 53.250 |

- Note: Although there is a decrease in box level AP, the proposed SNSM is able to significantly enhance the comprehensive metrics at image level and video level. Bold values represent the best values under each metric (column).

As can be seen from Table 4, after adopting SNSM, the bounding box AP decreases from 0.537 to 0.503, while the image-level and video-level AUC increase by 13.5 and 2.6 percentage points in absolute terms (from 0.765 to 0.900 and from 0.908 to 0.934), respectively. The bounding box-level AP decreases because, although SNSM alleviates ambiguity and increases recall, it provides fewer negative training samples in positive images, which leads to less accurate box positions. Figure 7 likewise shows that SNSM does not perform well in the high-precision, low-recall interval but gives the model relatively high precision in the high-recall interval. The results reveal that the predicted boxes deviate from the human-annotated boxes in positive frames (in both location and number), while the model generates fewer false positives in negative frames. This trade-off has greater practical value for smoke detection systems, where alarm accuracy for video clips is prioritized over box precision.

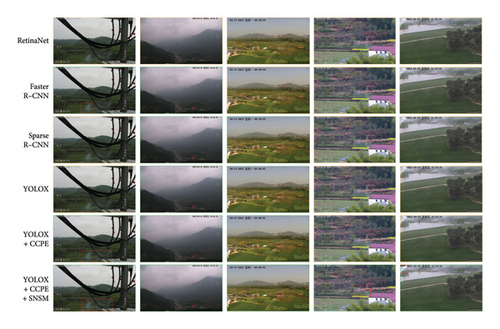

4.6. Comparison to Classical Detectors

It is customary to compare against classical methods. Because the code and datasets used in wildfire detection research are rarely publicly available, we do not compare against existing wildfire detection methods. Instead, we reproduce several classic general-purpose object detectors, namely YOLOV3 [35], YOLOX [44], RetinaNet [51], Faster R-CNN [37], and Sparse R-CNN [52], on our own training and test sets. As shown in Table 5, the proposed model outperforms all of these models on the image- and video-level classification metrics without a significant increase in parameters or computation. Its bounding box-level metric is slightly worse than that of the YOLOX models with the CSPDarkNet backbone in the third and fourth rows. There are two reasons for this. First, the location and extent of smoke objects are ambiguous, which makes accurate localization difficult. Second, SNSM reduces this ambiguity by reducing the contribution of negative instances in positive image samples, which improves the recall of fire alarms but degrades the localization of fire cues inside positive image samples. However, we believe that in the wildfire detection task, detecting the fire event and raising an alarm is the first priority, whereas locating the smoke or flame in the image is important but secondary.

| Models | Backbone | BBox [email protected] | Image AUC | Video AUC | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOV3 | DarkNet-53 | 0.421 | 0.588 | 0.823 | 61.529 | 139.972 |
| YOLOX | CSPDarknet small | 0.504 | 0.679 | 0.882 | 8.938 | 26.640 |
| YOLOX | CSPDarknet large | 0.506 | 0.712 | 0.900 | 54.209 | 155.657 |
| YOLOX | Swin tiny | 0.476 | 0.732 | 0.909 | 35.840 | 50.524 |
| RetinaNet | ResNet101 | 0.365 | 0.810 | 0.879 | 56.961 | 662.367 |
| Faster R-CNN | ResNet101 | 0.432 | 0.598 | 0.858 | 60.345 | 566.591 |
| Sparse R-CNN | ResNet101 | 0.505 | 0.852 | 0.914 | 125.163 | 472.867 |
| OURS | Swin tiny | 0.503 | 0.900 | 0.934 | 35.893 | 53.250 |

- Note: The proposed model outperforms all existing models in both image- and video-level metrics. Bounding box level [email protected] is slightly worse than the YOLOX model with CSPDarkNet backbone network. Bold values represent the best values under each metric (column).

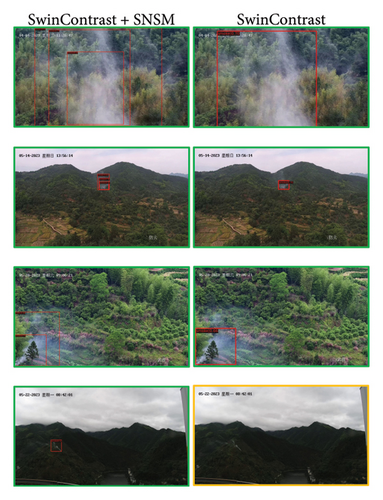

4.7. Visualization Analysis

Figure 8 shows the detection results of the proposed model and the baseline models on samples from the SKLFS-WildFire test dataset. The score threshold is set to 0.5. A green image border indicates that the wildfire smoke objects are correctly detected, an orange border indicates that they are missed, and the red bounding boxes in the images are the smoke detection results. As the figure shows, the proposed model has a large advantage in recall, which derives from SNSM alleviating the ambiguity in the location and extent of smoke boxes. However, as the last row shows, the high recall comes at the cost of multiple repeated detection boxes in the smoke images, and these boxes are difficult to suppress with NMS. This phenomenon indirectly confirms that the positional ambiguity of smoke objects does exist. Generating multiple detection boxes for a single smoke instance lowers bounding box-level metrics such as [email protected], which is exactly the problem shown in Tables 4 and 5.
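
To illustrate why such boxes survive NMS, the sketch below runs a standard greedy NMS on a toy case. The box coordinates, scores, and the IoU threshold of 0.5 are illustrative assumptions, not values taken from our experiments. A near-duplicate of the large box is suppressed, but two partial boxes that cover different parts of the same plume overlap too little with the other boxes to be removed.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS: keep a box unless it overlaps a higher-scoring kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


# One plume: a full box, a near-duplicate, and two partial boxes.
boxes = [(0, 0, 100, 100), (2, 2, 100, 100), (0, 0, 60, 60), (50, 50, 100, 100)]
scores = [0.9, 0.85, 0.8, 0.7]
print(nms(boxes, scores))  # indices of the boxes that survive
```

Here the partial boxes have IoU values of 0.36 and 0.25 with the full box, so three detections remain for what an annotator would label as a single smoke instance.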

5. Deployment and Limitations

This section details our systematic integration of the wildfire smoke detection model (Swin Tiny variant) into the operational pipeline, including methodology for converting model outputs into actionable platform alerts. We also conduct a critical evaluation of the system’s inherent limitations. Such comprehensive technical disclosure not only facilitates a deeper comprehension of our framework’s practical implementation but also offers concrete implementation guidance for researchers and engineers aiming to adapt our algorithm into real-world surveillance systems operating under resource-constrained conditions.

5.1. Deployment

5.1.1. Postprocessing Strategies for Reliable Smoke Alarm Delivery

The raw output of a wildfire smoke detection model is not directly suitable for end-user applications. On the one hand, the complex backgrounds and numerous distractors in wildland scenes can result in a high false alarm rate when model predictions are used as is, which wastes firefighting resources because each detection requires manual verification. A typical forest fire monitoring PTZ (pan-tilt-zoom) camera covers a range of 3 to 10 km, with 5 km being a common value, so a single camera may monitor an area of approximately 80 square kilometers (π × 5² ≈ 78.5 km²). The coverage of inspection drones is even larger. Within such vast areas, the likelihood of false positives caused by confusing objects is nonnegligible; if detection results were forwarded directly to users, the system could generate hundreds or even thousands of false alarms per day. On the other hand, even in the event of a real wildfire, users do not expect frequent or redundant alerts. Typically, users prefer not to be alerted again within 10 minutes of a reliable alarm. The high-frequency outputs of object detection models, which often generate several detections per second, are therefore unacceptable in practical deployments.

To address these issues and enhance system reliability, we wrap the object detection model with lightweight postprocessing algorithms. First, a temporal smoothing strategy reduces false positives. For PTZ cameras, our system adopts a 6 s sliding window, and an alert is triggered only if more than 60% of the frames within the window contain smoke detections. For drones, the model analyzes every frame in real time during inspection. When smoke is detected, the drone suspends its flight and performs a “gaze” operation, observing the suspicious area for 6 s; as with PTZ cameras, an alert is triggered only if more than 60% of the frames within this window contain smoke detections.

Second, we introduce a regional cooling mechanism to suppress repeated alarms. After an alarm is issued, the system estimates the approximate location of the fire source based on the camera’s longitude, latitude, altitude, orientation, and geographic information system (GIS) map. No additional alerts are triggered within a predefined radius (e.g., 500 m) around this location for the next 30 minutes.

Lastly, several false alarm suppression rules are integrated into the system. In certain scenarios, we incorporate semantic segmentation models to identify and mask regions such as sky, water bodies, buildings, and factories. Smoke detection bounding boxes that exhibit high IoU with these regions (above certain thresholds) are classified as false positives. For frequently misidentified static objects such as walls, rocks, or roads, we define “ignore zones” in the system. These zones are excluded from consideration based on either their geographic coordinates or image-based matching results.
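
The temporal smoothing and regional cooling steps can be sketched as below. This is a minimal illustration under several assumptions, not the deployed implementation: the class name `SmokeAlarmFilter` is hypothetical, the fire-source location is assumed to be already projected to planar coordinates in meters (the GIS-based estimation is not shown), and the filter waits for 12 frames (6 s at two analyzed frames per second) before it evaluates the 60% rule.

```python
import math
from collections import deque


class SmokeAlarmFilter:
    """Temporal smoothing plus regional cooling for per-frame detections."""

    def __init__(self, window_s=6.0, min_ratio=0.6, min_frames=12,
                 cool_radius_m=500.0, cool_time_s=30 * 60):
        self.window_s = window_s            # sliding-window length (6 s)
        self.min_ratio = min_ratio          # alert if more than 60% of frames hit
        self.min_frames = min_frames        # assumed: 6 s at 2 analyzed frames/s
        self.cool_radius_m = cool_radius_m  # cooling radius (e.g., 500 m)
        self.cool_time_s = cool_time_s      # cooling period (30 minutes)
        self.frames = deque()               # (timestamp_s, has_smoke)
        self.alarms = []                    # (timestamp_s, x_m, y_m)

    def _window_is_positive(self, now):
        # Discard frames that have slid out of the window.
        while self.frames and now - self.frames[0][0] >= self.window_s:
            self.frames.popleft()
        if len(self.frames) < self.min_frames:
            return False
        hits = sum(1 for _, has_smoke in self.frames if has_smoke)
        return hits / len(self.frames) > self.min_ratio

    def _is_cooling(self, now, x_m, y_m):
        # True if a recent alarm was already raised near this location.
        return any(now - t < self.cool_time_s
                   and math.hypot(x_m - ax, y_m - ay) <= self.cool_radius_m
                   for t, ax, ay in self.alarms)

    def update(self, now, has_smoke, x_m=0.0, y_m=0.0):
        """Feed one analyzed frame; return True if an alert should be sent."""
        self.frames.append((now, has_smoke))
        if not self._window_is_positive(now) or self._is_cooling(now, x_m, y_m):
            return False
        self.alarms.append((now, x_m, y_m))
        return True


# Persistent smoke at 2 frames/s: one alert once the window fills, then silence.
f = SmokeAlarmFilter()
alerts = [f.update(i * 0.5, True) for i in range(40)]
print(alerts.index(True), sum(alerts))
```

In this simulation the single alert is issued at the twelfth frame, and all later frames at the same location fall inside the cooling region. A detector that fires on only every other frame (50% of the window) never reaches the 60% threshold and produces no alert.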

5.1.2. Deployment on Embedded AI Platforms

Our model is primarily deployed on intelligent PTZ cameras and edge computing boxes (for drones, data are transmitted to a ground-based edge box for processing). The smart PTZ cameras are integrated with the Rockchip RK3588 SoC, which features a 6 tera operations per second (TOPS) NPU. The edge computing boxes are equipped with the Jetson Orin Nano module, providing 36 TOPS of AI compute. Both platforms support INT8 and INT16 inference modes. We apply posttraining quantization to convert the original floating-point model into a hybrid INT8/INT16 version. The quantized model maintains performance fidelity, with all metrics deviating by no more than 0.08% from the original float model. On the RK3588 platform, we employ the RKNN inference backend to enable parallel processing. Empirical testing shows a per-frame inference latency of 116 ms with an input resolution of 640 × 640, and memory usage remains under 1.2 GB. On the Jetson Orin Nano, we use ONNX Runtime as the frontend, paired with TensorRT/CUDA as the backend for acceleration. This configuration achieves a per-frame inference latency of 89 ms at the same input resolution. Our wildfire smoke detection system operates with a frame-skipping strategy, analyzing two frames per second. Under this setting, the inference speed comfortably meets real-time processing requirements. Field tests demonstrate that during continuous 24-hour operation, the average power consumption—including both model inference and postprocessing—is 3.9 W on the RK3588 and 7.7 W on the Jetson Orin Nano.
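
The real-time margin implied by these figures can be checked with simple arithmetic. The sketch below assumes a single video stream processed sequentially and ignores postprocessing time; the helper names are ours.

```python
def duty_cycle(latency_ms, fps):
    """Fraction of each second spent on inference at the given frame rate."""
    return latency_ms / 1000.0 * fps


def daily_energy_wh(avg_power_w, hours=24):
    """Energy drawn over a day at the reported average power."""
    return avg_power_w * hours


platforms = [("RK3588", 116, 3.9), ("Jetson Orin Nano", 89, 7.7)]
for name, latency_ms, power_w in platforms:
    print(f"{name}: {duty_cycle(latency_ms, 2):.1%} busy, "
          f"{daily_energy_wh(power_w):.1f} Wh/day")
```

At two analyzed frames per second, inference occupies roughly 23% of the time budget on the RK3588 and 18% on the Jetson Orin Nano, and the reported average power corresponds to about 94 Wh and 185 Wh per day, respectively.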

5.2. Limitations

5.2.1. SNSM-Induced Inaccuracies

The proposed SNSM mitigates the assignment of negative labels to regions perceived as smoke-containing by humans but conflicting with ground truth annotations during training, thereby enhancing smoke box recall. However, a limitation of SNSM lies in the generation of numerous plausible yet annotation-inconsistent detection boxes within positive images. This phenomenon stems from a lack of negative instance supervision within positive training images. Figure 9(a) illustrates typical samples exhibiting such prediction inaccuracies. Theoretical analysis reveals that when the sum of parameters α1 and α2 remains constant (i.e., fixed positive-negative instance ratio during training), increasing the α1/α2 ratio implies more negative instances sampled from positive images. This improves spatial prediction accuracy at the cost of heightened training ambiguity and reduced recall for smoke-containing images/videos. Conversely, decreasing the α1/α2 ratio amplifies SNSM’s impact, leading to less accurate box localization in positive images while enhancing image/video-level recall. This trade-off offers configurable solutions for diverse application requirements: smaller α1/α2 ratios should be prioritized when timely wildfire alerting is critical, whereas larger ratios are preferable when precise box localization is needed to derive fire point coordinates.
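
The effect of the α1/α2 ratio on the sampling budget can be illustrated with a small sketch. It assumes, following the description above, that α1 scales the number of negatives drawn from positive images and α2 the number drawn from negative images, both relative to the number of positive instances. The function name and the numeric values (a sum of 200 and 8 positives) are purely illustrative.

```python
def negative_budget(num_pos, alpha1, alpha2):
    """Split the negative-sample budget between positive and negative images.

    Assumption: alpha1 scales negatives drawn from positive images and
    alpha2 scales negatives drawn from negative images, both relative to
    the number of positive instances.
    """
    return {"from_positive_images": round(alpha1 * num_pos),
            "from_negative_images": round(alpha2 * num_pos)}


total = 200  # illustrative fixed sum alpha1 + alpha2
for alpha1 in (10, 50, 100):
    print(alpha1, negative_budget(num_pos=8, alpha1=alpha1, alpha2=total - alpha1))
```

With the sum fixed, the total number of negatives stays at 1600 in every row; only the share taken from positive images changes, which is the knob that trades localization accuracy against image- and video-level recall.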

5.2.2. Fog

The most critical issue is false alarms caused by fog. As shown in Figure 9, the model may produce incorrect detections in images containing fog. Although expanding the training dataset can alleviate this problem, the high visual similarity between fog and smoke means that more data alone cannot solve it fundamentally. We propose two remedies: (1) integrate meteorological information (precipitation, atmospheric humidity) to filter wildfire alarms, as fog typically forms under specific weather conditions; and (2) adopt spatiotemporal smoke detection models, since fog and smoke look similar but differ in their motion characteristics, which spatiotemporal approaches can exploit to reduce false positives. Because a single paper cannot resolve all issues, we leave comprehensive solutions to future work.

5.2.3. Smoke-Like Objects

Besides fog, specific objects may also trigger false alarms. Figure 9 illustrates typical examples. Water surface reflections are a common source of interference: at certain angles, reflections can resemble smoke. Plant leaves and flowers may also cause false detections. Although humans perceive these objects as distinct from smoke, models remain limited in generalization performance in open scenarios. Notably, such errors occur across detection algorithms. Based on statistical metrics and subjective observations, our method exhibits fewer such errors, primarily because of our more diverse training dataset and the transformer model’s robust scene understanding capabilities.

5.2.4. Small Objects

The proposed model demonstrates strong small object detection capabilities. As shown in Figure 9, small objects often appear at long distances (several kilometers or more). Our model can detect even faint smoke that is undetectable to the human eye. However, the weak visual cues of small objects can cause detections to be dropped in some frames of a video sequence. We recommend (1) model improvements, such as using high-resolution networks for detail extraction or motion information to compensate for limited spatial features, and (2) engineering solutions, such as increasing the focal length to confirm small smoke objects.

5.2.5. Diffused Smoke

Image-based smoke detection relies on color and transparency contrasts between smoke and background. Fully diffused, contour-less smoke is inherently undetectable. Figure 9 shows detection results under varying diffusion levels. Detection capability depends critically on diffusion degree: our CCPE module effectively captures spatial contrast, enabling robust detection even in severe diffusion cases (left). However, completely homogeneous smoke remains undetectable (right).

5.2.6. Divergent Perspectives

To evaluate performance under different viewpoints (particularly vertical overhead views), we flew drones directly above smoke (as shown in Figure 9(b)). The model consistently detected smoke. This is because natural wind-driven smoke moves obliquely upward rather than vertically, which makes complete smoke contours highly likely to be visible across viewpoints.

5.2.7. Lighting Conditions

Since cameras use overhead viewpoints with automatic exposure control, natural daylight intensity has no impact. Our smoke detection model applies only to daytime normal lighting conditions and cannot operate at night. Wildfire detection at night typically employs thermal imaging devices to detect open flames rather than smoke.

6. Conclusion

The performance of the transformer model in wildfire detection is not significantly better than that of CNN-based models, which deviates from the mainstream understanding of general computer vision tasks. Through analysis, we find that the transformer excels at capturing long-distance global context but is poor at modeling the texture, color, and transparency of images, and these low-level details are the main cues for discriminating fire smoke. Therefore, this paper proposes a CCPE module to improve the Swin Transformer. Experiments show that the CCPE module can significantly improve the performance of Swin in smoke detection.

Another main difference between smoke detection and general object detection is that the range of smoke is difficult to determine, and it is difficult to distinguish single or multiple instances of smoke. The assignment mechanism of negative instances in traditional object detectors can lead to contradictory supervision signals during training. Therefore, this paper proposes SNSM, which separates positive and negative image samples and uses different mechanisms to sample negative instances to participate in training. Experiments show that SNSM can effectively improve the recall rate of smoke, especially in the image-level and video-level metrics. However, the method proposed in this paper detects smoke based on static images, and through analysis, it is found that with this method, it is difficult to eliminate the interference of cloud and fog between mountains. Building a fire detection model based on spatiotemporal information is the next step.

Further, we released the SKLFS-WildFire test dataset, a new early wildfire dataset of real scenes that supports comprehensive evaluation of wildfire detection models at three levels: bounding box, image, and video. Its publication provides a fair comparison benchmark for future research, whether on detection or classification and whether based on static images or video spatiotemporal schemes, and should boost the development of the wildfire detection field.

Disclosure

The preprint is available on arXiv and can be accessed at https://arxiv.org/pdf/2311.10116.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Chong Wang: conceptualization, formal analysis, investigation, methodology, software, validation, visualization, and writing – original draft. Chen Xu: data curation, validation, visualization, and writing – review and editing. Adeel Akram: validation and writing – review and editing. Zhong Wang: project administration. Zhilin Shan: resources. Qixing Zhang: conceptualization, funding acquisition, resources, and supervision.

Funding

This research was supported by National Natural Science Foundation of China, 32471866; Anhui Provincial Science and Technology Major Project, 202203a07020017; Central Government-Guided Local Science and Technology Development Funds of Anhui Province, 2023CSJGG1100; Key Project of Emergency Management Department of China, 2024EMST010101.

Acknowledgments

The numerical calculations in this paper have been done on the supercomputing system in the Supercomputing Center of University of Science and Technology of China. We thank the center for providing computational resources and technical support.

Open Research

Data Availability Statement

The data that support the findings of this study are openly available on GitHub at https://github.com/WCUSTC/CCPE.