2D Spatiotemporal Hypergraph Convolution Network for Dynamic OD Traffic Flow Prediction

Abstract

Predicting origin-destination (OD) flow presents a significant challenge in intelligent transportation due to the intricate dynamic correlations between starting points and destinations. Although existing OD prediction methodologies leveraging graph neural networks have demonstrated commendable performance, they often struggle to address the complexities inherent in two-sided correlations. To address this gap, this paper introduces a novel approach, the 2D spatiotemporal hypergraph convolution network (2D-HGCN), designed specifically for forecasting OD traffic flow. Our proposed model employs a two-stage architecture. Initially, temporal characteristics of traffic flow between OD pairs are captured using a 1D convolution neural network (1D-CNNs). Subsequently, a 2D hypergraph convolutional network is introduced to uncover spatial correlations in OD flow patterns. The unique aspect of our 2D-HGCN lies in its dynamic hypergraph, which evolves over time, enabling the model to adaptively learn changing spatial dependencies. Experimental evaluation conducted on real-world datasets highlights the efficiency of our suggested model for predicting OD flows. Our results demonstrate a promising predictive performance, showcasing the ability of the 2D-HGCN to effectively capture the intricate dynamics of OD traffic flow.

1. Introduction

Applications for ride-hailing, such as DiDi, UCAR, and Uber, are now broadly embraced for daily travel necessities. These platforms aim to offer convenient ride services to passengers and enhance public transportation efficiency. For example, in Beijing alone, DiDi logs millions of requests for taxis each day. To offer unparalleled services and secure financial success, platforms for ride-hailing require immediate understanding of customer needs. They must efficiently assign orders to service vehicles ahead of time to meet passenger mobility patterns and maximize profits by identifying popular and profitable routes from historical passenger demand data, thus minimizing empty rides. Therefore, rather than just predicting passenger demand quantities within a region, understanding passenger demands in terms of trip origins and destinations is crucial. This information not only reflects passenger demand strength but also serves as a foundation for mining valuable mobility patterns. Origin-destination (OD) flow prediction is very important for understanding passenger travel demand at both local region and global city network.

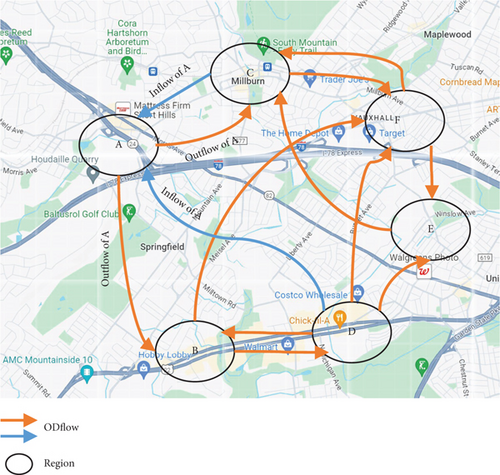

Effectively predicting traffic flow within urban areas has become a fundamental task in the domain of intelligent transportation systems. Urban traffic flow encompasses inflow and outflow, indicating the passenger count traveling to or from the target regions, and OD flow, indicating passenger travel between stations. Figure 1 clearly shows the OD traffic flow between different parts of the city, while observing the figure, we can find that some interregional traffic flows show similar patterns. These similar patterns may reflect similar travel habits or travel demand. Despite the emphasis on predicting inflow or outflow, there has been inadequate attention directed toward OD flow prediction. In addition, we can see that there are complex higher order correlations between the origin and the destination. These correlations can include multihop flows and interdependencies between multiple O-D pairs, which are influenced by temporal dynamics, spatial proximity, and contextual factors such as public events or traffic conditions. There are also complex correlations between origins and origins and between destinations and destinations. These higher order dependencies reflect the interconnected nature of urban mobility flows and highlight the need for models capable of capturing these complex patterns.

- 1.

Firstly, they struggle to handle complex two-sided correlations. For the OD flow problem, there exist correlations among both starting points and destinations. Existing approaches only consider correlations among origins and disregard those among destinations.

- 2.

Additionally, these methods tend to utilize the predefined graph to capture spatial relationships. For instance, a deep learning method based on GCNs for ride-hailing networks establishes connections between regions considering distance and traffic volumes. But spatial correlations designed manually may not accurately represent the changes in spatial interdependencies caused by diverse factors, potentially resulting in bad prediction outcomes.

- •

To better to model complex two-sided correlations between the starting point and destination, dynamic hypergraphs is introduced in our method for OD traffic flow prediction.

- •

2D spatiotemporal hypergraph convolution is designed for OD traffic flow prediction. It leverages hypergraph-based spectral convolution and temporal convolution layers to achieve accurate predictions.

- •

Experimental outcomes from tests on two public OD datasets confirm our model’s efficacy.

2. Related Works

2.1. Traffic Demand Forecasting

Over the course of several decades, the realm of OD flow prediction has been extensively explored, yielding a plethora of proposed models to grapple with this multifaceted issue. A prominent strategy for addressing the complexities of static OD prediction problems centers on the application of the gravity model. Nonetheless, the model’s efficacy is fundamentally constrained by the dynamic and nonlinear attributes characterizing transportation flows, which transcend the capacities of its underlying mathematical framework. Consequently, to address these challenges, statistical models like generalized least squares (GLS), maximum likelihood (ML) estimation, and Bayesian methodologies have emerged as standard tools for navigating the intricacies of OD estimation and prediction. For example, GLS is designed to reduce the discrepancy between predicted and actual flows [1, 2], whereas ML estimators aim to enhance the probability of observed flows by considering the estimated OD flows [3]. Within the Bayesian framework, the posterior probability is calculated through the amalgamation of prior probability expressions derived from OD flows and the likelihood of link flows, conditioned on estimated OD flows. The Bayesian approach [4, 5] diligently endeavors to unveil OD flows that maximize this posterior probability.

In recent years, the advent of deep neural networks has demonstrated their effectiveness in approximating nonlinear features across a diverse array of applications, encompassing classification, regression, and control problems [6, 7]. Simultaneously, supervised learning has firmly established its pivotal position within the domain of deep learning. Convolutional neural networks (CNNs) excel in discerning spatial patterns, while RNNs have proven to be adept at capturing temporal patterns [8, 9]. Considering that transportation systems often take the shape of nodes and links, the prominence of graph-structured information is notably evident in this field. Notably, graph neural networks (GNNs) have garnered recognition for their effectiveness in handling such data [10]. Particularly, GCNs leverage the adjacency matrix to represent node connections, [11], a strategy that expedites convergence and bolsters predictive performance by incorporating network topology.

While a substantial body of literature has addressed zone-based demand prediction, there has been comparatively limited attention directed toward OD-based demand prediction. In a seminal work by Liu et al. [12], they unveiled a contextual spatial-temporal framework for forecasting OD taxi demand across New York. This innovative approach involved dividing the city into grid segments and using a three-dimensional matrix, where two dimensions correspond to the city’s geographical breadth and length, and the third dimension represented the total number of squares. This matrix proficiently encoded OD information, facilitating the ability of various CNN architectures to effectively capture spatial dependencies among square-shaped regions. Furthermore, in a notable study by Wang et al. [13], the calculation of the OD matrix was achieved using multitask learning based on grid embedding. This module played a vital role in acquiring preweighted features, involving the modeling of passengers’ spatial mobility patterns. Following this, the features were incorporated into the multitask learning framework to forecast upcoming OD demand. Moreover, Xiong et al. [14] introduced an innovative method that seamlessly integrated line GCN and Kalman filter techniques, the technique aimed at predicting OD demand across the entirety of the traffic network.

Deep learning–based OD prediction methods have achieved good performance. However, the complex and high-order correlations between origins and destinations are not well utilized, which limits the improvement of prediction performance. Furthermore, these models tend to rely on predefined static graph structures to capture spatial relationships among stations.

2.2. Hypergraph Learning

The need for addressing certain limitations inherent in graph representations, particularly their inability to adequately capture multiple associations, has led to the development of hypergraph learning theory. Hypergraph, initially a mathematical concept, encompasses the conventional graph as a specific case with a fixed order of two. Hypergraph learning was first introduced to the field of computer science by Zhou et al. [15]. Their pioneering work extended from the normalized graph cut and random walk theory to derive a normalized hypergraph cut and express the hypergraph Laplacian. Furthermore, they introduced a methodology for spectral clustering based on hypergraphs and put forth algorithms for transductive classification in their research article.

Building on the foundation laid by Zhou’s work, hypergraph learning methods have found gradual adoption in addressing clustering and classification challenges. Notable instances include the application of geometric hypergraph learning by Arya et al. [16] for social information classification and inhomogeneous hypergraph clustering by Li et al. [17] for network motif clustering. In addition, Li et al. [18, 19] introduced hypergraphs into the object counting domain to alleviate the problems of uneven object distribution and feature alignment. Leordeanu and colleagues [20] improved image matching by employing a semisupervised hypergraph learning approach, which increased resilience to changes in scale, distortions, and anomalies. In different domains, Su et al. [21] utilized vertex-weighted hypergraphs for classifying multiview 3D objects, integrating algorithms for hypergraph partitioning. Within a similar framework, Guo et al. [22] expanded the hypergraph partitioning to design algorithms for person reidentification. Their work introduced innovative hypergraph fusion methods, offering new perspectives on feature fusion.

It is noteworthy that hypergraph learning methods are progressively merging with GNN techniques, leading to the development of novel approaches. Gao et al. [23] and Liao et al. [24] have introduced methods for training GNNs on hypergraphs. These approaches, when applied to citation classification using the CORA dataset, have demonstrated improved accuracy. Wang et al. [25] extend hypergraph convolution to multitask learning for traffic prediction.

Hypergraph representation learning has been proven to be effective in addressing clustering and certain classification challenges, showcasing its capacity to capture high-order associations and its potential in the realm of hypergraph learning.

3. Methodology

In Figure 2, the fundamental concept of the proposed method revolves around the utilization of hypergraph representation to address the OD flow prediction challenge. This involves deriving hypergraph spectral convolution techniques based on the principles of hypergraph learning theory. Subsequently, a 2D hypergraph neural network framework is meticulously designed, incorporating dynamic mechanisms, to effectively accomplish the prediction of the OD matrix.

3.1. Overview

In the OD matrix, both regional and worldwide spatial interrelationships intertwine and exist on both ends of the source and destination. These OD flows are dynamic over time and encompass inherent spatial interconnection. We utilize the hypergraph representation of higher order connections to capture such spatial interdependence. Additionally, there exist correlations between origins and destinations that necessitate simultaneous modeling; thus, we leverage 2D-HGCN to capture both.

Furthermore, the travel patterns exhibited by individuals create a dynamic system. For example, individuals engage in commuting between their place of work and residence on weekdays, resulting in a cyclic travel pattern. This correlation undergoes dynamic fluctuations over time.

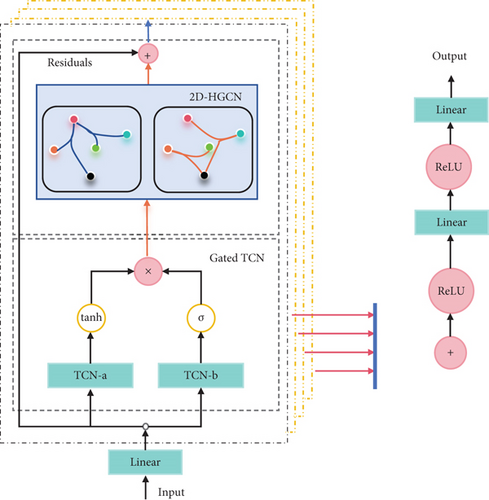

Figure 3 presents the model’s architecture. The input data consists of OD flow data and the graph structure. Initially, we construct dynamic hypergraphs based on the input data. Following this, both hypergraph Laplacian matrices and OD flow data are introduced into the 2D-HGCN. This network incorporates spatiotemporal blocks that integrate hypergraph convolution layers and temporal convolution layers. These combined layers effectively capture both spectral and temporal features associated with each vertex in the hypergraphs. Ultimately, the model proposed in this study produces predictions at the node level.

3.2. Dynamic Hypergraph Construction

In our method, hypergraph construction involves two main procedures: the construction of the primary hypergraph and the dynamic hypergraph . Algorithm 1 shows the process of hypergraph construction.

The primary hypergraph serves as the foundational representation of the network structure, while the dynamic hypergraph provides additional spatial insights into OD patterns for forecasting purposes. The acronym “OD” standing for origin-destination is a standard terminology in transit studies, used to analyze travel patterns in a specific area over temporal dimensions. As passenger travel patterns undergo dynamic changes, corresponding variations manifest in their OD relationships. To address this dynamism, we propose a novel approach utilizing diverse hypergraphs to capture a broader spectrum of temporal features.

An analogous computation can be applied to ascertain the correlation between two destinations. The filtering parameters of the hypergraph convolutional network (HGCN) are shared across diverse time points, concurrently employing distinct hypergraphs for different time slots and employing separate hypergraphs for both origins and destinations.

-

Algorithm 1: Dynamic hypergraph construction.

-

Algorithm 1 Dynamic Hypergraph Construction

-

Input: Traffic tensor X(h), Hypergraph incidence matrix H, Hyperedge set Eh, Vertices set V

-

Output: Dynamic hypergraph

-

Procedure: Construction of Dynamic Hypergraph

-

Initialize empty hyperedge set

-

for each time slot t do

-

Compute 24-hour OD tensor X(h)(⋅, ⋅, t)

-

for each origin i1 and i2 do

-

Compute cosine similarity

-

if then Adjust threshold as needed

-

Add hyperedge to

-

for each destination j1 and j2 do

-

Compute cosine similarity

-

-

if then

-

Add hyperedge to

-

end if

-

end for

-

end if

-

end for

-

end for

-

return

3.3. 2D Hypergraph Convolution

In our methodology for OD flow prediction, we leverage a graph-based neural network. Nonetheless, applying graph convolution directly to hypergraphs presents challenges. Spectral hypergraph convolution is derived by integrating principles from hypergraph neural networks and traditional spectral graph theory.

3.4. Dynamic Spatiotemporal Hypergraph Neural Networks

Following the introduction of spectral hypergraph convolution, our focus transitions to exploring temporal convolution for capturing temporal flow features. Subsequently, we formulate spatiotemporal convolution blocks, which constitute the central component of our comprehensive framework.

-

Algorithm 2: 2D hypergraph convolution.

-

Algorithm 2 2D Spatio-Temporal Hypergraph Neural Networks for OD Flow Prediction

-

Input: Historical OD flow data matrix, OD_data (size: time t), Hypergraph representation parameters: V, E, ω, Temporal sequence length, n

-

Output: Predicted OD flow matrix at future time

-

Procedure: Construction of Dynamic Hypergraph

-

1. Construct the primary hypergraph H0 using V, E, and ω.

-

2. Construct the dynamic hypergraph 𝐻𝑑 to capture additional spatial information.

-

3. Apply spectral hypergraph convolution Hd with a trainable filter g.

-

4. Implement efficient computation using Chebyshev polynomial approximation.

-

5. Construct a 2D Hypergraph Convolutional Network (2DHGCN) for processing a 2D signal on graphs:

-

a. Apply hypergraph convolution (HGCN) to both origins and destinations.

-

b. Use dilated causal convolution as the temporal convolution layer (TCN).

-

c. Stack dilated causal convolution layers with increasing dilation factors.

-

d. Integrate a Gated TCN to capture intricate temporal dependencies.

-

6.Propagate temporal features through multiple layers of 2DHGCN.

-

7. Use a linear regression layer to aggregate feature dimensions for predicted traffic matrix.

-

end procedure

-

for each origin i1 and i2 do

-

Compute cosine similarity

-

if then Adjust threshold as needed

-

Add hyperedge to

-

for each destination j1 and j2 do

-

Compute cosine similarity

-

-

if then

-

Add hyperedge to

-

end if

-

end for

-

end if

-

end for

-

return

3.5. Loss Function

The selection of MAE offers several advantages. Firstly, it is robust to outliers due to its use of absolute differences, which is particularly important in traffic flow prediction where abnormal events such as accidents or construction may influence traffic conditions. Secondly, MAE provides an intuitive measure of the average discrepancy between model predictions and actual observations, enhancing interpretability. Lastly, MAE is a convex function, contributing to the stability of the optimization process.

In summary, choosing MAE as the loss function for our model ensures that reduces the average absolute difference between predicted and actual OD flow values, consequently enhancing its predictive efficacy.

3.6. Overall Algorithm

As shown in Algorithm 2, this paper introduces a dynamic spatiotemporal hypergraph neural network algorithm for predicting OD demand. The algorithm begins by establishing the primary hypergraph structure to capture fundamental network topology. Subsequently, spectral hypergraph convolution is applied for spatial feature learning, coupled with a dynamic hypergraph construction method to reveal additional spatiotemporal information behind passenger OD patterns. The integration of 2D hypergraph convolution effectively processes two-dimensional signals, capturing crucial spatiotemporal features. Temporally, dilated causal convolution captures node-specific temporal trends, and a gated TCN regulates information flow. Finally, multiple layers of 2D hypergraph convolution propagate the extracted spatiotemporal features, culminating in linear regression for predicting traffic matrices. This algorithm combines spatiotemporal hypergraph learning and CNNs, providing an effective solution for traffic flow prediction.

4. Experiments and Analysis

4.1. Datasets

Validating the proposed model involves utilizing two real OD flow datasets, specifically JAP(47) and NYC_TAXI, which are the historical OD flow data of Japan and New York, respectively.

The JAP refers to the Japan OD flow dataset collected in 2021, comprising a significant volume of human GPS trajectory data encompassing 5 million individuals across Japan’s 47 prefectures. The data attributes include anonymous IDs, timestamps, longitudes, latitudes, accuracy, and operating system types. The raw data file for a single month comprises approximately 1 TB in CSV format, with around 180 GPS records per user per day. After undergoing data cleaning and trip segmentation processes, each ID is associated with an average of 10 GPS records, corresponding to either origin or destination locations. The time period selected for analysis is from January 1, 2020, to February 28, 2021 (425 days) as the target time frame.

The NYC_TAXI dataset consists of 22,349,490 taxi trip records from NYC in 2015 (NYC 2017b), spanning from January 1, 2015, to March 1, 2015. We partitioned the entire city into 10 × 20 regions, with each region roughly measuring 1 km×1 km. Each time interval is set to 30 min in length.

4.2. Evaluating Indicator

4.3. Experimental Settings

To achieve a more manageable distribution, we logarithmically transform the original OD tensor using the natural logarithm. The data is partitioned into training, validation, and test datasets with a ratio of 6.4:1.6:2, respectively. For the training process, the optimizer selected is Adam, with a batch size of 16 and a learning rate of 0.0001. The training algorithm is designed to terminate early if the validation error converges within 20 epochs or stop after 200 epochs. Both the observation step (α) and prediction step (β) are set to 7, indicating the utilization of the past 1 week of observations.

4.4. Baselines

- 3.

ST-ResNet [26]: ST-ResNet is a deep learning–based approach, effectively addresses the challenging task of forecasting urban crowd flow by integrating spatiotemporal and external factors

- 4.

PCRN [27]: Built on ConvGRU, PCRN learn recent prediction data and periodic modes.

- 5.

ST-GCN [28]: A novel deep learning framework designed for time series prediction in the traffic domain. By formulating the problem on graphs and employing complete convolutional structures, ST-GCN efficiently captures spatiotemporal dependencies in traffic flow.

- 6.

DCRNN [29]: DCRNN is a typical traffic prediction method that use diffusion graph and gated recurrent unit to learn the spatial-temporal features.

- 7.

Graph WaveNet [30]: Graph WaveNet is an adaptive spatial-temporal prediction architecture for graph constructing, addressing limitations of predefined graph structures by accurately capturing hidden spatial dependencies and demonstrating superior performance on transportation network datasets.

- 8.

MPGCN [31]: MPGCN is a novel approach for OD flow prediction in intelligent transportation, adeptly addresses dynamic correlations and complex spatial dependencies by combining LSTM [32] for temporal feature extraction and a two-dimensional GCN.

- 9.

ODCRN [33]: It is composed of two learning modules: a predefined static graph learning module (e.g., adjacency matrix) as auxiliary input and a dynamic graph constructor (DGC) that obtain OD graph pairs dynamically based.

4.5. Forecast Results and Analysis

Tables 1 and 2, respectively, present the performance evaluation results of our proposed dynamic hypergraph OD passenger flow prediction model compared to other models. The results in two tables denote that 2D-HGCN outperforms baselines in various evaluation metrics, especially showing significant performance improvements on the NYC_TAXI dataset with a large number of nodes.

| Model | MAE | RMSE |

|---|---|---|

| ST-ResNet | 0.2822 | 0.4060 |

| PCRN | 0.2864 | 0.4044 |

| ST-GCN | 0.2910 | 0.4070 |

| DCRNN | 0.2954 | 0.4102 |

| Graph WaveNet | 0.2887 | 0.4040 |

| MPGCN | 0.2859 | 0.4011 |

| ODCRN | 0.2802 | 0.3947 |

| 2D-HGCN | 0.2731 | 0.3854 |

| Model | MAE | RMSE |

|---|---|---|

| ST-ResNet | 1.7567 | 2.6609 |

| PCRN | 1.7072 | 2.6414 |

| ST-GCN | 1.3679 | 2.6528 |

| DCRNN | 1.3260 | 2.5211 |

| Graph WaveNet | 1.3360 | 2.5548 |

| MPGCN | 1.3876 | 2.6752 |

| ODCRN | 1.3854 | 2.6604 |

| 2D-HGCN | 1.1749 | 2.4630 |

Specifically, the performance on the JAP dataset is as follows: ST-ResNet model and PCRN model use CNN to model spatial correlations, but their performance is limited due to the challenges of capturing non-Euclidean spatial correlations by CNN. ST-GCN model and DCRNN model include spatiotemporal modules, but their spatial modeling is restricted to predefined origin-level spatial modeling, overlooking comprehensive spatial relationships. Graph WaveNet uses adaptive matrices to further model spatial relationships. MPGCN model attempts to model spatial correlations and temporal correlations of OD data separately using graph convolution and LSTM, but graph convolution performs poorly in handling complex spatial correlations, and LSTM fails to capture the temporal dependencies of OD passenger flows effectively. In contrast, the ODCRN model employs two adaptive matrices to model spatial correlations at both origin and destination levels, resulting in improved predictive performance. However, the DHGCN (dynamic hypergraph) model achieves the best performance, further demonstrating the superior ability of hypergraphs to capture high-order spatial correlations.

It is worth noting that both the MPGCN and ODCRN models perform poorly on the NYC_TAXI dataset, which has a large number of nodes. The MPGCN model struggles to effectively model spatial correlations in the large-scale data using graph convolution, while the adaptive matrices used in the ODCRN model do not adequately capture the spatial relationships in data with numerous nodes, indicating limitations in the design of the adaptive matrices for modeling spatial correlations in large-scale data. Nevertheless, the dynamic hypergraph model continues to achieve the best results, further highlighting its exceptional capability in capturing high-order correlations.

4.6. Limitations

In a comprehensive analysis of the OD traffic prediction model, we have identified several notable advantages and, simultaneously, confronted significant challenges arising from its innovative use of hypergraph structures. The model endeavors to capture spatiotemporal dependencies more effectively, particularly in addressing the complexities associated with bidirectional correlations in OD traffic flow, by introducing hypergraph structures, specifically 2D hypergraph convolution. However, through a thorough examination, several key issues have surfaced, demanding more detailed investigation.

The model necessitates careful consideration in balancing complexity and efficiency. The introduction of intricate hypergraph structures may result in a decline in training and inference efficiency, a concern not to be overlooked in practical applications. For instance, the complexity of hypergraphs could potentially extend training times on large-scale datasets, thereby limiting the model’s practical utility. Therefore, a meticulous adjustment of model complexity is imperative to ensure enhanced performance without compromising efficiency. The initial attempt at employing hypergraph structures highlights an affirmative exploration of handling complex structures in OD traffic flow. Nevertheless, this signifies a need for additional efforts to optimize the construction of hypergraph structures in practical applications. For instance, while the model indicates advantages in complex scenarios, defining the specific attributes of “complexity” requires clarification through empirical research. Considering the diversity of urban landscapes, disparities in traffic flow structures across different regions necessitate specific scenario analyses to validate the model’s superiority in complex situations.

Regarding the applicability of hypergraph structures, the model underscores their advantages in dealing with intricate structures and asymmetric relationships. For example, in addressing scenarios with numerous one-way streets or traffic congestion in urban settings, the modeling capability of hypergraphs to capture asymmetric relationships may lead to more accurate predictions. Nevertheless, further insight is required to understand the specific scenarios where hypergraphs outperform traditional methods in practical applications. Moreover, the impact of model complexity on efficiency demands particular attention. The introduction of hypergraph structures may lead to a decrease in training and inference efficiency, especially in large-scale real-time applications. For example, during peak hours, exploring methodologies to maintain prediction accuracy while enhancing model real-time capabilities becomes a crucial avenue for in-depth investigation. Fine-tuning model complexity through detailed parameter adjustments and algorithm optimizations holds promise in alleviating efficiency concerns.

In conclusion, to unleash the full potential of the model, a deeper empirical investigation into its performance across various scenarios is imperative. Furthermore, detailed adjustments to hypergraph structures and parameter optimization are essential for enhancing the model’s robustness and practicality. Through concrete case studies and analyses of real-world scenarios, a more profound understanding of the model’s application potential in OD traffic prediction can be achieved, providing robust support for future research and practical implementation.

5. Conclusions

In this paper, we tackle the challenge of OD flow prediction by employing a dynamic hypergraph learning method and a 2D spatiotemporal hypergraph CNN. Our model capitalizes on the spatial correlation of travel OD to improve the precision of OD prediction. Through comprehensive experiments, we have demonstrated that 2D-HGCN surpasses the SOTA methods. Moving forward, we aim to refine the structure and parameters of our proposed approach and delve deeper into the theory of hypergraphs, exploring their potential applications in intelligent transportation systems.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the Changsha City Education Science “14th Five-Year Plan” Research Project (Grant No. CJK2024079), titled “Research on Generative Artificial Intelligence Empowering Learning Support Services and Implementation Pathways for Full-Time Vocational College Farmer Students.” The funder provided financial support for the design, data collection, and analysis of this study.

Open Research

Data Availability Statement

Data can be obtained by contacting the authors.