Solar Irradiance Forecasting Using Temporal Fusion Transformers

Abstract

Global climate change has intensified the search for renewable energy sources. Solar power is a cost-effective option for electricity generation, and accurate energy forecasting is crucial for efficient planning. While various techniques have been introduced for energy forecasting, transformer-based models are effective for capturing long-range dependencies in data. This study proposes an N-hour-ahead solar irradiance forecasting framework based on variational mode decomposition (VMD) for handling meteorological data and a modified temporal fusion transformer (TFT) for forecasting solar irradiance. The proposed model decomposes raw solar irradiance sequences into intrinsic mode functions (IMFs) using VMD and optimizes the TFT using a variable screening network and a gated recurrent unit (GRU)-based encoder–decoder. Our study targets the 1-h horizon as well as longer forecasting horizons for solar irradiance. The resulting deep learning model offers insights, including the prioritization of solar irradiance subsequences and an analysis of various forecasting window sizes. An empirical study shows that the proposed method achieves higher performance than other time series models, such as artificial neural network (ANN), long short-term memory (LSTM), CNN–LSTM, CNN–LSTM with temporal attention (CNN–LSTM-t), transformer, and the original TFT model.

1. Introduction

The recent increase in power consumption due to population and economic growth has led to rising demand for energy resources [1]. This demand can be met if energy resources are managed efficiently. The depletion of fossil fuels, the impact of climate change, and the need for energy independence have emerged as pressing global issues. To address these concerns, demand for renewable energy sources such as solar and wind power has increased substantially [2]. As a major renewable energy resource, solar power is projected to become a primary power source in the future due to its abundance and minimal carbon emissions. Solar energy intensity differs across the globe, from the equator to the polar regions [3]. Fortunately, the technology required for converting solar energy to electricity is widely available. Moreover, investment costs are projected to decrease by 59% by 2025 [4]. In addition, cumulative electric power generation is expected to increase to 36.5 kWh by 2040 [5].

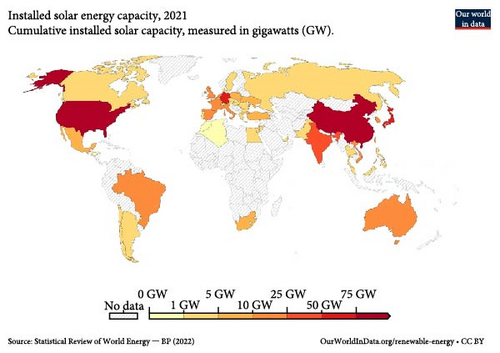

In 2018 [6], there was an 8% increase in the overall capacity of renewable power sources such as hydropower (increasing from 1112 to 1132 GW), solar (increasing from 405 to 505 GW), and geothermal power (increasing from 12.8 to 13.3 GW). Solar energy is an abundant source of energy that can be harnessed using solar photovoltaic (PV) panels to generate electricity from sunlight [7]. Currently, households and industries utilize solar PV technology to produce electricity [8], as it offers the advantage of quick energy production when there is sufficient sunlight. Additionally, solar PV systems are cost-effective and convenient to install [9, 10]. Nevertheless, producing electricity from solar PV panels remains challenging, as various factors may hinder the process, such as partial or complete shading from clouds resulting in reduced power generation [11], deterioration of capacitors or batteries [12], potential-induced degradation [13], and uncontrollable environmental factors [14]. The amount of electricity produced from solar panels is directly proportional to the global horizontal irradiance (GHI), which varies by time of day and location. The power output of solar panels is affected by meteorological conditions, resulting in intermittent production. Therefore, to integrate solar energy into existing grid infrastructure while maintaining grid stability and energy efficiency, precise forecasts of GHI are crucial to account for the intermittent nature of solar power. Short-term forecasts are typically used to predict irradiation from 1 h up to a week ahead, while long-term forecasts are utilized for predicting seasonal impacts on irradiation. Long-term forecasting is critical for financial planning and revenue generation, while short-term forecasting is crucial for managing utilities [15]. Figure 1 shows the amount of energy consumed from solar in 2022. Lighter colors represent less energy consumed, whereas darker colors correspond to higher energy consumption.

Energy forecasting refers to predicting the proportion of energy produced either from renewable energy sources (hydro, wind, and solar) or from nonrenewable fossil fuel sources (natural gas, oil, and coal). Forecasting energy-related tasks is of vast importance, as secure and cost-effective transmission is a major need today. Forecasting has played an important role in the power and energy industry as well as in business decision-making [16]. However, forecasting faces significant challenges arising from the intermittent and turbulent nature of these sources [17]. Solar energy is intermittent, and the amount of irradiance differs by location; e.g., hot regions experience longer daylight hours than cold regions.

These challenges have prompted a growing demand for renewable energy sources in large-scale energy systems and power grids as a cleaner and more sustainable alternative to traditional fossil fuels. Accurate forecasting can play a vital role in optimizing the utilization of renewable energy sources [18], as the integration of these sources with grid electricity has the potential to meet energy requirements more efficiently. Consequently, investments in renewable energy technologies have significantly surged, with many countries setting ambitious targets for deploying renewable energy to reduce greenhouse gas emissions and ensure energy security.

The main contributions of this study are as follows:

- We introduce a modified version of the TFT model that captures long-range dependencies for energy forecasting.
- By combining variational mode decomposition (VMD) with the TFT, the proposed model achieves enhanced prediction accuracy by utilizing useful signals.
- Our TFT model incorporates a variable screening network and a multihorizon fusion multihead attention module to provide insights into input–output relationships while retaining essential information. We replace the long short-term memory (LSTM) encoder–decoder with a gated recurrent unit (GRU), enabling efficient learning of long-term temporal dependencies.

This paper is structured as follows: Section 2 presents related work. Section 3 describes the problem formulation and the methodology adopted to forecast the hour-ahead solar irradiance. Section 4 describes the experimental settings, whereas Sections 5 and 6 present the baseline models and the results and discussion, respectively. Finally, Section 7 provides the conclusion with future directions.

2. Related Work

In this section, we present the existing work in the literature related to energy forecasting.

Ma and Ma [24] proposed a renewable power generation and electric load prediction approach using a stacked GRU-recurrent neural network (RNN) based on AdaGrad and adjustable momentum. First, a correlation coefficient was applied to the input data to select multiple sensitive monitoring parameters. Then, the selected parameters were input into the stacked GRU-RNN for accurate renewable energy generation or electricity load forecasting. In [25], a Bayesian deep learning-based probabilistic wind power forecasting model is proposed, using a fully convolutional neural network with Monte Carlo dropout to construct precise prediction intervals (PIs). Using the Global Energy Forecasting Competition 2014 wind dataset, the model outperforms existing methods by providing accurate and narrower PIs, ensuring stable performance [26]. This is in contrast to conventional deep neural networks, which are deterministic and do not incorporate such uncertainties [27]. In [28], deterministic neural networks as well as Bayesian neural networks (BNNs) were suggested for solar irradiation forecasting. In [29], Bayesian model averaging was utilized for ensemble forecasting of solar power generation. Raza, Mithulananthan, and Summerfield [30] explored BNNs for load forecasting and found that, because of the probability distribution over model parameters, BNNs take longer to converge than other probabilistic deep learning methods, resulting in larger dimensions.

A new machine learning-based method combining the stationary wavelet transform (SWT) and transformers was presented for household power consumption forecasting [31]. The experimental findings suggested that the proposed approach outperformed the existing approaches. One disadvantage was that the system could fail under unknown circumstances. A transformer-based energy forecasting model was developed using Pearson correlation coefficient analysis to boost the prediction accuracy [32]. In [33], a comprehensive review of wind speed and wind power forecasting techniques using deep neural networks is conducted, showing the potential of deep learning in improving forecasting accuracy. In [34], a detailed overview of the current state of wind speed and power forecasting is provided, highlighting key methodologies and identifying areas where forecasting techniques can be improved to support the integration of renewable energy into power systems.

Wang et al. [17] presented a method for forecasting solar power output that relies on a deep feed-forward neural network. This approach utilizes data from multiple sources, including solar power and weather forecast data. Kim and Cho [35] predicted solar radiation intensity based on several kinds of weather and solar radiation parameters. An electricity forecasting model was developed using a hybrid CNN–LSTM architecture [36]. The CNN component was responsible for feature extraction, while the LSTM layer handled the temporal characteristics of the time series data. Additionally, a predictive model for energy consumption was proposed that utilized LSTM and the sine cosine optimization algorithm [37]. Kim and Cho [35] suggested a hybrid approach that merged a CNN and a bidirectional multilayer LSTM to create a sequential learning prediction model for energy usage. Additionally, another study introduced a similar hybrid model that combines a CNN and GRUs to improve the reliability of energy usage prediction. Both models utilized a coherent structure to achieve their respective goals. A similar model was presented by Real, Dorado, and Duran [38], in which a CNN–LSTM hybrid model was used for residential energy consumption forecasting. A hybrid architecture of CNN and artificial neural network (ANN) was proposed to exploit the advantages of both structures [39]. The proposed model was evaluated on RTE power demand data and a weather forecast dataset. In [40], short-term solar irradiance data were forecasted using 13 datasets from various sources worldwide, with a maximum forecast horizon of 15. The hidden Markov model was extended to an infinite space dimension. The experimental results demonstrated that the proposed model produced more stable forecasting results for higher horizons compared to those obtained from the Markov-chain mixture distribution model. Khan et al. [41] introduce AB-Net, a deep learning framework combining autoencoders and BiLSTM to forecast short-term renewable energy generation, achieving state-of-the-art results on benchmark datasets [40].

Numerous models have been developed for time series forecasting owing to its great significance. Classical tools such as those in [42] serve as the foundation for many time series forecasting techniques. ARIMA [43] addresses forecasting challenges by converting a nonstationary process into a stationary one through differencing. In addition, a CNN-based model using sky images and past irradiance data can be developed to predict short-term solar energy fluctuations under different weather conditions [44]. DeepAR [45] is a forecasting technique that models the probability distribution of future series using a combination of autoregressive techniques and RNNs. To capture both short- and long-term temporal trends, LSTNet [46] uses CNNs with recurrent-skip connections. Attention-based RNNs [47] use temporal attention to analyze long-range dependencies and make predictions. Moreover, a number of studies based on temporal convolution networks (TCNs) [48] use causal convolution to model temporal causation. The primary emphasis of these advanced prediction models is on modeling temporal relationships using techniques such as recurrent connections, temporal attention, or causal convolution.

In recent times, there has been a surge of interest in transformers [49], which leverage a self-attention mechanism to analyze sequential data, including applications in natural language processing [50], audio processing [51], and computer vision [52]. Nonetheless, utilizing self-attention for forecasting long-term time series data poses a computational challenge because both memory and time grow quadratically with the sequence length. The encoder–decoder framework, as implemented in the transformer model [52], employs an attention mechanism that allows the model to selectively consider relevant information, addressing the shortcomings of the sequence-to-sequence (Seq2Seq) model. This approach also enables parallel training, resulting in reduced training time. Fan et al. [53] presented a TFT that employs both a transformer structure and quantile regression (QR) to learn temporal properties and generate probability predictions. For global solar radiation prediction, Mughal, Sood, and Jarial [54] presented a time series model. The authors developed three models: one for daily forecasting and two for hourly forecasting (including and excluding nighttime hours). Demir et al. [55] presented a variant of a transformer-based model for electrical load forecasting by improving the NLP transformer model. The results indicated the possibility of developing a pretrained transformer model. Zhang et al. [56] introduced a transformer network that delivers highly precise solar power generation forecasts, surpassing the performance achieved with linear regression, CNN, and LSTM methods. A brief overview of related work is given in Table 1.

| Study | Objectives | Dataset | Evaluation measures |
|---|---|---|---|
| Dhillon et al. [57] | Solar energy forecasting in agricultural lands for efficient task scheduling of sensors | NSRDB solar power dataset | R2: 98.052 RMSE: 56.61 |
| Nabavi et al. [26] | Energy forecasting using different machine learning models, in which NARX obtained good results | Energy consumption datasets of Iran | MSE: 0.149 RMSE: 0.495 MAPE: 1.87 |
| Torres et al. [58] | Energy demand forecasting using deep learning techniques | RTE power demand data and weather forecast dataset | MAE: 808.316 MAPE: 1.4934 MBE: 21.7444 MBPE: 0.0231 |
| Khan et al. [59] | Photovoltaic power generation prediction using a CNN–LSTM, a hybrid architecture | PV Rabat plant | MAE: 4.97 MAPE: 19.85 RMSE: 6.65 |
| Kong et al. [60] | Energy forecasting using a CNN and GRU-based framework, AEP dataset, and IHEPC dataset | RTE power demand data and weather forecast dataset | MSE: 0.09 RMSE: 0.31 MAE: 0.24 |
| Aasim, S. N. Singh, and Mohapatra [61] and Khan et al. [41] | Short-term renewable energy generation forecasting for efficient incorporation, merchandise management of energy deposits, and energy control systems | NREL wind dataset, solar power dataset | Solar dataset (MSE: 0.0106, RMSE: 0.1028); Wind dataset (MSE: 0.0004, RMSE: 0.0189) |
| Gaamouche et al. [62] | Electricity use and renewable energy plant production forecasting | PV datasets for two locations, Cocoa and Golden | Cocoa dataset (MAE: 0.035, MSE: 0.0023); Golden dataset (MAE: 0.034, MSE: 0.0027) |
| Xia et al. [63] | Electricity load prediction using a GRU-RNN | Hourly wind energy generation dataset | Wind dataset (RMSE: 0.0526, MAE: 0.0393); Load dataset (RMSE: 507.2, MAE: 351.5) |
| Kaur, Islam, and Mahmud [64] | RE forecasting using the integration of BiLSTM neural networks with compressed weight parameters using VAE, i.e., a VAE-Bayesian BiLSTM model | Solar generation time series data (2010–213) | RMSE: 0.0985 MAE: 0.0985 Pinball: 0.0386 |
| Wu et al. [65] | Long-term energy usage forecasting using the transformer-based model | Six datasets: ETT | MSE: 0.06 MAE: 0.189 |
| Wu et al. [49] | Global solar radiation prediction using a nonlinear autoregressive neural network model | NSRDB dataset | MAE: 0.93 RMSE: 1.28 MSE: 1.65 |
| Devlin et al. [50] | Transformer long-term load forecasting | US utility company | 2.571% MAPE accuracy increase |
| Dinculescu, Engel, and Roberts [51] | Energy usage forecasting using stationary wavelet transform (SWT) and transformers | UK-DALE: a domestic dataset of five distinct houses | RMSE: 0.0090 MAE: 00061 MAPE: 8.4765 |
| Phan, Wu, and Phan [66] and Gao et al. [67] | Photovoltaic generation forecasting using a transformer-based model | PV power output from North Taiwan, with 1-h intervals and a range of 2 years | 7.73% and 24.18% reduction in both NRMSE and NMAPE |

- Abbreviations: GRU, gated recurrent unit; LSTM, long short-term memory; MAE, mean absolute error; MSE, mean squared error; PV, photovoltaic; RNN, recurrent neural network.

In this paper, we present a modified version of the TFT that is based on the transformer architecture. Moreover, our proposed version of the TFT incorporates VMD to capture the long-range dependencies in the data. We show the effectiveness of the proposed model by evaluating it on the solar irradiance dataset [42].

VMD offers superior decomposition capabilities compared to alternative methods like empirical mode decomposition (EMD) by overcoming issues such as mode mixing and providing better frequency separation, making it more robust for nonstationary signal analysis. Meanwhile, TFT excels in handling sequential data, offering better interpretability of time-varying features compared to classical methods like wavelet or Fourier transforms. TFT’s attention mechanisms also enable it to focus on critical temporal patterns, enhancing model performance in time-series tasks. These characteristics, along with their flexibility in various applications, justify their selection over other methodologies.

3. Methodology

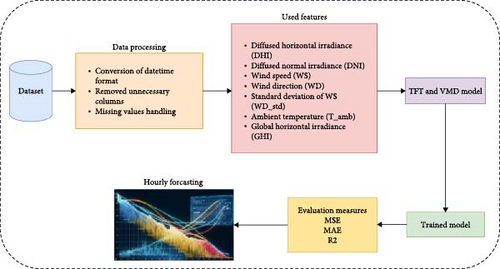

In this study, VMD and a TFT are adopted to forecast the N-hour-ahead solar irradiance. The TFT [68] was proposed for time series forecasting, and in this work, some of its original components are modified to enhance the robustness of forecasts over longer time horizons. The forecasting workflow is illustrated in Figure 2. The workflow starts with raw data, from which historical sequences and related features are extracted. The features of the dataset used are the same as those used by Haider et al. [42]. Data preprocessing is performed to ensure consistent data quality by removing unnamed columns, converting the “datetime” column to a proper datetime format, and filling in missing entries with average values. Then, the forecasting windows, which define the past sequence used by the model and the future horizon to be predicted, are created. The best hyperparameters obtained from a grid search are then used for training and testing. The modeling part comprises a statistical component, VMD, and a modified TFT. The model performance is analyzed using different performance metrics.

3.1. VMD

The raw wind speed (WS) data are represented by $f(t)$, with $N_s$ denoting the number of samples and $K$ representing the number of decomposed modes. The decomposed modes are represented by $u_k(t)$. To determine the number of modes, $r_{res}$ should not exhibit a noticeable decreasing trend [69].
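For reference, the decomposition can be written in the standard VMD form. The block below is a sketch that follows the usual formulation in the VMD literature rather than an equation taken from this paper: the center frequencies $\omega_k$, the Dirac distribution $\delta(t)$, the convolution operator $*$, and the explicit definition of $r_{res}$ as a mean relative residual are assumptions introduced here for illustration.

$$
\min_{\{u_k\},\{\omega_k\}} \sum_{k=1}^{K} \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j \omega_k t} \right\|_2^2
\quad \text{subject to} \quad \sum_{k=1}^{K} u_k(t) = f(t)
$$

$$
r_{res} = \frac{1}{N_s} \sum_{t=1}^{N_s} \left| \frac{f(t) - \sum_{k=1}^{K} u_k(t)}{f(t)} \right|
$$

Under this reading, each mode $u_k(t)$ is kept compact around its center frequency $\omega_k$, and $K$ would be increased until $r_{res}$ stops decreasing noticeably.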

3.2. TFT

Transformer-based models with an encoder and a decoder have grown in popularity in time series forecasting [32]. Past time series data are fed into the encoder, and the decoder uses autoregressive forecasting to predict future values, leveraging an attention mechanism to focus on the most valuable historical information for prediction. Nevertheless, past approaches frequently ignore the variety of input types or presuppose that all external inputs will be known in the future. The TFT, on the other hand, is a model created especially for multihorizon time series forecasting [66]. It addresses these issues by matching structures to specific data properties, providing better interpretability than black-box models such as neural networks or complicated ensembles, which conceal the relative relevance of features and their interactions. Furthermore, TFT represents a novel approach that surpasses other state-of-the-art forecasting models.

To ensure good prediction performance across various forecasting problems, the TFT model architecture, shown in Figure 3, uses established components to build feature representations for each input type (i.e., static, known future, and observed inputs). The time-related input features $X_{s,t-T_i:t-1}$ and $X_{s,t:t+T_o-1}$ are fed in separately, with no shared parameters. After these inputs are transformed, long-term temporal information in the time series is handled by multilayer GRU encoders and decoders. The proposed model replaces the usual LSTM layers in the TFT model’s encoder and decoder with GRU layers. One significant advantage of the GRU is the unification of the forget and input gates into a single update gate, which allows it to capture short-term dependencies more efficiently and to properly model sequences with rapid changes. The encoder and decoder outputs from the final layer are combined in a multi-timestep fusion module that distributes weights according to importance. By minimizing the revised quantile loss function, predicted quantile values are obtained. Note that modules with the same color in Figure 3 share the same parameters. The TFT is made up of five main parts: gating mechanisms, variable screening networks, a GRU encoder–decoder, multihorizon fusion, and PIs.

The TFT captures both short-term and long-term patterns, handles mixed data types, and offers interpretability through attention mechanisms. VMD decomposes signals into IMFs with minimal mode mixing and is robust to noise, making it ideal for nonstationary and complex time series data. Both models enhance time series forecasting by effectively processing intricate patterns.

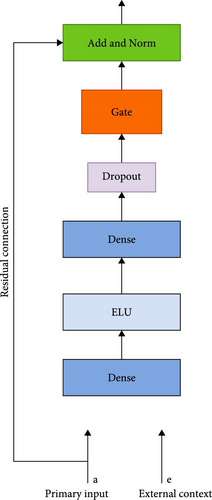

3.2.1. Gating Mechanism

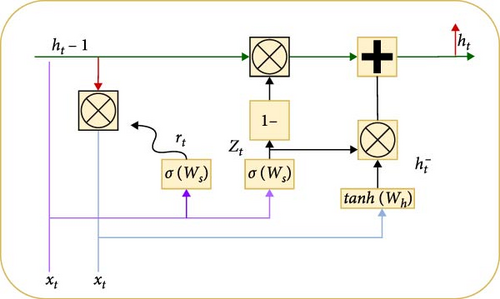

The GRU mitigates the vanishing-gradient problem in RNNs by processing temporal information through gates that retain pertinent information and discard irrelevant information. It comprises a reset gate and an update gate: the update gate stores memory from the previous time step, whereas the reset gate merges fresh input data with previous memory. An illustration is shown in Figure 4.
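The role of the two gates can be illustrated with a single GRU time step. The following is a minimal sketch in plain Python for a scalar input and a scalar hidden state, not the implementation used in this study; the parameter names (`w_z`, `u_z`, `b_z`, and so on) and their values are hypothetical, and the update convention assumed here is the one in which an update gate close to 1 keeps the previous state.

```python
import math
from typing import Dict


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x: float, h_prev: float, p: Dict[str, float]) -> float:
    """One GRU time step for a scalar input and a scalar hidden state."""
    # Update gate: how much of the previous memory is carried forward.
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])
    # Reset gate: how much of the previous memory is mixed with the new input.
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])
    # Candidate state built from the new input and the reset-scaled memory.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # z close to 1 keeps the old state; z close to 0 adopts the candidate.
    return z * h_prev + (1.0 - z) * h_cand


# Hypothetical parameters: all zeros unless overridden below.
zero_params = {
    name: 0.0
    for name in ("w_z", "u_z", "b_z", "w_r", "u_r", "b_r", "w_h", "u_h", "b_h")
}
keep_memory = dict(zero_params, b_z=20.0)            # update gate saturated near 1
take_input = dict(zero_params, b_z=-20.0, w_h=1.0)   # update gate saturated near 0

h_keep = gru_step(x=0.5, h_prev=0.8, p=keep_memory)
h_new = gru_step(x=0.5, h_prev=0.8, p=take_input)
```

With the update gate saturated near 1, `h_keep` stays essentially at the previous state, whereas with the gate near 0, `h_new` is driven almost entirely by the new input.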

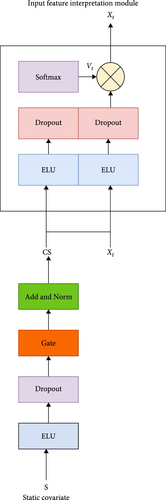

3.2.2. Variable Screening Network

The input feature multihead attention module in Figure 6 weighs the input variables with the weight vector $h_t$. The importance of each input feature is represented by $h_t$, which is computed through the sigmoid activation function $\sigma(\cdot)$ and the intermediate variable. The ELU activation function is used instead of ReLU to alleviate vanishing gradients. The module includes dropout, layer normalization, and softmax layers. The larger the weight $h_t$, the more important the corresponding input variable is to the output.
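A heavily simplified sketch of this weighting idea is given below. It is an illustration under stated assumptions, not the network used in this study: each variable gets one hypothetical scalar weight and bias, the intermediate variable is an ELU of an affine transform, the sigmoid gate reuses that same intermediate value for brevity, and dropout and layer normalization are omitted.

```python
import math
from typing import List, Sequence


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: identity for x > 0, smooth saturation below 0."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax that turns scores into weights summing to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def screening_weights(
    features: Sequence[float], weights: Sequence[float], biases: Sequence[float]
) -> List[float]:
    """Return one importance weight per input variable."""
    scores = []
    for x, w, b in zip(features, weights, biases):
        eta = elu(w * x + b)                # intermediate variable
        scores.append(sigmoid(eta) * eta)   # sigmoid gate applied to it
    return softmax(scores)


# Hypothetical normalized inputs and parameters for three variables.
features = [0.9, 0.2, 0.5]
h_t = screening_weights(features, weights=[2.0, 0.1, 1.0], biases=[0.0, 0.0, 0.0])
screened = [h * x for h, x in zip(h_t, features)]
```

Because the weights come out of a softmax, they are positive and sum to 1, so a larger entry of `h_t` can be read as a larger share of importance for that variable.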

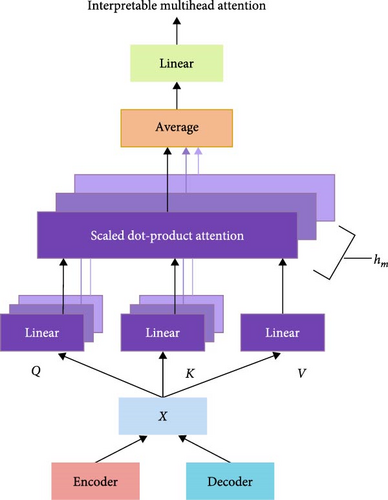

3.2.3. Multi-Timestep Fusion

The multi-timestep fusion module is designed to capture long-term correlations in hourly load forecasting. It produces a vector with the expected size by allocating weights to all of the encoder and decoder outputs from prior time steps. This enables the model to concentrate on more crucial data. Figure 7 depicts the multihead fusion module structure.

In the multihead attention mechanism, $A(\cdot)$ represents a normalization function, and $n$ is the dimension of the $K$ vector. The weight matrices of $Q$ and $K$ for the $h$th head are denoted by $W_Q^{(h)}$ and $W_K^{(h)}$, respectively, while $W_V$ represents the weight matrix of $V$ shared across all heads to enforce time series causality.
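Written out, one form of the attention computation consistent with these symbols is sketched below. It follows the interpretable multihead attention of the original TFT formulation and is an assumption about the intended equation, not a reproduction of it. Here $W_Q^{(h)}$ and $W_K^{(h)}$ are taken to be the per-head query and key weight matrices, the number of heads $H$ is a symbol introduced here, and the final output projection is omitted.

$$
A(Q, K) = \mathrm{softmax}\!\left( \frac{Q K^{\top}}{\sqrt{n}} \right), \qquad
\mathrm{Attention}(Q, K, V) = A(Q, K)\, V
$$

$$
\mathrm{MultiHead}(Q, K, V) = \left[ \frac{1}{H} \sum_{h=1}^{H} A\!\left( Q W_Q^{(h)},\; K W_K^{(h)} \right) \right] V W_V
$$

Because $W_V$ is shared, the head-averaged weights in the bracket can be read directly as the importance assigned to each time step.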

3.3. Loss Function

The weight parameters (W) of the TFT are optimized by jointly minimizing the quantile loss over the set of output quantiles (Q) and the training data domain (U), which consists of M samples.
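The quantile (pinball) loss behind this objective can be sketched as follows. This is a minimal plain-Python illustration, not the training code of this study: it averages over samples and quantiles only, whereas the original TFT formulation also averages over forecast horizons, and the seven quantile levels and all numeric values below are assumptions made for the example.

```python
from typing import Sequence


def pinball_loss(y_true: float, y_pred: float, q: float) -> float:
    """Quantile (pinball) loss for one target value at quantile level q."""
    diff = y_true - y_pred
    # Under-prediction is weighted by q, over-prediction by (1 - q).
    return max(q * diff, (q - 1.0) * diff)


def mean_quantile_loss(
    targets: Sequence[float],
    predictions: Sequence[Sequence[float]],
    quantiles: Sequence[float],
) -> float:
    """Average pinball loss, where predictions[i][j] is the forecast for
    sample i at quantile level quantiles[j]."""
    total = 0.0
    for y, row in zip(targets, predictions):
        for q, y_hat in zip(quantiles, row):
            total += pinball_loss(y, y_hat, q)
    return total / (len(targets) * len(quantiles))


# Hypothetical quantile levels and GHI forecasts, for illustration only.
quantile_levels = [0.02, 0.1, 0.25, 0.5, 0.75, 0.9, 0.98]
ghi_targets = [400.0, 650.0]
ghi_forecasts = [
    [300.0, 340.0, 370.0, 400.0, 430.0, 460.0, 500.0],
    [500.0, 560.0, 610.0, 640.0, 690.0, 730.0, 800.0],
]
example_loss = mean_quantile_loss(ghi_targets, ghi_forecasts, quantile_levels)
```

The asymmetry is the point of the design: at a high quantile level such as 0.9, predicting too low is penalized nine times as heavily as predicting too high, which pushes that output toward the upper end of the predictive distribution.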

4. Experimentation

In this section, we will explain the dataset and the process through which we conducted our experiments.

4.1. Dataset

The efficiency of solar PV energy systems is affected by numerous factors, including the location and weather conditions of the installation site. These factors can be broadly categorized into two groups: spatial factors and temporal factors. Spatial factors refer to the physical characteristics of a solar PV plant, such as its size, panel arrangement, and orientation, while temporal factors relate to variations in weather and climate conditions, including seasonal changes, weather events, and time of day.

Both spatial and temporal factors play an important role in determining the amount of solar energy that a PV system can generate. For instance, a larger solar PV plant with more panels will generally generate more energy than a smaller system, while the orientation of the panels and the arrangement of the plant can also impact its efficiency. Additionally, variations in weather and climate conditions can significantly affect the efficiency of PV systems, with factors such as cloud cover, temperature, WS, and humidity all playing a role.

In this study, we used a numerical solar irradiance dataset [42] that contains various parameters related to PV generation, such as GHI, diffuse horizontal irradiance (DHI), and direct normal irradiance (DNI), as well as WS. The dataset was collected over 5 years, from 2015 to 2019, at an EMAP tier 1 meteorological station located 500 m above sea level at 33.64° N and 72.98° E. It consists of hourly records, with ~41,000 records available for analysis. By examining the data and analyzing the various spatial and temporal factors that impact PV efficiency, we sought to better understand how to optimize solar energy generation in this region. A detailed description of the dataset variables is given in Table 2. We used the same feature selection criteria as Haider et al. [42], in which DHI, DNI, WS, wind direction (WD) and its standard deviation (WD_std), and ambient temperature (T_amb) were selected because of their high correlation with GHI.

| Statistics | GHI | DNI | DHI | T_amb | RH | WS | WS_gust | WD | WD_std | BP |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 197.5 | 177.6 | 88.42 | 21.9 | 59.02 | 1.77 | 4.18 | 175.1 | 13.0 | 944.7 |
| Std | 278. | 301.8 | 127.9 | 8.48 | 20.82 | 1.38 | 2.47 | 108.2 | 10.0 | 9.573 |
| Min | 0.000 | 0.000 | 0.00 | 1.0 | 10.0 | 0.0 | 0.0 | 0.0 | 0.0 | 881.0 |
| Max | 1040.0 | 2777.0 | 843. | 45.3 | 100.0 | 13.20 | 31.0 | 360.0 | 82.2 | 1002.0 |

- Note: Minimum (Min), mean, maximum (Max), and standard deviation (Std) values of all the daily parameters measured: global horizontal irradiance (GHI) in W m⁻², direct normal irradiance (DNI) in W m⁻², diffuse horizontal irradiance (DHI) in W m⁻², ambient temperature (T_amb) in °C, relative humidity (RH) in %, wind speed (WS) in m s⁻¹, maximum WS within a specific time interval (WS_gust), wind direction (WD) in °N (to east), WD standard deviation (WD_std), and barometric pressure (BP).
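The correlation-based selection of input features for GHI can be sketched as below. The text does not state which correlation measure was used, so the Pearson coefficient is an assumption here, and the column values are hypothetical toy numbers rather than records from the dataset.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def rank_features(
    columns: Dict[str, List[float]], target: str
) -> List[Tuple[str, float]]:
    """Rank every non-target column by absolute correlation with the target."""
    scores = [
        (name, pearson(values, columns[target]))
        for name, values in columns.items()
        if name != target
    ]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical toy columns, for illustration only.
toy_columns = {
    "GHI": [0.0, 120.0, 480.0, 760.0, 300.0],
    "DNI": [0.0, 90.0, 510.0, 820.0, 260.0],
    "RH": [80.0, 70.0, 45.0, 30.0, 55.0],
    "BP": [944.0, 944.0, 944.0, 944.0, 944.0],
}
ranking = rank_features(toy_columns, target="GHI")
```

Ranking by absolute value keeps strongly negative relationships, which can be as informative for forecasting as strongly positive ones.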

4.2. Data Split and Preprocessing

Before feeding the data into each of the test models, we checked the data to identify missing values and their quantity. Additionally, during exploratory data analysis, a significant number of GHI values equal to zero were discovered. These zero values correspond to nighttime GHI values and were expected. However, this large number of zero values impacted the accuracy of the models, as 19,403 of the ~41,000 total records had a GHI value of zero. To address this issue, all values between 7 p.m. and 5 a.m., the typical nighttime hours, were removed from the dataset, reducing the number of zero values to 4236. The remaining missing values were then replaced with average values. After selecting data for this time interval, the total number of records available for analysis was 25,785. This process ensured transparency in the dataset being used for modeling, thus improving the reliability of the results obtained from the models. The dataset was split into three subsets: a training set consisting of 70% of the data, and validation and test sets consisting of 15% of the data each.
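The preprocessing and split described above can be sketched in plain Python as follows. This is an illustration under assumptions, not the pipeline used in this study: the record layout (`datetime` and `ghi` keys), the exact hour boundaries (05:00 inclusive to 19:00 exclusive, giving 14 h per day), and the chronological ordering of the split are all choices made here for the example.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Record = Dict[str, object]


def drop_night_hours(
    records: List[Record], first_hour: int = 5, last_hour: int = 19
) -> List[Record]:
    """Keep only records stamped from 05:00 up to, but excluding, 19:00."""
    return [r for r in records if first_hour <= r["datetime"].hour < last_hour]


def fill_missing_with_mean(records: List[Record], key: str = "ghi") -> List[Record]:
    """Replace missing (None) entries of `key` with the mean of the observed ones."""
    observed = [r[key] for r in records if r[key] is not None]
    if not observed:
        return [dict(r) for r in records]
    mean = sum(observed) / len(observed)
    return [dict(r, **{key: mean if r[key] is None else r[key]}) for r in records]


def chronological_split(
    records: List[Record], train_frac: float = 0.70, val_frac: float = 0.15
) -> Tuple[List[Record], List[Record], List[Record]]:
    """Split ordered records into train, validation, and test parts."""
    n_train = int(len(records) * train_frac)
    n_val = int(len(records) * val_frac)
    return (
        records[:n_train],
        records[n_train:n_train + n_val],
        records[n_train + n_val:],
    )


# Two hypothetical days of hourly records with one missing reading.
start = datetime(2015, 1, 1)
raw = [
    {"datetime": start + timedelta(hours=h), "ghi": float(h % 24)}
    for h in range(48)
]
raw[10]["ghi"] = None
daytime = fill_missing_with_mean(drop_night_hours(raw))
train, val, test = chronological_split(daytime)
```

Keeping the split chronological avoids training on records that come after the ones being evaluated, which is a common precaution for time series.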

4.3. Training Details

For time series forecasting, a window is defined by two values: the number of past time steps used as input and the number of future values to be forecast. Our experiments used four forecasting windows with sizes of (7,1), (14,3), (28,7), and (56,14), where the first number represents the length of the past sequence and the second number represents the number of future steps that the model needs to predict.
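The construction of such (past, future) windows can be sketched as a sliding window over the hourly series. The function name `make_windows` and the toy series are hypothetical, and a stride of one step between consecutive windows is an assumption.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float], n_past: int, n_future: int
) -> List[Tuple[List[float], List[float]]]:
    """Pair each block of n_past values with the n_future values that follow it."""
    windows = []
    last_start = len(series) - n_past - n_future
    for start in range(last_start + 1):
        past = list(series[start:start + n_past])
        future = list(series[start + n_past:start + n_past + n_future])
        windows.append((past, future))
    return windows


# Hypothetical hourly GHI values, used only to exercise the function.
ghi = [float(v) for v in range(30)]
pairs_7_1 = make_windows(ghi, n_past=7, n_future=1)
pairs_14_3 = make_windows(ghi, n_past=14, n_future=3)
first_past, first_future = pairs_7_1[0]
```

A series of length L yields L - n_past - n_future + 1 windows, so larger windows such as (56,14) produce fewer training examples from the same data.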

Since the data sequences are hourly, the model uses a historical data sequence of a specific number of hours and forecasts future values for a certain number of hours. For instance, with the first window size (7,1), the model uses a past sequence of 7 h to predict the next hour. Similarly, the model uses past sequences of 14, 28, and 56 h to predict 3, 7, and 14 h into the future, respectively. Because we excluded the records between 7 p.m. and 5 a.m., 14 h of data remain per day; therefore, a day comprises 14 h. Hence, forecasting using window sizes (7,1) and (14,3) means predicting solar irradiance based on half-day and full-day past sequences, respectively, while forecasting using window sizes (28,7) and (56,14) involves taking 2- and 4-day past sequences to forecast the next half and full day of solar irradiance, respectively. The best results were recorded with the second window. Different experiments were conducted with different training hyperparameters, but the architecture of the TFT model had the same settings, such as the number of layers, as in its original implementation. We experimented with batch sizes of 16, 24, 32, and 64 and learning rates between 0.0001 and 0.1. The quantile loss with seven quantiles was used as the loss function. We trained each model for 100 epochs using the Adam optimizer. The model with the best validation performance was used for testing.
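A search over these training hyperparameters can be sketched as a plain grid search. The batch sizes are those listed above, while the four learning-rate grid points within the stated range and the `toy_validation_loss` function are assumptions; in practice the latter would be replaced by training a model and returning its validation loss.

```python
import math
from itertools import product
from typing import Callable, Dict, Sequence, Tuple


def grid_search(
    batch_sizes: Sequence[int],
    learning_rates: Sequence[float],
    validation_loss: Callable[[int, float], float],
) -> Tuple[Dict[str, float], float]:
    """Evaluate every combination and return the one with the lowest loss."""
    best_config: Dict[str, float] = {}
    best_loss = float("inf")
    for batch_size, learning_rate in product(batch_sizes, learning_rates):
        loss = validation_loss(batch_size, learning_rate)
        if loss < best_loss:
            best_config = {"batch_size": batch_size, "learning_rate": learning_rate}
            best_loss = loss
    return best_config, best_loss


def toy_validation_loss(batch_size: int, learning_rate: float) -> float:
    """Stand-in for a real training run; smallest at batch 32 and rate 1e-3."""
    return abs(batch_size - 32) / 32 + abs(math.log10(learning_rate) + 3)


best_config, best_loss = grid_search(
    batch_sizes=[16, 24, 32, 64],
    learning_rates=[1e-4, 1e-3, 1e-2, 1e-1],  # assumed grid points
    validation_loss=toy_validation_loss,
)
```

Spacing the learning rates logarithmically is a common choice when the range spans several orders of magnitude, as it does here.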

The models were implemented on an Ubuntu system using Python 3.8 with PyTorch. To split the data, the Time Series Dataset module was utilized. Early stopping was employed to prevent overfitting. The TFT model was trained on a 1080 Ti GPU and an Intel(R) Core(TM) i7 CPU with 128 GB RAM.

5. Baseline Models

In this section, we present the baseline methods for our proposed model. We experimented with different settings, such as the number of layers and hidden units. Final configurations are given in the related subsections.

5.1. LSTM

LSTM is an improved RNN that is widely used for time series forecasting, including solar irradiance forecasting [37]. This study used three LSTM layers, with 64, 32, and 16 hidden units, and one dense layer, with 1, 3, 7, and 14 neurons for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively.

5.2. ANN

ANNs consist of input, hidden, and output layers, with the hidden layers learning data patterns through their neurons and forwarding the result to the output layer. ANNs have self-learning capabilities and learn from data to predict results. In this experiment, two ANN hidden layers, with 256 and 128 hidden units, and one dense output layer, with 1, 3, 7, and 14 neurons for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively, were used.

5.3. Original TFT

Transformer-based models, comprising encoder and decoder parts connected via an attention mechanism, are well-known in time series forecasting. The encoder uses historical data as input, while the decoder predicts future values by focusing on valuable historical information. The decoder uses masked self-attention to prevent future value acquisition during training. However, previous methods do not consider different input types or assume that all exogenous inputs are known in the future. The original TFT [69] addresses these issues by aligning architectures with unique data characteristics via appropriate inductive biases.
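
The masked self-attention used in the decoder can be illustrated with a small sketch of causal scaled dot-product attention. This is a generic single-head illustration in pure Python, not the TFT implementation; the function names are hypothetical, and the learned query, key, and value projections are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where step t attends only to steps <= t."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for t, q in enumerate(queries):
        # Score only the visible positions; leaving out future positions is
        # equivalent to setting their scores to -inf before the softmax.
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        weights = softmax(scores) + [0.0] * (len(keys) - t - 1)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights
```

Because every weight on a future position is exactly zero, the output at step t cannot depend on values that would be unknown at forecast time.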

5.4. GRU

GRU is also an RNN variant, and it can likewise be used for solar irradiance modeling [63]. This study used three GRU layers, with 64, 32, and 16 hidden units, and one dense layer, with 1, 3, 7, and 14 neurons for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively.

5.5. CNN–LSTM

CNN–LSTM is a hybrid architecture consisting of LSTM layers stacked after CNN layers. Some recent studies have also used such an architecture for time series forecasting [37]. This study used two CNN layers, with 32 and 16 hidden units, two LSTM layers, with 16 hidden units each, and one dense layer, with 1, 3, 7, and 14 neurons for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively.

5.6. CNN–LSTM With Temporal Attention (CNN–LSTM-t)

This study uses a CNN–LSTM model augmented with a temporal attention mechanism. The CNN layers are employed for local feature extraction from the input data, while the LSTM layer captures long-range dependencies and temporal patterns. The attention mechanism dynamically weighs the importance of different time steps in the LSTM output sequence, allowing the model to focus on relevant information. The CNN–LSTM-t used in this study consists of one convolutional layer followed by a max-pooling layer and a flatten layer. These are followed by an LSTM layer and an attention module with one dense layer and additional layers for reshaping, applying activation functions, and performing element-wise multiplication. Finally, one dense layer, with 1, 3, 7, and 14 neurons, was applied for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively.
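
The temporal attention step described above (a dense scoring layer, an activation, and element-wise multiplication with the LSTM outputs) can be sketched as follows. The tanh activation, the softmax normalization over time, and all names are assumptions made for illustration; the text does not specify these details.

```python
import math


def temporal_attention(hidden_states, score_weights, score_bias=0.0):
    """Re-weight LSTM outputs per time step.

    hidden_states: list of T vectors, one LSTM output per time step
    score_weights: vector of the same width, standing in for a one-unit
                   dense layer that scores each time step
    Returns (weighted_states, attention_weights).
    """
    # One scalar score per time step from the dense layer (tanh assumed).
    scores = [
        math.tanh(sum(w * h for w, h in zip(score_weights, state)) + score_bias)
        for state in hidden_states
    ]
    # Softmax over time so the weights are positive and sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attention = [e / total for e in exps]
    # Element-wise multiplication of each time step's state by its weight.
    weighted = [[a * h for h in state] for a, state in zip(attention, hidden_states)]
    return weighted, attention
```

Time steps with larger scores keep more of their magnitude after the multiplication, which is how the module lets the final dense layer focus on the most relevant hours.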

5.7. Vanilla Transformer

Transformers are cutting-edge models in different domains of artificial intelligence, including time series prediction, where they outperform typical deep learning architectures [70]. This study uses a vanilla transformer model with two-head multihead self-attention followed by two dense layers, one of which has 1, 3, 7, and 14 neurons for window sizes of (7,1), (14,3), (28,7), and (56,14), respectively.

6. Results and Discussion

In this section, the performance of the proposed TFT, baseline models, and original TFT models is analyzed with varying forecasting window tuples, i.e., (7,1), (14,3), (28,7), and (56,14). The first value in the tuple represents the number of past time steps used for forecasting, where each step is 1 h, while the second value represents the number of time steps being forecasted, where each step is also 1 h. A window size of (7,1) is a single-step forecast, while the others are multistep forecasts ranging from 3 to 14 h ahead. As the dataset has zero values of GHI between 7 PM and 5 AM, the solar day becomes 14 h. Hence, a window size of (56,14) means using the past 4 days of data as input and forecasting the next day’s data. The dataset used in this study [42] contains GHI data as well as weather data, in which temperature, WS, etc., are variable quantities and meteorological features.
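
The window tuples described above can be made concrete with a short sketch that drops night-time records and slices the remaining series into (past, future) pairs. The hour bounds (05:00 to 18:00 inclusive, which leaves 14 records per day) and the function names are assumptions for illustration; the exact filtering used in the study is not given in this section.

```python
def daylight_only(records, first_hour=5, last_hour=18):
    """Keep (hour, value) records whose hour lies in the daylight range.

    The default bounds are an assumption: they keep 14 hourly records
    per day, matching the 14-h solar day described in the text.
    """
    return [(h, v) for h, v in records if first_hour <= h <= last_hour]


def make_windows(series, input_len, horizon):
    """Slide over `series` and return a list of (past, future) pairs."""
    pairs = []
    for start in range(len(series) - input_len - horizon + 1):
        past = series[start:start + input_len]
        future = series[start + input_len:start + input_len + horizon]
        pairs.append((past, future))
    return pairs


# Two synthetic days of hourly records; the values are placeholders.
records = [(hour, float(hour)) for _day in range(2) for hour in range(24)]
values = [v for _h, v in daylight_only(records)]

for input_len, horizon in [(7, 1), (14, 3), (28, 7), (56, 14)]:
    n = len(make_windows(values, input_len, horizon))
    print(f"window ({input_len},{horizon}): {n} samples from {len(values)} daylight hours")
```

With only two days of data, the larger windows yield no samples at all, which illustrates that a (56,14) window needs at least 70 consecutive daylight hours (five solar days) to produce a single training pair.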

6.1. Baseline Models

Each baseline model was trained for 100 epochs for each window size, with a batch size of 64 and mean squared error (MSE) as the loss function. The model architecture featured two hidden layers to capture temporal dependencies within the data. As the window size increased from (7,1) to (56,14), the loss values generally increased, which suggests that the more extensive context available in larger windows did not offset the difficulty of the longer forecast horizon. The exceptions were CNN–LSTM and GRU, for which the loss decreased as the window size increased, and CNN–LSTM-t and the transformer, for which the loss was irregular. The same two-hidden-layer configuration was retained for all window sizes.

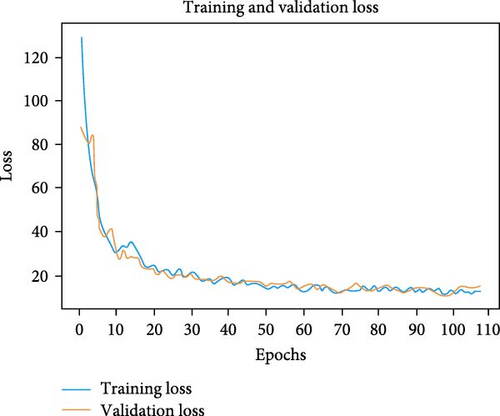

6.2. Proposed TFT Models

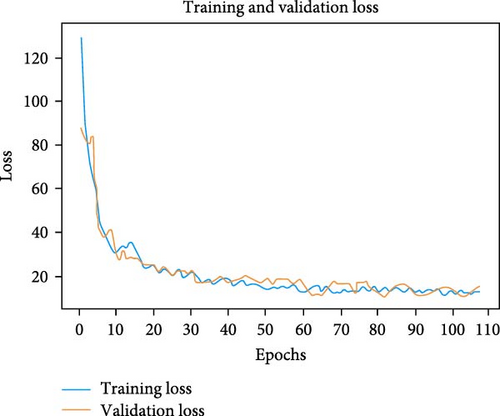

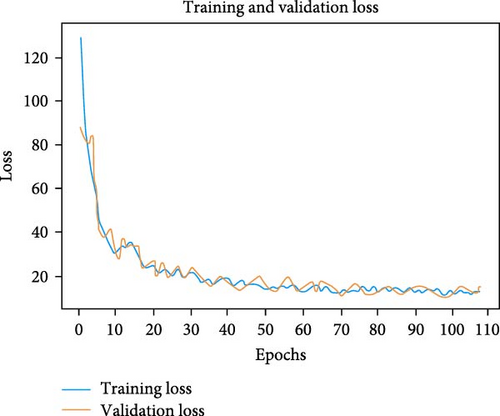

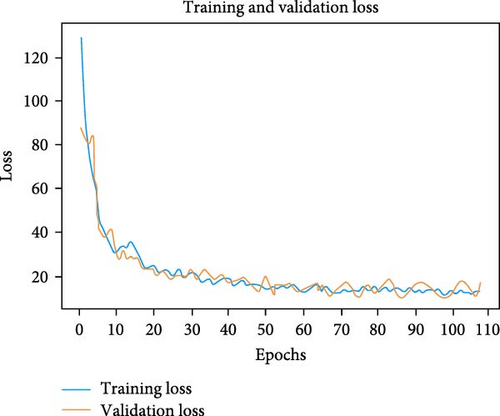

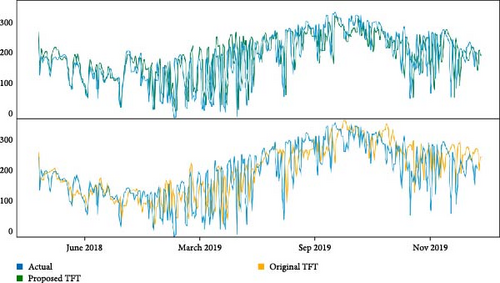

This section presents the training and evaluation performance with the training and validation sets, respectively. In Section 5.4, we briefly introduce the metrics used to evaluate the models. The training and validation loss plots of the proposed TFT model are given in Figure 8 for window sizes of (7,1), (14,3), (28,7), and (56,14). The loss graphs show that the performance starts decreasing upon lengthening the forecasting window size.

We conducted a systematic investigation by employing different window sizes, batch sizes, learning rates, layers, loss functions, and optimizers. The aim was to identify the combination that yields the best results for our forecasting task. Notably, among the evaluated window sizes of (28,7), (14,3), and (56,14), the window size of (56,14) emerged as the most suitable choice, demonstrating superior performance across multiple configurations. This window size effectively captures the necessary historical and contextual information, resulting in more accurate predictions. Consequently, we focused our subsequent analyses and optimizations primarily on the (56,14) window size, discarding configurations with lower performance potential. Narrowing our focus in this way allowed us to improve the accuracy and efficiency of our forecasting model.

Figure 8 compares the predictions of the proposed TFT with the actual irradiance values and demonstrates the effectiveness of our methodology, mainly the GRU-based encoder and decoder, the variable screening network, and the feature fusion multihead attention mechanism.

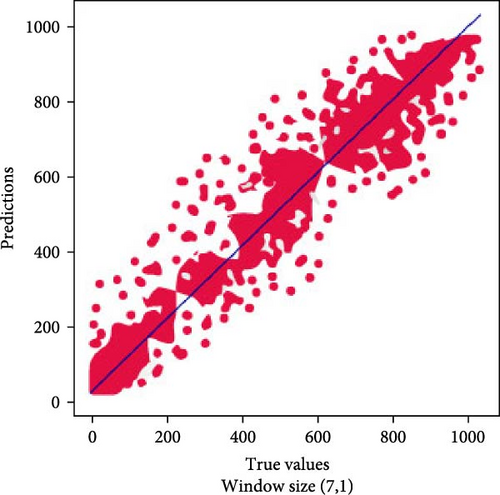

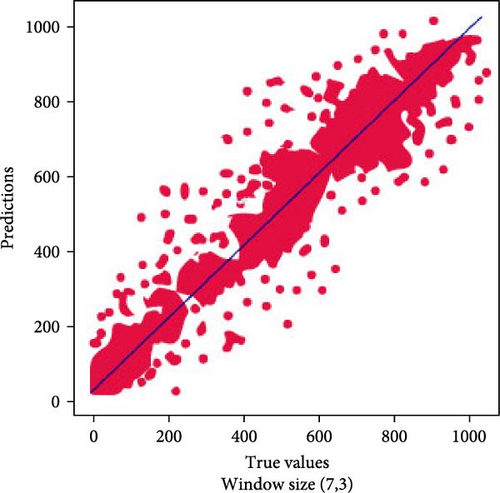

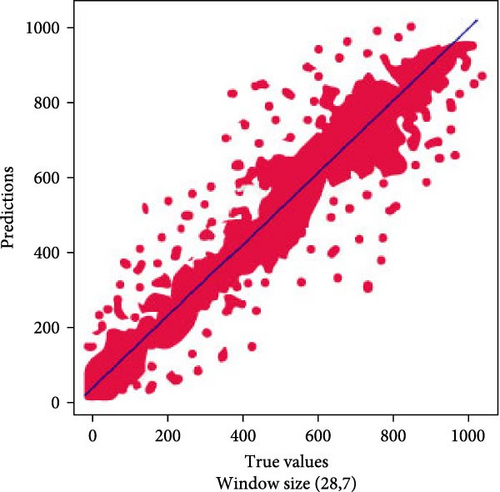

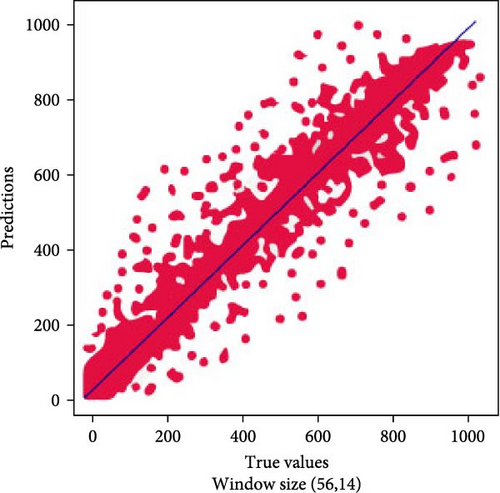

Figures 9 and 10 show the scatter plots for our proposed models on different window sizes. These plots provide valuable insights into the dispersion of prediction errors. Notably, for the window size (7,1), the points on the scatter plot exhibit a more scattered distribution, indicating a wider range of prediction errors. In contrast, the (14,3) window size shows points that are slightly more clustered, suggesting a more consistent performance. Moving to larger window sizes, such as (28,7) and (56,14), we observe an increasing dispersion of points, signifying a wider spread of prediction errors. This analysis highlights how the choice of window size can impact the reliability and consistency of our forecasting models, with smaller window sizes tending to produce more concentrated predictions and larger window sizes leading to a broader range of forecasting accuracy.

6.3. Performance Comparison

This section presents the comparative performance analysis of the proposed TFT, original TFT, LSTM, and ANN models in terms of all four performance metrics, namely mean absolute error (MAE), R2, MSE, and standard deviation (SD), for all forecasting window sizes: (7,1), (14,3), (28,7), and (56,14). For window size (7,1), the proposed model outperforms the original TFT and the other models, with an MAE of 19.29 and an R2 of 0.992. The ANN also surpasses the original TFT and LSTM models in 1-h-ahead prediction, with an MAE of 21.53 and an R2 of 0.981, whereas the original TFT and LSTM models obtain MAE values of 22.81 and 25.21 and R2 values of 0.971 and 0.926, respectively.

For 3-h-ahead forecasting, the proposed TFT and original TFT models perform better than the LSTM and ANN models, with MAE values of 22.55, 24.28, 27.74, and 29.34, respectively, and R2 values of 0.978, 0.960, 0.902, and 0.895, respectively. From the 1-h prediction to the 3-h horizon, the original TFT and LSTM models perform better than the ANN model, while the performance of the two TFT models is approximately similar. This result shows the effectiveness of the transformer models in capturing long-range dependencies and, hence, in performing well over longer horizons. In other words, the performance of the ANN and LSTM models deteriorates as the prediction horizon lengthens, as seen by comparing their R2 scores at various forecast horizons in Figures 9 and 10.

For forecasting window sizes of (28,7) and (56,14), the proposed TFT has R2 scores of 0.940 and 0.921, respectively, while the original TFT has R2 scores of 0.905 and 0.892, respectively. On the other hand, the LSTM and ANN models show deteriorating performance as the forecasting horizon increases, with R2 scores of 0.882 and 0.831 for a window size of (28,7) and 0.835 and 0.818 for a window size of (56,14).

Based on the performance of all models, it can be established that the ANN and LSTM models are highly effective for short-term forecasting. However, as the forecast period becomes longer, their accuracy tends to decrease. This is evident from the findings presented in Table 3, which displays the performance of each architecture across different forecast horizons.

| Model | Window size | MAE | MSE | R2 |
|---|---|---|---|---|
| LSTM | (7,1) | 25.21 | 1619.70 | 0.926 |
| ANN | (7,1) | 21.53 | 1251.51 | 0.986 |
| CNN–LSTM | (7,1) | 56.5 | 6424.7 | 0.588 |
| CNN–LSTM-t | (7,1) | 46.492 | 4415.06 | 0.7171 |
| GRU | (7,1) | 52.35 | 5289.3 | 0.661 |
| Transformer | (7,1) | 46.62 | 4310.5 | 0.723 |
| Original TFT | (7,1) | 22.81 | 1048.81 | 0.971 |
| Proposed TFT | (7,1) | 19.29 | 957.10 | 0.992 |
| LSTM | (14,3) | 27.74 | 1883.52 | 0.902 |
| ANN | (14,3) | 29.3 | 1937.99 | 0.895 |
| CNN–LSTM | (14,3) | 50.81 | 5230.6 | 0.665 |
| CNN–LSTM-t | (14,3) | 50.54 | 4800.8 | 0.6925 |
| GRU | (14,3) | 51.9 | 5214.6 | 0.666 |
| Transformer | (14,3) | 50.92 | 5194.6 | 0.667 |
| Original TFT | (14,3) | 24.28 | 1384.27 | 0.960 |
| Proposed TFT | (14,3) | 22.55 | 1242.34 | 0.978 |
| LSTM | (28,7) | 79.20 | 5624.07 | 0.882 |
| ANN | (28,7) | 95.84 | 7850.51 | 0.831 |
| CNN–LSTM | (28,7) | 52.6 | 5160.6 | 0.669 |
| CNN–LSTM-t | (28,7) | 49.2 | 4756.3 | 0.6956 |
| GRU | (28,7) | 49.2 | 4783.7 | 0.693 |
| Transformer | (28,7) | 48.5 | 4733.6 | 0.697 |
| Original TFT | (28,7) | 46.64 | 4918.68 | 0.905 |
| Proposed TFT | (28,7) | 41.08 | 3742.34 | 0.940 |
| LSTM | (56,14) | 94.60 | 8606.80 | 0.835 |
| ANN | (56,14) | 129.71 | 11,029.39 | 0.818 |
| CNN–LSTM | (56,14) | 51.7 | 5134.4 | 0.671 |
| CNN–LSTM-t | (56,14) | 50.76 | 4813.71 | 0.691 |
| GRU | (56,14) | 55.21 | 5805.5 | 0.628 |
| Transformer | (56,14) | 48.49 | 4747.2 | 0.696 |
| Original TFT | (56,14) | 77.91 | 6842.30 | 0.892 |
| Proposed TFT | (56,14) | 63.51 | 5280.44 | 0.921 |

- Abbreviations: ANN, artificial neural network; CNN–LSTM-t, CNN–LSTM with temporal attention; GRU, gated recurrent unit; LSTM, long short-term memory; MAE, mean absolute error; MSE, mean squared error; TFT, temporal fusion transformer.
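
For reference, the MAE, MSE, and R2 columns of Table 3 can be computed as in the following sketch, which uses the standard definitions of these metrics. The sample values are placeholders chosen for easy arithmetic and are not taken from the dataset; the function names are hypothetical.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Placeholder irradiance values, not measurements from the study.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]

print(f"MAE = {mae(actual, predicted):.2f}")
print(f"MSE = {mse(actual, predicted):.2f}")
print(f"R2  = {r2_score(actual, predicted):.3f}")
```

MAE and MSE are expressed in the units of the target (and its square), so they grow with the magnitude of the series, whereas R2 is scale-free; this is why the three columns can rank models differently.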

The decrease in accuracy over longer forecast horizons may be attributed to a variety of factors, including the increased complexity of predicting outcomes over a longer period, the accumulation of errors in the predictions, or the presence of more variability in the data as the forecast horizon expands. Therefore, it is important to consider the forecast horizon and the potential limitations that may arise when evaluating the effectiveness of deep learning models for forecasting. The findings of this study suggest that while these models can provide accurate short-term forecasts, their reliability decreases over longer periods, which should be considered when selecting an appropriate forecasting model. Actual values vs. predicted values by the original TFT and proposed TFT models are shown in Figure 11.

The key advantage of combining TFT with VMD, which contributed to this superior performance, is that decomposing the raw data into manageable subsequences enhances the stability and accuracy of the forecasting process. This advantage enables our model to excel in solar irradiance forecasting.

In essence, VMD empowers the TFT model with a more informative and discriminative input representation, allowing it to learn and leverage the inherent patterns and relationships within solar irradiance data more effectively. This, combined with the model’s other features, results in the observed superior forecasting performance, making it a valuable asset for solar irradiance prediction tasks.

To estimate the robustness of our modified TFT model, we repeated training in three different random runs. Table 4 presents the average MAE, the average MSE, and their respective SDs for the proposed TFT model across different window sizes.

| Model | Window size | Avg MAE | SD of MAE | Avg MSE | SD of MSE |
|---|---|---|---|---|---|
| Proposed TFT | (7,1) | 20.14 | ±2.19 | 972.43 | ±113.29 |
| Proposed TFT | (14,3) | 21.71 | ±2.31 | 1270.95 | ±175.2 |
| Proposed TFT | (28,7) | 41.19 | ±3.34 | 3792.01 | ±343.2 |
| Proposed TFT | (56,14) | 64.04 | ±3.07 | 5210.44 | ±451.3 |

- Abbreviations: MAE, mean absolute error; MSE, mean squared error; SD, standard deviations; TFT, temporal fusion transformer.

For the (7,1) window size, the proposed TFT model exhibits an average MAE of 20.14 ± 2.19, suggesting that, on average, the model’s predictions differ from the ground truth values by ~20.14 units, with a variation of 2.19 units. The average MSE for this configuration is 972.43 ± 113.29, reflecting the squared magnitude of errors and their variability. Similarly, for the (14,3) window size, the model’s performance is characterized by an average MAE of 21.71 ± 2.31 and an average MSE of 1270.95 ± 175.2.
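
The "average ± SD" entries discussed above can be obtained from per-run metrics as in the following sketch. The three per-run MAE values are hypothetical placeholders (the individual run results are not reported here), and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population form was used.

```python
from statistics import mean, stdev


def summarize_runs(values):
    """Return (average, sample SD) of a metric over repeated training runs."""
    return mean(values), stdev(values)


# Hypothetical MAE values from three runs; not results from the study.
mae_per_run = [18.0, 20.0, 22.0]

avg, sd = summarize_runs(mae_per_run)
print(f"MAE = {avg:.2f} ± {sd:.2f}")
```

With only three runs, the SD is itself a rough estimate, so differences between window sizes that are smaller than the reported SD should be interpreted with caution.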

A comparative overview of the computational loads of four machine learning models, namely LSTM, ANN, original TFT, and proposed TFT, is presented here. The number of parameters reflects the model’s complexity: LSTM has 55.7 K, ANN 40 K, the original TFT 43.5 K, and the proposed TFT 45 K parameters. Hence, the performance of our proposed TFT improves with only a slight increase in the number of trainable parameters compared to the original TFT. In the future, we will modify our network to reduce the number of parameters and devise techniques that are efficient for long-term as well as short-term forecasting.
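
The trade-off stated above can be quantified with a few lines of arithmetic. The sketch below uses only the parameter counts reported in this paragraph and the R2 scores of the two TFT variants listed in Table 3; the variable and function names are hypothetical.

```python
# Parameter counts in thousands, as reported in the text.
PARAMS_K = {"LSTM": 55.7, "ANN": 40.0, "Original TFT": 43.5, "Proposed TFT": 45.0}

# R2 scores (original TFT, proposed TFT) per window size, as listed in Table 3.
R2_TABLE3 = {
    (7, 1): (0.971, 0.992),
    (14, 3): (0.960, 0.978),
    (28, 7): (0.905, 0.940),
    (56, 14): (0.892, 0.921),
}


def relative_increase_pct(new, old):
    """Percentage increase of `new` over `old`."""
    return 100.0 * (new - old) / old


param_increase = relative_increase_pct(PARAMS_K["Proposed TFT"], PARAMS_K["Original TFT"])
r2_gains = {w: round(prop - orig, 3) for w, (orig, prop) in R2_TABLE3.items()}

print(f"Extra parameters over the original TFT: {param_increase:.2f}%")
for window, gain in r2_gains.items():
    print(f"Window {window}: R2 gain {gain:+.3f}")
```

The proposed TFT has roughly 3.4% more parameters than the original TFT, while its R2 is higher at every window size in Table 3.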

7. Conclusion

Solar irradiance forecasting is essential for the safe and efficient operation of solar power plants and for ensuring an uninterrupted power supply. The accurate prediction of solar irradiance is challenging due to its random and intermittent nature. To improve solar irradiance forecasting, we propose a TFT combined with VMD that considers historical solar irradiance and other meteorological data. The system decomposes the historical solar irradiance series using VMD, and the decomposed modes are adopted as historical inputs for the TFT model. The experimental results show that the VMD-TFT model outperforms comparable models, such as the original TFT, LSTM, and ANN models, in terms of various indicators.

This study found that our proposed TFT model performs better than the baseline models for long-term solar irradiance forecasting, with an R2 score of 0.921 for the proposed TFT model compared to an R2 score of 0.892 for the original TFT. The ANN performs well compared to the LSTM and original TFT models when the forecasting window size is (7,1). However, the transformer-based models perform better at longer time horizons, which indicates their ability to learn long-term dependencies. In the context of solar irradiance forecasting, potential future research directions could include increasing the scope of the data used or experimenting with different time scales to examine longer time intervals.

Beyond enhancing forecasting accuracy, our research has broader implications for renewable energy grids and integration strategies. The improved prediction models can contribute to better grid stability by enabling more accurate solar power generation forecasts, which are crucial for balancing supply and demand. This, in turn, can influence policies related to renewable energy adoption and integration, promoting more efficient and reliable solar power utilization. Additionally, the insights gained from the importance of past and future variables and attention to different lag orders can guide the design of advanced forecasting systems, ultimately supporting the transition to a more sustainable and resilient energy infrastructure. Researchers and decision-makers can leverage this analysis for accurate forecasts and meticulous planning, paving the way for more effective integration of solar energy into the power grid. Attention weight patterns could also be utilized to analyze the consistent temporal patterns in solar irradiance data, such as seasonal fluctuations. These avenues of research have the potential to increase confidence in solar irradiance forecasting among human experts.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The researchers would like to thank the Deanship of Graduate Studies and Scientific Research at Qassim University for financial support (QU-APC-2024-9/1).

Acknowledgments

The authors would like to thank the research chair of Prince Faisal for Artificial Intelligence (CPFAI) at Qassim University for facilitating this research work.

Open Research

Data Availability Statement

The dataset used in this research can be downloaded from [42].