Sarcasm Detection in Sentiment Analysis Using Recurrent Neural Networks

Abstract

In recent years, the volume of opinionated text online has surged, necessitating automated analysis to extract valuable insights. Data mining and sentiment analysis have become essential for analysing this type of text. Sentiment analysis is a text classification problem with many challenges, including effective data preprocessing and sarcasm detection. Sarcasm can reduce sentiment accuracy unless the model is specifically designed to identify such nuances, because the literal content of sarcastic text conflicts with its context, which leads to ambiguity. This article examines the impact of various preprocessing methods on sentiment classification and presents a sarcasm detection–based sentiment analysis model that combines an effective preprocessing system with a robust technique for identifying sarcastic text. The preprocessing study covers the removal of stop words, whitespace, usernames, hashtags, and unnecessary URLs; mapping with contraction dictionaries; designing word reference sheets; and stemming and lemmatization. Sarcastic headlines are collected, and a word cloud is created from the frequencies of words in sarcastic headlines. Custom sarcasm detection models based on long short-term memory (LSTM), recurrent neural networks (RNNs), and word embeddings are used for sarcasm detection. The model achieves a validation accuracy of 0.88 and an F-measure of 72% for the overall system.

1. Introduction

Natural language processing (NLP) helps machines interpret human language automatically and acts as a bridge between machines and human language, making it an essential domain of artificial intelligence. Besides interpretation, NLP also supports context detection [7] in structured and unstructured data. Sentiment analysis is a subdomain of NLP that focuses on the emotion being expressed. A necessary step of sentiment analysis is data preprocessing, which transforms raw data into a format that computers and machine learning models can analyse. Preprocessing deals with errors, inconsistencies, incomplete information, and nonuniform patterns in raw data to present neatly formatted data to the machine for processing. Common preprocessing approaches include removing stop words, whitespace, usernames, hashtags, and unnecessary uniform resource locators (URLs); mapping with a contractions dictionary [1]; designing word reference sheets; stemming [2]; and lemmatization [3]. Sarcastic text negatively affects sentiment analysers, and this research contributes to detecting sarcasm in text. A word cloud is created from the frequencies of words in sarcastic headlines, and a sarcasm detection model based on LSTM [4], recurrent neural networks (RNNs) [5], and word embeddings [6] is presented.

Linguistic patterns refer to how the core parts of a sentence are put together. They reflect not only semantic behaviour but also variable features such as regional dialects, sense ambiguity [8], contextual behaviour, and slang, which add to their complexity and raise many challenges. Sentiment analysis analyses opinionated text and determines the writer's attitude towards a topic by assigning polarity; in doing so, it must address the challenges associated with language processing. Language processing can operate at several levels, including the classification of individual words or phrases. Identifying a writer's attitudes and feedback about a particular entity is known as opinion mining. Several NLP approaches are used to detect the positive or negative polarity attached to the subject of a text. Sentiment analysis faces many challenges that can reduce accuracy unless the model is specifically designed to handle them [9]. Common but critical challenges include sarcasm detection, word sense ambiguity [10], named entity recognition [11], sentiment strength, anaphora recognition [12], and parsing.

Sarcasm detection is one such challenge: people express negative opinions using neutral or positive words, which is common on social media platforms. Sarcasm detection is difficult because of the variable nature of language, and because sarcasm depends on context [13], training text classification models to recognise it is tricky. In [14], the author discusses implicit and embedded sarcasm and concludes that these forms are the most difficult to detect accurately. Approaches to detecting sarcasm in text include rule-based methods, statistical models, and deep learning, described as follows.

Rule-based approaches treat specific evidence as rules that can indicate sarcastic text. In Twitter datasets, for example, hashtag sentiment can serve as a predictor: if the sentiment of a hashtag does not match the meaning of the tweet, the tweet can be regarded as sarcastic. In [15], a novel approach to detecting self-deprecating sarcasm is proposed that combines rule-based and machine learning techniques, and it reports strong experimental results on various datasets.

Statistical approaches use machine learning models to classify sarcastic texts by learning sarcastic features from contextual information; they differ in their features and learning algorithms [16]. Most approaches use bag-of-words (BoW) features alongside contextual, n-gram, and pattern-based features, because text contains several forms of sarcasm that each require detection. Most work on sarcasm detection relies on the support vector machine (SVM), although naïve Bayes, decision trees, and regression trees are also widely discussed [17].

Deep learning architectures have made a proven contribution because they offer flexible alternatives to manual feature engineering, handle big data, detect complex trends, and are frequently used for multitask learning [18]. In [19], the similarity between word embeddings is used as a feature for sarcasm detection, resulting in improved accuracy. In recent years, deep learning approaches have outperformed recursive SVM [19].

The word “sarcasm” comes from the French “sarcasme,” ultimately from the Greek “sarkasmos,” which means “tear flesh” or “grind the teeth.” It represents bitter communication; as the Macmillan Dictionary defines it, “sarcasm is an activity of saying or writing the opposite of what you mean or speaking in a way intended to make someone else feel stupid or show them that you are angry” [17]. For example, in “It feels great being bored,” the literal meaning of the sentence differs from what the writer intends, because it is sarcastic. Sarcasm portrays negative circumstances as positive and positive situations with negative feelings. Although sarcasm conveys negative emotion, writers often use only positive words to deliver the negative message. This makes sarcasm hard to detect, especially in social media content such as tweets, reviews, blogs, and online forums, where language is more informal. Interjections are also commonly used to express sarcasm. Other common forms include contradiction of a user's known likes and dislikes, negative emotion conveyed with an antonym pair, rejection of universal facts, and denial of time-dependent facts.

To understand the preferences and behavioural patterns linked to a particular Twitter account, one can analyse the user's likes and dislikes and thereby identify instances of sarcasm. For example, if a fan regularly posts tweets celebrating Ronaldo's success, a tweet from the same account such as “I love to see Ronaldo’s failure in football” contradicts the user's established likes and can easily be marked as sarcastic. Rejection of universal facts [20] is illustrated by the tweet “the sun is revolving around the earth”: reality is the opposite, so the user is negating a universal truth, and the tweet can be detected as sarcastic. Temporal facts change over time; for example, “Pakistan played amazingly well to get an amazing win in the cricket World Cup 2015 final” contradicts the fact that Pakistan won only the 1992 World Cup, and such a contradiction can mark a tweet as sarcastic [21]. Features for sarcasm detection fall into three main categories. The first is lexical features: unigrams, bigrams, trigrams, n-grams, and hashtags. The second is pragmatic features, which include smileys, emojis, and replies. The third is hyperbolic features: interjection words (wow, oh, yay, and wah), intensifiers (adverbs and adjectives), exclamations, question marks, and quotes. Sarcasm detection applies to domains such as Twitter, online product reviews, website comments, Google Books, blogs, and online discussion forums [22].

The proposed approach uses a Twitter dataset for sarcasm detection. Twitter is a microblogging platform where users read and write short texts, historically limited to 140 characters (280 since 2017), to convey their messages. Because of this size restriction, people prefer symbolic and figurative text to share their emotions, including emoticons, exclamation marks, and interjection words, which makes such text among the most difficult to process in NLP. The primary objective of this research study is to measure the impact of preprocessing techniques on sentiment analysis and to improve accuracy by incorporating deep learning approaches for sarcasm detection together with the latest preprocessing techniques. The main contribution is an enhanced sentiment detection tool that combines state-of-the-art preprocessing methods, which facilitate accurate data analysis, with effective word embedding techniques and a novel deep learning model employing LSTM and RNN. Unlike state-of-the-art methods that often rely on conventional preprocessing or generic deep learning models, our approach integrates specific preprocessing strategies with deep learning architectures tailored to sarcasm detection. This combination enables a better understanding of sentiment and addresses challenges that traditional models struggle with, such as context ambiguity and subtle sarcasm.

The literature explores several machine learning techniques for sarcasm detection, including traditional methods such as SVM and logistic regression, alongside deep learning approaches such as RNNs and LSTMs. While classical methods offer simplicity and interpretability, deep learning models, particularly LSTMs and bidirectional LSTMs (BiLSTMs), often perform better because they capture sequential dependencies in text, which makes them well suited to detecting subtle patterns such as sarcasm. The rest of the paper is organised as follows. Section 2 reviews the literature. Section 3 describes the methodology in two parts. The first presents the impact of various preprocessing approaches on sentiment analysis and the techniques used here. The second applies the LSTM-, RNN-, and word embedding–based sarcasm detection model to sarcastic headline data, which is also visualised as a word cloud. Section 4 explains the results, and Section 5 presents the conclusion and future work.

2. Literature Review

Many research articles on sarcasm recognition have been published, proposing methods based on various techniques, including statistical models, corpus-based methods, pattern recognition, supervised or unsupervised machine learning, and deep learning. In [22], the authors proposed six algorithms for detecting sarcasm in tweets and compared them. However, because it relies on predefined rules, this approach can detect only six types of sarcastic data. In [23], the authors proposed an SVM-based model that detects sarcastic tweets using concept-level and common-sense knowledge, coherence, and machine learning. The classifier is an ensemble of two SVMs with two different feature sets. Its limitations include inappropriate concept expansion, which may lead to misclassification, with nonsarcastic sentences being perceived as sarcastic. Sarcasm conveys implicit criticism that is hard to detect, even for humans. This complexity can lead to misunderstandings in sentiment analysis and is even more pronounced on social media platforms, where content is messy and informal [24].

Researchers have argued that sarcasm is not only about the writer meaning the opposite of what they say; it also involves a broader meaning inversion that can target attitudes, behaviour, and propositional content [25]. Several machine learning algorithms are used to detect sarcasm, yet deep learning methods such as CNNs and RNNs often excel on noisy and unstructured datasets owing to their multilayer architectures [25]. Combining machine learning approaches with deep learning approaches has the potential to produce finer-grained results. Researchers have also determined that optimising preprocessing can lead to more accurate outcomes. The sarcasm detection method proposed in [26] incorporates improved preprocessing techniques and swarm optimisation to enhance results, applying bagging and boosting in parallel to limit noise. However, this approach is limited by its manually assembled dataset of only 1000 tweets, hand-labelled as sarcastic or nonsarcastic and therefore heavily human dependent; the dataset would need to be expanded to obtain better results.

Emoji and slang dictionaries are essential, especially for sarcasm detection on social media, and [27] is a notable contribution in this area, comparing different classification algorithms. The best classifier is combined with additional preprocessing and filtering approaches, and the slang and emoji dictionary is then mapped onto this better-performing model; however, the approach is applicable only to short texts. Corpus-based sarcasm detection has been applied to movie reviews to detect irony [28], but relying on humans to analyse comments demands considerable manual effort. SentiWordNet, a well-known sentiment strength detection lexicon, has been used to detect sentiment in newspaper headlines [29]. Another lexicon-based approach integrates a bootstrapping algorithm that automatically learns positive sentiment words and negative situation words; these learned words help detect sarcasm in a tweet by analysing its context [29]. When selecting the dataset, only tweets carrying the sarcasm tag were shortlisted [30].

Different types of sarcasm are also discussed broadly in the research literature. A behavioural modelling approach was proposed to detect sarcasm on Twitter, in which the SCUBA framework, which estimates the likelihood that a person is being sarcastic, was evaluated with improved results [31]. However, SCUBA needs detailed historical information to detect sarcastic tweets. In pragmatic-based classification, symbolic and figurative text in tweets (including smileys, emoticons, replies, and @user mentions), one of the most powerful features, is used to detect sarcasm. Such text is more common on platforms that limit message size. Various authors have used this pragmatic feature to detect sarcasm; one of the most important contributions adopting pragmatic-based classification uses a Hadoop-based framework [32].

Text properties such as intensifiers, interjections, quotes, and punctuation are also essential for detecting sarcasm in textual data; this approach is known as hyperbole-based text classification. It has proven precise in practical experiments, and several authors have achieved high accuracy with it, especially on tweets. Utsumi et al. [33] reviewed vital parts of speech (POS), including extreme adjectives and adverbs, and discussed how unnecessary adjectives and adverbs intensify text and reveal implicit sarcastic behaviour. The authors in [32] discussed how hyperbole, expressed through interjections and punctuation, helps detect sarcasm.

2.1. Recent Trends in Approaches of Sarcasm Detection

The use of regression-based models, once standard for classification and sarcasm detection, has declined slightly, and recent sarcasm detection approaches increasingly rely on extracted text features [34]. The benefits of automatic feature extraction have driven a clear shift towards more sophisticated techniques such as CNN and LSTM, away from SVM, simple neural networks (NN), and random forests [33]. CNNs have been widely used for language modelling, spam detection, and sentiment classification: convolution layers apply filters to the input feature matrix and pass the results through an activation function. In text, each row of this matrix is a word vector obtained from a word embedding approach. Hyperparameters associated with CNNs include padding, stride, filter size, pooling layers, and channels. Different channels can carry different input representations; for example, separate channels can be designated for Word2Vec and GloVe embeddings. This research study uses GloVe- and LSTM-based models.
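The convolution step over a sentence matrix can be sketched in NumPy. This is a minimal illustration, assuming random placeholder embeddings and filters rather than trained GloVe or Word2Vec vectors; each filter spans a window of consecutive words and the full embedding width, followed by ReLU and max-pooling over time.

```python
import numpy as np

def conv1d_text(embeddings: np.ndarray, filters: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Valid 1D convolution over a (seq_len, emb_dim) sentence matrix.

    filters has shape (num_filters, k, emb_dim): each filter covers k consecutive
    word vectors. Returns a (seq_len - k + 1, num_filters) feature map after ReLU.
    """
    seq_len, emb_dim = embeddings.shape
    num_filters, k, f_dim = filters.shape
    assert f_dim == emb_dim, "filters must span the full embedding dimension"
    out = np.empty((seq_len - k + 1, num_filters))
    for t in range(seq_len - k + 1):
        window = embeddings[t:t + k]  # (k, emb_dim) slice of consecutive word vectors
        # Multiply each filter element-wise with the window and sum over both axes.
        out[t] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(out, 0.0)  # ReLU activation

def max_over_time(feature_map: np.ndarray) -> np.ndarray:
    """Max-pooling over the time axis: one value per filter."""
    return feature_map.max(axis=0)

rng = np.random.default_rng(0)
sentence = rng.normal(size=(7, 50))    # 7 words, 50-d placeholder embeddings
filters = rng.normal(size=(4, 3, 50))  # 4 filters, each spanning 3 words
bias = np.zeros(4)

fmap = conv1d_text(sentence, filters, bias)
pooled = max_over_time(fmap)
print(fmap.shape, pooled.shape)  # (5, 4) (4,)
```

The pooled vector has one entry per filter regardless of sentence length, which is why CNN text classifiers can feed it straight into a dense output layer.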

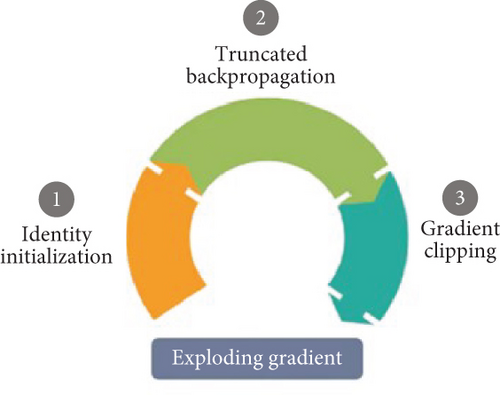

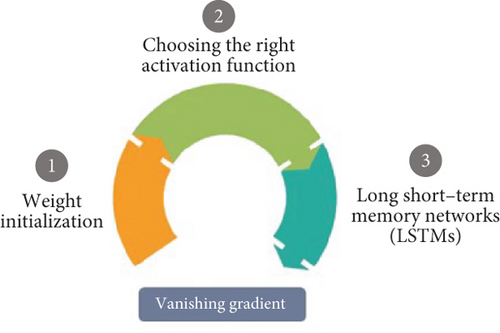

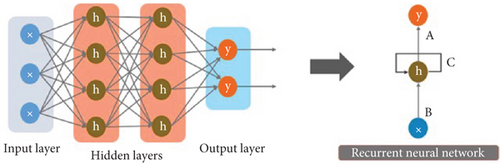

RNNs are equally important for text prediction, as they process text sequentially and thus take context into account. The RNN structure is susceptible to the vanishing gradient problem, in which gradients shrink to such small values that the network becomes impossible to train. LSTM and other gated RNNs are used to compensate for vanishing gradients, with improved results. LSTM has proven successful in sarcasm detection because it mitigates the gradient issues illustrated in Figures 1 and 2 and captures long-term dependencies between inputs. This behaviour is regulated by sigmoid-activated gates, which makes an LSTM cell more complex than a plain RNN cell. Exploding and vanishing gradients are the two common challenges of training deep learning models. The exploding gradient issue is presented in Figure 1: gradients grow uncontrollably during backpropagation, leading to unstable updates and divergence in model training. This often occurs in deep networks with improper weight initialisation or high learning rates.
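The role of the sigmoid gates can be made concrete with one LSTM time step written out in NumPy. This is a minimal sketch of the standard LSTM equations with random placeholder weights; the stacking order of the gates (input, forget, output, candidate) is an arbitrary choice here and differs between libraries.

```python
import numpy as np

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) bias,
    stacked as [input gate, forget gate, output gate, candidate].
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell state to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate values for the cell state
    c = f * c_prev + i * g       # additive update lets gradients flow across many steps
    h = o * np.tanh(c)           # hidden state passed to the next step / layer
    return h, c

rng = np.random.default_rng(1)
D, H = 8, 16
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(5, D)):  # run over a 5-step toy sequence
    h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)  # (16,) (16,)
```

Because the cell state is updated additively (f * c_prev + i * g) rather than by repeated matrix multiplication alone, the forget gate can keep information, and its gradient, alive over long spans.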

In contrast, Figure 2 highlights the vanishing gradient problem, where gradients shrink exponentially as they propagate backwards, making it difficult for earlier layers to learn meaningful representations. This is particularly problematic for RNNs and deep feedforward networks. Techniques such as gradient clipping, proper weight initialisation, and activation function choices are commonly employed to mitigate these issues. Understanding these problems is crucial for improving model stability and achieving better performance in sentiment analysis tasks.
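Both problems, and the gradient-clipping remedy, can be shown with simple arithmetic. This is a deliberately simplified sketch: it models backpropagation through time as repeated multiplication by a single scalar recurrent weight and ignores activation derivatives, which real networks also contribute.

```python
import math

def backprop_factor(recurrent_weight: float, steps: int) -> float:
    """Magnitude of a gradient after flowing back through `steps` identical
    linear recurrent steps: it is scaled by the weight at every step."""
    grad = 1.0
    for _ in range(steps):
        grad *= recurrent_weight
    return abs(grad)

def clip_by_norm(grad: list[float], max_norm: float) -> list[float]:
    """Rescale a gradient vector so that its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    return [g * max_norm / norm for g in grad]

print(backprop_factor(0.5, 50))  # vanishing: about 8.9e-16
print(backprop_factor(1.5, 50))  # exploding: about 6.4e+08
print(clip_by_norm([30.0, 40.0], max_norm=5.0))  # [3.0, 4.0]
```

Clipping keeps the gradient's direction and only limits its size, which stabilises updates in the exploding case; it does nothing for the vanishing case, which is why gated cells such as LSTM are used as well.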

Embedding approaches are crucial for detecting sarcasm accurately in deep learning–based systems. They map words to vectors in a real-valued vector space, and words with similar meanings usually receive similar vectors, which form clusters. These vector representations serve as input to neural networks. Word embeddings can be either static or contextual: a static embedding assigns each word a single vector that encodes its general meaning regardless of context (an approach also known as semantic modelling), whereas a contextual embedding produces different vectors for the same word in different contexts. For example, “oven” and “microwave” have very similar vector representations, whereas “onion” has a quite different one. GloVe and Word2Vec are two widely adopted methods for word representation. Word2Vec learns each word's vector from the words that surround it, and the resulting vectors can, if required, be fed into a downstream deep learning model. Words with low cosine similarity are dissimilar, and words with high cosine similarity are similar. GloVe learns word vectors from global word co-occurrence statistics, combining local context information with corpus-wide counts.
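The cosine-similarity comparison can be sketched directly. The three 3-dimensional vectors below are hypothetical values chosen by hand to mirror the oven/microwave/onion example; real GloVe or Word2Vec vectors are learned and commonly have 50 to 300 dimensions.

```python
import math

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """cos(u, v) = (u . v) / (||u|| * ||v||), ranging from -1 to 1."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical hand-made vectors for illustration only (not trained embeddings).
vectors = {
    "oven": [0.9, 0.8, 0.1],
    "microwave": [0.85, 0.75, 0.2],
    "onion": [0.1, 0.2, 0.95],
}

sim_close = cosine_similarity(vectors["oven"], vectors["microwave"])
sim_far = cosine_similarity(vectors["oven"], vectors["onion"])
print(round(sim_close, 3), round(sim_far, 3))  # high for oven/microwave, low for oven/onion
```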

Contextual word embeddings model the probability distribution of a word's contexts and distinguish it from similar words by generating context-dependent vector representations from a trained model. Deep learning approaches are widely used to detect sarcasm. A deep learning model combining CNN and LSTM layers for sarcasm detection was proposed in [35], where two datasets were created for training and testing. The training dataset consists of 18,000 sarcastic tweets annotated with the hashtag #sarcasm and 21,000 nonsarcastic tweets; the other dataset contains 2000 tweets annotated by the researchers. The input was padded so that its size matched the word embedding dimension of 256. The input layer was fed into a two-layer CNN with sigmoid activation and then through a pooling layer into a two-layer LSTM, whose output was fed into a fully connected DNN with a softmax output, achieving an F1 score of 0.921. Recursive SVM was also evaluated and performed poorly, with an F1 score of 0.732.

Another recent approach for sarcasm detection, proposed in [36], combines BLSTM, CNN, and word embeddings in the site-BLSTM model, which was trained and tested on two datasets. The first dataset was balanced, containing 7994 tweets labelled sarcastic and 7324 labelled nonsarcastic, derived from the SemEval 2015 task. The second dataset was unbalanced, containing 15,000 sarcastic and 25,000 nonsarcastic tweets annotated by automatic sarcasm detectors. The input tweet text is passed through a GloVe embedding layer to generate word vectors for the BLSTM layer. A softmax attention layer produces an output vector that is combined with an auxiliary feature vector and fed into a CNN with ReLU activation and a max-pooling layer, followed by a softmax output layer that classifies the text as sarcastic or nonsarcastic. This model achieved an F-measure of 0.916 on the SemEval dataset and 0.8828 on the imbalanced random tweet dataset. Another approach, A2Text-Net [37], uses a deep neural network to mimic face-to-face speech by integrating auxiliary features such as punctuation, POS, numerals, and emojis to enhance classification performance. A2Text-Net is reported to outperform conventional machine learning and deep learning algorithms, achieving an F-measure of 80% on Twitter; DNN and LSTM on the same dataset scored 79% and 80%, respectively.

3. Methodology

3.1. Text Preprocessing

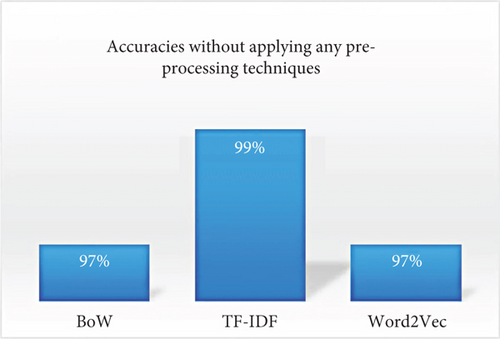

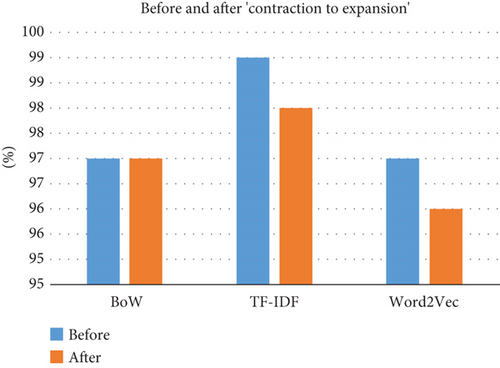

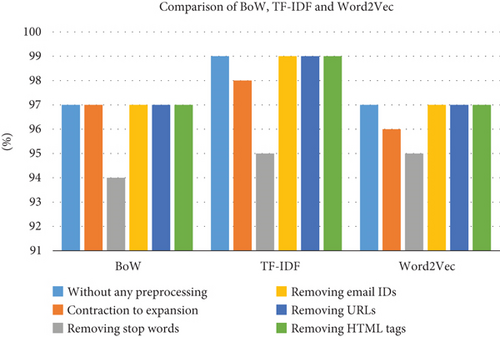

This section discusses the results obtained before and after applying several preprocessing methods individually. The impact of preprocessing is assessed by observing changes in accuracy on the sentiment classification task. With no preprocessing, term frequency–inverse document frequency (TF-IDF) features outperform BoW and Word2Vec: as shown in Figure 3, TF-IDF reaches a maximum accuracy of 99% without any preprocessing. This is because TF-IDF assigns lower weight to frequently occurring words than to less frequent ones. When contractions are expanded, the accuracy of TF-IDF and Word2Vec drops by 1% each, while the accuracy of BoW remains unchanged. Contraction expansion therefore does not improve performance, and, as Figure 4 shows, it is an ineffective preprocessing technique.
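Why TF-IDF down-weights frequent words can be seen in a minimal sketch of the textbook formula, tf = count / document length and idf = log(N / df). This is one common variant chosen for clarity; libraries such as scikit-learn use a smoothed idf and row normalisation, so their absolute values differ. The three reviews are made up.

```python
import math
from collections import Counter

def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Plain TF-IDF weights for each term in each tokenised document."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # document frequency
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Hypothetical, already tokenised reviews.
docs = [
    "the phone is great".split(),
    "the battery is terrible".split(),
    "the screen is great".split(),
]
w = tf_idf(docs)
print(w[0]["the"])                       # 0.0: "the" occurs in every document
print(w[1]["terrible"] > w[0]["great"])  # True: the rarer term gets more weight
```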

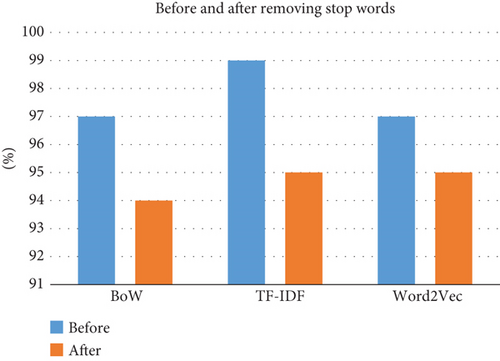

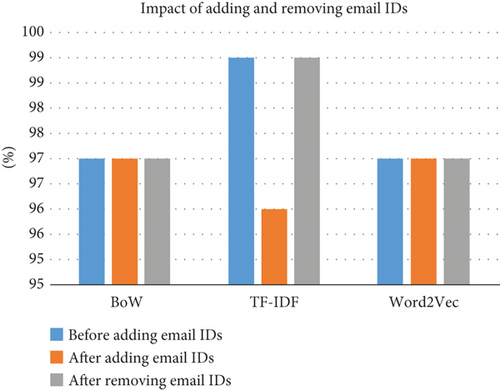

When stop words are removed from the dataset, the accuracy of all models drops: TF-IDF by 5%, BoW by 3%, and Word2Vec by 2%, as shown in Figure 5. Many previous studies, such as [2–9], found stop-word removal useful for sentiment classification, but we found that it adversely affects sentiment classification, as also reported by [10–13]. The classic method of removing stop words is based on precompiled lists, and these lists include the words “no,” “nor,” and “not,” whose removal can turn a negative sentiment into a positive one. For example, suppose a user reviews a product with the single statement “I am not happy with this product and hence not recommended.” After stop-word removal, the review becomes “happy product hence recommended,” so a negative review is counted as a positive one. To assess the impact of email IDs in the dataset, we add a randomly generated email ID to each review and measure the accuracy of all models. We then remove the email IDs with a regular expression and record the accuracies again to study the change. The accuracy of TF-IDF drops by 3%, while that of BoW and Word2Vec remains unchanged, as illustrated in Figure 6.
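The negation problem can be reproduced with a few lines of Python. The stop list below is a small hypothetical subset for illustration; full precompiled lists such as NLTK's English list are much longer and likewise contain "no", "nor", and "not". The `keep_negations` option shows one simple mitigation.

```python
import re

# Hypothetical stop-word subset for illustration (not the full NLTK list).
STOP_WORDS = {"i", "am", "not", "no", "nor", "with", "this", "and", "the", "a", "is"}
NEGATIONS = {"no", "nor", "not"}

def remove_stop_words(text: str, keep_negations: bool = False) -> str:
    """Lowercase, tokenise on letters/apostrophes, and drop stop words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    stop = STOP_WORDS - NEGATIONS if keep_negations else STOP_WORDS
    return " ".join(t for t in tokens if t not in stop)

review = "I am not happy with this product and hence not recommended."
print(remove_stop_words(review))                       # happy product hence recommended
print(remove_stop_words(review, keep_negations=True))  # not happy product hence not recommended
```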

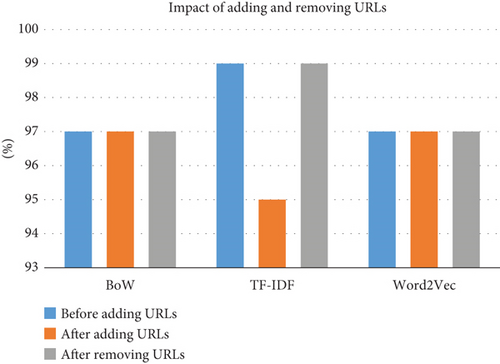

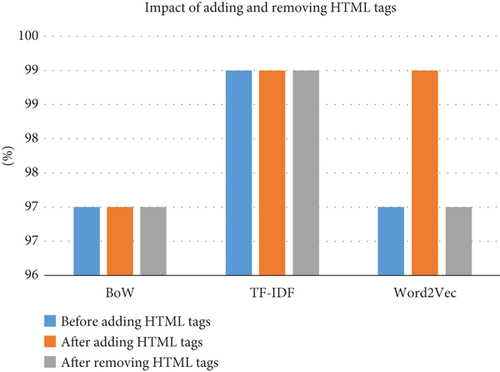

To assess the impact of URLs in the dataset, we add a randomly generated URL to each review, calculate the accuracies, remove the added URLs with a regular expression, and calculate the accuracies again. Removing URLs is an effective preprocessing technique because it reduces the vocabulary size. It has no impact on the performance of BoW and Word2Vec, whereas for TF-IDF, accuracy decreases by 3%, as shown in Figure 7. Similarly, to check the impact of HTML tags, we first add randomly generated HTML tags to each record and record the accuracies, then remove the tags with a regular expression and record the accuracies of BoW, TF-IDF, and Word2Vec again. Figure 8 shows that removing HTML tags is an effective preprocessing technique because it reduces the vocabulary size without affecting performance. However, some inconsistency appears for Word2Vec when HTML tags were added to the dataset, for which we could not find a justification.

- Contraction to expansion: Short forms of common phrases are frequently used in text; for example, can’t, it’s, I’d, wasn’t, and he’ll stand for “cannot,” “it is,” “I would,” “was not,” and “he will,” respectively. The expanded forms state the meaning more explicitly than the contracted forms, so expanding such words is preferred.
- Removing stop words: Stop words are the most commonly used words in a language. Removing them is common in many applications, and the usual rationale is that removing the most widely used terms lets the model focus on the essential words instead.
- Removing URLs: A URL addresses a unique resource on the Web. Some reviewers include a URL in their review to refer to another online resource.
- Removing HTML tags: Reviews collected from websites commonly contain HTML tags; for example, `<br>`, `<h1>`, and `<b>` are widely used tags for line breaks, headings, and bold text, respectively.
- Removing email IDs: Email IDs that appear in reviews are removed.
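The URL, HTML-tag, and email-ID removal steps can be sketched with Python's `re` module. The patterns below are deliberately simple assumptions, not the expressions used in the study; they cover common cases but are not complete URL or email grammars.

```python
import re

# Simplified patterns (assumptions for illustration).
URL_RE = re.compile(r"(?:https?://|www\.)\S+")
HTML_TAG_RE = re.compile(r"<[^>]+>")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
SPACES_RE = re.compile(r"\s+")

def clean_review(text: str) -> str:
    """Replace email IDs, URLs, and HTML tags with spaces, then collapse whitespace."""
    text = EMAIL_RE.sub(" ", text)
    text = URL_RE.sub(" ", text)
    text = HTML_TAG_RE.sub(" ", text)
    return SPACES_RE.sub(" ", text).strip()

raw = ("Great phone!<br>Contact me at jane.doe@example.com or see "
       "https://example.com/review?id=1 <b>5 stars</b>")
print(clean_review(raw))  # Great phone! Contact me at or see 5 stars
```

Replacing matches with a space rather than deleting them avoids gluing neighbouring words together (for example, "phone!" and "Contact" around the `<br>` tag).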

For this experiment, a manually cleaned dataset of 500 text reviews (250 positive and 250 negative) is selected. SVM is chosen for classification, as it is the most frequently used conventional machine learning algorithm for sentiment analysis, and it is combined with three feature extraction models: BoW, TF-IDF, and Word2Vec.

The collected dataset contains noise that must be removed before it is fed to the model. Many algorithms and libraries are used to clean data, supporting tasks such as stop-word removal, named entity recognition, and POS tagging. The Natural Language Toolkit (NLTK) is a mature, relatively simple library that offers a lot for preprocessing, so NLTK is used to perform the preprocessing steps discussed here.

Tokenisation and necessary replacements: The dataset is tokenised, and emoticons, punctuation, and symbols are either replaced or removed. Emojis, punctuation, and symbols in tweets cannot be overlooked, as they may convey valuable sarcasm-related information.

A word reference sheet is constructed that maps these signs to references that can be positive, negative, or neutral. Each sign found in the reference sheet is assigned its corresponding tag so that it can be incorporated later; other signs are removed.
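A word reference sheet of this kind amounts to a lookup table. The entries and tag names below are hypothetical, since the study's actual sheet is not given; the sketch replaces known signs with a positive, negative, or neutral tag, keeps ordinary words, and drops unmapped symbol-only tokens.

```python
# Hypothetical reference sheet: entries and tag names are illustrative assumptions.
REFERENCE_SHEET = {
    ":)": "POS_EMOTICON",
    ":-)": "POS_EMOTICON",
    ":D": "POS_EMOTICON",
    ":(": "NEG_EMOTICON",
    ":-(": "NEG_EMOTICON",
    ":|": "NEU_EMOTICON",
}

def apply_reference_sheet(tokens: list[str]) -> list[str]:
    """Map signs found in the sheet to their tag, keep words, drop other symbols."""
    out = []
    for tok in tokens:
        if tok in REFERENCE_SHEET:
            out.append(REFERENCE_SHEET[tok])  # sign with a known polarity tag
        elif any(ch.isalnum() for ch in tok):
            out.append(tok)                   # ordinary word: keep unchanged
        # otherwise: a symbol-only token not in the sheet is removed
    return out

print(apply_reference_sheet(["love", "mondays", ":)", "#", ":("]))
# ['love', 'mondays', 'POS_EMOTICON', 'NEG_EMOTICON']
```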

Contraction dictionary: Contractions are expanded by creating a lexicon and then using a regular expression to map words to the lexicon.
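The lexicon-plus-regular-expression approach can be sketched as follows, assuming a tiny hypothetical lexicon; a practical dictionary has many more entries and must decide how to handle ambiguous forms such as "he'd" ("he had" or "he would"). Expansions are emitted in lowercase, which is harmless because the text is lowercased later anyway.

```python
import re

# Tiny illustrative lexicon (assumption, not the study's dictionary).
CONTRACTIONS = {
    "can't": "cannot",
    "it's": "it is",
    "i'd": "i would",
    "wasn't": "was not",
    "he'll": "he will",
    "don't": "do not",
}

# One alternation over all keys, longest first so longer forms are tried first.
CONTRACTION_RE = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in sorted(CONTRACTIONS, key=len, reverse=True)) + r")\b",
    flags=re.IGNORECASE,
)

def expand_contractions(text: str) -> str:
    text = text.replace("\u2019", "'")  # normalise curly apostrophes common in tweets
    return CONTRACTION_RE.sub(lambda m: CONTRACTIONS[m.group(0).lower()], text)

print(expand_contractions("It's great, but I can't say it wasn't pricey."))
# it is great, but I cannot say it was not pricey.
```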

Stop word and extra space removal: All text is converted to lowercase, and punctuation is removed. Stop words such as this, that, and here are the most common words; they add noise to the data without providing valuable information, so NLTK is used to remove them along with extra spaces. Usernames, hashtags, and unnecessary URLs are also removed.
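The tweet-level cleaning can be sketched with the standard library. The regular expressions are simplified assumptions, and stop-word removal is omitted to keep the example focused; URLs are removed before punctuation so that their fragments do not survive as stray tokens.

```python
import re
import string

USERNAME_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#\w+")
URL_RE = re.compile(r"(?:https?://|www\.)\S+")

def clean_tweet(text: str) -> str:
    text = text.lower()
    text = URL_RE.sub(" ", text)       # unnecessary URLs (before punctuation removal)
    text = USERNAME_RE.sub(" ", text)  # @usernames
    text = HASHTAG_RE.sub(" ", text)   # whole hashtags, as described above
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())      # collapse extra whitespace

print(clean_tweet("@john Loving this  traffic jam!!! #sarcasm https://t.co/xyz"))
# loving this traffic jam
```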

Stemming and lemmatization are also applied: stemming reduces a word to its root by stripping affixes, while lemmatization reduces it to its lemma by matching it against a language dictionary.
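The difference between the two can be illustrated with toy versions of each. The stemmer below is a deliberately naive suffix stripper, not the Porter algorithm that NLTK's `PorterStemmer` implements, and the lemma table is a hypothetical stand-in for a lexical resource such as WordNet.

```python
# Naive suffix-stripping stemmer for illustration only (not Porter).
SUFFIXES = ("ing", "ed", "ly", "es", "s")

def naive_stem(word: str) -> str:
    """Strip the first matching suffix if at least 3 characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hypothetical mini lemma dictionary standing in for a real lexicon.
LEMMAS = {"studies": "study", "better": "good", "was": "be", "running": "run"}

def dictionary_lemma(word: str) -> str:
    return LEMMAS.get(word, word)

for w in ["studies", "running", "better", "jumped"]:
    print(w, naive_stem(w), dictionary_lemma(w))
# studies studi study
# running runn run
# better better good
# jumped jump jumped
```

The output shows the typical trade-off: stems such as "studi" need not be real words, while a dictionary lookup returns valid lemmas but only for words it knows.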

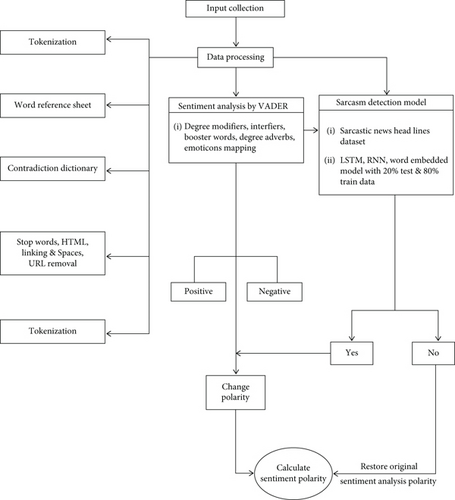

The proposed approach calculates the sentiment polarity of tweets while incorporating sarcasm detection. The test dataset is preprocessed and passed to VADER, a text sentiment analysis tool, to calculate sentiment polarity. VADER is available in the NLTK package and can be applied directly to unlabelled text; it relies on a dictionary that maps lexical features to sentiment intensities. It implements grammatical and syntactical rules that quantify how these features shift the perceived intensity of sentiment. Word-order-sensitive relationships are incorporated: the polarity score accounts for degree modifiers, intensifiers, booster words, and degree adverbs, which increase or decrease sentiment strength.

The overall sentiment score is calculated by summing the intensities of the individual words. Each tweet is assigned four scores: compound, positive, negative, and neutral. The compound score is the sum of the valence scores of each word in the lexicon, adjusted according to the rules and then normalised between −1 (extremely negative) and +1 (extremely positive). The positive, negative, and neutral scores give the proportions of the text that fall in each category. The thresholds used to assign polarity labels are as follows: positive sentiment (compound score ≥ 0.05), neutral sentiment (−0.05 < compound score < 0.05), and negative sentiment (compound score ≤ −0.05).
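The normalisation and thresholding can be sketched as follows. The open-source vaderSentiment implementation maps the summed valence x to x / sqrt(x² + α) with α = 15; the per-word valences below are invented for illustration, and the sketch omits VADER's rule adjustments.

```python
import math

def normalize(score: float, alpha: float = 15.0) -> float:
    """Map an unbounded sum of valences into (-1, 1): x / sqrt(x^2 + alpha)."""
    return score / math.sqrt(score * score + alpha)

def polarity_label(compound: float) -> str:
    """Threshold rule described in the text."""
    if compound >= 0.05:
        return "positive"
    if compound <= -0.05:
        return "negative"
    return "neutral"

# Hypothetical, already rule-adjusted valences of the words in one tweet.
valences = [1.9, 0.0, 2.4]
compound = normalize(sum(valences))
print(round(compound, 4), polarity_label(compound))
```

In NLTK, these scores come from `SentimentIntensityAnalyzer().polarity_scores(text)`, which returns a dictionary with `neg`, `neu`, `pos`, and `compound` keys once the `vader_lexicon` resource has been downloaded.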

A polarity label is assigned to each tweet based on its compound score. The labelled dataset is then passed to the sarcasm detection engine, which determines whether each text is sarcastic and assigns a label of 1 to sarcastic text. The polarity label of each sarcastic tweet is then reversed.
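The final adjustment step can be sketched as a small function. The text says only that the polarity label of a sarcastic tweet is reversed; leaving "neutral" unchanged is an assumption made here.

```python
# Reversal map; keeping "neutral" as-is is an assumption, not stated in the text.
FLIP = {"positive": "negative", "negative": "positive", "neutral": "neutral"}

def adjust_for_sarcasm(label: str, is_sarcastic: int) -> str:
    """Reverse the VADER polarity label when the sarcasm engine outputs 1."""
    return FLIP[label] if is_sarcastic == 1 else label

tweets = [("positive", 1), ("negative", 0), ("neutral", 1)]
print([adjust_for_sarcasm(lbl, s) for lbl, s in tweets])
# ['negative', 'negative', 'neutral']
```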

3.2. Sarcasm Detection Approach

Most research has used Twitter datasets to detect sarcasm. However, datasets collected from social media contain informal content and noisy language, such as slang, abbreviations, and spelling mistakes, which complicates processing. Sarcasm detection also requires the contextual tweets to be available. For these reasons, this approach uses a headline dataset collected from the Onion website, which publishes sarcastic takes on current events, together with nonsarcastic news headlines from HuffPost. The dataset has 4890 headlines and is a practical resource for training sarcasm detection models because of its rich contextual cues and real-world sarcastic expressions. It has low sparsity, and because the headlines are written formally by professionals without spelling mistakes, pretrained embeddings are more likely to exist for their words. The dataset is less noisy and has higher-quality labels than Twitter datasets because the Onion is meant to publish sarcastic news. It is also self-contained, unlike tweets, which are sometimes replies to other tweets whose original context is unavailable. Each record has three attributes: is-sarcastic (Boolean), the news headline itself, and a link to the original news article.
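Loading such records can be sketched as below. This assumes a JSON-lines file whose keys follow the publicly released news-headlines sarcasm dataset (`is_sarcastic`, `headline`, `article_link`); treat those names as an assumption and adjust them if your copy differs. The two sample records are made up.

```python
import json

def load_headlines(lines):
    """Parse JSON-lines records into (headlines, labels, links), skipping blank lines."""
    headlines, labels, links = [], [], []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        rec = json.loads(line)
        headlines.append(rec["headline"])
        labels.append(int(rec["is_sarcastic"]))  # Boolean attribute stored as 0/1
        links.append(rec["article_link"])
    return headlines, labels, links

# Made-up records in the assumed format.
sample = [
    '{"is_sarcastic": 1, "headline": "area man thrilled to attend fourth meeting today", "article_link": "https://example.com/a"}',
    '{"is_sarcastic": 0, "headline": "senate passes budget bill", "article_link": "https://example.com/b"}',
]
headlines, labels, links = load_headlines(sample)
print(labels)  # [1, 0]
```

With a file on disk, the same function accepts an open file handle, for example `with open(path, encoding="utf-8") as f: load_headlines(f)`.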

Sarcastic headlines are collected, and a word cloud is created from the frequencies of the words in the sarcastic headlines. The proposed approach uses LSTM because combining RNNs, LSTM cells, and word embeddings makes sarcasm detection efficient and allows statements from Twitter to be classified easily. Pretrained GloVe embeddings, used with an RNN in [38], are adopted in this study. The details of the proposed methodology are presented in Figure 10. The RNN architecture is best known for time-series prediction. It maintains a hidden state that acts as memory across time steps, which allows it to process sequence data efficiently and to predict future outputs from sequential inputs.
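
The word cloud is driven by word frequencies in the sarcastic headlines. The sketch below computes those frequencies with the standard library; the tokenisation regex and the small stop-word list are assumptions, and the headlines are invented. The resulting mapping is the kind of input that, for example, the `wordcloud` package's `WordCloud.generate_from_frequencies` accepts, although the source does not state which tool was used.

```python
import re
from collections import Counter
from typing import Iterable

# A tiny illustrative stop-word list; a real pipeline would use a fuller one (e.g. NLTK's).
STOP_WORDS = {"a", "an", "the", "to", "of", "in", "for", "on", "and", "is", "at"}

def word_frequencies(headlines: Iterable[str]) -> Counter:
    """Count lower-cased alphabetic tokens across headlines, skipping stop words."""
    counts = Counter()
    for headline in headlines:
        tokens = re.findall(r"[a-z']+", headline.lower())
        counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts

sarcastic = [
    "area man thrilled to attend another meeting",
    "area woman thrilled by another email",
]
freqs = word_frequencies(sarcastic)
print(freqs.most_common(3))
```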

This approach modifies the standard RNN architecture by incorporating LSTM cells to mitigate the vanishing-gradient problem. Additionally, the model integrates a bidirectional LSTM (BiLSTM) to capture both past and future context and an attention mechanism that weighs the words most important for sarcasm detection. These modifications enhance the model's ability to detect sarcasm by improving its contextual understanding and focusing it on key features.
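
To illustrate how an attention layer can weigh words, the sketch below pools a sequence of per-token hidden states (for instance, concatenated forward and backward BiLSTM outputs) into a single vector using softmax-normalised scores. It uses a dot-product score against a context vector, which is one common formulation; the source does not specify the attention variant, so this choice is an assumption, and all numbers are made up.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(states: List[Vector], context: Vector) -> Tuple[List[float], Vector]:
    """Score each hidden state against a context vector; return weights and the weighted sum."""
    scores = [sum(h_i * c_i for h_i, c_i in zip(h, context)) for h in states]
    weights = softmax(scores)
    dim = len(states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, states)) for d in range(dim)]
    return weights, pooled

# Hypothetical 4-dimensional hidden states for the tokens of "oh great another monday".
tokens = ["oh", "great", "another", "monday"]
states = [[0.1, 0.0, 0.2, 0.1],
          [0.9, 0.7, 0.1, 0.8],
          [0.2, 0.1, 0.3, 0.0],
          [0.4, 0.3, 0.6, 0.2]]
context = [1.0, 1.0, 0.0, 1.0]  # in a trained model this vector is learned
weights, pooled = attention_pool(states, context)
for tok, w in zip(tokens, weights):
    print(f"{tok:>8}: {w:.3f}")
```

The pooled vector, rather than only the last hidden state, would then feed the classifier, so tokens with high weights (here "great") contribute most.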

3.3. Test Train Split

For the train–test split, 20% of the data is reserved for testing and 80% for training. The maximum sequence length is set to 25 tokens; this value can be tuned experimentally to see whether accuracy improves. The Keras tokeniser vectorises the text corpus, converting each text input either into a sequence of integers or into a vector with a coefficient for each token (for example, a binary value). The tokeniser is fitted on the headlines, which computes the word index, and the headlines are then converted into integer sequences that form the tensors passed to the model. The length of the word index equals the number of unique tokens, and the vocabulary size is this number plus one, because index 0 is reserved for padding. Headlines shorter than 25 tokens are padded with zeros, and longer headlines are truncated, so exactly 25 tokens of each headline are passed to the model. The data are shuffled to reduce bias in the dataset. Finally, the training and test tensors are constructed, and the sizes of the training and test sets are reported.
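
These preprocessing steps can be approximated in plain Python as shown below. The sketch mimics, rather than calls, the Keras tokeniser and padding utilities: it uses simple whitespace tokenisation, and indices are assigned by descending word frequency starting at 1 so that 0 stays free for padding. Padding and truncation here happen at the end of each sequence; Keras's `pad_sequences` defaults to `'pre'` for both, so this choice is an assumption. The 80/20 split and the maximum length of 25 come from the text; the seed, helper names, and sample headlines are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Tuple

MAX_LEN = 25

def fit_word_index(texts: Sequence[str]) -> Dict[str, int]:
    """Assign ids by descending frequency from 1 (0 reserved for padding); ties broken alphabetically."""
    counts = Counter(w for t in texts for w in t.lower().split())
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {w: i + 1 for i, w in enumerate(ranked)}

def to_padded(texts: Sequence[str], word_index: Dict[str, int],
              max_len: int = MAX_LEN) -> List[List[int]]:
    seqs = []
    for t in texts:
        ids = [word_index[w] for w in t.lower().split() if w in word_index]
        ids = ids[:max_len]                            # truncate long headlines
        seqs.append(ids + [0] * (max_len - len(ids)))  # zero-pad short ones
    return seqs

def shuffle_split(X: List[List[int]], y: List[int], test_fraction: float = 0.2,
                  seed: int = 42) -> Tuple[list, list, list, list]:
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)  # shuffle to reduce ordering bias
    n_test = int(len(idx) * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])

headlines = ["area man thrilled by meeting"] * 5 + ["council approves new budget"] * 4
headlines.append(" ".join(["word"] * 30))  # longer than MAX_LEN, so it gets truncated
labels = [1] * 5 + [0] * 5
word_index = fit_word_index(headlines)
vocab_size = len(word_index) + 1
X = to_padded(headlines, word_index)
X_train, y_train, X_test, y_test = shuffle_split(X, labels)
print("vocabulary size:", vocab_size)
print("train:", len(X_train), "test:", len(X_test))
```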

Distributed word representations are obtained from GloVe, an unsupervised learning algorithm for obtaining vector representations of words. It maps words into a meaningful space in which the distance between words is related to their semantic similarity. The pretrained GloVe Twitter vectors are loaded into a dictionary that maps each word (key) to its vector representation (value), and a word's representation is obtained by looking it up in that dictionary. The next step is to create the embedding layer, which needs an embedding matrix whose shape is the vocabulary size times the embedding dimension; here, the embedding dimension is 100. To build the matrix, each word in the vocabulary is looked up in the GloVe dictionary: if a vector is found, it becomes that word's row; otherwise, the row contains zeros. The Keras Embedding layer is then constructed with this pretrained matrix. The network is built with the Keras Sequential API, the easiest way to stack layers into a neural network. The embedding layer is added as the first layer, followed by an LSTM layer with 64 units, a dropout of 0.2, and a recurrent dropout of 0.25. These hyperparameters can be tuned to obtain better results. The output layer contains a single node with a sigmoid activation. Because this is a binary classification problem, binary cross-entropy is used as the loss function and Adam as the optimiser.
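
The embedding-matrix construction can be sketched with NumPy. GloVe vector files are plain text with one word followed by its vector components per line; the toy lines below stand in for the real 100-dimensional Twitter vectors and use 3 dimensions to stay short. The helper names are hypothetical. In Keras, the resulting matrix would then be used to initialise the Embedding layer's weights.

```python
from typing import Dict, Iterable
import numpy as np

def load_glove(lines: Iterable[str]) -> Dict[str, np.ndarray]:
    """Parse GloVe text lines of the form 'word v1 v2 ... vd' into a dictionary."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue
        vectors[parts[0]] = np.asarray(parts[1:], dtype=np.float32)
    return vectors

def build_embedding_matrix(word_index: Dict[str, int],
                           vectors: Dict[str, np.ndarray], dim: int) -> np.ndarray:
    """Row i holds the vector of the word with index i; words without a vector (and row 0) stay zero."""
    matrix = np.zeros((len(word_index) + 1, dim), dtype=np.float32)  # assumes ids run 1..n
    for word, i in word_index.items():
        vec = vectors.get(word)
        if vec is not None:
            matrix[i] = vec
    return matrix

glove_lines = ["area 0.1 0.2 0.3", "man 0.4 0.5 0.6", "thrilled -0.1 0.0 0.9"]
word_index = {"area": 1, "man": 2, "thrilled": 3, "zxqv": 4}  # "zxqv" has no GloVe vector
E = build_embedding_matrix(word_index, load_glove(glove_lines), dim=3)
print(E.shape)
```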

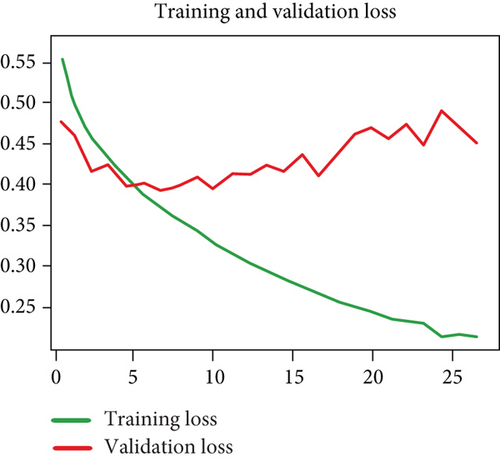

The learning and training process used TensorFlow and Keras. The task was binary classification, distinguishing sarcastic from nonsarcastic content. The models were trained on a 2020 MacBook Pro equipped with an Intel i5 processor and 16 GB of RAM. Training took almost 6 h for 25 epochs. The model reached an accuracy of 0.78 after the first epoch and 0.88, close to 90%, after 25 epochs. After five epochs, the training and validation accuracy curves overlapped, and training accuracy increased to 0.88, as presented in Figure 5. The model shows no considerable overfitting, which is a significant sign: both training and validation loss decrease over the epochs. Test sentences are then passed to the model, which classifies each statement as sarcastic or nonsarcastic. Training and validation accuracy and loss are presented graphically in Figures 12 and 13.

4. Evaluation and Results

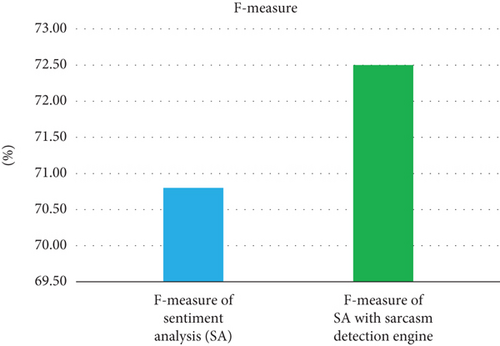

Detecting sarcasm to improve sentiment scores is a widely studied problem. The proposed technique was evaluated using the F-measure, training loss, and validation loss. Low training and validation loss indicate that the model learned patterns in the data effectively without overfitting, leading to better generalisation. As the model minimised loss during training, its balance of precision and recall improved, raising the F-measure. A higher F-measure indicates that the model reduced both false positives and false negatives by capturing contextual nuances in sarcastic text. The F-measure (F1 score) is the harmonic mean of precision and recall, F1 = 2PR/(P + R), where P is precision and R is recall. The proposed model outperforms the approach of [19], which used SVM and naïve Bayes and reported F-measures of 61.6% for SVM and 45% for naïve Bayes. Word embedding approaches are widely used and effective for sarcasm detection because they capture a word's meaning in a vector representation. GloVe embeddings are used in [39] with a linear support vector classifier and logistic regression, achieving F-measures of 67% and 69%. A multitask learning approach combining static and contextualised embeddings is proposed in [40]; using CNN and LSTM architectures, it achieves an accuracy of 63% for sarcasm detection and an F-measure of 70% for sentiment analysis. Other approaches report better results, depending on the dataset and methods employed. One approach using RNNs to detect sarcasm in political speeches achieved an accuracy of 95% and an F1 score of 95% for both sarcastic and nonsarcastic content [41]. A deep multitask learning approach for sarcasm detection in sentiment analysis achieved an F1 score of 94% [42].

On the same dataset, the F-measure of the proposed approach using VADER without the sarcasm detection engine is 70.81%. With the sarcasm detection engine incorporated, the F-measure rises to 72.48%, as presented in Figure 14. Although the model had the potential to perform much better, the F-measure improves only slightly, for several reasons.
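
For reference, the balanced F-measure can be computed from binary predictions as in the sketch below, with 1 denoting the positive class. It implements F1 = 2PR/(P + R) and returns 0 when a ratio is undefined; the labels in the usage lines are invented for illustration and are not the paper's data.

```python
from typing import Sequence, Tuple

def precision_recall_f1(y_true: Sequence[int],
                        y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Binary precision, recall, and F1 (harmonic mean of precision and recall)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
p, r, f = precision_recall_f1(y_true, y_pred)
print(f"precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```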

5. Conclusion

First, the model is trained on highly formal data but tested on informal data with irregular language patterns, which decreases accuracy. Second, many ambiguous words are mapped incorrectly in the VADER dictionary, so sentiment polarity must be double-checked before the text is passed to the sarcasm detection engine. Third, there are embedding issues because a word might have several senses. Another reason could be contextual sarcasm, as in the quote, "Oh, and I suppose the apple ate the cheese." The similarity score between "apple" and "cheese" is 0.4119, the highest among the word pairs in the sentence. The most dissimilar pair is "suppose" and "apple," with a similarity score of 0.1414. The sarcasm in this sentence can only be understood in the context of the complete conversation it belongs to. Sometimes words are related only indirectly, which yields a low similarity score and may cause the model to classify nonsarcastic content as sarcastic.
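
The similarity scores quoted above are presumably cosine similarities between word embeddings; the source does not name the measure, so this is an assumption. The sketch computes the cosine similarity of every word pair in a sentence and reports the most and least similar pairs. The 3-dimensional vectors are invented, so the resulting numbers will not match the 0.4119 and 0.1414 reported above.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Pair = Tuple[str, str, float]

def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def extreme_pairs(vectors: Dict[str, List[float]]) -> Tuple[Pair, Pair]:
    """Return the most and least similar word pairs by cosine similarity."""
    scored = [(a, b, cosine(vectors[a], vectors[b])) for a, b in combinations(vectors, 2)]
    return max(scored, key=lambda s: s[2]), min(scored, key=lambda s: s[2])

toy_vectors = {  # invented values, not real GloVe embeddings
    "suppose": [0.9, 0.1, 0.0],
    "apple":   [0.1, 0.8, 0.3],
    "ate":     [0.3, 0.5, 0.6],
    "cheese":  [0.2, 0.7, 0.4],
}
most, least = extreme_pairs(toy_vectors)
print("most similar:", most)
print("least similar:", least)
```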

Nomenclature

- LSTM: long short-term memory
- RNN: recurrent neural network
- URL: uniform resource locator
- SVM: support vector machine
- Word2Vec: word to vector
- HTML: HyperText Markup Language
- TF-IDF: term frequency–inverse document frequency
- CNN: convolutional neural network
- BoW: bag of words
- NN: neural network
- VADER: Valence Aware Dictionary and sEntiment Reasoner
- NLTK: Natural Language Toolkit
- GloVe: Global Vectors for Word Representation
- ReLU: rectified linear unit
- POS: part of speech

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The authors received no specific funding for this work.

Acknowledgments

The authors have nothing to report.

Open Research

Data Availability Statement

The data used in this article are openly available and can be made available upon request.