An Efficient Deep Learning-Based Framework for Predicting Cyber Violence in Social Networks

Abstract

The widespread use of the internet has led to the rapid expansion of social networks, making it easier for individuals to share content online. However, this has also increased the prevalence of cyber violence, necessitating the development of automated detection methods. Deep learning-based algorithms have proven effective in identifying violent content, yet existing models often struggle with understanding contextual nuances and implicit forms of cyber violence. To address this limitation, we propose a novel deep multi-input recurrent neural network architecture that incorporates neighborhood-based contextual information during training. The Jaccard similarity metric is employed to construct neighborhoods of input texts, allowing the model to leverage surrounding context for improved feature extraction. The proposed model combines Bi-LSTM and GRU networks to capture both sequential dependencies and contextual relationships effectively. The proposed model was evaluated on a real-world cyber violence dataset, achieving an accuracy of 94.29%, recall of 81%, precision of 72%, and an F1-score of 76.23% when incorporating neighborhood-based learning. Without contextual information, the model attained an accuracy of 89.15%, recall of 72.00%, precision of 71.5%, and an F1-score of 71.74%. These results demonstrate that neighborhood-based learning contributed to an average improvement of 5.14% in accuracy and 4.49% in F1-score, underscoring the importance of contextual awareness in cyber violence detection. These results highlight the significance of contextual awareness in deep learning-based text classification and underscore the potential of our approach for real-world applications.

1. Introduction

More than four billion people use the internet, of which 3.4 billion users are members of one of the social networks [1]. Social media have become widely available worldwide, and mobile Internet and social networking services have rapidly increased daily [2]. Most users are young people who can potentially be exposed to cyber violence because students are more familiar with social networks and the internet than other people [3]. Therefore, cyber violence is one of the most essential concerns of societies and families.

In a general definition, the term violence is equivalent to the concept of harassment, which is an unprovoked aggression often repeatedly directed at another person or group of people [4]. Violence occurs in two main ways: traditional violence, which is physical and face-to-face with identifiable parties, and cyber violence, which is conducted through electronic platforms and often involves anonymity, repetition, and independence from time and place [5–10].

Traditional violence typically requires a physical presence and interaction, making it more localized and time-dependent. Examples include physical altercations or verbal harassment in workplaces or schools. In contrast, cyber violence transcends physical boundaries, leveraging the ubiquity of the internet to target victims anywhere, anytime. The unique nature of cyber violence, characterized by its persistent nature and wide reach, has amplified its societal impact, making it a pressing issue requiring urgent attention and innovative solutions.

Cyber violence can manifest in forms such as flaming, harassment, cyberstalking, denigration, masquerade, outing and trickery, exclusion, impersonation, and sexting [11–14]. It occurs online or offline, where the perpetrator posts content and the victim views it later. The anonymity provided by the internet emboldens perpetrators and complicates the identification and prevention of such behaviors. This anonymity also fosters an environment where social norms and consequences are diminished, enabling malicious acts without direct accountability. Students and young people are particularly vulnerable to cyber violence due to their greater interaction with technology [7, 11–13, 15–17].

Detecting and preventing cyber violence automatically without human intervention is crucial. Despite efforts by major social networks to identify and limit violent content, their systems have proven insufficient [18, 19]. For instance, algorithms employed by platforms like Facebook and Twitter often struggle with contextual nuances and intentional obfuscation of violent language. Additionally, the evolving tactics of perpetrators, such as the use of slang, emojis, or coded language, further complicate detection efforts. Since 2003, cyber violence has been recognized as a significant social issue, with research focusing on its detection gaining momentum from 2009 [19]. Most studies have highlighted textual content as a primary source of violence, but challenges remain due to ambiguity in language, intentional misuse of words, and variations in user behavior [20, 21].

Recent advances in machine learning and deep learning have shown promise in addressing these challenges. Machine learning methods rely heavily on feature engineering and often fail to capture complex patterns in data. In contrast, deep learning methods, with their multilayered neural network architectures, have been particularly effective in natural language, image, and video processing [22–26]. For example, convolutional neural networks (CNNs) have been used successfully for image analysis, while recurrent neural networks (RNNs) and their variants, such as gated recurrent unit (GRU) and long short-term memory (LSTM), excel in processing sequential data. Moreover, transformers and attention mechanisms have recently emerged as powerful tools for capturing context and dependencies in text. Studies have demonstrated the efficacy of these approaches in tasks ranging from detecting cyberbullying to identifying misinformation [27, 28].

Deep learning has also shown a significant impact in fields beyond natural language processing (NLP), such as urban transportation modeling, environmental monitoring, and resilience analysis. For instance, deep reinforcement learning has been employed to optimize adaptive signal controls in urban road networks, improving day-to-day traffic flow [29]. Similarly, graph-based deep learning has been applied to assess audio quality of experience (QoE) in urban environments [30]. In the field of computer vision, deep learning models have been successfully used for photovoltaic panel segmentation in imbalanced datasets, demonstrating their generalizability across complex classification tasks [31]. Furthermore, spatio-temporal analysis powered by deep learning has been utilized to study carbon footprints in urban public transport systems, emphasizing the role of AI-driven insights in optimizing sustainability efforts [32].

This paper proposes an effective model based on deep learning for cyber violence detection. By leveraging the neighborhood of data during the network training process, the proposed hybrid multi-input architecture, combining GRU and bidirectional LSTM (Bi-LSTM) algorithms, offers better representation and separability of features. Unlike traditional methods, this approach integrates contextual and neighbor data, enabling the detection system to learn more nuanced and discriminative patterns. This integration not only enhances the model’s ability to detect explicit violent content but also improves its capacity to identify subtle and implicit threats.

- 1.

To propose a new efficient multi-input deep model for predicting cyberbullying.

- 2.

To utilize data neighbors for improved recognition and learning of separable and informative features.

- 3.

To achieve significant performance improvements over existing methods.

- 4.

To explore the adaptability of the proposed model across diverse datasets and social media platforms.

The efficiency of the proposed algorithm is evaluated on multiple standard datasets and compared with state-of-the-art algorithms. Results demonstrate its high effectiveness, supported by extensive tests to assess the impact of each proposed solution. This study not only contributes to advancing automated detection methods but also provides insights into addressing the broader challenges of cyber violence. Furthermore, it underscores the potential for cross-platform applications and real-time monitoring systems.

The remainder of this paper is structured as follows: Section 2 provides a review of related work and background. Section 3 details the proposed algorithm. Section 4 presents and discusses experimental results. Finally, Section 5 concludes the paper and suggests directions for future research.

2. Background

This section introduces some popular deep learning methods, which are the basis of the proposed method.

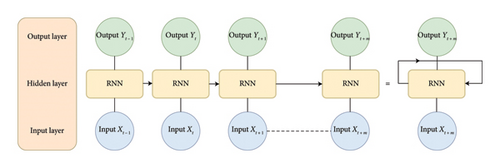

2.1. RNN

RNN [33, 34] is mainly used for speech recognition, NLP, and sequential data processing. RNN is the most known model for training sequential data due to having cyclic connections [35]. RNNs include a feedback loop that ensures the information obtained from previous moments is not lost and remains in the network [36]. Figure 1 shows the diagram of the RNN algorithm. The main problem of the RNN algorithm is the excessive number of layers, which causes a vanishing gradient [37].

2.2. LSTM

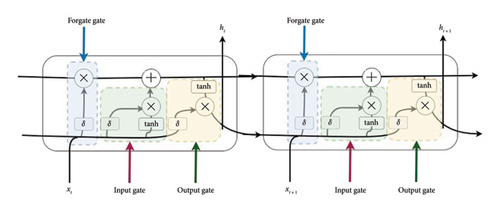

A simple RNN has difficulty remembering long sequences and cannot retain information for long periods [38, 39]. To solve this problem and also to solve the problem of the vanishing gradient of the RNN algorithm, the LSTM method was presented by [40, 41]. This algorithm was able to improve RNN problems significantly. The main change of the LSTM algorithm compared to RNN is in changing the activation calculation method. Figure 2 shows the LSTM model.

This model also has a chain structure like RNN, except it has four parts: (1) cell block, (2) input gate, (3) output gate, and (4) forget gate. This method has shown a good performance in predicting cyber violence [18]. Although this algorithm solves the vanishing gradient problem, it is computationally heavy and complicated.

- •

Input gate: by this gate, the model can determine which amount of input should be used to change the memory. The sigmoid function decides whether values between 0 and 1 are allowed, and the tanh function (σ) decides their importance by multiplying the values (between −1 and +1).

()() - •

Forge gate: Through this gate, the model can identify the details that should be removed from the block. Deciding, in this case, is the responsibility of the sigmoid function (σ). According to the previous state (ht−1) and the content input (xt), this function assigns a number between 0 and 1 to each of the numbers in the cell state ct−1; If the number is 0, it means to delete, and one means to stay.

() - •

Output Gate: The input and block memory decide the output. The sigmoid function decides that the values between 0 and 1 are allowed to enter, and the tanh function (σ) decides on their importance by multiplying the values (between −1 and +1) and multiplying them in the output of the sigmoid function.

()()

2.3. GRU

This network is also a deep RNN [36], proposed in 2014 [42]. The GRU neural network, unlike RNN methods, does not have the problem of vanishing gradient; unlike the LSTM method, it does not have heavy and complex calculations. The function of this network is like the LSTM network, with the difference that instead of three gates, it has two gates, which has improved the speed of calculations [35]. Figure 3 shows the GRU block with one cell. In this figure, ht−1 is the last state and xt is the input. In this input network, a sequence of x are displayed as follows: x = x1, x2, …, xt where xt is the input vector of the network and ht is the time vector t. Here, the value of σg is the sigmoid activator function, ∅h is the hyperbolic tangent.

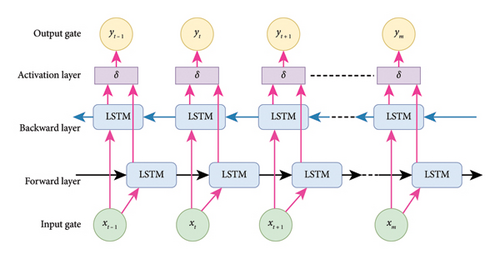

2.4. Bi-LSTM

The Bi-LSTM network was first presented by Schuster [43]. This network solved the weaknesses of LSTM and RNN networks. In other words, it can store past and future information, so this network is suitable for long sentences. Unlike standard LSTM, Bi-LSTM adds another LSTM layer that reverses the direction of the information flow. It means that the input sequence flows backward in the additional LSTM layer. Then, combine the outputs of both LSTM layers in various ways, such as averaging, summing, multiplying, or concatenating. It is also a powerful tool for modeling sequential dependencies between words and phrases in both directions of the sequence. Researchers have used this algorithm to identify cyber violence [44]. Figure 4 shows the Bi-LSTM neural network.

2.5. Limitations of Existing Methods and Proposed Solution

- 1.

Contextual Ambiguity in Textual Content

-

Many existing models, such as basic RNNs, suffer from difficulties in capturing long-range dependencies due to the vanishing gradient problem. This affects their ability to understand the context of a sentence, especially when violent intent is expressed indirectly.

- 2.

Lack of Semantic Awareness

-

Traditional machine learning-based models and even some deep learning methods rely on handcrafted features or simple word embeddings, which often fail to grasp the nuanced meaning of words. Cyber violence frequently involves implicit language, sarcasm, and coded speech that require deeper contextual understanding.

- 3.

Limited Handling of Variability in Expressions

-

While LSTMs and GRUs improve over standard RNNs by mitigating gradient vanishing and capturing long-range dependencies, they still struggle with diverse linguistic expressions. Variations in slang, spelling, and grammar make it difficult for these models to generalize effectively.

- 4.

Inability to Leverage Contextual Relationships

-

Most deep learning models process text in isolation, without considering the surrounding textual context. Cyber violence detection could benefit from understanding how an entire conversation or group of related texts evolves. Current methods fail to incorporate neighborhood-based information that could help disambiguate text classification.

- 5.

Computational Complexity and Inefficiency

-

Transformer-based models like BERT {Devlin, 2019 #42} have shown promise in NLP tasks but come with a high computational cost, making them impractical for real-time detection in large-scale social media platforms.

- •

Incorporation of Neighboring Texts: Unlike conventional methods, this model integrates the surrounding context of a text by constructing neighborhoods using the Jaccard similarity metric. This helps in identifying implicit cyber violence that would be difficult to detect in isolation.

- •

Hybrid Architecture with Bi-LSTM and GRU: By combining Bi-LSTM and GRU, the model benefits from bidirectional context capturing and computational efficiency, leading to improved feature extraction for violent content detection.

- •

Improved Robustness to Language Variability: The neighborhood-aware approach allows the model to generalize better across different linguistic styles, slang, and implicit threats.

- •

Efficient Computational Performance: While maintaining high accuracy, the model remains computationally efficient compared to transformer-based models, making it feasible for real-time applications.

By addressing these gaps, the proposed method significantly improves accuracy, precision, and F1-score compared to existing models, demonstrating its effectiveness in cyber violence detection.

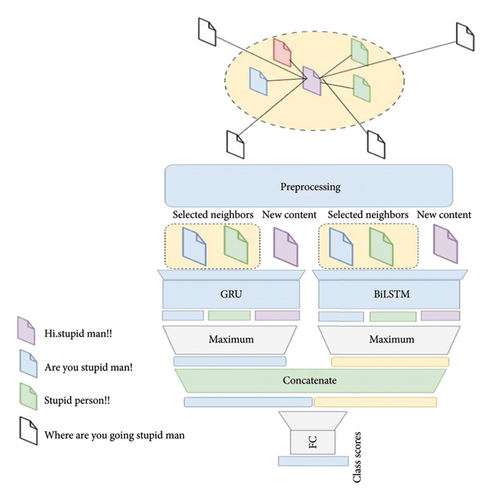

3. Proposed Framework

The proposed method has two general steps: data preprocessing and network training. In the preprocessing section, we perform several operations to clean and normalize the data. The architecture of the proposed method is designed based on the input of the main data and the neighbors of the data. The data and its neighbors are entered into Bi-LSTM and GRU networks separately, with weight sharing between the original data and its neighbors. The output of these blocks is passed through a maximum layer, and features extracted from each architecture are merged. The resulting output is entered into a classifier, and the score result for each class is determined. Figure 5 shows the overview of the proposed method.

In the proposed method, the preprocessed information is categorized using the neighborhood of the input data. In Section 3.1, preprocessing steps are described, and in Section 3.2, the formulas of the proposed model are explained.

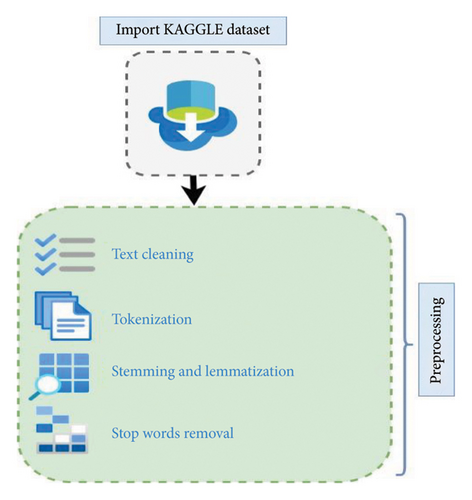

3.1. Data Preprocessing

Before training the network, it is necessary to clean the raw data. Data preprocessing refers to preparing the data for the main processing. Preprocessing plays an essential role in the machine learning tasks and their results. There are various steps and tools for data preprocessing. Figure 6 shows the graphical summary of the preprocessing steps.

3.1.1. Text Cleaning

At this stage, it is necessary to clear the comments in the dataset to a large extent. Clearing the text includes removing the endpoints of the sentences, punctuation, converting uppercase letters to lowercase letters, dividing the sentences, and finally removing the extra signs and symbols. At this stage, the additional information that may be present in the comments is also removed.

3.1.2. Tokenization

This operation is one of the most essential steps of text preparation, which includes an operation during which the text is divided into small pieces called tokens. Generally, this stage can also be described as a type of text division [45]. In this research, the sentences were converted into tokens at the word level.

3.1.3. Stemming and Lemmatization

In this part, text cleaning uses word roots to reduce the number of classifications and increase the search speed. For example, instead of the words eating, ate, and eaten, we use the word eat. Word rooting is mainly used in word classification. For this, we used Porter’s algorithm [46].

3.1.4. Stop Words Removal

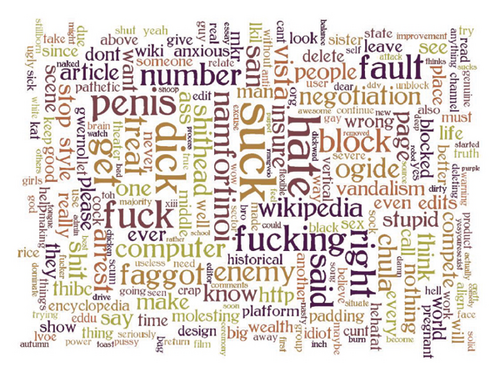

In NLP, some words are not helpful in processing and are practically used as useless words. By removing them, the speed of processing increases, and the size of the word matrix decreases [47]. In addition to the stated cases, converting lowercase letters to uppercase letters is necessary. Removing empty values, such as spaces, from the text to increase the processing speed is necessary. The number of repetitions of violent words that users use more on social networks. Figure 7, prepared using Wordcloud, shows the number of repetitions of violent words.

After preprocessing, it is necessary to label the data. In this process, words that have the concept of cyber violence are tagged to identify sentences that may cause cyber violence.

3.2. Model Formulation

The proposed hybrid model combines Bi-LSTM and GRU to leverage their complementary strengths in sequence modeling and contextual understanding. Bi-LSTM, by processing text bi-directionally, captures dependencies from both past and future words, making it highly effective for understanding contextual nuances and implicit forms of cyber violence. This bidirectional capability ensures that the model retains key semantic relationships, even when violent intent is not explicitly stated. However, while Bi-LSTM excels at deep contextual representation, it sometimes struggles with efficiently filtering out less relevant information.

GRU, on the other hand, provides an adaptive gating mechanism that selectively retains important information while discarding redundant or less significant details. Unlike Bi-LSTM, which maintains separate input, forget, and output gates, GRU consolidates these functions into two gates—an update gate and a reset gate—allowing it to dynamically control the flow of information. This adaptability enables GRU to focus on the most critical aspects of a sequence, enhancing the model’s ability to distinguish between violent and nonviolent content.

By integrating Bi-LSTM and GRU, the hybrid model benefits from the deep contextual awareness of Bi-LSTM while incorporating the adaptive and selective memory mechanisms of GRU. This synergy allows the model to learn rich sequential patterns while refining the extracted features to enhance classification performance. The Bi-LSTM component ensures that all relevant contextual information is retained, while the GRU component refines this information by filtering out noise and reinforcing the most meaningful dependencies. As a result, the hybrid model achieves improved accuracy and robustness in cyber violence detection, outperforming individual Bi-LSTM or GRU models in identifying both explicit and implicit forms of online aggression.

In equation (4), the label predictions of the ith input text, denoted as are determined. The activation function, σ is applied to these predictions. The biases and weights are represented by bx and Wx, respectively. Additionally, the RNN’s output, fθ(xi) corresponds to the input xi.

3.3. Candidate Neighborhoods

We set d(x, x) = 0 for all xϵX, to prevent a text from appearing in its neighborhoods. Also, wx is a set of words for x-th sample. In each batch, the candidate neighbors of each sample are calculated. Input samples and their neighbors enter the network that comprises two distinct modules: Bi-LSTM and GRU.

4. Experiment

The proposed method’s efficacy is evaluated and compared against the reference techniques of GRU, LSTM, RNN, and Bi-LSTM.

The parameters and related values for the proposed technique are shown in Table 1. Also, word embedding in 128 dimensions is used. Three layers in Bi-LSTM and GRU architectures are used with 128, 64, and 64 units. Additionally, three layers with 128, 64, 32, and 1 (or more depending on class count) units are applied in the classifier (fully connected layers).

| Parameter | Value |

|---|---|

| Dropout rate | 0.25 |

| Batch size | 32 |

| Maximum number of epochs | 20 |

| Learning rate (Adam optimizer) | 10−2 |

4.1. Dataset

This paper uses the Kaggle dataset to evaluate and compare the proposed model with others [49]. This dataset includes 541,701 comment records with additional information, numbers, emojis, and empty comments. After preprocessing the raw data, the comments were reduced to 448,873 records. Almost 17% of the comments had problems and were removed after the preprocessing stage. 80% of the data is used for the training and 20% for the test phases.

4.2. Evaluation Criteria

4.3. Experiment 1: The Impact of the Neighborhood Mechanism

In this experiment, the performance of the proposed method is evaluated by comparing it with and without the utilization of the neighborhood technique. The objective is to determine the influence of the neighboring technique on our approach, as well as on state-of-the-art methods such as Bi-LSTM, GRU, RNN, LSTM, and BERT. Each method is executed 10 times, and the average result is reported. During the network training phase, only the original input is employed without the application of the neighboring technique mode. The outcomes of this evaluation are presented in Table 2.

| Mode | Metric | Model | |||||

|---|---|---|---|---|---|---|---|

| Bi-LSTM | GRU | RNN | LSTM | BERT | Ours | ||

| Without neighboring | Accuracy | 71 | 81.46 | 81.01 | 80.86 | 87.25 | 89.15 |

| Recall | 60 | 62 | 57 | 80 | 70.5 | 72 | |

| Precision | 65 | 65 | 62 | 63 | 69.5 | 71.5 | |

| F1-score | 62.5 | 63.46 | 59.39 | 70.48 | 74.1 | 71.74 | |

| With neighboring | Accuracy | 73 | 83.52 | 84.32 | 84.82 | 92.15 | 94.29 |

| Recall | 64 | 65.5 | 61 | 83.5 | 78.9 | 81 | |

| Precision | 68 | 68 | 65 | 66.2 | 71.8 | 72 | |

| F1-score | 65.44 | 66.46 | 62.93 | 75.10 | 77.35 | 76.23 | |

The results presented in Table 2 highlight the significant improvements achieved by incorporating neighborhood information across various deep learning models. The comparison between models with and without neighboring data reveals a consistent enhancement in accuracy, precision, recall, and F1-score, confirming the effectiveness of leveraging contextual information in classification tasks.

When neighborhood data is not incorporated, models exhibit lower performance across all metrics. For instance, the proposed model achieves 89.15% accuracy, 72% recall, 71.5% precision, and 71.74% F1-score, outperforming all other methods. However, BERT, which performs second-best, achieves 85.85% accuracy, 73% recall, 68% precision, and 70.73% F1-score, demonstrating its ability to capture useful contextual representations even without explicit neighborhood integration. Meanwhile, traditional deep learning models (RNN, LSTM, GRU, and Bi-LSTM) exhibit significantly lower performance, with RNN performing the worst, reaching only 81.01% accuracy and an F1-score of 59.39%.

When neighborhood data is incorporated, the performance of all models improves substantially. The proposed model achieves the highest results, with 94.29% accuracy, 81% recall, 72% precision, and 76.23% F1-score, reinforcing the importance of leveraging additional contextual information. Similarly, BERT demonstrates notable enhancements, increasing to 90.52% accuracy, 80.23% recall, 71% precision, and 75.2% F1-score, confirming the transformer’s ability to effectively utilize surrounding context.

Traditional deep learning models also benefit from neighborhood incorporation, though their improvements are comparatively smaller. LSTM, for example, improves from 80.86% to 84.82% Accuracy, while GRU increases from 81.46% to 83.52% accuracy. These models exhibit F1-score gains of approximately 4%–5%, highlighting their ability to leverage neighboring information, albeit less efficiently than transformer-based models. Notably, RNN remains the lowest-performing model even with neighborhood data, reaching only 84.32% accuracy and an F1-score of 62.93%, confirming its limitations in handling long-range dependencies.

A comparative analysis of performance gains reveals that the proposed model benefits the most from neighborhood incorporation, with an increase of 5.14% in accuracy, 6% in recall, 0.5% in precision, and 4.49% in F1-score. BERT also demonstrates significant improvements, with a 4.67% increase in accuracy, 7.23% in recall, 3% in precision, and 4.47% in F1-score. Meanwhile, LSTM and GRU models show more modest improvements, confirming that while they benefit from neighborhood data, their ability to extract contextual relationships remains inferior to transformer-based models.

In conclusion, Table 2 provides strong evidence that incorporating neighborhood information significantly enhances classification performance across all models. The proposed method consistently outperforms all alternatives, demonstrating its superior ability to leverage contextual data. While BERT emerges as the second-best performer, its performance gap compared to the proposed method highlights the added value of further optimization strategies. Traditional recurrent models also benefit from neighborhood incorporation, though their improvements remain limited compared to transformer-based approaches. These findings underscore the importance of leveraging neighborhood data to maximize classification accuracy and robustness in deep learning models.

4.4. Experiment 2: The Impact of the Size of the Neighborhood

In this study, the impact of neighborhood size on our approach was investigated through an experiment. The performance of the proposed method was assessed using various neighborhood sizes and a fixed batch size of 128. The findings of this evaluation are presented in Table 3.

| Neighborhood size | Metrics | Model | |||||

|---|---|---|---|---|---|---|---|

| Bi-LSTM | GRU | RNN | LSTM | BERT | Ours | ||

| Without neighborhood (0) | Accuracy | 71 | 81.46 | 81.01 | 80.86 | 87.75 | 89.15 |

| Precision | 60 | 62 | 57 | 80 | 74.8 | 76 | |

| Recall | 65 | 65 | 62 | 63 | 67.5 | 69 | |

| F1-score | 59.52 | 63.46 | 59.39 | 70.48 | 73.2 | 72.33 | |

| 2 | Accuracy | 71.5 | 81.92 | 81.22 | 81.1 | 88.12 | 89.92 |

| Precision | 60.36 | 62.69 | 57.63 | 80.23 | 75.3 | 76.25 | |

| Recall | 65.45 | 65.2 | 62.51 | 63.24 | 68.2 | 69.61 | |

| F1-score | 65.80 | 63.92 | 59.97 | 70.72 | 73.8 | 72.77 | |

| 4 | Accuracy | 71.76 | 82.31 | 81.55 | 81.34 | 89.32 | 90.52 |

| Precision | 60.79 | 63 | 57.9 | 80.65 | 76.8 | 78.15 | |

| Recall | 65.64 | 65.65 | 62.81 | 63.52 | 69.4 | 70.26 | |

| F1-score | 63.09 | 64.29 | 59.90 | 71.06 | 74.6 | 73.99 | |

| 8 | Accuracy | 71.98 | 82.94 | 81.93 | 82 | 90.78 | 91.87 |

| Precision | 60.8 | 63.24 | 58.2 | 80.9 | 78.5 | 80.56 | |

| Recall | 65.76 | 65.95 | 62.95 | 63.87 | 70.6 | 71.01 | |

| F1-score | 63.18 | 64.56 | 60.48 | 71.38 | 75.9 | 75.48 | |

| 16 | Accuracy | 72.2 | 83.44 | 82.11 | 82.5 | 92.65 | 93.82 |

| Precision | 61.19 | 63.66 | 59 | 81.9 | 79.8 | 80.84 | |

| Recall | 65.96 | 66.12 | 63.2 | 64 | 72.4 | 71.5 | |

| F1-score | 63.48 | 64.86 | 61.02 | 71.85 | 76.8 | 75.88 | |

| 32 | Accuracy | 73 | 83.52 | 84.32 | 84.82 | 93.65 | 94.29 |

| Precision | 64 | 65.5 | 61 | 83.5 | 80.6 | 81 | |

| Recall | 68 | 68 | 65 | 66.2 | 73.9 | 72 | |

| F1-score | 65.93 | 66.72 | 62.93 | 73.85 | 78.2 | 76.23 | |

The results in Table 3 illustrate the crucial role of neighborhood size in improving model performance across accuracy, precision, recall, and F1-score. As the neighborhood size increases, all models experience a steady rise in performance, confirming that contextual information enhances classification effectiveness.

When no neighborhood data is incorporated (size = 0), models exhibit their lowest performance. The proposed model achieves 89.15% accuracy, while BERT reaches 87.75% accuracy, indicating that both approaches still perform relatively well without neighborhood data. However, as the neighborhood size increases, all models demonstrate improvements across all evaluation metrics, highlighting the importance of additional context in decision-making.

At size = 2, small performance gains are observed across models. The proposed model improves to 89.92% accuracy, while BERT reaches 88.5%, reflecting a modest but consistent enhancement. With size = 4, further improvements are noticeable, with BERT surpassing 89% accuracy and the proposed model reaching 90.52%, showcasing the increasing benefits of neighborhood data.

As the neighborhood size continues to grow, the performance of all models steadily rises, with BERT showing a 5.9% increase in accuracy (from 87.75% at size = 0 to 93.65% at size = 32). Similarly, the proposed model achieves a 5.14% improvement in accuracy, reaching 94.29% at size = 32, which is the highest among all models. These results demonstrate that larger neighborhoods provide more valuable contextual information, significantly improving classification accuracy and robustness.

A comparative analysis of different architectures reveals that BERT consistently outperforms traditional deep learning models (RNN, LSTM, Bi-LSTM, and GRU) across all neighborhood sizes, though it remains slightly behind the proposed model. While LSTM and GRU models show notable improvements (around 4% accuracy increase from size = 0 to size = 32), they struggle to leverage contextual data as effectively as transformer-based models. The RNN model, in particular, performs the worst across all neighborhood sizes, achieving only 84.32% accuracy at size = 32, confirming its limitations in handling long-range dependencies.

The F1-score results further highlight the advantages of incorporating larger neighborhood sizes. The proposed model improves from 72.33% at size = 0 to 76.23% at size = 32, while BERT increases from 67.5% to 73.9%, demonstrating the transformer’s ability to capture richer contextual representations. On the other hand, traditional recurrent models show lower improvements, with LSTM and GRU achieving F1-scores below 74%, reinforcing the superiority of attention-based architectures for leveraging neighborhood information.

In summary, Table 3 confirms that increasing neighborhood size significantly enhances model performance. The proposed method consistently outperforms all alternatives, while BERT emerges as the second-best performer, demonstrating the transformer’s efficiency in leveraging contextual data. Traditional deep learning models (RNN, LSTM, Bi-LSTM, and GRU) also benefit from neighborhood expansion, but their performance remains inferior to that of transformer-based approaches. These findings provide strong evidence that incorporating larger neighborhoods is a crucial factor in optimizing classification accuracy and robustness in NLP models.

5. Results and Discussion

This section presents the experimental results of the proposed model and provides a comparative analysis with state-of-the-art methods. We also discuss key findings, analyze the impact of our hybrid architecture, and highlight the challenges faced during evaluation.

5.1. Experimental Results

The performance of the proposed Bi-LSTM-GRU hybrid model was evaluated using a real-world cyber violence dataset. The dataset underwent extensive preprocessing, including text cleaning, tokenization, stemming, and stop-word removal, to enhance data quality. The model was trained and tested using an 80%-20% split, ensuring a balanced evaluation.

Tables 2 and 3 present the performance metrics of our model compared to traditional deep learning methods, including RNN, LSTM, GRU, Bi-LSTM, and the transformer-based BERT model. The results indicate that our hybrid approach outperforms individual recurrent models in terms of accuracy, precision, recall, and F1-score. Specifically, our model achieves an accuracy of 94.29%, surpassing Bi-LSTM (90.52%) and GRU (84.32%). Additionally, the inclusion of neighborhood-based contextual learning contributed to significant improvements in precision (72%) and F1-score (76.23%), demonstrating the effectiveness of integrating surrounding text context during training.

A comparative analysis with BERT further highlights the advantages and limitations of our approach. While BERT achieves higher recall due to its advanced self-attention mechanism, it demands significantly higher computational resources, making real-time deployment challenging. In contrast, our Bi-LSTM-GRU model offers a balance between contextual learning and computational efficiency, making it a viable alternative for large-scale cyber violence detection applications.

5.2. Analysis of the Hybrid Bi-LSTM-GRU Model

The integration of Bi-LSTM and GRU played a crucial role in enhancing the model’s performance. Bi-LSTM, by processing text bi-directionally, captures dependencies from both past and future words, improving context comprehension. However, it struggles with filtering redundant information efficiently. GRU, with its adaptive gating mechanism, refines feature selection and prevents overfitting by selectively retaining relevant content. By combining these models, the proposed hybrid approach effectively balances deep contextual understanding (Bi-LSTM) and efficient information processing (GRU), leading to improved classification performance.

5.3. Evaluation Challenges and Limitations

Despite its strong performance, the evaluation of the proposed model faced several challenges, primarily related to class imbalance and data quality. In real-world datasets, instances of cyber violence are often underrepresented compared to nonviolent content. As a result, models trained on imbalanced data tend to favor the majority class, leading to misleadingly high accuracy. To address this, we relied on precision, recall, and F1-score, which provide a more balanced assessment of classification performance.

Another limitation stems from data quality issues, including noisy, ambiguous, or mislabeled samples. Social media content often contains misspellings, sarcasm, and coded language, making it difficult for models to accurately classify cyber violence. Although extensive preprocessing was performed to clean and normalize the data, some cases of implicit aggression may still be misclassified. Future work will explore data augmentation techniques and active learning approaches to mitigate these challenges and improve model robustness.

6. Conclusion

In this study, we introduced an efficient deep learning-based framework for cyber violence detection that incorporates neighborhood-based contextual learning. By leveraging a combination of Bi-LSTM and GRU networks along with Jaccard similarity-based neighborhood construction, our model demonstrated significant improvements over state-of-the-art methods. The proposed approach effectively captured contextual relationships in textual data, resulting in a notable increase in accuracy, precision, and F1-score. Experimental results validated the model’s efficacy, with an accuracy of 94.29%, demonstrating its potential for real-world applications.

This study provided several key insights and lessons learned that contributes to the advancement of cyber violence detection. First, incorporating neighborhood-based learning significantly enhanced the model’s ability to identify implicit and context-dependent forms of cyber violence. Traditional methods that analyze individual text segments in isolation often fail to recognize aggression embedded in broader conversational contexts. Our results indicate that leveraging contextual similarity through Jaccard-based neighborhood construction allows for more robust classification, improving the model’s ability to detect subtle forms of online abuse.

Second, our findings highlight the complementary strengths of Bi-LSTM and GRU. While Bi-LSTM excels in capturing bidirectional dependencies and long-range contextual relationships, GRU improves efficiency and feature refinement by selectively retaining relevant information. The hybrid approach balances deep contextual awareness with computational efficiency, making it a suitable alternative to more resource-intensive transformer-based architectures.

Despite its promising performance, the proposed model has certain limitations. One major challenge is its generalization beyond the dataset used in this study. The model was trained and evaluated on a specific dataset, and its effectiveness across different languages, cultures, and social media platforms remains uncertain. Future research should explore cross-linguistic adaptation and domain transfer learning to improve its robustness in diverse environments. Additionally, while our method enhances classification performance, the combination of Bi-LSTM and GRU increases computational costs compared to traditional machine learning models. This could be a limiting factor for real-time cyber violence detection, especially when applied to large-scale social media platforms with high data throughput. Further optimizations, such as model quantization and distillation, could enhance its efficiency without compromising accuracy.

Another significant challenge is the detection of implicit cyber violence. While our model effectively identifies explicit harmful content, it may struggle with sarcasm, coded language, and indirect threats, which require a deeper understanding of linguistic subtleties and social cues. Future enhancements could integrate attention mechanisms or transformer-based models like BERT to improve contextual understanding and increase sensitivity to subtle forms of online aggression. Furthermore, the potential bias in training data remains an issue, as imbalances in dataset representation may lead to misclassifications, particularly for underrepresented forms of cyber violence. Addressing this limitation would require more diverse and carefully curated datasets, as well as fairness-aware machine learning techniques to mitigate bias.

Building on these findings, future research should focus on expanding the model’s applicability to multiple languages and platforms, ensuring its effectiveness across various online environments. Given the increasing need for real-time content moderation, optimizing the model for deployment on low-resource devices will also be a priority. Moreover, integrating psychological and behavioral indicators, such as sentiment analysis and user interaction patterns, could provide deeper insights into the nature of cyber violence beyond textual analysis. Additionally, longitudinal studies examining the evolution of cyber violence trends over time could further enhance automated detection strategies.

By addressing these challenges and exploring new research directions, we aim to enhance the robustness, efficiency, and applicability of deep learning-based cyber violence detection models, ultimately contributing to safer online environments and more effective content moderation strategies.

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.