Fine-Tuning BERT Models for Multiclass Amharic News Document Categorization

Abstract

Bidirectional Encoder Representations from Transformers (BERT) models are increasingly employed in natural language processing (NLP) systems, predominantly for English and other European languages. However, because of the language’s complex morphology and the scarcity of models and resources, BERT is not widely used for Amharic text processing and other Amharic NLP applications. This paper describes the fine-tuning of a pretrained BERT model to classify Amharic news documents into news categories. We modified and retrained the model using a custom news dataset divided into seven categories. The dataset comprises 2181 distinct Amharic news articles, each consisting of a title, a summary lead, and a main body. In our experiment, the fine-tuned BERT model achieved 88% accuracy, 88% precision, 87.61% recall, and an F1-score of 87.59%. We also compared the fine-tuned model against three baselines, bag-of-words with MLP, Word2Vec with MLP, and the fastText classifier, using the identical dataset and preprocessing module. Our model outperformed these baselines in accuracy by 6.3%, 14%, and 8%, respectively. These results indicate that the fine-tuned BERT model is a promising approach to categorizing Amharic news documents and that it surpasses conventional methods. Future research could explore further fine-tuning techniques and larger datasets to enhance performance.

1. Introduction

Document classification is the process of arranging text materials into different groups [1]. It is a sort of supervised learning in which an algorithm is trained to recognize patterns in labeled data and then use that knowledge to assign labels to new text documents [2]. The model is trained until it understands the underlying patterns and correlations between the input data and the output label. It has a wide range of applications, from organizing and filtering news items to sorting emails, identifying spam, and even evaluating public sentiment [3]. However, in the Big Data Era, where text data are generated every second, manually classifying text is nearly impossible [4]. As a result, the demand for automatic text categorization and natural language processing (NLP) technology is rapidly expanding for languages like Amharic and other Ethiopian languages with complex grammatical structures but limited digital resources.

To perform text classification, we need to transform words into numerical representations. This is performed via word embedding techniques, which are divided into two types: static embedding and contextualized embedding [5]. fastText, word2vec, and GloVe are all examples of static word embedding models [6]. On the other hand, embedding from language models (ELMo), generative pretrained transformer (GPT), and bidirectional encoder representation from transformer (BERT) are known for their ability to construct context-aware word embeddings using deep learning techniques, mainly transformer models [7]. Understanding the context of words in a sentence or document is essential for NLP tasks. A word’s meaning can vary depending on its surroundings, and context-based embedding models are designed to capture this distinction by generating different representations for the same word based on its context.

Static word embeddings are a form of NLP model that produces fixed-dimensional representations of words based on co-occurrence statistics in a given corpus [8]. Unlike contextual embeddings, static embeddings do not capture meanings that vary with context and do not adapt to changes in word semantics depending on their surroundings. Rather, they offer a uniform, context-independent representation of each word in the vocabulary [9]. Static embedding models generate vector representations for both single words and character sequences (n-grams). Word2vec and GloVe generate word-level representations, whereas fastText goes a step further by producing representations based on n-grams. This enables fastText to overcome a major issue with word2vec and GloVe: the inability to handle words not seen during training (out-of-vocabulary words) [10].

Context-based language models such as BERT and GPT are developed to maintain the contextual meaning of words. BERT is a game-changing language model created by Google in 2018 [11]. Because of its ability to capture bidirectional contexts and construct richly contextualized word representations, it represents a big leap in NLP, and it has become a core model for several NLP applications [12]. When compared to standard unidirectional models, BERT captures the contextual information of words by evaluating the whole context of a word in both directions, resulting in a more nuanced understanding of linguistic settings. In addition, BERT is designed to be fine-tuned to perform specific NLP tasks such as text categorization, named entity recognition, and question-answering [13].

Knowing the context of a word in a sentence or document is crucial for automatic document categorization, as it is for other NLP applications [14]. Consequently, Amharic multiclass text categorization calls for a context-based language model. Several researchers have fine-tuned BERT for Amharic sentiment analysis, named entity recognition, and hate speech detection in social media [15, 16]. In this study, by contrast, we fine-tuned a pretrained BERT model for multiclass Amharic text categorization. The custom dataset was drawn from the Ethiopian News Agency (ENA), Fana Broadcasting Corporate (FBC), and Walta Information Center (WIC). Researchers can use the publicly available dataset and trained model for further improvement or fine-tuning.

Amharic is a Semitic language that belongs to the Afroasiatic language family. Its alphabet is based on the Ge’ez script, which is the ancient religious writing system used by Ethiopian Christians [17]. Amharic is a language with a complex structure, characterized by its distinctive phonetic, phonological, and morphological features [18]. It comprises 38 unique phonemes, including 31 consonants and 7 vowels, along with a minimum of 234 different consonant-vowel syllables [19]. Amharic is considered an under-resourced language due to the scarcity of accessible NLP tools and resources, including pretrained language models and extensive text collections.

Tokenizing and normalizing Amharic text documents are difficult due to the language’s complexity. These techniques are essential for correctly processing and interpreting linguistic data. Tokenization involves breaking down text into smaller units, while normalization ensures consistent word and phrase representation. To address the tokenization issue, we employed a rule-based word segmentation approach to process the incoming Amharic document. For normalization, we replaced variant Amharic characters with standard equivalents listed in a normalization table we prepared. This table pairs characters that share the same sound and meaning. We chose the standard form in the first column based on its frequency of appearance in Amharic documents and replaced the less common variants with it.

Fine-tuning a pretrained language model is an effective method for creating classifiers in low-resource languages. It decreases data needs, improves performance with limited data, establishes a solid foundation, speeds convergence, lowers computing costs, shortens development time, reduces data bias, and improves generalization. Pretrained language models are trained on a variety of datasets, decreasing biases and enhancing generalization to new data [20].

To the best of our knowledge, no one has yet adapted general-purpose language models for Amharic multiclass news document classification. In this study, we fine-tuned the BERT language model for this specific task by adding a classifier on top of the pretrained model. We then compared its performance against other text representation approaches, namely bag-of-words (BoW), Word2Vec, and fastText. In addition, we will publicly share a pretrained language model specifically for Amharic, along with a multiclass news dataset. This will establish a strong base for researchers and developers to create more sophisticated NLP applications in the Amharic language.

This study makes the following key contributions: (1) It offers a versatile dataset for NLP tasks related to Amharic news. (2) It supplies a pretrained model that can be adapted for future text mining applications by fine-tuning the BERT model in Amharic. (3) It evaluates the effectiveness of context-based language modeling against BoW, word2vec, and the fastText text classifier model for classifying Amharic text documents.

The paper is organized as follows: Section 2 discusses existing research on the study’s issue. Section 3 describes the data, models, and specific processes used in the research. Section 4 describes the experiments carried out, the results obtained, and their importance. Section 5 provides results and discussion. Section 6 summarizes the study’s main contributions, findings, and possible future directions.

2. Related Works

NLP applications often rely on pretrained text representations, but Amharic, a morphologically complex and under-resourced language, lacks such resources [16]. The study in [16] investigates the use of word embeddings and BERT models for learning Amharic text representations. It explores commonly used methods like word2vec, GloVe, and fastText, as well as the BERT model. The study also examines the performance of query expansion using word embeddings and the use of a pretrained Amharic BERT model for masked language modeling, next-sentence prediction, and text classification tasks. Its experimental results show that word-based query expansion and language modeling perform better than stem-based and root-based text representations.

Yimam et al. [15] developed and improved two existing semantic models for Amharic, a complex Ethio-Semitic language. They train seven new models, which include Word2Vec embeddings, distributional thesaurus (DT) embeddings, BERT-like contextual embeddings, and DT embeddings produced by network embedding techniques. When evaluated on NER and POS tagging tasks, these newly developed models outperformed several state-of-the-art models, including pretrained multilingual models like FLAIR and RoBERTa, as well as traditional word embedding models like Word2Vec. This demonstrates the effectiveness of the proposed models in capturing the nuances of the Amharic language. The researchers have openly shared their models, promoted collaboration, and stimulated further research in Amharic NLP. By providing these resources, they empower other researchers and developers to advance their work and develop cutting-edge NLP applications designed for the Amharic language.

Tezgider, Yildiz, and Aydin [21] introduced a bidirectional transformer (bitransformer) using two transformer encoder blocks for text data processing. Four models, including long short-term memory, attention, transformer, and bitransformer, were tested on a large Turkish text dataset. The experimental results demonstrated that the transformer-based models, including the proposed bitransformer, significantly outperformed traditional deep learning models like LSTM. This highlights the advantage of attention mechanisms and the bidirectional nature of the bitransformer in capturing long-range dependencies and semantic information within text sequences. The bitransformer excelled in text classification, demonstrating its ability to learn patterns and representations from the data.

Transfer learning is widely recognized for its potential to advance downstream NLP tasks quickly and cost-effectively [22]. The work in [22] develops what it describes as the first BERT model for the Amharic language and reports that it outperforms other pretrained models in sentiment classification tasks. The fine-tuned BERT model achieves 95% accuracy despite an insufficiently labeled corpus and performs best on Amharic Facebook comments.

Endalie and Haile [23] introduced a deep learning model for Amharic news document classification by integrating a convolutional neural network (CNN) with fastText word embedding. This model utilizes fastText to generate text vectors that capture the semantic meaning of the texts, addressing the limitations of traditional methods. These word vectors are then fed into the embedding layer of a CNN, which automatically extracts relevant features from the text. The CNN architecture enables the model to learn hierarchical representations of the text, capturing both local and global patterns. The proposed model was evaluated on a diverse dataset containing six news categories. Impressively, it achieved a high classification accuracy of 93.79%, surpassing traditional methods and demonstrating its effectiveness in accurately categorizing Amharic news documents.

Although pretrained word embedding models have advanced a variety of NLP applications, they ignore context and meaning within the text [24]. The authors of [24] fine-tuned the pretrained AraBERT model for text classification, adapting it to the specific nuances of the Arabic language and task. They further enhanced the model by combining it with different classifiers. The fine-tuned AraBERT model significantly outperformed other state-of-the-art models, achieving an F1-score and accuracy of up to 99%. This highlights the potential of pretrained language models like BERT in addressing challenging NLP tasks, even for languages with limited resources like Arabic.

The work of [25] introduces a BERT-based text classification model, ARABERT4TWC, for classifying the Arabic tweets of users into different categories. Building on this foundation, their model incorporates custom dense layers to fine-tune the network for the task of Arabic tweet classification. A multiclass classification layer is strategically positioned atop the BERT encoder to enable accurate predictions across various categories. To evaluate the effectiveness of ARABERT4TWC, they conduct rigorous experiments on five publicly accessible Arabic tweet datasets. They compare their model’s performance against a diverse range of state-of-the-art deep learning models, including other BERT-based models and traditional machine learning techniques. Through extensive experimentation, the results demonstrate that ARABERT4TWC consistently outperforms these competing models, highlighting its superior ability to capture the nuances and complexities of Arabic text.

The work of [26] aims to enhance text classification accuracy on the AG news dataset. It explores three powerful deep learning models: CNNs, BiLSTMs, and BERT. CNNs excel at identifying local patterns, BiLSTMs capture long-range dependencies, and BERT understands complex semantic relationships. By carefully tuning the hyperparameters of these models, the study highlights the superior performance of BERT compared to traditional methods in the task of news classification. This underscores the potential of advanced deep learning techniques to significantly advance the field of NLP, particularly in tasks that demand a deep understanding of language and context.

The news document classification models presented in [23, 27, 28] used traditional methods such as fastText and BoW to represent Amharic text for classification. However, traditional word representation methods like BoW, fastText, and Word2Vec often struggle with word ambiguity, out-of-vocabulary words, and long-range dependencies. In contrast, References [29, 30] demonstrate that fine-tuning BERT significantly improves English text classification for news documents. Motivated by recent advancements in transfer learning, we propose applying this technique to enhance NLP for the under-resourced Amharic language. By leveraging pretrained models developed on large corpora from resource-rich languages, we aim to accelerate training, improve performance, and address the challenges posed by data scarcity. Furthermore, we anticipate reducing computational costs and enhancing model robustness, leading to more effective and efficient Amharic language processing systems.

Amharic, the second most spoken Semitic language in the world after Arabic, is the working language of Ethiopia’s Federal Democratic Republic and various regions, including Amhara, Addis Abeba, South Nations and Nationalities, Benishangul Gumuz, and Gambella [31]. Even though Amharic is the country’s working language, there is a serious lack of learning models in the language for various NLP applications. In this study, a fine-tuned BERT model is used to create an Amharic multiclass classifier, which can in turn serve as a starting point for further fine-tuning.

3. Materials and Methods

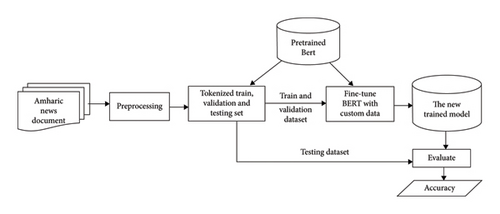

BERT is a language model well suited to representing Amharic text. It breaks down text into tokens, assigns them numerical representations, and uses self-attention to model word relationships and context. By processing text bidirectionally, BERT captures semantic and syntactic nuances, disambiguates words with multiple meanings, and captures long-range dependencies. This enables it to represent complex Amharic sentences effectively. Figure 1 illustrates all the steps we took to fine-tune the BERT model for Amharic multiclass categorization.

The fine-tuning process for BERT models starts with creating a labeled dataset for retraining the existing model (as depicted in Figure 1). Data preprocessing follows, because the effectiveness of the learning algorithm is closely linked to data quality. Next, the training and validation datasets are tokenized using the tokenizer associated with the BERT model before they are fed into the model. The model is then retrained and saved. Finally, the newly trained BERT model is loaded, and its performance is assessed on the testing dataset, with results reported according to the chosen evaluation metrics. Each component is described in detail below.

3.1. Data Source

To create a context-aware language model or adapt a pretrained model for specific NLP tasks, a significant quantity of text data is required. Currently, a vast amount of news content is continuously published online across various platforms, including social media, websites, and traditional media outlets. We gathered news articles of different lengths from the official websites of major governmental media sources, such as the ENA, FBC, WIC, and Reporter News Journal, to fine-tune the BERT language model for multiclass classification of Amharic news documents.

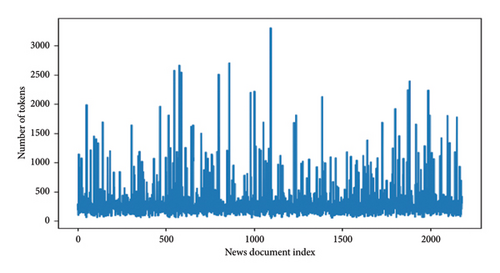

We collected 2181 unique Amharic articles from the sources listed above, covering seven major news categories. The longest article contains 3327 tokens, while the shortest has 40 tokens. Figure 2 shows an overview of the token distribution among the news documents used in this study. The distribution graph shows that the majority of the articles examined have a length of 40–500 tokens.

In this research, the news articles examined had an average length of 315 tokens each. The study encompasses seven distinct categories of news: accidents, agriculture, health, education, business/economy, politics, and sports. The compiled dataset comprises a total of 137,081 sentences in Amharic, amounting to 685,393 words or tokens overall. To provide a clearer understanding of the distribution of articles across these categories, Table 1 presents the number of news instances associated with each category, along with corresponding labels for easy identification. This structured approach allows for a comprehensive analysis of the various topics covered in the Amharic news domain.

| Category name | Number of news documents | Label |
|---|---|---|
| Accident | 308 | 0 |
| Agriculture | 302 | 1 |
| Health | 312 | 2 |
| Education | 308 | 3 |
| Business/economy | 316 | 4 |
| Politics | 315 | 5 |
| Sports | 320 | 6 |

The number of observations in each group is approximately equivalent, as seen in Table 1. This means that our dataset does not have skewed data distributions [32]. This balanced representation ensures that all categories are well represented in the model. It lowers the likelihood of overfitting to more populous categories within the dataset.
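The balance claim can be checked directly from the counts in Table 1. The following is a minimal sketch; the variable names are illustrative and the largest-to-smallest ratio is just one convenient way to summarize balance, not a measure taken from the study.

```python
# Document counts copied from Table 1.
counts = {
    "accident": 308,
    "agriculture": 302,
    "health": 312,
    "education": 308,
    "business/economy": 316,
    "politics": 315,
    "sports": 320,
}

total = sum(counts.values())
# Share of the corpus held by each category.
shares = {name: n / total for name, n in counts.items()}
# Ratio of the largest class to the smallest; 1.0 means perfectly balanced.
imbalance_ratio = max(counts.values()) / min(counts.values())

for name, n in counts.items():
    print(f"{name:<18}{n:>5}{shares[name]:>8.1%}")
print(f"total={total}, largest/smallest={imbalance_ratio:.3f}")
```

Every category holds between roughly 13.8% and 14.7% of the documents, which is consistent with the description of the dataset as balanced.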

3.2. Data Preprocessing

In the field of NLP, one of the most essential steps is to preprocess texts before feeding them into a model. For well-resourced languages such as English and several European languages, there are many tools available, like NLTK, which facilitate effective text cleaning and preprocessing. However, for Ethiopian languages such as Amharic and Tigrigna, to our knowledge there are no publicly available open-source tools designed for this purpose. We therefore wrote our own code for Amharic text processing and normalization. This supports our NLP application and contributes to the broader goal of making NLP accessible and effective for the Amharic language.

3.2.1. Data Cleaning

Amharic is written in the Ge’ez script, whose characters are called Fidel (ፊደል), and traditionally uses a distinctive punctuation mark, the Ethiopic wordspace (፡), to separate words. Modern writing mostly separates words with a single space, but punctuation marks such as the full stop (።), comma (፣), semicolon (፤), and question mark (?) are also used. Latin punctuation marks may also be used [15]. Amharic text can be split into sentences at the Amharic end-of-sentence mark (።), question marks, or exclamation marks.

Data cleaning is a vital preprocessing step for accurate Amharic document analysis. This involves eliminating extraneous or irrelevant data, such as HTML tags, hyperlinks, special characters, nontext components, numerals, and non-Amharic characters from the text documents. After cleaning, we preprocessed the data by normalizing characters, tokenizing words, and removing stop words. The preprocessed data were then used to train, evaluate, and test the proposed learning models: training the model on the data to learn patterns and relationships, evaluating its performance on a separate validation dataset to assess accuracy and generalizability, and finally, testing its real-world performance on a completely unseen test dataset.
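The cleaning step can be sketched with a few regular expressions. This is a minimal illustration, not the authors' implementation: the function name `clean_text`, the order of the steps, and the decision to keep the Latin `?` and `!` (so that sentence boundaries survive for later splitting) are all assumptions. The character range U+1200 to U+137F is the Unicode Ethiopic block, and U+1369 to U+137C covers the Ethiopic digits and numbers.

```python
import re

HTML_TAG = re.compile(r"<[^>]+>")
URL = re.compile(r"(?:https?://|www\.)\S+")
# Ethiopic digits and numbers (U+1369-U+137C), removed as numerals.
ETHIOPIC_NUMERALS = re.compile(r"[\u1369-\u137C]")
# Anything outside the Ethiopic block, whitespace, and Latin ? / ! is dropped.
# Keeping ? and ! is an assumption made so sentence splitting still works later.
NON_ETHIOPIC = re.compile(r"[^\u1200-\u137F\s?!]")
WHITESPACE = re.compile(r"\s+")


def clean_text(raw):
    """Strip markup, links, numerals, and non-Amharic characters."""
    text = HTML_TAG.sub(" ", raw)
    text = URL.sub(" ", text)
    text = ETHIOPIC_NUMERALS.sub(" ", text)
    text = NON_ETHIOPIC.sub(" ", text)
    # Collapse the runs of spaces left behind by the substitutions.
    return WHITESPACE.sub(" ", text).strip()


print(clean_text("<p>ዜና 2024 news https://example.com/a ሰላም።</p>"))
```

Tags and URLs are removed before the character filter so that fragments of markup are not left behind as stray symbols.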

3.2.2. Normalization

Normalization in Amharic language processing aims to combine the multiple character forms that represent the same sound into a single standard form [33]. Several “Fidels” or characters in Amharic share the same phonetic sound, and there are no definitive rules to determine their appropriate usage in different contexts. Consequently, writing styles vary, especially on online platforms like news sites and social media, where these Fidels are often used interchangeably. Examples of Amharic characters that share the same phonetic representation include ሀ፣ሃ፣ሐ፣ሓ፣ኀ፣ኃ፣ኻ (hā), ሠ፣ሰ (se), አ፣ ኣ፣ዐ፣ዓ (ā), ፀ፣ጸ (ts’e), and others, along with their variations. Thus, a single event or real-world entity might be written with several different spellings, resulting in redundancy that extends training duration and increases the storage requirements for creating learning models. To tackle this issue, we developed an Amharic text normalization program that maps Fidel variants with the same sound to their dominant form. This improves the NLP model’s performance by reducing the number of unique terms in the dataset. Table 2 presents the normalization list of Amharic characters that share identical sounds and meanings, despite variations in their written form.

| Standardized character form | Characters to be substituted with their standardized form |
|---|---|
| hā (ሀ) | hā (ሃ፣ኃ፣ኀ፣ሐ፣ሓ) |
| se (ሰ) | se (ሠ) |
| ā (አ) | ā (ኣ፣ዐ፣ዓ) |
| ts’e (ጸ) | ts’e (ፀ) |
| wu (ው) | wu (ዉ) |
| go (ጐ) | go (ጎ) |

Table 2 shows Amharic characters to be standardized, along with their corresponding standardized forms used in this study. This table outlines the specific character substitutions required for normalizing Amharic text. The consonant characters and their variations listed in the second column are substituted with their standardized counterparts from the first column. This avoids the occurrence of identical words written in different character forms within the corpus.
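The substitutions in Table 2 amount to a character-to-character mapping. The sketch below encodes only the base forms listed in the table; the names `NORMALIZATION_TABLE` and `normalize` are illustrative, and a full normalizer would presumably extend the mapping to the other vowel orders of each consonant, which the table does not enumerate.

```python
# Standard form -> variants to be replaced, as listed in Table 2.
NORMALIZATION_TABLE = {
    "ሀ": "ሃኃኀሐሓ",
    "ሰ": "ሠ",
    "አ": "ኣዐዓ",
    "ጸ": "ፀ",
    "ው": "ዉ",
    "ጐ": "ጎ",
}

# Invert the table into a per-character translation map for str.translate.
_TRANSLATION = str.maketrans(
    {
        variant: standard
        for standard, variants in NORMALIZATION_TABLE.items()
        for variant in variants
    }
)


def normalize(text):
    """Replace each variant Fidel with its standardized form."""
    return text.translate(_TRANSLATION)


print(normalize("ሠላም"))  # variant ሠ becomes ሰ
print(normalize("ፀሐይ"))  # ፀ becomes ጸ and ሐ becomes ሀ
```

Because `str.translate` works one character at a time in a single pass, the cost of normalization grows linearly with document length.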

3.2.3. Tokenization

Tokenization is a crucial stage in processing the Amharic language, which entails dividing texts into smaller components known as tokens [34]. Tokens can be sentences, phrases, or words, depending on the specific task. Tokenization in Amharic is particularly difficult due to the lack of distinct word boundaries and the language’s complicated morphological structure. To overcome these challenges, we implemented a rule-based word segmentation approach for tokenizing Amharic documents. The rule-based approach divides the text into individual words using delimiters characteristic of the Amharic language. These delimiters include punctuation marks such as the full stop (።), comma (፣), semicolon (፤), and question mark (?). This method ensures that the text is accurately segmented for further processing.
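A delimiter-based segmenter of this kind can be sketched as follows. The exact delimiter set used in the study is not listed in full, so the set below is an assumption: whitespace, the Ethiopic wordspace, full stop, comma, semicolon, colon, preface colon, and question mark from the Unicode Ethiopic block, plus the Latin `?` and `!`. The function names are illustrative.

```python
import re

# Assumed delimiter set: whitespace, Ethiopic wordspace (፡), full stop (።),
# comma (፣), semicolon (፤), colon (፥), preface colon (፦), question mark (፧),
# and the Latin ? and !.
WORD_DELIMITERS = re.compile(r"[\s፡።፣፤፥፦፧?!]+")
# Marks that end a sentence.
SENTENCE_END = re.compile(r"[።፧?!]+")


def split_sentences(text):
    """Split text into sentences at end-of-sentence marks."""
    return [s.strip() for s in SENTENCE_END.split(text) if s.strip()]


def tokenize(text):
    """Split text into word tokens, discarding the delimiters themselves."""
    return [t for t in WORD_DELIMITERS.split(text) if t]


sample = "ሰላም፣ እንዴት ነህ? ደህና ነኝ።"
print(split_sentences(sample))
print(tokenize(sample))
```

This segmenter only separates words at delimiters; it does not analyze the internal morphology of a word, so prefixed or suffixed forms remain single tokens.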

3.2.4. Stop Word Removal

To improve the performance of the analysis, we removed common, noninformative terms, known as stop words, from the Amharic text. We removed the words that appear in the predefined stop word list prepared by [35]. Stop words are commonly used words in any language, such as articles, prepositions, pronouns, and conjunctions, that are usually filtered out before processing natural language because they contribute little meaningful information. Removing these stop words reduces the size of the dataset, which in turn reduces training time because fewer tokens are processed. This process also highlights contextually significant terms, reduces noise, and enhances the analysis and interpretation of textual data.
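Stop word filtering is a set-membership test applied to each token. In the sketch below, `STOP_WORDS` is a small hypothetical placeholder containing a few common Amharic function words; it is not the list from [35], which should be loaded in its place.

```python
# Hypothetical placeholder; the study uses the stop word collection from [35].
STOP_WORDS = frozenset({"ነው", "እና", "ላይ", "ወደ", "ግን"})


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Return the tokens that are not in the stop word set, in order."""
    return [t for t in tokens if t not in stop_words]


print(remove_stop_words(["ዜና", "እና", "ስፖርት", "ነው"]))
```

A `frozenset` gives constant-time lookups, so filtering stays fast even when the stop word list is large.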

3.2.5. Train Test Splitting

Our dataset of Amharic news documents was divided into three subsets. We first applied an 80/20 train-test split: 80% of the data for training and the remaining 20% for testing. We then held out 10% of the training portion as a validation set. We selected the 80/20 split so that enough data remains for effective model training while the test set is still large enough for reliable performance evaluation [36]. The training set was used to train the model, the validation set was used to tune the model’s hyperparameters, and the testing set was reserved for a final evaluation of the model’s performance on unseen data. In the subsequent sections, we present and analyze the model’s performance during both the training and testing phases.
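The split described above can be sketched in a few lines. Two points here are assumptions rather than details from the study: the split is stratified by category so that each subset keeps the class balance of Table 1, and the random seed is fixed for reproducibility. The function name `stratified_split` is illustrative.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_frac=0.20, val_frac_of_train=0.10, seed=42):
    """Return (train, val, test) index lists, split per class."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    train, val, test = [], [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_frac)
        # Validation is carved out of what remains after the test split.
        n_val = round((len(indices) - n_test) * val_frac_of_train)
        test.extend(indices[:n_test])
        val.extend(indices[n_test:n_test + n_val])
        train.extend(indices[n_test + n_val:])
    return train, val, test


# Labels reconstructed from the per-category counts in Table 1.
table1_counts = [308, 302, 312, 308, 316, 315, 320]
labels = [label for label, n in enumerate(table1_counts) for _ in range(n)]
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Under this reading, the final proportions are roughly 72% training, 8% validation, and 20% testing of the full dataset.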

3.3. BERT Fine-Tuning for Amharic Multiclass Text Classification

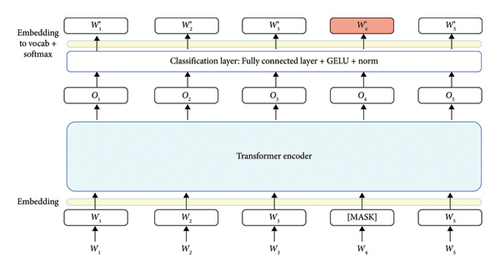

BERT is a transformer-based model used for natural language understanding. Unlike standard language models, which read text in a single direction (left-to-right or right-to-left), BERT analyzes text bidirectionally, gathering information from both sides of a word at the same time. This gives BERT a better understanding of word meaning in context [37]. We built a classification layer on top of the pretrained BERT model and trained the entire model on our training dataset. The model processes the input text sequence, capturing semantic and syntactic meanings. The Transformer encoder extracts contextual information from the sequence. The masked token prediction task used during pretraining helps the model learn better representations of words and their relationships. This architecture is commonly used in state-of-the-art text classification models. Figure 3 illustrates the architecture of the BERT model, highlighting its key components and their interactions.

1. Input Embeddings: The model takes a sequence of tokens as input. Tokens are then turned into embeddings, which consist of three parts.
   - Token Embeddings: Represent single words or subwords.
   - Segment Embeddings: Determine whether a token is in the first or second sentence (used in next-sentence prediction, NSP).
   - Positional Embeddings: Indicate a token’s position in the sequence.

2. Encoder Layers: These layers, made up of several transformer encoders, are critical for processing the input. Each encoder layer contains the following.
   - Self-Attention Mechanism: This mechanism allows the model to determine the relevance of each token with respect to all others that come before and after it.
   - Feed-Forward Neural Networks: These networks process information generated during the attention step.
   - Layer Normalization and Residual Connections: These strategies improve training stability and overall performance.

3. Output Layer: After the input has been processed by the encoder layers, the resulting embeddings can be used for a variety of downstream tasks, including classification, token prediction, and sequence labeling.
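For reference, the self-attention step in each encoder layer is commonly written in the scaled dot-product form of the original Transformer architecture. The symbols $Q$, $K$, $V$ (query, key, and value matrices computed from the token embeddings) and $d_k$ (the key dimension) are standard notation from that literature and do not appear in the text above:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Each row of the softmax output holds the weights that one token assigns to every token in the sequence, on both its left and its right, which is what makes the resulting representation bidirectional.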

When employing a pretrained BERT model, it is a usual practice to replace the original output layer with a task-specific layer and fine-tune the entire model. The task of constructing applications on top of pretrained models utilizing labeled data is known as fine-tuning. BERT fine-tuning is one of the most popular and effective approaches to NLP tasks. This process includes several steps, such as modifying the model, setting up training configurations, fine-tuning the model, adjusting hyperparameters, and deploying the final version. In this paper, we investigate and fine-tune the pretrained “BERT-base-multilingual-cased” for Amharic text categorization.

We fine-tuned a general-purpose multilingual language model (BERT-base-multilingual-cased) to specifically understand Amharic text. We achieved this by training it on a large dataset (133 million words) of Amharic text collected from the web (Amharic CC-100) [38]. This dataset is a valuable resource for anyone working on Amharic language technology, such as machine translation, text summarization, sentiment analysis, and even Amharic text generation [39]. The CC-100 dataset can be accessed online at https://data.statmt.org/cc-100/. As a result of this fine-tuning, we created a new model called “bert-base-multilingual-cased-finetuned-amharic.”

The bert-base-multilingual-cased-finetuned-amharic model handles Amharic better than the original multilingual model because it was further trained on a large Amharic corpus. This model is appropriate for tasks such as text categorization, sentiment analysis, and machine translation. The Amharic BERT model was developed by adapting the original multilingual BERT model. This involved replacing the original vocabulary with Amharic vocabulary to better align with the language’s specific linguistic characteristics [22]. This model was created to recognize Amharic named entities. To begin, we tokenize each sentence into N tokens and insert the [CLS] token at the beginning. Then, for each token i, we produce an input representation Ei by adding the vector embeddings corresponding to the token, the segment (that is, the sentence to which it belongs), and the token position.
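The input representation described above can be written compactly. The superscripted symbols are notation introduced here for illustration only:

$$
E_i = E^{\mathrm{tok}}_{i} + E^{\mathrm{seg}}_{i} + E^{\mathrm{pos}}_{i}
$$

Here $E^{\mathrm{tok}}_{i}$ is the embedding of token $i$, $E^{\mathrm{seg}}_{i}$ is the embedding of the segment (sentence) that contains it, and $E^{\mathrm{pos}}_{i}$ is the embedding of its position in the sequence. All three vectors have the same dimension, so the sum is taken element by element.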

After preparing the documents, we load the pretrained model and encode our training, validation, and testing data with maximum length and padding. We then create a layer on top of the pretrained model specifically for document classification and adjust the number of labels to match the categories in our dataset. The documents are categorized into seven groups: accident, agriculture, health, education, business/economy, politics, and sport. The model is subsequently retrained by fine-tuning the hyperparameters and saving the trained model along with its log files. This saved model can be used for any future NLP tasks.
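The loading and encoding steps might look like the following with the Hugging Face `transformers` library. This is a sketch under stated assumptions, not the authors' code: the Hub identifier in `CHECKPOINT` and the `MAX_LENGTH` of 512 (the position limit of BERT-base) are assumptions, since the text does not state them, and the helper names are illustrative. The label order follows Table 1.

```python
# Category names in the label order given in Table 1.
LABELS = [
    "accident", "agriculture", "health", "education",
    "business/economy", "politics", "sports",
]
ID2LABEL = dict(enumerate(LABELS))
LABEL2ID = {name: idx for idx, name in ID2LABEL.items()}

# Assumed Hugging Face Hub identifier for the Amharic checkpoint.
CHECKPOINT = "Davlan/bert-base-multilingual-cased-finetuned-amharic"
# Assumed maximum sequence length; 512 is the BERT-base position limit.
MAX_LENGTH = 512


def load_classifier(checkpoint=CHECKPOINT):
    """Load the tokenizer and the encoder with a new 7-way classification head."""
    # Imported here so the label maps above work without transformers installed.
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSequenceClassification.from_pretrained(
        checkpoint,
        num_labels=len(LABELS),
        id2label=ID2LABEL,
        label2id=LABEL2ID,
    )
    return tokenizer, model


def encode(tokenizer, texts, max_length=MAX_LENGTH):
    """Tokenize documents with truncation and padding to a fixed length."""
    return tokenizer(
        texts,
        truncation=True,
        padding="max_length",
        max_length=max_length,
        return_tensors="pt",
    )
```

Because the longest article in the dataset has 3327 tokens, truncation discards the tail of long documents under this setting; how the study handled such documents is not stated.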

3.3.1. fastText Language Model for Amharic Multiclass Text Classification

Facebook research created fastText, an efficient and popular language representation model for word and text categorization applications [40]. fastText models are trained on massive text corpora using unsupervised learning, and word representations are developed based on the co-occurrence of words and subword units for different languages such as English, Chinese, Amharic, etc. Treating each variant of words as an atomic unit in morphologically rich languages like Amharic is not an efficient approach. Words like ሰው (sewi), የሰው (yesewi), በሰው (besewi), ከሰው (kesewi), ሰውነት (sewineti), ከሰውነት (kesewineti), የሰውነት (yesewineti), etc. (all derived from the root word “ሰው” meaning “man”) are typically treated as separate words unless a morphological analysis is conducted beforehand. Unlike traditional word embedding models, fastText breaks down words into character n-grams, treating each word variant as a combination of these smaller units, enabling it to handle rare and morphologically complex words more effectively. It captures the morphological structure of words as the sum of their constituent character n-grams.

For instance, the word vector for “ዘገባ” (zegeba, which means “report”) is obtained by summing the vectors of its constituent character trigrams: “<ዘገ,” “ዘገባ,” and “ገባ>,” where “<” and “>” mark the word boundaries. This mechanism helps to generate better word embeddings for rare words. Compared with Word2Vec and GloVe, fastText is also able to handle morphological variants and out-of-vocabulary words [41]. Its ability to handle multiclass text classification makes it a useful tool for a wide range of applications.
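The decomposition into character n-grams can be sketched as follows. The function name `char_ngrams` is hypothetical, and fixing the length at three only mirrors the example above; in practice a range of n-gram lengths is typically used.

```python
def char_ngrams(word, min_n=3, max_n=3):
    """Return character n-grams of `word` wrapped in < and > boundary markers."""
    marked = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for start in range(len(marked) - n + 1):
            grams.append(marked[start:start + n])
    return grams


trigrams = char_ngrams("ዘገባ")

# Morphological variants of the same root share n-grams.
shared = set(char_ngrams("ሰው")) & set(char_ngrams("የሰው"))
```

Because “ሰው” and “የሰው” share the n-gram “ሰው>”, their vectors share a component even when one of the two forms is rare in the training corpus.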

The fastText classifier starts with word representations, which are averaged into a text representation and fed into a linear classifier (multinomial logistic regression). The text representation is a hidden state shared among features and classes. A softmax layer produces a probability distribution over the predefined classes. A bag of n-grams is used to capture some local word-order information while maintaining efficiency; word order is otherwise not used. A hashing method ensures that the n-gram mapping is fast and memory efficient. This research utilizes the fastText classifier to categorize Amharic news articles. The process begins by preparing a custom dataset, cleaning it, and dividing it into training and testing sets. These sets are then transformed into a format compatible with fastText (__label__ + label name + actual news document). The model is trained using the training set and subsequently evaluated against the testing set using the quality metrics described in Section 3.5.
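The conversion to the fastText input format can be sketched as below. The helper name `to_fasttext_line` and the whitespace handling are assumptions made for illustration; only the `__label__` prefix followed by the label name and the document text comes from the description above.

```python
def to_fasttext_line(label, text):
    """Format one labeled document as a single fastText training line."""
    # Collapse all whitespace so each document stays on one line.
    clean_text = " ".join(text.split())
    # Labels must not contain spaces, so join any parts with underscores.
    clean_label = "_".join(label.split())
    return f"__label__{clean_label} {clean_text}"


line = to_fasttext_line("sport", "ስፖርት እግር ኳስ\nተጫዋች")
```

Writing one such line per document to a text file yields the training and testing files that the classifier reads.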

3.4. BoW for Amharic Multiclass Text Classification

The BoW model is a straightforward yet efficient method employed in NLP to represent textual documents as numerical vectors. It calculates the term frequency of each word in the document. It ignores the grammatical structure and order of words in a document, instead focusing exclusively on their frequency of occurrence [42]. After segmenting the Amharic news document into individual words or tokens, we create a vocabulary that includes all unique Amharic terms found in the entire dataset. Each word in this lexicon is given a unique number identification. We then build a fixed-length feature vector for each document. Each dimension in this vector corresponds to a certain word from the vocabulary, and the value at that dimension indicates how frequently the relevant word appears in the document.

To illustrate, let’s consider two Amharic news documents: Doc1: “ኢትዮጵያ አዲስ አበባ ዜና” (Ethiopia Addis Ababa News) and Doc2: “ስፖርት እግር ኳስ ተጫዋች” (Sport Football Player). After breaking down these documents into individual words and constructing a vocabulary of unique words, we might obtain a vocabulary like this: [ኢትዮጵያ, አዲስ, አበባ, ዜና, ስፖርት, እግር, ኳስ, ተጫዋች]. The corresponding feature vectors for these two documents would be: Doc1 [1, 1, 1, 1, 0, 0, 0, 0]. Doc2 [0, 0, 0, 0, 1, 1, 1, 1]. A classification algorithm can then learn to associate the first vector with the “News” category and the second vector with the “Sports” category.
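The worked example above can be reproduced with a minimal sketch. The function names are hypothetical, and the vocabulary is indexed in order of first appearance so that the columns match the vocabulary listed in the text; a library vectorizer may order the columns differently.

```python
def build_vocabulary(documents):
    """Map each unique word to an index, in order of first appearance."""
    vocab = {}
    for doc in documents:
        for word in doc.split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def count_vector(doc, vocab):
    """Return the term-frequency vector of `doc` over `vocab`."""
    vector = [0] * len(vocab)
    for word in doc.split():
        if word in vocab:
            vector[vocab[word]] += 1
    return vector


docs = ["ኢትዮጵያ አዲስ አበባ ዜና", "ስፖርት እግር ኳስ ተጫዋች"]
vocab = build_vocabulary(docs)
vectors = [count_vector(d, vocab) for d in docs]
```

Here `vectors[0]` and `vectors[1]` correspond to the Doc1 and Doc2 feature vectors given above.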

In this study, we employed count vectorization to convert the provided documents into numerical feature vectors. This technique involves counting the occurrences of each word within a document. The resulting output is a sparse matrix where each row represents a document and each column represents a word. The value at the intersection of a row and column indicates the frequency of the word in the respective document. We used a total of 74,975 features for the BoW model. We chose this method for feature vector generation because of its effectiveness on large datasets and its simplicity [43]. Once the feature vectors were generated, we utilized a multilayer perceptron (MLP) classifier for classification.

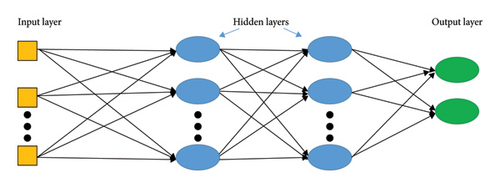

We chose the MLP model due to its flexible approach to document classification. MLPs can automatically learn intricate patterns from raw data, reducing the need for manual feature engineering. This makes them suitable for large-scale and complex classification tasks, as they can be easily scaled and adapted to different problem domains [44]. This neural network model is unidirectional, with signals flowing from input to output only. An MLP neural network is built from basic building blocks. A single input neuron serves as the foundation, which can be multiplied to accommodate multiple inputs [45]. These neurons are organized into layers, and by combining multiple layers sequentially, the full MLP network architecture is realized. Figure 4 illustrates a typical neural network architecture with an input layer, multiple hidden layers, and an output layer.

Neural networks are composed of interconnected processing units, or neurons. These neurons are linked by weighted connections and employ activation functions to determine the output based on input and a threshold value. The input layer receives data and passes it to the hidden layers. Each neuron in a hidden layer processes the output from the previous layer, applies an activation function, and then transmits the result to the next layer. This process repeats until the final output layer is reached.

We employ Python’s scikit-learn library to build an MLP network classifier. The performance of an MLP classifier is significantly influenced by the number of hidden layers, neurons per layer, and the number of training iterations. Determining the optimal number of layers and neurons remains a challenge. Underfitting occurs when the network is too simple, while overfitting results from excessive complexity. We utilize a grid search strategy to systematically experiment with different hyperparameter settings for the MLP. This method allows us to identify the combination of hyperparameters that yields the best performance on the given dataset.

3.4.1. Word2vec for Amharic Multiclass Text Classification

Words are the basic units of written language, representing sounds through a set of recognizable symbols. They are the smallest indivisible units within the grammatical structure of language. Word2Vec is a tool designed to create distributed representations of words [46]. Word2Vec can considerably improve the performance of categorization models by offering a more complex understanding of the text. Word2Vec converts each word in the Amharic corpus into a dense vector representation. This is accomplished using either the continuous bag-of-words (CBOW) or skip-gram models, which train to predict a word based on its context or vice versa.

CBOW and skip-gram are two fundamental methodologies in NLP for generating word embeddings. CBOW predicts a target word from its surrounding context words, whereas skip-gram predicts the surrounding context words from a given target word. For Amharic text classification, CBOW is used to learn semantic and syntactic relationships and to generate the word embeddings [47]. The embeddings of the words in a document are then averaged to derive a document-level representation.
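The averaging step can be sketched as follows. The toy two-dimensional embeddings and the function name `document_vector` are hypothetical, and skipping words that have no embedding is an assumption; the text does not state how out-of-vocabulary words are treated.

```python
def document_vector(tokens, embeddings):
    """Average the embeddings of known tokens; return None if none are known."""
    # Assumption: tokens without an embedding are simply skipped.
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        return None
    dim = len(known[0])
    return [sum(vec[d] for vec in known) / len(known) for d in range(dim)]


# Made-up embeddings standing in for trained Word2Vec vectors.
embeddings = {"ስፖርት": [1.0, 0.0], "ኳስ": [0.0, 1.0], "ዜና": [1.0, 1.0]}
doc_vec = document_vector(["ስፖርት", "ኳስ", "ተጫዋች"], embeddings)
```

The resulting fixed-length vector is what a downstream classifier such as an MLP receives for each document.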

In the final stage, we implemented an MLP classifier to categorize the feature vectors generated by the Word2Vec model. This choice of classifier aligns with the one used in this study on news document classification using BoW, enabling a direct comparison of the two methods’ performance.

3.5. Evaluation Metrics

In the equations below, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

1. The accuracy of a classifier model can be determined using equation (1) as the ratio of correct predictions to all entries.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

2. Precision represents the proportion of data points predicted as positive that are actually positive. It can be calculated using equation (2).

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

3. Recall, also referred to as sensitivity or the true-positive rate (TPR), is the ratio of correctly predicted positive instances to all positive instances that exist. It is mathematically expressed in equation (3).

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

4. The F1-score is a statistic that combines precision and recall into a single metric. Equation (4) shows how we can compute it.

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
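The four metrics can be computed directly from lists of true and predicted labels, as in the minimal sketch below. The function names and the toy labels are hypothetical; in the multiclass case, precision, recall, and F1-score are computed per class by treating that class as the positive one, and how the per-class values are averaged is left open here.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def per_class_metrics(y_true, y_pred, label):
    """Precision, recall, and F1-score with `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
acc = accuracy(y_true, y_pred)
p1, r1, f1_1 = per_class_metrics(y_true, y_pred, 1)
```

In this toy case four of six predictions are correct, and class 1 has two true positives, one false positive, and no false negatives.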

4. Implementation

The data preprocessing and the fine-tuning of the pretrained BERT and fastText models were implemented in Python. Training was carried out on a graphics processing unit (GPU) provided by Google Colab. We used the Hugging Face Transformers library to train the BERT models. This library provides a set of pretrained deep learning models for a broad range of NLP applications such as sentiment analysis, fake news detection, question answering, and text classification. To fine-tune the pretrained BERT model from the Hugging Face library, we utilized AutoTokenizer and AutoModelForSequenceClassification for tokenization and text classification, respectively. Hugging Face hosts pretrained BERT models that are specialized for particular NLP tasks.

The labeled Amharic news document dataset is divided into training, validation, and testing sets, used respectively for training, validating, and evaluating the fine-tuned model. The labeled dataset has two columns: the news document and the news category label. The label values are accident, agriculture, health, education, business/economy, politics, and sport. The category labels, held in a pandas data frame, are converted to numerical values with a label encoder.
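The label-to-integer conversion can be sketched as below. The index order is an assumption taken from the class numbering used in Section 5 (0 for accident through 6 for sport); a library label encoder may assign different indices, for example by sorting the names alphabetically.

```python
# Assumed class order, following the numbering used in the Results section.
CATEGORIES = [
    "accident", "agriculture", "health", "education",
    "business/economy", "politics", "sport",
]

label2id = {name: idx for idx, name in enumerate(CATEGORIES)}
id2label = {idx: name for name, idx in label2id.items()}


def encode_labels(labels):
    """Convert category names to their integer class indices."""
    return [label2id[name] for name in labels]


encoded = encode_labels(["sport", "health", "accident"])
```

Keeping the inverse mapping `id2label` alongside makes it straightforward to turn predicted indices back into category names.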

In addition to fine-tuning the BERT model, which is based on context-based language modeling, for multiclass Amharic news document classification, we also trained fastText, a static embedding language model. We further developed Amharic multiclass classification models using standard techniques, namely BoW and Word2Vec, as benchmarks. We used the same dataset, preprocessing, operating conditions, and evaluation metrics to compare the models’ performance on Amharic news classification. This process yields two trained models, one from the context-based language model and one from the static language model. These trained models will be valuable in the development of Amharic NLP applications.

5. Results and Discussion

In this section, we delve into the process of fine-tuning a pretrained BERT model for the specific task of categorizing Amharic news documents. To evaluate the performance of our fine-tuned model, we employed a rigorous experimental setup. We recorded the best training performances throughout the process, saving them after each epoch. The batch sizes used for training and evaluation were 4 and 8, respectively. We optimized the model’s hyperparameters using a grid search approach, which involves evaluating different combinations of hyperparameter values to find the best-performing configuration. Table 3 provides a summary of the hyperparameter values utilized for training the model.

| Hyperparameters | Values |
|---|---|
| Evaluation_strategy | Epoch |
| Save_strategy | Epoch |
| Logging_strategy | Epoch |
| Num_train_epochs | 10 |
| Learning_rate | 1e-5 |
| Per_device_train_batch_size | 4 |
| Per_device_eval_batch_size | 8 |
| Load_best_model_at_end | True |
| Warmup_steps | 1000 |
| Weight_decay | 0.01 |
| Logging_steps | 20 |

We adopted a single train-test split for all experiments in this manuscript. A single train-test split approach offers several advantages, including computational efficiency, simplicity, clear data separation, and faster iteration. It is particularly well-suited for large datasets, rapid experimentation, and basic model evaluation. While cross-validation provides a more robust evaluation, especially for smaller datasets, it can be computationally expensive [36].

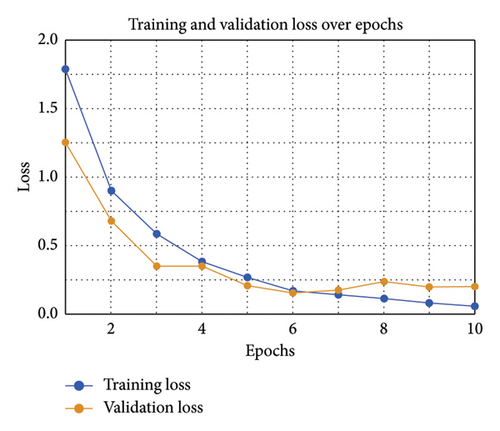

To identify the optimal number of epochs for our model and dataset, we conducted a comparative analysis of model performance across various epoch values. The learning curve indicates that the training loss and validation loss curves have stabilized around the 10th epoch. This suggests that the model has reached its peak performance and further training would likely lead to overfitting [48].

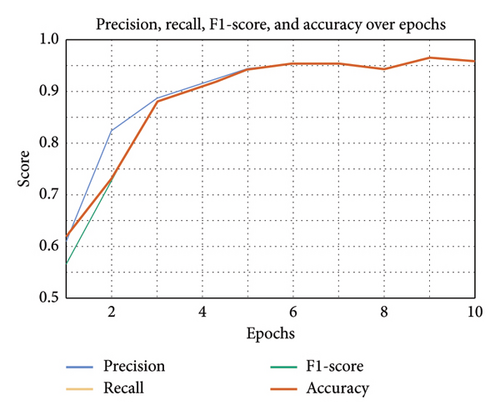

To provide a clearer understanding of the model’s learning progression, the training results from the log file are presented in Figures 5 and 6, both tracked over 10 epochs. Together, the figures detail the training and validation results of the BERT model fine-tuned for multiclass Amharic news document classification. Figure 5 presents the learning curves, showing the training and validation loss: the training loss reflects the model’s ability to fit the training data, while the validation loss indicates its generalization performance. Figure 6 shows the performance metrics, namely precision, recall, F1-score, and accuracy.

Figure 6 depicts the model’s performance over 10 training epochs, with all four measures showing an upward trend. Precision and recall exhibit similar patterns, indicating that the model is improving at recognizing positive cases while avoiding false positives. The F1-score, a balanced measure of precision and recall, also increases steadily, showing improved overall performance. Accuracy increases as well, indicating that the model’s predictions become more accurate as training proceeds.

In addition to monitoring performance during training, we assessed the model’s effectiveness at categorizing the test set. The classifier is evaluated by counting the documents classified correctly and incorrectly; that is, the labels generated by the model are compared with those assigned manually. The trained Amharic news document classifier is evaluated using accuracy, recall, precision, and F1-score, after adjusting the hyperparameters of the pretrained BERT model. The results on the testing dataset are presented in Table 4.

| Model | F1-score | Accuracy | Precision | Recall |
|---|---|---|---|---|
| Amharic fine-tuned BERT | 87.59% | 88% | 88% | 87.61% |

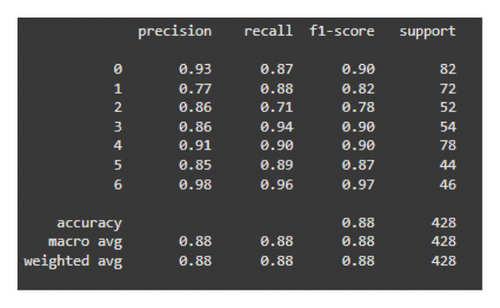

In addition to reporting the overall performance metrics (average F1-score, accuracy, precision, and recall) for the seven news categories, we provide a detailed breakdown of the model’s performance on each individual class. Figure 7 presents a comprehensive classification report of the model’s performance on each news category. The results presented in the figure indicate that the model achieves an overall accuracy of 88%, demonstrating a relatively strong performance. The macro and weighted average metrics also indicate strong overall performance.

The figure illustrates the classification performance for each class in our test dataset. Class 0 achieved a high precision of 0.93, meaning that 93% of the documents assigned to this class truly belong to it. Class 1 had the lowest precision, 0.77, and Class 2 a moderate precision of 0.86. Classes 3, 4, 5, and 6 achieved precisions of 0.86, 0.91, 0.85, and 0.98, respectively. The high F1-scores indicate strong overall performance. The weaker result for Class 1 is likely due to the overlap between agriculture-related news and news related to politics, economy, and health [49, 50], which makes it difficult for the model to distinguish Class 1 (agriculture) from these related classes.

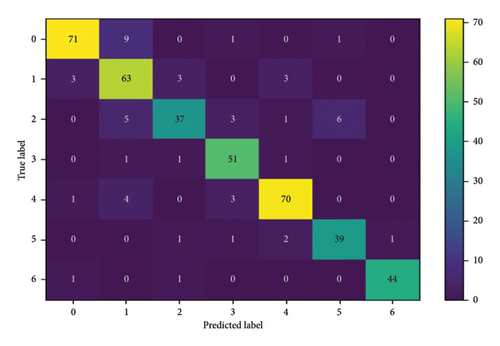

In addition to the standard evaluation metrics of F1-score, accuracy, precision, and recall, we employed a confusion matrix to visually represent the distribution of news articles across true and predicted categories. This matrix allowed us to gain insights into the specific types of classification errors made by our model, such as false positives and false negatives. Figure 8 shows the model’s predictions for each class against the true labels.

The confusion matrix above offers a detailed view of the model’s efficacy in categorizing the various classes. The diagonal elements denote correctly classified instances, whereas the off-diagonal elements signify misclassifications. The matrix indicates that the model performs robustly on Classes 0 (accident), 4 (economy), and 6 (sport), for which most instances lie on the diagonal. This is likely because these three categories are distinct in subject matter and have little overlap with the others. The model is also fairly accurate in classifying documents from Class 3 (education).

However, there are misclassifications involving Classes 1 (agriculture), 2 (health), and 5 (politics), which are difficult for the model to separate because they share a number of features. Specifically, six instances of Class 2 were erroneously classified as Class 5, while nine instances of Class 0 were misclassified as Class 1. Similarly, five instances of Class 2 were erroneously categorized as Class 1. One likely cause is the inherent ambiguity of the Amharic language, where a single word can have multiple meanings. For instance, the word “ትምህርት” (pronounced timhirt) can refer to the process of learning and teaching in the context of “education,” while in the context of “health,” it signifies health literacy. Although the model exhibits strong overall performance, there is room for improvement in separating these closely related categories.
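The way a confusion matrix is built and read can be sketched as follows. The labels are toy values, not the study’s predictions, and the helper names are hypothetical; rows index the true class and columns the predicted class.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Count documents by (true class, predicted class)."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def misclassified_pairs(matrix):
    """List (true, predicted, count) for off-diagonal cells, largest first."""
    pairs = [
        (t, p, n)
        for t, row in enumerate(matrix)
        for p, n in enumerate(row)
        if t != p and n > 0
    ]
    return sorted(pairs, key=lambda item: -item[2])


# Toy labels for illustration only.
y_true = [2, 2, 2, 5, 1, 1]
y_pred = [5, 5, 2, 5, 1, 2]
cm = confusion_matrix(y_true, y_pred, num_classes=7)
errors = misclassified_pairs(cm)
```

Sorting the off-diagonal cells by count surfaces the most frequent confusions first, which is how pairs such as health versus politics stand out.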

We also employ the fastText model to classify Amharic news documents in this work. We train and test the fastText model after transforming our training and testing datasets into the fastText format. The model obtains a training accuracy of 0.90 (90%) when we train it with the loss function set to softmax and the number of epochs set to 100. Table 5 presents the evaluation metrics obtained by the fastText model on the testing dataset.

| Classifier | Precision | Recall |
|---|---|---|
| fastText classifier | 80% | 80% |

Tables 4 and 5 show that the Amharic fine-tuned BERT model outperforms the fastText classifier on all evaluation metrics used in this study. The fine-tuned model improves classification precision and recall by 8 and 7.61 percentage points, respectively. fastText is generally regarded as the strongest among static embedding models such as Word2Vec and GloVe, because it handles words that do not exist in the corpus by constructing their embeddings from subword embeddings [51]. Nevertheless, it is outperformed by BERT, because BERT models keep the contextual meaning of every word: the representation of a word in one phrase or document differs from its representation in other sentences or documents. BERT also looks in both the left and right directions to determine the contextual meaning of words.

Finally, we conducted a comparative analysis to evaluate the performance of our fine-tuned BERT model against the fastText language model and against traditional baseline models based on BoW and Word2Vec. For this comparison, we used the same dataset and preprocessing module for all models. To tune the hyperparameters of each learning algorithm, we used a grid search technique. This entailed training each model 10 times with different hyperparameter settings and selecting the configuration that produced the best results. Table 6 presents a comparison of the four text classification models: fine-tuned BERT, fastText, Word2Vec, and BoW. The performance of each model is evaluated using four main metrics: accuracy, precision, recall, and F1-score.

| Models | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| Fine-tuned BERT | 88 | 88 | 87.61 | 87.59 |
| fastText classifier | 80 | 80 | 80 | 80 |
| Word2vec with MLP classifier | 74 | 73.7 | 74 | 73.7 |
| Bag-of-words with MLP classifier | 81.7 | 82.4 | 82.6 | 82.3 |

According to the results presented in Table 6, fine-tuned BERT outperforms all other models on all metrics, making it the most effective model in this study. Its accuracy of 88% exceeds that of the BoW, fastText, and Word2Vec baselines by 6.3, 8, and 14 percentage points, respectively. The precision of 88% indicates that when the model assigns a document to a category, the assignment is correct about 88% of the time, reflecting a low rate of false positives. With an F1-score of 87.59%, the model maintains a good balance between precision and recall. This superior performance can be attributed to BERT’s contextual representations: the embedding of a word depends on the sentence or document in which it appears, and the model attends to both the left and right context to determine the word’s meaning.

The BoW model ranks second in terms of performance, with an accuracy of 81.7%. It effectively identifies positive instances (recall of 82.6%) while maintaining a comparable level of precision (82.4%). fastText scores 80% on all four metrics; its equal precision and recall suggest balanced behavior. In contrast, Word2Vec is the least effective model, with an accuracy of only 74%. It struggles to correctly identify instances, as indicated by its low precision (73.7%) and recall (74%), and its F1-score of 73.7% is likewise the lowest among the models. Word2Vec requires large amounts of data to generate accurate word representations that reflect complex language patterns. The dataset employed in this work was likely insufficient for Word2Vec to capture the syntactic and semantic structure of Amharic documents [52].
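The accuracy gaps between fine-tuned BERT and the baselines, and the resulting ranking, can be checked with a short calculation over the accuracy column of Table 6. The variable names are illustrative, and the gaps are expressed in percentage points.

```python
# Accuracy values (%) copied from Table 6.
accuracy = {
    "Fine-tuned BERT": 88.0,
    "fastText classifier": 80.0,
    "Word2vec with MLP classifier": 74.0,
    "Bag-of-words with MLP classifier": 81.7,
}

bert = accuracy["Fine-tuned BERT"]

# Gap to each baseline, rounded to one decimal place.
margins = {
    name: round(bert - value, 1)
    for name, value in accuracy.items()
    if name != "Fine-tuned BERT"
}

# Models ordered from highest to lowest accuracy.
ranking = sorted(accuracy, key=accuracy.get, reverse=True)
```

The computed gaps of 6.3, 8, and 14 points for BoW, fastText, and Word2Vec agree with the figures reported in the abstract.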

Furthermore, we assess these models’ effectiveness in predicting the category label of unseen news documents. For this process, we used the same preprocessing and tokenizer modules as those used for model development. Table 7 shows a comparative analysis of the fine-tuned BERT, Word2Vec with MLP classifier, BoW with MLP classifier, and fastText classifier models on five unseen Amharic news documents. The “True label” column represents the ground truth, while the remaining columns give each model’s prediction. This allows for a direct comparison of model performance.

| Sample texts translated from Amharic | Fine-tuned BERT | Word2vec | BoW | fastText | True label |
|---|---|---|---|---|---|
| Eighteen people died in a traffic accident in Serti Kebele, North Showa Zone, Angollana Tara District | 0 | 0 | 2 | 0 | 0 |
| Dropping points from Manchester City and Arsenal has created a good chance for Liverpool in the progress of the English Premier League title | 6 | 6 | 6 | 6 | 6 |
| In the discussion held at the Prime Minister’s Office, amendments were made to the general education, technical and vocational education, higher education, and education sector management | 3 | 2 | 3 | 1 | 3 |
| Institutions covering 80% of rural economic development in the region are organized in clusters and are working in coordination and cooperation | 1 | 2 | 5 | 4 | 4 |
| Copper kills living sperm. Pregnancy is impossible without viable sperm | 2 | 2 | 2 | 1 | 2 |

The results presented in Table 7 indicate that the fine-tuned BERT model surpasses the other models in classifying these Amharic news documents. It correctly predicts the labels of four out of five documents. However, it misclassifies a document labeled as “economy” by news publishers as “agriculture,” likely due to the presence of terms like “clusters,” which are commonly used in agricultural contexts. The Word2Vec, BoW, and fastText models each classify three out of five news documents correctly. Although they reach the same count, the three models differ in which documents they classify correctly.
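The per-model counts can be verified by tallying the predictions in Table 7 against the true labels. The values are copied from the table, with documents in row order; the variable names are illustrative.

```python
# True labels and predictions from Table 7, in row order.
TRUE_LABELS = [0, 6, 3, 4, 2]
PREDICTIONS = {
    "Fine-tuned BERT": [0, 6, 3, 1, 2],
    "Word2vec": [0, 6, 2, 2, 2],
    "BoW": [2, 6, 3, 5, 2],
    "fastText": [0, 6, 1, 4, 1],
}

# Number of documents each model labels correctly.
correct = {
    model: sum(p == t for p, t in zip(preds, TRUE_LABELS))
    for model, preds in PREDICTIONS.items()
}

# Row indices (0-based) that each model gets wrong.
wrong_rows = {
    model: [i for i, (p, t) in enumerate(zip(preds, TRUE_LABELS)) if p != t]
    for model, preds in PREDICTIONS.items()
}
```

The `wrong_rows` mapping makes the differences among the three baselines explicit: each misses a different pair of documents.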

This paper investigated the possibility of developing an Amharic news document classifier by fine-tuning pretrained models. The fine-tuned and retrained models are made publicly available so that others can improve them further by fine-tuning them with new training, validation, and testing datasets. Owing to a lack of experimental resources, datasets, and computing hardware, we did not study the fine-tuning of BERT models with different bases, nor did we train our own BERT model from scratch.

6. Conclusion

The open availability of trained models and validated datasets aids the execution of any NLP task in a given language. This is not the case for under-resourced languages such as Amharic, where scholars must focus on building language models and gathering data rather than on the NLP problem at hand. This study presents a comprehensive evaluation of a fine-tuned BERT model for Amharic multiclass news document classification. We fine-tuned the BERT model using Amharic news articles from major Ethiopian news outlets such as FBC, ENA, WIC, and Reporters. These articles were categorized into seven primary news categories: accidents, agriculture, health, education, economy, politics, and sports. We used data preprocessing techniques such as cleaning, normalization, and tokenization to prepare the data for the learning model. After fine-tuning the BERT model using this dataset, we evaluated its performance on several metrics. The empirical findings indicate that the fine-tuned BERT model outperforms baseline models such as BoW, Word2Vec, and fastText, demonstrating the potential of deep learning techniques for NLP in low-resource languages like Amharic. The fine-tuned model achieves an accuracy of 88%, a precision of 88%, a recall of 87.61%, and an F1-score of 87.59%. Future research could explore further fine-tuning strategies and the incorporation of larger, more diverse datasets to enhance performance.

Conflicts of Interest

The author declares no conflicts of interest.

Funding

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Acknowledgments

This research was conducted by an academic staff member at the Jimma Institute of Technology, Jimma University, Ethiopia. The author wishes to express sincere gratitude to the institute for its generous provision of resources, and to Jimma University for its invaluable support and guidance throughout the research process.

Open Research

Data Availability Statement

The data that support the findings of this study are openly available in GitHub at https://github.com/demekeendalie/Fine-tuning-bert-model-for-amharic-news-classification.