Customized Generative Adversarial Imitation Learning for Driving Behavior Modeling in Traffic Simulation

Abstract

Driving behavior modeling is a crucial yet challenging task in the development of traffic simulation systems. Advances in machine learning and data-driven vehicle trajectory extraction technologies have significantly accelerated research in this area. However, the performance of such models can be affected by numerous factors that existing methods often overlook, including the complexity of real-world road environments and driver characteristics. In this paper, we introduce a novel modeling approach, termed the customized generative adversarial imitation learning (Cus-GAIL) method, designed to capture these complex factors. Our approach incorporates a conditional imitation learning technique that uses prior traffic knowledge to train a reinforcement learning (RL) model. In addition, we develop a collision avoidance mechanism that markedly improves the reliability of microscopic traffic simulation. To address variations in driving styles, we also build a driver classifier. Moreover, we propose a method for synthesizing small-sample vehicle trajectory data to enhance the RL model’s ability to handle rare scenarios. By integrating these components, our model captures a wide range of external and internal factors. To validate the efficacy of the Cus-GAIL method, we employ an unmanned aerial vehicle (UAV) to monitor two road segments and gather video data of actual vehicle trajectories. The experimental results demonstrate that the Cus-GAIL method outperforms established baselines on both microscopic and macroscopic metrics.

1. Introduction

Driving behavior modeling is a foundational component in intelligent transportation systems, autonomous driving, and advanced driver-assistance systems (ADASs). With the rapid development of sensing technologies, vehicular networks (VANETs), and artificial intelligence, research in this field has gained significant momentum in recent years. By modeling drivers’ operational patterns, behavioral preferences, and responses to dynamic environments, such systems can enhance traffic safety, driving comfort, and energy efficiency [1].

Recent advancements in sensor technologies (e.g., LiDAR and vision systems) and large-scale naturalistic driving datasets (e.g., Waymo Open Motion and nuScenes) have enabled unprecedented granularity in capturing heterogeneous driving patterns, from routine car-following to complex negotiation behaviors in urban scenarios. The field has gained renewed importance with the realization that purely physics-based trajectory prediction models often fail in real-world interactions where human drivers combine traffic rules with social norms, a challenge highlighted by recent autonomous vehicle disengagement reports showing that 34% of incidents stem from incorrect behavior anticipation.

Cutting-edge research leverages graph neural networks (GNNs) [2] and transformer architectures [3] to model the latent cognitive processes behind lane-change decisions, while multiagent reinforcement learning (RL) frameworks [4] successfully replicate human driving styles in simulated environments. A multimodal sensor fusion approach for driving behavior recognition was proposed in [5], significantly improving the detection of anomalous behaviors in complex driving scenarios. Similarly, deep RL has been applied to model driving strategies, enabling more human-like and context-aware decision-making in autonomous vehicles [6]. Driving behavior models are also essential for developing high-fidelity driver profiling and for enabling predictive safety mechanisms such as early-warning systems. Particularly in an era where human drivers and autonomous systems must coexist, the ability to understand and anticipate human driving behavior plays a crucial role in ensuring safe and efficient human–machine interaction [7]. As a result, developing robust and interpretable models for driving behavior has become a critical research frontier in modern transportation technologies.

To model behaviors, trajectory prediction is an analogous task and it has been applied in many fields, including aircraft flight trajectory prediction in the aviation industry [8], short-term passenger trajectory prediction in subways [9], short-term ship trajectory prediction [10], long-term human trajectory prediction [11], and cyclist trajectory prediction [12]. These data-driven models can be trained on a variety of scenarios, allowing them to better capture changes in driving behavior, in contrast to traditional car-following and lane-changing models [13, 14].

With the advancements in machine learning and video-based vehicle trajectory extraction technology, unlike traditional car-following and lane-changing models, many existing data-driven methods can be used on multiple scenarios to better reflect driving behavior changes. However, on the one hand, when applied to driving behavior modeling, most existing models typically rely on large volumes of training data and use time-series deep learning techniques, such as recurrent neural networks (RNNs) and long short–term memory (LSTM) [15], to predict vehicle trajectories. On the other hand, the predictive performance of the data-driven method is also influenced by several internal and external factors, including the random behavior of surrounding vehicles, historical trajectory information, and the relative position of adjacent vehicles. Without incorporating the complex road environment and the various driving behaviors in different scenarios, these methods may struggle to achieve optimal performance.

To overcome the shortcomings of existing models, we follow the research line of using data-driven trajectory prediction models to develop simulation systems [16–18]. We focus on road traffic simulation and investigate how to predict vehicle trajectories more accurately, with the ultimate goal of improving driving behavior modeling.

Specifically, based on the classical generative adversarial imitation learning (GAIL) model [19], we propose a customized GAIL (Cus-GAIL) method for vehicle trajectory prediction. Within the Cus-GAIL framework, we introduce a set of customized measures to model complex driving-related factors. First, in terms of domain knowledge, we use a conditional imitation learning (CIL) approach to integrate prior traffic knowledge, which proved valuable in our experiments. Second, during the decision-making phase, we introduce a collision avoidance mechanism, which significantly improves the reliability of the microscopic traffic simulation. Third, to account for the diversity in driving styles, we incorporate a driver classification (DC) subtask to capture the variety of driving behaviors. Finally, to mitigate the issue of limited training data and improve the model’s understanding of rare scenarios, such as overtaking, we propose a small-sample vehicle trajectory data synthesis method.

To validate the effectiveness of our proposed Cus-GAIL method, we use an unmanned aerial vehicle (UAV) to monitor two road segments and collect video data on actual vehicle trajectories. Extensive experiments are conducted using both microscopic and macroscopic traffic simulation metrics. The results show that our approach outperforms strong baseline methods.

The main contributions of this paper are summarized as follows:

- We investigate the task of vehicle trajectory prediction for driving behavior modeling. Through data analysis, we highlight several external and internal real-world factors that influence performance, which are often overlooked by existing methods. These factors include prior traffic knowledge, collision avoidance mechanisms, driving behavior styles, and the augmentation of rare driving scenarios.
- We propose the Cus-GAIL method to address these factors. The proposed improvements include the following: (1) a CIL approach that leverages prior traffic knowledge, (2) a collision avoidance mechanism that enhances the reliability of microscopic traffic simulations, (3) a driver classifier to account for differences in driving styles, and (4) a data synthesis method to improve model performance on limited data.
- To validate the effectiveness of the Cus-GAIL method, we collect a vehicle trajectory dataset using UAV monitoring on two real-world road segments. We conduct extensive experiments using microscopic and macroscopic traffic simulation metrics, and the results show that our method outperforms competitive baseline approaches.

2. Related Work

In the field of microscopic traffic simulation, one of the main goals of modeling longitudinal vehicle movement in traffic flow, also known as car-following modeling, is to replicate driving trajectories. Although many models have been proposed to simulate traffic flow, most of them are based on idealized settings that are not sufficiently representative of real-world conditions. In this section, we first review the basics of imitation learning and then classify related work on driving behavior modeling into three categories: traditional imitation learning–based methods, neural network–based methods, and GNN-based methods.

2.1. Imitation Learning

This paper focuses on imitation learning, a machine learning approach in which an agent learns to perform a task by mimicking expert demonstrations. Traditionally, two main approaches are used for this setting: behavioral cloning [20], which learns a policy through supervised learning on state-action pairs from expert trajectories, and inverse RL (IRL) [21, 22], which seeks a cost function under which the expert is uniquely optimal. Although behavioral cloning is simple and appealing, it generally requires large amounts of data due to the compounding error caused by covariate shifts [23, 24]. IRL, on the other hand, learns a cost function that prioritizes entire trajectories, thus avoiding the compounding error common in methods that focus on single-timestep decisions. However, many IRL algorithms are computationally expensive, as they require RL in an inner loop. To overcome these drawbacks, a model-free imitation learning algorithm based on generative adversarial networks (GANs) has been proposed, called GAIL [19]. GAIL determines the policy through interaction with the environment, whereas behavioral cloning does not require such interaction. In addition, by using a generator and discriminator, GAIL is faster than IRL because it bypasses the intermediate IRL step. Extensions of GAIL have been proposed, such as InfoGAIL, which leverages mutual information [25], and PS-GAIL, which uses a parameter-sharing approach [26]. In this paper, we build on GAIL and propose a customized version (Cus-GAIL) tailored for vehicle trajectory prediction. In the following, we review various methods related to the vehicle trajectory prediction task.
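For reference, the saddle-point objective of the original GAIL formulation [19] can be written as below. This is the standard form, not the customized objective of this paper. Here $D$ is the discriminator, $\pi$ the learned policy, $\pi_E$ the expert policy, $H(\pi)$ the causal entropy of the policy, and $\lambda \ge 0$ an entropy weight. In this convention the discriminator assigns high values to generated state-action pairs.

```latex
\min_{\pi} \max_{D} \;
\mathbb{E}_{\pi}\left[\log D(s,a)\right]
+ \mathbb{E}_{\pi_E}\left[\log\left(1 - D(s,a)\right)\right]
- \lambda H(\pi)
```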

2.2. Methods for Vehicle Trajectory Prediction

2.2.1. Traditional Imitation Learning–Based Methods

To better understand the spatial interactions between vehicles and make the model more independent of specific environments, a dual learning model (DLM) was proposed for vehicle trajectory prediction. This model embeds an occupancy map (OM) and a traffic scene risk map (RM) [16]. However, learning the OM requires environment-specific data, meaning the model may not adapt well to new or different environments. Later, an attention mechanism was introduced that emphasizes the importance of neighboring vehicles and their future states [27]. This method extends beyond pairwise interactions between vehicles to simulate higher-order interactions. It also combines global and local attention to generate multiple possible trajectories for surrounding vehicles. However, this approach can increase model complexity due to the need for more parameters and intricate structures, which can lead to overfitting and reduced generalization performance. Real trajectory time-series data were used to train an interactive perception-based trajectory prediction system, aiming to explore the factors that most influence trajectory prediction. The analysis revealed that factors such as traffic density, secondary task engagement, driver gender, and age group further impact prediction accuracy [27].

2.2.2. Neural Network–Based Methods

With the development of neural networks, many models based on RNNs or LSTMs [15] have been proposed, utilizing more real-world data and experimental settings. For instance, to address potential gradient explosion issues in RNN-based vehicle trajectory prediction, an attention mechanism–based RNN model was introduced [7], incorporating network traffic state data into urban vehicle trajectory predictions. However, this method requires a large dataset to train the model and obtain accurate attention weights. A simple encoder–decoder architecture with multihead attention was proposed [28], enabling the model to generate predicted trajectory distributions for multiple vehicles in parallel. It can also learn to focus on influential vehicles in an unsupervised manner, improving network interpretability. However, due to the encoder–decoder architecture, this method may be prone to overfitting, which can affect its generalization ability when applied to new datasets. Xing et al. [29] proposed a joint time-series modeling method to predict the trajectories of preceding vehicles with different driving styles. This method uses limited intervehicle communication signals, such as the speed and acceleration of the preceding vehicle, to produce accurate, personalized predictions of its trajectory. However, changes in driving style, such as those caused by varying traffic conditions or driver behavior, may affect the prediction results. Rossi et al. [30] compared LSTM- and GAN-based deep learning models for trajectory prediction. Their proposed GAN-3 model can generate multiple predictions in multimodal scenarios. They found that while LSTM-based models perform well in single-modal scenarios, generative models are superior in multimodal scenarios. However, GAN models may suffer from mode collapse, where generated trajectories become too similar or repetitive, lacking diversity. To address issues of model interpretability, Lin et al. [31] proposed an LSTM-based model with a spatial–temporal attention mechanism (STA-LSTM) to explain the impact of historical trajectories and neighboring vehicles on the target vehicle. However, the STA-LSTM model is sensitive to abnormal data, and the presence of outliers in the input data may lead to incorrect predictions.

2.2.3. GNN-Based Methods

GNNs have shown effectiveness in various tasks, and researchers have applied this technique to trajectory prediction. The environment attention network (EA-Net) was proposed [18], which consists of a graph attention network (GAT) and a convolutional social pooling module with a squeeze-and-extraction mechanism (SE-CS) as the environmental feature extraction module, embedded in an LSTM encoder–decoder. This structure overcomes the limitations of dimensionality and structural issues when modeling interactions between vehicles and the surrounding environment, making the extracted feature information more comprehensive and effective. However, the SE-CS mechanism requires convolution and squeeze-and-extraction operations to be performed on each node, resulting in high computational costs and longer training and prediction times. A novel graph-based information sharing network (GISNet) was proposed [32], enabling information sharing between the target vehicle and surrounding vehicles. This model encodes the historical trajectory information of all vehicles in the scene. In addition, a graph-based spatiotemporal convolutional network (GSTCN) was proposed, combining graph convolutional networks (GCNs) for spatial interactions, convolutional neural networks (CNNs) for temporal features, and a gated recurrent unit (GRU) to generate spatiotemporal trajectory distributions. The model also uses a weighted adjacency matrix to describe the mutual influence between vehicles [33]. However, constructing the weighted adjacency matrix requires significant prior knowledge and experience, and improper analysis of the mutual influence between vehicles could compromise the accuracy of the matrix.

3. Methodology

GAIL is a classical RL method for imitation learning [19]. This paper aims to use vehicle trajectory data extracted from UAV videos to learn the driving behavior of real vehicles by modeling complex indicators of road environments and driving behaviors. Several customized measures for improving the GAIL algorithm (referred to as Cus-GAIL) are proposed, enhancing modeling performance, and enabling the method to control vehicle behavior in a traffic simulation environment. In the following subsections, we introduce the improvements made during the training of Cus-GAIL and the Cus-GAIL-based driving decision-making method.

3.1. Improvement Measures in Cus-GAIL

The proposed improvement measures in training Cus-GAIL are introduced from multiple perspectives, including the following: (1) CIL with prior knowledge, (2) DC, and (3) driving data synthesis methods. The first measure leverages more domain knowledge during model training, the second models the variety of driving styles, and the last focuses on data augmentation to enhance model training. These measures aim to model the complex real-world environment and capture various factors of driving behavior.

3.1.1. CIL With Prior Knowledge

Using end-to-end imitation learning to control behaviors such as acceleration, lane changes, and steering angles is effective in a single scenario, where a one-to-one mapping relationship is established. However, different driving scenarios and behavioral states require consideration of different features. For example, when driving through an intersection, the driver must consider not only the current traffic flow but also the traffic information from all four directions and the status of traffic signals. Without this information, the vehicle may base its decisions on the assumption of straight-line driving, leading to errors in subsequent traffic simulations.

In this paper, prior knowledge includes traffic signs and driving scene information. Traffic signs are categorized into the following types: (1) warning signs, which alert about potential dangers near the vehicle; (2) prohibition signs, which prohibit certain types of vehicles from performing specific actions; (3) directional signs, which indicate the direction and path for the vehicle to follow; and (4) guide signs, which provide information on the direction and distance to the destination. Driving scene information primarily consists of details about the driving context, including the current road type (e.g., urban expressways, ramps, lane changes, etc.). Both traffic signs and driving scene information are encoded into a unique feature vector, which is used to select different decision model branches. Specifically, when a branch is selected, only that branch is active, while the others remain frozen.
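The branch selection described above can be illustrated with a minimal sketch. The one-hot encoding, the category names, the action layout, and the `BranchedPolicy` class are all illustrative assumptions; the paper does not specify how the feature vector is built or how branches are implemented.

```python
from typing import Callable, Dict, Tuple

# Hypothetical category names: the paper lists sign and road types
# but does not give a concrete encoding.
SIGN_TYPES = ("none", "warning", "prohibition", "directional", "guide")
SCENE_TYPES = ("urban_expressway", "ramp", "lane_change")

Observation = Dict[str, float]
Action = Tuple[float, float]  # assumed layout: (acceleration, steering)
Branch = Callable[[Observation], Action]


def encode_condition(sign: str, scene: str) -> Tuple[int, ...]:
    """One-hot encode the sign type and scene type into one condition vector."""
    sign_vec = [1 if s == sign else 0 for s in SIGN_TYPES]
    scene_vec = [1 if s == scene else 0 for s in SCENE_TYPES]
    return tuple(sign_vec + scene_vec)


class BranchedPolicy:
    """Holds one decision branch per condition vector (illustrative only)."""

    def __init__(self) -> None:
        self.branches: Dict[Tuple[int, ...], Branch] = {}

    def register(self, sign: str, scene: str, branch: Branch) -> None:
        self.branches[encode_condition(sign, scene)] = branch

    def act(self, sign: str, scene: str, obs: Observation) -> Action:
        # Only the branch matching the condition is evaluated;
        # all other branches are left untouched.
        return self.branches[encode_condition(sign, scene)](obs)


policy = BranchedPolicy()
policy.register("none", "ramp", lambda obs: (-0.5, 0.0))             # placeholder branch
policy.register("none", "urban_expressway", lambda obs: (0.2, 0.0))  # placeholder branch
print(policy.act("none", "ramp", {"speed": 12.0}))
```

In a trainable version, each branch would be a separate network head, and gradients would be applied only to the selected head.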

3.1.2. Analysis of Driving Behavior Characteristics and DC

Driver characteristics are key indicators of driving behavior across various scenarios. For drivers of the same type, the features in their samples should exhibit consistency, while different driver types will show significant differences in driving characteristics. In real-world driving, numerous factors influence driver behavior, and there may be correlations between these features. Therefore, it is crucial to select the core features that most significantly affect the driving behavior.

Driving behavior can be categorized into two levels: microscopic and macroscopic. At the microscopic level, it includes acceleration, braking, and steering, as well as car-following and lane-changing behaviors. At the macroscopic level, it involves route selection.

- Lateral acceleration directly reflects a driver’s turning behavior: Aggressive drivers tend to drive at higher speeds, take larger turns, and generate higher lateral acceleration. They focus on completing lane changes quickly, without regard for surrounding traffic conditions. Conservative drivers, in contrast, tend to slow down when turning, observe the traffic conditions, and then change lanes. Ordinary drivers exhibit behavior that lies between these two extremes.
- Longitudinal acceleration reflects the rate of vehicle speed change: Aggressive drivers frequently accelerate and decelerate in pursuit of the fastest possible speed, while conservative drivers prefer to maintain a steady speed.
- Time headway (TH) and gap represent the safe distance maintained by a following vehicle: Aggressive drivers tend to have smaller headways due to their inclination to forcefully cut in. Conservative drivers maintain a larger following distance, driving at a slower speed, which results in a greater headway.
- Heading angle information indicates the vehicle’s stability during driving: Aggressive drivers, even at high speeds, make sharp steering adjustments when changing lanes, leading to larger values for the product of speed and heading angle. Conservative drivers, however, make smaller steering adjustments, resulting in a smaller product of speed and heading angle.
- Rate of change of heading angle can also reflect the time and speed at which a driver executes a lane change: Aggressive drivers typically exhibit a higher rate of change in heading angle, while conservative drivers display a smaller rate of change.
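As a rough illustration, the features listed above could be derived from a sampled trajectory as follows. The function names, units, and the kinematic approximation of lateral acceleration (speed times yaw rate) are assumptions made for this sketch, not the paper's feature pipeline.

```python
import math
from typing import List, Sequence


def heading_rate(headings: Sequence[float], dt: float) -> List[float]:
    """Finite-difference rate of change of heading angle (rad/s)."""
    return [(b - a) / dt for a, b in zip(headings, headings[1:])]


def speed_heading_product(speeds: Sequence[float],
                          headings: Sequence[float]) -> List[float]:
    """Product of speed and absolute heading angle at each time step.

    Assumes the heading is measured relative to the lane direction.
    """
    return [v * abs(h) for v, h in zip(speeds, headings)]


def lateral_acceleration(speed: float, yaw_rate: float) -> float:
    """Kinematic approximation: a_lat = v * yaw_rate (m/s^2)."""
    return speed * yaw_rate


def time_headway(gap_m: float, speed_mps: float) -> float:
    """Time headway in seconds: gap to the leader divided by own speed."""
    if speed_mps <= 0.0:
        return math.inf  # a stopped vehicle never closes the gap
    return gap_m / speed_mps


headings = [0.00, 0.02, 0.06]  # rad, sampled every 0.5 s
print(heading_rate(headings, dt=0.5))
print(time_headway(gap_m=20.0, speed_mps=10.0))
```

Larger heading rates and smaller headways would point toward the aggressive end of the spectrum described above.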

Based on this analysis, this paper develops a DC task to improve GAIL-based driving behavior modeling. Drivers are divided into three categories:

- Conservative drivers: These drivers maintain a safe distance from the vehicle in front of them and generally avoid overtaking, lane changing, or other aggressive behaviors. They demonstrate a strong sense of patience.
- Aggressive drivers: These drivers are prone to overtaking, lane changing, speeding, and cutting in, often significantly impacting other vehicles on the road. To avoid slow traffic, they may engage in risky behaviors, such as driving in the wrong direction, entering restricted areas, or running red lights, to save time.
- Normal drivers: These drivers exhibit average driving behaviors, neither overly cautious nor excessively aggressive.

3.1.3. Driving Synthesis Data (DSD)

In real-world driving trajectories, only a small number of vehicles exhibit behaviors such as lane changing, cutting in, and overtaking, but these actions can significantly impact the behavior of other vehicles in the environment. Figure 1 shows snapshots of violation driving behavior samples where vehicles enter restricted areas in real scenarios. Specifically, as shown in Figure 1(a), the black vehicle violates traffic rules by attempting to overtake the car in front from a restricted area while driving on the main road. Then, as shown in Figures 1(b) and 1(c), the vehicle enters the restricted area and completes the overtaking maneuver within 3 s. After overtaking, as shown in Figure 1(d), the vehicle returns to the original lane. This vehicle’s overtaking in the restricted area and its intention to enter the main road disrupt the normal flow of traffic for other vehicles on the main road and the ramp. Therefore, it is crucial to focus on modeling such behaviors in simulations.

To address this, the paper proposes a small-sample data augmentation method that generates trajectory data with similar properties for less frequent data samples, such as those involving violation behaviors.

Specifically, the small-sample driving data synthesis algorithm generates data from two perspectives: longitudinal and lateral. The longitudinal data synthesis method involves altering the state of the sample at a particular moment (such as changing the speed, acceleration, or turning angle) to simulate the subsequent trajectory behavior, without altering the overall trajectory type, as shown in Figure 2. The lateral data synthesis method generates longitudinal data for vehicles surrounding the sample trajectory without modifying the behavior or state of the sample trajectory itself, thereby changing the visual range information of the trajectory sample.
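A minimal sketch of the longitudinal synthesis idea follows: the speed at one time step is perturbed and the remainder of the trajectory is re-integrated. Reusing the original accelerations, the trapezoidal integration, and the parameter names (`k`, `speed_scale`, `dt`) are assumptions for illustration; the paper does not specify how the subsequent trajectory is simulated.

```python
from typing import List, Sequence, Tuple


def synthesize_longitudinal(
    positions: Sequence[float],
    speeds: Sequence[float],
    accels: Sequence[float],
    k: int,
    speed_scale: float,
    dt: float,
) -> Tuple[List[float], List[float]]:
    """Perturb the speed at step k and re-integrate the following steps.

    The original acceleration profile is reused, so the overall trajectory
    type (e.g., an overtaking maneuver) is preserved (assumption).
    """
    new_pos = list(positions[: k + 1])
    new_spd = list(speeds[:k]) + [speeds[k] * speed_scale]
    for t in range(k, len(positions) - 1):
        # Speeds are clamped at zero so the vehicle never reverses.
        v_next = max(0.0, new_spd[t] + accels[t] * dt)
        # Trapezoidal rule keeps position consistent with the clamped speed.
        x_next = new_pos[t] + 0.5 * (new_spd[t] + v_next) * dt
        new_spd.append(v_next)
        new_pos.append(x_next)
    return new_pos, new_spd


pos, spd = synthesize_longitudinal(
    positions=[0.0, 10.0, 20.0, 30.0],
    speeds=[10.0, 10.0, 10.0, 10.0],
    accels=[0.0, 0.0, 0.0, 0.0],
    k=1,
    speed_scale=1.2,
    dt=1.0,
)
print(pos, spd)
```

Calling the function with several values of `k` and `speed_scale` yields multiple variants of one rare sample.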

3.1.3.1. Synthesis Results for a Small-Sample Dataset

We generated small-sample data for rare behaviors such as overtaking, continuous lane changing, and illegal driving, which are infrequent in real-world data but have a significant impact on simulation outcomes. The generated results are shown in Table 1.

| Small-sample types | Real data sample size | Generated sample size |
|---|---|---|
| Overtaking | 244 | 1890 |
| Running red lights | 22 | 1420 |
| Cutting in | 410 | 1500 |
| Driving in the opposite direction | 3 | 100 |
| Driving into restricted areas | 144 | 1280 |

3.2. Cus-GAIL-Based Driving Decision-Making Method

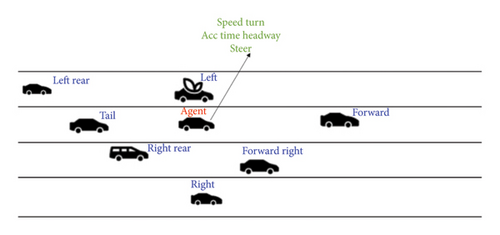

The training strategy for the driving model is based on the GAIL method. The input to the generator model consists of the road environment and driving features, while the output is a series of driving actions. The discriminator model evaluates whether the generated driving action data come from the generator or expert samples. A collision avoidance mechanism is introduced to enhance the RL model’s decision-making capability.

3.2.1. Generator That Integrates Temporal Information

3.2.2. Collision Avoidance Mechanism

As a microscopic traffic simulation runs, the observations encountered by simulated vehicles drift increasingly far from those covered by the expert data. Unfamiliar observations may lead vehicles to make unsafe driving decisions, causing simulation failures. In real-world driving data, collisions are rare, so dangerous driving states are seldom seen in expert data. When a simulated vehicle is on the edge of a collision, its state information therefore differs substantially from real-world data. Based on this observation, we propose a collision avoidance mechanism that uses an expert policy model to assess behavioral differences between the generator and the expert in the same state, and thereby determines whether the vehicle is in a state that could lead to a collision. The expert policy $\pi_{exp}$ is obtained using behavior cloning. At each moment in the simulation, the actions $a_{gen}$ and $a_{exp}$ produced by the policies $\pi_{gen}(x_t)$ and $\pi_{exp}(x_t)$ are compared under the observation state $x_t$. If the difference between $a_{gen}$ and $a_{exp}$ is large, the vehicle is considered to be in an unfamiliar state. In such cases, the vehicle searches the state library for the closest matching state and takes the corresponding action for that state.
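The decision rule of this mechanism can be sketched as follows. The Euclidean distance, the fixed `threshold`, and the representation of the state library as a list of (state, action) pairs are assumptions for illustration; the paper does not state the distance measure or the threshold value.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = Sequence[float]
Action = Sequence[float]
Policy = Callable[[State], Action]


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def safe_action(
    x_t: State,
    pi_gen: Policy,
    pi_exp: Policy,
    state_library: List[Tuple[State, Action]],
    threshold: float,
) -> Action:
    """Return the generator action unless it deviates too far from the expert.

    When the two actions disagree by more than `threshold` (hypothetical
    parameter), the state is treated as unfamiliar and the action stored
    with the nearest library state is used instead.
    """
    a_gen = pi_gen(x_t)
    a_exp = pi_exp(x_t)
    if l2(a_gen, a_exp) <= threshold:
        return a_gen
    _, nearest_action = min(state_library, key=lambda sa: l2(sa[0], x_t))
    return nearest_action


library = [((0.0, 0.0), (1.0,)), ((5.0, 5.0), (-2.0,))]
print(safe_action((4.0, 4.0), lambda x: (3.0,), lambda x: (0.0,), library, threshold=1.0))
```

A linear scan is used here for clarity; a large state library would likely need a spatial index for the nearest-state lookup.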

3.2.3. Discriminator Model and Training Process

During interaction with the environment, the traffic environment, surrounding vehicle, and vehicle state information are fed into the generator model. Based on prior knowledge, the appropriate model branch is selected, and the driving behavior is generated, producing a large number of state-action pairs $(S, A)$, denoted as $(S_G, A_G)$. Next, vehicle trajectories are extracted from UAV videos and processed to match the format of the generated data, i.e., state-action pairs with the same time and form as the generated data, forming expert data $(S_E, A_E)$. The expert data samples are labeled as real data, with labels as follows: conservative drivers are labeled 1, normal drivers 2, and aggressive drivers 3. The data generated by the generator are labeled as fake data with a label of 0. Both real and fake data are input into the discriminator model, which evaluates the data and computes a loss value. The generator is then updated based on this loss.
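The labeling scheme above turns the discriminator into a four-class classifier. The sketch below shows how a mixed batch might be assembled and scored; the use of softmax cross-entropy as the loss is an assumption, since the paper does not state the loss function, and the helper names are hypothetical.

```python
import math
from typing import Any, List, Sequence, Tuple

# Label values taken from the text: 0 = generated, 1-3 = expert driver classes.
FAKE, CONSERVATIVE, NORMAL, AGGRESSIVE = 0, 1, 2, 3


def build_batch(
    generated: Sequence[Any],
    expert: Sequence[Tuple[Any, int]],
) -> List[Tuple[Any, int]]:
    """Attach label 0 to generated pairs and keep the driver-class label of expert pairs."""
    batch = [(pair, FAKE) for pair in generated]
    batch += [(pair, label) for pair, label in expert]
    return batch


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Negative log-probability of the true class (assumed discriminator loss)."""
    return -math.log(softmax(logits)[label])


batch = build_batch(
    generated=[("s_g", "a_g")],
    expert=[(("s_e", "a_e"), AGGRESSIVE)],
)
print([label for _, label in batch])
print(cross_entropy([2.0, 0.1, 0.1, 0.3], FAKE))
```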

In the ongoing adversarial process between the discriminator and the generator, the generator progressively learns to produce data that closely resemble real data. For the expert data samples, the data points tend to cluster near the driving style’s center, while fewer points are far from the center. Targeted training is performed on the data points far from the clustering center using conditional imitation learning. In this study, we assign higher rewards to the fake data generated by the generator that is closer to the clustering center of the real samples.
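One simple way to realize a reward that grows as a generated sample approaches the cluster center of the real samples is an exponentially decaying bonus. The functional form, the `scale` parameter, and the feature representation below are assumptions chosen for illustration; the paper does not give the exact reward definition.

```python
import math
from typing import Sequence, Tuple


def centroid(points: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Mean of a set of feature vectors (the cluster center of one driving style)."""
    n = len(points)
    dim = len(points[0])
    return tuple(sum(p[i] for p in points) / n for i in range(dim))


def shaped_reward(
    base_reward: float,
    feature: Sequence[float],
    center: Sequence[float],
    scale: float = 1.0,
) -> float:
    """Add a bonus that decays with distance to the cluster center (assumed form)."""
    dist = math.sqrt(sum((f - c) ** 2 for f, c in zip(feature, center)))
    return base_reward + scale * math.exp(-dist)


center = centroid([(0.0, 0.0), (2.0, 2.0)])
print(shaped_reward(0.0, (1.0, 1.0), center))  # sample at the center
print(shaped_reward(0.0, (3.0, 3.0), center))  # sample farther away
```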

In summary, this paper applies the GAIL method, utilizing CIL based on driving scenarios. The collision prevention model reduces the likelihood of dangerous driving states, while the driving style classification method enhances the realism of simulations. The small-sample data augmentation method is used to simulate the impact of overtaking and other rare behaviors on traffic flow.

4. Experiment

4.1. Dataset

To validate the effectiveness of the proposed Cus-GAIL method, we collected a real-world dataset consisting of 2.4 h of video footage. The videos were recorded from two different road segments by a UAV flying at an altitude of 100–200 m, with a frame rate of 24 frames per second. The number of vehicles appearing in the videos ranges from 300 to 1200 per minute. We use the videos from one of the road segments as the training set, and those from the other segment as the test set.

For vehicle detection and tracking in the video stream, we use PP-YOLOE and BoT-SORT. The trajectories of each vehicle are extracted, and the required features for Cus-GAIL are calculated. The details of these features are shown in Table 2. To obtain the labels of driving behavior characteristics and DC, key video frames are extracted every 5 s, and human labelers annotate the behavior characteristics and driver class.

| Feature type | Features |
|---|---|
| Road level | Length of the road segment |
| | Number of lanes |
| | Lane line colors |
| | Lane line types |
| | Speed limit |
| | Lane changing positions |
| Vehicle level | Speed |
| | Average speed |
| | Acceleration |
| | Minimum stopping distance |
| | Headway |
| | Direction |
| | Counts of each driving behavior performed (following, lane changing, cutting in lane, entering restricted area, etc.) |
| Lane level | Traffic density |
| | Vehicle speed at each section |

4.2. Baselines and Training Setting

The main baselines for comparison are as follows: Behavior cloning is a straightforward imitation learning algorithm that learns a policy directly from expert demonstrations by using supervised learning on observation-action pairs. GAIL [19] is an effective imitation learning method that trains a generative policy model to replicate expert behavior, achieving this by jointly training a discriminator to distinguish between trajectories generated by the generator and expert trajectories. InfoGAIL [25] extends GAIL by adding a mutual information term between the latent variables and the observed state-action pairs. PS-GAIL [26] is another extension of GAIL, utilizing a parameter-sharing approach grounded in curriculum learning to enhance performance in multiagent scenarios.

We implement our models with the Adam optimizer [34], using β1 = 0.9, β2 = 0.999, and ε = 1e-8. The experiments are conducted on an Ubuntu 20.04 operating system, using OpenDRIVE V1.4, Python 3.7, and PyTorch 1.8. The hardware configuration includes an Intel(R) Core(TM) i9-10900K CPU, 128 GB of memory, a 2 TB mechanical hard disk, and ten NVIDIA GeForce RTX 2080 Ti graphics cards.
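To make the role of these hyperparameters concrete, a single scalar Adam update [34] can be written out as below. The learning rate `lr` is not reported in the text, so the value used here is a placeholder assumption.

```python
import math
from typing import Tuple


def adam_step(
    param: float,
    grad: float,
    m: float,
    v: float,
    t: int,
    lr: float = 1e-3,  # placeholder: the learning rate is not given in the paper
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
) -> Tuple[float, float, float]:
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)                # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


p, m, v = adam_step(param=0.0, grad=1.0, m=0.0, v=0.0, t=1)
print(p, m, v)
```

After bias correction, the first step moves the parameter by roughly `lr` regardless of the gradient magnitude.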

For the task of learning driving behavior, to evaluate whether the learning results align with those of real vehicles, we compare the positions and differences between the apprentice vehicle and the corresponding expert vehicle trajectories at each moment from a microscopic perspective. In addition, we assess the overall traffic indicators of the simulation from a macroscopic perspective.

4.3. Metrics

We evaluate the consistency between the results generated by the learning algorithm and the actual vehicle driving conditions from both microscopic and macroscopic perspectives.

From a macroscopic perspective, we evaluate the similarity between the simulated traffic flow and the actual traffic flow, as well as the safety of the simulated traffic flow, through metrics including task success rate (TSR), collision rate (CR), TH, and average flow speed (AFS).
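As a rough illustration of how such macroscopic indicators could be aggregated from per-vehicle simulation records, consider the sketch below. The record fields and the definitions used here are assumptions for illustration only: TSR is taken as the fraction of vehicles that complete their task, CR as the fraction involved in a collision, TH as the mean headway, and AFS as the mean speed. The `VehicleRecord` type and `macroscopic_metrics` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class VehicleRecord:
    """Hypothetical per-vehicle summary of one simulation run."""
    reached_goal: bool   # vehicle completed its task
    collided: bool       # vehicle was involved in a collision
    mean_headway: float  # average headway over the run
    mean_speed: float    # average speed over the run


def macroscopic_metrics(records: List[VehicleRecord]) -> Dict[str, float]:
    """Aggregate assumed definitions of TSR, CR, TH, and AFS."""
    n = len(records)
    if n == 0:
        raise ValueError("at least one vehicle record is required")
    return {
        "TSR": sum(r.reached_goal for r in records) / n,
        "CR": sum(r.collided for r in records) / n,
        "TH": sum(r.mean_headway for r in records) / n,
        "AFS": sum(r.mean_speed for r in records) / n,
    }


# Toy run: two successes, one collision, one vehicle that neither
# finished nor collided (so TSR and CR need not sum to 1).
records = [
    VehicleRecord(True, False, 2.0, 10.0),
    VehicleRecord(True, False, 3.0, 20.0),
    VehicleRecord(False, True, 1.0, 30.0),
    VehicleRecord(False, False, 2.0, 20.0),
]
metrics = macroscopic_metrics(records)
print(metrics)
```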

4.4. Experimental Results

4.4.1. Result from a Microscopic Perspective

As shown in Table 3, we compare our proposed Cus-GAIL with several well-performing baseline models from a microscopic perspective. Behavior cloning performs the worst because of error accumulation caused by covariate shift [23, 24]. As the simulation progresses, the difference between the driving state of the simulated vehicle and the real distribution gradually increases, causing the behavior cloning model to generate increasing errors, which further widens the gap in driving state distribution.

| Decision model | Training scenario RMSE | Training scenario MCE | Testing scenario RMSE | Testing scenario MCE |
|---|---|---|---|---|
| Behavior cloning | 0.456 | 0.191 | 0.788 | 0.291 |
| GAIL | 0.187 | 0.133 | 0.309 | 0.282 |
| InfoGAIL | 0.139 | 0.107 | 0.231 | 0.224 |
| PS-GAIL | 0.092 | 0.081 | 0.115 | 0.103 |
| Cus-GAIL (ours) | 0.052 | 0.032 | 0.091 | 0.073 |

GAIL mitigates the error accumulation problem inherent in behavior cloning and improves the smoothness of driving. As a result, GAIL significantly outperforms the behavior cloning model. InfoGAIL and PS-GAIL are both extensions of GAIL: InfoGAIL enhances GAIL's objective by incorporating mutual information between latent variables and observed state-action pairs, while PS-GAIL applies a parameter-sharing approach based on curriculum learning. Both models outperform GAIL.

The proposed Cus-GAIL improves upon the original GAIL by modeling the fact that drivers with different driving styles may exhibit different behaviors in the same state, through the introduction of driver category characteristics. Furthermore, Cus-GAIL incorporates prior knowledge via conditional imitation and generates samples of abnormal driving behaviors to augment the dataset. As a result, Cus-GAIL achieves the lowest RMSE and MCE compared to the baseline models.

4.4.2. Result from Macroscopic Perspective

As shown in Table 4, the performance of each model from a macroscopic perspective is consistent with the microscopic results. Because of the covariate shift problem, the behavior cloning model still generalizes poorly. GAIL and its extensions (InfoGAIL and PS-GAIL) effectively mitigate this issue, keeping the TH of these models within a safe range. In addition, the TSR and CR of these models are significantly better than those of the behavior cloning model. Nonetheless, the average speed of the simulated traffic flow generated by these models differs by more than 36% from the real traffic flow.

| Decision model | TSR | CR | TH | AFS |
|---|---|---|---|---|
| Behavior cloning | 0.253 | 0.652 | 0.594 | 28.587 |
| GAIL | 0.693 | 0.227 | 3.387 | 22.458 |
| InfoGAIL | 0.665 | 0.241 | 3.459 | 24.630 |
| PS-GAIL | 0.651 | 0.251 | 2.892 | 21.377 |
| Cus-GAIL (ours) | 0.847 | 0.088 | 2.471 | 17.461 |
| Oracle | 1.000 | 0.000 | 2.224 | 15.610 |
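The speed gap quoted for the GAIL family can be checked directly from the AFS column of Table 4. The short calculation below assumes that the Oracle row represents the real traffic flow and computes each model's relative deviation from it.

```python
# AFS values copied from Table 4; the Oracle row is assumed to be the
# real traffic flow against which the simulated flows are compared.
ORACLE_AFS = 15.610
afs = {
    "GAIL": 22.458,
    "InfoGAIL": 24.630,
    "PS-GAIL": 21.377,
    "Cus-GAIL": 17.461,
}

# Relative deviation of each model's average flow speed from the oracle.
deviation = {
    name: (value - ORACLE_AFS) / ORACLE_AFS for name, value in afs.items()
}

for name, dev in deviation.items():
    print(f"{name}: {dev:.1%}")
```

Under that assumption, the deviations come out to about 43.9% for GAIL, 57.8% for InfoGAIL, and 36.9% for PS-GAIL, compared with about 11.9% for Cus-GAIL.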

By incorporating driver category features, Cus-GAIL prevents collisions that would occur if conservative drivers performed aggressive maneuvers during the simulation, significantly reducing the CR of the simulated traffic flow and improving the TSR. Furthermore, Cus-GAIL introduces prior knowledge through conditional imitation, which integrates driving status and traffic signs, bringing the TH and AFS of the simulated traffic flow closer to those of the real traffic flow.

5. Analysis and Discussion

5.1. Ablation Experiments

We conduct a series of ablation experiments to evaluate the effectiveness of each proposed module. Starting with the base GAIL, we sequentially add our new modules, DC, CIL, and DSD, and compare the performance in both microscopic and macroscopic settings.

As shown in Table 5, after incorporating each module, both RMSE and MCE are reduced, indicating improved model performance. Specifically, the improvement after adding the DC module confirms the accuracy of DC and demonstrates that the driver behavior models align well with real data. The addition of conditional imitation leads to a significant performance boost, suggesting that the prior knowledge we introduced effectively guides the model to make correct driving decisions under current conditions. The improvement observed after using small-sample synthesis data highlights the significant impact of illegal driving behaviors on traffic flow, and our synthesis method effectively generates data for such behaviors. When all three modules are combined (i.e., our proposed Cus-GAIL), the RMSE and MCE for both the training and test sets reach their lowest values, resulting in the best overall performance.

| Decision model | Training scenario RMSE | Training scenario MCE | Testing scenario RMSE | Testing scenario MCE |
|---|---|---|---|---|
| GAIL | 0.187 | 0.133 | 0.309 | 0.282 |
| +DC | 0.167 | 0.108 | 0.273 | 0.201 |
| +DC + CIL | 0.062 | 0.041 | 0.098 | 0.079 |
| +DC + CIL + DSD (ours) | 0.052 | 0.032 | 0.091 | 0.073 |

The macroscopic results in Table 6 follow a similar trend. As the DC and conditional imitation learning modules are added, the TSR of the simulated traffic flow rises and the CR falls, while the TH and flow speed move closer to those of the real traffic flow. Notably, after adding DSD, the TSR and CR worsen slightly (the TSR drops from 0.851 to 0.847 and the CR rises from 0.086 to 0.088), but the headway and flow speed improve further, aligning more closely with the real traffic flow.

| Decision model | TSR | CR | TH | AFS |
|---|---|---|---|---|
| GAIL | 0.693 | 0.227 | 3.387 | 22.458 |
| +DC | 0.788 | 0.121 | 2.851 | 20.753 |
| +DC + CIL | 0.851 | 0.086 | 2.562 | 18.377 |
| +DC + CIL + DSD (ours) | 0.847 | 0.088 | 2.471 | 17.461 |
| Oracle | 1.000 | 0.000 | 2.224 | 15.610 |

5.2. Visualization Study of Driver Styles

5.2.1. Using K-Means to Cluster Driver Styles

The K-means algorithm is used to classify vehicles in the dataset into conservative, aggressive, and normal types based on features such as the mean and variance of lateral acceleration, the mean and variance of longitudinal acceleration, the mean and variance of headway, the rate of change of heading angle, and the product of heading angle and speed. Afterward, the clustering results are refined, and any obvious misclassifications are corrected. For each driver’s trajectory, the driver type is determined based on the majority clustering result corresponding to the driving style at each moment.
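The two-stage procedure above (cluster each moment, then take the majority label per trajectory) can be sketched as follows. This is a plain Lloyd-style K-means written with the standard library, not the authors' implementation. The two features per moment (an acceleration statistic and a headway), the sample values, the explicitly supplied initial centers, and the mapping of cluster indices to style names are all assumptions made for illustration, and the manual refinement step is omitted.

```python
from collections import Counter


def sq_dist(p, q):
    """Squared Euclidean distance between two feature tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, init_centers, max_iters=100):
    """Lloyd's algorithm; initial centers are passed in to stay deterministic."""
    centers = [tuple(c) for c in init_centers]
    k = len(centers)
    labels = []
    for _ in range(max_iters):
        # Assignment step: each moment goes to its nearest center.
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep the center of an empty cluster
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


def driver_style(moment_labels):
    """Majority vote over the per-moment cluster labels of one trajectory."""
    return Counter(moment_labels).most_common(1)[0][0]


# Hypothetical per-moment features: (acceleration statistic, headway).
trajectories = {
    "veh_a": [(0.2, 3.5), (0.3, 3.2), (0.25, 3.4), (0.8, 2.2)],
    "veh_b": [(1.8, 1.0), (2.0, 0.8), (1.9, 0.9), (0.9, 2.0), (0.7, 2.3)],
}
STYLE_NAMES = {0: "conservative", 1: "normal", 2: "aggressive"}  # assumed mapping

points = [p for moments in trajectories.values() for p in moments]
labels, centers = kmeans(points, init_centers=[(0.2, 3.5), (0.8, 2.2), (1.8, 1.0)])

styles, start = {}, 0
for vehicle_id, moments in trajectories.items():
    styles[vehicle_id] = driver_style(labels[start:start + len(moments)])
    start += len(moments)

print({vehicle_id: STYLE_NAMES[s] for vehicle_id, s in styles.items()})
```

In practice the features would normally be standardized before clustering, since K-means is sensitive to feature scale; that step is left out here to keep the sketch short.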

5.2.2. Visualization of Clustering Results

Clustering is performed using features such as vehicle speed, steering angle, headway, acceleration, distance to the left lane line, and number of lane changes to classify the driving state of each vehicle at each moment as conservative, aggressive, or normal. The most frequent driving state type within each trajectory is then identified and marked as the vehicle driver’s style. Figure 3 shows the clustering results of driving styles.

In addition, the clustering results of driving styles are visualized in actual driving environments, as shown in Figure 4. The blue car represents a conservative driver, who maintains a larger safety distance from the vehicle ahead. The white car represents an aggressive driver, who engages in behaviors such as cutting in to reach their destination faster. The green car represents a normal driver, whose driving behavior falls between the conservative and aggressive extremes.

5.3. Discussion

The experimental results above show that the behavior cloning method is simple and quick to implement in engineering applications. This study highlights the importance of experimental methods and feature selection in the early stages. However, because of compounding errors, the performance of behavior cloning is poor: as the simulation progresses, vehicle speeds increase and the distance between vehicles decreases, resulting in a collision rate of 65.2%. GAIL effectively alleviates the compounding error problem in microscopic traffic simulation, allowing vehicles to maintain a safe distance for longer periods. This improves the task success rate and reduces the mean square error of acceleration. However, a significant discrepancy remains between the simulated and real traffic flow.

Mode collapse occurs when the generator tends to produce the same data distribution, leading all vehicles to adopt similar driving behaviors. By enhancing the GAIL reward function, giving higher weights to data samples closer to the center of the driving style cluster, and using distinct behavior models to control vehicles, we improve the effectiveness of the microscopic traffic simulation. Compared with strong baselines such as behavior cloning, GAIL, InfoGAIL, and PS-GAIL, the key difference is that Cus-GAIL models more varied data and captures more information, which yields a significant improvement. The ablation experiments also show that each proposed component of Cus-GAIL, i.e., DC, CIL, and DSD, contributes positively to the final performance. For example, classifying driver styles effectively prevents all simulation vehicles from adopting the same strategy in similar scenarios, preserving the diversity of driving strategies for different driver types in real-world scenarios. Incorporating traffic rules and driving scenes as prior knowledge to guide the selection of CIL branches helps simulation vehicles better recognize the current driving environment, significantly reducing the CR and improving the alignment of simulation vehicle behavior with real vehicle behavior.
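One simple way to realize the idea of weighting samples by their closeness to a driving style cluster center is sketched below. The exponential kernel, the `temperature` parameter, the normalization to a mean weight of 1, and the function name `center_weights` are all assumptions chosen for illustration; the weighting actually used in the reward function may take a different form.

```python
import math


def center_weights(samples, center, temperature=1.0):
    """Weights that decay with distance from the style-cluster center.

    Assumption: an exponential kernel exp(-d / temperature), rescaled so the
    weights average to 1 and the overall reward scale is unchanged.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    raw = []
    for s in samples:
        distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(s, center)))
        raw.append(math.exp(-distance / temperature))
    mean_raw = sum(raw) / len(raw)
    return [w / mean_raw for w in raw]


# Hypothetical feature vectors: one at the cluster center, one 5 units away.
samples = [(0.0, 0.0), (3.0, 4.0)]
weights = center_weights(samples, center=(0.0, 0.0), temperature=5.0)
print([round(w, 4) for w in weights])
```

A larger `temperature` flattens the weights toward uniform, while a smaller one concentrates the weight on samples that are most typical of the style.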

Even a small number of illegal driving vehicles can impact the behavior of normal vehicles in traffic flow. To replicate the effect of illegal driving vehicles in microscopic traffic simulation, this study employs a small-sample driving data generation algorithm to produce a large volume of illegal driving data. This enables the model to fully learn the impact of such behaviors, further improving macroscopic indicators and achieving a microscopic traffic simulation that closely mirrors real-world driving conditions.

6. Conclusion

Driving behavior modeling plays a crucial role in the development of traffic simulation systems. To model driving behaviors accurately, it is essential to account for the complex factors of the road environment as well as the diverse driving styles of individuals. In this paper, we propose the Cus-GAIL method, which integrates various external and internal factors into a unified model. Specifically, we first develop a CIL approach to incorporate prior traffic knowledge. Second, we introduce a collision avoidance mechanism to enhance the reliability of the microscopic simulation process. In addition, we propose a DC model to address behavioral differences across driving styles. Finally, we introduce a vehicle trajectory data synthesis method to improve Cus-GAIL's perception capabilities, particularly in scenarios with limited data, such as overtaking situations. To evaluate the effectiveness of our method, we use a UAV to monitor two real-world road segments and collect vehicle trajectory data. Through extensive experiments, we demonstrate that all the proposed measures are effective on both the microscopic and macroscopic metrics typically used in traffic simulation.

Admittedly, the work presented in this paper still has room for improvement, including a relatively small testing dataset, limited real-world scenarios, a simple reward model and RL method, and limited factors considered in driving behavior modeling. Several promising research directions for the future include exploring more fine-grained and relevant factors that influence driving behavior, such as weather conditions and workday/holiday information. In addition, more powerful reward models that capture a broader range of information should be developed, as they are the key component of RL systems. Finally, leveraging cutting-edge large language models and advanced RL techniques may open new opportunities for enhancing driving behavior modeling.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the National Natural Science Foundation of China (Grant no. 72288101), the International Science and Technology Cooperation Program of the Shanghai Committee of Science and Technology, China (Grant no. 24170790602), and the 2024 special program of Artificial Intelligence Promotion on the Reform of Scientific Research Paradigms of the Shanghai Committee of Education, China.

Endnotes

1. https://www.dmv.ca.gov/portal/vehicle-industry-services/autonomous-vehicles/disengagement-reports/

2. https://github.com/PaddlePaddle/PaddleDetection

Open Research

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.