Fast Visual Tracking with Enhanced and Gradient-Guide Network

Abstract

Existing Siamese trackers formulate visual tracking as a cross-correlation operation between features extracted by two neural network branches. Although they have dominated the tracking field, this design causes two main problems. First, the adoption of deep architectures forces Siamese trackers to sacrifice speed for performance. Second, the template is fixed to the initial features; that is, the template cannot be updated in a timely manner, so performance depends entirely on the matching ability of the Siamese network. In this work, we propose a tracker called SiamMLG. First, we adopt the lightweight ResNet-34 as the backbone to improve tracking speed by reducing computational complexity. Then, to compensate for the performance loss caused by the lightweight backbone, we embed the SKNet attention module to filter out valueless features. Finally, we use a gradient-guided strategy to update the template in a timely manner. Extensive experiments on four large tracking datasets, VOT-2016, OTB100, GOT-10k, and UAV123, confirm that SiamMLG achieves a satisfactory balance between performance and efficiency: it scores 0.515 on GOT-10k while running at 55 frames per second, nearly 3.6 times the speed of the original SiamMask.

1. Introduction

Visual object tracking is a fundamental and crucial topic in computer vision. With its extensive range of applications [1, 2, 3, 4, 5, 6, 7, 8, 9, 10], it has become a highly sought-after research direction.

Visual tracking has recently evolved into three branches. The first relies on correlation filters [11, 12, 13, 14], and the second mainly uses strong deep architectures [15, 16]. Both have limitations: the first generalizes poorly across scenarios and objects, whereas the second cannot meet real-time requirements.

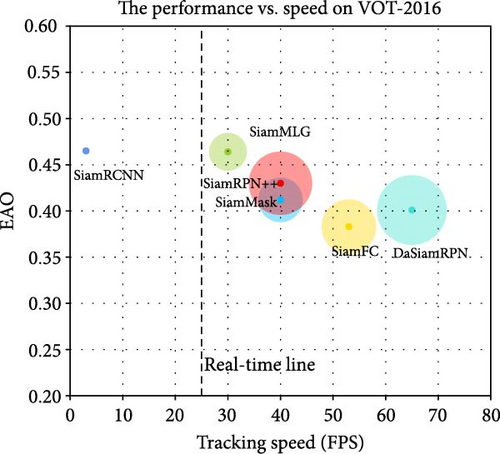

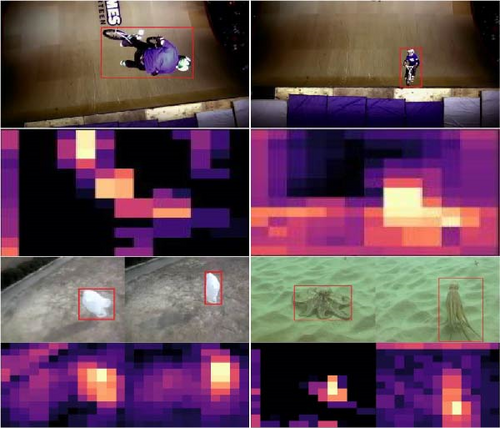

As the third branch, Siamese trackers [17, 18, 19, 20] have attracted great attention from the academic community owing to their simple structure and acceptable generalization capability. Yet these Siamese trackers still have two major shortcomings, which may widen the gap between academic approaches and industrial applications. First, ever since SiamRPN++ [21] overcame the limitation that deep neural networks [22] could not be used as the backbone, an increasing number of Siamese trackers have adopted deep neural networks to improve their performance while ignoring that the trackers are becoming heavy and expensive, as shown in Figure 1. Second, Siamese trackers are usually given the object in the initial frame of the video sequence and use it as the template for similarity matching in subsequent frames. Although this pattern has proven effective for tracking, it ignores the fact that the object may deform severely in later frames, as shown in Figure 2. The reason is that this template is the only one used during Siamese tracking; that is, the object manually selected in the initial frame serves as the template for the entire tracking process. Although some trackers [23, 24] have tried to address the first shortcoming, their simplistic structures cause performance to drop dramatically. Other methods [25, 26, 27] have tried to update templates through different mechanisms, but they focus only on combining previous object features while neglecting the discriminative information in the background clutter. These limitations leave open the main challenge of Siamese tracking, namely how to design an effective model that can update the template in a timely manner; they also cause Siamese trackers to struggle in complicated scenarios, leaving room for further improvement.

Motivated by these observations, we first adopt a modified, lightweight ResNet-34 [22] as the backbone; as a shallower counterpart of ResNet-50, it helps maintain tracking speed. However, precisely because ResNet-34 is a lightweight network, it may cause some performance drop. We therefore embed the selective kernel network (SKNet) [28] in ResNet-34 to compensate for this loss, giving the backbone channel-discriminative capability for its input features. Then, inspired by GradNet [29], we use the gradients in ResNet-34 to perform template updates. Specifically, we exploit the discriminative information in the gradients, obtained via feed-forward and backpropagation, to update the template. Because the gradients are computed from the final loss, which accounts for both positive and negative candidates, they not only carry discriminative information that reflects the object's deformation but also help distinguish the object from background clutter. Moreover, unlike classic hand-designed gradient-based trackers [30, 31], using the gradients in the backbone to update the template can improve speed by further reducing computation.

The main contributions of this work are summarized as follows:

- (1) We design a lightweight backbone based on ResNet-34 with an embedded attention mechanism to improve tracking speed.
- (2) Using the gradients in the designed backbone, we update the template of the proposed tracker promptly.
- (3) Extensive experiments on four popular datasets demonstrate that our proposed tracker achieves competitive results while running at 55 frames per second (FPS).

The rest of this paper is organized as follows: Section 2 briefly reviews related work, Section 3 describes our proposed tracker, Section 4 presents the experimental results and analysis, and Section 5 concludes the paper.

2. Related Works

2.1. Siamese Network-Based Trackers

Since SiamFC [18] introduced AlexNet [32] as the backbone, an increasing number of Siamese trackers, such as SiamRPN [33] and DaSiamRPN [23], have also used AlexNet as their backbone, which allows them to run at nearly 100 FPS. Yet the performance of these trackers still has room for improvement because their backbones are shallow. Noting this, SiamRPN++ [21] successfully introduced the deep neural network ResNet-50 [22] into visual object tracking, further improving performance. Since then, although the latest Siamese trackers [34, 35] tackle finer-grained issues, such as anchor-free designs [24, 36, 37], unsupervised learning [38], 3D object tracking [39, 40, 41], rotation [42], redetection [43], and nighttime tracking [44], most of them still employ deep architectures that fail to strike an acceptable balance between performance and speed. SiamSERPN [45] uses the lightweight MobileNet-v2 [46] as the backbone and designs a squeeze-and-excitation region proposal network to compensate for the performance loss, attempting to address both aspects; however, owing to its inherent structure, it still cannot update the template in a timely manner.

2.2. Model Updating in Visual Tracking

Timely template updating is essential for improving the performance and robustness of trackers. With this in mind, DSiam [25] proposes a fast transformation learning method that enables efficient online learning from historical frames. Later, FlowTrack [26] uses optical flow information to warp templates and aggregates them according to their weights. However, both methods update the template by combining templates, which not only incurs high computational complexity but also ignores the impact of background clutter, especially when handling large-scale video sequences. Although some gradient-descent-based trackers [30, 31] have emerged, they require multiple training iterations to capture appearance deformation, which makes them less effective and far from real-time. The later UpdateNet [47] proposes a compact method to update templates in a timely manner. Specifically, it uses the initial, accumulated, and current templates to estimate the optimal template for the next frame of the video. Although UpdateNet can be integrated into different Siamese trackers, such as SiamFC and DaSiamRPN, it requires prior knowledge for parameter tuning. In contrast to UpdateNet, our proposed tracker updates the template within an end-to-end network.

3. Proposed Tracker

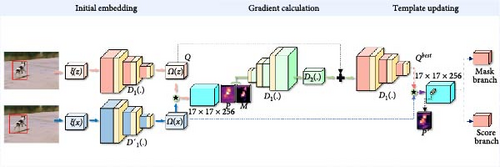

The structure of our proposed tracker is shown in Figure 3. The upper branch is the template branch, which takes historical frames as input, and the lower branch is the search branch, which takes current frames as input. Both branches use ResNet-34 embedded with SKNet [28]; SKNet has three stages (Split, Fuse, and Select), described in Section 3.3. The template updating strategy also consists of three stages (Initial Embedding, Gradient Calculation, and Template Updating), detailed in Section 3.4.

3.1. Basic Tracker

SiamMask [48] was the first tracker to integrate tracking and semantic segmentation. It has two versions, a three-branch (anchor-based) version and a two-branch (fully convolutional) version; we modify the latter and use the modified SiamMask as our basic tracker.

Although our modified basic tracker uses the lightweight, SKNet-embedded ResNet-34 as the backbone, its working pattern remains the same as that of the original two-branch SiamMask. Namely, the backbone first extracts the input features and then directly performs a cross-correlation operation to obtain the similarity map. The mask is then obtained by segmentation according to the scores in the similarity map. However, integrating the mask function with visual tracking poses two challenges to the basic tracker: it cannot strike an acceptable balance between performance and speed, and its performance declines rapidly in complicated scenarios. In short, the robustness of our basic tracker can still be improved, because it cannot update the template promptly.
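As a minimal sketch of the similarity-map step described above, the snippet below slides a template feature map over a search feature map and sums element-wise products at every offset (single channel, no padding). SiamMask-style trackers apply this operation per channel (depth-wise) on multi-channel features; the function names and toy inputs here are illustrative assumptions, not the authors' code.

```python
from typing import List, Tuple

Grid = List[List[float]]


def cross_correlate(template: Grid, search: Grid) -> Grid:
    """Valid (no-padding) 2D cross-correlation of one template channel over one search channel."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    score: Grid = []
    for i in range(sh - th + 1):
        row = []
        for j in range(sw - tw + 1):
            # Sum of element-wise products between the template and the search window at offset (i, j).
            row.append(sum(template[u][v] * search[i + u][j + v]
                           for u in range(th) for v in range(tw)))
        score.append(row)
    return score


def peak_location(score: Grid) -> Tuple[int, int]:
    """Return (row, col) of the highest response in the similarity map."""
    _, i, j = max((value, i, j) for i, row in enumerate(score) for j, value in enumerate(row))
    return i, j


if __name__ == "__main__":
    template = [[1.0, 0.0], [0.0, 1.0]]
    search = [[0.0] * 4 for _ in range(4)]
    search[2][1] = 1.0  # a diagonal pattern matching the template
    search[3][2] = 1.0
    print(peak_location(cross_correlate(template, search)))  # (2, 1)
```

The peak of the similarity map marks the most template-like location in the search region, which is where the tracker places the target.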

3.2. Siamese Lightweight Backbone

The backbone of our basic tracker consists of two identical ResNet-34 networks with shared parameters. Unlike the ResNet-34 used in other computer vision tasks, ours is modified: following SiamRPN++ [21], we reduce the total stride from 32 to 8 to increase the resolution of the features, which allows SiamMLG to locate small objects more accurately.
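To see why a smaller total stride helps with small objects, the sketch below tracks the side length of a feature map through a sequence of stride-2 stages, assuming "same"-style padding (ceiling division). A standard ResNet has five such stages (total stride 32); SiamRPN++ keeps the total stride at 8 by removing the downsampling in the last two stages and using dilated convolutions instead. Exact sizes in a real network depend on its padding and cropping choices, so these numbers are approximate.

```python
import math


def feature_map_side(input_side: int, stride2_stages: int) -> int:
    """Approximate feature-map side after `stride2_stages` stride-2 layers ('same' padding assumed)."""
    side = input_side
    for _ in range(stride2_stages):
        side = math.ceil(side / 2)
    return side


def total_stride(stride2_stages: int) -> int:
    """Input pixels between neighbouring cells of the output feature map."""
    return 2 ** stride2_stages


if __name__ == "__main__":
    for stages in (5, 3):  # original ResNet (stride 32) vs. modified backbone (stride 8)
        side = feature_map_side(255, stages)
        print(f"stride {total_stride(stages)}: 255x255 search region -> {side}x{side} map")
```

With a stride of 8, neighbouring score-map cells are 8 input pixels apart instead of 32, which gives the finer localization granularity the paragraph refers to.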

Moreover, we crop the historical images fed into the template branch. Specifically, we retain the central 7 × 7 region as the template feature and fill the remaining areas with the average RGB value, which captures the entire target region while substantially reducing the computational load [18].
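The two cropping operations mentioned above can be sketched in plain Python. `crop_with_mean_padding` cuts a square patch around a target centre and fills pixels that fall outside the image with the image's mean RGB colour (the SiamFC [18] convention); `center_crop` keeps the central 7 × 7 cells of a template feature map, as SiamRPN++ does for its 15 × 15 template features. The function names and the 15 × 15 input size are illustrative assumptions, not details confirmed for SiamMLG.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]
Grid = List[List[float]]


def mean_rgb(image: Image) -> Pixel:
    """Average colour over all pixels of the image."""
    n = len(image) * len(image[0])
    return tuple(sum(px[k] for row in image for px in row) / n for k in range(3))


def crop_with_mean_padding(image: Image, cy: int, cx: int, size: int) -> Image:
    """Square size x size crop centred at (cy, cx); out-of-image pixels take the mean RGB value."""
    h, w = len(image), len(image[0])
    fill = mean_rgb(image)
    top, left = cy - size // 2, cx - size // 2
    patch: Image = []
    for r in range(top, top + size):
        row = []
        for c in range(left, left + size):
            row.append(image[r][c] if 0 <= r < h and 0 <= c < w else fill)
        patch.append(row)
    return patch


def center_crop(feature: Grid, size: int = 7) -> Grid:
    """Keep the central size x size region of a 2D feature map (e.g. 15 x 15 -> 7 x 7)."""
    top = (len(feature) - size) // 2
    left = (len(feature[0]) - size) // 2
    return [row[left:left + size] for row in feature[top:top + size]]
```

Mean-colour padding avoids introducing artificial black borders when the target is near the image edge, and the central crop discards border cells of the template feature map whose values are dominated by padding.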

With a computational cost of only 3.6 × 10^9 FLOPs, ResNet-34 is more efficient than the deeper ResNet-50 (3.8 × 10^9 FLOPs) [22]. At the same time, because ResNet-34 is deeper than the shallow AlexNet [32] and ResNet-18, it can extract richer information. This balance between performance and efficiency makes ResNet-34 a suitable backbone for the proposed tracker.
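For perspective, the FLOP figures quoted above imply a fairly modest relative saving; the ratio below uses only those two numbers. Measured speed also depends on factors that FLOP counts do not capture, such as channel widths and memory access, so this ratio should not be read as a speed-up estimate.

$$
\frac{3.8 \times 10^{9} - 3.6 \times 10^{9}}{3.8 \times 10^{9}} = \frac{0.2}{3.8} \approx 5.3\%
$$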

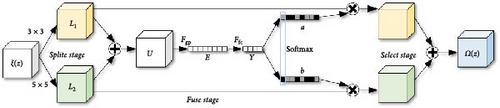

3.3. The Embedded Attention Mechanism

Attention mechanisms have been widely adopted in computer vision tasks, especially image classification, to let models adaptively focus on the valuable features among the rough input features. Inspired by this, we introduce SKNet, a branch attention mechanism, into tracking to compensate for the performance drop caused by using the lightweight ResNet-34 as the backbone. SKNet has three stages, Split, Fuse, and Select, and its structure is shown in Figure 4. Note that this subsection takes the template branch as an example.

3.3.1. Split Stage

The rough feature ξ(z) from the template branch is processed by two convolutions with kernel sizes 3 × 3 and 5 × 5, producing two copies denoted L1 and L2, respectively. For efficiency, the 5 × 5 kernel is implemented as a 3 × 3 dilated convolution [49] with dilation 2.
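The efficiency trick in the Split stage can be illustrated with a small, single-channel sketch: a 3 × 3 kernel with dilation 2 spans the same 5 × 5 window as a dense 5 × 5 kernel but uses 9 weights instead of 25. The zero padding keeps both outputs the same size as the input so that L1 and L2 can later be fused element-wise. The batch normalization, ReLU, and grouped convolutions used in SKNet are omitted, and the function names and toy data are assumptions for illustration, not the authors' implementation.

```python
from typing import List, Tuple

Grid = List[List[float]]


def effective_kernel_size(k: int, dilation: int) -> int:
    """Side length of the window covered by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


def conv2d_same(x: Grid, kernel: Grid, dilation: int = 1) -> Grid:
    """Single-channel 2D cross-correlation with zero padding that preserves the input size (odd k)."""
    k = len(kernel)
    pad = dilation * (k - 1) // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r, c = i - pad + u * dilation, j - pad + v * dilation
                    if 0 <= r < h and 0 <= c < w:  # positions outside the map act as zeros
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def split(x: Grid, k1: Grid, k2: Grid) -> Tuple[Grid, Grid]:
    """Split stage: L1 from a plain 3x3 kernel, L2 from a 3x3 kernel with dilation 2 (5x5 window)."""
    return conv2d_same(x, k1, dilation=1), conv2d_same(x, k2, dilation=2)
```

At the border, the dilated branch already aggregates pixels two steps away, which is how L2 contributes the larger receptive field without the cost of a dense 5 × 5 kernel.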

3.3.2. Fuse Stage
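As a minimal sketch of this stage, following the original SKNet formulation [28] (the exact layer sizes used in SiamMLG are assumed, not given): the two branch outputs are summed element-wise, global average pooling turns the sum into one statistic per channel, and a fully connected layer with ReLU compresses those statistics into a compact descriptor z. SKNet also applies batch normalization inside this layer, which the sketch omits; feature maps are plain nested lists indexed as channel × height × width.

```python
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # channels x height x width


def elementwise_sum(l1: FeatureMap, l2: FeatureMap) -> FeatureMap:
    """U = L1 + L2, computed channel by channel."""
    return [[[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(c1, c2)] for c1, c2 in zip(l1, l2)]


def global_avg_pool(u: FeatureMap) -> List[float]:
    """s_c: spatial average of channel c."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]


def fc_relu(weights: Matrix, s: List[float]) -> List[float]:
    """z = ReLU(W s), with W of shape d x C; SKNet's batch normalization is omitted here."""
    return [max(0.0, sum(w * x for w, x in zip(row, s))) for row in weights]


def sk_fuse(l1: FeatureMap, l2: FeatureMap, weights: Matrix) -> List[float]:
    """Fuse stage: sum the branches, pool globally, then compress to the compact descriptor z."""
    return fc_relu(weights, global_avg_pool(elementwise_sum(l1, l2)))
```

Because z is computed from both branches together, it summarizes which receptive field is informative for the current input; the Select stage turns it into per-channel branch weights.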

3.3.3. Select Stage

Compared with SENet [50], the SKNet we adopt has fewer parameters and leaves the original structure of the backbone network intact. Therefore, adopting SKNet as a feature filter has the potential to enhance the tracker's performance significantly.
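The Select stage can likewise be sketched under the original SKNet formulation [28]. For every channel, two learned rows (here `a_rows` and `b_rows`, assumed names) map the compact descriptor z to one logit per branch, a softmax across the two branches turns the logits into attention weights that sum to 1, and the output is the weighted sum of L1 and L2. The weights and toy feature maps are illustrative assumptions.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # channels x height x width


def branch_weights(z: List[float], a_rows: Matrix, b_rows: Matrix) -> List[Tuple[float, float]]:
    """Per-channel softmax over the two branches; each returned pair sums to 1."""
    weights = []
    for a_row, b_row in zip(a_rows, b_rows):
        logit_a = sum(w * x for w, x in zip(a_row, z))
        logit_b = sum(w * x for w, x in zip(b_row, z))
        m = max(logit_a, logit_b)  # subtract the max for numerical stability
        ea, eb = math.exp(logit_a - m), math.exp(logit_b - m)
        weights.append((ea / (ea + eb), eb / (ea + eb)))
    return weights


def sk_select(l1: FeatureMap, l2: FeatureMap, z: List[float],
              a_rows: Matrix, b_rows: Matrix) -> FeatureMap:
    """Select stage: V_c = a_c * L1_c + b_c * L2_c for every channel c."""
    out: FeatureMap = []
    for (a, b), c1, c2 in zip(branch_weights(z, a_rows, b_rows), l1, l2):
        out.append([[a * x + b * y for x, y in zip(r1, r2)] for r1, r2 in zip(c1, c2)])
    return out
```

Because the two weights of each channel compete through the softmax, channels whose small-kernel response is more useful lean toward L1, and the others lean toward the dilated branch L2; this is the "filtering" role the text attributes to SKNet.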

3.4. Gradient-Guide Strategy

Of the two ResNet-34 networks that serve as the backbone of our tracker, we apply the gradient-guided strategy to the template branch. By exploiting gradient information, our method updates the template in a timely manner and improves performance. Like the SKNet described in Section 3.3, the gradient-guided strategy consists of three stages: Initial Embedding, Gradient Calculation, and Template Updating.

3.4.1. Initial Embedding Stage

3.4.2. Gradient Calculation Stage

Finally, the updated template features U(z) are passed to the next stage to generate the optimal template.

3.4.3. Template Updating Stage

Specifically, in the first stage, SiamMLG convolves the refined features to generate a score map P. In the second stage, SiamMLG uses the score map P and the training label M to compute the initial loss l, which is then backpropagated to the network D2 to obtain the gradient information G; G is combined with the refined template features Ω(z) to generate the updated template features U(z). In the last stage, the updated template features U(z) are fed into the template branch to obtain the optimal template Qbest, and the final similarity map P′ is obtained by convolving Qbest with the refined search region features Ω(x). By repeating this process, SiamMLG updates the template in a timely manner throughout tracking.
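The three stages can be illustrated with a deliberately simplified sketch. It treats the template as a single feature vector, scores each search candidate by an inner product (a stand-in for the convolution that produces P), computes a logistic loss against ±1 labels (a stand-in for M), and backpropagates it analytically to the template. SiamMLG passes this gradient through a learned network; here a single fixed gradient-descent step replaces that learned mapping, purely for illustration. Because the loss involves both positive and negative candidates, the gradient pushes the template toward the target and away from clutter.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def score_map(template: Vector, candidates: List[Vector]) -> Vector:
    """Stand-in for P: one similarity score per search candidate."""
    return [dot(template, x) for x in candidates]


def logistic_loss(template: Vector, candidates: List[Vector], labels: List[int]) -> float:
    """Mean logistic loss log(1 + exp(-y * p)) with labels y in {+1, -1}."""
    scores = score_map(template, candidates)
    return sum(math.log1p(math.exp(-y * p)) for p, y in zip(scores, labels)) / len(labels)


def loss_gradient(template: Vector, candidates: List[Vector], labels: List[int]) -> Vector:
    """Analytic gradient of the mean logistic loss with respect to the template."""
    grad = [0.0] * len(template)
    for x, y in zip(candidates, labels):
        coeff = -y / (1.0 + math.exp(y * dot(template, x)))  # equals -y * sigmoid(-y * p)
        for k, xk in enumerate(x):
            grad[k] += coeff * xk / len(labels)
    return grad


def update_template(template: Vector, grad: Vector, step: float = 1.0) -> Vector:
    """Fixed gradient step: a hypothetical stand-in for SiamMLG's learned update."""
    return [t - step * g for t, g in zip(template, grad)]


if __name__ == "__main__":
    template = [0.0, 0.0]                  # initial template features
    candidates = [[1.0, 0.0], [0.0, 1.0]]  # target-like and clutter-like search features
    labels = [1, -1]
    updated = update_template(template, loss_gradient(template, candidates, labels))
    print(updated, score_map(updated, candidates))  # [0.25, -0.25] [0.25, -0.25]
```

After one update the target candidate scores higher and the clutter candidate lower, and the loss drops, which is the behaviour the gradient-guided strategy relies on.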

Compared with UpdateNet [47], our tracker updates the template using backpropagated gradient information, which reduces time consumption. Moreover, unlike GradNet [29], which is built on the early SiamFC, SiamMLG is based on SiamMask, which offers more competitive performance and robustness and makes our tracker more stable in complex scenarios.

4. Experiments and Analysis

Our comparison experiments are conducted on four large, mainstream datasets: VOT-2016 [51], OTB100 [52], UAV123 [53], and GOT-10k [54]. In addition, we conduct ablation experiments on the UAV123 dataset, using SiamFC++ as the baseline tracker for comparison. Before presenting the results and analysis, we describe the training datasets and the implementation details of our experiments in Section 4.1 and Section 4.2, respectively.

4.1. Training Dataset

We initialize the tracker with a pretrained backbone to accelerate convergence and further improve generalization to general objects. Specifically, the ResNet-34 backbone is trained on several large datasets, including ImageNet-DET [55], ImageNet-VID [56], LaSOT [57], and the GOT-10k training set. In both training and testing, we use single-scale images of 127 × 127 pixels for template patches and 255 × 255 pixels for search regions.
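Patch sizes of 127 and 255 pixels are usually paired with a context-aware cropping rule. The sketch below follows the convention popularized by SiamFC and used in SiamRPN++-style code, where half of the box perimeter, context × (w + h) with context = 0.5, is added to each box dimension; we assume, without confirmation from the text, that SiamMLG uses the same rule. It returns the side lengths, in original-image pixels, of the square regions that are then resized to 127 × 127 and 255 × 255.

```python
import math
from typing import Tuple


def crop_sides(w: float, h: float, exemplar: int = 127, instance: int = 255,
               context: float = 0.5) -> Tuple[float, float]:
    """Template and search crop sides for a w x h target box (context rule is an assumption)."""
    pad = context * (w + h)                  # context margin added to each box dimension
    s_z = math.sqrt((w + pad) * (h + pad))   # template crop side, resized to exemplar x exemplar
    s_x = s_z * instance / exemplar          # search crop side, resized to instance x instance
    return s_z, s_x


if __name__ == "__main__":
    print(crop_sides(40, 40))
```

For a 40 × 40 target, the template crop is 80 pixels on a side and the search crop is about 160.6 pixels; both are then resized to the fixed input sizes, so the target occupies a similar scale in every template patch.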

4.2. Implementation Details

4.2.1. Computer Configuration

Our SiamMLG is implemented on a PC with the following configuration: dual Intel Xeon(R) E5-2667 v3 CPUs, an NVIDIA RTX 2080 Ti GPU, 32 GB of RAM, and Ubuntu 18.04 LTS.

4.2.2. Operation Settings

The proposed tracker is trained with the SGD optimizer for a total of 70 epochs. The learning rate is set to 0.001 for the first 20 epochs using a warm-up mechanism and then decays to 0.0005 for the remaining epochs. The batch size is 64, the weight decay is 0.0005, and the momentum is 0.9.
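The optimizer settings above can be written down as a small sketch. The text does not say whether the warm-up ramps the learning rate or holds it constant, so the schedule assumes a piecewise-constant reading (0.001 for epochs 0 to 19, then 0.0005). The update step uses the momentum-plus-weight-decay form found in common SGD implementations such as PyTorch's `torch.optim.SGD` (without dampening or Nesterov momentum). Function names are hypothetical.

```python
from typing import Tuple


def learning_rate(epoch: int, total_epochs: int = 70, warmup_epochs: int = 20,
                  warmup_lr: float = 1e-3, later_lr: float = 5e-4) -> float:
    """Piecewise-constant reading of the schedule (assumption): 0.001 for epochs 0-19, then 0.0005."""
    if not 0 <= epoch < total_epochs:
        raise ValueError(f"epoch must be in [0, {total_epochs})")
    return warmup_lr if epoch < warmup_epochs else later_lr


def sgd_momentum_step(w: float, grad: float, velocity: float, lr: float,
                      momentum: float = 0.9, weight_decay: float = 5e-4) -> Tuple[float, float]:
    """One SGD update with L2 weight decay and momentum for a scalar parameter."""
    d = grad + weight_decay * w          # add the weight-decay term to the gradient
    velocity = momentum * velocity + d   # accumulate momentum
    return w - lr * velocity, velocity
```

In a real training loop the same update is applied element-wise to every parameter, with one velocity buffer per parameter and a batch of 64 samples producing each gradient.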

4.3. Comparison Experiments

4.3.1. VOT-2016 Dataset

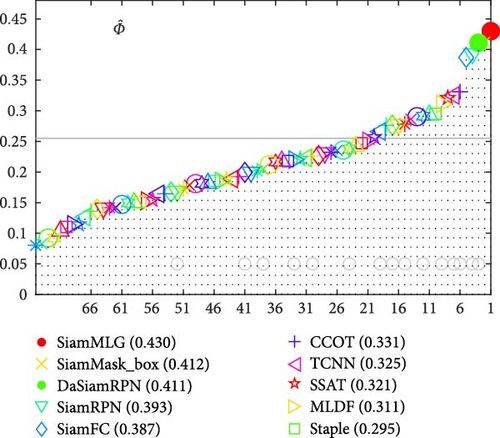

We evaluate the proposed tracker on the VOT-2016 dataset and compare it with several mainstream trackers, including the Siamese trackers SiamRPN [33], DaSiamRPN [23], and SiamMask [48]. The VOT-2016 dataset contains 60 video sequences with many complex scenarios and object deformations. Its evaluation protocol uses Accuracy, Robustness, and Expected Average Overlap (EAO). Robustness is based on the failure rate, so a higher value indicates a less stable tracker; EAO, which combines Accuracy and Robustness, is the primary performance metric. The comparison results on VOT-2016 are shown in Table 1 and Figure 5.

| Trackers | MLDF | SSAT | TCNN | CCOT | SiamFC | SiamRPN | DaSiamRPN | SiamMask | SiamMLG |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.490 | 0.577 | 0.554 | 0.539 | 0.568 | 0.618 | 0.612 | 0.623 | 0.632 |
| Robustness | 0.233 | 0.291 | 0.268 | 0.238 | 0.262 | 0.238 | 0.221 | 0.233 | 0.182 |
| EAO | 0.311 | 0.321 | 0.325 | 0.331 | 0.387 | 0.393 | 0.411 | 0.412 | 0.430 |

- The best two results are highlighted in italics and bold fonts.

In the results, our proposed tracker achieves the best EAO (0.430) and Accuracy (0.632), exceeding SiamMask by 0.018 and 0.009, respectively (relative gains of about 4.4% and 1.4%). The main reason is that our tracker, built on SiamMask, embeds SKNet to filter out valueless features and adopts the gradient-guided strategy to update the template in a timely manner. Although our tracker uses ResNet-34 as its backbone, the embedded SKNet and the gradient-guided strategy still enhance its performance. It is also worth noting that our tracker is the most robust owing to its timely template updates, reducing the failure rate of SiamMask by about 22%.
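Because gains over a baseline are easiest to compare as relative percentages, a small helper can recompute them directly from the Table 1 entries. The snippet is an illustrative check, not part of the tracker; note that Robustness is a failure rate, so a lower value is better.

```python
def relative_change(baseline: float, value: float) -> float:
    """Relative change of `value` with respect to `baseline` (positive means larger)."""
    return (value - baseline) / baseline


# VOT-2016 scores copied from Table 1.
SIAMMASK = {"EAO": 0.412, "Accuracy": 0.623, "Robustness": 0.233}
SIAMMLG = {"EAO": 0.430, "Accuracy": 0.632, "Robustness": 0.182}

if __name__ == "__main__":
    for metric in ("EAO", "Accuracy"):
        gain = relative_change(SIAMMASK[metric], SIAMMLG[metric]) * 100
        print(f"{metric}: {gain:+.1f}% relative to SiamMask")
    # Robustness is a failure rate, so a negative change is an improvement.
    drop = -relative_change(SIAMMASK["Robustness"], SIAMMLG["Robustness"]) * 100
    print(f"Robustness: failure rate reduced by {drop:.1f}%")
```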

4.3.2. OTB100 Dataset

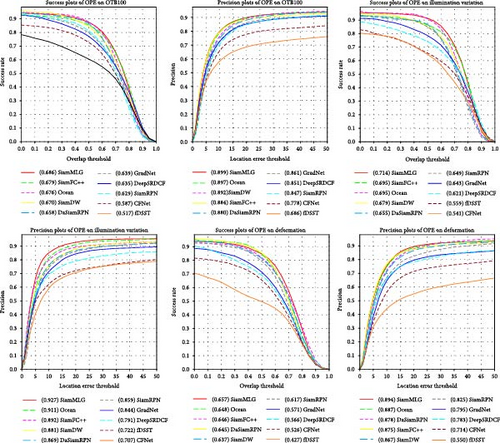

The OTB100 dataset contains 100 video sequences with various challenging factors. It was first presented as OTB50 in 2013 and was extended with 50 additional video sequences in 2015. OTB100 is evaluated with success plots and precision plots; trackers are ranked by the area under the curve (AUC) of the success plot and by the precision at a 20-pixel location-error threshold in the precision plot. We compare our proposed tracker with several trackers, including Ocean [58] and SiamFC++ [24]; the results are shown in Figure 6.
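To make the two OTB plots concrete, the sketch below computes a success-plot AUC and a precision score from per-frame results, assuming the common OTB toolkit conventions: overlap thresholds from 0 to 1 in steps of 0.05, a frame counting as a success when its overlap exceeds the threshold, and precision measured as the fraction of frames whose centre location error is at most 20 pixels. Boxes are (x, y, w, h) tuples, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def center_error(pred: Box, gt: Box) -> float:
    """Euclidean distance between box centres, in pixels."""
    px, py = pred[0] + pred[2] / 2, pred[1] + pred[3] / 2
    gx, gy = gt[0] + gt[2] / 2, gt[1] + gt[3] / 2
    return math.hypot(px - gx, py - gy)


def success_auc(overlaps: List[float]) -> float:
    """Mean success rate over thresholds 0, 0.05, ..., 1 (area under the success plot)."""
    thresholds = [i / 20 for i in range(21)]
    rates = [sum(1 for o in overlaps if o > t) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


def precision_at(errors: List[float], pixels: float = 20.0) -> float:
    """Fraction of frames whose centre location error is at most `pixels`."""
    return sum(1 for e in errors if e <= pixels) / len(errors)
```

Averaging the success rate over evenly spaced thresholds is what makes the AUC summarize the whole success curve rather than a single operating point.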

In both the success and precision plots, our SiamMLG achieves the best scores. Compared with SiamFC++, our tracker scores 1.1% and 1.7% higher in the success and precision plots, respectively. Although our tracker and SiamFC++ are both lightweight, our tracker performs better because SKNet filters out the useless features fed into the backbone. Compared with GradNet [29], which also updates the template using a gradient-guided strategy, our tracker achieves improvements of 6.9% in success and 4.3% in precision. The reason is that our tracker uses the modified SiamMask with a ResNet-34 backbone as its base tracker, whereas GradNet builds on the earlier SiamFC. In addition, compared with other Siamese trackers such as SiamRPN and DaSiamRPN, which rely on the template from the initial frame for similarity matching, SiamMLG copes better with the challenging factors in the OTB100 dataset.

4.3.3. GOT-10k Dataset

The GOT-10k dataset was proposed by the Chinese Academy of Sciences (CAS) in 2018. It contains a large and highly diverse set of objects in the wild, with over 10,000 video segments of real-world moving objects. To ensure a fair evaluation protocol, GOT-10k provides separate training and test sets with no overlap between their object classes. Furthermore, trackers must upload their results on the test set to the official website, which computes the performance scores through official evaluation. The official metrics are average overlap (AO), success rate (SR), and FPS. AO is the average overlap between all predicted bounding boxes and the ground-truth boxes; SR0.5 is the fraction of frames whose overlap exceeds 0.5, and SR0.75 is the fraction whose overlap exceeds 0.75.
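The GOT-10k quantities defined above reduce to a few lines once the per-frame overlaps (intersection over union, IoU) are known. The sketch below, with hypothetical function names and (x, y, w, h) boxes, computes IoU, AO, and SR for a single sequence; the official mAO and mSR reported in Table 2 additionally average per object class, which is omitted here.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def average_overlap(overlaps: List[float]) -> float:
    """AO: mean overlap over all frames."""
    return sum(overlaps) / len(overlaps)


def success_rate(overlaps: List[float], threshold: float) -> float:
    """SR at a threshold: fraction of frames whose overlap exceeds it (e.g. 0.5 or 0.75)."""
    return sum(1 for o in overlaps if o > threshold) / len(overlaps)
```

For example, per-frame overlaps of 1.0, 0.6, 0.3, and 0.0 give an AO of 0.475, an SR0.5 of 0.5, and an SR0.75 of 0.25.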

We compare SiamMLG with several trackers, including CGACD, DaSiamRPN, and SiamMask; the results are shown in Table 2. Compared with the original SiamMask, which uses the deep ResNet-50 architecture, our tracker scores slightly lower in SR0.75 but outperforms it in all other metrics. In particular, in terms of speed (FPS), our tracker is approximately 3.6 times faster than the deep SiamMask. The main reason is that our tracker uses the lightweight ResNet-34 as the backbone to reduce the number of parameters and improves performance by embedding SKNet to filter out useless features. Although DaSiamRPN runs about 2.4 times faster than ours, its performance is considerably lower. Our tracker, like the original SiamMask, is slightly behind CGACD in the SR0.5 metric, but it is substantially faster than CGACD. We also compare with the state-of-the-art tracker SiamRCNN [43], a two-stage tracker. It outperforms ours because it can re-detect the object and model its trajectory while tracking. Yet, precisely because SiamRCNN is a two-stage tracker, its speed is weighed down by its heavy model, reaching only 2.79 FPS, as shown in Table 2. In comparison, our tracker runs at around 55 FPS, nearly 20 times faster than SiamRCNN, because it adopts the lighter ResNet-34 as the backbone, which makes our method more suitable for real-time use. Within an acceptable range of performance loss, the comparison experiments on GOT-10k show that our tracker strikes a satisfactory balance between performance and speed.
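The speed comparisons for GOT-10k follow directly from the FPS row of Table 2; the ratios against SiamMask, DaSiamRPN, and SiamRCNN, together with the per-frame latency of SiamMLG, are:

$$
\frac{55.63}{15.37} \approx 3.6, \qquad \frac{134.40}{55.63} \approx 2.4, \qquad \frac{55.63}{2.79} \approx 19.9, \qquad \frac{1000\ \text{ms}}{55.63} \approx 18.0\ \text{ms per frame}
$$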

| Trackers | MDNet | DaSiamRPN | THOR | SiamRPN | CGACD | SiamMask | SiamMLG | SiamRCNN |
|---|---|---|---|---|---|---|---|---|
| mAO | 0.299 | 0.444 | 0.447 | 0.463 | 0.511 | 0.514 | 0.515 | 0.649 |
| mSR0.5 | 0.303 | 0.536 | 0.538 | 0.549 | 0.612 | 0.587 | 0.604 | 0.728 |
| mSR0.75 | 0.100 | 0.220 | 0.204 | 0.253 | 0.323 | 0.366 | 0.352 | 0.597 |
| FPS | 1.52 | 134.40 | 1.00 | 74.46 | 37.73 | 15.37 | 55.63 | 2.79 |

- The top 2 results are highlighted in italics and boldface fonts.

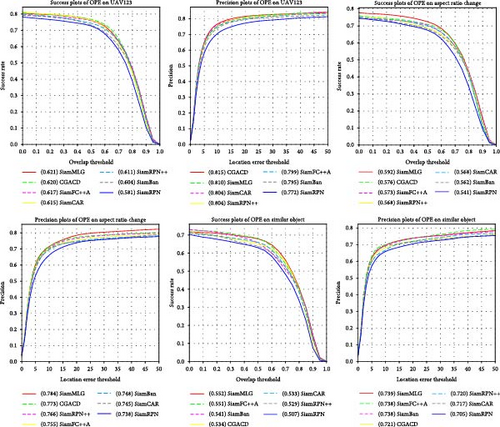

4.3.4. UAV123 Dataset

The UAV123 dataset contains 123 video sequences captured by UAVs from high altitudes, with over 110k frames. It is mainly characterized by challenging factors such as small objects and occlusion, which make it difficult for the trackers under test. All videos are fully annotated with upright bounding boxes. UAV123 uses the same evaluation metrics as OTB100, namely success plots and precision plots.

We compare SiamMLG with several mainstream trackers; the results are shown in Figure 7. The proposed tracker ranks first in the success plots with a score of 0.621 and second in the precision plots with a score of 0.810. Compared with SiamFC++, a recent tracker that adopts shallow neural networks as the backbone, our tracker improves by 0.7% in the success plots and 1.4% in the precision plots. The main reason is that SiamMLG's SKNet-embedded ResNet-34 backbone is deeper than the shallow AlexNet and adaptively discriminates among the channels of the rough features. In addition, compared with the two-stage tracker CGACD, SiamMLG does not perform as well in the precision plots but still takes the lead in specific challenging scenarios, suggesting that the template updating strategy makes our tracker more robust.

4.4. Ablation Study

We conduct an ablation study on the UAV123 dataset, designing multiple variants of the proposed tracker to investigate the contribution of each component. The results are presented in Table 3.

| Tracker | Configuration | Success | ΔSuc (%) | Precision | ΔPre (%) |
|---|---|---|---|---|---|
| SiamFC++ | — | 0.617 | — | 0.799 | — |
| Variant 1 | ResNet-34 backbone only | 0.601 | −2.6 | 0.787 | −1.6 |
| Variant 2 | Original backbone + gradient-guided strategy | 0.606 | −1.8 | 0.797 | −0.3 |
| Variant 3 | Backbone with SKNet | 0.608 | −1.5 | 0.798 | −0.2 |
| SiamMLG | Backbone with SKNet + gradient-guided strategy | 0.621 | +0.7 | 0.810 | +1.4 |

- ΔSuc and ΔPre denote the relative change in success and precision with respect to SiamFC++, which is used as the baseline method. Italic values are the results of SiamMLG on the UAV123 dataset.

Variant 1 uses only the lightweight ResNet-34 as the backbone. Compared with SiamFC++, this variant is 2.6% and 1.6% behind in success and precision, respectively. This is because the lightweight ResNet-34 backbone of Variant 1 has a comparatively shallow architecture, which inherently limits its feature extraction capability.

Variant 2 uses the original backbone but applies the gradient-guided strategy to update the template. Although it performs slightly better than Variant 1, it is still weaker than SiamFC++. This may be because the quality assessment branch proposed in SiamFC++ contributes more to performance than the gradient-guided strategy alone.

Variant 3 uses the backbone embedded with SKNet but does not apply the gradient-guided strategy. Because SKNet lets the backbone adaptively discriminate among the channels of the rough features, this variant slightly outperforms the first two. Nevertheless, it is still inferior to SiamFC++.

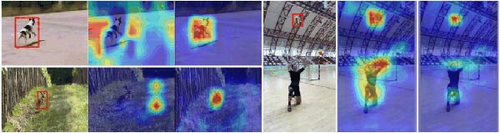

After all components are combined, our proposed tracker outperforms SiamFC++ by 0.7% in success and 1.4% in precision. This is because SKNet filters out valueless features from the rough features, and the retained valuable features are then updated through the gradient-guided strategy. As shown in Figure 8, our proposed SiamMLG is robust and produces more accurate bounding boxes.

5. Conclusion

In this paper, we argue that current Siamese trackers still suffer from two main problems: deep neural networks used as backbones slow the trackers down, and templates cannot be updated in a timely manner, which limits performance. To address these problems, we proposed a Siamese tracker named SiamMLG based on a modified two-branch SiamMask. Specifically, our tracker adopts a lightweight ResNet-34 embedded with SKNet as the backbone: the lightweight network improves the tracker's speed, and the attention mechanism compensates for the performance loss induced by the lightweight backbone by retaining valuable features. On this basis, our tracker promptly updates the template using valuable features and gradient-guided information, which allows it to perform stably. Extensive comparison and ablation experiments on four large datasets, VOT-2016, OTB100, GOT-10k, and UAV123, show that our tracker achieves competitive performance while running at nearly 55 FPS. However, the proposed tracker is limited to tracking 2D objects in daytime scenarios; in more complex nighttime scenarios, the performance of SiamMLG tends to degrade.

Conflicts of Interest

The authors have no competing interests to declare that are relevant to the content of this article.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (No. 62272063, No. 62072056, and No. 61902041); the Natural Science Foundation of Hunan Province (No. 2022JJ30617 and No. 2020JJ2029); the Standardization Project of Transportation Department of Hunan Province (B202108); the Hunan Provincial Key Research and Development Program (2022GK2019); and the Scientific Research Fund of Hunan Provincial Transportation Department (No. 202042).

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.