A Hybrid Prediction Model for Pumping Well System Efficiency Based on Stacking Integration Strategy

Abstract

Current prediction models for the system efficiency of pumping units rely primarily on a mechanistic approach. However, this approach incorporates numerous unnecessary factors, which increases the cost of prediction. As oil field databases improve, more information becomes available. Some scholars have proposed prediction models based on a single neural network. However, such models struggle to capture complex data, which results in lower prediction accuracy and limited resistance to interference. To solve these problems, this study proposes a novel stacking integrated learning prediction model that incorporates fivefold cross-validation. First, the correlation between each impact feature and system efficiency was quantified using the Pearson correlation coefficient. Second, the impact and predictive features were normalized. Third, a convolutional neural network (CNN), a recurrent neural network (RNN), a Long Short-Term Memory network (LSTM), a gated recurrent unit (GRU), and a transformer were used as the base models, and a fully connected neural network (FNN) was used as the metamodel. Each base model was trained by fivefold cross-validation, and the predicted values of each fold were stacked by rows. Finally, the predicted values of the base models were stacked by columns as input variables to the metamodel, and the metamodel was trained. Together, these steps establish the stacking integrated learning prediction model based on fivefold cross-validation. To validate the accuracy of the model, we selected 5,000 actual wells for comparative analysis, each described by 26 impact features and one predictive feature. Compared with the single neural network prediction models, the proposed integrated learning model reduces the mean square error (MSE), mean absolute error (MAE), and root-mean-square error (RMSE) by up to 28.26%, 24.40%, and 15.66%, respectively, and improves R² by up to 17.74%. These results show that the proposed integrated learning prediction model has high prediction accuracy.

1. Introduction

Oil is the blood of industry, a strategic commodity, and a necessity for the survival of modern countries. In oil exploitation, the rod pumping system directly influences the efficiency of oil extraction, which in turn affects the economic viability of the operation. The system efficiency of a pumping well is the ratio of the effective work done in lifting fluid to the surface to the energy input to the system. At present, the production efficiency of pumping well systems is predicted with mechanistic models [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13]. Mechanistic models require extensive mathematical modeling of numerous mechanisms. This makes the process cumbersome and costly, because many factors with relatively minor effects must be considered. In contrast, deep learning-based prediction models can first select features by importance and eliminate less influential factors, and can therefore yield more efficient and accurate prediction models. Currently, the deep learning models used for system efficiency prediction predominantly rely on a single neural network. A single model is vulnerable to data noise, outliers, and overfitting, which can degrade its performance on new data. It is also highly sensitive to the data distribution and to feature selection, so minor changes can alter its predictions. Moreover, a single model often captures only specific aspects of the data, which limits its ability to represent the complexity and diversity of the data. Therefore, it is necessary to investigate the application of integrated learning methods to the efficiency prediction of rod pumping systems.
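The efficiency definition above can be illustrated with a short calculation. This is a minimal sketch under stated assumptions, not the paper's formula. It takes the effective power to be the hydraulic power ρgQH needed to lift a liquid rate Q (given in m³/d and converted to m³/s) of density ρ through an effective lift H. It takes the input power to be the power supplied to the motor. The function name and the example well are hypothetical.

```python
# Sketch: pumping well system efficiency = useful hydraulic power / input power.
# Assumption (not stated in the paper): useful power = rho * g * Q * H, with Q the
# daily liquid rate converted to m^3/s and H the effective lift in metres.

G = 9.81  # gravitational acceleration, m/s^2
SECONDS_PER_DAY = 86_400


def system_efficiency(rho_kg_m3: float, q_m3_per_day: float,
                      lift_m: float, input_power_w: float) -> float:
    """Return the dimensionless system efficiency for one well."""
    if input_power_w <= 0:
        raise ValueError("input power must be positive")
    q_m3_per_s = q_m3_per_day / SECONDS_PER_DAY
    useful_power_w = rho_kg_m3 * G * q_m3_per_s * lift_m
    return useful_power_w / input_power_w


if __name__ == "__main__":
    # Hypothetical well: 20 m^3/d of 900 kg/m^3 liquid lifted 1,000 m with 10 kW input.
    eta = system_efficiency(900.0, 20.0, 1000.0, 10_000.0)
    print(f"{eta:.4f}")  # 0.2044
```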

Prediction models for pumping well system efficiency fall into two categories: mechanistic models and deep learning models. Mechanistic prediction models originate from the study of the longitudinal vibration of the sucker rod string. The wave equation for the longitudinal vibration of the rod string was first established [1]. A simulation model for the longitudinal vibration of the rod string, incorporating the coupled vibration of the rod string and the liquid column, was developed to predict the pump indicator diagram and the suspension-point indicator diagram [2]. The equation of motion for the coupled vibration of the surface unit and the rod–liquid system was established, and the influence of fluid parameters on rod design parameters was studied [3]. Nonlinear vibration equations for sucker rod strings were developed [4, 5, 6, 7, 8, 9, 10]. Models for predicting the system efficiency of pumping unit wells based on multiphase pipe flow were also developed [11, 12]. Furthermore, a set of dynamic equations based on Lagrangian mechanics was established to describe the motion of the rods. These equations can be solved in either predictive or diagnostic mode [13]. These mechanistic models can predict the system efficiency of pumping units well. Their major disadvantage is that many factors must be considered, including some unnecessary ones, which makes the total prediction cost high.

With the advancement of information technology, deep learning has found applications across various domains [14, 15]. In wind speed prediction, a hybrid method for multistep wind speed prediction was proposed based on empirical wavelet transform (EWT), a multi-objective modified seagull optimization algorithm (MOMSOA), and a multi-kernel extreme learning machine (MKELM) [14]. In mechanical fault diagnosis, an intelligent convolutional neural network (CNN)-based method was developed to address the data imbalance problem [15]. A multiscale graph convolutional network for bearing fault diagnosis was also introduced [16]. In materials science, a deep autoencoder thermography (DAT) method for detecting subsurface defects in composites was proposed [17]. In construction, a virtual sample-based calibration method for in situ sensors of building thermal systems was presented [18]. In traffic prediction, a model based on a Long Short-Term Memory (LSTM) network was proposed [19]. In photovoltaic power generation, a weather classification model based on generative adversarial networks and CNNs was proposed [20]. In the oil industry, research has focused on fault diagnosis of pumping wells [21, 22, 23, 24, 25] and production forecasting [26, 27, 28, 29, 30, 31, 32, 33, 34, 35]. Research on applying deep learning to predict the efficiency of pumping well systems is limited. The factors influencing pumping well system efficiency were analyzed with k-means and improved algorithms [36], and a single time series prediction model of pumping well system efficiency was established [37]. The main disadvantages of these single neural network prediction models are their difficulty in fitting complex data and their low anti-interference ability.
To overcome the limitations of single neural networks, ensemble learning was introduced. Combining multiple base learners typically yields significantly better generalization performance. An integrated learning algorithm that effectively addresses highly nonlinear problems was proposed [38]. Other proposals include integrated deep learning approaches to cancer prediction [39] and a nonlinear learning regression top layer for integrated prediction [40]. Further examples are a novel multi-objective differential evolution algorithm for classifier integration in text sentiment classification [41], an integrated learning-based prediction model for slope stability [42], and the application of stacking ensemble learning to daily runoff forecasting [43]. These studies show that, across industries, integrated learning achieves higher prediction accuracy than single neural network prediction models. Therefore, it is worthwhile to study integrated learning models for predicting the system efficiency of pumping wells.

To address these issues, this study introduces a novel stacking-based integrated learning model trained with fivefold cross-validation. The model comprises a set of base models and a metamodel. The base models are CNNs, recurrent neural networks (RNNs), LSTM networks, gated recurrent units (GRUs), and transformer models, and the metamodel is a fully connected neural network (FNN). First, we quantify the factors influencing pumping system efficiency using the Pearson correlation coefficient, thereby identifying their respective impacts. Next, we normalize the impact features and the predictive feature. The base models are trained with fivefold cross-validation, and the metamodel integrates them through the stacking strategy to form a comprehensive prediction model. Finally, we compare the prediction accuracy of the individual models (CNN, RNN, LSTM, GRU, and transformer) with that of the stacking integrated learning model based on fivefold cross-validation. Additionally, we examine the effects of neural network hyperparameters on the prediction results of both the single network models and the stacking integrated learning model.

The remainder of this article is organized as follows. Section 2 introduces the base models and the metamodel used for integrated learning. Section 3 describes the pumping well system efficiency prediction model based on stacking integrated learning. Section 4 presents the experimental analysis. Section 5 discusses the effects of the base model hyperparameters on the accuracy and robustness of the prediction model. Section 6 summarizes the study and presents the conclusions.

2. Base Unit for Integration Algorithms

In this study, the efficiency of pumping well systems is predicted using a stacking integrated learning approach, which consists of base models and a metamodel. The base models cover diverse neural network architectures: CNNs, RNNs, LSTM networks, GRUs, and transformer models. The metamodel is an FNN. This section provides an overview of the base models and the metamodel employed in the ensemble learning strategy.

2.1. CNN

CNNs were introduced to address the parameter redundancy and high computational complexity of FNNs when processing large volumes of data. They reduce computational demands through parameter sharing and sparse connections between layers. A CNN is typically composed of convolutional, pooling, fully connected, and output layers [44, 45].
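To illustrate parameter sharing, the NumPy sketch below applies one 1D convolutional filter, a ReLU, and a max-pooling step to a feature vector. The kernel values and sizes are arbitrary assumptions. The paper reports its CNN settings only through Table 4, not the layer-by-layer architecture.

```python
import numpy as np


def conv1d_valid(x: np.ndarray, kernel: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Valid 1D cross-correlation: the same kernel weights are reused at every position."""
    k = kernel.size
    windows = np.lib.stride_tricks.sliding_window_view(x, k)  # shape (len(x) - k + 1, k)
    return windows @ kernel + bias


def max_pool1d(x: np.ndarray, size: int) -> np.ndarray:
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    n = (x.size // size) * size
    return x[:n].reshape(-1, size).max(axis=1)


x = np.arange(26, dtype=float) / 25.0   # e.g. 26 normalized impact features
kernel = np.array([0.2, -0.5, 0.3])     # 3 shared weights, independent of input length
feature_map = np.maximum(conv1d_valid(x, kernel), 0.0)  # ReLU activation
pooled = max_pool1d(feature_map, 2)

print(feature_map.shape, pooled.shape)  # (24,) (12,)
# A dense layer mapping 26 inputs to 24 outputs would need 26 * 24 = 624 weights.
```

The three kernel weights are all the convolution needs, whatever the input length. This is the saving over a fully connected layer that the paragraph describes.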

2.2. RNN

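RNNs were designed to address a limitation of traditional neural networks. In those networks, adjacent layers are fully connected, but the nodes within a layer are not connected to one another, so information from earlier elements of a sequence cannot be carried forward [46, 47]. An RNN typically comprises an input layer, recurrent units, and an output layer.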
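A minimal NumPy sketch of a recurrent unit follows. It assumes the common Elman form h_t = tanh(W_x x_t + W_h h_{t-1} + b), in which the recurrent term W_h h_{t-1} carries information between steps. The weights are random placeholders, and the sequence length and sizes are illustrative only.

```python
import numpy as np


def rnn_forward(xs: np.ndarray, W_x: np.ndarray, W_h: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Run a vanilla (Elman) RNN over a sequence and return all hidden states.

    xs: (T, n_in); W_x: (n_hidden, n_in); W_h: (n_hidden, n_hidden); b: (n_hidden,)
    """
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in xs:
        # The recurrent term W_h @ h feeds the previous state back into the unit.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
T, n_in, n_hidden = 5, 3, 4
hs = rnn_forward(rng.normal(size=(T, n_in)),
                 rng.normal(scale=0.5, size=(n_hidden, n_in)),
                 rng.normal(scale=0.5, size=(n_hidden, n_hidden)),
                 np.zeros(n_hidden))
print(hs.shape)  # (5, 4)
```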

2.3. LSTM Neural Network

LSTM networks were developed to overcome the inability of RNNs to retain long-term information. Their forget, input, and output gates enable selective retention of memory, and they have been applied in various fields [48, 49].
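The gating described above can be sketched as a single LSTM step in NumPy. The sketch uses the standard gate equations. The gate ordering inside the stacked weight matrix is an arbitrary choice here, and libraries order the gates differently. The usage sets the forget gate near 1 and the input gate near 0 to show how the cell state is retained.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, n_in), U: (4H, H), b: (4H,); gate blocks ordered f, i, o, g."""
    H = h_prev.size
    z = W @ x_t + U @ h_prev + b
    f = sigmoid(z[0:H])            # forget gate: fraction of the old cell state kept
    i = sigmoid(z[H:2 * H])        # input gate: fraction of new content written
    o = sigmoid(z[2 * H:3 * H])    # output gate: fraction of the cell exposed as h_t
    g = np.tanh(z[3 * H:4 * H])    # candidate cell content
    c_t = f * c_prev + i * g       # selective long-term memory update
    h_t = o * np.tanh(c_t)
    return h_t, c_t


rng = np.random.default_rng(0)
n_in, H = 3, 2
W, U = rng.normal(size=(4 * H, n_in)), rng.normal(size=(4 * H, H))
b = np.zeros(4 * H)
b[0:H], b[H:2 * H] = 20.0, -20.0   # forget gate near 1, input gate near 0
c0 = np.array([0.7, -0.3])
h1, c1 = lstm_step(rng.normal(size=n_in), np.zeros(H), c0, W, U, b)
print(c1)  # approximately [0.7, -0.3]: the old memory passes through almost unchanged
```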

2.4. GRU Network
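The GRU simplifies the LSTM. It uses two gates, an update gate and a reset gate, and it merges the cell state into the hidden state. The NumPy sketch below follows one common formulation of a GRU step, in which h_t = z ⊙ h_{t-1} + (1 − z) ⊙ h̃_t. Conventions for the update gate differ between references and libraries. The weights are random placeholders, and the function names are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gru_step(x_t, h_prev, Wz, Uz, Wr, Ur, Wh, Uh):
    """One GRU step (biases omitted for brevity)."""
    z = sigmoid(Wz @ x_t + Uz @ h_prev)              # update gate
    r = sigmoid(Wr @ x_t + Ur @ h_prev)              # reset gate
    h_tilde = np.tanh(Wh @ x_t + Uh @ (r * h_prev))  # candidate state
    return z * h_prev + (1.0 - z) * h_tilde          # z near 1 keeps the old state


rng = np.random.default_rng(1)
n_in, H = 3, 4
# Order: Wz, Uz, Wr, Ur, Wh, Uh; input weights are (H, n_in), recurrent ones (H, H).
params = [rng.normal(scale=0.5, size=(H, n_in if k % 2 == 0 else H)) for k in range(6)]
h = np.zeros(H)
for x_t in rng.normal(size=(6, n_in)):
    h = gru_step(x_t, h, *params)
print(h.shape)  # (4,)
```

Compared with the LSTM step, the GRU needs three weight pairs instead of four and keeps no separate cell state.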

2.5. Transformer

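The transformer model is built on self-attention and a sequence-to-sequence encoder–decoder architecture. Its encoders and decoders are composed of multihead attention, feedforward networks, residual connections, and layer normalization. Scaled dot-product attention divides the query–key dot products by the square root of the key dimension. This keeps the softmax from saturating and thus mitigates its vanishing-gradient problem [52, 53].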
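Scaled dot-product attention, softmax(QKᵀ/√d_k)V, can be written in a few lines of NumPy. This is a single-head sketch without masking or learned projections. The matrix sizes are arbitrary, and the sketch does not describe the paper's transformer configuration.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray) -> np.ndarray:
    """softmax(Q K^T / sqrt(d_k)) V for Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v)."""
    d_k = Q.shape[-1]
    # Without the 1/sqrt(d_k) factor, scores grow with d_k and the softmax saturates.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V


rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(4, 64)) for _ in range(3))
out = scaled_dot_product_attention(Q, K, V)
print(out.shape)  # (4, 64)
```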

2.6. FNN

In an FNN, each neuron in one layer is connected to every neuron in the next layer. This architecture is a foundational structure in deep learning and can model a wide range of functions.
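A forward pass through an FNN is a chain of matrix products and activations. The sketch below borrows its layer sizes from Table 4 (three hidden layers of 300 neurons). It treats the five base-model predictions as the input, as in the metamodel. The ReLU activations, the linear output, and the initialization are assumptions, not settings reported in the paper.

```python
import numpy as np


def fnn_forward(x: np.ndarray, layers: list) -> np.ndarray:
    """Forward pass of a fully connected network.

    layers: list of (W, b) pairs; ReLU on hidden layers and identity on the output
    layer, which suits a regression target such as system efficiency.
    """
    a = x
    for idx, (W, b) in enumerate(layers):
        a = a @ W + b                     # every input unit feeds every output unit
        if idx < len(layers) - 1:
            a = np.maximum(a, 0.0)
    return a


def init_layers(sizes: list, rng: np.random.Generator) -> list:
    """He-style random initialization for consecutive layer sizes."""
    return [(rng.normal(scale=np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


rng = np.random.default_rng(0)
# Hypothetical metamodel shape: 5 base-model predictions -> 3 x 300 hidden -> 1 output.
layers = init_layers([5, 300, 300, 300, 1], rng)
y_hat = fnn_forward(rng.random((8, 5)), layers)
print(y_hat.shape)  # (8, 1)
```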

3. Stacking Integrated Learning Model Based on Fivefold Cross-Validation

3.1. Pearson Correlation Coefficient
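The standard sample Pearson correlation coefficient between a feature x and the target y over n samples is r = Σ(xᵢ − x̄)(yᵢ − ȳ) / √(Σ(xᵢ − x̄)² · Σ(yᵢ − ȳ)²). Its value lies between −1 and 1. The plain-Python sketch below computes r and ranks features by |r|. The feature names and values are made-up toy data, not the paper's.

```python
import math


def pearson_r(x: list, y: list) -> float:
    """Sample Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("a constant sequence has undefined correlation")
    return cov / (sx * sy)


# Hypothetical toy data: two features and the system efficiency of five wells.
features = {
    "pump_diameter_mm": [38, 44, 56, 57, 70],
    "gas_oil_ratio": [120, 80, 60, 40, 30],
}
efficiency = [0.18, 0.22, 0.25, 0.27, 0.31]
ranked = sorted(features, key=lambda k: abs(pearson_r(features[k], efficiency)), reverse=True)
for name in ranked:
    print(name, round(pearson_r(features[name], efficiency), 3))
```

On Python 3.10 and later, `statistics.correlation` computes the same coefficient.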

3.2. Minimum–Maximum Normalization

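The impact and predictive features are scaled to the interval [0, 1] by min–max normalization:

$$x^{*} = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{24}$$

where x is the value of each sample, x_min is the minimum value of all samples, x_max is the maximum value of all samples, and x* is the normalized value.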
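The normalization x* = (x − x_min)/(x_max − x_min) is sketched below. The sketch assumes common practice, which the paper does not state: x_min and x_max are taken from the training set only and then reused for the test set, so that no test information leaks into training. Under this assumption, test values outside the training range can fall slightly outside [0, 1]. The function names are illustrative.

```python
import numpy as np


def fit_min_max(train: np.ndarray):
    """Column-wise minimum and maximum of the training matrix (samples x features)."""
    return train.min(axis=0), train.max(axis=0)


def apply_min_max(data: np.ndarray, x_min: np.ndarray, x_max: np.ndarray) -> np.ndarray:
    """x* = (x - x_min) / (x_max - x_min); constant columns are mapped to 0."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return (data - x_min) / span


def invert_min_max(scaled: np.ndarray, x_min, x_max) -> np.ndarray:
    """Map normalized values (e.g. predicted efficiency) back to physical units."""
    return scaled * (x_max - x_min) + x_min


train = np.array([[10.0, 0.5], [20.0, 0.5], [30.0, 0.5]])
test = np.array([[25.0, 0.5], [35.0, 0.5]])
lo, hi = fit_min_max(train)
print(apply_min_max(train, lo, hi))  # first column: 0, 0.5, 1
print(apply_min_max(test, lo, hi))   # first column: 0.75, 1.25 (outside the training range)
```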

3.3. Stacking Integration Learning Based on Fivefold Cross-Validation

Conventional deep learning methods based on a single neural network have limitations that hinder accurate prediction of pumping system efficiency. Integrated learning approaches address these challenges by combining multiple base learners, thereby mitigating the overfitting associated with individual models. The main integrated learning strategies are bagging, boosting, and stacking. Bagging is a parallel integration technique. It reduces estimation error by creating multiple training subsets through random sampling and combining the outputs of independently trained learners into a unified ensemble. Boosting is a sequential integration technique in which each successive model focuses on the errors of its predecessors, and the models' predictions are combined to improve overall accuracy. Stacking uses the predictions of various base models as input features for a metamodel, which then generates the final prediction.
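A minimal bagging sketch makes the contrast with stacking concrete. Each learner is fitted on a bootstrap sample, drawn with replacement, and the ensemble prediction is the plain average. Ordinary least squares is used as the base learner purely for brevity; it is not one of the paper's models.

```python
import numpy as np


def fit_ols(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Least-squares coefficients with an intercept column prepended."""
    Xb = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    return coef


def predict_ols(coef: np.ndarray, X: np.ndarray) -> np.ndarray:
    return coef[0] + X @ coef[1:]


def bagging_predict(X_train, y_train, X_new, n_learners=20, seed=0):
    """Average the predictions of learners fitted on bootstrap resamples."""
    rng = np.random.default_rng(seed)
    n = len(X_train)
    preds = []
    for _ in range(n_learners):
        idx = rng.integers(0, n, size=n)  # bootstrap: n rows sampled with replacement
        preds.append(predict_ols(fit_ols(X_train[idx], y_train[idx]), X_new))
    return np.mean(preds, axis=0)         # fixed, equal-weight combination


rng = np.random.default_rng(42)
X = rng.random((200, 3))
y = X @ np.array([0.5, -0.2, 0.1]) + 0.3 + rng.normal(scale=0.01, size=200)
print(bagging_predict(X, y, X[:3]))
```

Stacking replaces the fixed average in the last line of `bagging_predict` with a trained metamodel that learns how much to trust each base learner.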

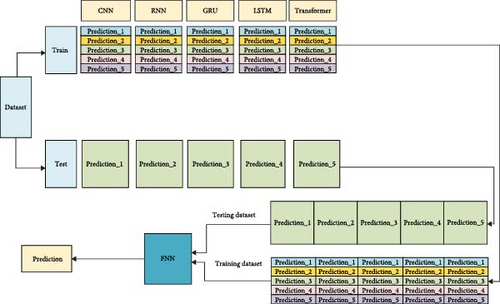

Predicting the efficiency of a pumping well system is a regression problem. The stacking integrated learning approach integrates multiple diverse base learners. Each base learner is trained on a designated training set, and its predictions are used as inputs to the meta-learner. Because the meta-learner learns how to weight and combine these inputs, it can compensate for errors made by individual base learners and thereby improve predictive performance. Therefore, this paper adopts the stacking integrated learning method to predict the system efficiency of pumping wells. Stacking does not prescribe particular base models or a particular metamodel. We choose CNN, RNN, LSTM, GRU, and transformer as the base models and an FNN as the metamodel. Each base model is trained using fivefold cross-validation. The metamodel then integrates the base models through the stacking strategy, which yields an efficiency prediction model for pumping systems based on fivefold cross-validation. The model structure is shown in Figure 1, and the steps are summarized in Algorithm 1.


Algorithm 1: Stacking integration learning based on fivefold cross-validation.


1. Divide the dataset into a training set and a test set.


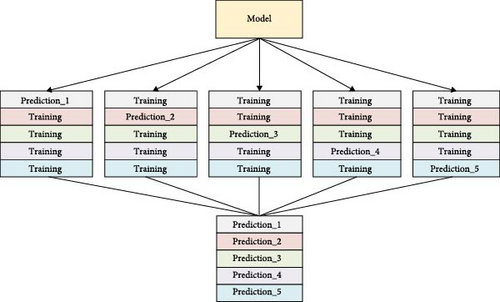

2. Divide the training set from Step 1 into five equal parts. In turn, use each part as the base test set and the remaining four parts as the base training set, and train the CNN, RNN, LSTM, GRU, and transformer models on it. This yields 25 single neural network models: five for each network type, which share the same structure but are trained on different folds. Test each model on its base test set, and stack the base test results of the same network type by rows. The fivefold cross-training scheme for a single model is shown in Figure 2.


3. Arrange the base test set predictions of the CNN, RNN, LSTM, GRU, and transformer models from Step 2 in columns to form the training set of the metamodel.


4. To construct the test set of the metamodel, predict the test set from Step 1 with each of the five CNN models trained in Step 2, and average the five predictions to obtain the final CNN prediction. Repeat this procedure for the RNN, LSTM, GRU, and transformer models to obtain the test set predictions of all five base models. Arrange these predictions in columns to form the test set of the metamodel.


5. Train the FNN-based metamodel on the training set obtained in Step 3, and evaluate it on the test set obtained in Step 4.
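The data flow of Algorithm 1 can be sketched in NumPy as below. To keep the example short and self-contained, the five neural base models are replaced by hypothetical stand-ins: ridge regressions with different regularization strengths and polynomial degrees. The FNN metamodel is likewise replaced by a linear ridge combiner. Only the data flow mirrors the algorithm: out-of-fold base predictions are placed by rows, base models are placed by columns, and test predictions are averaged over the five fold models.

```python
import numpy as np


def kfold_indices(n, k=5, seed=0):
    """Shuffle row indices and split them into k nearly equal folds."""
    idx = np.random.default_rng(seed).permutation(n)
    return np.array_split(idx, k)


def make_ridge(alpha, degree):
    """Hypothetical stand-in learner: ridge regression on per-feature powers up to `degree`."""
    def expand(X):
        return np.column_stack([np.ones(len(X))] + [X ** d for d in range(1, degree + 1)])

    def fit(X, y):
        A = expand(X)
        w = np.linalg.solve(A.T @ A + alpha * np.eye(A.shape[1]), A.T @ y)
        return lambda X_new: expand(X_new) @ w  # fitted model as a prediction function

    return fit


def stacking_fit_predict(X_tr, y_tr, X_te, base_fitters, meta_fitter, k=5):
    """Out-of-fold base predictions train the metamodel; fold-averaged ones test it."""
    folds = kfold_indices(len(X_tr), k)
    meta_train = np.zeros((len(X_tr), len(base_fitters)))  # Step 3: one column per base model
    meta_test = np.zeros((len(X_te), len(base_fitters)))   # Step 4: one column per base model
    for j, fit in enumerate(base_fitters):
        for f, val_idx in enumerate(folds):                  # Step 2: k models per base learner
            tr_idx = np.concatenate([folds[g] for g in range(k) if g != f])
            model = fit(X_tr[tr_idx], y_tr[tr_idx])
            meta_train[val_idx, j] = model(X_tr[val_idx])    # out-of-fold predictions (rows)
            meta_test[:, j] += model(X_te) / k               # average of the k fold models
    meta_model = meta_fitter(meta_train, y_tr)               # Step 5: train the metamodel
    return meta_model(meta_test)


rng = np.random.default_rng(0)
X = rng.random((500, 4))
y = np.sin(3 * X[:, 0]) + X[:, 1] ** 2 + rng.normal(scale=0.05, size=500)
X_tr, X_te, y_tr, y_te = X[:400], X[400:], y[:400], y[400:]  # Step 1: 80/20 split
bases = [make_ridge(a, d) for a, d in [(1e-3, 1), (1e-3, 2), (1e-3, 3), (1.0, 3), (10.0, 2)]]
y_hat = stacking_fit_predict(X_tr, y_tr, X_te, bases, meta_fitter=make_ridge(1.0, 1))
print(f"test MSE: {np.mean((y_hat - y_te) ** 2):.4f}")
```

Writing each fold's predictions back to its original row positions keeps the metamodel's training inputs aligned with `y_tr`. This is equivalent to stacking the folds by rows, provided the targets are reordered the same way.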


4. Experiments and Analyses

To enhance prediction accuracy, this paper presents a stacking ensemble learning framework based on fivefold cross-validation. The framework integrates five base models (CNN, RNN, LSTM, GRU, and transformer) with an FNN metamodel. To evaluate the predictive performance of the proposed model, we use a dataset of system efficiency records from 5,000 pumping wells in an oil field.

4.1. Data Presentation

- (1)

The data for this study were obtained from the database of an oil field in the western region of the People’s Republic of China. The data contain 26 impact features and one predictive feature. Among them, the pumping unit model, motor model, and balancing method are string types. Features such as sucker rod diameter, sucker rod length, well inclination angle, and azimuth angle are continuous numeric types. The string-type features were encoded with the LabelEncoder class from the scikit-learn library.
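scikit-learn's `LabelEncoder` maps each distinct string to the index of that string in the sorted list of unique class labels. `fit_transform` encodes the strings, and `inverse_transform` decodes them. The dependency-free sketch below reproduces this mapping to show what the encoded columns contain. The category strings are hypothetical.

```python
def label_encode(values):
    """Mimic LabelEncoder: classes are the sorted unique values, codes are their indices."""
    classes = sorted(set(values))
    index = {c: i for i, c in enumerate(classes)}
    return [index[v] for v in values], classes


def label_decode(codes, classes):
    """Inverse mapping, analogous to LabelEncoder.inverse_transform."""
    return [classes[c] for c in codes]


balancing = ["crank", "beam", "crank", "composite"]  # hypothetical category strings
codes, classes = label_encode(balancing)
print(codes, classes)  # [2, 0, 2, 1] ['beam', 'composite', 'crank']
```

With scikit-learn installed, `LabelEncoder().fit_transform(balancing)` should return the same codes as a NumPy array.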

- (2)

To address potential data imbalance, we randomly selected 5,000 actual wells from the database. Outliers were then removed from this subset. If fewer than 5,000 wells remained after outlier removal, additional data were selected from the source database until the total reached 5,000 wells. The dataset was then divided into a training set (80%) and a test set (20%) and normalized using Equation (24).
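The selection-and-refill procedure and the 80/20 split can be sketched as follows. The outlier test is a hypothetical placeholder, because the paper does not state the criterion used to remove outliers. Assuming that additional wells are drawn uniformly at random, shuffling the pool once and keeping the first 5,000 wells that pass the test gives the same kind of uniform sample of clean wells.

```python
import random


def select_clean_wells(pool, n, is_outlier, seed=0):
    """Uniformly sample n wells that pass the outlier test (hypothetical predicate)."""
    candidates = list(pool)
    random.Random(seed).shuffle(candidates)
    clean = [w for w in candidates if not is_outlier(w)]
    if len(clean) < n:
        raise ValueError("database holds fewer than n clean wells")
    return clean[:n]


def split_train_test(rows, test_fraction=0.2, seed=0):
    """Shuffle and split into (train, test); 5,000 rows give 4,000 / 1,000."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]


# Hypothetical database of 8,000 well IDs in which every tenth record is an outlier.
wells = select_clean_wells(range(8000), 5000, is_outlier=lambda w: w % 10 == 0)
train, test = split_train_test(wells)
print(len(train), len(test))  # 4000 1000
```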

4.2. Pearson Correlation Coefficient Analysis

Pearson’s correlation coefficient was used to assess the impact of each important feature on the efficiency of the system and the results are shown in Table 1.

| Impact characteristic | Correlation coefficient | Impact characteristic | Correlation coefficient |
|---|---|---|---|
| Motor type | −0.009 | Anticollision distance (m) | −0.015 |
| Type of pumping unit | 0.076 | Tubing specification | 0.037 |
| Balancing method | −0.0092 | Pump depth (m) | 0.095 |
| Balance degree (%) | −0.0041 | Stroke (m) | −0.04 |
| Saturation pressure (MPa) | −0.034 | Pump diameter (mm) | 0.35 |
| Oil well fluid density (kg/m³) | 0.033 | Stroke frequency (min⁻¹) | −0.21 |
| Oil well fluid viscosity (MPa·s) | −0.15 | Number of sucker rod stages | −0.039 |
| Gas–oil ratio | −0.22 | Sucker rod diameter (mm) | −0.052 |
| Relative density of natural gas (kg/m³) | −0.036 | Sucker rod length (m) | 0.31 |
| Oil pressure (MPa) | 0.0041 | Well inclination angle (°) | 0.041 |
| Casing pressure (MPa) | 0.016 | Dogleg degree (°) | −0.14 |
| Moisture content (%) | 0.2 | Number of holdups | −0.088 |
| Dynamic liquid level (m) | 0.04 | | |
| Pump clearance level | 0.024 | | |

- (1)

Table 1 shows that the system efficiency of the pumping unit well is negatively correlated with motor type, balancing method, balance degree, saturation pressure, oil well fluid viscosity, gas–oil ratio, relative density of natural gas, anticollision distance, stroke, stroke frequency, number of sucker rod stages, sucker rod diameter, dogleg degree, and number of holdups. It is positively correlated with pumping unit type, oil well fluid density, oil pressure, casing pressure, moisture content, dynamic liquid level, pump clearance level, tubing specification, pump depth, pump diameter, sucker rod length, and well inclination angle. The strongest correlations are with pump diameter (0.35), sucker rod length (0.31), gas–oil ratio (−0.22), and stroke frequency (−0.21). All other coefficients have an absolute value of at most 0.2.

- (2)

To ensure prediction accuracy, this paper uses the following factors affecting pumping unit well system efficiency: motor type, balancing method, balance degree, saturation pressure, oil well fluid viscosity, gas–oil ratio, relative density of natural gas, anticollision distance, stroke, number of sucker rod stages, sucker rod diameter, dogleg degree, number of holdups, pumping unit type, oil well fluid density, oil pressure, casing pressure, moisture content, dynamic liquid level, pump clearance level, tubing specification, pump depth, pump diameter, sucker rod length, and well inclination angle.

4.3. Evaluation Indicators
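The sketch below assumes the standard definitions of the evaluation indicators. Here yᵢ is the measured efficiency of sample i, ŷᵢ is the predicted efficiency, and ȳ is the mean measured value. Then MSE = (1/n)Σ(yᵢ − ŷᵢ)², MAE = (1/n)Σ|yᵢ − ŷᵢ|, RMSE = √MSE, and R² = 1 − Σ(yᵢ − ŷᵢ)²/Σ(yᵢ − ȳ)². Lower MSE, MAE, and RMSE and higher R² indicate better accuracy. These definitions are consistent with Table 2, where each RMSE is approximately the square root of the corresponding MSE. The sample values in this plain-Python sketch are made up.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, MAE, RMSE and R^2 for two equal-length sequences."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    # R^2 = 1 - SS_res / SS_tot, with SS_res = n * MSE; undefined for a constant target.
    r2 = 1.0 - (mse * n) / ss_tot if ss_tot > 0 else float("nan")
    return {"MSE": mse, "MAE": mae, "RMSE": math.sqrt(mse), "R2": r2}


print(regression_metrics([0.20, 0.30, 0.40, 0.50], [0.22, 0.28, 0.41, 0.47]))
```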

4.4. Validation of Prediction Accuracy

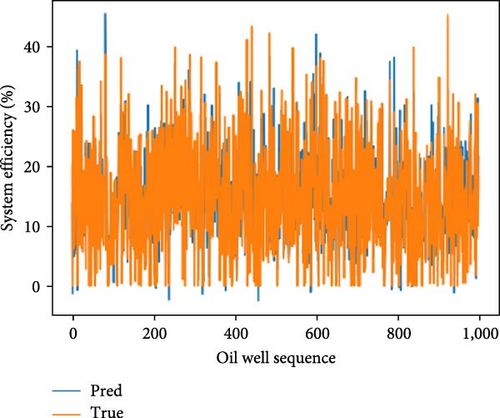

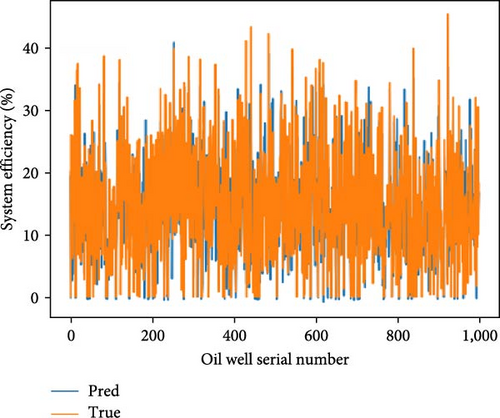

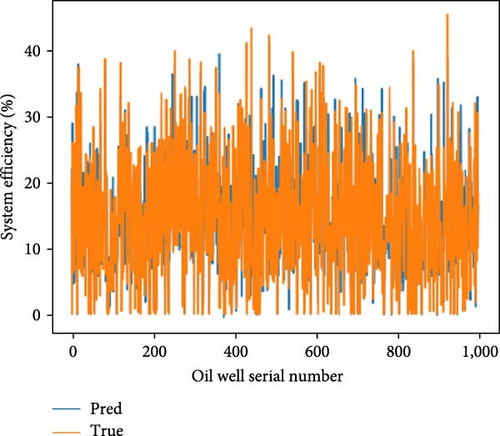

This study assesses the performance of the models in predicting pumping well system efficiency. A training set of 4,000 wells and a test set of 1,000 wells were used. The models evaluated are CNN, RNN, LSTM, GRU, transformer, and the stacking integrated network. The predictions on the test set are shown in Figure 3. Table 2 summarizes the MSE, MAE, RMSE, R², and training time of each model. Table 3 lists the percentage improvement of the stacking model over each single model, and Table 4 gives the hyperparameter settings of each model.

| Model | MSE | MAE | RMSE | R² | Time (s) |
|---|---|---|---|---|---|
| CNN | 0.00331 | 0.0372 | 0.0575 | 0.6193 | 33 |
| RNN | 0.00281 | 0.0323 | 0.0530 | 0.6765 | 281 |
| GRU | 0.00289 | 0.0318 | 0.0537 | 0.6674 | 275 |
| Transformer | 0.00317 | 0.0343 | 0.0563 | 0.6351 | 335 |
| LSTM | 0.00311 | 0.0324 | 0.0557 | 0.6424 | 236 |
| Stacking | 0.00236 | 0.0281 | 0.0485 | 0.7292 | 5,700 |

| Model | MSE reduction (%) | MAE reduction (%) | RMSE reduction (%) | R² improvement (%) |
|---|---|---|---|---|
| CNN | 28.26 | 24.40 | 15.66 | 17.74 |
| RNN | 16.3 | 12.94 | 8.5 | 7.79 |
| GRU | 18.56 | 11.34 | 9.76 | 9.25 |
| Transformer | 25.78 | 18.05 | 13.85 | 14.81 |
| LSTM | 24.28 | 13.23 | 12.98 | 13.51 |

| Model | Learning rate | Number of hidden layers | Number of neurons in the hidden layer | Epochs | Division ratio (%) | Optimizer type |
|---|---|---|---|---|---|---|
| CNN | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
| RNN | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
| GRU | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
| Transformer | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
| LSTM | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
| Stacking | 0.001 | 3 | 300 | 5,000 | 20 | Adam |
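Table 3 appears to report relative changes with respect to each single model. For MSE, MAE, and RMSE, the change is (E_single − E_stacking)/E_single × 100, and for R² it is (R²_stacking − R²_single)/R²_single × 100. This reading is an assumption, not a formula stated in the paper. The sketch applies it to the rounded entries of Table 2, so the recomputed percentages can differ somewhat from those listed in Table 3.

```python
TABLE_2 = {  # model: (MSE, MAE, RMSE, R2), values copied from Table 2
    "CNN": (0.00331, 0.0372, 0.0575, 0.6193),
    "RNN": (0.00281, 0.0323, 0.0530, 0.6765),
    "GRU": (0.00289, 0.0318, 0.0537, 0.6674),
    "Transformer": (0.00317, 0.0343, 0.0563, 0.6351),
    "LSTM": (0.00311, 0.0324, 0.0557, 0.6424),
}
STACKING = (0.00236, 0.0281, 0.0485, 0.7292)


def improvement_pct(single, stacked):
    """Percentage reduction for MSE/MAE/RMSE and percentage increase for R2."""
    errs = [(s - k) / s * 100 for s, k in zip(single[:3], stacked[:3])]
    r2_gain = (stacked[3] - single[3]) / single[3] * 100
    return [round(v, 2) for v in errs + [r2_gain]]


for model, metrics in TABLE_2.items():
    print(model, improvement_pct(metrics, STACKING))
```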

- (1)

Based on the results in Figure 3 and Tables 2 and 3, the stacking model has the lowest MSE, MAE, and RMSE and the highest R². This indicates that the pumping unit well system efficiency prediction model based on the stacking integration strategy is more accurate than the individual neural network prediction models.

- (2)

The accuracy of each model depends not only on its hyperparameters but also on the data distribution. According to Table 2, among the single neural network prediction models, the RNN adapts best to this sample data (R² = 0.6765), whereas the CNN adapts worst (R² = 0.6193). The integrated learning model combines the strengths of all the individual neural network models and achieves the highest accuracy.

- (3)

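According to Table 2, the integrated learning model has by far the longest training time: 5,700 s, compared with 33 to 335 s for the single models. This is because it trains five models for each of the five base networks (25 in total) in addition to the metamodel. The longer training time is the price of its higher prediction accuracy. In practical applications, it is common to accept a cost in a less critical aspect, such as offline training time, to improve a more important one, such as accuracy.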

5. Analysis of the Impact of Hyperparameters on Accuracy and Stability

5.1. Effect of Learning Rate on Prediction Accuracy

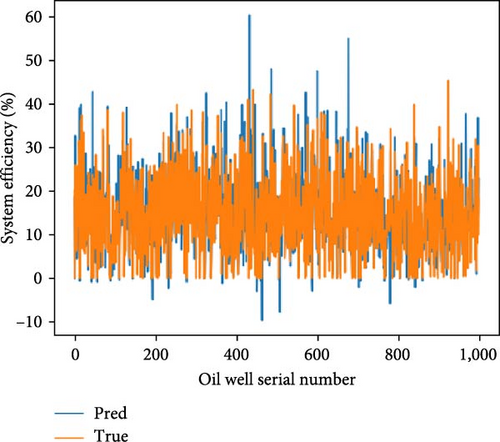

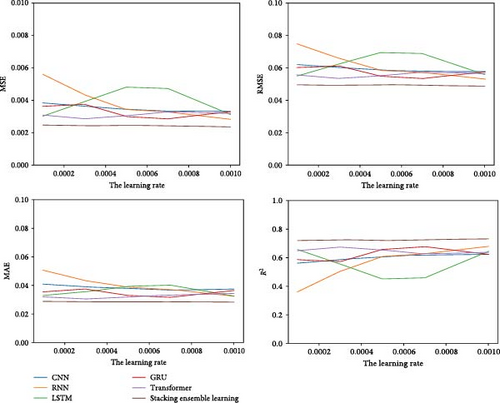

This study investigates the effect of the learning rate on the prediction accuracy of the models. We tested learning rates of 0.0001, 0.0003, 0.0005, 0.0007, and 0.001 on the CNN, RNN, LSTM, GRU, transformer, and stacking integrated learning models, and each model was evaluated with fivefold cross-validation. The effect of the learning rate on accuracy was assessed with MSE, RMSE, MAE, and R², as illustrated in Figure 4.
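The controlled-variable design used throughout this section varies one hyperparameter at a time and holds the others at the Table 4 baseline. The sketch below shows one way to generate such a grid of configurations. The dictionary keys are illustrative names, and the value lists are taken from Sections 5.1 to 5.5.

```python
BASELINE = {  # Table 4 settings shared by all models
    "learning_rate": 0.001,
    "hidden_layers": 3,
    "hidden_neurons": 300,
    "epochs": 5000,
    "validation_ratio": 0.2,
}
SWEEPS = {  # values tested in Sections 5.1 to 5.5
    "learning_rate": [0.0001, 0.0003, 0.0005, 0.0007, 0.001],
    "hidden_neurons": [100, 200, 300, 400, 500],
    "hidden_layers": [3, 4, 5, 6, 7],
    "epochs": [1000, 3000, 5000, 8000, 10000],
    "validation_ratio": [0.1, 0.2, 0.3, 0.4, 0.5],
}


def one_factor_at_a_time(baseline, sweeps):
    """Yield (varied_name, config) pairs; every other setting stays at its baseline."""
    for name, values in sweeps.items():
        for value in values:
            yield name, {**baseline, name: value}


configs = list(one_factor_at_a_time(BASELINE, SWEEPS))
print(len(configs))  # 25 configurations, each run for all six models
```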

- (1)

At every learning rate tested, the stacking integrated learning model achieved the lowest MSE, RMSE, and MAE and the highest R². This indicates that the proposed stacking integrated learning approach offers better prediction accuracy than the individual neural network models.

- (2)

Throughout the range of learning rates, the stacking integrated learning model exhibited minimal fluctuation in all evaluation metrics. This stability suggests that the stacking integrated learning approach proposed in this study is more robust compared to individual neural network models.
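The paper judges stability by how little the metrics fluctuate across the tested settings, but it does not name a fluctuation statistic. One simple option, assumed here, is the range (max − min) or the standard deviation of a metric across the settings. The MSE values in this sketch are made-up placeholders, not results read from Figure 4.

```python
import statistics


def fluctuation(values):
    """Range and population standard deviation of one metric across settings."""
    return max(values) - min(values), statistics.pstdev(values)


# Placeholder MSE values for five learning rates (not taken from the paper).
mse_by_model = {
    "single_model": [0.0041, 0.0035, 0.0031, 0.0033, 0.0030],
    "stacking": [0.0025, 0.0024, 0.0024, 0.0023, 0.0024],
}
for model, values in mse_by_model.items():
    spread, sd = fluctuation(values)
    print(f"{model}: range={spread:.4f}, std={sd:.5f}")
```

A smaller range or standard deviation for the stacking model would support the stability claim in quantitative terms.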

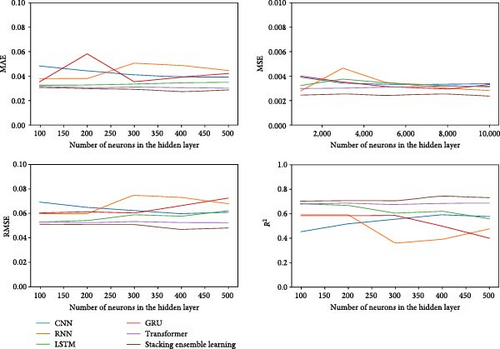

5.2. Effect of the Number of Neurons in the Hidden Layer on the Prediction Accuracy

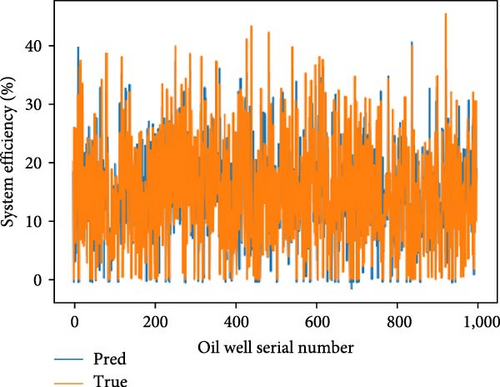

This study examines the effect of the number of hidden-layer neurons on prediction accuracy. We tested five configurations: 100, 200, 300, 400, and 500 neurons. Using a controlled-variable approach, we evaluated the CNN, RNN, LSTM, GRU, and transformer models alongside the stacking integrated learning model, with predictions made using fivefold cross-validation. The performance of each model was assessed with MSE, RMSE, MAE, and R², as shown in Figure 5.

- (1)

For every number of hidden-layer neurons tested, the stacking integrated learning model has the lowest MSE, RMSE, and MAE and the highest R². These results indicate that the stacking integrated learning model predicts pumping unit well system efficiency more accurately than the individual neural network models.

- (2)

Increasing the number of neurons in the hidden layers results in minimal variations in the evaluation metrics of the stacking integrated learning model for predicting pumping unit well system efficiency. This suggests that the stacking integrated learning model exhibits greater stability compared to individual neural network models.

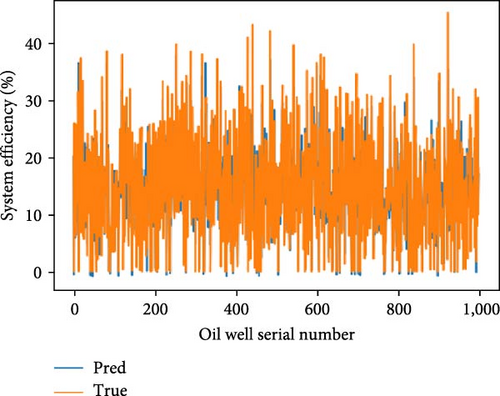

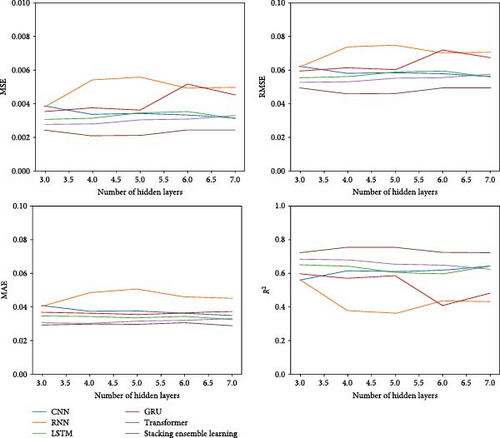

5.3. Effect of the Number of Hidden Layers on Prediction Accuracy

To assess the effect of the number of hidden layers on prediction accuracy, we evaluated configurations with 3, 4, 5, 6, and 7 hidden layers. Using a controlled-variable approach, we applied the CNN, RNN, LSTM, GRU, and transformer models along with the stacking integrated learning model, with predictions made using fivefold cross-validation. The performance of each model was assessed with MSE, RMSE, MAE, and R², as shown in Figure 6.

- (1)

For every number of hidden layers tested, the stacking integrated learning model achieves lower MSE, RMSE, and MAE and a higher R² than the individual neural network models. These results demonstrate that the stacking integrated learning model predicts pumping unit well system efficiency more accurately than the single neural network models.

- (2)

As the number of hidden layers increases, the evaluation metrics for the stacking integrated learning model exhibit minimal variation. This suggests that the stacking integrated learning model maintains greater stability in predicting pumping unit well system efficiency compared to other models.

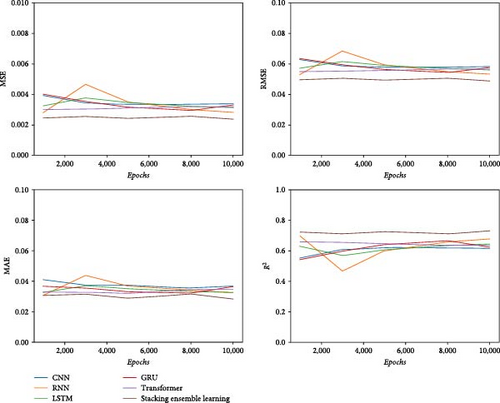

5.4. Effect of Epochs on Prediction Accuracy

To evaluate the effect of the number of epochs on prediction accuracy, we tested 1,000, 3,000, 5,000, 8,000, and 10,000 epochs. Using a controlled-variable approach, we applied the CNN, RNN, LSTM, GRU, and transformer models, along with the stacking integrated network model, using fivefold cross-validation. The performance of each model was assessed with MSE, RMSE, MAE, and R², as illustrated in Figure 7.

- (1)

For every number of epochs tested, the stacking integrated learning model achieves the lowest MSE, RMSE, and MAE and the highest R². These findings indicate that the stacking integrated learning model predicts pumping unit well system efficiency more accurately than the single neural network models.

- (2)

The evaluation metrics of the stacking integrated learning model vary only slightly as the number of epochs increases, indicating that this model is more stable than the others.

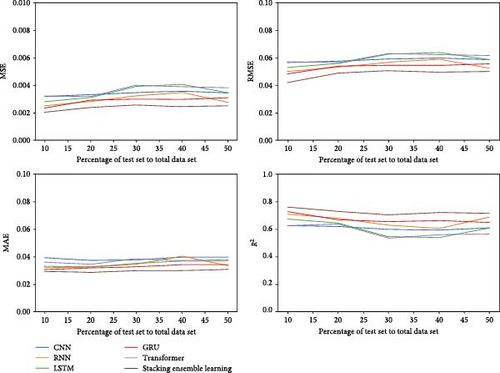

5.5. Effect of the Division Ratio of the Validation Set on the Prediction Accuracy

To evaluate the effect of the validation set division ratio on prediction accuracy, we analyzed division ratios of 0.1, 0.2, 0.3, 0.4, and 0.5. Using a controlled-variable approach, we applied the CNN, RNN, LSTM, GRU, and transformer models, along with the stacking integrated network model, using fivefold cross-validation. The performance of each model was assessed with MSE, RMSE, MAE, and the coefficient of determination R², as illustrated in Figure 8.

- (1)

For every validation set proportion tested, the stacking integrated learning model achieves lower MSE, RMSE, and MAE than the single neural network models. This indicates that the stacking integrated learning model predicts pumping unit well system efficiency more accurately.

- (2)

As the proportion of the validation set increases, the evaluation metrics of the stacking integrated learning model vary less than those of the single neural network models. This suggests that the stacking integrated learning model is more stable for predicting pumping unit well system efficiency.

6. Conclusion

- (1)

This study introduces a novel prediction model for the efficiency of pumping well systems based on stacking integrated learning. The model consists of base models and a metamodel. The base models are CNN, RNN, LSTM, GRU, and transformer networks, and the metamodel is an FNN. First, we quantified the factors influencing pumping well system efficiency using the Pearson correlation coefficient. The data were then preprocessed with min–max normalization. Finally, the base models were trained with fivefold cross-validation and integrated through the stacking strategy, with the FNN serving as the metamodel. This approach yields an efficient prediction model for pumping well system efficiency.

- (2)

To validate the model’s accuracy, experiments were conducted on a sample of 5,000 real oil wells. The stacking integrated learning-based model predicts pumping well system efficiency more accurately than the individual neural network models. It reduces MSE, MAE, and RMSE by up to 28.26%, 24.40%, and 15.66%, respectively, and increases R² by up to 17.74%.

- (3)

To analyze the stability of the model, we used the controlled-variable method to examine the effects of the learning rate, the number of hidden layers, the number of neurons in the hidden layer, the number of epochs, and the validation set division ratio. The results indicate that the proposed stacking integrated learning-based prediction model for pumping well system efficiency is more stable than the individual neural network models.

- (4)

Predicting the efficiency of pumping well systems is crucial for petroleum practitioners to evaluate the performance of these systems. Given that different integration strategies yield varying levels of predictive accuracy, future research will primarily focus on investigating the effects of different integration strategies on the prediction accuracy of pumping unit well system efficiency.

Conflicts of Interest

The authors declare that they have no conflicts of interest regarding this work. We declare that we have no commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Acknowledgments

This research was funded by the National Natural Science Foundation of China (grant no. 51974276).

Open Research

Data Availability

The dataset generated and analyzed during the current study will be provided by the first author upon reasonable academic request, within the scope of informed consent.