Fast Spectral Clustering via Efficient Multilayer Anchor Graph

Abstract

Recent studies have shown that graph-based clustering methods are good at processing hyperspectral images (HSIs), while falling short for large-scale HSIs due to high time complexity. Meanwhile, the performance of these methods relies on the quality of the constructed graph with selected anchor points. More anchor points bring better clustering results for graph-based methods. Time complexity, however, sees a considerable increase as the number of anchor points grows. Therefore, a method that can obtain efficient clustering accuracy and consumes less time is to be developed. Against this backdrop, a novel algorithm named fast spectral clustering via efficient multilayer anchor graph (FEMAG) is proposed to resolve the accuracy and time-consuming trade-off problem. First, FEMAG adopts superpixel principal component analysis (SuperPCA) to extract the low-dimensional features of HSIs. Then, a multilayer anchor graph is constructed to improve the clustering performance. When constructing the similarity graph, FEMAG takes balanced K-means-based hierarchical K-means (BKHK) to obtain outperforming anchor points efficiently. Extensive experiments validate that FEMAG achieves better clustering accuracy while taking less time compared to previous clustering methods.

1. Introduction

Hyperspectral images (HSIs) record abundant spatial and spectral information with different substances behaving differently at different wavelengths [1–3]. This advantage makes HSIs widely used in environmental monitoring, military detection, and medical diagnosis, among others [4–6]. Abundant information will also introduce redundancy in HSI classification or clustering, and labeled HSIs are labor-consuming work. To solve these issues, scholars tried to bend spatial and spectral information together while reducing the redundancy. For example, a spatial and spectral structure preserved self-representation model for unsupervised hyperspectral band selection without using any label information was proposed by Tang et al. [7] to solve this problem. Clustering analysis, as an unsupervised classification method, can learn the intrinsic similarity of unlabeled samples and divide them into different groups, which is one of the most popular methods in HSI processing [8–10]. According to spectral graph theory, the graph consists of vertices (data points) and edges, where the value of each edge indicates the number of its nearest neighbors [11–13]. Spectral clustering (SC) [14], fast SC [15], and sparse subspace clustering (SSC) [16] are typical graph-based clustering methods.

Graph-based clustering methods have great potential in HSI processing, which exploits the nonlinear pairwise similarity by data distances [17, 18]; therefore, scholars have made deep research on it and made great progress. For instance, Tang et al. [19] proposed unsupervised feature selection via multiple graph fusion and feature weight learning to optimize the quality of the similarity graph. Cai and Chen [20] proposed the landmark-based spectral clustering (LSC) method to get points of higher quality with K-means. Based on this, Huang et al. [21] presented the ultrascalable spectral clustering (U-SPEC) method, in which a fast approximation method with k-nearest representatives is used to construct the sparse affinity submatrix. Wang et al. [22] proposed scalable graph–based clustering with nonnegative relaxation (SGCNR) for large-scale HSIs to reduce the time complexity with stable accuracy.

- 1.

FEMAG constructs an anchor-based multilayer anchor graph to get higher accuracy and lower computing cost.

- 2.

To thoroughly investigate the spatial information of HSI, low-dimensional features are learned by SuperPCA. The computing burden, therefore, has been effectively alleviated.

- 3.

The parameter-free strategy is applied to achieve self-adaptive optimization of the heat-kernel parameter.

2. Overview of Graph-Based SC

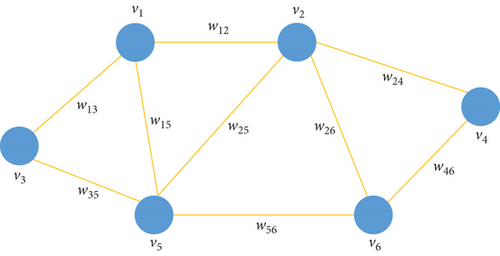

In graph-based theory, each data point (a pixel in the HSI) is viewed as a vertex, so we let G = {V, ξ, W} be a weighted graph without directions, where V denotes the set of vertexes and ξ denotes the set of edges describing the similarity of one-to-one vertexes. W = [wij] ∈ ℝn×n is the similarity matrix of the graph, which can be obtained by Gaussian function , where ‖•‖2 is defined by Euclidean distance between vectors and σ is the heat-kernel parameter, whose value has a direct impact on clustering performance and needs to be tuned manually in experiments. See the weighted graph in Figure 1.

For HSIs, denotes the data matrix, where n is the number of data points and d represents the dimension of the image.

3. Description of FEMAG Algorithm

Key steps of the FEMAG algorithm are as follows: (1) use SuperPCA to reduce the redundant information in an HSI so as to lighten the computation burden; (2) construct a multilayer anchor graph to better describe the relationship between data points while decreasing the time complexity; and (3) adopt balanced K-means-based hierarchical K-means (BKHK) to select the most representative anchors to increase the clustering efficiency.

3.1. Low-Dimensional Feature Generation With SuperPCA Algorithm

- 1.

Performing superpixel segmentation on initial HSI data according to the entropy rate of superpixels.

- 2.

Implementing PCA on smaller components and rearranging the obtained low-dimensional matrix to compose the reduced-dimensional data.

3.2. Anchor Generation With BKHK

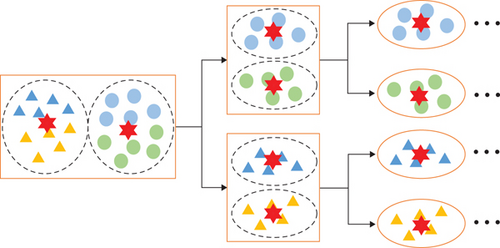

Anchor-based methods select m anchor points from n data points in the image, where m ≪ n. Several ways, such as random selection, K-means, and the BKHK algorithm [30, 31], are used to obtain anchor points. The random selection is simple and fast but fails to select good enough to construct the similarity graph. K-means can generate more representative anchors, but its time complexity is too high, making it hard to apply for large-scale HSI. BKHK segments data into two clusters with the same number iteratively and thus can select representative anchor points efficiently, which is suitable for large-scale HSIs. In this paper, we adopt BKHK to get brilliant anchors efficiently. Figure 2 demonstrates the schematic of the BKHK algorithm. Triangles and circles indicate raw data points, and different colors represent different categories. The red hexagon is the cluster center, and the BKHK algorithm generates data points for each layer by balanced K-means.

3.3. The Parameter-Free Anchor-Based Similarity Matrix

3.4. Multilayer Anchor Graph Construction

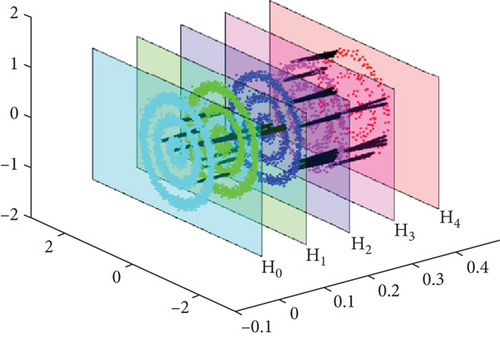

To provide a vivid description, the multilayer anchor graph is shown in Figure 3. In each layer, there are three-ring synthetic data, and the original layer H0 consists of 4000 data points. Then, K-means is used to select the following layers with H1 = 2000, H2 = 1000, H3 = 500, and H4 = 250 anchor points.

3.5. The FEMAG Algorithm

The time complexity of FEMAG can be approximated as .

To express the method more clearly, the detailed FEMAG is described in Table 1.

| Step | Details |

|---|---|

| Input | HSI data matrix X ∈ ℝn×d; the number of superpixels s; the reduced dimension ; the number of neighbors k; and the number of anchor points m1, m2, ⋯mh |

| Output | The class indicator c |

| Step 1 | Extract low-dimensional features of original data by SuperPCA |

| Step 2 | Generate anchor points by BKHK |

| Step 3 | Get matrix Z0,1Z1,2 ⋯ Zh−1,h according to Equation (7) |

| Step 4 | Achieve matrix ZH according to Equation (8) |

| Step 5 | Obtain matrix W according to Equation (10) |

| Step 6 | Get the related continuous solution of F by conducting SVD on matrix M |

| Output | Obtain the class indicator by K-means |

4. Experiments

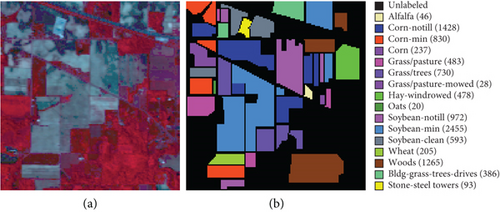

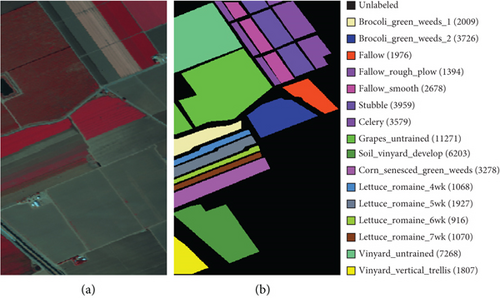

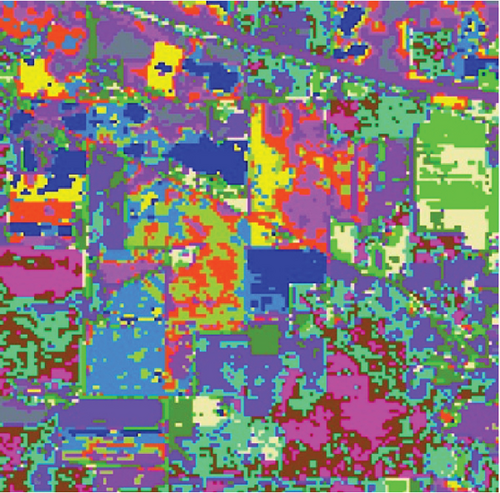

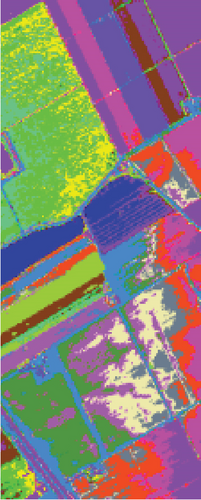

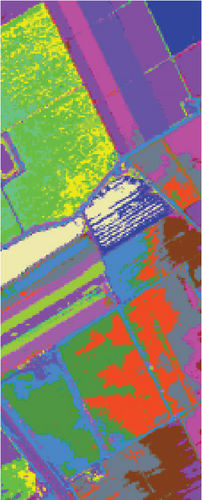

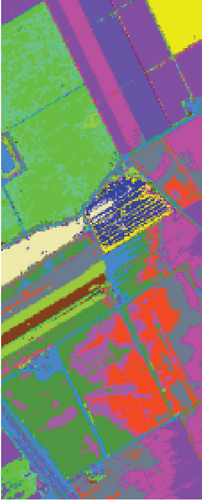

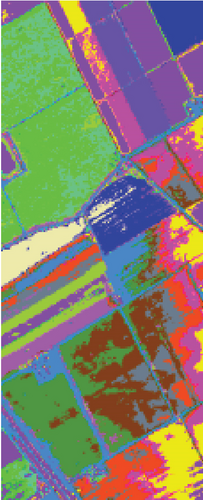

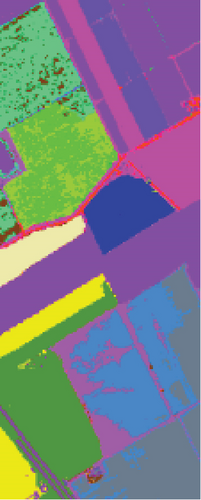

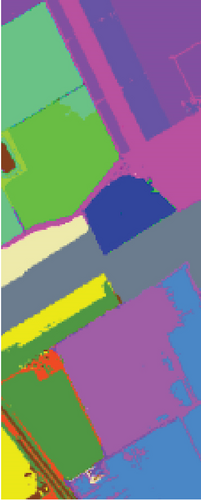

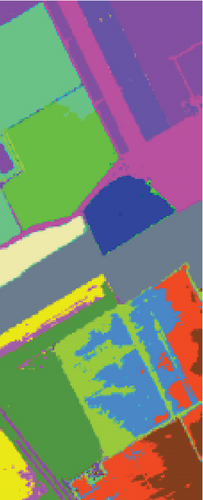

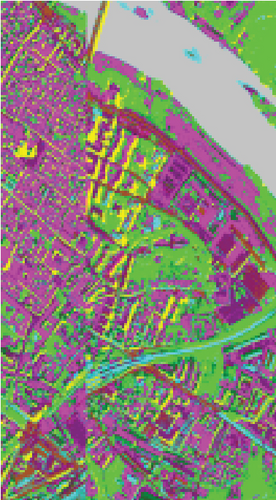

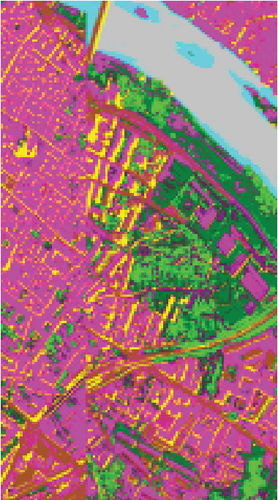

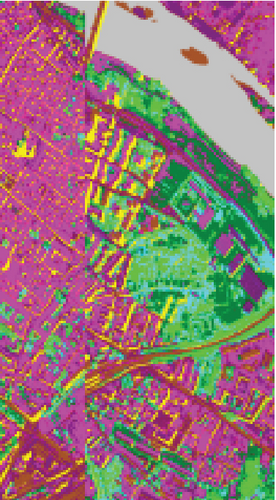

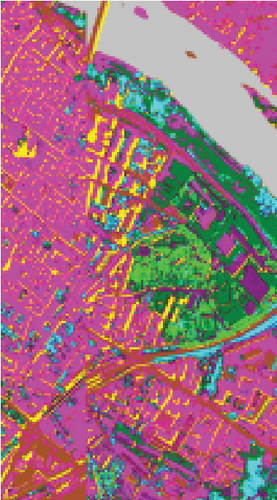

In this section, the HSI data sets used in experiments and the parameter setting are introduced at the beginning. To confirm the performance of FEMAG, we compare it with classic SC methods (named SC [34], LSC-R, LSC-K, U-SPEC, and SGCNR). FEMAG selects anchor points by random and K-means, respectively, marked as FEMAG-R and FEMAG-K. Then, the clustering time of different methods is analyzed. All the experiments are implemented on three open HSI data sets, Indian Pines (Figure 4), Salinas (Figure 5), and Pavia Center (Figure 6) by a PC with Intel i9-10980XE (36) @ 4.60GHz and 64-GB RAM, MATLAB 2021a.

In the experiments, Indian Pines is regarded as a small-sized data set, Salinas as a medium-sized one, and Pavia Center as a large-sized. Both clustering maps and index evaluations are provided to demonstrate the efficiency and effectiveness of FEMAG. Quantitative evaluations adopt the user’s accuracy (UA), average accuracy (AA), overall accuracy (OA), and kappa coefficient. Besides, the best clustering results are highlighted in boldface.

For clarity, we use FEMAG-m1, m2, ⋯, mh to denote the FEMAG method with h layer anchor graph. For example, FEMAG-512-256 means that there are two anchor layers in its graph structure, containing 512 and 256 anchor points, respectively. Moreover, FEMAG-m means the FEMAG contains m anchor points; for example, FEMAG-1024 means that the FEMAG contains 1024 anchor points.

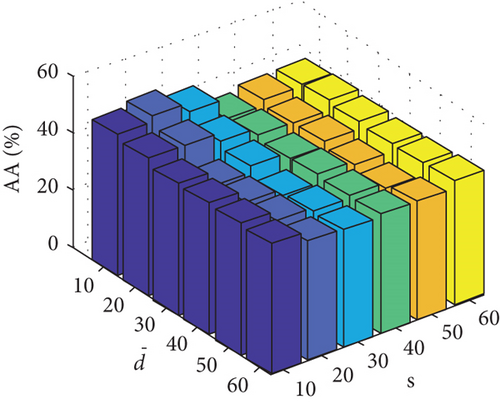

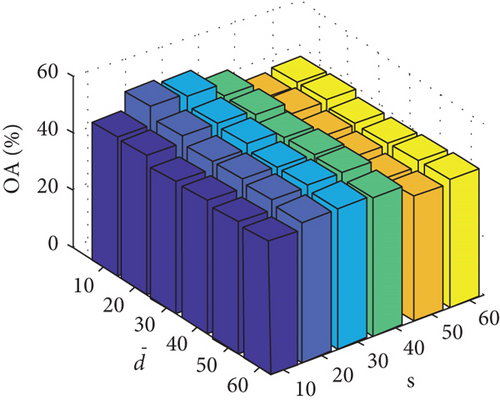

4.1. Parameter Sensitivity

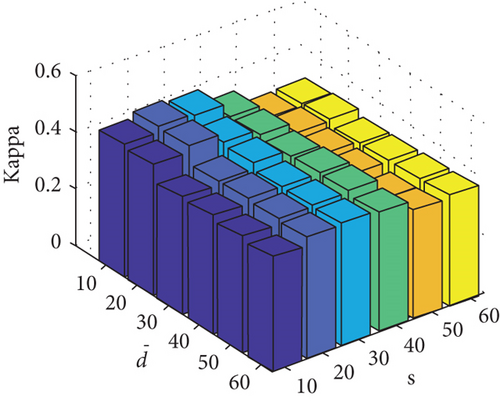

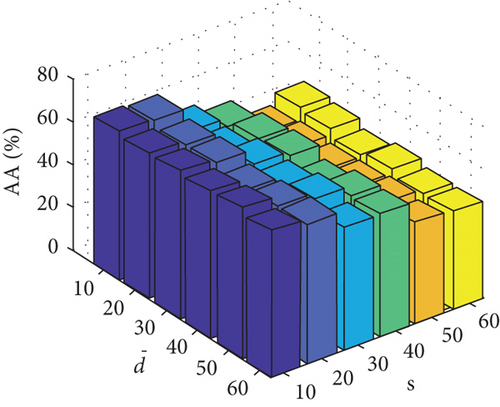

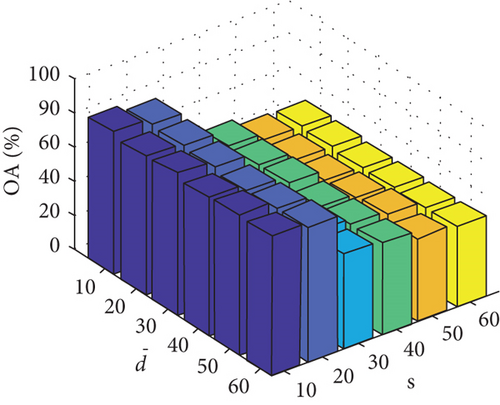

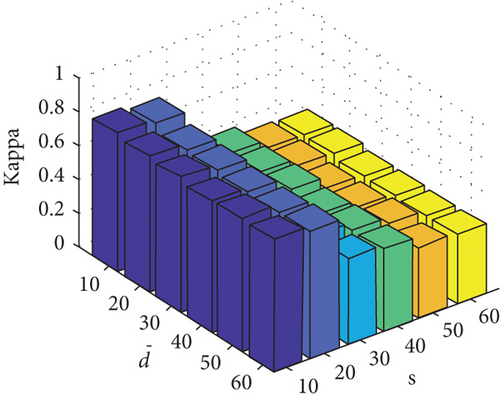

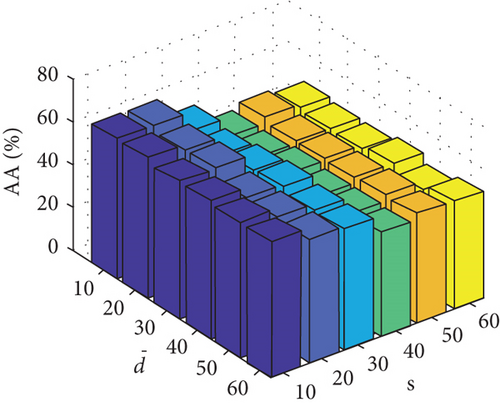

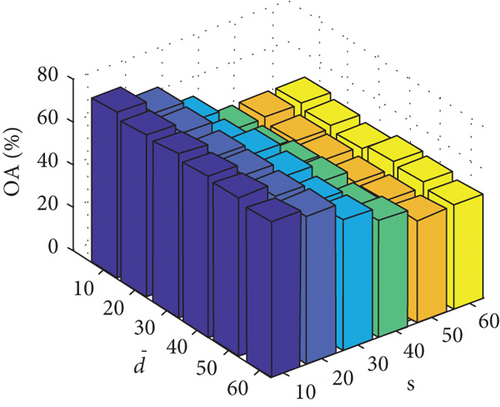

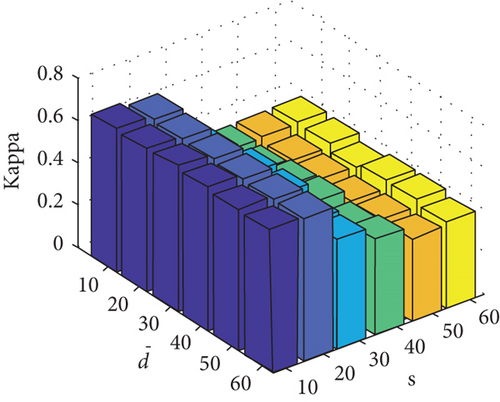

In this section, the parameter sensitivity of FEMAG will be assessed. SuperPCA includes three parameters: s, , and k. The clustering performance is mainly affected by s and ; k is related to the sparsity of matrix Z and has little effect on the time complexity.

Parameter sensitivity experiments are conducted on the three HSI data sets. By referring to Figures 7(a), 7(b), and 7(c), we see that the clustering performance depends mainly on the parameter s, and the parameter has little effect on the clustering performance. Note that the motivation of our FEMAG algorithm is efficient, it is reasonable to select a small s = 20 and for Indian Pines data set. Figures 7(d), 7(e), and 7(f) show that the clustering performance of FEMAG is relatively robust to parameter , noting that parameter s = 10 is the best performance achieved by the FEMAG algorithm. As shown in Figure 7(g), FEMAG is quite insensitive to with wide ranges of values. In terms of OA and kappa, we get similar observations. The result validates the above analysis. The smaller s can obtain better clustering results in the joint action.

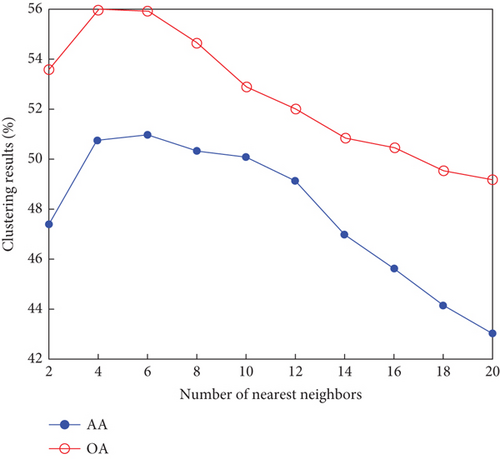

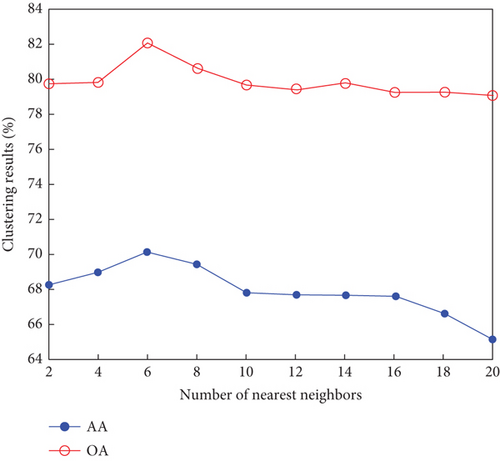

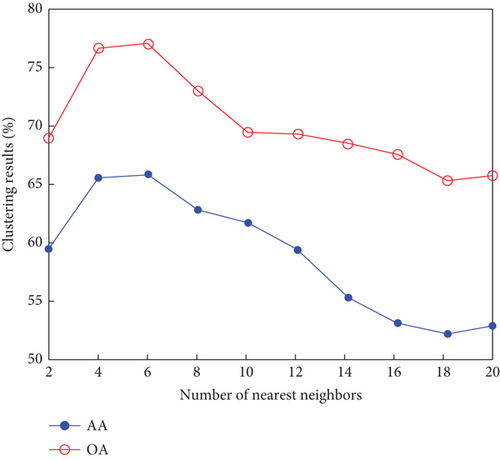

Finally, the sensitivity of parameter k involved in FEMAG is tested. For convenience, k varies from 2 to 20, and the corresponding results are shown in Figure 8, from which we can see that as a general trend, the clustering results become worse with the increase of the number of nearest neighbors and k < 10 making the result reasonable on the three HSI data sets. Therefore, it is acceptable to set k = 5.

A multilayer anchor graph is constructed in FEMAG for better clustering performance; therefore, it is a key point how sensitive the algorithm is to the layers of the anchor graph. Extensive experiments are conducted to test it, and the clustering results are shown in Tables 2, 3, and 4.

| Method | F-64 | F-128 | F-256 | F-512 | F-128-64 | F-256-128 | F-256-128-64 | F-512-256-128 |

|---|---|---|---|---|---|---|---|---|

| AA (%) | 38.61 | 43.72 | 46.67 | 48.57 | 44.74 | 50.15 | 51.54 | 53.27 |

| OA (%) | 52.41 | 54.49 | 48.16 | 54.30 | 53.95 | 55.12 | 57.21 | 62.37 |

| Kappa | 0.4610 | 0.4847 | 0.4329 | 0.4843 | 0.4823 | 0.5086 | 0.5215 | 0.5801 |

| Time (s) | 0.6 | 0.8 | 1.1 | 1.9 | 0.8 | 1.5 | 2.1 | 3.0 |

- Note: The best clustering results are highlighted in boldface.

| Method | F-64 | F-128 | F-256 | F-512 | F-128-64 | F-256-128 | F-256-128-64 | F-512-256-128 |

|---|---|---|---|---|---|---|---|---|

| AA (%) | 60.30 | 62.46 | 64.72 | 67.13 | 65.19 | 68.31 | 69.85 | 73.12 |

| OA (%) | 72.36 | 74.17 | 75.58 | 75.12 | 79.60 | 82.53 | 82.22 | 82.56 |

| Kappa | 0.6871 | 0.7082 | 0.7253 | 0.7224 | 0.7722 | 0.8052 | 80.08 | 80.49 |

| Time (s) | 2.6 | 4.1 | 7.2 | 10.5 | 6.8 | 9.10 | 10.64 | 12.1 |

- Note: The best clustering results are highlighted in boldface.

| Method | F-128 | F-256 | F-512 | F-1024 | F-256-128 | F-512-256 | F-512-256-128 | F-1024-512-256 |

|---|---|---|---|---|---|---|---|---|

| AA (%) | 55.53 | 58.03 | 61.30 | 64.54 | 61.27 | 65.52 | 65.86 | 66.89 |

| OA (%) | 73.22 | 73.22 | 74.85 | 75.77 | 74.40 | 76.63 | 76.79 | 77.56 |

| Kappa | 0.6341 | 0.6354 | 0.6517 | 0.6758 | 0.6523 | 0.6802 | 0.6941 | 0.7012 |

| Time (s) | 23.8 | 33.7 | 42.5 | 52.7 | 36.5 | 48.50 | 55.68 | 63.7 |

- Note: The best clustering results are highlighted in boldface.

From the left four columns of Table 2, it can be seen that as the number of anchor points grows, the FEMAGs with a single layer see increasing clustering accuracy (AA, OA, and kappa coefficient) and the same trend with the clustering time.

From the right two columns, it is clear that FEMAG with three layers, such as F-256-128-64 and F-512-256-128, has higher clustering accuracy with more anchor points. The AA, OA, and kappa have increased by 3.4%, 9.0%, and 11.2%, respectively, while the clustering time has also grown from 1.5 to 3.0 s, which is still acceptable.

Experiments have also been done in the Salinas and Pavia Center data sets and obtained the same conclusions.

These experiments and results illustrate that the number of layers and anchors depends on the real application requirements. The selection of layer number is to strike the balance between time complexity and clustering accuracy. For example, if high clustering accuracy is required while the clustering speed is tolerated, a more layer with a large amount of anchor point graph can be constructed; if HIS clustering needs to be completed in a short period of time and the accuracy requirement is not very high, then a less layer with less anchor point graph can be constructed to finish a fast clustering.

4.2. Small-Size Data Set

To evaluate the performance of clustering methods on small-size data, experiments are first conducted on the Indian Pines. For this small data set, we construct the anchor graph with 256 and 128 anchor points in FEMAG, written as FEMAG-256-128. The parameters of the FEMAG are set as k = 5, s = 20, and . In addition, the number of anchor points of algorithms LSC-R, LSC-K, U-SPEC, and SGCNR is set to 128 during the experiment.

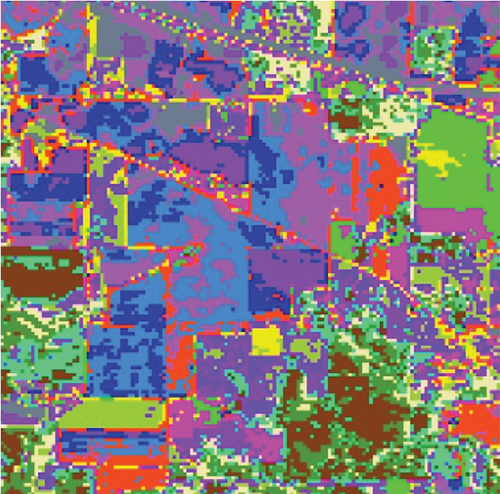

The clustering maps shown in Figure 9 and the corresponding evaluations and time consumed in Table 5 show that LSC-R gets unsatisfying clustering results incorporating numerous misclassifications, with the lowest OA of 31.26% and kappa coefficient of 0.2549. LSC-K and FEMAG-K perform better by significantly decreasing the misclassifications and improving by almost 4% and 11% in AA compared to randomly selected anchor points. Three graph-based clustering methods—LSC-K, U-SPEC, and SGCNR—obtain better clustering performance than SC.

| Class | SC | LSC-R | LSC-K | U-SPEC | SGCNR | FEMAG-R | FEMAG-K | FEMAG |

|---|---|---|---|---|---|---|---|---|

| Alfalfa | 0 | 0 | 0 | 10.87 | 0 | 0 | 0 | 0 |

| Corn-notill | 44.05 | 26.75 | 25.14 | 32 | 30.25 | 63.17 | 43.77 | 43.77 |

| Corn-mintill | 33.13 | 21.45 | 30.6 | 33.98 | 31.33 | 31.93 | 31.93 | 31.93 |

| Corn | 12.24 | 14.77 | 25.74 | 17.3 | 27.43 | 22.78 | 75.95 | 75.95 |

| Grass-pasture | 24.64 | 49.9 | 35.82 | 49.48 | 50.1 | 65.84 | 12.01 | 65.84 |

| Grass-trees | 30.41 | 47.95 | 44.52 | 34.52 | 53.70 | 60.27 | 60.27 | 39.73 |

| Grass-pasture-mowed | 0 | 92.86 | 0 | 32.14 | 0 | 0 | 0 | 3.57 |

| Hay-windrowed | 82.01 | 18.83 | 92.47 | 93.72 | 99.37 | 0 | 100.00 | 100.00 |

| Oats | 0 | 0 | 55 | 45 | 0 | 0 | 0 | 0 |

| Soybean-notill | 23.87 | 24.18 | 29.84 | 30.25 | 27.06 | 82.00 | 82.00 | 82.00 |

| Soybean-mintill | 24.52 | 28.8 | 29.98 | 30.88 | 31.28 | 43.99 | 43.99 | 43.99 |

| Soybean-clean | 16.86 | 27.15 | 21.42 | 22.43 | 16.69 | 64.59 | 64.59 | 96.29 |

| Wheat | 96.1 | 96.1 | 97.56 | 96.1 | 96.59 | 0 | 99.51 | 99.51 |

| Woods | 55.65 | 40.4 | 34.94 | 34.86 | 29.09 | 42.92 | 42.92 | 42.92 |

| Buildings-grass-trees-drives | 19.69 | 16.06 | 19.69 | 18.65 | 22.8 | 76.94 | 76.94 | 76.94 |

| Stone-steel-towers | 59.14 | 31.18 | 52.69 | 0 | 77.42 | 0 | 0 | 0 |

| AA (%) | 32.64 | 33.52 | 37.21 | 36.39 | 37.07 | 34.65 | 45.87 | 50.15 |

| OA (%) | 35.44 | 31.26 | 34.59 | 35.49 | 36.32 | 49.56 | 52.2 | 55.12 |

| Kappa | 0.2969 | 0.2549 | 0.2872 | 0.2907 | 0.3009 | 0.4427 | 0.4762 | 0.5086 |

| Time (s) | 38.0 | 1.4 | 2.3 | 0.8 | 10.1 | 1.0 | 3.7 | 1.5 |

- Note: The best clustering results are highlighted in boldface.

As is shown in Table 5, the FEMAG obtains the highest accuracy with an AA of 50.15%, which is 4.28%~17.51% higher than the FEMAG-R and FEMAG-K algorithms. For OA and kappa, the accuracies obtained by FEMAG are 55.12% and 0.5086, which are 2.92%~23.86% and 0.0324~0.2537 higher than FEMAG-R and FEMAG-K. For the eight classes of corn, grass-pasture, hay-windrowed, soybean-notill, soybean-mintill, soybean-clean, wheat, and buildings-grass-trees-drive, FEMAG obtains the highest precision of 75.95%, 65.84%, 100%, 82%, 43.99%, 96.29%, 99.51%, and 76.94%. The running times of LSC-R, LSC-K, U-SPEC, FEMAG-R, FEMAG-K, and FEMAG belong to the same order of magnitude.

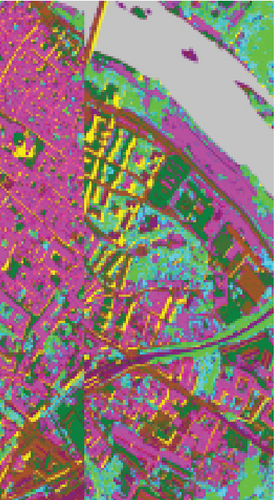

4.3. Medium-Sized Data Set

For the Salinas data set, a three-layer anchor graph with 256, 128, and 64 anchor points is constructed in FEMAG, marked as FEMAG-256-128-64. In addition, the parameters of FEMAG are set as k = 5, s = 10, and . The number of anchor points of algorithms LSC-R, LSC-K, U-SPEC, and SGCNR is set to 256 during the experiment.

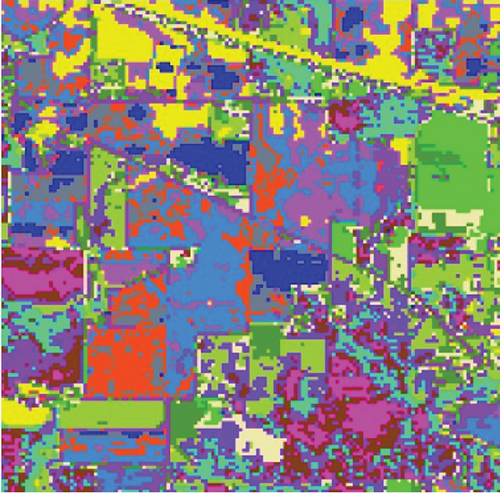

Table 6 shows the quantitative evaluations and running time, and Figure 10 shows the clustering maps, from which we can see that FEMAG obtains better clustering maps and produces more homogenous areas compared with other algorithms. It is obvious that FEMAG-K and FEMAG obtain better clustering performance than FEMAG-R, which fully shows that K-means and BKHK can select more representative anchor points. In addition, we can also draw this conclusion from the LSC algorithm.

| Class | LSC-R | LSC-K | U-SPEC | SGCNR | FEMAG-R | FEMAG-K | FEMAG |

|---|---|---|---|---|---|---|---|

| Brocoliweeds_1 | 0 | 99.70 | 98.36 | 98.41 | 100.00 | 100.00 | 100.00 |

| Brocoliweeds_2 | 65.59 | 40.77 | 54.32 | 81.51 | 99.92 | 99.84 | 99.54 |

| Fallow | 44.38 | 25.76 | 23.48 | 20.65 | 0 | 0 | 0 |

| Fallowrough | 99.71 | 98.78 | 99.86 | 96.92 | 99.28 | 99.78 | 99.57 |

| Fallowsmooth | 90.93 | 88.87 | 92.87 | 65.50 | 98.70 | 98.36 | 98.77 |

| Stubble | 96.64 | 98.81 | 97.73 | 93.74 | 97.30 | 99.57 | 99.67 |

| Celery | 53.20 | 99.30 | 99.39 | 99.41 | 99.92 | 99.92 | 99.92 |

| Grapesuntrained | 43.62 | 43.92 | 57.99 | 59.13 | 45.63 | 86.60 | 86.58 |

| Soil | 80.27 | 66.79 | 84.59 | 87.47 | 99.95 | 99.94 | 99.95 |

| Corn | 58.63 | 60.22 | 9.82 | 63.67 | 1.07 | 0.7 | 21.38 |

| Lettuce_4wk | 73.60 | 81.74 | 23.6 | 66.76 | 0 | 0 | 0 |

| Lettuce_5wk | 13.70 | 44.47 | 91.90 | 63.47 | 0 | 100 | 100.00 |

| Lettuce_6wk | 98.03 | 99.13 | 97.49 | 99.45 | 0 | 0 | 0 |

| Lettuce_7wk | 87.48 | 0 | 92.15 | 0 | 0 | 0 | 0 |

| Vinyarduntrained | 42.23 | 38.00 | 60.26 | 60.04 | 85.65 | 99.35 | 99.30 |

| Vinyardtrellis | 18.65 | 17.87 | 0 | 0.17 | 98.84 | 96.29 | 88.32 |

| AA (%) | 60.41 | 62.76 | 67.74 | 66.02 | 57.89 | 67.52 | 68.31 |

| OA (%) | 57.24 | 59.20 | 66.80 | 68.70 | 67.57 | 81.55 | 82.53 |

| Kappa | 0.5326 | 0.5553 | 0.6306 | 0.6528 | 0.6454 | 0.7943 | 0.8052 |

| Times (s) | 3.6 | 5.3 | 4.7 | 60.3 | 5.4 | 39.3 | 48.5 |

- Note: The best clustering results of each material are highlighted in boldface.

As seen in Table 6, the FEMAG outperforms other methods with AA of 68.31%, OA of 82.53%, and kappa of 0.8052, which are 0.79%~7.9%, 0.98%~25.23%, and 0.0109~0.2726 higher than other methods. It can also be seen that FEMAG-R, FEMAG-K, and FEMAG generate smoother clustering results than LSC-R in this scene, with the 10.33%, 24.31%, and 25.29% improvement in OA. The running time of LSC-R, LSC-K, U-SPEC, FEMAG-R, and FEMAG is of the same order of magnitude. In particular, the FEMAG only takes 9.1 s, which is six and four times faster than the SGCNR and FEMAG-K, respectively. Note that SC cannot work on the Salinas data set due to an “out of memory (OM)” error because the Salinas data set has 111,104 pixels (samples) whose size is too large for SC.

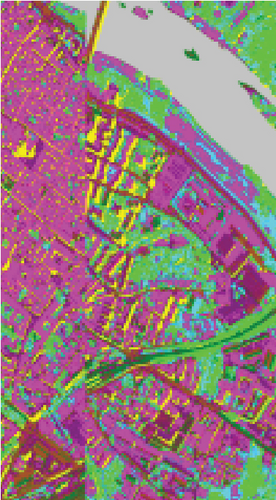

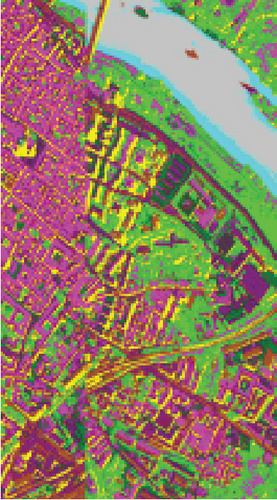

4.4. Large-Sized Data Set

To demonstrate that FEMAG can perform well on large-sized HSI data, experiments on the Pavia Center data set are implemented. We construct a two-layer anchor graph with 512 and 256 anchor points in FEMAG, marked as FEMAG-512-256. In addition, the parameters of FEMAG are set as follows: k = 5, s = 10, and . Then, the number of anchor points in algorithms LSC-R, LSC-K, U-SPEC, and SGCNR is set to 256.

The clustering maps of the Pavia Center data set are shown in Figure 11, which shows that FEMAG achieved the best clustering results; meanwhile, FEMAG, FEMAG-K, and SGCNR obtained smoother clustering maps than other algorithms by taking spatial information into account.

By referring to Table 7, FEMAG obtains the highest precision with the best AA of 65.52%, increased by 4%~15% than other competitors; its OA is 76.63%, increased by 2.5%~9.8% than other competitors; and kappa coefficient of 0.6802, which has increased by 3.2%~13.9%. The performance of FEMAG is better than FEMAG-R and FEMAG-K, which is attributed to the effectiveness of the BKHK algorithm and anchor-based graph construction strategy. Water and asphalt classes are effectively distinguished, and the recognition level is much higher than that of other methods.

| Class | LSC-R | LSC-K | U-SPEC | SGCNR | FEMAG-R | FEMAG-K | FEMAG |

|---|---|---|---|---|---|---|---|

| Water | 98.55 | 98.7 | 98.97 | 90.04 | 90.01 | 96.51 | 99.56 |

| Trees | 99.8 | 69.01 | 75.89 | 72.11 | 56.25 | 54.11 | 23.22 |

| Asphalt | 0 | 9.06 | 10.49 | 0 | 0 | 1.2 | 49.39 |

| Self-blocking bricks | 0.19 | 96.35 | 0 | 67.64 | 58.96 | 58.36 | 58.77 |

| Bitumen | 54.42 | 39.66 | 30.59 | 26.22 | 61.33 | 48.56 | 48.6 |

| Tiles | 81.53 | 84.69 | 82.96 | 71.27 | 88.89 | 75.58 | 88.57 |

| Shadows | 55.78 | 1.3 | 61.04 | 76.45 | 19.49 | 65.12 | 65.82 |

| Meadows | 43.76 | 56.66 | 52.49 | 63.09 | 50.6 | 44.38 | 55.9 |

| Bare soil | 99.9 | 99.86 | 99.9 | 99.93 | 99.9 | 99.9 | 99.86 |

| AA (%) | 59.33 | 61.7 | 56.92 | 62.97 | 58.38 | 60.41 | 65.52 |

| OA (%) | 73.84 | 74.84 | 74.82 | 74.56 | 69.82 | 71.67 | 76.63 |

| Kappa | 0.6484 | 0.6589 | 0.6551 | 0.6576 | 0.5974 | 0.6204 | 0.6802 |

| Times (s) | 23.4 | 28.2 | 12.7 | 129.2 | 25.3 | 241.5 | 48.5 |

- Note: The best clustering results of each line are highlighted in boldface.

The table shows that the running times of LSC-R, LSC-K, U-SPEC, FEMAG-R, and FEMAG are 23.4, 28.2, 12.7, 25.3, and 48.5 s, respectively. As the scale of the data set grows, the total number of samples increases to 783640 in the Pavia Center data set. Focus on six graph-based clustering methods, FEMAG needs 48.5 s, and SC cannot work on it due to “OM error.” Since the anchor-graph and BKHK strategies are adopted, the graph construction is rather fast, and the clustering performance is also relatively outstanding.

5. Conclusion

The performance of clustering methods is based on anchor graphs as well as how anchors are selected. To tackle the problem, we proposed the FEMAG algorithm. First, SuperPCA learns the intrinsic low-dimensional features of HSIs. Second, a multilayer anchor graph is constructed, which can effectively reduce the time complexity while better describing the relationship between pixels and anchors. Third, other than the K-means method, BKHK can select anchors much faster. Experimental results demonstrate that FEMAG with BKHK is 3.4 times faster than FEMAG with K-means, and the OA of FEMAG is 20.1%~44.2% higher than other methods, which means FEMAG significantly outperforms state-of-art methods.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Yiwei Wei and Chao Niu designed the research. Dejun Liu and Peinan Ren modified the draft.

Funding

This study was financially supported by the National Natural Science Foundation of China (No. 52272446).

Acknowledgments

This study was financially supported by the National Natural Science Foundation of China (No. 52272446).

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.