SFC-Based IoT Provisioning on a Hybrid Cloud-Fog Computing with a Minimized Latency

Abstract

The increasing heterogeneity of Internet of Things (IoT) service demands makes it hard for computing nodes to provide the required computing resources and link bandwidth. To address this, IoT requests have been virtualized and organized into service function chains (SFCs), each of which needs a host among hybrid cloud-fog computing nodes. A typical migration algorithm has been used to find a suitable host for each service function (SF) request. However, this approach delays the response because of the propagation delay incurred while searching for a valid nearby node, and mission-critical service demands may not be served at all. This article addresses the issue by proposing two novel approaches: nearest candidate node selection (NCNS) and fastest candidate node selection (FCNS). These approaches employ software-defined networking (SDN) controllers and the M/M/1 queuing model (Markovian arrival process, Markovian service process, and a single host) to estimate the maximum possible latency each computing node would incur in serving an SF demand, and then assign the SF to the node with the least latency. These methods enable heuristic monitoring of computing resources, so that the most suitable host computing node can be selected based on proximity or minimal round-trip time. Moreover, priority-based fastest candidate node selection (PB-FCNS), an adaptation of FCNS, accounts for concurrent service requests using the G/G/1 queuing model (general arrival process, general service process, and a single host node). Compared with traditional migration algorithms, NCNS and FCNS reduce round-trip time by 5% and the probability of unsuccessful service function chains by 55%. Despite the installation cost, employing these methods together with SDN controllers can reduce latency, maximize service success rates, and guarantee the delivery of heterogeneous service functions.

1. Introduction

Service function chaining (SFC) is a networking technology that creates a chain of network services to process and manipulate traffic as it flows through the network [1]. SFC has become a critical technology for managing and orchestrating complex network services such as IoT applications [1]. Because IoT nodes need large amounts of storage and processing capacity, IoT networks must be combined with cloud and fog computing nodes, which enable services with very low latency, very high availability, high throughput, very high security, and very low cost [2]. Industrial automation, manufacturing processes, and service technologies are interwoven networks of machines and tools that require cutting-edge advances such as big data processing, artificial intelligence, advanced robotics, and dependable interconnectivity to optimize energy use and to enhance flexibility and workforce efficiency [2, 3]. For instance, cloud-based product quality monitoring in smart manufacturing has significantly improved product quality, reduced costs, and increased operational efficiency. The real-time monitoring and analysis capabilities of such systems can prevent product defects and minimize waste, while their predictive functions can optimize the production process and reduce downtime [4].

In a heterogeneous IoT system with mission-critical and nonmission-critical devices, service function chaining can be used to optimize the flow of data and processing [5]. SFC can route data to the appropriate processing and storage resources based on the specific requirements of the devices and the data. For example, mission-critical devices often require real-time processing and high storage capacity, while nonmission-critical devices may have less demanding processing and storage needs. Service function chaining can route data generated by mission-critical devices to dedicated resources capable of handling their processing and storage requirements efficiently [5], while data generated by nonmission-critical devices can be directed to less powerful resources. This approach helps optimize resource use and ensures that mission-critical devices receive the processing and storage capacity they need to operate effectively.

The hybrid cloud-fog computing model combines the benefits of the cloud and fog computing (including edge computing) to provide a solution for processing and storing IoT data [6]. It leverages cloud servers’ computing power and storage capacity and the low-latency processing and real-time analytics capabilities of fog computing and edge devices to provide a flexible, scalable, and efficient computing infrastructure for IoT applications [7, 8].

In a heterogeneous IoT system with service function chaining, hybrid cloud-fog computing can provide an optimal balance between processing and storage capacity on the one hand and low-latency services on the other. Cloud resources can provide the necessary processing and storage capacity for mission-critical devices, while fog computing resources can provide low-latency data aggregation and processing for all devices, including nonmission-critical ones. Service function chaining can be implemented in the cloud and fog layers to route the data flow to the appropriate processing and storage resources based on the specific requirements of the devices and the data [7]. The authors of [9] proposed a secure real-time fog computing strategy for IoT in smart manufacturing. By capitalizing on fog computing, companies can mitigate the risk of security breaches and improve the efficacy of their IoT applications. Nonetheless, the effectiveness of a specific computing node in an IoT application may depend on the unique demands of that application and on the capabilities of the fog components being leveraged [9].

Over the last decade, software-defined networking (SDN) has radically changed how communication between service function chains (SFCs) and hybrid computing nodes is facilitated and managed. Once orchestrated into SFCs, heterogeneous IoT devices can integrate with SDN devices [1]. These paradigms have become pillars of high-speed Internet connectivity and high availability.

SFC involves the interconnection of two or more ordered or partially ordered SFs to support specific application requirements [10, 11]. OpenStack defines SFC as the SDN version of policy-based routing (PBR). SFC enables the creation and management of complex network services that involve multiple functions and can dynamically steer traffic through a specific sequence of network services to meet the requirements of different applications, users, or policies [12, 13]. The SDN controller automates and reconfigures the network of service functions and computing nodes without changing the hardware setup, thus enabling efficient provisioning and scheduling [14]. A service is governed by a policy, which consists of a set of guidelines that dictate how data are transferred between a virtual network and specific service instances. SFs, which are deployed on diverse IoT physical devices such as sensor networks and actuators, serve as the organizational structure for virtual network functions (VNFs). With network function virtualization (NFV), functions that previously required proprietary hardware can be moved to software running on virtual servers and integrated with the cloud [15]. NFV can be used as a microservice-based network function running in peripheral devices, sensors, actuators, and computing nodes. Hence, to facilitate seamless scalability of network systems, policies are regularly updated, control is centralized, and reliable connectivity is maintained within computing data centers [16].

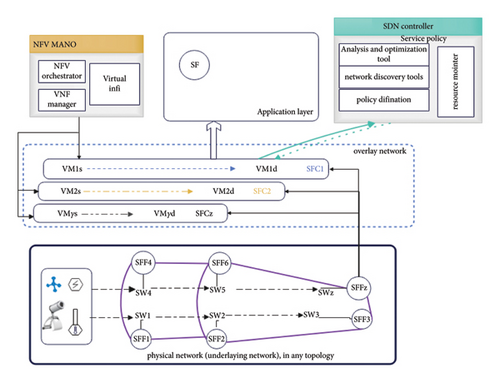

The SDN data plane switches play a crucial role in forwarding data from service functions and feedback from computing nodes under the command of the SDN controller [17]. VNFs are an integral part of network function virtualization (NFV), which provides a distinct method of organizing and managing virtualized network functions, computing resources, and applications. In service function chaining, a sequence of interrelated VNFs is coupled into a single chain to meet specific application requirements, as shown in Figure 1 [6, 20]. In addition to supporting traffic engineering techniques that optimize network paths, the SDN controller can assist in dynamic service assignment.

In the physical network, service function forwarders (SFFs) receive traffic from the underlying network switches and apply forwarding policies to direct packets to specific service functions (SFs) [18, 19]. Source and destination virtual machine (VM) instances, managed by the NFV manager tool typically provided by the edge network, carry traffic on top of the underlying network as a virtual overlay network, maximizing the programmability and reconfigurability of our SFC network.

In this article, we aim to minimize the overall round-trip latency between the SFs and the computing nodes and to maximize the number of successful SF requests by optimally placing SFs on hybrid cloud-fog computing nodes. As shown in Figure 1, we use the SDN controller's analysis and optimization tool to calculate the maximum possible round-trip delay for deploying SFs to an available computing node. The SDN controller's resource monitoring service can heuristically monitor the SFs, link bandwidth, and computing node resources. Given the heterogeneity of SFs' resource requirements, our goal is to minimize the propagation time needed to find a suitable host computing node by avoiding service migration. Although heuristic dynamic SFC assignment techniques for hybrid cloud-fog computing nodes already exist [21, 22], we improve the delay calculation by considering the impact of both current and previous SFs' traffic on queuing at the computing node, using the SDN controller as a central service and resource master. To achieve this, we apply queuing disciplines to the existing heuristic dynamic assignment.

We propose NCNS and FCNS to find the optimal host computing node for each SF request. Both apply the M/M/1 queuing model [23] to estimate the maximum possible waiting time at a given computing node. The two algorithms differ mainly in one respect. NCNS searches for the optimal computing node starting from the closest one until a valid host is found. FCNS, on the other hand, heuristically searches for the computing node with the minimum possible round-trip service time among the candidates that have sufficient resource capacity and link capacity.

In addition, we address a major limitation of existing algorithms: they struggle to assign concurrently arriving SFs because the SFs are not obliged to send requests to the controller [21, 24]. We propose a priority-based FCNS algorithm that handles concurrent SFC requests with the G/G/1 queuing discipline [25]. The SDN controller gives priority to mission-critical services by taking into account active, previously assigned SFs, currently arriving SFs, and the real-time performance of the computing nodes.

Our main objectives are as follows:

- (1) Minimize the maximum possible round-trip delay required to serve SFC requests, improving the quality of service (QoS) and increasing the success rate of each request.
- (2) Enhance the SDN controller's decision-making by developing a priority algorithm for concurrently arriving mission-critical SFC requests on a hybrid computing model.

The article is structured into sections covering Related Work, Methodology (including system model and algorithms), Results and Discussion, and Conclusion.

2. Related Work

The authors of [16] use web services based on the REST architecture and network function virtualization (NFV) for their IoT application's southbound interface and for automated management of network resources. We, on the other hand, leverage SFC service policies and SDN controllers to manage computing resources for heterogeneous traffic.

In [17], the authors formulate the SF-appliance determination and routing orchestration (SIDE) problem for hybrid SFC networks and solve it with a Markov approximation technique based on the assumption that "every local decision can construct a global solution." Their approach aims to maximize the weighted utility of the network using integer programming with quadratic constraints (IPQCs) while considering the routing penalty and the NFV market budget. Simulation results show that their approach can effectively optimize SFC in hybrid network function clouds. However, it relies on a precomputed destination node, which limits its flexibility in meeting the varying computing resource demands of heterogeneous IoT. In addition, the IPQC formulation may become computationally expensive for large-scale networks with many sessions and network function appliances.

The use of mobile edge-cloud (MEC) technology-based service delivery has been proposed in [26]. Migration strategies based on cost, time, bandwidth, and energy consumption models have been suggested for traffic between MEC stations [26]. However, it is important to consider the potential increase in latency and complexity that can result from having packets propagate through the entire route during migration. Further research is required to develop more efficient migration techniques that can minimize latency and complexity while ensuring seamless data transfer between MEC stations.

In addition to MEC and energy-efficient resource allocation, the optimization of computing resource blocks (CRBs) for fog computing-dependent IoT systems has also been studied [22]. The main objective of [22] was to find optimal rental costs for the resources provided to fog nodes. However, the study did not address the direct allocation of IoT resources to fog nodes.

The authors in [27] propose a federated edge-assisted mobile cloud architecture for service provisioning in heterogeneous IoT environments. The architecture is designed to provide computational and storage resources to IoT devices in a distributed manner, leveraging both edge and cloud infrastructures. Despite having a similar objective as ours to reduce latency and increase the availability of resources, the architecture does not implement an SDN controller or queuing model that can support concurrent services and dynamic reconfiguration of resources.

Another approach to efficient service delivery for IoT systems is to allocate service requests to physical resources while considering energy constraints [28]. A genetic algorithm has been proposed for finding optimal deployments with and without energy constraints, and the results suggest that it is beneficial to account for the energy requirements of virtual network functions (VNFs) when allocating resources. Because the method supports only static assignment of VNFs to physical resources, it may not be suitable in all cases, as the energy requirements of VNFs may change over time [28].

The authors in [29] aimed to address the challenges of supporting edge computing systems with high-mobility and high-density vehicle environments. To achieve this, they proposed a caching strategy that facilitates collaboration between edge and cloud computing nodes. This approach allows for the optimization of data migration based on the deployment of vehicular content caching (VEC), the density of vehicle requests, and the similarity of the requested data. To implement this caching strategy, the authors utilized software-defined networking (SDN) to manage the transfer of data to storage locations. They also included a cache update mechanism that allows computing storage spaces to inform other nodes of any changes. In addition, they developed a popularity prediction model that predicts the popular content that vehicles will request soon. To encourage vehicles to contribute their caches and computing resources, the authors also included an incentive mechanism. This mechanism rewards vehicles that contribute more resources with quicker content delivery. Overall, this caching strategy is designed to minimize the time taken to obtain the required supporting data and improve the performance of edge computing systems in high-mobility and high-density vehicle environments.

Another approach, proposed in [30], uses Kubernetes container-based service chains on fog-cloud computing nodes. The SFC controller optimizes resource provisioning in Kubernetes based on network latency and bandwidth, which the default Kubernetes scheduler does not support. However, increasing the number of replicas may lead to node evictions if resources are unavailable. Dynamic strategies are needed to refine the allocation scheme further in response to bandwidth fluctuations and delay changes, because SFC requests are themselves heterogeneous.

Dynamic provisioning of SF requests was explored in [21] using migration between fog-to-fog, fog-to-cloud, and cloud-to-cloud nodes based on three major constraints: memory, processing unit, and link bandwidth [21]. The migration starts at the computing node nearest to the user who made the service request and continues to the next nearest node. Although the provisioning of SFCs on hybrid computing nodes in [21] is claimed to be dynamic, the data must propagate through the nodes until a valid computing node is found, which adds latency and complexity. In addition, processing time and processing memory should not be treated as nonreusable resources, because SFs are not permanently assigned to their host.

A mixed-integer linear programming (MILP) model has been proposed for placing different application components on hybrid cloud-fog systems with the help of network function virtualization, with the aim of minimizing computational cost [31]. Notably, the authors did not set any latency requirement for the IoT system, although some of the applications are time-sensitive. Furthermore, the mapping was static: once an IoT system is deployed to a particular cloud or fog node, it remains mapped to that node [31].

The article in [32] discussed an IoT computation offloading system that operates across three layers: ground, aerial, and space. The ground layer comprises IoT devices that perform specific computation tasks, such as seismological surveillance and forest fire monitoring, in remote areas. Unmanned aerial vehicles (UAVs) in the aerial layer act as edge nodes, providing edge computing and caching capabilities to the ground IoT devices. In the space layer, a low Earth orbit (LEO) satellite offers cloud computing services to its coverage area. The authors formulated a joint optimization problem that minimizes the maximum delay among IoT devices subject to maximum available energy and tolerable delay constraints. The problem involves optimizing association control, task assignment, power control, bandwidth allocation, UAV computation resource allocation, and UAV positions. Similarly, in [33], an adaptive role assignment algorithm dynamically assigns target hunting and communication relay roles to each 3U vehicle based on its location and mobility, specifically for underwater applications. Unlike our approach, [32] does not include any device responsible for facilitating and monitoring task allocation at each layer, so requests must iterate through all available devices to ensure fair task allocation or offloading.

In [34], distributed hash tables (DHTs) are used to avoid network flooding in mobile ad hoc networks (MANETs), but they lack support for high mobility because of increased overhead, complexity, collisions, and network inconsistency. Although DHTs are popular in peer-to-peer (P2P) networks, they are not recommended for SDN overlays, because the SDN controller can manage the network without the additional configuration and synchronization complexity. P2P networks use DHTs for node and resource identification, caching overlays, and content delivery [35]. In our IoT-focused case, however, efficiently locating and identifying computing resources is the priority [36]. DHT-based elastic SDN controllers [37] focus on managing the number of deployed controllers, which is beyond our current scope.

The authors of [24] proposed a dynamic planning model that uses deep reinforcement learning (DRL) to solve the SFC placement problem on a fog computing and communication network (FCCN). The proposed algorithm aims to minimize latency, balance service costs against quality of service, and maximize long-term cumulative reward. It successfully reuses virtual network functions (VNFs) and deploys more requests with lower latency, more efficient resource consumption, and better QoS than other methods. However, the approach does not consider the dynamic distribution of deployed VNFs over time, and it does not address failure restoration or parallel VNF processing. We use the G/G/1 queuing discipline to handle concurrently arriving SFs.

Overall, these studies noted the importance of considering various factors such as latency and bandwidth when designing service delivery mechanisms for IoT systems. While different approaches have been proposed, there is still a need for further research to develop more efficient and effective solutions.

3. Methodology

3.1. System Architecture

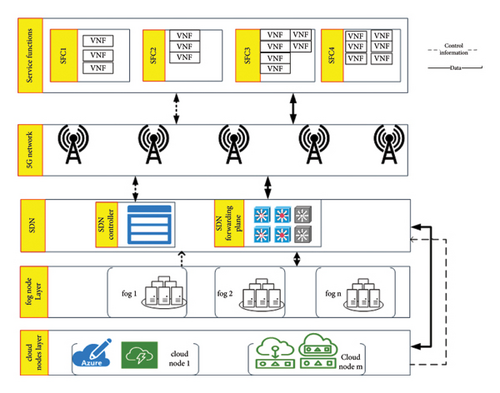

To provide a complete service, heterogeneous IoT systems are organized as virtual network functions (VNFs) that are dynamically coupled into their respective service functions (SFs) based on geography, time, and interrelation [5, 38, 39]. All service chains are connected to a network containing the SDN controller and the SDN data plane. The SDN controller collects the real-time status of the available computing resources of all computing nodes, analyzes these resources, and creates and monitors the network of SFCs and computing nodes.

We perform analytical calculations to determine the capacity and delay of both the links and the computing nodes. Various factors can affect the performance of links and computing nodes at any given time, including congestion, network load balancing, multiprotocol label switching (MPLS), and traffic shaping. In our analysis, we assume that the links can always handle the traffic, because the bandwidth adapts dynamically to the overlay network. Our focus is on the virtual network nodes dedicated to serving the IoT system. Our primary objective is not only to select the optimal path but also to choose the ideal computing node for a specific service function (SF).

In Figure 2, the service functions encapsulate VNFs and exchange control information with the SDN control plane and data with the SDN data plane through network nodes. Computing nodes are also connected to the SDN control plane and the SDN data plane. Thereby, the SDN controller computes an optimal connection based on the requested VNF type and available computing resources.

3.2. System Model and Problem Formulation

We assume that Z SF requests [S1, S2, S3, …, SZ] arrive within the period T and that each SF has I interrelated VNFs [γ1, γ2, γ3, γ4, …, γI] based on the grouping criteria. The type and number of VNFs in each SF are determined by a predefined context-aware or domain-level load-balancing policy [40, 41]. For instance, in industrial IoT, the service policy might group the VNFs of the same sequential feeding system or closed-loop system into a single SF [42].

Clients are responsible for purchasing adequate computing resources at the computing node, as they can use a “pay as you go” model for these resources [43]. The computing resources required for a process include processor speed, storage for incoming data and computation, and security measures. The SDN controller also maintains information to optimize resource usage at each computing node, including the relative location of each node from the SDN controller, the remaining processing speed and working memory while serving multiple SFs, and the remaining storage memory from the initial deal. This information is updated at a fixed time interval and used to calculate the maximum possible delay for serving an SF request at that computing node. All the above terms and their symbols are listed in Table 1.

| Parameters | Symbol |
|---|---|
| Available processing memory, storage memory, and processing speed at node y | Qp(y), Qs(y), Ψ(y) |
| SFs, number of VNFs in each S, location of the request from the controller, and time delay per unit of traffic between base stations | S, Γ, L, Tp |
| Request traffic (Erlang), maximum tolerable delay, request control information traffic, information traffic from the controller to the request, and bandwidth between the request and the controller | Ar, δγmax, Arc, Acr, βγc |
| Requested processing memory, requested bandwidth, requested storage space, and requested processing speed | Qpγ, βγ, Qsγ, Ψγ |
| Setup delay, and propagation, queuing, processing, and transmission delays by the cloud or fog computing node | Dset, Dpropx(y), Dquex(y), Dprocx(y), Dtranx(y) |
| Number of cloud nodes and number of fog nodes | n, m |
| Propagation speed of the medium between 5G base stations, and between the SDN data plane and the yth computing node | ∂, ∂(y) |

3.2.1. Delay Calculation

(1) Setup Delay. The time taken by an SF to make a request and for the SDN controller to respond is an important factor in network latency. SFs send the necessary network control information to the SDN controller, which calculates and responds with the chosen computing node. The setup delay depends on whether an in-band or out-of-band connection is established between the SDN controller and the network nodes. Since our focus is not on enhancing flow rules, we opt for an in-band connection to ensure that instructions from the SDN controller reach the network nodes. However, there is a key distinction from the approach in [44]: we use the in-band connection only to transmit information about the packets' characteristics, not the packets themselves. As a result, the setup delay is small compared with the other delays.

In addition to exponentially distributed interarrival times for the SFs, the M/M/1 queuing model assumes exponentially distributed service times; that is, the time needed to serve each service request is an exponential random variable. With this model, we can estimate the expected waiting time of service requests in a queuing system with a single host (i.e., one host computing node). The model assumes that service requests arrive independently of each other and that the service time of each request is independent of the arrival times and service times of other requests [23].
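As a worked illustration of the M/M/1 quantities the text relies on, the sketch below computes the standard steady-state results for arrival rate λ and service rate μ: utilization ρ = λ/μ, mean number in system ρ/(1 − ρ), mean queuing delay Wq = ρ/(μ − λ), and mean time in system 1/(μ − λ). These are textbook M/M/1 formulas, not the article's equations; the function and field names are hypothetical, and rates are assumed to be in requests per second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MM1Metrics:
    utilization: float          # rho = lambda / mu
    mean_in_system: float       # L = rho / (1 - rho)
    mean_wait_queue: float      # Wq = rho / (mu - lambda), seconds before service starts
    mean_time_in_system: float  # W = 1 / (mu - lambda), waiting plus service, seconds


def mm1_metrics(arrival_rate: float, service_rate: float) -> MM1Metrics:
    """Steady-state M/M/1 metrics; both rates in requests per second (assumed units)."""
    if arrival_rate < 0 or service_rate <= 0:
        raise ValueError("arrival_rate must be >= 0 and service_rate > 0")
    if arrival_rate >= service_rate:
        # rho >= 1: the queue grows without bound, so the node cannot host more SFs.
        raise ValueError("unstable queue: arrival_rate must be < service_rate")
    rho = arrival_rate / service_rate
    return MM1Metrics(
        utilization=rho,
        mean_in_system=rho / (1 - rho),
        mean_wait_queue=rho / (service_rate - arrival_rate),
        mean_time_in_system=1 / (service_rate - arrival_rate),
    )


m = mm1_metrics(arrival_rate=8.0, service_rate=10.0)
print(f"rho={m.utilization:.2f}, Wq={m.mean_wait_queue * 1000:.0f} ms, "
      f"W={m.mean_time_in_system * 1000:.0f} ms")
# prints: rho=0.80, Wq=400 ms, W=500 ms
```

The example shows why queuing dominates near saturation: at 80% utilization a request waits four service times on average before it is served.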

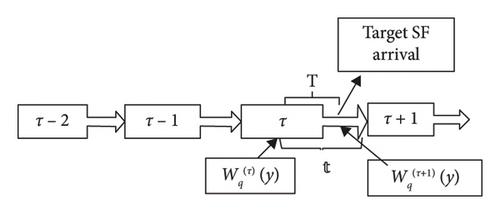

The SDN controller has a memory management system that stores at least the information needed for the current calculation [45]. The SDN controller updates control information about the computing nodes at a fixed time interval. Suppose that the last update occurred at τ, that T is the time from τ to the arrival of the target SFs, and that T is less than the interval from τ to the next update, τ + 1, as shown in Figure 3. In addition to the queuing delay Wq(τ)(y) registered at the last update, SFs arriving between τ and τ + T require additional waiting time, given by ST(y), where T is the time elapsed while serving the SFs in the queue.

We now have ST(y), Wq(τ)(y), and T. By substituting equation (10) into equation (9), we obtain the maximum possible queuing delay experienced by the service function.

3.2.2. Capacity Constraints

The processing speed and processing memory of the yth computing node must be greater than the sum of the processor speed and processor memory required by all VNFs in the SF. Likewise, the remaining storage of the yth computing node must exceed the sum of the storage requirements of all VNFs in that SF.
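The capacity constraints can be expressed as a simple check: sum each requirement over the SF's VNFs and compare the totals with the node's remaining resources. The sketch below is a minimal illustration, assuming the strict "greater than" reading of the text; the class and field names are hypothetical, with units following Table 1 (GHz for processing speed, GB for memory and storage).

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class VNFDemand:
    proc_speed_ghz: float  # requested processing speed, Ψγ
    proc_mem_gb: float     # requested processing memory, Qpγ
    storage_gb: float      # requested storage space, Qsγ


@dataclass(frozen=True)
class NodeCapacity:
    proc_speed_ghz: float  # remaining processing speed, Ψ(y)
    proc_mem_gb: float     # remaining processing memory, Qp(y)
    storage_gb: float      # remaining storage memory, Qs(y)


def satisfies_capacity(node: NodeCapacity, vnfs: Iterable[VNFDemand]) -> bool:
    """True if node y can host every VNF of the SF at once (strict inequalities)."""
    vnfs = list(vnfs)
    need_speed = sum(v.proc_speed_ghz for v in vnfs)
    need_mem = sum(v.proc_mem_gb for v in vnfs)
    need_storage = sum(v.storage_gb for v in vnfs)
    return (node.proc_speed_ghz > need_speed
            and node.proc_mem_gb > need_mem
            and node.storage_gb > need_storage)


sf_vnfs = [VNFDemand(2, 1, 10), VNFDemand(3, 2, 20)]  # totals: 5 GHz, 3 GB, 30 GB
print(satisfies_capacity(NodeCapacity(10, 8, 100), sf_vnfs))  # True
print(satisfies_capacity(NodeCapacity(5, 8, 100), sf_vnfs))   # False: 5 GHz is not > 5 GHz
```

Checking the summed demand, rather than each VNF separately, reflects that all VNFs of one SF are placed on the same host.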

3.3. SFC-Based IoT Provisioning

(1) SFC Request Preparation. The service policy allows each SF to gather related VNFs and sum their requirements for processing speed, working memory, storage memory, and bandwidth. In the proposed algorithms, the SDN controller chooses the host computing node by holistically monitoring the status of each computing node. Before assigning a host computing node, the controller checks the validity of each node. Valid computing nodes are those with sufficient link and node capacity for the given SF request, as described by the pseudocode in Algorithm 1.

Algorithm 1: Candidate node validity checker.

- (1) Input: all SF requests
- (2) Output: the candidate computing nodes
- (3) for 1: Y do
- (4) if node capacity and link capacity are valid then
- (5) candidate ⟵ True
- (6) count candidate++
- (7) if count candidate = 0 then
- (8) return to 1 //service is unsuccessful
- (9) for 1: c = count candidate do
- (10) calculate Dset(c), Dtran(c), Dproc(c), Dprop(c), Dque(c)
- (11) Dtotal(c) ⟵ Dset(c) + Dproc(c) + Dtran(c) + Dprop(c) + Dque(c)
- (12) end for
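A runnable sketch of Algorithm 1 follows: it keeps the nodes that pass the node and link capacity checks and sums the five delay components into Dtotal(c). The way the delay components are supplied (a precomputed dictionary per node), the single spare-bandwidth value per node, and the "≥" link test are assumptions for illustration; all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Keys for Dset, Dproc, Dtran, Dprop, Dque (hypothetical naming).
DELAY_KEYS = ("set", "proc", "tran", "prop", "que")


@dataclass(frozen=True)
class Node:
    name: str
    proc_speed: float  # remaining processing speed Ψ(y)
    proc_mem: float    # remaining processing memory Qp(y)
    storage: float     # remaining storage Qs(y)
    link_bw: float     # spare bandwidth on the path to the node (assumed single value)


@dataclass(frozen=True)
class SFRequest:
    proc_speed: float  # summed Ψγ of the SF's VNFs
    proc_mem: float    # summed Qpγ
    storage: float     # summed Qsγ
    bandwidth: float   # summed βγ


def is_valid(node: Node, sf: SFRequest) -> bool:
    # Node capacity: strictly greater than the SF's summed demand.
    # Link capacity: assumed valid when spare bandwidth covers the request.
    return (node.proc_speed > sf.proc_speed and node.proc_mem > sf.proc_mem
            and node.storage > sf.storage and node.link_bw >= sf.bandwidth)


def candidate_delays(sf: SFRequest, nodes: List[Node],
                     components: Dict[str, Dict[str, float]]) -> Optional[Dict[str, float]]:
    """Steps (3)-(11): map each valid candidate to Dtotal(c).

    `components` maps node name -> precomputed delay terms in ms (hypothetical input).
    Returns None when no node is valid, i.e., the service is unsuccessful.
    """
    candidates = [n for n in nodes if is_valid(n, sf)]
    if not candidates:
        return None
    return {n.name: sum(components[n.name][k] for k in DELAY_KEYS) for n in candidates}
```

For example, with an SF needing 5 GHz, 3 GB of memory, 30 GB of storage, and 2 units of bandwidth, a fog node with only 2 GB of spare memory is filtered out before any delay is computed, so the controller spends delay calculations only on feasible hosts.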

3.3.1. Nearest and Valid Candidate Node Selection (NCNS)

In NCNS, the SDN controller holistically monitors all computing nodes and SF service requests and assigns the host computing node based on the proximity of a valid computing node to the SF service request.

The SDN controller calculates the maximum hypothetical delay that each candidate node would incur for the targeted service chain, from the nearest valid computing node to the farthest. It sorts the candidates by relative separation distance in ascending order and chooses as host the computing node with the minimum L(y) that satisfies the capacity and delay constraints, as shown by the pseudocode in Algorithm 2.

Algorithm 2: Delay calculation and nearest candidate node selection algorithm.

- (13) //get y host computing nodes that satisfy the capacity and link constraints
- (14) for 1: c do //c is the candidate node
- (15) L(c) = Ld(c) + Ly(c) //relative location
- (16) Delay_total(c) ⟵ Dtotal(y) //copy
- (17) Sort L(c) //sort computing nodes from nearest to farthest
- (18) for 1: c do
- (19) for 1: q do //c = q
- (20) if L(c)[c] = L(c)[q] then
- (21) if Delay_total(c)[q] < δγmax then //delay validity checker, q is the host
- (22) Dtotal(c)[q] ⟵ Dtotal(c)[q] + Delay_total(c)[q]
- (23) Delay_total(c) ⟵ 0 //reset the array for the next SF
- (24) host++ //check whether a host has been found
- (25) break //exit the loop
- (26) end if
- (27) end for
- (28) end for
- (29) if host = 0 then //there is no host computing node
- (30) return to 1 in Algorithm 1 //service is unsuccessful
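The NCNS selection rule can be sketched compactly: visit the valid candidates from nearest to farthest and stop at the first one whose total delay is below δγmax. The sketch assumes the candidates already passed Algorithm 1 and are given as a hypothetical mapping from node name to (L(c), Dtotal(c) in ms); ties in distance are broken by name only to make the result deterministic.

```python
from typing import Dict, Optional, Tuple


def ncns_select(candidates: Dict[str, Tuple[float, float]],
                max_delay_ms: float) -> Optional[str]:
    """Nearest valid candidate whose Dtotal is below the SF's maximum tolerable delay.

    `candidates` maps node name -> (relative location L(c), Dtotal(c) in ms).
    Returns None when no candidate meets the bound (service unsuccessful).
    """
    # Step (17): order candidates from nearest to farthest.
    by_distance = sorted(candidates.items(), key=lambda kv: (kv[1][0], kv[0]))
    for name, (_, d_total) in by_distance:
        # Step (21): delay validity check; the first node that passes hosts the SF.
        if d_total < max_delay_ms:
            return name
    return None  # steps (29)-(30): no host computing node


cands = {"fog-a": (2, 40.0), "fog-b": (3, 15.0), "cloud-1": (100, 120.0)}
print(ncns_select(cands, 50.0))  # fog-a: nearest node that meets the 50 ms bound
print(ncns_select(cands, 30.0))  # fog-b: fog-a is too slow, so the next nearest is tried
```

Because NCNS stops at the first feasible node, it may accept a nearby but slower host even when a farther node would finish sooner.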

3.3.2. Fastest Candidate Node Selection (FCNS)

FCNS is generally similar to the NCNS, but it selects the fastest valid candidate computing nodes as host nodes to free up computing resources as quickly as possible for future SF requests. The host computing node will be the one with the fastest possible round-trip time among the other candidates with valid node capacity and link capacity constraints.

FCNS computes the total possible delay, Dtotal(y), of each candidate computing node, assuming that node y hosts the current SF request. The SDN controller chooses as host the node that has the least round-trip time to complete the service, a Dtotal(y) less than the maximum tolerable delay δγmax, and a valid link capacity constraint. Finally, the SDN controller resets Dtotal(!y), the hypothetical delays of the other computing nodes, as shown by the pseudocode in Algorithm 3.

| Parameter | Value |
|---|---|
| n, m | 5, 2 |
| S, Γ (VNFs per service), L (unit) | 10000, 2–25 (rand), 1–10 (rand) |
| Cγ | True/false (rand) |
| Aγ (Erlang per VNF), Aγc (Erlang), Acγ (Erlang) | 0.2–50 (rand), 0.01, 0.01 |
| δγmax (ms) | 10–20000 (rand) |
| Qpγ (GB), Qsγ (GB), Ψγ (GHz) | 0.005–8 (rand), 0.1–32 (rand), 0.5–100 (rand) |
| βγ (GHz), βγc (GHz) | 0–50 (rand), 0.1 |
| βfd, βcd, βfθ, βcθ (GHz) | 8–15 (rand), 20, 5–25 (rand), 10 |
| Tf, Tc (ms) | 15/20/25, 50/100 |
| L(y) (unit) | 6/8/10/12/100/500 |

Algorithm 3: Delay calculation and fastest candidate node selection algorithm.

(13) Delay_total(c) ← Dtotal(y) // copy the array of total delays

(14) Sort Delay_total(c) // ascending, so index 0 holds the minimum delay

(15) if Delay_total(c)[0] > δγmax then

(16)   return to step (1) of Algorithm 1 // service is unsuccessful

(17) else

(18)   if candidate = True then // candidate satisfies the node and link capacity constraints

(19)     for 1:c do

(20)       Dtotal(c)[0] ← Dtotal(c)[0] + Delay_total(c)[0] // host with the minimum delay

(21)       Delay_total(c) ← 0 // reset it for the next request

(22)       // update the resources of the host computing node

(23)     end for

(24)   end if

(25) end else
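The FCNS rule of Algorithm 3 can be sketched in the same style. This is an illustrative sketch with hypothetical names; as a simplification, it filters candidates on the capacity flag before taking the minimum delay, whereas Algorithm 3 sorts first and then checks the candidate.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    """Hypothetical controller-side view of one computing node."""
    name: str
    d_total: float     # Dtotal: hypothetical total RT delay (ms) if this node hosts the SF
    capacity_ok: bool  # True if the node and link capacity constraints hold


def fcns_select(candidates: Sequence[Candidate], delta_max: float) -> Optional[Candidate]:
    """Return the valid candidate with the smallest total delay, or None if it exceeds delta_max."""
    valid = [c for c in candidates if c.capacity_ok]
    if not valid:
        return None
    fastest = min(valid, key=lambda c: c.d_total)  # equivalent to sorting and taking index 0
    if fastest.d_total > delta_max:                # steps (15)-(16): service is unsuccessful
        return None
    return fastest


nodes = [
    Candidate("fog-1", d_total=40.0, capacity_ok=True),
    Candidate("fog-2", d_total=90.0, capacity_ok=True),
    Candidate("cloud", d_total=30.0, capacity_ok=True),
    Candidate("fog-3", d_total=10.0, capacity_ok=False),  # fastest, but fails the capacity checks
]
host = fcns_select(nodes, delta_max=60.0)
print(host.name if host else "unsuccessful")  # cloud
```

With the same delay bound, FCNS picks the cloud node (30 ms) rather than the nearer fog-1 (40 ms), freeing that request's resources sooner.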

3.3.3. Priority-Based Fastest Candidate Node Selection (PB-FCNS)

FCNS and NCNS both use the first in, first out (FIFO) queuing discipline, meaning that SF requests are processed in the order they are received. PB-FCNS, on the other hand, prioritizes the service chains with the smallest maximum tolerable delay, so that chains that cannot tolerate delays beyond a tight threshold receive higher priority. This approach is useful when some SFs require low latency or high throughput, as in real-time video or audio streaming applications.

The SDN controller accepts requests from concurrent service chains, sorts them by traffic size, and selects host computing nodes starting from the service chain with the smallest traffic and proceeding to larger ones. The queuing delay in PB-FCNS is calculated using the G/G/1 queuing model, a general model that can handle arbitrary interarrival and service time distributions. To select the host computing node, the SDN controller validates whether the candidate nodes pass the capacity and delay constraints and then uses the G/G/1 queuing model to calculate the remaining traffic in each node. This helps ensure that the selected nodes can handle the incoming SFs without exceeding their capacity or causing excessive queuing delays.

In the G/G/1 queuing model, S(T)(y) is the additional waiting time accumulated in the system between τ and τ + T. To calculate this waiting time, one can use Little’s law [23], which states that the long-term average number of SFs in a queuing system equals the long-term average arrival rate multiplied by the long-term average time that an SF spends in the system.

This approximation is simpler than the one based on Marchal [45] and can be used to estimate the average waiting time in the G/G/1 queuing model when the arrival and service processes are not known in advance. However, it may not be as accurate as the more complex approximation based on Marchal [45]. In this article, the Langenbach-Belz [25] approximation is used to calculate the S(T)(y).
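The Langenbach-Belz approximation is named here without its formula, so the sketch below uses the Krämer and Langenbach-Belz form as it is commonly stated in queueing textbooks, followed by Little's law to turn the mean wait into a mean queue length. It is an assumption that this matches the article's equation exactly, and the symbols are generic (arrival rate, mean service time, squared coefficients of variation) rather than the article's S(T)(y) notation.

```python
import math


def klb_wq(lam: float, es: float, ca2: float, cs2: float) -> float:
    """Approximate mean queueing delay Wq of a G/G/1 queue (Kramer and Langenbach-Belz).

    lam: arrival rate; es: mean service time;
    ca2, cs2: squared coefficients of variation of interarrival and service times.
    """
    rho = lam * es
    if not 0 < rho < 1:
        raise ValueError("queue is unstable unless 0 < rho < 1")
    if ca2 + cs2 == 0:
        return 0.0  # deterministic arrivals and service: no queueing
    base = (rho / (1 - rho)) * ((ca2 + cs2) / 2) * es  # Allen-Cunneen term
    if ca2 <= 1:
        g = math.exp(-2 * (1 - rho) * (1 - ca2) ** 2 / (3 * rho * (ca2 + cs2)))
    else:
        g = math.exp(-(1 - rho) * (ca2 - 1) / (ca2 + 4 * cs2))
    return base * g


def mean_queue_length(lam: float, wq: float) -> float:
    """Little's law applied to the queue: Lq = arrival rate * mean wait."""
    return lam * wq


lam, es = 8.0, 0.1  # illustrative: 8 SFs/s arriving, 0.1 s mean service time (rho = 0.8)
wq_mm1 = klb_wq(lam, es, 1.0, 1.0)  # Poisson arrivals, exponential service
wq_md1 = klb_wq(lam, es, 1.0, 0.0)  # Poisson arrivals, deterministic service
print(round(wq_mm1, 6), round(mean_queue_length(lam, wq_mm1), 6))  # 0.4 3.2
print(round(wq_md1, 6), round(mean_queue_length(lam, wq_md1), 6))  # 0.2 1.6
```

With exponential interarrival and service times the correction factor equals 1 and the formula reduces to the M/M/1 result ρ/(μ − λ), which ties it back to the model used by NCNS and FCNS.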

The SDN controller collects updated resource information from the computing nodes, so Wq^τ(y), S(T)(y), and T are known. Substituting them into equation (8) or (10) gives Wq^(τ+T)(y), from which the queuing delay follows.

Apart from prioritizing concurrently arriving SFs by adjusting the queuing-delay formula, PB-FCNS performs delay calculation, capacity and link validation, and host node selection exactly as FCNS does.
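The PB-FCNS ordering can be sketched as follows, under stated assumptions: concurrent requests are served in ascending order of traffic size, as described above, and each is placed on the fastest node within its bound, as in FCNS. The `est_delay` function is a made-up linear load model standing in for the G/G/1 estimate, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    base_delay: float  # hypothetical RT delay (ms) on an idle node
    per_unit: float    # hypothetical extra delay (ms) per unit of traffic at the node
    load: float = 0.0  # traffic already assigned to the node


@dataclass
class SFRequest:
    name: str
    traffic: float     # traffic size of the service chain
    delta_max: float   # maximum tolerable delay (ms)


def est_delay(node: Node, traffic: float) -> float:
    """Hypothetical load-dependent delay; stands in for the G/G/1 queuing estimate."""
    return node.base_delay + node.per_unit * (node.load + traffic)


def pb_fcns(requests: List[SFRequest], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Serve concurrent SFs smallest-traffic-first, each on the fastest node within its bound."""
    placement: Dict[str, Optional[str]] = {}
    for req in sorted(requests, key=lambda r: r.traffic):
        best = min(nodes, key=lambda n: est_delay(n, req.traffic))
        if est_delay(best, req.traffic) > req.delta_max:
            placement[req.name] = None  # unsuccessful SF
        else:
            best.load += req.traffic    # update the host's resources
            placement[req.name] = best.name
    return placement


nodes = [Node("fog", base_delay=5, per_unit=4), Node("cloud", base_delay=40, per_unit=0.1)]
requests = [SFRequest("a", 10, 60), SFRequest("b", 2, 20), SFRequest("c", 5, 100)]
print(pb_fcns(requests, nodes))  # {'b': 'fog', 'c': 'fog', 'a': 'cloud'}
```

Swapping the sort key to `lambda r: r.delta_max` would instead serve the chain with the tightest delay bound first, matching the priority rule stated at the start of this subsection.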

4. Results and Discussion

4.1. Simulation Parameters

We conducted the experiment using simulation parameters that cover both the service chain request criteria and the computing resources. Cloud nodes generally had higher processing speeds than fog nodes: processing speed ranged from 30 GHz to 200 GHz for cloud nodes and from 1 GHz to 30 GHz for fog nodes. Processing memory ranged from 40 GB to 200 GB for cloud nodes and from 1 GB to 30 GB for fog nodes. Storage, whether temporary or permanent, ranged from 1 TB to 30 TB for cloud nodes and from 1 GB to 50 GB for fog nodes. The simulation constants included the average holding time of the nodes, the location of each node, and the VNF requirements. We assumed that traffic between the service chain and the SDN data plane was carried over 5G with a 10 Mb bandwidth. The simulation parameters are presented in Table 2.
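A simulation along these lines needs node profiles drawn from the ranges above. The sketch below assumes each resource is sampled uniformly over its range, which the text does not specify, converts storage to GB assuming 1 TB = 1000 GB, and uses hypothetical names; the node counts are illustrative only.

```python
import random
from dataclasses import dataclass

# (min, max) ranges from Section 4.1; storage in GB, assuming 1 TB = 1000 GB.
RANGES = {
    "cloud": {"cpu_ghz": (30, 200), "mem_gb": (40, 200), "storage_gb": (1_000, 30_000)},
    "fog": {"cpu_ghz": (1, 30), "mem_gb": (1, 30), "storage_gb": (1, 50)},
}


@dataclass
class NodeProfile:
    tier: str
    cpu_ghz: float
    mem_gb: float
    storage_gb: float


def sample_node(tier: str, rng: random.Random) -> NodeProfile:
    """Draw one node profile, assuming each resource is uniform over its range."""
    r = RANGES[tier]
    return NodeProfile(
        tier=tier,
        cpu_ghz=rng.uniform(*r["cpu_ghz"]),
        mem_gb=rng.uniform(*r["mem_gb"]),
        storage_gb=rng.uniform(*r["storage_gb"]),
    )


rng = random.Random(42)  # fixed seed for reproducibility
fog_nodes = [sample_node("fog", rng) for _ in range(3)]      # illustrative count
cloud_nodes = [sample_node("cloud", rng) for _ in range(2)]  # illustrative count
for node in fog_nodes + cloud_nodes:
    print(f"{node.tier:5s} {node.cpu_ghz:6.1f} GHz {node.mem_gb:6.1f} GB {node.storage_gb:8.1f} GB")
```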

4.2. SFC Provisioning and Automation: Encountered RT Delay and Success Comparison

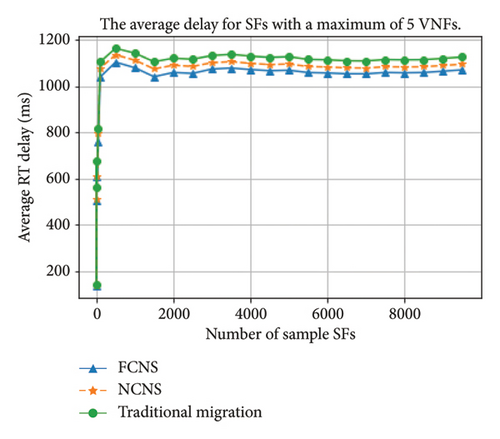

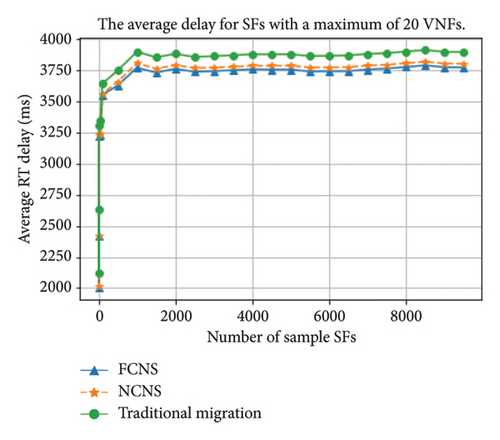

The experiment involved 10,000 heterogeneous SFs, each with dynamically changing VNFs. Figures 4 and 5 show the average round-trip (RT) delay taken to serve SFs under FCNS, NCNS, and the traditional migration algorithm, for SFs that orchestrate a maximum of 5 and 20 VNFs, respectively. FCNS was expected to outperform both NCNS and the traditional migration algorithm because it selects the candidate node with the smallest total delay.

The results show that the FCNS and NCNS algorithms outperformed the traditional migration algorithm in minimizing the latency per SF. The traditional migration algorithm incurs propagation delay while searching for a valid computing node, which increases its average RT delay. Although some SFs took slightly longer under NCNS than under FCNS, the average RT delay of NCNS was still lower than that of traditional migration. As the maximum number of VNFs per SF increased from 5 to 20, the margin between the traditional migration technique and the proposed algorithms widened: with more VNFs per SF, fewer candidate nodes were valid, so the traditional migration technique had to propagate further, resulting in higher delays.

The traditional migration algorithm incurs 5% and up to 4% more average delay than the FCNS and NCNS algorithms, respectively. Over longer periods, traditional migration is also prone to failing SF requests because of limited computing resources.

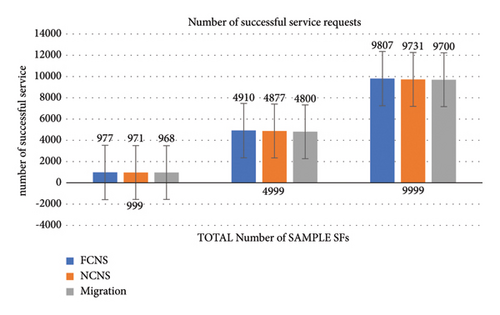

Figure 6 shows the proportion of successful service chains among all SFs, where an SF succeeds if a host node with a valid capacity profile is found. For all three SF samples, FCNS achieves a higher success proportion than both NCNS and the migration technique. The optimal host assignment in FCNS also benefits future requests from mission-critical SFs. About 192 of 9999 SFs are unsuccessful under FCNS, compared with 299 of 9999 under the migration technique. Under the traditional migration technique, an SF may fail to find a host with sufficient resources within its maximum tolerable delay, because the search itself adds propagation delay.
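The failure counts quoted above can be turned into rates with a few lines of arithmetic. For this sample, the relative figure depends on the baseline: measured against the migration technique, FCNS has (299 − 192)/299 ≈ 35.8% fewer failures, while measured against FCNS, the migration technique has (299 − 192)/192 ≈ 55.7% more.

```python
total = 9999
failed = {"FCNS": 192, "migration": 299}

rate_fcns = failed["FCNS"] / total
rate_mig = failed["migration"] / total
reduction = (failed["migration"] - failed["FCNS"]) / failed["migration"]  # relative to migration
excess = (failed["migration"] - failed["FCNS"]) / failed["FCNS"]          # relative to FCNS

print(f"failure rate: FCNS {rate_fcns:.2%}, migration {rate_mig:.2%}")  # FCNS 1.92%, migration 2.99%
print(f"FCNS reduction vs migration: {reduction:.1%}")                  # 35.8%
print(f"migration excess vs FCNS: {excess:.1%}")                        # 55.7%
```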

4.3. PB-FCNS

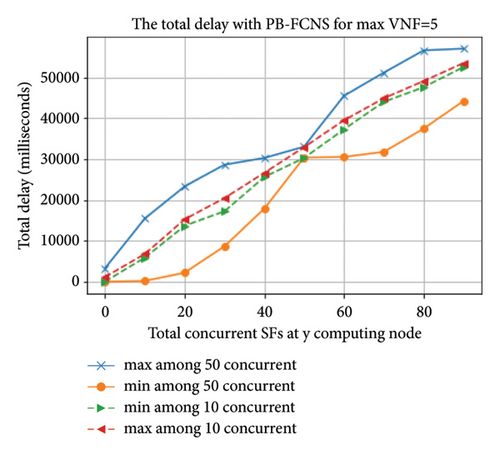

We ran the PB-FCNS simulation with 90 SFs. As the legend of Figure 7 shows, four priority options were compared: prioritizing the larger traffic among 50 concurrent SFs, prioritizing the larger traffic among 10 concurrent SFs, prioritizing the smaller traffic among 10 concurrent SFs, and prioritizing the smaller traffic among 50 concurrent SFs.

The simulation results show that prioritizing mission-critical services with the least possible round-trip time delay among 50 concurrent SFs reduced the total round-trip time delay by 7–12% compared with FCNS, as shown in Figure 7. PB-FCNS therefore not only handles concurrent services more efficiently but also gives mission-critical services the priority they require.

5. Conclusion

In conclusion, the NCNS and FCNS approaches, together with PB-FCNS, an adaptation of FCNS, substantially improve SFC provisioning to host computing nodes in IoT systems. By leveraging SDN controllers and queuing models, these methods reduce latency, raise the success rate of service function chains, and improve the overall performance and efficiency of the system. Compared with the typical migration algorithm, the total round-trip time delay for the SFs is reduced by 5%, and the probability of unsuccessful SFs drops by 55%. Adopting the G/G/1 queuing model in PB-FCNS addresses the problem of service priority among concurrently arriving heterogeneous SFs. Applying SDN and SFC to schedule IoT services onto host computing nodes may further require optimizing the number of SDN controllers per service function and the deployment cost. Efficient SFC gateway placement and NFV implementation for IoT systems are another potential research direction. Our work is particularly valuable where computing resources are multiple but limited. For future work, we recommend incorporating additional network parameters such as congestion and traffic steering, and evaluating the impact of a peer-to-peer distributed hash table (DHT) on the overlay network.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Open Research

Data Availability

The data used to support the findings of this study are included within the article.