Online Energy-Aware Scheduling for Deadline-Constrained Applications in Distributed Heterogeneous Systems

Abstract

In the current computing environment, the significance of distributed heterogeneous systems has gained prominence. The research on scheduling problems in distributed systems that consider energy consumption has garnered substantial attention due to its potential to enhance system stability, achieve energy savings, and contribute to environmental preservation. However, efficient scheduling in such systems necessitates not only the consideration of energy consumption but also the ability to adapt to the dynamic nature of the system. To tackle these challenges, we propose an online energy-aware scheduling algorithm for deadline-constrained applications in distributed heterogeneous systems, leveraging dynamic voltage and frequency scaling (DVFS) techniques. First, the algorithm models the continuously arriving applications and heterogeneous processors and proposes a novel task-sorting method to prioritize tasks, ensuring that more applications are completed within their respective deadlines. Second, the algorithm controls the selection range of processors based on the task’s subdeadline and assigns the task to the processor with the minimum energy consumption. Through experiments conducted with randomly generated applications, our approach consistently exhibits superior performance when compared to similar scheduling algorithms.

1. Introduction

Distributed heterogeneous systems stand out for their network of myriad computing nodes, each furnished with a unique combination of computing resources across various types and performance tiers. In the current computing landscape, the significance of such systems has gained remarkable prominence [1, 2]. These systems offer a multitude of benefits, including high-performance, efficient, and highly reliable computing capabilities, making them suitable for a wide range of application scenarios. These domains range from scientific computation, big data analytics, and artificial intelligence to cloud services, the Internet of Things, and beyond. By harnessing the diverse array of computing resources available within distributed heterogeneous systems, it becomes feasible to adeptly meet diverse application requirements [3]. Furthermore, leveraging these resources allows for enhanced system performance, improved energy utilization efficiency, and the facilitation of innovation and development across diverse fields [4]. In the orchestration of these resources, scheduling algorithms assume a pivotal role, ensuring their efficient allocation and utilization.

The issue of energy consumption poses a significant challenge in the context of distributed heterogeneous systems. Due to the varying energy consumption characteristics of different types of computing nodes within the system, it becomes imperative to devise effective approaches for managing and optimizing energy consumption. Addressing the energy consumption problem entails the development of resource allocation and task scheduling strategies that aim to minimize overall energy consumption while meeting the performance requirements of tasks. Through the utilization of energy-aware scheduling algorithms in tandem with dynamic voltage and frequency scaling (DVFS) techniques, distributed heterogeneous systems can effectively manage and control energy consumption [5]. This approach allows the system to enhance energy efficiency and sustainability by dynamically adjusting the voltage and frequency levels of computing nodes in response to workload demands.

As is widely recognized, the energy-conscious task scheduling problem is classified as an NP-hard problem [6]. Many existing algorithms primarily address static or offline scenarios, wherein all tasks are predetermined and the environment is assumed to be stable [7–10]. Despite the wealth of insightful research on static scheduling algorithms, their adaptability falls short in the face of the dynamic landscape of distributed heterogeneous systems, which are characterized by fluctuating performance levels and node availability. Consequently, the focus on online scheduling becomes critical. Through online scheduling, dynamic decisions are made concerning task distribution and scheduling by taking into account the system’s current state as well as the real-time influx of tasks, necessitating persistent surveillance of the system and resource conditions in either real time or near real time. By doing so, the system can adapt to changing conditions, allocate tasks efficiently, and optimize energy consumption in real time, thereby enhancing overall system performance and resource utilization.

- 1.

By leveraging models of complex applications and heterogeneous processors, we propose a formulation for the energy-aware application scheduling problem in heterogeneous distributed systems. This formulation revolves around a constrained optimization approach, aiming to minimize the energy consumption of applications while adhering to strict deadline constraints.

- 2.

We propose an OMECDS algorithm which efficiently allocates suitable processors for stochastic incoming applications with lower time complexity. Moreover, we propose a novel approach to prioritize tasks from different applications to enhance the success rate of application execution. The proposed algorithm integrates a processor selection mechanism that considers the subdeadline defined for each task, with the primary objective of minimizing energy consumption while adhering to the imposed deadline constraints.

- 3.

To evaluate the performance of our proposed approach, we conducted experiments using simulations with randomly generated applications. The results obtained indicate that OMECDS outperforms other existing approaches.

The remainder of this article is organized as follows. In Section 2, we provide an overview of the related work on task scheduling algorithms. Section 3 introduces the application model, energy model, and heterogeneous processor model and provides a detailed description of the problem. In Section 4, we present the algorithm proposed in this study. Section 5 presents the experimental results and provides an in-depth analysis. Lastly, in Section 6, we discuss the conclusion and further work of our research.

2. Related Work

Considerable attention has been devoted to investigating scheduling algorithms that incorporate energy consumption considerations in distributed heterogeneous systems. This research has broadly categorized existing energy-conscious scheduling strategies into two main groups: those prioritizing completion times and those driven by multiple objectives, according to their scheduling aims.

Completion time–oriented methods prioritize minimizing the overall completion time of applications in a distributed heterogeneous system, while also taking energy consumption into account. By adjusting the voltages and frequencies of system processors through techniques like DVFS, these methods are aimed at optimizing the performance-energy trade-off. Xiao et al. [15] introduced an algorithm called MSLECC, which addresses the challenge of minimizing the dispatch length of parallel applications while ensuring limited energy consumption in heterogeneous distributed systems. MSLECC incorporates an energy consumption constraint to ensure that the energy consumed during the scheduling process remains within predefined limits. By considering the energy efficiency trade-offs associated with DVFS, the algorithm strikes a balance between achieving shorter schedule lengths and maintaining energy consumption at an acceptable level. Chen et al. [11] introduced an enhanced approach to address the challenges of the MSLECC algorithm. They proposed two key strategies: an efficient task prioritization strategy and a weight-based energy distribution strategy. Building upon the genetic algorithm framework, Liu et al. [16] proposed an energy-conscious dependence task scheduling algorithm with a multiobjective fitness function to balance the makespan and energy consumption. These aforementioned studies are geared toward enhancing scheduling efficiency in distributed heterogeneous systems by transforming the multifaceted challenge of minimizing completion time and energy consumption into a singular scheduling objective. This conversion allows for the application of various optimization techniques and algorithms to find an efficient solution.

Multiple objective-oriented scheduling algorithms are designed to address scheduling problems that involve multiple conflicting objectives [17, 18]. In scheduling scenarios, multiple objectives must be simultaneously addressed, including minimizing energy consumption, lowering costs, maximizing resource utilization, and meeting deadline constraints. The multiobjective optimization scheduling algorithm endeavors to identify an optimal set of solutions, specifically the nondominated solution set, often referred to as the Pareto optimal solution set. Zhang et al. [19] focus on the combinatorial optimization problem of biobjective optimization, aiming to achieve a high level of system reliability while minimizing energy consumption in the context of parallel tasks. Li et al. [20] introduced a hybrid energy-aware multiobjective optimization algorithm that encompasses eight distinct types of neighborhood structures. In order to balance global and local search abilities, a hybrid deep exploitation and deep exploration method is employed. Khiat, Haddadi, and Bahnes [21] employed an innovative amalgamation of hybrid genetic, max–min, min–min, and random algorithms to orchestrate a harmonious equilibrium between energy consumption and overall response time. Such an equilibrium was accomplished by the judicious modulation of processor voltages and frequencies, thereby facilitating the attainment of an optimal computational solution. Rehman et al. [22] proposed an algorithm called MOGA, which was employed to address the conflicting interests of cloud stakeholders in optimization problems. This approach is aimed at minimizing the makespan while adhering to budget and deadline constraints, while also offering an energy-efficient solution through the utilization of DVFS. Additionally, a gap search algorithm was proposed in this study to optimize the resource utilization of the cloud’s available resources.

While the papers mentioned earlier primarily focused on static scheduling methods with offline task allocation processes, there is ongoing research in the field of online scheduling algorithms that can effectively handle the arrival of random tasks [23]. Zhou et al. [24] presented a multiworkflow scheduling algorithm designed to dynamically schedule concurrent workflows while adhering to user-defined deadline and budget constraints. In their approach, they use the concept of ranku from HEFT [25] as a task priority metric. Additionally, they introduced a bifactor selection method for resources, aiming to strike an optimal balance between the budget and deadline constraints. Arabnejad, Bubendorfer, and Ng [26] involve the utilization of a central queue to manage the workflow that has arrived. In this process, the subdeadline of each task is calculated, and the algorithm employs the early deadline first strategy to prioritize and select tasks for scheduling. This strategy is aimed at improving the success number of the workflow by ensuring that tasks with earlier deadlines are scheduled first.

In the domain of task scheduling for distributed heterogeneous systems, it is crucial to take into account both energy consumption and online scheduling issues. By employing appropriate online energy consumption-aware scheduling algorithms, it enhances the overall system performance and improves energy utilization efficiency.

3. Model

In this section, we establish the heterogeneous processor model, application model, and energy model utilized in our study.

3.1. Heterogeneous Processor Model

The distributed heterogeneous system we considered consists of m diverse processors, denoted as P = {pk|k ∈ [1, 2, ⋯, m]}, interconnected by a high-speed network. Each individual processor, pk ∈ P, is equipped with DVFS capabilities, allowing it to switch between different frequencies, denoted as fk. The available frequency range for processor pk spans from the minimum value, , to the maximum value, . It is important to note that the execution time of a task decreases as the processor’s frequency increases, while the energy consumption increases. We disregard the energy impact resulting from processor frequency-state transitions. In our assumption, when a task is assigned to a processor, it is executed immediately. However, it is important to note that a processor can only execute one task at a time. This restriction ensures that the processor’s resources are effectively utilized and that tasks are processed sequentially to avoid any conflicts or resource contention [27, 28].

3.2. Application Model

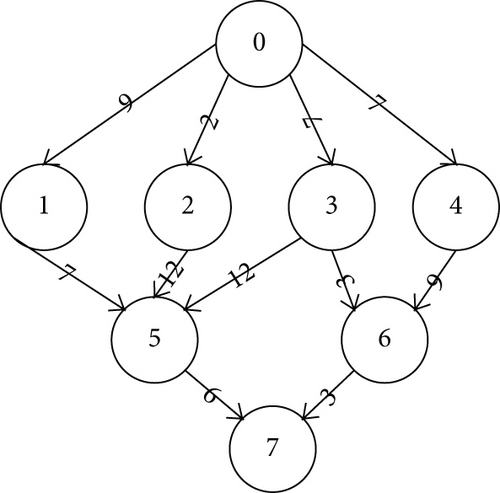

A parallel application, composed of several interlinked tasks, can be depicted as a directed acyclic graph (DAG). In this representation, each node corresponds to an individual task, and each edge delineates the data dependencies among tasks. The application is submitted in a stochastic manner, but once submitted, all relevant information about the application is known. We denote the set of dynamic applications as η = {G1, G2, ⋯, Gw}. A specific DAG within this set, denoted as Gs, is modeled as Gs = {as, ds, Vs, Es}. In this model, as represents the arrival time of Gs, ds represents the deadline of Gs, Vs is the set of nodes, where each node represents a task, and Es is the set of edges, where each directed edge indicates the data dependency between tasks and . The communication time between tasks and is denoted as . If and are located on the same processor, we assume the communication time . A task can only be executed when all the data from its predecessors have been transferred. A task without any immediate predecessors is referred to as an entry task, symbolized as , while a task with no successors is referred to as an exit task, symbolized as . Figure 1 shows an example of a DAG-based parallel application that describes the data dependencies of eight tasks.

The completion of all tasks in the DAG Gs signifies the completion of Gs as a whole.

3.3. Energy Model

The used notations in this paper are presented in Table 1.

| Notation | Implication |

|---|---|

| pk | The k-th processor in the system |

| m | The number of processors |

| Gs | The s-th DAG in the system |

| as | The arrival time of DAG Gs |

| ds | The deadline of Gs |

| Vs | The set of tasks in Gs |

| Es | The set of edges between all tasks in Gs |

|

|

The i-th task in Gs |

| ns | The number of tasks in Gs |

|

|

The set of predecessors of task |

|

|

The set of successors of task |

|

|

The data transfer time between and |

|

|

The average execution time of |

|

|

The actual execution time of task on processor pk with frequency fk,h |

|

|

The earliest start time of |

|

|

The earliest finish time of |

| avail(pk) | The earliest time that the processor pk can execute a task |

| makespans | Schedule completion time of Gs |

|

|

The energy consumption of task on the processor pk with frequency fk,h |

3.4. Problem Description

Constraint (2) ensures that each task can only be scheduled once, preventing duplication or multiple assignments. Constraint (3) states that the actual completion time of the application must satisfy its deadline requirement.

4. Proposed OMECDS Algorithm

In this section, we introduce the OMECDS algorithm, tailored for managing the scheduling of multiple applications with diverse arrival times within a heterogeneous distributed system. The main objective of the algorithm is to minimize the overall energy consumption of the system while efficiently scheduling as many applications as possible. This is accomplished through meticulous optimization of resource allocation and task scheduling, all the while adhering to the specified deadline constraints of the applications. The algorithm is divided into two main phases: the task selection phase and the processor allocation phase.

During the task selection phase, we introduce a novel approach to calculate the priority and subdeadline of each ready task derived from the incoming applications. The computation of priority and subdeadline takes into account an array of factors including task dependencies, arrival time, overarching scheduling objectives, and other pertinent considerations.

In the processor allocation phase, we map each eligible task to an available processor, taking into account the task’s subdeadline. The goal is to assign the task to a processor and select an appropriate frequency setting that ensures the subdeadline is not violated while minimizing energy consumption. This phase involves making intelligent decisions to optimize the allocation of processors and frequencies, considering the dynamic characteristics of the tasks and the available resources. The objective is to achieve efficient task execution while conserving energy resources.

4.1. Task Selection Phase

In contrast to static scheduling, online scheduling operates under the condition that the topology and data information of the application are unknown until the application is submitted. This necessitates that the algorithm must be capable of handling incoming applications, parsing their task information, and making decisions promptly. The algorithm must dynamically adapt to the evolving task requirements and efficiently make real-time scheduling decisions. It should be able to process incoming applications on the fly, adjust the task allocation, and optimize the resource utilization based on the available information. This ability to handle unknown and evolving application characteristics is essential for effective online scheduling. As the DAGs arrive, they are stored in the DAGPool, and their parameters are parsed during the task selection phase. In this phase, the algorithm is aimed at identifying the ready tasks, determining their priority, and allocating subdeadline for scheduling.

To accommodate the system’s dynamics and adhere to task priority constraints, the OMECDS employs a strategy of exclusively scheduling ready tasks during each iteration. This approach prevents the scenario where the initial applications occupy a significant portion of system resources, potentially impeding the completion of subsequent applications. By prioritizing the scheduling of ready tasks, the algorithm ensures that tasks from different applications have a fair opportunity to be executed and progress toward completion according to their priorities. This approach promotes efficient utilization of system resources and facilitates the timely execution of all arriving applications.

Ready task means a task has no predecessor or its all immediate predecessors have been completed. When new DAGs arrive, all the entry tasks within the DAG are considered ready tasks and are added to the ready task list (RTL). Additionally, when the last unfinished predecessor of a task is completed, that task becomes a ready task and is also added to the RTL. As a result, the RTL consists of all tasks that are currently ready for execution. All tasks within the RTL can be executed in parallel, as they have met their dependencies and are independent of each other in terms of execution order.

The closer the task’s is to the , the more priority it has. We rank urgency of all ready tasks. This prioritization allows for the scheduling algorithm to focus on tasks that are time-critical to ensure the successful completion of the corresponding DAGs within their deadlines. When comparing priorities between two tasks and , if both urgency of and are positive or negative, the task exhibiting greater urgency is deemed to have higher priority. Conversely, if the urgency value is one positive and the other negative, the task with negative urgency is ascribed higher priority.

The process of handling newly arrived applications in OMECDS is outlined in Algorithm 1. The algorithm utilizes the RTL to manage tasks that are ready for execution. Upon the arrival of new DAGs, the algorithm calculates the earliest start time , earliest finish time , and latest finish time for all tasks in the DAG (Line 1). This information is crucial for scheduling and processor allocation. For the new DAGs, only entry tasks are considered ready tasks. The algorithm adds these entry tasks to the RTL (Line 2). Additionally, all unselected tasks are added to the TaskPool (Line 3). The TaskPool serves as a repository for tasks that are not yet ready for execution. The algorithm sorts the ready tasks in the RTL based on their values (Line 4). This prioritization ensures that tasks with higher urgency, often associated with closer deadlines or critical dependencies, are scheduled first. Finally, ready tasks in the RTL are allocated to available processor using the function ScheduleReadtTask(RTL) (Line 5). This step involves assigning suitable processors and frequencies to the tasks, while considering their timing constraints and energy efficiency objectives.

In a DAG with n tasks, the maximum number of edges e is given by ((n − 1)n)/2. In Algorithm 1, Line 1 calculates , , and for each task in order to determine their scheduling parameters. This calculation requires a time complexity of O(n2). In Line 4, the length of the RTL queue is determined by the maximum indegree of a task in Gs, denoted as nin. Sorting tasks in the RTL queue takes a time complexity of O(nin × log(nin)). The calculation of urgency in the algorithm also takes a time complexity of O(nin). Therefore, the overall time complexity of task selection for an arrival DAG Gs in the algorithm is O(n2 + nin × log(nin)). Considering that nin is typically small compared to n, we can simplify the time complexity to O(n2).

-

Algorithm 1: The preprocessing for an arrival DAG.

-

Require: The arrival DAG Gs = {as, ds, Vs, Es}.

-

Calculate , and for each tasks in Vs;

-

Add all entry tasks into RTL;

-

Add other tasks in Vs into TaskPool;

-

Calculate the of all tasks in RTL; and sort them in non-descending order;

-

Call ScheduleReadyTask(RTL; );

The process of the algorithm that handles the completed task is detailed by Algorithm 2. When a task is completed, the algorithm identifies the immediate successor tasks that become ready as a result. These ready tasks are added to the RTL to indicate that they are now eligible for execution (Lines 1–5). The values of selected tasks are calculated (Line 3). The selected tasks are then removed from the TaskPool to indicate that they have been scheduled for execution (Line 4). Tasks in the RTL are sorted based on their urgency values (Line 7). This ensures that the tasks with higher urgency, which reflects their priority, are scheduled first. Finally, the function ScheduleReadyTask(RTL) is called to allocate the resources and execute the ready tasks (Line 8).

The completion of a task indicates that its immediate successor tasks are likely to be ready for execution. By promptly identifying and scheduling ready tasks, the algorithm can potentially complete more applications within their respective deadline constraints. This dynamic handling of ready tasks enhances the overall efficiency and effectiveness of the scheduling algorithm. When a task is completed, Algorithm 2 needs to traverse each task in , which needs time O(nout). O(nout) is the maximum outdegree of a task among Gs. The time complexity in Line 2 is O(nin). The length of RTL saving the ready task in is at most equal to nout. Sorting tasks in RTL requires time O(noutlog(nout)). Hence, the time complexity of the processing for the finish of a task is O(nin × nout + nout × log(nout)).

-

Algorithm 2: The processing for the finish of a task.

-

Require: A completed task

-

For each do

-

If all of pred have been completed then

-

Calculate ;

-

Add task into RTL and remove from TaskPool;

-

Endif

-

Endfor

-

Sort all tasks in RTL by urgency in a non-descending order;

-

Call ScheduleReadyTask(RTL);

4.2. Processor Allocation Phase

In the processor allocation phase, the algorithm assigns appropriate processors and frequencies to the tasks in the RTL based on their priority order. The objectives are to ensure the timely completion of tasks, thereby averting any severe consequences, and to reduce the overall energy consumption of the system.

By iterating through all possible combinations and selecting the one with the minimum energy consumption that satisfies the subdeadline constraint, the algorithm ensures that the task is allocated to the best available resources in terms of energy efficiency while meeting its timing requirements. If none of the processors can complete the task within its SD, then assign the task to the processor that completes it the fastest.

-

Algorithm 3: Function ScheduleReadyTask(RTL).

-

Require: each task in RTL.

-

Ensure: The schedule scheme.

-

For each in RTLdo

-

Emineft, minE⟵MAXVALUE, bestPro, bestF⟵∅;

-

For all processor pkdo

-

For all frequency fk,hdo

-

Calculate the , , and ;

-

If then

-

If then

-

, bestPro⟵pk, bestF⟵fk,h, ;

-

Endif

-

Endif

-

Endfor

-

Endfor

-

Schedule on bestPro with bestF;

-

Remove from RTL;

The pseudocode of the processor allocation phase is stated in Algorithm 3. The algorithm iterates through all available processors and frequencies to find the best combination for each ready task (Lines 1–15). The algorithm initializes related variables for every task in RTL (Line 2). Then, traverse all processors and frequencies and calculate est, eft, and E (Lines 3–5). The algorithm checks if the earliest finish time () of the task on the selected processor and frequency is less than the task’s subdeadline () (Line 6). This ensures that the allocated processor can complete the task within the specified subdeadline. The algorithm selects the processor bestPro and frequency bestF combination that results in the minimum energy consumption for the task (Lines 7 and 8). Finally, assign task to bestPro with bestF for execution and remove from RTL (Lines 13–15).

In the processor allocation phase, we only select ready tasks for scheduling, which helps improve the system’s responsiveness to dynamic events. By considering both energy consumption and subdeadline constraints, OMECDS chooses the best processor and frequency combination that minimizes energy consumption while considering the deadline constraint. The length of queue RTL is at most equal to nin. For each task in RTL, Algorithm 3 needs to traverse all processors. The number of computing processors is m, and the maximum number of frequencies is H. Lines 1–5 in Algorithm 3 are to calculate , , and for each task and each processor, which need time . The time complexity of resource allocation is O(nin × m × (nin + H)).

5. Results

In this section, the performance of the proposed OMECDS algorithm is evaluated by comparing it with the LESA [11], MSLECC [15], and FCFS with ranku algorithms. LESA addresses the challenge of energy-aware scheduling for dependent tasks on a heterogeneous multiprocessor system. It introduces a list-based algorithm to effectively determine start times and processor assignments. The approach optimizes task execution by balancing dependencies and energy constraints, strategically choosing suitable processors and speed settings for minimal completion time. MSLECC is a classic method of solving dependent task scheduling problems with the shortest schedule length while considering energy consumption constraints. It differs in task sequencing and energy allocation methods from LESA. FCFS with ranku algorithm gives a rank for each DAG task according to the HEFT [25] and schedules DAGs according to the “first come first service.” For the online scheduling problem considered in this article, the LESA and MSLECC algorithms are repeatedly executed at each new DAG arrival to handle the scheduling. All the algorithms used in the experiments are implemented in the Java programming language. The experiments were conducted on a computer with an Intel® Core™ i7-10700H CPU operating at 2.90 GHz, 16.00 GB RAM, and a 64-bit Windows 10 operating system.

5.1. Experimental Setup

The simulated heterogeneous distributed system consists of m processors with varying processing capabilities. The processor and application parameters used in the experiments are as follows: , , , , 2.5 ≤ mk ≤ 3.0, and fk,max = 1 GHz (all the frequency values are discrete with an accuracy of 0.01 GHz). These parameters are set exactly by reference to existing work [3, 12, 35]. In this experiment, 20 processors are randomly generated for each experiment.

The random DAGs used in the experiment are generated using a DAG generator based on several parameters, namely, CCR, n, fat, density, regularity, and jump. The parameter settings are chosen based on a previous paper [13] to ensure a diverse set of DAG characteristics. CCR represents the ratio of communication to computation in the DAG. The available values for this parameter are {0, 1, 2}. n determines the total number of tasks in the DAG. The values used in the experiment are {10, 20, 30, 40, 50, 60, 70, 80, 90, 100}, providing a range of DAG sizes. fat influences the width and height of the DAG, thereby affecting its overall structure. The available values are {0.2, 0.4, 0.6}. density impacts the number of edges between different levels of nodes in the DAG. A higher density value indicates a larger number of edges. The values used in the experiment are {0.2, 0.4, 0.6}. regularity affects the uniformity of the number of tasks at each level of the DAG. A higher regularity value indicates a higher similarity in the number of tasks among different levels. The set of values used is {0.2, 0.4, 0.6}. jump represents the number of different levels an edge can connect. A larger jump value allows edges to connect nodes that are farther apart in the DAG. The available values for this parameter are {1, 2, 4}. Table 2 summarizes the parameter settings used in the DAG generator.

| Parameters | Value |

|---|---|

| n | = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100] |

| fat | = [0.2, 0.4, 0.6] |

| density | = [0.2, 0.4, 0.6] |

| regularity | = [0.2, 0.4, 0.6] |

| jump | = [1, 2, 4] |

| CCR | = [0, 1, 2] |

5.2. Results and Discussion

5.2.1. Planning Successful Rate (PSR) and Energy Consumption Analyses

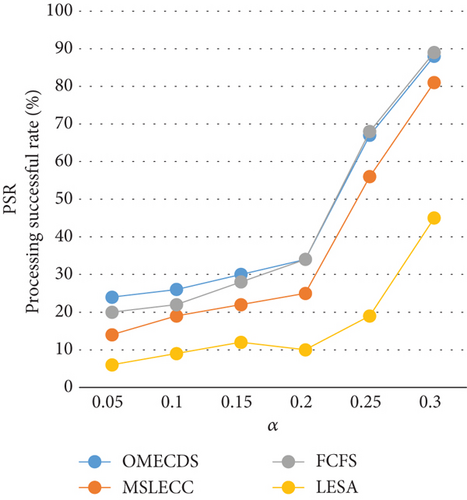

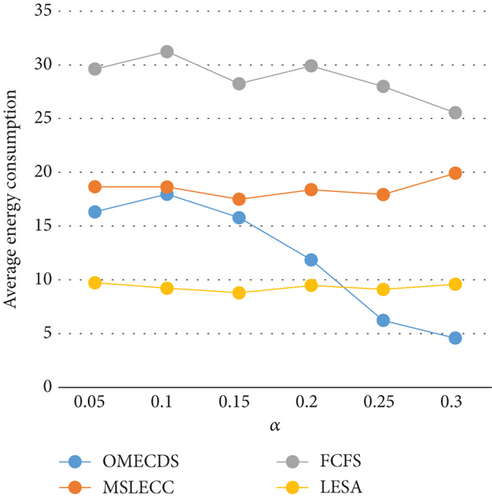

The first experiment, depicted in Figure 2, encompasses the evaluation of both PSR and average energy consumption across varying arrival time intervals. In Figure 2(a), the PSR demonstrates a discernible upward trend as the arrival time interval increases. This increase in the arrival factor, denoted by α, implies a reduction in the number of applications that the system must process within a unit of time. In the system under consideration, the number of processors is fixed. This means that the computing power of the system is limited. Consequently, the reduction of α leads to an enhancement in the successful completion rate of applications. However, it is important to note that the FCFS algorithm prioritizes minimizing the completion time of applications at the expense of significantly higher energy consumption, as illustrated in Figure 2(b). As the value of α increases, the urgency to complete applications within the specified deadline diminishes, leading to a greater emphasis on energy consumption performance, as depicted in Figure 2(b) when α exceeds 0.2. Remarkably, the OMECDS algorithm surpasses the MSLECC algorithm achieves notable energy savings and PSR improvements. In comparison to the LESA algorithm, the OMECDS algorithm exhibits a 30.55% increase in average energy consumption, but a remarkable 204.5% improvement in average PSR. When compared to the FCFS algorithm, the OMECDS algorithm achieves a 58.57% reduction in energy consumption and a 7.12% increase in average PSR.

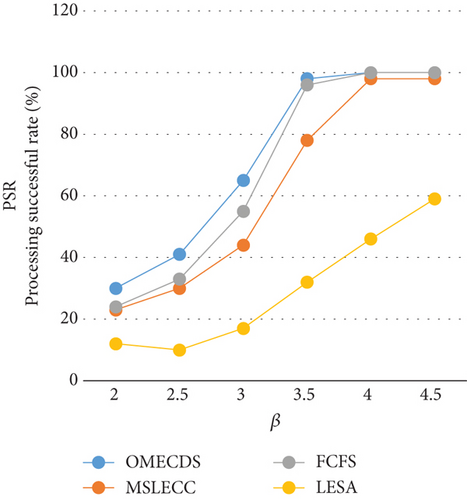

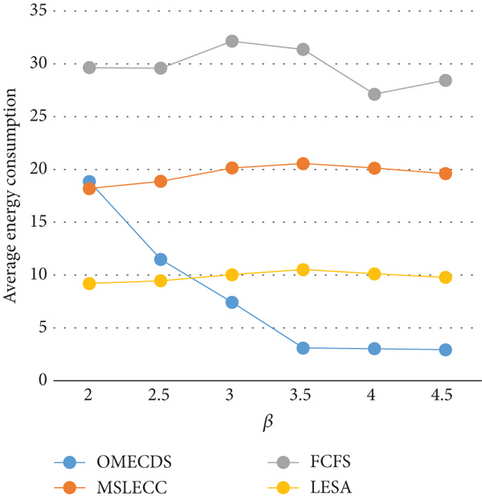

Figures 3(a) and 3(b) present the PSR and average energy consumption of the four algorithms under varying deadline factors. With an increase in the value of β, the deadlines for each application are extended, resulting in an improvement in the PSR for all algorithms. When β surpasses 3.5, OMECDS exhibits the capability to schedule all arrived applications while meeting their respective deadlines. OMECDS demonstrates a more remarkable ability to minimize energy consumption when the deadline is relaxed, as evidenced in Figure 3(b). In contrast, MSLECC and LESA algorithms allocate energy for each task, leading to similar energy consumption even under different deadline constraints, because their energy consumption constraints are fixed. Based on the experimental results, it is evident that OMECDS outperforms both FCFS and MSLECC algorithms in terms of both PSR and energy consumption across different values of β. Additionally, OMECDS surpasses LESA in terms of PSR across varying β values. Notably, when compared to LESA, in general, OMECDS still achieves a significant 18.41% reduction in average energy consumption.

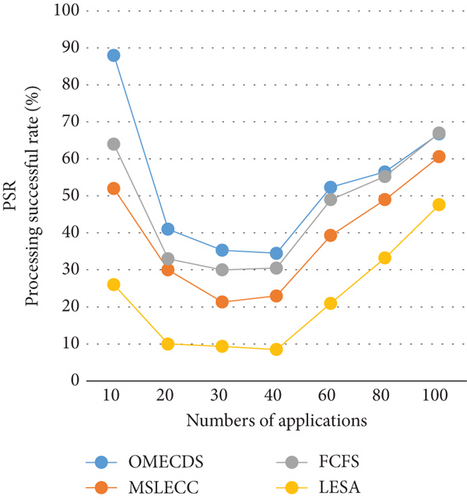

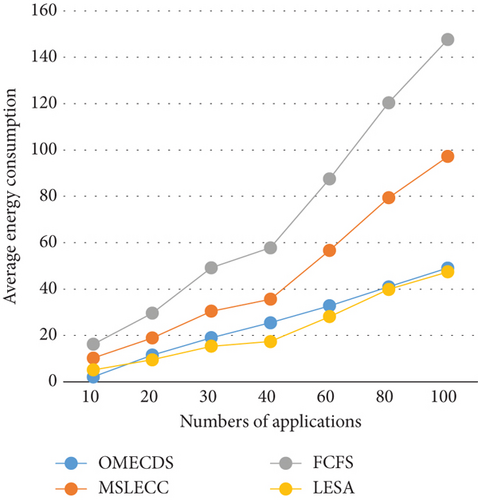

In Figure 4, we experiment with different numbers of applications. Notably, the number of tasks in an application is still randomly generated. The number of applications is 10, 20, 30, 40, 60, 80, and 100. The α is 0.15 and β is 2.5. Obviously, OMECDS exhibits superior performance compared to FCFS and MSLECC algorithms. Compared with LESA, the average energy consumption of OMECDS experiences a slight increase of 7.97%, but the PSR shows a substantial improvement of 198%.

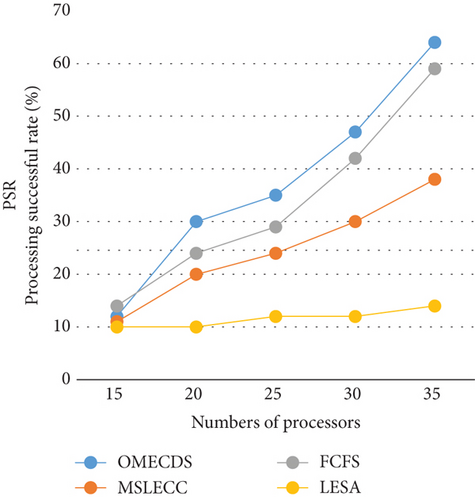

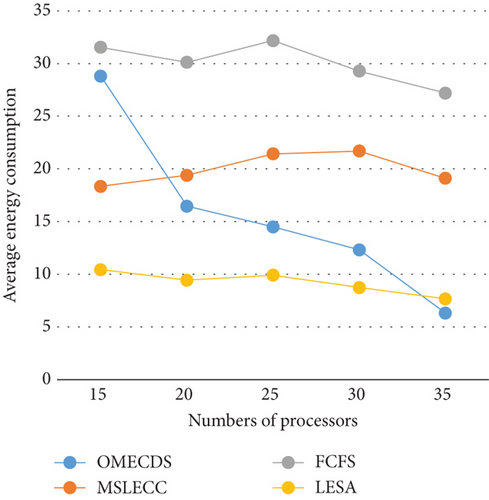

Figures 5(a) and 5(b) show the performance of the four algorithms with a varied number of processor, while keeping the number of applications fixed at 20. All processors are randomly generated. The value of α is set to 0.15 and the value of β is set to 2. As the number of processors increases, the PSR of all algorithms tends to increase. When compared to MSLECC, OMECDS achieves an average PSR improvement of 46% and reduces average energy consumption by 20.11%. In comparison to FCFS, OMECDS shows a 10.35% improvement in PSR and a 48.72% reduction in energy consumption. Compared with LESA, OMECDS experiences a 63.94% increase in energy consumption but exhibits an impressive average PSR improvement of 212.09%. Overall, when considering both the successful completion rate of applications and energy consumption, OMECDS performs better than the other algorithms.

5.2.2. Runtime Analyses

This subsection is aimed at evaluating the time efficiency of the four algorithms. The running time represents the duration from the start of the algorithm to the point of finding a solution. Time performance is one of the important indicators to measure the algorithm.

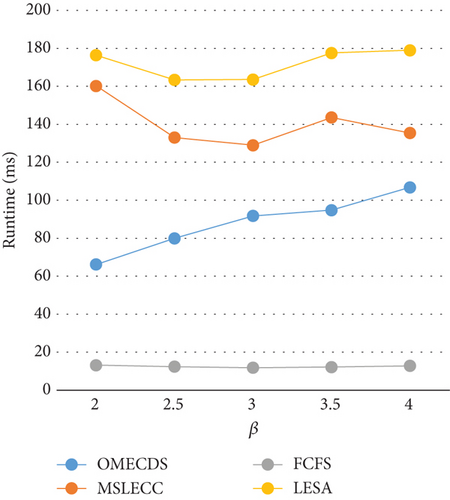

In the first comparison, we analyze the average runtime of all algorithms under different deadline factors β, as illustrated in Figure 6. As mentioned previously, when the deadline constraint becomes looser, OMECDS algorithm tends to prioritize solutions with lower energy consumption. Consequently, as β increases, the running time of OMECDS experiences a slight increase. On the other hand, FCFS exhibits better time performance compared to OMECDS, LESA, and MSLECC due to its utilization of the maximum frequency for processors without dynamic adjustments. However, it is important to note that OMECDS still demonstrates better time performance compared to LESA and MSLECC. Both MSLECC and LESA are heuristic-based algorithms with low time complexity, indicating that OMECDS maintains good execution efficiency while achieving its objectives.

The last experiment is aimed at investigating the average running time of all algorithms under varying numbers of randomly generated applications. In Figure 7, it can be observed that as the number of applications increases, the average execution time of all algorithms also increases. However, our proposed algorithm demonstrates significantly shorter average execution times when compared to LESA and MSLECC. Although FCFS has a shorter execution time than OMECDS, it is important to highlight that OMECDS excels in application completion and energy savings. In summary, OMECDS exhibits outstanding performance in terms of execution efficiency, energy savings, and successful application scheduling.

6. Conclusions

To tackle the dynamic scheduling problem associated with parallel applications in heterogeneous distributed systems, we have introduced the OMECDS algorithm, which is aimed at minimizing energy consumption while adhering to deadline constraints. The proposed algorithm boasts a low computational complexity, making it efficient in practical implementation. Our devised task priority method effectively manages the spontaneous arrival of applications, while the formulation of subdeadlines and the selection mechanism for processors strike an optimal balance between meeting deadlines and minimizing energy consumption. Experimental results have demonstrated the superior performance of our approach compared to alternative algorithms. However, for future work, we plan to delve into distributed methods to tackle existing challenges. Centralized approaches are susceptible to single points of failure. We aspire to explore distributed solutions that can augment reliability and robustness in scheduling parallel applications.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Y.L. and J.C. designed the resource. Y.L. wrote the first draft of the paper. Y.L., X.D., J.C., and C.D. analyzed the results, read the manuscript, and approved the final version. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 62106202), the Key Research and Development Program of Shaanxi (No. 2024GX-YBXM-118), the Aeronautical Science Foundation of China (No. 2023M073053003), and the Fundamental Research Funds for the Central Universities.

Open Research

Data Availability Statement

Data will be made available on request.