An Extensible Gradient-Based Optimization Method for Parameter Identification in Power Distribution Network

Abstract

Accurate parameter identification of power distribution networks (PDNs) has recently attracted considerable attention. However, power device parameters are often unreliable because they vary with the operating status and are subject to manual-entry errors. Reliable algorithms that identify PDN parameters with both high accuracy and high efficiency are therefore urgently needed. Most existing algorithms are gradient-free and based on heuristic schemes, which leads to unstable numerical calculations. Building on our previous work on the adaptive gradient-based optimization (AGBO) method, we propose an extended version, namely, the AGBO-Pro model. In this method, both the numerical and categorical features of experimental observations are utilized and combined with each other via a weighted average. Compared with several heuristic algorithms, AGBO-Pro reduces the RMSE, MAE, and MAPE by a factor of about 2 or more, with much faster and more stable convergence of the loss function. By further applying a linear transformation to the calculated voltage before the loss function is evaluated, the AGBO-Pro model achieves more robust performance, with a much lower variance across repeated numerical calculations. This work shows the potential of extending gradient-based optimization methods to parameter identification in PDNs.

1. Introduction

Acquiring accurate and reliable device parameters is crucial for power distribution networks (PDNs) because of their multifaceted implications [1]. However, the lack of in situ measurement techniques makes it difficult to obtain certain PDN parameters directly, and these parameters are typically assumed to be static in practice. They include line resistance, line reactance, transformer resistance, transformer reactance, transformer conductance, and transformer susceptance. This limitation often leads to poor parameter estimates for PDNs [2]. To address these challenges, numerous approaches have been developed to improve numerical efficiency and reduce residuals in parameter estimation, drawing on measurement infrastructure such as supervisory control and data acquisition (SCADA), phasor measurement units (PMUs), and advanced metering infrastructure (AMI). These approaches include the full-scale approach [3], PSOSR [4], the normalized Lagrange multiplier (NLM) test [5], the finite-time algorithm (FTA) [6], the residual method, the sensitivity analysis method, the Lagrange multiplier method [7], the Heffron-Phillips method [8], and a specialized Newton–Raphson iteration [9]. In addition, recent advances in machine learning and deep learning have led to smart methods, including artificial neural networks [10], graph convolutional networks (GCNs) [11], support vector machines (SVMs) [12], multihead attention networks [13], deep reinforcement learning [14], estimation using synchrophasor data [15], PSCAD simulation [16], multimodal long short-term memory deep learning [17], and edge computing [18]. Although these methods are effective on simulated data, they often require specialized measuring devices.

To overcome the lack of required data and measuring devices, a mathematical approach based on the power flow model can be employed. The power flow model relates the PDN parameters to easily obtainable quantities such as active power, reactive power, and voltage. The hard-to-measure parameters listed above can then be optimized by algorithms that take the measured active power, reactive power, and voltage on the low-voltage side as inputs. Using the power flow model, the voltages on the high-voltage side are calculated, and the residuals between the calculated and measured values are used to construct the loss function for the optimization. Optimization methods for parameter identification generally fall into two categories: gradient-free methods [19–25] and gradient-based methods [26, 27]. Gradient-free methods rely on heuristic or biomimetic optimization rules, such as particle swarm optimization (PSO), the genetic algorithm (GA), the ant colony algorithm, the Aquila optimizer (AO) [28], nuclear reaction optimization (NRO) [29], and the Pareto-like sequential sampling heuristic method (PSS) [30]. The performance of these heuristic algorithms depends strongly on the initialization. Gradient-based methods, on the other hand, are usually constructed by combining the physical model of the PDN with a neural network and back-propagating the loss function. Because the derivatives of the loss function with respect to the parameters to be optimized are obtained deterministically through the chain rule and automatic differentiation, many advanced gradient descent algorithms can be used to accelerate the optimization. In addition, researchers have paid increasing attention to data preprocessing methods, including clustering algorithms and hypothesis testing [31, 32].

The main contributions of this work are summarized as follows.

- (1)

An extensible gradient-based optimization method is proposed. It is constructed with a customized neural network layer and loss function, and it achieves more accurate and more robust parameter identification in PDNs.

- (2)

In the physical-informed multihead neural network, the experimental measurements are separated into numerical features and categorical features. After passing through embedding layers and a hidden layer, the categorical features are transformed into a weight distribution and incorporated into the numerical features via a linear transformation. Such a treatment has rarely been studied in other investigations.

- (3)

In terms of all three evaluation functions (MAE, RMSE, and MAPE), this hybrid method performs much better than several standalone optimization algorithms.

This paper is organized as follows. Section 2 introduces the identification equations of the power flow model in PDNs and proposes the AGBO-Pro optimization method. The experimental data and calculation details are given in Section 3, the results and discussion in Section 4, and a brief conclusion in Section 5.

2. Materials and Methods

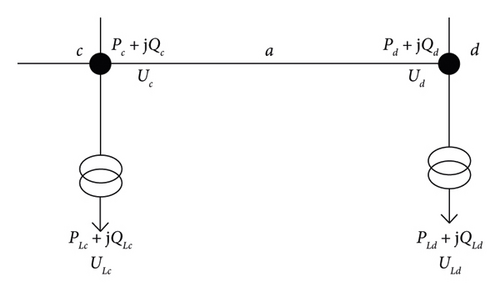

2.1. Power Flow Model Calculation

The fundamental principles of PDN analysis can be found in references [20, 31–33]. To simplify the computation, balanced three-phase conditions are assumed for the power flow calculations in this work. The schematic diagram of the power flow calculation circuit model is shown in Figure 1.
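As a rough illustration of the kind of calculation the power flow model performs, the sketch below computes the sending-end (upstream) voltage magnitude of a single series branch with impedance R + jX, given the receiving-end voltage U and the load P + jQ, under the balanced per-phase assumption stated above. The function name `upstream_voltage` and the single-branch topology are assumptions for illustration; the full model in this work also involves the transformer shunt parameters Gd and Bd and the line parameters, which are not modeled here.

```python
def upstream_voltage(p: float, q: float, u: float, r: float, x: float) -> float:
    """Sending-end voltage magnitude of one series branch (per phase).

    Hypothetical helper: the receiving-end voltage u is taken as the angle
    reference (a real number), so the load current is I = (P - jQ) / U.
    The series voltage drop (R + jX) * I is then added to U.
    """
    current = complex(p, -q) / u           # I = conj(S / U) with U real
    sending = u + complex(r, x) * current  # U_s = U + Z * I
    return abs(sending)                    # voltage magnitude
```

For small impedances the result is close to the familiar approximation U + (PR + QX)/U. Chaining such branch calculations and comparing the final magnitude with the measured high-voltage-side voltage would give residuals of the kind used to build the loss function.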

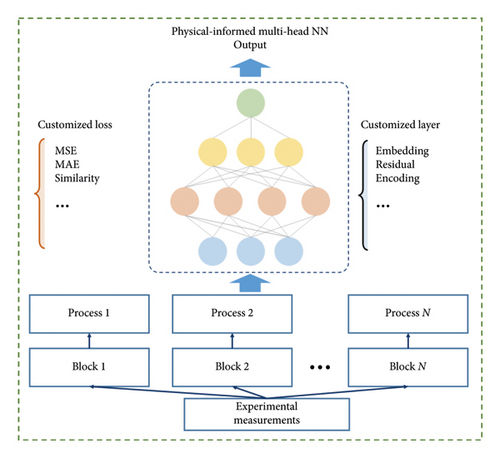

2.2. Theoretical Framework of AGBO-Pro for Parameter Identification in PDN

The schematic diagram of the framework, which combines a gradient-based neural network (NN) with a gradient-free optimization method, is shown in Figure 2. The inputs of the framework are experimental measurements of the PDN, which can be divided into several blocks (or fields), including numerical features and categorical features. Each block can be processed in a customized way and then connected to customized layers. The loss function for gradient-based optimization is also flexible.

2.2.1. Physical-Informed Multihead Neural Network

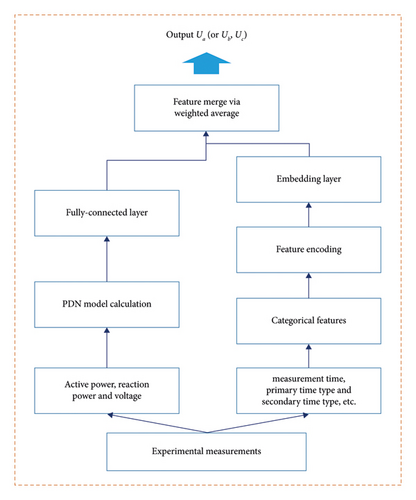

The experimental measurements of the PDN can be classified into two categories: continuous (numerical) features and discrete (categorical) features, such as measurement time, primary time type, and secondary time type. The numerical and categorical features are processed differently by a multihead neural network, shown in Figure 3. The input layer of the NN is separated into two blocks. The first block contains the numerical features, defined as $X_1 = (P_{Ld,i}, Q_{Ld,i}, U_{Ld,i})$, i.e., the active power, reactive power, and voltage on the low-voltage side of the transformers, where the subscript $i = 1, 2, \dots, N$ indexes the sample points. The second block contains the categorical features $X_2 = (T_i, P_i, S_i, \dots)$, where $T_i$ and $P_i$ represent the measurement time and the peak-valley period, respectively. The measurement time has 24 categories, and the peak-valley period has 3 categories (i.e., high, medium, and low).
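To make the two-block input more concrete, the following pure-Python sketch shows only the forward pass of a categorical head: each categorical feature is looked up in its own embedding table, the embeddings are concatenated, passed through one hidden layer, and turned into a weight distribution over the samples with a softmax. The cardinalities (24, 2, 3, 2) follow the categorical features described in Section 3.1, and the embedding sizes (64, 32, 32, 32) and single hidden layer follow the settings selected in Section 4. The class name `CategoricalHead`, the hidden width of 32, the random initialization, the ReLU activation, and the softmax over samples are all assumptions; the exact way the weights are merged with the numerical block is not reproduced here.

```python
import math
import random

# Categories per feature (Section 3.1) and embedding sizes (Section 4).
CARDINALITY = {"measurement_time": 24, "date_type": 2,
               "primary_time_type": 3, "secondary_time_type": 2}
EMBED_DIM = {"measurement_time": 64, "date_type": 32,
             "primary_time_type": 32, "secondary_time_type": 32}


class CategoricalHead:
    """Forward pass of a hypothetical categorical branch (no training)."""

    def __init__(self, hidden: int = 32, seed: int = 0):
        rng = random.Random(seed)
        # One embedding table (rows = categories) per categorical feature.
        self.tables = {
            name: [[rng.gauss(0.0, 0.1) for _ in range(EMBED_DIM[name])]
                   for _ in range(card)]
            for name, card in CARDINALITY.items()
        }
        in_dim = sum(EMBED_DIM.values())  # 64 + 32 + 32 + 32 = 160
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)]
                   for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.1) for _ in range(hidden)]

    def score(self, sample: dict) -> float:
        # Concatenate the embeddings of all categorical features.
        x = []
        for name in CARDINALITY:
            x.extend(self.tables[name][sample[name]])
        # Single hidden layer with ReLU, followed by a scalar output.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        return sum(w * hi for w, hi in zip(self.w2, h))

    def weights(self, samples: list) -> list:
        # Numerically stable softmax over samples: weights sum to 1.
        scores = [self.score(s) for s in samples]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        return [e / total for e in exps]
```

For example, `CategoricalHead().weights(batch)` returns one positive weight per sample in `batch`, where each sample is a dict of integer category indices keyed by the four feature names.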

In our previous work, we derived the gradients of the loss function with respect to Rd, Xd, Gd, Bd, Rcd, and Xcd. Based on the PDN model, the loss function is calculated in a forward pass; the gradient of the loss function is then back-propagated to update the connection weights of the NN.

2.2.2. Gradient-Based Optimization Algorithm

With these gradients of the loss function with respect to the parameters, gradient-based optimization can be implemented. The pseudocode of the optimization method used in this work is shown in Algorithm 1.

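Algorithm 1: Adaptive gradient-based optimization method.

- Input: $\theta_0$, initial parameters; $f(\theta)$, objective function to be optimized; $\beta_1, \beta_2$, decay rates for the moment estimates; $m_0$, initialized first-order moment; $v_0$, initialized second-order moment; $t$, time step; $\eta$, learning rate
- $\rho_\infty \leftarrow 2/(1-\beta_2) - 1$ (maximum length of the approximated simple moving average)
- While $\theta_t$ is not converged do
  - $t \leftarrow t + 1$
  - $g_t \leftarrow \nabla_\theta f_t(\theta_{t-1})$ (gradient with respect to the parameters at time step $t$)
  - $m_t \leftarrow \beta_1 m_{t-1} + (1-\beta_1)\, g_t$ (update the first-order moment)
  - $v_t \leftarrow \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2$ (update the second-order moment)
  - $\hat{m}_t \leftarrow m_t / (1-\beta_1^t)$ (bias-corrected first-order moment)
  - $\rho_t \leftarrow \rho_\infty - 2t\beta_2^t / (1-\beta_2^t)$ (length of the approximated simple moving average)
  - If the variance is tractable, i.e., $\rho_t > 4$, then
    - $l_t \leftarrow \sqrt{(1-\beta_2^t)/v_t}$ (update the adaptive learning rate)
    - $r_t \leftarrow \sqrt{\dfrac{(\rho_t-4)(\rho_t-2)\rho_\infty}{(\rho_\infty-4)(\rho_\infty-2)\rho_t}}$ (variance rectification term)
    - $\theta_t \leftarrow \theta_{t-1} - \eta\, r_t\, \hat{m}_t\, l_t$ (update the parameters with the rectified adaptive step)
  - Else
    - $\theta_t \leftarrow \theta_{t-1} - \eta\, \hat{m}_t$ (update the parameters with un-adapted momentum)
- End while
- Return $\theta_t$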
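The update loop of Algorithm 1 can be sketched in pure Python as follows. The steps appear to follow the rectified Adam (RAdam) update rule; this sketch additionally assumes zero-initialized moments ($m_0 = v_0 = 0$), a fixed iteration budget in place of a convergence test, and a small constant `eps` added to $\sqrt{v_t}$ for numerical safety, as is common in practice. The function name `radam_minimize` and the quadratic test objective are hypothetical.

```python
import math
from typing import Callable, List


def radam_minimize(grad: Callable[[List[float]], List[float]],
                   theta0: List[float], lr: float = 1e-2,
                   beta1: float = 0.9, beta2: float = 0.999,
                   steps: int = 3000, eps: float = 1e-8) -> List[float]:
    theta = list(theta0)
    m = [0.0] * len(theta)                 # first-order moment, m_0 = 0
    v = [0.0] * len(theta)                 # second-order moment, v_0 = 0
    rho_inf = 2.0 / (1.0 - beta2) - 1.0    # maximum SMA length
    for t in range(1, steps + 1):
        g = grad(theta)
        for k, gk in enumerate(g):
            m[k] = beta1 * m[k] + (1.0 - beta1) * gk
            v[k] = beta2 * v[k] + (1.0 - beta2) * gk * gk
        bias1 = 1.0 - beta1 ** t
        bias2 = 1.0 - beta2 ** t
        rho_t = rho_inf - 2.0 * t * beta2 ** t / bias2  # current SMA length
        for k in range(len(theta)):
            m_hat = m[k] / bias1           # bias-corrected first moment
            if rho_t > 4.0:
                # Variance is tractable: rectified adaptive step.
                r_t = math.sqrt((rho_t - 4.0) * (rho_t - 2.0) * rho_inf /
                                ((rho_inf - 4.0) * (rho_inf - 2.0) * rho_t))
                l_t = math.sqrt(bias2) / (math.sqrt(v[k]) + eps)
                theta[k] -= lr * r_t * m_hat * l_t
            else:
                # Early steps: plain momentum update without adaptation.
                theta[k] -= lr * m_hat
    return theta
```

For instance, minimizing the toy objective $(\theta_0 - 3)^2 + (\theta_1 + 1)^2$ with `radam_minimize(lambda th: [2 * (th[0] - 3), 2 * (th[1] + 1)], [0.0, 0.0])` drives the parameters close to (3, -1). In the PDN setting, `grad` would instead return the gradients of the loss with respect to the network weights obtained by back-propagation.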

2.3. Evaluation Functions of the Parameter Identification Algorithm

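Three evaluation functions are used to assess the identification accuracy, where $N$ is the number of samples and $U_{c,i}$ and $U_{cal,i}$ denote the measured and calculated voltages of sample $i$, respectively.

- (1)

Mean absolute error (MAE):

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|U_{c,i} - U_{cal,i}\right| \qquad (14)$$

- (2)

Root mean square error (RMSE):

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(U_{c,i} - U_{cal,i}\right)^2} \qquad (15)$$

- (3)

Mean absolute percentage error (MAPE):

$$\mathrm{MAPE} = \frac{100\%}{N}\sum_{i=1}^{N}\left|\frac{U_{c,i} - U_{cal,i}}{U_{c,i}}\right| \qquad (16)$$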
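A direct transcription of the three evaluation functions is sketched below, assuming MAPE is expressed in percent and that no measured voltage is zero. The function names are illustrative.

```python
import math
from typing import Sequence


def mae(measured: Sequence[float], calculated: Sequence[float]) -> float:
    """Mean absolute error between measured and calculated voltages."""
    return sum(abs(u - c) for u, c in zip(measured, calculated)) / len(measured)


def rmse(measured: Sequence[float], calculated: Sequence[float]) -> float:
    """Root mean square error between measured and calculated voltages."""
    sq = sum((u - c) ** 2 for u, c in zip(measured, calculated))
    return math.sqrt(sq / len(measured))


def mape(measured: Sequence[float], calculated: Sequence[float]) -> float:
    """Mean absolute percentage error in percent (measured values nonzero)."""
    rel = sum(abs((u - c) / u) for u, c in zip(measured, calculated))
    return 100.0 * rel / len(measured)
```

With measured voltages [100, 200, 400] and calculated voltages [110, 190, 400], these give an MAE of 20/3, an RMSE of the square root of 200/3, and a MAPE of 5%.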

3. Dataset and Calculation Details

3.1. Data Collection and Description

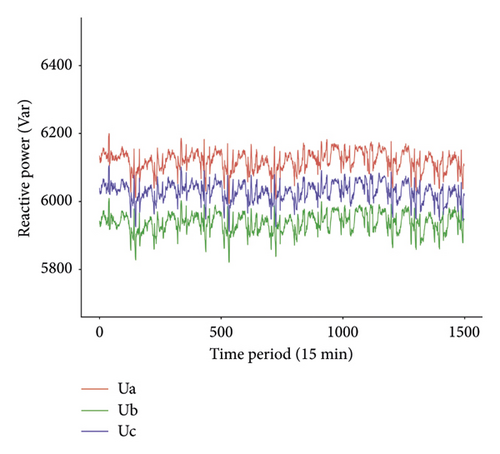

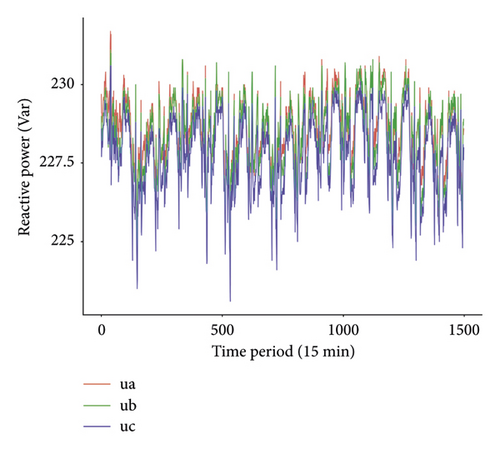

In this work, a dataset of 1499 samples collected via SCADA [33, 34] is used to train the proposed model. The voltage profiles on the high-voltage (Ua, Ub, and Uc) and low-voltage (ua, ub, and uc) sides are presented in Figures 4 and 5, respectively.

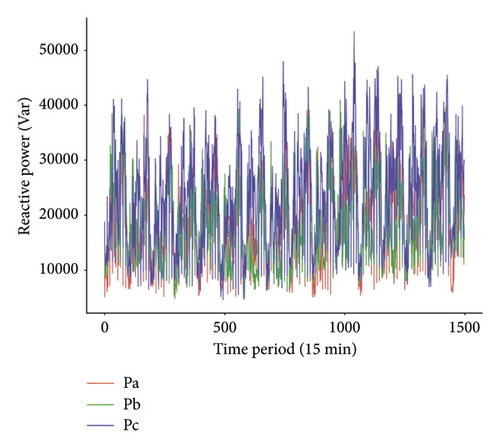

Figures 4 and 5 show that the three phase voltages on the high-voltage side are nearly balanced, which is consistent with the balanced three-phase assumption in Section 2.1. In addition, the active power (Pa, Pb, and Pc) and reactive power (Qa, Qb, and Qc) profiles on the low-voltage side are given in Figures 6 and 7, respectively.

Figures 6 and 7 show that the active power and reactive power follow similar trends, indicating that the data collection is stable enough for parameter identification of the PDN.

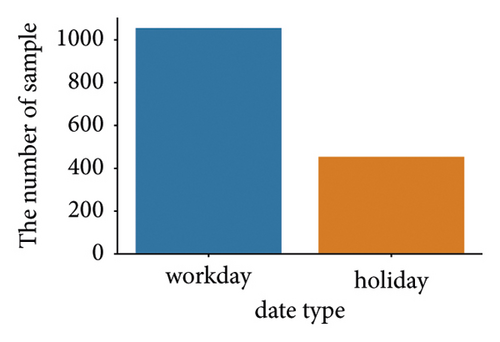

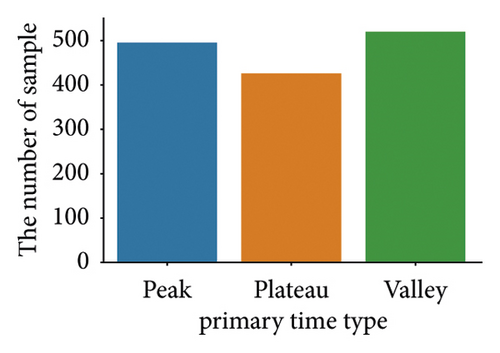

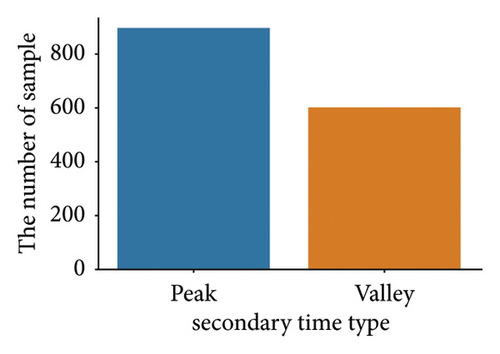

All collected samples have four categorical features: measurement time, date type, primary time type, and secondary time type. The measurement time is the time of day at which the sample is measured, ranging from 0 to 24 hours. The date type indicates whether the measurement falls on a workday or a holiday. The primary and secondary time types are two different divisions of the day. The primary time type has three levels: peak hours are 09:00 to 12:00 and 18:00 to 21:00, plateau hours are 13:00 to 17:00 and 22:00 to 23:00, and valley hours are 00:00 to 08:00. The secondary time type has two levels: peak hours are 08:00 to 21:00, and valley hours are 22:00 to 07:00. The distributions of these four categorical features are shown in Figure 8.
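As an illustration of how these categorical features could be encoded as integer indices before embedding, the sketch below maps an integer hour of day to the measurement-time, primary-time-type, and secondary-time-type categories. It assumes that each boundary hour is inclusive (for example, 12:00 to 12:59 counts as peak), which makes the listed periods cover all 24 hours exactly once. The function names and index orders are hypothetical, and the date type is passed in as a flag because it depends on a holiday calendar.

```python
PRIMARY_LEVELS = ("peak", "plateau", "valley")
SECONDARY_LEVELS = ("peak", "valley")


def primary_time_type(hour: int) -> str:
    # Peak: 09-12 and 18-21; plateau: 13-17 and 22-23; valley: 00-08.
    if 9 <= hour <= 12 or 18 <= hour <= 21:
        return "peak"
    if 13 <= hour <= 17 or 22 <= hour <= 23:
        return "plateau"
    return "valley"


def secondary_time_type(hour: int) -> str:
    # Peak: 08-21; valley: 22-07 (wrapping past midnight).
    return "peak" if 8 <= hour <= 21 else "valley"


def encode(hour: int, is_holiday: bool) -> dict:
    """Integer category indices for one sample (hypothetical layout)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be in 0..23")
    return {
        "measurement_time": hour,                  # 24 categories
        "date_type": int(is_holiday),              # 0 = workday, 1 = holiday
        "primary_time_type": PRIMARY_LEVELS.index(primary_time_type(hour)),
        "secondary_time_type": SECONDARY_LEVELS.index(secondary_time_type(hour)),
    }
```

Note that under this reading 08:00 is a valley hour for the primary time type but a peak hour for the secondary time type, which is one reason the two divisions carry different information.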

3.2. Evaluation and Calculation

Table 1: Search ranges of the parameters to be identified.

| Parameter name | Abbreviation | Upper bound | Lower bound | Unit |
|---|---|---|---|---|
| Line resistance | Rcd | 0.5 | 0.005 | Ω/km |
| Line reactance | Xcd | 0.5 | 0.005 | Ω/km |
| Transformer resistance | Rd | 10 | 0.8 | Ω |
| Transformer reactance | Xd | 20 | 5 | Ω |
| Transformer conductance | Gd | 8e−6 | 4e−6 | S |
| Transformer susceptance | Bd | 8e−5 | 2e−5 | S |
| Slope of linear regression | a | 5 | −5 | — |
| Bias of linear regression | b | 1000 | 500 | — |

To mitigate the impact of the randomness associated with the AGBO and sequential model-based optimization (SMBO) methods on the results of this study, the dataset was randomly partitioned 25 times, and the results are reported over these 25 partitions to ensure their accuracy and stability.
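The repeated-splitting protocol can be sketched as follows. The 80/20 train/test ratio, the per-repeat seeding scheme, and the use of the sample standard deviation for the ± values are assumptions, since the split proportions and the dispersion measure are not stated here. The `evaluate` argument stands for any hypothetical routine that fits a method on the training indices and returns an error such as MAE on the test indices.

```python
import random
import statistics
from typing import Callable, List, Tuple

Split = Tuple[List[int], List[int]]


def repeated_splits(n_samples: int, repeats: int = 25,
                    test_fraction: float = 0.2, seed: int = 0) -> List[Split]:
    """Return `repeats` random (train, test) index partitions."""
    splits = []
    n_test = int(round(test_fraction * n_samples))
    for r in range(repeats):
        idx = list(range(n_samples))
        random.Random(seed + r).shuffle(idx)  # independent shuffle per repeat
        splits.append((idx[n_test:], idx[:n_test]))
    return splits


def summarize(evaluate: Callable[[List[int], List[int]], float],
              splits: List[Split]) -> Tuple[float, float]:
    """Mean and sample standard deviation of a score over all splits."""
    scores = [evaluate(train, test) for train, test in splits]
    return statistics.mean(scores), statistics.stdev(scores)
```

For the 1499 samples used here, `repeated_splits(1499)` yields 25 disjoint train/test partitions of the full index set, each with 300 test samples under the assumed 20% ratio.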

4. Results and Discussion

Before the results are discussed, the hyperparameter settings of each method are described as follows. For the tree-structured Parzen estimator (TPE), the prior weight and the number of startup jobs are set to 1 and 20, respectively, and the rate of reduction in simulated annealing (SA) is kept at its default value of 0.1. The learning rate is 5e−4 in the AGBO-based methods. The maximum number of iterations is 1000 for all methods in this study. Table 2 lists the parameter identification results of the AGBO- and SMBO-based methods obtained with the mean square error between Uc and Ucal as the loss function.

Table 2: Parameter identification results with the mean square error loss.

| Method | MAE | RMSE | MAPE |
|---|---|---|---|
| **AGBO-Pro**∗ | **25.415 ± 0.855** | **32.511 ± 1.050** | **0.415 ± 0.014** |
| AGBO | 64.100 ± 0.474 | 65.791 ± 0.478 | 1.047 ± 0.008 |
| RS | 65.570 ± 0.746 | 67.148 ± 0.699 | 1.071 ± 0.012 |
| TPE | 64.689 ± 0.648 | 66.355 ± 0.633 | 1.057 ± 0.011 |
| SA | 65.894 ± 0.983 | 67.439 ± 0.913 | 1.077 ± 0.016 |
| AO | 64.5001 ± 0.7016 | 66.1795 ± 0.6854 | 1.0539 ± 0.0115 |
| NRO | 64.5174 ± 0.6998 | 66.1954 ± 0.6837 | 1.054 ± 0.0114 |
| PSS | 64.6944 ± 0.6984 | 66.3599 ± 0.6847 | 1.0571 ± 0.0114 |

- ∗AGBO-Pro uses the mean square loss to ensure a fair comparison. Bold values indicate that AGBO-Pro attains the lowest values of all three evaluation functions (MAE, RMSE, and MAPE), i.e., the best performance.

Table 2 shows that AGBO-Pro performs best, with significantly lower MAE, RMSE, and MAPE than all other methods, including the metaheuristic algorithms AO, NRO, and PSS. AGBO also outperforms the SMBO-based methods, but the differences are not remarkable because the statistical relationship between Uc and Ucal is neglected.

Other recent studies have also reported results with the same metrics on the same dataset, such as Markov chain Monte Carlo (MCMC) and SMBO combined with clustering and hypothesis testing (denoted as MCMCC and SMBOC, respectively). Li et al. [32] reported best MAE values of 62.467 ± 0.366 and 61.868 ± 0.322 for MCMCC and SMBOC, respectively. In another paper [31], the MAE values obtained by MCMCC and SMBOC are 62.136 ± 0.336 and 61.268 ± 0.311, respectively. All of these values are well above the MAE of AGBO-Pro in Table 2.

Following the previous study [26], a linear transformation, with the slope a and bias b whose search ranges are given in Table 1, is applied to Ucal before the loss function is calculated. The parameter identification results with the linear transformation are listed in Table 3.

Table 3: Parameter identification results with the linear transformation.

| Method | MAE | RMSE | MAPE |
|---|---|---|---|
| AGBO-Pro-LR∗ | 5.131 ± 0.093 | 6.514 ± 0.152 | 0.084 ± 0.002 |
| AGBO-LR | 5.247 ± 0.079 | 6.593 ± 0.111 | 0.086 ± 0.001 |
| RS-LR | 6.447 ± 0.801 | 8.054 ± 0.958 | 0.105 ± 0.013 |
| TPE-LR | 6.078 ± 0.753 | 7.589 ± 0.830 | 0.099 ± 0.012 |
| SA-LR | 6.970 ± 1.111 | 8.682 ± 1.318 | 0.114 ± 0.018 |

- ∗Results of AGBO-Pro obtained with the Pearson correlation coefficient loss. With the linear transformation (labeled "AGBO-Pro-LR"), the optimization method proposed in this work still performs best.
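The linear transformation and the Pearson correlation loss can be illustrated with the sketch below. It assumes that the transformation has the form a·Ucal + b, with a and b the slope and bias listed in Table 1, and that the loss is 1 − r, where r is the Pearson correlation coefficient between Uc and Ucal; both forms are assumptions, and the function names are hypothetical. Because r is unchanged by any affine map with a > 0, such a loss does not depend on a and b, which can then be obtained by ordinary least squares. This is one plausible reading of how the two pieces fit together, not necessarily the exact procedure used here.

```python
import math
from typing import Sequence, Tuple


def _moments(x: Sequence[float], y: Sequence[float]):
    """Means and centered sums of squares/cross-products of x and y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return mx, my, sxx, syy, sxy


def pearson_loss(u_meas: Sequence[float], u_cal: Sequence[float]) -> float:
    """1 - Pearson r between measured and calculated voltages."""
    _, _, sxx, syy, sxy = _moments(u_cal, u_meas)
    return 1.0 - sxy / math.sqrt(sxx * syy)


def fit_linear(u_cal: Sequence[float],
               u_meas: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope a and bias b such that u_meas ~ a * u_cal + b."""
    mx, my, sxx, _, sxy = _moments(u_cal, u_meas)
    a = sxy / sxx
    return a, my - a * mx
```

For example, with `u_cal = [1, 2, 3, 4]` and `u_meas = [3, 5, 7, 9]`, the loss is 0 and the fitted transformation is a = 2, b = 1; replacing `u_cal` by `2 * u_cal + 5` leaves the loss unchanged.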

All methods perform better in Table 3 than in Table 2, which indicates that the linear transformation between Uc and Ucal contributes substantially to identifying the PDN parameters. Moreover, the comparison between AGBO-Pro-LR and AGBO-LR (MAE of 5.131 versus 5.247) suggests that supplementary categorical information, such as measurement time, date type, primary time type, and secondary time type, contributes to PDN parameter identification and that this information can be merged by the AGBO-Pro method proposed in this work.

The learning rate, the embedding dimension, and the number of hidden layers are three critical hyperparameters of AGBO-Pro; therefore, the PDN parameter identification performance under different settings of these hyperparameters is investigated in this section. The results for various learning rates are listed in Table 4.

Table 4: Performance of AGBO-Pro with different learning rates.

| Learning rate | MAE | RMSE | MAPE |
|---|---|---|---|
| 5e−2 | 5.850 ± 0.192 | 7.365 ± 0.266 | 0.0956 ± 0.00315 |
| 1e−2 | 5.486 ± 0.242 | 6.916 ± 0.266 | 0.0897 ± 0.0028 |
| **5e−3** | **5.131 ± 0.093** | **6.514 ± 0.152** | **0.0839 ± 0.0015** |
| 1e−3 | 5.486 ± 0.172 | 6.916 ± 0.242 | 0.0897 ± 0.0028 |
| 1e−4 | 8.231 ± 0.327 | 11.121 ± 0.493 | 0.135 ± 0.0053 |
| 1e−5 | 8.545 ± 0.057 | 11.454 ± 0.326 | 0.140 ± 0.0009 |

- The bold values indicate that with the learning rate 5e − 3, the model has the lowest values in three evaluation functions.

The learning rate has a remarkable influence on AGBO-Pro: the identification performance is best at a learning rate of 5e−3, and a learning rate between 1e−3 and 1e−2 is recommended. The embedding dimension is then investigated at the optimal learning rate, and the results are listed in Table 5.

Table 5: Performance of AGBO-Pro with different embedding dimensions of the four categorical features.

| Measurement time | Date type | Primary time type | Secondary time type | MAE | RMSE | MAPE |
|---|---|---|---|---|---|---|
| 32 | 16 | 16 | 16 | 5.370 ± 0.201 | 6.751 ± 0.279 | 0.0878 ± 0.003 |
| 64 | 32 | 32 | 32 | 5.131 ± 0.093 | 6.514 ± 0.152 | 0.0839 ± 0.001 |
| 128 | 64 | 64 | 64 | 5.224 ± 0.120 | 6.596 ± 0.209 | 0.0854 ± 0.002 |

Because the categorical features have few categories, the embedding dimensions used in this work do not exceed 128. According to Table 5, changing the embedding dimension has only a minor impact on the identification performance, and embedding dimensions of 64, 32, 32, and 32 are chosen for the measurement time, date type, primary time type, and secondary time type, respectively. AGBO-Pro includes a hidden layer to learn from the categorical features after embedding, and the influence of the number of hidden layers is shown in Table 6.

Table 6: Performance of AGBO-Pro with different numbers of hidden layers.

| Number of hidden layers | MAE | RMSE | MAPE |
|---|---|---|---|
| **1 hidden layer** | **5.131 ± 0.093** | **6.514 ± 0.152** | **0.084 ± 0.002** |
| 2 hidden layers | 5.574 ± 0.285 | 7.048 ± 0.344 | 0.091 ± 0.005 |
| 3 hidden layers | 5.545 ± 0.279 | 7.052 ± 0.397 | 0.091 ± 0.005 |

- The bold values indicate that with one hidden layer, the model gains the best performance.

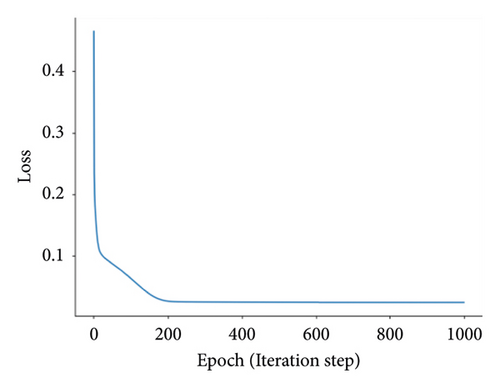

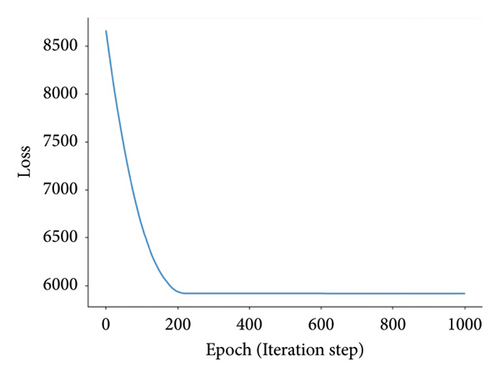

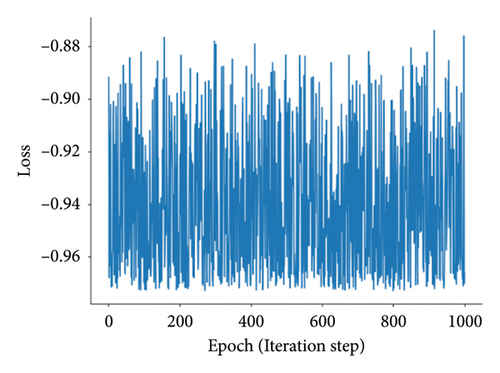

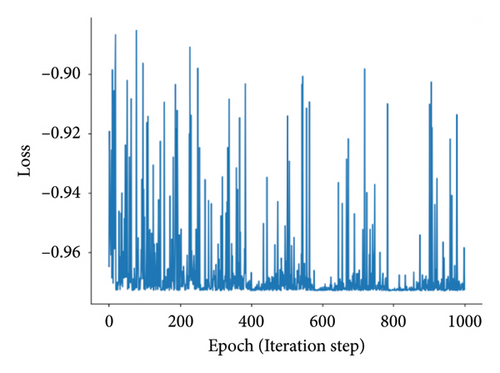

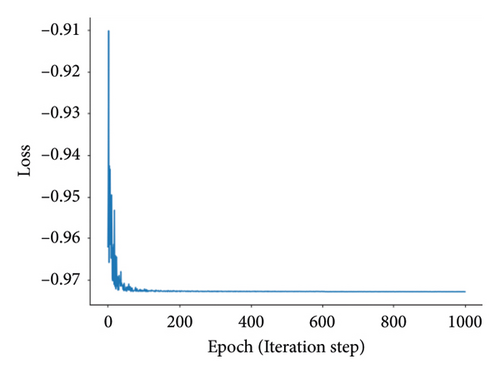

More hidden layers mean more parameters, slower computation, and a higher risk of overfitting. Consistently, Table 6 shows that a single hidden layer achieves the best identification performance. The convergence plots of the AGBO- and SMBO-based methods are displayed in Figure 9.

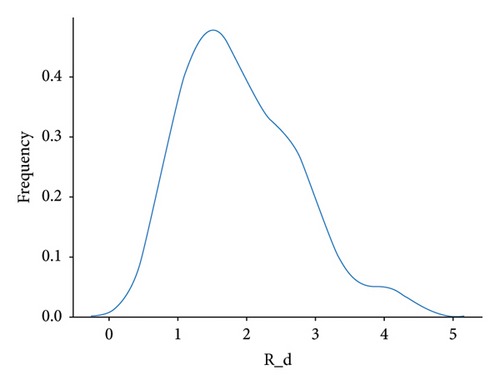

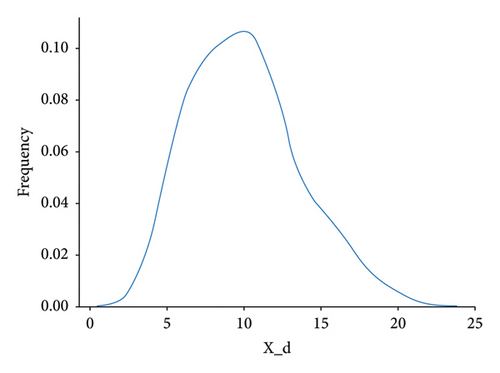

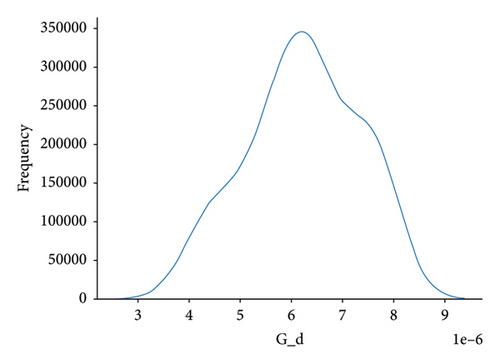

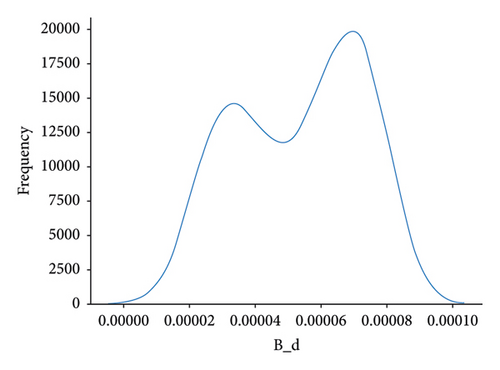

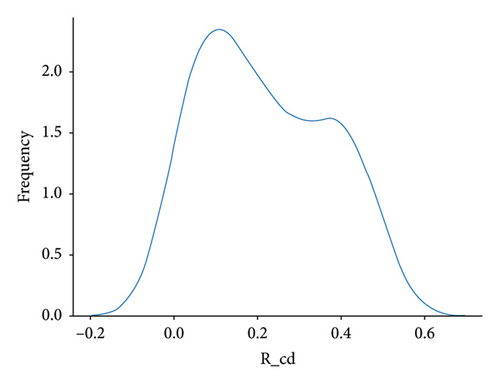

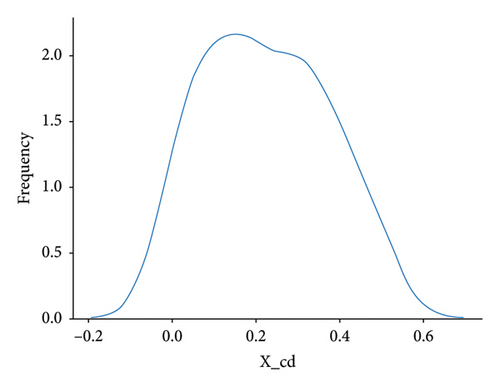

The AGBO-based methods converge within about 200 iterations. Compared with those of the SMBO-based methods, the convergence curves of the AGBO-based methods (AGBO and AGBO-Pro) are much smoother and more stable, because the gradient deterministically sets the search direction of each parameter update. The distributions of the PDN parameters identified by AGBO-Pro-LR over the 25 repeated dataset splits are shown in Figure 10. All identified parameters lie roughly within relatively fixed ranges, which provides a data foundation for subsequent parameter analysis in future research.

5. Conclusion

In this work, we propose an extensible gradient-based optimization method for parameter identification in PDN calculation and analysis. A physical-informed multihead neural network is adopted to treat the numerical features and categorical features separately, and the two kinds of features are merged via a weighted average. Through repeated forward and backward passes, the similarity-based loss function converges quickly with respect to the six parameters to be identified.

We compare the proposed method (namely, the AGBO-Pro model) with the original AGBO model and several heuristic algorithms, including RS, TPE, SA, AO, NRO, and PSS. The numerical calculations show that AGBO-Pro yields the lowest errors in all three evaluation functions (MAE, RMSE, and MAPE), with faster and more stable convergence of the loss function. By further applying a linear transformation to the calculated voltage before evaluating the loss function, the proposed method achieves a lower variance across 25 repeated experiments, showing much more robust performance in parameter identification.

In addition, the effects of hyperparameters of the optimization method, namely, the learning rate, the embedding dimension, and the number of hidden layers, are systematically investigated. The proposed method identifies PDN parameters stably and robustly. This work demonstrates an effective way to incorporate the numerical and categorical features of experimental measurements into a gradient-based optimization method.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This work was supported by Nanjing Institute of Technology Scientific Research Start-Up Fund for High-Level Introduced Talents (grant no. YKJ202135).

Open Research

Data Availability

Data are available from the corresponding authors upon reasonable request.