An Intrusion Detection Model Based on Feature Selection and Improved One-Dimensional Convolutional Neural Network

Abstract

Machine learning is now widely used in network security and has opened new approaches to intrusion detection, but several problems remain. Traditional machine learning techniques for intrusion detection rely on expert experience to choose features, and deep learning approaches have low detection efficiency. This paper proposes an intrusion detection model based on feature selection and an improved one-dimensional convolutional neural network. The model first uses the extreme gradient boosting decision tree (XGboost) algorithm to rank the features of the preprocessed data by importance and then, through comparison experiments, selects the 55 features with the highest contribution. The selected features are fed into the improved one-dimensional convolutional neural network (I1DCNN), which is trained to complete the final classification task. The feature selection and improved one-dimensional convolutional neural network (FS-I1DCNN) intrusion detection model removes the dependence of traditional machine learning methods on expert experience for feature extraction, improves detection efficiency, reduces training time by reducing dimensionality, and increases overall accuracy. The experimental results show that the overall accuracy of the FS-I1DCNN model is 0.67% and 2.94% higher than that of the I1DCNN model without feature selection and the conventional one-dimensional convolutional neural network (1DCNN) model, respectively. Its accuracy, precision, recall, and F1-score are also significantly better than those of other intrusion detection models, including SVM and DBN.

1. Introduction

With the advancement of science and technology, the Internet has become a tool we cannot live without. In recent years, however, the growing complexity of the network environment has led to an increasing number of network security incidents around the world [1, 2], and the number of network attacks on various countries keeps rising. Research on intrusion detection technology has therefore become an indispensable part of network security research. Intrusion detection is a dynamic and crucial security protection tool that forms the second line of defense after the firewall [3]. It has since developed into a crucial way to defend against network intrusion in the current era of widely used encrypted traffic [4].

As artificial intelligence technology advances quickly, intrusion detection models based on machine learning and deep learning keep improving [5, 6], but both families have disadvantages. Traditional machine learning frequently uses SVM, decision trees, and other common algorithms [7–9]. Their main issue is that they rely too heavily on expert experience when extracting features and therefore often lose sight of the connections between features. Wang et al. [10] proposed an intrusion detection framework based on a support vector machine. They apply a logarithm marginal density ratio transformation to form new transformed features and in this way improve the capability of the SVM detection model. To reduce model complexity, Kim et al. [11] established a hierarchically integrated anomaly detection model that employs decision trees to build misuse models, decomposes the data into smaller subsets, and builds single-class SVM models from those subsets. Both approaches use SVM to build detection models; however, SVM training speed drops dramatically when the training data grows substantially [12], so the SVM model is not a very good choice.

Deep learning has recently been used extensively in intrusion detection, but it has suffered from poor detection performance. Wang et al. [13] proposed an intrusion detection method based on feature optimization and a BP neural network, which improves the intrusion detection rate of a few categories while reducing dimensionality. However, because a BP neural network has a large number of parameters, it converges relatively slowly, leading to low training efficiency. To reduce feature dimensionality effectively while maintaining detection performance, Luo and Lu [14] developed a hybrid network attack detection algorithm based on artificial neural networks and genetic algorithms. However, the genetic algorithm itself requires encoding and decoding, resulting in a lengthy training period. In intrusion detection, deep neural networks and convolutional neural networks significantly outperform BP neural networks in training efficiency, but their accuracy still needs to be improved. Zhang et al. [15] established a deep convolutional neural network classification model based on an improved PCA algorithm, which uses PCA for dimensionality reduction and improves detection accuracy, although the overall accuracy remains relatively low. Yang and Wang [16] proposed an improved convolutional neural network intrusion detection method in which the CNN abstracts low-level intrusion traffic data into high-level features. The model is trained to convergence with stochastic gradient descent, its parameters are tuned with an optimization algorithm, and better results are achieved. Moreover, compared with deep convolutional neural networks, whose dimensionality is too high, the one-dimensional convolutional neural network is low-dimensional and better suited to intrusion detection data with strong temporal ordering.
Qazi et al. [17] proposed a deep learning system for network intrusion detection based on a one-dimensional convolutional neural network and achieved an accuracy of 98.96%. However, this system detects only four categories of attacks and is not broadly applicable. Hang et al. [18] proposed an improved one-dimensional convolutional neural network that feeds the results of two convolutions into global average pooling and global maximum pooling and combines the pooled outputs, which improves the network intrusion detection rate and reduces the parameters and training time of the model.

The main contributions of this paper are as follows:

- (1) We used oversampling, undersampling, and standard-deviation (z-score) normalization to process the original dataset.

- (2) We adopted the XGboost feature selection method to select and filter the processed data, which reduced the training time of the model and improved operating efficiency.

- (3) We designed an improved one-dimensional convolutional neural network (I1DCNN) intrusion detection model and compared different optimization algorithms. The Adam optimization algorithm was finally chosen to adjust the model parameters dynamically.

The remainder of this article is organized as follows. Section 2 introduces the related knowledge. Section 3 describes the proposed FS-I1DCNN intrusion detection model. Section 4 presents the experimental results and analysis. Finally, Section 5 draws conclusions and discusses future work.

2. Related Knowledge

2.1. XGboost Feature Selection Algorithm

The XGboost (extreme gradient boosting) algorithm [19] is an efficient system implementation that evolved from the GBDT (gradient boosting decision tree) algorithm. In this paper, we use its tree model to quantify the importance of each feature and select features according to their relative importance.

2.1.1. Decision Tree Model and Their Combinations

fk(xi) is the weight of the leaf node to which the kth tree assigns sample xi, and F denotes the function space of all trees.
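As a minimal illustration of these symbols, the sketch below assumes the usual additive form of a boosted tree ensemble, in which the raw score of a sample is the sum of fk(xi) over all trees. The two stumps, their thresholds, and their leaf weights are hypothetical and only stand in for fitted trees.

```python
from typing import Callable, Sequence

# A "tree" is modelled as a function that maps a sample to the weight of the
# leaf it lands in. Both stumps below are hypothetical, not fitted trees.
Tree = Callable[[Sequence[float]], float]


def stump_a(x: Sequence[float]) -> float:
    # Split on feature 0 at an arbitrary threshold.
    return 0.5 if x[0] > 1.0 else -0.5


def stump_b(x: Sequence[float]) -> float:
    # Split on feature 1 at an arbitrary threshold.
    return 0.25 if x[1] > 0.0 else -0.25


def ensemble_score(trees: Sequence[Tree], x: Sequence[float]) -> float:
    """Sum the leaf weights f_k(x) over all trees in the ensemble."""
    return sum(tree(x) for tree in trees)


score = ensemble_score([stump_a, stump_b], [2.0, -1.0])
print(score)
```

Each function plays the role of one element of the function space F; adding a tree to the list adds one more term to the sum.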

2.1.2. Importance Metrics

As determined by equation (3), X is the set of features assigned to the leaf nodes, Gain is the gain of the Xth feature at each split point, and Cover denotes the number of samples at each node.
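The sketch below shows one way such per-feature scores can be aggregated from the splits of a tree ensemble. It assumes the convention used by XGBoost's built-in importance types, where gain and cover are averaged over every split that uses the feature; equation (3) may combine them differently. The split records and feature names are hypothetical.

```python
from collections import defaultdict


def aggregate_importance(splits):
    """Average gain and cover per feature over all splits that use it.

    `splits` is an iterable of (feature_name, gain, cover) tuples, one per
    internal node across every tree in the ensemble.
    """
    totals = defaultdict(lambda: {"count": 0, "gain": 0.0, "cover": 0.0})
    for feature, gain, cover in splits:
        entry = totals[feature]
        entry["count"] += 1
        entry["gain"] += gain
        entry["cover"] += cover
    return {
        feature: {
            "weight": entry["count"],                  # number of splits
            "gain": entry["gain"] / entry["count"],    # average gain
            "cover": entry["cover"] / entry["count"],  # average cover
        }
        for feature, entry in totals.items()
    }


# Hypothetical split records; names and numbers are illustrative only.
splits = [
    ("Flow Duration", 4.0, 100.0),
    ("Flow Duration", 2.0, 50.0),
    ("Destination Port", 1.0, 30.0),
]
importance = aggregate_importance(splits)
print(importance["Flow Duration"])
```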

2.2. Convolutional Neural Networks

Convolutional neural network (CNN) is one of the representative deep learning networks. It has been successfully applied to a variety of artificial intelligence applications [22], including computer vision and natural language processing [23, 24]. Compared to conventional intelligent algorithms, CNN has much more powerful feature extraction capabilities, with its main components being the convolutional layer, pooling layer, fully connected layer, and softmax layer.

2.2.1. Convolutional Layer

2.2.2. Pooling Layer

2.2.3. Fully Connected Layer

3. Intrusion Detection Model Based on FS-I1DCNN

The FS-I1DCNN intrusion detection model proposed in this paper has three main modules, and its framework is shown in Figure 1. The data preprocessing module samples, filters, and cleans the original dataset. The XGboost feature screening module ranks the features with an XGboost model and, through experimental comparison, keeps the features that contribute most to the model. The I1DCNN traffic detection module completes the classification task with an improved one-dimensional convolutional neural network [22]. After comparison tests and experimental validation, the best configuration is chosen, which completes the FS-I1DCNN intrusion detection model.

3.1. Data Preprocessing

This study uses the CIC-IDS-2017 dataset [25] from the Canadian Institute for Cybersecurity. It was collected from 9 a.m. on July 3, 2017, to 5 p.m. on July 7, 2017, and primarily includes regular benign traffic and 12 categories of attacks. The dataset contains some missing values, and because it is large enough to leave ample experimental data, records with missing values are simply removed so that they cannot affect the experiment. Furthermore, the dataset contains 78 relevant features whose values differ in order of magnitude. Some features take very large values, which degrades model performance if the data are used for training directly. The Flow Duration feature, for instance, ranges from -1 to 119999993, so the features are normalized to speed up computation and to remove the effect of differing magnitudes on the experimental results.

Each group’s feature value, mean value, and standard deviation are represented by the letters L, Lmean, and α, respectively.
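A minimal sketch of this normalization, assuming it is the usual z-score (L − Lmean) / α applied to each feature column. The use of the population standard deviation, the handling of constant columns, and the sample values are assumptions made for illustration.

```python
from statistics import mean, pstdev


def z_score(column):
    """Standardize one feature column: (L - L_mean) / alpha."""
    column_mean = mean(column)
    alpha = pstdev(column)  # population standard deviation (an assumption)
    if alpha == 0:
        # A constant column has no spread; map it to zeros instead of
        # dividing by zero.
        return [0.0 for _ in column]
    return [(value - column_mean) / alpha for value in column]


# Hypothetical Flow Duration values spanning several orders of magnitude.
flow_duration = [-1.0, 3.0, 1000.0, 119999993.0]
scaled = z_score(flow_duration)
print(scaled)
```

After scaling, every column has zero mean and unit standard deviation, so a feature with a range in the hundreds of millions no longer dominates features with small ranges.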

The final data categories and quantities obtained following the above data preprocessing are shown in Table 1.

| Data category | Number of samples after preprocessing |
|---|---|
| Benign | 285324 |
| Bot | 7864 |
| DDos | 128027 |
| Dos GoldenEye | 10293 |
| Dos Hulk | 231073 |
| Dos slowhttptest | 5499 |
| Dos slowloris | 5796 |
| FTP-Patator | 7938 |
| Heartbleed | 5632 |
| Infiltration | 4608 |
| PortScan | 158930 |
| SSH-Patator | 5897 |
| Web Attack | 16620 |

3.2. XGboost Feature Selection

Whether a feature is kept during screening depends on whether it plays a crucial part in the model. To achieve the best classification effect, the contribution of each feature is computed from the XGboost feature importance metrics described above, the features are sorted by contribution, and experiments are run with different numbers of retained features. Algorithm 1 illustrates the XGboost feature selection algorithm proposed in this paper.

Algorithm 1: The XGboost feature selection algorithm.

Input: Intrusion detection dataset S; feature set F = {t1, t2, ⋯, tn}, where n is the total number of features.

Step1: Calculate the importance of each feature and sort the features by importance, from smallest to largest, to obtain a ranked subset of k features (k ≤ n) that satisfy the selection condition.

Step2: Acc = 0, i = k // Acc is the best accuracy so far, and i is the number of retained features

Step3: for i = k to 1, step −1 do

Step4: Input the i most important features into the CNN, and record the accuracy obtained as Acc(i)

Step5: if Acc(i) > Acc then

Step6: Acc ⟵ Acc(i)

Step7: end if

Step8: end for

Output: the feature-filtered dataset S′

The XGboost selection above yields the dataset S′, which has fewer dimensions than the original dataset S; this shortens subsequent training and improves operating efficiency.
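The selection loop of Algorithm 1 can be sketched as follows. This is an illustrative reading, not the authors' implementation: it interprets each iteration as evaluating the i highest-ranked features, assumes that features with zero importance are discarded beforehand, and replaces CNN training with a hypothetical `evaluate` callback.

```python
def select_features(importance, evaluate):
    """Return the top-i feature subset with the highest accuracy.

    importance: dict mapping feature name -> contribution score.
    evaluate:   callable that takes a list of feature names and returns the
                accuracy of a classifier trained on those features.
    """
    # Keep only contributing features, ranked from most to least important.
    ranked = sorted(
        (name for name, score in importance.items() if score > 0),
        key=lambda name: importance[name],
        reverse=True,
    )
    best_acc, best_subset = 0.0, []
    # Try the top-k features, then the top-(k-1), ..., down to the top-1.
    for i in range(len(ranked), 0, -1):
        subset = ranked[:i]
        acc = evaluate(subset)
        if acc > best_acc:
            best_acc, best_subset = acc, subset
    return best_subset, best_acc


# Hypothetical importance scores and a stand-in for CNN training.
scores = {"f1": 0.40, "f2": 0.30, "f3": 0.20, "f4": 0.10, "f5": 0.0}


def toy_accuracy(subset):
    # Pretend accuracy peaks when exactly three features are kept.
    return 1.0 - 0.1 * abs(len(subset) - 3)


best_subset, best_acc = select_features(scores, toy_accuracy)
print(best_subset, best_acc)
```

In practice `evaluate` would train and validate the network on the chosen columns, which makes each iteration expensive; the loop itself stays the same.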

3.3. Improved One-Dimensional Convolutional Neural Network

Traditional convolutional neural networks consist mainly of convolutional, pooling, and fully connected layers. Compared with a fully connected neural network, a CNN has fewer parameters for the same number of hidden units [26] and is easier to train [27]. One-dimensional convolutional neural networks are a kind of CNN that works well on time series problems, such as sensor data or fixed-length periodic signal data. Because the intrusion detection dataset has temporal structure, this paper selects a one-dimensional convolutional neural network as the core of the classification model. In addition, because the intrusion detection dataset is high-dimensional, a single convolutional layer cannot fully extract its features, so we design an AlexNet-style improved one-dimensional convolutional neural network [28] (I1DCNN), whose structure is illustrated in Figure 2. It consists primarily of four convolutional layers, two max pooling layers, one flatten layer, one fully connected layer, and one softmax output layer. To extract features fully while limiting the loss of crucial information, two convolutional layers are followed by a max pooling layer, and this convolution-pooling sequence is then repeated. The flatten layer transforms the resulting multidimensional output into a one-dimensional array, which passes through the fully connected layer before the softmax function produces the final output.
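To make the layer order concrete, the framework-free sketch below traces how the tensor shape changes through a conv-conv-pool-conv-conv-pool-flatten-dense stack. The kernel size, pool size, filter counts, 'valid' padding, and unit stride are assumptions chosen for illustration, since the text does not specify them; the input length of 55 and the 13 output classes follow the selected feature count and the categories in Table 1.

```python
def trace_shapes(n_features=55, kernel=3, pool=2,
                 filters=(32, 32, 64, 64), n_classes=13):
    """Return (layer, output shape) pairs for the assumed layer stack."""
    length, channels = n_features, 1
    shapes = [("input", (length, channels))]

    def conv(name, out_channels):
        nonlocal length, channels
        length = length - kernel + 1   # 'valid' padding, stride 1 (assumed)
        channels = out_channels
        shapes.append((name, (length, channels)))

    def max_pool(name):
        nonlocal length
        length = length // pool        # non-overlapping pooling windows
        shapes.append((name, (length, channels)))

    conv("conv1", filters[0])
    conv("conv2", filters[1])
    max_pool("pool1")
    conv("conv3", filters[2])
    conv("conv4", filters[3])
    max_pool("pool2")
    shapes.append(("flatten", (length * channels,)))
    shapes.append(("dense+softmax", (n_classes,)))
    return shapes


for layer, shape in trace_shapes():
    print(f"{layer:14s} {shape}")
```

Under these assumed hyperparameters the sequence length shrinks at every convolution and halves at every pooling step, which shows how the flatten layer ends up with far fewer values than a fully connected network over the raw input would need.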

After the softmax output, this paper uses the Adam optimization algorithm to optimize the improved one-dimensional convolutional neural network. Adam essentially combines the momentum method with the RMSprop optimization algorithm. It dynamically adjusts the learning rate of each parameter using first-order and second-order moment estimates of the gradient, which keeps the parameter updates relatively stable [29]. Algorithm 2 illustrates the Adam optimization used in this paper.

Algorithm 2: The Adam optimization algorithm.

Input: Initial parameter θ and step size ε; exponential decay rates of the moment estimates ρ1 and ρ2, which govern the momentum term and the RMSprop term, respectively; a small constant δ for numerical stability; the feature-filtered dataset S′, from which a minibatch of u samples {x(1), ⋯, x(u)} with corresponding targets y(i) is drawn.

Step1: Initialize s = 0, r = 0, t = 0 // s is the first-moment variable, r is the second-moment variable, and t is the time step

Step2: while the stopping criterion is not met do

Step3: g ⟵ (1/u) ∇θ ∑i L(f(x(i); θ), y(i)) // Calculate the gradient

Step4: t ⟵ t + 1 // Update the time step

Step5: s ⟵ ρ1 s + (1 − ρ1) g // Update the first-moment estimate

Step6: r ⟵ ρ2 r + (1 − ρ2) g ⊙ g // Update the second-moment estimate

Step7: ŝ ⟵ s / (1 − ρ1^t) // Correct the bias of the first moment

Step8: r̂ ⟵ r / (1 − ρ2^t) // Correct the bias of the second moment

Step9: Δθ ⟵ −ε ŝ / (√r̂ + δ) // Calculate the update, element by element

Step10: θ ⟵ θ + Δθ // Update the parameters

Step11: end while

Output: The updated parameter θ′

With the Adam optimization algorithm, the running means of the gradient and of the squared gradient adapt the update of each parameter, which improves the classification performance of the improved one-dimensional convolutional neural network built after feature screening.
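The update rule of Algorithm 2 can be sketched in plain Python as follows. The bias-correction and update steps use the standard Adam formulas; the default hyperparameters, the constant `delta`, the fixed iteration count used as the stopping rule, and the quadratic test objective are assumptions chosen for illustration and are not taken from the experiments.

```python
import math


def adam_minimize(grad, theta, step=0.001, rho1=0.9, rho2=0.999,
                  delta=1e-8, n_steps=1000):
    """Run Adam on a list of parameters, given a gradient function."""
    theta = list(theta)            # work on a copy of the initial parameters
    s = [0.0] * len(theta)         # first-moment estimate
    r = [0.0] * len(theta)         # second-moment estimate
    for t in range(1, n_steps + 1):
        g = grad(theta)
        for j, g_j in enumerate(g):
            s[j] = rho1 * s[j] + (1 - rho1) * g_j
            r[j] = rho2 * r[j] + (1 - rho2) * g_j * g_j
            s_hat = s[j] / (1 - rho1 ** t)   # bias-corrected first moment
            r_hat = r[j] / (1 - rho2 ** t)   # bias-corrected second moment
            theta[j] -= step * s_hat / (math.sqrt(r_hat) + delta)
    return theta


def quadratic_grad(theta):
    # Gradient of (theta0 - 3)^2 + (theta1 + 1)^2, a toy objective.
    return [2 * (theta[0] - 3.0), 2 * (theta[1] + 1.0)]


solution = adam_minimize(quadratic_grad, [0.0, 0.0], step=0.1, n_steps=500)
print(solution)
```

Because each parameter is divided by the square root of its own second-moment estimate, parameters with large gradients take proportionally smaller steps, which is the per-parameter learning-rate adjustment described above.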

4. Experimental Results and Analysis

4.1. Experimental Environment

The experiments were run on a 64-bit Windows Server 2016 operating system with an Intel(R) Xeon(R) E5-2650 CPU. The programming language is Python 3.7.9, with PyCharm and Anaconda as development tools. TensorFlow (CPU version) is the primary deep learning framework, and Keras is used to build the network model. The sklearn and time libraries are also used.

4.2. Evaluation Indicators

To ensure the validity of the experiment, accuracy, precision, recall, F1-score, and the ROC curve are adopted as indicators of model performance. True positive (TP) is the number of positive cases correctly classified; false negative (FN) is the number of positive cases misclassified as negative; false positive (FP) is the number of negative cases misclassified as positive; and true negative (TN) is the number of negative cases correctly classified. The resulting confusion matrix is shown in Table 2.

| True class | Predicted positive | Predicted negative |
|---|---|---|
| Positive | TP | FN |
| Negative | FP | TN |
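The indicators can be computed from the four confusion matrix counts as sketched below, using the standard definitions of accuracy, precision, recall, and F1-score. The counts in the example are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for a single class treated as "positive".
metrics = classification_metrics(tp=90, fn=10, fp=5, tn=95)
print(metrics)
```

For a multiclass problem such as this one, the counts are obtained per class by treating that class as positive and all others as negative, and the per-class values are then averaged.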


4.3. Comparison Experiment with Different Numbers of Features

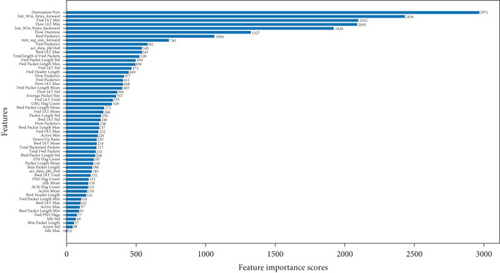

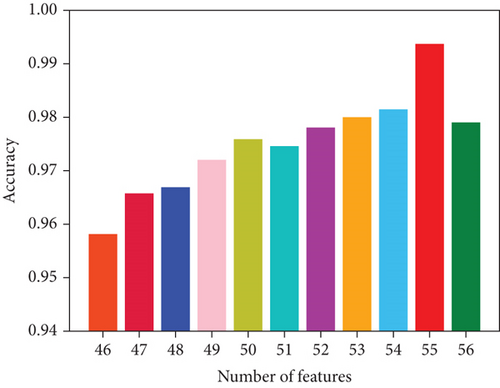

The number of features retained during screening has a significant impact on the experimental results. Retaining too few may discard crucial features, while retaining too many introduces redundant features that contribute little to the results. As the ranking of feature contribution scores in Figure 3 shows, 56 features contribute to the model to varying degrees. The preprocessed data are therefore screened using these 56 features, ranked by contribution score, in a series of comparison experiments whose results are shown in Figure 4. Accuracy is highest with 55 features, with a clear advantage over the other feature counts, and it gradually decreases as the number of features drops below 55. For this reason, 55 features were chosen as the final number of features for the experiment.

4.4. Model Results and Analysis

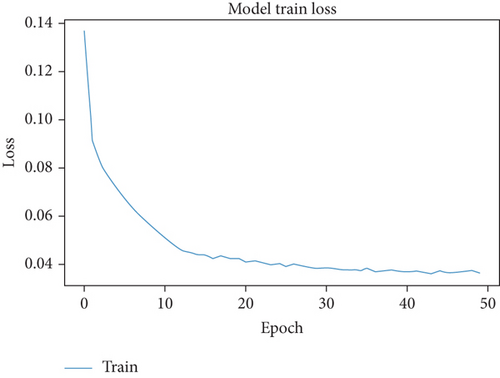

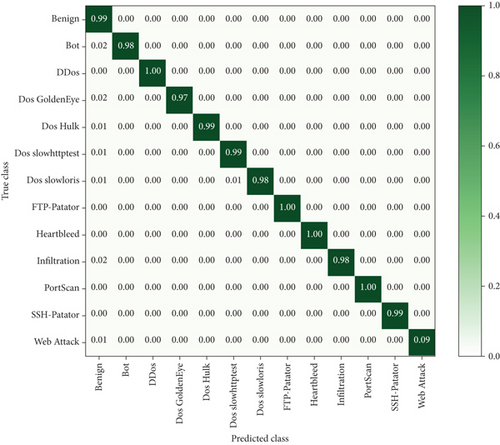

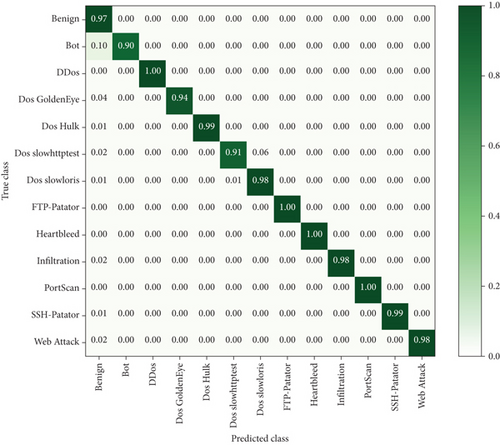

After feature importance selection, the preprocessed dataset was fed into the improved 1D convolutional neural network. The experimental results are shown in Figure 5. Once the number of iterations reaches 40, the results tend to be stable, so the number of epochs is set to 40. The confusion matrix obtained from the experiment is shown in Figure 6. The classification accuracy of every category is at least 90%: it is 100% for DDos, FTP-Patator, Heartbleed, and PortScan; 98% for Dos Hulk, Dos slowloris, Infiltration, SSH-Patator, and Web Attack; and 90%, 94%, and 91% for Bot, Dos GoldenEye, and Dos slowhttptest, respectively. In addition, the overall accuracy of the model reaches 99.36%. These results show that the FS-I1DCNN intrusion detection model proposed in this paper classifies each class of malicious traffic well.

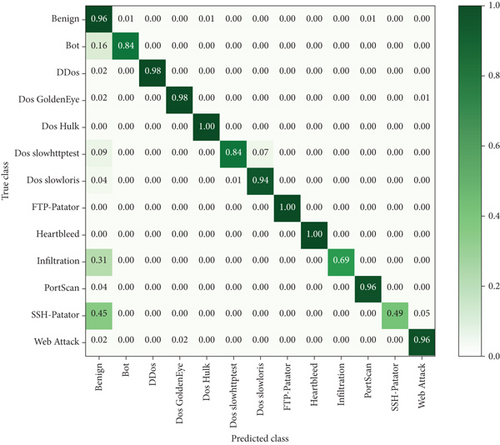

4.5. Comparison Experiment with I1DCNN and 1DCNN

To demonstrate the superiority of the FS-I1DCNN intrusion detection model, it is experimentally compared with 1DCNN and I1DCNN. The confusion matrices of these two models are shown in Figures 7 and 8, with overall accuracies of 96.42% and 98.69%, respectively. The I1DCNN model outperforms the 1DCNN model in classification accuracy for every category except Benign and Dos Hulk, for which the two are comparable. Compared with the I1DCNN model without XGboost feature selection, the FS-I1DCNN model proposed in this paper improves every category to some degree, and for a few categories, such as Bot and Dos slowhttptest, the improvement is about 8%. The FS-I1DCNN model thus shows a notable accuracy improvement for a few categories and a better classification effect overall. Additionally, the average training time per epoch is 903 seconds for FS-I1DCNN and 988 seconds for I1DCNN, a reduction of 85 seconds. These results demonstrate that the FS-I1DCNN intrusion detection model improves classification while reducing dimensionality and running time, which further enhances detection efficiency.

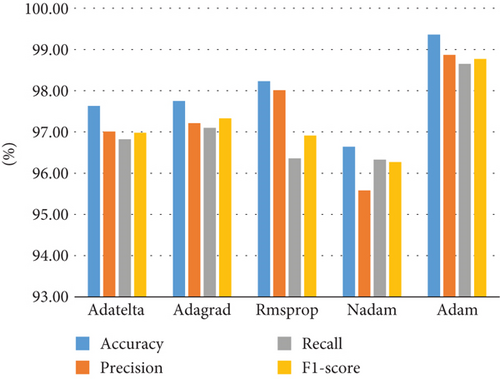

4.6. Comparative Experiment of Different Optimization Algorithms

Different optimization methods suit different problems and have different advantages, so the choice of optimizer affects the experimental results. Adam dynamically adjusts the learning rate of each parameter using the gradient, which enables adaptive learning and was expected to give a better classification effect. This paper therefore conducts comparative experiments on various optimization algorithms with the FS-I1DCNN intrusion detection model, and the outcomes are displayed in Table 3 and Figure 9. With the same learning rate, the Adam optimization algorithm achieves the highest accuracy, precision, recall, and F1-score. Its accuracy is roughly 1% to 3% higher than that of the Adadelta, Adagrad, RMSprop, and Nadam optimization algorithms, so Adam gives the best classification effect.

| Optimization algorithm | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| Adadelta | 0.9763 | 0.9701 | 0.9682 | 0.9698 |
| Adagrad | 0.9775 | 0.9721 | 0.9710 | 0.9733 |
| RMSprop | 0.9823 | 0.9801 | 0.9636 | 0.9691 |
| Nadam | 0.9664 | 0.9558 | 0.9633 | 0.9627 |
| Adam | 0.9936 | 0.9887 | 0.9865 | 0.9877 |
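As a quick check of the accuracy column in Table 3, the snippet below computes how many percentage points Adam gains over each of the other optimizers. The values are copied from the table; only the variable names are introduced here.

```python
# Accuracy values from Table 3.
accuracy = {
    "Adadelta": 0.9763,
    "Adagrad": 0.9775,
    "RMSprop": 0.9823,
    "Nadam": 0.9664,
    "Adam": 0.9936,
}

# Gap between Adam and every other optimizer, in percentage points.
gaps = {
    name: round((accuracy["Adam"] - value) * 100, 2)
    for name, value in accuracy.items()
    if name != "Adam"
}
print(gaps)
```

Every gap falls between 1 and 3 percentage points, with the smallest gap relative to RMSprop and the largest relative to Nadam.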

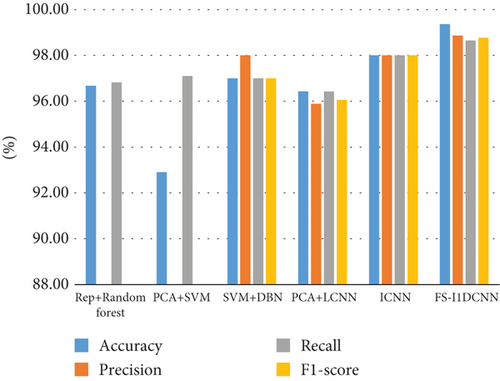

4.7. Comparison Experiment with Other Algorithms

To assess the effectiveness of the FS-I1DCNN intrusion detection model proposed in this paper, it was compared on the same dataset with other algorithmic models: random forest, SVM, DBN, LCNN, and ICNN. The results are shown in Table 4 and Figure 10. Compared with the other five models, the FS-I1DCNN model improves overall accuracy, precision, recall, and F1-score by an average of 4.36%, 1.57%, 2.21%, and 1.75%, respectively. All of its indicators are better than those of the other detection models, demonstrating the model's superior classification performance and applicability.

5. Conclusion

To address the problems with intrusion detection, this paper proposes a model based on FS-I1DCNN. After data preprocessing, the 55 features with the highest contribution are selected from the CIC-IDS-2017 dataset using the XGboost feature importance ranking method. These features are then fed into an improved one-dimensional convolutional neural network, which completes the final classification task. By using XGboost feature screening, the FS-I1DCNN intrusion detection model removes the dependence of conventional machine learning approaches on expert experience for feature extraction, enhances detection efficiency, reduces training time, and increases overall accuracy. The final experimental results demonstrate that the FS-I1DCNN intrusion detection model outperforms the other intrusion detection models across all evaluation indices, achieving at least 90% classification accuracy in every category and 99.36% overall accuracy. In the future, the model will be applied to actual network attack scenarios in order to further increase its detection effectiveness while maintaining high classification performance.

Conflicts of Interest

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Acknowledgments

This research was funded by the New Infrastructure and University Informatization Research Project (Grant no. XJJ202205017).

Open Research

Data Availability

The dataset used in this paper is publicly available and is described in reference [25].