Improved Distributed Multisensor Fusion Method Based on Generalized Covariance Intersection

Abstract

To address the multitarget tracking problem for distributed sensors with a limited detection range, a distributed GCI fusion tracking method based on complementary sensor measurements and Gaussian component correlation is proposed, building on probability hypothesis density (PHD) filtering theory. First, the sensing range of each sensor is extended by complementing its measurements. The multitarget density product is used to classify whether a measurement belongs to the intersection region of the detection ranges, and measurements in the local intersection region are complemented only once to reduce the computational cost. Second, each sensor runs a PHD filter separately and floods its filtering posterior to the neighboring sensors, so that each sensor obtains the posterior information of its neighbors. Subsequently, Gaussian components are correlated by distance division, so that Gaussian components corresponding to the same target fall into the same subset. GCI fusion is then performed on each correlated subset to complete the fusion state estimation. Simulation experiments show that the proposed method can effectively perform multitarget tracking in a distributed sensor network with a limited sensing range.

1. Introduction

The main task of multitarget tracking (MTT) technology is to detect targets in a cluttered environment and estimate their motion parameters in real time [1, 2]. Among the many existing multitarget tracking technologies, nearest neighbor (NN) [3], probabilistic data association (PDA) [4, 5], multiple hypothesis tracking (MHT) [6], and random finite set (RFS) based algorithms are the main methods. The first three have been widely used in the field of multitarget tracking. They use a distance criterion to partition the observation set through data association and convert the multitarget tracking problem into multiple independent single-target tracking problems. For relatively complex multitarget tracking problems, association errors are prone to occur, which degrades tracking performance. The PHD filtering method [7], based on random finite sets, provides a new solution to these problems. It rests on a strict mathematical description, and its biggest advantage over traditional multitarget tracking algorithms is that it avoids the data association calculation between observations and targets. With the help of particle filter (PF) technology and Gaussian mixture (GM) technology, the research team represented by Vo has obtained two approximate realizations of the PHD filter, namely, GM-PHD [8] and SMC-PHD [9]. Because the PHD filtering method has a solid mathematical foundation, reflects the essence of the target tracking problem, avoids the data association problem of traditional methods, and has lower computational complexity in complex multitarget tracking applications, it is used in more and more fields of application [8–19].

Multisensor information fusion combines the information obtained by multiple sensors to produce a more accurate description of the problem than a single sensor can provide. It is not a new concept. In a broad sense, human cognition through senses such as sight, hearing, smell, taste, and touch is essentially an information fusion process. With the emergence of new sensors, the rapid development of signal processing technology, and the great improvement in hardware computing performance, real-time data fusion can be performed more efficiently, so data fusion technology has been widely used. Compared with single-sensor systems, multisensor systems have several advantages. First, fusing the observations obtained by several sensors can improve the estimate of the target state: if the data are combined in an optimal way, the estimation accuracy improves statistically as the amount of data increases. Second, the observation quality can be improved by using the relative motion between sensors. For example, two sensors and the target form a triangle; if the relationship between the observation angles of the two sensors relative to the target is known, the target can be located by triangulation, which is widely used in commercial navigation and geological surveys. Third, multisensor systems extend temporal and spatial coverage: one sensor may cover places that other sensors cannot, and a sensor may detect objects during a period of time in which the other sensors cannot.

Sensor fusion technology can provide more effective information about targets in time and space. Multiple sensors cooperate with each other to track the targets and estimate their states and number. Information fusion is a key algorithm in multisensor networks, and distributed MTT algorithms, which are more fault-tolerant and flexible than centralized fusion frameworks, have received much attention recently [20–24]. Generalized covariance intersection (GCI) [25, 26] fusion theory, also known as exponential mixture density (EMD) [27], is an effective method for solving the distributed multisensor MTT fusion problem. GCI fusion is equivalent to finding the density that minimizes the sum of the information gains, measured by Kullback-Leibler divergences (KLD), from the local posteriors [28, 29], thus avoiding the double counting of common information [30]. The method has also been successfully applied to multisensor PHD filter fusion [31–33], multisensor CPHD filter fusion [34], and multisensor multi-Bernoulli filter fusion [35].

However, GCI fusion rules tend to keep only trajectories that are present in all local posteriors. This defect is exacerbated when sensors have different fields of view (FoV). Many methods have been proposed to handle the problems that a limited sensor field of view causes in generalized covariance intersection fusion. Based on the GM-PHD filter, Battistelli et al. proposed a simultaneous localization and mapping solution for sensors with different detection fields [36]. Vasic et al. modeled the uncertainty of the target in regions that the sensors cannot explore by initializing all local PHDs with a uniform intensity throughout the region [37]. Vasic et al. also proposed using the distance between Gaussian components to improve the GCI fusion algorithm [37], but this method overestimates the number of targets and causes false positives. Kai et al. proposed a method of supplementary measurements to expand the field of view [38]. More recently, several improved algorithms have introduced GCI fusion into labeled random finite set multitarget tracking methods [39–43]. Their feasibility has been demonstrated, but many problems remain, including parameter setting and inconsistent labels. Sensor fusion is not limited to object tracking; it can also be applied more widely, including to object detection and estimation.

In this paper, we propose the principle of complementary measurements to compensate for the limited detection range of sensors, which cannot obtain the measurement information of the whole scene. That is, the measurements outside the detection range of a sensor are complemented by other sensors. The multitarget density product is used to classify whether the measurements belong to the intersection region of the detection ranges, and only the measurements that do not belong to the intersection region are complemented, which avoids repeated complementation of the same region and reduces the amount of computation. Directly applying GCI fusion to Gaussian components that represent different targets may lead to large tracking errors and high computational complexity. A distance correlation GCI fusion method is therefore used: after measurement complementation, the Gaussian components are correlated by distance division so that components that may correspond to the same target are associated into the same subset. Subsequently, GCI fusion is performed on the different correlated subsets, and the fusion state estimation is completed. The performance of the fusion algorithm is verified in simulation scenarios.

The rest of the paper is organized as follows. Section 2 introduces the background of the algorithm, including PHD filtering, limited field-of-view sensors, and GCI fusion rules. Section 3 analyzes the reasons for GCI fusion mismatch. Section 4 introduces the solution of this paper, including measurement complementarity and the improved GCI fusion method based on a distance threshold. Section 5 verifies the effectiveness of the algorithm in a multitarget tracking environment by comparing the improved algorithm with several traditional algorithms. The Abbreviations section lists the acronyms used in this article.

2. Background

2.1. PHD Filtering

Let Xk and Zk denote the multitarget state set and the measurement set at time k. Dk|k − 1(x|Z(k)) represents the density function corresponding to the multitarget posterior density at time k. It is the first-order moment approximation of the multitarget posterior density and is usually called the PHD in target tracking theory based on random finite sets.
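As a minimal illustration of what a PHD represents, the sketch below evaluates a one-dimensional Gaussian-mixture PHD and its integral. It relies on the standard property that the integral of the PHD over a region gives the expected number of targets in that region, so for a Gaussian mixture the total is the sum of the weights. The function names and the component values are hypothetical and are not taken from the paper.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a scalar Gaussian N(x; mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def phd_intensity(x, components):
    """Evaluate a Gaussian-mixture PHD at x; components are (weight, mean, var)."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in components)

def expected_target_count(components):
    """Integral of a Gaussian-mixture PHD over the whole space: the sum of its weights."""
    return sum(w for w, _, _ in components)

# Hypothetical 1-D mixture: two well-supported targets and one weak component.
components = [(0.95, -100.0, 25.0), (0.90, 250.0, 25.0), (0.05, 0.0, 100.0)]
n_hat = expected_target_count(components)  # expected number of targets
```

The intensity peaks near the strongly weighted means, while the weak component contributes little both to the intensity and to the expected count.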

2.2. Sensor Network Field of View

Each sensor s has a detection probability that is defined over its limited field of view. Each sensor usually has a different field of view due to its type, location, and orientation.

Consider a sensor network consisting of sensors with limited fields of view, in which information sharing is required because no sensor can detect the whole scene. Denote by Sj(t) the set of sensors that can reach sensor j after t communication steps.
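The set Sj(t) can be computed by a breadth-first expansion over the communication graph. The sketch below is one possible reading, assuming bidirectional links and assuming that sensor j itself belongs to Sj(0); the function name, the dictionary representation, and the example network are hypothetical.

```python
def reachable_sensors(neighbors, j, t):
    """Return the sensors whose information can reach sensor j within t communication steps.

    neighbors maps each sensor id to the set of sensors it communicates with directly
    (links are assumed bidirectional). Sensor j itself is included, which is the t = 0 case.
    """
    reached = {j}
    frontier = {j}
    for _ in range(t):
        # Expand by one hop and keep only sensors not seen before.
        frontier = {n for s in frontier for n in neighbors[s]} - reached
        if not frontier:
            break
        reached |= frontier
    return reached

# Hypothetical line network 1 - 2 - 3 - 4.
neighbors = {1: {2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
```

In this line network, sensor 1 needs three communication steps before information from sensor 4 reaches it, which is why the number of flooding iterations matters for network-wide coverage.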

2.3. GCI Fusion Rules

A key technology for multitarget tracking with distributed sensor networks is PHD information fusion among multiple sensors. Optimal PHD fusion among different sensors is difficult to achieve because the common information among the sensors is usually unknown, especially in large multisensor networks. The generalized covariance intersection fusion algorithm is introduced next.

The GCI coefficients (fusion weights) are nonnegative and sum to one.
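For two Gaussian densities, the GCI rule reduces to the well-known covariance intersection formulas: the fused information (inverse variance) is the weighted sum of the local information values, and the fused mean is the correspondingly weighted combination of the local means. The sketch below shows the scalar case only; the function name, the weight symbol omega, and the numbers are assumptions made for illustration and do not reproduce the paper's equations.

```python
def gci_fuse_gaussians(m1, p1, m2, p2, omega):
    """GCI (covariance intersection) fusion of two scalar Gaussians.

    The fused density is proportional to N(m1, p1)**omega * N(m2, p2)**(1 - omega),
    where omega in [0, 1] is the weight of the first sensor.
    """
    if not 0.0 <= omega <= 1.0:
        raise ValueError("omega must lie in [0, 1]")
    info1 = omega / p1          # weighted information of sensor 1
    info2 = (1.0 - omega) / p2  # weighted information of sensor 2
    p = 1.0 / (info1 + info2)   # fused variance
    m = p * (info1 * m1 + info2 * m2)  # fused mean
    return m, p

# Two equally trusted local estimates with the same variance.
fused_mean, fused_var = gci_fuse_gaussians(0.0, 4.0, 2.0, 4.0, 0.5)
```

With equal variances and omega = 0.5, the fused mean lies midway between the local means and the fused variance is not reduced, which reflects the conservative nature of the rule when the common information is unknown.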

3. Analysis of GCI Fusion Issues

For most single sensors, limitations in the sensor's own performance, such as detection capability, detection range, and transmission rate, mean that the scene information it provides is incomplete and of a single data type. In addition, a single sensor is susceptible to complex environments, which produce noise and clutter, so it cannot provide accurate target information. To make up for these defects, multiple sensors are used to observe the targets in the scene at the same time, so that each target generates at most one measurement on each sensor. Adjacent sensors then share information through data communication, and fusion estimation is performed at the fusion center.

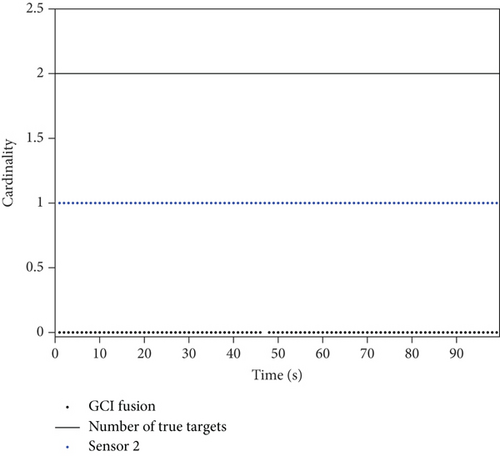

However, the defects of the individual sensors sometimes lead to poor fusion matching results in the multisensor fusion method. As analyzed earlier, when the GCI fusion algorithm is applied to a multisensor network in which each sensor has a limited detection field of view, the number of targets is reported incorrectly. This section uses a simulation scenario to verify this behavior of the GCI fusion algorithm.

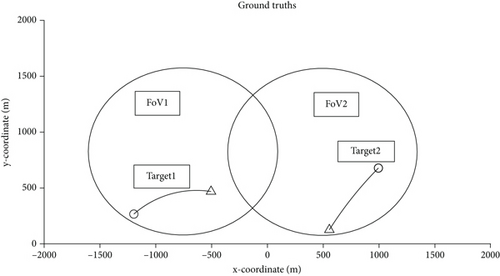

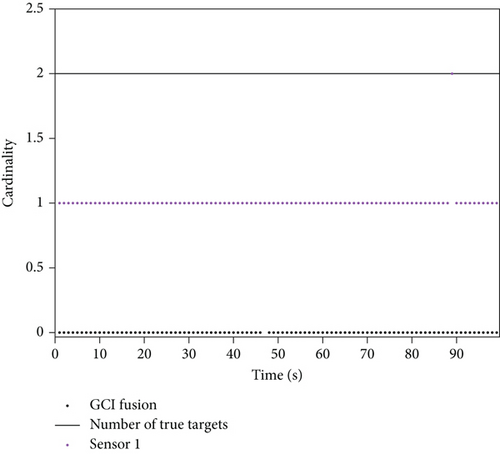

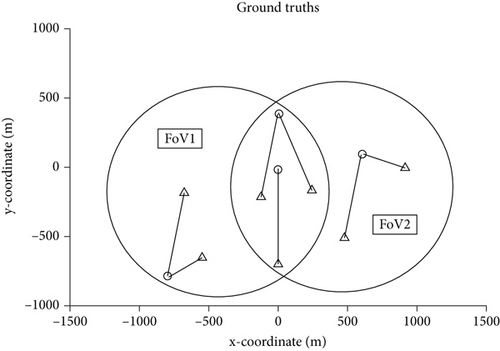

In the multisensor network, the number of sensors is set to 2, the tracking method is GM-PHD filtering, the probability of target survival is 0.99, the detection probability within the detectable range of a sensor is 0.98, and the detection probability outside the detection range is 0. The observation area is [-2000, 2000] × [0, 2000] m². For simplicity, two targets are set up, each performing a circular motion. The schematic diagram of multisensor network tracking is shown in Figure 2, where ∘ marks the starting position of a target, Δ marks its end position, target 1 is in the field of view FoV1 of sensor 1, target 2 is in the field of view FoV2 of sensor 2, and neither target is in the intersection area of the fields of view.

The estimated number of targets is shown in Figure 3. As can be seen from Figures 2 and 3, each sensor can detect only one target, and GCI fusion causes both targets to be lost. The reason is that the detection range of a single sensor is limited, so a single sensor detects only the targets within its own detection area and loses the targets outside it. In the fusion process, the weights that the two sensors assign to the Gaussian components corresponding to the same target differ too much, so the fusion algorithm fails and the target is lost.

The simulation results also show that the traditional GCI fusion algorithm can effectively fuse the Gaussian components of different sensors corresponding to the same target only when the target is in the intersection of the detection areas of the multisensor network. If only some of the sensors can detect the target, that is, when the target is in a non-intersection detection area, the traditional GCI fusion algorithm inevitably misreports the target number, which leads to the loss of the target. The essential reason is that the GCI fusion algorithm fuses the weights that each sensor assigns to the Gaussian components of the same target. If a sensor cannot detect the target, its Gaussian component weight is too small, which affects the final state extraction and results in the loss of the target.
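The weight collapse described above can be illustrated numerically. In GCI fusion of Gaussian mixtures, the fused weight of a component pair contains the weighted geometric mean of the two local weights. The sketch below keeps only that factor and omits the scaling term that comes from the product of the Gaussians, so it is a simplification rather than the paper's fusion formula. The function name, the extraction threshold of 0.5, and the weight values are hypothetical.

```python
def fused_weight(w1, w2, omega=0.5):
    """Geometric-mean part of the GCI weight fusion (Gaussian-product scaling omitted)."""
    return (w1 ** omega) * (w2 ** (1.0 - omega))

EXTRACTION_THRESHOLD = 0.5  # hypothetical threshold used for state extraction

# Target inside the FoV intersection: both sensors assign a high weight.
in_overlap = fused_weight(0.98, 0.97)

# Target seen only by sensor 1: sensor 2 holds at most a negligible weight for it.
outside_overlap = fused_weight(0.98, 1e-6)
```

Even though sensor 1 is almost certain that the target exists, the fused weight in the second case falls far below the extraction threshold, so the target is dropped after fusion.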

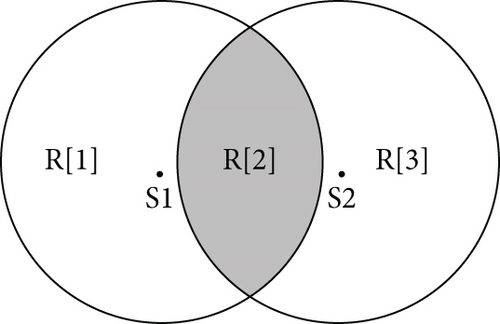

The simulation shows that each sensor can generate measurements only within its own limited detection range and cannot detect all targets. To detect all targets, the sensors must share their detection information with each other. However, if all the measurement information from one sensor is shared directly with another sensor, the information in the intersection region of the two detection ranges is reused, which in turn leads to combinatorial explosion. As shown in Figure 1, if the information is shared between Sensor 2 and Sensor 1, the information in the R[2] region is shared repeatedly, which increases the computational burden for the measurement set of the intersection region.

4. Solutions

4.1. Complementary Measurements

For a single sensor to detect and track targets over the whole tracking scene, the sensors must share information, with the measurement information from one sensor communicated directly to another. However, to avoid reusing measurement information, the intersecting and non-intersecting areas of the sensor detection ranges must be distinguished.

Consider the measurement sets of all sensors at time k, where the sth sensor generates its measurement set within its field of view FoVs and Ms,k is the number of measurements of the sth sensor at time k. When a sensor supplements another sensor with measurements, it should supply only the measurements outside the intersection area rather than all of its measurements, because sharing everything would reuse information and increase the computational burden.

Equation (15) shows that, in the absence of complex interference, a nonzero value of the extended two-sensor PHD product indicates a location in the intersection region of the detection ranges of the two sensors. This property can be used to separate the PHD function into the part inside the intersection region and the part outside it: the PHD product identifies the measurement information common to the two sensors and distinguishes it from the rest.

Once the measurements have been complemented, each sensor holds all the measurements in the sensor network, and the measurements located in the intersection area are not reused, which greatly reduces the computational burden. Moreover, with complementary measurements, even a newborn target that appears within the range of a single sensor can be detected in time, because measurement sharing propagates the newborn target information to every sensor in the network, effectively improving the tracking performance of the whole sensor network.
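The effect of complementing rather than fully sharing measurements can be sketched as follows. Note the swapped-in technique: the paper classifies the intersection region through the PHD product, whereas this sketch uses a plain geometric test against a circular field of view, which is only a stand-in for illustration. The sensor positions, the measurement values, and the function names are hypothetical; the 700 m radius matches the sensing radius used in the simulations.

```python
import math

def in_fov(z, center, radius):
    """True if measurement z = (x, y) lies inside a circular field of view."""
    return math.hypot(z[0] - center[0], z[1] - center[1]) <= radius

def complement_measurements(own, other, own_center, own_radius):
    """Keep the sensor's own measurements and add only those from the other
    sensor that fall outside the sensor's own field of view."""
    extra = [z for z in other if not in_fov(z, own_center, own_radius)]
    return list(own) + extra

# Hypothetical layout: two sensors 800 m apart, each with a 700 m sensing radius.
center_1, radius = (-400.0, 0.0), 700.0
z1 = [(-600.0, 100.0), (0.0, 50.0)]   # measurements of sensor 1
z2 = [(10.0, 45.0), (800.0, -200.0)]  # measurements of sensor 2

complemented_1 = complement_measurements(z1, z2, center_1, radius)
shared_1 = z1 + z2  # full sharing, for comparison
```

Here the measurement (10, 45) from sensor 2 lies inside the field of view of sensor 1 and is therefore not added again, whereas full sharing would pass it to the filter a second time next to sensor 1's own measurement (0, 50) of the same region.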

4.2. Gaussian Mixture PHD Filtering GCI Fusion

Each Gaussian component after fusion is characterized by its mean, covariance, and weight.

The communication method in the literature [44–46] converges faster and can effectively cope with scenarios in which the limited fields of view do not completely overlap [47]. Because individual sensors have a limited detection field of view and neighboring sensors need to share information, this algorithm uses that method for intersensor communication.

When t = 0, .

After the fusion of all associated subsets on sensor s is completed, each subset is represented by a new Gaussian component. The state is then extracted on this sensor, and the filtered, updated Gaussian components are returned for use in the next iteration.

The distributed sensor measurements complementary Gaussian component correlation GCI fusion tracking method is summarized as Algorithm 1:

Algorithm 1

- Input: filtered Gaussian components at time k − 1; the measurement set at time k.
- For s = 1, ⋯, S
  - Perform measurement complementation according to (12)–(14) to obtain the complemented measurement set.
- End
- For s = 1, ⋯, S
  - Perform Gaussian component association according to (29) to obtain the associated subsets, where Cs,k denotes the number of subsets after association.
  - For c = 1, ⋯, Cs,k
    - Calculate the Gaussian component Ds,k(x) according to equation (31).
    - Calculate the estimated number of targets according to equations (32) and (33).
  - End
  - The new Gaussian component is obtained at the sensor after fusion.
- End
- Perform state extraction for s = 1, ⋯, S to obtain Xs, and return the state estimate and the filtered Gaussian components.
- Output: Gaussian components and Xs for each sensor after the filtering update.
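The association step of Algorithm 1 groups Gaussian components that are close to each other so that each subset can be fused separately. The sketch below shows one simple way to do this: a greedy pass that compares each component mean with the first member of every existing subset. The Euclidean metric, the threshold value, the tuple layout, and the function name are all assumptions made for illustration; the distance measure and the rule of equation (29) may differ.

```python
import math

def associate_components(components, d_max):
    """Greedy distance-based grouping of Gaussian components.

    components is a list of (sensor_id, weight, (x, y)) tuples. A component joins
    the first subset whose reference mean (its first member) is within d_max;
    otherwise it starts a new subset.
    """
    subsets = []
    for comp in components:
        mean = comp[2]
        for subset in subsets:
            reference_mean = subset[0][2]
            if math.dist(mean, reference_mean) <= d_max:
                subset.append(comp)
                break
        else:
            # No existing subset is close enough: open a new one.
            subsets.append([comp])
    return subsets

# Hypothetical posteriors collected from two sensors.
components = [
    (1, 0.90, (100.0, 200.0)),
    (2, 0.80, (103.0, 198.0)),
    (1, 0.70, (-500.0, 50.0)),
    (2, 0.85, (-497.0, 52.0)),
    (2, 0.60, (900.0, 900.0)),
]
subsets = associate_components(components, d_max=10.0)
```

The first two subsets each contain one component from every sensor and are candidates for GCI fusion, while the last subset holds a component seen by a single sensor and is kept rather than being suppressed by a near-zero weight from the other sensor.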

5. Simulation and Experimental Results

5.1. Simulation Parameter Settings

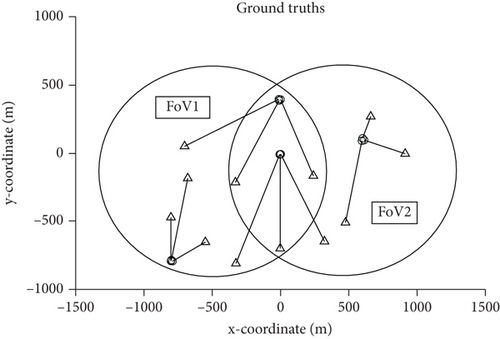

In the multisensor network, the detection field of each sensor is limited, and each sensor runs a GM-PHD filter. The detection area of the multisensor network is set to [-1500, 1500] × [-1000, 1000] m², in which a total of 6 targets appear. The 6 targets appear at different times and may originate from 4 different positions. The number of targets is therefore time-varying, and each target moves in a straight line at constant speed. In the tracking performance comparison, the traditional GCI-GM-PHD algorithm and the individual GM-PHD algorithms are compared with the improved algorithm of this paper.

Here, xk is the target state, c > 0 is the cutoff parameter with dc(x, y) = min(c, ‖x − y‖), and 1 ≤ p < ∞ is the distance sensitivity parameter. In the simulations, c = 200 and p = 2. A smaller OSPA distance indicates a smaller error in the multitarget state estimate.
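The OSPA distance can be computed directly from its standard definition, as in the sketch below, which uses the simulation values c = 200 and p = 2 as defaults. The optimal assignment is found by brute force over permutations, which is practical only for small sets; a dedicated assignment solver would be the usual choice for larger problems. The function name and the argument layout are assumptions.

```python
import math
from itertools import permutations

def ospa(X, Y, c=200.0, p=2):
    """OSPA distance between two finite sets of points (brute-force assignment).

    X and Y are lists of coordinate tuples. c is the cutoff and p the order.
    """
    if len(X) > len(Y):
        X, Y = Y, X  # ensure |X| <= |Y|
    m, n = len(X), len(Y)
    if n == 0:
        return 0.0  # both sets are empty
    # Best assignment of the m points of X to distinct points of Y.
    best = min(
        sum(min(c, math.dist(x, Y[j])) ** p for x, j in zip(X, perm))
        for perm in permutations(range(n), m)
    )
    # Each unassigned point of Y is penalized with the cutoff c.
    return ((best + (c ** p) * (n - m)) / n) ** (1.0 / p)

truth = [(0.0, 0.0), (1000.0, 0.0)]
estimate = [(0.0, 0.0)]  # one target missed
d = ospa(truth, estimate)
```

The example shows how a cardinality error is penalized: the missed target contributes the full cutoff c, even though the remaining target is estimated exactly.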

5.2. Scenario 1: Complementary Verification of Multisensor Measurements

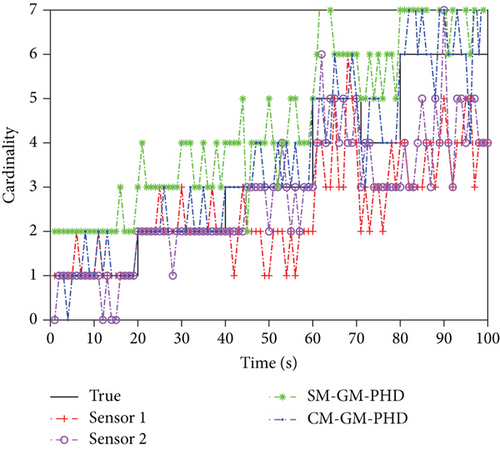

To verify the improvement in tracking performance of the proposed complementary measurement method (CM-GM-PHD) relative to the method that shares all measurements (SM-GM-PHD), filtered tracking is performed by a single sensor. This multitarget tracking scenario has a clutter rate λ = 90, a survival probability ps,k = 0.99, and a detection probability pD,k = 0.95 within the sensor's effective field of view. Figure 4 shows the true trajectories of the targets and the limited detection ranges of the sensors, all of which have the same performance with a sensing radius of 700 m.

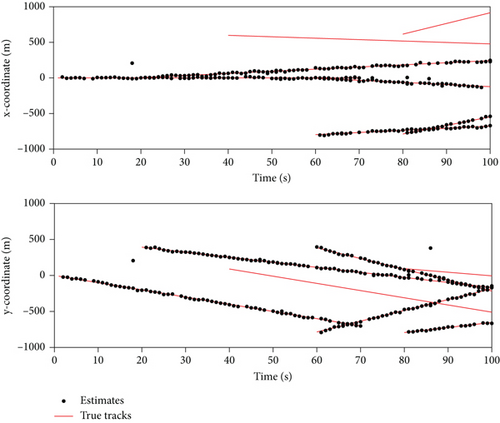

Figure 5 illustrates single-sensor tracking without measurement sharing; Figures 5(a) and 5(b) show the tracking results of sensor 1 and sensor 2, respectively. Consistent with Figure 3, the limited detection field of view causes sensor 1 and sensor 2 to lose two targets each, resulting in a dramatic degradation of tracking performance.

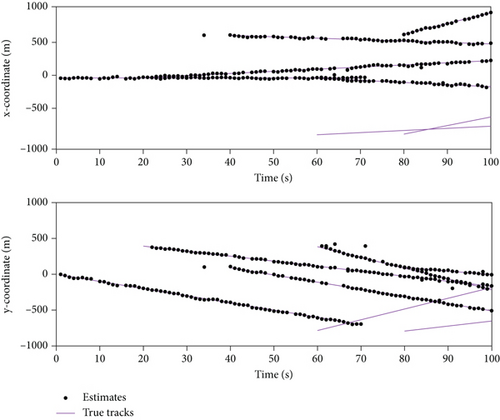

Figures 6(a) and 6(b) show the tracking results after the measurements are complemented and after all measurements are shared, respectively. The comparison shows that a single sensor can detect and track all the targets in either case, and the tracking performance is greatly improved. Figure 6(b) shows that sharing all measurements reuses the measurements in the intersection area of the sensor detection ranges, which produces many redundant Gaussian components containing a lot of clutter. After the pruning and merging step is performed by the controller, clutter is more likely to be regarded as a real target, so the number of false targets also increases, as reflected in the subsequent target number estimation.

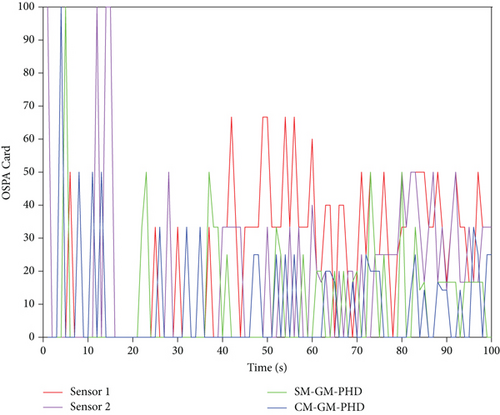

Figure 7 compares the tracking error and the target number estimation. Figures 7(a)–7(c) show that, once measurements are exchanged between sensors, all targets in the field of view can be tracked and the tracking error is much reduced. Figure 7(d) shows that sharing all measurements overestimates the number of targets because the measurements in the intersection region are duplicated, whereas complementing the measurements gives a target number estimate that is closer to the true value.

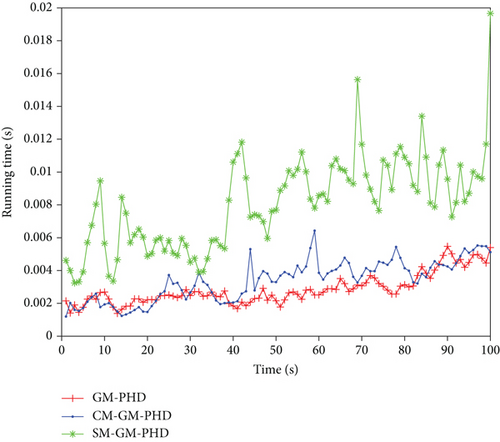

Figure 8 shows that the complementary measurement method is significantly more computationally efficient than the measurement sharing method, because it avoids duplicate information sharing in the intersection region.

5.3. Scenario 2: Field-of-View Complementary Gaussian Component Correlation GCI Fusion Performance Analysis

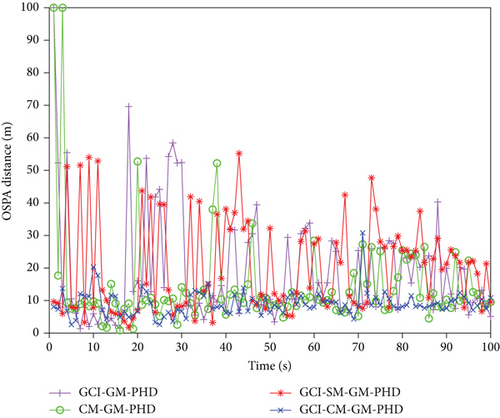

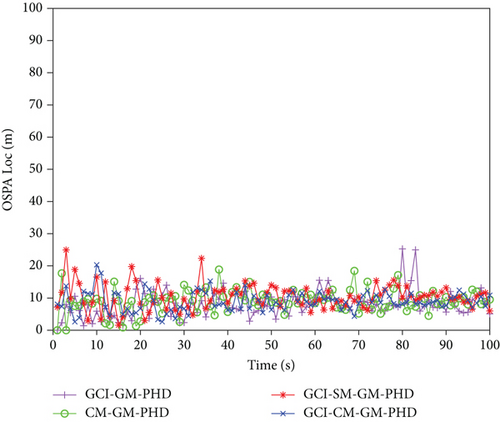

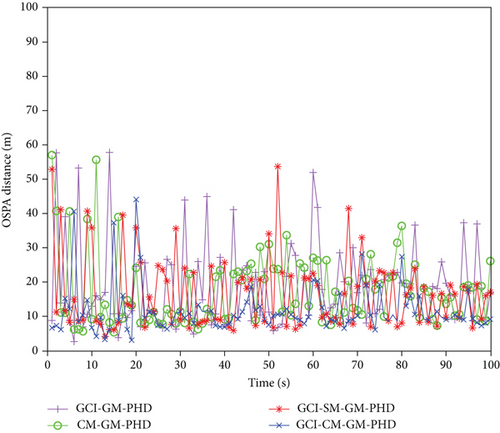

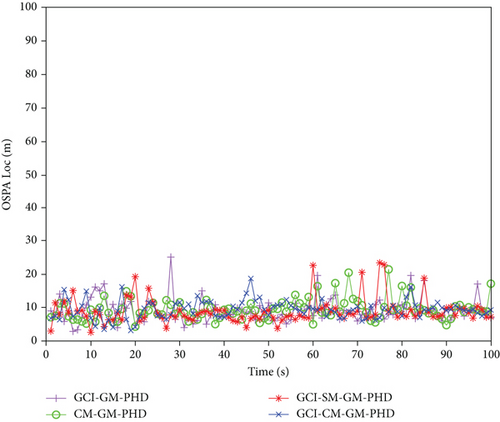

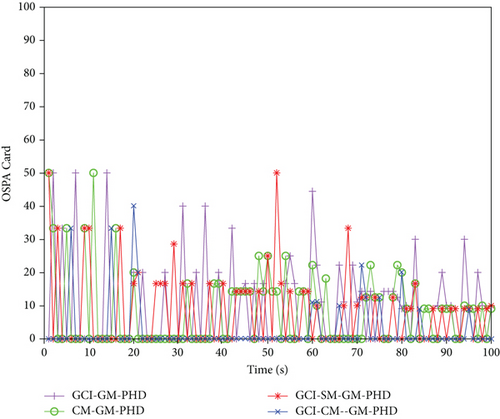

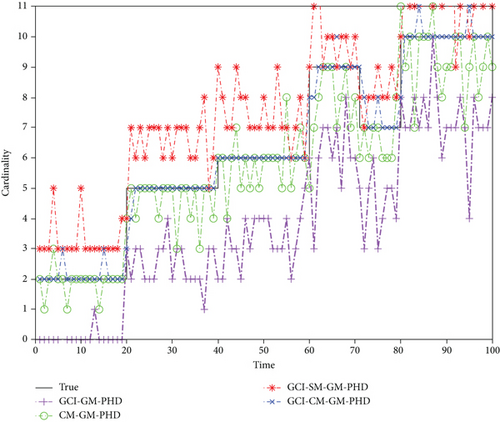

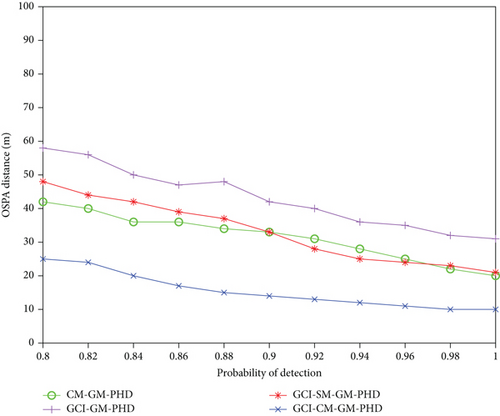

In the distributed multisensor network with limited fields of view, the maximum number of communication iterations is set to T = 3 and the threshold to Dmax = 4; the other settings are the same as in Scenario 1. Four methods are compared. The first is the measurement-complementary Gaussian component correlation GCI fusion method (GCI-CM-GM-PHD), which is the algorithm proposed in this paper. The second is the GCI fusion method in which all measurements are shared (GCI-SM-GM-PHD). The third is direct GCI fusion estimation of the multisensor information without measurement sharing. The fourth is the measurement complementary method without fusion (CM-GM-PHD).

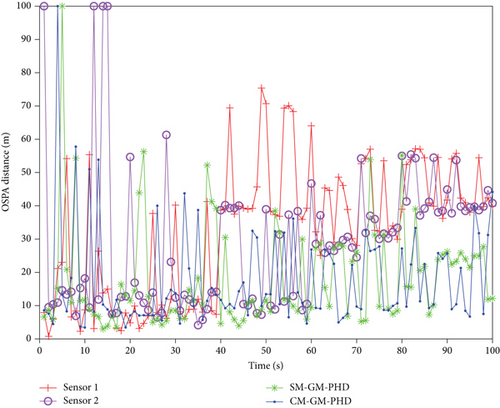

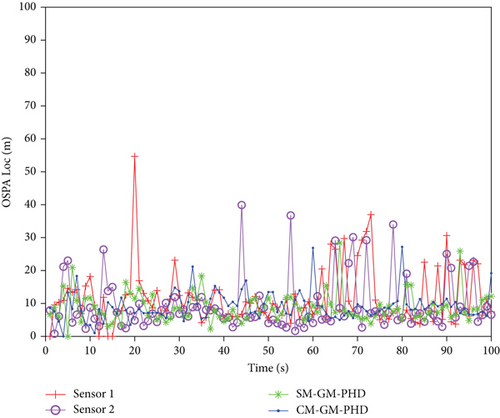

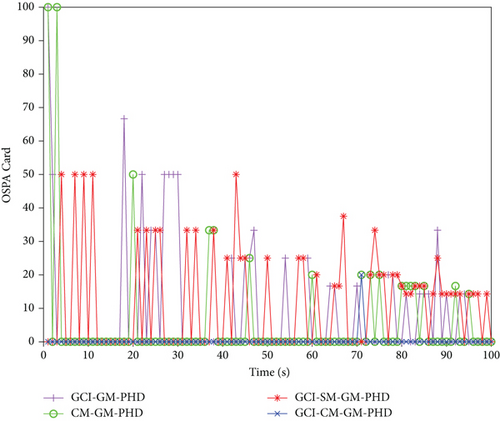

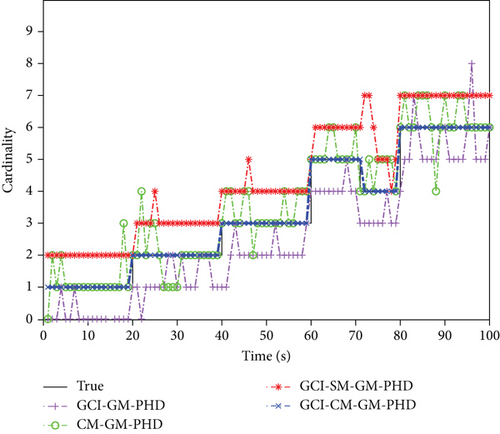

Figure 9 shows the simulation results of the four methods for target tracking. Figures 9(a)–9(c) show the multitarget OSPA error, the OSPA location error, and the cardinality estimation error, respectively. Figures 9(a) and 9(b) show that the GCI-CM-GM-PHD tracking error is lower: the GCI fusion method increases the fault tolerance of the PHD filter and merges the Gaussian components of the same target through the distance threshold, which greatly reduces the tracking error. The conventional GCI fusion method, on the other hand, loses tracked targets because it lacks the complementary measurements, which is the main reason for its larger error. Figure 9(c) shows that the cardinality estimation error of GCI-SM-GM-PHD is higher than that of the GCI-CM-GM-PHD algorithm, because the GCI-CM-GM-PHD algorithm uses the Gaussian component product to identify the Gaussian components in the intersection area of the sensor detection ranges and thereby avoids using them repeatedly. In this way, after the pruning and merging step and GCI fusion, the probability of clutter being regarded as a real target is greatly reduced, and the number of targets can be estimated more accurately. The difference in target number estimation can be seen in Figure 9(d).

5.4. Scenario 3: Simulation Experiment (the Number of Targets Increases)

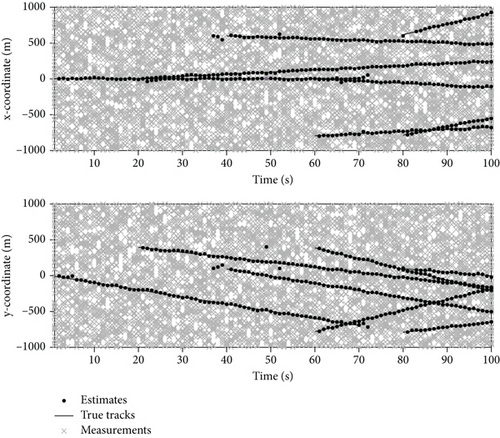

In this section, more targets enter the multitarget tracking scene. A total of 12 targets appear at different times, so the number of targets is time-varying. At most 10 targets exist in the scene at the same time, and the average number of clutter points per unit volume is set to 30. The actual multitarget tracking scene and the filtered results of each algorithm are shown in Figures 10 and 11.

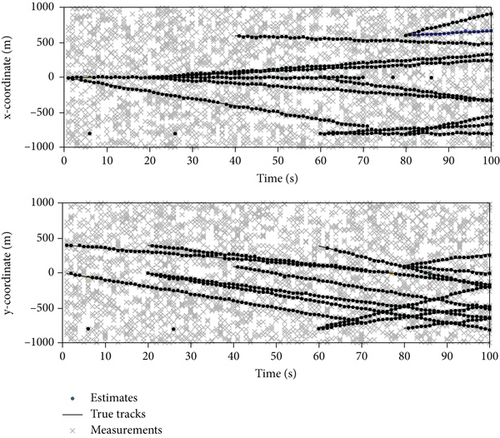

The target tracking results are shown in Figure 10(b), and clear tracks are obtained. Because of the clutter in the scene, the filtering algorithm often mistakes clutter points with larger weights for real targets during state extraction, which leads to some noise in the tracking results. When a clutter point is regarded as a real target, however, the fusion algorithm filters it out through the distance threshold.

Figures 11(a) and 11(b) show that the GCI-CM-GM-PHD algorithm adapts to this scenario and achieves the best performance in terms of OSPA error. Figures 11(c) and 11(d) show that the GCI-CM-GM-PHD algorithm performs better than the other three algorithms, which also indirectly demonstrates the effectiveness and stability of the algorithm in this scenario. The number estimation shows that the single-sensor CM-GM-PHD algorithm mistakes clutter for real targets, because it has difficulty adapting to a multitarget environment with dense clutter, and therefore overestimates the number of targets. In the traditional GCI-GM-PHD algorithm, the limited fields of view of the sensors produce large weight differences between sensors during fusion, so targets are lost and the estimated number of targets is low. In GCI-SM-GM-PHD, too many measurements are reused, so the estimated number of targets is higher than the true value.

To verify the stability of the filtering, the final simulation experiment summarizes the OSPA distance error of each algorithm in this scene under varying clutter rates and varying detection probabilities. Figures 12(a) and 12(b) show that the algorithm in this paper remains highly stable under different clutter rates and different detection probabilities, indicating that it performs better in complex multitarget tracking scenarios.

The simulation comparisons in the above scenarios show that the tracking performance of the proposed algorithm is significantly better than that of the traditional algorithms considered. The algorithm improves the robustness of distributed multisensor networks for multitarget tracking in dense clutter while maintaining computational efficiency, which demonstrates its effectiveness.

6. Conclusion

For the distributed multisensor multitarget tracking problem with a limited detection field of view, this paper proposes a distributed field-of-view complementary Gaussian component correlation GCI fusion tracking method. Building on traditional GCI-GM-PHD filtering, the algorithm complements the measurements in the field of view, and the simulation experiments demonstrate its advantages in computational efficiency and tracking accuracy. Fusion after distance correlation of the Gaussian components further increases the tracking accuracy and stability of the algorithm, and the number of targets is estimated more accurately. The simulation experiments in this paper focus mainly on targets in linear motion; extending the algorithm to more general scenarios, such as maneuvering targets, is left for future work. In addition, the study can be extended to investigate spoofing attack to track estimation of multitarget states [49].

Abbreviations

- MTT: Multitarget tracking
- NN: Nearest neighbor
- PHD: Probability hypothesis density
- GM: Gaussian mixture
- SMC: Sequential Monte Carlo
- EMD: Exponential mixture density
- KLD: Kullback-Leibler divergences
- CPHD: Cardinalized probability hypothesis density
- GCI: Generalized covariance intersection
- GCI-GM-PHD: Generalized covariance intersection Gaussian mixture probability hypothesis density
- CM-GM-PHD: Complementary measurement GM-PHD
- SM-GM-PHD: Sharing measurement GM-PHD
- GCI-CM-GM-PHD: Generalized covariance intersection complementary measurement GM-PHD
- GCI-SM-GM-PHD: Generalized covariance intersection sharing measurement GM-PHD

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

The authors would like to thank Air Force Engineering University and the Shaanxi Province Natural Science Basic Research Program (2022JQ-679).

Open Research

Data Availability

The data used to support the findings of this study are included within the article.