[Retracted] Intelligent Vehicle Visual Navigation Algorithm Based on Visual Saliency Improvement

Abstract

The automobile has gradually become an indispensable tool for daily travel and transportation. Reducing the traffic accident rate and improving road traffic safety is a global issue of common concern to policymakers, scholars, researchers, and other practitioners in the field of transportation. In this paper, drawing on a broad literature review and relevant model construction, and building on the basic theory of visual navigation, intelligent vehicle theory, and visual saliency improvement theory, intelligent vehicle visual navigation with improved visual saliency is studied in depth using feature point tracking algorithms (three feature point methods and one optical flow method). It is concluded that the overall performance of the intelligent vehicle with improved visual saliency is better than that of the ordinary vehicle. The following directions for algorithm improvement are also discussed: acquisition of visual saliency images; continuous enhancement of the visual saliency of images; and reasonable application of filtering algorithms.

1. Introduction

Automobiles have gradually become an indispensable tool for daily travel and transportation, and their convenience and efficiency have greatly improved how we work and live. At the same time, however, traffic congestion and traffic accidents have arisen, and the trend is worsening year by year. These road traffic problems have attracted a high degree of concern. According to statistics, over the past 10 years the total number of traffic accidents worldwide has reached 2 million per year, and the number of people injured, disabled, or killed continues to rise. Improving road traffic safety is therefore a global issue that deserves common attention and is of concern to politicians, traffic scholars, researchers, and other practitioners around the world.

To improve traffic efficiency and reduce traffic accidents, intelligent transportation systems have emerged and developed rapidly, thanks to the maturing of machine vision, sensor technology, artificial intelligence, automatic control, and related technologies. As the foundation and an important part of the intelligent transportation system, intelligent vehicles are receiving growing attention and are of great practical importance for improving traffic safety and operational efficiency. According to a study published by the McKinsey Global Institute, smart vehicles are predicted to be one of 12 disruptive technologies that may improve people’s daily lives, business models, and the world economy in the future [1]. The study also states that by 2025 the value of smart cars is expected to reach $200 billion to $1.9 trillion. For China, the “Technology Roadmap for Key Areas of Made in China 2025”, released in October 2015 by China’s National Advisory Committee on Manufacturing Power Construction Strategy, points out that future smart cars can not only significantly improve traffic safety, relieve traffic congestion, improve social efficiency, and support energy conservation and emission reduction, but also promote the automotive, electronics, and communication industries, which is of decisive strategic significance for China’s industrial transformation and upgrading [2]. Since March 2020, “new infrastructure” development has become a buzzword for the economy and people’s livelihood. Intelligent transportation and autonomous driving are among its closely related application directions, which highlights the broad application prospects of intelligent vehicles [3].

Smart vehicles are integrated systems that combine environmental perception, autonomous localization, path planning, and decision control. Among them, environment perception technology, as the basis and prerequisite for other issues, is the key link for smart vehicles to achieve intelligence and the basic guarantee for their safety and intelligence [4]. The accuracy and robustness of the perception algorithm can directly affect or even determine the implementation of the upper layer functions, and incorrect environmental perception can affect the control of the vehicle and therefore cause safety hazards [5]. For example, the accuracy of road area detection will determine whether an intelligent vehicle can drive properly in a drivable road area; the performance of lane line detection technology will directly affect the operation of the lane keeping system.

Therefore, it is of great value and significance to carry out research on environment perception technology for intelligent vehicle visual navigation to promote the rapid development of intelligent vehicles and intelligent transportation. In this paper, we investigate the vehicle vision navigation algorithm from this perspective.

2. Research Background

Abroad, nearly 50 years have passed between the first attempts by researchers and practitioners to explore intelligent vehicle technology and the gradual maturing of that technology. Foreign research institutions first became involved in autonomous driving technology in the 1970s. The autonomous ground combat vehicle project launched in 1984 by the U.S. Defense Advanced Research Projects Agency (DARPA) in cooperation with the Army can be regarded as the real beginning of autonomous driving technology. Since 2004, DARPA has held three driverless vehicle challenges: the “DARPA Grand Challenge” desert races and, in 2007, the renamed “DARPA Urban Challenge”, i.e., the Intelligent Vehicle Urban Challenge, which in a sense officially kicked off the era of modern self-driving intelligent vehicles [6]. In 2004, driverless cars were required to cross a 230 km desert course, and unfortunately none of the teams completed the race. In the second year of the competition, 23 teams participated, five of which completed the task, with the team from Stanford University winning the title. In 2007, the 96-kilometer Intelligent Vehicle Urban Challenge focused more on the ability of vehicles to drive themselves on structured urban roads with few pedestrians and other vehicles. A team from Carnegie Mellon University (CMU) won the competition, followed by Stanford University (2nd place) and Virginia Tech (3rd place) [7]. Overall, these three driverless car challenges greatly contributed to the development of smart cars. Many representative achievements emerged in the process, such as the NavLab series of smart cars developed by Carnegie Mellon University; “Boss”, the self-driving car jointly developed by Carnegie Mellon University and General Motors, which won the 2007 “DARPA Urban Challenge”; and “Junior”, the smart car developed by Stanford University, which was the runner-up in the same competition. In summary, the development of smart cars reached a climax with the investment of Carnegie Mellon University, Stanford University, and MIT in autonomous driving technology.

Domestically, China started research and development on intelligent vehicles later than developed countries, so a certain gap in the level of technology remains. Thanks to the continuous efforts of researchers, however, the field has developed extremely rapidly in recent years and has produced encouraging results in some areas.

Back in the 1980s, some domestic research institutions began to try and explore research work related to intelligent vehicles. For example, Tsinghua University began to invest in research on intelligent vehicles in 1986, followed by the National University of Defense Technology in 1987. In addition to the above-mentioned universities, universities such as University of Science and Technology of China, Jilin University, and Harbin Institute of Technology have contributed to the research of intelligent vehicles one after another and achieved fruitful results [8].

In addition to the above-mentioned universities and research institutions, many domestic automotive companies and Internet companies have also started research and development on intelligent vehicles. Among them, Baidu Inc. took the lead in self-driving technology. Baidu launched its smart car in December 2016 and achieved autonomous driving in structured urban road conditions for the first time. Like the environment perception system of the driverless car developed by Google, Baidu’s smart car uses expensive LIDAR to perceive the driving environment. In 2017, Baidu launched a smart car development platform named Apollo. Apollo smart cars have now driven more than 3 million kilometers across more than 20 cities. The latest news shows that Apollo unmanned cars have been granted a license by Beijing to carry passengers on specific roads within the city. In addition, many high-tech companies, such as Alibaba (with its Cainiao and Gaode Maps units), Tencent, Didi, and Huawei, have gradually begun to explore and position themselves in the field of self-driving intelligent vehicles [9].

Alongside the vigorous development abroad, intelligent vehicles in China have thus also developed rapidly. In this paper, we start from visual navigation and study the relevant algorithms to lay a theoretical foundation for the development of intelligent vehicles in China.

3. Research Method and Basic Theory

3.1. Basic Theory

3.1.1. Basic Theory of Visual Navigation

This section introduces the basic theory of visual navigation, including the visual imaging model, feature point tracking algorithms, and the Structure from Motion (SFM) technique, which solves for camera motion from the geometric relationships between image feature points. The performance of different feature point tracking algorithms is also verified experimentally.

- (1)

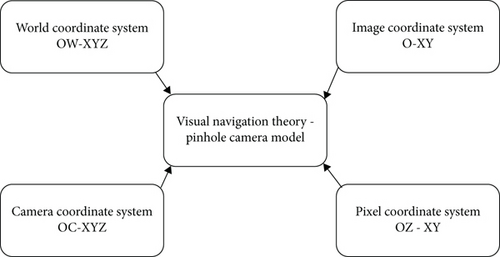

The world coordinate system is a three-dimensional coordinate system that describes the specific location of feature points in the three-dimensional world [10]

- (2)

The camera coordinate system is a three-dimensional coordinate system whose origin cO is located at the optical center of the camera lens, the cZ axis is along the direction of the camera optical axis, and the cX and cY axes are parallel to the x- and y-axes of the pixel coordinate system, respectively

- (3)

The image coordinate system is a two-dimensional coordinate system, whose origin O is the intersection of the camera optical axis and the pixel plane, and the x- and y-axes are parallel to the x- and y-axes of the pixel coordinate system, respectively

- (4)

The pixel coordinate system is a two-dimensional coordinate system whose origin O is located at the upper left corner of the pixel plane, and the x- and y-axes are along the upper and left sides of the pixel plane, respectively [11]. As shown in Figure 1
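As a minimal sketch of how the four coordinate systems chain together, the following Python code projects a world point to pixel coordinates with an ideal, distortion-free pinhole model. The rotation `R`, translation `t`, focal lengths `fx` and `fy`, and principal point `(cx, cy)` are illustrative assumptions, not values taken from this paper.

```python
import numpy as np

def world_to_pixel(p_world, R, t, fx, fy, cx, cy):
    """Project a 3-D world point to pixel coordinates (ideal pinhole model)."""
    # World -> camera coordinate system (rigid transform).
    p_cam = R @ np.asarray(p_world, dtype=float) + t
    if p_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Camera -> image plane (perspective division by the depth along Z_c).
    x = p_cam[0] / p_cam[2]
    y = p_cam[1] / p_cam[2]
    # Image -> pixel coordinate system: scale by the focal length and move
    # the origin from the principal point to the upper left corner.
    u = fx * x + cx
    v = fy * y + cy
    return np.array([u, v])

# Hypothetical camera aligned with the world frame, 640x480 image.
R = np.eye(3)
t = np.zeros(3)
pixel = world_to_pixel([0.5, -0.25, 5.0], R, t,
                       fx=800.0, fy=800.0, cx=320.0, cy=240.0)
```

With these assumed parameters the point lands to the right of and above the image center, because x is positive and y is negative in the camera frame.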

Lens distortion (aberration) must also be corrected. In actual imaging, a lens is mounted in front of the camera to obtain a better image. The lens itself affects light propagation, which produces radial distortion in the image. In addition, because of limits in the mounting process, the lens may not be exactly parallel to the image projection plane, which produces tangential distortion in the projected image.

The distortion-corrected point coordinates $(x_{\mathrm{distorted}}, y_{\mathrm{distorted}})$ are then projected to the pixel coordinate system by Equations (5) and (6), which gives the correct positions of the feature points [13].
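Equations (5) and (6) are not reproduced in this section. For orientation only, the widely used radial-tangential distortion model is sketched below. The radial coefficients $k_1, k_2$, the tangential coefficients $p_1, p_2$, the intrinsics $f_x, f_y, c_x, c_y$, and the normalized image coordinates $(x, y)$ are assumed notation and may differ from the authors' formulation.

$$
\begin{aligned}
r^2 &= x^2 + y^2,\\
x_{\mathrm{distorted}} &= x\,(1 + k_1 r^2 + k_2 r^4) + 2 p_1 x y + p_2\,(r^2 + 2x^2),\\
y_{\mathrm{distorted}} &= y\,(1 + k_1 r^2 + k_2 r^4) + p_1\,(r^2 + 2y^2) + 2 p_2 x y,\\
u &= f_x\, x_{\mathrm{distorted}} + c_x, \qquad v = f_y\, y_{\mathrm{distorted}} + c_y.
\end{aligned}
$$

The terms in $k_1, k_2$ model the radial effect of the lens, and the terms in $p_1, p_2$ model the tangential effect of a lens that is not parallel to the image plane.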

3.1.2. Theory of Intelligent Vehicles

The ultimate goal of intelligent vehicles is to achieve safe, comfortable, and efficient autonomous driving. To distinguish levels of autonomous driving technology more reasonably, SAE International (formerly the Society of Automotive Engineers, SAE) released a new classification system for the level of autonomous driving technology in 2014. The U.S. National Highway Traffic Safety Administration (NHTSA) already had its own classification system, but it announced in September 2016 that it was adopting the standard proposed by SAE. SAE’s classification system has since been used as a common standard by most autonomous driving researchers and practitioners. According to the SAE standard, there are six technology levels, Level 0 to Level 5, ranging from no autonomous driving to fully autonomous driving [14].

According to the SAE classification criteria, Level 0 is nonautonomous, i.e., purely manual, and does not have driverless capabilities. The vehicle only provides safety warnings and protection systems to assist when necessary. Level 1 is a driver assistance system that assists the driver with a single operation such as acceleration, deceleration, or steering in certain traffic scenarios. Level 2 refers to partial automation. Systems at this level have multiple functions such as speed control and steering, but require the driver to monitor the road environment in real-time and be ready to take over the vehicle at any time. Level 3 refers to autonomous driving under limited conditions. In other words, the automated driving system performs all driving operations when driving conditions are met, and the system proactively notifies the driver when he or she needs to take over the vehicle. Level 4 and Level 5 correspond to high automation and full automation, respectively, and share common characteristics of safe autonomous driving, but the difference is that the former is only applicable to specific road scenarios, while the latter can cope with all driving conditions [15].
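The six levels described above can be captured in a small lookup structure. The sketch below is illustrative only: the class name `SAELevel`, the member names, and the helper `driver_must_monitor` are hypothetical, and the helper simply encodes the statement that the driver must monitor the road in real time up to and including Level 2.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    """SAE driving automation levels as summarized in the text."""
    NO_AUTOMATION = 0           # purely manual, warnings only
    DRIVER_ASSISTANCE = 1       # single operation assisted
    PARTIAL_AUTOMATION = 2      # speed and steering, driver monitors
    CONDITIONAL_AUTOMATION = 3  # system drives under limited conditions
    HIGH_AUTOMATION = 4         # autonomous in specific road scenarios
    FULL_AUTOMATION = 5         # autonomous in all driving conditions

def driver_must_monitor(level: SAELevel) -> bool:
    """True when the human driver must monitor the road in real time."""
    return level <= SAELevel.PARTIAL_AUTOMATION
```

For example, `driver_must_monitor(SAELevel.PARTIAL_AUTOMATION)` is true, while the same call for `SAELevel.CONDITIONAL_AUTOMATION` is false because the system notifies the driver when a takeover is needed.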

Thanks to the rapid development of smart vehicles in recent years, several companies worldwide have announced plans to launch driverless smart cars around 2020. According to relevant predictions, intelligent vehicles with self-driving modes will gradually appear in urban traffic environments by 2022; fully driverless intelligent vehicles will be developed in large numbers and gradually commercialized only after 2025; and around 2035, worldwide sales of driverless intelligent vehicles will reach about 21 million units, by which time the global market share of vehicles with autonomous driving functions will reach or even exceed 25%. In addition, China’s huge market demand, high car sales, and strong consumer appetite for high technology are expected to make it the largest market for smart vehicles worldwide at some point in the future [16].

3.1.3. Visual Saliency Improvement Theory

The human visual system can quickly search for and locate objects of interest when confronted with natural scenes. This visual attention mechanism is an important way in which people process visual information in daily life. With the massive data brought by the Internet, how to quickly obtain important information from large volumes of image and video data has become a key problem in computer vision. Introducing this visual attention mechanism, i.e., visual saliency, into computer vision tasks can bring significant improvements to visual information processing. The advantages are mainly twofold: first, limited computational resources can be allocated to the more important information in images and videos; second, the results are more in line with human visual cognition. Visual saliency detection has important applications in target recognition, image and video compression, image retrieval, and image retargeting. A visual saliency detection model uses computer vision algorithms to predict which information in an image or video will receive more visual attention [17].

The Visual Attention Mechanism (VA) refers to the way humans, when confronted with a scene, automatically process regions of interest while selectively ignoring regions that are not of interest. The regions of interest are called salient regions. Visual saliency includes both bottom-up and top-down mechanisms. Bottom-up saliency is data-driven, i.e., the image content itself attracts attention, while top-down saliency is attention to images under the control of human consciousness. Computer vision has mainly focused on bottom-up visual saliency; top-down visual saliency has been studied less because of the limited understanding of the structure of the human brain and of how it gives rise to behavioral mechanisms [18].
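To make the bottom-up, data-driven idea concrete, the sketch below computes a deliberately simple global-contrast saliency map: each pixel is scored by how far its intensity lies from the mean intensity of the image. This measure, the function name `global_contrast_saliency`, and the synthetic scene are assumptions chosen for illustration; this is not the saliency model used in this paper.

```python
import numpy as np

def global_contrast_saliency(gray):
    """Bottom-up saliency: distance of each pixel from the mean intensity."""
    gray = np.asarray(gray, dtype=float)
    saliency = np.abs(gray - gray.mean())
    peak = saliency.max()
    # Normalize to [0, 1]; a uniform image has no salient region.
    return saliency / peak if peak > 0 else np.zeros_like(saliency)

# Synthetic scene: dark background with a small bright patch.
scene = np.full((32, 32), 20.0)
scene[12:16, 20:24] = 220.0
saliency_map = global_contrast_saliency(scene)
```

In this toy scene the bright patch receives the maximum score while the background stays close to zero, which is the behavior a bottom-up model is expected to show for a region that stands out from its surroundings.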

3.2. Feature Point Tracking Algorithm Research Method

Visual navigation uses the geometric constraint relationships between tracking feature points in an image to solve the navigation information. Therefore, the tracking accuracy of feature points will directly affect the solving accuracy of navigation results. At present, feature point tracking algorithms are mainly divided into two categories: feature point method and optical flow method. The feature point method extracts and describes feature points in continuous frame images, and then matches and identifies the same feature points to achieve the tracking of feature points; the optical flow method describes the motion of feature points in continuous frame images, and it calculates the instantaneous velocity of feature points, and if the time interval is small, the motion displacement of feature points can be obtained. These two types of algorithms have their advantages and disadvantages [19].

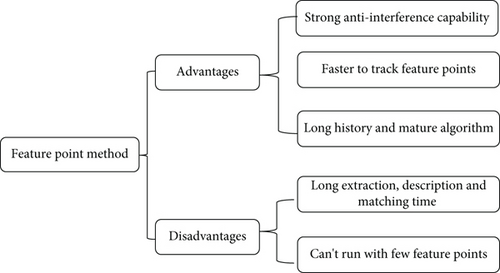

The feature point method has the following advantages: (1) it is insensitive to changes in illumination, motion, and noise, and therefore resists interference well; (2) it can track feature points even when the camera moves quickly, and is therefore robust; (3) it has been studied for a long time and is relatively mature. It has two disadvantages: (1) the extraction, description, and matching of feature points are time-consuming to compute; (2) it does not work properly in environments with few features [20]. See Figure 2.

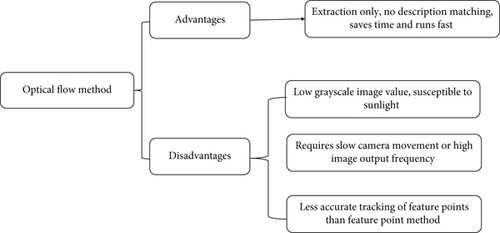

The optical flow method has the following advantage: only feature point extraction is performed, with no feature point description or matching, which saves computation time and makes the algorithm run faster. It has the following disadvantages: (1) the algorithm assumes that the image gray value is constant, so it is easily affected by lighting; (2) it requires slower camera movement or a higher image output frequency; (3) its feature point tracking accuracy is not as good as that of the feature point method. See Figure 3.

3.2.1. Feature Point Method

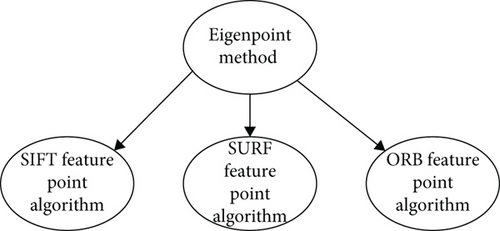

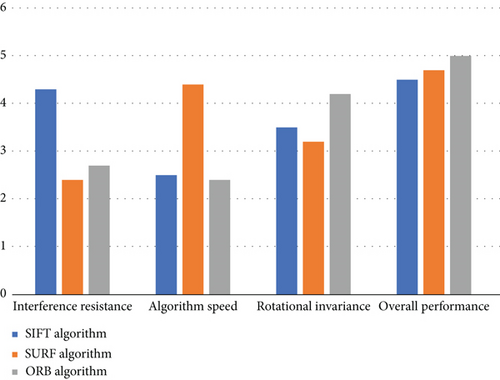

The feature point method tracks feature points as follows: first, feature points are extracted in consecutive frames; then, descriptors of the feature points are computed; finally, feature points in different frames are matched according to the similarity of their descriptors, which realizes the tracking. Commonly used feature point methods include the SIFT, SURF, and ORB algorithms. Different methods use different forms of descriptors and different similarity criteria, i.e., different matching criteria for feature points. The SIFT (Scale-Invariant Feature Transform) algorithm can identify the same feature points in consecutive frames that differ in scale and rotation. It also performs well when there are luminance variations and noise in the frames. However, it is computationally intensive and its real-time performance is poor. The SURF (Speeded Up Robust Features) algorithm improves on SIFT: it maintains robustness to changes in image scale and rotation, reduces the complexity of the algorithm, and runs at least three times faster. The ORB algorithm is much faster than both SIFT and SURF, but it provides only rotation invariance. ORB combines the FAST feature point extraction algorithm with the BRIEF feature point description algorithm; both are computationally very efficient, but neither has rotation invariance or scale invariance on its own. ORB adds rotation invariance on top of these two. See Figure 4.

- (1) Euclidean distance
- (2) Hamming distance

For the feature point descriptors of the SIFT and SURF algorithms, the elements are image gradient values in the neighborhood of the feature point, so the Euclidean distance is used as the similarity criterion. For the feature point descriptors of the ORB algorithm, the elements are binary strings, so the Hamming distance is used as the similarity criterion. After feature point extraction and description for two images, two feature point sets are obtained, and feature point matching is then performed using the similarity criterion: for each feature point in point set one, the descriptor distance to every feature point in point set two is measured, and the point is matched with the closest feature point in point set two. This is a brute-force matching method, and the BFMatcher (brute-force matcher) class in OpenCV can be used for such feature point matching.
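The two similarity criteria and the brute-force search can be sketched with NumPy alone. The helper names and the toy descriptors below are hypothetical and far shorter than real SIFT, SURF, or ORB descriptors; the sketch only illustrates the nearest-descriptor rule and is not a substitute for a library matcher.

```python
import numpy as np

def euclidean_distances(desc_a, desc_b):
    """Pairwise Euclidean distances between float descriptors (SIFT/SURF style)."""
    diff = desc_a[:, None, :].astype(float) - desc_b[None, :, :].astype(float)
    return np.sqrt((diff ** 2).sum(axis=2))

def hamming_distances(desc_a, desc_b):
    """Pairwise Hamming distances between uint8-packed binary descriptors (ORB style)."""
    xor = np.bitwise_xor(desc_a[:, None, :], desc_b[None, :, :])
    # Count the differing bits in every byte of every descriptor pair.
    return np.unpackbits(xor, axis=2).sum(axis=2)

def brute_force_match(distances):
    """For each descriptor in set one, index of the closest descriptor in set two."""
    return distances.argmin(axis=1)

# Toy descriptors (hypothetical values).
float_a = np.array([[0.0, 0.0], [5.0, 5.0]])
float_b = np.array([[5.0, 4.0], [0.0, 1.0]])
binary_a = np.array([[0b11110000], [0b00001111]], dtype=np.uint8)
binary_b = np.array([[0b00001110], [0b11110001]], dtype=np.uint8)

float_matches = brute_force_match(euclidean_distances(float_a, float_b))
binary_matches = brute_force_match(hamming_distances(binary_a, binary_b))
```

In OpenCV the same choice of criterion is made by constructing `cv2.BFMatcher` with `cv2.NORM_L2` for float descriptors or `cv2.NORM_HAMMING` for binary descriptors.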

3.2.2. Optical Flow Method

The optical flow method only needs to extract feature points and does not require feature point description and matching, and computing optical flow takes much less time than describing and matching feature points. Because description and matching are not required, corner points in the image can be extracted and used as feature points for optical flow tracking. A corner point is the intersection of two or more edges in an image, where the gray value changes significantly, so corner points can be extracted very quickly from grayscale images, which also shortens the tracking time. Commonly used corner points include Harris corners and GFTT corners. After the corner points are extracted, they are tracked with the optical flow method, whose principles are described below. The optical flow method makes the following assumptions: (1) the pixel gray value of a feature point remains constant across consecutive frames; (2) the pixel position of a feature point changes little between consecutive frames, or the time interval between frames is small; (3) all pixels in the neighborhood of a feature point share the same motion.
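The three assumptions lead directly to a small least-squares problem. The sketch below is a single-point Lucas-Kanade style estimate written with NumPy; the function names, the window size, and the synthetic sinusoidal frames are assumptions made for illustration, and the paper does not state which optical flow implementation it used.

```python
import numpy as np

def lucas_kanade_point(img1, img2, row, col, half_window=10):
    """Estimate the flow (u, v) in pixels at (row, col) from img1 to img2."""
    # Spatial gradients of the first frame (axis 0 is y, axis 1 is x).
    grad_y, grad_x = np.gradient(img1)
    # Temporal gradient; assumption (1) says brightness is constant over time.
    grad_t = img2 - img1
    # Assumption (3): every pixel in the window shares the same motion.
    window = (slice(row - half_window, row + half_window + 1),
              slice(col - half_window, col + half_window + 1))
    A = np.stack([grad_x[window].ravel(), grad_y[window].ravel()], axis=1)
    b = -grad_t[window].ravel()
    # Least-squares solution of grad_x * u + grad_y * v = -grad_t.
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow

def synthetic_frame(shift_x=0.0, shift_y=0.0, size=100):
    """Smooth test pattern translated by (shift_x, shift_y) pixels."""
    y, x = np.mgrid[0:size, 0:size].astype(float)
    return np.sin(0.2 * (x - shift_x)) + np.sin(0.15 * (y - shift_y))

frame1 = synthetic_frame()
frame2 = synthetic_frame(shift_x=0.3, shift_y=-0.2)  # small motion, assumption (2)
u, v = lucas_kanade_point(frame1, frame2, row=50, col=50)
```

Because the linearization behind the least-squares step only holds for small displacements, the recovered flow is an approximation of the true shift, which is why assumption (2) matters in practice.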

When a feature point appears at different scales in different images (i.e., it occupies a different number of pixels), its surrounding pixel environment may differ, and the optical flow method may then fail to track it. In this case, an image pyramid can be added to the optical flow method. An image pyramid is a stack of image layers in which the bottom layer is the original image and each higher layer is obtained by scaling down the layer below it by a fixed factor. Scaling the image represents the feature points at several scales, so that feature points can be tracked even when they appear in two images at different scales.
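A pyramid of this kind can be built in a few lines. In the sketch below, the scale factor of 2 and the 2x2 block averaging are assumptions (the text only says that each layer is scaled by a fixed factor), and `build_pyramid` is a hypothetical helper name.

```python
import numpy as np

def build_pyramid(image, levels=3):
    """Return [original, 1/2 scale, 1/4 scale, ...] using 2x2 block averaging."""
    pyramid = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        current = pyramid[-1]
        # Crop to even dimensions so the image splits into whole 2x2 blocks.
        h = (current.shape[0] // 2) * 2
        w = (current.shape[1] // 2) * 2
        blocks = current[:h, :w].reshape(h // 2, 2, w // 2, 2)
        pyramid.append(blocks.mean(axis=(1, 3)))
    return pyramid

pyramid = build_pyramid(np.arange(64, dtype=float).reshape(8, 8), levels=3)
shapes = [level.shape for level in pyramid]
```

A displacement measured on a coarser layer corresponds to a displacement twice as large on the layer below it, so pyramidal trackers typically estimate the flow on the coarsest layer first and refine it layer by layer.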

4. Research Results and Discussion

4.1. Research Results

This paper focuses on intelligent vehicle visual navigation algorithms with improved visual saliency, so ordinary human-driven vehicles and visually navigated intelligent vehicles are compared to study the advantages of the improved algorithms. The data analysis is used to identify the deficiencies of intelligent vehicle visual navigation, to guide further improvements, and to make a theoretical contribution to future intelligent driving vehicles.

First, this paper compares the specific feature point algorithms to analyze their individual advantages and disadvantages and their impact on the data in this paper. As described in Section 3.2.1, SIFT is robust to scale, rotation, luminance variation, and noise but is computationally intensive and has poor real-time performance; SURF retains robustness to scale and rotation while running at least three times faster; and ORB, which combines FAST feature point extraction with BRIEF feature point description, is much faster than both but provides only rotation invariance. The comparison is shown in Figure 5.

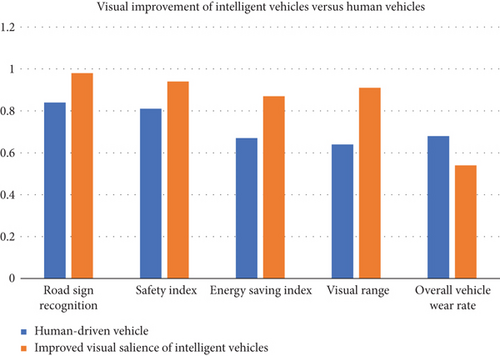

After processing the data, this paper compares visual saliency-improved intelligent vehicles with manually driven vehicles in five aspects: road sign recognition, safety index, energy saving index, visual range breadth, and overall vehicle wear rate. The results show that the visual saliency-improved intelligent vehicle scores higher than the manually driven vehicle in road sign recognition, safety index, energy saving index, and visual range breadth, while its overall wear rate is lower. Overall, the visual saliency-improved intelligent vehicle therefore has clear advantages over the manually driven vehicle in overall performance, wear, and energy consumption, as shown in Figure 6.

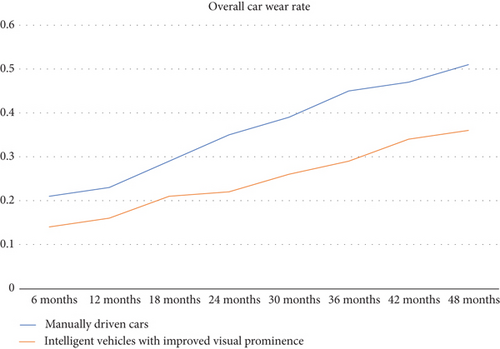

A 4-year comparative analysis of the overall vehicle wear rate was also conducted. The wear rate of the manually driven vehicle was higher than that of the visual saliency-improved intelligent vehicle in all time periods. The service life and parts maintenance of the visual saliency-improved intelligent vehicle are therefore better than those of the manually driven vehicle. Although the technology is not yet mature, these relative advantages provide further momentum for the development of intelligent vehicles (Figure 7).

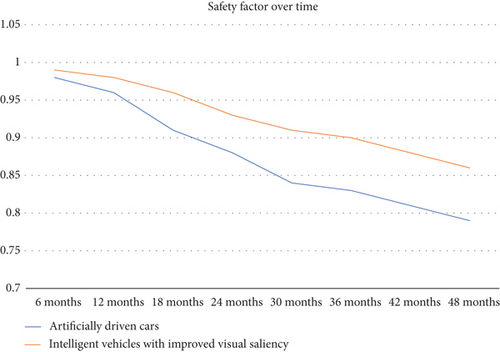

A 4-year comparative analysis of the overall vehicle safety rate was also conducted. The overall safety coefficient of the visual saliency-improved intelligent vehicle was higher than that of the manually driven vehicle in all time periods. Although the difference between the two is not large, a higher safety coefficient is preferable. Thus, although visual saliency-improved vehicles are not yet mature, the advantage of their higher safety coefficient has been demonstrated, and this result can provide a theoretical basis for the development of intelligent vehicles, as shown in Figure 8.

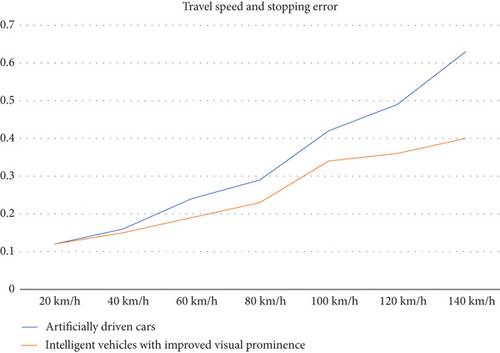

A comparative analysis of the parking error of the two vehicles at each driving speed was also conducted. For both the visual saliency-improved intelligent vehicle and the manually driven vehicle, the parking error increases gradually with driving speed. However, the error of the visual saliency-improved intelligent vehicle is always smaller than that of the manually driven vehicle. Although its parking error is much smaller than that of the ordinary vehicle, it is still relatively high and does not yet meet the needs of modern intelligent vehicles, as shown in Figure 9.

4.2. Optimization of Visual Navigation Intelligent Vehicle Path Recognition Image Processing Algorithm

4.2.1. Acquisition of Visual Saliency Image

An important basis for designing and optimizing the path recognition image processing algorithm of a visually navigated intelligent vehicle is the ability to acquire digital image information quickly, reasonably, and efficiently, and the present system is designed on this basis. To improve the path information available to the intelligent vehicle, the image processing system based on the visual navigation algorithm is optimized. In practical applications, technicians in the industry generally rely on a few specific detection methods, and these methods are limited in scope. For example, linear algorithms for path detection and recognition under noise are affected by factors such as changes in external light intensity and therefore adapt poorly to changes in the environment. It is therefore necessary to acquire the useful information in digital images more effectively and reasonably. Doing so takes camera signal analysis as the core technical basis: the camera output is obtained through software, and the image data are then sampled, detected, processed, and quantized. Image measurement also requires the data to be at least in the form of a two-dimensional matrix, which allows location information to be labeled more effectively. Specifically, digital image processors generally use the grayscale pixel as the basic unit for describing gray level. A grayscale pixel takes one of 256 gray levels: white generally corresponds to the highest level, 255, and the lowest level is 0.
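The quantization to 256 gray levels can be sketched as follows. The input is assumed to be an RGB image with channel values in [0, 1], and the ITU-R BT.601 luma weights are an assumed, commonly used choice; neither the weights nor the helper name `to_gray_levels` comes from this paper.

```python
import numpy as np

def to_gray_levels(rgb):
    """Convert an RGB image with values in [0, 1] to 8-bit gray levels 0..255."""
    rgb = np.asarray(rgb, dtype=float)
    # ITU-R BT.601 luma weights (an assumed, commonly used choice).
    gray = rgb @ np.array([0.299, 0.587, 0.114])
    # Quantize to 256 levels: 0 is the darkest level, 255 is white.
    return np.clip(np.rint(gray * 255.0), 0, 255).astype(np.uint8)

# 1x3 test image: black, white, and pure red pixels.
image = np.array([[[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [1.0, 0.0, 0.0]]])
levels = to_gray_levels(image)
```

The result is a two-dimensional `uint8` matrix, which matches the requirement above that image data be available as a two-dimensional matrix before location information is labeled.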

A common practice in existing analyses and studies is simply to list data and materials, which has little practical significance and leaves most readers unable to fully understand the conclusions. Rather than conducting such formalistic research, it is better to analyze each issue in the context of the actual work on the ground and bring the research to its most practical conclusion. Acquiring digital images will remain the core of the image processing problem in optimized visual navigation and fast intelligent vehicle path identification. The main aim of further optimizing these algorithms is to promote the development of new-generation intelligent vehicle applications more effectively and to gradually solve the key technical problems mentioned above. It is therefore necessary to continue to focus on the rapid acquisition of digital images.

4.2.2. Continuous Enhancement of Image Visual Saliency

Once digital image information has been acquired successfully, attention must turn to the related image enhancement algorithms. In other words, the algorithm continuously acquires digital image information and produces continuously enhanced images, which is an important part of optimizing path recognition and image processing algorithms.

Although these workflows are relatively mature and necessary, digital image acquisition is often difficult to carry out in practice. It requires professionally trained staff to operate the equipment, and the acquisition process is inevitably subject to interference from factors such as environmental noise. Once an image is seriously disturbed by noise, its quality may be degraded and some of its detailed information may be lost. A series of image processing methods and techniques must therefore be used to solve this problem. Image enhancement is important in this respect and is carried out in stages: it addresses the problems above and removes, in a timely manner, the redundant and useless information left in the image, while handling image edge information and image contrast data.

Consider the gray value transformation from the standpoint of a basic use case. If the lighting is so strong that the image is saturated, or the exposure is too low to meet the visual needs of the human eye, the gray values of the acquired image tend to be concentrated in a narrow range and the image appears blurred. The gray value distribution may also fluctuate strongly, which makes the detected vehicle path jitter. The narrow gray range significantly reduces the distinguishability of the image, and in the long run the error rate of path recognition increases greatly. The system then cannot recognize the path information accurately, which goes against the original design intention of visual navigation intelligent vehicles and their future development. The image signal obtained during path recognition is also often accompanied by high pixel brightness in the background, which does not serve practical needs. Because the path recognition algorithm directly affects the output quality of the overall image processing, it is necessary to suppress the black areas that affect the background pixel brightness by enhancing the contrast of the background image.

Combining segmented transformation with linear transformation can optimize both the recognition function of the visual navigation system and the image acquisition and processing capability of the intelligent vehicle path system. In general, the three-stage segmented linear transformation algorithm can be applied directly to image contrast expansion and to effective information acquisition.
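A three-stage segmented linear transformation can be sketched as below. This is an illustrative example under stated assumptions: the breakpoints `a`, `b`, `c`, `d` and the function name `piecewise_linear_stretch` are hypothetical, because the paper gives no parameter values. The input range is split into three segments, and the middle segment is given a slope greater than 1 so that contrast is expanded where the gray values are concentrated.

```python
import numpy as np

def piecewise_linear_stretch(gray, a=80, b=170, c=30, d=220):
    """Three-segment linear gray-level transform.

    [0, a]   -> [0, c]    (dark range, compressed)
    [a, b]   -> [c, d]    (middle range, expanded)
    [b, 255] -> [d, 255]  (bright range, compressed)
    The breakpoints are illustrative assumptions, not values from the paper.
    """
    gray = np.asarray(gray, dtype=np.float64)
    # np.interp evaluates the piecewise linear function through the given knots.
    out = np.interp(gray, [0, a, b, 255], [0, c, d, 255])
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)

# Sample gray levels at and between the knots.
levels = np.array([0, 40, 80, 125, 170, 255], dtype=np.uint8)
stretched = piecewise_linear_stretch(levels)
```

With these example breakpoints the middle segment has slope 190/90, roughly 2.1, while the dark and bright segments have slopes below 1. In practice the breakpoints would be chosen from the gray-level histogram of the acquired image.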

4.2.3. Rational Application of Filtering Algorithms

The ultimate goal of all the techniques applied in a real intelligent vehicle environment is to optimize the path recognition image processing algorithm of the visual navigation intelligent vehicle. This involves a series of complex tasks spanning several technical areas, and among them filtering is one of the most important steps. Many filtering algorithms are used in practical vehicle systems, with median filtering and boundary-preserving filtering among the most common. These algorithms can eliminate random noise to a certain degree, but once the noise becomes very dense their effectiveness is significantly limited. This is especially true for denoising of the full image and for fine noise reduction, where filtering may even leave the image progressively more blurred. A blurred image not only prevents accurate image recognition but also affects the visual navigation system and the accurate analysis of the image data used to recognize the path trajectory of the intelligent vehicle.

The median filtering algorithm is one of the main algorithms. It slides a window containing an odd number of pixels over the grayscale image, sorts the gray values of the pixels inside the window, and replaces the center pixel with the middle (median) value. In terms of denoising, both filtering algorithms mentioned above reduce image noise noticeably. In addition, in both correcting and processing image edge information, median filtering shows a rather good visual result, and it helps preserve the integrity and continuity of image recognition data in the visual navigation system and the intelligent vehicle path recognition platform. It deserves the attention of researchers in the related fields.
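The median filtering procedure can be sketched in a few lines. This is a minimal, unoptimized illustration rather than the authors' implementation: the function name `median_filter`, the 3 x 3 default window, and the edge-replication handling at the image border are all assumptions, since the paper does not specify them.

```python
import numpy as np

def median_filter(gray, size=3):
    """Replace each pixel with the median of the size x size window around it."""
    if size % 2 == 0:
        raise ValueError("window size must be odd")
    gray = np.asarray(gray)
    pad = size // 2
    # Border handling is an assumption: replicate the nearest edge pixel.
    padded = np.pad(gray, pad, mode="edge")
    out = np.empty_like(gray)
    for r in range(gray.shape[0]):
        for c in range(gray.shape[1]):
            window = padded[r:r + size, c:c + size]
            # With an odd number of samples the median is the middle sorted value.
            out[r, c] = np.median(window)
    return out

# A flat gray patch with one impulse ("salt") noise pixel in the center.
noisy = np.full((5, 5), 100, dtype=np.uint8)
noisy[2, 2] = 255
clean = median_filter(noisy)

# A vertical step edge, as a lane boundary might appear in a grayscale image.
edge = np.array([[0, 0, 200, 200]] * 4, dtype=np.uint8)
edge_filtered = median_filter(edge)
```

In this example the isolated noise pixel is removed while the step edge is left unchanged, which illustrates why median filtering is favored when edge information must be kept. When noise is dense enough to dominate the window, the median itself becomes a noise value, which matches the limitation noted in the text.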

5. Conclusion

- (1) Acquisition of visually salient images. As analyzed above, in practical applications technicians commonly rely on specific detection methods that are singular in nature, whether a noise detection and recognition algorithm based on parallel lanes or a linear algorithm. Such methods are directly subject to external influences: linear algorithms for path noise detection and recognition are affected by changes in external light intensity and other factors, and on their own they cannot adapt to the relatively random lighting and noise conditions of the environment.

- (2) Continuous enhancement of the visual saliency of the image. Once digital image information has been acquired successfully, attention must turn to the related image enhancement algorithms. In other words, the algorithm continuously acquires digital image information and produces continuously enhanced images, which is an important part of optimizing path recognition and image processing algorithms.

- (3) Rational application of the filtering algorithm. In both correcting and processing image edge information, filtering has shown a relatively good visual result, and it helps preserve the integrity and continuity of image recognition data in the visual navigation system and the intelligent vehicle path recognition platform. It deserves the attention of researchers in the related fields.

Conflicts of Interest

The authors declare no competing interests.

Open Research

Data Availability

The labeled dataset used to support the findings of this study is available from the corresponding author upon request.