[Retracted] Audio Signal Acquisition and Processing System Based on Model DSP Rapid Design

Abstract

With DSP technology, technicians can conveniently replace or upgrade DSP audio processors, build derivative equipment, improve processing performance, and reduce application costs, while acquiring and processing audio to meet the needs of different signals. This paper introduces the fundamentals of speech recognition, including the theory and the main processing stages: preprocessing, endpoint detection, feature extraction, and pattern matching. After analyzing and comparing several common feature parameters, Mel frequency cepstral coefficients (MFCCs) are adopted as the speech features, and traditional recognition algorithms are studied, compared, and evaluated. The algorithms used in every stage of the system are simulated to verify their correctness. Experiments are then designed to compare the advantages and disadvantages of the candidate algorithms, and the recognition algorithm is selected by weighing the requirements of the whole system. The experimental results show that the recognition rate of the dynamic time warping (DTW) algorithm differs little from that of the hidden Markov model (HMM) algorithm, while the template training time of DTW is far lower than that of HMM. Given the limited resources of the DSP platform, DTW is chosen as the recognition algorithm of this system. Finally, the performance of the system is tested and the results are reported. The experiments show that the system can effectively recognize isolated words from a small vocabulary.

1. Introduction

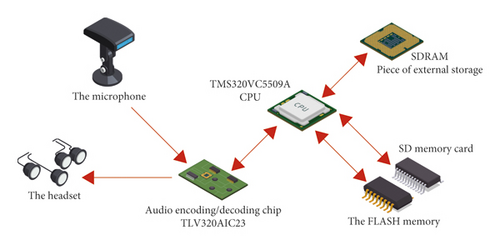

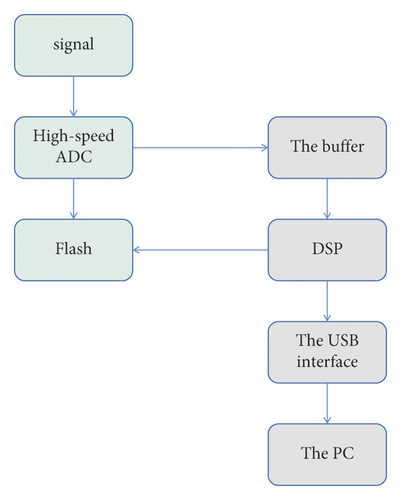

Since the birth of the first microprocessor, its technical level has improved rapidly. In the more than 40 years since Intel launched the 4004, the first single-chip microprocessor, in 1971, microprocessor integration has grown from a few thousand transistors to hundreds of millions, word length has increased from 4 bits to 64 bits, and clock speed has risen from under 1 MHz to more than 1 GHz. This rapid development has triggered a revolution in the information industry: information technology, with computer and communication technology at its core, is changing the way people live and work at an unprecedented pace [1]. Digital signal processing is a core issue in information technology, and, as shown in Figure 1, the core device that implements it is the digital signal processor (hereinafter DSP). A DSP differs from a general-purpose microprocessor. General-purpose microprocessors usually adopt the von Neumann architecture, in which data and programs share one memory space, and many functions are implemented in microcode. A DSP adopts a Harvard or modified Harvard architecture, in which the data space is separate from the program space, which greatly increases instruction execution speed. A DSP also contains a dedicated hardware multiplier and adder, so a multiply-accumulate operation completes in one clock cycle. These characteristics make DSPs especially suitable for real-time processing that is fast, repetitive, and numerically intensive. TI (Texas Instruments) is a leader in the DSP field: available data show that one of every two digital cellular phones uses TI products, and 90% of the world's hard disks and 33% of modems use TI DSP technology [2]. TI's widely used DSP products currently include the C2000, C5000, and C6000 series, as well as the C3x series.
The C2000 series consists of fixed-point DSP controllers suited to control applications. The C5000 series is a low-power fixed-point family suited to handheld communication products. The C6000 series comprises 32-bit high-performance DSPs: the C62x and C64x are fixed-point devices, and the C67x is floating-point. Although the C3x series is no longer TI's mainstream product, it is still widely used as a low-cost 32-bit floating-point DSP. Advances in digital signal processing make it possible to process speech signals digitally in real time. Analog processing of speech requires expensive hardware that cannot be upgraded and has a short product life cycle. Digital processing offers strong noise immunity and easy transmission and processing, and represents the direction of development of speech processing technology. The high speed and programmability of DSP chips make them well suited to speech signal processing, and DSP-based systems are expected to be the future of speech processing [3].

2. Literature Review

Liang proposed an endpoint detection algorithm based on energy and discrimination information. Using the energy and discrimination information of each frame, the algorithm updates the noise energy estimate in all frames, including speech frames, so that it tracks changes in noise energy; experiments show that it improves the accuracy of energy-based detection in tank noise [4]. Lee et al. proposed a method for setting high and low energy thresholds and used the short-time zero-crossing rate as a second stage to separate unvoiced consonants from very low-energy noise; isolated-word recognition experiments using linear prediction coefficients and dynamic time warping show improved recognition rates for both isolated and combined words [5]. Other studies have adopted new speech feature parameters for endpoint detection. For example, Cerina et al. exploited the pronunciation characteristics of Chinese speech and used amplitude and power spectrum to effectively eliminate the interference of DC noise [6]. Yang et al. proposed an endpoint detection method based on the similarity distance between autocorrelation functions [7]. Miller et al. used a new time-frequency parameter that measures the effective energy of the speech signal in both the time and frequency domains and detected speech segments by thresholding it. Although these methods achieved improvements in their own experimental settings, their performance in strong noise, the choice of threshold values in practice, and robustness to impulsive noise still need further study and verification [8].

Yan and Yang regard an embedded system as a special-purpose computer system built on computer technology and designed for a particular application, whose hardware and software can be tailored to that application [9]. Eder et al. argue that embedded systems are highly reliable, can work in harsh environments and cope with sudden power failures, and are low in cost, size, and power consumption, so they can be embedded into other devices; audio and video systems additionally require strong real-time performance [10]. Zhang et al. note that embedded processors have evolved from simple to complex and from single-core to multi-core, with steadily increasing data processing capability: DSPs can efficiently perform large volumes of numerical operations and run audio and video algorithms, while high-performance ARM processors are widely used in embedded system design [11]. Heath observes that in the analog era, signal waveforms were easily disturbed during transmission and required large storage capacity. With digital technology, audio and video signals are sampled and quantized into digital signals, which are then compressed and encoded to reduce the amount of data; compression techniques keep developing and compression ratios keep improving [12, 13]. Hao et al. argue that digital video technology is complex, involving many sampling and compression standards that keep changing as the technology develops, which creates difficulties for developers: existing digital video implementations run only on specific hardware and operating systems, and developers must hand-code low-level audio and video routines, which makes development complex and time-consuming.

Building on this research, this study analyzes and compares several common feature parameters and adopts Mel frequency cepstral coefficients (MFCCs) as the feature parameters of the system. For the recognition algorithm, it studies and compares candidate algorithms and proposes improvements to address the heavy computational load of the traditional algorithm. The algorithms used in every stage of the system are simulated to verify their correctness, and simulation experiments compare their advantages and disadvantages; considering the system requirements and each algorithm's suitability, the most appropriate one is selected as the recognition algorithm of the system.

3. Theoretical Knowledge of Speech Recognition

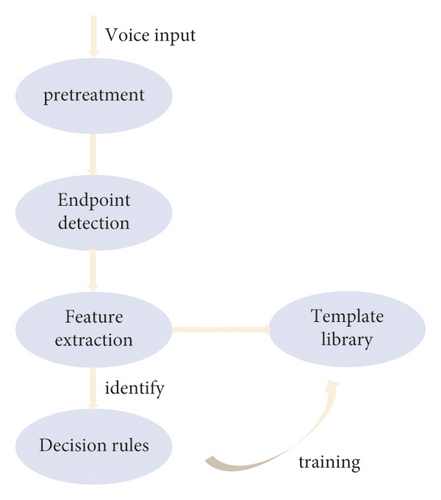

This study focuses on isolated-word speech recognition, and the recognition system is designed for isolated words from a small vocabulary. An isolated word means that each utterance to be recognized is a single syllable, word, or phrase. As shown in Figure 2, a typical isolated-word recognition system consists of four parts: speech signal preprocessing, feature extraction, a speech template library, and matching decision.

Speech recognition is divided into two phases: training and recognition. In training, the speech signal is preprocessed, its features are extracted, and speech templates are built from those features. In recognition, the features extracted from a new utterance are matched against the trained templates, the best match is found according to the chosen matching strategy (decision rule), and the recognition result is obtained [14].

3.1. Speech Signal Preprocessing

- (1)

Studies show that the energy of speech signals is concentrated mainly between 300 and 3400 Hz. According to the Nyquist sampling theorem, the original analog signal can be completely reconstructed from its samples when the sampling frequency is more than twice the highest frequency in the signal; in most practical applications, a sampling frequency of 8 kHz is used. Here, a microphone is connected to a PC and speech is recorded with the Windows Sound Recorder. The PC sound card filters the signal and performs analog-to-digital conversion, producing a WAV file for later processing. Before recording, the microphone volume is set to an appropriate level. The speaker clicks "Start," speaks into the microphone from a suitable distance, and then clicks "Stop" to end the recording. The recording is saved as PCM with the attributes 8 kHz, 16 bit, mono (15 KB/s), and the WAV file is stored on the computer for subsequent use. The waveform of the utterance "power on" was recorded in this way.
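To make the recording format concrete, the following sketch uses only the Python standard library to write and read back an 8 kHz, 16-bit, mono PCM WAV file in memory. It is an illustration, not the authors' tooling; the 1 kHz test tone is an assumed stand-in for recorded speech. The byte rate works out to 8000 × 2 × 1 = 16 000 bytes/s, about 15.6 KiB/s, which is presumably what the recorder displays as 15 KB/s.

```python
import io
import math
import struct
import wave

FS = 8000          # sampling rate in Hz, as in the text
SAMPLE_WIDTH = 2   # 16-bit PCM -> 2 bytes per sample
CHANNELS = 1       # mono

def write_pcm_wav(samples, fs=FS):
    """Pack 16-bit integer samples into an in-memory mono WAV file."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(CHANNELS)
        w.setsampwidth(SAMPLE_WIDTH)
        w.setframerate(fs)
        w.writeframes(struct.pack("<%dh" % len(samples), *samples))
    return buf.getvalue()

def read_pcm_wav(data):
    """Return (sampling rate, list of int samples) from mono 16-bit WAV bytes."""
    with wave.open(io.BytesIO(data), "rb") as r:
        assert r.getnchannels() == 1 and r.getsampwidth() == 2
        fs = r.getframerate()
        n = r.getnframes()
        raw = r.readframes(n)
    return fs, list(struct.unpack("<%dh" % n, raw))

# Byte rate of 8 kHz, 16-bit, mono PCM: 8000 * 2 * 1 = 16000 bytes/s.
BYTE_RATE = FS * SAMPLE_WIDTH * CHANNELS

# Assumed test signal: 0.1 s of a 1 kHz tone, inside the 300-3400 Hz band and below FS/2.
tone = [int(10000 * math.sin(2 * math.pi * 1000 * n / FS)) for n in range(FS // 10)]
fs, x = read_pcm_wav(write_pcm_wav(tone))
```

The round trip returns the same sampling rate and samples, which is a quick check that a recording really has the 8 kHz, 16-bit, mono attributes before it enters the rest of the pipeline.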

- (2)

Pre-Emphasis of Speech Signal. Because of lip radiation, the speech spectrum falls off at about 6 dB per octave at high frequencies. Therefore, before further processing, the high-frequency part of the signal is usually boosted (emphasized) by about 6 dB per octave. The purpose is to lift the high-frequency part of the spectrum, improving high-frequency resolution and suppressing low-frequency interference, thereby compensating for the effect of lip radiation.
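Pre-emphasis is commonly implemented as a first-order high-pass FIR filter, H(z) = 1 − μz⁻¹. The sketch below assumes μ = 0.9375 (values of roughly 0.9 to 0.97 are common); the text does not state the coefficient used in this system. It also evaluates the filter's magnitude response to show that low frequencies are attenuated relative to high ones.

```python
import cmath
import math

def pre_emphasis(x, mu=0.9375):
    """y[n] = x[n] - mu * x[n-1], i.e. H(z) = 1 - mu z^-1.

    mu = 0.9375 is an assumed value; the first sample is passed through unchanged.
    """
    if not x:
        return []
    return [x[0]] + [x[n] - mu * x[n - 1] for n in range(1, len(x))]

def gain_db(f_hz, fs_hz=8000, mu=0.9375):
    """Magnitude response of H(z) = 1 - mu z^-1 at frequency f_hz, in dB."""
    w = 2 * math.pi * f_hz / fs_hz
    return 20 * math.log10(abs(1 - mu * cmath.exp(-1j * w)))
```

With μ = 0.9375, a constant (DC) input is scaled by 1 − μ = 0.0625 (about −24 dB), while a signal alternating at fs/2 is scaled by 1 + μ = 1.9375 (about +5.7 dB), so the filter tilts the spectrum toward high frequencies.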

- (3)

Windowing and Framing of Speech Signal. After pre-emphasis, the speech signal is divided into frames and windowed. Because speech is short-time stationary, it can be analyzed in short segments called analysis frames. This process is called framing; each segment is called a frame, and its duration is the frame length. To keep adjacent frames continuous and the transition between them smooth, overlapping segmentation is usually used. The overlapping part of two adjacent frames is generally 0–1/2 of the frame length, and the offset between the starting points of adjacent frames (the frame length minus the overlap) is called the frame shift. In general, there are about 33–100 frames per second, i.e., the frame length is 10–30 ms [15].

Here, N is the window length. Because the short-time analysis parameters of a speech signal are strongly affected by the window function ω(n), choosing ω(n) carefully is important for obtaining short-time parameters that reflect the characteristics of the speech signal.
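The text does not reproduce the window formula, so the sketch below assumes the Hamming window, a common choice in speech analysis: ω(n) = 0.54 − 0.46 cos(2πn/(N − 1)) for 0 ≤ n ≤ N − 1. The frame length of 200 samples and frame shift of 100 samples (25 ms and 12.5 ms at 8 kHz, i.e. 80 frames per second with a half-frame overlap) are assumed values chosen to fall inside the ranges given above.

```python
import math

def hamming(N):
    """Hamming window w(n) = 0.54 - 0.46 cos(2*pi*n/(N-1)), 0 <= n <= N-1 (assumed window)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (N - 1)) for n in range(N)]

def frame_signal(x, frame_len=200, frame_shift=100):
    """Split x into overlapping frames and apply a Hamming window to each.

    frame_len=200 and frame_shift=100 (25 ms and 12.5 ms at 8 kHz) are assumed
    values; adjacent frames overlap by frame_len - frame_shift samples.
    Trailing samples that do not fill a whole frame are dropped.
    """
    w = hamming(frame_len)
    frames = []
    for start in range(0, len(x) - frame_len + 1, frame_shift):
        frames.append([x[start + n] * w[n] for n in range(frame_len)])
    return frames
```

For 800 samples (0.1 s at 8 kHz), this yields 7 frames of 200 windowed samples each; the tapered ends of the Hamming window reduce spectral leakage compared with a rectangular window.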

3.2. Endpoint Detection of Voice Signal

In complex application environments, distinguishing speech from non-speech components in the input signal is an important problem in speech processing. Speech endpoint detection determines the beginning and end of the speech portion of an input signal. Accurate endpoint detection is important for a speech recognition system: it separates the real speech data from the collected data, reducing useless data and computation and making processing more accurate and efficient. Endpoint detection is generally based on two parameters of the speech signal: short-time average energy and short-time zero-crossing rate.

3.2.1. Based on Short-Time Average Energy

When the input signal-to-noise ratio is very high, short-time energy alone is enough to distinguish speech from noise. In complex practical environments, however, a high signal-to-noise ratio is difficult to achieve, and the short-time average zero-crossing rate must also be used.
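The short-time energy of frame n is commonly defined as E_n = Σ x²(m) over the samples of that frame. The sketch below computes it with a rectangular window; the 200-sample frame and 100-sample shift are assumed values, not taken from the paper.

```python
def split_frames(x, frame_len=200, frame_shift=100):
    """Rectangular frames of frame_len samples, starting every frame_shift samples."""
    return [x[s:s + frame_len] for s in range(0, len(x) - frame_len + 1, frame_shift)]

def short_time_energy(x, frame_len=200, frame_shift=100):
    """E_n = sum of x(m)^2 over the samples of frame n (frame sizes are assumptions)."""
    return [sum(v * v for v in frame) for frame in split_frames(x, frame_len, frame_shift)]
```

For 400 zero samples followed by 400 samples of amplitude 1, the energies are 0, 0, 0, 100, 200, 200, 200: frames over silence have zero energy, so at high SNR a single threshold separates them from speech, as the paragraph notes.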

3.2.2. Based on Short-Time Average Zero-Crossing Rate

In practice, short-time average energy and short-time average zero-crossing rate are often combined to determine the endpoints; this is called the double-threshold comparison method.
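The short-time zero-crossing rate counts sign changes within a frame, Z = ½ Σ |sgn x(m) − sgn x(m − 1)|. The sketch below treats zero as positive and uses assumed 200-sample frames with a 100-sample shift. Practical detectors often also require a crossing to exceed a small amplitude margin so that low-level noise is not counted; this minimal version omits that.

```python
def sgn(v):
    """Sign function with sgn(0) = +1, a common convention for zero-crossing counts."""
    return 1 if v >= 0 else -1

def zero_crossing_rate(frame):
    """Z = 1/2 * sum_m |sgn x(m) - sgn x(m-1)|, i.e. the number of sign changes."""
    total = sum(abs(sgn(frame[m]) - sgn(frame[m - 1])) for m in range(1, len(frame)))
    return total // 2

def zcr_per_frame(x, frame_len=200, frame_shift=100):
    """Zero-crossing rate of each rectangular frame (frame sizes are assumptions)."""
    return [zero_crossing_rate(x[s:s + frame_len])
            for s in range(0, len(x) - frame_len + 1, frame_shift)]
```

Unvoiced consonants such as fricatives have low energy but many zero crossings, which is why this parameter helps where energy alone fails.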

3.2.3. Double-Threshold Comparison Method

The main idea of the double-threshold comparison method is to set a high threshold and a low threshold for each of the two parameters, short-time average energy and short-time average zero-crossing rate, and to determine the beginning and end of the speech segment by comparing each parameter with its thresholds.

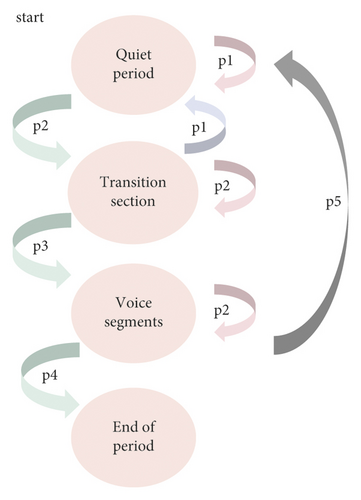

For endpoint detection, the signal is divided into four segments: silence, transition, valid speech, and end. The double-threshold algorithm works by deciding the transitions among these four states.

- (i)

At the start of detection, the signal is assumed to be in the silence segment. If either short-time parameter exceeds its low threshold, the detector enters the transition segment.

- (ii)

In the transition segment, if the short-time average energy exceeds its high threshold, the detector enters the valid speech segment.

- (iii)

When both parameters fall below their respective low thresholds, the detector enters the end segment, that is, the speech has ended. Two situations commonly cause endpoint detection errors: short bursts of noise, and pauses between words within a valid speech segment. To prevent these misjudgments, two further thresholds can be set: a minimum speech segment length and a maximum silence segment length. The state transitions of the double-threshold method are shown in Figure 3.
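The state transitions in steps (i) to (iii) can be sketched as a small state machine over per-frame values of the two parameters. This is a minimal illustration under assumptions: the threshold values, the minimum speech length (5 frames), the maximum silence length (8 frames), and the fallback from the transition state to silence when both parameters drop below their low thresholds are all hypothetical choices, not values from the paper.

```python
SILENCE, TRANSITION, SPEECH = 0, 1, 2

def detect_endpoints(energy, zcr, e_low, e_high, z_low,
                     min_speech_len=5, max_silence_len=8):
    """Return (first_frame, last_frame) of the first utterance, or None.

    energy, zcr: per-frame short-time energy and zero-crossing rate.
    e_low, e_high: low and high energy thresholds; z_low: low ZCR threshold.
    min_speech_len, max_silence_len: lengths in frames (assumed example values).
    """
    state, start, silence = SILENCE, 0, 0
    for i, (e, z) in enumerate(zip(energy, zcr)):
        above_low = e > e_low or z > z_low
        if state == SILENCE:
            if above_low:                 # (i) either parameter exceeds its low threshold
                state, start = TRANSITION, i
        elif state == TRANSITION:
            if e > e_high:                # (ii) energy exceeds its high threshold
                state, silence = SPEECH, 0
            elif not above_low:           # assumption: fall back to silence
                state = SILENCE
        elif state == SPEECH:
            if above_low:
                silence = 0
            else:                         # (iii) both parameters below their low thresholds
                silence += 1
                if silence > max_silence_len:   # pause too long to be a gap between words
                    end = i - silence             # last frame before the pause
                    if end - start + 1 >= min_speech_len:
                        return start, end
                    state, silence = SILENCE, 0   # too short: treat as a noise burst
    if state == SPEECH:                   # input ended during speech
        end = len(energy) - 1 - silence
        if end - start + 1 >= min_speech_len:
            return start, end
    return None
```

The maximum silence length lets short pauses inside a word or phrase pass without ending the utterance, and the minimum speech length discards short noise bursts that briefly cross the energy thresholds.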

3.3. Hardware Structure and Principle of the System

3.3.1. Overview of the Overall Structure of the System

The speech signal acquisition and processing system consists of two parts, a signal acquisition, digitization, and processing subsystem and a PC, as shown in Figure 4.

Signal acquisition, digitization, and processing subsystem: to achieve high-precision sampling, transmission, and real-time processing of speech signals, we adopt an architecture that combines a high-performance digital signal processor with a high-speed bus. The DSP performs the computationally heavy real-time algorithms (FFT, correlation analysis, power spectrum analysis, etc.), and the high-speed bus quickly transfers the processing results or the sampled data stream. Traditional interfaces between peripherals and the host are generally based on the PCI bus, ISA bus, or RS232C serial bus. The PCI bus has a high transfer rate (132 MB/s) and supports plug and play, but installing and removing cards is inconvenient and expansion slots are limited (generally 5–6). The ISA bus has the same problems. The RS232C serial bus is simple to connect, but it is slow (56 kbps) and hosts have few serial ports. We therefore use a DSP to design a real-time data acquisition and processing subsystem based on the USB 2.0 bus [17], whose structure is shown in Figure 5. USB avoids interrupt request (IRQ), DMA, memory, and I/O address conflicts, is highly expandable, and is easy to install. USB 2.0 supports transfer rates of up to 480 Mbps and has become the mainstream computer interface.
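A quick way to see why the bus choice matters is to compare the raw data rate of a sample stream with the nominal bus rates quoted above. The sketch below is a first-order feasibility check only: it ignores protocol overhead (so real usable throughput is lower), and ISA is omitted because the text gives no rate for it.

```python
# Nominal peak rates quoted in the text, in bits per second.
BUS_RATES_BPS = {
    "RS232C": 56_000,
    "PCI": 132_000_000 * 8,        # 132 MB/s
    "USB 2.0": 480_000_000,
}

def stream_rate_bps(fs_hz, bits_per_sample, channels=1):
    """Raw data rate of an uncompressed PCM stream: fs * bits * channels."""
    return fs_hz * bits_per_sample * channels

def buses_that_fit(rate_bps):
    """Buses whose nominal rate exceeds rate_bps (protocol overhead ignored)."""
    return sorted(name for name, r in BUS_RATES_BPS.items() if r > rate_bps)

speech = stream_rate_bps(8000, 16)   # 8 kHz, 16-bit, mono speech
```

Even a single raw 8 kHz, 16-bit speech channel needs 128 kbps, more than twice the 56 kbps quoted for RS232C, whereas PCI and USB 2.0 both have ample headroom.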

Under the control of the PC, the signal acquisition, digitization, and processing subsystem sends its final results to the PC for storage and display. The PC application can easily provide a rich graphical interface and a convenient human-machine interface for research.

3.3.2. Signal Analog-to-Digital Conversion Channel

For the signal input channel, we use two AD9042 analog-to-digital converters. The AD9042 offers high speed, high performance, and low power consumption; it operates from a single +5 V supply and provides 12-bit output at sampling rates up to 41 MSPS. For multichannel applications in particular, it achieves a spurious-free dynamic range of 80 dB over a 20 MHz bandwidth, with a typical signal-to-noise ratio of 68 dB. The 4K dual-port RAM connected to the converters is divided into two blocks. When 2K samples have been collected, an EXT_INT7 interrupt is generated and the DSP reads that block; because sampling continues into the other block, the DSP's reading does not interrupt A/D sampling.
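The two-block scheme is a ping-pong (double) buffer. The Python class below is a hypothetical behavioral model, not DSP firmware: the A/D side writes samples continuously, and each time a half fills, a callback standing in for the EXT_INT7 interrupt receives that half while writing moves on to the other half. The 4096-sample size with 2048-sample halves mirrors the text; the class and method names are illustrative.

```python
class PingPongBuffer:
    """Behavioral model of a dual-port RAM split into two halves (hypothetical).

    write() stands in for the A/D converter; on_half_full stands in for the
    EXT_INT7 handler and receives a copy of the half that has just filled.
    """
    def __init__(self, size=4096, on_half_full=None):
        self.mem = [0] * size
        self.half = size // 2
        self.pos = 0
        self.on_half_full = on_half_full

    def write(self, sample):
        self.mem[self.pos] = sample
        self.pos = (self.pos + 1) % len(self.mem)
        if self.pos % self.half == 0 and self.on_half_full:
            # pos == half: the first half just filled; pos == 0: the second half did.
            filled = 1 if self.pos == 0 else 0
            start = filled * self.half
            self.on_half_full(self.mem[start:start + self.half])
```

For example, with `blocks = []` and `buf = PingPongBuffer(size=8, on_half_full=blocks.append)`, writing samples 0 to 9 delivers `[0, 1, 2, 3]` and `[4, 5, 6, 7]`, while samples 8 and 9 wait in the first half. In hardware, the design only works if the DSP finishes reading a half before the converter wraps around to it again.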

3.3.3. DSP Processor

The digitized signal is sent to the DSP for processing. Operating speed, bus width, cost-performance, and power consumption must be considered when selecting a DSP chip. This design uses the TMS320C6201, a high-performance fixed-point DSP. At 200 MHz, each instruction cycle is 5 ns and the processing speed reaches 1600 MIPS. The chip has one 256-bit program bus, two 32-bit data buses, and one dedicated 32-bit DMA bus [18]. It uses an advanced VLIW architecture that can execute eight 32-bit instructions in parallel in each instruction cycle, with instruction fetch, dispatch, execution, and data storage handled by a multistage pipeline. The VLIW architecture is also RISC-like and well suited to compiled code. The external memory interface (EMIF) connects seamlessly to various external memories, including synchronous DRAM (SDRAM) and synchronous burst static RAM (SBSRAM), as well as asynchronous memories such as SRAM and EPROM. The C6201 also contains a DMA controller with four independently programmable channels plus an auxiliary channel, and a 16-bit host port interface (HPI). The DMA controller operates independently of the CPU and can transfer data at the CPU clock rate.

3.3.4. USB2.0 Communication Interface

- (1)

Among other features of its architecture, the chip includes an intelligent Serial Interface Engine (SIE) that performs all basic USB functions, freeing the built-in microcontroller and enabling continuous, efficient, high-speed data transfer.

- (2)

The chip contains 4 kB of FIFO memory for data buffering. As a slave device, it can use its FIFO interface to connect directly to the DSP.

- (3)

The USB interface and the application side share the FIFO directly, so the microcontroller need not take part in data transfer; this allows the chip to sustain USB high-speed bandwidth.

- (4)

The program of the built-in 8051 microcontroller can run from internal RAM or external memory. When it runs from RAM, the firmware can be loaded from the PC over the USB port or from an EEPROM, which makes software updates easy.

- (5)

The FX2 provides a fully integrated solution that occupies less board space and shortens development time. Among the FX2 package options, we choose the 56-pin SSOP, which requires little circuit board space.

The CY7C68013 can interface with external devices in two modes. In Slave FIFO mode, the CY7C68013 is a slave controlled by the external device, which reads and writes the chip's internal FIFOs like an ordinary FIFO; the FIFOs can be set to synchronous or asynchronous operation, with the clock generated internally or supplied externally. In the other mode, the CY7C68013 acts as the master, and its General Programmable Interface (GPIF) generates read and write control waveforms defined in software, so it can read and write external interface controllers, memory, and buses; this mode suits designs without external control logic. This scheme uses Slave FIFO mode with asynchronous reads and writes: the C6201 reads and writes the multiple buffered FIFOs inside the CY7C68013, selects the target FIFO through I/O pins, submits data packets, and obtains the "full," "half full," and "empty" status of the FIFO from FLAGA, FLAGB, and FLAGC [19].
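The flag-driven handshake can be illustrated with a small behavioral model. Everything below is hypothetical: the class, the function names, and the FIFO capacity are illustrative, and the flag mapping (FLAGA = full, FLAGB = half full, FLAGC = empty) simply follows the text; on the real chip the flag assignments are configured in firmware. The DSP side writes words only while the full flag is deasserted, and the host side drains the FIFO over USB.

```python
from collections import deque

class SlaveFifoModel:
    """Hypothetical model of one CY7C68013 endpoint FIFO as seen by the DSP."""
    def __init__(self, capacity):
        self.capacity = capacity
        self.data = deque()

    def flags(self):
        """Status flags, named after the mapping given in the text."""
        n = len(self.data)
        return {"FLAGA_full": n >= self.capacity,
                "FLAGB_half_full": n >= self.capacity // 2,
                "FLAGC_empty": n == 0}

def dsp_write_packet(fifo, packet):
    """DSP side: write words while FULL is deasserted; return how many were accepted.

    Real firmware would wait for the host to drain the FIFO and retry the rest.
    """
    written = 0
    for word in packet:
        if fifo.flags()["FLAGA_full"]:
            break
        fifo.data.append(word)
        written += 1
    return written

def host_read(fifo, count):
    """Host side (USB): drain up to count words from the FIFO."""
    return [fifo.data.popleft() for _ in range(min(count, len(fifo.data)))]
```

Polling the full flag before each write is what keeps the DSP from overrunning the FIFO when the host falls behind; the empty flag plays the same role in the opposite direction.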

4. Experimental Results and Discussion

4.1. Traditional Algorithm

- (1)

Construct two n × m matrices, d and D. Fill d with the Euclidean distances d(i, j) between frame i of the test pattern and frame j of the reference template within the parallelogram (global path constraint) region, and initialize D to ∞ everywhere except D(1, 1) = d(1, 1).

- (2)

Use equation (8) to compute D(i, j) repeatedly until D(n, m) is obtained.

- (3)

D (n, m) is the cumulative distance sum of the optimal path.

- (4)

Starting from the end point (n, m) and tracing back to the starting point (1, 1), the best path can be obtained [20].

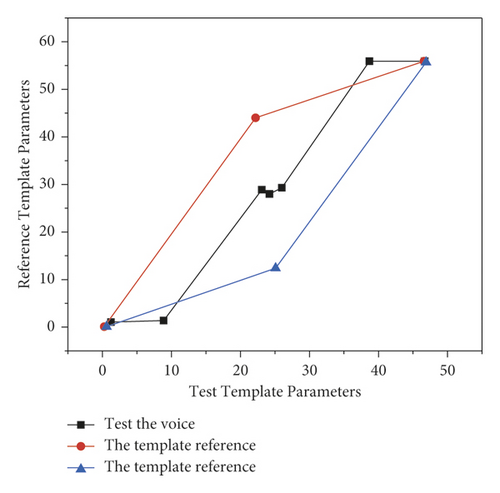

Figure 6 shows the best matching path of test voice “1” and reference template “1” in traditional DTW.
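The four steps above can be sketched in Python together with the nearest-template decision used in recognition. Because equation (8) is not reproduced in this text, the sketch assumes the common local recurrence D(i, j) = d(i, j) + min{D(i − 1, j), D(i − 1, j − 1), D(i, j − 1)} and omits the parallelogram constraint; indices are 0-based in code, so D(1, 1) in the text is `D[0][0]`. The function names are illustrative.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two feature vectors (e.g. MFCC frames)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dtw(test, ref):
    """Return (accumulated distance D(n, m), best path) between two sequences.

    Assumed recurrence (not necessarily the paper's equation (8)):
        D(i, j) = d(i, j) + min(D(i-1, j), D(i-1, j-1), D(i, j-1))
    No parallelogram (global slope) constraint is applied in this sketch.
    """
    n, m = len(test), len(ref)
    INF = float("inf")
    d = [[euclidean(test[i], ref[j]) for j in range(m)] for i in range(n)]
    D = [[INF] * m for _ in range(n)]
    D[0][0] = d[0][0]                      # D(1, 1) = d(1, 1) in 1-based notation
    for i in range(n):
        for j in range(m):
            if i == 0 and j == 0:
                continue
            best = min(D[i - 1][j] if i > 0 else INF,
                       D[i - 1][j - 1] if i > 0 and j > 0 else INF,
                       D[i][j - 1] if j > 0 else INF)
            D[i][j] = d[i][j] + best
    # Trace back from (n, m) to (1, 1), always stepping to the cheapest predecessor.
    i, j, path = n - 1, m - 1, [(n - 1, m - 1)]
    while (i, j) != (0, 0):
        steps = [(D[a][b], a, b) for a, b in ((i - 1, j - 1), (i - 1, j), (i, j - 1))
                 if a >= 0 and b >= 0]
        _, i, j = min(steps)
        path.append((i, j))
    return D[n - 1][m - 1], path[::-1]

def recognize(test, templates):
    """Nearest-template decision: the label whose reference has the smallest DTW distance."""
    return min(templates, key=lambda label: dtw(test, templates[label])[0])
```

With one-dimensional features, aligning `[[0], [1], [2], [3]]` against `[[0], [1], [1], [2], [3]]` gives distance 0 along the path (0, 0), (1, 1), (1, 2), (2, 3), (3, 4): the repeated reference frame is absorbed by a horizontal step, which is exactly the time-axis stretching that DTW is designed to handle.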

4.2. HMM Model

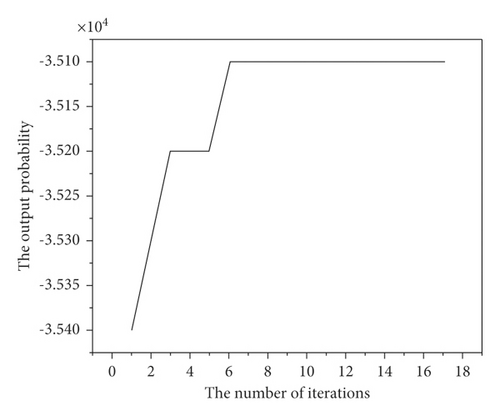

To build an HMM, its parameters must first be initialized. In this study, a non-skipping left-to-right HMM with N = 4 states and M = 4 Gaussian mixture components per state is used in the simulation. Therefore, only the first element of the initial state probability vector π is 1 and the others are 0, i.e., π = [1, 0, 0, 0]. The initial state transition matrix A is set to a uniform distribution. In a discrete HMM, the initial value of parameter B is easy to determine and can be set uniformly or randomly; in a continuous HMM, however, the initial value of B strongly affects the results of the iterative re-estimation [1]. In this study, B is initialized as follows: each observation sequence is divided evenly into as many segments as there are states, the feature vectors belonging to the same segment are pooled into a matrix, the k-means clustering function is applied, and the mean, variance, and weight of each Gaussian probability density function are computed from the clustering results.
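The initialization of π and A, and the even split of an observation sequence into per-state segments, can be sketched as follows. This reflects one reading of the description: "uniform" A is taken to mean equal probability over the transitions a non-skipping left-to-right model allows (stay or advance one state), and the segmentation uses integer division. The k-means step that turns each segment into Gaussian mixture parameters is not shown, and the function names are illustrative.

```python
def left_right_init(n_states=4):
    """Initial pi and A for a non-skipping left-to-right HMM (assumed interpretation).

    Each state may only stay or move to the next state; the allowed transitions
    in each row get equal probability, and the last state can only loop on itself.
    """
    pi = [1.0] + [0.0] * (n_states - 1)
    A = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states - 1):
        A[i][i] = A[i][i + 1] = 0.5
    A[-1][-1] = 1.0
    return pi, A

def uniform_segments(num_frames, n_states=4):
    """Split frame indices 0..num_frames-1 into n_states nearly equal segments,
    one per state, as the starting point for per-state k-means clustering."""
    bounds = [k * num_frames // n_states for k in range(n_states + 1)]
    return [list(range(bounds[k], bounds[k + 1])) for k in range(n_states)]
```

For N = 4 this gives π = [1, 0, 0, 0] as in the text and rows of A such as [0.5, 0.5, 0, 0]; the zero entries stay zero under Baum-Welch re-estimation, which is what keeps the trained model left-to-right and non-skipping.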

Six command words were selected: “start,” “shut down,” “forward,” “backward,” “restart,” and “sleep.” Each word was recorded 11 times to produce 11 samples, of which 10 samples were taken for training and 1 sample was used for recognition [21]. Figure 7 shows the training process.

4.3. Comparison Experiment between DTW and HMM

This section compares and analyzes the two algorithms in order to select a suitable one for the subsequent implementation. The experiment records the template training time, recognition rate, and average recognition time of each algorithm [22].

First, a speech template library was built from recordings made by 3 men and 2 women in the laboratory. The six command words, "start," "shut down," "forward," "backward," "restart," and "sleep," were each recorded 15 times, giving a total of 750 speech samples, of which 500 were used for training and 250 for recognition. The experimental results are shown in Table 1.

| Metric | DTW | HMM |
|---|---|---|
| Training time (s) | 346.1 | 4400 |
| Recognition time (s) | 3.6 | 1.9 |
| Average recognition rate (%) | 96.4 | 98.8 |

As Table 1 shows, for the recognition rate, the most important index of a speech recognition system, HMM performs better than DTW (98.8% vs. 96.4%), but the difference is small. In template training time, however, HMM takes far longer than DTW: 4400 s versus 346.1 s, more than ten times as long, although its recognition time is shorter (1.9 s versus 3.6 s). Therefore, for small-vocabulary isolated-word recognition, DTW achieves almost the same recognition rate as HMM while requiring much less template training time, so we choose DTW as the core algorithm of this system.

5. Conclusion

This study examines the basic concepts of speech recognition, its processing stages, and the functions of a recognition system. The work covers the following: it examines the basic techniques used in speech recognition, such as pre-emphasis, windowing and framing, endpoint detection, and feature extraction; it focuses on two recognition algorithms, dynamic time warping (DTW) and the hidden Markov model (HMM), and proposes measures to improve the DTW algorithm; and it uses MATLAB 7.0 to simulate the algorithms in each stage of the speech recognition process, verify their correctness, and design experiments comparing the advantages and disadvantages of the DTW and HMM algorithms.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This study was supported by Social Sciences Youth Fund of Hunan Province (Fund Code: 17YBQ025): Research on the construction of aggregated mobile audio platform in the scene age.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.