Key Information Extraction Algorithm of Different Types of Digital Archives for Cultural Operation and Management

Abstract

To improve key information extraction from digital archives, a key information extraction algorithm for different types of digital archives is designed. The digital archive information is preprocessed, and parts of speech and marks are taken as key information features. A self-organizing feature mapping network is used to extract the key information features of digital archives, and semantic similarity is calculated from the feature extraction results. Words are then grouped by mutual information: the word with the highest mutual information value is taken as the center of each set, all keywords are traversed, and the central words are taken as the key information of the digital archives, which completes the extraction. Experiments show that the recall rate of the algorithm ranges from 96% to 99%, the extraction accuracy of key information is between 96% and 98%, and the average extraction time is 0.63 s, which indicates good practical performance.

1. Introduction

Generally speaking, management problems do not arise in the spontaneous stage of cultural production. Cultural production and business activities are products of the commodity economy [1, 2]. When material and cultural production develop to a certain extent, the social division of labor becomes clearer, and professionals and professional groups engaged in cultural production appear. Both the ruling class and the ruled class then try to use cultural production to serve the interests of their own class, which marks the conscious stage of cultural production [3]. Only at this conscious stage can cultural operation and management be put on the agenda. In modern capitalist society, everything becomes a commodity, and cultural products are no exception: they have become special goods that capitalists produce for profit. The vast majority of cultural activities are governed by the law of value of commodity production [4]. The commercialization of cultural production and cultural activities has become a common social phenomenon in capitalist culture. The laws of the market economy dominate cultural operation and management activities, and the quality of management is the key to the success or failure of specific cultural products in the free competition of the cultural market. With the rapid development of the social economy, the number of digital archives of different types, most of which are products of social culture, is gradually increasing. To manage these different types of digital archives better, a new key information extraction algorithm for different types of digital archives is needed to raise the management level of digital archives. Therefore, it is of great significance to study a key information extraction algorithm for different types of digital archives.

Information extraction is an important research topic for digital archives. Reference [5] proposed a fast extraction algorithm for massive data in digital book archives. Based on the range characteristics of large-scale data attributes, the distribution samples of digital book archive data are divided into multiple subintervals to achieve data classification. By constructing a neuron model, the output error terms are determined from the outputs of the hidden layer and the output layer, and the weights of each layer of the BP neural network are adjusted. This method builds a fast extraction model based on the BP neural network and realizes fast extraction of massive archive data. Reference [6] proposed a key information extraction algorithm based on TextRank and cluster filtering. First, key information is extracted and vectorized with Word2Vec. Then, TextRank is improved by constructing a graph model that integrates word eigenvalues and edge weights, and the stable graphs obtained by iterative convergence are merged and clustered to form clusters. Next, a cluster quality evaluation formula is designed for cluster filtering, and TextRank is applied to form the final clustering. Finally, the information type of each cluster is annotated. For a test text, the key information is obtained by comparing the distance between each key information vector and the cluster center vectors, determining the information type of the words, and combining the information type with the key information. Reference [7] proposed a key information extraction algorithm based on an improved hidden Markov model. The web document is converted into a DOM tree and preprocessed, the information items to be extracted are mapped to states, and the observation items are mapped to vocabulary. The improved hidden Markov model is then used to extract the key information of the text. Reference [8] proposed a key information extraction algorithm based on word vectors and location information. A word vector representation model learns the vector of each word in the target document. The latent semantic relations between words reflected by the word vectors are fused with location features into the PageRank scoring model, and several top-ranked words or phrases are chosen as the key information of the target document, which completes the key information extraction of digital archives. Reference [9] proposed a key information extraction algorithm for unstructured text in a knowledge database. A six-tuple is used to optimize the hidden Markov model, and probability modeling and smoothing are applied to incomplete training samples. Initialization and termination operations are carried out on the observation sequences emitted at different times to obtain the optimal state sequence. After the observation sequence is decoded, the forward and reverse sequences are compared to filter out the states without decoding ambiguity, which completes ambiguity elimination. According to the maximum-probability state sequence, the key text information to be extracted is defined and extracted.

However, when the above key information extraction algorithms are applied to different types of digital archives, the results are not ideal because the boundary of key information extraction is uncertain. Therefore, this paper designs a new key information extraction algorithm for different types of digital archives. First, the algorithm divides the main categories of key information and takes parts of speech and marks as features. It then introduces the self-organizing feature mapping neural network and traverses the centers of the word sets, so that key information is extracted quickly and accurately. The effectiveness of the algorithm is verified by experiments.

2. Materials and Methods

2.1. Digital Archive Processing

2.1.1. Text Word Segmentation

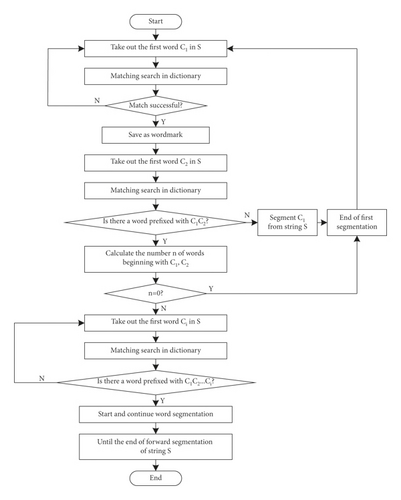

In the process of cultural management, there are many types of digital archives. Before the key information of different types of digital archives is extracted, the digital archive text must be preprocessed. Preprocessing includes word segmentation and marking. Word segmentation refers to splitting the text into words and setting marks according to their categories, which lays the foundation for the subsequent key information extraction of digital archives [10].

Let S denote the text string to be segmented and Ci its i-th character. Forward segmentation proceeds as follows:

- (1) Take the first character C1 of S and search the dictionary for words with C1 as the prefix. If any exist, save them as word marks [11].

- (2) Take the next character C2 from S and check whether the dictionary contains a word with C1C2 as the prefix.

- (3) If not, split C1 from string S, and one word split ends.

- (4) If so, determine whether C1C2 is itself a word, and count the number n of dictionary words that begin with C1C2.

- (5) If n = 0, this word split ends [12].

- (6) If n is not 0, take the next character Ci from S and check whether the dictionary contains a word with C1C2…Ci as the prefix.

- (7) If so, go to Step (6).

- (8) If not, split C1C2…Ci−1 from string S, and one word split ends.

- (9) Continue word segmentation from character Ci of S and repeat the above steps until the end of string S is reached, which completes forward segmentation.

- (10)

Take out the last word Cn in S and match it in the dictionary to find whether there is a word with suffix C1. If so, save it as a word mark [13].

- (11)

Then take out a word Cn−1 from S and match it with the dictionary to judge whether there is a word with suffix C1C2.

- (12)

If it does not exist, it splits Cn from string S, ending with a word split.

- (13)

If there is, then judge whether Cn−1Cn is a word and count the number of words starting with Cn−1Cn, expressed by n.

- (14)

If n = 0, then the participle ends.

- (15)

If n is not 0, take out a word Ci from S and match it with the dictionary to determine whether there is a word with Ci, …, Cn−1Cn as the suffix.

- (16)

If yes, go to Step (15).

- (17)

If it does not exist, Ci, …, Cn−1Cn will be cut out from string S and a word segmentation will end.

- (18)

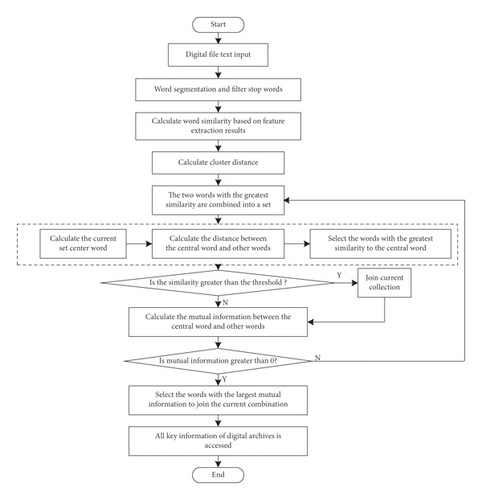

Continue word segmentation from word Ci of string S, and repeat the above steps until the end of reverse segmentation of string S, so as to remove the stop word. The specific implementation process is shown in Figure 1.
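The forward and reverse passes described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the implementation used in this paper: the function names, the toy dictionary, and the rule of falling back to the longest complete dictionary word (or a single character when nothing matches) are all hypothetical choices made for the example.

```python
def forward_segment(s, dictionary):
    """Forward pass: extend the current piece while some dictionary word has it as a prefix."""
    prefixes = {w[:k] for w in dictionary for k in range(1, len(w) + 1)}
    tokens, i = [], 0
    while i < len(s):
        j = i + 1
        end = i + 1  # assumption: fall back to a single character
        while j <= len(s) and s[i:j] in prefixes:
            if s[i:j] in dictionary:
                end = j  # remember the longest complete word seen so far
            j += 1
        tokens.append(s[i:end])
        i = end
    return tokens


def backward_segment(s, dictionary):
    """Reverse pass: extend leftwards while some dictionary word has the piece as a suffix."""
    suffixes = {w[k:] for w in dictionary for k in range(len(w))}
    tokens, j = [], len(s)
    while j > 0:
        i = j - 1
        start = j - 1  # assumption: fall back to a single character
        while i >= 0 and s[i:j] in suffixes:
            if s[i:j] in dictionary:
                start = i
            i -= 1
        tokens.append(s[start:j])
        j = start
    tokens.reverse()
    return tokens


def remove_stop_words(tokens, stop_words):
    """Drop tokens that appear in the stop-word list."""
    return [t for t in tokens if t not in stop_words]


# Toy dictionary (hypothetical); each letter stands in for one character of S.
TOY_DICT = {"ab", "abc", "cd", "d"}
print(forward_segment("abcd", TOY_DICT))
print(backward_segment("abcd", TOY_DICT))
```

With this toy dictionary the two passes disagree (`abc` + `d` versus `ab` + `cd`), which illustrates why both directions are scanned before the word marks are fixed.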

2.1.2. Part-of-Speech Tagging

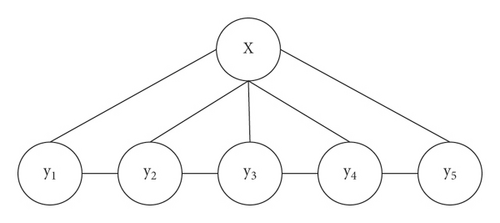

Part of speech is a grammatical attribute of a word and generally indicates the category of the word in the corpus. Part-of-speech (POS) tagging is the process of labeling each word with its part of speech. Some words have multiple parts of speech, and the same word can be used in entirely different ways under different parts of speech [14, 15]. In general, however, when a word has more than one part of speech, its most common part of speech occurs far more frequently than the others, so the overall accuracy of POS tagging can be ensured and the method can be applied to most scenarios [16]. The conditional random field (CRF) was proposed by Lafferty et al. in 2001. It is an undirected graphical model that combines the characteristics of the maximum entropy model and the hidden Markov model, and in recent years it has achieved good results in sequence tagging tasks such as word segmentation, part-of-speech tagging, and named entity recognition [17]. The simplest conditional random field has a chain structure, in which the chain is composed of the marks of successive characters. In a first-order chain CRF, each fully connected subgraph (clique) covers the current mark and the mark before it, together with any subset of the observation sequence. The chain conditional random field is shown in Figure 2, in which each such set of vertices can be regarded as a maximal clique.
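To make the chain structure concrete, the sketch below decodes the best tag sequence for a first-order chain model in which each score depends only on the current mark and the mark before it. It assumes Viterbi decoding, which is the usual choice for chain CRFs but is not specified in this paper. The function name, the tag set, and all scores are hypothetical, and in a trained CRF the scores would come from learned feature weights.

```python
def viterbi(emission, transition, tags):
    """Best tag path for a first-order chain.

    emission: list of {tag: score}, one dict per position (hypothetical scores).
    transition: {(previous_tag, current_tag): score}; missing pairs score 0.
    """
    best = [{t: emission[0].get(t, 0.0) for t in tags}]
    back = []
    for scores in emission[1:]:
        current, pointer = {}, {}
        for t in tags:
            # Only the previous mark and the current mark interact (first-order chain).
            prev = max(tags, key=lambda p: best[-1][p] + transition.get((p, t), 0.0))
            current[t] = best[-1][prev] + transition.get((prev, t), 0.0) + scores.get(t, 0.0)
            pointer[t] = prev
        best.append(current)
        back.append(pointer)
    # Trace back from the best final tag.
    path = [max(tags, key=lambda t: best[-1][t])]
    for pointer in reversed(back):
        path.append(pointer[path[-1]])
    return path[::-1]


TAGS = ["N", "V"]  # hypothetical tag set: noun, verb
EMISSION = [{"N": 2.0, "V": 0.0}, {"N": 1.0, "V": 1.2}, {"N": 1.5, "V": 0.2}]
TRANSITION = {("N", "V"): 1.0, ("V", "N"): 1.0, ("N", "N"): -0.5, ("V", "V"): -1.0}
print(viterbi(EMISSION, TRANSITION, TAGS))
```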

2.2. Key Information Feature Extraction of Digital Archives

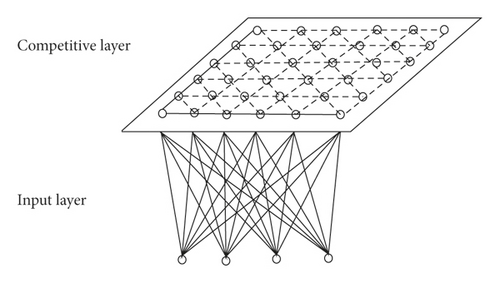

The self-organizing feature mapping neural network was proposed in 1981 by the Finnish neural network expert Teuvo Kohonen [21]. This network simulates the self-organizing feature mapping function of the brain's nervous system. It is a competitive learning network that learns in a self-organizing way without supervision [22]. This paper uses it to extract the key information features of different types of digital archives, with the aim of improving the accuracy and efficiency of key information extraction.

The structure of self-organizing feature mapping neural network is shown in Figure 3.

Let the number of neurons in the input layer be N and the number of neurons in the competition layer be M = m^2. The neurons of the competition layer form a two-dimensional planar array. The input layer and the competition layer are connected, and sometimes the neurons within the competition layer are also connected to one another by lateral inhibition [23]. There are two kinds of connection weights in the network: the weights with which neurons respond to external inputs, and the weights between neurons, whose size controls the strength of the interactions between neurons [24, 25].

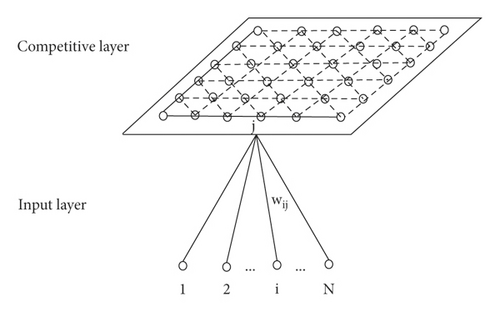

The connections between each input neuron and the neurons of the competition layer in the network structure of Figure 3 are drawn separately in Figure 4.

Let the input pattern of the network be $P_k = (p_1^k, p_2^k, \ldots, p_N^k)$, k = 1, 2, …, q, and the neuron vector of the competition layer be Aj = (aj1, aj2, …, ajm), j = 1, 2, …, M, where Pk takes continuous values and Aj takes numerical values. The connection weight vector between neuron j of the competition layer and the neurons of the input layer is Wj = (wj1, wj2, …, wjN), j = 1, 2, …, M. The learning process is as follows:

- (1) Initialization. Assign random values in the interval [0, 1] to the network connection weights {wji}, i = 1, 2, …, N, j = 1, 2, …, M. Determine the initial value η(0) of the learning rate η(t), where 0 < η(t) < 1. Determine the initial value Ng(0) of the neighborhood Ng(t). The neighborhood Ng(t) is a region centered on the winning neuron g that contains several neurons. This region is generally uniformly symmetrical, most typically a square or a circle. The value of Ng(t) represents the number of neurons in the neighborhood during the t-th learning iteration. Determine the total number of learning iterations T.

- (2) Select one of the q learning patterns, Pk, provide it to the input layer of the network, and normalize it:

$$\bar{P}_k = \frac{P_k}{\lVert P_k \rVert} = \frac{(p_1^k, p_2^k, \ldots, p_N^k)}{\left[(p_1^k)^2 + (p_2^k)^2 + \cdots + (p_N^k)^2\right]^{1/2}} \tag{5}$$

- (3) Normalize the connection weight vector Wj = (wj1, wj2, …, wjN) and calculate the Euclidean distance between $\bar{W}_j$ and $\bar{P}_k$. The normalized weight vector $\bar{W}_j$ is calculated as follows:

$$\bar{W}_j = \frac{W_j}{\lVert W_j \rVert} = \frac{(w_{j1}, w_{j2}, \ldots, w_{jN})}{\left[(w_{j1})^2 + (w_{j2})^2 + \cdots + (w_{jN})^2\right]^{1/2}} \tag{6}$$

The Euclidean distance between $\bar{W}_j$ and $\bar{P}_k$ is calculated as follows:

$$d_j = \left[\sum_{i=1}^{N} \left(\bar{p}_i^k - \bar{w}_{ji}\right)^2\right]^{1/2}, \quad j = 1, 2, \ldots, M \tag{7}$$

- (4) Find the minimum distance dg and determine the winning neuron g:

$$d_g = \min_{j} d_j, \quad j = 1, 2, \ldots, M \tag{8}$$

- (5) Adjust the connection weights between all neurons in the neighborhood Ng(t) of the competition layer and the neurons of the input layer:

$$\bar{w}_{ji}(t+1) = \bar{w}_{ji}(t) + \eta(t)\left[\bar{p}_i^k - \bar{w}_{ji}(t)\right], \quad j \in N_g(t),\ i = 1, 2, \ldots, N \tag{9}$$

In the above formula, η(t) is the learning rate at moment t.

- (6) Select another learning pattern, provide it to the input layer of the network, and return to step (3) until all q learning patterns have been provided to the network.

- (7) Update the learning rate η(t) and the neighborhood Ng(t):

$$\eta(t) = \eta(0)\left(1 - \frac{t}{T}\right) \tag{10}$$

In the above formula, η(0) is the initial learning rate, t is the current learning iteration, and T is the total number of learning iterations.

Assume that the coordinates of the winning neuron g in the two-dimensional array of the competition layer are (xg, yg). The neighborhood is then the square whose upper right and lower left corners are the points (xg + Ng(t), yg + Ng(t)) and (xg − Ng(t), yg − Ng(t)), and it is updated as follows:

$$N_g(t) = \mathrm{INT}\left[N_g(0)\left(1 - \frac{t}{T}\right)\right] \tag{11}$$

In the above formula, INT(⋅) is the function that takes the integer part.

- (8) Let t = t + 1 and return to step (2) until t = T.
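The training loop in steps (1) to (8) can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: the function names, the default values of `eta0` and `radius0`, the fixed random seed, and the final re-normalization of the returned weights are assumptions made for the example. The learning rate and the neighborhood shrink linearly with t, and the neighborhood is the square around the winning neuron.

```python
import math
import random


def normalize(v):
    """Scale a vector to unit Euclidean length (steps (2) and (3))."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def winner(weights, p_bar):
    """Index g of the competition neuron with the minimum Euclidean distance (step (4))."""
    distances = [math.dist(w, p_bar) for w in weights]
    return distances.index(min(distances))


def train_som(patterns, m, total_steps, eta0=0.5, radius0=None, seed=0):
    """Train an m x m competition layer on the given input patterns."""
    rng = random.Random(seed)
    n_inputs = len(patterns[0])
    radius0 = m // 2 if radius0 is None else radius0  # assumed default for Ng(0)
    # Step (1): random initial weights for the M = m * m neurons.
    weights = [[rng.random() for _ in range(n_inputs)] for _ in range(m * m)]
    for t in range(total_steps):
        eta = eta0 * (1 - t / total_steps)              # learning rate decay
        radius = int(radius0 * (1 - t / total_steps))   # neighborhood decay, integer part
        for p in patterns:
            p_bar = normalize(p)
            weights = [normalize(w) for w in weights]
            g = winner(weights, p_bar)
            gx, gy = divmod(g, m)
            for j in range(m * m):
                x, y = divmod(j, m)
                # Step (5): update every neuron inside the square neighborhood of g.
                if abs(x - gx) <= radius and abs(y - gy) <= radius:
                    weights[j] = [w + eta * (pi - w) for w, pi in zip(weights[j], p_bar)]
    return [normalize(w) for w in weights]


som = train_som([[1.0, 0.0], [0.0, 1.0]], m=3, total_steps=20)
print(winner(som, normalize([1.0, 0.0])), winner(som, normalize([0.0, 1.0])))
```

After training, the index returned by `winner` for a new feature vector can serve as its extracted feature, that is, its position on the competition layer.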

2.3. Key Information Extraction Algorithm of Digital Archives

The process of key information extraction algorithm of digital archives is as follows:

- Step 1: Calculate the semantic similarity Sim(wi, wj) between all candidate words wi and wj in the digital archive text.

  - (1) Calculate the TF-IDF value, and select the words W = {W1, W2, …, WN} whose word frequency is greater than the threshold t as the candidate key information. The TF-IDF value is calculated as follows:

$$\mathrm{TFIDF}(w_i) = tf_i \times \log\frac{N}{n_i} \tag{15}$$

  In the above formula, tfi is the number of occurrences of the word wi in the current digital archive text, N is the total number of digital archive texts, and ni is the number of digital archives in the database that contain the word wi.

  - (2) During initialization, each candidate word Wi forms its own cluster Zi, so that there is one cluster per candidate word, and every cluster is marked as unvisited.

  - (3) Among all unvisited word clusters, select the cluster pair (Zl, Zk) with the largest similarity, that is, the closest distance, by finding the maximum value of Sim(wi, wj). If Sim(Zl, Zk) is less than the given threshold, go to (5). Otherwise, merge clusters Zl and Zk into a new cluster Z0 = Zl ∪ Zk, mark Z0 as unvisited, and mark Zl and Zk as visited.

  - (4) Calculate the semantic similarity among all unvisited word clusters, and return to (3).

  - (5) After clustering, select the first k words of better quality from each cluster Zi as the final key information, so as to obtain the candidate word set W = {C1, C2, …, Cm}.

- Step 2: Treat each word in the text as a set Ci, giving a total of N sets (N is the number of words in the text).

- Step 3: Select the two sets Ci and Cj with the greatest similarity from the N sets, and combine them into a new set C.

- Step 4: Select the center point of the current set. Calculate the sum of the mutual information between each word in the current set and the words outside the set, and select the word with the largest mutual information value as the center point of the current set. A large mutual information value between two words indicates that their correlation is relatively strong, and a small value indicates that it is relatively weak. The mutual information between wi and wj, that is, the public information shared by wi and wj, is calculated as follows:

$$I(w_i, w_j) = \log\frac{p(w_i, w_j)}{p(w_i)\,p(w_j)} \tag{16}$$

  In the above formula, p(wi, wj) is the co-occurrence frequency of wi and wj, p(wi) is the frequency of wi alone, and p(wj) is the frequency of wj alone. According to the formula, when I(wi, wj) > 0, the greater the value, the more public information wi and wj share and the stronger their correlation. When I(wi, wj) = 0, wi and wj occur independently of each other, so they share little public information and the correlation is weak. When I(wi, wj) < 0, wi and wj co-occur less often than expected by chance and are treated as uncorrelated.

- Step 5: Among the words outside the set, select the word with the highest similarity to the center point of the set. If the similarity value is greater than the threshold, add it to the current set C. Then calculate the mutual information between the center point of the current set and the words outside the set, and add the word with the largest mutual information value to the current set C.

- Step 6: Return to step 4 to update the center point of the current set until all words have been visited. If the mutual information value between the center point of the set and the other words outside the set is less than 0, perform step 3 for the remaining unvisited words until all words have been visited and divided.

- Step 7: In the final cluster set, select the first K central words as the key information of the text.

The flow of the key information extraction algorithm for different types of digital archives is shown in Figure 5.
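The two quantities that drive the steps above, the TF-IDF value of a candidate word and the mutual information between two words, can be sketched as follows, together with the selection of a set's center point in step 4. The sketch is illustrative only. It assumes that each archive is already a list of segmented words, that frequencies are estimated at the document level, and that word pairs which never co-occur contribute 0 to the mutual information sum. The function names and the toy corpus are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def tf_idf(docs, doc_index):
    """TF-IDF of every word in docs[doc_index]: tf_i * log(N / n_i)."""
    n_docs = len(docs)
    doc_freq = Counter(w for d in docs for w in set(d))  # n_i
    tf = Counter(docs[doc_index])                        # tf_i
    return {w: tf[w] * math.log(n_docs / doc_freq[w]) for w in tf}


def mutual_information(docs):
    """I(wi, wj) = log(p(wi, wj) / (p(wi) p(wj))) for every co-occurring word pair."""
    n_docs = len(docs)
    single = Counter(w for d in docs for w in set(d))
    pairs = Counter(p for d in docs for p in combinations(sorted(set(d)), 2))
    return {
        (wi, wj): math.log((c / n_docs) / ((single[wi] / n_docs) * (single[wj] / n_docs)))
        for (wi, wj), c in pairs.items()
    }


def center_word(cluster, outside, mi):
    """Step 4: the word in the set with the largest mutual information sum to outside words."""
    def score(a, b):
        # Assumption: pairs that never co-occur contribute 0.
        return mi.get(tuple(sorted((a, b))), 0.0)
    return max(sorted(cluster), key=lambda w: sum(score(w, o) for o in outside))


# Toy corpus (hypothetical): four already-segmented archive texts.
DOCS = [["archive", "record", "key"], ["archive", "record"],
        ["key", "index"], ["archive", "index"]]
MI = mutual_information(DOCS)
print(tf_idf(DOCS, 0))
print(center_word({"archive", "record"}, {"key", "index"}, MI))
```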

3. Results and Discussion

3.1. Experimental Scheme

To verify the effectiveness of the designed algorithm in extracting archive information, simulation experiments were conducted. The hardware and software parameters of the simulation environment were configured as shown in Table 1.

| Experimental environment parameters | Configuration | Parameter |
|---|---|---|
| Hardware environment | CPU | Intel(R) Core(TM) i5-9400 |
| | Frequency | 2.90 GHz |
| | RAM | 16.0 GB |
| Software environment | Operating system | Windows 10 |
| | Simulation software language | APDL |
| | Simulation software | Matlab 7.2 |

The algorithm is evaluated by the recall rate, the extraction accuracy, and the extraction time of key information. In these indicators, L represents the amount of key information accurately extracted, and ti represents the time taken for the i-th key information extraction step of digital archives.
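For orientation, the three indicators can be computed as in the sketch below. It assumes the standard definitions, which may differ in detail from the formulas used in this paper: recall is the share of reference key information that was extracted, accuracy is the share of extracted items that are correct, and the extraction time is the sum of the step times ti. The function names and the sample values are hypothetical.

```python
def recall_rate(extracted, reference):
    """Share of the reference key information that was extracted (assumed definition)."""
    correct = len(set(extracted) & set(reference))  # L: accurately extracted items
    return correct / len(set(reference)) if reference else 0.0


def extraction_accuracy(extracted, reference):
    """Share of the extracted items that are correct (assumed definition)."""
    correct = len(set(extracted) & set(reference))
    return correct / len(set(extracted)) if extracted else 0.0


def extraction_time(step_times):
    """Total extraction time as the sum of the per-step times t_i."""
    return sum(step_times)


# Hypothetical example: 3 of 4 extracted items are correct, out of 5 reference items.
extracted = ["a", "b", "c", "d"]
reference = ["a", "b", "c", "e", "f"]
print(recall_rate(extracted, reference), extraction_accuracy(extracted, reference))
print(extraction_time([0.20, 0.25, 0.18]))
```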

3.2. Analysis and Discussion of Experimental Results

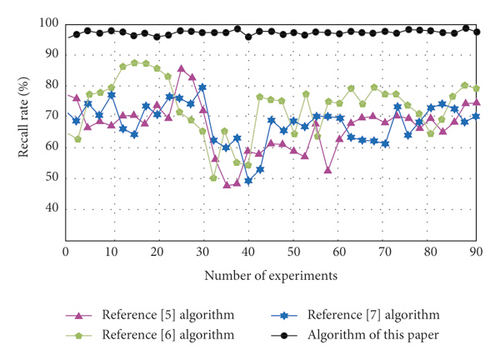

The recall rates of key information extraction for different types of digital archives are compared among the algorithms of references [5], [6], and [7] and the algorithm of this paper. The results are shown in Figure 6.

Figure 6 shows that the recall rate of the reference [5] algorithm varies in the range of 58%–85%, that of the reference [6] algorithm in the range of 49%–79%, and that of the reference [7] algorithm in the range of 50%–87%. The recall rate of the algorithm of this paper varies in the range of 96%–99%, which is always higher than that of the comparison algorithms. This shows that the algorithm extracts the key information of digital archives more completely.

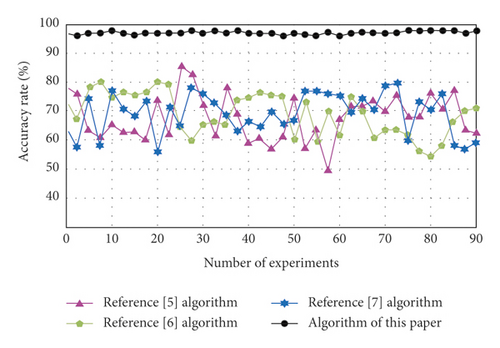

The key information extraction accuracy for different types of digital archives is compared among the algorithms of references [5], [6], and [7] and the algorithm of this paper. The results are shown in Figure 7.

Figure 7 shows that the extraction accuracy of the reference [5] algorithm is 49%–85%, that of the reference [6] algorithm is 54%–80%, and that of the reference [7] algorithm is 56%–80%. By comparison, the extraction accuracy of the algorithm of this paper is 96%–98%. Its accuracy is both higher and more stable, varying by only two percentage points, which indicates that the algorithm extracts the key information of different digital archives reliably and accurately.

The key information extraction time for different types of digital archives is compared among the algorithms of references [5], [6], and [7] and the algorithm of this paper. The comparison results are shown in Table 2.

| Number of experiments | Reference [5] algorithm, time (s) | Reference [6] algorithm, time (s) | Reference [7] algorithm, time (s) | Algorithm of this paper, time (s) |
|---|---|---|---|---|
| 10 | 1.25 | 1.33 | 1.47 | 0.47 |
| 20 | 1.44 | 1.25 | 1.58 | 0.56 |
| 30 | 1.23 | 0.96 | 1.56 | 0.58 |
| 40 | 1.38 | 1.47 | 1.47 | 0.62 |
| 50 | 1.45 | 1.58 | 1.52 | 0.85 |
| 60 | 1.63 | 1.65 | 1.35 | 0.67 |
| 70 | 1.45 | 1.58 | 1.47 | 0.81 |
| 80 | 1.56 | 1.40 | 1.62 | 0.57 |
| 90 | 1.29 | 1.31 | 1.41 | 0.51 |
| Average value | 1.41 | 1.39 | 1.49 | 0.63 |

Table 2 shows that the average key information extraction time is 1.41 s for the reference [5] algorithm, 1.39 s for the reference [6] algorithm, and 1.49 s for the reference [7] algorithm, which is the longest among the four algorithms. By comparison, the average extraction time of the algorithm of this paper is 0.63 s, less than half that of each comparison algorithm, so it extracts the key information of digital archives faster and more efficiently.
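The averages quoted above can be reproduced directly from the rows of Table 2, as in the short check below. The values are copied from the table, and the variable and function names are hypothetical.

```python
# Extraction times in seconds from Table 2, one list per algorithm (rows 10 to 90).
TIMES = {
    "Reference [5]": [1.25, 1.44, 1.23, 1.38, 1.45, 1.63, 1.45, 1.56, 1.29],
    "Reference [6]": [1.33, 1.25, 0.96, 1.47, 1.58, 1.65, 1.58, 1.40, 1.31],
    "Reference [7]": [1.47, 1.58, 1.56, 1.47, 1.52, 1.35, 1.47, 1.62, 1.41],
    "This paper": [0.47, 0.56, 0.58, 0.62, 0.85, 0.67, 0.81, 0.57, 0.51],
}


def column_means(times):
    """Mean extraction time per algorithm, rounded to two decimals as in the table."""
    return {name: round(sum(values) / len(values), 2) for name, values in times.items()}


MEANS = column_means(TIMES)
for name, mean in MEANS.items():
    print(f"{name}: {mean:.2f} s")
```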

In summary, the recall rate of the algorithm varies in the range of 96%–99%, the accuracy of key information extraction is 96%–98%, and the average extraction time is 0.63 s. The algorithm therefore achieves rapid and accurate extraction of the key information of digital archives and improves on the recall, accuracy, and extraction time of the comparison methods.

4. Conclusions

With the continuous optimization of cultural operation and management strategies, the level of cultural operation and management has gradually improved, and digital archives management is an important part of it. Extracting the key information of different types of digital archives is therefore of great significance to the level of cultural operation and management. For this reason, this paper designs a key information extraction algorithm of different types of digital archives for cultural operation and management. The experimental results show that the recall rate of the algorithm is between 96% and 99%, the accuracy of key information extraction is 96%–98%, and the average extraction time is 0.63 s. The algorithm achieves rapid and accurate extraction of the key information of digital archives and can be applied in cultural operation and management to improve its quality and to promote the further development of the cultural industry. However, the convergence of the algorithm was not tested. To avoid falling into a local optimum, future research should further optimize the algorithm so that it avoids excessive iterations and high errors.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability

The dataset can be accessed upon request.