Multiparty Dynamic Data Integration Scheme of Industrial Chain Collaboration Platform in Mobile Computing Environment

Abstract

At present, many problems remain in the information interaction between the internal platforms of different enterprises and third-party platforms. The most pressing is data integration between them, which involves two issues: nonstandard data and difficult data integration. To solve these problems, this paper proposes a multiparty dynamic data integration scheme for the industrial chain collaboration platform. On the one hand, the scheme builds a standard data classification system based on data types to solve the problem of data irregularity; on the other hand, it builds a multiparty dynamic data integration model based on rules and requests to solve the problem of difficult data integration. This paper verifies the feasibility, flexibility, and rationality of the scheme through case analysis and experimental analysis.

1. Introduction

Cloud platforms have great potential for providing new IT service models and business opportunities. Large group companies use cloud platforms for big data analysis to mine multidimensional business value, while small and medium-sized enterprises use them for business collaboration to achieve informatization and digitalization at lower cost; both trends are driving enterprises to accelerate their transformation toward socialization, intensification, and specialization. The industrial chain collaboration platform provides pay-as-you-go business collaboration and data analysis services to tens of thousands of enterprises in the automobile manufacturing industry chain (including suppliers, manufacturers, distributors, service providers, and logistics providers), thereby reducing their operating costs and lowering the threshold for enterprise informatization [1, 2].

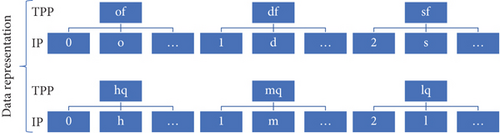

In a multi-enterprise business collaboration environment, many problems remain in the information interaction between the Internal Platforms (IP) of different enterprises and Third-Party Platforms (TPP), especially in integrating data between them. These problems are reflected mainly in two aspects: (1) the data standards of the internal platforms (systems) of different enterprises differ greatly; and (2) the data integration rules and processes of traditional platforms (systems) are static, which makes it difficult to meet enterprises' needs for dynamic integration and real-time operation. These problems hinder information interconnection among enterprises in the industrial chain and also prevent enterprises from maximizing the value of their data.

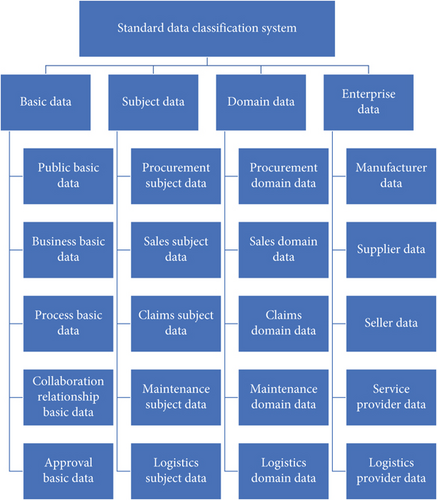

To address these issues, this paper proposes a multiparty dynamic data integration scheme for the industrial chain collaboration platform based on the idea of attribute-based access control [3–5]. First, the scheme establishes a standard data classification system covering basic data, subject data, domain data, and enterprise data in order to eliminate the impact of nonstandard data. Second, the scheme builds a multiparty dynamic data integration model based on data integration rules and requests that dynamically integrates data between the internal platforms of different enterprises and third-party platforms, allowing enterprises to meet real-time operational requirements.

2. Related Work

Researchers in China and abroad have studied the information integration of platforms (systems) mainly from the perspectives of data exchange and data integration and have achieved rich results. However, research on multiparty dynamic data integration technology for industrial chain collaboration platforms remains relatively scarce.

In terms of data exchange, traditional data exchange technology converts data conforming to a source schema into data conforming to a target schema; the result must be as accurate as possible and reflect the source data in a manner consistent with the various dependencies [6]. Early data exchange research focused on the semantics and complexity of first-order queries in the context of data exchange over relational models. Fagin et al. [7] proposed a data exchange framework for probabilistic databases and proved that it is consistent and robust for arbitrary schema mappings. Tan et al. [8] proposed an XML data exchange scheme with target constraints, which first defines the mapping between source and target schemas through a rich language, then constrains the targets with the XML constraint model of the scheme and constructs a canonical target instance, and finally realizes data exchange through XML target instances. Medina et al. [9] extended the classical data exchange method and used fuzzy data exchange as a framework for fuzzy data transformation. By analyzing the relationships between fuzzy data exchange and the classical setting, and between probabilistic data exchange and fuzzy logic, they retained the most important properties of classical data exchange in the new framework [10, 11]. With the rapid development of cloud platforms and cloud services, Wu et al. [12] applied feature-based data exchange to collaborative product development based on cloud-based design and manufacturing and proposed a service-oriented data exchange architecture for cloud-based design and manufacturing.

In terms of data integration, traditional data integration techniques reconcile mismatches between different data sources and integrate heterogeneous, distributed, and autonomous data into a unified view, so that users can access different data sources transparently [6]. Tao et al. [13] proposed an ontology-based semantic integration scheme for XML data, which optimized the query execution plan generation algorithm under the assumption that the number of nonintegrity roles is limited. Benedikt et al. [14] proposed a logical foundation for information disclosure in the Ontology-Based Data Integration Method (OBDIM), describing an ontology-based data integration system that lets users efficiently access data located in multiple data sources through a global schema described by an ontology. Valle and Kenett [15] proposed a Social Media-Based Data Integration Method (SMDIM) that uses interview-based customer survey data to calibrate online-generated big data, corrects the level of unbalanced responses across data sources by resampling, and performs data integration with Bayesian networks to propagate the new rebalancing information. Lin et al. [16] proposed a probabilistic coverage model for the data source selection problem in information integration; the model evaluates the quality of data sources, and an algorithm with two kinds of pruning improves the efficiency and effectiveness of information integration without sacrificing accuracy.

However, these studies cannot completely eliminate the impact of nonstandard data from multiple platforms (systems), nor can they fully meet enterprises' needs for dynamic integration and real-time operation. This paper therefore proposes a multiparty dynamic data integration scheme for the industrial chain collaboration platform to compensate for the shortcomings of the above research and to address the problems of multiparty dynamic data integration.

3. The Multiparty Dynamic Data Integration Scheme

3.1. The Standard Data Classification System

(1) Data classification standard: According to the characteristics, functions, and associations of the third-party platform's data, the data of the enterprise internal platforms and the third-party platform are divided into basic data, subject data, domain data, and enterprise data. These four types of data are related but distinct. The specific contents are as follows:

Basic data: This type of data is shared between the internal platform of the enterprise and the third-party platform, including public basic data, business basic data, process basic data, collaboration relationship basic data, and approval basic data.

Subject data: This type of data is generated by a basic business unit of an enterprise’s internal platform or a third-party cloud platform, including procurement subject data, sales subject data, claims subject data, maintenance subject data, and logistics subject data.

Domain data: This type of data is generated by a series of basic business units on an enterprise's internal platform or a third-party cloud platform, including procurement domain data, sales domain data, claims domain data, maintenance domain data, and logistics domain data. Subject data are subordinate to domain data.
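The classification above can be written down as a small data structure. The sketch below is illustrative only: `DataCategory`, `SUBCATEGORIES`, and `parent_domain` are hypothetical names, no subcategories are listed for enterprise data because the text does not give any, and mapping a subject area to the domain of the same business area is an assumption about how the subordinate relationship works.

```python
from enum import Enum
from typing import Dict, List


class DataCategory(Enum):
    """The four top-level categories of the standard data classification system."""
    BASIC = "basic data"
    SUBJECT = "subject data"
    DOMAIN = "domain data"
    ENTERPRISE = "enterprise data"


# Subcategories as listed in the text; none are listed for enterprise data.
SUBCATEGORIES: Dict[DataCategory, List[str]] = {
    DataCategory.BASIC: ["public", "business", "process",
                         "collaboration relationship", "approval"],
    DataCategory.SUBJECT: ["procurement", "sales", "claims", "maintenance", "logistics"],
    DataCategory.DOMAIN: ["procurement", "sales", "claims", "maintenance", "logistics"],
    DataCategory.ENTERPRISE: [],
}


def parent_domain(subject_area: str) -> str:
    """Return the domain-data area that a subject-data area is subordinate to.

    Assumption (hypothetical): a subject area belongs to the domain of the
    same business area, e.g. sales subject data -> sales domain data.
    """
    if subject_area not in SUBCATEGORIES[DataCategory.SUBJECT]:
        raise ValueError(f"unknown subject area: {subject_area!r}")
    return f"{subject_area} {DataCategory.DOMAIN.value}"
```

For example, `parent_domain("sales")` returns `"sales domain data"`.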

(2) Data adaptation standard: Using key-value dictionary libraries, this standard converts private representations with various data formats, identifiers, and codes into standard representations, providing standard data sources for enterprises in the automotive manufacturing industry chain so that they can achieve information interconnection and maximize data value. The adaptation standard also supports the reverse conversion (i.e., from the standard representation to a private representation). For example, different internal platforms use different values to indicate that a person's gender is “male.” A unified dictionary library, as shown in Table 1, is therefore needed to convert these private representations into the third-party platform's standard representation “Male.” The key's value range is the collection of all possible private representations, and the final standardized value is “Male.”

| Key | Values on different internal platforms | Value on the third-party platform |
|---|---|---|
| Gender | “Male” | “Male” |
| | “Man” | “Male” |
| | “0” | “Male” |
| | “1” | “Male” |
| | “M” | “Male” |
| | … | “Male” |
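A key-value dictionary library such as Table 1 can be sketched as follows. The sketch assumes, as an illustration, that entries are keyed by (platform, key, private value): since “0” and “1” both map to “Male” on different internal platforms, the reverse conversion is only well defined once the target platform is known. The platform identifiers `ip_a` to `ip_e` and the function names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical layout: (platform_id, key, private_value) -> standard value.
DictionaryLibrary = Dict[Tuple[str, str, str], str]

# Table 1 entries, each assigned to an illustrative internal platform.
LIBRARY: DictionaryLibrary = {
    ("ip_a", "Gender", "Male"): "Male",
    ("ip_b", "Gender", "Man"): "Male",
    ("ip_c", "Gender", "0"): "Male",
    ("ip_d", "Gender", "1"): "Male",
    ("ip_e", "Gender", "M"): "Male",
}


def to_standard(lib: DictionaryLibrary, platform: str, key: str, value: str) -> str:
    """Forward conversion: platform-private value -> third-party standard value."""
    try:
        return lib[(platform, key, value)]
    except KeyError:
        raise KeyError(f"no mapping for {key}={value!r} on {platform}") from None


def to_private(lib: DictionaryLibrary, platform: str, key: str, standard: str) -> str:
    """Reverse conversion: standard value -> the private value used by one platform."""
    for (plat, k, private), std in lib.items():
        if plat == platform and k == key and std == standard:
            return private
    raise KeyError(f"no reverse mapping for {key}={standard!r} on {platform}")
```

For example, `to_standard(LIBRARY, "ip_c", "Gender", "0")` yields `"Male"`, and `to_private(LIBRARY, "ip_d", "Gender", "Male")` yields `"1"`.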

After the standard data classification system is built, the next step is to integrate data between the internal platforms of enterprises and the third-party platform, so that enterprises in the automobile manufacturing industry chain can carry out business collaboration and data analysis.

3.2. Multiparty Dynamic Data Integration Model

The Multiparty Dynamic Data Integration Model (MDDIM) is composed of four basic elements: subject, object, entity, and action. Among them, subject, object, and entity are all represented by attributes. The subject represents the source platform and the destination platform involved in data integration which includes all internal platforms and third-party platforms. The object represents a set of data resources. The entity represents a key-value dictionary library. The action represents permission or denial of data integration. This section gives the following definitions to better describe the MDDIM.

Definition 1. ENT = {Ent1, Ent2, ⋯, Entn} indicates the set of enterprises that use a third-party platform, such as suppliers, manufacturers, sellers, and service providers (4S stores).

Definition 2. IP = {IP1, IP2, ⋯, IPn} represents the set of all internal platforms of an enterprise (Enti), such as its purchasing platform, sales platform, and service platform.

Definition 3. RES = {Res1, Res2, ⋯, Resn} represents the set of all data resources of an internal platform (IPi), such as basic data resources, subject data resources, domain data resources, and enterprise data resources. It can also represent the set of all data resources of the TPP.

Definition 4. ATT = {Att1, Att2, ⋯, Attn} represents the set of attributes contained in a set of data resources (Resj). For example, a set of part data resources includes name, supplier, price, origin, and property rights attributes.

Definition 5. Att = {atn, typ, ran, rel} represents an attribute, which is a variable with a specified data type and value range. Here, atn is the attribute name; typ is the data type; ran = {val1, val2, ⋯, valn} is the value range, which may be continuous or discrete; and rel is the value relationship of the attribute, which, depending on the type of range, is one of three kinds: Comparative Relationship (CR), Partial Order Relationship (PR), and Belonging Relationship (BR), as shown in Table 2.

Definition 6. anp = {atn, val} represents an attribute name-value pair (anp), which refers to a specific value of the attribute, and its mathematical expression is atn = val. In this paper, Sanp, Oanp, and Eanp are used to represent the attribute name-value pair of the subject, object, and entity, respectively.

Definition 7. apr = {atn, ⋈, val} represents an attribute predicate (apr), which is used to define the range of values of the attribute, and its mathematical expression is atn⋈val and ⋈∈{≤, <, ≥, >, ≠, = , ≼, ≺, ≽, ≻, ∈, ∉}. In this paper, Sapr, Oapr, and Eapr are used to represent the attribute predicates of subject, object, and entity, respectively.

Definition 8. RST = {[apr]anp, [apr]ANP, [APR]ANP} represents the satisfiability result of the anp and the apr, and its specific meaning is as follows.

| Type of range | Value relationship | Mathematical expression |
|---|---|---|
| Continuous | CR | rel = {vali⋈valj \| vali, valj ∈ ran, ⋈∈{≤, <, ≥, >, ≠, =}} |
| Discrete | PR | rel = {vali⋈valj \| vali, valj ∈ ran, ⋈∈{≼, ≺, ≽, ≻, ≠, =}} |
| Continuous or discrete | BR | rel = {vali⋈val \| vali ∈ val, vali, val ∈ ran, ⋈∈{∈, ∉}} |
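Definitions 6 to 8 and Table 2 can be illustrated with a small predicate evaluator. The formal meaning of RST in Definition 8 is not reproduced in this text, so the three checks below are an assumption that follows the usual attribute-based access control reading: [apr]anp holds when the names match and the value satisfies the predicate; [apr]ANP holds when some pair in ANP satisfies apr; and [APR]ANP holds when every predicate in APR is satisfied. The PR operators are modelled with a rank map (a chain), which covers the hq ≼ mq ≼ lq example of Section 5.1 but not a general partial order. All function names are hypothetical.

```python
import operator
from typing import Any, Dict, Iterable, Tuple

Anp = Tuple[str, Any]       # attribute name-value pair (atn, val), Definition 6
Apr = Tuple[str, str, Any]  # attribute predicate (atn, ⋈, val), Definition 7

# Comparative relationship (CR) operators from Table 2.
CR_OPS = {"≤": operator.le, "<": operator.lt, "≥": operator.ge,
          ">": operator.gt, "≠": operator.ne, "=": operator.eq}

# Example chain for part quality (Section 5.1): hq ≼ mq ≼ lq.
PQA_RANK: Dict[Any, int] = {"hq": 0, "mq": 1, "lq": 2}


def holds(op: str, left: Any, right: Any, rank: Dict[Any, int]) -> bool:
    """Evaluate `left op right` for the CR, PR, and BR operators of Table 2."""
    if op in CR_OPS:
        return CR_OPS[op](left, right)
    if op == "≼":
        return rank[left] <= rank[right]
    if op == "≺":
        return rank[left] < rank[right]
    if op == "≽":
        return rank[left] >= rank[right]
    if op == "≻":
        return rank[left] > rank[right]
    if op == "∈":
        return left in right
    if op == "∉":
        return left not in right
    raise ValueError(f"unsupported operator: {op!r}")


def sat_pair(apr: Apr, anp: Anp, rank: Dict[Any, int]) -> bool:
    """[apr]anp: same attribute name, and the value satisfies the predicate."""
    atn, op, val = apr
    name, value = anp
    return name == atn and holds(op, value, val, rank)


def sat_any(apr: Apr, anps: Iterable[Anp], rank: Dict[Any, int]) -> bool:
    """[apr]ANP: at least one pair in ANP satisfies apr."""
    return any(sat_pair(apr, anp, rank) for anp in anps)


def sat_all(aprs: Iterable[Apr], anps: Iterable[Anp], rank: Dict[Any, int]) -> bool:
    """[APR]ANP: every predicate in APR is satisfied by some pair in ANP."""
    anps = list(anps)  # materialize so each predicate can scan the pairs
    return all(sat_any(apr, anps, rank) for apr in aprs)
```

With this sketch, `sat_all([("pqa", "≼", "mq")], [("pqa", "hq")], PQA_RANK)` is true because high quality is at least as good as medium quality, while the same predicate against `("pqa", "lq")` is false. An empty APR is satisfied vacuously, which matches the idea that removing conditions makes a rule coarser.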

Definition 9. Req = {sas, oas} represents the data integration request, which is divided into two types: positive request and negative request. The positive request means the data integration request initiated from the third-party platform to the internal platform of the enterprise, and the negative request means the data integration request initiated from the internal platform of the enterprise to the third-party platform. The specific meanings are as follows:

sas = {Sanp1, Sanp2, ⋯, Sanpn} represents the set of subject attribute name-value pairs.

oas = {Oanp1, Oanp2, ⋯, Oanpn} represents the set of object attribute name-value pairs.

Definition 10. Rul = {Sas, Oas, Eas}⟶Act represents the data integration rule. It is a rule customized by enterprises (Enti) in the automobile manufacturing industry chain and the specific meanings are as follows. RUL = {Rul1, Rul2, ⋯, Ruln} represents a customized ruleset.

Sas = {Sapr1, Sapr2, ⋯, Saprn} represents the set of subject attribute predicates.

Oas = {Oapr1, Oapr2, ⋯, Oaprn} represents the set of object attribute predicates.

Eas = {Eapr1, Eapr2, ⋯, Eaprn} represents the set of entity attribute predicates.

Definition 11. SRT = {[Rul]Req, [RUL]Req} indicates the satisfiability result of the request and the rule of data integration, and its specific meaning is as follows:

[Rul]Req represents the satisfiability result of Req and Rul. Given Req = {sas, oas} and Rul = {Sas, Oas, Eas}⟶Act, if [Sas]sas ∧ [Oas]oas ∧ [Eas]oas is true, then [Rul]Req is true (i.e., Rul satisfies Req); otherwise it is false (i.e., Rul does not satisfy Req). Its mathematical expression is given in Equation (4):

$$[Rul]_{Req} = \begin{cases} \text{true}, & \text{if } [Sas]_{sas} \wedge [Oas]_{oas} \wedge [Eas]_{oas} \\ \text{false}, & \text{otherwise} \end{cases} \tag{4}$$

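[RUL]Req represents the satisfiability result of Req and the ruleset RUL, as given in Equation (5):

$$[RUL]_{Req} = [Rul_1]_{Req} \vee [Rul_2]_{Req} \vee \cdots \vee [Rul_n]_{Req} \tag{5}$$

In Equation (5), if [Rul1]Req ∨ [Rul2]Req ∨ ⋯ ∨ [Ruln]Req is true, then [RUL]Req is true; that is, at least one [Rul]Req is true. If exactly one rule satisfies the request, data integration is performed directly, and the corresponding data and their relationships are stored. If two or more rules satisfy the request, data integration is performed according to the most recently created of those rules, and the corresponding data and their relationships are stored.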
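Equations (4) and (5) can be sketched directly. To keep the example short, this sketch assumes that each attribute name appears at most once in sas and oas (so they can be stored as dictionaries) and supports only the “=” and “∈” operators; following the text, the entity predicates Eas are checked against oas. The classes `Req` and `Rul` mirror Definitions 9 and 10, and the rule in the usage note is hypothetical, because the concrete predicates of Table 4 are not reproduced here.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple

Apr = Tuple[str, str, Any]  # (attribute name, operator, value)


@dataclass
class Req:
    """Data integration request (Definition 9)."""
    sas: Dict[str, Any]  # subject attribute name-value pairs
    oas: Dict[str, Any]  # object attribute name-value pairs


@dataclass
class Rul:
    """Data integration rule {Sas, Oas, Eas} -> Act (Definition 10)."""
    Sas: List[Apr]
    Oas: List[Apr]
    Eas: List[Apr]
    act: str  # action label, e.g. "p" or "d" as in Table 4


def pred_holds(apr: Apr, pairs: Dict[str, Any]) -> bool:
    """Check one predicate; only '=' and '∈' are supported in this sketch."""
    atn, op, val = apr
    if atn not in pairs:
        return False
    if op == "=":
        return pairs[atn] == val
    if op == "∈":
        return pairs[atn] in val
    raise ValueError(f"unsupported operator: {op!r}")


def all_hold(aprs: List[Apr], pairs: Dict[str, Any]) -> bool:
    """True when every predicate holds (vacuously true for an empty list)."""
    return all(pred_holds(apr, pairs) for apr in aprs)


def rule_satisfies(rul: Rul, req: Req) -> bool:
    """Equation (4): [Sas]sas ∧ [Oas]oas ∧ [Eas]oas."""
    return (all_hold(rul.Sas, req.sas)
            and all_hold(rul.Oas, req.oas)
            and all_hold(rul.Eas, req.oas))


def ruleset_satisfies(ruls: List[Rul], req: Req) -> bool:
    """Equation (5): true if at least one rule in RUL satisfies Req."""
    return any(rule_satisfies(r, req) for r in ruls)
```

For instance, Req1 from Table 3 can be written as `Req({"spa": "spa1", "dpa": "dpa4"}, {"pma": "of"})`; a hypothetical rule `Rul([("spa", "=", "spa1")], [("pma", "∈", {"of", "df"})], [], "p")` satisfies it.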

Equation (6) shows that the model defines multivariate attribute relationships between Req and Rul: the satisfiability result of Req and Rul is true if and only if all the multivariate attribute relationships in Equation (4) are true. For a fixed Req, the attribute conditions of Rul can be dynamically added or removed to control the granularity of multiparty dynamic data integration (see Section 5.1). MDDIM is therefore flexible and can adapt to complex data integration environments.

4. Integration Decision Algorithm

The integration decision algorithm (Algorithm 1) is the core algorithm for implementing the multiparty dynamic data integration model. It solves the satisfiability result of requests (Req) and rules (Rul). If the satisfiability result [RUL]Req is false, data integration is denied. If it is true and exactly one rule satisfies the request, data integration is performed according to that rule (Rul). If it is true and two or more rules satisfy the request, data integration is performed according to the most recently created of those rules. Algorithm 1 is as follows.

Algorithm 1: Integration Decision Algorithm (IDA).

Input: Req, RUL = {Rul1, Rul2, ⋯, Ruln}

Output: Permitting or denying data integration

1. IDA(Req, RUL)

2. For each i in 1, ⋯, n

3. If [Sas(i)]sas ∧ [Oas(i)]oas ∧ [Eas(i)]oas == True then

4.

5.

6. Else

7.

8.

9. End if

10. End for

11.

12. If [RUL]Req == True then

13.

14. If h ≥ 2 then

15. Sort_Desc(Rul+(h))

16. Get top 1

17. Permitting data integration based on Rul

18. Else

19. Permitting data integration based on Rul

20. End if

21. Else

22. Denying data integration

23. End if

24. End IDA
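The control flow of Algorithm 1 can be sketched as runnable code. Steps 4, 5, 7, 8, 11, and 13 are blank in the listing, so this sketch assumes they collect the satisfying rules into Rul+ and set h to its size; it also assumes each rule carries a creation time. `TimedRule` and `ida` are hypothetical names, and the satisfiability check is passed in as a function (for example, an implementation of Equation (4)).

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, TypeVar

Q = TypeVar("Q")  # request type; left generic in this sketch


@dataclass(frozen=True)
class TimedRule:
    """A rule tagged with its creation time (hypothetical wrapper)."""
    name: str
    created_at: float


def ida(req: Q, rules: Sequence[TimedRule],
        satisfies: Callable[[TimedRule, Q], bool]) -> Optional[TimedRule]:
    """Integration decision: return the rule to integrate with, or None to deny."""
    # Steps 2-10 (assumed): collect the rules whose [Rul]Req is true into Rul+.
    satisfied = [r for r in rules if satisfies(r, req)]
    # Steps 12 and 21-22: [RUL]Req is false, so data integration is denied.
    if not satisfied:
        return None
    # Steps 13-19: if h >= 2, Sort_Desc by creation time and take the top rule;
    # with h == 1, max() simply returns the single satisfying rule.
    return max(satisfied, key=lambda r: r.created_at)
```

Using `max` instead of a full descending sort gives the same top rule in linear time, which is all Steps 15-16 need.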

5. Case Analysis and Experimental Analysis

5.1. Case Analysis

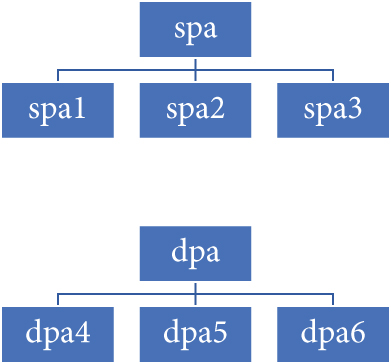

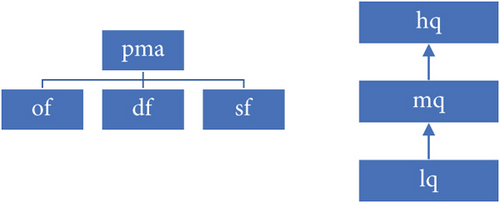

In this section, the “ASP/SaaS-based manufacturing industry value chain collaboration platform” [17] is used as an example to illustrate the implementation process of multiparty dynamic data integration. According to the actual collaboration requirements for parts procurement business, we give the relationship between the values of the subject attribute, object attribute, and entity attribute as shown in Figures 2(a), 2(b), and 2(c), respectively. The specific meanings are as follows.

The subject attribute adopts the source platform serial attribute (spa) and the destination platform serial attribute (dpa), where spa and dpa contain the serial numbers of the three source platforms and the destination platforms, respectively.

The object attribute uses the part manufacturer attribute (pma), the part quality attribute (pqa), and the part origin attribute (poa). pma takes the values original factory (of), deputy factory (df), and substitute factory (sf); pqa takes the values high quality (hq), medium quality (mq), and low quality (lq), with the relationship hq≼mq≼lq. Here, “≼” means that the left side of the symbol is better than or equal to the right side, and “≽” means that the left side is worse than or equal to the right side.

The entity attribute adopts part manufacturer attributes (pma) and part quality attributes (pqa). The dictionary library of pma and pqa is shown in Figure 2(c).

The formal description of the data integration request (Req) is shown in Table 3, the formal description of the data integration rule (Rul) is shown in Table 4, and the definitions of the values of spa, dpa, pma, and pqa are shown in Figure 2.

| Req | sas | oas |
|---|---|---|
| Req1 | spa = spa1, dpa = dpa4 | pma = of |
| Req2 | spa = spa1, dpa = dpa5 | pma = df, pqa = lq |
| Req3 | spa = spa3, dpa = dpa5 | pma = df, pqa = mq |
| Req4 | spa = spa3, dpa = dpa4 | pma = sf, pqa = mq |
| Req5 | spa = spa3, dpa = dpa6 | pma = of, pqa = hq |
| Req6 | spa = spa3, dpa = dpa8 | pma = df, pqa = mq |

| Rul | Sas | Oas | Eas | Act |
|---|---|---|---|---|
| Rul1 | | | | p |
| Rul2 | | | | p |
| Rul3 | | | | p |
| Rul4 | | | | p |
| Rul5 | | | | d |
| Rul6 | | | | d |

Given Req (Table 3) and Rul (Table 4), IDA solves the satisfiability results of the rules and requests. When several rules satisfy a request, data integration is performed according to the latest of them; the integration results are shown in Table 5. In a rule column, “T” means that the rule satisfies the request and “F” that it does not. In the Result column, “T” means that data integration is allowed, that is, at least one rule (Rul) satisfies the request (Req), and “F” means that data integration is rejected, that is, no rule (Rul) satisfies the request (Req).

| [Rul]Req | Rul1 | Rul2 | Rul3 | Rul4 | Rul5 | Rul6 | Result |
|---|---|---|---|---|---|---|---|
| Req1 | T | F | F | F | F | F | T |
| Req2 | T | T | F | F | F | F | T |
| Req3 | F | F | T | F | F | F | T |
| Req4 | F | F | T | T | F | F | T |
| Req5 | F | F | F | F | F | F | F |
| Req6 | F | F | F | F | F | F | F |

Table 5 shows that Req2 and Req4 are each satisfied by two rules. Assuming that the rules were created in the order of their subscripts (so that, from newest to oldest, they are Rul6, Rul5, Rul4, Rul3, Rul2, Rul1), the latest satisfying rules for Req2 and Req4 are Rul2 and Rul4, respectively, and data integration is performed based on them. No rule satisfies Req5 or Req6, so their data integration requests are rejected.
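The latest-rule selection can be checked mechanically from Table 5. This sketch copies the per-rule results of Table 5 and, as the text assumes, treats a larger subscript as a more recently created rule; `TABLE5` and `latest_rule` are illustrative names.

```python
from typing import Dict, Optional

# Per-rule satisfiability results from Table 5 (columns Rul1..Rul6).
TABLE5: Dict[str, str] = {
    "Req1": "TFFFFF",
    "Req2": "TTFFFF",
    "Req3": "FFTFFF",
    "Req4": "FFTTFF",
    "Req5": "FFFFFF",
    "Req6": "FFFFFF",
}


def latest_rule(row: str) -> Optional[str]:
    """Return the newest satisfying rule, assuming Rul_k is newer than Rul_(k-1)."""
    hits = [k for k, flag in enumerate(row, start=1) if flag == "T"]
    return f"Rul{max(hits)}" if hits else None


for req, row in TABLE5.items():
    print(req, latest_rule(row) or "denied")
```

Running it prints Rul1, Rul2, Rul3, and Rul4 for Req1 to Req4 and “denied” for Req5 and Req6, which agrees with the discussion of Table 5.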

Building on the above case, we dynamically customize the data integration rules (Rul) with MDDIM and aim to control the granularity of multiparty dynamic data integration by adding or removing attribute conditions of Rul. For example, we add an attribute condition to the Oas of Rul1 in the data integration ruleset (Table 4), as shown in Table 6. Given Req (Table 3) and this Rul1 (Table 6), the satisfiability results solved by IDA are shown in Table 7: the modified Rul1 satisfies none of the requests in Table 3. Conversely, if we remove an attribute condition (pqa≼lq) from the Oas of Rul1 in Table 4, Rul1 still does not satisfy every request in Table 3. These satisfiability results show that adding or removing attribute conditions of Rul effectively controls the granularity of data integration and increases the flexibility of MDDIM.

| Rul | Sas | Oas | Eas | Act |
|---|---|---|---|---|
| Rul1 | | | | p |

| [Rul]Req | Rul1 | Result |
|---|---|---|
| Req1 | F | F |
| Req2 | F | F |
| Req3 | F | F |
| Req4 | F | F |
| Req5 | F | F |
| Req6 | F | F |

5.2. Experimental Analysis

This section takes the parts procurement business collaboration of the “ASP/SaaS-based manufacturing industry value chain collaboration platform” [17] as an example to experimentally analyze multiparty dynamic data integration. The experiments run on a local area network, and transmission delay is not considered. The enterprise internal platform runs on an Intel Xeon E5-2699 v4 (2.2 GHz) with 32 GB of memory and Windows Server 2016; the third-party platform runs on an Intel Xeon E5-2680 v4 (2.4 GHz) with 32 GB of memory and Windows Server 2016. The same data integration engine, which initiates integration requests, synchronizes rules, and executes the algorithm, is deployed on both the enterprise internal platform and the third-party platform.

The eXtensible Access Control Markup Language (XACML) [18] is an open standard language based on the Extensible Markup Language (XML) and a general-purpose access control language built on a request/response model. It provides both a framework for expressing and distributing rules and a framework for evaluating them. XACML offers good interoperability, versatility, and extensibility. In this section, XACML provides a unified writing specification for data integration rules and requests.

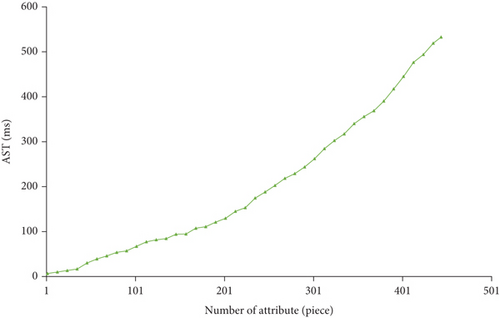

In the rule complexity experiment, we write one Req with 400 attributes and 40 Ruls, each with a different number of attributes between 1 and 400, in XACML. Under identical experimental configurations, IDA solves the satisfiability results of Req and each Rul; this process is repeated 10 times, the solution time of each run is recorded, and the mean is taken as the Average Solution Time (AST). Figure 3 shows the relationship between the number of attributes and the AST.
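The averaging procedure behind AST (and likewise AIT and AQT) can be sketched as a small timing harness. This is a generic illustration rather than the authors' test code: `average_solution_time` is a hypothetical helper, `solve` stands for any zero-argument function (for example, one IDA call on a fixed Req and Rul), and wall-clock time is measured with `time.perf_counter`.

```python
import statistics
import time
from typing import Callable


def average_solution_time(solve: Callable[[], object], repeats: int = 10) -> float:
    """Run `solve` `repeats` times and return the mean wall-clock time in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        solve()
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples)
```

For example, `average_solution_time(lambda: sum(range(10_000)))` times ten runs of a toy workload; in the experiment, the workload would be solving one rule against the request.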

Figure 3 shows that the AST increases as the number of rule attributes increases. This indicates that the complexity of a rule depends on its number of attributes: the more attributes a rule has, the higher its complexity and the finer the corresponding data integration granularity. Given the data integration requirements of enterprise internal platforms and the third-party platform, rules usually contain 10 to 200 attributes, and the differences in AST within this range are small, so rule complexity has little impact on data integration performance.

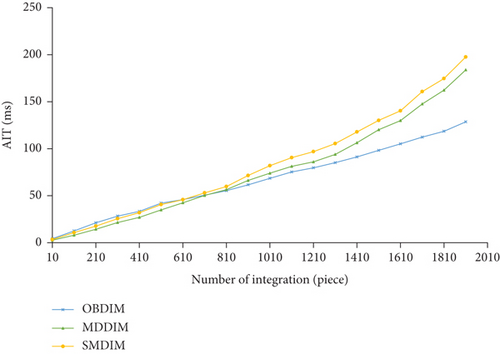

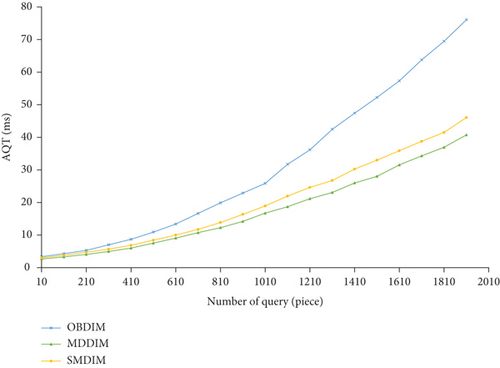

In the data integration and query experiment [19], we compare the proposed MDDIM with OBDIM [14] and SMDIM [15]. Under identical experimental configurations, data integration and querying are performed with each of the three schemes; each process is repeated 10 times, the integration and query times are recorded, and their means are taken as the Average Integration Time (AIT) and Average Query Time (AQT). Figure 4(a) shows the relationship between the number of integrations and the AIT in the data integration comparison, and Figure 4(b) shows the relationship between the number of queries and the AQT in the data query comparison.

Figure 4(a) shows that when the number of data integrations is below 710, the AIT of MDDIM is lower than that of OBDIM and SMDIM. Above 710, OBDIM has the lowest AIT, because MDDIM and SMDIM spend more time processing the larger volume of data according to rules, whereas OBDIM only needs to establish mapping relationships and does not process the data. Figure 4(b) shows that the AQT increases with the number of queries and that the AQT of MDDIM is lower than that of SMDIM and OBDIM. The reason is that OBDIM must resolve the mapping relationships when querying data, whereas MDDIM and SMDIM query the data directly, so their AQT is lower than that of OBDIM. Since the number of integrations or queries performed at a time between enterprise internal platforms and the third-party platform is usually below 600, these results indicate that MDDIM outperforms SMDIM and OBDIM in this typical operating range.

6. Conclusion

This paper aims to solve the problem of data integration between multiple enterprise internal platforms and third-party platforms and proposes a multiparty dynamic data integration scheme for industrial chain collaboration platforms. On the one hand, the scheme solves the problem of data irregularity by building a standard data classification system. On the other hand, it solves the problem of difficult data integration by building a multiparty dynamic data integration model. This paper verifies the feasibility, flexibility, and rationality of the scheme through case analysis and experimental analysis.

This paper’s research is primarily focused on the automobile manufacturing industry chain, with the goal of assisting enterprises in the industry chain in achieving information interconnection and maximizing data value. To improve the experience of enterprises, the main work in the future will be to further optimize the way of customizing data integration rules and the visual interface of third-party platforms.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This work was supported by the National Key R&D Plan of China No. 2018YFB1701500 and No. 2018YFB1701502.

Open Research

Data Availability

The data used to support the findings of this study are included within the article.