Evaluation of Multimedia Popular Music Teaching Effect Based on Audio Frame Feature Recognition Technology

Abstract

Music education should pay attention to the popular music that is present in students' everyday lives and deeply affects them. It should also draw on "popular classical music" so that students learn popular music with enjoyment, appreciate it artistically, and experience it aesthetically. This study uses audio frame feature recognition technology to evaluate the effect of multimedia popular music teaching and to improve its quality. Specifically, it adopts adaptive postfiltering, which adaptively revises the speech spectrum, to construct a multimedia popular music system based on audio frame feature recognition technology, and it verifies the performance of this system through experiments. The experimental results indicate that the proposed system performs well.

1. Introduction

Music as an art form should be diverse, including traditional and modern music as well as other mainstream and nonmainstream forms. Therefore, just as we attach importance to classical and traditional music, we must also attach importance to modern and popular music. Most popular music is passionate and emotional, whether sentimental, joyful, or resentful, and it generally seeks to depict and appeal to the psychology of the public as fully as possible. It should also be noted that appreciating popular music requires a degree of artistic guidance as well as the artistic imagination of the audience. Meeting spiritual needs is the essence of popular music. Although the body of popular music is vast, time sifts it as waves wash sand, and what remains shines: some works become classics and sources of inspiration [1].

At present, our country is in a period of rapid development and social transformation. Students growing up in this era face heavy academic pressure while also going through a psychologically sensitive period: they are in a special stage of transition from immaturity to maturity. Compared with childhood before it and adulthood after it, this period is psychologically characterized by low stability, high emotionality, strong sentimentality, and heightened sensitivity [2]. Popular music is distinctly popular in character: most of its content is close to the lives of ordinary people and expresses their feelings. Today, popular music has become the mainstream music culture of society. For teenagers, it differs clearly from children's songs and carries the spirit of the times, so it resonates with them. Moreover, popular music occupies an important place in the lives of college students [3].

This study uses audio frame feature recognition technology to evaluate the effect of multimedia popular music teaching, with the aims of improving the quality of such teaching, strengthening the role of popular music in students' growth, and promoting students' physical and mental health.

2. Related Work

Driven by the rapid development of computer technology and informatics, and by the demand for fast and effective audio recognition, audio recognition based on audio frame recognition has been widely applied. Literature [4] laid the theoretical foundation for audio frame recognition technology. Literature [5] introduced the speech spectrogram together with a means of plotting it automatically. Just as fingerprints differ from person to person, so that nearly identical fingerprints are usually found only among millions of people, audio frames should be similarly distinctive [6]. Literature [7] proposed a method based on pattern matching and probabilistic statistical analysis, supporting the development of audio frame recognition technology. This attracted the attention of many scholars and brought audio frame recognition research to a peak, during which the focus was on feature extraction. Literature [8] proposed the UBM-MAP (Universal Background Model-Maximum A Posteriori) framework for the speaker verification task, which moved audio frame recognition from the laboratory into practical use. Its main contribution is that UBM-MAP reduces the dependence of the statistical Gaussian mixture model (GMM) on the training set: only a few utterances from the speaker are needed to train the model, so it is simple and flexible to use while achieving relatively high accuracy. Subsequently, support-vector machine (SVM) technology was introduced into audio frame recognition and achieved good results [9].

Although many matching algorithms, such as GMM-SVM, have been proposed, their performance is not as good as that of GMM and GMM-UBM [10]. Following this trend, audio frame recognition has gradually moved from the laboratory stage to the practical stage. In a clean speech environment, audio frame recognition can achieve high accuracy, but in noisy environments the accuracy drops considerably, so noise has become one of the main factors limiting recognition performance. Research on noise suppression algorithms is therefore urgent. Speech enhancement technology arose in this context; its purpose is to extract the clean speech signal from noisy speech as far as possible [11]. Literature [12] proposed spectral subtraction for noise removal, and literature [13] studied Wiener filtering algorithms for noise removal. These algorithms, based on short-time spectrum estimation, are suitable for environments with relatively high signal-to-noise ratios and are simple and easy to implement, so they remain widely used.

The rapid development of very-large-scale integration (VLSI) circuit technology has made real-time speech enhancement possible. Literature [14] published a soft-decision noise removal algorithm, and literature [15] applied the Kalman filter to speech denoising. However, these traditional filters all rely on spectrum analysis, which uses the Fourier transform to map the signal into the frequency domain for analysis. Such methods work only when the signal is stationary and its spectral characteristics differ clearly from those of the noise. In real life, however, signals are often nonstationary, and the frequency bands of the signal and the noise tend to overlap, so traditional methods are increasingly unsatisfactory. The rapid development of mobile communication technology has given practical impetus to research on speech enhancement. For example, wavelet decomposition [16] has been proposed for noisy speech signals. This method builds on the mathematical analysis of wavelet decomposition, a time-frequency analysis with multiresolution characteristics, so it can capture the local characteristics of a signal in both the time and frequency domains. This property makes it well suited to analyzing nonstationary signals. It also incorporates part of the theoretical basis of spectral subtraction and is now a focus of multidisciplinary attention. However, wavelet denoising has a weakness: the noise energy must be estimated, yet the noise characteristics are often unknown. Therefore, the independent component analysis (ICA) method [17] was developed.
Its central idea is to treat a set of observed signals as linear mixtures of source signals (such as clean speech and noise) that are assumed to be mutually independent, and to separate the source signals from these observations. Because speech and noise generally satisfy this independence assumption, the method does not require knowledge of the noise characteristics.
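As a minimal illustration of wavelet denoising, and of why the noise level has to be estimated, the sketch below performs a single-level orthonormal Haar transform, estimates the noise standard deviation from the median absolute detail coefficient (σ ≈ median|d|/0.6745), soft-thresholds the details with the universal threshold σ√(2 ln N), and inverts the transform. This is a generic textbook scheme in the style of Donoho and Johnstone, not the specific method of [16]; all function names are hypothetical.

```python
import math
import statistics


def haar_forward(x):
    """One level of the orthonormal Haar DWT; len(x) must be even."""
    s = 1 / math.sqrt(2)
    approx = [(x[2 * i] + x[2 * i + 1]) * s for i in range(len(x) // 2)]
    detail = [(x[2 * i] - x[2 * i + 1]) * s for i in range(len(x) // 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Exact inverse of haar_forward."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out


def soft_threshold(c, t):
    """Shrink c toward zero by t; coefficients with |c| <= t become 0."""
    return math.copysign(max(abs(c) - t, 0.0), c)


def wavelet_denoise(x, threshold=None):
    """Denoise x by soft-thresholding its Haar detail coefficients."""
    if len(x) % 2:
        raise ValueError("signal length must be even")
    approx, detail = haar_forward(x)
    if threshold is None:
        # The noise level is unknown, so it is estimated from the details (MAD rule).
        sigma = statistics.median([abs(d) for d in detail]) / 0.6745
        threshold = sigma * math.sqrt(2 * math.log(len(x)))
    return haar_inverse(approx, [soft_threshold(d, threshold) for d in detail])
```

If the noise estimate is poor, the threshold is wrong in the same proportion, which is exactly the limitation that motivates methods such as ICA that need no noise model.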

3. Audio Frame Feature Recognition Algorithm Model

Adaptive postfiltering is a technique that adaptively corrects the speech spectrum according to the spectral characteristics of the current speech segment in order to improve the quality of the synthesized speech. To explain its underlying principle in speech coding, adaptive postfiltering is described here in terms of Wiener filtering and the auditory model of the human ear.

A central task in signal processing is to extract the signal from noise, or to suppress the accompanying noise as far as possible. One effective way to achieve this is to design a filter with optimal linear filtering characteristics.

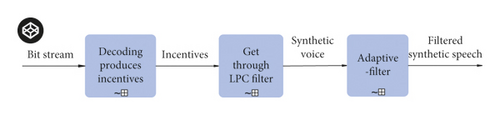

From formula (1), it can be seen that the gain of the filter is close to 1 at frequencies with a large signal-to-noise ratio (SNR). At frequencies with smaller SNR, the gain of the filter is correspondingly smaller. The postfilter of the conventional narrowband encoder is usually applied to the synthesized speech at the decoding end, as shown in Figure 1.
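As a rough illustration of the behaviour described for formula (1), the sketch below assumes the textbook Wiener gain H(ω) = P_s(ω)/(P_s(ω) + P_n(ω)) = SNR/(1 + SNR) per frequency bin. Formula (1) itself is not reproduced here, and the function name `wiener_gain` is hypothetical.

```python
def wiener_gain(signal_psd, noise_psd):
    """Per-bin Wiener gain H = S / (S + N), i.e. SNR / (1 + SNR).

    signal_psd, noise_psd: equal-length sequences of non-negative power values.
    """
    if len(signal_psd) != len(noise_psd):
        raise ValueError("signal_psd and noise_psd must have the same length")
    gains = []
    for s, n in zip(signal_psd, noise_psd):
        total = s + n
        # A bin with no power at all is simply muted.
        gains.append(s / total if total > 0 else 0.0)
    return gains


# High-SNR bin (100:1) passes almost unchanged; low-SNR bin (1:10) is strongly attenuated.
print([round(g, 3) for g in wiener_gain([100.0, 1.0, 1.0], [1.0, 1.0, 10.0])])  # [0.99, 0.5, 0.091]
```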

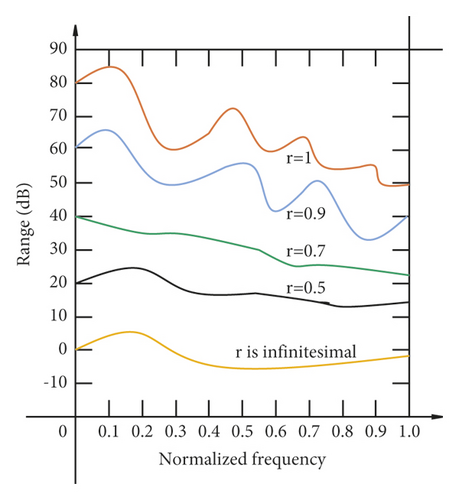

Here, $A(z) = \sum_{i=1}^{p} a_i z^{-i}$ is the transfer function of the LPC predictor, $a_i$ are the LPC predictor coefficients, $p$ is the order of the LPC predictor, and the transfer function of the corresponding LPC synthesis filter is $1/(1 - A(z))$. The scale factor $\gamma$ modifies the LPC synthesis filter into $1/(1 - A(z/\gamma))$, as shown in Figure 2.

If the weighted all-pole filter $1/(1 - A(z/\gamma))$ alone is used as a short-time postfilter, it reduces noise but introduces a low-pass spectral tilt, which can make the speech sound "muffled." Therefore, a corresponding all-zero filter $1 - A(z/\beta)$ is introduced to reduce the spectral tilt.

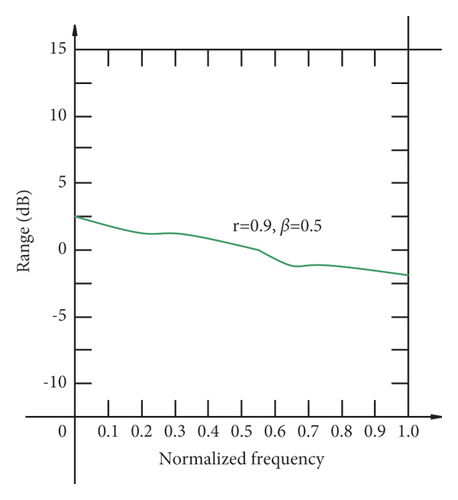

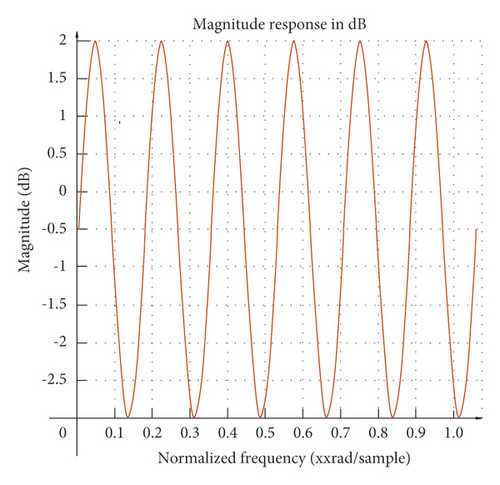

From formula (3), in the logarithmic domain the frequency response of H(z) is the difference between the frequency responses of two weighted LPC synthesis filters, which removes part of the spectral tilt, as shown in Figure 3.
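To make the log-domain argument concrete, the sketch below evaluates and applies a pole-zero short-time postfilter of the form H(z) = (1 − A(z/β))/(1 − A(z/γ)), with A(z) = Σ a_i z^{-i} as defined above. This form is an assumption consistent with the surrounding text (formula (3) is not reproduced here); the function names and coefficient values are hypothetical and illustrative, not taken from the encoder.

```python
import cmath
import math


def weight_lpc(a, g):
    """Coefficients of A(z/g): a_i * g**i for i = 1..p (bandwidth expansion)."""
    return [ai * g ** (i + 1) for i, ai in enumerate(a)]


def one_minus_a(a, w):
    """Evaluate 1 - A(z) = 1 - sum_i a_i z^-i at z = e^{jw}."""
    z_inv = cmath.exp(-1j * w)
    return 1 - sum(ai * z_inv ** (i + 1) for i, ai in enumerate(a))


def postfilter_log_response_db(a, beta, gamma, w):
    """20*log10|H(e^{jw})| as the difference of two weighted synthesis filters (in dB)."""
    synth_gamma_db = -20 * math.log10(abs(one_minus_a(weight_lpc(a, gamma), w)))
    synth_beta_db = -20 * math.log10(abs(one_minus_a(weight_lpc(a, beta), w)))
    return synth_gamma_db - synth_beta_db


def short_time_postfilter(x, a, beta, gamma):
    """y[n] = x[n] - sum_i a_i beta^i x[n-i] + sum_i a_i gamma^i y[n-i], zero initial state."""
    num = weight_lpc(a, beta)
    den = weight_lpc(a, gamma)
    y = []
    for n, xn in enumerate(x):
        acc = xn
        for i, c in enumerate(num, start=1):
            if n >= i:
                acc -= c * x[n - i]
        for i, c in enumerate(den, start=1):
            if n >= i:
                acc += c * y[n - i]
        y.append(acc)
    return y
```

With a single coefficient a = [0.9], β = 0.5, and γ = 0.8, `postfilter_log_response_db` is positive at ω = 0 and negative at ω = π, so some low-pass tilt survives; this residual tilt is what the first-order compensation filter 1 − μz^{-1} addresses.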

To further reduce the low-pass effect, a first-order filter with transfer function $1 - \mu z^{-1}$ is usually cascaded with the short-time postfilter.
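A minimal sketch of the first-order tilt compensation filter 1 − μz^{-1} follows; it shows that a positive μ attenuates low frequencies and boosts high ones, which counteracts a low-pass tilt. The function names and the value μ = 0.3 are hypothetical, chosen only for illustration.

```python
import math


def tilt_compensate(x, mu):
    """First-order FIR 1 - mu*z^-1: y[n] = x[n] - mu*x[n-1], with x[-1] taken as 0."""
    y = []
    prev = 0.0
    for sample in x:
        y.append(sample - mu * prev)
        prev = sample
    return y


def tilt_gain_db(mu, w):
    """Magnitude in dB of 1 - mu*e^{-jw}, using |1 - mu e^{-jw}|^2 = 1 - 2 mu cos(w) + mu^2."""
    return 10 * math.log10(1 - 2 * mu * math.cos(w) + mu * mu)


# Gain at DC versus Nyquist for mu = 0.3: low frequencies cut, high frequencies boosted.
print(round(tilt_gain_db(0.3, 0.0), 2), round(tilt_gain_db(0.3, math.pi), 2))  # -3.1 2.28
```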

Here, G is the adaptive gain factor of the long-time postfilter, p is the fundamental (pitch) period, 0 < λ < 1, and 0 < γ < 1.

The p poles of H(z) have phases 0, 2π/p, 4π/p, …, (p − 1)2π/p, corresponding in turn to the peaks of the pitch harmonics. The p zeros of H(z) have phases π/p, 3π/p, …, (2p − 1)π/p, corresponding to the troughs between the pitch harmonics. γ and λ vary with the degree of voicing of the speech, thus controlling the strength of long-time postfiltering according to the periodicity of the speech.
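The pole and zero phases listed above are exactly those of a comb filter of the form H(z) = G(1 + γz^{-p})/(1 − λz^{-p}): its poles satisfy z^p = λ and its zeros satisfy z^p = −γ. That closed form is an assumption inferred from the description, since the formula itself is missing here. The sketch computes the phases, applies the corresponding difference equation, and evaluates the magnitude response; all function names are hypothetical.

```python
import cmath
import math


def long_time_postfilter(x, p, gain, gamma, lam):
    """Difference equation for H(z) = G(1 + gamma z^-p) / (1 - lam z^-p):
    y[n] = lam*y[n-p] + G*(x[n] + gamma*x[n-p]), with zero initial state."""
    y = []
    for n, xn in enumerate(x):
        past_x = x[n - p] if n >= p else 0.0
        past_y = y[n - p] if n >= p else 0.0
        y.append(lam * past_y + gain * (xn + gamma * past_x))
    return y


def pole_zero_phases(p):
    """Phases of the p poles (harmonic peaks) and p zeros (troughs) of H(z)."""
    poles = [2 * math.pi * k / p for k in range(p)]
    zeros = [(2 * k + 1) * math.pi / p for k in range(p)]
    return poles, zeros


def magnitude(p, gain, gamma, lam, w):
    """|H(e^{jw})| for the assumed comb-filter form."""
    zp = cmath.exp(-1j * w * p)  # z^-p on the unit circle
    return abs(gain * (1 + gamma * zp) / (1 - lam * zp))
```

At a harmonic (ω = 0) the gain is G(1 + γ)/(1 − λ), and halfway between harmonics (ω = π/p) it falls to G(1 − γ)/(1 + λ). Setting `lam = 0` leaves only the all-zero (FIR) part, which corresponds to the pole-free long-time postfilter chosen for the wideband encoder.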

The all-pole part (denominator) of the transfer function in formula (3) corresponds to a recursive infinite impulse response (IIR) filtering operation whose effect extends into future frames, whereas the all-zero part (numerator) corresponds to a nonrecursive finite impulse response (FIR) filtering operation whose effect stays essentially within the current frame. Therefore, in practice, a very small λ is generally chosen, or even λ = 0. In the postfilter design of this wideband embedded speech encoder, the long-time postfilter has no poles.

For an analysis-by-synthesis encoder such as the CELP-based model, the optimal excitation parameters are searched in the perceptually weighted domain by minimizing the mean square error between the input speech and the synthesized speech.

Here, A′(z) is the linear prediction (LP) filter, and γ1 and γ2 are control factors. The quantization noise (usually assumed to be white) is thus weighted by 1/W′(z), which shapes the noise spectrum so that it has a formant (resonance peak) structure similar to that of the input speech signal.

$A(z)$ is computed from the pre-emphasized signal, so the spectral tilt of $1/A(z/\gamma_1)$ is smaller than it would be if $A(z)$ were computed directly from the input speech. At the same time, the synthesized speech must be de-emphasized at the decoder by $1/P(z)$. The spectral shaping of the quantization error is therefore $W^{-1}(z)P^{-1}(z)$, that is, $1/A(z/\gamma_1)$.

Although the noise spectrum is shaped by $1/A(z/\gamma_1)$, experiments show that audible noise still remains in the synthesized speech, especially at low bit rates, so a postfilter must be introduced at the decoder.

Therefore, applying the long-time postfilter to the prediction error (residual) signal works better than applying it to the speech signal itself. Moreover, the control factor of the long-time postfilter depends on the degree of voicing of the speech, so computing it in the residual signal domain yields more accurate values.

The postfilter design in G.729 supports this idea: the synthesized speech is first passed through the short-time predictor to obtain the residual signal, the long-time postfilter is then applied to this residual signal, and finally the short-time postfilter is applied.

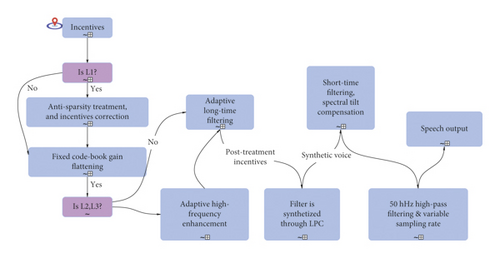

Figure 4 shows the postprocessing flowchart of this wideband embedded encoder, and the modules are described in detail in the following.

Antisparse processing is performed only at the 8 kb/s rate; it acts on the fixed codebook vector to improve perceptual quality at low bit rates. If only the 8 kb/s stream is received at the decoder, the fixed codebook vector has only three nonzero samples per subframe (it is "sparse"), and this sparsity makes the speech sound unnatural. To reduce this artificial-sounding effect, antisparse processing is applied to the algebraic (fixed) codebook vector.

Here, Ev and Ec are the energies of the adaptive codebook and fixed codebook contributions, respectively, and λ is the voicing factor computed from them. The closer λ is to 0, the closer the frame is to purely voiced speech; the closer λ is to 1, the closer the frame is to purely unvoiced speech.

Here, p is the order of the linear prediction coefficients, $isp_n$ denotes the ISP coefficients of the current frame, and $isp_{n-1}$ those of the previous frame; the stability factor Q is computed from them. The closer Q is to 1, the more stable the frame.

- (1) If the fixed codebook gain $g_c$ is smaller than $g_0$, the algorithm scales $g_c$ up to obtain tmp and compares tmp with $g_0$; if tmp > $g_0$, it sets tmp = $g_0$. The initial value of $g_0$ is 0.

- (2) If the fixed codebook gain $g_c \ge g_0$, the algorithm computes tmp = 0.84 $g_c$ and compares tmp with $g_0$; if tmp < $g_0$, it sets tmp = $g_0$.

- (3) The algorithm updates $g_0$ by setting $g_0$ = tmp.

- (4) Finally, the smoothed fixed codebook gain is obtained from $g_0$ and $g_c$.
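The gain-smoothing steps can be sketched as follows. Several details are assumptions, because the corresponding formulas are missing from the text; they are modeled on the AMR-WB noise-enhancement step that this procedure resembles. The voicing factor is taken as λ = Ec/(Ev + Ec), which equals 0.5(1 − r_v) with r_v = (Ev − Ec)/(Ev + Ec); the step-(1) increase factor is taken as 1.19 (about +1.5 dB, mirroring the 0.84 ≈ −1.5 dB factor of step (2)); and the final combination is taken as λQ·g_0 + (1 − λQ)·g_c. The stability factor Q is passed in directly rather than computed from the ISP coefficients, and all names are hypothetical.

```python
def voicing_factor(e_v, e_c):
    """Assumed lambda = Ec / (Ev + Ec): 0 for purely voiced, 1 for purely unvoiced frames."""
    total = e_v + e_c
    # Undefined when both energies are zero; 0.5 is used as a neutral value.
    return e_c / total if total > 0 else 0.5


def smooth_fixed_codebook_gain(g_c, g_0, lam, q, up=1.19, down=0.84):
    """One subframe of gain smoothing.

    g_c: current fixed codebook gain; g_0: modified gain carried over from the
    previous subframe (initially 0); lam: voicing factor; q: stability factor in [0, 1].
    Returns (smoothed_gain, updated_g_0).
    """
    if g_c < g_0:
        # Step (1): raise g_c by the assumed factor `up`, but not above g_0.
        tmp = min(g_c * up, g_0)
    else:
        # Step (2): lower g_c by 0.84, but not below g_0.
        tmp = max(g_c * down, g_0)
    # Step (3): tmp becomes the new g_0 for the next subframe.
    new_g_0 = tmp
    # Step (4), assumed form: strong smoothing only for unvoiced (lam -> 1), stable (q -> 1) frames.
    s_m = lam * q
    smoothed = s_m * new_g_0 + (1.0 - s_m) * g_c
    return smoothed, new_g_0


# Usage: carry g_0 across subframes, starting from 0 as in step (1).
g_0 = 0.0
for g_c in [1.0, 1.1, 0.7]:
    smoothed, g_0 = smooth_fixed_codebook_gain(g_c, g_0, lam=voicing_factor(0.2, 0.8), q=0.9)
```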

The fixed codebook describes the fine detail of speech; its energy is concentrated mainly in the high-frequency part, with less energy in the low-frequency part. For purely voiced speech, adjusting the low- and high-frequency energy of the fixed codebook within a reasonable range can improve the perceived quality of speech. The encoder uses a high-frequency enhancement filter to enhance the first and second layers, as shown in Figure 5.

T is the integer pitch (fundamental) delay of the current subframe, and r = 0.5. g is the adaptive control factor, with 0 < g < 1; it controls the long-time postfilter adaptively as follows: if the current subframe excitation is strongly correlated with past excitation (for example, in voiced speech), g tends to 1; conversely, if the current subframe excitation is weakly correlated with past excitation (for example, in unvoiced speech), g tends to 0, so the signal effectively bypasses the long-time postfilter. The values of T and g are calculated by the following procedure.

Here, r(n) is the current subframe excitation, and r_k(n) is the excitation code vector obtained by interpolation around T1. r_k(n) is first obtained with an interpolation filter of length 33; after the optimal fractional pitch delay T is found, r_k(n) is recomputed with an interpolation filter of length 129. If R(k) computed with the length-129 filter is larger than the value obtained with the length-33 filter, the length-129 filter is chosen.
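The computation of T and g can be sketched as follows, under simplifying assumptions: only integer lags are searched (the length-33 and length-129 fractional interpolation filters are omitted), g is the correlation between the residual and its delayed copy divided by the delayed energy and clipped to [0, 1], and the filter is taken in the G.729-style form y(n) = (r(n) + ρ·g·r(n − T))/(1 + ρ·g), with ρ playing the role of the constant r = 0.5. None of these formulas appears explicitly in the text, and all function names are hypothetical.

```python
import math


def normalized_correlation(r, t):
    """Score for lag t: sum r(n) r(n-t) / sqrt(sum r(n-t)^2), over n = t..len(r)-1."""
    num = sum(r[n] * r[n - t] for n in range(t, len(r)))
    den = math.sqrt(sum(r[n - t] ** 2 for n in range(t, len(r))))
    return num / den if den > 0 else -math.inf


def best_integer_delay(r, t_min, t_max):
    """Integer lag in [t_min, t_max] with the largest normalized correlation."""
    return max(range(t_min, t_max + 1), key=lambda t: normalized_correlation(r, t))


def pitch_gain(r, t):
    """Simplified control factor g: correlation over delayed energy, clipped to [0, 1]."""
    num = sum(r[n] * r[n - t] for n in range(t, len(r)))
    den = sum(r[n - t] ** 2 for n in range(t, len(r)))
    return min(max(num / den, 0.0), 1.0) if den > 0 else 0.0


def residual_long_time_postfilter(r, t, g, rho=0.5):
    """Assumed G.729-style filter: y(n) = (r(n) + rho*g*r(n - t)) / (1 + rho*g)."""
    c = rho * g
    return [(r[n] + c * (r[n - t] if n >= t else 0.0)) / (1.0 + c) for n in range(len(r))]
```

For a strongly periodic residual the search returns the period and g approaches 1, while for an aperiodic residual g approaches 0 and the output equals the input, matching the behaviour described for voiced and unvoiced excitation.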

The core layer of this embedded encoder is the CELP model. At the same time, it is necessary to be able to handle both wideband speech (bandwidth 50–7000 Hz) and narrowband speech (bandwidth 300–4000 Hz). In order to improve the quality of synthesized speech for these two types of input speech, this study tries to introduce the traditional short-time postfilter.

Figure 7(a) shows the frequency response of the synthesis filter for one frame of speech, and Figure 7(b) shows the frequency response of the short-time postfilter. As Figure 7(b) shows, this filter tracks the formants (resonance peaks) of the speech spectrum and attenuates the energy between them, but it introduces a spectral tilt. Adding the spectral tilt compensation filter reduces this tilt, as shown in Figure 7(c). Therefore, after passing through the short-time postfilter, the synthesized speech also undergoes spectral tilt compensation and adaptive gain control; these three modules operate as a single unit.

Here, μ is the skew (tilt) factor, and $r_i$ is a constant that equals 0.9 under one condition and 0.2 under the other.
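One way the skew factor and the two constants could fit together is sketched below. The rule is borrowed, as an assumption, from the G.729 postfilter: the first normalized autocorrelation k1 = r_h(1)/r_h(0) of the short-time postfilter's truncated impulse response measures its tilt, the constant is 0.9 when k1 > 0 (low-pass tilt) and 0.2 otherwise, and μ = r_i·k1 then drives the compensation filter 1 − μz^{-1}. The conditions actually used in this encoder cannot be recovered from the text, and the function name is hypothetical.

```python
def skew_factor(h):
    """Assumed G.729-style tilt estimate mu = r_i * k1 from an impulse response h.

    k1 = r_h(1) / r_h(0) is the first normalized autocorrelation of h;
    r_i = 0.9 for a low-pass tilt (k1 > 0) and 0.2 otherwise.
    """
    r0 = sum(v * v for v in h)
    if r0 == 0.0:
        return 0.0
    r1 = sum(h[n] * h[n - 1] for n in range(1, len(h)))
    k1 = r1 / r0
    r_i = 0.9 if k1 > 0 else 0.2
    return r_i * k1
```

For example, an impulse response starting [1.0, 0.5] gives k1 = 0.4 and μ = 0.36, so the compensation filter lifts the high frequencies that the postfilter attenuated.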

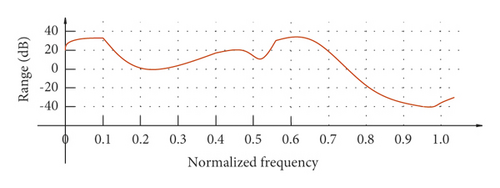

- (1)

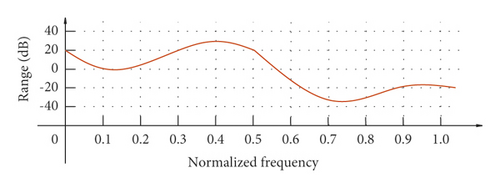

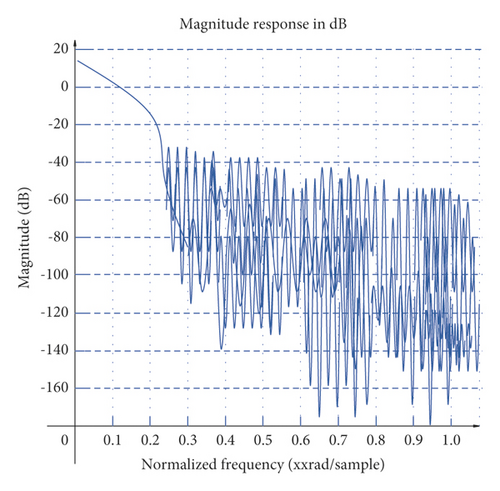

The algorithm downsamples from 16 kHz to 12.8 kHz. We set L = 4 and M = 5 (4/5 downsampling), so after conversion the sampling rate is 12.8 kHz and each speech frame shrinks from 320 to 256 samples. The normalized cutoff frequency $\omega_c$ of h(n) is 0.2π, the filter length is N = 120, and the amplitude response is shown in Figure 8.

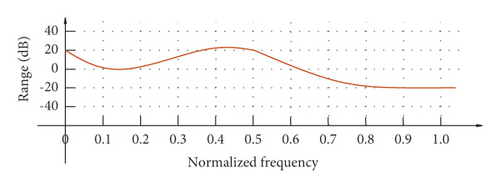

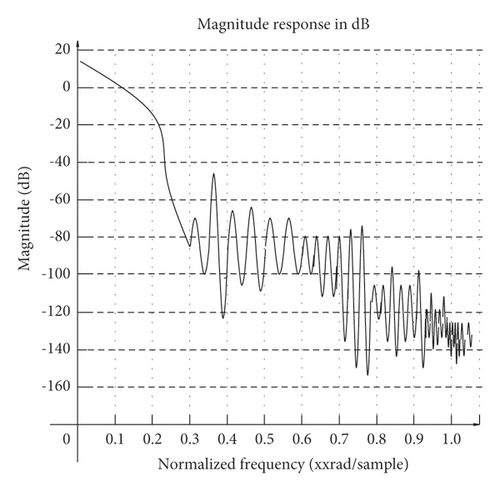

- (2)

The algorithm upsamples from 8 kHz to 12.8 kHz. We set L = 8 and M = 5 (8/5 upsampling), so the converted sampling rate is 12.8 kHz and each speech frame grows from 160 to 256 samples. The normalized cutoff frequency $\omega_c$ of h(n) is 0.125π, the filter length is N = 256, and the amplitude-frequency response is shown in Figure 9.

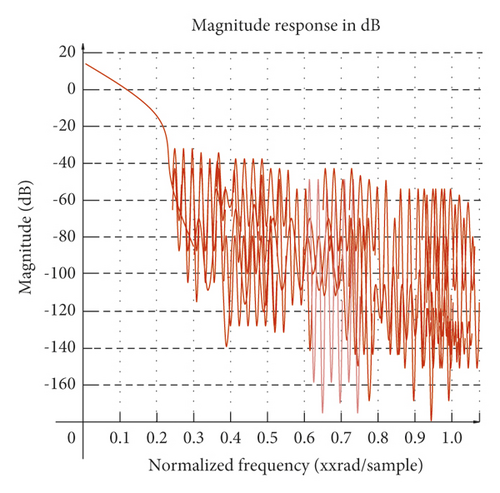

- (3)

The algorithm upsamples from 12.8 kHz to 16 kHz. 5/4 upsampling is performed (L = 5, M = 4), so the converted sampling rate is 16 kHz and each speech frame grows from 256 to 320 samples. The normalized cutoff frequency $\omega_c$ of h(n) is 0.2π, the filter length is N = 120, and the amplitude response is shown in Figure 10.

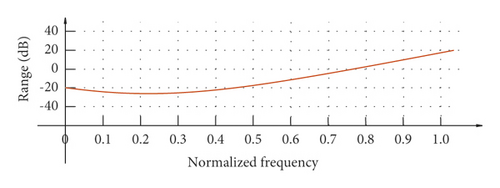

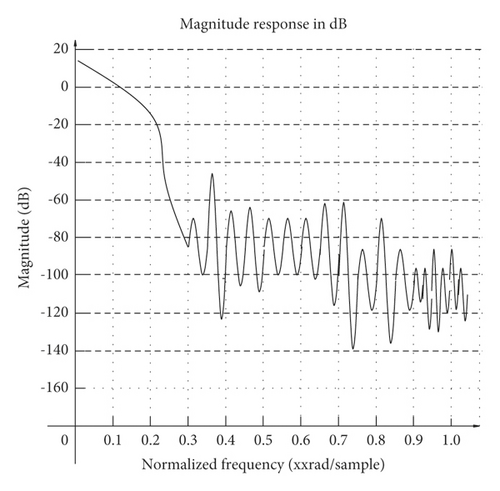

- (4)

The algorithm downsamples from 12.8 kHz to 8 kHz. 5/8 downsampling (L = 5, M = 8) is applied to the speech signal sampled at 12.8 kHz, so the converted sampling rate is 8 kHz and each speech frame shrinks from 256 to 160 samples. The normalized cutoff frequency $\omega_c$ of h(n) is 0.125π, the filter length is N = 240, and the amplitude-frequency response is shown in Figure 11.
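The four conversions above follow the standard rational L/M resampling scheme: insert L − 1 zeros between samples, low-pass filter with gain L and cutoff π/max(L, M), then keep every M-th sample. The sketch below implements that scheme with a Hamming-windowed sinc filter (the window choice is an assumption; the text does not say how h(n) was designed) and checks that the L, M, frame lengths, and cutoffs stated for the four cases follow from the sampling rates. Function names are hypothetical.

```python
import math
from fractions import Fraction


def lowpass_fir(num_taps, cutoff, gain):
    """Hamming-windowed sinc (num_taps >= 2); `cutoff` is the normalized cutoff in units of pi."""
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = cutoff if k == 0 else math.sin(math.pi * cutoff * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(gain * ideal * window)
    return taps


def resample(x, up, down, num_taps):
    """Rational L/M resampling: zero-stuff by L, low-pass filter, keep every M-th sample."""
    h = lowpass_fir(num_taps, cutoff=1.0 / max(up, down), gain=up)
    y = []
    for m in range(len(x) * up // down):
        idx = m * down  # position on the zero-stuffed (upsampled) grid
        acc = 0.0
        for k, hk in enumerate(h):
            j = idx - k
            # Only every L-th position of the zero-stuffed signal is nonzero.
            if j >= 0 and j % up == 0 and j // up < len(x):
                acc += hk * x[j // up]
        y.append(acc)
    return y


def conversion_plan(fs_in, fs_out, frame_in):
    """Return (L, M, output frame length, cutoff in units of pi) for fs_in -> fs_out."""
    ratio = Fraction(fs_out, fs_in)
    up, down = ratio.numerator, ratio.denominator
    return up, down, frame_in * up // down, Fraction(1, max(up, down))


for fs_in, fs_out, frame in [(16000, 12800, 320), (8000, 12800, 160),
                             (12800, 16000, 256), (12800, 8000, 256)]:
    up, down, frame_out, cutoff = conversion_plan(fs_in, fs_out, frame)
    print(f"{fs_in} -> {fs_out} Hz: L={up}, M={down}, {frame} -> {frame_out} samples, "
          f"cutoff {float(cutoff)}*pi")
```

Choosing the cutoff as π/max(L, M) both removes the spectral images created by zero-stuffing and prevents aliasing after decimation, which is why the stated cutoffs are 0.2π whenever 5 is the larger factor and 0.125π whenever 8 is.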

4. Evaluation of Multimedia Popular Music Teaching Effect Based on Audio Frame Feature Recognition

The music teaching system provides a variety of music learning services, online guidance, virtual environment learning, and intelligent evaluation. In order to realize its functions, the entire platform adopts a five-layer architecture, and from bottom to top, they are as follows: access layer, data processing layer, data storage layer, scene management layer, and application layer, as shown in Figure 12.

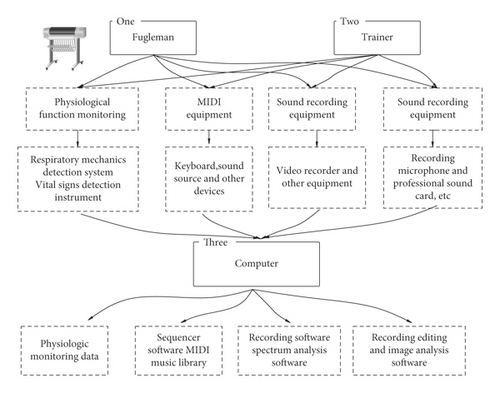

The system builds a corresponding database for students. Drawing on traditional teaching experience, this study quantitatively analyzes the teaching content at all levels. Statistical analysis of large amounts of data can provide stronger reference data for teaching and training by teachers and students. The framework of the system's external facilities and equipment is shown in Figure 13.

The audio frame feature recognition effect and the teaching effect of the proposed system are evaluated, and the results are shown in Tables 1 and 2.

Table 1: Audio frame recognition results.

| No. | Audio frame recognition |
|---|---|
| 1 | 92.44 |
| 2 | 93.31 |
| 3 | 94.62 |
| 4 | 94.72 |
| 5 | 90.97 |
| 6 | 91.16 |
| 7 | 95.15 |
| 8 | 91.18 |
| 9 | 93.39 |
| 10 | 89.98 |
| 11 | 95.92 |
| 12 | 89.06 |
| 13 | 90.93 |
| 14 | 94.99 |
| 15 | 94.61 |
| 16 | 95.25 |
| 17 | 95.39 |
| 18 | 95.00 |
| 19 | 94.88 |
| 20 | 93.30 |
| 21 | 92.34 |
| 22 | 91.24 |
| 23 | 90.44 |
| 24 | 90.26 |
| 25 | 94.89 |
| 26 | 90.11 |
| 27 | 95.04 |
| 28 | 92.59 |
| 29 | 91.64 |
| 30 | 94.67 |
| 31 | 94.68 |
| 32 | 93.94 |
| 33 | 95.77 |
| 34 | 94.49 |
| 35 | 95.73 |
| 36 | 89.39 |

Table 2: Teaching effect results.

| No. | Teaching effect |
|---|---|
| 1 | 88.07 |
| 2 | 85.26 |
| 3 | 89.39 |
| 4 | 89.74 |
| 5 | 87.39 |
| 6 | 82.90 |
| 7 | 84.06 |
| 8 | 89.92 |
| 9 | 94.00 |
| 10 | 85.92 |
| 11 | 90.00 |
| 12 | 91.31 |
| 13 | 85.73 |
| 14 | 92.34 |
| 15 | 89.48 |
| 16 | 82.45 |
| 17 | 89.44 |
| 18 | 89.01 |
| 19 | 83.84 |
| 20 | 89.57 |
| 21 | 92.83 |
| 22 | 89.29 |
| 23 | 84.02 |
| 24 | 86.10 |
| 25 | 91.00 |
| 26 | 90.01 |
| 27 | 88.85 |
| 28 | 83.34 |
| 29 | 91.33 |
| 30 | 92.88 |
| 31 | 91.33 |
| 32 | 85.89 |
| 33 | 83.57 |
| 34 | 89.03 |
| 35 | 89.03 |
| 36 | 85.95 |
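To summarize Tables 1 and 2 numerically, the two columns can be reduced to their count, range, mean, and sample standard deviation with Python's standard `statistics` module. The lists below are copied from the tables in order of sample number; the variable and function names are illustrative.

```python
import statistics

# Values copied from Tables 1 and 2 (samples No. 1 to 36, in order).
recognition = [92.44, 93.31, 94.62, 94.72, 90.97, 91.16, 95.15, 91.18, 93.39, 89.98,
               95.92, 89.06, 90.93, 94.99, 94.61, 95.25, 95.39, 95.00, 94.88, 93.30,
               92.34, 91.24, 90.44, 90.26, 94.89, 90.11, 95.04, 92.59, 91.64, 94.67,
               94.68, 93.94, 95.77, 94.49, 95.73, 89.39]
teaching = [88.07, 85.26, 89.39, 89.74, 87.39, 82.90, 84.06, 89.92, 94.00, 85.92,
            90.00, 91.31, 85.73, 92.34, 89.48, 82.45, 89.44, 89.01, 83.84, 89.57,
            92.83, 89.29, 84.02, 86.10, 91.00, 90.01, 88.85, 83.34, 91.33, 92.88,
            91.33, 85.89, 83.57, 89.03, 89.03, 85.95]


def summarize(values):
    """Return (count, min, max, mean, sample standard deviation)."""
    return (len(values), min(values), max(values),
            statistics.mean(values), statistics.stdev(values))


for name, values in [("Audio frame recognition", recognition), ("Teaching effect", teaching)]:
    n, lo, hi, mean, sd = summarize(values)
    print(f"{name}: n={n}, range {lo:.2f} to {hi:.2f}, mean {mean:.2f}, SD {sd:.2f}")
```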

The results in Tables 1 and 2 indicate that the multimedia popular music system based on audio frame feature recognition technology proposed in this study performs well: across the 36 samples, audio frame recognition scores range from 89.06 to 95.92, and teaching effect scores range from 82.45 to 94.00. The system can therefore be applied in actual teaching in the future.

5. Conclusions

Popular music is diverse: some of it is suitable for college students to appreciate, and some should indeed be kept away from them. Precisely because popular music is so uneven in quality, educators often worry that young students will be harmed and therefore reject it. However, given the influence of society as a whole, such rejection in education does not reduce the impact of popular music on college students. In the past, theoretical circles' rejection and criticism of popular music were partly shaped by the opposition between Eastern and Western ideologies. Critics subconsciously assumed that anything imported from the West was corrupt and bad, and they regarded popular music as purely Westernized in origin, content, and form; they therefore argued that, as a socialist country, we should resist it and protect young people from this decadent culture. This view persisted for a long time before the reform and opening up. This study combines audio frame feature recognition technology to evaluate the effect of multimedia popular music teaching, improve its quality, strengthen the role of popular music in students' growth, and promote students' physical and mental health.

Conflicts of Interest

The author declares no competing interests.

Acknowledgments

This study was sponsored by the Shandong University of Arts.

Open Research

Data Availability

The labeled dataset used to support the findings of this study is available from the corresponding author upon request.