Short-Term Traffic Prediction considering Spatial-Temporal Characteristics of Freeway Flow

Abstract

This paper presents a short-term traffic prediction method, which takes the historical data of upstream points and prediction point itself and their spatial-temporal characteristics into consideration. First, the Gaussian mixture model (GMM) based on Kullback–Leibler divergence and Grey relation analysis coefficient calculated by the data in the corresponding period is proposed. It can select upstream points that have a great impact on prediction point to reduce computation and increase accuracy in the next prediction work. Second, the hybrid model constructed by long short-term memory and K-nearest neighbor (LSTM-KNN) algorithm using transformed grey wolf optimization is discussed. Parallel computing is used in this part to reduce complexity. Third, some meaningful experiments are carried out using real data with different upstream points, time steps, and prediction model structures. The results show that GMM can improve the accuracy of the multifactor models, such as the support vector machines, the KNN, and the multi-LSTM. Compared with other conventional models, the TGWO-LSTM-KNN prediction model has better accuracy and stability. Since the proposed method is able to export the prediction dataset of upstream and prediction points simultaneously, it can be applied to collaborative management and also has good potential prospects for application in freeway networks.

1. Introduction

Intelligent transportation system (ITS) has become an effective way to reduce pollution and improves the performance of freeways, while the short-term traffic flow prediction is an important part to support the smart management and control of freeways. The trend of short-term traffic flow prediction is changing from parametric statistical models to nonparametric models and mixed models. Time-series methods were widely used in parametric statistical models, including exponential smoothing [1–3], moving average [4, 5], and autoregressive integrated moving average (ARIMA) model [6–8]. Kalman filtering was also used for traffic flow prediction, such as adaptive Kalman filter [9–11], hybrid dual Kalman filter [12], and noise-identified Kalman filter [13]. With the rapid development of ITS and improvement of data quality, more nonparametric prediction methods are used in the prediction of traffic flow. K-Nearest Neighbor (KNN) nonparametric regression, a nonlinear prediction method, was used to calculate Euclidean distance to find the nearest neighbor for prediction [14]. The improved Bayesian combination model was proposed to increase the accuracy of prediction [15]. Support vector machines (SVM) were also used considering the weak sensitivity to outliers [16]. The combined algorithm based on wavelet packet analysis and least square support vector machines was used to resolve the uncertainty and randomness of data [17]. Particle swarm optimization (PSO) and other optimization algorithms were applied to SVM because of small model calculation and good prediction performance [18]. With the development of artificial intelligence (AI), deep learning models have been widely used in traffic prediction. Smith and Demetsky [19] used backpropagation (BP) neural network to do the prediction. Optimization algorithms such as PSO and genetic algorithm (GA) were also applied to BP, and the effect is obvious [20, 21]. Recurrent neural network (RNN) can realize long-term memory calculation and was used in prediction, but it had the problem of gradient explosion [22]. Long short-term memory (LSTM) network was proposed to solve it by using a forget gate [23, 24], which was not only used in natural language processing [25], for example, language generation [26], text classification [27], and phoneme classification [28], but also in prediction fields, such as short-term traffic flow prediction [29], housing load prediction [30], and pedestrian trajectory prediction [31]. Furthermore, improvements and combinations with other models have been proposed in many fields, from application in large-scale data problems [32] to the prediction of traffic flow, such as using GA to optimize the LSTM hyperparameters to get better performance [33]. The comparison of typical machine learning models is shown in Table 1.

| The basic model | Model performance | Improved models |

|---|---|---|

| Backpropagation neural network (BP) | Advantages [34] | GA-BP [21], PSO-BP [20], EEMD-IAGA-BP [35] |

| (1) Self-adaptive self-learning | ||

| (2) Strong nonlinear mapping ability | ||

| (3) Strong generalization ability | ||

| Disadvantages [34] | ||

| (1) Slow convergence speed down | ||

| (2) Easy to fall into the local optimal solution | ||

| Least-squares support vector machines (LSSVM) | Advantages [36] | W-LSSVM [17], PSO-SVM [18], EMD-GPSO-SVM [37] |

| (1) Suitable for small datasets | ||

| (2) Simple and convenient | ||

| (3) Strong nonlinear generalization ability | ||

| Disadvantages [36] | ||

| (1) Unable to handle large datasets | ||

| (2) Sensitive to missing values | ||

| Long short-term memory (LSTM) | Advantages [38] | GA-LSTM [33], SVD-PSO-LSTM [39], KNN-LSTM [40] |

| (1) High precision | ||

| (2) Solve the problem of RNN gradient | ||

| Disadvantages [38] | ||

| (1) Time-consuming calculation | ||

| (2) Large consumption of hardware resources | ||

| (3) Too many parameters, easy to overfit | ||

Deep learning models are widely used in traffic flow prediction, especially in short-term prediction [41]. However, traffic flow has strong spatial-temporal characteristics on time series [42, 43]. More attention was paid to this characteristic in recent years’ research of short-term traffic flow prediction [44–46]. Luo et al. [40] proposed a spatial-temporal traffic flow prediction model with KNN and LSTM to screen highly correlated upstream points and produced the prediction. Ma et al. put forward a method to select input data for daily traffic flow forecasting through contextual mining and intraday pattern recognition [47] and produced the daily traffic flow forecasting with CNN and LSTM [48]. Supervisory learning was used to mine the relationship between the factors of historical data and current traffic flow to train the predictor in advance so as to reduce the predicting time [49]. In addition, the match-then-predict method [50] and the fuzzy hybrid framework [51] with dynamic weights by mining spatial-temporal correlations were both proposed. Attention mechanisms were also combined in LSTM to increase the accuracy of prediction [52]. These methods that combine various factors using attention mechanism can reasonably allocate limited resources, increase the efficiency, and reduce computation.

In this paper, we propose the short-term traffic flow prediction model considering the spatial-temporal characteristics using LSTM and KNN under the concept of attention mechanism. First, the Gaussian mixture model (GMM) is used to select the upstream detection points to produce the prediction. Two parameters are used for the classification: one is the Kullback–Leibler Divergence (KL), also known as the relative entropy, which reflects the difference in the distribution of two datasets through approximate calculations, especially for large-sample traffic data. The other is the grey relation analysis (GRA) coefficient, which reflects the correlation between two groups of normalized data after similarity analysis. Second, the hybrid model of LSTM and KNN is proposed to produce the prediction using the selected data. LSTM is used to predict the traffic flow of upstream points as the training dataset of KNN. To solve the problem of time lag, input time of upstream data is changed in the model according to the space distance between the input point and the prediction point and the average speed of traffic flow. Moreover, transformed grey wolf optimizer (TGWO) is used to optimize key parameters, and Savitzky-Golay (SG) filter smoothing is used to reduce the noise in the model to improve the performance. The proposed TGWO-LSTM-KNN prediction model in this paper gives greater consideration to the spatial-temporal characteristic of freeway traffic flow to improve the accuracy of prediction and reduces the complexity of computation by selecting and preprocessing input data.

The rest of this paper is organized as follows. Section 2 introduces the methodology of the proposed model. Section 3 carries out the experiments and analysis of the proposed model with real-world traffic flow data. Section 4 presents the conclusions and the prospects of the research. The abbreviations used in the rest of the paper are listed in Table 2.

| Name | Abbreviation |

|---|---|

| Long short-term memory | LSTM |

| K-nearest neighbor algorithm | KNN |

| Kullback–Leibler | KL |

| Grey relation analysis | GRA |

| Gaussian mixture model | GMM |

| Expectation maximization | EM |

| Transformed grey wolf optimization | TGWO |

| Support vector machines | SVM |

| Backpropagation neural network | BP |

| Root mean square error | RMSE |

| Mean absolute error | MAE |

| Mean absolute percentage error | MAPE |

2. Methodology

2.1. Framework

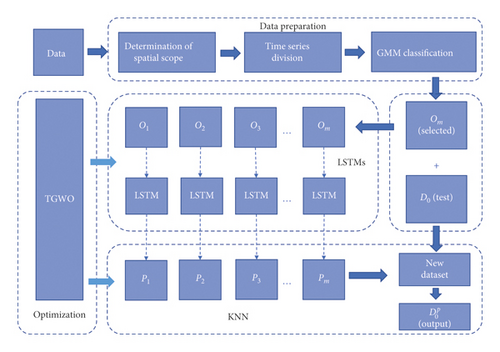

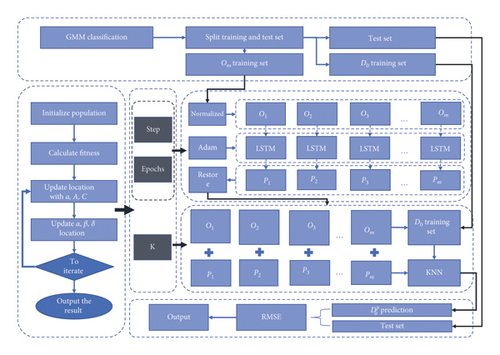

This paper proposes TGWO-LSTM-KNN with the GMM classification model, which includes two parts: data preparation and prediction. GMM is used to choose the input data in the data preparation part considering the spatial-temporal characteristics of freeway traffic flow, while the prediction part is composed of LSTM parallel computing module, KNN module, and TGWO module. The framework of the proposed model is shown in Figure 1.

2.1.1. Data Preparation

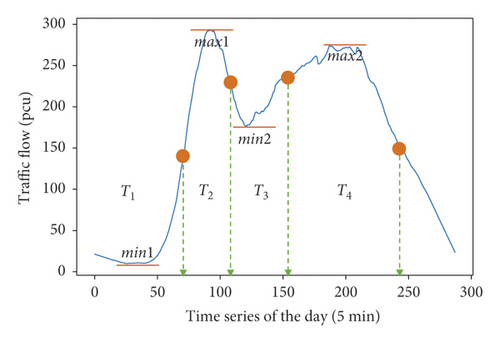

In the freeway network, the traffic flow of upstream correlates with the prediction point flow, which is considered as spatial correlation. Moreover, the traffic flow of the prediction point changes over time inter- and intraday, and each specific period may have different patterns, such as the morning peak hour and the off-peak hour. Therefore, time series are divided into different parts according to the flow patterns, which will help to improve the accuracy of prediction. The temporal characteristic of prediction point flow is observed through a flow chart. Then set the midpoint of two adjacent extreme points and complete the time series division task.

-

Step 1: use time step and speed to determine the space range. Note the upstream points within the scope as O1 ~ Om.

-

Step 2: divide day time into T1 ~ Tη.

-

Step 3: construct dataset. Divide the dataset into working days and nonworking days. Change the input time of upstream data to meet the time lag of the prediction point considering the distance and travel speed.

-

Step 4: calculate the KL divergence and the GRA coefficient in Ti of working days and nonworking days.

-

Step 5: input the KL divergence and the GRA coefficient into GMM for binary classification. O1 ~ Om are divided into two groups in each Ti. The group of points with the KL divergence close to 0 and the GRA coefficient close to 1 is used as the strongly related section of the prediction point for the next prediction.

2.1.2. Prediction

-

Step 1: use TGWO to optimize the steps and epochs in LSTM, K value in KNN.

-

Step 2: multithread LSTM parallel computing is used to reduce calculation by ignoring the relationship among upstream points. Each Oi is input into the corresponding LSTM module and then the output Pi set and D0 together form a new dataset.

-

Step 3: input the dataset into the KNN module to predict the traffic flow and output .

2.2. Data Preparation

There are three steps in data preparation: determination of spatial scope, time-series division, and GMM classification.

2.2.1. Determination of Spatial Scope

The accesses and ramps within the radius of the prediction point centered are selected as upstream points.

2.2.2. Time Series Division

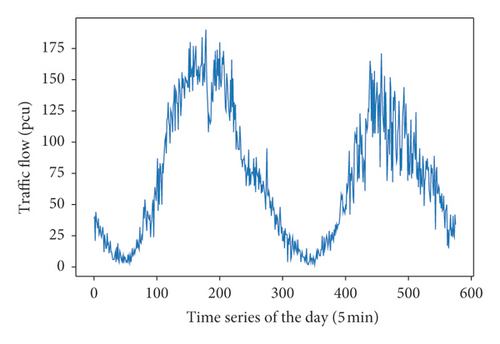

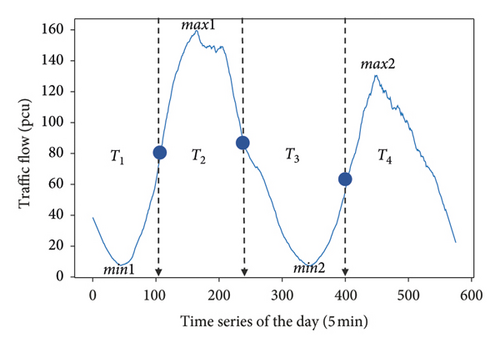

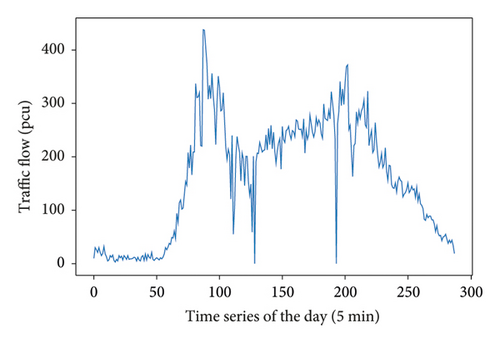

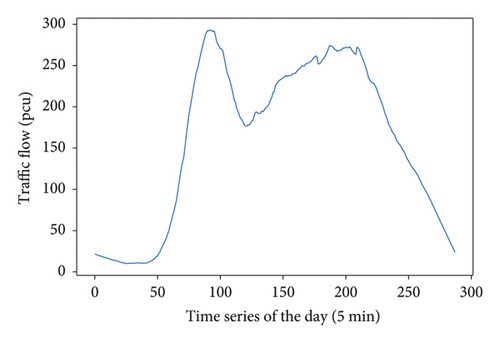

There are random fluctuations in daytime traffic flow, as shown in Figure 2. SG is a method to smooth data based on local least-squares polynomial approximation proposed by Savitzky and Golay [53], which is used to get the extreme traffic value points of the prediction point (D0) (see Figure 2). Set the point in the middle of the two adjacent extreme values, and each time series between two middle points is a time part (Ti) (see Figure 3).

2.2.3. SG Calculation Method

SG includes two parameters, which are the window length n and the order number k. For the window length n, with the increasing of n, the deviation between the processed data and the real data increases, and also the smoothness. For order number k, with the increasing of k, the deviation between the processed data and the real data decreases, and also the smoothness. According to the characteristics of highway traffic flow and existing research, the choice of n and k in this paper is 31 and 1, respectively.

The matrix is expressed as

Smoothing dataset is

2.2.4. GMM Classification

Gaussian mixture model (GMM) is a probabilistic model that assumes all the data points are generated from a mixture of a finite number of Gaussian distributions with unknown parameters [54], which is used to judge the correlation between upstream and predicted traffic flow, while EM is used to obtain the maximum likelihood estimation of GMM [55]. In this paper, KL-divergence and GRA coefficient are two parameters to do the binary classification.

The closer KL divergence is to 0, the more similar the two distributions are.

(2) GRA Coefficient. GRA is a method to judge the similarity of different datasets. Compared with traditional Pearson correlation [58], it can use a smaller amount of data to reflect the linear similarity between traffic flows [59]. The steps of GRA are as follows.

Define the prediction sequence (d0(1)GRA, d0(2)GRA, …, d0(n)GRA) as DGRA and the factor sequence (oi(1)GRA, oi(2)GRA, …, oi(n)GRA) as. OiGRA

Then use EM algorithm to calculate ϑ1, μ1, Σ1, ϑ2, μ2, Σ2.

EM-GMM pseudocode is as follows: (Algorithm 1)

-

Algorithm 1: EM-GMM pseudocode.

-

function EM − GMM(k⟵2, ϑ1, μ1, Σ1, ϑ2, μ2, Σ2, i⟵0)

-

do i⟵i + 1:

-

calculate p(x);

-

E − step:

-

-

-

-

-

-

until ln pi+1(x|ϑ, μ, Σ) − ln pi(x|ϑ, μ, Σ) < T;

-

returnϑ1, μ1, Σ1, ϑ2, μ2, Σ2;

-

end function

2.3. TGWO-LSTM-KNN Hybrid Model

TGWO-LSTM-KNN hybrid model consists of LSTMs module, KNN module, and TGWO module. The specific process is shown in Figure 4.

The TGWO-LSTM-KNN model first splits the dataset. The traffic flow of O1 ~ Om is trained in the LSTMs module to predict future traffic flow P1 ~ Pm in parallel. Then use traffic flow dataset consisting of O1 ~ Om, P1 ~ Pm, and D0 to predict in the KNN module. The step and epochs of LSTM are the parameters with great influence [25], and the coefficient K in the KNN module is also one of the key parameters. Then use the TGWO module to optimize these parameters.

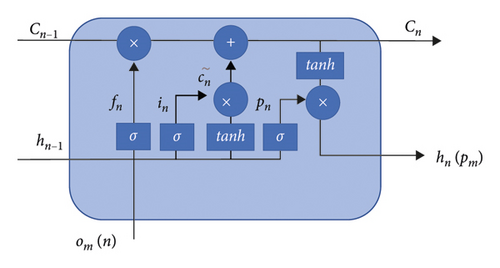

2.3.1. LSTMs Module

LSTM is a special RNN [24] with a forgetting gate. The sigmoid function is used to prevent gradient explosion and disappearance. The traffic flow data of upstream points are trained in different LSTM threads in parallel, which is defined as LSTMs. The data input time is also changed to reduce the lag of the module, which makes LSTM more accurate. The memory unit of the module is shown in Figure 5.

| Symbol | Meaning |

|---|---|

| The updated state of a memory cell | |

| in, fn, pn, cn, hn | Input gate, forgetting gate, output gate, memory cell, hidden layer output value |

| woc, wch | The weight of om(n) and the hidden layer |

| om(n) | The input of the n.th data at the m.th upstream point |

| cn−1, hn−1 | The output of the memory cell and the hidden layer at n − 1 |

| woi, whi, wci | The weight of the input gate of om(n), the hidden layer, and the memory cell |

| wof, whf, wcf | The weight of the forgetting gate of om(n), the hidden layer, and the memory cell |

| wop, whp, wcp | The weight of the output gate of om(n), the hidden layer, and the memory cell |

| bc, bi, bf, bp | Offset item |

| tanh and σ | Activation functions |

| ″∘″ | Dot product |

2.3.2. KNN Module

-

Algorithm 2: LSTM-KNN pseudocode.

-

function LSTM_KNN(step1, …, stepn, epochs1, …, epochsn, k):

-

transform dataset into MinMaxScaler(featurerange = (0, 1));

-

spilt dataset[′On′]into On trainand On test;

-

LSTMs − step:

-

thread1 : look_back⟵step1; reshape O1trainand O1testinto LSTMdatasetwith look_back;

-

model1.add(LSTM(nunit));

-

model1.dense(1);

-

model1.fit(O1train, epochs1, batchsize⟵number of days);

-

O1prediction⟵model1.predict(O1test);

-

return O1prediction;

-

⋮

-

threadn : look_back⟵stepn; reshape On train and On test into LSTMdataset with look_back;

-

modeln.add(LSTM(nunit));

-

modeln.dense(1);

-

modeln.fit(On train, epochsn, batchsize⟵number of days);

-

O1prediction⟵model1.predict(O1test);

-

return On prediction;

-

KNN − step:

-

knntest⟵stack O1prediction, …, On prediction in sequence horizontally;

-

knntrain⟵dataset;

-

knn.fit(knntrain[′On′], knntrain[′D0′]);

-

knnprediction⟵knn.predict(knntest, k);

-

restore knnprediction;

-

return knnprediction;

-

end function

The number of units in the hidden layer is nunit, and the data dimension D of LSTM in each thread is 1, so the time complexity is . On account of the parallel calculation structure, the total time does not change too much with the increasing number of threads. Compared with the original complexity , which reduces a lot of computation and improves efficiency. The time complexity of KNN is O(n), which is only related to the number of data, so the calculation speed is fast.

LSTM has good robustness in traffic prediction [60] and also improves performance by setting reasonable time lag [61] or forgetting layer. KNN can avoid large deviation directly because it calculates the closest Euclidean distance to produce the prediction [62]. These two positive aspects of robustness will support the proposed method to gain more adaptability on data fluctuations and environmental change.

2.3.3. TGWO Module

Grey wolves algorithm was put forward by scholars Mir Jalili Australia in 2014, the Grey wolf groups according to social relations are divided into four grades. Each wolf represents a candidate solution, while the most optimal solution is αT, the suboptimal solution is βT, the third optimal solution is δT, and the last is ωT. In each iteration, the three optimum solutions as αT, βT, δT determine the prey position and direct the ωT to update the position around it [63].

By using the improved adaptive convergence factor [64], the extremum can be quickly found when the step size is large in a global search. Besides, the extremum can be prevented from missing when the step size becomes smaller in the local search. The weight step size formula [64] adds the weight decreasing strategy, which can reduce unnecessary iterative processes and improve efficiency. The calculation method is as follows.

(2) Calculate Fitness. Input the element corresponding to each wolf into LSTM-KNN and compare the error. Define the optimal solution as αT, βT, δT, respectively.

3. Experiments and Analysis

3.1. Experimental Data

Whitemud Drive is an in-city highway across Edmonton, Alberta, Canada. It is 28 kilometers long with a basic speed limit of 80 kilometers per hour. As a test road, Whitemud Drive is equipped with seven traffic video cameras and seven loop detectors (VDS1017, VDS1037, VDS1034, VDS1031, VDS1029, VDS1027, and VDS1019) from west to east on the main road and gate road to observe the vehicle flow, the vehicle speed, and the vehicle density. In this paper, data of 15 working days are used as historical data for experiments.

3.2. GMM Selecting Test

VDS1019 is set as the prediction point D0, and the change of traffic flow within one day of the working day is plotted according to 5 mins (see Figure 6). To better carry out the time-division work, the data are smoothed by SG, and the image is reconstructed (see Figure 7).

Find the extreme values, set up the midpoints, and divide the time series (see Figure 8).

The time-division results are shown in Table 4.

| Time part name | Number of data | Time |

|---|---|---|

| T1 | 0–70 | 0 : 00–7 : 00 |

| T2 | 71–110 | 7 : 00–9 : 00 |

| T3 | 111–155 | 9 : 00–13 : 00 |

| T4 | 156–249 | 13 : 00–20 : 00 |

3.2.1. Reconstructing the Dataset by Time-Division

VDS1017, VDS1037, VDS1034, VDS1031, VDS1029, and VDS1027 are recorded as O1 ~ O6, their historical data on working days are divided into parts according to T1 ∼ T4, and the data in the same time part are put into the same column. Since the length of the road is 28 km and the speed limit is 80 km/h, we choose 60 km/h as the test speed. It takes 30 mins to go from VDS1017 to VDS1019. Considering the continuity of the road network, the vehicle passes through each point every 5 mins, so O2 ~ O6 is delayed by 5–25 mins for input. And the prediction point D0 is delayed by 30 mins for input. In this way, the data of each day are the delayed input to form a dataset.

Calculate KL divergence and GRA coefficient (see Table 5).

| KL/GRA result | T1 | T2 | T3 | T4 | ||||

|---|---|---|---|---|---|---|---|---|

| KL | GRA | KL | GRA | KL | GRA | KL | GRA | |

| O1 | 0.030 | 0.90 | 0.023 | 0.78 | 0.020 | 0.86 | 0.010 | 0.87 |

| O2 | 0.029 | 0.90 | 0.009 | 0.81 | 0.017 | 0.88 | 0.012 | 0.86 |

| O3 | 0.038 | 0.89 | 0.021 | 0.78 | 0.031 | 0.85 | 0.035 | 0.82 |

| O4 | 0.035 | 0.90 | 0.009 | 0.81 | 0.018 | 0.88 | 0.011 | 0.85 |

| O5 | 0.027 | 0.91 | 0.008 | 0.83 | 0.016 | 0.89 | 0.009 | 0.87 |

| O6 | 0.008 | 0.95 | 0.005 | 0.88 | 0.004 | 0.93 | 0.004 | 0.92 |

| D | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 |

Input the result table into the GMM module for classification.

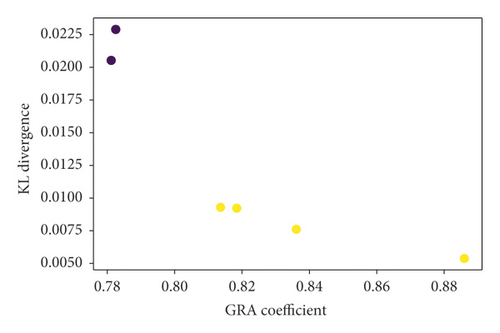

Taking T2 as an example, the GMM classification results are shown in Figure 9.

The classification results are two types. The closer the GRA coefficient is to 1, the better the correlation is, and the closer the KL divergence is to 0, the more similar the distribution is. So, choose the yellow mark points as the input points (see Figure 9). The following is the final classification results of four datasets (see Table 6).

| GMM result | T1 | T2 | T3 | T4 |

|---|---|---|---|---|

| D point | O6 | O2, O4, O5, O6 | O2, O4, O5, O6 | O1, O2, O4, O5, O6 |

The upstream points selected between T2 and T3 are the same, so T2 and T3 can be regarded as the same time part, which is 7 : 00–13 : 00, and the dataset T2+3 can be reconstructed. The next experimental data are T2+3.

3.3. Comparative Experiments at Different Upstream Points

Different upstream points are selected for model prediction and mean absolute percentage error (MAPE) comparison so as to verify the effect after classification. Results are shown in Table 7.

| Model/points | V34 | V34 + V31 | V34 + V31 + V29 | V34 + V31 + V29 + V27 | Including abandonment points |

|---|---|---|---|---|---|

| LSTM-KNN | 14.45 | 14.96 | 14.31 | 12.19 | 12.46 |

| Linear-SVM | 20.47 | 21.14 | 20.03 | 21.17 | 21.24 |

| Poly-SVM | 15.85 | 17.30 | 17.15 | 17.65 | 18.04 |

| RBF-SVM | 20.51 | 20.29 | 20.87 | 20.17 | 20.48 |

| Multi-LSTM | 14.32 | 14.51 | 11.96 | 9.51 | 23.14 |

In this dataset, it can be seen that the abandoned upstream points have little difference from the selected points (see Table 5). So it is not obvious in the accuracy improvement, which is reasonable. If in datasets with a large difference, the meaning of GMM classification operation will be reflected more.

3.4. TGWO-LSTM-KNN Experiments

3.4.1. LSTM-KNN Structural Test

Considering the operation time and efficiency, this paper constructs the following structures for testing. Since the training data are 15 days’ traffic flow, the batch size is set to 15, and the data are divided into 15 groups, which will ignore the data relationship between each group and reduce the risk of overfitting. If the batch size is too small, it is not conducive to the training model and is easy to overfitting. The test step is 3, epochs are 100, and the comparison results are shown in Table 8.

| Structure | RMSE | MAE | MAPE |

|---|---|---|---|

| Double-layer structure | 42.49 | 29.81 | 11.64 |

| LSTM (256 units) + | |||

| LSTM (128 units) | |||

| Four-layer structure | 44.87 | 31.33 | 12.25 |

| LSTM (256 units) + | |||

| Dropout (0.2) + | |||

| LSTM (128 units) + | |||

| Dropout (0.2) | |||

| Single-layer structure | 44.42 | 31.55 | 12.32 |

| LSTM (256 units) | |||

| Single-layer structure | 44.43 | 31.29 | 12.19 |

| LSTM (128 units) | |||

The results show that increasing the number of LSTM layers can improve the prediction accuracy, but it increases a lot of computing time and overfits easily. Increasing the forgetting layer (the forgetting rate is 0.2) will reduce the accuracy. In the first-layer structure, there is only a small difference between 256 units and 128 units; therefore, the single-layer 128 units structure is selected for prediction in the following LSTM.

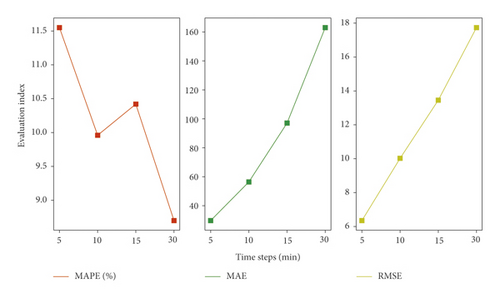

3.4.2. LSTM-KNN Time Steps Test

The model is tested under different time steps (5 mins, 10 mins, 15 mins, and 30 mins). The result shows that the model has good accuracy and stability (see Figure 10). The overall prediction accuracy shows a downward trend, and the absolute error shows an upward trend. It is worth noting that when the time step is 10 mins, the trend of accuracy will change and the error is lower than 15 mins. In general, even if the time step is different, the model still has good performance for the prediction accuracy.

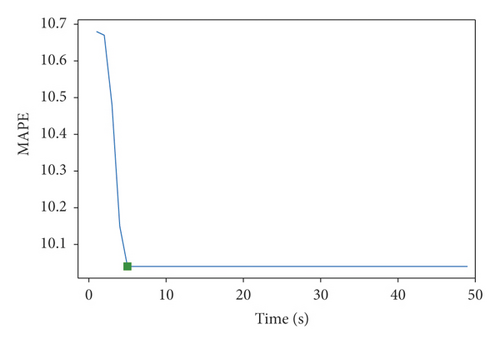

3.4.3. TGWO Optimization Test

The dataset has four upstream points, and the key parameters to be optimized are the steps of O2, O4, O5, O6, the training epochs in the LSTM module, and the K in the KNN module. So, M[i, j] = [step2, step4, step5, step6, epochs2, epochs4, epochs5, epochs6, k]. Set the upper and lower bounds: the step size ranges from 3 to 20. Training time ranges from 100 to 200. The coefficient K ranges from 5 to 50, the number of iterations t is 50, the number of wolves is N = 5, and the dimension S is 30. The training results are shown in Figure 11.

When the MAPE is 10.04 (green mark in Figure 11), it will reach the optimal solution Mbest = [5,6,5,6,120,100, 120,100,25]. The steps of O2, O5 and O4, O6 are 5 and 6, the training epochs are 120 and 100, and K is 25.

3.4.4. Comparison of TGWO-LSTM-KNN and Other Models

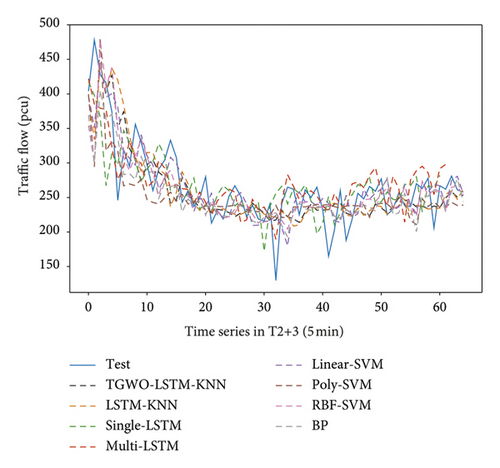

TGWO-LSTM-KNN is compared with SVM, LSTM, and BP. The parameters of TGWO-LSTM-KNN are the optimal solution. The step of LSTM-KNN is 3, K is 16, and the epochs is 100. LSTM has 256 units in the first layer and 128 units in the second layer of the double-hidden layer structure, with the step of 3 and epochs of 100. SVM uses three modes to fit, linear and poly, RBF. BP neural network is a three-layer structure, two fully connected layers, the middle increased the forgetting layer (rate is 0.2), and the epochs is 100. Results are shown in Figure 12.

The accuracy of LSTM-KNN can reach the level of the popular model. The accuracy of TGWO-LSTM-KNN can be improved by 15.27% compared with single-LSTM, 9.47% compared with BP, and 43.12% compared with poly-SVM (see Table 9). Besides, the advantage of the hybrid model is not accuracy, but being able to output the prediction set of upstream points for collaborative management.

| Model | RMSE | MAE | MAPE |

|---|---|---|---|

| TGWO-LSTM-KNN | 29.70 | 23.17 | 10.04 |

| LSTM-KNN | 44.43 | 31.29 | 12.19 |

| Single-LSTM | 36.75 | 27.88 | 11.85 |

| Multi-LSTM | 33.32 | 24.52 | 9.51 |

| BP | 37.59 | 27.59 | 11.09 |

| Linear-SVM | 38.78 | 27.03 | 21.17 |

| Poly-SVM | 45.36 | 33.87 | 17.65 |

| RBF-SVM | 40.62 | 28.29 | 20.17 |

4. Conclusions

In this paper, the TGWO-LSTM-KNN prediction model with GMM classification considering spatial-temporal characteristics under the concept of attention mechanism is proposed. The time series is divided into parts by using the temporal characteristic of the prediction point. And GMM through KL and GRA is used for further classification. The upstream points with a small difference in distribution and high linear similarity are selected to increase the accuracy and reduce the complexity. Then the hybrid model TGWO-LSTM-KNN is used to train and predict. Parallel computing is used in the LSTM module to improve efficiency.

GMM as an unsupervised model can be very flexible to classify. This model can be applied to the multifactor model to reduce complexity. KNN, as the next part of LSTM, fully combines upstream points data and prediction points data for prediction. Compared with SVM, KNN has the characteristics of fast speed and greater data processing ability, which is more suitable for multiple factors and complex data of freeway. LSTMs module can ignore the relationship between upstream points to perform the parallel computation. It can reduce the operation time and make the model more practical. TGWO is less likely to fall into local optimal solution and also has fast speed and good performance. To sum up, TGWO-LSTM-KNN with GMM classification can be better used in real freeways with complex data and multifactor with high accuracy, fast calculation speed, and strong adaptability. It can be applied in the real freeway to achieve the purpose of collaborative management.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

The authors want to thank Tianjiao Wang, Xi Chen, Xiang Wang, and Lingbin Kong for their help in the study. Thanks also should be given to the OpenITS platform (http://www.openits.cn/). The research and publication of this article were funded by the National Natural Science Foundation of China (52072129).

Open Research

Data Availability

The data used to support the findings of this study are openly available in the OpenITS platform for noncommercial purposes only and the website is https://www.openits.cn/openData1/700.jhtml.