[Retracted] Analysis of Psychological and Emotional Tendency Based on Brain Functional Imaging and Deep Learning

Abstract

Under various kinds of pressure, people experience negative emotions to different degrees, especially depression and anxiety. How to acquire psychological signals effectively and use intelligent techniques to recognize them and support decisions is a research hotspot in psychology and computer science. This paper therefore proposes a method for analyzing personal emotional tendency based on brain functional imaging and deep learning. First, an EEG forward model is established with the help of functional magnetic resonance imaging (fMRI), and the transfer matrix from signal sources on the cerebral cortex to the scalp electrodes is obtained. The activation results of an fMRI emotion experiment can then be mapped onto the three-layer head model to obtain an EEG topographic map that reflects the degree of emotional correlation. Next, data augmentation (Mixup) is combined with a three-dimensional convolutional neural network (3D-CNN) to form M-3DCNN, a classification method for emotion-related EEG topographic maps. Mixup generates virtual data, and the network is trained on the original and virtual data together; this enlarges the training set and alleviates overfitting of the 3D-CNN, which performs feature extraction and classification. Experimental analysis shows that, compared with traditional methods, the proposed method retains more of the emotion-related EEG signal and achieves higher accuracy on five-class emotion classification at the same feature dimension.

1. Introduction

Emotion often involves people’s immediate needs and subjective attitude and often has complex interaction with other psychological processes. It is a comprehensive state of thought, feeling, and behavior. As an important part of mental state, the measurement and identification of emotion have always been a problem that people want to solve. Emotion recognition generally refers to the qualitative or quantitative identification (evaluation) of a person’s emotion by means of external observation and measurement. The premise of scientific identification of emotions is to have a scientific classification model for emotions [1–5]. Because emotion is often accompanied by high-level cognitive activities of the brain, involving a lot of subjective components, the objective and accurate evaluation of emotion has always been a difficult problem for researchers.

In recent years, with the continuous progress of modern neuroscience technology, a series of important achievements have been made in cognitive neuroscience by means of electroencephalography (EEG), functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy (fNIRS) [6, 7]. These have brought new breakthroughs in research on cognitive problems such as perception, attention, memory, planning, language, and consciousness at the neural level. The continuous development of cognitive neuroscience and of techniques for measuring brain activity has gradually built a bridge between the subjective world and the objective world, and mankind is gradually entering a real brain-reading era. Among these techniques, EEG collects the spontaneous, rhythmic electrical activity of groups of brain cells through electrodes, so as to reflect the related functional activity of the brain. This electrical signal is present throughout life and reflects many of a person’s psychological activities and cognitive behaviors. Besides being impossible to disguise, EEG has the advantages of real-time performance and portability. Therefore, more and more scholars and institutions use EEG as a research tool for emotion recognition.

The tasks of emotional pattern learning and classification are to determine the EEG patterns of different emotional states from these EEG characteristics and to classify unseen samples according to the corresponding patterns. A well-chosen classifier plays an important role in interpreting and analyzing EEG patterns in different emotional states. EEG-based emotion pattern recognition methods can be divided into unsupervised and supervised learning methods [8, 9]: (1) unsupervised learning methods include fuzzy clustering, k-nearest neighbor, and so on, all of which classify samples automatically based on the Euclidean distance between samples; (2) in contrast, a supervised learning method knows, or is given manually assigned, category labels for the samples when training the classifier, and gradually adjusts the classifier using this category information. The trained classifier is then evaluated on the test sample set. Commonly used supervised learning methods include support vector machine (SVM), linear discriminant analysis (LDA), Gaussian naive Bayes (GNB), and deep learning. Among these, deep learning has generally given the best results.

At present, deep learning methods are developing very rapidly. Among them, the convolutional neural network (CNN) has proved to have great potential in computer vision and is widely used in image classification, target detection, and image segmentation [10–14]. A CNN contains multiple hidden layers. Input information is learned layer by layer, so that high-level, abstract, and discriminative features are obtained in the upper layers of the network. These high-level features can then be used for classification with good results. Compared with hand-designed feature extraction methods, a CNN may be more suitable for classifying emotion-related EEG topographic maps.

2. Literature Review

After preprocessing has produced relatively clean EEG signals, features still have to be extracted from them. Feature extraction is a very important step in EEG-based emotion recognition: only when emotion-related features are actually extracted can the accuracy of the final emotion recognition be ensured [15–17]. Mitsukura [18] designed band-pass filters for five common frequency bands to filter the original EEG and calculated the energy of each band as the EEG feature for emotion recognition. Bai et al. [19] used the Fourier transform to calculate the power spectral density of the original EEG signal of each electrode in the theta, alpha, and beta bands as the EEG feature for emotion recognition. Masruroh et al. [20] used nonlinear dynamics to extract features from EEG signals and used dimensional complexity to distinguish the two states of rest and reflection. Wankhade and Doye [21] proposed combining deep learning with wavelet decomposition to extract and recognize EEG frequency-domain features, which significantly improved the accuracy of emotion recognition and classification. The extraction and selection of EEG features provides a reliable source of information for the classifier and is key to the accuracy of emotion recognition. However, the EEG-based emotion recognition methods above still fall short in stability, accuracy, and practicability. In addition, selecting an appropriate classifier and improving it appropriately is very important for the stability and accuracy of EEG-based emotion recognition, yet there is still little research in this area.

CNN is a deep learning network model inspired by the animal visual system. Its structure imitates the working principles of various cells in the visual system [22–24]. CNN was originally designed for feature extraction from two-dimensional data. It can directly establish a mapping from low-level features to high-level semantic features and has achieved remarkable results in two-dimensional image classification. Zhao et al. [25] proposed a driver fatigue state recognition method based on a three-dimensional convolutional network. A large body of theory and experiment shows that 3D-CNN can extract features from the spatial dimensions and the additional dimension of an image at the same time, which improves the classification performance of the network. Cai et al. [26] proposed a video classification method based on a three-dimensional (3D) convolutional neural network (CNN), which uses convolution filters and a global average pooling layer to obtain more detailed features.

This paper presents a method of psychological emotion classification based on brain functional imaging and deep learning. First, for the selection of emotion-related channels in EEG, fMRI is introduced for auxiliary analysis, and an emotion-related EEG channel selection method based on the EEG forward model is proposed, which uses the brain activation information obtained by fMRI to assist EEG channel selection. Then, to address the overfitting caused by an insufficient number of samples, this paper combines data augmentation (Mixup) with 3D-CNN classification and proposes an emotion-related EEG topographic map classification method based on M-3DCNN. Finally, the effectiveness of the proposed method is verified by experimental data analysis.

3. Mental Emotion Classification Based on Brain Functional Imaging and Deep Learning

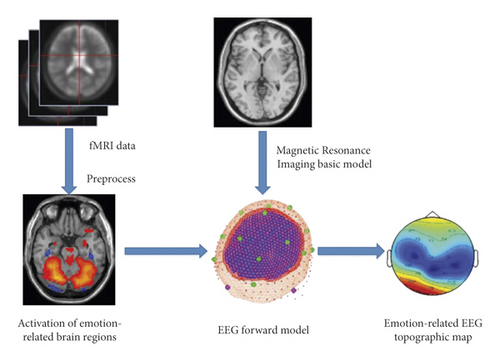

3.1. fMRI-Assisted Emotion-Related EEG Topographic Map

As the two main neuroimaging tools for noninvasive research on brain function, EEG and fMRI have received considerable attention and are widely used in clinical diagnosis and academic research because of their high temporal resolution and high spatial resolution, respectively. The fusion of EEG and fMRI has become a frontier research topic. The high spatial resolution of fMRI can, to a certain extent, make up for the limited spatial information obtained by EEG.

Building on the general linear model (GLM), the robust GLM based on iteratively reweighted least squares (IRLS) is more effective for small samples and large regressions. The fMRI data processing software SPM8 (https://www.fil.ion.ucl.ac.uk/spm/software/spm8/) used in this paper adopts this method.

- (1) The activation of emotion-related brain regions is obtained from the fMRI emotion experiment data.

- (2) A standard head model is established from the standard structural brain image, and the data image of each individual is registered to the standard brain through the normalization steps of the SPM software. By applying the boundary element method (BEM) and feeding the resulting three-layer head model into the FieldTrip software, the transfer matrix L from the cortical grid to the scalp surface is obtained.

- (3) The correlation between each channel and emotion is calculated with the EEG forward model.
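The mapping in step (3) can be pictured as a matrix-vector product between the transfer matrix L and a cortical activation vector. The following is a minimal sketch under stated assumptions: the function name `channel_relevance`, the use of the absolute projected value as the relevance score, and the normalization to the strongest channel are all illustrative choices, not details given in this paper.

```python
def channel_relevance(transfer_matrix, activation):
    """Project cortical activation onto scalp electrodes.

    transfer_matrix: list of rows, one row per electrode and one column
                     per cortical grid point (the matrix L in the text).
    activation:      fMRI-derived activation value per cortical grid point.
    Returns one relevance score per electrode, scaled to [0, 1].
    """
    # Forward model: scalp value = L * activation (one dot product per electrode).
    projected = [
        sum(weight * act for weight, act in zip(row, activation))
        for row in transfer_matrix
    ]
    # Assumption: relevance is the magnitude of the projected value.
    magnitudes = [abs(value) for value in projected]
    peak = max(magnitudes)
    if peak == 0:
        return magnitudes
    # Assumption: normalize so the most emotion-related electrode scores 1.
    return [m / peak for m in magnitudes]


# Toy example: 3 electrodes, 3 cortical grid points.
L = [[1.0, 0.0, 0.5],
     [0.2, 0.2, 0.2],
     [0.0, 1.0, 0.0]]
a = [2.0, 0.0, 1.0]
scores = channel_relevance(L, a)
```

In this toy case the first electrode receives the strongest projected activation, so it would be ranked as the most emotion-related channel.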

3.2. Mixup Method

In classification tasks, a network is usually optimized by empirical risk minimization. However, a network trained this way tends to memorize the training data rather than generalize from it. If samples in the test set differ from those seen in the training set, classification errors may occur on the test set. In addition, by the law of large numbers, the empirical risk approaches the expected risk only as the number of samples tends to infinity, whereas the emotion-related EEG topographic map data set is small. Therefore, if empirical risk minimization alone is used to optimize the network for emotion-related EEG topographic map classification, the model may not be adequately trained.

With the Mixup method, the network is trained on convex linear combinations of samples, of the same or different classes, and of their labels, which regularizes the network. This paper therefore applies Mixup to the pixels of the emotion-related EEG topographic maps to obtain virtual data: a pair of samples is selected at random from the data set, the samples and their labels are mixed according to a weight, and the network is trained with both the virtual data generated by Mixup and the original data.
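As a minimal illustration of the mixing step, the sketch below combines two samples and their one-hot labels with a weight λ. The function names, the flattened-list representation of a topographic map, and the random pairing within a batch are assumptions made for this example; in the original Mixup formulation λ is drawn from a Beta distribution, whereas here it is passed in as a fixed value.

```python
import random


def mixup_pair(x1, y1, x2, y2, lam):
    """Return the convex combination of two samples and of their labels."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y


def mixup_batch(samples, labels, lam, rng):
    """Mix every sample in a batch with a randomly chosen partner.

    samples: list of flattened maps (lists of pixel values).
    labels:  list of one-hot label vectors.
    Returns the virtual samples and their soft labels.
    """
    partners = list(range(len(samples)))
    rng.shuffle(partners)  # random pairing inside the batch
    mixed = [
        mixup_pair(samples[i], labels[i], samples[j], labels[j], lam)
        for i, j in enumerate(partners)
    ]
    virtual_x = [x for x, _ in mixed]
    virtual_y = [y for _, y in mixed]
    return virtual_x, virtual_y


# Two toy "maps" of two pixels each, belonging to different classes.
x, y = mixup_pair([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], lam=0.25)
virtual_x, virtual_y = mixup_batch(
    [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]], 0.5, random.Random(0)
)
```

The virtual samples would then be appended to the original training samples, so the network sees both during training.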

3.3. Principle of 3D-CNN

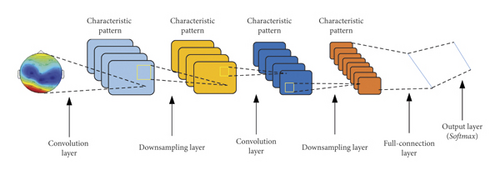

A complete CNN usually consists of an input layer, convolution (Conv) layers, pooling layers, fully connected (FC) layers, and an output layer. Its core structure is the hidden part formed by the convolution and pooling layers. A complete convolutional neural network structure is shown in Figure 2.

CNN was originally designed for feature extraction of two-dimensional data. It can directly establish the mapping relationship from low-level features to high-level semantic features and has achieved remarkable results in the field of two-dimensional image classification. However, the information extracted in sliding calculation on two-dimensional plane is insufficient. A large number of theories and experiments show that 3D-CNN can extract features from the spatial information dimension and additional information dimension of the image at the same time, so as to improve the classification performance of the network.
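To make the idea of sliding a kernel along three axes concrete, the following is a minimal pure-Python sketch of a single-channel 3D convolution, computed as cross-correlation as most deep learning libraries do. The function name `conv3d`, the nested-list data layout, and the absence of padding are assumptions for illustration only; this is not the network used in this paper.

```python
def conv3d(volume, kernel, stride=1):
    """Single-channel 3D cross-correlation without padding.

    volume: nested list indexed as volume[depth][row][col].
    kernel: nested list indexed the same way, no larger than the volume.
    stride: step of the sliding window, shared by all three axes.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    output = []
    for z in range(0, depth - kd + 1, stride):
        plane = []
        for y in range(0, height - kh + 1, stride):
            row = []
            for x in range(0, width - kw + 1, stride):
                # Weighted sum over a kd x kh x kw block of the input.
                acc = 0.0
                for dz in range(kd):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                row.append(acc)
            plane.append(row)
        output.append(plane)
    return output


# A 5 x 5 x 5 volume of ones filtered with a 3 x 3 x 3 kernel of ones.
volume = [[[1.0] * 5 for _ in range(5)] for _ in range(5)]
kernel = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
dense = conv3d(volume, kernel)             # 3 x 3 x 3 output
strided = conv3d(volume, kernel, stride=2)  # 2 x 2 x 2 output
```

Because the kernel also spans the third axis, each output value summarizes information that a 2D kernel would see only one slice at a time. A stride larger than 1 shrinks the output, which is the downsampling role a pooling layer usually plays.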

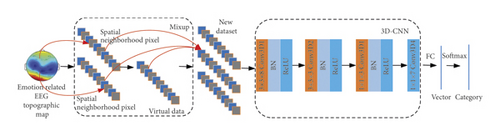

3.4. Emotion Classification Method Based on M-3DCNN

This paper combines data augmentation (Mixup) with 3D-CNN classification and proposes an emotion-related EEG topographic map classification method based on M-3DCNN. The M-3DCNN network uses the Mixup method for data augmentation. The data generated by Mixup and the original data are fed into the constructed 3D-CNN for feature extraction and classification. To reduce the loss of spatial resolution, the M-3DCNN network uses strided convolution instead of pooling. The network structure is shown in Figure 3, and the network parameters are shown in Table 1.

| Layer name | Convolution kernel size | Step size | Padding | BN layer | ReLU |
|---|---|---|---|---|---|
| Input layer | - | - | - | - | - |
| Conv3D1 | 3 × 3 × 8 | 0.844 | Same | Y | Y |
| Conv3D2 | 3 × 3 × 3 | 0.779 | Same | Y | Y |
| Conv3D3 | 1 × 1 × 3 | 0.798 | Same | Y | Y |
| Conv3D4 | 1 × 1 × 7 | 0.801 | Same | N | N |
| FC | - | - | - | Y | Y |
| Softmax | - | - | - | - | - |

Batch normalization (BN), a widely used technique for training deep neural networks, is applied in the network. Batch normalization keeps the distribution of the inputs to each layer stable during training, which speeds up training, accelerates convergence, and alleviates the problem of gradient explosion. The pseudocode of M-3DCNN is shown in Table 2.
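As a rough illustration of what a BN layer computes in training mode, the sketch below normalizes one feature across a mini-batch and then applies a learnable scale and shift. The function name `batch_norm`, the default values of `gamma`, `beta`, and `eps`, and the restriction to a single scalar feature are assumptions for this example; a real layer also tracks running statistics for use at inference time.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift.

    batch: list of values of the same feature, one per sample.
    gamma, beta: learnable scale and shift (fixed here for illustration).
    eps: small constant that avoids division by zero.
    """
    n = len(batch)
    mean = sum(batch) / n
    # Biased (population) variance, as used by BN during training.
    variance = sum((value - mean) ** 2 for value in batch) / n
    scale = gamma / math.sqrt(variance + eps)
    return [scale * (value - mean) + beta for value in batch]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

After this step the feature has approximately zero mean and unit variance within the batch, whatever the scale of the raw activations was.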

| M-3DCNN algorithm |
|---|
| 1. begin |
| 2. Input: the emotion-related EEG topographic map, divided into training set Tr and test set Tt, and the spatial neighborhood size S. The samples of the training set and test set are normalized |
| 3. Output: overall classification accuracy OA from the confusion matrix, average classification accuracy AA, image classification results |
| 4. for each pixel |
| 5. Crop the spatial neighborhood of size S × S |
| 6. Mixup |
| 7. Initialize the weight λ of the Mixup method |
| 8. for each training sample in the mini_batch |
| 9. for (x1, y1), (x2, y2) do |
| 10. x = λ × x1 + (1 − λ) × x2 |
| 11. y = λ × y1 + (1 − λ) × y2 |
| 12. end for |
| 13. Train and test the optimal M-3DCNN network |
| 14. Initialize the learning rate ξ, network weights w, and network bias b, and set the number of training epochs |
| 15. Network training |
| 16. Input the image data to be classified into the trained M-3DCNN network to predict the category of the target |
| 17. Calculate OA, AA |
| 18. Obtain the classification results of the emotion-related EEG topographic map |
| 19. end |
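Steps 4 and 5 of the pseudocode crop an S × S neighborhood around each pixel. A minimal sketch of that cropping is given below; the function name `crop_neighborhood` and, in particular, the handling of border pixels by repeating the nearest edge value are assumptions, since the paper does not state how borders are treated.

```python
def crop_neighborhood(image, row, col, size):
    """Return the size x size patch centred on pixel (row, col).

    image: 2D nested list of pixel values.
    size:  odd neighborhood size S, so that the patch has a centre pixel.
    Pixels outside the image are filled by clamping to the nearest border
    (an assumption made for this sketch).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    half = size // 2
    height, width = len(image), len(image[0])
    patch = []
    for r in range(row - half, row + half + 1):
        rr = min(max(r, 0), height - 1)  # clamp the row index
        patch.append([
            image[rr][min(max(c, 0), width - 1)]  # clamp the column index
            for c in range(col - half, col + half + 1)
        ])
    return patch


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
centre_patch = crop_neighborhood(image, 1, 1, 3)  # the whole image
corner_patch = crop_neighborhood(image, 0, 0, 3)  # padded at the border
```

Each patch produced this way would be one input sample for the network, labelled with the class of its centre pixel.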

4. Experiment and Analysis

4.1. Experimental Data Set and Platform

All fMRI and EEG experimental data were obtained by functional magnetic resonance imaging technology (Siemens 3.0T scanner). Specific scanning parameters are shown in Table 3.

| Parameter name | Parameter value |
|---|---|
| FOV (field of view) | 24 cm × 24 cm |
| TR (time of repetition) | 2000 ms |
| Acquisition matrix | 64 × 64 |
| Slice thickness | 4 mm |
| Slice gap | 1 mm |
| Axial slices | 32 |
| Flip angle | 90° |

4.2. Classification Effect Evaluation Criteria

- (1) Overall classification accuracy (OA) is the ratio of the number of correctly classified samples in the test set to the total number of samples in the test set, as shown in the following formula:

$$\mathrm{OA} = \frac{1}{N}\sum_{i=1}^{n} p_i \tag{7}$$

where N is the total number of samples in the test set, n is the number of categories of test samples, and p_i is the number of samples actually belonging to category i that are correctly classified into category i.

- (2) Average classification accuracy (AA) is the mean, over all categories, of the ratio of the number of correctly classified samples of a category to the total number of test samples of that category, as shown in the following formula:

$$\mathrm{AA} = \frac{1}{n}\sum_{i=1}^{n} \mathrm{OA}_i \tag{8}$$

where OA_i is the classification accuracy of the category i samples in the test set.
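The two criteria can be computed directly from a confusion matrix, as in the following minimal sketch. The function name and the convention that rows are true categories and columns are predicted categories are assumptions of this example.

```python
def overall_and_average_accuracy(confusion):
    """Compute OA and AA from a square confusion matrix.

    confusion[i][j] is the number of test samples of true category i
    that were predicted as category j. Every category is assumed to
    have at least one test sample.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    # p_i: samples of category i classified correctly (the diagonal).
    correct = [confusion[i][i] for i in range(n_classes)]
    oa = sum(correct) / total
    # OA_i: accuracy within category i.
    per_class = [correct[i] / sum(confusion[i]) for i in range(n_classes)]
    aa = sum(per_class) / n_classes
    return oa, aa


# Toy two-category example with unbalanced classes.
oa, aa = overall_and_average_accuracy([[9, 1],
                                       [2, 3]])
```

With unbalanced categories the two values differ: OA is dominated by the larger category, while AA weights every category equally.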

4.3. Emotion-Related EEG Topographic Map

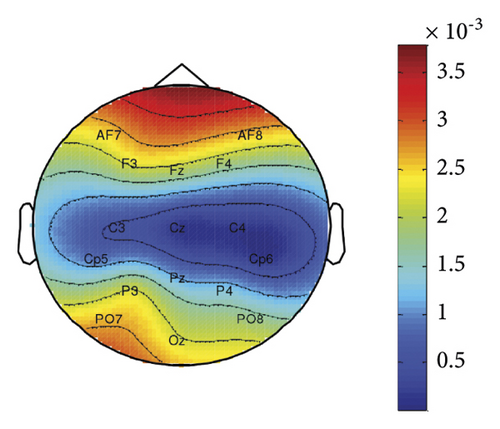

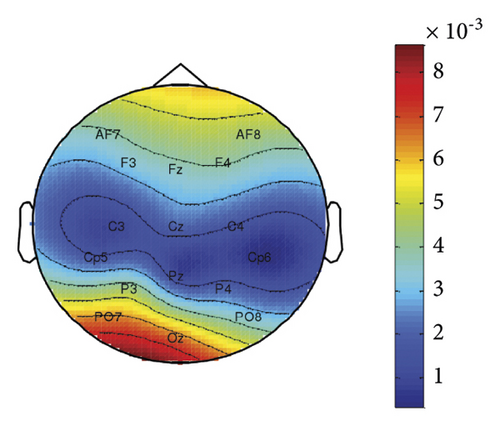

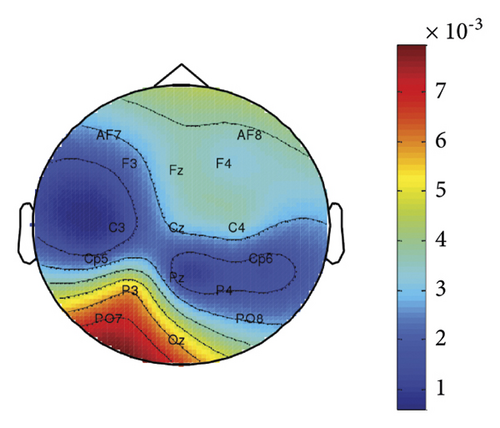

For three subjects, the emotion-related activated brain areas were extracted, respectively, the activation degree vector of cortical grid was calculated and mapped to the EEG topographic map through the forward model, and the results are shown in Figure 4.

As can be seen in Figure 4, the distribution of electrodes with a higher degree of emotion correlation is mostly concentrated in the frontal and occipital lobes. On the other hand, we can find that there is also a large intersubject variability in different brain regions of emotion activation, which is also reflected in the derived EEG emotion related topographic maps.

4.4. Network Parameter Selection

Two metrics, overall classification accuracy (OA) and average classification accuracy (AA), were used to evaluate the experimental results in this section. In the network parameter selection experiments, 10% of the samples were randomly selected as training samples, and the remaining samples were used for testing.

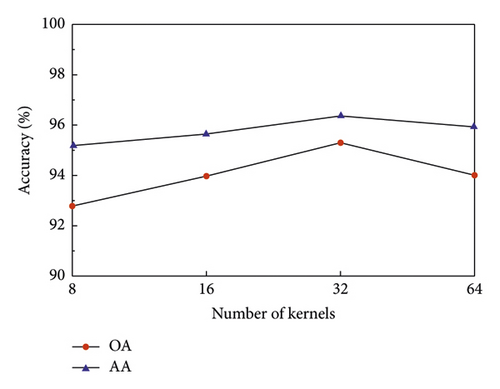

4.4.1. Determination of the Number of Convolution Kernels

The number of convolution kernels is one of the important factors affecting the classification accuracy of the M-3DCNN network. The number k of convolution kernels in the first layer is set to 8, 16, 32, and 64 in turn. The initial learning rate is set to 0.003, the mini-batch size is set to 100, and the network is trained for 300 epochs. The experimental results are shown in Figure 5. All results are the average of 10 repeated experiments.

As can be seen from Figure 5, the classification accuracy of the emotion-related topographic map does not keep increasing with the number of convolution kernels; it saturates at a certain number of kernels. Beyond that point, adding more convolution kernels may degrade the network and reduce classification accuracy. Therefore, the optimal number of convolution kernels is 32.

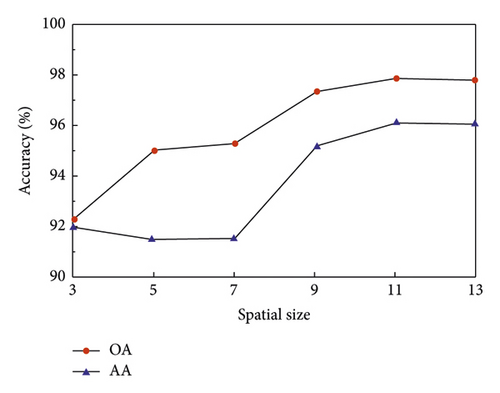

4.4.2. Determination of Space Size

If the selected sample spatial size is too small, the classification accuracy may be reduced because the information is incomplete. In this paper, the spatial sizes 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, and 13 × 13 are compared to select the best one. The initial learning rate is set to 0.003, the mini-batch size is set to 100, and the network is trained for 300 epochs. The experimental results are shown in Figure 6. All results are the average of 10 repeated experiments.

Figure 6 shows that the classification accuracy of M-3DCNN reaches its optimum at the 11 × 11 spatial size and does not increase further, while a larger input spatial size also lengthens the running time. Weighing classification accuracy against running time, M-3DCNN takes the 11 × 11 spatial size of the emotion-related topographic map as its input.

4.5. Comparative Analysis of Emotional Recognition

To verify the effectiveness of the proposed M-3DCNN for emotion-related topographic map classification, five emotional categories (strong positive, weak positive, neutral, weak negative, and strong negative) were classified using M-3DCNN and other methods. The M-3DCNN network uses the optimal number of convolution kernels and spatial size determined above. The weight of the Mixup method is set to 0.5. All results are the average of 10 repeated experiments. The comparison of classification results of different methods is shown in Table 4.

| Metric | SVM | 1D-CNN | 2D-CNN | 3D-CNN | M-3DCNN |
|---|---|---|---|---|---|
| OA (%) | 92.67 | 92.75 | 93.64 | 98.12 | **98.57** |
| AA (%) | 93.78 | 94.89 | 94.99 | 98.10 | **98.51** |
| Time (min) | **0.27** | 12.23 | 9.22 | 22.45 | 22.25 |

- The bold values indicate the best performance.

Table 4 shows that the M-3DCNN method classifies better than the compared methods. Taking the differences from the values in Table 4, the OA and AA of the M-3DCNN-based classification of emotion-related topographic maps are 5.90 and 4.73 percentage points higher than SVM, respectively; 5.82 and 3.62 higher than 1D-CNN; 4.93 and 3.52 higher than 2D-CNN; and 0.45 and 0.41 higher than 3D-CNN.

5. Conclusions

This paper presents a method for recognizing psychological emotional tendency based on brain functional imaging and M-3DCNN. Starting from the fMRI-assisted emotion-related EEG topographic map, the proposed method combines the Mixup method with 3D-CNN to alleviate overfitting on small image sample sets during 3D-CNN training. The experimental results show that, compared with the other methods tested, the proposed method improves the accuracy of five-class emotion classification at a running time comparable to that of the plain 3D-CNN.

Conflicts of Interest

The authors declare that they have no conflicts of interest to report regarding the present study.

Open Research

Data Availability

The experimental data used to support the findings of this study are available from the corresponding author upon request.