New Bargaining Game Model for Collaborative Vehicular Network Services

Abstract

The wireless industry’s evolution from the fourth generation (4G) to the fifth generation (5G) will drive extensive progress in new vehicular network services, such as crowdsensing, cloud computing, and routing. Vehicular crowdsensing exploits the mobility of vehicles to provide location-based services, whereas vehicular cloud computing is a new hybrid technology that instantly uses vehicular network resources, such as computing, storage, and Internet access, for decision-making. In this study, novel crowdsensing, cloud computing, and routing algorithms are developed for a next-generation vehicular network and combined into a hybrid scheme. To coordinate the interactions among these vehicular network processes effectively, we focus on the paradigm of learning algorithms and game theory. Based on individual cooperation, our proposed scheme is designed as a triple-plane game model that adapts to the dynamics of a vehicular network system. The primary advantage of our game-based approach is that it provides self-adaptability and responsiveness to current network environments. The performance of our hybrid scheme is evaluated through simulation experiments in terms of the cloud service success ratio, normalized dissemination throughput, and crowdsensing success probability.

1. Introduction

With the rapid development of mobile communication and intelligent transportation technologies, the Vehicular Ad hoc NETwork (VANET) has emerged as an important part of the intelligent transportation system (ITS). A VANET is a special type of Mobile Ad hoc NETwork (MANET). VANETs and MANETs are similar in that their nodes move and organize themselves. However, the primary difference is that the nodes in VANETs are vehicles; they move at much higher speeds and, unlike the nodes of traditional MANETs, have ample power resources. As a newly introduced technology, VANET research aims to develop vehicle-to-vehicle (V2V) communications that enable quick and cost-efficient data sharing for enhanced vehicle safety and comfort [1–3].

Typically, vehicles send and receive messages using an on-board unit (OBU), which is data collection hardware installed in the vehicle. By combining information technology with transport infrastructure, VANETs support a wide range of applications, including traffic control, road safety, route optimization, multimedia entertainment, and social interactions. With recent advances in vehicular technology, automobile manufacturers and academia are heavily engaged in designing VANETs. Currently, 10% of moving vehicles on the road are wirelessly connected, and it has been reported that 90% of vehicles will be connected by 2020 [1, 4, 5].

In VANET systems, each vehicle can exchange messages through V2V communications while acting as a sender, receiver, and router. In addition, vehicles perform vehicle-to-roadside (V2R) communications with roadside units (RSUs) [6]. An RSU is connected to the backbone network and operates as an access point that provides services to moving vehicles anytime and anywhere. As Internet access points, RSUs provide communication connectivity to passing vehicles. In VANET environments, RSUs and the OBUs in vehicles repeatedly interact with each other and make control decisions individually without centralized coordination [1,4–9].

During VANET operations, services can be categorized into three fundamental classes: vehicular cloud service (VCS), vehicular sensing service (VSS), and vehicular routing service (VRS). VCS is a new paradigm that exploits cloud computing to provide VANETs with computational services [10]. The computing requirements of vehicular applications are increasing rapidly, particularly with the growing interest in a new class of interactive applications and services. Within its communication range, each RSU controls the VCS through interactions with the vehicles and provides computation capacity, storage, and data resources. Vehicles requiring resources can obtain the available resources via interconnected RSUs. Therefore, by relying on the roadside infrastructure, the VCS provides a significant market opportunity and overcomes challenges, making the technology more cost-effective [10, 11].

With the rapid development of VANETs, VSSs have broad potential in the public sensing domain. Vehicles with OBUs can be considered mobile sensors that collect the local information requested by the RSUs. As a server, the RSU pays the vehicles participating in the VSS according to their sensing quality. VSSs are a type of vehicle-to-infrastructure communication; examples include location-based road monitoring, real-time traffic condition reporting, pollution level measurement, and public information sharing. Owing to the mobility of the vehicles, the sensing coverage of location-based services can be expanded, and VSSs will benefit a wide range of consumers [7, 8, 12].

In VANETs, vehicles are equipped with OBUs that can communicate with each other; the resulting multihop routing service is known as the VRS. The VRS requires a suitable V2V communication technology, especially a routing method that addresses the various challenges of VANETs. VANET is typically considered a subclass of MANET, and MANET-based routing protocols have been studied extensively. However, VANETs have several characteristics that distinguish them from MANETs, namely high mobility, rapid network topology changes, and an unlimited power supply, and these characteristics must be considered to design an effective, adaptive VRS algorithm [13–15].

In this study, we propose a new VANET control scheme that jointly considers the VCS, VRS, and VSS. By jointly designing different vehicular operations, our integrated approach can obtain a synergy effect while attaining an appropriate performance balance. However, combining the VCS, VRS, and VSS algorithms into a holistic scheme is an extremely challenging task, so a new control paradigm is required. Game theory has been widely applied to analyze the performance of VANET systems. More specifically, VANET system agents are modeled as game players, and the interactions among players are formulated as a game model [6].

1.1. Motivation and Contribution

The aim of this study is to propose novel VCS, VRS, and VSS algorithms based on different game models. For VANET operations, game theory serves as an effective model of interactive situations among rational VANET agents. In the VCS algorithm, vehicles share the cloud resources of the RSU to increase the quality of service, and the interactions among vehicles and RSUs are modeled as a non-cooperative game. In the VRS algorithm, vehicles attempt to increase the quality and reliability of routing services. In the VSS algorithm, vehicles act as mobile sensors to collect the required information, and RSUs, acting as system servers, share and process the various types of collected information. To collect information, RSUs use a learning-based incentive mechanism, and individual vehicles adopt a cooperative bargaining approach across their VCS, VSS, and VRS to balance their performance.

The proposed VCS, VRS, and VSS algorithms need to coexist and complement each other to meet the diverse requirements of VANETs. To investigate the strategic interactions among the different control algorithms, we develop a new triple-plane game model across the VCS, VRS, and VSS algorithms. For effective VANET management, vehicles in the VSS algorithm select their strategies according to a bargaining solution. In a distributed manner, the idea of the classical Nash bargaining solution is used to implement a triple-plane bargaining process in each vehicle. By imitating an interactive sequential game process, the proposed triple-plane bargaining approach is suitable for negotiating conflicts of interest while ensuring system practicality.
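As a rough illustration of how a generalized Nash bargaining solution can arbitrate among the three planes inside one vehicle, the sketch below scores each candidate strategy by the weighted Nash product of its cloud, sensing, and routing gains over a disagreement point, with the bargaining powers acting as exponents (computed in log form). The payoff numbers, the disagreement point, and the names `combined_payoff` and `bargain` are hypothetical; this is not the paper's combined payoff or bargaining-power update (equations (9) and (10)).

```python
import math

def combined_payoff(gains, disagreement, powers):
    """Log of the generalized Nash product: sum of psi_k * log(u_k - d_k).

    Returns -inf when any plane does no better than its disagreement point,
    because such a strategy lies outside the bargaining set.
    """
    total = 0.0
    for u, d, psi in zip(gains, disagreement, powers):
        surplus = u - d
        if surplus <= 0:
            return -math.inf
        total += psi * math.log(surplus)
    return total

def bargain(candidates, disagreement, powers):
    """Pick the strategy maximizing the combined payoff, or None if none is feasible."""
    if abs(sum(powers) - 1.0) > 1e-9:
        raise ValueError("bargaining powers must sum to 1")
    best = max(candidates, key=lambda s: combined_payoff(candidates[s], disagreement, powers))
    if combined_payoff(candidates[best], disagreement, powers) == -math.inf:
        return None  # no strategy improves every plane: stay at the disagreement point
    return best

# Hypothetical (cloud, sensing, routing) payoffs for two sensing decisions.
options = {"join_sensing": (3.0, 4.0, 2.0), "skip_sensing": (4.0, 1.0, 3.0)}
print(bargain(options, (0.0, 0.0, 0.0), (1 / 3, 1 / 3, 1 / 3)))  # join_sensing
print(bargain(options, (0.0, 0.0, 0.0), (0.6, 0.1, 0.3)))        # skip_sensing
```

With equal powers the vehicle joins the sensing task; shifting power toward the cloud plane makes it skip sensing, which is the kind of trade-off the bargaining powers are meant to encode.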

The main contributions of this study can be summarized as follows: (i) we develop novel VCS, VRS, and VSS algorithms for VANETs; (ii) we employ a new game model to provide satisfactory services; (iii) we adopt a distributed triple-plane bargaining approach to balance contradictory requirements; (iv) we implement self-adaptability in each control decision process; and (v) we capture the dynamic interactions of game players with different viewpoints. By combining optimality with practicality, our approach can provide a desirable solution for real-world VANET applications. To the best of our knowledge, little research has been conducted on integrated bargaining-based algorithms for VANET control problems.

1.2. Organization

The remainder of this article is organized as follows. Section 2 reviews related VANET schemes and their problems. Section 3 describes the VCS, VRS, and VSS algorithms in detail and then defines the triple-plane bargaining game model; in particular, it discusses the design benefits of our game-based approach and lists the primary steps of the proposed scheme. Section 4 validates the performance of the proposed scheme by comparing it with existing methods. Finally, Section 5 presents our conclusions and discusses ideas for future work.

2. Related Work

There has been considerable research into the design of VANET control schemes. Cheng et al. [16] investigate opportunistic spectrum access for cognitive radio vehicular ad hoc networks, in which the spectrum access process is modeled as a non-cooperative congestion game. The scheme in [16] accesses licensed channels opportunistically in a distributed manner and is designed to achieve a pure Nash equilibrium with high efficiency and fairness. By using the statistics of channel availability, this approach can exploit the spatial and temporal access opportunities of the licensed spectrum. The simulation results confirm that the proposed approach achieves higher utility and fairness than a random access scheme [16].

The paper [17] focuses on how to stimulate message forwarding in VANETs. For such a mechanism to work, vehicles must have incentives to forward messages for others. This study adopts coalitional game theory to solve the forwarding cooperation problem and analyzes it rigorously in VANETs. The main goal is to ensure that whenever a message needs to be forwarded in a VANET, all involved vehicles have incentives to form a grand coalition. In addition, this approach is extended to take into account the limited storage space of each node. Experiments on testbed trace data verify that the proposed method is effective in stimulating cooperation for message forwarding in VANETs [17].

In [18], the main focus is to achieve a higher throughput by selecting the best data rate and the best network or channel based on a cognitive radio VANET mechanism. By changing wireless channels and data rates in heterogeneous wireless networks, the scheme in [18] is designed as a game theoretic model that achieves a higher throughput for vehicular users. In addition, a new method is adopted to find the optimal number of access points (APs) for given VANET scenarios [18]. Although the papers [16–18] consider game theory-based VANET control algorithms, they are designed as one-sided protocols that focus on a specific control issue. Therefore, the interactions across the system are not fully investigated.

Recently, the vehicular microcloud technique has been considered one of the solutions to the challenges and issues of VANETs. It is a new hybrid technology that has a remarkable impact on traffic management and road safety. By forming clusters instantly, cars help aggregate the collected data that are transferred to a backend [19, 20]. The paper [19] introduces the concept of the vehicular microcloud as a virtual edge server for efficient connections between cars and the backend infrastructure. To realize such virtual edge servers, a map-based clustering technique is needed to cope with the dynamicity of vehicular networks. This approach can optimize data processing and aggregation before the data are sent to a backend [19].

F. Hagenauer et al. investigate a virtual vehicular network infrastructure in which vehicular microcloud clusters of parked cars act as RSUs [20]. They focus on two control questions: (i) the selection of gateway nodes to connect moving cars to the cluster and (ii) a mechanism for seamless handover between gateways. For the first question, they select only a subset of all parked cars as gateways, which significantly reduces the channel load. For the second question, they enable driving cars to find better-suited gateways while maintaining their connections [20]. The ideas in [19, 20] are very interesting; however, they apply only to special situations, so it is difficult to use them in general VANET operation scenarios.

During VANET operations, there are two fundamental techniques for disseminating data in vehicular applications: vehicle-to-infrastructure (V2I) and vehicle-to-vehicle (V2V) communications. Recently, Kim proposed novel V2I and V2V algorithms for effective VANET management [6]. For V2I communications, a novel vertical game model is developed as an incentive-based crowdsensing algorithm. For V2V communications, a new horizontal game model is designed to implement a learning-based intervehicle routing protocol. Under dynamically changing VANET environments, the scheme in [6] is formulated as a dual-plane vertical-horizontal game model. By considering real-world VANET environments, this approach imitates the interactive sequential game process by using self-monitoring and distributed learning techniques. It ensures system practicality while effectively maximizing the expected benefits [6].

In this paper, we develop a novel control scheme for vehicular network services. Our proposed scheme also includes sensing and routing algorithms for VANET operations, so it may look similar to the method in [6]. The similarities can be summarized as follows: (i) in the vehicular routing algorithm, the link status and path cost estimation methods and each vehicle’s utility function are designed in the same manner; (ii) in the crowdsensing algorithm, the utility functions of the vehicle and the RSU are defined similarly; and (iii) in the learning process, strategy selection probabilities are estimated based on the Boltzmann distribution. Despite these similarities, there are several key differences. First, the scheme in [6] is designed as a dual-plane game model, whereas our proposed scheme is extended to a triple-plane game model. Second, a new cloud computing algorithm is developed to share the cloud resource in the RSU while increasing the quality of service. Third, to reduce computational complexity, the entropy-based route decision mechanism is replaced by an online route decision mechanism. Fourth, the scheme in [6] is modeled as a non-cooperative game, whereas our proposed scheme adopts a dynamic bargaining solution; therefore, in our proposed sensing algorithm, each individual vehicle decides its strategy in a cooperative manner. Fifth, our proposed scheme fully considers cloud computing, sensing, and routing payoffs and calculates the bargaining powers to respond to current VANET situations. Above all, the main difference between the scheme in [6] and our proposed scheme is the control paradigm. In [6], the V2I and V2V algorithms are combined merely on a competitive basis. In this paper, by contrast, the sensing, cloud computing, and routing algorithms work together collaboratively and act cooperatively with each other based on an interactive feedback process.
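Because both schemes estimate strategy selection probabilities with the Boltzmann distribution, a minimal Python sketch of that mapping may help. The temperature parameter and the function names are assumptions for illustration; the paper's own probability equations are not reproduced here.

```python
import math
import random

def boltzmann_probabilities(learning_values, temperature=1.0):
    """Map learning values to selection probabilities with a Boltzmann (softmax) distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    peak = max(learning_values)  # subtract the maximum for numerical stability
    weights = [math.exp((v - peak) / temperature) for v in learning_values]
    total = sum(weights)
    return [w / total for w in weights]

def select_strategy(strategies, learning_values, temperature=1.0, rng=random):
    """Sample one strategy with probability given by its Boltzmann weight."""
    probs = boltzmann_probabilities(learning_values, temperature)
    return rng.choices(strategies, weights=probs, k=1)[0]

# Equal learning values give a uniform choice over the five price levels of Table 1.
prices = [0.2, 0.4, 0.6, 0.8, 1.0]
print(boltzmann_probabilities([0.0] * len(prices)))
```

A lower temperature concentrates probability on the strategy with the highest learning value (exploitation), while a higher temperature keeps the choice closer to uniform (exploration).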

The Multiobjective Vehicular Interaction Game (MVIG) scheme [21] is a game theoretic scheme for controlling on-demand service provision in a vehicular cloud. Based on a game theoretic approach, this scheme balances the overall game while enhancing the vehicles’ services at low cost. The game system in the MVIG scheme differs from conventional models in that it allows vehicles to prioritize their preferences. In addition, a quality-of-experience framework is developed to provision various types of services in a vehicular cloud; it is a simple but effective model for determining vehicle preferences while ensuring fair game treatment. The simulation results show that the MVIG scheme outperforms conventional models [21].

The Prefetching-Based Vehicular Data Dissemination (PVDD) scheme [22] devises a vehicle route-based data prefetching approach that improves data transmission accessibility under dynamic wireless connectivity and limited data storage. More concretely, the PVDD scheme develops two control algorithms to determine how to prefetch a data set from a data center to roadside wireless access points. The first control algorithm uses a greedy approach to solve the dissemination problem. The second control algorithm, based on online learning, gradually learns the success rate of unknown network connectivity and determines an optimal binary decision matrix at each iteration. Finally, this study proves that the first control algorithm finds a suboptimal solution in polynomial time and, using regret analysis, that the solution of the second control algorithm converges to a globally optimal solution within a certain number of iterations [22].

The Cooperative Relaying Vehicular Cloud (CRVC) scheme [23] proposes a novel cooperative vehicular relaying algorithm over a long-term evolution-advanced (LTE-A) network. To maximize the vehicular network capacity, this scheme uses vehicles as cooperative relaying nodes in broadband cellular networks. With these new functionalities, the CRVC scheme can (i) reduce power consumption, (ii) provide a higher throughput, (iii) lower operational expenditures, (iv) ensure greater flexibility, and (v) increase the coverage area. The CRVC scheme is useful in heavily populated urban areas owing to the large number of relaying vehicles. Its performance improvement is demonstrated through simulation analysis [23]. In this paper, we compare the performance of our proposed scheme with that of the existing MVIG [21], PVDD [22], and CRVC [23] schemes.

3. The Proposed VCS, VRS, and VSS Algorithms

In this section, the proposed VCS, VRS, and VSS algorithms are presented in detail. Based on a learning algorithm and a game-based approach, these algorithms form a new triple-plane bargaining game model that adapts to fast-changing VANET environments.

3.1. Game Models for the VCS, VRS, and VSS Algorithms

The VCS game model consists of the following elements:

- (i) The finite set of VCS game players, consisting of an RSU and the set of multiple vehicles in that RSU’s coverage area.

- (ii) The cloud computation capacity of the RSU, i.e., the number of CPU cycles per second available in the RSU.

- (iii) The set of the RSU’s strategies, where each strategy is a price level for executing one basic cloud service unit (BCSU), and the set of each vehicle’s available strategies, where each strategy is the vehicle’s amount of cloud service, specified in terms of BCSUs.

- (iv) The payoff received by the RSU and the payoff received by each vehicle during the VCS process.

- (v) The RSU’s learning value for each strategy, which is used to estimate the probability distribution for the RSU’s next strategy selection.

- (vi) The time T, which is represented by a sequence of time steps with imperfect information for the VCS game process.
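Item (v) above, together with the learning rate χ = 0.2 in Table 1, suggests an incremental update of the RSU's learning values. The sketch below assumes a simple exponential-smoothing rule that moves only the played strategy's value toward the payoff it just earned; this rule and the name `update_learning_values` are assumptions standing in for the paper's equations (3) and (4), which are not shown in this section.

```python
CHI = 0.2  # learning rate chi from Table 1

def update_learning_values(values, played_index, payoff, chi=CHI):
    """Assumed exponential-smoothing update for the RSU's learning values.

    Only the strategy that was actually played moves toward the observed payoff;
    the other strategies keep their previous values.
    """
    if not 0.0 < chi <= 1.0:
        raise ValueError("chi must lie in (0, 1]")
    updated = list(values)
    updated[played_index] = (1.0 - chi) * values[played_index] + chi * payoff
    return updated

# Five price strategies start with equal learning values (Step 2 of the scheme).
values = [0.0] * 5
for _ in range(50):
    values = update_learning_values(values, played_index=1, payoff=0.8)
print([round(v, 3) for v in values])  # the played strategy approaches its payoff of 0.8
```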

The VRS game model consists of the following elements:

- (i) The finite set of VRS game players, where n is the number of vehicles in the game.

- (ii) The set of each vehicle’s one-hop neighboring vehicles.

- (iii) The finite set of each vehicle’s available strategies, where each strategy represents the selection of a neighboring vehicle to relay a routing packet.

- (iv) The payoff received by each vehicle during the VRS process.

- (v) The time T, which is the same as in the VCS game.

The VSS game model consists of the following elements:

- (i) The finite set of VSS game players, i.e., the RSU and its vehicles; the set of vehicles is the same as in the VCS game.

- (ii) The finite set of sensing tasks in the RSU, where s is the total number of sensing tasks.

- (iii) The vector of the RSU’s strategies, where each element is the strategy set for the corresponding sensing task, i.e., the set of price levels for the crowdsensing work on that task during a time period. As in the VCS game, each price set consists of predefined price levels.

- (iv) The vector of each vehicle’s strategies, where each element indicates whether or not the vehicle actively participates in the VSS for the corresponding task during the time period.

- (v) The payoff received by the RSU and the payoff received by each vehicle during the VSS process.

- (vi) The learning value ℨ for each strategy, which is used to estimate the probability distribution for the RSU’s next strategy selection.

- (vii) The time T, which is the same as in the VCS and VRS games.
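Item (vi), with the control parameter β = 0.3 and the discount factor 0.3 listed in Table 1, points to a discounted learning update for ℨ. The sketch below swaps in a standard Q-learning-style rule as a stand-in; this choice and the name `update_zeta` are assumptions, and the paper's equations (12) and (13) may differ.

```python
BETA = 0.3   # control parameter beta from Table 1
GAMMA = 0.3  # discount factor from Table 1

def update_zeta(zeta, played_index, reward, beta=BETA, gamma=GAMMA):
    """Assumed Q-learning-style update of the learning values (zeta).

    The played strategy's value is blended with the observed reward plus the
    discounted best value currently known; other entries are unchanged.
    """
    target = reward + gamma * max(zeta)
    updated = list(zeta)
    updated[played_index] = (1.0 - beta) * zeta[played_index] + beta * target
    return updated

zeta = [0.0] * 5
for _ in range(200):
    zeta = update_zeta(zeta, played_index=2, reward=1.0)
# Repeating the same strategy with reward r drives its value toward r / (1 - gamma).
print(round(zeta[2], 4))
```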

3.2. The VCS Algorithm Based on a Non-Cooperative Game Model

In the next-generation VANET paradigm, diverse applications require significant computation resources. However, the computational capabilities of vehicles are generally limited. To address this resource restriction, vehicular cloud technology is widely considered a new paradigm for improving VANET service performance. In the VCS process, applications can be offloaded to a remote cloud server to improve resource utilization and computation performance [24].

Vehicular cloud servers are located in RSUs and execute the computation tasks received from vehicles. However, the service area of each RSU is limited by its radio coverage. Owing to their high mobility, vehicles may pass through several RSUs during the task offloading process, so service results must be migrated to another RSU that can reach the corresponding vehicle. Both RSUs and vehicles are mainly concerned with maximizing their payoffs, and to reach a win-win situation, they select their strategies rationally. During the VCS process, each RSU acts as a proposer and individual vehicles act as responders; they interact with each other to pursue their objectives.
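To make the proposer-responder interaction concrete, the following sketch lets the RSU anticipate each vehicle's best response to every price level in Table 1 and then choose the revenue-maximizing price. The vehicle utility (profit factor minus price per BCSU, less a quadratic cost weighted by θ), the budget check against virtual money, and the backward-induction price choice are assumptions for illustration only; they are not the paper's equations (1) and (2), and the proposed scheme selects prices through learning rather than full anticipation.

```python
PRICE_LEVELS = [0.2, 0.4, 0.6, 0.8, 1.0]  # RSU strategies (Table 1)
AMOUNTS = [1, 2, 3, 4, 5]                 # vehicle strategies in BCSUs (Table 1)
PROFIT_PER_BCSU = 0.8                     # vehicle profit per processed BCSU (Table 1)
THETA = 0.2                               # cost coefficient theta (Table 1)

def vehicle_utility(price, amount):
    """Hypothetical responder utility; amount 0 (declining) yields 0."""
    return (PROFIT_PER_BCSU - price) * amount - THETA * amount ** 2 / 2

def best_response(price, budget):
    """Responder: choose the affordable amount (0 = decline) with the highest utility."""
    feasible = [0] + [a for a in AMOUNTS if price * a <= budget]
    return max(feasible, key=lambda a: vehicle_utility(price, a))

def propose_price(budgets, capacity_bcsu):
    """Proposer: anticipate all best responses and pick the revenue-maximizing price."""
    def revenue(price):
        demand = sum(best_response(price, b) for b in budgets)
        return price * min(demand, capacity_bcsu)  # the RSU cannot sell beyond its capacity
    return max(PRICE_LEVELS, key=revenue)

price = propose_price(budgets=[100, 100], capacity_bcsu=250)
print(price, best_response(price, 100))
```

With two vehicles holding 100 units of virtual money and a capacity of 250 BCSUs, the RSU settles on a price of 0.4, at which each vehicle requests 2 BCSUs.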

3.3. The VRS Algorithm Based on a Non-Cooperative Game Model

3.4. The VSS Algorithm Based on the Triple-Plane Bargaining Game

Recently, the VSS has attracted great interest and has become one of the most valuable features of the VANET system. Some VANET applications generate huge amounts of sensed data. With advances in vehicular sensor technology, vehicles equipped with OBUs are expected to monitor the physical world effectively. In VANET infrastructures, RSUs request a number of sensing tasks and collect the local information sensed by vehicles. According to the requested tasks, the OBUs in vehicles sense the requested local information and transmit their sensing results to the RSU. Although excellent research has been done on the VSS process, significant challenges remain. Above all, conducting sensing tasks and reporting sensing data usually consume vehicle resources. Therefore, selfish vehicles should be paid by the RSU as compensation for their VSSs. To recruit an optimal set of vehicles that covers the monitoring area while ensuring that vehicles provide their full sensing effort, the RSU stimulates sufficient vehicles with proper incentives to fulfill VSS tasks [6, 8, 27].
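A minimal sketch of the incentive logic described above, under simple assumptions: each vehicle joins a task only if the RSU's offered price exceeds its own sensing cost, a task succeeds when the number of participants reaches its requirement, and the RSU earns the task profit on success minus the payments it makes. The task costs, profits, and requirements come from Table 1; the per-vehicle cost scales, the participation rule, and the function name `run_sensing_round` are hypothetical.

```python
TASK_COST = [0.1, 0.2, 0.3, 0.4]        # predefined sensing cost per task (Table 1)
TASK_PROFIT = [10.0, 10.0, 10.0, 10.0]  # predefined profit per task (Table 1)
TASK_REQUIREMENT = [5, 5, 5, 5]         # predefined requirement per task (Table 1)

def run_sensing_round(prices, vehicle_cost_scales):
    """Simulate one VSS round.

    prices: the RSU's offered price for each task.
    vehicle_cost_scales: hypothetical per-vehicle multipliers on the task cost.
    Returns (per-task success flags, RSU payoff).
    """
    successes = []
    rsu_payoff = 0.0
    for price, cost, profit, need in zip(prices, TASK_COST, TASK_PROFIT, TASK_REQUIREMENT):
        # Assumed participation rule: join only if the payment exceeds the sensing cost.
        participants = sum(1 for scale in vehicle_cost_scales if price > cost * scale)
        done = participants >= need
        successes.append(done)
        # The RSU pays every participant and earns the task profit only on success.
        rsu_payoff += (profit if done else 0.0) - price * participants
    return successes, rsu_payoff

flags, payoff = run_sensing_round([0.2, 0.2, 0.2, 0.2], [1.0] * 6)
print(flags, round(payoff, 2))
```

With a flat price of 0.2, only the cheapest task attracts all six vehicles and succeeds; raising a task's price above its sensing cost is what draws enough participants, which is the trade-off the RSU's learned pricing has to balance.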

3.5. Main Steps of Proposed Triple-Plane Bargaining Algorithm

In this study, we design a novel triple-plane bargaining game model through a systematic interactive game process. In the proposed VCS, VRS, and VSS algorithms, the RSUs and vehicles are game players that maximize their payoffs. In particular, the RSUs learn the current VANET situation using learning methods, and the vehicles determine their best strategies while balancing their VCS, VRS, and VSS requirements. The primary steps of the proposed scheme are as follows.

Step 1. Control parameters are determined by the simulation scenario (Table 1).

| Parameter | Value | Description |
|---|---|---|
|  | 0.2, 0.4, 0.6, 0.8, 1 | Predefined price levels for cloud service (min = 1, max = 5) |
|  | 1, 2, 3, 4, 5 | Amount of cloud service in terms of BCSUs |
|  | 0.2, 0.4, 0.6, 0.8, 1 | Predefined price levels for sensing service (min = 1, max = 5) |
| θ | 0.2 | Coefficient factor for cost calculation |
|  | 0.8 | Profit factor of a vehicle when one BCSU is processed |
| α | 0.5 | Weight control factor for distance and velocity |
| χ | 0.2 | Learning rate for updating the learning values |
|  | 5 | Routing outcome in each time period |
|  | 0.1 | Coefficient factor for estimating the incentive payment |
|  | 0.1, 0.2, 0.3, 0.4 | Predefined sensing cost for each task |
|  | 10, 10, 10, 10 | Predefined profit for each sensing task |
|  | 5, 5, 5, 5 | Predefined requirement for each sensing task |
| β | 0.3 | Control parameter for estimating the learning value ℨ |
|  | 0.3 | Discount factor for estimating the learning value ℨ |

Step 2. At the initial time, the learning values of the VCS game and the learning values ℨ of the VSS game in the RSUs are equally distributed. This initial estimate ensures that every RSU strategy is equally likely to be selected at the beginning of the VCS and VSS games.

Step 3. During the VCS game, the proposer RSU selects its strategy to maximize its payoff according to (1), (3), and (4). As responders, vehicles select their strategies to maximize their payoffs according to (2) while considering their current virtual money (Γ).

Step 4. At every time step, the RSU adjusts its learning values and the probability distribution based on equations (3) and (4).

Step 5. During the VRS game, individual vehicles estimate the wireless link states according to equation (5). In each time period, the link states are estimated online based on the vehicles’ relative distance and speed.

Step 6. During the VRS game, the source vehicle configures a multihop routing path using the Bellman–Ford algorithm based on equation (6). The source vehicle’s payoff (U) is determined according to (7) while considering its current virtual money (Γ).

Step 7. During the VSS game, the proposer RSU selects its strategy to maximize its payoff according to (11). As responders, vehicles select their strategies to maximize their combined payoff (CP) according to (9) while adjusting each bargaining power (ψ) based on equation (10).

Step 8. At every time step, the RSU adjusts the learning values (ℨ(·)) and the probability distribution according to equations (12) and (13).

Step 9. Based on the interactive feedback process, the dynamics of our VCS, VRS, and VSS games cause a cascade of interactions among the game players, who choose their best strategies in an online distributed manner.

Step 10. Under the dynamic VANET environment, the individual game players constantly self-monitor for the next game process; go to Step 3.
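Steps 5 and 6 can be illustrated with a short sketch. The link-state formula below, a weighted mix (α = 0.5 from Table 1) of how close two vehicles are relative to the 500 m coverage range and how similar their speeds are, is an assumed stand-in for equation (5). The edge cost -log(link state) is likewise an assumption, chosen so that the Bellman–Ford shortest path is the path with the highest product of link states; it is not the paper's equation (6). The vehicle positions and speeds in the example are hypothetical.

```python
import math

ALPHA = 0.5                       # weight between distance and velocity (Table 1)
RANGE_M = 500.0                   # maximum coverage range of a vehicle (Section 4.1)
MAX_SPEED_GAP_KMH = 108.0 - 36.0  # largest speed difference among the simulated speeds

def link_state(distance_m, speed_a_kmh, speed_b_kmh, alpha=ALPHA):
    """Assumed link quality in [0, 1]: closer vehicles with similar speeds score higher."""
    if distance_m >= RANGE_M:
        return 0.0  # out of radio range: no usable link
    closeness = 1.0 - distance_m / RANGE_M
    gap = min(abs(speed_a_kmh - speed_b_kmh), MAX_SPEED_GAP_KMH)
    similarity = 1.0 - gap / MAX_SPEED_GAP_KMH
    return alpha * closeness + (1.0 - alpha) * similarity

def bellman_ford_route(n_nodes, links, source, destination):
    """Return (path, product of link states) for the most reliable path, or (None, 0.0)."""
    edges = []
    for u, v, s in links:
        if s > 0.0:
            cost = -math.log(s)  # non-negative because 0 < s <= 1
            edges += [(u, v, cost), (v, u, cost)]  # V2V links treated as symmetric
    dist = [math.inf] * n_nodes
    prev = [None] * n_nodes
    dist[source] = 0.0
    for _ in range(n_nodes - 1):  # standard Bellman-Ford relaxation rounds
        changed = False
        for u, v, c in edges:
            if dist[u] + c < dist[v]:
                dist[v], prev[v] = dist[u] + c, u
                changed = True
        if not changed:
            break  # distances are stable, so stop early
    if math.isinf(dist[destination]):
        return None, 0.0
    path = [destination]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], math.exp(-dist[destination])

# Hypothetical vehicles: (position along the road in m, speed in km/h).
vehicles = [(0, 72), (300, 72), (450, 108), (700, 72)]
links = []
for i in range(len(vehicles)):
    for j in range(i + 1, len(vehicles)):
        (xi, vi), (xj, vj) = vehicles[i], vehicles[j]
        links.append((i, j, link_state(abs(xi - xj), vi, vj)))
route, reliability = bellman_ford_route(len(vehicles), links, 0, 3)
print(route, round(reliability, 3))
```

Here the direct link between vehicles 0 and 3 is out of range, and relaying through vehicle 1, which shares the source's speed, beats the path through the faster vehicle 2.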

4. Performance Evaluation

4.1. Simulation Setup

- (i) The simulated system was assumed to be a TDMA packet system for VANETs.

- (ii) Vehicles passing each RSU arrived according to a Poisson process with rate ρ, which was varied from 0 to 3.0.

- (iii) Fifty RSUs were distributed randomly over a 100 km road area, and the velocity of each vehicle was randomly selected from 36 km/h, 72 km/h, and 108 km/h.

- (iv) The maximum wireless coverage range of each vehicle was set to 500 m.

- (v) The cloud computation capacity of each RSU was 5 GHz, and one BCSU, the minimum unit of cloud service, corresponded to 20 MHz (i.e., 20 × 10^6 CPU cycles per second) in our system.

- (vi) The number (s) of sensing tasks at each RSU was 4.

- (vii) The source and destination vehicles were selected randomly. Initially, the virtual money (Γ) of each vehicle was set to 100.

- (viii) At the source node, data for dissemination were generated at a rate of λ (packets/s); accordingly, the unit time step in our simulation model is one second.

- (ix) Network performance measures, obtained from 100 simulation runs, are plotted as functions of the vehicle distribution (ρ).
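The randomized parts of this setup can be reproduced with a short sketch. It assumes that ρ is an arrival rate in vehicles per second at each RSU (the unit is not stated above), places the fifty RSUs uniformly along the 100 km road, draws each vehicle's speed uniformly from the three listed values, and computes how many BCSUs fit into the 5 GHz capacity. The function names and the seed are illustrative.

```python
import random

N_RSUS = 50
ROAD_KM = 100.0
SPEEDS_KMH = (36, 72, 108)
CAPACITY_HZ = 5e9  # cloud computation capacity per RSU: 5 GHz
BCSU_HZ = 20e6     # one BCSU: 20 MHz

def bcsus_per_rsu():
    """Number of basic cloud service units that fit into one RSU's capacity."""
    return int(CAPACITY_HZ // BCSU_HZ)

def place_rsus(rng):
    """Positions (km) of RSUs drawn uniformly along the road, sorted for convenience."""
    return sorted(rng.uniform(0.0, ROAD_KM) for _ in range(N_RSUS))

def vehicle_arrivals(rho, horizon_s, rng):
    """(arrival time in s, speed in km/h) for vehicles passing one RSU.

    Exponential inter-arrival times with rate rho yield a Poisson arrival process.
    """
    arrivals = []
    if rho <= 0:
        return arrivals  # rho = 0: no vehicles arrive
    t = rng.expovariate(rho)
    while t <= horizon_s:
        arrivals.append((t, rng.choice(SPEEDS_KMH)))
        t += rng.expovariate(rho)
    return arrivals

rng = random.Random(42)
print(bcsus_per_rsu(), len(place_rsus(rng)), len(vehicle_arrivals(3.0, 10.0, rng)))
```

Under these assumptions each RSU can sell at most 250 BCSUs at once, and roughly ρ vehicles pass an RSU per simulated second.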

To demonstrate the validity of our proposed method, we measured the cloud service success ratio, normalized dissemination throughput, and crowdsensing success probability. Table 1 shows the control factors and coefficients used in the simulation. Each parameter has its own characteristics [6].

4.2. Results and Discussion

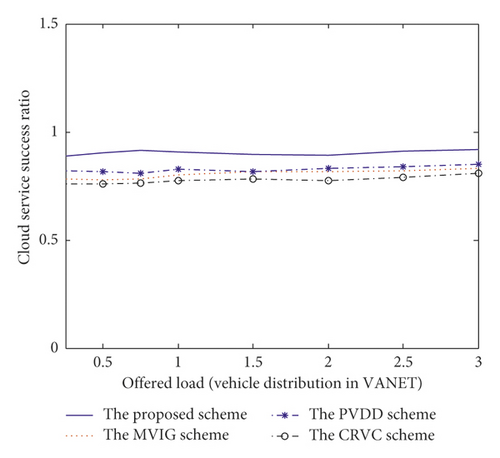

Figure 1 compares the cloud service success ratio of each scheme. In this study, the cloud service success ratio is the fraction of cloud services that are completed successfully; it is a key performance measure of the VCS operation. As shown in Figure 1, the cloud service success ratios of all schemes are similar to each other. However, the proposed scheme adopts an interactive environmental feedback mechanism, and the RSUs in our scheme adaptively adjust their VCS costs. This approach improves the VCS performance over the existing MVIG, PVDD, and CRVC schemes, so our scheme outperforms the existing methods from low to high vehicle distribution intensities.

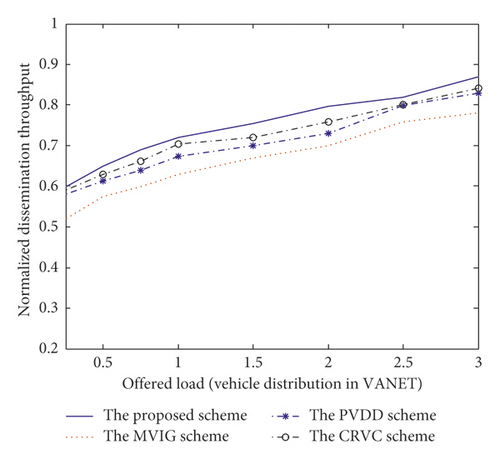

Figure 2 compares the normalized dissemination throughput in VANETs. Network throughput is typically measured in bits per second. In this study, the normalized dissemination throughput is defined as the ratio of the amount of data successfully received by the destination vehicles to the total amount of data generated by the source vehicles. The throughput improvement achieved by the proposed scheme results from our game paradigm: during VRS operations, each vehicle in the proposed scheme can select the most efficient routing path with real-time adaptability and self-flexibility. Hence, our scheme attains a higher dissemination throughput than existing approaches, which are designed as one-sided, one-way methods and do not adapt effectively to dynamic and diverse VANET conditions.
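The throughput definition above is a simple ratio of totals. A minimal sketch, assuming hypothetical per-flow records of generated and delivered data amounts (the record layout and the function name are illustrative), is:

```python
def normalized_dissemination_throughput(flows):
    """flows: iterable of (generated_bits, delivered_bits) per source-destination pair.

    Returns total delivered data divided by total generated data,
    or 0.0 if nothing was generated.
    """
    generated = delivered = 0
    for gen, got in flows:
        if not 0 <= got <= gen:
            raise ValueError("delivered data must lie between 0 and the generated amount")
        generated += gen
        delivered += got
    return delivered / generated if generated else 0.0

print(normalized_dissemination_throughput([(1000, 800), (500, 500)]))
```

Summing before dividing weights each flow by its size, so a large flow that loses data lowers the ratio more than a small one would.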

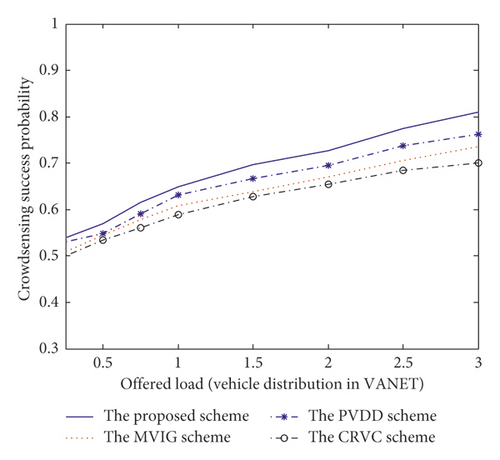

The crowdsensing success probability, shown in Figure 3, represents the efficiency of the VANET system. In the proposed scheme, we employ the learning-based triple-plane game model to make control decisions in a distributed online manner. Through the interactive operations of the VCS, VSS, and VRS, our game-based approach improves the crowdsensing success probability more effectively than the other schemes. The simulation results in Figures 1 to 3 demonstrate that the proposed scheme attains an appropriate performance balance, whereas the MVIG [21], PVDD [22], and CRVC [23] schemes cannot offer this outcome under widely varying VANET situations.

5. Summary and Conclusions

VANETs using vehicle-based sensory technology are becoming increasingly popular. They can provide vehicular sensing, routing, and cloud services for 5G network applications, so the design of next-generation VANET management schemes is important for satisfying these new demands. Herein, we focused on the paradigm of learning algorithms and game theory to design the VCS, VRS, and VSS algorithms. By combining these algorithms, we developed a new triple-plane bargaining game model that provides an appropriate performance balance. During VANET operations, the RSUs learned better strategies under dynamic VANET environments, and the vehicles considered the mutual interactions of their strategies. As game players, they used the obtained information to adapt to the dynamics of the VANET environment and made control decisions intelligently through self-adaptation. Given the unique features of VANETs, our joint design approach is suitable for providing satisfactory services in environments with incomplete information. In the future, we would like to consider privacy issues, such as differential privacy, during VANET operation. Furthermore, we will investigate probabilistic algorithms to estimate the service quality of sensing, routing, and cloud services. We also plan to investigate smart city applications, in which different sensory information about a given area can be combined to provide a complete view of smart city development.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2018-2018-0-01799) supervised by the IITP (Institute for Information and Communications Technology Promotion) grant funded by the Korea government (MSIT) (no. 2017-0-00498; A Development of Deidentification Technique Based on Differential Privacy).

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.