A Perception-Driven Transcale Display Scheme for Space Image Sequences

Abstract

With the rapid development of multimedia technology, obtaining high-quality motion reproduction of space targets has attracted much attention in recent years. This paper proposes a Perception-driven Transcale Display Scheme, which significantly improves the awareness of multimedia processing. The scheme contains two important modules: transcale description based on visual saliency, and perception-driven display of space image sequences. The former describes the transcale features of space targets through three algorithms: attention region computing, frame rate conversion, and image resolution resizing. Building on this, the latter focuses on high-quality display of space movements at different scales through three algorithms, namely, target trajectory computing, space transcale display, and space movement display. Extensive quantitative and qualitative experimental evaluations demonstrate the effectiveness of the proposed scheme.

1. Introduction

With the rapid development of computer technology, spatial rendezvous and docking require a large amount of image information to be exchanged between navigation information systems. As an important form of information, space image sequences play an important role in tasks such as docking control, docking mechanism design, and track interactive control, and they need to be transmitted between these navigation information systems to measure the relative position, relative velocity, and relative attitude of spacecraft [1–4]. Transcale is an important feature of space image sequences, so the multimedia processing methods they demand differ from those of traditional image processing. At present, the study of space image sequences is still at an initial stage, and further exploration is needed. Specifically, there are two main unresolved problems. The first is how to achieve high-quality monitoring of space targets; for example, continuous attention to space targets should be strengthened because details of space movements are lacking. The second is how to improve image processing capabilities, such as smooth motion reproduction, accurate trajectory description, and different display effects, all of which should be well exhibited to enhance the awareness of multimedia processing. To solve these problems, transcale display methods [5–7] have emerged as a new direction in image processing, and the main challenges are as follows.

(1) Change of the attention scale: Existing image processing methods use only the inherent characteristics of image sequences and lack the attention perceived by observers. The description needs to shift from the whole sequence to the space targets, so as to better capture fine details of space image sequences.

(2) Change of the frame rate scale: In existing frame interpolation methods, the details of the interpolated frames are unclear when the frame rate changes, which affects the smoothness of space image sequences. New methods are therefore needed to obtain optimal interpolated frames.

(3) Change of the resolution scale: Because screen sizes differ across devices, the same image sequence frequently needs to be displayed at different sizes. However, existing resizing methods do not change the scale of important contents, so new methods are needed to improve the definition of important contents while guaranteeing the global resizing effect.

Facing these challenges, we propose a Perception-driven Transcale Display Scheme (PTDS) to achieve high-quality space motion reproduction. The main contributions are threefold. First, we construct a transcale display framework from a different perspective, which significantly improves the awareness of multimedia processing. This framework contains two important modules: transcale description based on visual saliency, and perception-driven display of space image sequences. Second, the transcale description module, which is the core of PTDS, is presented to solve the transcale problems. Finally, the perception-driven display module is proposed to realize trajectory description and movement display for space targets under changing scales. In summary, PTDS could serve navigation information systems.

The rest of the paper is structured as follows. Section 2 discusses the framework of PTDS. Sections 3 and 4 describe in detail the formulation of PTDS, namely, transcale description and perception-driven display. Section 5 presents experimental work carried out to demonstrate the effectiveness of PTDS. Section 6 concludes the paper.

2. The Framework of PTDS

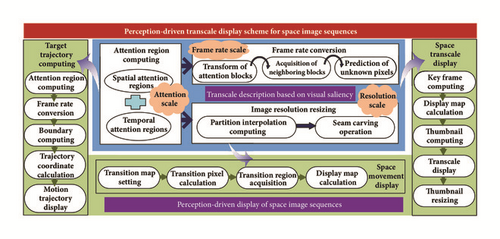

Figure 1 shows the framework of PTDS, which contains two important modules: transcale description and perception-driven display. The first module improves the definition of the important contents perceived by observers as different scales change, including the attention scale, the frame rate scale, and the resolution scale. Determining the attention regions from the observers' viewpoint therefore becomes a key issue. Recently, visual saliency techniques have been increasingly used in the multimedia field [8–11]. There are three reasons for using this technique in image processing. First, it enables selective image description and strengthens the description of moving-target details, so that computing resources for image analysis and synthesis can be allocated by priority. Second, it supports the construction of a flexible description scheme by discriminating salient regions from non-salient regions. Finally, it improves the cognitive and decision-making ability for space image sequences. Therefore, we adopt visual saliency to address this key issue. This module contains the following three parts:

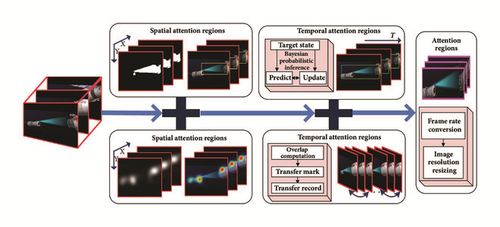

(1) Attention region computing: It focuses on the problem of the change of the attention scale and then captures the visual attention regions for space image sequences. The calculation process mainly includes two aspects: spatial attention regions and temporal attention regions.

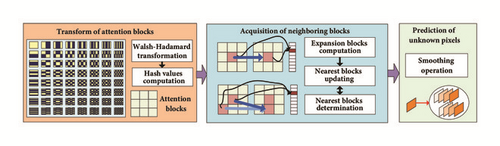

(2) Frame rate conversion: It focuses on the problem of the change of the frame rate scale and improves the motion smoothness of space sequences. The calculation process mainly includes three steps: transform of attention blocks, acquisition of neighboring blocks and prediction of unknown pixels.

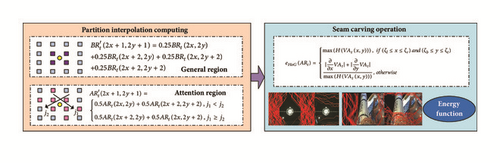

(3) Image resolution resizing: It focuses on the problem of the change of the resolution scale and improves the definition of space sequences with different resolutions under the guarantee of the global visual effects. The calculation process mainly includes two steps: partition interpolation computing and seam carving operation.
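The resizing step above relies on a seam carving operation. As a rough illustration of that building block only, the Python sketch below implements standard seam removal in the style of Avidan and Shamir: a gradient-magnitude energy map, a dynamic-programming pass that finds the minimum-energy vertical seam, and deletion of that seam. It does not include the partition interpolation step or any modification made by the authors, and all function names are hypothetical.

```python
import numpy as np


def energy_map(gray: np.ndarray) -> np.ndarray:
    """Gradient-magnitude energy |dI/dx| + |dI/dy| of a 2-D image."""
    gy, gx = np.gradient(gray.astype(float))  # derivatives along rows, then columns
    return np.abs(gx) + np.abs(gy)


def find_vertical_seam(energy: np.ndarray) -> np.ndarray:
    """Column index, per row, of the 8-connected vertical seam with minimum total energy."""
    h, w = energy.shape
    cost = energy.copy()
    back = np.zeros((h, w), dtype=int)
    for i in range(1, h):
        for j in range(w):
            lo, hi = max(j - 1, 0), min(j + 2, w)
            k = lo + int(np.argmin(cost[i - 1, lo:hi]))  # best parent among 3 neighbours
            back[i, j] = k
            cost[i, j] += cost[i - 1, k]
    seam = np.zeros(h, dtype=int)
    seam[-1] = int(np.argmin(cost[-1]))
    for i in range(h - 1, 0, -1):  # backtrack from the bottom row
        seam[i - 1] = back[i, seam[i]]
    return seam


def remove_vertical_seam(img: np.ndarray, seam: np.ndarray) -> np.ndarray:
    """Delete one pixel per row (works for gray or colour images)."""
    h, w = img.shape[:2]
    keep = np.ones((h, w), dtype=bool)
    keep[np.arange(h), seam] = False
    return img[keep].reshape(h, w - 1, *img.shape[2:])


def carve_width(gray: np.ndarray, n_seams: int) -> np.ndarray:
    """Reduce the width by n_seams, recomputing the energy after each removal."""
    out = gray
    for _ in range(n_seams):
        out = remove_vertical_seam(out, find_vertical_seam(energy_map(out)))
    return out
```

Low-energy (flat) columns are removed first, so strong edges such as object boundaries tend to survive the resizing.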

Building on transcale description, the perception-driven display module is presented to achieve high-quality display of spatial motion as the scales change. This module is an application of the first module and could serve the space navigation information processing system. It also contains the following three parts:

(1) Target trajectory computing: It could adaptively display the motion trajectory of space targets according to the setting of scale parameters. The calculation process mainly includes five steps: attention region computing, frame rate conversion, boundary computing, trajectory coordinate calculation, and motion trajectory display.

(2) Space transcale display: It obtains clear motion details at different time scales and spatial scales. The calculation process mainly includes five steps: key frame computing, display map calculation, thumbnail computing, transcale display, and thumbnail resizing.

(3) Space movement display: It presents an overview of the motion of space targets in a narrative way. The calculation process mainly includes four steps: transition map setting, transition pixel calculation, transition region acquisition, and display map calculation.

3. The Module of Transcale Description

In this section, we will go over the individual parts of transcale description for space image sequences, including attention region computing, frame rate conversion, and image resolution resizing.

3.1. Attention Region Computing

Then we quantify the pixels ξxj,yj with larger spatial saliency values and put them into the set SALt = {ξxj,yj}. Because such a pixel may lie in a very small, relatively unimportant region, it must be eliminated. There are three elimination rules, as follows.

Rule 1. For ∀ε, if and , then is eliminated from SALt, where ε = 1,2, …s.

Rule 2. For ∀ε′ and ∀ε, if and , then is eliminated from SALt.

Rule 3. For ∀ε′′ and ∀ε, if and , then is eliminated from SALt.

Here ε′ = 1, 2, …, (winitial − j), ε″ = 1, 2, …, (hinitial − j), and winitial and hinitial are the initial width and height of the image sequence. On this basis, the boundary values of VAS(It) can be obtained using a bounding box.
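The bounding-box step can be sketched as follows. This is a minimal Python illustration under stated assumptions: the saliency map is given, the thresholds `sal_thresh` and `diff_thresh` are hypothetical, simple frame differencing stands in for the temporal attention computation, the elimination Rules 1 to 3 are not reproduced, and the final attention region is assumed to be the union of the spatial and temporal boxes.

```python
import numpy as np
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (top, bottom, left, right), inclusive pixel indices


def bounding_box(mask: np.ndarray) -> Optional[Box]:
    """Smallest axis-aligned box containing every True pixel of a 2-D mask."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(rows.min()), int(rows.max()), int(cols.min()), int(cols.max())


def union_box(a: Optional[Box], b: Optional[Box]) -> Optional[Box]:
    """Smallest box covering both inputs (None means 'no region')."""
    if a is None:
        return b
    if b is None:
        return a
    return min(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), max(a[3], b[3])


def attention_box(saliency: np.ndarray, prev_frame: np.ndarray, frame: np.ndarray,
                  sal_thresh: float = 0.5, diff_thresh: float = 25.0) -> Optional[Box]:
    """Assumed combination: spatial box from thresholded saliency, temporal box from
    thresholded frame difference (a stand-in), merged by union."""
    spatial = bounding_box(saliency >= sal_thresh)
    diff = np.abs(frame.astype(float) - prev_frame.astype(float))
    temporal = bounding_box(diff > diff_thresh)
    return union_box(spatial, temporal)
```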

3.2. Frame Rate Conversion

To enhance the motion smoothness of space image sequences, frame rate conversion is performed. Its core is computing high-quality intermediate frames, that is, calculating the pixel values of the intermediate frames. A partition method is used for this calculation: pixels in non-attention regions keep their values, while pixels in attention regions are computed accurately in three main steps, namely, transform of attention blocks, acquisition of neighboring blocks, and prediction of unknown pixels, as shown in Figure 3. Specifically, the attention regions in any two consecutive frames, VA(It) and VA(It+1), are divided into μ × μ overlapping image blocks, each of which is called an attention block (VAB for short). We tested three values of μ, namely, 8, 16, and 32, and the experimental results show that μ = 16 is appropriate. The set of VABs is denoted as and the computation procedure for the contained pixels is elaborated as follows.
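The partition into overlapping μ × μ attention blocks might look like the following Python sketch. The source does not state the amount of overlap, so the stride of μ/2 (8 pixels for μ = 16) is an assumption, as is dropping partial blocks at the right and bottom borders.

```python
import numpy as np


def overlapping_blocks(region: np.ndarray, mu: int = 16, stride: int = 8):
    """Split a 2-D region into mu x mu blocks whose top-left corners lie every
    `stride` pixels; returns the stacked blocks and their (row, col) corners."""
    h, w = region.shape
    blocks, corners = [], []
    for y in range(0, h - mu + 1, stride):
        for x in range(0, w - mu + 1, stride):
            blocks.append(region[y:y + mu, x:x + mu])
            corners.append((y, x))
    if not blocks:  # region smaller than one block
        return np.empty((0, mu, mu), dtype=region.dtype), []
    return np.stack(blocks), corners
```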

Then each transformed result is assigned a hash value to accelerate VAB projection and is stored in the corresponding hash table TBm[hm(bz)] (1 ≤ m ≤ ϕ).
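The hashing step resembles locality-sensitive hashing. Because the transform of equation (8) is not given here, the sketch below substitutes a zero-mean, unit-norm flattened block as the feature and uses random-projection hashes hm(b) = ⌊(a·b + c)/w⌋, grouped into ϕ tables. Both the feature and the hash family are assumptions chosen for illustration, not the authors' definitions.

```python
import numpy as np
from collections import defaultdict


def block_feature(block: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the equation (8) transform: zero-mean, unit-norm vector."""
    v = block.astype(float).ravel()
    v -= v.mean()
    return v / (np.linalg.norm(v) + 1e-8)


class BlockLSH:
    """phi hash tables TB_1..TB_phi; each key h_m(b) is a k-tuple of random-projection hashes."""

    def __init__(self, dim: int, phi: int = 4, k: int = 6,
                 bucket_width: float = 0.5, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.a = rng.normal(size=(phi, k, dim))                  # projection directions
        self.c = rng.uniform(0.0, bucket_width, size=(phi, k))   # random offsets
        self.w = bucket_width
        self.tables = [defaultdict(list) for _ in range(phi)]

    def keys(self, feature: np.ndarray):
        # floor((a . b + c) / w) for every table m and every projection within it
        proj = np.floor((self.a @ feature + self.c) / self.w).astype(int)
        return [tuple(row) for row in proj]

    def insert(self, idx: int, feature: np.ndarray) -> None:
        for table, key in zip(self.tables, self.keys(feature)):
            table[key].append(idx)

    def candidates(self, feature: np.ndarray) -> set:
        """Indices of stored blocks sharing a bucket with `feature` in any table."""
        found = set()
        for table, key in zip(self.tables, self.keys(feature)):
            found.update(table.get(key, []))
        return found
```

In use, one would insert the blocks of VA2 and query with the blocks of VA1; similar blocks are likely, though not guaranteed, to share at least one bucket.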

Finally, the predicted values of the pixels in the attention regions are computed through a smoothing operation on the obtained nearest blocks (for the detailed algorithm, see Algorithm 1).
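One simple reading of "a smoothing operation on the obtained nearest blocks" is to average the candidate blocks that lie closest to a query block. The sketch below does exactly that with a sum-of-squared-differences (SSD) distance; both the distance and the plain average are assumptions.

```python
import numpy as np


def predict_block(query: np.ndarray, candidates, n_nearest: int = 3) -> np.ndarray:
    """Average the n_nearest candidate blocks closest to `query` in SSD."""
    cands = np.asarray(candidates, dtype=float)
    diff = cands - np.asarray(query, dtype=float)
    ssd = (diff ** 2).reshape(len(cands), -1).sum(axis=1)
    nearest = np.argsort(ssd, kind="stable")[:n_nearest]
    return cands[nearest].mean(axis=0)  # smoothing = plain mean (assumed)
```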

Algorithm 1: Frame rate conversion algorithm.

Input: A spatial image sequence I

Output: Converted image sequence I′

(1) Compute the number of frames of I as len.

(2) Take the first frame of I as the current frame I1.

(3) Take the frame following I1 as I2 and calculate their attention regions VA1 and VA2 according to the method in Section 3.1.

(4) Divide VA1 and VA2 into μ × μ image blocks ST.

(5) Compute the transformed value of each ST using equation (8) and create the hash tables.

(6) FOR i = 1 … the number of hash tables

FOR each transformed ST in VA1

Obtain the expansion blocks according to the spatial and temporal expansion rules;

Compute the values of the pixels in VA1;

END FOR

END FOR

(7) Merge all the transformed ST.

(8) Compute the interpolated frame by combining the attention region with the non-attention region.

(9) Take the frame following I2 as I3, and set I3 → I2.

(10) Repeat steps (3) to (9) until the current frame number reaches (len − 1).

(11) Synthesize the output sequence from the interpolated frames and the original frames.

Return I′
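The outer loop of Algorithm 1 (steps (2) and (8) to (11)) can be sketched in Python as below. It doubles the frame rate by inserting one frame between each consecutive pair, which yields 2·len − 1 frames. The per-block computation of steps (4) to (7) is replaced by a hypothetical `average_predictor` placeholder; taking non-attention pixels from the earlier frame and using the union of both attention masks are also assumptions.

```python
import numpy as np
from typing import Callable, List

Predictor = Callable[[np.ndarray, np.ndarray, np.ndarray], np.ndarray]


def average_predictor(f1: np.ndarray, f2: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Placeholder for steps (4)-(7): plain average of the two frames,
    NOT the block/hash method of the paper."""
    return (f1.astype(float) + f2.astype(float)) / 2.0


def convert_frame_rate(frames: List[np.ndarray], masks: List[np.ndarray],
                       predict: Predictor = average_predictor) -> List[np.ndarray]:
    """Interleave original frames with one interpolated frame per consecutive pair."""
    out = [frames[0]]
    for t in range(len(frames) - 1):
        f1, f2 = frames[t], frames[t + 1]
        attn = masks[t] | masks[t + 1]      # attention pixels in either frame (assumed)
        mid = f1.astype(float).copy()       # non-attention pixels keep their values
        pred = predict(f1, f2, attn)
        mid[attn] = pred[attn]              # only attention pixels are re-estimated
        out.extend([mid.astype(f1.dtype), f2])
    return out
```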

3.3. Image Resolution Resizing

4. The Module of Perception-Driven Display

In this section, we describe in detail the formulation of the proposed perception-driven display module, which contains three algorithms, namely, target trajectory computing, space transcale display, and space movement display. To some extent, all of them are applications of the transcale description module and could be applied directly in navigation information systems.

(1) Target Trajectory Computing. To display the motion trajectories of space targets at different scales, we present a target trajectory computing approach consisting of the following five steps:

Step 1. For each frame It (1 ≤ t ≤ n), VAt is computed using the attention region computing method.

Step 2. Using the frame rate conversion method, I[1, n] is converted into HI[1, N′] = {HIt} (1 ≤ t ≤ N′, N′ > n), in which N′ is the number of converted frames, and VAt is updated for each HIt.

Step 3. The four boundary values are computed for each VAt, including left boundary BVL, right boundary BVR, top boundary BVT, and bottom boundary BVB:

Step 4. For each VAt, trajectory coordinates are calculated, including the value of horizontal coordinate VArow and the value of vertical coordinate VAcolumn:

Step 5. The motion trajectory ATυ at scale υ is computed using the following formula:
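Because the formulas for Steps 3 to 5 are not reproduced above, the following Python sketch fills them in with assumptions consistent with Section 5.4, where trajectory positions are the centers of the attention regions and a larger υ yields fewer points: the boundaries are the extreme column and row indices of each attention mask, the trajectory point is the box center, and scale υ keeps every υ-th frame.

```python
import numpy as np


def boundaries(mask: np.ndarray):
    """Assumed BVL, BVR, BVT, BVB: extreme column and row indices of the mask."""
    rows, cols = np.nonzero(mask)
    return cols.min(), cols.max(), rows.min(), rows.max()


def trajectory(masks, scale: int = 1) -> np.ndarray:
    """(horizontal, vertical) center of each attention box, keeping every scale-th frame."""
    centers = []
    for mask in masks:
        bvl, bvr, bvt, bvb = boundaries(mask)
        centers.append(((bvl + bvr) / 2.0, (bvt + bvb) / 2.0))
    return np.array(centers[::scale])
```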

(2) Space Transcale Display. The aim of transcale display is not only to show motion pictures at different time scales but also to display them at different resolution scales. This algorithm mainly includes the following five steps:

Step 1. For It, the key frames Kj (j = 1 … m) are computed, and the corresponding attention regions VAKj are obtained.

Step 2. The transcale display map KM is captured by merging the attention regions under the condition of different key frames.

Step 3. For each VAKj (j = 1 … m), the corresponding thumbnail VUj is computed by reducing the original image to a common resolution.

Step 4. The transcale display map KM′ for It is computed:

Step 5. Using the image resolution resizing method, VUj can also be displayed with high quality at different resolutions.
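A minimal Python sketch of Steps 1 to 3, assuming the key frames and their attention boxes (top, bottom, left, right) are already known: each attention region is cropped, reduced to a common thumbnail size with nearest-neighbor sampling, and the thumbnails are placed side by side. The side-by-side layout and the resampling method are assumptions; the formula for the display map KM′ is not specified here.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbor resampling of a 2-D (or H x W x C) image."""
    h, w = img.shape[:2]
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]


def thumbnail_strip(key_frames, boxes, size=(32, 32)) -> np.ndarray:
    """Crop each key frame's attention box, resize it to `size`, and concatenate horizontally."""
    thumbs = []
    for frame, (top, bottom, left, right) in zip(key_frames, boxes):
        crop = frame[top:bottom + 1, left:right + 1]
        thumbs.append(resize_nearest(crop, *size))
    return np.concatenate(thumbs, axis=1)
```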

(3) Space Movement Display. To outline the motion process of space targets, a movement display algorithm is proposed that focuses on smooth transitions of space movement. Specifically, let Kp and Kq be consecutive key frames in It, and let their transition regions be KRp(u, v) and KRq(u′, v′), respectively, in which u, u′ ∈ [1, height], v ∈ [width − r + 1, width], v′ ∈ [1, r], r denotes the transition radius, and height and width are the current resolution of the key frames. The algorithm consists of the following four steps:

Step 1. Both KRp and KRq are resized to the target resolution height × 2r, yielding the transition region maps TMapi (i = p, q).

Step 2. The value of pixel KR(u,v) in the transition region is computed:

in which c1 and c2 are transition parameters, c1 = 1 − c2, c2 = v/(2r), and x and y are the position coordinates.

Step 3. Repeat Steps 1 and 2 until the transition regions of all key frames are calculated, and then record the new key frames K′.

Step 4. All the obtained key frames are merged to form display map MD:
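Since the Step 2 formula is not reproduced above, the Python sketch below assumes the linear cross-fade implied by the transition parameters, KR(u, v) = c1·TMapp(u, v) + c2·TMapq(u, v) with c2 = v/(2r) and c1 = 1 − c2. It also assumes that Step 1 widens each r-column region to 2r columns by nearest-neighbor column repetition and that Step 4 concatenates the untouched parts of the key frames around each blended strip.

```python
import numpy as np


def transition(kp: np.ndarray, kq: np.ndarray, r: int) -> np.ndarray:
    """Blend the right r columns of kp with the left r columns of kq over 2r columns."""
    w = kp.shape[1]
    tmap_p = np.repeat(kp[:, w - r:].astype(float), 2, axis=1)  # height x 2r
    tmap_q = np.repeat(kq[:, :r].astype(float), 2, axis=1)      # height x 2r
    v = np.arange(1, 2 * r + 1)                                  # v = 1 .. 2r
    c2 = v / (2.0 * r)
    c1 = 1.0 - c2
    shape = (1, 2 * r) + (1,) * (kp.ndim - 2)                    # broadcast over colour
    return c1.reshape(shape) * tmap_p + c2.reshape(shape) * tmap_q


def merge_key_frames(frames, r: int) -> np.ndarray:
    """Assumed Step 4: chain key frames left to right with blended transition strips."""
    out = frames[0].astype(float)
    for nxt in frames[1:]:
        kr = transition(out, nxt, r)
        out = np.concatenate([out[:, :-r], kr, nxt[:, r:].astype(float)], axis=1)
    return out
```

Because c2 rises linearly from 1/(2r) to 1 across the strip, the visible seam that direct fusion leaves between key frames is replaced by a gradual fade.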

5. Experimental Results and Discussion

In this section, we conduct quantitative and qualitative experiments to evaluate the performance of PTDS, covering the transcale performance (Sections 5.1, 5.2, and 5.3) and the display performance (Sections 5.4, 5.5, and 5.6). We downloaded space videos from Youku and segmented them into video clips. We then converted these clips into image sequences, which form the dataset shown in Table 1. All experiments were run on the MATLAB platform on a PC with a 2.60 GHz Intel(R) Pentium(R) Dual-Core CPU and 1.96 GB of main memory.

| Sequence ID | Space image sequence | Initial resolution (width × height) | Number of frames |
|---|---|---|---|
| S1 | Aircraft automatic control | 320×240 | 204 |
| S2 | Aircraft automatic homing | 320×240 | 230 |
| S3 | Aircraft lift | 640×480 | 238 |
| S4 | Aircraft docking | 640×480 | 277 |
| S5 | Spacecraft detection | 352×240 | 157 |
| S6 | Spacecraft launch | 352×240 | 101 |
| S7 | Launch base | 320×145 | 208 |
| S8 | Spacecraft flight | 576×432 | 161 |
| S9 | Aircraft flight | 1024×436 | 130 |

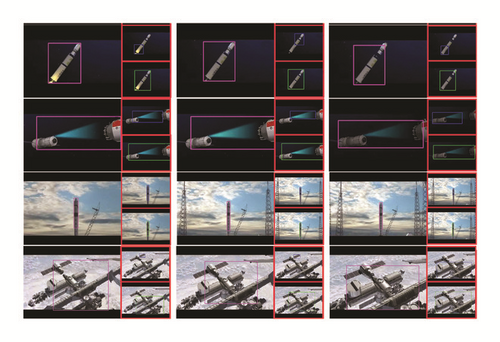

5.1. Evaluation of Attention Scale

Figure 5 shows the attention regions obtained with our method; each row shows the results for a different space image sequence, and each column shows sample frames from that sequence. Each subfigure consists of three parts: the purple-bordered region on the left marks the attention regions, the blue-bordered region on the upper right marks the spatial attention region, and the green-bordered region on the lower right marks the temporal attention region. Specifically, the first row shows the results for frames 100, 128, and 134 of S1, and the second row shows those for frames 119, 121, and 135 of S2. These rows show that the spatial attention regions capture only part of the space targets, as in the first row, first column. Hence, computing both the spatial and the temporal attention regions is necessary to obtain accurate attention regions, especially for image sequences with large motion amplitude. Moreover, the third row shows the results for frames 10, 24, and 30 of S3, and the last row shows those for frames 8, 45, and 88 of S4. These sequences have relatively complicated backgrounds, which strongly interfere with the detection of attention regions. Nevertheless, the proposed method still obtains good results, which lay the foundation for transcale display.

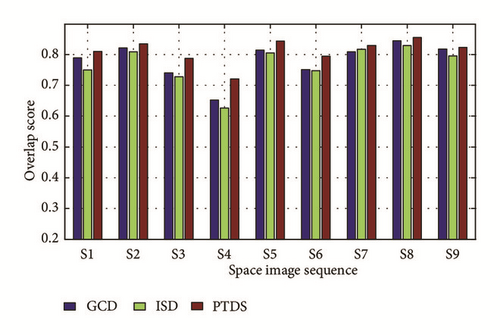

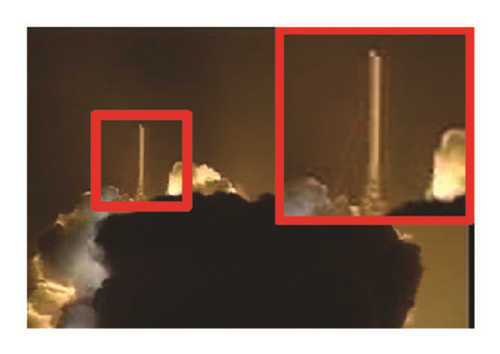

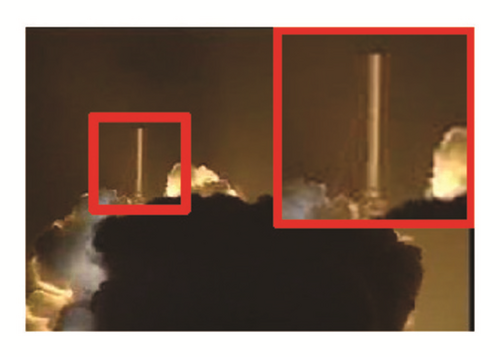

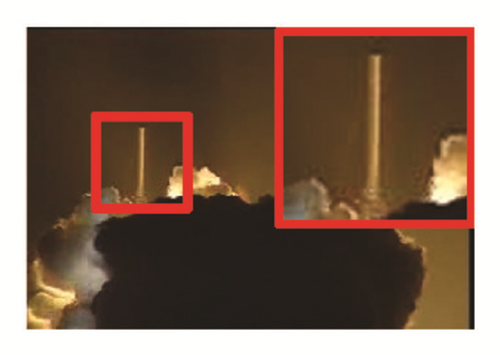

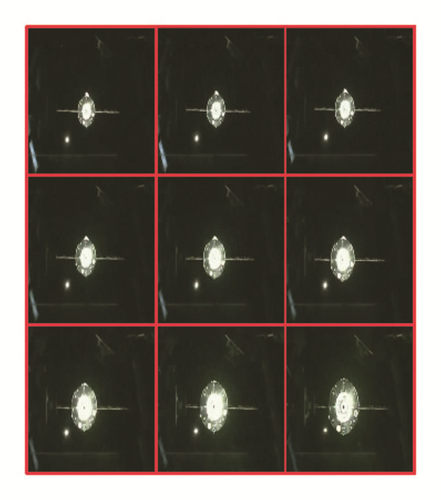

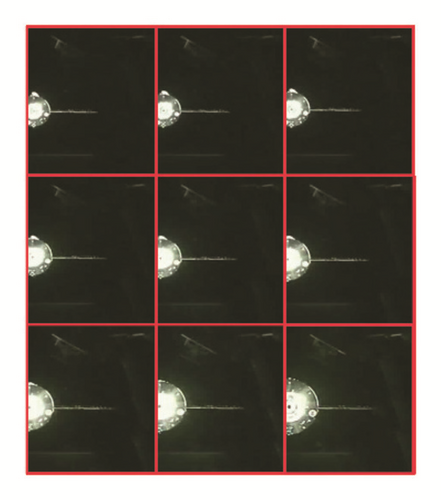

5.2. Evaluation of Frame Rate Scale

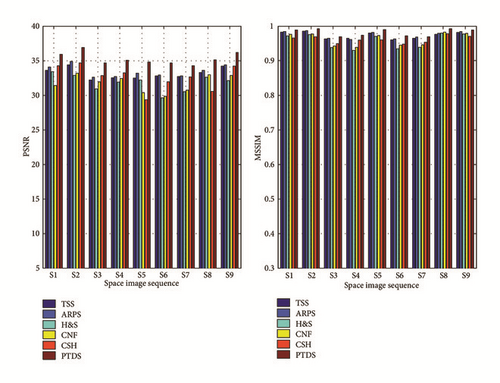

The quality of interpolated frames is a key factor in evaluating the change of the frame rate scale. In the experiments, we removed one of every two consecutive frames from the original image sequence and reconstructed it using different methods, including three-step search (TSS), adaptive rood pattern search (ARPS), Horn and Schunck (H&S), CNF [20], CSH [21], and our proposed method. Figures 7 and 8 show the frame interpolation results for sequences S5 and S6; the red-bordered region of each subfigure highlights the visual differences among the algorithms. TSS and ARPS exhibit poor interpolation, H&S and CNF introduce a suspension effect, CSH produces disappointing results, and PTDS shows clear details of the spacecraft. These figures indicate that the proposed method achieves comparatively better visual quality.

Figure 9 shows the average quantitative values for each image sequence using the different methods. The left part shows the average PSNR values, a traditional quantitative measure of accuracy, and the right part shows the MSSIM results, which assess perceived image quality from the viewpoint of image formation, under the assumption that human visual perception is closely correlated with image structural information. The figure shows that our method achieves the highest average values.
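For reference, PSNR is 10·log10(peak²/MSE). The Python sketch below computes it and wraps it in the evaluation protocol described above: drop every other frame, reconstruct it from its neighbors, and compare with the original. The `interpolate` argument is any hypothetical reconstruction function. MSSIM is not reimplemented here; scikit-image provides `skimage.metrics.structural_similarity` for a comparable structural measure.

```python
import numpy as np


def psnr(ref: np.ndarray, test: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((ref.astype(float) - test.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


def drop_and_reconstruct_psnr(frames, interpolate) -> float:
    """Remove every odd-indexed frame, rebuild it from its two neighbours,
    and return the mean PSNR over the rebuilt frames."""
    scores = []
    for t in range(1, len(frames) - 1, 2):
        estimate = interpolate(frames[t - 1], frames[t + 1])
        scores.append(psnr(frames[t], estimate))
    return float(np.mean(scores))
```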

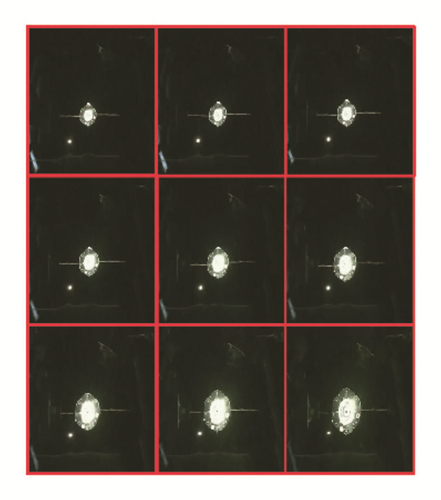

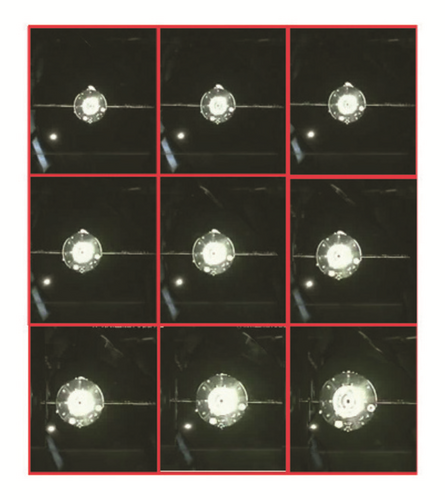

5.3. Evaluation of Resolution Scale

We resize the original image sequences by using the different methods, including scaling, the best cropping, improved seam carving (ISC) [22], and our method, and then the obtained results are compared to evaluate the performance on the change of resolution scales.

Figure 10 compares the results for sequence S7 resized from 320×145 to 500×230. Figure 10(a) shows the original frames 131, 138, 147, 151, 174, 179, 189, 203, and 208. Figure 10(b) shows that with the scaling method, the launch base becomes blurrier than before. Figure 10(c) shows that with the best cropping method, the launch base is only partly displayed, so part of the original information is lost. Figure 10(d) shows that with the improved seam carving method, the prominent part of the launch base becomes smaller than before, indicating that ISC is not suitable for image enlargement. Figure 10(e) shows that our method clearly displays the prominent objects of the original frames and ensures a global visual effect when the resolution scale changes. Similarly, the resizing results for sequence S8 are shown in Figure 11.

5.4. Evaluation of Target Trajectory Computing

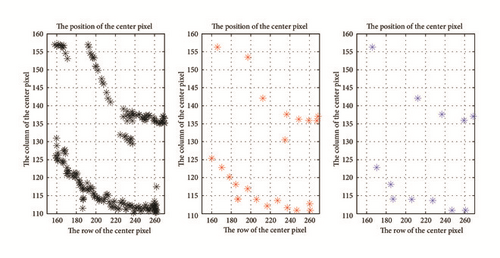

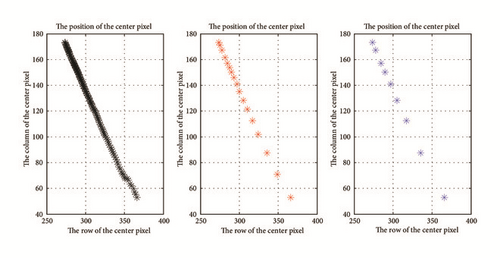

Figure 12 shows the target trajectory of sequence S2 at scales υ = 1, υ = 10, and υ = 20. As υ increases, the movement amplitude of the space targets becomes greater and the trajectory description becomes coarser, which clearly characterizes the changes in the automatic homing movement. Similarly, the target trajectory of sequence S5 is shown in Figure 13.

Figure 14 shows the detailed positions of the target trajectories corresponding to Figure 12, described by the position of the center pixel of the attention regions. The larger the scale υ, the fewer the description points and the rougher the trajectory of the center point, and vice versa. Similarly, Figure 15 shows the target trajectories corresponding to Figure 13. In short, PTDS performs well in displaying space target trajectories at different scales, which can lay a foundation for space tasks such as condition monitoring and motion tracking.

5.5. Evaluation of Space Transcale Display

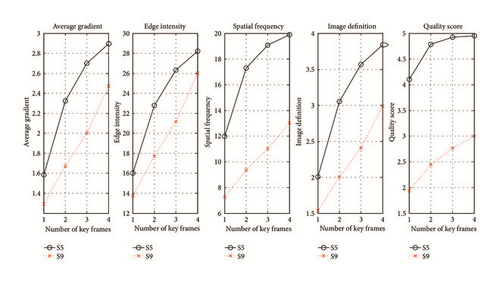

Figure 16 gives the transcale displays for sequences S5 and S9, in which the attention regions are shown in the left part of each subfigure. Figure 16(a) shows both the whole detection process from near to far and the motion details of the space targets. Similarly, Figure 16(b) shows the process of movement from far to near. The figure shows that the proposed space transcale display algorithm clearly exhibits the motion process of the space targets at different scales. In addition, the accompanying attention regions display motion details of the space targets, such as target pose and operating status, at different distances and times, which fully reflects the transcale characteristics of PTDS.

Figure 17 summarizes the objective evaluation values of the space transcale display corresponding to Figure 16, including average gradient, edge intensity, spatial frequency, image definition, and quality score. As the number of key frames increases, the values of these evaluation indexes also increase, indicating that the space transcale display algorithm can obtain high-quality transcale display maps.
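Two of these indexes have widely used textbook definitions, sketched below in Python: average gradient, the mean of sqrt((Δx² + Δy²)/2) over forward differences, and spatial frequency, sqrt(RF² + CF²) built from row and column differences. Normalization conventions vary between papers, so these are illustrative definitions rather than the exact ones behind Figure 17.

```python
import numpy as np


def average_gradient(img: np.ndarray) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    f = img.astype(float)
    dx = f[:-1, 1:] - f[:-1, :-1]  # horizontal forward difference
    dy = f[1:, :-1] - f[:-1, :-1]  # vertical forward difference
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def spatial_frequency(img: np.ndarray) -> float:
    """sqrt(RF^2 + CF^2), with RF/CF the RMS of horizontal/vertical differences."""
    f = img.astype(float)
    rf = np.sqrt(np.mean((f[:, 1:] - f[:, :-1]) ** 2))  # row frequency
    cf = np.sqrt(np.mean((f[1:, :] - f[:-1, :]) ** 2))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))
```

Both indexes grow with the amount of fine detail and edge contrast, which is why they rise when more key frames contribute sharp attention regions to the display map.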

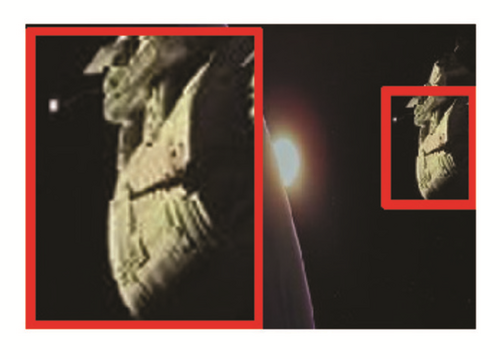

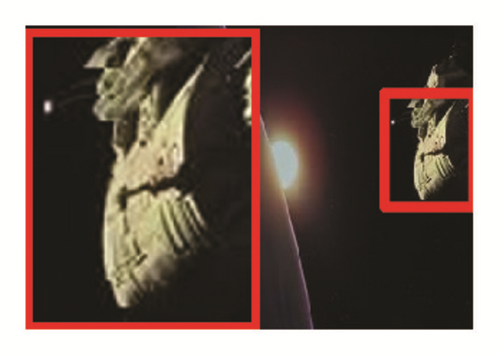

5.6. Evaluation of Space Movement Display

Figure 18 shows the space movement display for space image sequences, in which Figures 18(a), 18(c), and 18(e) correspond to S3, S4, and S6, respectively. Using a narrative diagram, the complete movement of the space targets is clearly exhibited. Figures 18(b), 18(d), and 18(f) show the corresponding transition area details, comparing the direct fusion method (left) with our method (right). On the left, a straight line clearly separates the two key frames, whereas on the right, smooth and delicate transition areas give a seamless representation of the movement process.

6. Conclusions

In this paper, we focus on the transcale problem of spatial image sequences and propose a novel display scheme that improves the awareness of multimedia processing. The contribution of this scheme is twofold. On the one hand, visual saliency is used to sustain attention on space targets, which enhances the details of their movement. On the other hand, the motion in spatial image sequences can be smoothly reproduced and effectively shown on display devices with different sizes and resolutions. Experimental results show that the proposed method outperforms the representative methods in terms of image visualization and quantitative measures. In the future, we will further study the transcale characteristics of space image sequences and explore the mutual influence between different scales. On this basis, we will also establish a robust transcale processing mechanism to better serve spatial rendezvous and docking.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this article.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (61702241, 61602227), the Foundation of the Education Department of Liaoning Province (LJYL019), and the Doctoral Starting Up Foundation of Science Project of Liaoning Province (201601365).

Open Research

Data Availability

The data used to support the findings of this study have not been made available because the data is confidential.