The Maximal Strichartz Family of Gaussian Distributions: Fisher Information, Index of Dispersion, and Stochastic Ordering

Abstract

We define and study several properties of what we call the Maximal Strichartz Family of Gaussian Distributions. This is a subfamily of the family of Gaussian Distributions that arises naturally in the context of the Linear Schrödinger Equation and Harmonic Analysis, as the set of maximizers of certain norms introduced by Strichartz. From a statistical perspective, this family carries some extra structure with respect to the general family of Gaussian Distributions. In this paper, we analyse this extra structure in several ways. We first compute the Fisher Information Matrix of the family, then introduce some measures of statistical dispersion, and, finally, introduce a Partial Stochastic Order on the family. Moreover, we indicate how these tools can be used to distinguish between distributions which belong to the family and distributions which do not. We also show that all our results are in accordance with the dispersive PDE nature of the family.

1. Introduction

The most important multivariate distribution is the Multivariate Normal (MVN) Distribution. To fix the notation, we give here its definition.

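Definition 1. One says that a random variable X is distributed as a Multivariate Normal Distribution if its probability density function (pdf) takes the form

$$f(x; \mu, \Sigma) = (2\pi)^{-n/2} \det(\Sigma)^{-1/2} \exp\left(-\tfrac{1}{2}(x-\mu)^{T}\Sigma^{-1}(x-\mu)\right), \quad x \in \mathbb{R}^n,$$

where μ ∈ Rⁿ is the Mean Vector and Σ is the (symmetric, positive definite) Variance-Covariance Matrix.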

Its importance derives mainly (but not only) from the Multivariate Central Limit Theorem which has the following statement.

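Theorem 2. Suppose that X = (x1, …, xn)ᵀ is a random vector with Variance-Covariance Matrix Σ. Assume also that E[xi²] < +∞ for every i = 1, …, n. If X1, X2, X3, … is a sequence of i.i.d. random variables distributed as X, then

$$\frac{1}{\sqrt{N}} \sum_{k=1}^{N} \left(X_k - E[X]\right) \xrightarrow{d} \mathcal{N}(0, \Sigma) \quad \text{as } N \to +\infty.$$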

Due to its importance, several authors have tried to give characterizations of this family of distributions. See, for example, [1, 2] for an extended discussion on multivariate distributions and their properties. Here, we concentrate on characterizing the MVN through variational principles, such as the maximization of certain functionals. A well-known characterization of the Gaussian Distribution is through the maximization of the Differential Entropy, under the constraint of fixed variance Σ. We focus on the case in which the support of the pdf is the whole Euclidean Space Rⁿ.

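Theorem 3. Let X be a random variable whose pdf is fX. The Differential Entropy h(X) is defined by the following functional:

$$h(X) = -\int_{\mathbb{R}^n} f_X(x) \log f_X(x)\, dx.$$

Among all random variables with support Rⁿ and fixed Variance-Covariance Matrix Σ, the Multivariate Normal Distribution maximizes h(X).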

We refer to the Appendix for a proof of this well-known theorem. This characterization is, in some sense, not completely satisfactory, because it holds only under the restriction of fixed variance. A more general characterization of the Gaussian Distribution has been given in a setting which, at first sight, seems far removed from statistics: that of Harmonic Analysis and Partial Differential Equations. We first introduce the so-called admissible exponents.
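As a small numerical illustration of the maximum-entropy characterization, the following sketch compares the Differential Entropy of three one-dimensional distributions sharing the same variance. The closed-form entropies of the uniform and Laplace distributions are standard facts; the function names and the chosen variance are ours and purely illustrative.

```python
import math

def entropy_gaussian(var):
    """Differential entropy (in nats) of a 1D Gaussian with variance var."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)

def entropy_uniform(var):
    """Uniform law on an interval of width w: variance w**2 / 12, entropy log(w)."""
    width = math.sqrt(12.0 * var)
    return math.log(width)

def entropy_laplace(var):
    """Laplace law with scale b: variance 2 * b**2, entropy 1 + log(2 * b)."""
    b = math.sqrt(var / 2.0)
    return 1.0 + math.log(2.0 * b)

var = 1.7  # arbitrary fixed variance shared by the three distributions
for name, h in [("gaussian", entropy_gaussian(var)),
                ("laplace", entropy_laplace(var)),
                ("uniform", entropy_uniform(var))]:
    print(f"{name:9s} h = {h:.6f}")
```

For any fixed variance the Gaussian value is the largest of the three, as the theorem predicts; the gaps between the three entropies do not depend on the variance.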

Definition 4. Fix n ≥ 1. One calls a set of exponents (q, r) admissible if 2 ≤ q, r ≤ +∞ and

$$\frac{2}{q} + \frac{n}{r} = \frac{n}{2}, \qquad (q, r, n) \neq (2, +\infty, 2).$$

Remark 5. These exponents are characteristic quantities of certain norms, the Strichartz Norms, naturally arising in the context of Dispersive Equations and can vary from equation to equation. We refer to [3] for more details.
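The admissibility condition can be checked mechanically. The sketch below assumes the usual Schrödinger admissibility relation 2/q + n/r = n/2 with 2 ≤ q, r ≤ +∞ and the endpoint (q, r, n) = (2, +∞, 2) excluded; the function name `is_admissible` is ours. Exact rational arithmetic avoids rounding issues.

```python
import math
from fractions import Fraction

def is_admissible(q, r, n):
    """Return True if (q, r) satisfies 2/q + n/r = n/2 in dimension n.

    q and r may be ints, Fractions, or math.inf; the endpoint
    (q, r, n) = (2, inf, 2) is excluded by assumption.
    """
    if n < 1 or q < 2 or r < 2:
        return False
    if (q, r, n) == (2, math.inf, 2):
        return False
    inv_q = Fraction(0) if q == math.inf else 1 / Fraction(q)
    inv_r = Fraction(0) if r == math.inf else 1 / Fraction(r)
    return 2 * inv_q + n * inv_r == Fraction(n, 2)

# The symmetric pair q = r = 2 + 4/n is admissible in every dimension.
for n in (1, 2, 3):
    q = 2 + Fraction(4, n)
    print(n, q, is_admissible(q, q, n))
```

The pair (+∞, 2) is admissible in every dimension and corresponds to the conservation of the L² norm.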

Here is the precise characterization of the Multivariate Normal Distribution, through Strichartz Estimates.

Theorem 6 (see [4]–[7]). Suppose n = 1 or n = 2. Then, for every admissible pair (q, r) and for every u0 ∈ L²(Rⁿ) such that ‖u0‖L²(Rⁿ) = 1, we have

For several other important results on Strichartz Estimates, we refer to [8–11] and the references therein.

Remark 7. This characterization does not need the restriction of fixed variance as the one achieved using the Differential Entropy Functional and so it is, in some sense, more “general.” The result is conjectured to be true for any dimension n ≥ 1. See, for example, [7], where the optimal constant has been computed in any dimension n ≥ 1, under the hypothesis that the maximizers are Gaussians also in dimension n ≥ 3.

We refer to [7] for the relation of this result with harmonic analysis and restriction theorems.

Strichartz Estimates are a fundamental tool in the problem of global well-posedness of PDEs and measure a particular type of dispersion (see, e.g., [3–5, 7, 12, 13]). Strichartz Estimates also carry some interesting statistical features, and this is what we want to analyse in the present paper.

Theorem 8. Consider p(t, x), a probability distribution function belonging to the Maximal Strichartz Family of Gaussian Distributions , defined in (9). The vector of parameters θ, indexing , is given by

(i) in the spherical case (RᵀR = σ²Id) by (12);

(ii) in the elliptical case (see Section 3 for the precise definition) by (13).

Remark 9. Technically, the only possible case inside the Maximal Strichartz Family of Gaussian Distributions is RᵀR = Idn×n, since R ∈ SO(n) (the spherical case, with σ² = 1). In that case, the Fisher Information Matrix reduces to a lower-dimensional form. Nevertheless, carrying out the computation in the more general setting makes it possible to compute a distance (in some sense centred at the Maximal Strichartz Family of Gaussian Distributions) between members of the Maximal Strichartz Family of Gaussian Distributions and other Gaussian Distributions, for which the orthogonality condition RᵀR = Idn×n is not necessarily satisfied. In particular, it can distinguish between Gaussians evolving through the PDE flow (see Section 2) and Gaussians which do not.

Remark 10. We believe that using the flow of a Partial Differential Equation is a natural way to produce probability density functions, in particular in this case, since the flow of the PDE that we are using preserves the probability constraint. See Section 2.2 for more details on this comment.

As we said, Strichartz Estimates are a way to measure the dispersion caused by the flow of the PDE to which they are related. In statistics, dispersion describes how stretched or squeezed a distribution is. A measure of statistical dispersion is a nonnegative real number which is small for data which are very concentrated and increases as the data become more spread out. Common examples of measures of statistical dispersion are the variance, the standard deviation, the range, and many others. Here, we connect the two closely related concepts (dispersion in statistics and PDEs) by introducing some measures of statistical dispersion, like the Index of Dispersion in Definition 38 (see Section 4), which reflect the dispersive PDE nature of the Maximal Strichartz Family of Gaussian Distributions.

Definition 11. Consider the norms ‖·‖a and ‖·‖b on the space of Variance-Covariance Matrices Σ and ‖·‖c on the space of mean values μ. One defines the following Index of Dispersion:

We compute this Index of Dispersion for our family of distributions and show that it is consistent with PDE results. We refer to Definition 38 for more details.

Another important concept in probability and statistics is the one of Stochastic Order. A Stochastic Order is a way to consistently put a set of random variables in a sequence. Most of the Stochastic Orders are partial orders, in the sense that an order between the random variables exists, but not all the random variables can be put in the same sequence. Many different Stochastic Orders exist and have different applications. For more details on Stochastic Orders, we refer to [14]. Here, we use our Index of Dispersion to define a Stochastic Order on the Maximal Strichartz Family of Gaussian Distributions and see how there are natural ways of partially ranking the distributions of the family (see Section 5), in agreement with the flow of the PDE.

Definition 12. Consider two random variables X1 and X2 such that , for any θ1 and θ2. One says that the two random variables are ordered according to their Dispersion Index if and only if the following condition is satisfied:

Remark 13. In this definition the index can vary according to the context and the choices of the norms in the definition of the index.

An important tool which will be fundamental in our analysis is what we call the 1/α-Characteristic Function (see Section 2 and [7, 15]). We conclude the paper with an appendix in which, among other things, we use the concept of 1/α-Characteristic Function to define generalized types of Momenta that exist also for the Multivariate Cauchy Distributions.

2. Construction of the Maximal Strichartz Family of Gaussian Distributions

- (1)

We define 1/α-Characteristic Functions.

- (2)

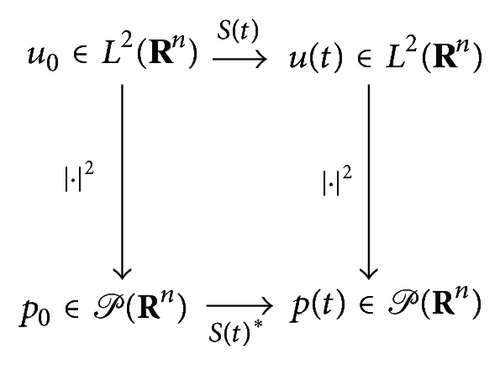

We prove that if u0 generates a probability distribution p0(x), then u(t, x) = eitΔu0 (see below its precise definition) still defines a probability distribution pt(x) = |u(t, x)|2.

- (3)

By means of 1/α-Characteristic Functions, we give the explicit expression of u(t, x) the generator of the family.

- (4)

We use symmetries and invariances to build the complete family .

2.1. The 1/α-Characteristic Functions

Following the program, we first need to introduce the tool of 1/α-Characteristic Functions to characterize . It is basically the Fourier Transform, but, differently from the Characteristic Function, the average is not taken with the pdf, but with a power of the pdf.

Definition 14. Consider u : Rn → C to be a Schwartz function, namely, a function belonging to the space

We refer to the note [15] for examples and properties of 1/α-Characteristic Functions and to Appendix for a simple straightforward application of this tool. In particular, we notice that .

Remark 15. If u is complex valued (not just real valued) and, for example, α = n ∈ N, then there are n distinct complex roots of |u|². In our discussion, this does not cause any problem, because our process starts with u and produces |u|². We remark that the map |u|^α ↦ u is multivalued. For this reason, we cannot uniquely reconstruct a generator from the family that it generates. See formula (39) below and [15] for more details.

Remark 16. We could define 1/α-Characteristic Functions for more general functions u : X → F with X a locally compact abelian group and F a general field. We do not pursue this direction here and we will leave it for a future work. We notice that can be considered also as a 1/α-Expected Value:

2.2. Conservation of Mass and Flow on the Space of Probability Measures

In this subsection, we show that if |u0|² defines a probability distribution, then |u(t, x)|² also defines a probability distribution. This is mainly a consequence of the fact that e^{itΔ} is a unitary operator.

Theorem 17. Consider , the set of all probability distributions on Rn and u : (0, ∞) × Rn → C a solution to (27). Then u induces a flow in the space of probability distributions.

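Proof. Consider u0 : Rⁿ → C such that ‖u0‖L²(Rⁿ) = 1; so |u0|² is a probability distribution on Rⁿ. Consider u(t, x), the solution of (27) with initial datum u0. Then, since e^{itΔ} is unitary on L²(Rⁿ),

$$\int_{\mathbb{R}^n} |u(t,x)|^2\,dx = \|e^{it\Delta}u_0\|_{L^2(\mathbb{R}^n)}^2 = \|u_0\|_{L^2(\mathbb{R}^n)}^2 = 1,$$

so |u(t, ·)|² is a probability distribution on Rⁿ for every t.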

Remark 18. This situation is in striking contrast with respect to the heat equation, where if you start with a probability distribution as initial datum, instantaneously the constraint of being a probability measure is broken.
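The conservation of mass, and the contrast with the heat equation, can be checked numerically in one dimension. The sketch below assumes the unnormalized initial datum u0(x) = exp(−x²) and uses the standard closed-form free evolutions of this Gaussian under the Schrödinger and heat flows; the function names and the quadrature parameters are ours. For the heat flow, the "mass" is understood here as the integral of the squared modulus, which is one way to read the remark above.

```python
import cmath
import math

def schrodinger(t, x):
    """Free Schrödinger evolution of u0(x) = exp(-x**2) in one dimension."""
    z = 1.0 + 4.0j * t
    return cmath.exp(-x * x / z) / cmath.sqrt(z)

def heat(t, x):
    """Heat evolution (t >= 0) of the same initial datum."""
    s = 1.0 + 4.0 * t
    return math.exp(-x * x / s) / math.sqrt(s)

def mass(f, t, half_width=60.0, steps=12000):
    """Trapezoidal approximation of the integral of |f(t, x)|**2 over the real line."""
    h = 2.0 * half_width / steps
    total = 0.0
    for k in range(steps + 1):
        x = -half_width + k * h
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * abs(f(t, x)) ** 2
    return total * h

for t in (0.0, 0.5, 2.0):
    print(f"t = {t}: Schrodinger mass = {mass(schrodinger, t):.10f}, "
          f"heat mass = {mass(heat, t):.10f}")
```

Under these assumptions the Schrödinger mass stays equal to its initial value sqrt(π/2) for every t, so a single normalizing constant turns |u(t, ·)|² into a pdf at all times, whereas the heat mass decays like (1 + 4t)^(−1/2).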

2.3. Fundamental Solution for the Linear Schrödinger Equation Using 1/α-Characteristic Functions

We first notice that the initial datum becomes a probability density function, if and only if multiplied by a constant. But, since the equation is linear, we can do everything without that constant and then include it at the end.

Remark 19. These computations are well known, but we perform them in detail here, in order to clarify what we will compute in the context of 1/α-Characteristic Functions.

Remark 20. This procedure works because the Gaussian Distribution is, up to constants, a fixed point of the 1/α-Characteristic Function. Indeed, if u is Gaussian, then for some normalizing constant c > 0. Moreover, the Schrödinger flow preserves Gaussian Distributions; namely, if your initial datum is Gaussian, then the solution is Gaussian for any future and past times.

2.4. Strichartz Estimates and Their Symmetries

In this subsection, we deduce the Strichartz Estimates for the Schrödinger equation in the case of probability distributions and discuss their symmetries. For clarity, we repeat here the definition of admissible exponents and the Strichartz Estimate.

Definition 21. Fix n ≥ 1. One calls a set of exponents (q, r) admissible if 2 ≤ q, r ≤ +∞ and

$$\frac{2}{q} + \frac{n}{r} = \frac{n}{2}, \qquad (q, r, n) \neq (2, +\infty, 2).$$

Theorem 22 (see [4]–[7]). For dimension n = 1 or n = 2 (and for any n ≥ 1, supposing that Gaussians are maximizers) and (q, r) admissible pair, the Sharp Homogeneous Strichartz Constant Sh(n, q, r) = Sh(n, r) defined by

This is the version of the theorem on Strichartz Estimates without the restriction , as proved in [7]. From this, we can very easily deduce Theorem 6.

Proof of Theorem 6. Just substitute the condition in all the statements.

As explained for example in [12], Strichartz Estimates are invariant by the following set of symmetries.

Lemma 23 (see [12]). Let be the group of transformations generated by

(i) space-time translations: u(t, x) ↦ u(t + t0, x + x0), with t0 ∈ R, x0 ∈ Rⁿ;

(ii) parabolic dilations: u(t, x) ↦ u(λ²t, λx), with λ > 0;

(iii) change of scale: u(t, x) ↦ μu(t, x), with μ > 0;

(iv) space rotations: u(t, x) ↦ u(t, Rx), with R ∈ SO(n);

(v) phase shifts: u(t, x) ↦ e^{iθ}u(t, x), with θ ∈ R;

(vi) Galilean transformations: (47), with v ∈ Rⁿ.

The only point here is that not all these symmetries leave the set of probability distributions invariant. Therefore, we need to reduce the set of symmetries in our treatment and, in particular, we need to combine the scaling and the parabolic dilations in order to have all the family inside the space of probability distributions .

Lemma 24. Consider uμ,λ = μu(λ2t, λx) such that maximizes (6); then μ = λn/2.

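Proof. Consider

$$\int_{\mathbb{R}^n} |u_{\mu,\lambda}(t,x)|^2\,dx = \mu^2 \int_{\mathbb{R}^n} |u(\lambda^2 t, \lambda x)|^2\,dx = \mu^2 \lambda^{-n} \int_{\mathbb{R}^n} |u(\lambda^2 t, y)|^2\,dy = \mu^2 \lambda^{-n},$$

where we used the change of variables y = λx and the conservation of mass of Theorem 17. Requiring this integral to be equal to 1 gives μ = λ^{n/2}.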

Remark 25. We notice that some of the symmetries can be seen just at the level of the generator of the family u but not by the family of probability distributions pt(x). For example, the phase shifts u(t, x) ↦ e^{iθ}u(t, x), with θ ∈ R, give rise to the same probability distribution function because |e^{iθ}u(t, x)|² = |u(t, x)|² and, partially, the Galilean transformations , with v ∈ Rⁿ, reduce to a space translation with x0 = vt, since . In some sense, the parameter θ can be seen as a latent variable.
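Lemma 24 and the phase-shift part of Remark 25 can be verified numerically in dimension n = 1. The sketch below assumes the normalized generator obtained from u0(x) = exp(−x²) through the standard closed-form free Schrödinger evolution; the names `generator`, `transformed`, and `total_mass` are ours and illustrative only.

```python
import cmath
import math

NORM = (2.0 / math.pi) ** 0.25  # makes |generator(0, x)|**2 integrate to 1

def generator(t, x):
    """Normalized 1D free Schrödinger evolution of exp(-x**2)."""
    z = 1.0 + 4.0j * t
    return NORM * cmath.exp(-x * x / z) / cmath.sqrt(z)

def transformed(t, x, lam, mu, theta=0.0):
    """Apply change of scale mu, parabolic dilation lam, and phase shift theta."""
    return mu * cmath.exp(1j * theta) * generator(lam * lam * t, lam * x)

def total_mass(t, lam, mu, theta=0.0, half_width=80.0, steps=16000):
    """Trapezoidal approximation of the integral of |transformed|**2 in x."""
    h = 2.0 * half_width / steps
    total = 0.0
    for k in range(steps + 1):
        x = -half_width + k * h
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * abs(transformed(t, x, lam, mu, theta)) ** 2
    return total * h

lam = 2.0
print(total_mass(1.0, lam, mu=math.sqrt(lam)))   # mu = lam**(n/2) with n = 1
print(total_mass(1.0, lam, mu=lam))              # a different scale changes the mass
print(total_mass(1.0, lam, mu=math.sqrt(lam), theta=1.3))  # phase is invisible
```

With μ = λ^(1/2) the transformed function still has unit mass, any other μ rescales the mass by μ²/λ, and the phase θ never affects the resulting density.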

Therefore, we have the complete set of probability distributions induced by the generator u(t, x).

Theorem 26. Consider pt(x) = |u(t, x)|2 a probability distribution function generated by u(t, x) (see Section 2.3). Let be the group of transformations generated by

(i) inertial-space translations and time translations: p(t, x) ↦ p(t + t0, x + x0 + vt), with t0 ∈ R, x0 ∈ Rⁿ and v ∈ Rⁿ;

(ii) scaling-parabolic dilations: p(t, x) ↦ λⁿp(λ²t, λx), with λ > 0;

(iii) space rotations: p(t, x) ↦ p(t, Rx), with R ∈ SO(n).

This theorem produces the following definition.

Definition 27. One calls Maximal Strichartz Family of Gaussian Distributions the following family of distributions:

Remark 28. Let p(t, x) be the pdf defined in (39). Then, choose with R = Id, x0 = v0 = 0, and λ = 0. This implies that . For this reason, we call p(t, x) the Family Generator of . We notice also that, in the definition of the family and with respect to Theorem 26, we used as scale parameter λ1/2 instead of λ. This is done without loss of generality, since λ > 0.

Right away we can compute the Variance-Covariance Matrix and Mean Vector of the family.

Corollary 29. Suppose X is a random variable with pdf . Then its Expected Value is

Proof. The proof is a direct computation.

Remark 30. We see that, unlike in the general family of Gaussian Distributions, here the Mean Vector and the Variance-Covariance Matrix are linked through a common parameter, which represents the time flow.

3. The Fisher Information Metric of the Maximal Strichartz Family

Information geometry is a branch of mathematics that applies the techniques of differential geometry to the field of statistics and probability theory. This is done by interpreting probability distributions of a statistical model as the points of a Riemannian Manifold, forming in this way a statistical manifold. The Fisher Information Metric provides a natural Riemannian Metric for this manifold, but it is not the only possible one. With this tool, we can define and compute meaningful distances between probability distributions, in both the discrete and the continuous cases. Both the set of parameters indexing a family of distributions and the geometrical structure of that parameter set are therefore crucial. We refer to [16] for a general reference on information geometry. Rao [17] was the first to introduce the notion of distance between two probability distributions, using the Fisher Information Matrix as a Riemannian Metric on the space of parameters.

In this section, we restrict our attention to the Fisher Information Metric of the Maximal Strichartz Family of Gaussian Distributions and provide details on the additional structure that the family has with respect to the hyperbolic model of the general Family of Gaussian Distributions. See, for example, [18–20].

3.1. The Fisher Information Metric for the Multivariate Gaussian Distribution

First, we give the general definition of the Fisher Information Metric.

Definition 31. Consider a statistical manifold , with coordinates given by θ = (θ1, θ2, …, θn) and with probability density function p(x; θ). Here, x is a specific observation of the discrete or continuous random variable X. The probability is normalized, so that ∫X p(x; θ)dx = 1 for every θ. The Fisher Information Metric Iij is defined by the following formula:

$$I_{ij}(\theta) = \int_X \frac{\partial \log p(x;\theta)}{\partial \theta_i}\, \frac{\partial \log p(x;\theta)}{\partial \theta_j}\, p(x;\theta)\,dx.$$

Remark 32. The integral is performed over all values x that the random variable X can take. Again, the variable θ is understood as a coordinate on the statistical manifold , intended as a Riemannian Manifold. Under certain regularity conditions (any that allow integration by parts), Iij can be rewritten as

$$I_{ij}(\theta) = -\int_X \frac{\partial^2 \log p(x;\theta)}{\partial \theta_i\, \partial \theta_j}\, p(x;\theta)\,dx.$$

Now, to compute explicitly the Fisher Information Matrix of the family , we use the following theorem, which can be found in [21].

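Theorem 33. The Fisher Information Matrix for an n-variate Gaussian Distribution can be computed in the following way. Let X be distributed as a Multivariate Normal Distribution whose Mean Vector μ = μ(θ) and Variance-Covariance Matrix Σ = Σ(θ) depend on a vector of parameters θ = (θ1, …, θk). Then the entries of the Fisher Information Matrix are

$$I_{ij} = \frac{\partial \mu^{T}}{\partial \theta_i}\, \Sigma^{-1}\, \frac{\partial \mu}{\partial \theta_j} + \frac{1}{2}\, \mathrm{tr}\left(\Sigma^{-1} \frac{\partial \Sigma}{\partial \theta_i}\, \Sigma^{-1} \frac{\partial \Sigma}{\partial \theta_j}\right).$$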

Remark 34. We remark again that μ and Σ depend on some common parameters, like the time t.
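To make the mean-plus-trace structure of the Gaussian Fisher Information concrete, here is a minimal one-dimensional sketch, assuming the standard formula I_ij = ∂iμ ∂jμ / Σ + (1/2) ∂iΣ ∂jΣ / Σ² and approximating the derivatives by central finite differences. The toy parametrization (mean x0, variance (1/4)λ²σ²) is a hypothetical stand-in loosely motivated by the quantity (1/4)λ²σ² appearing in Section 4; it is not the parametrization of the family itself.

```python
def fisher_information_1d(mean, var, theta, h=1e-5):
    """Fisher matrix of a 1D Gaussian N(mean(theta), var(theta)).

    mean and var are callables taking a list of parameters; derivatives
    are approximated by central finite differences with step h.
    """
    def partial(f, i):
        up, down = list(theta), list(theta)
        up[i] += h
        down[i] -= h
        return (f(up) - f(down)) / (2.0 * h)

    k = len(theta)
    dmu = [partial(mean, i) for i in range(k)]
    dvar = [partial(var, i) for i in range(k)]
    s = var(list(theta))
    return [[dmu[i] * dmu[j] / s + 0.5 * dvar[i] * dvar[j] / (s * s)
             for j in range(k)] for i in range(k)]

# Hypothetical toy model: theta = (x0, lam, sigma2).
def toy_mean(theta):
    return theta[0]

def toy_var(theta):
    return 0.25 * theta[1] ** 2 * theta[2]

info = fisher_information_1d(toy_mean, toy_var, [0.3, 2.0, 1.5])
for row in info:
    print(["%.4f" % entry for entry in row])
```

In this toy model the entries coupling x0 with λ and σ² vanish, because the mean does not depend on λ or σ² and the variance does not depend on x0. The (λ, σ²) block is singular, since the two parameters enter only through their product.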

3.2. Proof of Theorem 8: The Spherical Multivariate Gaussian Distribution

(i) i = 1, …, n, j = 1, …, n: (61);

(ii) i = 1, …, n, j = n + 1, …, 2n: (62);

(iii) i = 1, …, n, j = 2n + 1: (63), because μ does not depend on λ and Σ does not depend on x0;

(iv) i = 1, …, n, j = 2n + 2: (64), because μ does not depend on σ² and Σ does not depend on x0;

(v) i = 1, …, n, j = 2n + 3: (65);

(vi) i = n + 1, …, 2n, j = n + 1, …, 2n: (66);

(vii) i = n + 1, …, 2n, j = 2n + 1: (67), because μ does not depend on λ and Σ does not depend on v0;

(viii) i = n + 1, …, 2n, j = 2n + 2: (68), because μ does not depend on σ² and Σ does not depend on v0;

(ix) i = n + 1, …, 2n, j = 2n + 3: (69);

(x) i = 2n + 1, j = 2n + 1: (70);

(xi) i = 2n + 1, j = 2n + 2: (71);

(xii) i = 2n + 1, j = 2n + 3: (72);

(xiii) i = 2n + 2, j = 2n + 2: (73);

(xiv) i = 2n + 2, j = 2n + 3: (74);

(xv) i = 2n + 3, j = 2n + 3: (75).

3.3. Proof of Theorem 8: The Elliptical Multivariate Gaussian Distribution

(i) i = 1, …, n, j = 1, …, n: (79);

(ii) i = 1, …, n, j = n + 1, …, 2n: (80);

(iii) i = 1, …, n, j = 2n + 1: (81), because μ does not depend on λ and Σ does not depend on x0;

(iv) i = 1, …, n, j = 2n + 2: (82), because μ does not depend on σ² and Σ does not depend on x0;

(v) i = 1, …, n, j = 2n + 3: (83);

(vi) i = n + 1, …, 2n, j = n + 1, …, 2n: (84);

(vii) i = n + 1, …, 2n, j = 2n + 1: (85), because μ does not depend on λ and Σ does not depend on v0;

(viii) i = n + 1, …, 2n, j = 2n + 2: (86), because μ does not depend on σ² and Σ does not depend on v0;

(ix) i = n + 1, …, 2n, j = 2n + 3: (87);

(x) i = 2n + 1, j = 2n + 1: (88);

(xi) i = 2n + 1, j = 2n + 2: (89);

(xii) i = 2n + 1, j = 2n + 3: (90);

(xiii) i = 2n + 2, j = 2n + 2: (91);

(xiv) i = 2n + 2, j = 2n + 3: (92);

(xv) i = 2n + 3, j = 2n + 3: (93).

3.4. The General Multivariate Gaussian Distribution

As pointed out in [18, 19], for general Multivariate Normal Distributions, the explicit form of the Fisher distance has not yet been computed in closed form, even in the simple case where the parameters are t = 0, λ = 0, and v0 = 0. From a technical point of view, as pointed out in [18, 19], the main difficulty arises from the fact that the sectional curvatures of the Riemannian Manifold induced by and endowed with the Fisher Information Metric are not all constant. We remark again here that the distance induced by our Fisher Information Matrix is centred at the Maximal Strichartz Family of Gaussian Distributions, in order to highlight the difference between members of the Maximal Strichartz Family of Gaussian Distributions and other Gaussian Distributions, for which RᵀR = Idn×n is not necessarily satisfied. In particular, our metric distinguishes between Gaussians evolving through the PDE flow (see Section 2) and Gaussians which do not.

Remark 35. We say that two parameters α and β are orthogonal if the corresponding off-diagonal entries of the Fisher Information Matrix are zero. Orthogonal parameters are easy to deal with, in the sense that their maximum likelihood estimates are asymptotically independent and can be calculated separately. In particular, for our family, the parameters x0 and v0 are each orthogonal to both λ and σ². Some partial results, for example, when either mean or variance is kept constant, can be deduced. See, for example, [18–20].

Remark 36. The Fisher Information Metric is not the only possible choice to compute distances between pdfs of the family of Gaussian Distributions. For example, in [20], the authors parametrize the family of normal distribution as the symmetric space SL(n + 1)/SO(n + 1) endowed with the following metric:

Remark 37. If we consider just the submanifold given by the restriction to the coordinates i = 1, …, n and i = 2n + 2 on the ellipse λ2 + 16t2 = 4, we recover the hyperbolic distance:

4. Overdispersion, Equidispersion, and Underdispersion for the Family

As we said, Strichartz Estimates are a way to measure the dispersion caused by the flow of the PDE to which they are related. In statistics, dispersion describes how spread out a distribution is. In this section, we connect the two closely related concepts (dispersion in statistics and PDEs) by introducing some measures of statistical dispersion, like the Index of Dispersion in Definition 38 below, which reflect the dispersive PDE nature of the Maximal Strichartz Family of Gaussian Distributions. We compute this Index of Dispersion for our family of distributions and show that it is consistent with PDE results.

Definition 38. Consider the norms ‖·‖a and ‖·‖b on the space of Variance-Covariance Matrices Σ and ‖·‖c on the space of mean values μ. One defines the following Index of Dispersion:

(i) abc-overdispersed, if ;

(ii) abc-equidispersed, if ;

(iii) abc-underdispersed, if .

(i) a-overdispersed, if ;

(ii) a-equidispersed, if ;

(iii) a-underdispersed, if .

Here, we discuss some particular cases and compute the dispersion indexes and for certain specific norms ‖·‖a, ‖·‖b and ‖·‖c.

(i) a-overdispersed, if (1/4)λ²σ² < 1;

(ii) a-equidispersed, if (1/4)λ²σ² = 1;

(iii) a-underdispersed, if (1/4)λ²σ² > 1.

Remark 39. In the strictly Strichartz case σ2 = 1, we have that the dispersion is measured just by the scaling factor λ.

(i) a-overdispersed, if n(1/4)λ²σ² < 1;

(ii) a-equidispersed, if n(1/4)λ²σ² = 1;

(iii) a-underdispersed, if n(1/4)λ²σ² > 1.
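The two static classifications listed above differ only by the dimensional factor n, so they can be sketched with a single helper. The thresholds are taken verbatim from the two lists; the function name, the `scaled_by_dimension` flag, and the tolerance used to detect equality are our own illustrative choices.

```python
def classify_static_dispersion(lam, sigma2, n=1, scaled_by_dimension=False, tol=1e-12):
    """Classify a member of the family at t = 0 by comparing
    (1/4) * lam**2 * sigma2 (optionally multiplied by n) with 1."""
    value = 0.25 * lam ** 2 * sigma2
    if scaled_by_dimension:
        value *= n
    if abs(value - 1.0) <= tol:
        return "a-equidispersed"
    return "a-overdispersed" if value < 1.0 else "a-underdispersed"

# In the strictly Strichartz case sigma2 = 1, only lam matters.
for lam in (1.0, 2.0, 3.0):
    print(lam, classify_static_dispersion(lam, sigma2=1.0))
```

With σ² = 1 and without the dimensional factor, the threshold between the regimes sits at λ = 2.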

(i) abc-overdispersed, if ;

(ii) abc-equidispersed, if ;

(iii) abc-underdispersed, if .

Remark 40. In particular, from this, we notice that if at t = 0 the distribution is a-equidispersed, an instant after the distribution is abc-overdispersed, in fact

Remark 41. This index is different from the Fisher Index, which is basically the variance-to-mean ratio

$$D = \frac{\sigma^2}{\mu}.$$

Remark 42. The characterization of the Gaussian Distribution given by Theorems 6 and 22 can be used also to give a measure of dispersion with respect to the Maximal Family of Gaussian Distributions, considering the Strichartz Norm:

5. Partial Stochastic Ordering on the Maximal Strichartz Family of Gaussian Distributions

Using the concept of Index of Dispersion, we can give a Partial Stochastic Order to the family . For a more complete treatment on Stochastic Orders, we refer to [14]. We start the analysis of this section with the definition of Mean-Preserving Spread.

Definition 43. A Mean-Preserving Spread (MPS) is a map from to itself

The concept of a Mean-Preserving Spread provides a partial ordering of probability distributions according to their level of dispersion. We then give the following definition.

Definition 44. Consider two random variables X1 and X2 such that , for any θ1 and θ2. One says that the two random variables are ordered according to their Dispersion Index if and only if the following condition is satisfied:

Now, we give some examples of ordering according to the Indexes of Dispersion that we discussed previously.

Remark 45. In the strictly Strichartz case σ2 = 1, we have that the Stochastic Order is given just by the scaling factor λ.

Remark 46. Again, in the strictly Strichartz case σ2 = 1, we have that the Stochastic Order is given just by the scaling factor λ.

Remark 47. In the case of the a-Static Dispersion Index of the Maximal Family of Gaussians, the roles of σ² and λ² seem interchangeable. This suggests a dimensional reduction in the parameter space, but, when t ≠ 0, σ² and the parameter λ decouple and start to play slightly different roles. This suggests again a way to distinguish between Gaussian Distributions which come from the family and Gaussians which do not, and so to distinguish between Gaussians which are solutions of the Linear Schrödinger Equation and Gaussians which are not.

Remark 48. Using the definition of Entropy, we deduce that, for Gaussian Distributions, h(X) = (1/2) log((2πe)ⁿ det(Σ)). We see that, for our family, the Entropy increases whenever we increase λ, σ², or t, but not when we increase x0 or v0. In particular, the fact that the Entropy increases with t is in accordance with the Second Principle of Thermodynamics.
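The monotonicity of the Entropy in t can be illustrated in one dimension. The sketch below assumes the density obtained by normalizing the squared modulus of the free Schrödinger evolution of u0(x) = exp(−x²), which is a centred Gaussian with variance (1 + 16t²)/4; this specific variance law and the function names are assumptions made for the illustration, not the general covariance of the family.

```python
import math

def gaussian_entropy(n, det_sigma):
    """h(X) = (1/2) * log((2*pi*e)**n * det(Sigma)) for an n-variate Gaussian."""
    return 0.5 * (n * math.log(2.0 * math.pi * math.e) + math.log(det_sigma))

def variance_at_time(t):
    """Variance of the normalized 1D density at time t (assumed model)."""
    return (1.0 + 16.0 * t * t) / 4.0

times = [0.0, 0.5, 1.0, 2.0]
entropies = [gaussian_entropy(1, variance_at_time(t)) for t in times]
for t, h in zip(times, entropies):
    print(f"t = {t}: h = {h:.6f}")
```

The Mean Vector does not enter the formula at all, which is why translating the distribution leaves the Entropy unchanged, while the spreading of the variance with t makes it grow.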

Remark 49. It seems that the construction of similar indexes can be performed in more general situations. In particular, we think that an index similar to can be computed in every situation in which a family of distributions has the Variance-Covariance Matrix and the Expected Value which depend on common parameters.

6. Conclusions

In this paper, we have constructed and studied the Maximal Strichartz Family of Gaussian Distributions. This subfamily of the family of Gaussian Distributions arises naturally in the context of Partial Differential Equations and Harmonic Analysis, as the set of maximizers of certain functionals introduced by Strichartz [4] in the context of the Schrödinger Equation. We analysed the Fisher Information Matrix of the family and we showed that this matrix possesses an extra structure with respect to the general family of Gaussian Distributions. We studied the spherical and elliptical cases and computed explicitly the Fisher Information Metric in both cases. We interpreted the Fisher Information Metric as a distance which can distinguish between Gaussians which maximize the Strichartz Norm and Gaussians which do not and also as a distance between Gaussians which are solutions of the Linear Schrödinger Equation and Gaussians which are not. After this, we introduced some measures of statistical dispersion that we called abc-Dispersion Index of the Maximal Family of Gaussian Distributions and a-Static Dispersion Index of the Maximal Family of Gaussian Distributions. We showed that these Indexes of Dispersion are consistent with the dispersive nature of the Schrödinger Equation and can be used again to distinguish between Gaussians belonging to the family and other Gaussians. Moreover, we showed that our Indexes of Dispersion induce a Partial Stochastic Order on the Maximal Strichartz Family of Gaussian Distributions, which is in accordance with the flow of the PDE.

Competing Interests

The author declares that he has no competing interests.

Acknowledgments

The author wants to thank their family and Victoria Ban for their constant support. They thank Professor Maung Min-Oo for useful discussions on the Fisher Information Metric. The author thanks also their Supervisor Professor Narayanaswamy Balakrishnan for his constant help and guidance and for an inspiring talk on dispersion indexes.

Appendix

In this Appendix, we give the proof of Theorem 3 (for completeness) and we use the concept of 1/α-Characteristic Function to define a generalized type of Momenta that exist also for the Multivariate Cauchy Distribution.

A. Proof of Theorem 3

B. On the 1/α-Momenta of Order k and the Cauchy Distribution

In this subsection, we discuss another application of the concept of 1/α-Characteristic Functions. In particular, we build 1/α-Momenta in a way similar to how the usual Momenta are obtained from the usual characteristic function. We apply this tool to the case of the Cauchy Distribution and see that, in certain cases, in contrast to the well-known case of α = 1, we can build some finite generalized Momenta. We refer to [15] for a more detailed discussion on 1/α-Characteristic Functions.

Definition 50. Consider the 1/α-Characteristic Function of u:

Proof. This is a direct and simple computation.

Remark 51. Here, we do not consider the possibility of different roots of the unity that can appear in the computation of the 1/α-Characteristic Function. We refer, for the precise theory, to [15].

From now on, we concentrate only on the case of the Multivariate Cauchy Distribution.

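Definition 52. One says that a random variable X is distributed as a Multivariate Cauchy Distribution if and only if its pdf f(x; μ, Σ, n) takes the form

$$f(x; \mu, \Sigma, n) = \frac{\Gamma\left(\frac{1+n}{2}\right)}{\Gamma\left(\frac{1}{2}\right) \pi^{n/2} \det(\Sigma)^{1/2} \left[1 + (x-\mu)^{T}\Sigma^{-1}(x-\mu)\right]^{(1+n)/2}}.$$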