Mixture Augmented Lagrange Multiplier Method for Tensor Recovery and Its Applications

Abstract

The problem of data recovery in multiway arrays (i.e., tensors) arises in many fields such as computer vision, image processing, and traffic data analysis. In this paper, we propose a scalable and fast algorithm for recovering a low-n-rank tensor with an unknown fraction of its entries being arbitrarily corrupted. In the new algorithm, the tensor recovery problem is formulated as a mixture convex multilinear Robust Principal Component Analysis (RPCA) optimization problem by minimizing a sum of the nuclear norm and the ℓ1-norm. The problem is well structured in both the objective function and constraints. We apply augmented Lagrange multiplier method which can make use of the good structure for efficiently solving this problem. In the experiments, the algorithm is compared with the state-of-art algorithm both on synthetic data and real data including traffic data, image data, and video data.

1. Introduction

A tensor is a multidimensional array. It is the higher-order generalization of vector and matrix, which has many applications in information sciences, computer vision, graph analysis [1], and traffic data analysis [2–4]. In the real world, as the size and the amount of redundancy of the data increase fast and nearly all of the existing high-dimensional real world data either have the natural form of tensor (e.g., multichannel images) or can be grouped into the form of tensor (e.g., tensor face [5], traffic data tensor model [2–4], and videos), challenges come up in many scientific areas when someone confronts with the high-dimensional real world data. Because of some reasons, one wants to capture the underlying low-dimensional structure of the tensor data or seeks to detect the irregular sparse patterns of the tensor data, such as image compression [6], foreground segmentation [7], saliency detection [8], and traffic data completion [2, 3]. As a consequence, it is desirable to develop algorithms that can capture the low-dimensional structure or the irregular sparse patterns in the high-dimensional tensor data.

In this paper, a new algorithm for low-n-tensor recovery, which is termed as Mixture Augmented Lagrange Multiplier Method for Tensor Recovery (MALM-TR), is proposed. In the new algorithm, analogy to the RSTD [12], we convert the tensor recovery problem into a mixture convex optimization problem by adopting the relaxation technique strategy which eliminates the interdependent trace norm and ℓ1 norm constrain. Actually, the elements involved in problem are all in matrix case. Thus, it can be treated as a multilinear extension of the RPCA problem and subsumes the matrix RPCA problem as a special case. Lin et al. [14] have proved that the matrix RPCA problem can be solved by ALM with achieving higher precision, being less storage/memory demanding, and having a pleasing Q-linear convergence speed. Inspired by these merits of ALM, we try to extend the augmented Lagrange multiplier method (ALM) to the multilinear RPCA problem and prove that ALM is not only fit to solve the matrix RPCA problem but also suitable to solve the multilinear RPCA problem.

For the usage of this algorithm, it is applied to real world data recovery including traffic data recovery, image restoration, and background modeling.

In traffic data analysis area, due to detector and communication malfunctions, traffic data often confronts with the noising data phenomenon, especially the outlier value noise, which has a great impact on the performance of Intelligent Transportation System (ITS). Therefore, it is essential to solve the issues caused by outlier data in order to fully explore the applicability of the data and realize the ITS applications. In the application part of this paper, we introduce the tensor form to model the traffic data, which can encode the multimode (e.g., week, day, record) correlations of the traffic data simultaneously and preserve the multiway nature of traffic data. For example, it is assumed that a loop detector collects traffic volume data every 15 minutes. Thus, it will have 96 records in a day. If we have 20 weeks traffic volume data, these data can be formed into a tensor of size 20 × 7 × 96. Then, the proposed tensor-based method which can well mine the multimode correlations of traffic data mentioned above is used to remove outlier noise of the traffic data.

It is observed that the multichannel image can be seen as a tensor with multidimensions. For example, RGB image has three channels including Red channel, Green channel, and Black channel. Thus, it can be represented as width × height × 3 which is a 3-dimensional tensor. For the application, the proposed method is used to remove the noise of the image. Though the method would not be reasonable for some natural images, it has many applications for visual data such as structured images (e.g., the façade image), CT/MRI data, and multispectral image. Besides images, video data can be grouped into the form of tensor. For example, there is a video which has 300 gray frames and each of which is in size of 200 × 200. These video data can form a tensor of size 200 × 200 × 300. For the video application, the proposed method will be used for background modeling.

The rest of the paper is organized as follows. Section 2 presents some notations and states some basic properties of tensors. Section 3 discusses the detailed process of our proposed algorithm. Section 4 tests the algorithm on different settings, varying from simulated data to applications in computer vision, image processing, and traffic recovery. Finally, some concluding remarks are provided in Section 5.

2. Notation and Basics on Tensor Model

Therefore, , where . Accordingly, its inverse operator fold can be defined as fold(A(n), n) = 𝒜.

If the n-rank is very small related to the size of the tensor, we call it low-n-rank tensor.

The corresponding Frobenius norm is . Besides, the ℓ0 norm of a tensor 𝒜, denoted by ∥𝒜∥0, is the number of nonzero elements in 𝒜 and the ℓ1 norm is defined as . It is clear that , and for any 1 ≤ n ≤ N.

3. MALM-TR

This section is separated into 2 parts. In Section 3.1, we convert the low-n-tensor recovery problem into a multilinear RPCA problem. Section 3.2 simply introduces the ALM approach, extends ALM approach to solve the multilinear RPCA problem, and presents the details of the proposed algorithm.

3.1. The Multilinear RPCA Problem

3.2. Optimization Process

The core idea of solving the optimization problem in (17) is to optimize a group of variables while fixing the other groups. The variables in the optimization are M(1), …, M(n), N(1), …, N(n), S(i), L(i) which can be divided into 2n + 2 groups. To achieve the optimal solution, the method estimates M(i), N(i) , S(i), L(i) sequentially, followed by certain refinement in each iteration.

The operator can be extended to the matrix or tensor case by performing the shrinkage operator towards each element.

The pseudo-code of the proposed MALM-TR algorithm is summarized in Algorithm 1.

-

Algorithm 1: MALM-TR: MALM for tensor recovery.

-

Input: n-mode tensor 𝒜.

-

Parameters: α, β, γ, λ, η, ρ.

-

(1) Initialization: M(i) = L(i), N(i) = 0, k = 1, ρ > 0.

-

(2) Repeat until convergence

-

(3) for i = 1 to n

-

(4) ,

-

Where .

-

(5) .

-

(6) ,

-

(7) ,

-

(8) .

-

(9) end for

-

(10) ,

-

(11) .

-

(12) α = ρα, β = ρβ, γ = ργ.

-

(13) k = k + 1.

-

(14) End

-

Output: n-mode tensor ℒ, 𝒮.

Under some rather general conditions, when {α, β, γ} is an increasing sequence and both the objective function and the constraints are continuously differentiable functions, it has been proven in [20] that the Lagrange multipliers {Yi, Zi, Wi} produced by Algorithm 1 converge Q-linearly to the optimal solution when {α, β, γ} is bounded and super-Q-linearly when {α, β, γ} is unbounded. Another merit of MALM-TR is that the optimal step size to update {Yi, Zi, Wi} is proven to be the chosen penalty parameters {α, β, γ}, making the parameter tuning much easier. A third merit of MALM-TR is that the algorithm converges to the exact optimal solution, even without requiring {α, β, γ} to approach infinity [20].

4. Experiments

In this section, using both the numerical simulations and the real world data, we evaluate the performance of our proposed algorithm and then compare the results with RSTD on the low-n-rank tensor recovery problem.

In all the experiments, the Lanczos bidiagonalization algorithm with partial reorthogonalization [24] is adopted to obtain a few singular values and vectors in all iterations. A major challenge of our algorithm is the selection of parameters. We simply set the parameters for all experiments, where Imax = max{Ii}. Similarly, we choose as suggested in [11] and tune λ with the change of η. For comparing with RSTD [12], we also use the difference of ℒ and 𝒮 in successive iterations against a certain tolerance as the stopping criterion. All the experiments are conducted and timed on the same desktop with a Pentium (R) Dual-Core 2.50 GHz CPU that has 4 GB memory, running on Windows 7 and Matlab.

4.1. Numerical Simulations

A low-n-rank tensor ℒ0 is generated as follows. The N-way Tensor Toolbox [25] is used to generate a third-order tensor with the size of I1 × I2 × I3 and the relative small n-rank [r1 r2 r3]. The generated tensor is in Tucker model [13] described as ℒ0 = 𝒞×1X×2Y×3Z. To impose these rank conditions, 𝒞 is core tensor with each entry being sampled independently from a standard Gaussian distribution 𝒩(0,1), X, Y and Z are I1 × r1, I2 × r2, I3 × r3 factor matrices generated by randomly choosing each entry from 𝒩(0,1). Here without loss of generality we make the factor matrices orthogonal. But one major difference is that the n-ranks are always different along each mode while the column rank and row rank of a matrix are equal to each other. For simplicity, in this paper we set the mode-n ranks with the same value.

The entries of sparse tensor 𝒮0 are independently distributed, each taking on value 0 with probability 1 − spr, and each taking on impulsive value with probability spr. The recovered tensor 𝒜0 is generated as 𝒜0 = ℒ0 + 𝒮0.

Tables 1 and 2 present the average results (across 10 instances) for different sparse ratio. The results demonstrate that our proposed algorithm MALM-TR outperforms RSTD on either efficiency or accuracy.

| spr | Algorithm: MALM-TR | Algorithm: RSTD | ||||||

|---|---|---|---|---|---|---|---|---|

RSE_ℒ0 (e − 5) |

RSE_𝒮0 (e − 5) |

# iter | Time (s) | RSE_ℒ0 (e − 5) |

RSE_𝒮0 (e − 5) |

# iter | Time (s) | |

| 0.05 | 0.01 | 0.006 | 136 | 17.7 | 450 | 430 | 226 | 19.5 |

| 0.15 | 0.06 | 0.02 | 167 | 19.5 | 910 | 440 | 330 | 27.4 |

| 0.25 | 1.1 | 0.3 | 281 | 29.1 | 1510 | 490 | 714 | 44.4 |

| 0.35 | 2010 | 390 | 450 | 41.9 | 5620 | 1140 | 608 | 44.1 |

| spr | Algorithm: MALM-TR | Algorithm: RSTD | ||||||

|---|---|---|---|---|---|---|---|---|

RSE_ℒ0 (e − 5) |

RSE_𝒮0 (e − 5) |

# iter | Time (s) | RSE_ℒ0 (e − 5) |

RSE_𝒮0 (e − 5) |

# iter | Time (s) | |

| 0.05 | 0.2 | 0.1 | 243 | 29.4 | 400 | 230 | 411 | 33.8 |

| 0.10 | 10.1 | 4.2 | 323 | 43.5 | 520 | 270 | 568 | 51.3 |

| 0.15 | 120 | 42 | 596 | 62.5 | 650 | 260 | 1103 | 68.5 |

| 0.20 | 1250 | 370 | 972 | 88.3 | 2650 | 780 | 1235 | 88.1 |

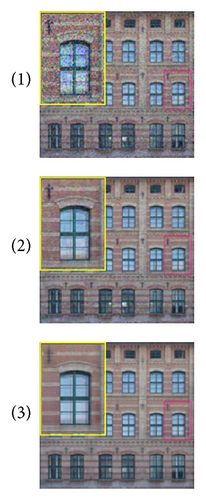

4.2. Image Restoration

One straightforward application of our algorithm is the image restoration. Same as [12] pointed, our algorithm also assumes the image to be well structured. Though the assumption would not be reasonable for some natural images, it has many applications for visual data such as structured images (e.g., the façade image), CT/MRI data, and multispectral image. In experiments, we apply the algorithm on image restoration of the façade image, which is also used in [12, 17]. We add different percent of random impulsive noise to the image and compare MALM-TR with RSTD. The results produced by both algorithms are shown in Figure 1.

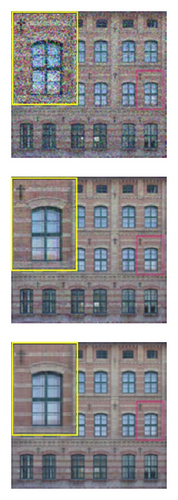

4.3. Background Modeling

Another application of our algorithm is to estimate a good model for the background variations in a scene (i.e., background modeling). In this situation, it is natural to model the background variation as approximately low rank. Foreground objects generally occupy only a fraction of the image pixels and hence can be treated as sparse part.

We test our algorithm using an example from [26] and compare with RSTD [12]. The visual comparisons of the background modeling are shown in Figure 2. It is observed that our algorithm is effective in separating the background which is even a dynamic scene. The results are also comparable to RSTD.

4.4. Traffic Data Recovery

In our previous work [3, 4], we have proposed two tensor-based methods on traffic data application. In [3], a tensor imputation method based on Tucker decomposition is developed to estimate the missing value. As a fact that the exact coordinate and the number of the missing data in the tensor form can be observed and obtained because if an element in the tensor form is missing, it doesn’t have value so we can recognize it easily. While this paper recovers a low-n-rank tensor that is arbitrarily corrupted by a fraction of noise based on the trace norm and ℓ1-norm optimization. The number and the coordinate of the corrupted data are unknown or not easy to obtain. That means that it is hard to recognize the corrupted data, because the corrupted data have values and hardly be separated from the correct data. The problems solved by the two papers are two different problems. Paper [4] is written from the point of traffic data recovery application which is the same problem that will be solved in this section. The main difference of the two proposed methods is how to use the constraint condition 𝒜 = ℒ + 𝒮. Reference [4] puts the constraint condition to the minimized function with only one parameter γ, which leads the objective function to contain not only tensor but also matrix. However, as the size and structure of each mode of the given tensor data are not always the same, the contribution of each mode of the tensor to the final result may be different. In order to utilize the information of the constraint condition as much as possible, this paper unfolds constraint condition along each mode and use weighted parameters γi to obtain the new constraint condition in matrix versions which is put into the minimized function using the augmented Lagrange multiplier strategy. With different objective functions, the optimized process is different too. More details can be found in [4].

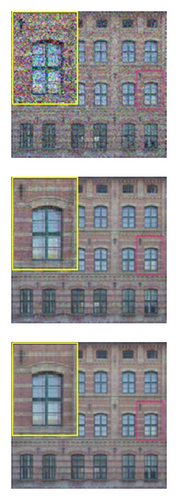

In the fourth part of the experiment section, we will apply the proposed algorithm to traffic data recovery. The data used in the experiment are collected by a fixed loop in Sacramento County and downloaded from http://pems.dot.ca.gov/. The period of the data lasts for 77 days from March 14 to May 29, 2011. The traffic volume data are recorded every 5 minutes. Therefore, a daily traffic volume series for a loop detector contains 288 records. To finish traffic data recovery by the proposed algorithm, the first step is to convert the mass traffic data into a tensor form. In this part, we choose 8-week complete traffic volume data from the 77 days. Then, the 8-week data are formed into a tensor model of size 8 × 7 × 288 as Figure 3 shows. In this model, “8” stands for 8 weeks, “7” stands for seven days in one week, and “288” stands for 288 records in one day.

In our previous work [3], the similarity coefficient [27] had been used to analyze the high multimode correlation (“link” mode, “week” mode, “day mode,” and “hour” mode) of traffic data from the point of view of statistic characteristic. For the high multicorrelations of traffic data, the tensor form of size 8 × 7 × 288 can be approximated by a low-n-rank tensor.

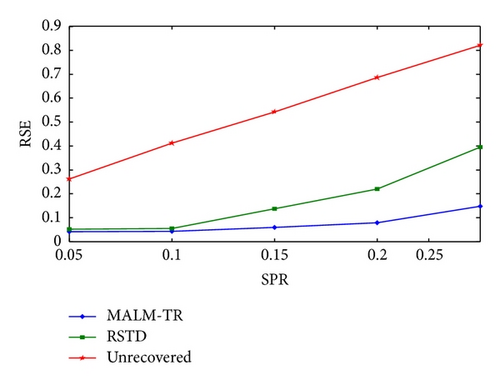

Table 3 tabulates the RSEs by sparse impulsive noise with different ratio on traffic data. Especially, the unrecovered column presents the RSE between corrupted data and the original data. From data in the table, it is observed that the RSEs obtained by MALM-TR and RSTD are much smaller than the unrecovered data, which means that both algorithm can improve the quality of the corrupted data. Moreover, the RSEs of MALM-TR are smaller than RSTD. From the curves of Figure 4, it is vividly shown that our method performs better than RSTD.

| SPR | MALM-TR(RSE) | RSTD(RSE) | Unrecovered(RSE) |

|---|---|---|---|

| 0.05 | 0.0406 | 0.0525 | 0.2614 |

| 0.10 | 0.0432 | 0.0543 | 0.4117 |

| 0.15 | 0.0593 | 0.1370 | 0.5427 |

| 0.20 | 0.0784 | 0.2198 | 0.6859 |

| 0.25 | 0.1478 | 0.3959 | 0.8218 |

5. Conclusion

In this paper, we extend the matrix recovery problem to low-n-rank tensor recovery and propose an efficient algorithm based on mixture augmented Lagrange multiplier method. The proposed algorithm can automatically separate the low-n-rank tensor data and sparse part. Experiments show that the proposed algorithm is more stable and accurate in most cases and has excellent convergence rate. Different application examples show the broad applicability of our proposed algorithm in computer vision, image processing, and traffic data recovery.

In the future, we would like to investigate how to automatically choose the parameters in our algorithm and develop more efficient method for tensor recovery problem. Also we will explore more applications of our method.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgments

The research was supported by NSFC (Grant nos. 61271376, 51308115, and 91120010), the National Basic Research Program of China (973 Program: no. 2012CB725405), and Beijing Natural Science Foundation (4122067). The authors would like to thank Professor Bin Ran from the University of Wisconsin-Madison and Yong Li from the University of Notre Dame for the suggestive discussions.