A Fast Alternating Minimization Algorithm for Nonlocal Vectorial Total Variational Multichannel Image Denoising

Abstract

The variational models with nonlocal regularization offer superior image restoration quality over traditional method. But the processing speed remains a bottleneck due to the calculation quantity brought by the recent iterative algorithms. In this paper, a fast algorithm is proposed to restore the multichannel image in the presence of additive Gaussian noise by minimizing an energy function consisting of an -norm fidelity term and a nonlocal vectorial total variational regularization term. This algorithm is based on the variable splitting and penalty techniques in optimization. Following our previous work on the proof of the existence and the uniqueness of the solution of the model, we establish and prove the convergence properties of this algorithm, which are the finite convergence for some variables and the q-linear convergence for the rest. Experiments show that this model has a fabulous texture-preserving property in restoring color images. Both the theoretical derivation of the computation complexity analysis and the experimental results show that the proposed algorithm performs favorably in comparison to the widely used fixed point algorithm.

1. Introduction

The restoration of multichannel image is one of the most important inverse problems in image processing. Among all the methods for restoration, the vectorial total variational (VTV) regularization is most popular for preserving discontinuities in restored images. VTV has been introduced by [1] into multichannel image restoration as a vectorial extension of total variation (TV) in gray scale image processing.

The famous total variational (TV) methods have drawbacks due to its local property while calculating the gradient of the image. Thus the images that contain many small structures or textures cannot get satisfying restored results. As a result, TV method based on nonlocal (NL) operator is generally used in gray scale image processing because of its structure preserving property. This method is generalized from the Yaroslavsky denoising and the block method. The main idea is using the similar blocks in the whole image to estimate current pixel value. It was introduced by Efros and Leung [2] while studying texture synthesis. Then Buades et al. [3] generalized nonlocal method and proposed the well-known neighbor denoising filter, namely, nonlocal means (NLM). After that, Gilboa and Osher [4] united nonlocal method into the regularization framework by defining the nonlocal formed TV model including NL-TV- model, NL-TV- model, and NL-TV-G model and proposed the algorithms separately. NL-TV model can both preserve the edges and the other small structures in images and has excellent application effect.

Our previews works extends NL-TV regularization to NL-VTV regularization for multichannel image restoration (see [5]). The corresponding algorithms are based on the variation calculus by solving the Euler-Lagrangian equations by fixed point iterations. This kind of methods is tested effectively but has a low convergence rate.

Recently, the optimization algorithms based on variable-splitting and penalty techniques have attracted much attention in image and signal processing, such as the dual methods, the Bregman methods, and the alternating direction method of multipliers (ADMM) methods (see [6–8]). Correspondingly, these methods have been extended for multichannel images. Bresson and Chan [6] has extended Chambolle’s dual algorithm for TV problem to vectorial images by defining the standard dual definition of VTV. Wang et al. [9, 10] has applied the alternating minimization algorithm to VTV model for multichannel image deblurring.

In this paper, we propose a fast alternating algorithm for NL-VTV model to restore color images from noisy observations. The following sections are organized as follows. Section 2 is the brief introduction of the NL-VTV model and classical fixed point algorithm. In Section 3, we propose the alternating algorithm for NL-VTV model. In Section 4, we do the convergence analysis of this algorithm. In Section 5, we applied this algorithm to color image denoising to present the texture-preserving effect of the NL-VTV model and the high convergent rate of the proposed algorithm.

2. Nonlocal Vectorial Total Variation Model

In this section, we will briefly introduce the nonlocal vectorial total variational model for multichannel image processing. The usually used iterative algorithm will be introduced as well, which will be compared to our algorithm in Section 5.

2.1. Vectorial Total Variation Model

2.2. Nonlocal Functional

2.3. Nonlocal Vectorial Total Variation Model and the Fixed Point Iterative Algorithm

In this section, the nonlocal gradient will be introduced to reconstruct the variation norm in the vectorial total variation model to deduce the NL-VTV model.

The existence and the uniqueness of the solution to (15) have been proven in our previews work, in which we proposed a fixed point iterative algorithm to solve (15) as well. Here we will give out a brief introduction as follows.

According to (19), when the weight function is fixed, the Euler-Lagrange equation is linear, which could be solved by the gradient descent kind of algorithm as follows.

As for functions, the discrete inner product is 〈u, v〉≔∑i(uivi), while, as for vectors, the discrete dot product is (p, q) i≔∑j(pi,jqi,j), the discrete inner product is 〈p, q〉≔∑i∑j(pi,jqi,j). The norm of vector is .

The convergence property of the fixed point algorithm has been proved in our in-press work. However, when |∇wu | = 0, the NLTV functional is nondifferentiable, which is similar to the classical TV model. Thus a regularization is involved in the calculation of to escape the denominator to be zero. Consequently, this algorithm takes many iteration steps to obtain the best restoration result. Thus we consider designing a fast alternating minimization algorithm instead.

3. The Alternating Minimization Algorithm

For the sake of conquering the nondifferentiability of the VTV functional, we design an alternating minimization algorithm in this section to solve the problem (15). The main idea of the alternating minimization is introducing an auxiliary variable. Then the original problem could be split into two subproblems of which one is differential with respect to the original variable, while the other is convex with respect to the auxiliary variable, respectively.

However, the nonlocal gradient operator ∇w in (15) is not block circulant. We will figure out another method to speed up the algorithm. We briefly propose the algorithm in this section. The convergence of this algorithm will be analysed in Section 4, as well as others properties of this algorithm.

3.1. Variable Splitting (Half-Quadratic Formulation of (15))

3.2. Alternating Minimization Algorithm

To conclude this section, we rewrite the alternating minimization algorithm as in Table 1.

| SNR | Noisy image | VTV | NLVTV |

|---|---|---|---|

| Barbara image | 8.9013 | 13.1212 | 13.2873 |

| Baboon image | 9.2373 | 13.1618 | 12.5936 |

4. Convergence Analysis

Since it has been proved in our in-press work that the model (15) has the unique solution, in this section, we prove that the solution obtained by Algorithm 1 is the unique one. First, the convergence of the quadratic penalty method as the penalty parameter goes to infinity is well known [13]. That is, as β → ∞, the solution of (31) converges to that of (15). We analyze the convergence properties of Algorithm 1 for a fixed β > 0. In this section, we show that, for any fixed β, the algorithm of minimizing u and d alternately has finite convergence for some variables and q-linear convergence for the rest.

-

Algorithm 1: Alternating minimization algorithm for nonlocal VTV denoising.

-

Initialization:

-

for k = 0 to K, do

-

Solve dk+1 = shrink(∇wuk+1, 1/β).

-

Solve uk+1 = (1 − (2/β))I(u0 − (1/β)divwdk)+(2/β)W(u0 − (1/β)divwdk).

-

end for

Theorem 1. The iterative sequence {(dk, uk)} generated by (30) and (37) from any starting point {(d0, u0)} converges to the solution of the minimization problem (31).

Proof. According to (30) and (37), the functional E(u, d) in (28) satisfies

Therefore, the sequence {E(uk, dk)} is nonincreasing. Thus it is convergent in because of its nonnegativity as well. We denote the limit of the sequence by m. We prove that m = E(u*, d*) followed, where u* and d* are the solution of (31).

Because E is coercive and the sequence {E(uk, dk)} is convergent, the sequence {(uk, dk)} is bounded. Thus there exists a subsequence {(uk, dk)}, which converges to an accumulation point when nk → ∞. According to (30) and (37), for all , d,

We denote as the accumulation point of the sequence , because of that the functional E is continuous, according to (38),

Because of that the threshold function, shrink, in (30), is continuous, we compute the limits of both sides of the equation and get

Thus . According to (41), . On the other hand, E is strictly convex with respect to d, then we have because of the uniqueness of the minimizer of . Similarly, we prove that .

We compute the limits of (39) and (40),

According to (43), . Thus the limit points of all of the subsequence of {(uk, dk)} are the solutions to (28). As a result, {(uk, dk)} converges to (u*, d*).

Let S1/β(∇wu) = shrink(∇wu, 1/β) and let P = (I − (2β/λ)Δw) −1. We define set , where ν < 1 is a positive constant. Then we have the following conclusion.

Theorem 2 (q-linear convergence). Assume that the sequence ; then there is a constant β0 > 0, such that for all β > β0, the sequence {uk} generated by (30) and (37) is q-linear convergence.

Proof. Combine (30) and (37); we have

We write τ = 1/(1 + (2/β)−(2/β)ν) < 1. Then by uniting (46) and (47), we have

Let . Then for all β > β0, the sequence {uk} generated by (30) and (37) is q-linear convergent to u*.

5. Experiments

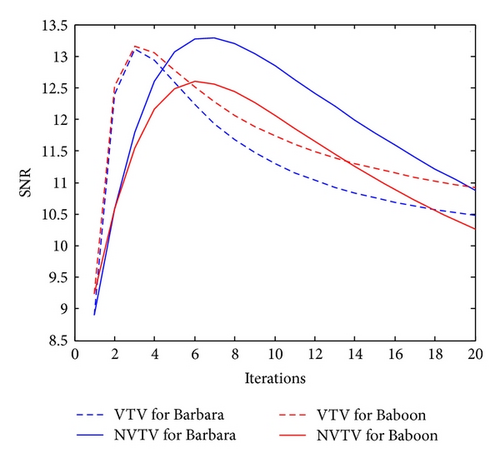

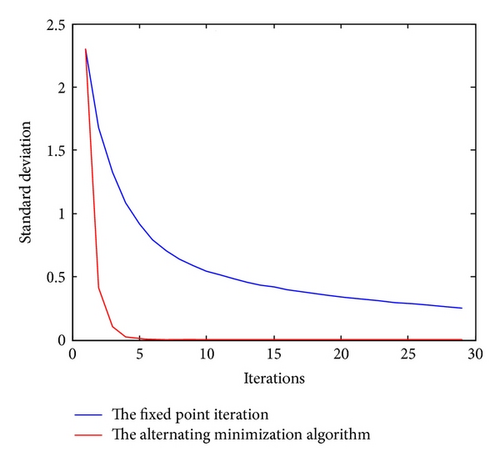

In this section, we apply Algorithm 1 to color image denoising to test its efficiency. We compare this method to that in [10] to show the detail-preserving property of the nonlocal VTV model. Then we compare the convergent rate of this algorithm with the fixed point algorithm presented in Section 2.

5.1. Texture-Preserving Property of the NLVTV Model

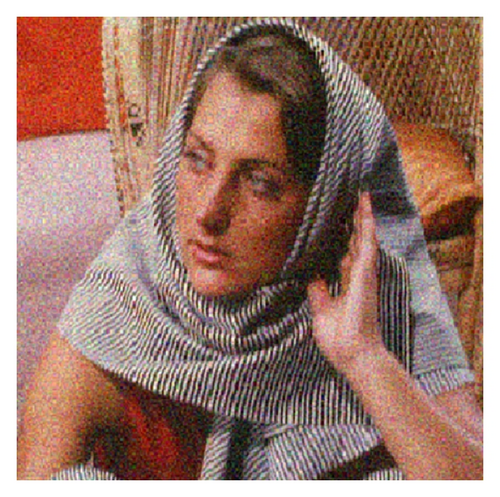

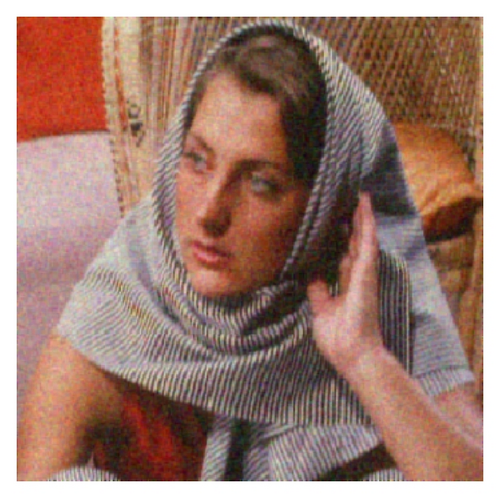

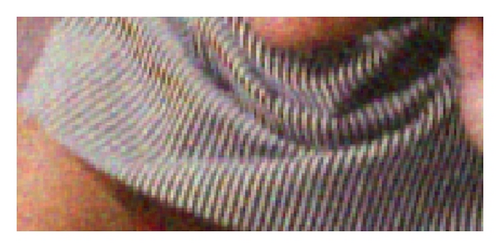

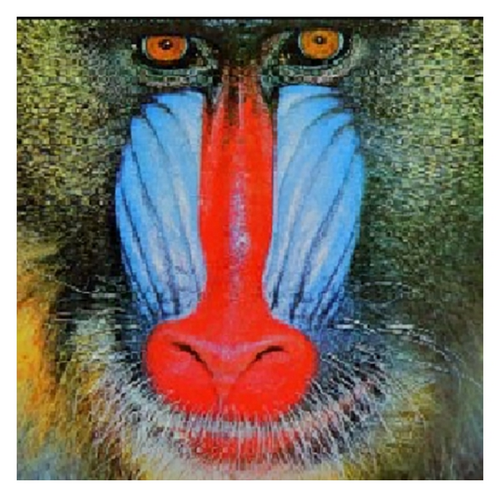

As is shown in Figure 1 and Table 1, the NLVTV model obtains similar SNR to VTV model. In the experiment of restoring the color Barbara picture, we choose the restored image by NLVTV model under the SNR (13.0678) lower than but close to that of the VTV model (SNR = 13.1212) instead of the restored image under the best SNR (13.2873). Figure 2 shows the results. Figure 3 shows the zoom part of the restored image, that is, the woman’s scarf. We can see that the NVTV model preserves the details better than the VTV model. Figures 4 and 5 show the results of the experiment of restoring the color Baboon picture. Although the NLVTV model gets lower SNR than the VTV model, the textures in this picture are better preserved by the NLVTV model rather than the VTV model.

5.2. Computation Complexity Analysis and the Convergent Rate Comparison

In this section, we compare the computation complexity and the convergent rate of this algorithm to the fixed point algorithm.

First, we analyze the computation complexities of the two algorithms, respectively. Assume the size of the multichannel image is n × n × M. The size of the nonlocal searching window is m × m. We do not consider the computation complexity of the calculation of the nonlocal weight, because that is executed exactly the same in both algorithms.

At each iterative step of Algorithm 1, namely, (30) and (37), the dominant calculation of (30) contains the calculation of the nonlocal gradient and the threshold computation of M(nm) 2 times. Thus the computation complexity is O(Mn4) + O(M(nm) 2). The dominant calculation of (37) contains a step of nonlocal divergence computation with the computation complexity of O(Mn2) and a matrix multiplication with the computation complexity of O(Mn4). As a result, the total computation complexity for each iteration is O(Mn4) + O(M(nm) 2) + O(Mn2) + O(Mn4).

On the other hand, each iterative step of the fixed point iteration contains the nonlocal curvature with the computation complexity of O(Mn4) and three matrix multiplications with the computation complexity of O(Mn4) + O(Mn4) + O(Mn4). As a result, the total computation complexity for each iteration is O(Mn4) + O(Mn4) + O(Mn4) + O(Mn4).

Consequently, the computation complexity of the proposed algorithm is much less than the fixed point algorithm.

6. Conclusion

In this paper, we propose a fast alternating minimization algorithm for multichannel image processing, based on the new nonlocal vectorial total variational model introduced recently. We use the variable splitting and penalty techniques to split the energy minimization problem into two optimization subproblems. One is a simple minimization problem, which is solved by the threshold method. The other is a simple minimization problem, which is solved by the variation calculus. As a result, this algorithm is faster than the classical algorithm because of avoiding dealing with the nondifferentiability of the nonlocal TV norm.

The main contribution of this paper is introducing the alternating minimization algorithm for the nonlocal vectorial total variational model, which is proved fast and can be applied to many multichannel image processing problems. In this paper, we apply this algorithm to the RGB color image denoising as an example. It can be easily extended to other vectorial problems, such as color image deblurring, and to other color image models, such as hue-saturation-value (HSV) model, as well.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgments

This paper and the related works are supported by grants from the National Natural Science Foundation of China under Contracts 61072142, 61271437, and 61201337 and the Science Research Project of National University of Defense Technology under Contracts JC12-02-05 and JC13-02-03.