An Improved Proportionate Normalized Least-Mean-Square Algorithm for Broadband Multipath Channel Estimation

Abstract

To exploit the sparsity of broadband multipath wireless communication channels, we propose an lp-norm-constrained proportionate normalized least-mean-square (LP-PNLMS) sparse channel estimation algorithm. A general lp-norm is weighted by the gain matrix and incorporated into the cost function of the proportionate normalized least-mean-square (PNLMS) algorithm. This integration is equivalent to adding a zero attractor to the iterations, which significantly improves the convergence speed and steady-state performance for the inactive taps. Our simulation results demonstrate that the proposed algorithm effectively improves the estimation performance of PNLMS-based algorithms in sparse channel estimation applications.

1. Introduction

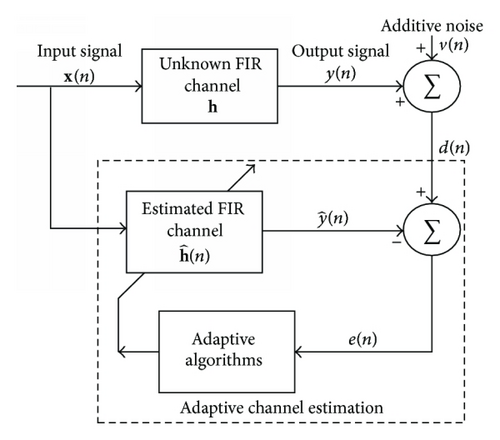

Broadband signal transmission is becoming a commonly used high-data-rate technique for next-generation wireless communication systems, such as 3GPP long-term evolution (LTE) and worldwide interoperability for microwave access (WiMAX) [1]. The transmission performance of coherent detection for such broadband communication systems strongly depends on the quality of channel estimation [2–5]. Fortunately, broadband multipath channels can be accurately estimated using adaptive filter techniques [6–10] such as the normalized least-mean-square (NLMS) algorithm, which has low complexity and can be easily implemented at the receiver. On the other hand, channel measurements have shown that broadband wireless multipath channels can often be described by only a small number of propagation paths with long delays [4, 11, 12]. Thus, a broadband multipath channel can be regarded as a sparse channel with only a few active dominant taps, while the other inactive taps are zero or close to zero. This inherent sparsity of the channel impulse response (CIR) can be exploited to improve the quality of channel estimation. However, classical NLMS algorithms, which use a uniform step size across all filter coefficients, converge slowly when estimating sparse impulse responses such as those of broadband sparse wireless multipath channels [11]. Consequently, algorithms that exploit this sparsity have recently received significant attention in the context of compressed sensing (CS) [5, 12–14] and were already considered for channel estimation prior to the CS era [5, 12]. However, these CS channel estimation algorithms are sensitive to the noise in wireless multipath channels.

Inspired by the CS theory [12–14], several zero-attracting (ZA) algorithms have been proposed and investigated by combining the CS theory with the standard least-mean-square (LMS) algorithm for echo cancellation and system identification; these are known as the zero-attracting LMS (ZA-LMS) and reweighted ZA-LMS (RZA-LMS) algorithms [15]. Recently, this technique has been extended to the NLMS algorithm and other adaptive filter algorithms to improve their convergence speed in sparse environments [9, 16–18]. However, these approaches are mainly designed for nonproportionate adaptive algorithms. On the other hand, to retain the advantages of the NLMS algorithm, such as stable performance and low complexity, the proportionate normalized least-mean-square (PNLMS) algorithm has been proposed and studied to exploit the inherent sparsity [19] and has been applied to echo cancellation in telephone networks. Although the PNLMS algorithm can utilize the sparsity of a signal and obtain faster convergence at the initial stage by assigning independent gains to the active taps, after the active taps converge its convergence for the inactive taps becomes even slower than that of the NLMS algorithm. Consequently, several algorithms have been proposed to improve the convergence speed of the PNLMS algorithm [20–27], including the use of the l1-norm technique and a variable step size. Although these algorithms significantly improve the convergence speed of the PNLMS algorithm, they still converge slowly after the active taps converge. In addition, some of them are inferior to the NLMS and PNLMS algorithms in terms of the steady-state error when the sparsity decreases. From these previously proposed sparse signal estimation algorithms, we know that the ZA algorithms mainly exert a penalty on the inactive channel taps by integrating the l1-norm constraint into the cost function of the standard LMS algorithms to achieve better estimation performance, while the PNLMS algorithm updates each filter coefficient with an independent step size, which improves the convergence of the active taps.
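The per-coefficient step size of the PNLMS algorithm can be illustrated with a minimal sketch. It assumes the widely used Duttweiler-style gain rule; the function names, the regularizer `delta`, and the default values are illustrative assumptions and are not taken from this paper's equations.

```python
def pnlms_gains(h, rho_g, delta_p):
    """Per-tap gains (assumed Duttweiler-style rule), normalized to average 1."""
    h_inf = max(abs(c) for c in h)
    # The floor keeps taps that are zero or very small from stalling.
    floor = rho_g * max(delta_p, h_inf)
    gamma = [max(floor, abs(c)) for c in h]
    mean_gamma = sum(gamma) / len(gamma)
    return [g / mean_gamma for g in gamma]


def pnlms_step(h, x, d, mu=0.5, rho_g=None, delta_p=0.01, delta=0.01):
    """One PNLMS iteration.

    h: current tap estimates, x: the len(h) most recent input samples,
    d: desired (received) sample. `delta` is an assumed regularizer.
    """
    n = len(h)
    if rho_g is None:
        rho_g = 5.0 / n
    g = pnlms_gains(h, rho_g, delta_p)
    # A priori estimation error.
    e = d - sum(hc * xc for hc, xc in zip(h, x))
    # Gain-weighted input energy used for normalization.
    norm = sum(gc * xc * xc for gc, xc in zip(g, x)) + delta
    h_new = [hc + mu * gc * xc * e / norm for hc, gc, xc in zip(h, g, x)]
    return h_new, e
```

When every gain equals 1 (for example, with an all-zero initial estimate), this step coincides with an NLMS step. Once a few taps dominate, their gains grow and the gains of the remaining taps shrink, which is the source of the slow late-stage convergence described above.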

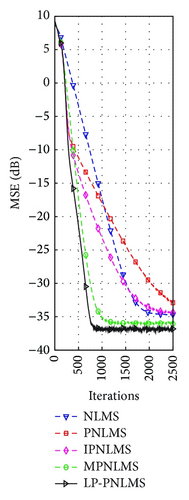

Motivated by the CS theory [13, 14] and ZA technique [15–18], we propose an lp-norm-constrained PNLMS (LP-PNLMS) algorithm that incorporates the lp-norm into the cost function of the PNLMS algorithm, resulting in an improved proportionate adaptive algorithm. The difference between the proposed LP-PNLMS algorithm and the ZA algorithms is that the gain-matrix-weighted lp-norm is used in our proposed LP-PNLMS algorithm instead of the general l1-norm to expand the application of ZA algorithms [15]. Also, this integration is equivalent to adding a zero attractor in the iterations of the PNLMS algorithm to obtain the benefits of both the PNLMS and ZA algorithms. Thus, our proposed LP-PNLMS algorithm can achieve fast convergence at the initial stage for the active taps. After the convergence of these active taps, the ZA technique in the LP-PNLMS algorithm acts as another force to attract the inactive taps to zero to arrest the slow convergence of the PNLMS algorithm. Furthermore, our proposed LP-PNLMS algorithm achieves a lower mean square error than the PNLMS algorithm and its related improved algorithms, such as the improved PNLMS (IPNLMS) [20] and μ-law PNLMS (MPNLMS) [21] algorithms. In this study, our proposed LP-PNLMS algorithm is verified over a sparse multipath channel by comparison with the NLMS, PNLMS, IPNLMS, and MPNLMS algorithms. The simulation results demonstrate that the LP-PNLMS algorithm achieves better channel estimation performance in terms of both convergence speed and steady-state behavior for sparse channel estimation.

The remainder of this paper is organized as follows. Section 2 briefly reviews the standard NLMS, PNLMS, and improved PNLMS algorithms, including the IPNLMS and MPNLMS algorithms. In Section 3, we describe in detail the proposed LP-PNLMS algorithm, which employs the Lagrange multiplier method. In Section 4, the estimation performance of the proposed LP-PNLMS algorithm is verified over sparse channels and compared with other commonly used algorithms. Finally, this paper is concluded in Section 5.

2. Related Channel Estimation Algorithms

2.1. Normalized Least-Mean-Square Algorithm

2.2. Proportionate Normalized Least-Mean-Square Algorithm

2.3. Improved Proportionate Normalized Least-Mean-Square Algorithms

2.3.1. IPNLMS Algorithm

We can see that the IPNLMS is identical to the NLMS algorithm for α = −1, while the IPNLMS behaves identically to the PNLMS algorithm when α = 1. In practical engineering applications, a suitable value for α is 0 or −0.5.
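The role of α can be checked numerically with a short sketch. It assumes the commonly cited IPNLMS gain rule, a mixture of a uniform term and a term proportional to the tap magnitude. The function name and the small constant `eps` are illustrative assumptions.

```python
def ipnlms_gains(h, alpha, eps=0.001):
    """Assumed IPNLMS gain rule: uniform part plus proportionate part."""
    n = len(h)
    l1 = sum(abs(c) for c in h)
    return [
        (1 - alpha) / (2 * n) + (1 + alpha) * abs(c) / (2 * l1 + eps)
        for c in h
    ]


h = [1.0, 0.0, 0.0, 0.0]
uniform = ipnlms_gains(h, alpha=-1.0)       # every gain is 1/N, as in NLMS
proportionate = ipnlms_gains(h, alpha=1.0)  # gains follow |h|, as in PNLMS
mixed = ipnlms_gains(h, alpha=0.0)          # equal blend of the two
```

With α = −1 the proportionate term vanishes and all taps share the same gain. With α = 1 the uniform term vanishes and a zero tap receives zero gain. Intermediate values such as 0 or −0.5 keep a nonzero gain on every tap.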

2.3.2. MPNLMS Algorithm

3. Proposed LP-PNLMS Algorithm

4. Results and Discussions

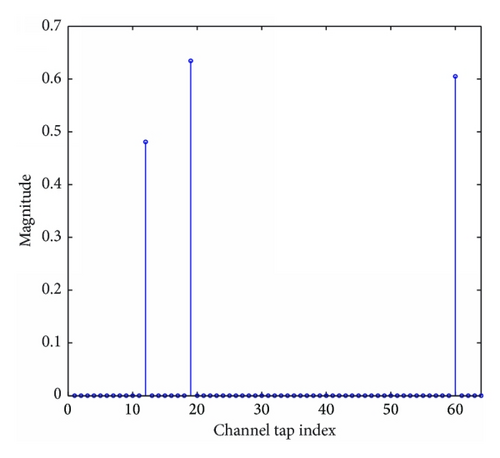

In these simulations, the parameters are set to μNLMS = μPNLMS = μIPNLMS = μLP = 0.5, δNLMS = 0.01, ε = 0.001, α = 0, εp = 0.05, ρLP = 1 × 10⁻⁵, δp = 0.01, ρg = 5/N, ϑ = 1000, p = 0.5, and SNR = 30 dB. When one of these parameters is varied, the others are kept constant.
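A simulation setup of this kind could be sketched as follows. The Gaussian tap amplitudes, the unit-energy normalization, and the helper names are assumptions made for illustration, since the text here does not specify how the channel is generated.

```python
import math
import random


def sparse_channel(n, k, rng):
    """Length-n impulse response with k nonzero taps at random positions.

    Tap values are drawn from a standard Gaussian and the response is
    scaled to unit energy (both are assumptions for this sketch).
    """
    h = [0.0] * n
    for idx in rng.sample(range(n), k):
        h[idx] = rng.gauss(0.0, 1.0)
    energy = math.sqrt(sum(c * c for c in h))
    return [c / energy for c in h]


def noise_std_for_snr(signal_power, snr_db):
    """Standard deviation of additive noise that yields the given SNR in dB."""
    return math.sqrt(signal_power / 10 ** (snr_db / 10))


rng = random.Random(0)
h_true = sparse_channel(64, 4, rng)       # N = 64, K = 4
sigma = noise_std_for_snr(1.0, 30.0)      # SNR = 30 dB, unit signal power
```

Here N = 64, K = 4, and SNR = 30 dB follow the values quoted in the text, while the unit signal power is an assumption.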

4.1. Estimation Performance of the Proposed LP-PNLMS Algorithm

4.1.1. Effects of Parameters on the Proposed LP-PNLMS Algorithm

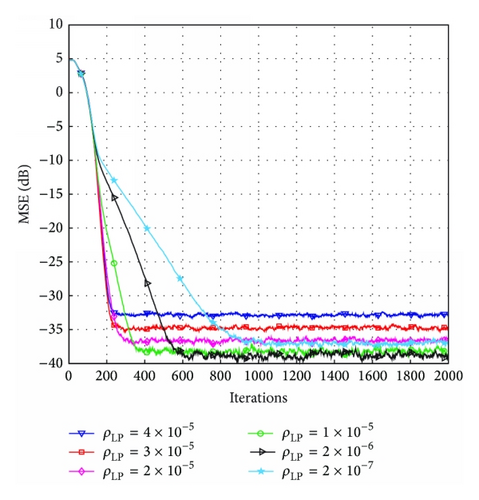

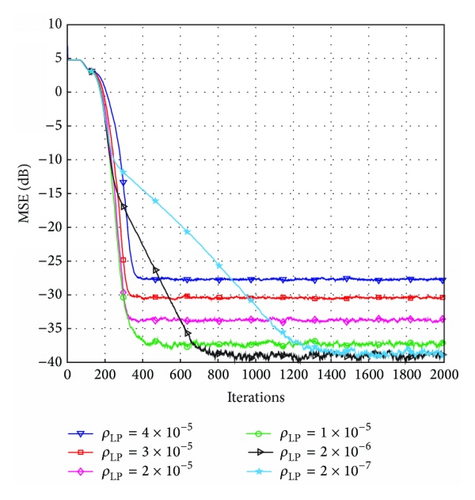

In the proposed LP-PNLMS algorithm, there are two extra parameters, p and ρLP, compared with the PNLMS algorithm, which are introduced to design the zero attractor. Next, we show how these two parameters affect the proposed LP-PNLMS algorithm over a sparse channel with N = 64 or 128 and K = 4. The simulation results for different values of ρLP and p are shown in Figures 3 and 4, respectively. From Figure 3(a), we can see that the steady-state error of the LP-PNLMS algorithm decreases with decreasing ρLP when ρLP ≥ 2 × 10⁻⁶, while it increases again when ρLP is less than 2 × 10⁻⁶. Furthermore, the convergence speed of the LP-PNLMS algorithm rapidly decreases when ρLP is less than 1 × 10⁻⁵. This is because a small ρLP results in a low ZA strength, which consequently reduces the convergence speed. In the case of N = 128 shown in Figure 3(b), we observe that both the convergence speed and the steady-state performance are improved with decreasing ρLP for ρLP ≥ 1 × 10⁻⁵. When ρLP < 1 × 10⁻⁵, the convergence speed of the LP-PNLMS algorithm decreases while the steady-state error remains constant.

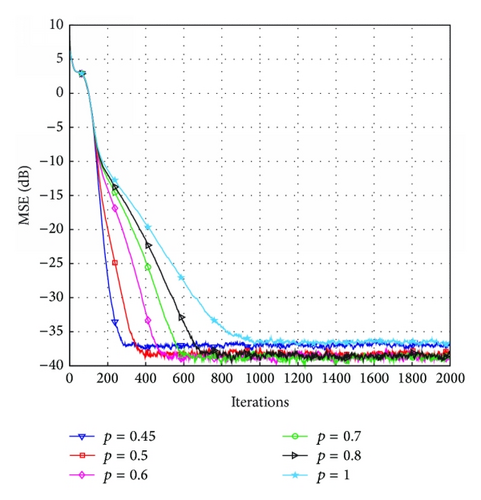

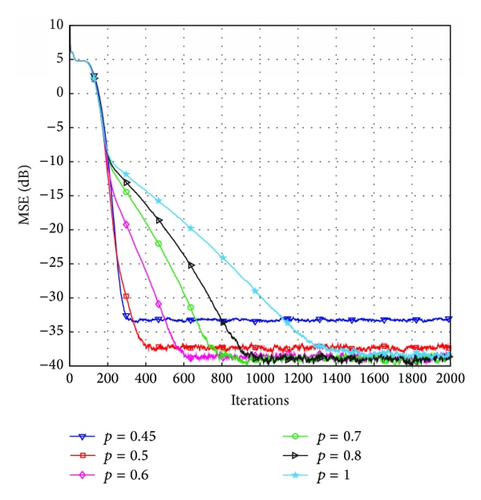

Figure 4 demonstrates the effects of the parameter p. We can see from Figure 4(a) that the convergence speed of the proposed LP-PNLMS algorithm rapidly decreases with increasing p for N = 64. Moreover, the steady-state error is reduced as p increases from 0.45 to 0.5, while it remains constant for p = 0.6, 0.7, and 0.8. However, the steady-state performance for p = 1 is inferior to that for p = 0.8. This is because, for p = 1, the proposed LP-PNLMS algorithm reduces to an l1-norm-penalized PNLMS algorithm, which cannot distinguish between active taps and inactive taps, reducing its convergence speed and steady-state performance. When N = 128, as shown in Figure 4(b), the steady-state performance is improved as p increases from 0.45 to 0.6. Thus, we should carefully select the parameters ρLP and p to balance the convergence speed and steady-state performance for the proposed LP-PNLMS algorithm.
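The influence of p on the zero attractor can be explored with a small sketch. It assumes the approximate lp-norm gradient commonly used in lp-norm-penalized LMS variants; the exact attractor of the LP-PNLMS algorithm may differ, and the function name is hypothetical.

```python
def lp_zero_attractor(h, p, rho, eps_p=0.05):
    """Assumed lp-norm zero-attraction term, valid for 0 < p <= 1.

    Each entry is rho * ||h||_p^(1-p) * sgn(h_l) / (|h_l|^(1-p) + eps_p);
    in a ZA-style update this term is subtracted from the tap estimate.
    """
    norm_p = sum(abs(c) ** p for c in h) ** (1.0 / p)
    scale = norm_p ** (1.0 - p)
    out = []
    for c in h:
        sgn = (c > 0) - (c < 0)  # sign of the tap; 0 for an exactly zero tap
        out.append(rho * scale * sgn / (abs(c) ** (1.0 - p) + eps_p))
    return out


taps = [1.0, 0.01, -0.01, 0.0]  # one active tap, two near-zero taps, one zero
za_l1 = lp_zero_attractor(taps, p=1.0, rho=1e-5)
za_lp = lp_zero_attractor(taps, p=0.5, rho=1e-5)
```

Under this assumed form, p = 1 pulls the large tap and the near-zero taps toward zero with the same magnitude, which is one way to read the statement that the l1-norm penalty cannot distinguish active from inactive taps. With p = 0.5 the near-zero taps are attracted about seven times more strongly than the large tap in this example.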

4.1.2. Effects of Sparsity Level on the Proposed LP-PNLMS Algorithm

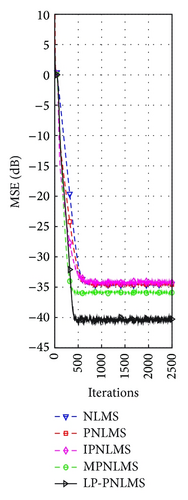

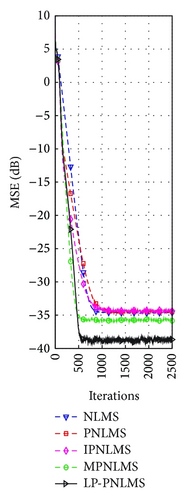

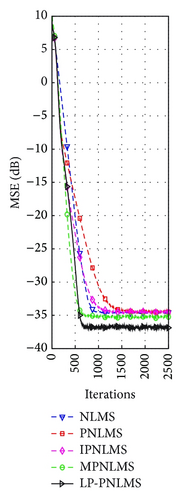

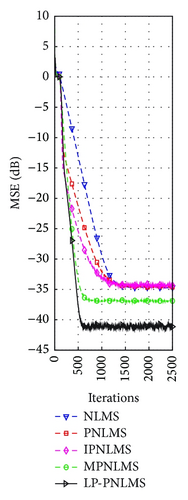

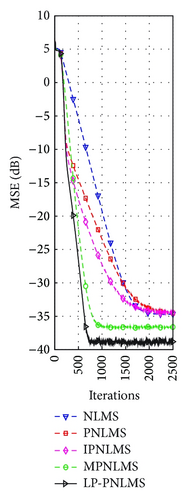

On the basis of the results discussed in Section 4.1.1 for our proposed LP-PNLMS algorithm, we choose p = 0.5 and ρLP = 1 × 10⁻⁵ to evaluate the channel estimation performance of the LP-PNLMS algorithm over a sparse channel with channel lengths of N = 64 and 128; the simulation results are given in Figures 5 and 6, respectively. From Figure 5, we see that our proposed LP-PNLMS algorithm has the same convergence speed as the PNLMS algorithm at the initial stage. The proposed LP-PNLMS algorithm converges faster than the PNLMS algorithm as well as the IPNLMS and NLMS algorithms for all sparsity levels K, while its convergence is slightly slower than that of the MPNLMS algorithm before it reaches the steady state. However, the proposed LP-PNLMS algorithm has the smallest steady-state error for N = 64. When N = 128, we see from Figure 6 that our proposed LP-PNLMS algorithm not only has the highest convergence speed but also possesses the best steady-state performance. This is because with increasing sparsity, our proposed LP-PNLMS algorithm attracts the inactive taps to zero quickly and hence the convergence speed is significantly improved, while the previously proposed PNLMS algorithms mainly adjust the step size of the active taps and thus affect the convergence speed only at the early iteration stage. Additionally, we see from Figures 5 and 6 that both the convergence speed and the steady-state performance of all the PNLMS algorithms deteriorate when the sparsity level K increases for both N = 64 and 128. In particular, when K = 8, the PNLMS and IPNLMS algorithms converge faster than the NLMS algorithm at the early iteration stage, while after this fast initial convergence, they converge more slowly than the NLMS algorithm before reaching the steady state. Furthermore, we observe that the MPNLMS algorithm is sensitive to the length N of the channel, and its convergence speed for N = 128 is less than that for N = 64 at the same sparsity level K and less than that of the proposed LP-PNLMS algorithm. Thus, we conclude that our proposed LP-PNLMS algorithm is superior to the previously proposed PNLMS algorithms in terms of both the convergence speed and the steady-state performance with the appropriate selection of the related parameters p and ρLP. From the above discussion, we believe that the gain-matrix-weighted lp-norm method in the LP-PNLMS algorithm can be used to further improve the channel estimation performance of the IPNLMS and MPNLMS algorithms.

4.2. Computational Complexity

Finally, we discuss the computational complexity of the proposed LP-PNLMS algorithm and compare it with those of the NLMS, PNLMS, IPNLMS, and MPNLMS algorithms. Here, the computational complexity is the arithmetic complexity, which includes additions, multiplications, and divisions. The computational complexities of the proposed LP-PNLMS algorithm and the related PNLMS and NLMS algorithms are shown in Table 1.

| Algorithm | Additions | Multiplications | Divisions |
|---|---|---|---|
| NLMS | 3N | 3N + 1 | 1 |
| PNLMS | 4N + 3 | 6N + 3 | N + 2 |
| IPNLMS | 4N + 7 | 5N + 5 | N + 2 |
| MPNLMS | 5N + 3 | 7N + 3 | N + 3 |
| LP-PNLMS | 4N + 4 | 9N + 4 | 2N + 2 |

From Table 1, we see that the computational complexity of our proposed LP-PNLMS algorithm is slightly higher than those of the MPNLMS and PNLMS algorithms, which is due to the calculation of the gradient of the lp-norm. Furthermore, the MPNLMS algorithm requires an additional logarithm operation, which increases its complexity but is not included in Table 1. However, the LP-PNLMS algorithm noticeably increases the convergence speed and significantly improves the steady-state performance of the PNLMS algorithm. In addition, it has a higher convergence speed and lower steady-state error than the IPNLMS and MPNLMS algorithms when the channel length is large.
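To make the comparison concrete, the expressions in Table 1 can be evaluated for the channel lengths used in the simulations. The sketch below only encodes the table entries; the dictionary layout and function name are illustrative.

```python
# Each (a, b) pair encodes the cost a*N + b, copied from Table 1.
TABLE_1 = {
    "NLMS":     {"add": (3, 0), "mul": (3, 1), "div": (0, 1)},
    "PNLMS":    {"add": (4, 3), "mul": (6, 3), "div": (1, 2)},
    "IPNLMS":   {"add": (4, 7), "mul": (5, 5), "div": (1, 2)},
    "MPNLMS":   {"add": (5, 3), "mul": (7, 3), "div": (1, 3)},
    "LP-PNLMS": {"add": (4, 4), "mul": (9, 4), "div": (2, 2)},
}


def op_counts(n):
    """Per-iteration operation counts for channel length n."""
    return {
        name: {op: a * n + b for op, (a, b) in costs.items()}
        for name, costs in TABLE_1.items()
    }


counts_64 = op_counts(64)
counts_128 = op_counts(128)
```

For N = 64, the LP-PNLMS row evaluates to 260 additions, 580 multiplications, and 130 divisions per iteration, compared with 259, 387, and 66 for the PNLMS algorithm. The logarithm required by the MPNLMS algorithm is not counted, as in Table 1.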

5. Conclusion

In this paper, we have proposed an LP-PNLMS algorithm to exploit the sparsity of broadband multipath channels and to improve both the convergence speed and steady-state performance of the PNLMS algorithm. This algorithm was mainly developed by incorporating the gain-matrix-weighted lp-norm into the cost function of the PNLMS algorithm, which significantly improves its convergence speed and steady-state performance. The simulation results demonstrated that our proposed LP-PNLMS algorithm, which has an acceptable increase in computational complexity, increases the convergence speed and reduces the steady-state error compared with the previously proposed PNLMS algorithms.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.