Efficient Simulation Budget Allocation for Ranking the Top m Designs

Abstract

We consider the problem of ranking the top m designs out of k alternatives. Using the optimal computing budget allocation framework, we formulate this problem as that of maximizing the probability of correctly ranking the top m designs subject to the constraint of a fixed limited simulation budget. We derive the convergence rate of the false ranking probability based on the large deviation theory. The asymptotically optimal allocation rule is obtained by maximizing this convergence rate function. To implement the simulation budget allocation rule, we suggest a heuristic sequential algorithm. Numerical experiments are conducted to compare the effectiveness of the proposed simulation budget allocation rule. The numerical results indicate that the proposed asymptotically optimal allocation rule performs the best comparing with other allocation rules.

1. Introduction

Discrete event system (DES) simulation has been widely used for analyzing and evaluating complex systems since the assumptions for deriving an analytical solution are rarely satisfied in real situation. While DES simulation has been successful in solving many practical problems in a variety of areas such as supply chain systems, healthcare systems, and manufacturing systems, the concerns on the efficiency have never ended [1]. To obtain a statistically significant value, a large number of simulation replications are needed for each design. The performance of each design is then estimated by its sample mean. The ultimate accuracy of this estimator cannot be improved faster than , where N is the number of simulation replications.

Ordinal optimization (OO) which aims to obtain a good estimate through ordinal comparisons although the estimated value is still poor emerges as a way to improve the simulation efficiency [2]. If the goal of the simulation experiment is to identify the good designs instead of finding the accurate estimate of the true performance value, which is true in many real applications, OO can reduce the number of simulation replications significantly [3, 4]. Intuitively, a larger portion of the total simulation replications should be allocated to those designs that are critical in identifying the best design in order to achieve a high probability of correct selection. Based on this idea, an optimal computing budget allocation (OCBA) framework has been proposed to enhance the simulation efficiency further [5, 6]. OCBA focuses on the efficiency of simulation by intelligently allocating further replications based on both the mean and the variance. In parallel with OCBA, two other well-known ranking and selection procedures frequently used in simulation are the indifference zone (IZ) procedure and value information procedure (VIP). The IZ procedure focuses on finding a feasible way to guarantee that the prespecified probability of correct selection can be achieved [7]. The VIP uses the Bayesian posterior distribution to describe the evidence of correct selection and allocates further replications based on maximizing the value information [8, 9]. The three popular procedures of finding the best design are compared in [10]. Extensions of the research in OCBA and ranking and selection include subset selection [11–13], selecting the Pareto set for multiple objective functions [14], selecting the best design subject to stochastic constraints [15, 16], and complete ranking [17]. A detailed summary of the existing work in OCBA can be found in [18].

To the best of our knowledge, no previous research has considered the simulation budget allocation for ranking the top m designs out of k alternatives. The developed top m ranking procedure is most useful when the designs for comparison have multiple dimensions of performance measurements with some qualitative criteria such as environmental consideration and political feasibility. Top m ranking procedure can help the decision makers to identify the ranking of the top m designs based on the quantitative performance measurement. Final decision can be made by incorporating other qualitative performance measurements. Hence, the top m ranking gives decision makers a more flexible and people oriented way to support the decision making process. In addition, the top m ranking procedure can also be integrated into some evolutionary search algorithms, where the ranking information of the top solutions is needed in order to determine the search direction of next iteration. For example, the ranking of the top candidate solutions may be needed in each iteration of the genetic algorithm since better candidate solutions are given higher probabilities to reproduce in genetic algorithms.

In this paper, we consider the problem of ranking the top m designs out of total k alternatives, where the performance value of each design can only be estimated with noise via simulation. The objective of this paper is to determine how to allocate the simulation replications among the k designs such that the probability of correctly ranking the top m designs can be maximized. The organization of this paper is as follows. Section 2 provides the mathematical formulation of the top m ranking problem using OCBA framework. In Section 3, we derive the convergence rate function of the false ranking probability. Section 4 derives the asymptotically optimal allocation rule using large deviation theory. A sequential allocation algorithm is proposed in Section 5. Section 6 conducts numerical experiments to demonstrate the effectiveness of the proposed simulation budget allocation rule. Finally, we conclude our paper in Section 7.

2. Problem Formulation

Consider the problem of ranking the top m designs out of k alternatives. The performance of each design can only be estimated with noise via simulation. The mean performance is used as the ranking criterion. In order to have a steady mean performance value, a large number of simulation replications are needed because of the randomness of individual samples. Given that a total of n simulation replications are available, the objective is to find the best allocation of the total n replications to the k designs in order to maximize the probability of correctly ranking the top m designs.

Denote the mean performances of each design by μ1, …, μk such that μ1 < ⋯<μi < ⋯<μk and μi+1 − μi≥δ, i = 1, …, k − 1, where δ is a positive number. Let α = (α1, …, αk) denote the proportion of the total computing budget n to be allocated to each design such that = 1, i = 1, …, k. Let denote the sample mean of design i, where () are the samples from population i. We ignore the case when αin is not an integer because we could let αin be ⌊αin⌋, the greatest integer less than αin. The analysis remains unaffected. The objective of this paper is to find the optimal allocation strategy such that the probability of correctly ranking the top m designs can be maximized with a fixed limited computing budget n.

Maximizing the probability of correctly ranking the top m designs is equivalent to minimizing the false ranking probability of the top m designs. Using asymptotical analysis, this is also equivalent to maximizing the convergence rate at which the false ranking probability goes to zero. In this paper, we use large deviation theory to derive this convergence rate function and reformulate the optimization model (2) by maximizing the convergence rate function. The assumptions needed in this paper are stated as follows.

Assumption 1. The performance of every design is independently simulated.

The independence of each design ensures that the samples for each i = 1, …, k generated are independent. Thus, the results we obtained will not be affected by the correlations among different designs.

Define the cumulant generating function of sample mean to be . The effective domain of any function f : Df → R* is the set {x ∈ Df : f(x) < ∞}, while the range is R* = R ∪ {+∞}. Let and . For any set A, Ao denotes its interior and denotes its closure.

Assumption 2. For each i = 1, …, k,

- (1)

the limit is well defined as an extended real number for all θ;

- (2)

the origin belongs to ;

- (3)

Λi(·) is strictly convex and steep, that is, , where {θn} is a sequence converging to the boundary point of ;

- (4)

.

Assumption 2 implies that with almost surely when n → ∞. Furthermore, it indicates that the sample mean satisfies the large deviation principle. The last condition of Assumption 2 ensures that the sample mean of every design can take any value between μ1 and μk and [19].

3. Rate Function of the False Ranking Probability

Theorem 3 formally states that the limit exists and the overall convergence rate function is the minimum rate function of each probability. The convergence rate can be understood as the speed at which false ranking probability goes to zero.

Theorem 3. The rate function of P(FRm) is given by

Proof. If there exists a function Gi(·) such that

Then, it can be concluded that

Now we are in the position to derive the assumed function Gi(·). Define . The cumulant moment generating function of Yn can be written as

Under Assumption 2,

By the Gärtner-Ellis Theorem [20], {Yn, n = 1,2, …} satisfies large deviation principle with good rate function which can be expressed as follows:

Hence, from large deviation principle,

Similarly,

Therefore, the convergence rate function of the false ranking probability can be expressed as follows:

4. Asymptotically Optimal Allocation

Since model (18) is strictly concave and the functions of α are continuous, a unique optimal solution must exist and the Karush-Kuhn-Tucker (KKT) conditions are necessary and sufficient for global optimality.

Theorem 4. Under Assumptions 1 and 2 in Section 2, problems (18) and (19) are equivalent; that is, a solution is the optimal solution to (18) if and only if it is also an optimal solution to (19).

Proof. We assume that a point satisfying the KKT condition of (18) is also feasible to (19). We first prove the forward and backward assertions. We then prove that the assumption that a point satisfying the KKT condition of (18) is also feasible to (19) is indeed correct.

Suppose α* is the optimal solution to (18). Since the feasible region of (19) is a subset of that of (18), if the optimal solution to (18) is feasible to (19), it must be optimal to (19). Since the KKT conditions are necessary and sufficient for optimality in (18), α*must satisfy the KKT conditions of (18). Hence, α* is feasible to (19). Therefore, if a point satisfies the KKT condition in (18), it must be optimal to (19).

Suppose the optimal solution to (18) is α* and the optimal solution to (19) is , and . Since the KKT conditions are necessary and sufficient condition to (18), thus, α* must satisfy the KKT conditions. Furthermore, the objective function of (19) is the same as that of (18), and the feasible region of (19) is a subset of that of (18). Therefore, α* must be infeasible to (19). However, we assumed that a point satisfying the KKT conditions of (18) must be feasible to (19). We have thus reached a contradiction. So we must have .

We are now in the position to prove that a point satisfying the KKT conditions of (18) must be feasible to (19). If we let αi = 1/k, we can have z > 0 for problem (18). However, any αi = 0 for i = 1, …, m − 1 will lead to αi+1infxIi+1(x) = αi+1Ii+1(μi+1) = 0. If αj = 0 for j = m + 1, …, k, we will have αminfxIm(x) = αmIm(μm) = 0. Therefore, the optimal solution must satisfy αi > 0 for every i = 1, …, k.

Since the problem (18) is a concave optimization problem, the first order condition is also the optimality condition. According to the KKT conditions, there exist λi ≥ 0, λj ≥ 0, i ∈ {1, …, m − 1}, j ∈ {m + 1, …, k}, and γ > 0 such that

If λ1 = 0, γ will be zero. Thus, we could conclude that all λi, λj = 0, j = m + 1, …, k, by putting γ = 0 into (21) to (24). Substituting λ1 = 0 and γ = 0 into (22) and (23) will result in λi = 0, i = 2, …, m − 1. However, this contradicts with (20) which requires at least one λi, λj > 0. Thus, we conclude that λ1 > 0 and γ > 0 because , i = 1, …, k − 1, is strictly positive. Similarly, we could conclude that λj > 0, j = m + 1, …, k, from (24), and max{λi, λi+1} > 0, i = 1, …, m − 1, from (22).

Based on the results that λ1 > 0, λj > 0, j ∈ {m + 1, …, k}, max{λi, λi+1} > 0, i = 2, …, m − 1, and constraints of (18), we have the following equality from the complementary slackness condition in (25) and (26). For i ∈ {2, …, m − 1}, j ∈ {m + 1, …, k},

So we have proved the assertion that a point satisfying the KKT conditions of (18) must be feasible to (19). This completes the proof of Theorem 4.

5. Sequential Allocation

Based on the results from Theorem 4, we can compute the simulation budget allocation rule using (28) for design performances with any arbitrary distributions or using (29) for normally distributed performances if the parameters of the design performance distribution are given. However, no information on the design performance distribution is known before simulation experiments are conducted. To overcome this dilemma, we suggest a heuristic sequential allocation algorithm in order to implement the allocation rule. The algorithm for sequential allocation is summarized in Algorithm 1.

-

Algorithm 1: Sequential allocation algorithm.

-

INPUT: m, k, n, n0, Δ (n − kn0 is a multiple of Δ and n0 ≥ 5)

-

INITIALIZE: Perform n0 simulation replications for all designs.

-

-

WHILE DO

-

(1) Increase the computing budget by Δ

-

(2) Use (28) to determine the new budget allocation rule, that is, .

-

The population distribution parameters are estimated by the sample statistics.

-

(3) Simulate additional for each design i = 1, …, k.

-

END OF WHILE

Define l to be the iteration number and define , i = 1, …, k to be the total number of simulation replications that have been allocated to design i up to iteration l. n is the total number of simulation replications available. Δ is the number of incremental simulation replications for each iteration. n0 is the initial number of simulation runs for each design.

As the simulation continues, design i will be ranked number i for all i ≤ m. The ranking of the top m designs may change from iteration to iteration although it will converge to the true ranking when the total computating budget goes to infinity. When the ranking of the top m designs changes, the budget allocation in the loop will be applied immediately. Therefore, the actual proportion of budget for every system will converge to the optimal proportion when the number of iterations is sufficiently large.

Furthermore, we need to take note of n0, the initial number of replications for every design. n0 cannot be too small because the estimation of the rate function can be poor especially when the variance of the performance is large. On the other hand, if n0 is too large, some designs will be allocated excessively compared with their optimal allocation numbers. When the total budget is very limited, designs that need more replications may suffer from large n0 and this would eventually affect the simulation results. Other than the initial number of replications, the incremental budget Δ is also important in the implementation process. Large Δ results in wasting of budget, while small Δ will lead to expensive computation in the loop.

It is also worthy to note that there will be significant variation in the estimate of the performance value. Denote the empirical cumulant generating function and rate function of system i as and , respectively. In Algorithm 1, we are using to estimate Gi(αi, αi+1) for the optimal allocation rule. Thus, the true optimal allocation is actually estimated by . As argued in [19], the empirical estimation of the optimal allocation is consistent; that is, , for all i = 1, …, k almost surely when n → +∞.

6. Numerical Experiments

In this section, we test the proposed simulation budget allocation rule for ranking the top m designs by comparing it with different allocation rules: equal allocation which simulates each design equally and the OCBA-m procedure [12]. Although OCBA-m only considers the selection of the top m designs and do not aim to identify the ranking of the top m designs, we can use it here for benchmarking purpose. In all the experiments below, the performance of each design is assumed to follow the normal distribution. Therefore, the optimal allocation rule can be obtained by solving (29). The assumption of normal distribution is generally held in simulation experiments since the output is obtained from an average performance or batch means, so that the central limit theorem effect holds.

6.1. Computing Budget Allocation Rules

Equal Allocation. The simulation replications are allocated equally to each design; that is, αi = 1/k, i = 1, …, k. This is the simplest allocation rule and it can serve as a benchmark for other allocation procedures.

Top m Ranking (OCBA-Rm). The simulation budget allocation is derived using (29) and implemented using the sequential allocation algorithm proposed in Section 5. The allocation rule is solved by using the solver “fminimax” in Matlab.

6.2. Numerical Results for Different Allocation Procedures

To compare the performance of the procedures, we carried out numerical experiments for the different allocation procedures discussed above. In comparing the procedures, the effectiveness of the procedures is measured by the probability of correctly ranking the top m designs (P(CRm)) which is estimated by the fraction of the times that the procedure successfully identifies the correct ranking of the top m designs out of 10,000 independent simulation runs.

Each of the procedures simulates each of the k designs for n0 = 20 replications initially as recommended in [1, 12]. The simulation budget is increased by Δ = 40 for each iteration. Table 1 summarizes the mean and variance for the three experiments that will be conducted in this section. In this experiment, the total number of designs is 20, that is, k = 20 and the objective is to identify the ranking of the top 5 designs, that is, m = 5.

| Design | Equal spacing | Equal variance | Increasing spacing decreasing variance | |||

|---|---|---|---|---|---|---|

| Mean | Variance | Mean | Variance | Mean | Variance | |

| I | 2 | 400 | 1 | 100 | 1 | 400 |

| II | 4 | 361 | 2 | 100 | 2 | 361 |

| III | 6 | 324 | 4 | 100 | 4 | 324 |

| IV | 8 | 289 | 7 | 100 | 7 | 289 |

| V | 10 | 256 | 11 | 100 | 11 | 256 |

| VI | 12 | 225 | 16 | 100 | 16 | 225 |

| VII | 14 | 196 | 22 | 100 | 22 | 196 |

| VIII | 16 | 169 | 29 | 100 | 29 | 169 |

| IX | 18 | 144 | 37 | 100 | 37 | 144 |

| X | 20 | 121 | 46 | 100 | 46 | 121 |

| XI | 22 | 100 | 56 | 100 | 56 | 100 |

| XII | 24 | 81 | 67 | 100 | 67 | 81 |

| XIII | 26 | 64 | 79 | 100 | 79 | 64 |

| XIV | 28 | 49 | 92 | 100 | 92 | 49 |

| XV | 30 | 36 | 106 | 100 | 106 | 36 |

| XVI | 32 | 25 | 121 | 100 | 121 | 25 |

| XVII | 34 | 16 | 137 | 100 | 137 | 16 |

| XVIII | 36 | 9 | 154 | 100 | 154 | 9 |

| XIX | 38 | 4 | 172 | 100 | 172 | 4 |

| XX | 40 | 1 | 191 | 100 | 191 | 1 |

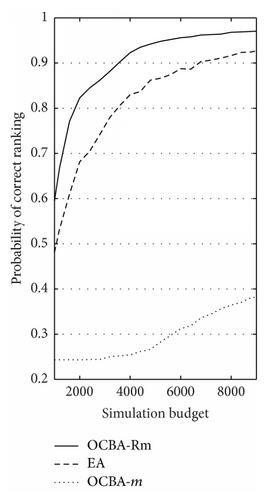

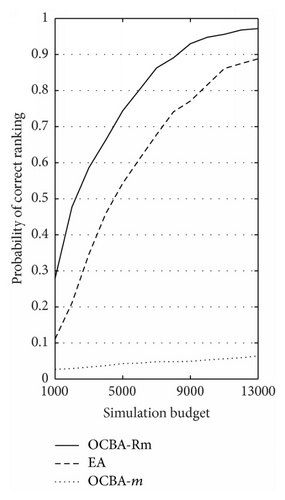

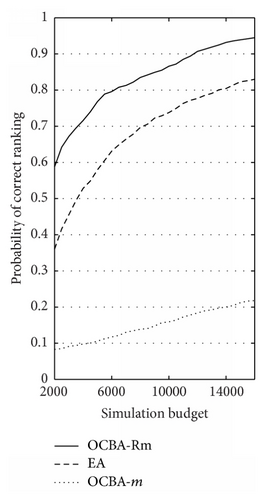

Figure 1 shows the numerical comparison for the three allocation rules, where (a) is for the equal spacing scenario, (b) is for the equal variance scenario, and (c) is for the increasing spacing but decreasing variance scenario.

The experiment results show that the proposed simulation budget allocation rule OCBA-Rm performs the best in all three experiments. It is also interesting to note that OCBA-m, which performs significantly better than EA when the objective is selecting the top m designs, fares much worse than EA in all three experiments when the objective is to identify the ranking of the top m designs. This is because OCBA-m only focuses on distinguishing the set of the top m designs and the set of the nontop m designs without considering the ranking within the top m designs. Based on the numerical results, we can see that it is important to derive the OCBA-Rm when the ranking of the top m alternatives is needed. By using the proposed OCBA-Rm allocation rule, significant number of simulation budgets can be saved compared with EA and OCBA-m.

7. Conclusion

In this paper, we study the problem of simulation budget allocation of ranking the top m designs out of k alternatives. Based on the large deviation theory, we have derived the asymptotically optimal allocation rule to maximize the probability of correctly ranking the top m designs. In addition, a heuristic sequential allocation algorithm is suggested to implement the simulation budget allocation rule. Numerical experiments are conducted to compare the effectiveness of the proposed simulation budget allocation with some existing allocation procedures. The contribution of this paper is twofold. From a ranking and selection perspective, we offer a heuristic for ranking the top m designs out of k alternatives, where our empirical studies show that it can be more efficient than the existing methods. From the computing budget allocation perspective, our heuristic illustrates how the previous OCBA method for identifying the single best design can be modified to identify the ranking of the top m designs. In particular, we derive the asymptotically optimal allocation using large deviation theory, which allows us to remove the assumption that the performance of each design must be normally distributed.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers for the constructive comments which have improved the quality of this paper.