Levenberg-Marquardt Algorithm for Mackey-Glass Chaotic Time Series Prediction

Abstract

For decades, Mackey-Glass chaotic time series prediction has attracted increasing attention. When a multilayer perceptron is used to predict the Mackey-Glass chaotic time series, training amounts to minimizing a loss function. It is well known that the loss decreases rapidly at the beginning of the learning process but very slowly once the parameters approach the minimum. To overcome this problem, we introduce the Levenberg-Marquardt algorithm (LMA). First, we briefly introduce the multilayer perceptron, including its structure and the model approximation method. Second, we introduce the LMA and discuss how to implement it. Finally, an illustrative example demonstrates the prediction efficiency of the LMA. Simulations show that the LMA gives more accurate predictions than the gradient descent method.

1. Introduction

Mackey and Glass suggested that many physiological disorders, called dynamical diseases, were characterized by changes in the qualitative features of their dynamics. Mathematically, these qualitative changes corresponded to bifurcations in the dynamics of the system. Such bifurcations could be induced by changes in the parameters of the system, as might arise from disease or from environmental factors such as drugs, or by changes in the structure of the system [1, 2].

The Mackey-Glass equation has also influenced more rigorous mathematical studies of delay differential equations. Methods for analyzing some of their properties, such as the existence of solutions and the stability of equilibria and periodic solutions, had already been developed [3]. However, whether delay differential equations could exhibit chaotic dynamics was unknown. Subsequent studies of delay differential equations with monotonic feedback have provided significant insight into the conditions needed for oscillation and into the properties of the oscillations [4–6]. For delay differential equations with nonmonotonic feedback, mathematical analysis has proven much more difficult. Nevertheless, rigorous proofs of chaotic dynamics have been obtained for the delay differential equation dx/dt = g(x(t − 1)) for special classes of the feedback function g [7]. Further, although a proof of chaotic dynamics in the Mackey-Glass equation has still not been found, understanding continues to advance for delay differential equations such as (2) that contain both exponential decay and nonmonotonic delayed feedback [8]. The study of this equation remains a topic of vigorous research.
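For reference, the Mackey-Glass equation is usually written in the following form. The first term is the nonmonotonic delayed feedback and the second is the exponential decay. The symbols β, γ, n and τ follow common usage and are an assumption here, because the exact form of (2) is not reproduced in this text.

```latex
\frac{dx(t)}{dt} = \beta\,\frac{x(t-\tau)}{1 + x(t-\tau)^{n}} - \gamma\,x(t), \qquad \beta,\ \gamma,\ n,\ \tau > 0
```

A frequently used setting is β = 0.2, γ = 0.1, n = 10 and τ = 17, for which the solution is chaotic.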

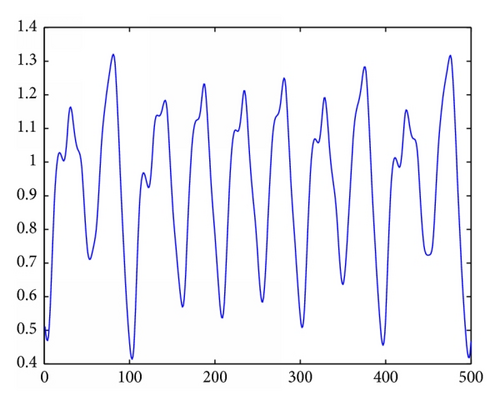

Predicting the Mackey-Glass chaotic time series is a difficult task. The aim is to predict the future state x(t + ΔT) from the current and past values x(t), x(t − 1), …, x(t − n) (Figure 2). Many studies have addressed Mackey-Glass chaotic time series prediction [9–14]. However, most of the reported results are still unsatisfactory in terms of prediction accuracy.

In this paper, we predict the Mackey-Glass chaotic time series with an MLP. To minimize the loss function, we adopt the LMA, which adaptively adjusts its damping during training and thereby converges efficiently.

The rest of the paper is organized as follows. Section 2 describes the multilayer perceptron and introduces the LMA. Section 3 discusses how to implement the LMA when the MLP is used for Mackey-Glass chaotic time series prediction. Section 4 gives a numerical example to demonstrate the prediction efficiency. Section 5 presents the conclusions and discussion.

2. Preliminaries

2.1. Multilayer Perceptrons

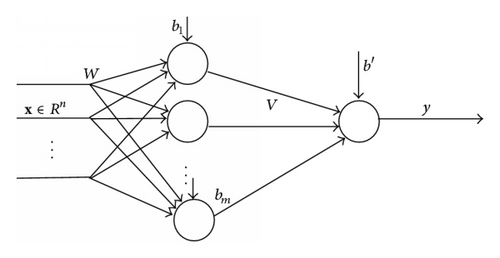

A multilayer perceptron (MLP) is a feedforward artificial neural network model that maps sets of input data onto a set of appropriate outputs. An MLP consists of multiple layers of nodes in a directed graph, with each layer fully connected to the next one. Except for the input nodes, each node is a neuron (or processing element) with a nonlinear activation function. A multilayer perceptron with a single hidden layer is depicted in Figure 1 [15].

In Figure 1, x = [x1, x2, …, xn]^T ∈ R^n is the model input and y is the model output. W = {wij}, i = 1, 2, …, n, j = 1, 2, …, m, where wij is the connection weight from the input xi to the jth hidden unit. V = {vj}, where vj is the connection weight from the jth hidden unit to the output unit. Finally, bj, j = 1, 2, …, m, and b′ are the biases of the hidden units and of the output unit, respectively.
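As a minimal sketch of the forward pass through the network in Figure 1, the block below computes the model output y = Σj vj tanh(Σi wij xi + bj) + b′. It assumes tanh hidden units and a linear output unit, since the text only says the activation is nonlinear. The function name mlp_forward is hypothetical.

```python
import numpy as np


def mlp_forward(x, W, b, v, b_out):
    """Output of the one-hidden-layer MLP of Figure 1 (tanh hidden units assumed).

    x: input of shape (n,) or a batch of shape (N, n); W: (n, m) with W[i, j] = w_ij;
    b: (m,) hidden biases b_j; v: (m,) output weights v_j; b_out: output bias b'.
    """
    h = np.tanh(x @ W + b)       # hidden-unit outputs h_j
    return h @ v + b_out         # linear output unit: y = sum_j v_j h_j + b'
```

Writing the computation with matrix products means the same function handles a single input vector or a whole batch of inputs. The batch form is what a batch-mode error function needs.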

One serious problem in minimizing the mean square error is that the loss decreases rapidly at the beginning of the learning process but very slowly in the region of the minimum [18]. To overcome this problem, we introduce the Levenberg-Marquardt algorithm (LMA) in the next subsection.
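The slowdown near the minimum can be reproduced with a toy example. This is an illustrative assumption and not the MLP loss. The sketch runs plain gradient descent on the ill-conditioned quadratic L(θ) = ½(θ1² + 0.01 θ2²), starting from (1, 1). The function name gd_quadratic is hypothetical.

```python
def gd_quadratic(lr=1.0, steps=100):
    """Plain gradient descent on the toy loss L(θ) = 0.5 * (θ1**2 + 0.01 * θ2**2).

    Returns the loss after each step. The quadratic is an illustrative stand-in,
    not the MLP error function.
    """
    curv = (1.0, 0.01)                 # curvature along each coordinate
    theta = [1.0, 1.0]
    losses = []
    for _ in range(steps):
        # The gradient of 0.5 * c * t**2 is c * t.
        theta = [t - lr * c * t for t, c in zip(theta, curv)]
        losses.append(0.5 * sum(c * t * t for t, c in zip(theta, curv)))
    return losses


losses = gd_quadratic()
```

The first step cuts the loss from 0.505 to about 0.0049. The next 99 steps reduce it by less than another factor of ten. The step size must stay below 2 (the inverse of half the largest curvature) to avoid divergence along θ1, so the error along the flat θ2 direction shrinks by only 1% per step.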

2.2. The Levenberg-Marquardt Algorithm

In mathematics and computing, the Levenberg-Marquardt algorithm (LMA) [18–20], also known as the damped least-squares (DLS) method, is used to solve nonlinear least squares problems. These minimization problems arise especially in least squares curve fitting.

The LMA interpolates between the Gauss-Newton algorithm (GNA) and the gradient descent algorithm (GDA). It is more robust than the GNA: in many cases it finds a solution even when it starts far from the minimum. However, for well-behaved functions and reasonable starting parameters, the LMA tends to be somewhat slower than the GNA.
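The interpolation can be made explicit with the standard damped normal equations. This assumes the loss is L(θ) = ½‖r(θ)‖², where r is the residual vector, J = ∂r/∂θ is its Jacobian, and λ > 0 is the damping factor. This is the textbook form; the paper's own update equation is not reproduced in this section.

```latex
\begin{aligned}
&\left(J^{\mathsf T}J + \lambda I\right)\Delta\theta = -J^{\mathsf T} r(\theta), \qquad \nabla L(\theta) = J^{\mathsf T} r(\theta),\\
&\lambda \to 0:\quad \Delta\theta \to -\left(J^{\mathsf T}J\right)^{-1} J^{\mathsf T} r(\theta) \quad \text{(Gauss-Newton step)},\\
&\lambda \gg \lVert J^{\mathsf T}J \rVert:\quad \Delta\theta \approx -\tfrac{1}{\lambda}\, J^{\mathsf T} r(\theta) = -\tfrac{1}{\lambda}\,\nabla L(\theta) \quad \text{(gradient descent with step size } 1/\lambda\text{)}.
\end{aligned}
```

Marquardt's variant replaces I with diag(JᵀJ) so that the damping adapts to the scale of each parameter.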

The LMA is widely used in real applications to solve model fitting problems. However, like many other fitting algorithms, the LMA finds only a local minimum, which is not necessarily the global minimum.

The LMA is an iterative algorithm in which the parameter vector θ is updated at each step. Typically, the initial parameters are chosen randomly, for example, θi ~ U(−1, 1), i = 1, 2, …, n, where n is the dimension of θ.

During the iteration, if either the length of the computed step Δθ or the reduction of L(θ) achieved by the latest parameter vector θ + Δθ falls below a predefined limit, the iteration stops and the last parameter vector θ is taken as the final solution.
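The iteration described above can be sketched as follows. The sketch assumes the loss is L(θ) = ½‖r(θ)‖², with residual vector r and Jacobian J = ∂r/∂θ. The function name levenberg_marquardt, the λ schedule (divide or multiply by 10), and the tolerances are illustrative assumptions, not the paper's settings. The usage example fits the Rosenbrock test problem, not the MLP, from a start drawn as θi ~ U(−1, 1).

```python
import numpy as np


def levenberg_marquardt(residual, jacobian, theta0, lam=1e-2, factor=10.0,
                        step_tol=1e-10, red_tol=1e-12, max_iter=500):
    """Minimize L(θ) = 0.5 * ||r(θ)||^2 with a basic Levenberg-Marquardt loop.

    residual(θ) returns r with shape (N,); jacobian(θ) returns J = dr/dθ, shape (N, p).
    The λ schedule and tolerances are illustrative assumptions.
    """
    theta = np.asarray(theta0, dtype=float)
    r = residual(theta)
    loss = 0.5 * r @ r
    eye = np.eye(theta.size)
    for _ in range(max_iter):
        J = jacobian(theta)
        grad = J.T @ r                                   # gradient of L: J^T r
        # Damped normal equations: (J^T J + λ I) Δθ = -J^T r
        step = np.linalg.solve(J.T @ J + lam * eye, -grad)
        r_new = residual(theta + step)
        loss_new = 0.5 * r_new @ r_new
        if loss_new < loss:                              # accept the step
            reduction = loss - loss_new
            theta, r, loss = theta + step, r_new, loss_new
            lam /= factor                                # move toward Gauss-Newton
            if np.linalg.norm(step) < step_tol or reduction < red_tol:
                break
        else:                                            # reject the step
            lam *= factor                                # move toward gradient descent
            if np.linalg.norm(step) < step_tol:
                break
    return theta, loss


# Usage: Rosenbrock residuals r(θ) = [10(θ2 - θ1^2), 1 - θ1], minimum at (1, 1).
def rosen_r(t):
    return np.array([10.0 * (t[1] - t[0] ** 2), 1.0 - t[0]])


def rosen_J(t):
    return np.array([[-20.0 * t[0], 10.0], [-1.0, 0.0]])


rng = np.random.default_rng(0)
theta0 = rng.uniform(-1.0, 1.0, size=2)                  # θi ~ U(-1, 1)
theta, loss = levenberg_marquardt(rosen_r, rosen_J, theta0)
```

The reduction test is applied only to accepted steps. A rejected step has a negative "reduction", and testing it would stop the iteration prematurely. The step-length test is applied in both branches, so the loop also ends if λ grows so large that the steps become negligible.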

3. Application of LMA for Mackey-Glass Chaotic Time Series

In this section, we derive the LMA for the case in which the MLP is used for Mackey-Glass chaotic time series prediction. Suppose that we use x(t − ΔT0), x(t − ΔT1), …, x(t − ΔTn−1) to predict the future value x(t + ΔT), where ΔT0 = 0.
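The input-target pairs can be assembled as in the following minimal sketch. It assumes the series is sampled at unit time steps, so that each delay ΔTk and the horizon ΔT are integer index offsets. make_lagged_dataset is a hypothetical helper name.

```python
import numpy as np


def make_lagged_dataset(series, lags, horizon):
    """Return (X, y) with X[k] = [x(t - lag) for lag in lags] and y[k] = x(t + horizon).

    series: 1-D array sampled at unit time steps (assumed);
    lags: the delays ΔT0 = 0, ΔT1, ..., ΔT_{n-1}; horizon: the prediction step ΔT.
    """
    x = np.asarray(series, dtype=float)
    start = max(lags)                    # earliest t for which every delayed input exists
    stop = len(x) - horizon              # t must leave room for the target x(t + horizon)
    ts = np.arange(start, stop)
    X = np.stack([x[ts - lag] for lag in lags], axis=1)
    y = x[ts + horizon]
    return X, y
```

For the setting of Section 4, the call would be make_lagged_dataset(series, lags=[0, 6, 12, 18], horizon=50).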

Since θ = (b1, …, bm, w11, …, wn1, w12, …, wn2, …, w1m, …, wnm, b′, v1, …, vm) ∈ R^((n+1)m+m+1), Ji, the ith row of the Jacobian J, can be obtained directly from (21). Once J is computed, the LMA for MLP-based Mackey-Glass chaotic time series prediction follows.
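The rows Ji can be sketched with NumPy under these assumptions: tanh hidden units, a linear output unit, and the parameter ordering of θ given above. The function returns ∂f(xk; θ)/∂θ. If the residual is defined as the target minus the model output, J is the negative of this matrix. The names unpack, model_output and model_jacobian are hypothetical. The central-difference comparison at the end is only a sanity check.

```python
import numpy as np


def unpack(theta, n, m):
    """Split θ = (b_1..b_m, w_11..w_n1, ..., w_1m..w_nm, b', v_1..v_m)."""
    b = theta[:m]
    W = theta[m:m + n * m].reshape((n, m), order="F")    # W[i, j] = w_ij; column j is w_1j..w_nj
    b_out = theta[m + n * m]
    v = theta[m + n * m + 1:]
    return b, W, b_out, v


def model_output(theta, X, n, m):
    """f(x; θ) = sum_j v_j tanh(sum_i w_ij x_i + b_j) + b' for each row x of X (N, n)."""
    b, W, b_out, v = unpack(theta, n, m)
    return np.tanh(X @ W + b) @ v + b_out


def model_jacobian(theta, X, n, m):
    """Rows ∂f(x_k; θ)/∂θ, shape (N, (n + 1) m + m + 1), in the ordering of θ."""
    b, W, _, v = unpack(theta, n, m)
    H = np.tanh(X @ W + b)                               # hidden outputs h_kj, shape (N, m)
    D = v * (1.0 - H ** 2)                               # ∂f/∂b_j = v_j (1 - h_j^2)
    dW = X[:, :, None] * D[:, None, :]                   # ∂f/∂w_ij = x_i v_j (1 - h_j^2), (N, n, m)
    dW = dW.transpose(0, 2, 1).reshape(len(X), n * m)    # j outer, i inner, matching θ
    ones = np.ones((len(X), 1))                          # ∂f/∂b' = 1
    return np.hstack([D, dW, ones, H])                   # ∂f/∂v_j = h_j


# Sanity check against central finite differences on random data.
rng = np.random.default_rng(1)
n, m = 4, 3
theta = rng.uniform(-1.0, 1.0, size=(n + 1) * m + m + 1)
X = rng.uniform(-1.0, 1.0, size=(5, n))
J = model_jacobian(theta, X, n, m)
eps = 1e-6
J_fd = np.stack([(model_output(theta + eps * e, X, n, m)
                  - model_output(theta - eps * e, X, n, m)) / (2 * eps)
                 for e in np.eye(theta.size)], axis=1)
```

The F-order reshape in unpack and the transpose in model_jacobian both encode the same convention: the input index i varies fastest within each hidden unit j, as in the definition of θ.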

4. Numerical Simulations

Example 1. We conduct an experiment to demonstrate the efficiency of the Levenberg-Marquardt algorithm. We use a chaotic time series generated by the Mackey-Glass delay-difference equation:

Such a series has some short-range time coherence, but long-term prediction is very difficult. The need to predict such a time series arises in detecting arrhythmias in heartbeats.

The network is given no information about the generator of the time series and is asked to predict its future from a few samples of its history. In our example, we train the network to predict the value at time T + ΔT from the inputs at times T, T − 6, T − 12, and T − 18, with ΔT = 50.
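One way to produce such data is sketched below. It assumes a unit-step delay-difference form of the Mackey-Glass equation with commonly used parameters: β = 0.2, γ = 0.1, τ = 17, exponent 10, and a constant initial history of 1.2. It also assumes a washout of 500 steps. These values are assumptions and are not necessarily those of (22). The inputs x(T), x(T − 6), x(T − 12), x(T − 18) and the target x(T + 50) are then split into 3000 training pairs and 500 test pairs.

```python
import numpy as np


def mackey_glass(length, beta=0.2, gamma=0.1, tau=17, power=10, x0=1.2, washout=500):
    """Iterate x(t+1) = x(t) + beta*x(t-tau)/(1 + x(t-tau)**power) - gamma*x(t).

    Unit-step delay-difference form with commonly used parameter values (assumed,
    not taken from the paper). The first `washout` samples are discarded.
    """
    total = length + washout
    x = np.empty(total + tau)
    x[:tau + 1] = x0                                     # constant history up to t = 0
    for t in range(tau, total + tau - 1):
        xd = x[t - tau]                                  # delayed state x(t - tau)
        x[t + 1] = x[t] + beta * xd / (1.0 + xd ** power) - gamma * x[t]
    return x[tau + washout:]


lags, horizon = (0, 6, 12, 18), 50
series = mackey_glass(3000 + 500 + max(lags) + horizon)
ts = np.arange(max(lags), len(series) - horizon)          # usable time indices T
X = np.stack([series[ts - lag] for lag in lags], axis=1)  # x(T), x(T-6), x(T-12), x(T-18)
y = series[ts + horizon]                                  # target x(T + 50)
X_train, y_train = X[:3000], y[:3000]
X_test, y_test = X[3000:3500], y[3000:3500]
```

With these parameters the iterate stays positive and bounded, because each step keeps 90% of the current value and adds a nonnegative feedback term of at most about 0.144.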

In the simulation, 3000 training examples and 500 test examples are generated by (22). We use the following multilayer perceptron for fitting the generated training examples:

Let ; then, we can obtain the following equation according to (21):

The initial values of the parameters are selected randomly:

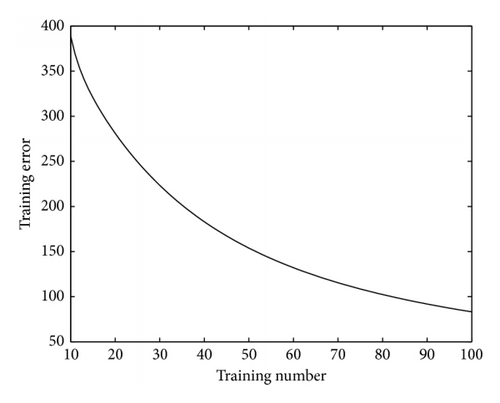

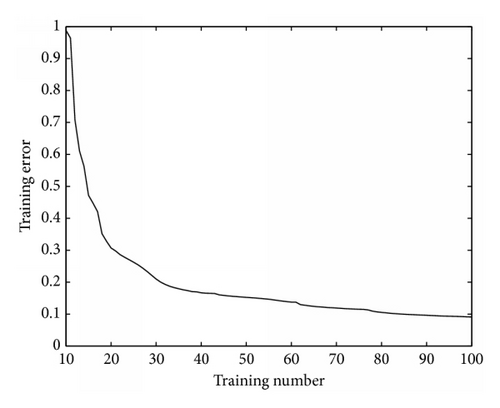

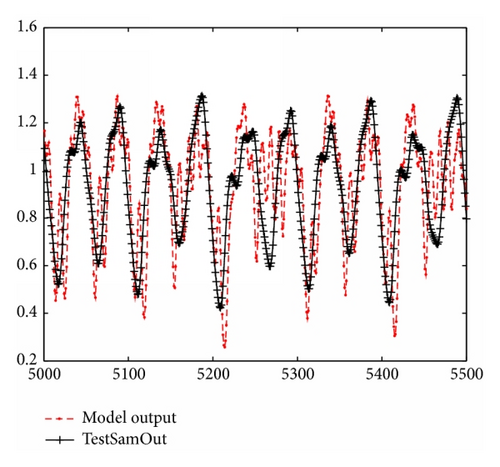

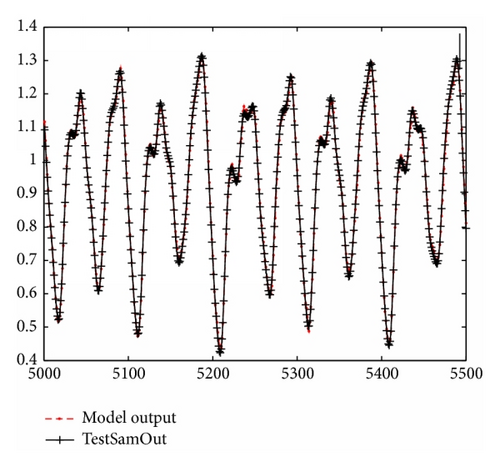

The learning curves of the error function for LMA and GDA are shown in Figures 3 and 4, respectively, and the corresponding fitting results are shown in Figures 5 and 6. The training error of LMA reaches 0.1, whereas the final training error of GDA exceeds 90. Furthermore, the final mean test error of LMA is much smaller than that of GDA, which is 0.2296.

In terms of fitting, LMA performs much better than GDA, as Figures 5 and 6 clearly show.

All of these results suggest that LMA performs very well for Mackey-Glass chaotic time series prediction and can effectively overcome the slow convergence that may arise with GDA.

5. Conclusions and Discussions

In this paper, we discussed the application of the Levenberg-Marquardt algorithm to Mackey-Glass chaotic time series prediction. We used a multilayer perceptron with 20 hidden units to approximate and predict the Mackey-Glass chaotic time series and adopted the Levenberg-Marquardt algorithm to minimize the error function. If L(θ) decreases rapidly, a smaller damping factor λ can be used, bringing the algorithm closer to the Gauss-Newton algorithm. If an iteration gives an insufficient reduction in the residual, λ can be increased, giving a step closer to the gradient descent direction. In this paper, training is carried out in batch mode. Finally, we demonstrated the performance of the LMA: simulations show that the LMA achieves much more accurate predictions than the gradient descent method.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgment

This project is supported by the National Natural Science Foundation of China under Grants 61174076, 11471152, and 61403178.