A Prediction-Correction Dynamic Method for Large-Scale Generalized Eigenvalue Problems

Abstract

This paper gives a new prediction-correction method based on the dynamical system of differential-algebraic equations for the smallest generalized eigenvalue problem. First, the smallest generalized eigenvalue problem is converted into an equivalent-constrained optimization problem. Second, according to the Karush-Kuhn-Tucker conditions of this special equality-constrained problem, a special continuous dynamical system of differential-algebraic equations is obtained. Third, based on the implicit Euler method and an analogous trust-region technique, a prediction-correction method is constructed to follow this system of differential-algebraic equations to compute its steady-state solution. Consequently, the smallest generalized eigenvalue of the original problem is obtained. The local superlinear convergence property for this new algorithm is also established. Finally, in comparison with other methods, some promising numerical experiments are presented.

1. Introduction

In this paper, we consider the smallest generalized eigenvalue problem, which is often encountered in engineering applications such as automatic control, dynamical analysis of structure, electronic structure calculations, and quantum chemistry (see [1, 2] and references therein). For this old and active problem, recently, Gao et al. gave an interesting continuous projected method [3, 4]. This article follows this line and gives a new prediction-correction method based on the dynamical system of differential-algebraic equations (DAEs) for this problem.

First, we convert the smallest eigenvalue problem into an equivalent equality-constrained optimization problem. Second, from the Karush-Kuhn-Tucker conditions of this optimization problem, we obtain a variant of the Rayleigh quotient gradient flow [5, 6], which is formulated by a system of DAEs. Third, applying the implicit Euler method [7], a projected technique [4, 8, 9], and a new time-stepping strategy to that dynamical system of DAEs, we construct a new prediction-correction method to compute a steady-state solution of that special dynamical system. Consequently, we obtain the smallest generalized eigenvalue of (A, B). We also establish the local superlinear convergence property for this new method. Last, in comparison with other methods, some promising numerical experiments are presented. Throughout this article, ∥·∥ denotes the Euclidean vector norm and the corresponding induced matrix norm.

2. Continuous Dynamical Model

Property 1 (Gao et al., 2008 [4]). If x* is an optimal solution of problem (2) and λ* is the corresponding Lagrange multiplier, then they satisfy the second-order optimality condition

3. A Prediction-Correction Method

In this section, in order to obtain a steady-state solution of DAEs (5)-(6), we construct a prediction-correction method to follow its trajectory. According to the discussion of Section 2, we know that a steady-state solution of DAEs (5)-(6) is a generalized eigenvector associated with the smallest generalized eigenvalue of (A, B). Therefore, by following the discrete trajectory of DAEs (5)-(6), we can compute an approximate eigenvector associated with the smallest generalized eigenvalue and consequently obtain the smallest generalized eigenvalue.

Note that we mainly consider the steady state of the system of DAEs (5)-(6) and do not care about an accurate solution at its transient-state phase. In order to avoid consuming unnecessary computing time, we adopt the first-order implicit Euler method to follow its trajectory. The time steps of the implicit Euler method are not restricted by the absolute stability property for the linear test equation dx/dt = −μx, where μ > 0 [7], and consequently the implicit Euler method can take large steps at the steady-state phase so that the iteration sequence {xk} converges rapidly to a stationary point x* of the system of DAEs (5)-(6).

According to the previous discussion, we give the following discrete dynamical method for the smallest generalized eigenvalue of (A, B).

Algorithm 1. Prediction-correction method for the smallest generalized eigenvalue.

Step 1. Initialize the parameters. Specify a tolerated error TOL and an initial point x0 to satisfy

Step 2. Repeat for k = 0,1, 2, … until rk = ∥Axk − λkBxk∥ ≤ TOL:

- (a)

solve (13) to obtain the predicted point ;

- (b)

evaluate (17) to obtain the corrected point xk+1;

- (c)

compute (21) to update the Lagrange multiplier λk+1;

- (d)

compute the residual

() - (e)

update the time step Δtk+1 according to the time-stepping scheme (22)-(23);

- (f)

accept the trial vector. If ρk < η2, let xk = xk+1, λk = λk+1 and rk = rk+1;

- (g)

try again starting at (a) with k ← k + 1.

4. Local Convergence Analysis

The sequence (xk, λk) generated by Algorithm 1 follows the continuous trajectory (5)-(6). Adopting the similar estimation technique in [15, 16], we obtain that the local truncation error of xk is O(Δtk). According to the standard analysis of numerical ordinary differential equations [7, 11], we know that xk is close to x(tk) when Δtk is small enough in the finite time interval [0, T]. Therefore, we only need to estimate the local convergence rate of xk in the steady-state phase.

For the convenience of analysis, we decompose matrix B as B = LTL and denote C = L−TAL−1. Then, the smallest generalized eigenvalue λ* of (A, B) and associated with the eigenvector x* is equivalent to the smallest eigenvalue of symmetric matrix C and associated with the eigenvector y* = Lx*, respectively. Therefore, we only need to consider the convergence property of (yk, λk), where yk = Lxk. xk is generated by Algorithm 1.

According to the previous discussion, we give the following local convergence property for Algorithm 1.

Theorem 2. Assume that the sequence (xk, λk) is generated by Algorithm 1. Then, the sequence of error angles ϕk tends to zero superlinearly.

Proof. From (21) and Cy* = λ*y*, we have

Consequently, we obtain

We can verify that there exists a positive constant εt such that all the time steps Δtk are bounded below by Δtmin ; that is, Δtk ≥ Δtmin . Actually, from (13), we know that will be close to ∥xk∥, when Δtk is small enough. Consequently, for small enough Δtk, we have

On the other hand, from (13) and (27), we obtain

Since uk is orthogonal to y* and ∥uk∥ = 1, uk can be decomposed as uk = α2z2 + ⋯+αnzn, where . Let μi (i = 1,2, …, n) be the eigenvalues of C = L−TAL−1 in ascending order. Thus, from the spectral decomposition of C, for large enough k, we obtain

Combining (33) and (39), we know that ϕk converges linearly to zero; namely, yk converges linearly to y*, when we choose the initial k such that (yk, λk) is close enough (y*, λ*).

Since (yk, λk) converges linearly to (y*, λ*), according to the time-stepping strategy (23), we obtain that the time step Δtk will tend to infinity. Consequently, from inequality (39), we know that ϕk superlinearly converges to 0.

Remark 3. From (28) and inequality (39), we have

Remark 4. The convergence rate of the Rayleigh quotient iteration is cubic; however, its iterate point sequence {xk} is not guaranteed to converge to the smallest generalized eigenvalue of (A, B) [17]. The convergence rate of Algorithm 1 is less than the convergence rate of the Rayleigh quotient iteration, but the iterate point sequence {xk} of Algorithm 1 follows the trajectory of the special dynamical system of DAEs (5)-(6), and consequently it converges to a stationary point x* of the system of DAEs (5)-(6), that is, one of generalized eigenvectors associated with the smallest generalized eigenvalue λ* of (A, B).

5. Numerical Experiments

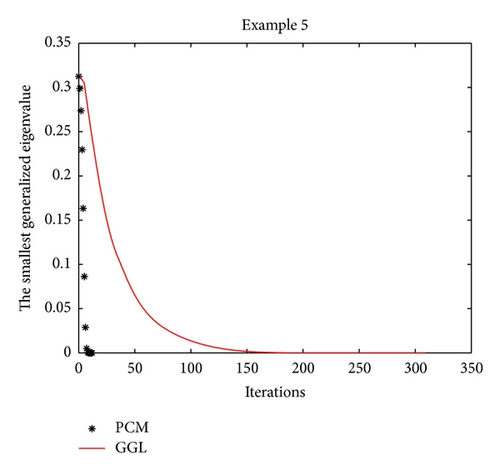

Example 5 (see [4], [21].)This test problem has four generalized eigenvalues. The first three generalized eigenvalues are 0, 0, and 0, and the other is 2. The stiffness and mass matrices are given as follows:

It is not difficult to verify that matrix B is positive definite. We choose an initial point x0 = [0.2,0.6, −0.8,0.6] T.

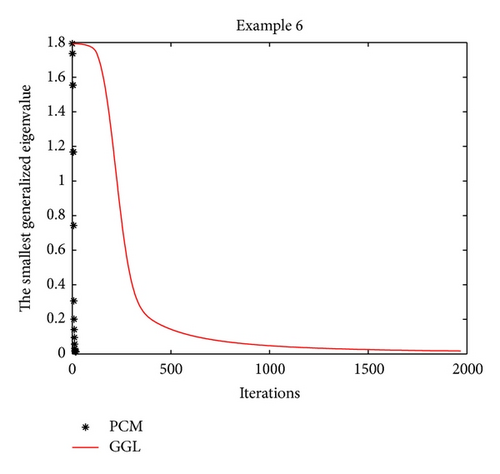

Example 6 (see [4], [21].)The stiffness and mass matrices are given as follows:

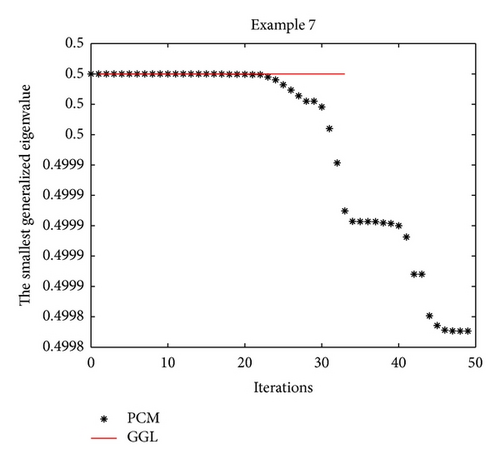

Example 7 (see [22].)This test problem is from fluid flow generalized eigenvalues (see the Bcsstruc1 set in [22]).

Consider the following

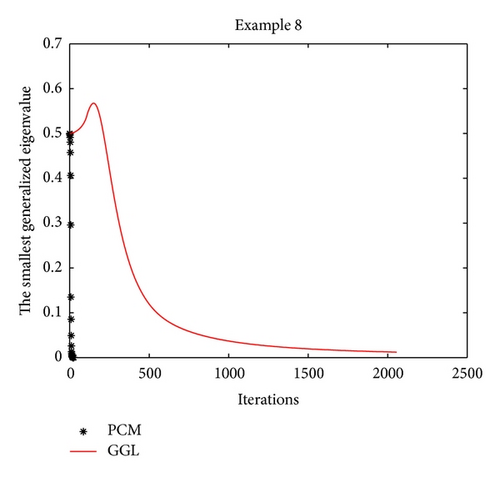

Example 8. In order to test the performance of these two methods for large-scale problems, we construct a large-sparse matrix A as follows:

- (1)

let A1 = sparse(diag (ones(n, 1)));

- (2)

let A2 = sparse(diag (ones(n − 1,1), 1));

- (3)

let A3 = sparse(diag (ones(n − 1,1), −1));

- (4)

let A = 2A1 + A2 + A3;

- (5)

let B = 4A1 + A2 + A3.

Here, sparse.m, diag.m, and ones.m are the Matlab functions, and n is the dimension of A. In our numerical experiments, we set n = 1000 for this test problem.

Numerical results for these four test problems are reported in Table 1. Iter and CPU designate the number of iterations and the computed time (given in seconds), respectively. From the computed results for Examples 5 and 8 in Table 1, we see that the proposed method (the PCM method) manages to find a smaller minimum eigenvalue than the compared method (the GGL method). Furthermore, the computed time of the PCM method is less than the GGL method and the JDQZ method for these test problems. For Example 5, the EIGS method fails since it gives the message: “(A-sigma*B) is singular. The shift is an eigenvalue. Try to use some other shift please,” when we execute it.

In order to record the convergence history, we draw the iteration trajectory of the smallest eigenvalue computed by the PCM method and by the GGL method for every test problem in Figures 1, 2, 3, and 4. From those figures, we find that Algorithm 1 (the PCM method) achieves the steady state of the dynamical system faster than the GGL method.

- (I)

η1 = 0.25, η2 = 0.75;

- (II)

η1 = 0.1, η2 = 0.9;

- (III)

η1 = 0.3, η2 = 0.7;

- (IV)

η1 = 0.4, η2 = 0.8.

The numerical results are reported in Table 2. From Table 2, we find that Algorithm 1 is not sensitive for different parameters η1 around 0.25 and η2 around 0.75. Thus, we set the default parameters η1 = 0.25 and η2 = 0.75 in Algorithm 1.

6. Conclusions

This paper discusses the connection between the smallest generalized eigenvalue problem and the continuous dynamical system of DAEs. The trajectory of this special dynamical system is followed by a new prediction-correction method (Algorithm 1), which is derived from the implicit Euler formula and an analogous trust-region technique. Consequently, an equilibrium point x* of this continuous dynamical system is obtained. This equilibrium point x* is also a generalized eigenvector associated with the smallest generalized eigenvalue. The local superlinear property for the new prediction-correction method is presented. Numerical experiments indicate that Algorithm 1 is promising for the smallest generalized eigenvalue problem. Another interesting issue is whether or not the second-order, pseudo-transient method [23] obtains the superior numerical property for this special dynamical system of DAEs (5)-(6). We would like to consider this issue in our future works.

Acknowledgments

Xin-long Luo is grateful to Proffessor Li-Zhi Liao for fruitful discussions when he visited Hong Kong Baptist University in winter, 2011. This work was supported in part by Grant 2009CB320401 from the National Basic Research Program of China, Grant YBWL2011085 from Huawei Technologies Co., Ltd., and Grant YJCB2011003HI from the Innovation Research Program of Huawei Technologies Co., Ltd., Grant BUPT2009RC0118 from the Fundamental Research Funds for the Central Universities.