Robust Almost Periodic Dynamics for Interval Neural Networks with Mixed Time-Varying Delays and Discontinuous Activation Functions

Abstract

The robust almost periodic dynamical behavior is investigated for interval neural networks with mixed time-varying delays and discontinuous activation functions. Firstly, based on the definition of Filippov solutions for differential equations with discontinuous right-hand sides and the theory of differential inclusions, the existence and asymptotic almost periodicity of the solution of the interval network system are proved. Secondly, by constructing an appropriate generalized Lyapunov functional and employing linear matrix inequality (LMI) techniques, a delay-dependent criterion is derived, in terms of LMIs, that guarantees the existence, uniqueness, and global robust exponential stability of the almost periodic solution. Moreover, as special cases, the obtained results can be used to check the global robust exponential stability of a unique periodic solution/equilibrium for discontinuous interval neural networks with mixed time-varying delays and periodic/constant external inputs. Finally, an illustrative example is given to demonstrate the validity of the theoretical results.

1. Introduction

In the past few decades, there has been increasing interest in different classes of neural networks, such as Hopfield, cellular, Cohen-Grossberg, and bidirectional associative memory (BAM) neural networks, owing to their potential applications in classification, signal and image processing, parallel computing, associative memories, optimization, and cryptography. In the design of practical neural networks, the qualitative analysis of network dynamics plays an important role; for example, to solve problems of optimization, neural control, and signal processing, neural networks have to be designed so that, for a given external input, they exhibit only one globally asymptotically/exponentially stable equilibrium point. Hence, exploring the global stability of neural networks is of primary importance.

In recent years, the global stability of neural networks with discontinuous activations has received extensive attention under the Filippov framework; see, for example, [1–29] and the references therein. In [1], Forti and Nistri first dealt with the global asymptotic stability (GAS) and global convergence in finite time of a unique equilibrium point for neural networks modeled by differential equations with discontinuous right-hand sides; by using a Lyapunov diagonally stable (LDS) matrix and constructing a suitable Lyapunov function, they derived several stability conditions. In [2, 3], by applying a generalized Lyapunov approach and M-matrices, Forti et al. discussed the global exponential stability (GES) of neural networks with discontinuous or non-Lipschitz activation functions. Arguing as in [1], Lu and Chen [4] dealt with the GES and GAS of Cohen-Grossberg neural networks with discontinuous activation functions. In [5–11], by using differential inclusions and the Lyapunov functional approach, a series of results were obtained on the global stability of the unique equilibrium point of neural networks with a single constant time delay and discontinuous activations. In [12], under the framework of Filippov solutions and by using a matrix measure approach, Liu et al. investigated the global dissipativity and quasi-synchronization of time-varying delayed neural networks with discontinuous activations and parameter mismatches. In [13], using a method similar to that of [12], Liu et al. discussed the quasi-synchronization control of switched complex networks.

It is well known that an equilibrium point can be regarded as a special case of a periodic solution of a neural system, with arbitrary period or zero amplitude. Hence, studying periodic solutions yields more general results than studying equilibrium points. Recently, alongside the global stability of equilibrium points of neural networks with discontinuous activation functions, much attention has been paid to the stability of periodic solutions for various neural network systems with discontinuous activations (see [15–29]). Following Forti and Nistri, Chen et al. [15] considered the global convergence in finite time toward a unique periodic solution for Hopfield neural networks with discontinuous activations. In [16, 17], the authors explored the periodic dynamical behavior of neural networks with time-varying delays and discontinuous activation functions and proposed conditions ensuring the existence and GES of the unique periodic solution. In [17–23], under the Filippov inclusion framework, by using the Leray-Schauder alternative theorem and the Lyapunov approach, the authors presented conditions for the existence and GES or GAS of the unique periodic solution for Hopfield or BAM neural networks with discontinuous activation functions. In [24], taking discontinuous activations as an example, Cheng et al. established the existence of anti-periodic solutions of discontinuous neural networks. In [25, 26], Wu et al. discussed the existence and GES of the unique periodic solution for neural networks with discontinuous activation functions under impulsive control. In [28, 29], under the framework of Filippov solutions and by using the Lyapunov approach and H-matrices, the authors presented stability results for the periodic solution of delayed Cohen-Grossberg neural networks with a single constant time delay and discontinuous activation functions.

It should be pointed out that the results reported in [1–29] are concerned with the stability analysis of equilibrium points or periodic solutions and neglect almost periodicity in neural networks with discontinuous activation functions. However, almost periodicity is one of the basic properties of dynamical neural systems: almost periodic trajectories appear to retrace their paths through phase space, but not exactly. Meanwhile, almost periodic functions, which possess a more complex structure, can be regarded as a generalization of periodic functions. In practice, as shown in [30, 31], almost periodic phenomena are more common than periodic ones, and almost periodic oscillatory behavior agrees better with reality. Hence, exploring the global stability of almost periodic solutions of dynamical neural systems is of primary importance. Very recently, under the framework of the theory of Filippov differential inclusions, Allegretto et al. [30] proved the common asymptotic behavior of almost periodic solutions for discontinuous, delayed, and impulsive neural networks. In [31, 32], Lu and Chen and Qin et al. discussed the existence and uniqueness of the almost periodic solution (as well as its global exponential stability) of delayed neural networks with almost periodic coefficients and discontinuous activations. In [33], Wang and Huang studied the almost periodicity of a class of delayed Cohen-Grossberg neural networks with discontinuous activations. It should be noted that the network models explored in [30–33] are discontinuous neural networks with a single constant time delay, and their stability conditions were derived by using Lyapunov diagonally stable matrices or M-matrices. Compared with stability conditions expressed in terms of LMIs, such conditions are generally more conservative.

In hardware implementations of neural networks, unavoidable factors such as modeling errors, external perturbations, and parameter fluctuations mean that the network model inevitably involves uncertainties, such as perturbations and component variations, which may destroy the stability of the network. Therefore, it is of great importance to study the global robust stability of neural networks with time-varying delays. Generally speaking, two kinds of parameter uncertainty are frequently considered at present: interval uncertainty and norm-bounded uncertainty. In [34, 35], based on Lyapunov stability theory and matrix inequality techniques, the global robust stability of a unique equilibrium point was discussed for neural networks with norm-bounded uncertainties and discontinuous neuron activations. In [36], Guo and Huang analyzed the global robust stability of interval neural networks with discontinuous activations. In [37], Liu and Cao discussed the robust state estimation of time-varying delayed neural networks with discontinuous activation functions via differential inclusions and established criteria guaranteeing the existence of a robust state estimator.

It should be noted that almost all of the results in [34–36] concern the robust stability of equilibrium points for neural networks with parameter uncertainty and discontinuous neuron activations. Moreover, most of the above-mentioned results deal only with discrete time delays. Forti et al. pointed out that it would be interesting to investigate discontinuous neural networks with more general delays, such as time-varying or distributed ones. For example, in electronic implementations of analog neural networks, the delays between neurons are usually time varying and sometimes vary violently with time, due to the finite switching speed of amplifiers and faults in the electrical circuit. This motivates us to consider more general types of delays, such as discrete time-varying and distributed ones, which are in general more complex and therefore more difficult to deal with. To the best of our knowledge, few researchers have so far dealt with the global robust stability of almost periodic solutions of discontinuous neural networks with mixed time-varying delays, which motivates the work of this paper.

In this paper, our aim is to study the delay-dependent robust exponential stability of the almost periodic solution of interval neural networks with mixed time-varying delays and discontinuous activation functions. Under the framework of Filippov differential inclusions, by applying nonsmooth Lyapunov stability theory and the efficient LMI approach, a new delay-dependent criterion is presented in terms of LMIs to ensure the existence and global robust exponential stability of the almost periodic solution. Moreover, the obtained result is applied to prove the existence and robust stability of the periodic solution (or equilibrium point) of neural networks with mixed time-varying delays and discontinuous activations.

For convenience, some notation is introduced as follows. ℝ denotes the set of real numbers, ℝn denotes the n-dimensional Euclidean space, and ℝm×n denotes the set of all m × n real matrices. For any matrix A, A > 0 (A < 0) means that A is positive definite (negative definite). A−1 denotes the inverse of A, and AT denotes the transpose of A. λmax(A) and λmin(A) denote the maximum and minimum eigenvalues of A, respectively. E denotes the identity matrix of compatible dimensions. The symbol “⋆” denotes the transposed elements in the symmetric positions of a symmetric matrix. Given the vectors , , , . ∥A∥ denotes the 2-norm of A; that is, $\|A\| = \sqrt{\lambda(A^{T}A)}$, where λ(ATA) denotes the spectral radius of ATA. For r > 0, C([−r, 0]; ℝn) denotes the family of continuous functions φ from [−r, 0] to ℝn with the norm ∥φ∥ = sup−r≤s≤0 |φ(s)|. ẋ(t) denotes the derivative of x(t).
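As a quick numerical illustration of the definition of ∥A∥ (not part of the original analysis), the Python sketch below computes $\sqrt{\lambda_{\max}(A^{T}A)}$ with NumPy and compares it with `numpy.linalg.norm(A, 2)`, which returns the largest singular value of A. The test matrix is arbitrary.

```python
import numpy as np

def two_norm_via_eigs(A: np.ndarray) -> float:
    """Return sqrt(lambda_max(A^T A)), i.e. the 2-norm of A."""
    # A^T A is symmetric positive semidefinite, so eigvalsh returns real
    # eigenvalues in ascending order; the last one is the spectral radius.
    eigenvalues = np.linalg.eigvalsh(A.T @ A)
    return float(np.sqrt(eigenvalues[-1]))

# Arbitrary (non-square) test matrix, not taken from the paper.
A = np.array([[1.0, 2.0],
              [0.0, 3.0],
              [-1.0, 0.5]])
print(two_norm_via_eigs(A), np.linalg.norm(A, 2))  # the two values agree
```

Working with ATA rather than A itself is what makes the definition valid for non-square matrices.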

Given a set C ⊂ ℝn, K[C] denotes the closure of the convex hull of C; Pkc(C) denotes the collection of all nonempty, closed, and convex subsets of C.

Let Y, Z be Hausdorff topological spaces and G(·) : Y ↪ 2^Z ∖ {∅}. We say that the set-valued map G(·) is upper semicontinuous if, for every nonempty closed subset C of Z, G−1(C) = {y ∈ Y : G(y) ⋂ C ≠ ∅} is closed in Y.

The set-valued map G(·) is said to have a closed (convex, compact) image if, for each x ∈ Y, G(x) is closed (convex, compact).

The rest of this paper is organized as follows. In Section 2, the model formulation and some preliminaries are given. In Section 3, the existence and asymptotic almost periodic behavior of Filippov solutions are analyzed, the existence of the almost periodic solution is proved, and its global robust exponential stability is established through a delay-dependent criterion in terms of LMIs. In Section 4, a numerical example is presented to demonstrate the validity of the proposed results. Some conclusions are drawn in Section 5.

2. Model Description and Preliminaries

(A1) (1) gi, i = 1, …, n, is piecewise continuous; that is, gi is continuous on ℝ except on a countable set of jump discontinuity points, and every compact subset of ℝ contains only a finite number of jump discontinuity points of gi.

(2) gi, i = 1, 2, …, n, is nondecreasing.

Under assumption (A1), g(x) is undefined at the points where it is discontinuous, and $K[g(x)] = (K[g_1(x_1)], \dots, K[g_n(x_n)])^{T}$, where $K[g_i(x_i)] = [g_i(x_i^{-}), g_i(x_i^{+})]$, i = 1, …, n. System (3) is a differential equation with a discontinuous right-hand side. For system (3), we adopt the following definition of the solution in the sense of Filippov [39].
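For a nondecreasing, piecewise continuous scalar activation, the Filippov set at a point reduces to the interval between the one-sided limits, $[g_i(x_i^{-}), g_i(x_i^{+})]$, and it degenerates to a single point wherever gi is continuous. The sketch below approximates this numerically. The activation `g` (the identity plus a unit jump at the origin) is a hypothetical example chosen to satisfy (A1), and the one-sided limits are approximated by evaluating at x ∓ h rather than computed exactly.

```python
import numpy as np

def g(s):
    # Hypothetical activation satisfying (A1): nondecreasing, unbounded,
    # continuous except for a jump of size 1 at s = 0.
    return s + 0.5 * np.sign(s)

def filippov_interval(func, x, h=1e-9):
    """Approximate K[func](x) = [func(x^-), func(x^+)] for nondecreasing scalar func.

    One-sided limits are approximated by evaluating func at x - h and x + h,
    so the result is only accurate up to the variation of func on (x-h, x+h).
    """
    return float(func(x - h)), float(func(x + h))

print(filippov_interval(g, 0.0))  # close to (-0.5, 0.5): a genuine interval at the jump
print(filippov_interval(g, 2.0))  # close to (2.5, 2.5): a single point where g is continuous
```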

Definition 1. A function x : [−ι, T) → ℝn, T ∈ (0, +∞], is a solution of system (3) on [−ι, T) if

(1) x(t) is continuous on [−ι, T) and absolutely continuous on [0, T);

(2) x(t) satisfies

()

where ι = max {τM, σM}.

The function γ(t) in (8) is called an output solution associated with the state variable x(t) and represents the vector of neural network outputs.

Definition 2. Let ϕ : [−ι, 0] → ℝn be any continuous function and ψ : [−ι, 0] → ℝn any measurable selection such that ψ(s) ∈ K[g(ϕ(s))] for a.a. s ∈ [−ι, 0]. An absolutely continuous function x(t) = x(t, ϕ, ψ) associated with a measurable function γ(t) is said to be a solution of the initial value problem (IVP) for system (3) on [0, T) (T might be ∞) with initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0], if

Definition 3 (see [41]). A continuous function x(t) : ℝ → ℝn is said to be almost periodic on ℝ if, for any scalar ε > 0, there exists a scalar l = l(ε) > 0 such that every interval of length l contains a number ω = ω(ε) satisfying ∥x(t + ω) − x(t)∥ < ε for all t ∈ ℝ.
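To make Definition 3 concrete, the following Python sketch searches for ε-translation numbers ω of the test signal f(t) = sin t + sin(√2 t), which is almost periodic but not periodic. It is only a finite-grid approximation under stated assumptions: the supremum over t ∈ ℝ is replaced by a maximum over a finite grid (which can only underestimate it), and the candidates ω are restricted to a grid on [0, 200]. The largest gap between the translation numbers found gives an empirical estimate of the inclusion length l(ε).

```python
import numpy as np

def f(t):
    # Quasi-periodic test signal: almost periodic but not periodic,
    # because the frequencies 1 and sqrt(2) are rationally independent.
    return np.sin(t) + np.sin(np.sqrt(2.0) * t)

def translation_numbers(func, eps, omega_grid, t_grid):
    """Grid candidates omega with max_t |func(t + omega) - func(t)| < eps.

    The max over a finite t_grid can only underestimate the true supremum.
    """
    base = func(t_grid)
    return np.array([w for w in omega_grid
                     if np.max(np.abs(func(t_grid + w) - base)) < eps])

def max_gap(numbers):
    """Largest distance between consecutive translation numbers found."""
    return float(np.max(np.diff(numbers))) if numbers.size > 1 else np.inf

t_grid = np.linspace(0.0, 100.0, 2001)
omega_grid = np.linspace(0.0, 200.0, 20001)
nums = translation_numbers(f, 0.5, omega_grid, t_grid)
print(nums.size, max_gap(nums))  # empirical estimate of l(0.5) on [0, 200]
```

The translation numbers cluster near values ω where both ω and √2 ω are close to multiples of 2π; no single period works for every ε, which is exactly what distinguishes almost periodicity from periodicity.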

Definition 4. The almost periodic solution x*(t) of interval neural network (3) is said to be globally robustly exponentially stable if, for any D ∈ 𝔻, A ∈ 𝔸, B ∈ 𝔹, and C ∈ ℂ, there exist scalars α > 0 and δ > 0 such that

Lemma 5 (chain rule [38]). If V(x) : ℝn → ℝ is C-regular and x(t) : [0, +∞) → ℝn is absolutely continuous on any compact interval of [0, +∞), then x(t) and V(x(t)) : [0, +∞) → ℝ are differentiable for a.a. t ∈ [0, +∞), and

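Lemma 6 (Jensen’s inequality [17]). For any constant matrix A > 0, any scalars a and b with b > a, and a vector function x(t) : [a, b] → ℝn such that the integrals concerned are well defined, the following inequality holds: $\left(\int_a^b x(s)\,ds\right)^{T} A \left(\int_a^b x(s)\,ds\right) \le (b-a)\int_a^b x^{T}(s)\, A\, x(s)\,ds$.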
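Jensen's integral inequality can be checked numerically. The Python sketch below (the matrix A and the functions x(s) are arbitrary test data) approximates both integrals with the trapezoidal rule. Because the trapezoidal weights are positive and sum to b − a, the discretized inequality itself holds by convexity of the quadratic form, so the check is not an artifact of quadrature error; equality holds for a constant x(s).

```python
import numpy as np

def trapezoid_weights(a, b, m):
    """Positive trapezoidal-rule weights on m equispaced nodes; they sum to b - a."""
    h = (b - a) / (m - 1)
    w = np.full(m, h)
    w[0] = w[-1] = h / 2.0
    return w

def jensen_sides(A, x_of_s, a, b, m=2001):
    """Return (lhs, rhs) of (int x)^T A (int x) <= (b - a) * int x^T A x on [a, b]."""
    s = np.linspace(a, b, m)
    w = trapezoid_weights(a, b, m)
    X = x_of_s(s)                                # shape (m, n): row i is x(s_i)
    int_x = w @ X                                # approximates int_a^b x(s) ds
    quad = np.einsum("ij,jk,ik->i", X, A, X)     # x(s_i)^T A x(s_i) for each node
    lhs = float(int_x @ A @ int_x)
    rhs = float((b - a) * (w @ quad))
    return lhs, rhs

rng = np.random.default_rng(0)
M = rng.standard_normal((3, 3))
A = M @ M.T + np.eye(3)                          # arbitrary symmetric positive definite matrix

def x_of_s(s):
    return np.stack([np.sin(s), np.cos(2.0 * s), s ** 2], axis=1)

def x_const(s):
    return np.tile([1.0, -2.0, 0.5], (s.size, 1))

lhs, rhs = jensen_sides(A, x_of_s, -1.0, 0.5)    # strict inequality expected
lhs_c, rhs_c = jensen_sides(A, x_const, -1.0, 0.5)  # equality for constant x(s)
print(lhs, rhs, lhs_c, rhs_c)
```

In delay-dependent stability proofs, this inequality is typically used to bound integral terms such as $\int_{t-\sigma(t)}^{t}$ of a quadratic form produced by differentiating the distributed-delay part of a Lyapunov-Krasovskii functional.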

Lemma 7 (see [42]). Given any real matrices Q1, Q2, Q3 of appropriate dimensions and a scalar ε > 0, if , then the following inequality holds:
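The hypothesis and conclusion of Lemma 7 were lost in extraction. A widely used inequality of this type states that, for real matrices Q1, Q2, a symmetric matrix Q3 > 0, and ε > 0, $Q_1^{T}Q_2 + Q_2^{T}Q_1 \le \varepsilon Q_1^{T}Q_3Q_1 + \varepsilon^{-1}Q_2^{T}Q_3^{-1}Q_2$, because the difference of the two sides equals NTN with $N = \varepsilon^{1/2}Q_3^{1/2}Q_1 - \varepsilon^{-1/2}Q_3^{-1/2}Q_2$. Assuming (unconfirmed) that Lemma 7 has this form, the Python sketch below checks numerically that the difference is positive semidefinite for random test matrices.

```python
import numpy as np

def young_gap(Q1, Q2, Q3, eps):
    """eps*Q1^T Q3 Q1 + eps^{-1} Q2^T Q3^{-1} Q2 - (Q1^T Q2 + Q2^T Q1), symmetrized.

    Assumes Q3 is symmetric positive definite (the assumed hypothesis of Lemma 7).
    """
    lhs = Q1.T @ Q2 + Q2.T @ Q1
    rhs = eps * Q1.T @ Q3 @ Q1 + Q2.T @ np.linalg.solve(Q3, Q2) / eps
    gap = rhs - lhs
    return (gap + gap.T) / 2.0   # remove round-off asymmetry before eigvalsh

rng = np.random.default_rng(1)
Q1, Q2 = rng.standard_normal((4, 3)), rng.standard_normal((4, 3))
M = rng.standard_normal((4, 4))
Q3 = M @ M.T + np.eye(4)          # symmetric positive definite test matrix
min_eigs = [np.linalg.eigvalsh(young_gap(Q1, Q2, Q3, e)).min() for e in (0.1, 1.0, 10.0)]
print(min_eigs)                   # all nonnegative up to round-off
```

The free scalar ε is what makes this bound useful inside LMIs: it trades the weight between the two quadratic terms and can itself be treated as a decision variable.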

Lemma 8 (see [35]). Let U, V, and W be real matrices of appropriate dimensions with M satisfying M = MT; then
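The statement of Lemma 8 is also incomplete. Since the proof of Theorem 11 invokes it to replace Θ1 < 0 by an equivalent condition, a Schur complement lemma is a plausible reading, but this is an assumption. The standard Schur complement states that a symmetric block matrix $S = \begin{pmatrix} S_{11} & S_{12} \\ S_{12}^{T} & S_{22} \end{pmatrix}$ satisfies S < 0 if and only if S22 < 0 and $S_{11} - S_{12}S_{22}^{-1}S_{12}^{T} < 0$. The Python sketch below checks this equivalence on random instances (all matrices are test data).

```python
import numpy as np

def is_negative_definite(M: np.ndarray) -> bool:
    # Symmetrize to remove round-off asymmetry, then test the largest eigenvalue.
    return bool(np.linalg.eigvalsh((M + M.T) / 2.0).max() < 0.0)

def schur_equivalence(S11, S12, S22):
    """Return (S < 0, S22 < 0 and S11 - S12 S22^{-1} S12^T < 0)."""
    S = np.block([[S11, S12], [S12.T, S22]])
    full = is_negative_definite(S)
    # Short-circuit: the Schur complement is only formed when S22 < 0.
    reduced = is_negative_definite(S22) and is_negative_definite(
        S11 - S12 @ np.linalg.solve(S22, S12.T))
    return full, reduced

rng = np.random.default_rng(2)
results = []
for _ in range(200):
    M1, M2 = rng.standard_normal((2, 2)), rng.standard_normal((3, 3))
    S11 = -(M1 @ M1.T) - 0.1 * np.eye(2)
    S22 = -(M2 @ M2.T) - 0.1 * np.eye(3)
    S12 = rng.uniform(0.0, 2.0) * rng.standard_normal((2, 3))
    results.append(schur_equivalence(S11, S12, S22))
print(sum(f for f, _ in results), "of", len(results), "instances are negative definite")
```

In LMI-based proofs this step is what turns a condition that is nonlinear in the decision matrices (because of the inverse) into a larger matrix inequality that is linear in them.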

Lemma 9 (see [36]). For any , , one has

Lemma 10 (see [43]). For a sequence {fn} ⊂ L(E), if there exists F(x) ∈ L(E) such that |fn(x)| < F(x) and lim n→∞ fn(x) = f(x) for a.e. x ∈ E, then f ∈ L(E) and $\lim_{n\to\infty}\int_E f_n(x)\,dx = \int_E f(x)\,dx$.

(A2) Ii(t), τ(t), and σ(t) are continuous functions and possess the almost periodic property; that is, for any ε > 0, there exists l = l(ε) > 0 such that every interval of length l contains a number ω = ω(ε) satisfying

()

(A3) For any ηi ∈ K[gi(xi)], ζi ∈ K[gi(yi)], ζi ≠ ηi, there exists a constant ei > 0 such that

()

(A4) For a given constant δ > 0, there exist positive definite matrices P, R, and H and a positive definite diagonal matrix Q such that

3. Main Results

Theorem 11. Suppose that assumptions (A1), (A2), and (A4) are satisfied. Then, for any initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0], the IVP of interval neural network system (3) has a solution on [0, +∞).

Proof. For any initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0], arguing as in the proof of Lemma 1 in [2], under assumption (A1)(1), system (3) has a local solution x(t) associated with a measurable function γ(t) with initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0], on [0, T), where T ∈ (0, +∞] and [0, T) is the maximal right-side existence interval of the local solution.

Consider the following Lyapunov functional candidate:

Θ1 can be rearranged as

In view of Lemma 8, Θ1 < 0 is equivalent to

Theorem 12. Suppose that the assumptions (A1)–(A4) are satisfied. Then the solution of IVP of interval neural network system (3) is asymptotically almost periodic.

Proof. Let x(t) be a solution of the IVP of system (3) associated with a measurable function γ(t) with initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0]. Setting y(t) = x(t + ω) − x(t), we have

Remark 13. Assumption (A3) plays an essential role in the proof of Theorem 12: it ensures that ∥ρ(ω, t)∥ < ε.

Theorem 14. If assumptions (A1)–(A4) hold, then interval neural network system (3) has a unique almost periodic solution, which is globally robustly exponentially stable.

Proof. Firstly, we prove the existence of the almost periodic solution for interval neural network system (3).

By Theorem 12, for any initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0], interval neural network (3) has a solution which is asymptotically almost periodic. Let x(t) be any solution of system (3) associated with a measurable function γ(t) with the initial value (ϕ(s), ψ(s)), s ∈ [−ι, 0]. Then

By using (40), we can pick a sequence {tk} satisfying lim k→+∞ tk = +∞ and ∥ρ(tk, t)∥ < 1/k for all t ≥ 0, where ρ(ω, t) is defined in (36). In addition, the sequence {x(t + tk)} is equicontinuous and uniformly bounded. By the Arzela-Ascoli theorem and the diagonal selection principle, we can select a subsequence of {tk} (still denoted by {tk}) such that {x(t + tk)} converges uniformly to an absolutely continuous function x*(t) on any compact subset of ℝ.

On the other hand, since γ(t + tk) ∈ K[g(x(t + tk))] and K[g(x(t + tk))] is bounded because x(t) is bounded, the sequence {γ(t + tk)} is bounded. Hence, we can also select a subsequence of {tk} (still denoted by {tk}) such that {γ(t + tk)} converges to a measurable function γ*(t) for any t ∈ [−ι, +∞). Using the facts that

(i) K[g(·)] is an upper semicontinuous set-valued map, and

(ii) for t ∈ [−ι, +∞), x(t + tk) → x*(t) as k → +∞,

we obtain that, for any ϵ > 0, there exists N > 0 such that K[g(x(t + tk))] ⊆ K[g(x*(t))] + ϵℬ for k > N and t ∈ [−ι, +∞), where ℬ is the closed unit ball of ℝn. Hence, γ(t + tk) ∈ K[g(x(t + tk))] implies γ(t + tk) ∈ K[g(x*(t))] + ϵℬ. Moreover, since K[g(x*(t))] + ϵℬ is a compact subset of ℝn, we have γ*(t) = lim k→+∞ γ(t + tk) ∈ K[g(x*(t))] + ϵℬ. Since ϵ is arbitrary, it follows that γ*(t) ∈ K[g(x*(t))] for a.a. t ∈ [−ι, +∞).

By Lebesgue’s dominated convergence theorem (Lemma 10),

Notice that x(t) is asymptotically almost periodic. Then, for any ε > 0, there exist T > 0 and l = l(ε) > 0 such that every interval of length l contains a number ω = ω(ε) with ∥x(t + ω) − x(t)∥ < ε for all t > T. Therefore, there exists a constant N > 0 such that, for k > N, ∥x(t + tk + ω) − x(t + tk)∥ < ε for any t ∈ [−ι, +∞). Letting k → +∞, we obtain ∥x*(t + ω) − x*(t)∥ ≤ ε for any t ∈ [−ι, +∞). Since ε is arbitrary, this shows that x*(t) is an almost periodic solution of system (3).

Secondly, we prove that the almost periodic solution of interval neural network system (3) is globally robustly exponentially stable.

Let x(t) be an arbitrary solution and let x*(t) be an almost periodic solution of interval neural network system (3), associated with the outputs ξ(t) and γ*(t), respectively. The change of variables z(t) = x(t) − x*(t) transforms (3) into the differential equation

Similar to V(t) in (21), define a Lyapunov functional candidate as

Remark 15. As far as we know, none of the existing results on the almost periodic dynamical behavior of neural networks with discontinuous activation functions [30–33] consider global robust exponential stability. In this paper, by constructing an appropriate generalized Lyapunov functional, we have obtained a delay-dependent criterion that guarantees the existence, uniqueness, and global robust exponential stability of the almost periodic solution. Moreover, the result is formulated in terms of LMIs, which can be easily verified with existing tools such as the LMI toolbox of MATLAB. Therefore, the results of this paper improve on the corresponding parts of [30–33].
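As a hedged illustration of how a feasible point returned by an LMI solver can be double-checked independently, the Python sketch below tests positive definiteness by attempting a Cholesky factorization. The LMI of (A4) is not reproduced in this text, so the hypothetical helper `check_candidate` expects the caller to assemble the full LMI matrix; the example uses the Lyapunov inequality ATP + PA < 0 purely as a stand-in. Strict definiteness is tested without a safety margin; in practice one may prefer to require, for example, λmax < −tol for a small tolerance.

```python
import numpy as np

def is_positive_definite(M: np.ndarray) -> bool:
    """A symmetric matrix is positive definite iff its Cholesky factorization exists."""
    if not np.allclose(M, M.T):
        return False
    try:
        np.linalg.cholesky(M)
    except np.linalg.LinAlgError:
        return False
    return True

def is_negative_definite(M: np.ndarray) -> bool:
    return is_positive_definite(-M)

def is_positive_diagonal(Q: np.ndarray) -> bool:
    return np.allclose(Q, np.diag(np.diag(Q))) and bool(np.all(np.diag(Q) > 0))

def check_candidate(P, R, H, Q, lmi_matrix) -> bool:
    """Hypothetical post-check: P, R, H > 0, Q positive diagonal, lmi_matrix < 0.

    lmi_matrix must be assembled by the caller from the block structure of (A4).
    """
    return (is_positive_definite(P) and is_positive_definite(R)
            and is_positive_definite(H) and is_positive_diagonal(Q)
            and is_negative_definite(lmi_matrix))

# Stand-in example: Lyapunov inequality A^T P + P A < 0 for a stable A.
A = np.array([[-2.0, 1.0], [-1.0, -2.0]])
P = np.eye(2)
ok = check_candidate(P, np.eye(2), 2.0 * np.eye(2), np.diag([1.0, 3.0]), A.T @ P + P @ A)
print(ok)
```

An independent check of this kind guards against accepting solver output that is feasible only within the solver's own tolerances.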

Remark 16. In [34–36], some criteria on the robust stability of an equilibrium point for neural networks with discontinuous activation functions have been given. Compared to the main results in [34–36], our results make the following improvements.

(1) In [34, 35], the activation function gi is assumed to be monotonically nondecreasing and bounded. However, assumption (A1) allows the activation function gi to be unbounded.

(2) Although the boundedness assumption was dropped in [36], monotonicity and a growth condition were still indispensable there. In this paper, the activation function is only assumed to be monotonically nondecreasing.

(3) In contrast to the models in [34–36], distributed time-varying delays are considered in this paper. If we choose σ(t) = 0 and I(t) = I, the models in these papers become special cases of our model.

Notice that a periodic function is a special case of an almost periodic function. Hence, based on Theorems 11 and 14, we obtain the following.

Corollary 17. Suppose that I(t), τ(t), and σ(t) are periodic functions and that assumptions (A1), (A3), and (A4) are satisfied. Then

4. Illustrative Example

Example 1. Consider the third-order interval neural network (3) with the following system parameters:

Let δ = 0.5. Solving the LMI in (A4) with an LMI solver in MATLAB, we find that feasible positive definite matrices P, R, and H and a positive definite diagonal matrix Q can be chosen as

In view of Corollary 17, when the external input I(t) is periodic, this neural network has a unique periodic solution, which is globally robustly exponentially stable; similarly, with a constant input, it has a unique globally robustly exponentially stable equilibrium point.

As a special case, we choose the system as follows:

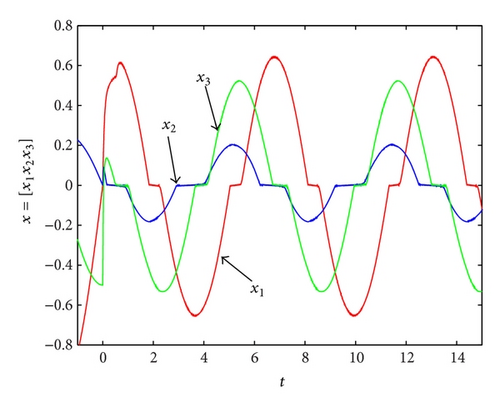

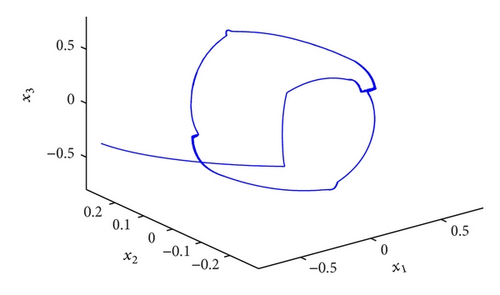

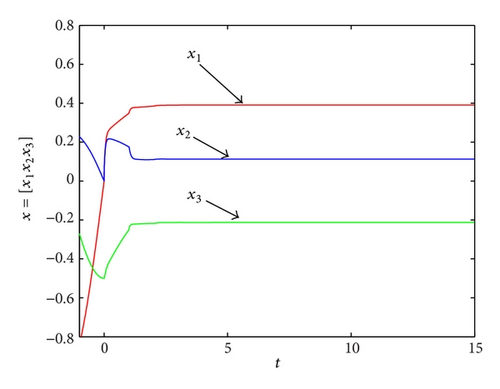

Figures 1 and 2 display the state trajectories of this neural network with initial value ϕ(t) = (sin t, −0.3tanh t, −0.5cos t)T, t ∈ [−1, 0], when I(t) = (15sin t, 10cos t, 15cos t)T. These trajectories converge to a unique periodic solution, in accordance with the conclusion of Corollary 17. Figure 3 displays the state trajectories of this neural network with the same initial value when I(t) = (10, 5, −10)T. These trajectories converge to a unique equilibrium point, in accordance with the conclusion of Corollary 18.
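Since the matrices of Example 1 are not reproduced in this text, the following Python sketch only mimics the qualitative experiment of Figures 1 and 2 under explicit assumptions. It assumes the model form $\dot{x}(t) = -Dx(t) + A g(x(t)) + B g(x(t-\tau(t))) + C\int_{t-\sigma(t)}^{t} g(x(s))\,ds + I(t)$, which is consistent with Remark 16(3) but not confirmed by the extracted text, and it uses hypothetical D, A, B, C, τ(t), σ(t), and g. Forward Euler with the selection sign(0) = 0 at the discontinuity is a crude approximation of Filippov solutions, not the authors' method, and the hypothetical parameters have not been checked against (A4). With the periodic input I(t) from the text, trajectories starting from two different initial functions should approach each other.

```python
import numpy as np

# Hypothetical parameters, NOT the values of Example 1.
D = np.diag([6.0, 6.0, 6.0])
A = np.array([[-1.0, 0.2, 0.1], [0.1, -1.0, 0.2], [0.2, 0.1, -1.0]])
B = np.array([[0.2, -0.1, 0.1], [0.1, 0.2, -0.1], [-0.1, 0.1, 0.2]])
C = 0.1 * np.eye(3)

def g(x):
    # Hypothetical nondecreasing activation with a jump at 0; np.sign(0) = 0 picks
    # one particular element of K[g](0) = [-0.5, 0.5] (a crude Filippov selection).
    return x + 0.5 * np.sign(x)

def tau(t):
    return 0.6 + 0.4 * np.sin(t)      # discrete time-varying delay, values in [0.2, 1.0]

def sigma(t):
    return 0.5 + 0.5 * np.cos(t)      # distributed-delay length, values in [0, 1]

def I_periodic(t):
    return np.array([15.0 * np.sin(t), 10.0 * np.cos(t), 15.0 * np.cos(t)])

def simulate(phi, I, T=20.0, h=0.002, iota=1.0):
    """Forward-Euler sketch of the assumed model on [0, T], history phi on [-iota, 0]."""
    n_hist, n_steps = int(round(iota / h)), int(round(T / h))
    X = np.zeros((n_hist + n_steps + 1, 3))           # row k holds x(-iota + k*h)
    for k in range(n_hist + 1):
        X[k] = phi(-iota + k * h)
    G = g(X)                                           # rows after the history are overwritten below
    for k in range(n_hist, n_hist + n_steps):
        t = (k - n_hist) * h
        kd = k - int(round(tau(t) / h))                # row index of x(t - tau(t))
        ms = int(round(sigma(t) / h))                  # grid points in [t - sigma(t), t)
        integral = h * G[k - ms:k].sum(axis=0)         # left Riemann sum of the distributed term
        dx = -D @ X[k] + A @ G[k] + B @ G[kd] + C @ integral + I(t)
        X[k + 1] = X[k] + h * dx
        G[k + 1] = g(X[k + 1])
    return X[n_hist:]                                  # trajectory on [0, T]

def phi_a(s):   # initial function used in the text
    return np.array([np.sin(s), -0.3 * np.tanh(s), -0.5 * np.cos(s)])

def phi_b(s):   # hypothetical second initial function for comparison
    return np.array([2.0, -1.0, 1.0])

x_a = simulate(phi_a, I_periodic)
x_b = simulate(phi_b, I_periodic)
print(np.max(np.abs(x_a[-1] - x_b[-1])))  # small if both approach the same periodic orbit
```

The sketch uses only the periodic input: with the time-varying hypothetical σ(t), a constant input need not produce an equilibrium, so it does not reproduce the setting of Figure 3. Residual differences of the order of the step size h are expected near the discontinuity because of Euler chattering.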

5. Conclusion

In this paper, under the framework of Filippov differential inclusions, by constructing a generalized Lyapunov-Krasovskii functional and applying LMI techniques, we have obtained a sufficient condition, in terms of LMIs, that ensures the existence, uniqueness, and global robust exponential stability of the almost periodic solution; the condition is easy to check and apply in practice. A numerical example has been given to illustrate the validity of the theoretical results.

In [2], Forti et al. conjectured that all solutions of delayed neural networks with discontinuous neuron activations and periodic inputs converge to an asymptotically stable limit cycle. In this paper, under assumptions (A1)–(A4), the obtained results confirm that Forti’s conjecture holds for interval neural networks with mixed time-varying delays and discontinuous activation functions. Note that synchronization and sliding mode control problems have been studied in [44–47] by using the delay-fractioning approach, and the results obtained there are less conservative. Whether the delay-fractioning approach is effective in dealing with time delays in discontinuous neural networks will be the topic of our further research.

Acknowledgments

This work was supported by the Natural Science Foundation of Hebei Province of China (A2011203103) and the Hebei Province Education Foundation of China (2009157).